A Hybrid Approach for Image Acquisition Methods Based on Feature-Based Image Registration

Abstract

1. Introduction

2. Related Work

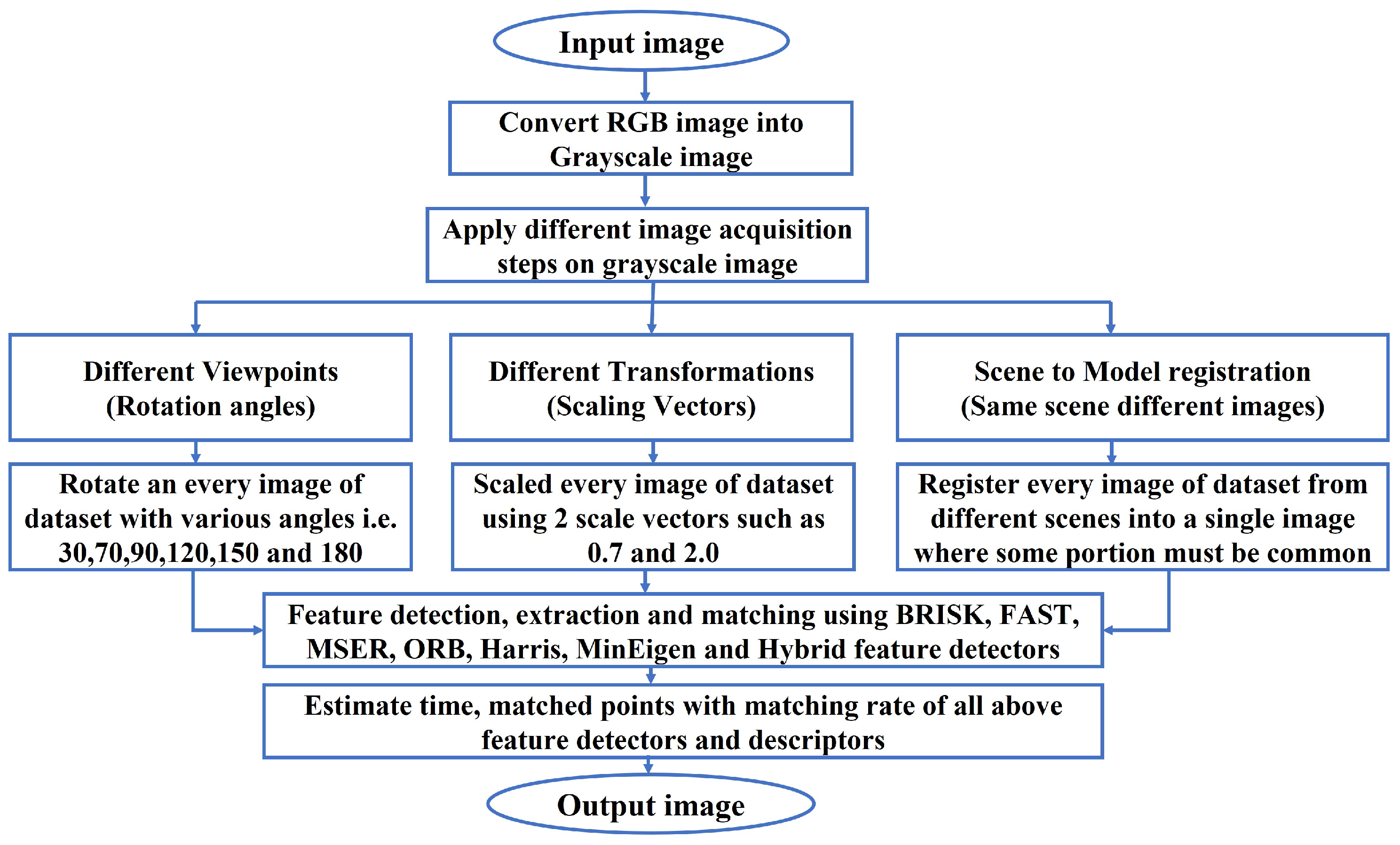

3. Methodology

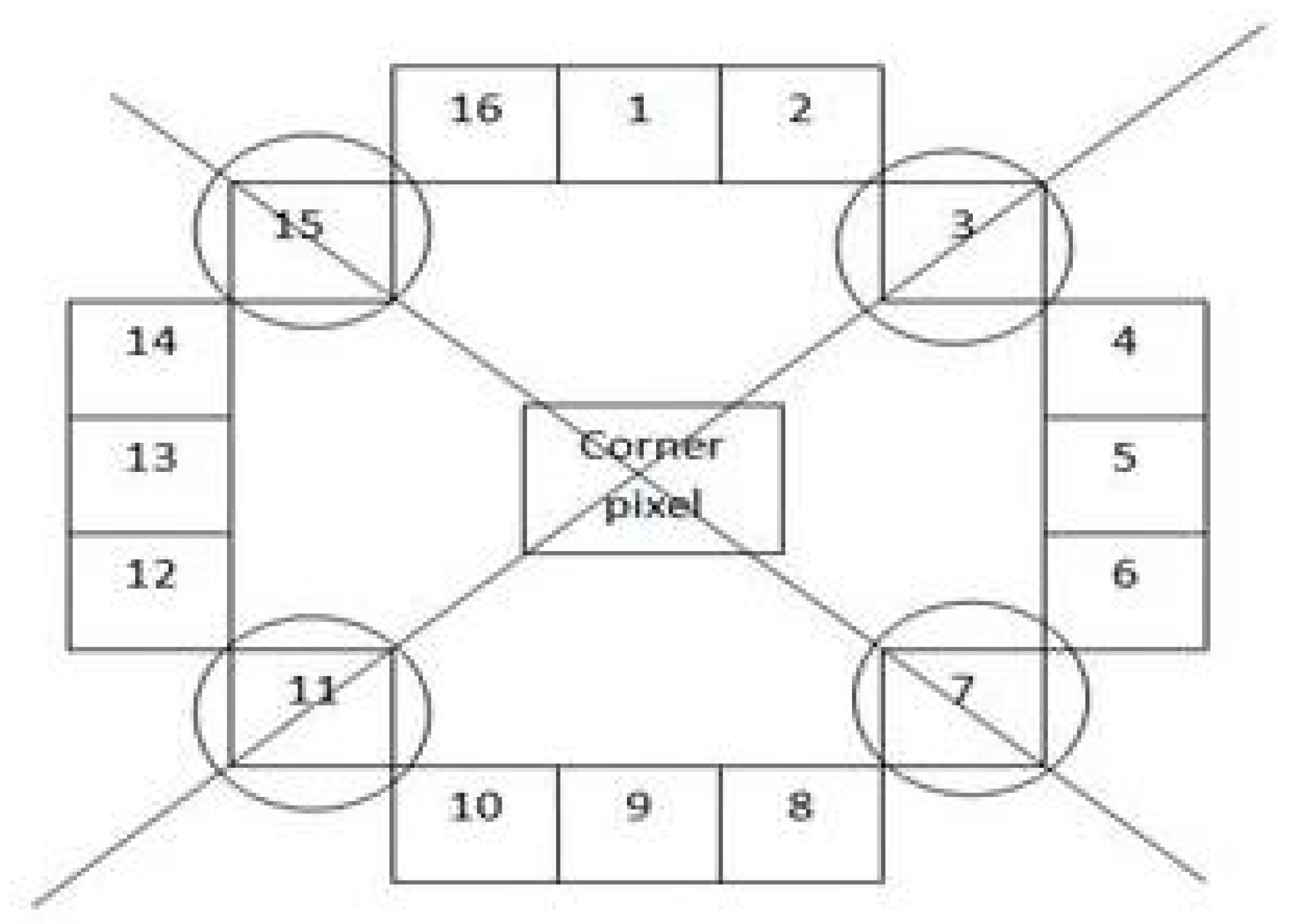

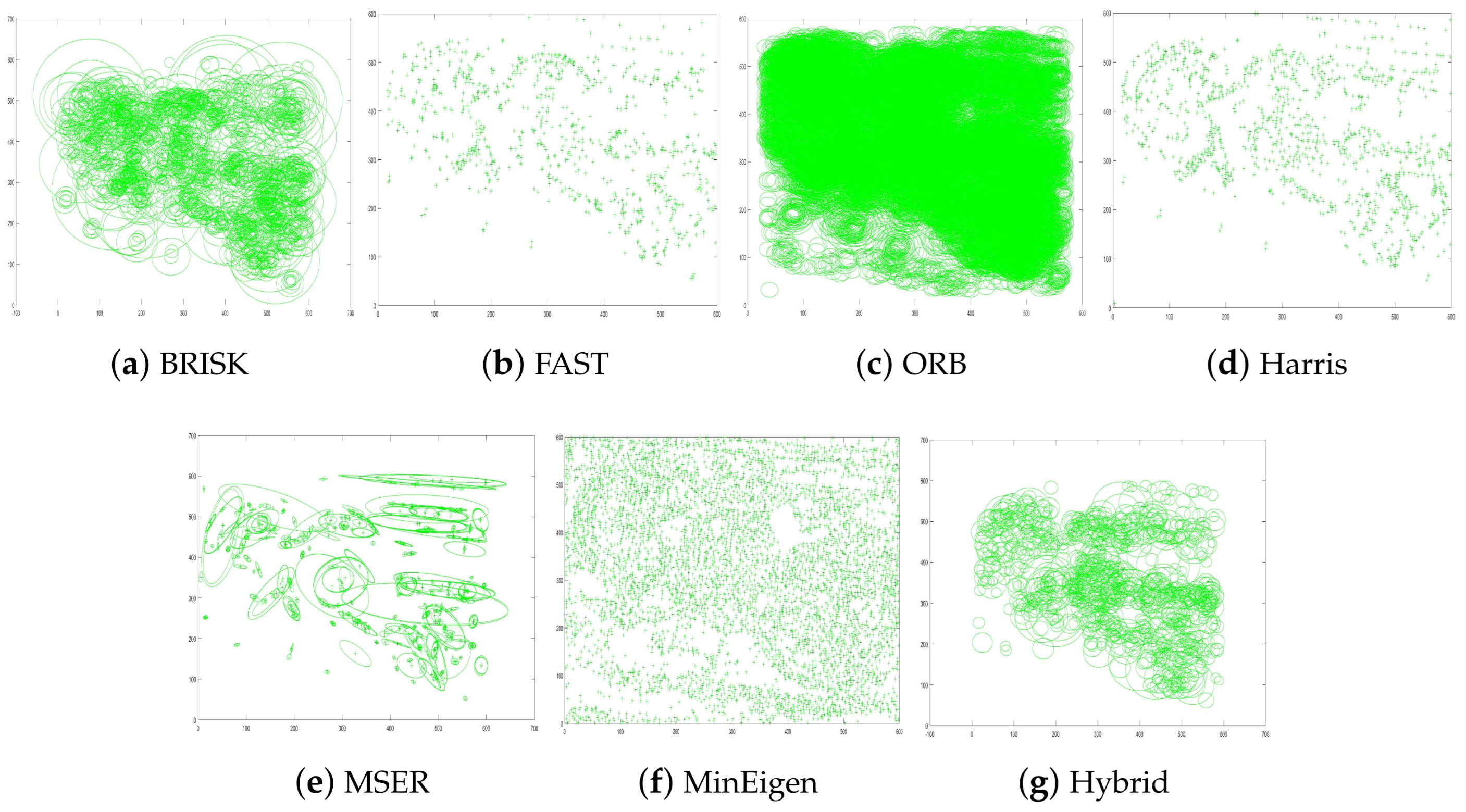

3.1. Feature Detectors and Descriptors

Hybrid Feature-Detection Technique

3.2. Feature-Based Image Registration (FBIR)

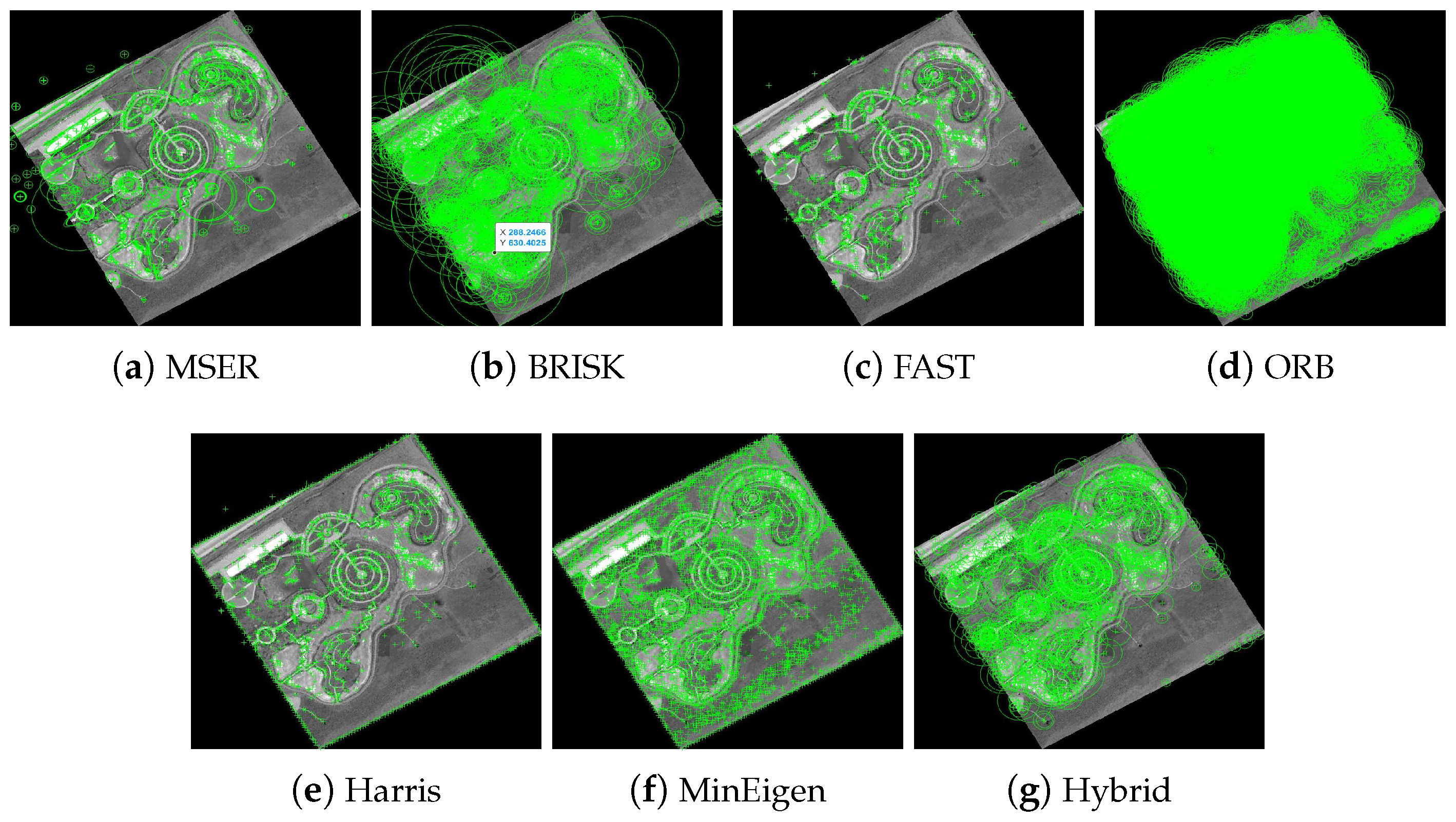

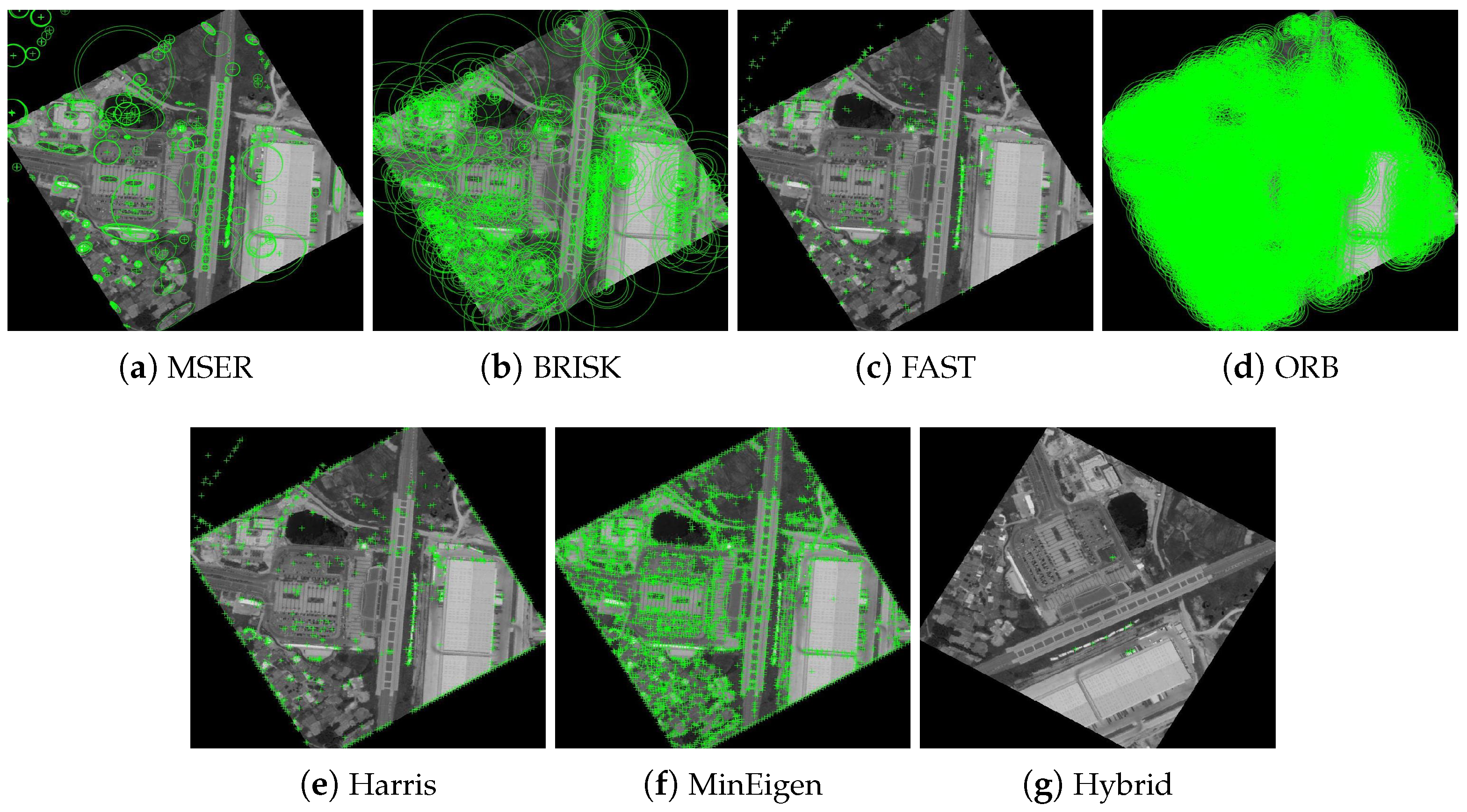

3.2.1. Feature Detection and Extraction Using Proposed Hybrid Feature Detector

Algorithm 1 FBIR with Proposed Hybrid Algorithm.

Require: Original image

Ensure: Registered image using FBIR with hybrid feature detector and descriptor

Algorithm 2 Hybrid Feature-Detection Process.

Require: Image

Ensure: Keypoints

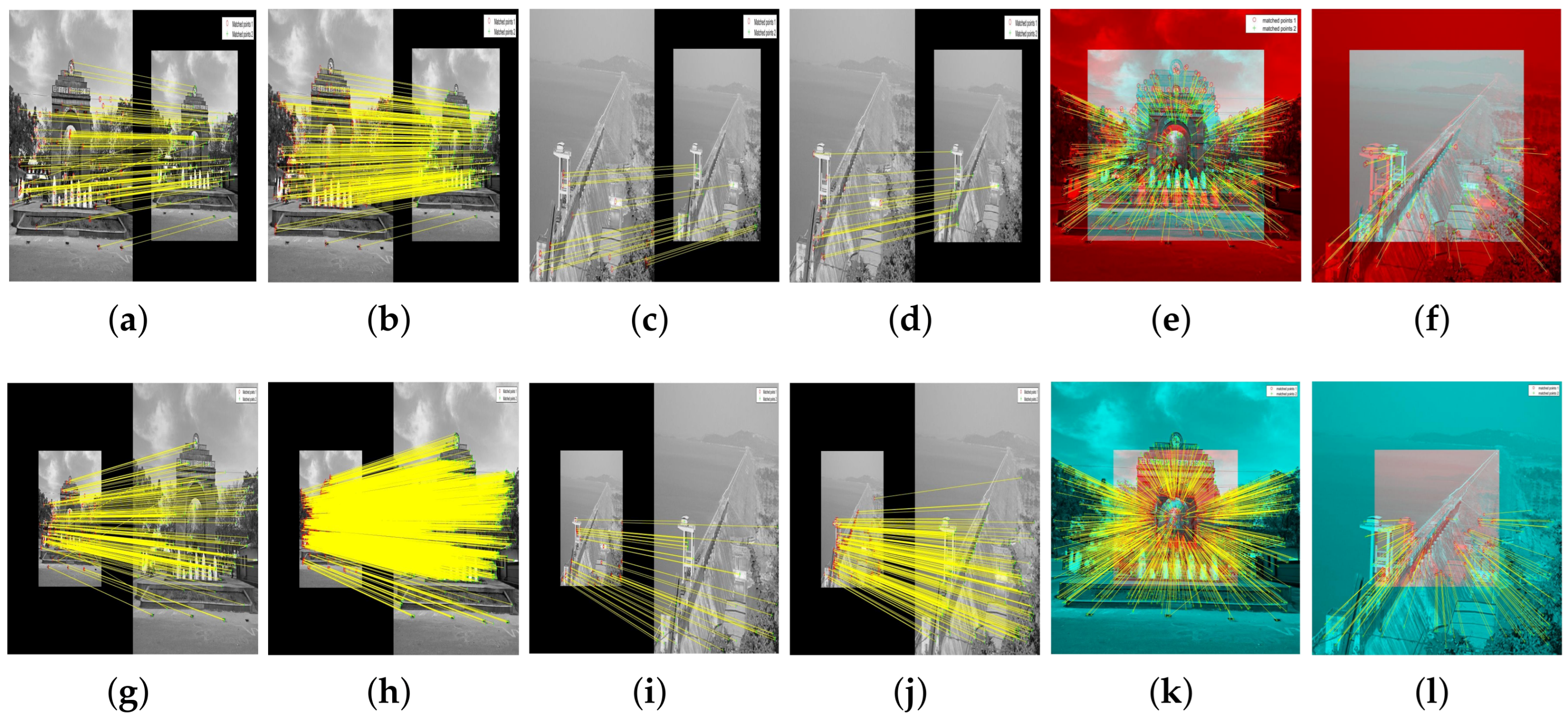

3.2.2. Feature Matching Using a Hybrid Algorithm

3.2.3. Feature-Based Transform Model Estimation

3.2.4. Image Resampling and Transformation

4. Simulation and Results

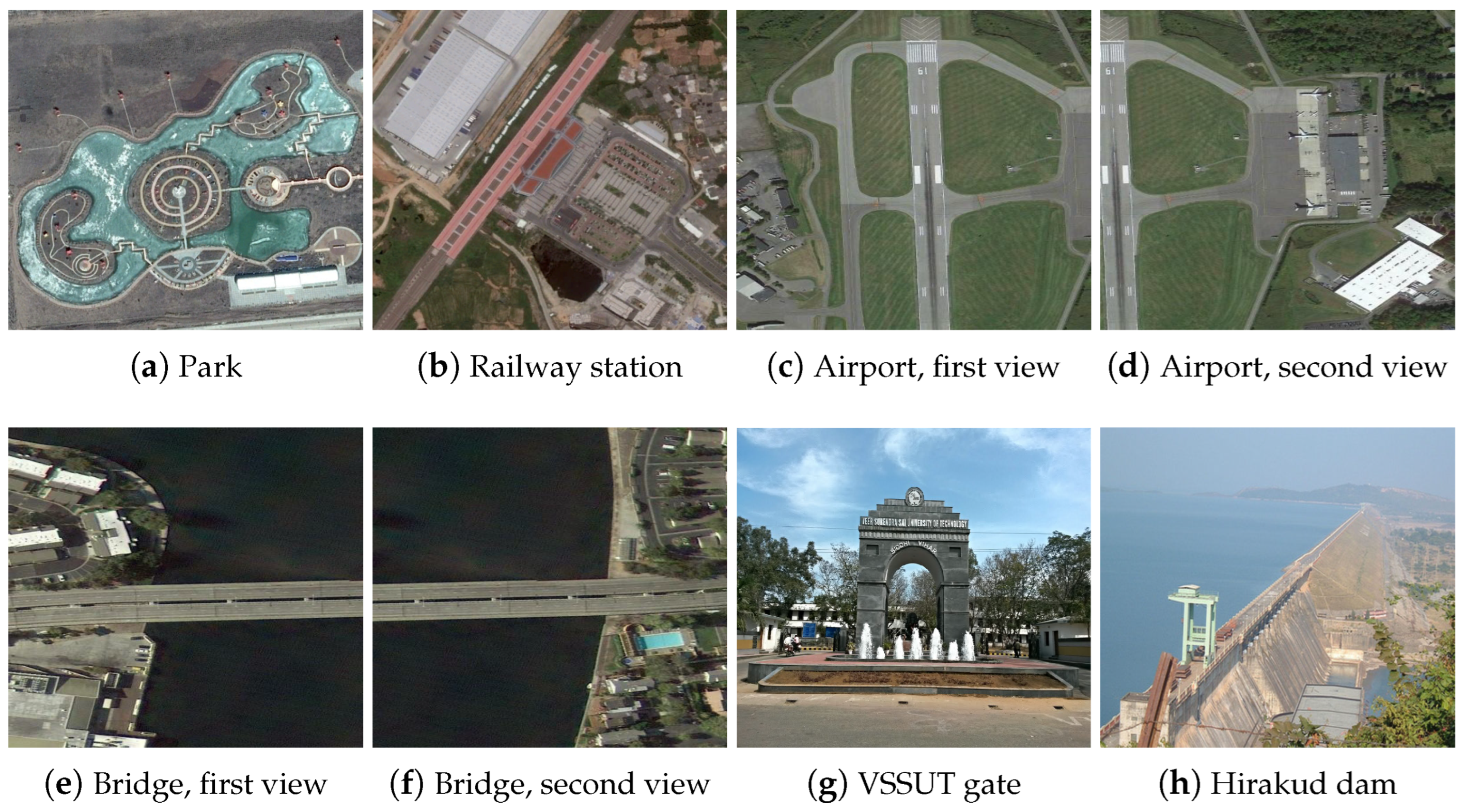

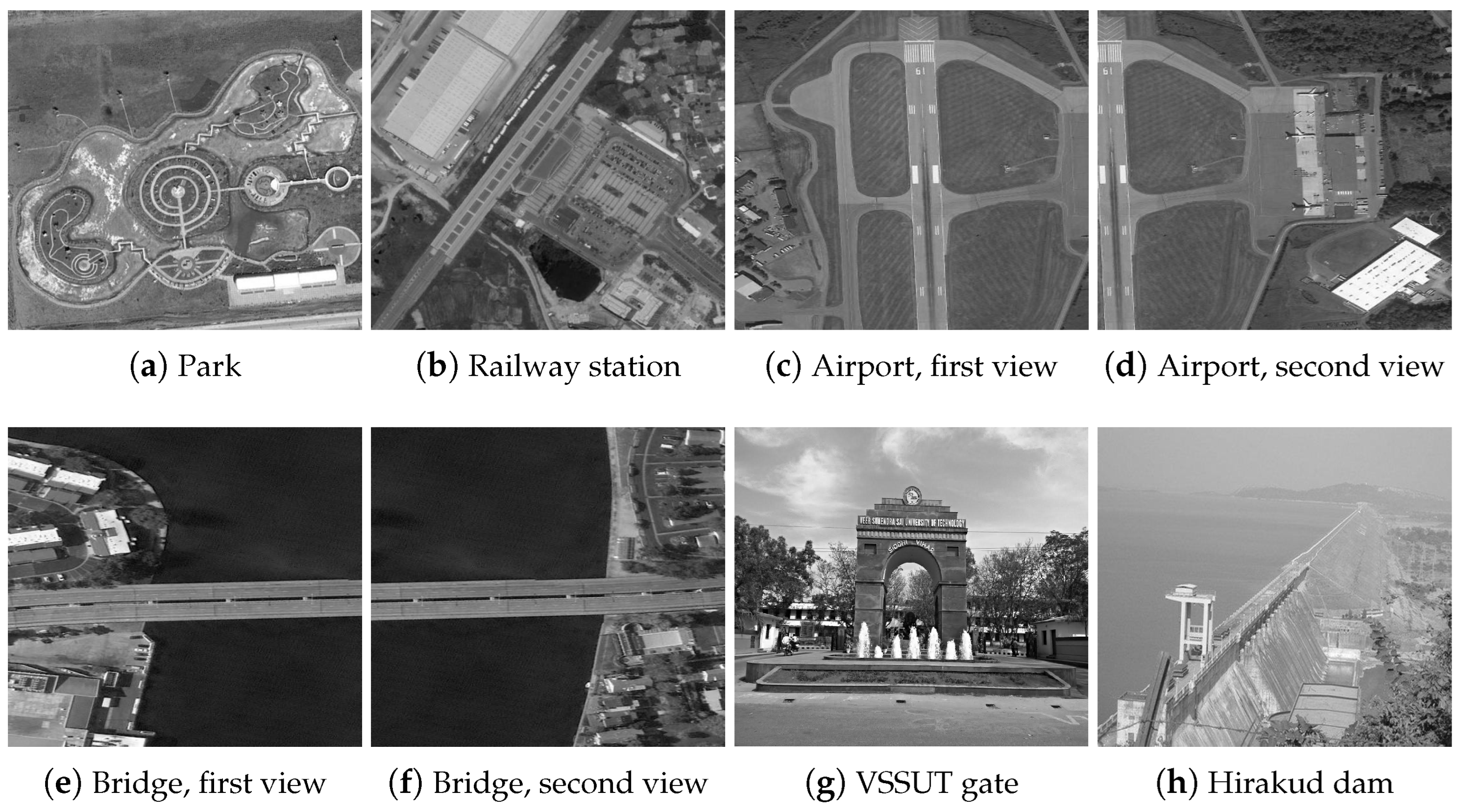

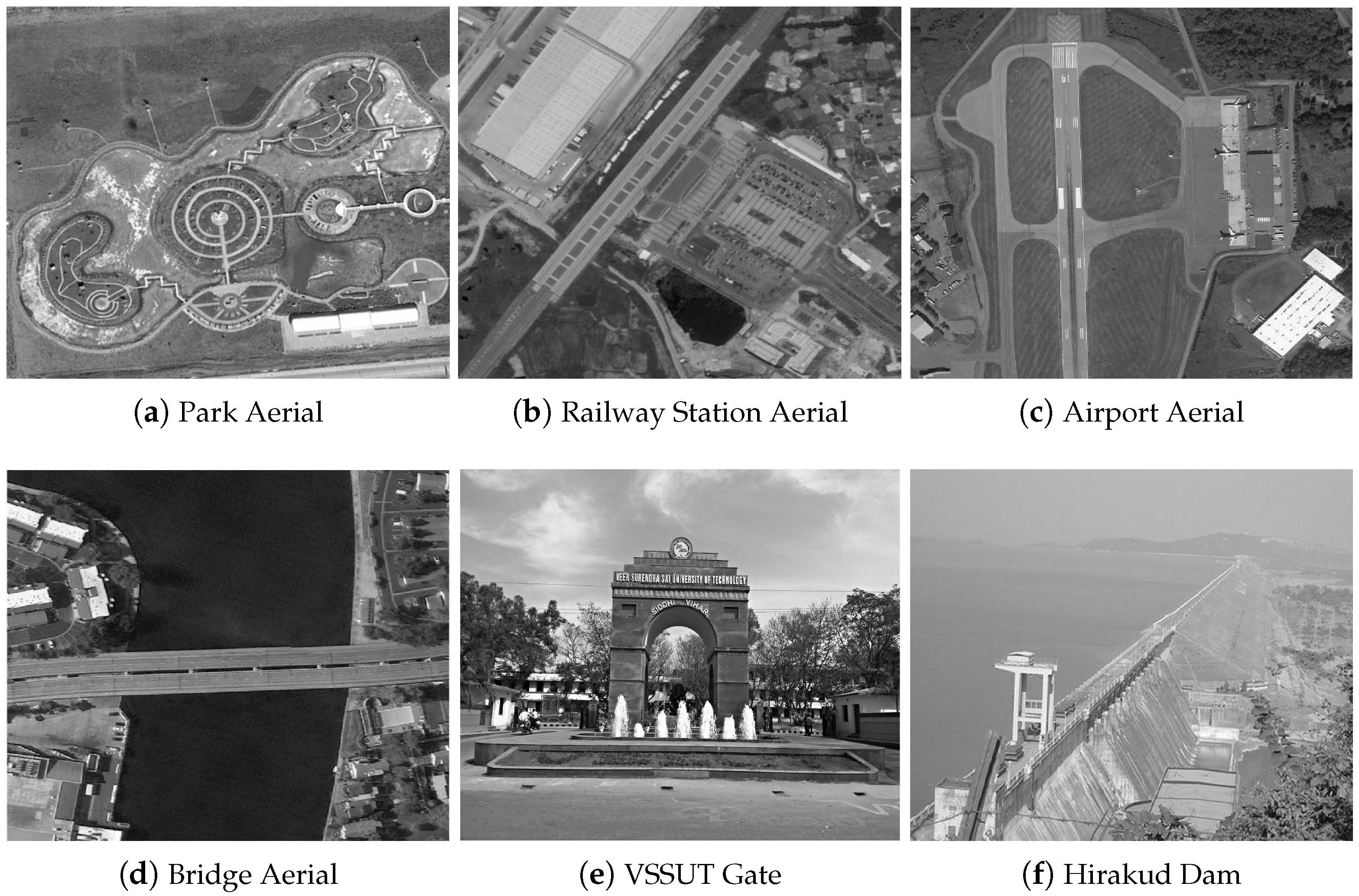

4.1. Experimental Setup and Image Data

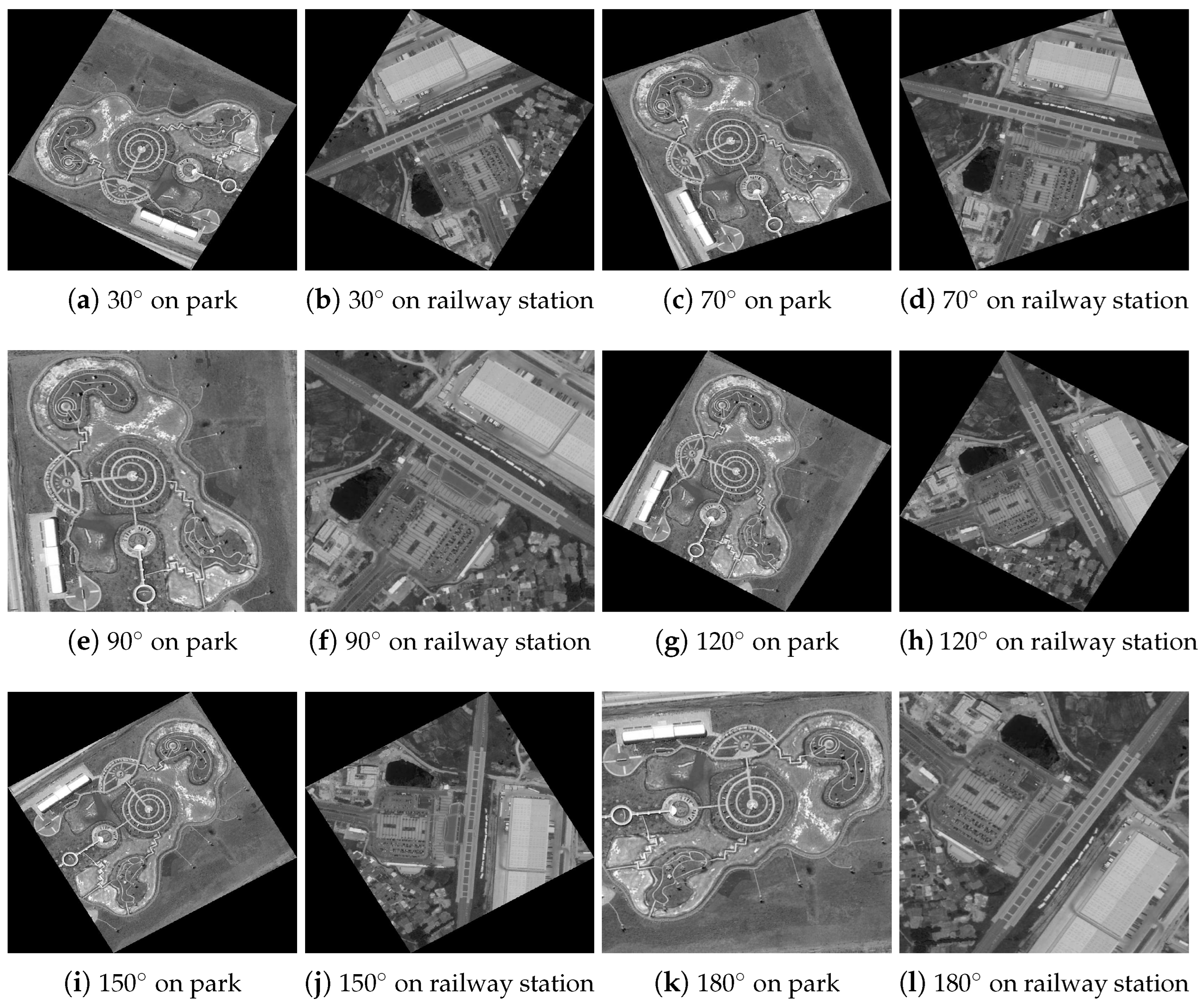

- Rotation: Images are rotated at six different angles.

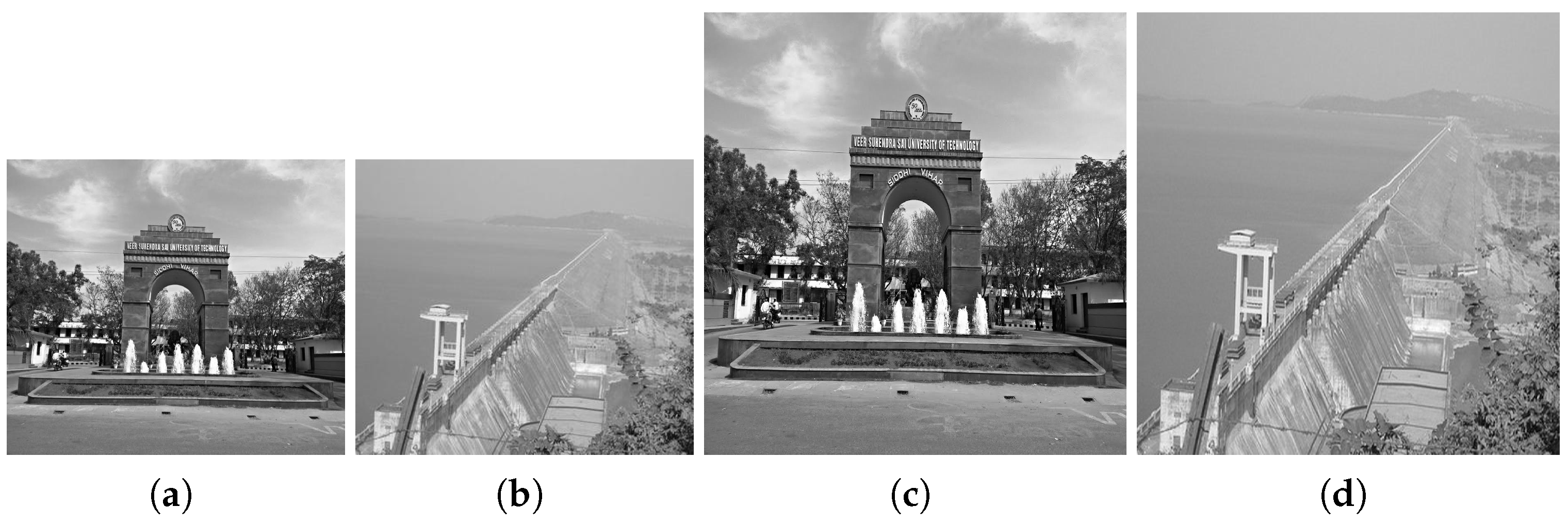

- Scene-to-Model Transformation: This involves using two different instances of the same scene (e.g., different views of an airport and a bridge) where parts of these images share common features.

- Scaling: Images are scaled by factors of 0.7 and 2.0 to evaluate the algorithm’s performance under size variations.
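As a rough illustration of the scaling case, the sketch below computes the output dimensions for the two scale factors. It assumes each dimension is rounded up (ceiling); this rounding rule is an assumption, chosen because it reproduces the scaled sizes reported in the scaling tables (for example, 550 × 412 at 0.7 gives 385 × 289). The helper `scaled_size` is hypothetical and is not taken from the authors' code.

```python
import math
from fractions import Fraction


def scaled_size(width, height, scale):
    """Return (width, height) after scaling, rounding each dimension up.

    Assumption: ceiling rounding. Fraction keeps the arithmetic exact, so a
    product such as 0.7 * 550 cannot drift across an integer boundary.
    """
    factor = Fraction(str(scale))
    return math.ceil(width * factor), math.ceil(height * factor)


# Original image sizes as listed in the scaling tables.
images = {"VSSUT": (1024, 768), "Hirakud dam": (550, 412)}

for name, (w, h) in images.items():
    for scale in (0.7, 2.0):
        print(name, scale, scaled_size(w, h, scale))
```

Exact rational arithmetic is used here only to keep the rounding step predictable; a production pipeline would normally let the image library decide the output size.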

4.1.1. Time Measurement Definitions

- Elapsed Time: total time from the initiation to the completion of the feature-detection process.

- CPU Time: the amount of processing time the CPU spends to execute the feature-detection tasks, excluding any idle time.

- PMT (Performance Measuring Time): this metric assesses the performance efficiency of the algorithm, focusing on the active processing time.
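The difference between elapsed time and CPU time can be sketched with Python's standard timers. This is only an illustration under stated assumptions: `time_call` and `dummy_detector` are hypothetical names, the dummy function stands in for a real feature detector, and PMT is not reproduced because its exact computation is not given here.

```python
import time


def time_call(func, *args, **kwargs):
    """Run func once and return (result, elapsed_seconds, cpu_seconds)."""
    wall_start = time.perf_counter()  # wall-clock time, includes waiting
    cpu_start = time.process_time()   # CPU time of this process, excludes sleep
    result = func(*args, **kwargs)
    cpu = time.process_time() - cpu_start
    elapsed = time.perf_counter() - wall_start
    return result, elapsed, cpu


def dummy_detector(n):
    """Hypothetical stand-in for a feature detector: it only burns CPU."""
    return sum(i * i for i in range(n))


result, elapsed, cpu = time_call(dummy_detector, 200_000)
print(f"elapsed={elapsed:.4f} s, cpu={cpu:.4f} s")
```

Because process CPU time is summed over all threads, it can exceed elapsed time when the workload is multithreaded, which may explain rows in the results tables where CPU time is larger than elapsed time.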

4.1.2. Validation of Detected Keypoints

- Precision assesses the proportion of detected keypoints that are true positives, helping to confirm that the keypoints are genuine features of the images rather than noise or errors.

- Matching Rate evaluates how well the keypoints from different transformations of the same image correlate with each other. A high matching rate indicates a successful identification of consistent and reliable keypoints across different versions of the images.
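The two validation measures can be sketched as follows. The precision formula is the standard true-positive ratio. The matching-rate formula (matched keypoints divided by the keypoints extracted from the second image) is an assumption: it is consistent with the rows of the first rotation table, but it is not stated explicitly in the text. Function names and the precision counts are illustrative only.

```python
def precision(true_positives, false_positives):
    """Fraction of detected keypoints that are genuine features."""
    total = true_positives + false_positives
    return true_positives / total if total else 0.0


def match_rate(matched, extracted):
    """Percentage of extracted keypoints that were matched (assumed definition)."""
    return round(100.0 * matched / extracted, 2) if extracted else 0.0


# Hypothetical counts, for illustration only.
print(precision(90, 10))

# Hybrid and FAST rows of the first rotation group: matched / Ext. Kpts2.
print(match_rate(238, 748))
print(match_rate(54, 288))
```

With the table values, the last two calls give 31.82 and 18.75, matching the reported match rates for the Hybrid and FAST detectors in that group.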

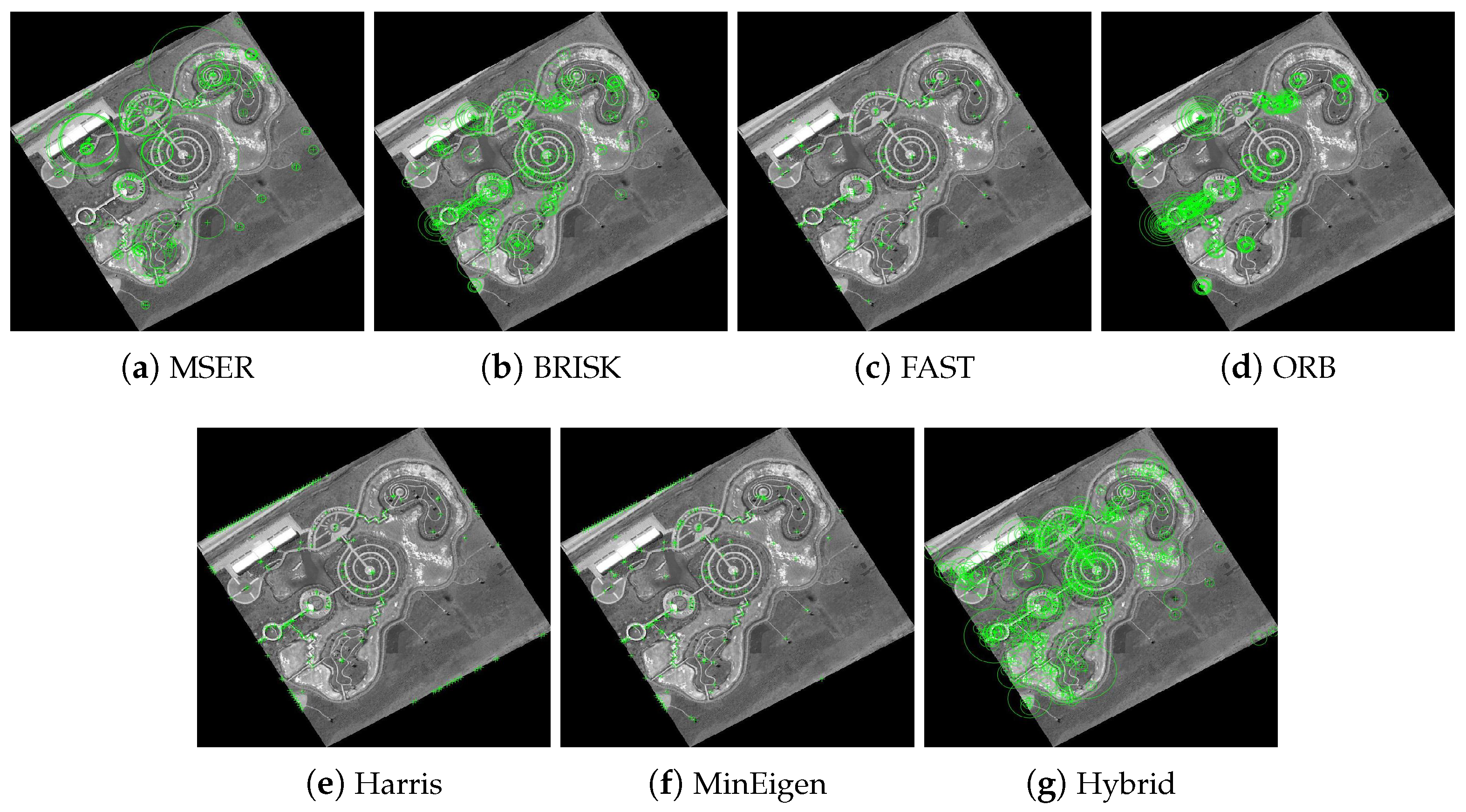

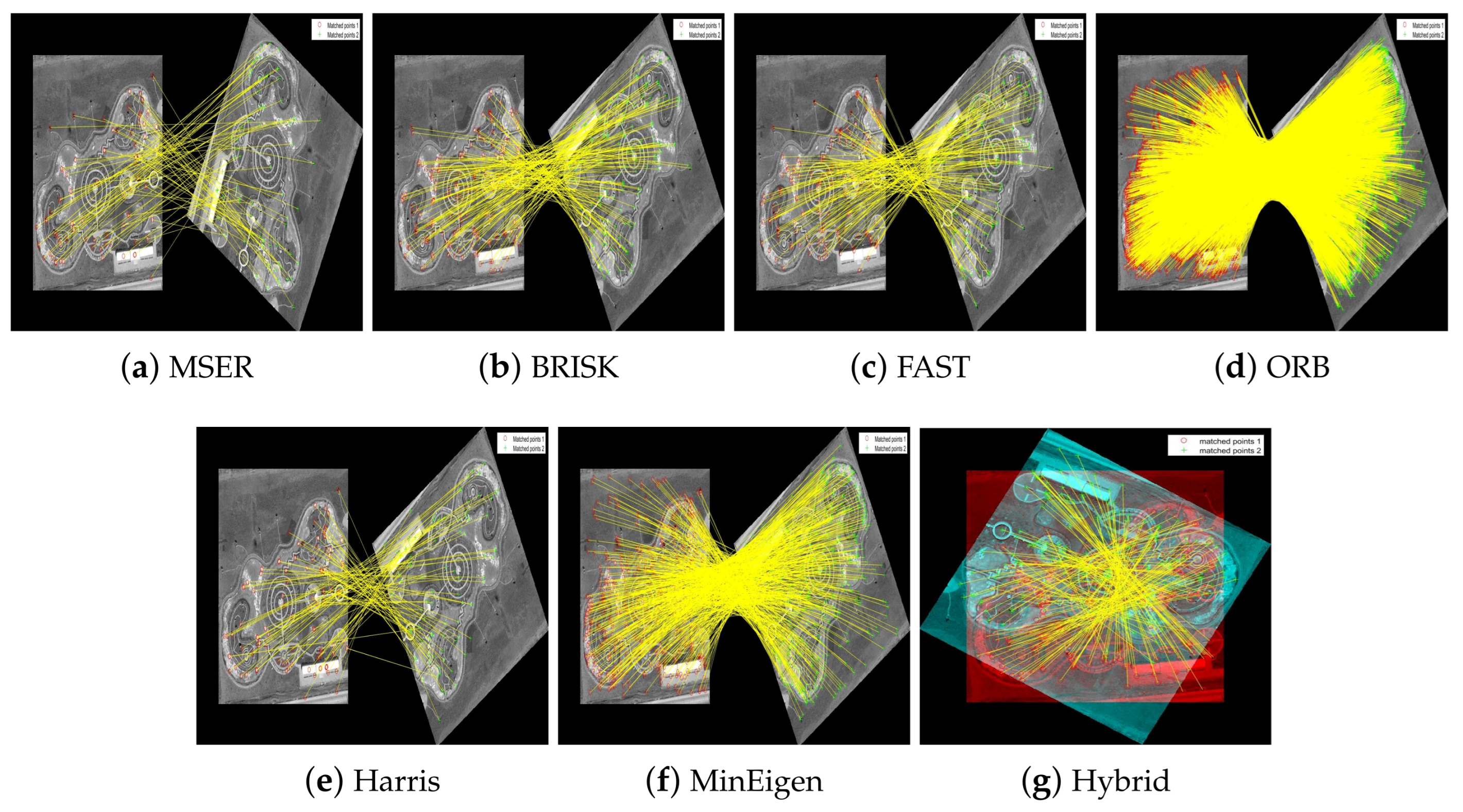

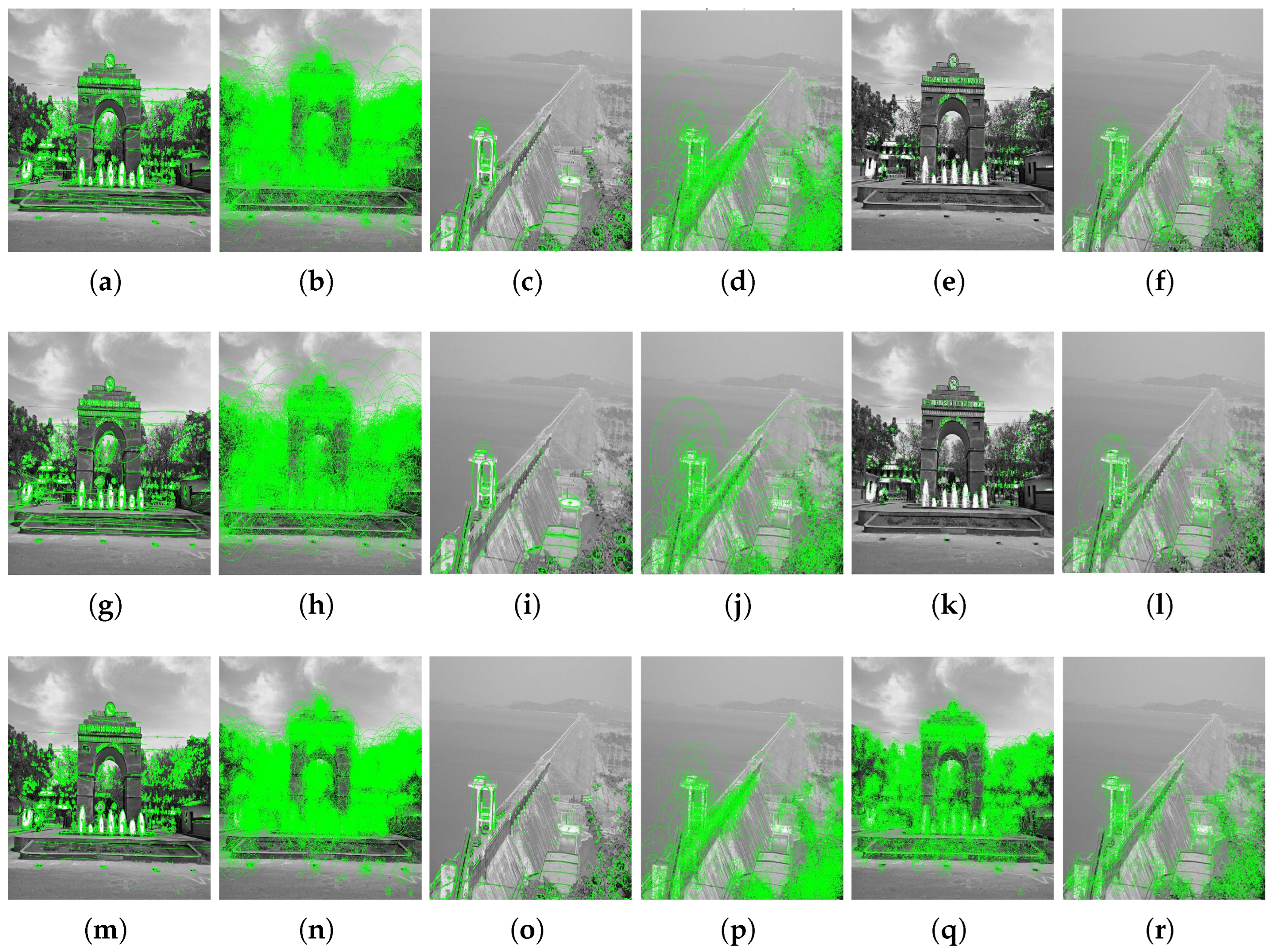

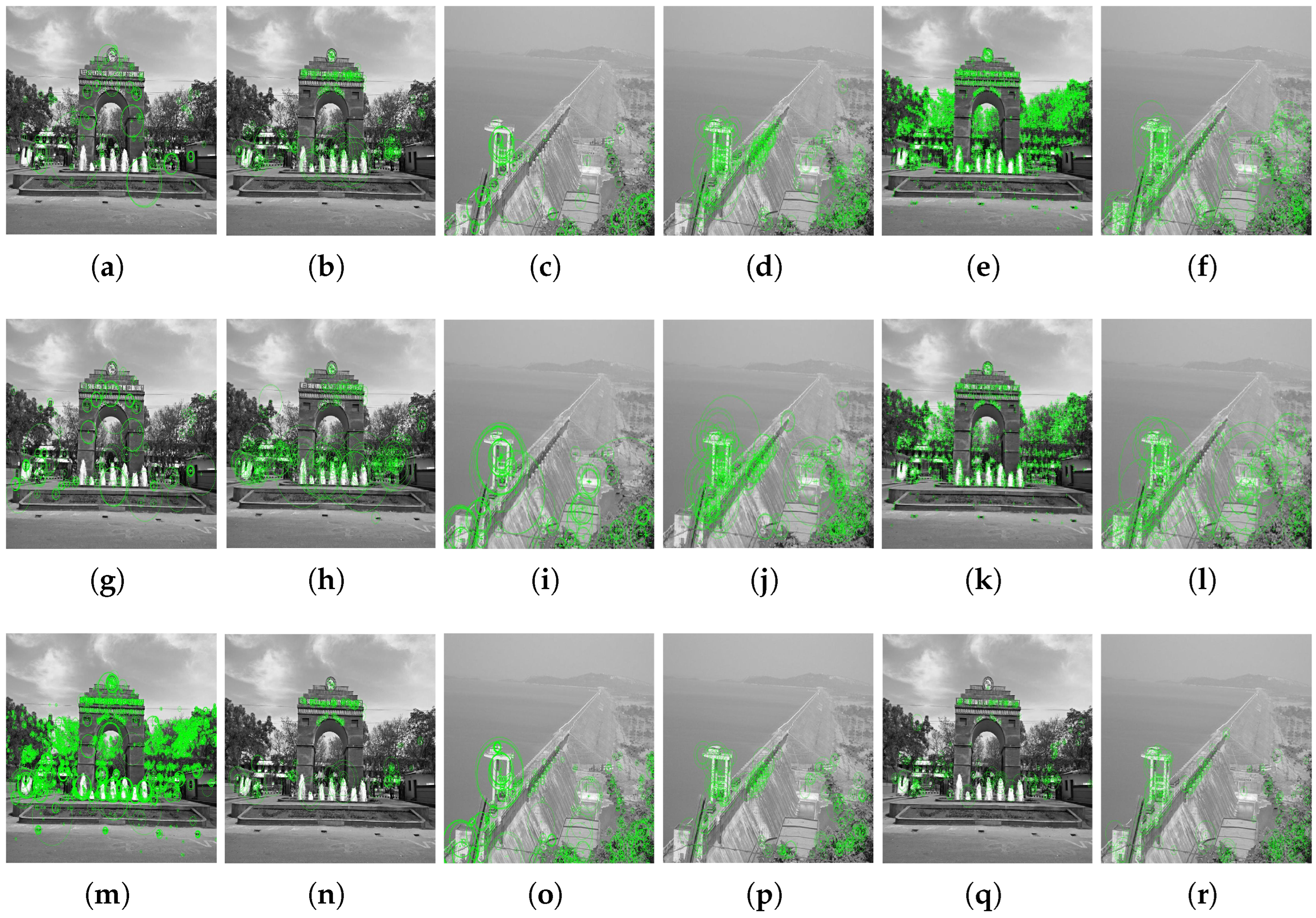

4.2. Rotation with Different Angles

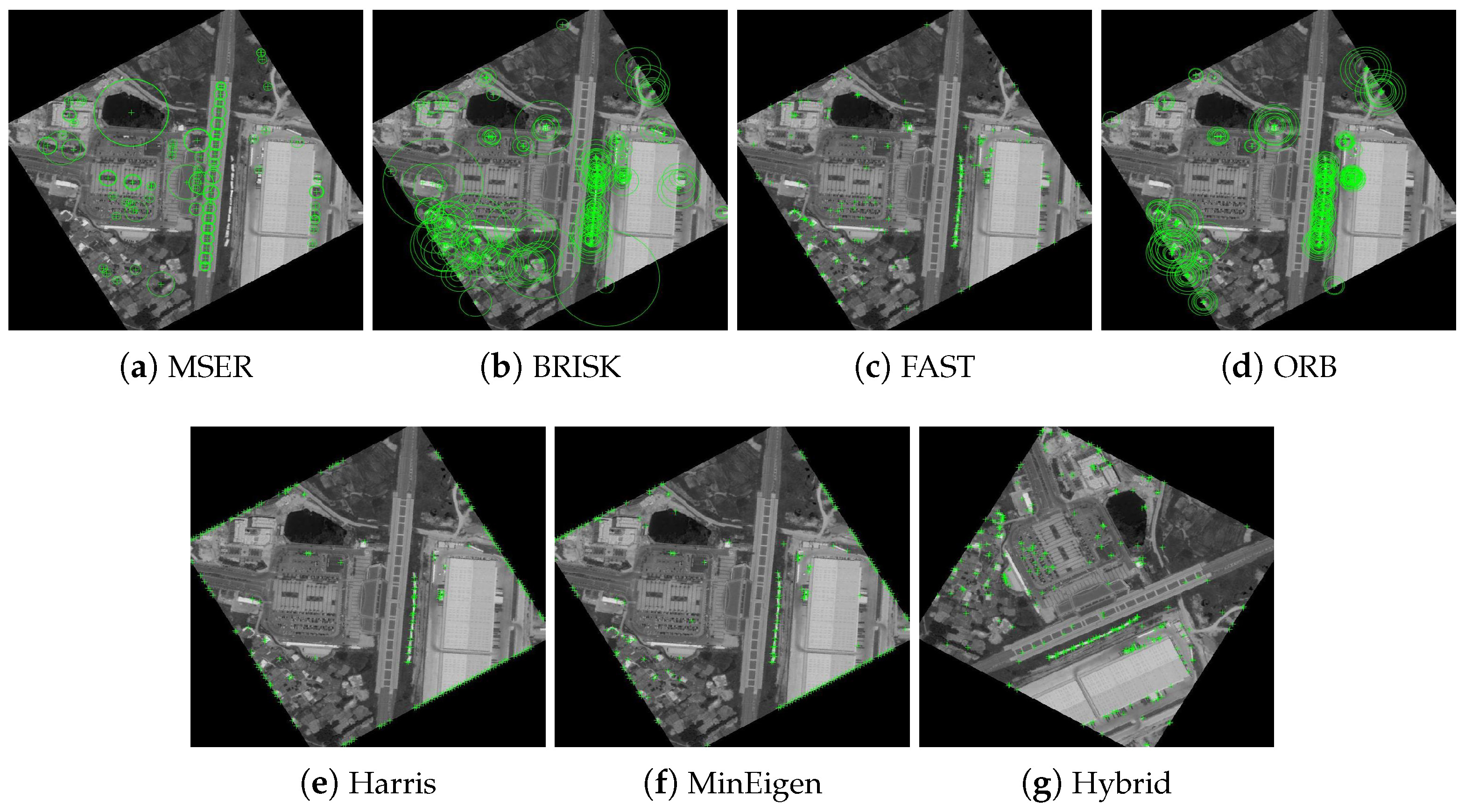

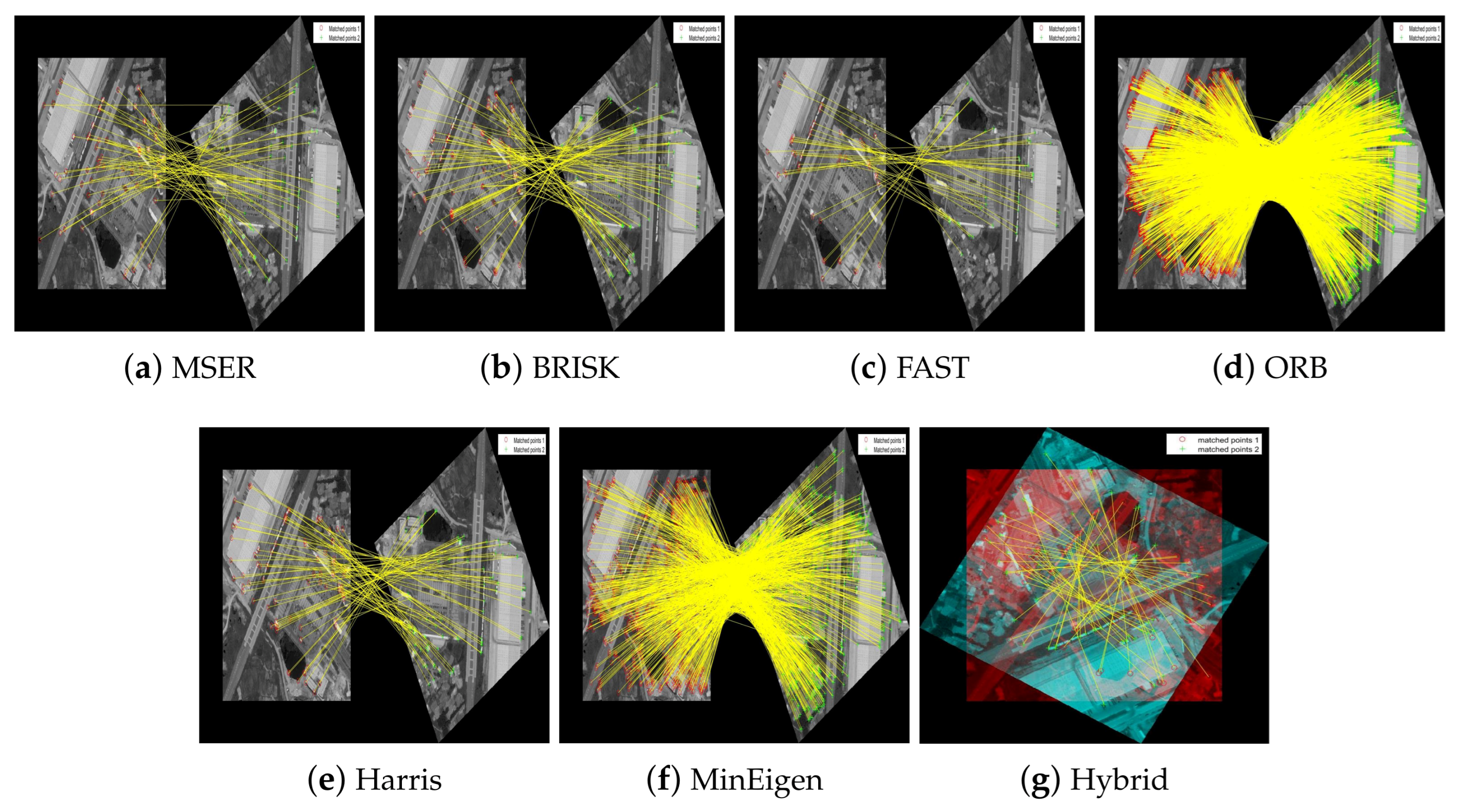

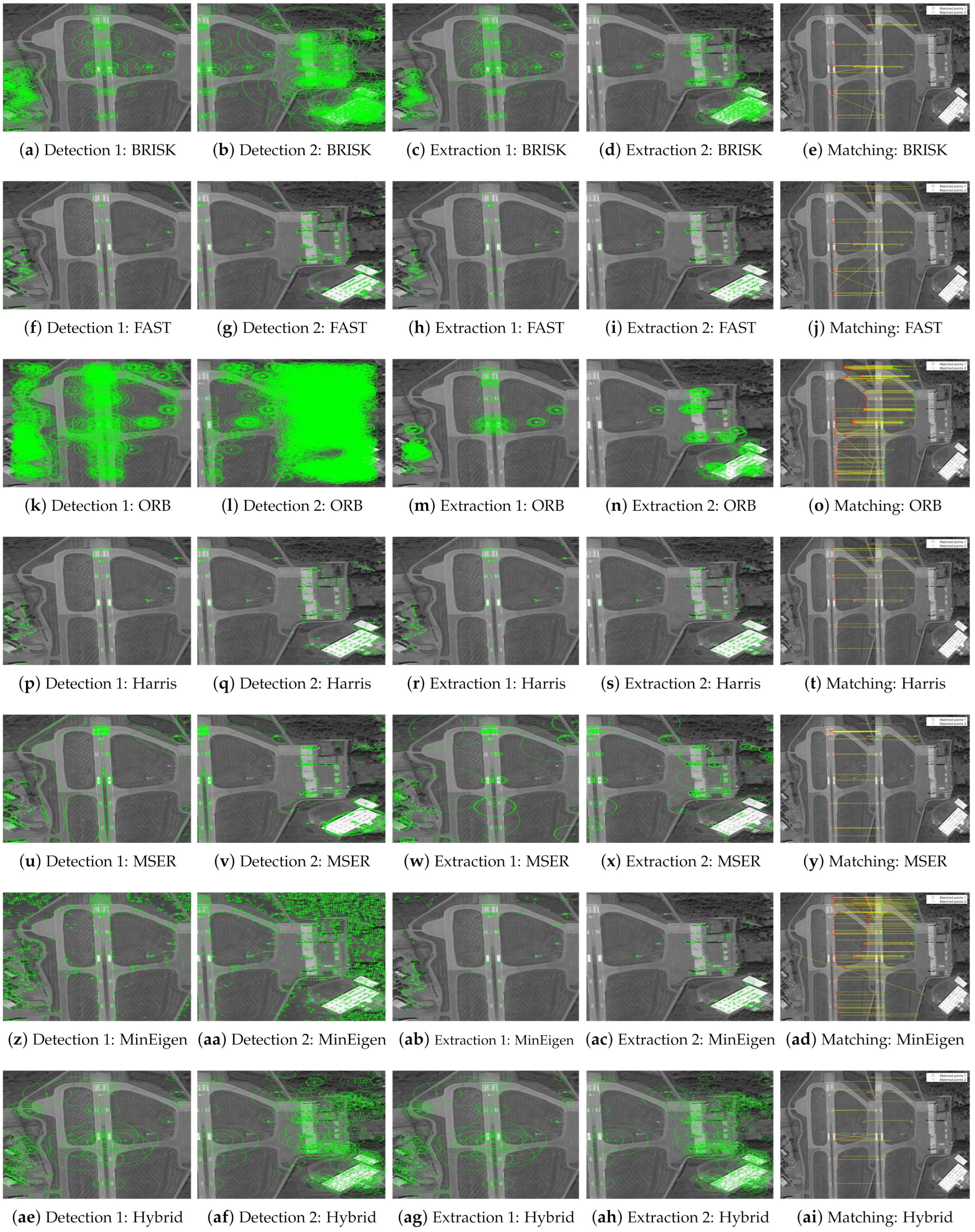

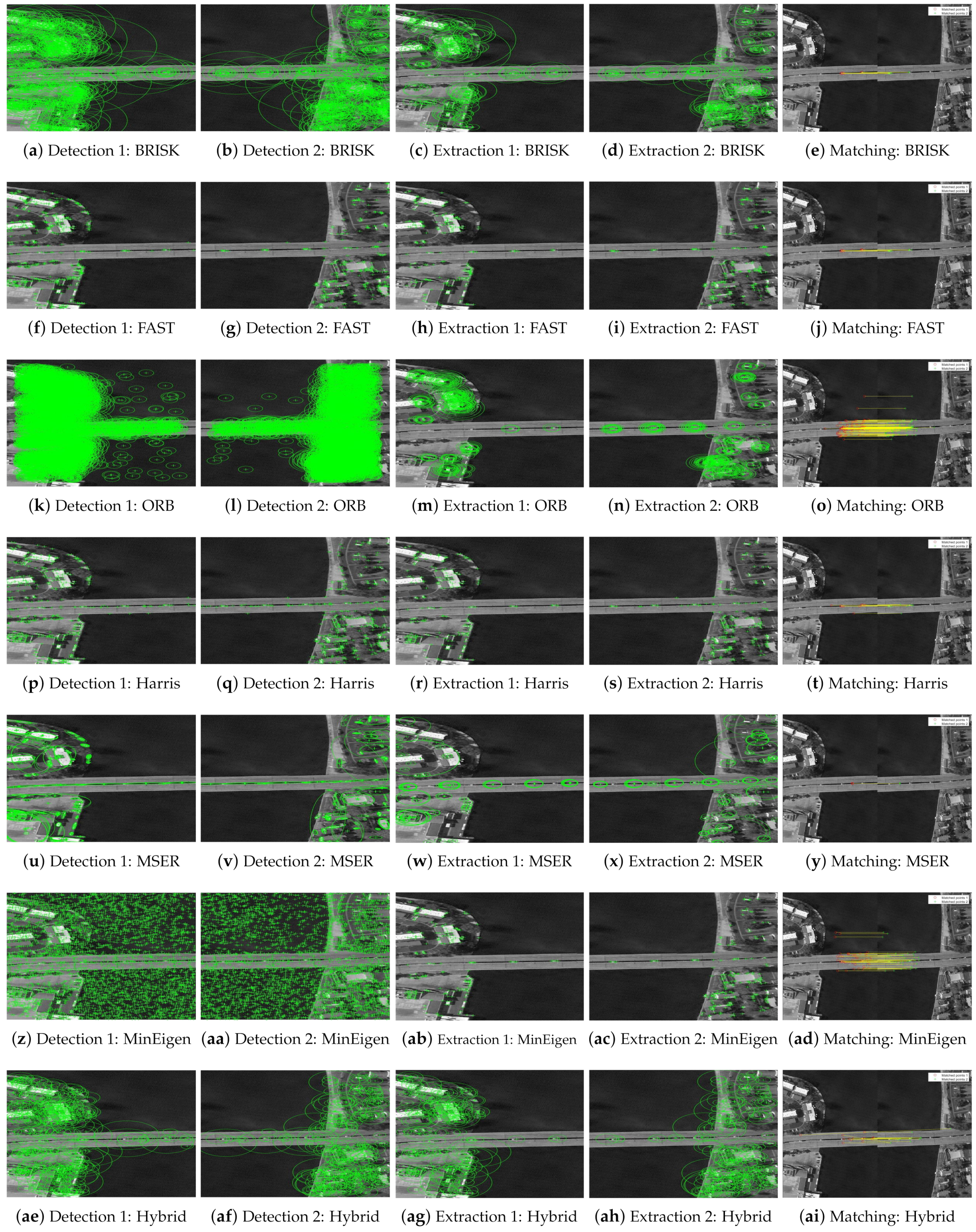

4.3. Scene-to-Model Registration

4.4. Scaling Transformations with Different Scale Vectors

4.4.1. Comparative Analysis of Feature Detectors

4.4.2. Impact of Scaling on Feature Detection

4.4.3. Advanced Analysis Using Registered Images

4.4.4. Analysis of Feature-Detection Metrics

4.5. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yuan, W.; Poosa, S.R.P.; Dirks, R.F. Comparative Analysis of Color Space and Channel, Detector, and Descriptor for Feature-Based Image Registration. J. Imaging 2024, 10, 105.

- Dai, X.; Khorram, S. A Feature-based Image Registration Algorithm using Improved Chain-Code Representation Combined with Invariant Moments. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2351–2362.

- Guan, S.Y.; Wang, T.M.; Meng, C.; Wang, J.C. A Review of Point Feature Based Medical Image Registration. Chin. J. Mech. Eng. 2018, 31, 76.

- Kuppala, K.; Banda, S.; Barige, T.R. An Overview of Deep Learning Methods for Image Registration with Focus on Feature-based Approaches. Int. J. Image Data Fusion 2020, 11, 113–135.

- Kumawat, A.; Panda, S. Feature Detection and Description in Remote Sensing Images Using a Hybrid Feature Detector. Procedia Comput. Sci. 2018, 132, 277–287.

- Kumawat, A.; Panda, S. Feature Extraction and Matching of River Dam Images in Odisha Using a Novel Feature Detector. In Proceedings of the Computational Intelligence in Pattern Recognition (CIPR); Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2020; pp. 703–713.

- Pratt, W.K. Digital Image Processing: PIKS Scientific Inside; Wiley Online Library: Hoboken, NJ, USA, 2007; Volume 4.

- Sridhar. Digital Image Processing; Oxford University Press, Inc.: Oxford, UK, 2011.

- Zitova, B.; Flusser, J.; Sroubek, F. Image Registration: A Survey and Recent Advances. In Proceedings of the International Conference on Image Processing, Genoa, Italy, 11–14 September 2005.

- Işık, Ş. A Comparative Evaluation of Well-Known Feature Detectors and Descriptors. Int. J. Appl. Math. Electron. Comput. 2014, 3, 1–6.

- Mikolajczyk, K.; Schmid, C. A Performance Evaluation of Local Descriptors. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2005, 27, 1615–1630.

- Mamadou, D.; Gouton, P.; Adou, K.J. A Comparative Study of Descriptors and Detectors in Multispectral Face Recognition. In Proceedings of the 12th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Naples, Italy, 28 November–1 December 2016; pp. 209–214.

- Rana, S.; Gerbino, S.; Crimaldi, M.; Cirillo, V.; Carillo, P.; Sarghini, F.; Maggio, A. Comprehensive Evaluation of Multispectral Image Registration Strategies in Heterogenous Agriculture Environment. J. Imaging 2024, 10, 61.

- Abraham, E.; Mishra, S.; Tripathi, N.; Sukumaran, G. HOG Descriptor Based Registration (A New Image Registration Technique). In Proceedings of the 15th International Conference on Advanced Computing Technologies (ICACT), Rajampet, India, 21–22 September 2013; pp. 1–4.

- Tondewad, M.P.S.; Dale, M.M.P. Remote Sensing Image Registration Methodology: Review and Discussion. Procedia Comput. Sci. 2020, 171, 2390–2399.

- Zhang, X.; Leng, C.; Hong, Y.; Pei, Z.; Cheng, I.; Basu, A. Multimodal Remote Sensing Image Registration Methods and Advancements: A Survey. Remote Sens. 2021, 13, 5128.

- Min, C.; Gu, Y.; Li, Y.; Yang, F. Non-Rigid Infrared and Visible Image Registration by Enhanced Affine Transformation. Pattern Recognit. 2020, 106, 107377.

- Kahaki, S.M.M.; Nordin, M.J.; Ashtari, A.H.; Zahra, S.J. Invariant Feature Matching for Image Registration Application Based on New Dissimilarity of Spatial Features. PLoS ONE 2016, 11, e0149710.

- Salahat, E.; Qasaimeh, M. Recent Advances in Features Extraction and Description Algorithms: A Comprehensive Survey. In Proceedings of the International Conference on Industrial Technology (ICIT), Toronto, ON, Canada, 22–25 March 2017; pp. 1059–1063.

- Xu, G.; Wu, Q.; Cheng, Y.; Yan, F.; Li, Z.; Yu, Q. A Robust Deformed Image Matching Method for Multi-Source Image Matching. Infrared Phys. Technol. 2021, 115, 103691.

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image Matching from Handcrafted to Deep Features: A Survey. Int. J. Comput. Vis. 2021, 129, 23–79.

- Liang, D.; Ding, J.; Zhang, Y. Efficient Multisource Remote Sensing Image Matching Using Dominant Orientation of Gradient. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 2194–2205.

- Liu, X.; Xue, J.; Xu, X.; Lu, Z.; Liu, R.; Zhao, B.; Li, Y.; Miao, Q. Robust Multimodal Remote Sensing Image Registration Based on Local Statistical Frequency Information. Remote Sens. 2022, 14, 1051.

- Hazra, J.; Chowdhury, A.R.; Dasgupta, K.; Dutta, P. A Hybrid Structural Feature Extraction-Based Intelligent Predictive Approach for Image Registration. In Innovations in Systems and Software Engineering; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–9.

- Liu, Z.; Xu, G.; Xiao, J.; Yang, J.; Wang, Z.; Cheng, S. A Real-Time Registration Algorithm of UAV Aerial Images Based on Feature Matching. J. Imaging 2023, 9, 67.

- Madhu; Kumar, R. A Hybrid Feature Extraction Technique for Content Based Medical Image Retrieval Using Segmentation and Clustering Techniques. Multimed. Tools Appl. 2022, 81, 8871–8904.

- Zhang, P.; Luo, X.; Ma, Y.; Wang, C.; Wang, W.; Qian, X. Coarse-to-Fine Image Registration for Multi-Temporal High Resolution Remote Sensing Based on a Low-Rank Constraint. Remote Sens. 2022, 14, 573.

- Karim, S.; Zhang, Y.; Brohi, A.A.; Asif, M.R. Feature Matching Improvement Through Merging Features for Remote Sensing Imagery. 3D Res. 2018, 9, 52.

- Zhang, D.; Wei, H.; Huang, X.; Ni, H. Research on High Precision Image Registration Method Based on Line Segment Feature and ICP Algorithm. In Proceedings of the International Conference on Optics and Machine Vision (ICOMV), Changsha, China, 6–8 January 2023; Volume 12634, pp. 90–96.

- Gui, P.; He, F.; Ling, B.W.K.; Zhang, D.; Ge, Z. Normal Vibration Distribution Search-Based Differential Evolution Algorithm for Multimodal Biomedical Image Registration. Neural Comput. Appl. 2023, 35, 16223–16245.

- Zhang, W.; Zhao, Y. SAR and Optical Image Registration Based on Uniform Feature Points Extraction and Consistency Gradient Calculation. Appl. Sci. 2023, 13, 1238.

- Lin, X.; Sun, S.; Huang, W.; Sheng, B.; Li, P.; Feng, D.D. EAPT: Efficient Attention Pyramid Transformer for Image Processing. IEEE Trans. Multimed. 2023, 25, 50–61.

- Jiang, N.; Sheng, B.; Li, P.; Lee, T.Y. PhotoHelper: Portrait Photographing Guidance Via Deep Feature Retrieval and Fusion. IEEE Trans. Multimed. 2023, 25, 2226–2238.

- Sheng, B.; Li, P.; Ali, R.; Chen, C.L.P. Improving Video Temporal Consistency via Broad Learning System. IEEE Trans. Cybern. 2022, 52, 6662–6675.

- Li, J.; Chen, J.; Sheng, B.; Li, P.; Yang, P.; Feng, D.D.; Qi, J. Automatic Detection and Classification System of Domestic Waste via Multimodel Cascaded Convolutional Neural Network. IEEE Trans. Ind. Inform. 2022, 18, 163–173.

- Chen, Z.; Qiu, J.; Sheng, B.; Li, P.; Wu, E. GPSD: Generative Parking Spot Detection Using Multi-Clue Recovery Model. Vis. Comput. 2021, 37, 2657–2669.

- Xie, Z.; Zhang, W.; Sheng, B.; Li, P.; Chen, C.L.P. BaGFN: Broad Attentive Graph Fusion Network for High-Order Feature Interactions. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 4499–4513.

- Donoser, M.; Bischof, H. Efficient Maximally Stable Extremal Region (MSER) Tracking. In Proceedings of the Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006; pp. 553–560.

- Rosten, E.; Drummond, T. Machine Learning for High-Speed Corner Detection. In Proceedings of the 9th European Conference on Computer Vision (ECCV), Graz, Austria, 7–13 May 2006; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3951, pp. 430–443.

- Leutenegger, S.; Chli, M.; Siegwart, R. BRISK: Binary Robust Invariant Scalable Keypoints. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

- Rosten, E.; Porter, R.B.; Drummond, T. Faster and Better: A Machine Learning Approach to Corner Detection. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2010, 32, 105–119.

- Jeyapal, A.; Ganesan, J.; Savarimuthu, S.R.; Perumal, I.; Eswaran, P.M.; Subramanian, L.; Anbalagan, N. A Comparative Study of Feature Detection Techniques for Navigation of Visually Impaired Person in an Indoor Environment. J. Comput. Theor. Nanosci. 2020, 17, 21–26.

- Tareen, S.A.K.; Saleem, Z. A Comparative Analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–10.

- Sánchez, J.; Monzón, N.; Salgado, A. An Analysis and Implementation of the Harris Corner Detector. Image Process. Line 2018, 8, 305–328.

- Hybrid Approach for FBIR. Available online: https://github.com/Anchal2016/Hybrid-approach-for-FBIR (accessed on 29 August 2024).

- Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A Benchmark Data Set for Performance Evaluation of Aerial Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

| Method/Algorithm | Characteristics | Applications |
|---|---|---|
| SIFT [13] | Scale-invariant, robust to rotation | Multispectral image registration |
| MSER [38] | Stable regions, distinctive features | Text detection, multi-source matching |
| SURF [13] | Fast, robust to scale and rotation | Multispectral matching |
| BRISK [10] | Fast, scale and rotation invariant | Generic image registration |
| FAST [39] | Very fast, lacks rotational invariance | High-speed feature detection |
| ORB [10] | Combines FAST and BRIEF, with rotation invariance | Cost-effective real-time applications |
| Harris–Affine [11] | High precision in detecting corners, not scale-invariant | Corner detection in images |
| Multispectral Facial Recognition [12] | Incorporates visible and IR images using various detectors | Facial recognition across spectrums |
| HOG [14] | Histogram of Oriented Gradients for keypoint matching | Offline transformation models |

| Image Quality Parameter | INR Technique | Affine Transformation | Similarity Transformation | Projective Transformation |
|---|---|---|---|---|
| MSE | Nearest Neighbor | 0.00438 | 0.00445 | 0.00431 |
| MSE | Bilinear | 0.00285 | 0.00293 | 0.00286 |
| MSE | Bicubic | 0.00214 | 0.00219 | 0.00221 |
| RMSE | Nearest Neighbor | 0.06620 | 0.06674 | 0.06565 |
| RMSE | Bilinear | 0.05335 | 0.05411 | 0.05348 |
| RMSE | Bicubic | 0.04626 | 0.04678 | 0.04704 |
| SNR | Nearest Neighbor | 18.28352 | 18.21146 | 18.35780 |
| SNR | Bilinear | 20.16101 | 20.03561 | 20.18970 |
| SNR | Bicubic | 21.39797 | 21.29897 | 21.24642 |
| PSNR | Nearest Neighbor | 23.58262 | 23.51229 | 23.65465 |
| PSNR | Bilinear | 25.45772 | 25.33521 | 25.48598 |
| PSNR | Bicubic | 26.69549 | 26.59826 | 26.79086 |
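The error metrics in the image-quality table are related by simple formulas, sketched below. The sketch assumes pixel intensities normalized to [0, 1] (peak value 1.0); this is an assumption, although it is consistent with the tabulated values, since an MSE of 0.00438 corresponds to an RMSE of about 0.0662 and a PSNR of about 23.58 dB. Function names are illustrative, and SNR is left out because its exact definition is not given here.

```python
import math


def mse(reference, test):
    """Mean squared error between two equal-length pixel sequences."""
    if len(reference) != len(test) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)


def rmse_from_mse(mse_value):
    """Root mean squared error is the square root of the MSE."""
    return math.sqrt(mse_value)


def psnr_from_mse(mse_value, peak=1.0):
    """PSNR in dB; peak=1.0 assumes intensities normalized to [0, 1]."""
    if mse_value == 0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / mse_value)


# Tiny hypothetical "images" flattened to lists of intensities.
reference = [0.0, 0.5, 1.0, 0.25]
test = [0.1, 0.5, 0.9, 0.25]
print(mse(reference, test))

# Cross-check against the nearest-neighbor / affine MSE entry.
print(rmse_from_mse(0.00438), psnr_from_mse(0.00438))
```

Lower MSE and RMSE and higher PSNR indicate a closer match to the reference, which is why bicubic interpolation ranks best in most rows of the table.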

| Detector | Det. Kpts1 | Det. Kpts2 | Ext. Kpts1 | Ext. Kpts2 | Matched Kpts | Match Rate (%) | Elapsed Time (s) | CPU Time (s) | PMT Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| Rotation Angle: , Match Rate Sum: 146.7800, Mean: 20.9685, Variance: 64.7572, Std. Dev.: 8.0471 | | | | | | | | | |
| BRISK | 572 | 736 | 430 | 680 | 72 | 10.59 | 11.42 | 12.84 | 11.42 |
| FAST | 234 | 291 | 207 | 288 | 54 | 18.75 | 4.71 | 4.19 | 4.71 |
| MSER | 678 | 591 | 678 | 591 | 78 | 13.20 | 6.79 | 8.56 | 6.79 |
| ORB | 6753 | 9936 | 6753 | 9936 | 3037 | 30.57 | 4.92 | 5.03 | 4.93 |
| Harris | 665 | 525 | 588 | 504 | 97 | 19.25 | 4.80 | 5.27 | 4.80 |
| MinEigen | 4140 | 3785 | 3573 | 3748 | 847 | 22.60 | 3.58 | 3.80 | 3.59 |
| Hybrid | 569 | 746 | 569 | 748 | 238 | 31.82 | 3.34 | 3.22 | 3.34 |
| Rotation Angle: , Match Rate Sum: 149.5300, Mean: 21.3614, Variance: 48.5798, Std. Dev.: 6.9699 | | | | | | | | | |
| BRISK | 572 | 730 | 430 | 677 | 74 | 10.93 | 11.69 | 13.83 | 11.70 |
| FAST | 234 | 263 | 207 | 256 | 68 | 26.56 | 4.47 | 4.09 | 4.45 |
| MSER | 678 | 586 | 678 | 586 | 142 | 24.23 | 7.46 | 7.30 | 7.46 |
| ORB | 6753 | 9535 | 6753 | 9535 | 3073 | 32.23 | 5.01 | 4.70 | 5.02 |
| Harris | 665 | 479 | 588 | 450 | 75 | 16.67 | 3.48 | 3.11 | 3.49 |
| MinEigen | 4140 | 3640 | 3573 | 3593 | 701 | 19.51 | 3.26 | 3.03 | 3.27 |
| Hybrid | 569 | 732 | 569 | 732 | 142 | 19.40 | 2.86 | 2.44 | 2.85 |
| Rotation Angle: , Match Rate Sum: 637.2900, Mean: 91.0414, Variance: 186.4632, Std. Dev.: 13.6551 | | | | | | | | | |
| BRISK | 572 | 569 | 430 | 426 | 268 | 62.91 | 3.99 | 3.78 | 4.00 |
| FAST | 234 | 234 | 207 | 207 | 205 | 99.03 | 3.95 | 3.70 | 3.95 |
| MSER | 678 | 678 | 678 | 678 | 678 | 100.00 | 5.89 | 5.44 | 5.89 |
| ORB | 6753 | 6753 | 6753 | 6753 | 6753 | 100.00 | 4.18 | 3.89 | 4.19 |
| Harris | 665 | 665 | 588 | 589 | 518 | 87.95 | 3.57 | 3.25 | 3.57 |
| MinEigen | 4140 | 4140 | 3573 | 3572 | 3122 | 87.40 | 2.91 | 2.34 | 2.91 |
| Hybrid | 569 | 569 | 569 | 569 | 569 | 100.00 | 2.71 | 2.41 | 2.71 |
| Rotation Angle: , Match Rate Sum: 148.2700, Mean: 21.1814, Variance: 56.2275, Std. Dev.: 7.4985 | | | | | | | | | |
| BRISK | 572 | 716 | 430 | 663 | 92 | 13.88 | 3.49 | 3.64 | 3.49 |
| FAST | 234 | 291 | 207 | 288 | 49 | 17.01 | 4.68 | 4.47 | 4.68 |
| MSER | 673 | 591 | 678 | 591 | 78 | 13.20 | 7.73 | 8.45 | 7.73 |
| ORB | 6753 | 9936 | 6753 | 9936 | 3037 | 30.57 | 5.17 | 5.42 | 5.19 |
| Harris | 665 | 525 | 588 | 504 | 101 | 20.04 | 4.07 | 4.13 | 4.07 |
| MinEigen | 4140 | 3735 | 3573 | 3747 | 815 | 21.75 | 3.15 | 2.72 | 3.15 |
| Hybrid | 569 | 748 | 569 | 748 | 238 | 31.82 | 2.98 | 2.61 | 2.99 |
| Rotation Angle: , Match Rate Sum: 150.1200, Mean: 21.4457, Variance: 45.5085, Std. Dev.: 6.7460 | | | | | | | | | |
| BRISK | 572 | 722 | 430 | 673 | 95 | 14.12 | 6.49 | 7.86 | 6.48 |
| FAST | 234 | 295 | 207 | 289 | 42 | 14.53 | 3.89 | 3.30 | 3.88 |
| MSER | 678 | 580 | 678 | 580 | 107 | 18.45 | 7.57 | 7.86 | 7.57 |
| ORB | 6753 | 10,381 | 6753 | 10,381 | 2964 | 28.55 | 5.10 | 4.91 | 5.08 |
| Harris | 665 | 471 | 588 | 451 | 84 | 18.63 | 3.42 | 3.30 | 3.42 |
| MinEigen | 4140 | 3424 | 3573 | 3388 | 838 | 24.73 | 3.48 | 3.19 | 3.48 |
| Hybrid | 569 | 736 | 569 | 736 | 229 | 31.11 | 2.88 | 3.19 | 2.88 |
| Rotation Angle: , Match Rate Sum: 682.85, Mean: 97.5500, Variance: 33.1407, Std. Dev.: 5.7567 | | | | | | | | | |
| BRISK | 572 | 568 | 430 | 426 | 360 | 84.51 | 3.60 | 2.44 | 3.59 |
| FAST | 234 | 234 | 207 | 207 | 207 | 100.00 | 3.98 | 3.03 | 3.98 |
| MSER | 678 | 678 | 678 | 678 | 674 | 99.41 | 6.23 | 7.11 | 6.23 |
| ORB | 6753 | 6753 | 6753 | 6753 | 6753 | 100.00 | 3.75 | 3.75 | 3.75 |
| Harris | 665 | 665 | 588 | 588 | 585 | 99.49 | 3.64 | 3.27 | 3.63 |
| MinEigen | 4140 | 4140 | 3573 | 3568 | 3548 | 99.44 | 3.04 | 2.88 | 3.04 |
| Hybrid | 569 | 569 | 569 | 569 | 569 | 100.00 | 2.84 | 2.58 | 2.84 |
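The summary figures attached to each rotation-angle group (Sum, Mean, Variance, Std. Dev.) appear to be computed over the seven match rates in that group. The sketch below checks this for the first group; it assumes the sample variance (division by n − 1), which is an inference from the numbers rather than something stated in the text.

```python
import statistics

# Match rates (%) of BRISK, FAST, MSER, ORB, Harris, MinEigen, and Hybrid
# for the first rotation-angle group in the table above.
match_rates = [10.59, 18.75, 13.20, 30.57, 19.25, 22.60, 31.82]

total = sum(match_rates)
mean = statistics.mean(match_rates)
variance = statistics.variance(match_rates)  # sample variance (n - 1), assumed
std_dev = statistics.stdev(match_rates)      # square root of the sample variance

print(f"Sum: {total:.4f}, Mean: {mean:.4f}, "
      f"Variance: {variance:.4f}, Std. Dev.: {std_dev:.4f}")
```

The results agree with the reported group statistics to within rounding, which supports reading those figures as match-rate statistics across detectors.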

| Detector | Detected Kpts1 | Detected Kpts2 | Extracted Kpts1 | Extracted Kpts2 | Matched Kpts | Matched Rate (%) | Elapsed Time (s) | CPU Time (s) | PMT Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| Rotation Angle: | | | | | | | | | |
| BRISK | 1634 | 1973 | 1499 | 1951 | 173 | 8.86 | 13.92 | 16.08 | 13.93 |
| FAST | 894 | 1128 | 859 | 1125 | 179 | 15.91 | 4.84 | 6.72 | 4.84 |
| MSER | 767 | 779 | 767 | 779 | 126 | 16.71 | 7.51 | 7.78 | 7.50 |
| ORB | 13,704 | 18,521 | 13,704 | 18,521 | 5177 | 27.95 | 7.45 | 10.48 | 7.44 |
| Harris | 1176 | 1081 | 1119 | 1049 | 162 | 15.44 | 4.61 | 4.13 | 4.60 |
| MinEigen | 5213 | 4590 | 4608 | 4550 | 645 | 14.17 | 4.35 | 3.89 | 4.34 |
| Hybrid | 976 | 1009 | 976 | 1009 | 290 | 28.74 | 3.63 | 3.59 | 3.64 |
| Rotation Angle: | | | | | | | | | |
| BRISK | 1634 | 1906 | 1499 | 1872 | 177 | 9.45 | 13.29 | 13.25 | 13.29 |
| FAST | 894 | 951 | 859 | 944 | 170 | 18.00 | 4.88 | 5.11 | 4.88 |
| MSER | 767 | 739 | 767 | 739 | 179 | 24.22 | 6.74 | 7.88 | 6.74 |
| ORB | 13,704 | 17,713 | 13,704 | 17,713 | 5393 | 30.44 | 8.91 | 10.45 | 8.92 |
| Harris | 1176 | 1270 | 1119 | 1236 | 142 | 11.48 | 4.84 | 3.92 | 4.85 |
| MinEigen | 5213 | 4623 | 4608 | 4568 | 495 | 10.83 | 3.83 | 4.75 | 3.84 |
| Hybrid | 976 | 1063 | 976 | 1063 | 322 | 30.29 | 3.44 | 3.86 | 3.44 |
| Rotation Angle: | | | | | | | | | |
| BRISK | 1634 | 1648 | 1499 | 1512 | 938 | 62.03 | 4.74 | 4.45 | 4.75 |
| FAST | 894 | 894 | 859 | 859 | 797 | 92.78 | 3.42 | 3.13 | 3.43 |
| MSER | 767 | 767 | 767 | 767 | 755 | 98.43 | 16.69 | 23.30 | 16.68 |
| ORB | 13,704 | 13,704 | 13,704 | 13,704 | 13,704 | 100.00 | 5.67 | 7.22 | 5.66 |
| Harris | 1176 | 1176 | 1119 | 1119 | 933 | 83.37 | 4.23 | 3.61 | 4.23 |
| MinEigen | 5213 | 5213 | 4608 | 4613 | 3600 | 78.04 | 3.64 | 3.72 | 3.64 |
| Hybrid | 976 | 976 | 976 | 976 | 976 | 100.00 | 2.55 | 2.77 | 2.55 |
| Rotation Angle: | | | | | | | | | |
| BRISK | 1634 | 1972 | 1499 | 1948 | 156 | 8.00 | 4.31 | 4.72 | 4.31 |
| FAST | 894 | 1128 | 859 | 1125 | 185 | 16.44 | 3.61 | 4.41 | 3.61 |
| MSER | 767 | 779 | 767 | 779 | 126 | 16.17 | 6.58 | 7.91 | 6.59 |
| ORB | 13,704 | 18,521 | 13,704 | 18,521 | 5177 | 27.95 | 8.32 | 11.36 | 8.33 |
| Harris | 1176 | 1081 | 1119 | 1049 | 158 | 15.06 | 4.91 | 5.73 | 4.89 |
| MinEigen | 5213 | 4590 | 4608 | 4550 | 620 | 13.62 | 3.37 | 3.77 | 3.37 |
| Hybrid | 976 | 1009 | 976 | 1009 | 291 | 28.84 | 3.33 | 4.44 | 3.33 |
| Rotation Angle: | | | | | | | | | |
| BRISK | 1634 | 1932 | 1499 | 1899 | 179 | 9.42 | 4.55 | 5.19 | 4.55 |
| FAST | 894 | 1144 | 859 | 1137 | 163 | 14.33 | 4.55 | 4.77 | 4.56 |
| MSER | 767 | 726 | 767 | 726 | 163 | 22.45 | 6.49 | 6.64 | 6.49 |
| ORB | 13,704 | 18,282 | 13,704 | 18,282 | 5132 | 28.07 | 7.57 | 11.28 | 7.57 |
| Harris | 1176 | 1210 | 1119 | 1182 | 149 | 12.60 | 4.01 | 4.11 | 4.02 |
| MinEigen | 5213 | 4632 | 4608 | 4592 | 559 | 12.17 | 3.73 | 3.77 | 3.73 |
| Hybrid | 976 | 1022 | 976 | 1022 | 267 | 26.12 | 3.61 | 3.71 | 3.61 |
| Rotation Angle: | | | | | | | | | |
| BRISK | 1634 | 1634 | 1499 | 1501 | 1325 | 88.27 | 2.95 | 2.75 | 2.95 |
| FAST | 894 | 894 | 859 | 861 | 859 | 99.76 | 4.06 | 3.00 | 4.06 |
| MSER | 767 | 767 | 767 | 767 | 754 | 98.30 | 6.78 | 6.33 | 6.79 |
| ORB | 13,704 | 13,704 | 13,704 | 13,704 | 13,704 | 100.00 | 6.56 | 7.88 | 6.56 |
| Harris | 1176 | 1176 | 1119 | 1121 | 1118 | 99.73 | 4.24 | 3.22 | 4.24 |
| MinEigen | 5213 | 5213 | 4608 | 4615 | 4590 | 99.45 | 3.51 | 2.73 | 3.50 |
| Hybrid | 976 | 976 | 976 | 976 | 976 | 100.00 | 2.81 | 2.94 | 2.81 |

| Detection Method | Detected Kpts1 | Detected Kpts2 | Extracted Kpts1 | Extracted Kpts2 | Matched Kpts | Matched Rate (%) | Elapsed Time (s) | CPU Time (s) | PMT Time (s) |
|---|---|---|---|---|---|---|---|---|---|
| Airport Aerial Images | | | | | | | | | |
| BRISK | 278 | 731 | 195 | 604 | 24 | 19.85 | 4.93 | 4.73 | 4.93 |
| FAST | 201 | 464 | 150 | 404 | 28 | 34.65 | 6.11 | 5.25 | 6.12 |
| MSER | 173 | 270 | 173 | 270 | 34 | 12.59 | 6.26 | 5.30 | 6.25 |
| ORB | 1253 | 3759 | 1253 | 3759 | 129 | 17.15 | 5.51 | 4.56 | 5.51 |
| Harris | 153 | 342 | 117 | 289 | 21 | 36.30 | 5.52 | 4.83 | 5.52 |
| MinEigen | 955 | 2176 | 697 | 1689 | 100 | 29.60 | 5.59 | 4.09 | 5.59 |
| Hybrid | 89 | 257 | 89 | 257 | 38 | 73.90 | 4.48 | 3.86 | 4.47 |
| Bridge Aerial Images | | | | | | | | | |
| BRISK | 830 | 577 | 644 | 412 | 7 | 8.45 | 5.69 | 4.69 | 5.68 |
| FAST | 475 | 294 | 397 | 239 | 9 | 18.80 | 4.53 | 4.14 | 4.53 |
| MSER | 558 | 385 | 558 | 385 | 7 | 9.05 | 6.80 | 6.98 | 6.80 |
| ORB | 3805 | 3573 | 3805 | 3573 | 126 | 17.60 | 5.08 | 5.02 | 5.08 |
| Harris | 435 | 382 | 350 | 329 | 12 | 18.20 | 5.11 | 4.64 | 5.13 |
| MinEigen | 3664 | 3465 | 3101 | 2897 | 48 | 8.25 | 4.94 | 4.95 | 4.94 |
| Hybrid | 367 | 282 | 367 | 282 | 14 | 24.80 | 4.45 | 4.54 | 4.45 |

| Image Name | Original Size | Scaling Vector | Scaled Size | IQA | Bicubic | Bilinear | Nearest |
|---|---|---|---|---|---|---|---|
| VSSUT | 1024 × 768 (134 KB) | 0.7 | 717 × 538 (65.7 KB) | PSNR | 30.31 | 29.52 | 26.74 |
| VSSUT | 1024 × 768 (134 KB) | 0.7 | 717 × 538 (65.7 KB) | MSE | 0.00093 | 0.00112 | 0.00212 |
| Hirakud dam | 550 × 412 (34.7 KB) | 0.7 | 385 × 289 (15.4 KB) | PSNR | 31.75 | 29.33 | 26.70 |
| Hirakud dam | 550 × 412 (34.7 KB) | 0.7 | 385 × 289 (15.4 KB) | MSE | 0.00067 | 0.00117 | 0.00214 |
| VSSUT | 1024 × 768 (134 KB) | 2.0 | 2048 × 1536 (330 KB) | PSNR | 26.60 | 25.94 | 24.38 |
| VSSUT | 1024 × 768 (134 KB) | 2.0 | 2048 × 1536 (330 KB) | MSE | 0.00219 | 0.00249 | 0.00364 |
| Hirakud dam | 550 × 412 (34.7 KB) | 2.0 | 1100 × 824 (73.2 KB) | PSNR | 31.31 | 30.20 | 28.81 |
| Hirakud dam | 550 × 412 (34.7 KB) | 2.0 | 1100 × 824 (73.2 KB) | MSE | 0.00074 | 0.00095 | 0.00131 |

| Image Name | Original Size | Scaling Vector | Scaled Size | IQA | Bicubic | Bilinear | Nearest |
|---|---|---|---|---|---|---|---|
| VSSUT | 1024 × 768 (134 KB) | 0.7 | 717 × 538 (65.7 KB) | PSNR | 30.66 | 30.31 | 26.59 |
| VSSUT | 1024 × 768 (134 KB) | 0.7 | 717 × 538 (65.7 KB) | MSE | 0.00086 | 0.00093 | 0.00219 |
| Hirakud dam | 550 × 412 (34.7 KB) | 0.7 | 385 × 289 (15.4 KB) | PSNR | 29.83 | 29.14 | 25.68 |
| Hirakud dam | 550 × 412 (34.7 KB) | 0.7 | 385 × 289 (15.4 KB) | MSE | 0.00104 | 0.00122 | 0.00270 |
| VSSUT | 1024 × 768 (134 KB) | 2.0 | 2048 × 1536 (330 KB) | PSNR | 26.87 | 25.92 | 24.21 |
| VSSUT | 1024 × 768 (134 KB) | 2.0 | 2048 × 1536 (330 KB) | MSE | 0.00206 | 0.00256 | 0.00379 |
| Hirakud dam | 550 × 412 (34.7 KB) | 2.0 | 1100 × 824 (73.2 KB) | PSNR | 30.57 | 28.03 | 25.47 |
| Hirakud dam | 550 × 412 (34.7 KB) | 2.0 | 1100 × 824 (73.2 KB) | MSE | 0.00088 | 0.00157 | 0.00283 |

| Image Name | Original Size | Scaling Vector | Scaled Size | IQA | Bicubic | Bilinear | Nearest |
|---|---|---|---|---|---|---|---|
| VSSUT | 1024 × 768 (134 KB) | 0.7 | 717 × 538 (65.7 KB) | PSNR | 31.47 | 30.34 | 27.02 |
| VSSUT | 1024 × 768 (134 KB) | 0.7 | 717 × 538 (65.7 KB) | MSE | 0.00071 | 0.00093 | 0.00198 |
| Hirakud dam | 550 × 412 (34.7 KB) | 0.7 | 385 × 289 (15.4 KB) | PSNR | 34.11 | 31.66 | 26.78 |
| Hirakud dam | 550 × 412 (34.7 KB) | 0.7 | 385 × 289 (15.4 KB) | MSE | 0.00039 | 0.00068 | 0.00210 |
| VSSUT | 1024 × 768 (134 KB) | 2.0 | 2048 × 1536 (330 KB) | PSNR | 26.89 | 26.04 | 24.38 |
| VSSUT | 1024 × 768 (134 KB) | 2.0 | 2048 × 1536 (330 KB) | MSE | 0.00205 | 0.00249 | 0.00364 |
| Hirakud dam | 550 × 412 (34.7 KB) | 2.0 | 1100 × 824 (73.2 KB) | PSNR | 31.31 | 29.75 | 25.93 |
| Hirakud dam | 550 × 412 (34.7 KB) | 2.0 | 1100 × 824 (73.2 KB) | MSE | 0.00074 | 0.00106 | 0.00255 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kumawat, A.; Panda, S.; Gerogiannis, V.C.; Kanavos, A.; Acharya, B.; Manika, S. A Hybrid Approach for Image Acquisition Methods Based on Feature-Based Image Registration. J. Imaging 2024, 10, 228. https://doi.org/10.3390/jimaging10090228