Abstract

Mammography images are the most commonly used tool for breast cancer screening. The presence of pectoral muscle in images for the mediolateral oblique view makes designing a robust automated breast cancer detection system more challenging. Most of the current methods for removing the pectoral muscle are based on traditional machine learning approaches. This is partly due to the lack of segmentation masks of pectoral muscle in available datasets. In this paper, we provide the segmentation masks of the pectoral muscle for the INbreast, MIAS, and CBIS-DDSM datasets, which will enable the development of supervised methods and the utilization of deep learning. Training deep learning-based models using segmentation masks will also be a powerful tool for removing pectoral muscle for unseen data. To test the validity of this idea, we trained AU-Net separately on the INbreast and CBIS-DDSM for the segmentation of the pectoral muscle. We used cross-dataset testing to evaluate the performance of the models on an unseen dataset. In addition, the models were tested on all of the images in the MIAS dataset. The experimental results show that cross-dataset testing achieves a comparable performance to the same-dataset experiments.

1. Introduction

Breast cancer is one of the main cancer types in the female population, with a high mortality rate. Mammograms are images taken from two views of a compressed breast region: the craniocaudal (CC) and mediolateral oblique (MLO) views. In the CC view, the breast is compressed horizontally; in the MLO view, the compression is diagonal. Mammography images are the most commonly used tool for breast cancer screening due to their availability and lower cost. Therefore, developing automated cancer detection methods for these images is of high importance, as detecting abnormalities accurately in the early stages increases patients' survival chances [1].

While current methods have improved the performance considerably, several challenges hinder the performance of these methods for mammography images. For instance, in the MLO view, a portion of the pectoral muscle is usually visible in the final image. Pectoral muscles, fibroglandular tissue, and abnormalities all appear as brighter regions compared to the fatty tissues in the images. Therefore, detecting abnormalities becomes more challenging in cases with high breast tissue density or in the presence of the pectoral muscle. This emphasizes the need for approaches to address the challenges of abnormality detection in high-density cases and removal of the pectoral muscle in the images.

This paper targets the latter problem, which is also highly important for automated density estimation. Because segmentation masks of the pectoral muscle are not normally available in public datasets or in routine clinical breast cancer screening examinations, most of the current pectoral muscle removal approaches are based on traditional machine learning. As the muscle’s location, shape, and density vary between images, the performance of these methods is limited. Deep learning-based approaches would help mitigate these hurdles to a great extent if annotations for the pectoral muscle were available.

We argue that providing annotations, even for only a few datasets, will enable researchers to train supervised pectoral muscle removal models and apply them to new, unseen datasets. Hence, following the work of Aliniya et al. [2], we provide pectoral muscle segmentation masks for three benchmark datasets: INbreast [3], MIAS [4], and CBIS-DDSM [5]. The segmentation masks are available at https://github.com/Parvaneh-Aliniya/pectoral_muscle_groundtruth_segmentation, accessed on 1 December 2024. We separately trained AU-Net [6], a widely used segmentation method for mammography images, on the datasets. Same-dataset and cross-dataset tests were used to validate the proposed method. In same-dataset tests, the train and test sets belong to the same dataset; in cross-dataset experiments, the train and test sets come from two different datasets. The models achieve high accuracy in both same-dataset and cross-dataset experiments.

Pectoral muscle removal methods are beneficial for tasks such as density estimation in which the presence of pectoral muscle decreases the accuracy of the estimation when the muscle is wrongly detected as dense tissue. Moreover, they could be used as a preprocessing step in segmentation and classification tasks. In addition, the pectoral muscle removal methods could also improve the performance of multi-view approaches that use both MLO- and CC-view images as input.

The contributions of this paper are three-fold:

- Generating the segmentation masks of pectoral muscle for INbreast, MIAS, and CBIS-DDSM datasets.

- Training pectoral muscle removal models using the AU-Net architecture separately for INbreast and CBIS-DDSM datasets.

- Evaluating the models by same-dataset and cross-dataset testing to measure the generalizability of the supervised trained models on the same and new datasets.

In the following, we first review the literature on pectoral muscle removal and segmentation methods for mammography images and then present the proposed method. Finally, the experimental results section provides the results of the experiments and comparisons with state-of-the-art methods.

2. Related Work

In this section, we provide a review of the previous methods proposed to tackle the pectoral muscle removal task [7,8,9,10,11] and a brief review of segmentation methods, with a focus on those proposed for mammography images. Some methods consider the task as segmentation, and others use the phrase “pectoral muscle removal”. In this paper, we use both phrases according to the context. As it is a trivial task for radiologists to exclude the pectoral muscle visually while reading mammograms, the segmentation of the pectoral muscle is not available for the images in most datasets; hence, to the best of our knowledge, all of the proposed methods are traditional machine learning-based methods. These methods, in general, aim to use the appearance of the muscle and its location (after preprocessing to unify the orientation of the breast to be all left or all right) to detect and eliminate it.

2.1. Pectoral Muscle Removal Methods

According to a recent study [1], thresholding [12,13,14,15] and region growing [16,17,18] are widely used approaches.

The general idea for thresholding is to use the observation that the brightness of the pectoral muscle is generally higher than the neighboring regions; therefore, by eliminating pixels lower than a certain threshold, the region for the pectoral muscle will be extracted. This idea, coupled with the utilization of the orientation of the breast (whether the breast is on the left or right side of the image) and the generic shape of the muscle, has been used in the literature. In this category, Subashini et al. [13] used a thresholding-based approach for pectoral muscle removal, in which they first extracted the rectangle in the image where the pectoral muscle was assumed to be located and then used thresholding within the rectangle to detect the muscle. The height of the rectangle was fixed relative to the image’s height, and its width was selected according to the width of the breast area on top of the image. Tayel et al. [14] proposed an approach that eliminates the need for a predefined region. To this end, they employed the idea of retaining only a region corresponding to the location of the muscle after thresholding. In the same category, Czaplicka et al. [15] proposed using multi-level thresholding, and Shrivastava et al. [19] developed a method using a sliding window for thresholding.
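The rectangle-plus-threshold idea can be illustrated with a minimal sketch. It is not the implementation of [13]: the function name `threshold_in_roi`, the fixed threshold, the toy pixel values, and the assumption that the breast is left-oriented (muscle in the top-left corner) are all chosen here for illustration only.

```python
def threshold_in_roi(image, roi_height_frac, roi_width, threshold):
    """Mark bright pixels inside a top-left rectangle of a left-oriented image.

    image is a list of rows of grayscale values; the rectangle height is a
    fixed fraction of the image height and its width is given in pixels.
    """
    rows, cols = len(image), len(image[0])
    roi_rows = int(rows * roi_height_frac)
    roi_cols = min(roi_width, cols)
    mask = [[0] * cols for _ in range(rows)]
    for r in range(roi_rows):
        for c in range(roi_cols):
            if image[r][c] >= threshold:
                mask[r][c] = 1
    return mask


# Toy 4x5 "mammogram": a bright wedge in the top-left corner and one bright
# pixel (value 210) elsewhere in the breast, outside the rectangle.
image = [
    [200, 190, 180, 60, 50],
    [195, 185, 70, 55, 50],
    [190, 80, 60, 210, 50],
    [75, 65, 60, 55, 50],
]
mask = threshold_in_roi(image, roi_height_frac=0.75, roi_width=3, threshold=150)
for row in mask:
    print(row)
```

The bright pixel outside the rectangle is ignored, which is the benefit of restricting the search region; conversely, any part of the muscle that extends beyond the rectangle would be missed.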

There are several drawbacks to these approaches. First, in many cases, the difference between the brightness of the pectoral muscle and the surrounding pixels is not high enough to lead to an accurate boundary for the pectoral muscle using thresholding. In addition, there are certain artifacts that are overlaid on the pectoral muscle region (such as tape or notes) in some images that introduce errors to the thresholding method. Moreover, the region for the pectoral muscle is not always consistent, so thresholding may lead to sub-optimal results in such cases. Finally, predefined regions for the selection are not generalizable to all the cases, and the pectoral muscle may exceed the region.

The second category of methods for pectoral muscle removal consists of region-growing-based methods. These methods generally start with initial seeds; then, according to certain similarity metrics, they continue adding a new neighboring pixel to a region until a termination criterion is met [16]. Chen et al. [20] proposed using a pixel near the border between the pectoral muscle and the breast tissue (which was approximated) as a starting seed. For the ending threshold value, they used growing thresholding, which stops near the edges of the image. This approach relies on the border of the pectoral muscle being well-defined, which does not apply to many samples, specifically for samples with higher breast tissue density. Instead of approximating the location for the border, Nagi et al. [21] used the approximate location for the pectoral muscle after determining the orientation for the breast to place the initial seed. Then, the region-growing algorithm was applied to the starting point. Maitra et al. [22] introduced several improvements to the previous method by using a triangle that encapsulated the pectoral muscle after flipping the images (to achieve left orientation for all images). For seed selection, they proposed to use the diagonal of a defined rectangle encapsulating the pectoral muscle from top-left to bottom-right. The points in the line that were located inside the triangle were selected as the seeds. In addition, they used new selection criteria based on the minimum, maximum, and average values for the pixels. Priyanka et al. [23] proposed a region-growing method by optimizing the initial seed selection stage. The flooding algorithm was used to grow the region, and the process of adding a new point was conditioned on a predetermined intensity standard.
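As a rough illustration of the generic region-growing loop described above, the following sketch grows a 4-connected region from a single seed and accepts a neighbor when its intensity is within a tolerance of the seed value. The similarity criterion, the seed position, and the toy image are assumptions made for this example and do not correspond to any specific method in [16,20,21,22,23].

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a 4-connected region from seed, adding pixels whose intensity
    differs from the seed intensity by at most tolerance."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:  # terminates when no neighbor satisfies the criterion
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    queue.append((nr, nc))
    return region


image = [
    [200, 190, 180, 60, 50],
    [195, 185, 70, 55, 50],
    [190, 80, 60, 210, 50],
    [75, 65, 60, 55, 50],
]
region = region_grow(image, seed=(0, 0), tolerance=25)
print(sorted(region))
```

In this toy image, the isolated bright pixel at row 2, column 3 is not added because it is not connected to the seed through similar pixels. When the muscle border is faint, however, the same rule lets the region leak into dense tissue, which is the limitation noted above.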

Aside from the previous methods that aim to use region growing and thresholding, graph-cut [24], Hough Transform [25], line estimation, polynomial fitting, curve estimation [26], k-means [27], active contours [28], and contour growing [29] are also used in several methods. For instance, Dhimann et al. [30] proposed to first blur the image and then apply the Canny edge detector on the image. Finally, the Hough lines were detected from the detected edges, which were further processed to select the best line. This method has a few limitations. First, selecting the degree of blurring as a hyperparameter is challenging, specifically in mammograms where the grayscale range may change from one image to another. In addition, selecting a line as the border of the muscle decreases the detection accuracy. Mahaveera et al. [31] proposed a cluster-based method that used intensity as the value for clustering. Then, the connected-components-labeling algorithm was utilized to differentiate between the muscle and breast regions (the assumption about the location of the masses was used in this stage). Finally, they refined the region to improve the results. The main drawback of this method is that in more challenging cases in which the boundary between the muscle and the breast region is unclear (or the diversity of the pixel values is high in the breast region), a considerable number of pixels might be misplaced. Chen et al. [32] developed a method that relies on image binarization to help distinguish between bright and darker regions (as the pectoral muscle is usually brighter than the surrounding regions). A Canny edge detector was applied to the resulting image, and the edges were improved with interpolation. This method is sensitive to noise in the images and unclear borders between the muscle and the breast tissue.

2.2. Segmentation Methods

In recent years, many methods have been proposed for segmentation in mammography images and other domains. In this section, we focus on reviewing the segmentation methods, specifically for the segmentation of masses in mammography images. As masses are one of the dominant abnormalities in the breast, most of the segmentation methods for mammography images focus on this group.

The authors of [33,34] were among the first to design a segmentation method using deep learning. U-Net [35] is a pioneering work in deep learning-based segmentation methods for medical applications. The fully convolutional network (FCN) [33] introduced a network with skip connections and end-to-end training for segmentation tasks. U-Net extended the core idea of FCN by proposing a symmetric encoder–decoder network that differed from FCN in that the skip connections were more present throughout a network with a symmetric structure in the encoder and decoder parts. In addition, instead of summation, U-Net utilized concatenation for the feature maps and further processed the output of the concatenation.

The methods up to this point were generic segmentation methods, focusing on improvement of the performance. However, to achieve the best performance for specific tasks, such as applications in mammography images, it is vital to take the specific characteristics of the input and the desired output into consideration. Hence, following the success of U-Net and FCN, in recent years, studies [36,37,38,39,40,41,42,43,44,45] in the medical imaging domain have exceeded the performance limits of segmentation through the adaptation and advancement of these approaches. These approaches have been proposed for a variety of medical images, such as images for pelvic organs [36] and gland segmentation [44]. For instance, Drozdzal et al. [46] explored the idea of creating a deeper FCN by adding a short skip connection to the decoder and encoder paths in order to improve the performance of segmentation for biomedical images. Zhou et al. [45] developed more sophisticated skip connections to create more semantically compatible features before merging the feature maps from the contracting and expanding paths. This method was tested on images of liver, colon polyp, and cell nuclei.

Hai et al. [47] improved the design of U-Net while considering the challenging features of mammography data, such as the diversity of shapes and sizes. To this end, they utilized an Atrous Spatial Pyramid Pooling (ASPP) module in the transition between the encoder and decoder paths. The ASPP block consisted of a conv layer plus three atrous convolutions [48] with sample rates of 6, 12, and 18; the outputs of these layers were concatenated and fed into a conv layer. FC-DenseNet [49] was selected as the backbone of the method. Shuyi et al. [50] proposed another U-Net-based approach, built on the idea of utilizing densely connected blocks for mass segmentation in mammography images. In the encoder, the path is constructed from densely connected CNNs [51]. In the decoder, gated attention [39] modules are used when combining high- and low-level features, allowing the model to focus more on the target. Another line of research within the scope of multi-scale studies is [52], in which the generator is an improved version of U-Net for mass segmentation. Multi-scale segmentation results were created for three critics with identical structures and different scales in the discriminator. Ravitha et al. [53] developed an approach that uses the error of the outputs of intermediate layers (in both encoder and decoder paths) relative to the ground truth labels as a supervision signal to boost the model’s performance. In every stage of the encoder and decoder, attention blocks with upsampling were applied to the outputs of the block. The resulting features were linearly combined with the output of the decoder and incorporated into the objective criterion of the network to enhance the robustness of the method.
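To give a sense of why atrous rates of 6, 12, and 18 capture context at several scales, the short sketch below computes the effective extent of a dilated kernel. The 3 × 3 kernel size is an assumption (it is not stated above), and the helper names are hypothetical.

```python
def effective_kernel_size(kernel_size, rate):
    """Spatial extent covered by a kernel whose taps are spaced rate pixels apart."""
    return kernel_size + (kernel_size - 1) * (rate - 1)


def tap_offsets(kernel_size, rate):
    """Offsets (along one axis) at which the dilated kernel samples the input."""
    half = kernel_size // 2
    return [rate * i for i in range(-half, half + 1)]


for rate in (6, 12, 18):
    print(rate, effective_kernel_size(3, rate), tap_offsets(3, rate))
```

Under this assumption, the three branches cover windows of 13, 25, and 37 pixels per axis while each still uses only nine weights, so concatenating them mixes fine and coarse context at low parameter cost.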

Sun et al. [6] introduced an asymmetric encoder–decoder network (AU-Net). In the encoder path, ResBlocks (three conv layers with a residual connection) were used; in the decoder path, basic blocks (including two conv layers) were utilized. The main contribution of the paper was a new upsampling method. In the new Attention-guided Upsampling (AU) Block, high-level features were upsampled through dense and bilinear upsampling. Then, the low-level features were combined with the output of dense upsampling through element-wise summation. The resulting feature maps from the previous step were concatenated with the output of bilinear upsampling and were fed into a channel-wise attention module. Finally, the input of the channel-wise attention module was combined with its output by channel-wise multiplication. AU-Net [6] was the baseline method in this study.

3. Materials and Methods

This section presents the process for segmenting pectoral muscles, followed by the proposed training scheme for pectoral muscle removal in mammography images.

3.1. Ground Truth Generation for Pectoral Muscle

LabelMe [54] was used to segment the pectoral muscle, in which polygons were fitted to the pectoral muscle for the MLO images in INbreast, CBIS-DDSM, and MIAS datasets. For images with higher density or lower visibility of the boundaries of the pectoral muscle, the portion of the muscle that was clearly distinguishable from the breast tissues was selected. The segmentation masks were generated in JSON and image formats with two classes, the pectoral muscle and background (the remaining breast tissues and image background).
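For readers who want to work from the JSON annotations rather than the image masks, the following sketch rasterizes a LabelMe polygon into a binary mask using an even-odd ray-casting test at pixel centers. It relies on the standard LabelMe fields `shapes`, `points`, `label`, `imageHeight`, and `imageWidth`; the label string `pectoral_muscle` and the tiny 4 × 4 annotation are hypothetical, and the label names used in the released files may differ.

```python
import json


def point_in_polygon(x, y, polygon):
    """Even-odd rule: count crossings of a horizontal ray cast to the right."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's y coordinate
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def labelme_to_mask(annotation, target_label):
    """Return a binary mask (1 = target label, 0 = background)."""
    height, width = annotation["imageHeight"], annotation["imageWidth"]
    mask = [[0] * width for _ in range(height)]
    for shape in annotation["shapes"]:
        if shape["label"] != target_label:
            continue
        if shape.get("shape_type", "polygon") != "polygon":
            continue
        for row in range(height):
            for col in range(width):
                # Test the pixel center, in (x, y) = (column, row) order.
                if point_in_polygon(col + 0.5, row + 0.5, shape["points"]):
                    mask[row][col] = 1
    return mask


# Hypothetical annotation: a triangle in the top-left corner of a 4x4 image.
example = json.loads("""
{"imageHeight": 4, "imageWidth": 4,
 "shapes": [{"label": "pectoral_muscle", "shape_type": "polygon",
             "points": [[0, 0], [3.2, 0], [0, 3.2]]}]}
""")
mask = labelme_to_mask(example, "pectoral_muscle")
for row in mask:
    print(row)
```

The per-pixel loop is deliberately simple and slow; for full-resolution mammograms, a library rasterizer would be a more practical choice.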

3.2. Datasets and Preprocessing

INbreast contains a group of 150 cases with 410 high-resolution CC- and MLO-view images. The pectoral muscle masks for all of the MLO-view images in the INbreast dataset (except for several images in which the pectoral muscle was not present or distinguishable) were provided in this study and used for the experiments. For the validation, due to the limited number of samples, 5-fold cross-validation was used with a random division of 80%, 10%, and 10% for the train, validation, and test sets, respectively. It should be noted that pectoral muscle masks are already included in the original INbreast dataset; however, for consistency with the other two datasets in the labeling process, we provided our own labeling for INbreast as well.
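The exact splitting procedure is not detailed beyond the percentages above. One possible reading, sketched here purely as an assumption, is five independent random shuffles, each divided 80%/10%/10%. The function name, the seed, and the 200 placeholder image identifiers are hypothetical.

```python
import random


def random_splits(sample_ids, n_repeats=5, fractions=(0.8, 0.1, 0.1), seed=0):
    """Return n_repeats (train, validation, test) splits of sample_ids.

    Each repeat reshuffles the full list, so test sets of different repeats
    may overlap; this is an assumed protocol, not a strict k-fold partition.
    """
    rng = random.Random(seed)
    splits = []
    for _ in range(n_repeats):
        ids = list(sample_ids)
        rng.shuffle(ids)
        n_train = round(fractions[0] * len(ids))
        n_val = round(fractions[1] * len(ids))
        train = ids[:n_train]
        val = ids[n_train:n_train + n_val]
        test = ids[n_train + n_val:]
        splits.append((train, val, test))
    return splits


image_ids = [f"img_{i:03d}" for i in range(200)]  # placeholder identifiers
splits = random_splits(image_ids)
for train, val, test in splits:
    print(len(train), len(val), len(test))
```

If several images belong to the same patient, splitting by case rather than by image would avoid leakage between the train and test sets; whether this was done is not stated above.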

CBIS-DDSM is an enhanced subset of the DDSM dataset. It consists of 1231 training images and 360 test images. CBIS-DDSM is commonly used for the segmentation task in the literature [6], and we provided the pectoral muscle segmentation masks for all of the MLO-view images in the CBIS-DDSM dataset. The standard split for the train and test sets was used in this study. For the validation set, 10% of the training set was randomly sampled.

The MIAS dataset contains only MLO-view images; therefore, it is widely used in proposed methods for the pectoral muscle removal task. MIAS has a total of 322 images. Providing the pectoral muscle segmentation masks is important for research in this domain, so we also included the segmentation masks for all the images in the MIAS dataset (unless the muscle was not visible in the images). We used MIAS for cross-dataset testing using models trained on INbreast and CBIS-DDSM datasets.

For all of the datasets, cropping, padding, resizing, and artefact removal were performed as needed.

3.3. Pectoral Muscle Segmentation

The main motivation for this study is to provide the segmentation of the pectoral muscle for several datasets, which enables the training of pectoral muscle removal methods that could also be applied to new unseen datasets. The main use-case of these segmentation masks will be in the removal of pectoral muscle in the preprocessing step for tasks such as the classification of images (for instance, benign/malignant), segmentation of the masses and other abnormalities, and density estimation. To use the segmentation masks for a new dataset, first, a segmentation model should be trained using the provided muscle segmentation masks, and then, the model can be used for the segmentation of the pectoral muscles in a new dataset.
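The removal step itself is simple once a mask is available. The sketch below binarizes a predicted probability map and blanks the corresponding pixels. The 0.5 cutoff, the fill value of 0, and the toy arrays are assumptions for illustration and are not taken from the experiments.

```python
def binarize(prob_map, cutoff=0.5):
    """Turn per-pixel muscle probabilities into a 0/1 mask."""
    return [[1 if p >= cutoff else 0 for p in row] for row in prob_map]


def remove_pectoral_muscle(image, mask, fill_value=0):
    """Replace pixels flagged as muscle with fill_value; keep the rest."""
    return [
        [fill_value if m else pixel for pixel, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


image = [[200, 190, 60], [195, 70, 55]]
prob_map = [[0.9, 0.8, 0.1], [0.7, 0.2, 0.0]]  # hypothetical model output
mask = binarize(prob_map)
cleaned = remove_pectoral_muscle(image, mask)
print(cleaned)
```

The cleaned image can then be passed to a downstream classifier, mass segmentation model, or density estimator.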

Given the segmentation masks of the pectoral muscles, we proposed to use deep learning-based methods for pectoral muscle segmentation. To this end, we selected AU-Net, a method for segmenting mammography images. AU-Net [6] is an improved version of the U-Net [35] in which the encoding and decoding paths are not symmetrical. ResUnit and the basic decoder proposed in the AU-Net were used for the encoder and the decoder. The details of the novel idea of AU-Net, the AU Block, were presented in the AU-Net approach [6]. A binary cross-entropy loss function was used in the proposed method.
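For reference, the per-pixel binary cross-entropy can be written in a few lines. This is a plain Python sketch of the standard formula, not the training code; the clipping constant `eps` is an assumption added to avoid taking the logarithm of zero.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean of -[t*log(p) + (1-t)*log(1-p)] over all pixels.

    y_true holds 0/1 labels and y_pred holds predicted muscle probabilities,
    both flattened to one-dimensional sequences of equal length.
    """
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # keep log() finite
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


uncertain = binary_cross_entropy([1, 0], [0.5, 0.5])
confident = binary_cross_entropy([1, 0], [0.9, 0.1])
print(uncertain, confident)
```

A prediction of 0.5 everywhere gives a loss of ln 2 (about 0.693), and the loss decreases as the predicted probabilities move toward the correct labels.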

For the training stage, we used the Adam optimizer with a learning rate of 0.00001 and early stopping. The models were trained on an RTX 4090 GPU. Cross-validation was utilized for the INbreast dataset. We implemented the method using TensorFlow. After training on INbreast, CBIS-DDSM, and a combination of both datasets, the models were used for the same- and cross-dataset tests to evaluate their performance.

3.4. Evaluation Metrics

The Dice Similarity Coefficient (DSC, Equation (1)), sensitivity (Equation (2)), and accuracy (Equation (3)) were selected as the evaluation metrics in all of the experiments due to the complementary information they provide. As pectoral muscles occupy a small portion of the images, using accuracy alone would not have been an informative means of evaluating the method. Hence, using an additional metric, such as sensitivity, allowed us to account for false negatives. DSC measures the ratio of the correctly predicted positive pixels to the number of positive pixels in both the ground truth and the prediction mask, so it accounts for false positives alongside false negatives. Therefore, we also included DSC in the evaluation metrics.

$$\mathrm{DSC} = \frac{2\,TP}{2\,TP + FP + FN} \qquad (1)$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN} \qquad (2)$$

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (3)$$

Here, $TP$, $TN$, $FP$, and $FN$ represent the numbers of true positive, true negative, false positive, and false negative pixels, respectively.
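The three metrics follow directly from the pixel-wise confusion counts. The sketch below uses the standard definitions (DSC = 2TP / (2TP + FP + FN), sensitivity = TP / (TP + FN), accuracy = (TP + TN) / (TP + TN + FP + FN)) on a toy 10 × 10 example. The masks and helper names are made up for illustration.

```python
def confusion_counts(truth, pred):
    """Count TP, TN, FP, FN over two binary masks of identical shape."""
    tp = tn = fp = fn = 0
    for t_row, p_row in zip(truth, pred):
        for t, p in zip(t_row, p_row):
            if t and p:
                tp += 1
            elif not t and not p:
                tn += 1
            elif p:
                fp += 1
            else:
                fn += 1
    return tp, tn, fp, fn


def dice(tp, tn, fp, fn):
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def sensitivity(tp, tn, fp, fn):
    return tp / (tp + fn) if (tp + fn) else 0.0


def accuracy(tp, tn, fp, fn):
    return (tp + tn) / (tp + tn + fp + fn)


def make_mask(size, positives):
    return [[1 if (r, c) in positives else 0 for c in range(size)] for r in range(size)]


truth = make_mask(10, {(0, 0), (0, 1), (1, 0), (1, 1)})       # 4 muscle pixels
pred_empty = make_mask(10, set())                             # predicts no muscle
pred_close = make_mask(10, {(0, 0), (0, 1), (1, 0), (2, 0)})  # 3 hits, 1 false alarm

empty_counts = confusion_counts(truth, pred_empty)
close_counts = confusion_counts(truth, pred_close)
for counts in (empty_counts, close_counts):
    print(accuracy(*counts), sensitivity(*counts), dice(*counts))
```

The all-background prediction still reaches 96% accuracy while its sensitivity and DSC are zero, which illustrates why accuracy alone is not informative when the muscle occupies a small portion of the image.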

4. Experimental Results

In this section, an evaluation of the results of the same- and cross-dataset experiments, as well as a comparison with previous methods, is presented.

4.1. Results for INbreast and CBIS-DDSM

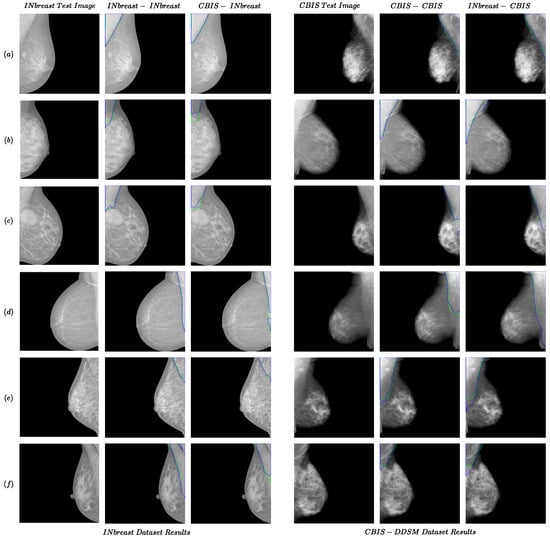

The results for the INbreast and CBIS-DDSM datasets are presented in Table 1. The following format was used for the names of the experiments: “train dataset name-test dataset name”. As shown in the first two rows of the table, the model trained with CBIS-DDSM generally outperformed the one trained with INbreast in same-dataset experiments. Relative to the rest of the breast region, the pectoral muscles generally have higher pixel intensities in CBIS-DDSM than in INbreast. In addition, more training images are available in CBIS-DDSM. These could be the reasons for the better performance of the model on the CBIS-DDSM dataset. Some of the results for same-dataset experiments are shown in Figure 1. For convenience, we used ‘CBIS’ instead of ‘CBIS-DDSM’ in the figures and tables. As shown in the INbreast-INbreast and CBIS-CBIS columns of Figure 1, the ability of a deep learning-based model to extract features from data automatically enabled our method to overcome challenges that would impact traditional methods. For instance, the extra line in the pectoral muscle area (Figure 1d (INbreast) and Figure 1b (CBIS)), the ambiguity of the boundary (Figure 1b,e (INbreast) and Figure 1f (CBIS)), and the presence of a mass in the muscle area (Figure 1c (INbreast)) would have posed challenges for traditional methods; however, the proposed method was able to perform well in these cases.

Table 1.

Results for pectoral muscle segmentation for same- and cross-dataset experiments. In the name of the experiments, the first term is the training dataset, and the second is the test dataset.

Figure 1.

Examples of the performance of the proposed method for the same- and cross-dataset tests for INbreast and CBIS-DDSM datasets. Each row from (a–f) presents two examples from INbreast and CBIS-DDSM datasets. The green and blue colors present boundaries for the ground truth and predicted segmentation.

4.2. Results for Cross-Dataset Tests

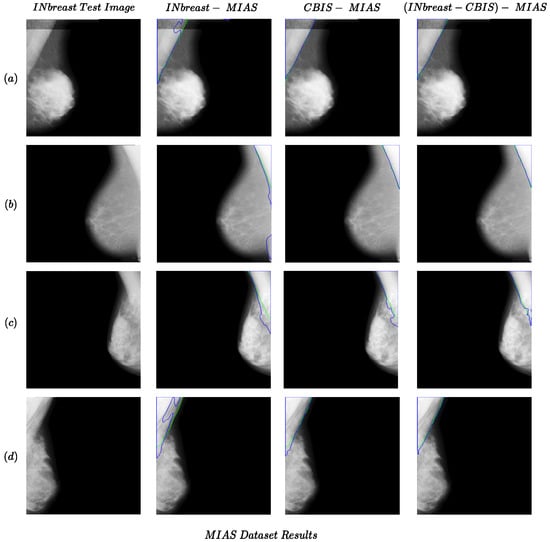

In order to measure the generalizability of the models, we conducted five cross-dataset tests (presented in the last five rows in Table 1). One important aspect of the results for cross-dataset experiments is that when trained or tested on INbreast, while being highly accurate, the performance was generally lower than other cross-dataset experiments. One reason could be the fact that images in these datasets were acquired separately in different conditions; therefore, they may also visually differ. This could be observed in the differences between samples from the INbreast dataset compared to the MIAS and CBIS-DDSM datasets (which seem to be more similar, specifically the brightness of pectoral muscle) in Figure 1 and Figure 2. Therefore, the similarities in the setting in which images were recorded also affect the cross-dataset experiments. With this observation, to make the cross-dataset model more robust, we also trained the model using the combination of the CBIS-DDSM and INbreast for the cross-dataset tests on the MIAS dataset (combined-MIAS row in Table 1). As shown in the last row, the combined-MIAS experiment achieved the best results in the cross-dataset setting. It should be noted that for experiments with the MIAS dataset as the test set, the whole dataset was used.

Figure 2.

Examples of the performance of the proposed method for cross-dataset tests for the MIAS dataset as the test set. Each row (a–d) presents results for one sample in the MIAS dataset. The green and blue colors present boundaries for the ground truth and predicted segmentation.

Some examples of the cross-dataset experiments are shown in Figure 1 (CBIS-INbreast and INbreast-CBIS columns) and Figure 2. The examples of cross-dataset models show comparable performance to the same-dataset models. This supports the validity of our idea regarding the generalizability of the pectoral muscle removal method proposed in the study. Regarding the MIAS dataset results in Figure 2 and Table 1, the performance of the Combined-MIAS model is better than that of the models trained on the INbreast and CBIS-DDSM datasets separately. In Figure 2, all the CBIS-MIAS samples have better results than the INbreast-MIAS samples.

4.3. Comparison with State-of-the-Art Methods

Table 2 compares the proposed method with previous approaches for pectoral muscle segmentation. The proposed method had a superior performance for both same- and cross-dataset experiments compared to the previous methods in terms of accuracy. The values in the second row of the table indicate the number of images used for testing each method. Compared to the best-performing approach among the methods that used all 322 images for testing, the proposed method improved the accuracy by 1.27% on average across the three variations. Training a deep learning model enables the method to be more robust for samples that might not align well with the assumptions of traditional methods. It should be noted that accuracy was the shared metric used in the previous methods; hence, we used it as the comparison metric and were not able to include DSC and sensitivity, as they were not available in most of the previous works.

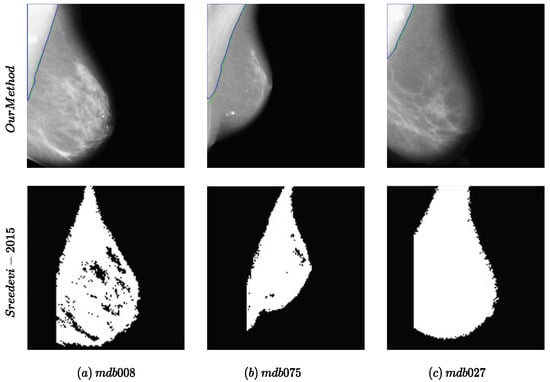

Regarding the visual comparison, as neither the implementation nor the full results on the datasets were available, we compared our method with one of the previous works that included visual results with the image ID [7] for the MIAS dataset. The results are presented in Figure 3. Our method achieved better results in comparison with [7] with smoother edges without any further postprocessing.

Figure 3.

Examples of the performance of the proposed method compared to the method proposed in [7] for the MIAS dataset. The names of the samples in the dataset are mentioned in (a–c).

5. Discussion

This study proposed a supervised segmentation method for the pectoral muscle in mammography images. The goal is to use this segmentation in the preprocessing steps for other tasks such as classification, segmentation of the abnormalities, and image registration for multi-view approaches. In mammography images, in some cases, the tissue and pectoral muscle are both visible as they are layered on top of each other. Therefore, in some cases, removing the pectoral muscle might remove the portion of the tissue in the images, which could be harmful for tasks such as density estimation. With this observation in mind, the following approach was used instead of solely focusing on the segmentation of the pectoral muscle in the annotation process. For cases with ambiguous boundaries for the muscle or very low visibility of the muscle, the annotation covered the portion that was mainly and more obviously part of the pectoral muscle to preserve more of the breast tissue in the images. One additional challenge is the diversity of the appearance of the pectoral muscle in the images. When there are limited data for complex appearances, the model’s performances suffer, which could be addressed by utilizing more training data or postprocessing. Moreover, the diversity in the images from the datasets affects the generalizability of the models for new unseen data. The results of cross-dataset tests in this study confirm the need for more diverse datasets in the training process.

Table 2.

Comparison between the proposed methods for pectoral muscle removal.

| Method | [29] | [55] | [56] | [26] | [15] | [20] | [18] | Proposed (CBIS) | Proposed (INbreast) | Proposed (Combined) |
|---|---|---|---|---|---|---|---|---|---|---|
| Number of Images | 322 | 322 | 161 | 322 | 300 | 321 | 322 | 322 | 322 | 322 |
| Accuracy (%) | 98.1 | 92.2 | 93 | 96.81 | 98 | 97.8 | 95 | 99.45 | 99.03 | 99.56 |

6. Conclusions

In this study, we provided segmentation masks for the INbreast, MIAS, and CBIS-DDSM datasets. The segmentation masks provided in this paper will open the door to the use of supervised deep learning-based methods in research on pectoral muscle removal in mammography images. In addition, we trained AU-Net on the datasets separately and achieved accuracies of 99.55% and 99.61% for the pectoral muscle removal task on the INbreast and CBIS-DDSM datasets, respectively. In order to examine the generalizability of these models for new datasets, we also performed cross-dataset tests, which achieved high performance as well. We also tested both models on the entire MIAS dataset, resulting in accuracies of 99.03% and 99.45% for models trained on INbreast and CBIS-DDSM, respectively. To improve the diversity of the appearance of the samples, a third model was trained on the combination of INbreast and CBIS-DDSM, resulting in an accuracy of 99.56% for the MIAS dataset. The masks provided in this study could be employed for pectoral muscle removal as a preprocessing step in a variety of tasks for mammography images, including classification, segmentation, and density estimation. In addition, the models trained using this information could be utilized for pectoral muscle removal in new unseen data.

Author Contributions

Conceptualization, P.A., M.N. (Mircea Nicolescu), M.N. (Monica Nicolescu) and G.B.; methodology, P.A.; software, P.A.; validation, P.A.; formal analysis, P.A.; investigation, P.A.; resources, P.A.; data curation, P.A.; writing – original draft preparation, P.A.; writing – review and editing, P.A., M.N. (Mircea Nicolescu), M.N. (Monica Nicolescu) and G.B.; visualization, P.A., M.N. (Mircea Nicolescu), M.N. (Monica Nicolescu) and G.B.; supervision, M.N. (Mircea Nicolescu), M.N. (Monica Nicolescu) and G.B.; project administration, P.A., M.N. (Mircea Nicolescu), M.N. (Monica Nicolescu) and G.B.; funding acquisition, M.N. (Mircea Nicolescu), M.N. (Monica Nicolescu) and G.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The INbreast, CBIS-DDSM, and MIAS datasets are publicly available at https://www.kaggle.com/datasets/tommyngx/inbreast2012 (accessed on 1 January 2023), https://wiki.cancerimagingarchive.net/pages/viewpage.action?pageId=22516629 (accessed on 1 January 2023), and https://www.repository.cam.ac.uk/items/b6a97f0c-3b9b-40ad-8f18-3d121eef1459 (accessed on 1 January 2023), respectively.

Acknowledgments

We are immensely grateful for the contributions of Iliya Hajizadeh and Raha Hajizadeh in the annotation process.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Moghbel, M.; Ooi, C.Y.; Ismail, N.; Hau, Y.W.; Memari, N. A review of breast boundary and pectoral muscle segmentation methods in computer-aided detection/diagnosis of breast mammography. Artif. Intell. Rev. 2020, 53, 1873–1918.

- Aliniya, P.; Nicolescu, M.; Nicolescu, M.; Bebis, G. Supervised Pectoral Muscle Removal in Mammography Images. In Artificial Intelligence in Medicine, Proceedings of the 22nd International Conference, AIME 2024, Salt Lake City, UT, USA, 9–12 July 2024; Springer Nature: Cham, Switzerland, 2024; pp. 126–130.

- Moreira, I.C.; Amaral, I.; Domingues, I.; Cardoso, A.; Cardoso, M.J.; Cardoso, J.S. Inbreast: Toward a full-field digital mammographic database. Acad. Radiol. 2012, 19, 236–248.

- Suckling, J.; Parker, J.; Dance, D.; Astley, S.; Hutt, I. Mammographic Image Analysis Society (MIAS) Database v1.21. [Dataset]; Apollo-University of Cambridge Repository. 2015. Available online: https://www.repository.cam.ac.uk/items/b6a97f0c-3b9b-40ad-8f18-3d121eef1459 (accessed on 1 August 2022).

- Lee, R.S.; Gimenez, F.; Hoogi, A.; Miyake, K.K.; Gorovoy, M.; Rubin, D.L. A curated mammography data set for use in computer-aided detection and diagnosis research. Sci. Data 2017, 4, 1–9.

- Sun, H.; Li, C.; Liu, B.; Liu, Z.; Wang, M.; Zheng, H.; Feng, D.D.; Wang, S. AUNet: Attention-guided dense-upsampling networks for breast mass segmentation in whole mammograms. Phys. Med. Biol. 2020, 65, 055005.

- Sreedevi, S.; Sherly, E. A novel approach for removal of pectoral muscles in digital mammogram. Procedia Comput. Sci. 2015, 46, 1724–1731.

- Rahimeto, S.; Debelee, T.G.; Yohannes, D.; Schwenker, F. Automatic pectoral muscle removal in mammograms. Evol. Syst. 2021, 12, 519–526.

- Sansone, M.; Marrone, S.; Salvio, G.D.; Belfiore, M.P.; Gatta, G.; Fusco, R.; Vanore, L.; Zuiani, C.; Grassi, F.; Vietri, M.T.; et al. Comparison between two packages for pectoral muscle removal on mammographic images. Radiol. Med. 2022, 127, 848–856.

- Mughal, B.; Muhammad, N.; Sharif, M.; Rehman, A.; Saba, T. Removal of pectoral muscle based on topographic map and shape-shifting silhouette. BMC Cancer 2018, 18, 778.

- Sarada, C.; Lakshmi, K.V.; Padmavathamma, M. MLO Mammogram Pectoral Masking with Ensemble of MSER and Slope Edge Detection and Extensive Pre-Processing. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 135–144.

- Mustra, M.; Grgic, M. Robust automatic breast and pectoral muscle segmentation from scanned mammograms. Signal Process. 2013, 93, 2817–2827.

- Subashini, T.S.; Ramalingam, V.; Palanivel, S. Pectoral muscle removal and detection of masses in digital mammogram using CCL. Int. J. Comput. Appl. 2010, 1, 71–76.

- Tayel, M.; Mohsen, A. Breast boarder boundaries extraction using statistical properties of mammogram. In Proceedings of the IEEE 10th International Conference on Signal Processing Proceedings, Beijing, China, 24–28 October 2010; pp. 2468–2471.

- Czaplicka, K.; Włodarczyk, J. Automatic breast-line and pectoral muscle segmentation. Schedae Inform. 2011, 20, 195–209.

- Ergin, S.; Esener, İ.I.; Yüksel, T. A genuine GLCM-based feature extraction for breast tissue classification on mammograms. Int. J. Intell. Syst. Appl. Eng. 2016, 4, 124–129.

- Hazarika, M.; Mahanta, L.B. A novel region growing based method to remove pectoral muscle from MLO mammogram images. In Advances in Electronics, Communication and Computing: ETAEERE-2016; Springer: Singapore, 2018; pp. 307–316.

- Taghanaki, S.A.; Liu, Y.; Miles, B.; Hamarneh, G. Geometry-based pectoral muscle segmentation from MLO mammogram views. IEEE Trans. Biomed. Eng. 2017, 64, 2662–2671.

- Shrivastava, A.; Chaudhary, A.; Kulshreshtha, D.; Singh, V.P.; Srivastava, R. Automated digital mammogram segmentation using dispersed region growing and sliding window algorithm. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 366–370.

- Chen, Z.; Zwiggelaar, R. A combined method for automatic identification of the breast boundary in mammograms. In Proceedings of the 2012 5th International Conference on BioMedical Engineering and Informatics, Chongqing, China, 16–18 October 2012; pp. 121–125.

- Nagi, J.; Kareem, S.A.; Nagi, F.; Ahmed, S.K. Automated breast profile segmentation for ROI detection using digital mammograms. In Proceedings of the 2010 IEEE EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuala Lumpur, Malaysia, 30 November–2 December 2010; pp. 87–92.

- Maitra, I.K.; Nag, S.; Bandyopadhyay, S.K. Technique for preprocessing of digital mammogram. Comput. Methods Programs Biomed. 2012, 107, 175–188.

- Priyanka, S.; Sivakumar, S.; Selvam, P. Optimizing Breast Cancer Detection: Machine Learning for Pectoral Muscle Segmentation in Mammograms. In Proceedings of the 2024 International Conference on Integrated Circuits and Communication Systems (ICICACS), Raichur, India, 23–24 February 2024; pp. 1–6.

- Camilus, K.S.; Govindan, V.K.; Sathidevi, P.S. Computer-aided identification of the pectoral muscle in digitized mammograms. J. Digit. Imaging 2010, 23, 562–580.

- Xu, W.; Li, L.; Liu, W. A novel pectoral muscle segmentation algorithm based on polyline fitting and elastic thread approaching. In Proceedings of the 2007 1st International Conference on Bioinformatics and Biomedical Engineering, Wuhan, China, 6–8 July 2007; pp. 837–840.

- Shen, R.; Yan, K.; Xiao, F.; Chang, J.; Jiang, C.; Zhou, K. Automatic pectoral muscle region segmentation in mammograms using genetic algorithm and morphological selection. J. Digit. Imaging 2018, 31, 680–691.

- Slavković, I.M.; Gavrovska, A.; Milivojević, M.; Reljin, I.; Reljin, B. Breast region segmentation and pectoral muscle removal in mammograms. Telfor J. 2016, 8, 50–55.

- Yin, K.; Yan, S.; Song, C.; Zheng, B. A robust method for segmenting pectoral muscle in mediolateral oblique (MLO) mammograms. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 237–248.

- Rampun, A.; Morrow, P.J.; Scotney, B.W.; Winder, J. Fully automated breast boundary and pectoral muscle segmentation in mammograms. Artif. Intell. Med. 2017, 79, 28–41.

- Dhimann, S.K.; Sawhney, T.; Yadav, R.K. Effect of Pectoral Muscles on CNN based Mammographic Cancer Detection. In Proceedings of the 2024 11th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 28 February–1 March 2024; pp. 981–985.

- Mahaveera, D.J.; Gujar, S.A.; Cen, S.Y.; Lei, X.; Hwang, D.H.; Varghese, B.A. Pectoral Muscle Segmentation from Digital Mammograms Using a Transformative Approach. In Proceedings of the 2023 19th International Symposium on Medical Information Processing and Analysis (SIPAIM), Mexico City, Mexico, 5–17 November 2023; pp. 1–5.

- Chen, S.; Bennett, D.L.; Colditz, G.A.; Jiang, S. Pectoral muscle removal in mammogram images: A novel approach for improved accuracy and efficiency. Cancer Causes Control 2024, 35, 185–191.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ciresan, D.; Giusti, A.; Gambardella, L.; Schmidhuber, J. Deep neural networks segment neuronal membranes in electron microscopy images. Adv. Neural Inf. Process. Syst. 2012, 25, 2843–2851.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Wu, S.; Wang, Z.; Liu, C.; Zhu, C.; Wu, S.; Xiao, K. Automatical segmentation of pelvic organs after hysterectomy by using dilated convolution u-net++. In Proceedings of the 2019 IEEE 19th International Conference on Software Quality, Reliability and Security Companion (QRS-C), Sofia, Bulgaria, 22–26 July 2019; pp. 362–367.

- Li, C.; Tan, Y.; Chen, W.; Luo, X.; Gao, Y.; Jia, X.; Wang, Z. Attention Unet++: A nested attention-aware U-net for liver ct image segmentation. In Proceedings of the 2020 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 25–28 October 2020; pp. 345–349.

- Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 205–218.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention u-net: Learning where to look for the pancreas. arXiv 2018, arXiv:1804.03999.

- Song, T.; Meng, F.; Rodriguez-Paton, A.; Li, P.; Zheng, P.; Wang, X. U-next: A novel convolution neural network with an aggregation U-net architecture for gallstone segmentation in ct images. IEEE Access 2019, 7, 166823–166832.

- Pi, J.; Qi, Y.; Lou, M.; Li, X.; Wang, Y.; Xu, C.; Ma, Y. FS-UNet: Mass segmentation in mammograms using an encoder-decoder architecture with feature strengthening. Comput. Biol. Med. 2021, 137, 104800.

- Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.-W.; Wu, J. Unet 3+: A full-scale connected unet for medical image segmentation. In Proceedings of the ICASSP 2020—2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

- Cho, P.; Yoon, H.-J. Evaluation of U-net-based image segmentation model to digital mammography. Med. Imaging Image Process. 2021, 11596, 593–599.

- Zhang, J.; Jin, Y.; Xu, J.; Xu, X.; Zhang, Y. MDU-net: Multi-scale densely connected u-net for biomedical image segmentation. arXiv 2018, arXiv:1812.00352.

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: 4th International Workshop, DLMIA 2018, and 8th International Workshop, ML-CDS 2018, Held in Conjunction with MICCAI 2018, Granada, Spain, 20 September 2018; pp. 3–11.

- Drozdzal, M.; Vorontsov, E.; Chartrand, G.; Kadoury, S.; Pal, C. The importance of skip connections in biomedical image segmentation. In Proceedings of the International Workshop on Deep Learning in Medical Image Analysis, International Workshop on Large-Scale Annotation of Biomedical Data and Expert Label Synthesis, Granada, Spain, 20 September 2016; pp. 179–187.

- Hai, J.; Qiao, K.; Chen, J.; Tan, H.; Xu, J.; Zeng, L.; Shi, D.; Yan, B. Fully convolutional densenet with multiscale context for automated breast tumor segmentation. J. Healthc. Eng. 2019, 2019, 8415485.

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Jégou, S.; Drozdzal, M.; Vazquez, D.; Romero, A.; Bengio, Y. The one hundred layers tiramisu: Fully convolutional densenets for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 11–19.

- Li, S.; Dong, M.; Du, G.; Mu, X. Attention dense-u-net for automatic breast mass segmentation in digital mammogram. IEEE Access 2019, 7, 59037–59047.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Chen, J.; Chen, L.; Wang, S.; Chen, P. A novel multi-scale adversarial networks for precise segmentation of x-ray breast mass. IEEE Access 2020, 8, 103772–103781.

- Rajalakshmi, N.; Ravitha, R.; Vidhyapriya, N.; Elango; Ramesh, N. Deeply supervised u-net for mass segmentation in digital mammograms. Int. J. Imaging Syst. Technol. 2021, 31, 59–71.

- LabelMe Homepage. Available online: https://github.com/labelmeai/labelme (accessed on 1 August 2022).

- Yoon, W.B.; Oh, J.E.; Chae, E.Y.; Kim, H.H.; Lee, S.Y.; Kim, K.G. Automatic detection of pectoral muscle region for computer-aided diagnosis using MIAS mammograms. BioMed Res. Int. 2016, 2016, 5967580.

- Qayyum, A.; Basit, A. Automatic breast segmentation and cancer detection via SVM in mammograms. In Proceedings of the 2016 International Conference on Emerging Technologies (ICET), Islamabad, Pakistan, 18–19 October 2016; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).