Abstract

Deep vision foundation models such as DINOv3 offer strong visual representation capacity, but their direct deployment in medical image segmentation remains difficult due to the limited availability of annotated clinical data and the computational cost of full fine-tuning. This study proposes an adaptation framework called StrDiSeg that integrates lightweight bottleneck adapters between selected transformer layers of DINOv3, enabling task-specific learning while preserving pretrained knowledge. An attention-enhanced U-Net decoder with multi-scale feature fusion further refines the representations. Experiments were performed on two publicly available ischemic stroke lesion segmentation datasets: AISD (non-contrast CT) and ISLES22 (DWI). The proposed method achieved Dice scores of 0.516 on AISD and 0.824 on ISLES22, outperforming baseline models and demonstrating robustness across different clinical imaging modalities. These results indicate that adapter-based fine-tuning provides a practical and computationally efficient strategy for leveraging large pretrained vision models in medical image segmentation.

1. Introduction

Acute ischemic stroke (AIS) is one of the leading causes of mortality and long-term disability worldwide, and rapid and accurate assessment of ischemic brain injury is essential for treatment selection and clinical prognosis. Early imaging evaluation plays a decisive role in determining treatment windows, thrombolysis eligibility, and predicting functional outcomes [1,2]. Non-contrast computed tomography (NCCT) and diffusion-weighted magnetic resonance imaging (DWI) are the most widely used modalities for ischemic lesion assessment [1]. Automatically and accurately segmenting stroke lesions from these images can greatly accelerate clinical workflows, reduce the variability associated with manual interpretation, and provide objective biomarkers for decision support [3,4].

However, ischemic lesions on NCCT and DWI imaging are highly heterogeneous in terms of appearance, location, size, and intensity distribution. Early lesions on NCCT frequently exhibit extremely subtle density changes, often approaching the limit of human perceptual discrimination, while DWI lesions may demonstrate sharp lesion boundaries but can vary significantly across patients [1]. Traditional medical image segmentation methods, such as level set, region growing, or active contour models, require hand-crafted image features and heuristic parameters that are sensitive to noise and image quality. These methods often struggle to generalize across different scanners, patient populations, or lesion subtypes, leading to inconsistent or unstable clinical performance [5,6,7].

Deep learning has transformed medical image analysis by enabling data-driven feature representation and end-to-end optimization [8]. Convolutional neural networks (CNNs), such as U-Net and its variants [9,10,11,12,13], have achieved significant advances in brain lesion segmentation by leveraging hierarchical feature extraction and skip-connection architectures to preserve spatial details. More recently, the emergence of vision transformer (ViT) models [14] has introduced a new paradigm for visual recognition, replacing local convolutional operators with global self-attention mechanisms capable of modeling large-scale context and long-range relationships. ViTs have demonstrated strong success when trained on extremely large image collections, inspiring a broad range of transformer-based models for medical segmentation tasks. Hybrid and hierarchical designs have further extended their representational power, leading to state-of-the-art results on many clinical imaging benchmarks [15,16,17].

Despite these advances, a major bottleneck remains: medical imaging datasets are typically orders of magnitude smaller than natural image datasets, causing strong domain gaps when directly transferring models trained on large-scale natural images. One conventional approach to address this challenge is to fine-tune only a small portion of the model, such as by introducing adapter modules or freezing most backbone layers to reduce the risk of overfitting [18,19]. While these lightweight transfer strategies are computationally efficient, they inherently assume that high-level visual concepts learned from natural images sufficiently align with medical visual structures. In ischemic stroke imaging, this assumption does not always hold. NCCT lesions are often low-contrast, highly subtle, and have completely different texture statistics compared with natural photographs [1]; therefore, shallow and mid-level features extracted from a frozen backbone may not provide sufficiently discriminative representations. As a result, limited fine-tuning may fail to adapt the pretrained model to the fundamental characteristics of stroke imaging.

With the growing availability of large-scale pretrained vision models, recent work has begun to explore fully unfreezing the backbone during adaptation to medical imaging tasks. Although this approach entails a substantial computational and parameter-optimization burden, it offers the major advantage of allowing every layer of the pretrained model to adjust to the new domain [20,21]. For tasks where visual appearance deviates drastically from natural photographs, such as ischemic lesion segmentation on NCCT or DWI, full fine-tuning may be necessary to recover optimal representational alignment. However, relatively few studies have systematically evaluated whether fully unfreezing large-scale transformer backbones indeed leads to improved performance in clinical disease segmentation, and how such large feature extractors should be efficiently integrated with task-specific decoders.

In this study, we address this question by investigating the use of a fully fine-tuned DINOv3 [22] Vision Transformer (the ViT-B backbone, with approximately 85 million parameters, distilled from the 7-billion-parameter version) for ischemic stroke lesion segmentation on two public datasets: AISD (NCCT) [23] and ISLES22 (DWI) [24]. Unlike parameter-efficient tuning approaches explored in many recent studies, we unfreeze the entire DINOv3 backbone and train all parameters end-to-end. Motivated by clinical observations and empirical findings, we hypothesize that ischemic lesion appearance differs strongly from natural image feature distributions, that fully unfreezing the model allows better adaptation of both low-level and high-level feature representations, and that this adaptation will translate into significantly improved segmentation accuracy compared with partially frozen or adapter-only approaches.

To decode the refined transformer features into pixel-accurate segmentation masks, we design a lightweight U-Net-style multi-scale decoder equipped with both spatial and channel attention modules to better integrate high-resolution local cues and deep semantic representations [25]. This decoder preserves fine structural details while mitigating the tendency of ViTs to lose spatial precision due to global tokenization. The full model is trained end-to-end using a hybrid loss combining Dice and binary cross-entropy objectives, balancing overlap alignment with voxelwise classification fidelity.

Our contributions are as follows:

1. We present a comprehensive investigation into end-to-end fine-tuning of a large pretrained vision transformer for ischemic stroke lesion segmentation on NCCT and MRI;

2. We design a multi-scale U-Net-style decoder with attention mechanisms for precise structural reconstruction from transformer features;

3. We demonstrate experimentally that full fine-tuning significantly improves segmentation performance over partial or lightweight transfer, suggesting that large visual backbones pretrained on natural images may require full representational adaptation in clinical imaging domains with strong modality shifts.

2. Materials and Methods

2.1. Datasets

This study evaluates the proposed segmentation framework on two public ischemic stroke datasets—AISD [23] and ISLES22 [24]. AISD contains Non-Contrast CT examinations collected from acute stroke patients within 24 h of symptom onset, whereas ISLES22 provides magnetic resonance imaging with expert delineations of stroke lesions in the acute to subacute phase. These two datasets allow comprehensive assessment of segmentation robustness across different acquisition environments and imaging modalities.

For AISD, we follow the official data split provided by the dataset authors. AISD contains 397 NCCT scans acquired from acute ischemic stroke patients whose imaging was performed within 24 h after symptom onset. All patients subsequently underwent diffusion-weighted MRI within the same 24-h window, which serves as the reference standard for lesion annotation. The CT scans were acquired with a slice thickness of 5 mm. Lesion masks were generated by a trained radiologist using MRI as a diagnostic reference, and subsequently double-reviewed and confirmed by a senior expert to ensure annotation reliability. Among the 397 cases, 345 scans are used for model development and validation, while the remaining 52 constitute the official held-out test set. The segmentation labels categorize tissues into five classes—remote, clearly visible acute infarct, blurred acute infarct, invisible acute lesion, and general infarction—where we follow common practice and consider all labels 1, 2, 3, 5 as positive infarct regions for supervised segmentation.
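The label merging described above can be illustrated with a minimal sketch. It assumes that the AISD masks are stored as integer-coded label maps with 0 as background, and it treats any code outside {1, 2, 3, 5} as negative; the function name `binarize_aisd_mask` is hypothetical and not part of any released code.

```python
import numpy as np

# Label codes treated as infarct, as stated in the text (1, 2, 3, 5).
# Assumption: every other code, including 0 and 4, is treated as background.
POSITIVE_LABELS = (1, 2, 3, 5)


def binarize_aisd_mask(label_map: np.ndarray) -> np.ndarray:
    """Collapse a multi-class label map into a binary infarct mask."""
    return np.isin(label_map, POSITIVE_LABELS).astype(np.uint8)


# Toy 2x3 label map containing every code once.
example = np.array([[0, 1, 2],
                    [3, 4, 5]])
binary = binarize_aisd_mask(example)
```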

In contrast, the ISLES22 dataset provides a large, multi-center MRI cohort collected from multiple vendors and imaging platforms, reflecting the significant heterogeneity of real-world stroke imaging. This dataset includes 400 cases with substantial variability in lesion volume, distribution, number, and etiology. Patient MRI was acquired as part of clinical workflow for treatment planning, risk stratification, and outcome assessment. In this study, we use the 250 publicly available training subjects and randomly divide them into 200 training cases and 50 testing cases. Although full MRI studies provide diffusion-weighted imaging (DWI), apparent diffusion coefficient (ADC), and FLAIR sequences, we restrict model training to the DWI modality only.

2.2. Proposed Model Architecture

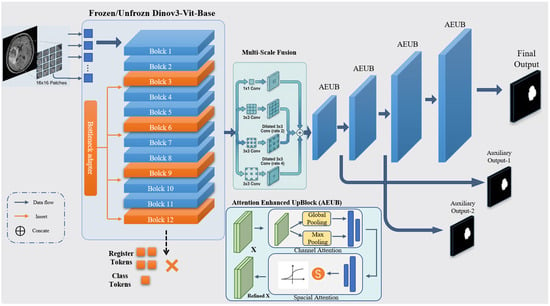

The proposed framework adapts the DINOv3 vision foundation model for ischemic stroke lesion segmentation. As shown in Figure 1, the architecture comprises three major components: (1) a pretrained DINOv3 encoder that extracts hierarchical visual representations; (2) lightweight bottleneck adapters inserted into selected Transformer layers to enable parameter-efficient domain adaptation; (3) an attention-enhanced U-Net decoder with multi-scale feature fusion for high-resolution lesion prediction. Since DINOv3 is pretrained on large-scale 2D natural images, the entire framework is designed as a slice-based 2D segmentation model, processing each axial NCCT or DWI slice independently. This design exploits the powerful pretrained representations of DINOv3 and supports efficient fine-tuning on limited medical datasets.

Figure 1.

Overall architecture of the proposed framework. The framework features a hybrid 2D slice-based design consisting of three core modules: (Left) the pre-trained DINOv3 Vision Transformer (ViT-Base) encoder with 12 transformer blocks, where selected blocks are fine-tuned or integrated with bottleneck adapters for domain-specific feature extraction; (Right) an attention-enhanced U-Net-like decoder comprising four upsampling stages with multi-scale feature fusion and attention modulation to produce high-resolution segmentation masks. Blue arrows indicate the data flow between modules, while red arrows denote the insertion of bottleneck adapters into specific transformer blocks. Transformer blocks highlighted in red correspond to the adapted layers (Blocks 3, 6, 9, and 12). The symbol “×” denotes tokens that are disabled and not used in the feature extraction pipeline.

2.2.1. Pretrained DINOv3 Encoder

DINOv3 [22] is a self-supervised vision foundation model trained on more than 1.4 billion natural images using a teacher–student distillation strategy. Unlike supervised models, DINOv3 learns robust visual representations by enforcing consistency between different views of an image, utilizing a centering and sharpening mechanism in the teacher network to avoid collapse. This self-supervised objective allows the model to capture high-level semantic features and precise spatial geometry without manual annotations. The distilled ViT-Base variant, containing approximately 85 million parameters, is employed as the backbone encoder in this study because it combines strong representational capacity with a low memory demand.

Each 2D slice is partitioned into non-overlapping patches that are flattened and linearly projected into 768-dimensional tokens. Learnable positional embeddings are added to retain spatial structure. Besides the patch tokens, the original architecture introduces global class and register tokens; however, only patch tokens are utilized for constructing spatial feature maps during decoding. The token sequence passes through 12 Transformer blocks, each consisting of multi-head self-attention, feed-forward networks, layer normalization, and residual connections. This hierarchical structure allows the encoder to capture both fine-grained local textures and long-range anatomical context, which are essential for detecting subtle ischemic abnormalities.
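How the patch tokens become a spatial feature map can be sketched as follows. The patch size of 16, the five prefix tokens (one class token plus four register tokens), and the 224 × 224 slice size are assumptions made for illustration only; the function name is hypothetical.

```python
import numpy as np

PATCH_SIZE = 16        # assumption: ViT-B/16 patch size
NUM_PREFIX_TOKENS = 5  # assumption: 1 class token + 4 register tokens
EMBED_DIM = 768        # token dimension stated in the text


def tokens_to_feature_map(tokens: np.ndarray, height: int, width: int) -> np.ndarray:
    """Drop class/register tokens and reshape patch tokens to (B, C, h, w)."""
    grid_h, grid_w = height // PATCH_SIZE, width // PATCH_SIZE
    patch_tokens = tokens[:, NUM_PREFIX_TOKENS:, :]
    if patch_tokens.shape[1] != grid_h * grid_w:
        raise ValueError("token count does not match the patch grid")
    batch = patch_tokens.shape[0]
    fmap = patch_tokens.reshape(batch, grid_h, grid_w, EMBED_DIM)
    return fmap.transpose(0, 3, 1, 2)  # channels-first layout for a conv decoder


# Hypothetical input size; the actual resolution is not assumed here.
H = W = 224
num_tokens = NUM_PREFIX_TOKENS + (H // PATCH_SIZE) * (W // PATCH_SIZE)
tokens = np.zeros((2, num_tokens, EMBED_DIM), dtype=np.float32)
feature_map = tokens_to_feature_map(tokens, H, W)
```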

Two encoder training strategies are investigated: (1) Frozen Backbone: freezing all backbone parameters to preserve the generic semantic pretrained features, ensuring that the model relies entirely on the lightweight adapters for domain adaptation; and (2) Unfrozen Backbone: fine-tuning the backbone with a small learning rate () to promote deep domain adaptation toward clinical imaging characteristics, allowing the large-scale pretrained weights to subtly align with the low-contrast gradients typical of ischemic stroke lesions in CT/MRI.

2.2.2. Adapter-Based Fine-Tuning

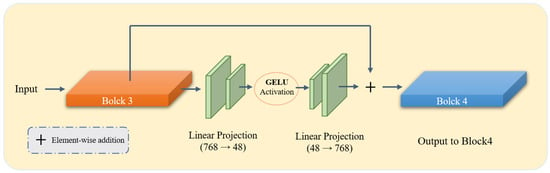

To enable parameter-efficient domain adaptation while preserving the pretrained representations, bottleneck adapters are inserted into the 3rd, 6th, 9th, and 12th Transformer layers. Each adapter adopts a lightweight residual bottleneck structure. Given a token feature vector $x \in \mathbb{R}^{768}$, the adapter first projects it into a 48-dimensional latent space, applies GELU activation, and then restores it to the original dimension. The residual update is formulated as:

$$x' = x + f(x), \qquad f(x) = W_{\text{up}}\,\mathrm{GELU}\left(W_{\text{down}}\,x + b_{\text{down}}\right) + b_{\text{up}},$$

where $f(\cdot)$ denotes the bottleneck transformation consisting of a down-projection ($W_{\text{down}} \in \mathbb{R}^{48 \times 768}$), GELU nonlinearity, and an up-projection ($W_{\text{up}} \in \mathbb{R}^{768 \times 48}$). As illustrated in Figure 2, we employ the Gaussian Error Linear Unit (GELU) as the activation function within the bottleneck. Compared to the standard ReLU, GELU provides a smoother, non-monotonic, and differentiable alternative that weighs inputs by their percentile, which helps mitigate the vanishing gradient problem and enhances the stability of task-specific refinements during adaptation. This residual formulation ensures that the pretrained representation is preserved while allowing the model to learn task-specific refinements in a stable and controlled manner.

Figure 2.

Detailed architecture of the proposed Bottleneck Adapter integrated within the DINOv3 Vision Transformer backbone. As shown, the adapters are strategically inserted at specific transformer blocks (3, 6, 9, and 12) to capture hierarchical features while maintaining the pre-trained knowledge. Each adapter consists of a down-projection layer, a nonlinear activation function (GELU), and an up-projection layer.

The adapters introduce only 298,176 additional parameters, corresponding to less than 0.35% of the encoder. Despite their lightweight design, they provide effective domain-specific modulation with minimal computational overhead. A hierarchical learning rate schedule is employed during training: for the encoder backbone (if unfrozen), for the adapters, and for the decoder.
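The stated parameter count can be checked with a small NumPy sketch of a residual bottleneck adapter (768 → 48 → 768, with biases). The class name, the tanh approximation of GELU, and the zero initialization of the up-projection are assumptions for illustration; they are not taken from the authors' implementation.

```python
import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    """Tanh approximation of GELU (assumption; the exact variant is unspecified)."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


class BottleneckAdapter:
    """Residual adapter: x + W_up * GELU(W_down * x + b_down) + b_up."""

    def __init__(self, dim: int = 768, bottleneck: int = 48, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w_down = rng.normal(0.0, 0.02, size=(dim, bottleneck))
        self.b_down = np.zeros(bottleneck)
        # Assumption: zero-initialized up-projection, so the adapter
        # starts as an identity mapping and leaves pretrained features intact.
        self.w_up = np.zeros((bottleneck, dim))
        self.b_up = np.zeros(dim)

    def num_params(self) -> int:
        return sum(p.size for p in (self.w_down, self.b_down, self.w_up, self.b_up))

    def __call__(self, tokens: np.ndarray) -> np.ndarray:
        hidden = gelu(tokens @ self.w_down + self.b_down)
        return tokens + hidden @ self.w_up + self.b_up


# One adapter per adapted block (3, 6, 9, 12).
adapters = [BottleneckAdapter() for _ in range(4)]
total_adapter_params = sum(a.num_params() for a in adapters)
```

Each adapter holds 768·48 + 48 + 48·768 + 768 = 74,544 parameters, so four adapters give the 298,176 reported above.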

2.2.3. Attention-Enhanced U-Net Decoder

Following the encoder, the patch tokens are reshaped into a spatial feature map of size . A multi-scale feature aggregation module is first applied, comprising parallel convolutional branches with receptive fields of , , and dilated kernels with dilation rates of 2 and 4. Each branch reduces feature dimensionality to 192 channels, and their concatenated output is refined using a convolution with a residual connection.

The decoder utilizes a hierarchical upsampling structure where each stage incorporates a serial dual-path attention mechanism to facilitate Attention-based Feature Decoupling and Detection (AFDD) [26]. This design ensures that the foundation model’s features are refined to isolate subtle lesion signals from complex anatomical backgrounds. Specifically, after the transpose convolution , the feature map is sequentially recalibrated through channel-wise and spatial-wise dimensions [25].

First, the channel attention component recalibrates inter-channel dependencies to prioritize semantic features indicative of ischemia. By aggregating global spatial information via both average and maximum pooling, the channel weight map $M_c$ is formulated using a shared multi-layer network:

$$M_c(F) = \sigma\big(\mathrm{MLP}(\mathrm{AvgPool}(F)) + \mathrm{MLP}(\mathrm{MaxPool}(F))\big),$$

where $F$ is the upsampled feature map and $\sigma$ is the sigmoid function. The intermediate feature is then generated as $F' = M_c(F) \otimes F$, where $\otimes$ denotes element-wise multiplication. To further suppress non-pathological noise, a spatial contextual refinement module is applied. It captures the local distribution of the infarct by concatenating channel-wise statistics and applying a convolution with a robust receptive field:

$$M_s(F') = \sigma\big(\mathrm{Conv}\big([\mathrm{AvgPool}_c(F');\ \mathrm{MaxPool}_c(F')]\big)\big),$$

where $\mathrm{AvgPool}_c$ and $\mathrm{MaxPool}_c$ pool along the channel axis. The final refined output $F'' = M_s(F') \otimes F'$ provides the necessary inductive bias for delineating fragmented or low-contrast lesions. In our clinical context, this sequential refinement is important: the channel-wise path identifies “what” pathological intensity profiles are present, while the spatial path constrains the model’s focus to “where” the potential infarct zone lies. This explicit decoupling strategy aligns with recent advances in attention-guided medical feature refinement [13,27], effectively bridging the domain gap between natural image priors and clinical stroke morphology.

To improve optimization stability, auxiliary segmentation heads are attached to the first two upsampling stages, providing deep supervision at and resolutions. The final prediction is generated using a convolution followed by a classifier and upsampled via bilinear interpolation to the input size, yielding the final voxel-wise lesion probability map.

2.3. Loss Function

The network is trained using a hybrid loss that addresses both regional overlap and pixel-wise accuracy. The primary loss combines Dice loss and binary cross-entropy (BCE). Dice loss mitigates class imbalance, emphasizing correct overlap of predicted and ground truth lesions, while BCE penalizes misclassified pixels. Deep supervision is applied to decoder intermediate outputs, weighted at 0.3 relative to the main loss, yielding the total loss:

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{main}} + 0.3 \sum_{i} \mathcal{L}_{\text{aux}}^{(i)},$$

where $\mathcal{L}_{\text{main}}$ is the combined Dice and BCE loss on the final prediction and $\mathcal{L}_{\text{aux}}^{(i)}$ denotes losses at intermediate decoder stages. The auxiliary-loss weight of 0.3 is chosen to encourage stable gradient propagation from intermediate layers without overwhelming the main segmentation objective, providing regularization while keeping the optimization primarily guided by the final prediction [11,28].
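A minimal NumPy sketch of such a deeply supervised hybrid loss is given below. It assumes an unweighted sum of soft Dice and BCE, assumes that auxiliary outputs have already been upsampled to the target resolution and use the same hybrid loss, and sums the auxiliary terms; none of these details is confirmed by the text, and all function names are hypothetical.

```python
import numpy as np


def dice_loss(prob: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """Soft Dice loss on foreground probabilities."""
    inter = np.sum(prob * target)
    return float(1.0 - (2.0 * inter + eps) / (np.sum(prob) + np.sum(target) + eps))


def bce_loss(prob: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Mean binary cross-entropy, with clipping for numerical safety."""
    p = np.clip(prob, eps, 1.0 - eps)
    return float(np.mean(-(target * np.log(p) + (1.0 - target) * np.log(1.0 - p))))


def hybrid_loss(prob: np.ndarray, target: np.ndarray) -> float:
    # Assumption: Dice and BCE are summed with equal weight.
    return dice_loss(prob, target) + bce_loss(prob, target)


def total_loss(main_prob, aux_probs, target, aux_weight: float = 0.3) -> float:
    """Main loss plus auxiliary (deep supervision) losses scaled by aux_weight."""
    main = hybrid_loss(main_prob, target)
    aux = sum(hybrid_loss(p, target) for p in aux_probs)
    return main + aux_weight * aux


target = np.array([[0.0, 1.0], [1.0, 0.0]])
perfect = target.copy()
wrong = 1.0 - target
loss_perfect = total_loss(perfect, [perfect, perfect], target)
loss_wrong = total_loss(wrong, [wrong, wrong], target)
```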

2.4. Evaluation Metrics and Implementation Details

2.4.1. Evaluation Metrics

To provide a rigorous quantitative assessment, we formally define the metrics used to evaluate model performance across 2D slice-level and 3D volume-level dimensions. Let P and G represent the set of foreground pixels in the predicted segmentation mask and the ground truth, respectively. The Dice Similarity Coefficient (DSC) and Intersection over Union (IoU) are defined as:

$$\mathrm{DSC} = \frac{2\,|P \cap G|}{|P| + |G|}, \qquad \mathrm{IoU} = \frac{|P \cap G|}{|P \cup G|}.$$

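Boundary accuracy was assessed using the Hausdorff Distance (HD) and its 95th percentile variant (HD95). Let $d(p, G) = \min_{g \in G} \lVert p - g \rVert_2$ denote the shortest Euclidean distance from a point p to the set G. The HD95 is defined as the 95th percentile ($P_{95}$) of the symmetric distances to suppress the influence of segmentation outliers:

$$\mathrm{HD95}(P, G) = \max\Big( P_{95}\{\, d(p, G) : p \in P \,\},\ P_{95}\{\, d(g, P) : g \in G \,\} \Big).$$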

For 3D volumetric consistency, we employed the Volumetric Difference Percentage (VDP) and Volumetric Correlation (VC). Based on our implementation, VDP measures the proportion of the ground-truth lesion volume that is not correctly covered by the prediction:

$$\mathrm{VDP} = \frac{|G| - |P \cap G|}{|G|} \times 100\%.$$

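To evaluate the linear consistency of lesion volume across the dataset, VC is defined as the Pearson correlation coefficient between the predicted volume and the ground truth:

$$\mathrm{VC} = \frac{\sum_{i} \big(V_P^{(i)} - \bar{V}_P\big)\big(V_G^{(i)} - \bar{V}_G\big)}{\sqrt{\sum_{i} \big(V_P^{(i)} - \bar{V}_P\big)^2}\ \sqrt{\sum_{i} \big(V_G^{(i)} - \bar{V}_G\big)^2}},$$

where $V_P^{(i)}$ and $V_G^{(i)}$ denote the total counts of positive pixels in the prediction and the ground truth for case $i$, and $\bar{V}_P$ and $\bar{V}_G$ represent their mean values. Together, these metrics provide a comprehensive evaluation of both spatial precision and clinical volumetric reliability.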
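The overlap and volumetric metrics can be sketched in NumPy as follows. The VDP function follows the verbal definition given above (the share of ground-truth volume missed by the prediction), which is an interpretation rather than the authors' code; the handling of empty masks is also an assumption. HD95 is omitted because it requires a distance transform.

```python
import numpy as np


def dice_score(pred: np.ndarray, gt: np.ndarray) -> float:
    pred, gt = pred.astype(bool), gt.astype(bool)
    denom = pred.sum() + gt.sum()
    # Assumption: two empty masks count as a perfect match.
    return float(2.0 * np.logical_and(pred, gt).sum() / denom) if denom else 1.0


def iou_score(pred: np.ndarray, gt: np.ndarray) -> float:
    pred, gt = pred.astype(bool), gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    return float(np.logical_and(pred, gt).sum() / union) if union else 1.0


def vdp(pred: np.ndarray, gt: np.ndarray) -> float:
    """Percentage of ground-truth volume not covered by the prediction."""
    pred, gt = pred.astype(bool), gt.astype(bool)
    gt_volume = gt.sum()
    if gt_volume == 0:
        return 0.0  # assumption: nothing to miss when there is no lesion
    return float(100.0 * (gt_volume - np.logical_and(pred, gt).sum()) / gt_volume)


def volumetric_correlation(pred_volumes, gt_volumes) -> float:
    """Pearson correlation between per-case predicted and reference volumes."""
    return float(np.corrcoef(pred_volumes, gt_volumes)[0, 1])


pred = np.array([[1, 1, 0, 0]])
gt = np.array([[0, 1, 1, 0]])
scores = (dice_score(pred, gt), iou_score(pred, gt), vdp(pred, gt))
```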

2.4.2. Implementation Details

All baseline models (SegResNet, UNETR, SwinUNETR, nnU-Net, Unet++, and AttnUnet) were trained from scratch or using their official pre-trained weights under identical experimental conditions. All input slices were resized to pixels to align with the input resolution of DINOv3. All experiments were implemented in PyTorch 2.7.0 and executed on an NVIDIA RTX 4090 GPU (Nvidia, Santa Clara, CA, USA) with a fixed batch size of 8.

The data augmentation policy was standardized across all models to account for stroke lesion characteristics, including: (1) Random rotations within ; (2) Horizontal flipping (probability = 0.5); and (3) Intensity scaling and Gaussian noise () to simulate varying clinical image qualities. For the training schedule, all models were trained for 100 epochs, which was sufficient for convergence across all architectures. We utilized the Adam optimizer () with a weight decay of . For the baselines, the learning rate was optimized via a grid search within to ensure they reached their peak performance. For our proposed model, the initial learning rate was for the adapters and for the backbone fine-tuning, both controlled by a ReduceLROnPlateau scheduler (factor 0.5, patience 10).

The data augmentation policy was specifically tailored to the clinical characteristics of stroke lesions in NCCT and DWI scans. Random rotations were restricted to because head tilt in clinical scanners is typically minor; excessive rotation would create anatomically unrealistic orientations. Horizontal flipping was utilized with a probability of 0.5 to leverage the bilateral symmetry of the brain, encouraging the model to learn hemispheric-independent lesion features. For intensity-based transforms, Gaussian noise () and Intensity scaling were applied to simulate the inter-scanner variability and noise levels common in emergency clinical settings. These conservative parameter ranges ensure that the augmented samples remain within the manifold of plausible clinical presentations while improving the model’s generalization to subtle intensity variations.
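Part of this policy can be sketched as below. Only the flip probability of 0.5 comes from the text; the intensity scaling range and noise standard deviation are hypothetical placeholders, and rotation is omitted to keep the example dependency-free. The point of the sketch is that geometric transforms are applied jointly to image and mask, whereas intensity transforms touch the image only.

```python
import numpy as np


def augment_slice(image, mask, rng, flip_prob=0.5,
                  scale_range=(0.9, 1.1), noise_sigma=0.01):
    """Jointly flip image and mask, then perturb image intensities only.

    scale_range and noise_sigma are hypothetical values, not the paper's.
    """
    if rng.random() < flip_prob:
        # Horizontal flip must be applied to both image and label.
        image = image[:, ::-1]
        mask = mask[:, ::-1]
    scale = rng.uniform(*scale_range)
    noise = rng.normal(0.0, noise_sigma, size=image.shape)
    image = image * scale + noise
    return image.astype(np.float32), mask.copy()


rng = np.random.default_rng(0)
image = np.arange(12, dtype=np.float32).reshape(3, 4)
mask = np.zeros((3, 4), dtype=np.uint8)
mask[:, 0] = 1  # toy lesion along the left edge
aug_image, aug_mask = augment_slice(image, mask, rng, flip_prob=1.0)
```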

3. Results

3.1. Quantitative Performance

Table 1 and Table 2 summarize the quantitative results on the AISD (NCCT) and ISLES22 (DWI) datasets, respectively. On AISD, our method achieves a Dice score of 0.516, outperforming representative CNN-based methods (U-Net, UNet++, SegResNet) and Transformer-based models (UNETR, SwinUNETR). The gains are particularly pronounced on NCCT images, where low contrast and heterogeneous intensity distributions pose challenges for conventional architectures. Our approach also yields the best HD95 and IoU among all comparisons, indicating improved overlap and boundary localization.

Table 1.

Quantitative Results of Brain NCCT Dataset AISD.

Table 2.

Quantitative Results of Brain DWI Dataset ISLES22.

For the ISLES22 DWI dataset, our method attains a Dice score of 0.824 together with better IoU, VC, and VDP than the compared baselines, demonstrating both accurate lesion contour prediction and highly consistent lesion volume estimation.

To evaluate the impact of different training strategies, we compared two regimes: the “Backbone Frozen” (adapter-only) approach, where the DINOv3 encoder is used as a fixed feature extractor and only the adapters and decoder are trained, and the “Backbone Unfrozen” approach, where the entire network is fine-tuned to adapt to the medical domain. As summarized in Table 1 and Table 2, fine-tuning the entire network outperforms the adapter-only variant across all metrics. For instance, on the AISD dataset, the Dice score increases from 0.372 to 0.516. This substantial gain indicates that while DINOv3 provides a robust parameter initialization, the inherent domain gap between natural scenes and clinical NCCT/DWI scans necessitates unfreezing the backbone to effectively refine representations for subtle lesion morphology.

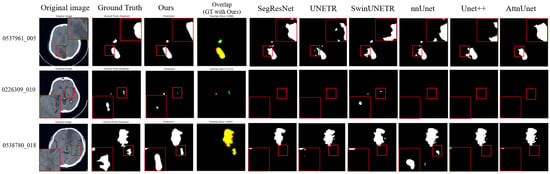

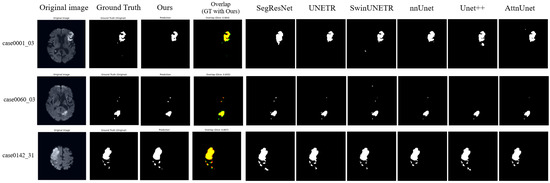

3.2. Qualitative Visualization

Representative segmentation results (Figure 3 and Figure 4) demonstrate that the proposed method accurately delineates ischemic lesions, providing sharper boundaries and more precise identification of small or fragmented infarcts compared to baseline architectures. The integration of multi-scale attention and deep supervision effectively suppresses false positives in healthy tissue and preserves spatial consistency across slices. These visual improvements are particularly evident in low-contrast NCCT images, highlighting the model’s capability to robustly capture lesion morphology under challenging imaging conditions.

Figure 3.

Visualization results on the AISD dataset. In the overlap column, red indicates our model predictions, green represents the ground truth, and yellow shows their overlapping regions. The magnified regions (red boxes) provide a zoom-in view of the segmented lesions, highlighting our model’s ability to accurately delineate blurred boundaries and detect small infarcts in challenging low-contrast imaging conditions.

Figure 4.

Visualization results on the ISLES22 dataset. In the overlap column, red indicates our model predictions, green represents the ground truth, and yellow shows their overlapping regions. While most models perform well on high-contrast DWI sequences, our method demonstrates better volumetric consistency and reduced false positives in the peripheral regions of the infarct, as evidenced by the high overlap with the ground truth.

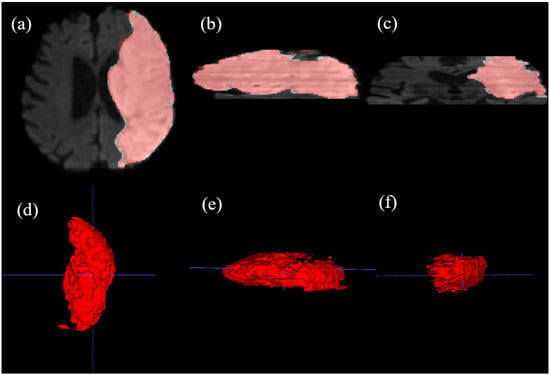

To further evaluate the spatial continuity of our 2D slice-based segmentation, a 3D reconstruction of a stroke lesion from the ISLES22 dataset was performed (Figure 5). The resulting 3D volume demonstrates that our model maintains morphological consistency across contiguous axial slices, capturing the volumetric extent of the infarct.

Figure 5.

Multi-planar visualization and multi-angle 3D volumetric reconstruction of a stroke lesion from the ISLES22 dataset, generated using ITK-SNAP v3.6.0. (a) Axial plane (AP-RL), (b) Sagittal plane (SI-AP), and (c) Coronal plane (SI-RL) views, where red contours indicate our model’s predictions. The results demonstrate the spatial consistency of our slice-based approach. (d–f) 3D volumetric renderings of the segmented lesion viewed from different orientations, illustrating the model’s ability to reconstruct the complex 3D morphology of the infarct. Blue dashed lines indicate standard reference lines. This comprehensive visualization confirms that the 2D DINOv3-based features maintain robust 3D clinical consistency.

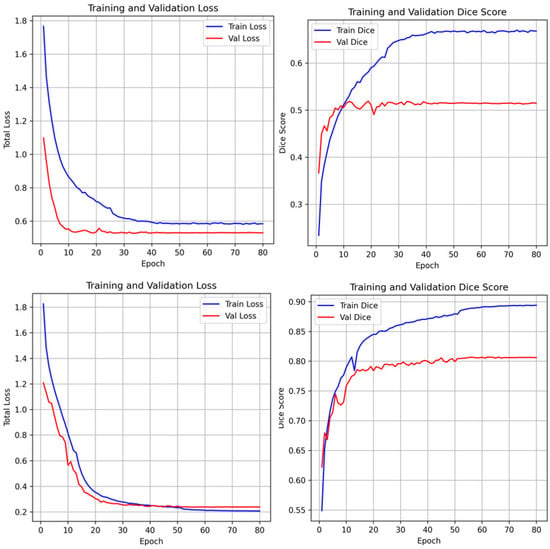

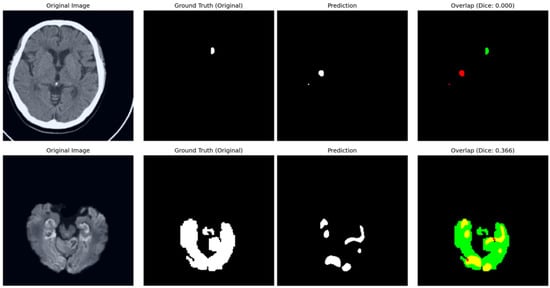

The training dynamics of the proposed framework are illustrated in Figure 6. Both training and validation losses exhibit a consistent downward trend, signifying stable convergence and robust optimization. To provide a transparent evaluation of the model’s limitations, typical failure cases are presented in Figure 7. In the NCCT-based AISD dataset, the model occasionally misses extremely small infarcts where the intensity profile is nearly indistinguishable from background noise. In the DWI-based ISLES22 dataset, challenges arise not only from small lesions but also from high-intensity artifacts or anatomical structures with appearances similar to acute lesions, leading to localized boundary confusion. These limitations stem from the inherent resolution constraints of 2D imaging and signal heterogeneity, rather than architectural deficiencies. Future work incorporating multi-modal fusion or 3D contextual refinement may further mitigate these challenges.

Figure 6.

Training and validation loss curves and Dice scores of the unfrozen model. The stable convergence of the losses and the steady increase in Dice scores across epochs demonstrate that the foundation model adaptation avoids overfitting despite the specialized nature of the clinical datasets.

Figure 7.

Analysis of typical failure cases. In the overlap column, red contours denote model predictions, green areas represent the ground truth, and yellow indicates their intersection. (Top) In the AISD dataset, the model occasionally fails to localise ultra-small, “point-like” lesions due to low signal-to-noise ratios in NCCT. (Bottom) In the ISLES22 dataset, performance can be affected by the intensity similarity between infarct tissues and healthy structures, leading to under-segmentation.

3.3. Ablation Experiments

To assess the contribution of individual components, ablation studies were conducted on the AISD dataset, and the results are summarized in Table 3. The full model achieves the highest Dice score of 0.5161, which supports the complementary effect of the proposed architectural components. First, comparing Setting 3 with the full model, removing the adapter modules from the Transformer backbone led to a reduction in Dice score, highlighting their role in domain-specific feature adaptation without disrupting the pretrained foundational knowledge. Second, as shown in Setting 4, excluding the attention mechanisms in the decoder resulted in reduced boundary discrimination and increased misclassification in background tissue, demonstrating the importance of channel-wise and spatial-wise attention for filtering noise and focusing on fine-grained lesion segmentation. Finally, Setting 2 demonstrates that freezing the backbone during training greatly degraded performance (Dice decreasing from 0.5161 to 0.3719), indicating that while the DINOv3 encoder provides powerful generic features, its natural-image priors require backbone fine-tuning to align with the specific intensity distributions of medical images.

Table 3.

Results of components ablation study on AISD.

Furthermore, we investigated the impact of the loss function configuration and the auxiliary supervision weight, as detailed in Table 4. Comparing Setting 3 with Settings 1 and 2, the combination of Dice and Binary Cross-Entropy (BCE) loss yields a higher Dice score (0.5119) than using either term alone, consistent with Dice loss addressing class imbalance while BCE enforces pixel-level classification accuracy. To justify the auxiliary supervision, Setting 4 (full model configuration) incorporates deep supervision with a weighting factor of 0.3, resulting in the best score of 0.5161. A parameter study comparing this weight (Setting 4) with a larger one (Setting 5) shows that while auxiliary supervision is essential for stabilizing early layer training, an excessively high weight can slightly detract from the final performance. The results indicate that a weight of 0.3 provides a better balance between deep feature guidance and final segmentation accuracy.

Table 4.

Results of loss function ablation study on AISD.

The ablation experiments confirm that the combination of pretrained foundation features, lightweight adapters, and attention-guided multi-scale decoding synergistically contributes to the improved segmentation accuracy and robustness of the proposed framework. The consistent performance drop observed across all ablation settings (Settings 2, 3, and 4) further justifies the necessity of each integrated module.

The observed performance gains are more prominent in the CT-based AISD dataset, reflecting the model’s ability to overcome the inherent challenges posed by CT imaging, including low contrast and heterogeneous appearance of ischemic lesions. In contrast, the high baseline performance on ISLES22 suggests that DWI provides inherently higher lesion contrast, making task adaptation less demanding but still benefiting from the proposed adapter-based fine-tuning strategy. These trends underscore the versatility of foundation model adaptation across diverse imaging modalities and lesion characteristics.

4. Discussion

The results of this study demonstrate that the adaptation of vision foundation models is an effective strategy for ischemic stroke lesion segmentation. By introducing lightweight bottleneck adapters into DINOv3, pretrained representations learned from large-scale natural images can be successfully transferred to clinical NCCT and DWI scans without requiring extensive medical datasets. The attention-enhanced U-Net decoder further improves lesion localization by refining feature discriminability through channel-wise and spatial attention mechanisms. Compared with conventional CNN or Transformer-based medical segmentation models, the proposed StrDiSeg framework shows better generalization across heterogeneous lesion distributions, indicating that large-scale pretrained models encode transferable visual priors relevant to clinical imaging.

Furthermore, the clinical practicality of our StrDiSeg framework is underpinned by the choice of the distilled ViT-Base variant. While recently proposed models like the Encoder-only Mask Transformer (EoMT) [29] suggest that architectural simplicity can lead to higher speeds, such gains are often most prominent at larger scales (e.g., ViT-Large/Huge) and require massive pre-training datasets to internalize segmentation biases. Similarly, while Masked Autoencoders (MAE) [30] share a comparable ViT-Base architecture, their reconstruction-based pre-training often lacks the robust semantic discriminability of DINOv3. In contrast, our approach utilizes a distilled ViT-Base (85M parameters), which is significantly more lightweight than frontier models like InternVL [31,32,33]. By inheriting high-quality semantic priors from DINOv3, the model achieves a balance between parameter efficiency and diagnostic precision. This efficiency translates into rapid clinical inference, with an average processing time of 2.88 s per patient sequence on an RTX 4090 GPU.

Regarding the architecture, we leverage the unfrozen DINOv3 backbone as a highly specialized feature extractor. Unlike strategies that force a plain encoder to learn complex segmentation biases from scratch, as seen in EoMT, our method benefits from the extensive “visual feature cultivation” performed during DINOv3’s natural image pre-training. By using these pretrained weights as a better initialization for the fine-tuning stage, the encoder can capture fine-grained semantic structures even before seeing medical data. Our strategy, which incorporates lightweight bottleneck adapters (<0.35% of the parameters) and an unfreezing schedule, allows the model to refine these rich priors for the stroke domain. This approach ensures that the necessary inductive bias for lesion delineation is maintained even with limited labeled data, effectively mitigating the overfitting risks typically associated with training large-scale transformers on small medical cohorts.
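As an illustration of the bottleneck adapters discussed above, the following pure-Python sketch shows the generic down-projection, nonlinearity, up-projection, and residual pattern applied to a single token, together with the arithmetic for the adapter parameter fraction. It is a sketch under stated assumptions rather than the StrDiSeg implementation: the class name, the bottleneck width of 8, the count of 12 adapters, the GELU activation, and the zero-initialized up-projection are all hypothetical choices. Only the ViT-Base hidden size of 768 and the 85M backbone size come from standard ViT-Base specifications and the text above.

```python
import math
import random


def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


class BottleneckAdapter:
    """Residual adapter: token + up(gelu(down(token))). Hypothetical illustration."""

    def __init__(self, dim, bottleneck, seed=0):
        rng = random.Random(seed)
        # Down-projection (bottleneck x dim) with small random weights.
        self.down = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(bottleneck)]
        self.down_bias = [0.0] * bottleneck
        # Up-projection (dim x bottleneck) starts at zero, so the adapter is an
        # identity mapping at initialization (a common choice, assumed here).
        self.up = [[0.0] * bottleneck for _ in range(dim)]
        self.up_bias = [0.0] * dim

    def __call__(self, token):
        hidden = [gelu(sum(w * x for w, x in zip(row, token)) + b)
                  for row, b in zip(self.down, self.down_bias)]
        delta = [sum(w * h for w, h in zip(row, hidden)) + b
                 for row, b in zip(self.up, self.up_bias)]
        return [x + d for x, d in zip(token, delta)]

    def num_params(self):
        dim, bottleneck = len(self.up), len(self.down)
        return 2 * dim * bottleneck + dim + bottleneck  # two weight matrices + two biases


# Hypothetical configuration: 12 adapters of width 8 on a 768-dimensional backbone.
adapter = BottleneckAdapter(dim=768, bottleneck=8)
fraction = 12 * adapter.num_params() / 85e6  # relative to an 85M-parameter backbone
print(f"adapter parameters: {12 * adapter.num_params()}, fraction: {fraction:.4%}")
```

Because the up-projection starts at zero, the adapted network initially reproduces the pretrained features exactly, and the adapters only gradually introduce task-specific corrections during fine-tuning.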

Despite these promising results, several limitations remain. First, the current approach processes each slice independently, without leveraging full 3D spatial continuity. Second, while AISD and ISLES22 cover major imaging modalities in stroke, broader multi-center validation is needed to assess robustness across diverse clinical settings. Finally, the integration of clinical metadata, such as NIHSS scores or stroke onset time, could further enhance predictive power and interpretability in future studies.

5. Conclusions

In this work, we present StrDiSeg, a fine-tuning framework for adapting the DINOv3 vision foundation model to ischemic stroke lesion segmentation on NCCT and DWI. By incorporating bottleneck adapters and an attention-enhanced decoder, the proposed framework achieves competitive performance on the AISD and ISLES22 datasets while maintaining low model complexity. The study highlights the potential of large-scale pretrained foundation models to provide transferable visual representations for clinical neuroimaging tasks. Our approach establishes a simple yet effective baseline for future medical AI systems that leverage self-supervised vision foundation models, offering a practical pathway for scaling robust lesion segmentation without extensive annotated datasets.

Author Contributions

Conceptualization, Q.C. and Y.C.; methodology, D.Z. and Y.C.; software, D.Z.; validation, Q.C., S.Z. and Y.C.; formal analysis, Q.C.; investigation, D.Z.; resources, Y.C.; data curation, Q.C.; writing—original draft preparation, D.Z.; writing—review and editing, Q.C., L.Y., F.R., Y.S., W.Q. and Y.C.; visualization, D.Z.; supervision, L.Y., Y.C., L.M.L. and H.J.; project administration, H.J. and W.Q.; funding acquisition, H.J. and L.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Hubei Province Key Research and Development Project (No. 2023BCB007).

Institutional Review Board Statement

Ethical review and approval were waived for this study because it uses only publicly available datasets. The dataset providers request only that the datasets be cited.

Informed Consent Statement

Not applicable.

Data Availability Statement

The research data generated or analyzed during the study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors are grateful to the High-Performance Computing platform of Huazhong University of Science and Technology and the Supercomputing Platform of Hubei Medical Devices Quality Supervision and Test Institute.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| NCCT | Non-contrast computed tomography |
| DWI | Diffusion-weighted imaging |
| MRI | Magnetic resonance imaging |
| ViT-B | Vision Transformer (Base) |
| CNNs | Convolutional neural networks |
| AIS | Acute ischemic stroke |

References

1. Smith, A.G.; Rowland Hill, C. Imaging Assessment of Acute Ischaemic Stroke: A Review of Radiological Methods. Br. J. Radiol. 2018, 91, 20170573.

2. Saver, J.L.; Goyal, M.; van der Lugt, A.; Menon, B.K.; Majoie, C.B.L.M.; Dippel, D.W.; Campbell, B.C.; Nogueira, R.G.; Demchuk, A.M.; Tomasello, A.; et al. Time to Treatment with Endovascular Thrombectomy and Outcomes from Ischemic Stroke: A Meta-analysis. JAMA 2016, 316, 1279–1289.

3. Wang, X.; Qi, G.; Cheng, X.; Shan, H.; Hong, L.; Dong, Q.; Liu, Y.; Zhou, F.; Li, S.; He, Z.; et al. Deep Learning for Identifying Ischemic Core in Acute Ischemic Stroke Based on Non-contrast Enhanced Computed Tomography. Stroke 2025, 56, ATP180.

4. Malik, M.; Chong, B.; Fernandez, J.; Shim, V.; Kasabov, N.K.; Wang, A. Stroke Lesion Segmentation and Deep Learning: A Comprehensive Review. Bioengineering 2024, 11, 86.

5. Pham, D.L.; Xu, C.; Prince, J.L. Current Methods in Medical Image Segmentation. Annu. Rev. Biomed. Eng. 2000, 2, 315–337.

6. Sharma, N.; Aggarwal, L.M. Automated Medical Image Segmentation Techniques. J. Med. Phys. 2010, 35, 3–14.

7. Hesamian, M.H.; Jia, W.; He, X.; Kennedy, P. Deep Learning Techniques for Medical Image Segmentation: Achievements and Challenges. J. Digit. Imaging 2019, 32, 582–596.

8. Gao, Y.; Jiang, Y.; Peng, Y.; Yuan, F.; Zhang, X.; Wang, J. Medical Image Segmentation: A Comprehensive Review of Deep Learning-Based Methods. Tomography 2025, 11, 52.

9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015; Navab, N., Hornegger, J., Wells, W., Frangi, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

10. Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A Self-Configuring Method for Deep Learning-Based Biomedical Image Segmentation. Nat. Methods 2021, 18, 203–211.

11. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: Redesigning Skip Connections to Exploit Multiscale Features in Image Segmentation. IEEE Trans. Med. Imaging 2019, 39, 1856–1867.

12. Myronenko, A. 3D MRI Brain Tumor Segmentation Using Autoencoder Regularization. In BrainLes 2018: Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries; Revised Selected Papers, Part II; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; Volume 4, pp. 311–320.

13. Oktay, O.; Schlemper, J.; Le Folgoc, L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. In Proceedings of the Medical Imaging with Deep Learning, Amsterdam, The Netherlands, 4–6 July 2018.

14. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

15. Hatamizadeh, A.; Yang, D.; Roth, H.R.; Xu, D. UNETR: Transformers for 3D Medical Image Segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV); IEEE: Piscataway, NJ, USA, 2022; pp. 1748–1758.

16. Tang, Y.; Yang, D.; Li, W.; Roth, H.R.; Landman, B.; Xu, D.; Nath, V.; Hatamizadeh, A. Self-Supervised Pre-training of Swin Transformers for 3D Medical Image Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: Piscataway, NJ, USA, 2022; pp. 20730–20740.

17. Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.; Xu, D. Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images. arXiv 2022, arXiv:2201.01266.

18. Raghu, M.; Zhang, C.; Kleinberg, J.M.; Bengio, S. Transfusion: Understanding Transfer Learning for Medical Imaging. In Proceedings of the Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

19. Chen, S.; Ma, K.; Zheng, Y. Med3D: Transfer Learning for 3D Medical Image Analysis. arXiv 2019, arXiv:1904.00625.

20. Liu, C.C.; Pfeiffer, J.; Vulić, I.; Gurevych, I. FUN with Fisher: Improving Generalization of Adapter-Based Cross-Lingual Transfer with Scheduled Unfreezing. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers); Duh, K., Gomez, H., Bethard, S., Eds.; Association for Computational Linguistics: Mexico City, Mexico, 2024; pp. 1998–2015.

21. Han, C.; Wang, Q.; Cui, Y.; Wang, W.; Huang, L.; Qi, S.; Liu, D. Facing the Elephant in the Room: Visual Prompt Tuning or Full Finetuning? In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 7 May 2024.

22. Siméoni, O.; Vo, H.V.; Seitzer, M.; Baldassarre, F.; Oquab, M.; Jose, C.; Khalidov, V.; Szafraniec, M.; Yi, S.; Ramamonjisoa, M.; et al. DINOv3. arXiv 2025, arXiv:2508.10104.

23. Liang, K.; Han, K.; Li, X.; Cheng, X.; Li, Y.; Wang, Y.; Yu, Y. Symmetry-Enhanced Attention Network for Acute Ischemic Infarct Segmentation with Non-Contrast CT Images. In Proceedings of the MICCAI 2021, Strasbourg, France, 27 September–1 October 2021.

24. Hernandez Petzsche, M.R.; de la Rosa, E.; Hanning, U.; Wiest, R.; Valenzuela, W.; Reyes, M.; Kirschke, J.S. ISLES 2022: A Multi-Center Magnetic Resonance Imaging Stroke Lesion Segmentation Dataset. Sci. Data 2022, 9, 762.

25. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

26. Liu, X.; Sanchez, P.; Thermos, S.; O’Neil, A.Q.; Tsaftaris, S.A. Learning Disentangled Representations in the Imaging Domain. Med. Image Anal. 2022, 80, 102516.

27. Zou, S.; Wang, G.; Zhang, Y.; Shen, Y.; Yuan, M.; Su, Y. Adaptive Feature Decoupled Network for Polyp Segmentation. Biomed. Signal Process. Control 2026, 111, 108327.

28. Xie, S.; Tu, Z. Holistically-Nested Edge Detection. arXiv 2015, arXiv:1504.06375.

29. Kerssies, T.; Cavagnero, N.; Hermans, A.; Norouzi, N.; Averta, G.; Leibe, B.; Dubbelman, G.; de Geus, D. Your ViT is Secretly an Image Segmentation Model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: Piscataway, NJ, USA, 2025.

30. He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked Autoencoders Are Scalable Vision Learners. arXiv 2021, arXiv:2111.06377.

31. Wang, W.; Gao, Z.; Gu, L.; Pu, H.; Cui, L.; Wei, X.; Liu, Z.; Jing, L.; Ye, S.; Shao, J.; et al. InternVL3.5: Advancing Open-Source Multimodal Models in Versatility, Reasoning, and Efficiency. arXiv 2025, arXiv:2508.18265.

32. Zhu, J.; Wang, W.; Chen, Z.; Liu, Z.; Ye, S.; Gu, L.; Tian, H.; Duan, Y.; Su, W.; Shao, J.; et al. InternVL3: Exploring Advanced Training and Test-Time Recipes for Open-Source Multimodal Models. arXiv 2025, arXiv:2504.10479.

33. Chen, Z.; Wu, J.; Wang, W.; Su, W.; Chen, G.; Xing, S.; Zhong, M.; Zhang, Q.; Zhu, X.; Lu, L.; et al. InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR); IEEE: Piscataway, NJ, USA, 2024; pp. 24185–24198.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.