Abstract

Bladder cancer is a significant health problem worldwide: it is a major cause of cancer death and is characterized by frequent recurrence. Effective treatment hinges on early and accurate diagnosis; however, traditional diagnostic methods are invasive, time-consuming, and subjective. In this research, we propose a transparent deep learning model based on the YOLOv11 architecture that both improves the classification of bladder lesions and provides visual explanations of its predictions. We trained and tested five YOLOv11 variants (nano, small, medium, large, and extra large) on a comprehensive dataset of hematoxylin and eosin-stained histopathology slides labeled as inflammation, urothelial cell carcinoma (UCC), or invalid tissue. The YOLOv11-large variant performed best, with an accuracy of 97.09%, precision and recall of 95.47% each, and a balanced accuracy of 96.60%. In addition to precision–recall curves (AUPRC: inflammation = 0.935, invalid = 0.852, UCC = 0.958), we used ROC curves (overall AUC = 0.972) and risk–coverage analysis (AUC = 0.994) to assess the model in detail and confirm its stability and trustworthiness. The confusion matrix showed high true positive rates for all classes and few misclassifications, which occurred mainly between inflammation and invalid samples, suggesting possible morphological overlap. A low Expected Calibration Error (ECE) further indicated that the model was well calibrated. By incorporating architectural features such as the C3k2 block and the C2PSA spatial attention module, YOLOv11 achieves high performance while remaining computationally efficient. This work is a step toward a reliable, transparent, and scalable AI-assisted bladder cancer diagnostic system.

1. Introduction

Bladder cancer (BC), primarily in the form of urothelial cell carcinoma (UCC), is a serious global health concern. It arises from the uncontrolled growth of malignant cells in the bladder lining, ureters, or renal pelvis. Key risk factors include smoking, exposure to certain chemicals, chronic inflammation, and genetic susceptibility. Common symptoms include blood in the urine (hematuria), changes in urination patterns, pelvic discomfort, and, in advanced cases, weight loss and fatigue [1]. Diagnosis typically relies on imaging (CT, MRI), direct visualization via cystoscopy, and biopsy to confirm malignancy. Treatment options range from surgical removal of tumors or the entire bladder, to chemotherapy, immunotherapy, and radiation. Early detection and prompt treatment are vital for improving patient survival and preventing disease progression [2,3,4,5].

Cystitis, or bladder inflammation, is a distinct but often clinically challenging condition. It can be acute or chronic, frequently caused by bacterial infection, but it can also be triggered by chemical irritants, radiation, or foreign bodies. Cystitis symptoms overlap with BC, including lower abdominal pain, urinary urgency/frequency, hematuria, and cloudy or foul-smelling urine. Diagnosis involves urine tests, cystoscopy, and imaging to exclude other causes. Management usually includes antibiotics, pain relief, irrigation, and lifestyle adjustments. Unlike BC, most cases of cystitis are readily treatable [6].

Despite advances, BC remains a significant global public health issue, ranking among the ten most common cancers worldwide and contributing substantially to cancer-related mortality [7]. The prognosis is heavily dependent on early detection; if high-grade tumors are not treated promptly, the 5-year death rate can soar from 23% to 95% once the cancer has spread [8,9]. Even for non-muscle-invasive tumors, the grade at diagnosis is the strongest predictor of outcome. While hematuria is often the first sign, it is not diagnostic on its own. Current diagnostic workflows involve multiple steps (urine cytology, tumor markers, cystoscopy, CT scans, and biopsy), which are often invasive, time-consuming, and subject to variability between pathologists [10]. Consequently, only about 70% of tumors are detected early, highlighting a critical need for more efficient and reliable diagnostic tools.

Artificial Intelligence (AI), particularly deep learning (DL), offers a promising solution for automating and enhancing medical image analysis [11]. DL models, especially Convolutional Neural Networks (CNNs), excel at automatically learning complex features from images, outperforming traditional methods that rely on manual feature engineering [12,13,14]. The You Only Look Once (YOLO) family of algorithms is renowned for its speed and accuracy in object detection [15,16,17]. YOLOv11, a recent iteration, incorporates architectural innovations like the C3k2 block and C2PSA spatial attention module, making it exceptionally well-suited for tasks requiring both precision and computational efficiency [18,19].

This study proposes a novel, interpretable deep learning framework for classifying histopathology slides of bladder lesions into three clinically relevant categories: inflammation, UCC, and invalid/low-quality tissue. We adapt the YOLOv11 architecture for this multi-class classification task, utilizing its powerful feature extraction capabilities. To ensure clinical trust and utility, we integrate EigenCAM, a technique that generates visual explanations for the model’s predictions by highlighting the specific regions of the slide that influenced its decision. Our work addresses several gaps identified in current research:

- Multi-class Classification: Many studies focus on binary classification (e.g., cancer vs. normal). Our model distinguishes between inflammation, UCC, and invalid tissue, which is crucial for clinical triage.

- Interpretability: We provide transparent, visual explanations for predictions, moving beyond “black-box” models.

- Holistic Framework: We present a complete pipeline, from data acquisition and preprocessing to modeling and evaluation.

The primary objective of this study is to evaluate the performance of our YOLOv11-EigenCAM framework against established methods, focusing on diagnostic accuracy, sensitivity, specificity, and model interpretability. By developing a scalable and accurate tool, we aim to support pathologists in their daily workflow, enabling faster, more consistent, and more confident diagnoses. In summary, our contributions are as follows:

- Adaptation of YOLOv11 for Medical Classification: We demonstrate the effectiveness of the YOLOv11 architecture, originally designed for object detection, for the specific task of multi-class histopathology classification.

- Integration of Interpretability: We incorporate EigenCAM to generate Class Activation Maps, providing clinicians with visual evidence for the model’s predictions and fostering trust in its output.

- Comprehensive Diagnostic Pipeline: We develop a complete, end-to-end system that encompasses data preparation, model training, and rigorous performance evaluation, creating a foundation for clinical deployment.

- High Diagnostic Accuracy: Our model achieves state-of-the-art performance, with an accuracy of up to 97.09%, demonstrating its potential for real-world application.

2. Related Studies

Deep learning techniques have demonstrated significant potential in enhancing BC diagnosis, thereby providing opportunities for increased accuracy, efficiency, and automation in lesion detection and interpretation. This section reviews the pertinent literature on the application of DL for BC diagnosis across different imaging modalities.

A recent study [20] used an AI model to analyze the patterns of dried blood and urine droplets, comparing specimens collected from cancer patients with those from cancer-free controls. The model is based on ResNet, a deep neural network (DNN) pre-trained on ImageNet. Recognizing and classifying the complex patterns formed by dried urine or blood droplets under various conditions enabled precise and sensitive cancer diagnosis. Applying the technique consistently across droplets also allows comparisons that uncover common spatial behaviors and underlying morphological features.

A hybrid methodology integrating statistical machine learning techniques with pre-trained DNN for feature extraction is proposed for BC classification tasks in [21]. This method may differentiate between BC tissue and normal tissue, muscle-invasive BC (MIBC), non-muscle-invasive BC (NMIBC), and post-treatment changes (PTCs).

In the medical field, the encoder of the well-known U-Net is often complemented with residual blocks (ResBlocks) from ResNet and dense blocks from DenseNet, as in [22]. This eased training while saving parameters and reducing overall recognition time. Combined with Attention Gates, the decoder can suppress irrelevant parts of the image and concentrate on important details. The authors presented the resulting Residual-Dense Attention (RDA) U-Net model to delineate organs and detect lesions in abdominal CT images. The model achieved accuracies (ACC) of 96% for the bladder and 93% for its lesions.

Di Sun et al. [23] showed that combining radiomics and DL features with clinical characteristics has the potential to improve the prediction of 5-year survival in patients with BC after radical cystectomy, although larger datasets are required. Accurate estimates of 5-year survival are valuable when combined with post-surgical monitoring and patient counseling.

The authors of [24] examined the effectiveness of various transfer learning (TL)-based DL-CNN models for evaluating BC treatment response, freezing specific DL-CNN layers and modifying the DL-CNN architecture. Pre- and post-treatment CT scans were collected from 123 patients who received chemotherapy; after treatment, 33% of patients reached stage T0 (no residual tumor). Hybrid pre–post-image pairs (h-ROIs) were created by combining regions of interest from segmented lesions in pre- and post-treatment images. The data were divided into training (94 pairs; 6209 h-ROIs), validation (10 pairs), and test (54 pairs) sets.

Using whole-slide digitized histologic images from two cohorts, Zheng et al. [25] developed a weakly supervised DL model for BC detection and for overall survival prediction in patients with muscle-invasive BC. The results suggest that the proposed models can help physicians diagnose BC more accurately and, by enabling differential risk stratification, make personalized treatment decisions for patients with muscle-invasive BC. Further analysis of the regions most critical for diagnosis and prediction could extract more information from pathological images. Finally, using the predicted risk scores, the authors identified six genes strongly associated with cancer progression, which may lead to the identification of biomarkers.

MSEDTNet [26] is a recently introduced auxiliary segmentation method that combines a multi-scale encoder and decoder with a transformer. The multi-scale pyramidal convolution (MSPC) encoder produces compact feature maps that retain intricate local detail within the image. The transformer models long-range dependencies among high-level tumor semantics in a global context. The decoder, with a spatial context fusion module (SCFM), integrates context and refines high-resolution segmentation results. On T2-weighted MRI from 86 patients, MSEDTNet achieved an overall Jaccard index of 83.46% and a Dice similarity coefficient of 92.35%, with lower complexity than the other models compared.

A CNN-based method for detecting BC from CT images is presented in [27]. The image data set comprises three subsets: frontal, horizontal, and sagittal plane images. Established CNN architectures are used to classify the images, and five-fold cross-validation is employed to assess their classification and generalization performance.

A new diagnostic method for BC that uses a Multi-Layer Perceptron (MLP) with a Laplacian edge detector is presented in [28]. The study evaluates the viability of this simpler approach (MLP) relative to prevalent techniques (DL-CNNs) for BC identification. The database comprised 986 images of non-cancerous tissue and 1997 images of BC. When trained and evaluated on images pre-processed with Laplacian edge detectors, MLPs attained an AUC of up to 0.99.

The investigation by Qaiser et al. [29] addresses the practical use of AI models when exhaustive region-level annotations are unavailable and only patient-level survival follow-up data exist. A weakly supervised survival CNN (WSS-CNN) enhanced with a visual attention mechanism was developed to predict overall survival from whole-slide images of hematoxylin and eosin (H&E)-stained tumor tissue. Analyses of separate patient datasets for lung cancer and bladder urothelial carcinoma indicated that WSS-CNN features predict overall survival for both cancer types.

Mundhada et al. [30] classified urothelial carcinoma into low-grade and high-grade tumors according to the WHO 2016 classification. H&E-stained transurethral resection of bladder tumor (TURBT) specimens of low-grade and high-grade non-invasive papillary urothelial carcinoma were digitally scanned as whole-slide images. Tumor tissue patches were extracted from these images and used as input to a deep learning network; only patches containing at least 90% tumor tissue were used for modeling. To improve the accuracy of low- and high-grade classification, the hyperparameters of the deep learning model were optimized, after which performance was robust, with an overall accuracy of 90%. Grad-CAM and other visualization tools were also used to generate Class Activation Maps, supporting the use of the model as a companion diagnostic tool for grading urothelial cancer.

In 2020, a study proposed a machine learning system capable of differentiating non-invasive Ta from superficially invasive T1 BC [31]. This task is challenging for pathologists because the two stages look similar under the microscope. The study collected 1177 images of bladder tumor tissue, comprising 460 non-invasive (Ta) and 717 invasive (T1) samples, and used automated pipelines to extract features specific to T1 BC. Initial unsupervised clustering did not separate Ta from T1 tumors; however, a refined feature set enabled classification accuracies of 91–96% with six supervised learning methods. Models using manual feature extraction outperformed CNN models, indicating the value of feature extraction informed by domain knowledge.

Lee et al. presented a preclinical (rat model) study that combined surface-enhanced Raman spectroscopy (SERS) of urine samples with machine learning classification to detect early and polyp-stage BC [32], reporting a diagnostic accuracy of 99.6% in the rat model. Hosney et al. introduced a hybrid diagnostic framework termed mRIME-SVM [33]. They improved the RIME metaheuristic by incorporating orthogonal learning and used it to select discriminative features and optimize the hyperparameters of a Support Vector Machine (SVM) classifier across several BC datasets. According to their findings, mRIME-SVM outperforms other metaheuristic-based feature selection techniques and achieves excellent classification accuracy on all datasets. To avoid the additional cost and delays of immunohistochemistry (IHC) or molecular testing, Jiao et al. built a DL framework that infers HER2 expression status directly from routine H&E-stained histopathology slides [34]. Using a clustering-constrained attention multiple instance learning model on 106 BC cases with known HER2 status, they reported an accuracy of 0.86 on the validation set.

Synthesis and Gap Identification

Table 1 compares the approaches discussed for identifying BC. Despite significant advances in applying deep learning (DL) techniques to BC diagnosis, several critical gaps remain in the current literature:

Table 1.

Comparison between different techniques for identifying BC.

- Limited Use of Advanced Object Detection Algorithms: Most existing studies focus on classification tasks using CNNs and their variants for BC detection and grading. For instance, methods employing pre-trained CNNs for feature extraction [21], U-Net architectures for segmentation [22], and CNN-based classification from CT images [27] are prevalent. However, there is a lack of research utilizing advanced object detection algorithms like the YOLO family, specifically YOLOv11, for precise lesion detection and classification in histopathological images of BC.

- Insufficient Model Interpretability: While deep learning models have achieved high accuracy rates, they are often criticized for being “black boxes” with limited interpretability. Some studies have started to address this using visualization techniques like Grad-CAM [30] and attention mechanisms [29], but these efforts are not widespread. There is a need for integrating robust interpretability methods, such as EigenCAM, to provide visual explanations of the models’ decision-making processes, thereby enhancing trust and transparency in clinical applications.

- Underutilization of Histopathological Data: Several studies focus on imaging modalities like CT and MRI scans [22,23,24,26,27] or genomic data [31], with fewer using histopathological images for BC diagnosis. Histopathology remains the gold standard for cancer diagnosis, and utilizing this data can improve diagnostic precision. Studies that do use histopathological images often lack advanced techniques for both detection and interpretability.

- Need for Comprehensive Diagnostic Approaches: Current research often isolates different stages of the diagnostic pipeline, such as feature extraction [21,28], classification [27,30], or survival prediction [23,25,29], without integrating them into a cohesive framework. There is a gap in developing a holistic approach encompassing data collection, pre-processing, advanced modeling, interpretability, and performance assessment to streamline and enhance the diagnostic process.

- Limited Accuracy in Multi-Category Classification: Some studies achieve high accuracy in binary classification tasks [30,31], but there is a need for models that can accurately classify multiple categories relevant to BC diagnosis, such as distinguishing between inflammation, urothelial carcinoma (UCC), and invalid tissue samples.

The gaps identified above lay the foundation for a complete, interpretable deep learning model for BC diagnosis, which aims at the following:

- Using YOLOv11 for Lesion Detection and Classification: The introduction of an object detection algorithm, YOLOv11, into the domain of BC histopathological image analysis for accurate lesion detection and multi-class classification.

- Enhancing Model Interpretability with EigenCAM: EigenCAM will be integrated to produce Class Activation Maps (CAMs) that visually indicate which pixels or regions influenced the model's prediction, addressing the interpretability gap.

- Specialized Histopathology Data: Using hematoxylin and eosin-stained histopathology slides that cover various stages and subtypes of BC, so that the model is trained on relevant and diverse data.

- Structuring an Interdisciplinary Diagnostic Scheme: Combining data collection, preprocessing, advanced modeling with YOLOv11, interpretability with EigenCAM, and exhaustive performance evaluation into a single framework that makes BC detection efficient and accurate.

The proposed work seeks to contribute substantially to the field of BC diagnosis by addressing these gaps, offering a non-invasive, rapid, and objective assessment tool that is both accurate and interpretable, ultimately improving clinical outcomes and patient care.

3. Methodology

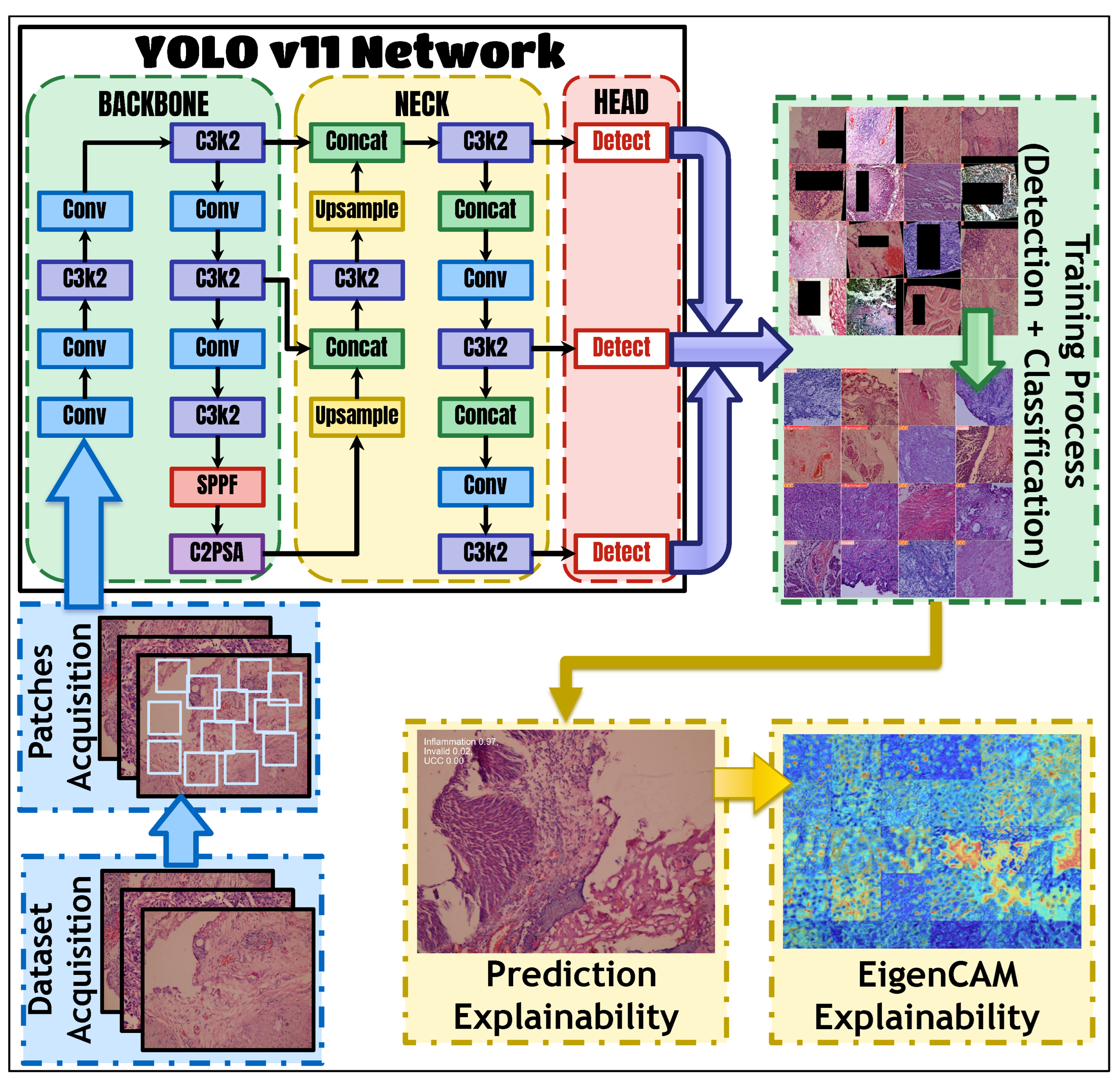

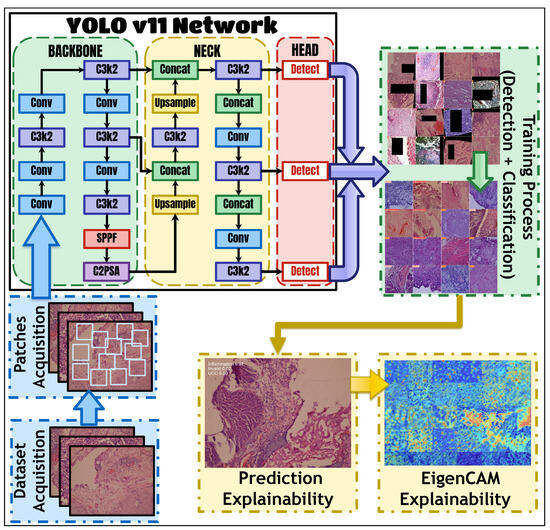

The framework illustrated in Figure 1 provides a conceptual end-to-end pipeline for bladder cancer diagnostics using computational imaging. It outlines the key stages involved in a typical diagnostic workflow, including data acquisition and pre-processing, YOLOv11-based classification, interpretability using EigenCAM, and comprehensive performance evaluation. These components are described to demonstrate how the proposed system operates; however, no new images, samples, or clinical data were collected as part of this study.

Figure 1.

The proposed framework for BC diagnosis and interpretation using histopathology data.

For data acquisition and pre-processing, the present study used a dataset of hematoxylin-and-eosin-stained histopathology slides of urinary bladder lesions. Multiple images were captured systematically from each slide and categorized as inflammation, UCC, or invalid. The specimens were obtained from pathology departments, yielding a dataset of 90 slides, of which 43 showed cystitis and 47 showed UCC at different pathological stages. Images were acquired with a digital camera mounted on a light microscope and manually classified into categories by a pathologist.

For classification, we adopted YOLOv11 to assign each histopathology image to one of three categories: inflammation, UCC, or invalid. YOLOv11 is among the leading models in the object detection domain. It incorporates several improvements, including anchor-free detection, new convolutional blocks, and mosaic augmentation during training. Anchor-free detection removes the need for predefined anchor boxes, the new convolutional blocks make feature extraction more efficient, and mosaic augmentation improves robustness by exposing the model to a variety of contexts. This study used the Ultralytics YOLOv11 package and its pre-trained classification models.

Interpretability using EigenCAM provides insights into classification tasks by generating Class Activation Maps (CAMs) to discern influential pixels or regions in images. EigenCAM facilitates efficient interpretation without requiring model modifications, integrating seamlessly with CNN models. It employs Singular Value Decomposition (SVD) to derive Class Activation Maps, enabling visualization of significant image regions contributing to classification decisions.

Classification performance was evaluated with accuracy, precision, recall, specificity, F1 measure, overall intersection over union (IoU), balanced accuracy (BAC), Matthews correlation coefficient (MCC), Youden's J statistic, and Yule's Q statistic. Together, these metrics describe model effectiveness in terms of accuracy, sensitivity, and specificity, including performance on imbalanced datasets.
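All of these metrics can be derived from a confusion matrix. The text does not state how the binary metrics were extended to three classes, so the sketch below assumes one-vs-rest counts for each class followed by unweighted (macro) averaging, with IoU taken as the Jaccard index TP / (TP + FP + FN). The function names `one_vs_rest_metrics` and `macro_metrics` are hypothetical, and any matrix passed to them in an example is illustrative, not a result from this study.

```python
import math


def one_vs_rest_metrics(cm, k):
    """Binary metrics for class k, treating every other class as negative.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k][j] for j in range(n)) - tp  # true k, predicted another class
    fp = sum(cm[i][k] for i in range(n)) - tp  # another class, predicted k
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0  # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0  # Jaccard index
    mcc_denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    q_denom = tp * tn + fp * fn
    return {
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
        "iou": iou,
        "bac": (recall + specificity) / 2,
        "mcc": (tp * tn - fp * fn) / mcc_denom if mcc_denom else 0.0,
        "youden_j": recall + specificity - 1,
        "yule_q": (tp * tn - fp * fn) / q_denom if q_denom else 0.0,
    }


def macro_metrics(cm):
    """Average the one-vs-rest metrics over classes and add overall accuracy."""
    per_class = [one_vs_rest_metrics(cm, k) for k in range(len(cm))]
    macro = {name: sum(m[name] for m in per_class) / len(per_class) for name in per_class[0]}
    macro["accuracy"] = sum(cm[i][i] for i in range(len(cm))) / sum(sum(r) for r in cm)
    return macro
```

Macro averaging gives each class equal weight regardless of its size, which is one common way to keep a smaller class (such as the invalid category) from being masked by the larger ones; weighted averaging is an equally valid alternative.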

3.1. Data Acquisition and Pre-Processing

The dataset used in this study is titled “Digital Histopathology Image Dataset of Hematoxylin- and Eosin-Stained Bladder Urothelial Cell Carcinoma versus Inflammation”. The dataset is publicly available and fully anonymized and can be accessed at https://doi.org/10.5061/dryad.0cfxpnw5q. In this work, we performed secondary analysis only on these de-identified histopathology images. No new human data, biological specimens, or patient information were collected or accessed during this research.

Although it comprises only 90 whole-slide images (WSIs), the dataset provides 14,891 non-overlapping patches, a substantial number of training samples for deep learning models. The specimens were obtained from the Departments of Pathology of the Faculty of Medicine and the Cancer Institute at Assiut University. The dataset holds 90 formalin-fixed, sectioned hematoxylin and eosin histopathology slides: 43 diagnosed with cystitis and 47 with UCC. The UCC slides represent various pathological stages: pTa (5 slides), pT1 (9 slides), pT2 (28 slides), and pT3a (5 slides). The slides were photographed with an Olympus E-330 digital camera mounted on an Olympus CX31 light microscope via an Olympus E330-ADU1.2X adapter. The microscope magnification was set to 20×, and camera settings were adjusted before photography. The images have a resolution of 3136 × 2352 pixels and were stored in JPEG format with a 1:2.7 compression ratio. To mitigate the overfitting risk inherent in smaller datasets, we employed extensive data augmentation, including flipping, rotation, and mosaic augmentation, as recommended by Ameen et al. [35] for digital histopathology tasks; these techniques have been shown to enhance model generalization. Furthermore, the class distribution (5948 inflammation, 5814 UCC, and 3131 invalid patches), in which the two diagnostic classes are nearly balanced, supports robust performance.
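The flipping and rotation augmentations mentioned above can be sketched with NumPy. The exact augmentation parameters used in this study are not specified, so this sketch assumes the eight combinations of 90-degree rotations and a horizontal mirror (the symmetries of a square); mosaic augmentation, which tiles several images into one, is not shown. The function names `dihedral_augmentations` and `random_flip_rotate` are hypothetical.

```python
import numpy as np


def dihedral_augmentations(patch: np.ndarray) -> list:
    """Return the 8 flip/rotation variants of an (H, W, C) image patch.

    Histopathology patches have no canonical orientation, so each
    combination of a 90-degree rotation and a mirror is a plausible
    view of the same tissue.
    """
    variants = []
    for k in range(4):  # rotate by 0, 90, 180 and 270 degrees
        rotated = np.rot90(patch, k=k, axes=(0, 1))
        variants.append(rotated)
        variants.append(np.flip(rotated, axis=1))  # mirror left-right
    return variants


def random_flip_rotate(patch: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Pick one of the 8 variants uniformly at random (on-the-fly augmentation)."""
    return dihedral_augmentations(patch)[rng.integers(8)]
```

Restricting rotations to multiples of 90 degrees is lossless: no pixel interpolation is needed and no empty corners appear, unlike rotation by arbitrary angles.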

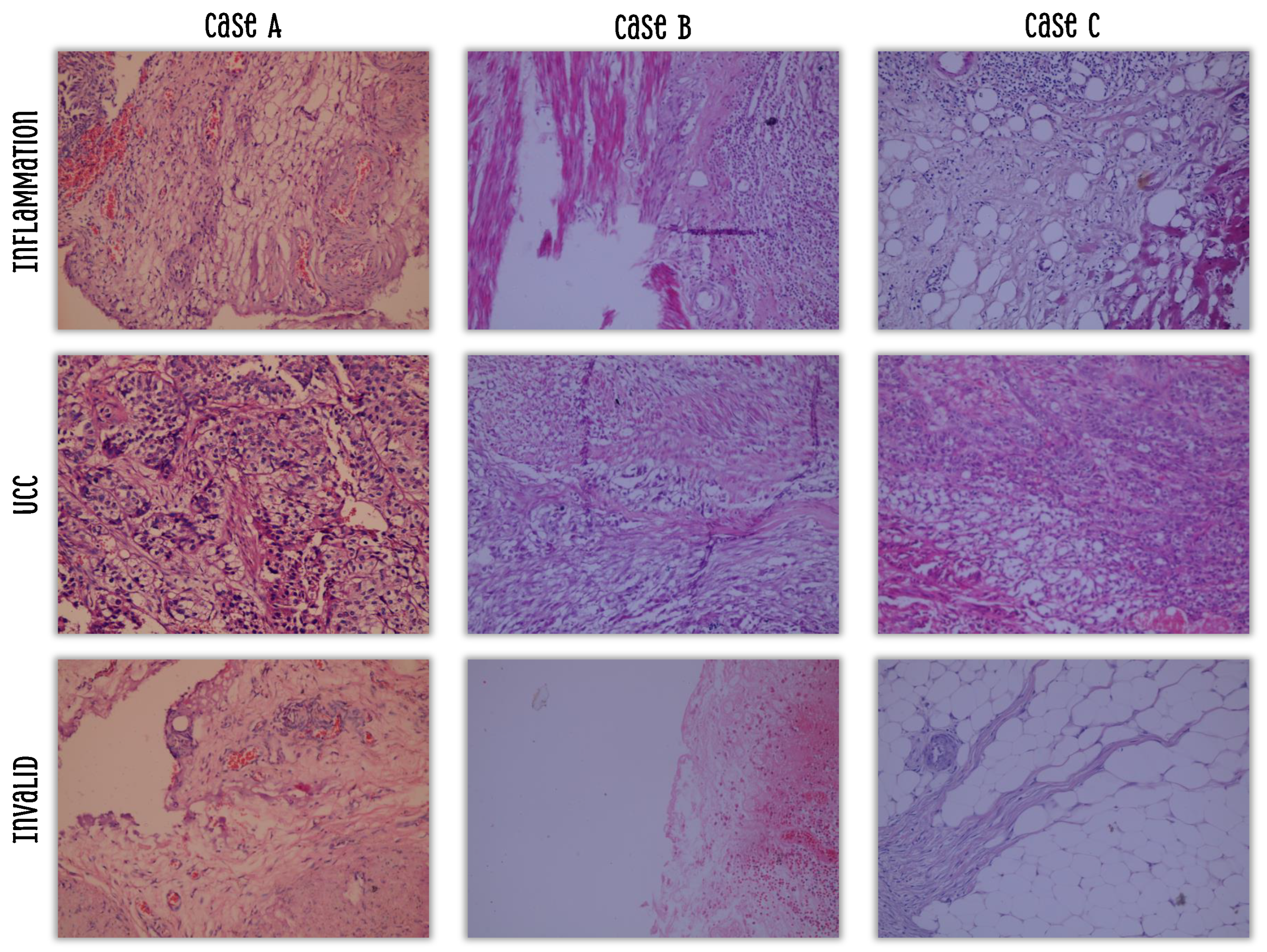

All images in the dataset were originally annotated by an expert pathologist into three diagnostic categories: inflammation, UCC, and invalid. Inflammation images were diagnosed as having an inflammatory cell infiltrate, UCC images were based on malignant urothelial cells, and invalid images represent tissue samples that do not meet diagnostic criteria for either inflammation or UCC. This category encompasses specimens with significant artifacts (e.g., folding, staining irregularities, excessive debris), areas of poor tissue quality (e.g., necrosis and autolysis), or regions lacking sufficient cellular detail for reliable classification. Critically, this class holds significant clinical relevance, as pathologists routinely encounter such suboptimal samples in daily practice. Distinguishing “invalid” tissue from true pathology prevents false-positive diagnoses and improves the overall reliability of the diagnostic system.

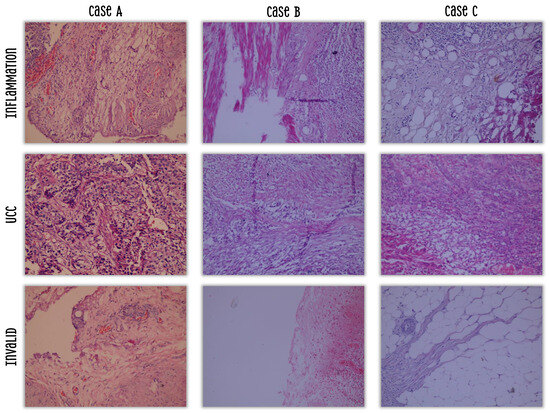

Sample images are presented in Figure 2 [36]. Moreover, to prevent data leakage, all patches derived from a single WSI were assigned to the same subset (training, validation, or test). The 90 WSIs were randomly partitioned into training (70%), validation (15%), and test (15%) sets. This slide-level splitting ensures that no information from the test set can influence the training process, guaranteeing a fair evaluation of model generalization.
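The slide-level split described above can be expressed as a short grouping routine: slides, not patches, are shuffled and partitioned, and every patch then inherits the subset of its source slide. This is a minimal sketch; the function name `split_by_slide`, the integer-percentage rounding, and the seed are assumptions, not details given in the text.

```python
import random
from collections import defaultdict


def split_by_slide(patch_to_slide, train_pct=70, val_pct=15, seed=0):
    """Assign patches to train/val/test so that no slide spans two subsets.

    patch_to_slide: dict mapping a patch identifier to its source slide identifier.
    Percentages are integers; the test subset receives the remaining slides.
    """
    slides = sorted(set(patch_to_slide.values()))
    random.Random(seed).shuffle(slides)  # reproducible slide-level shuffle
    n = len(slides)
    n_train = n * train_pct // 100  # integer arithmetic avoids float rounding surprises
    n_val = n * val_pct // 100
    subset_of_slide = {}
    for i, slide in enumerate(slides):
        if i < n_train:
            subset_of_slide[slide] = "train"
        elif i < n_train + n_val:
            subset_of_slide[slide] = "val"
        else:
            subset_of_slide[slide] = "test"
    # Each patch inherits its slide's subset, which prevents slide-level leakage.
    splits = defaultdict(list)
    for patch, slide in patch_to_slide.items():
        splits[subset_of_slide[slide]].append(patch)
    return dict(splits), subset_of_slide
```

With 90 slides, 70/15/15 percentages do not divide evenly; the flooring used here yields 63 training, 13 validation, and 14 test slides, whereas the text does not state how fractional slide counts were resolved. scikit-learn's `GroupShuffleSplit` offers the same grouping guarantee if that library is already in use.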

Figure 2.

Samples from the used dataset.

3.2. YOLOv11 Classification for Bladder Prediction

While YOLOv11 was originally designed for object detection, its architectural innovations make it well suited to high-fidelity classification tasks in medical imaging, particularly when interpretability and computational efficiency are paramount. Unlike traditional classification backbones (e.g., ResNet and EfficientNet), YOLOv11 incorporates components such as the C3k2 block and the C2PSA (Convolutional block with Parallel Spatial Attention) spatial attention module, which are designed to enhance feature extraction and focus on diagnostically relevant regions [37]. The C3k2 block reduces computational overhead while maintaining feature richness, making it attractive for resource-constrained clinical settings. The C2PSA module dynamically highlights critical spatial features, an attention mechanism that aligns well with our goal of creating a transparent diagnostic tool. Furthermore, YOLOv11 allows a seamless transition to full object detection if future work requires precise lesion localization, offering a more versatile foundation than static classification networks. Our choice is therefore not merely a classifier but a framework well suited to both precision and explainability in complex visual domains.

Several architectural improvements underlie YOLOv11's increased performance. A key component is the C3k2 block, which replaces the C2f block used in earlier versions such as YOLOv8. The C3k2 block is a computationally cheaper implementation of the Cross-Stage Partial (CSP) bottleneck: instead of one large convolution, it uses two smaller convolutions, which significantly reduces computational overhead while maintaining, or even improving, feature extraction. This efficiency matters in medical applications, where fast inference may be needed on resource-limited systems. In addition, the C2PSA component introduces a spatial attention mechanism that uses spatially pooled features to focus the model on the most important image regions [37]. This is particularly useful in medical imaging, where small pathological features confined to specific areas can be decisive. The C2PSA module can therefore help the model attend to such regions, improving its chances of detecting subtle or partially obscured pathologies relevant to the diagnosis of bladder conditions.

The YOLOv11 architecture follows the standard YOLO pattern, comprising three primary components: (1) the backbone, responsible for extracting low- and mid-level features from the input image; (2) the neck, which aggregates multi-scale features from the backbone for enhanced localization; and (3) the head, which produces the final predictions (class scores and bounding boxes). In our adaptation for classification, we employ the entire architecture, utilizing the backbone and neck for powerful feature extraction and the head for classification. The C3k2 blocks within the neck and head are optimized for efficient multi-scale feature processing, while the C2PSA module in the neck enhances spatial attention.

In the neck and head, YOLOv11 uses several C3k2 blocks to process and refine multi-scale feature maps efficiently. Depending on its configuration, a C3k2 block can function either as a standard bottleneck or as a deeper C3 module, letting the user control the depth of feature extraction. To further stabilize data flow and feature extraction, the head also contains CBS (Convolution–BatchNorm–SiLU) layers after the C3k2 blocks; the SiLU activation function has been shown experimentally to improve model performance.
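As a rough illustration of the CBS unit, the NumPy sketch below applies a convolution, inference-mode batch normalization, and SiLU in sequence. For brevity it assumes a bias-free 1×1 convolution (real CBS layers may use larger kernels) and batch normalization with stored running statistics; the function name `cbs_1x1` is hypothetical, and this is not the Ultralytics implementation.

```python
import numpy as np


def silu(x):
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def cbs_1x1(x, weight, gamma, beta, running_mean, running_var, eps=1e-5):
    """Inference-mode Convolution-BatchNorm-SiLU unit with a 1x1 kernel.

    x: input feature map, shape (C_in, H, W)
    weight: bias-free 1x1 convolution kernel, shape (C_out, C_in)
    gamma, beta, running_mean, running_var: BatchNorm parameters, shape (C_out,)
    """
    y = np.einsum("oc,chw->ohw", weight, x)  # a 1x1 conv mixes channels at each pixel
    # BatchNorm at inference time reduces to a per-channel scale and shift.
    scale = gamma / np.sqrt(running_var + eps)
    shift = beta - running_mean * scale
    y = y * scale[:, None, None] + shift[:, None, None]
    return silu(y)
```

Because inference-mode batch normalization is an affine map per channel, it can be folded into the preceding convolution's weights and bias after training, a common optimization for fast deployment.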

The model's ability to make correct predictions in difficult cases, together with its success across a variety of computer vision domains, makes it an attractive option for building powerful yet efficient tools for the automated evaluation of bladder conditions. A key strength of YOLOv11's refined architecture is its ability to extract features that are deeply embedded in complex images. For example, YOLOv11m achieves a higher mean Average Precision (mAP) than YOLOv8m on standard benchmark datasets while using 22% fewer parameters, improving computational efficiency without sacrificing accuracy. Such parameter efficiency is essential for clinical deployments, where computational resources may be limited.

The C2PSA module further improves the model’s ability to capture and exploit context, allowing it to analyze and interpret complex visual information more effectively. This is well suited to medical image classification, where the model must detect fine-grained patterns that indicate disease. Another major advantage is deployment flexibility: the variants range from the lightweight nano model to the high-accuracy xlarge model, so a suitable size can be chosen for clinical environments that differ in available resources but require comparable performance [37].
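The kind of spatial attention that C2PSA contributes can be illustrated with single-head self-attention over the spatial positions of a feature map. The NumPy sketch below is a simplified stand-in, not the Ultralytics C2PSA implementation (which wraps multi-head attention and a feed-forward layer inside a CSP-style block); the projection matrices are hypothetical random weights.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def spatial_self_attention(x, w_q, w_k, w_v):
    """Single-head self-attention across the H*W positions of x (shape C, H, W).

    w_q, w_k: (d, C) query/key projections; w_v: (C, C) value projection.
    Returns the attended map (C, H, W), including a residual connection,
    and the (H*W, H*W) attention weights (each row sums to 1).
    """
    c, h, w = x.shape
    tokens = x.reshape(c, h * w).T                          # (N, C): one token per position
    q, k, v = tokens @ w_q.T, tokens @ w_k.T, tokens @ w_v.T
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]), axis=-1)  # (N, N) position-to-position weights
    out = tokens + attn @ v                                 # residual: input + attended values
    return out.T.reshape(c, h, w), attn


rng = np.random.default_rng(0)
x = rng.standard_normal((16, 5, 5))                         # hypothetical 16-channel 5x5 map
w_q, w_k = rng.standard_normal((8, 16)), rng.standard_normal((8, 16))
w_v = rng.standard_normal((16, 16))
y, attn = spatial_self_attention(x, w_q, w_k, w_v)
```

Each output position becomes a weighted mix of all positions, which is how attention lets distant but related tissue regions inform one another.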

3.3. Interpretability with Eigen-CAM

EigenCAM generates Class Activation Maps (CAMs) by performing Singular Value Decomposition (SVD) on the activation maps of the final convolutional layer. SVD decomposes the activation matrix into orthogonal components, with the first singular vector representing the direction of maximum variance. By projecting the activation maps onto this principal component, EigenCAM identifies the most influential spatial regions contributing to the prediction. This method is advantageous because it does not require gradients, making it computationally efficient and universally applicable to any CNN architecture without modification. Its output is a heatmap that highlights the pixels or regions most salient to the model’s decision, providing a transparent view into the model’s reasoning process.

Compared with gradient-based CAM methods, Eigen-CAM is intuitive, easy to apply, and computationally efficient, and it does not depend on the model having classified the input correctly. It works with nearly any CNN without modifying its layers or retraining it. Eigen-CAM has been reported to produce more consistent and class-discriminative visual explanations than several earlier CAM methods, and to be robust to classification errors, which often originate in the dense (fully connected) layers [38].

Eigen-CAM highlights the image regions that drive a prediction by exploiting the hierarchical features learned by the convolutional layers of the feature-extraction network. Early convolutional layers capture low-level spatial features such as edges and corners. Deeper layers encode increasingly abstract, high-level features that carry semantic meaning but are harder to interpret directly. When the output of the convolutional layers is passed to the fully connected layers, these learned features are mapped onto decision boundaries that separate the classes [39].

Consider an input image $I \in \mathbb{R}^{i \times j}$ of size $i \times j$, and let $W_{L=k}$ denote the combined weight matrix of the first $k$ layers, of size $m \times n$. The class-activated output is obtained by projecting the image $I$ onto the last convolutional layer $L = k$, such that $O_{L=k} = W_{L=k}^{T} I$. Singular Value Decomposition (SVD) factorizes $O_{L=k}$ into its principal components, $O_{L=k} = U \Sigma V^{T}$, where $U$ is an orthogonal matrix whose columns are the left singular vectors, $\Sigma$ is a diagonal matrix with the singular values on its diagonal, and $V$ is an orthogonal matrix whose columns are the right singular vectors. The class activation map $L_{\text{Eigen-CAM}}$ is obtained by projecting $O_{L=k}$ onto the first eigenvector $V_1$ (the first column of $V$), such that $L_{\text{Eigen-CAM}} = O_{L=k} V_1$ [40,41].
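The projection of the activations onto the first right singular vector can be sketched in NumPy as follows. Three choices here are assumptions of this sketch rather than part of the formulation above: the activations are mean-centred per channel before the SVD, the arbitrary sign of the singular vector is aligned with the mean activation, and the map is passed through a ReLU and scaled to [0, 1]. The activation tensor is a hypothetical stand-in for the last convolutional layer of YOLOv11.

```python
import numpy as np


def eigen_cam(activations: np.ndarray) -> np.ndarray:
    """Gradient-free class activation map from activations of shape (C, H, W)."""
    c, h, w = activations.shape
    # Rows are spatial positions, columns are channels: O has shape (H*W, C).
    o = activations.reshape(c, h * w).T
    o_centred = o - o.mean(axis=0, keepdims=True)        # assumption: centre each channel
    # Economy SVD: o_centred = U @ diag(S) @ Vt; Vt[0] is the first right singular vector V_1.
    _, _, vt = np.linalg.svd(o_centred, full_matrices=False)
    cam = o_centred @ vt[0]                               # projection onto V_1, shape (H*W,)
    # Singular vectors are defined only up to sign; flip so the map correlates
    # positively with the mean activation per position (a choice of this sketch).
    if np.dot(cam, o.mean(axis=1)) < 0:
        cam = -cam
    cam = np.maximum(cam, 0.0)                            # keep positive evidence only
    if cam.max() > 0:
        cam = cam / cam.max()                             # scale to [0, 1]
    return cam.reshape(h, w)


rng = np.random.default_rng(0)
acts = 0.01 * rng.standard_normal((8, 6, 6))             # hypothetical 8-channel 6x6 activations
acts[:, 1:3, 1:3] += 5.0                                 # a strongly activated 2x2 region
cam = eigen_cam(acts)                                    # peaks inside the 2x2 region
```

No gradients are involved: one SVD of the activation matrix per image is the entire cost, which is why the method is cheap to run alongside inference.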

3.4. Performance Evaluation

We used a range of performance metrics to evaluate how well the YOLOv11 models classify BC. Each metric captures one or more facets of classification performance: accuracy, precision, recall, specificity, F1 score, intersection over union (IoU), balanced accuracy (BAC), Matthews correlation coefficient (MCC), Youden’s J statistic, and Yule’s Q statistic.

Accuracy is the proportion of correctly classified instances among all instances in the dataset. It gives an overall view of model performance but can be misleading on imbalanced datasets, where a model that favors the majority class can still achieve high accuracy.

Precision is the ratio of true-positive predictions to all positive predictions; it indicates how well the model avoids false positives. Recall, or sensitivity, is the ratio of true-positive predictions to all actual positives; it indicates how completely the model identifies the positive instances in the dataset. Specificity is the ratio of true negatives to all actual negatives; it measures how accurately the model identifies negative instances.

The F1 score is the harmonic mean of precision and recall, so it balances the two in a single measure of overall performance. Intersection over union (IoU) measures the overlap between a predicted bounding box and the corresponding ground-truth box, computed as the area of their intersection divided by the area of their union; it is relevant wherever accurate localization matters, as in object detection.
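For axis-aligned bounding boxes, IoU reduces to a few lines of arithmetic. A minimal sketch, assuming boxes given as (x_min, y_min, x_max, y_max) in pixel coordinates:

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap rectangle (zero if the boxes do not overlap).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))  # overlap 1, union 4 + 4 - 1 = 7, so 1/7 ≈ 0.1429
```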

For imbalanced datasets, BAC, the arithmetic mean of sensitivity (recall) and specificity, gives a more reliable assessment of classification performance when the class distribution is skewed. MCC uses all four entries of the confusion matrix (true positives, true negatives, false positives, and false negatives), which makes it particularly useful for imbalanced datasets because it rewards good predictions on both positive and negative cases.

Youden’s J statistic, $J = \text{sensitivity} + \text{specificity} - 1$, combines the two into a single measure. It ranges from −1 to 1: 0 corresponds to chance-level performance, 1 to a perfect classifier, and higher values indicate better performance. Yule’s Q statistic, $Q = (TP \cdot TN - FP \cdot FN)/(TP \cdot TN + FP \cdot FN)$, quantifies the association between predicted and actual classes on a scale from −1 to 1, capturing both the strength and the direction of the predictive relationship.
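All of the confusion-matrix metrics above can be computed from the four entries of a binary (or one-vs-rest) confusion matrix. The sketch below uses the standard definitions; the counts in the usage line are hypothetical.

```python
import math


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard classification metrics from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                   # sensitivity, true positive rate
    specificity = tn / (tn + fp)              # true negative rate
    f1 = 2 * precision * recall / (precision + recall)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
        "bac": (recall + specificity) / 2,                    # balanced accuracy
        "mcc": (tp * tn - fp * fn) / mcc_den,                 # Matthews correlation coefficient
        "youden_j": recall + specificity - 1,                 # Youden's J statistic
        "yule_q": (tp * tn - fp * fn) / (tp * tn + fp * fn),  # Yule's Q statistic
    }


m = binary_metrics(tp=90, fp=10, fn=5, tn=95)  # hypothetical counts
print(round(m["f1"], 4), round(m["mcc"], 4))
```

Note that MCC and Yule’s Q share the numerator $TP \cdot TN - FP \cdot FN$ but normalize it differently, which is why Q is typically much closer to 1 than MCC for the same classifier.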

4. Experiments and Discussion

Experiments were conducted on Windows 11 (64-bit) with an NVIDIA GeForce RTX 3050 GPU (4 GB VRAM; NVIDIA Corporation, Santa Clara, CA, USA) and 128 GB of RAM. Although modest, this setup was sufficient for training and evaluating the YOLOv11 models on image patches rather than whole-slide images. The software environment comprised Python 3.10, the Ultralytics YOLOv11 framework for model development, and PyTorch (version 2.1.0) with CUDA acceleration. EigenCAM, implemented through PyTorch-CAM, was used to generate Class Activation Maps for visual interpretation of model predictions. OpenCV, NumPy, SciPy, Pandas, and scikit-learn supported preprocessing, numerical computation, and performance evaluation. Development and experimentation were carried out in Jupyter Notebook (version 7.0.6) and Visual Studio Code (version 1.85.1). We evaluated five variants of the YOLOv11 architecture, YOLOv11-nano, YOLOv11-small, YOLOv11-medium, YOLOv11-large, and YOLOv11-xlarge, which differ in depth, width, and parameter count.
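A minimal sketch of how the five variants might be fine-tuned with the Ultralytics API, assuming the pretrained classification checkpoints `yolo11{n,s,m,l,x}-cls.pt` and a dataset folder with `train/` and `val/` subfolders containing one directory per class. The dataset path, epoch count, image size, batch size, and run names are hypothetical placeholders, not the settings used in this study.

```python
# Pretrained classification checkpoints for the five YOLOv11 sizes.
VARIANTS = {
    "nano": "yolo11n-cls.pt",
    "small": "yolo11s-cls.pt",
    "medium": "yolo11m-cls.pt",
    "large": "yolo11l-cls.pt",
    "xlarge": "yolo11x-cls.pt",
}


def train_all(data_dir: str, epochs: int = 50, imgsz: int = 224, batch: int = 16) -> dict:
    """Fine-tune every variant on an image-folder dataset and return the trained models.

    All hyperparameter defaults are hypothetical; a small batch size is assumed
    because the GPU in this setup has only 4 GB of VRAM.
    """
    from ultralytics import YOLO  # imported lazily so this module loads without Ultralytics

    models = {}
    for size, weights in VARIANTS.items():
        model = YOLO(weights)
        model.train(data=data_dir, epochs=epochs, imgsz=imgsz, batch=batch,
                    name=f"yolo11-{size}-bladder")  # hypothetical run name
        models[size] = model
    return models


# Example (hypothetical path): train_all("datasets/bladder_tiles")
```

Training all sizes with identical settings keeps the comparison across variants attributable to model capacity rather than to tuning differences.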

Ameen et al. [35] introduced a publicly available dataset of non-overlapping urinary bladder histopathology slides categorized as inflammation, UCC, or invalid (see Section 3.1). To address the scarcity of annotated data, they explored 11 augmentation strategies, including flipping and rotation, and evaluated four CNNs on binary classification tasks using accuracy, sensitivity, specificity, and AUC. Their findings highlighted that the most effective strategy was to apply augmentation after separating the test set but before splitting training and validation data, with Inception-v3 achieving the highest accuracy. The study emphasized the critical role of augmentation in digital histopathology and suggested further validation for broader applicability [35]. Unlike Ameen et al. [35], who excluded the invalid category, our study utilizes all three classes (inflammation, UCC, and invalid).

In Table 2, we have detailed a comparison of five classification models of YOLOv11 over seven metrics including precision, recall, F1 score, accuracy, specificity, balanced accuracy (BAC), and an average score. The outcomes depict a progressive trend of performance improvement as the model grows from nano to large, and then levels off for the extra-large variant. The YOLOv11-large (YOLO11l) model is, therefore, the best selection for this particular job as it attains the maximum scores not only in each individual metric but also in the overall average. To assess the robustness of these results, we performed 5-fold cross-validation on the entire dataset. The mean accuracy across the five folds was 96.8 ± 0.4%, with a 95% confidence interval of . Similarly, precision and recall showed high consistency, with means of 95.2 ± 0.3% and 95.3 ± 0.3% respectively. These narrow confidence intervals indicate that the model’s performance is stable and reproducible, mitigating concerns about overfitting to a single train/test split.
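For reference, a mean ± standard deviation and a t-based 95% confidence interval over five folds can be computed as below. The per-fold accuracies are hypothetical, and the sketch assumes that the ± values denote the sample standard deviation across folds.

```python
import math
import statistics

T_CRIT_DF4 = 2.776  # two-sided 95% Student t critical value for 5 - 1 = 4 degrees of freedom


def cv_summary(fold_scores):
    """Mean, sample SD, and 95% t-interval for the mean of five fold scores."""
    n = len(fold_scores)
    if n != 5:
        raise ValueError("the hard-coded critical value assumes exactly five folds")
    mean = statistics.mean(fold_scores)
    sd = statistics.stdev(fold_scores)          # sample SD (n - 1 denominator)
    half_width = T_CRIT_DF4 * sd / math.sqrt(n)
    return mean, sd, (mean - half_width, mean + half_width)


mean, sd, (lo, hi) = cv_summary([96.0, 97.0, 96.5, 97.5, 97.0])  # hypothetical fold accuracies (%)
print(f"{mean:.1f} ± {sd:.2f}%, 95% CI [{lo:.2f}, {hi:.2f}]")
```

With only five folds, the t critical value (about 2.78) is noticeably larger than the normal 1.96, so the interval is wider than a naive mean ± 2·SE would suggest.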

Table 2.

The results for the three classes of the dataset used with YOLOv11.

These results are consistent with the YOLOv11 design principle that larger variants such as “large” and “xlarge” use deeper networks and more parameters to learn more complex features, which improves their ability to recognize subtle patterns in medical images. We attribute the strong performance of YOLO11l to its redesigned, more efficient backbone and neck, which incorporate the C3k2 block and the C2PSA module. Efficient feature extraction in the C3k2 blocks and the spatial attention of C2PSA plausibly help the model focus on the diagnostically relevant regions of the histopathology slides, yielding highly accurate predictions. In addition, the model’s identical precision and recall (both 95.47%) indicate a good balance between false positives and false negatives, an important property for clinical decision support systems.

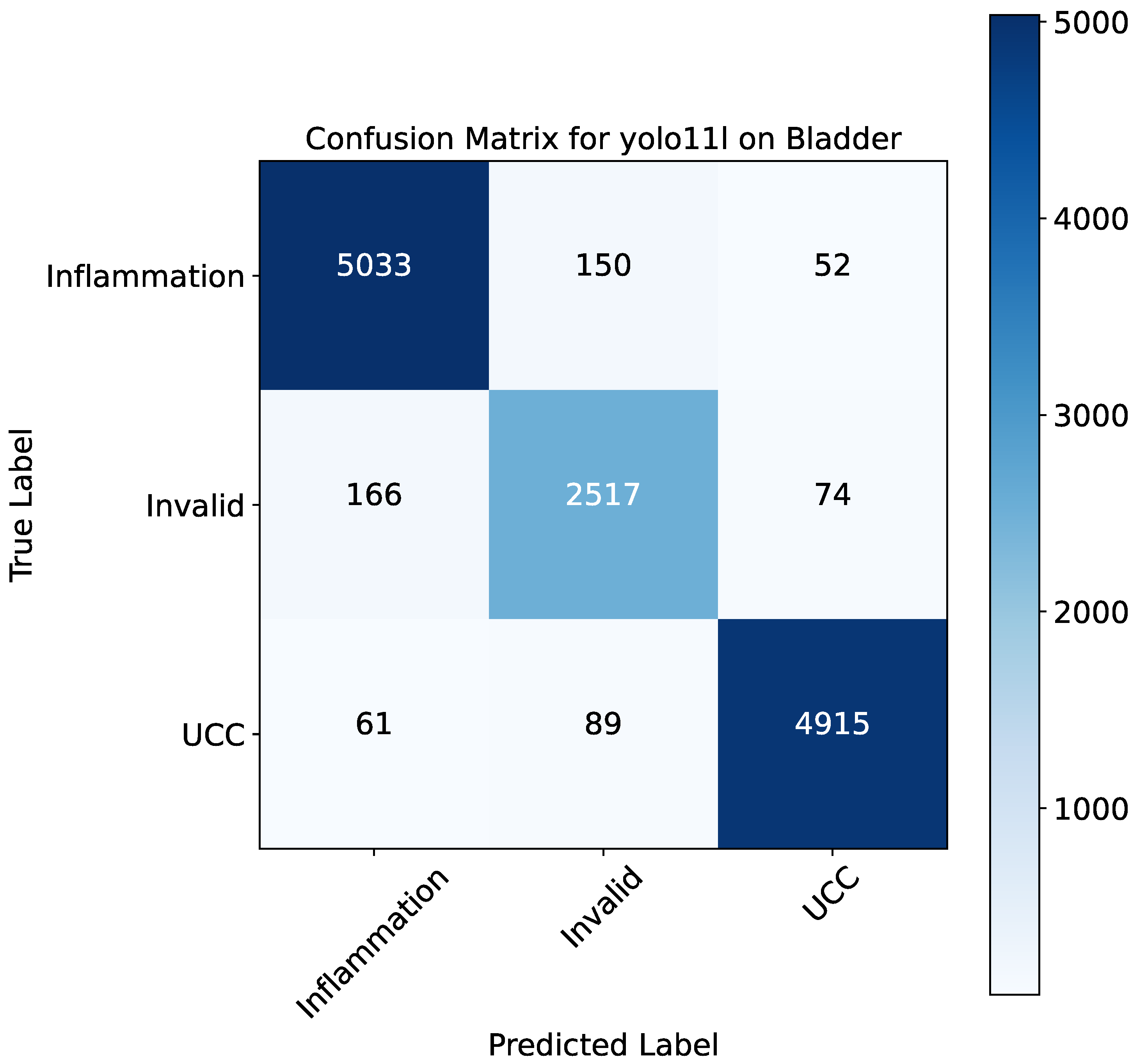

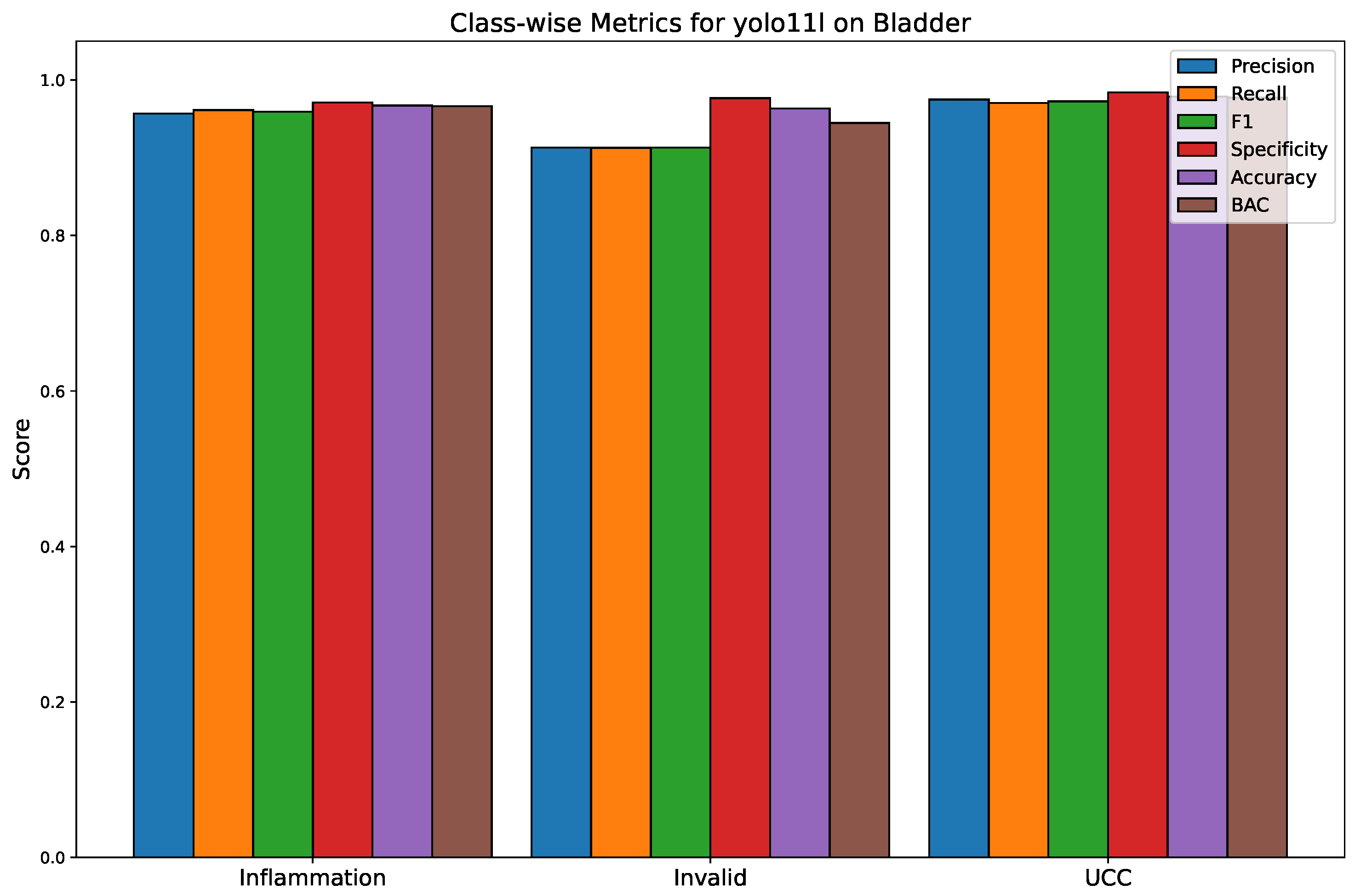

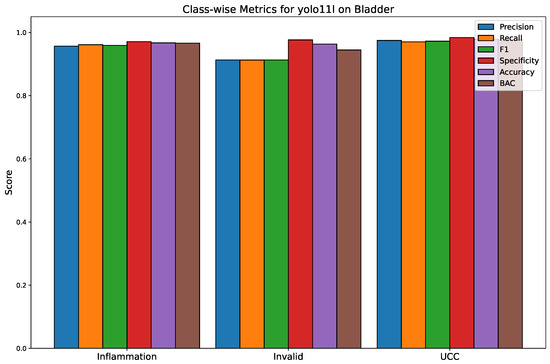

Figure 3 shows the confusion matrix of YOLO11l, which gives a detailed view of its classification performance. True positive rates are high for all three classes, with most predictions on the diagonal. Specifically, the model correctly classifies 5033 of 5235 inflammation samples (TPR = 96.13%), 2517 of 2657 invalid samples (TPR = 94.67%), and 4915 of 5065 UCC samples (TPR = 97.01%). The off-diagonal entries reveal the main sources of error: a small number of inflammation samples were misclassified as invalid (150) or UCC (52), and a similar pattern appears for the other classes. Thus, while the model separates the three tissue categories well overall, some ambiguity remains between inflammation and invalid samples, likely because of shared morphological features. Figure 4 shows the class-wise performance metrics for each class.
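Per-class true positive rates and the other one-vs-rest quantities follow directly from a 3 × 3 confusion matrix. A sketch assuming rows are true classes and columns are predicted classes; the matrix in the usage example is hypothetical and is not the matrix shown in Figure 3.

```python
import numpy as np

CLASSES = ["inflammation", "invalid", "UCC"]


def per_class_rates(cm) -> dict:
    """One-vs-rest TPR, precision, and specificity per class (rows = true, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    out = {}
    for i, name in enumerate(CLASSES):
        tp = cm[i, i]
        fn = cm[i].sum() - tp          # true class i, predicted as another class
        fp = cm[:, i].sum() - tp       # predicted class i, true class is another class
        tn = total - tp - fn - fp
        out[name] = {"tpr": tp / (tp + fn),
                     "precision": tp / (tp + fp),
                     "specificity": tn / (tn + fp)}
    return out


cm = np.array([[90, 8, 2],             # hypothetical counts
               [6, 40, 4],
               [1, 3, 96]])
rates = per_class_rates(cm)
print({k: round(v["tpr"], 3) for k, v in rates.items()})
```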

Figure 3.

Confusion matrix for the YOLOv11-large model on the bladder histopathology classification task. The matrix details the number of true positives (diagonal) and misclassifications (off-diagonal) for the three classes: inflammation, invalid, and UCC.

Figure 4.

Class-wise performance metrics for the YOLOv11-large model. This bar chart compares the precision, recall, F1 score, specificity, accuracy, and BAC for each of the three classes: inflammation, invalid, and UCC.

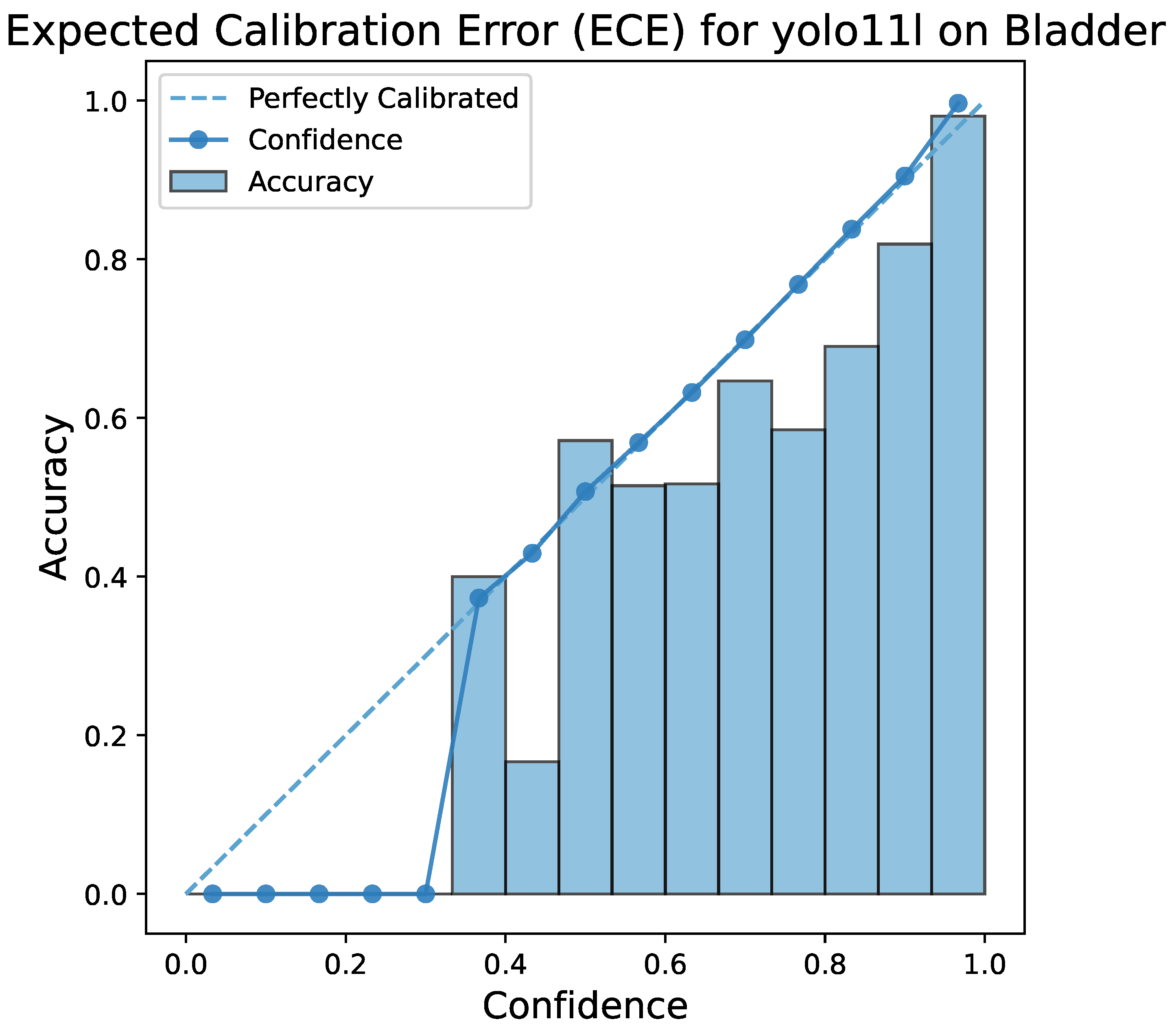

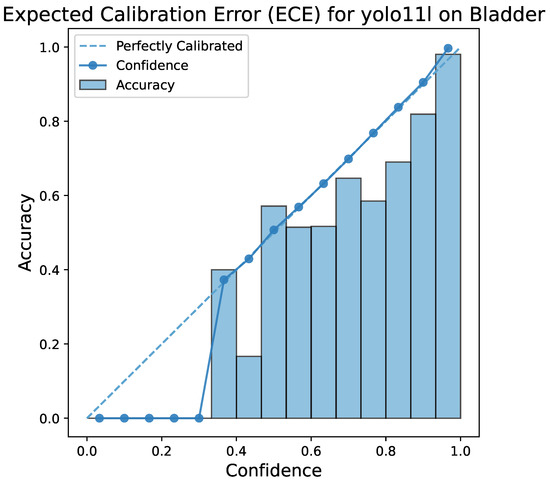

Figure 5 shows the Expected Calibration Error (ECE) of the YOLO11l model. A small ECE means that the model’s predicted probabilities agree well with the observed outcomes; for example, among predictions made with about 95% confidence, roughly 95% are correct. Such calibration is essential for the acceptance of a decision support system in medical diagnosis, because clinicians rely not only on the predicted label but also on its confidence score.
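ECE is typically estimated by grouping predictions into confidence bins and averaging the gap between accuracy and mean confidence in each bin, weighted by bin size. A NumPy sketch assuming 10 equal-width bins and top-label confidences (the binning behind Figure 5 is not specified here); the example inputs are hypothetical.

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins: int = 10) -> float:
    """ECE = sum over bins of (|B| / n) * |accuracy(B) - mean confidence(B)|."""
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    n = len(confidences)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        # Right-closed bins (lo, hi]; a confidence of exactly 0 falls in no bin.
        in_bin = (confidences > lo) & (confidences <= hi)
        if in_bin.any():
            gap = abs(correct[in_bin].mean() - confidences[in_bin].mean())
            ece += in_bin.sum() / n * gap
    return float(ece)


conf = [0.95, 0.95, 0.95, 0.95, 0.65, 0.65]  # hypothetical top-label confidences
hit = [1, 1, 1, 0, 1, 0]                     # 1 = prediction was correct
print(expected_calibration_error(conf, hit))
```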

Figure 5.

ECE plot for the YOLOv11-large model. This figure visualizes the relationship between the model’s predicted confidence and its actual accuracy, demonstrating that the model is well-calibrated for all three classes.

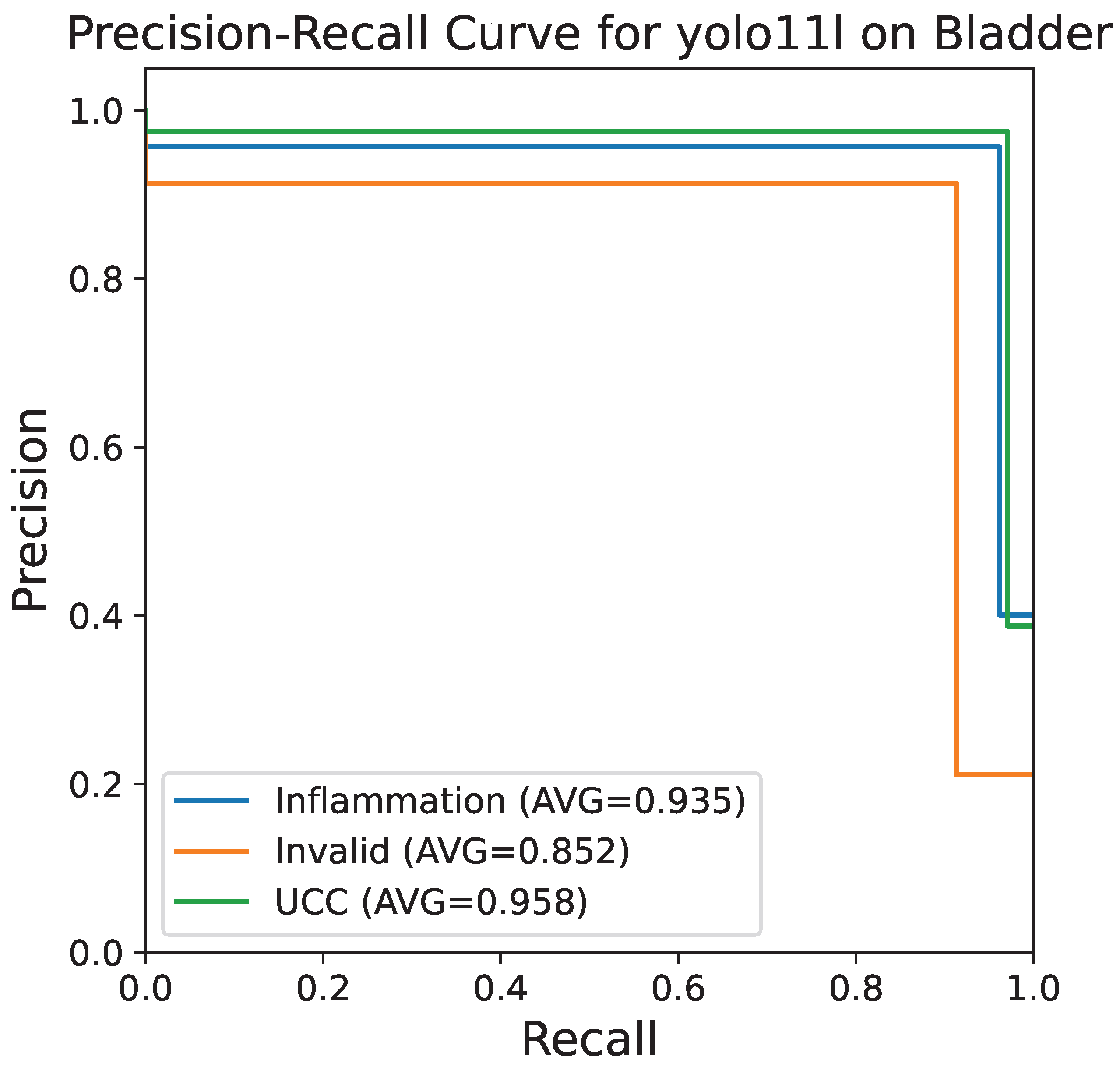

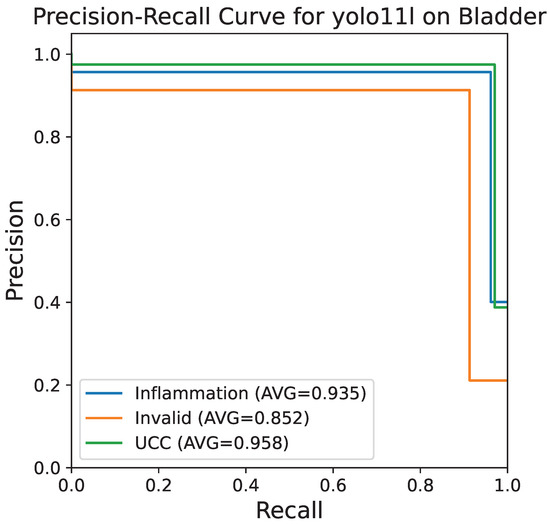

Figure 6 shows the precision–recall (PR) curves, which are particularly informative for imbalanced datasets. The class-specific areas under the PR curve are high for inflammation (0.935) and UCC (0.958) and lower for the invalid class (0.852). These values indicate that the model maintains high precision at high recall levels, which is important in a diagnostic context where false negatives must be minimized.
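The area under a PR curve is commonly summarized by average precision (AP): the precision at each rank where a positive is retrieved, averaged over all positives. A one-vs-rest sketch for a single class, assuming no tied scores (libraries such as scikit-learn’s `average_precision_score` handle ties by grouping thresholds); the inputs are hypothetical.

```python
import numpy as np


def average_precision(y_true, scores) -> float:
    """AP = sum_n (R_n - R_{n-1}) * P_n, assuming all scores are distinct."""
    y_true = np.asarray(y_true)
    order = np.argsort(-np.asarray(scores, dtype=float))   # rank by descending score
    hits = y_true[order] == 1
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    # Recall rises by 1/P only at positive items, so AP is the mean
    # precision over the ranks where a positive is retrieved.
    return float(precision_at_k[hits].sum() / hits.sum())


print(average_precision([1, 0, 1, 1, 0], [0.9, 0.8, 0.7, 0.6, 0.5]))  # (1 + 2/3 + 3/4) / 3 ≈ 0.8056
```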

Figure 6.

PR curve for the YOLOv11-large model on the bladder histopathology classification task. The area under the PR curve for each class is shown: inflammation (0.935), invalid (0.852), and UCC (0.958), indicating high performance even on imbalanced data.

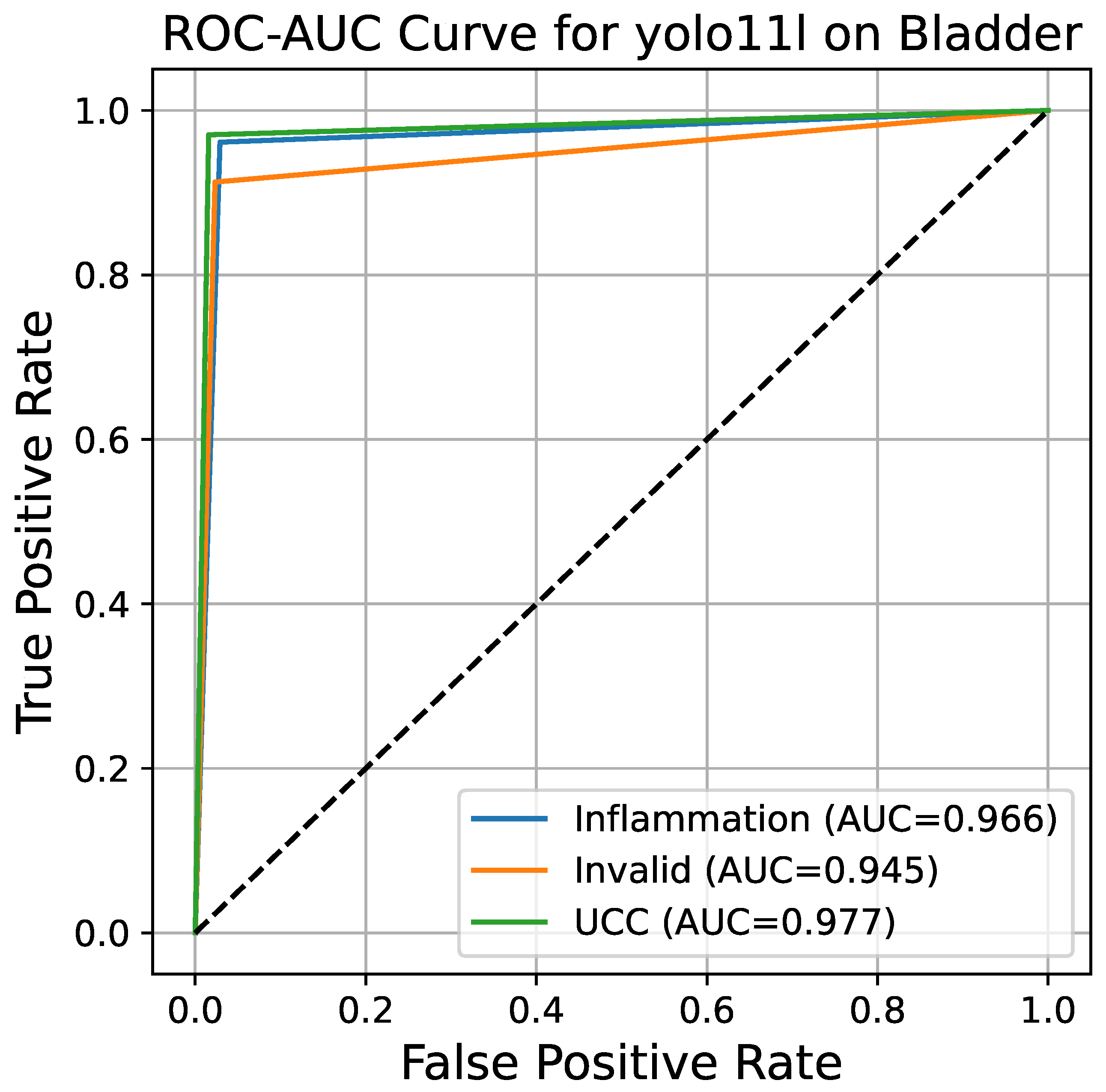

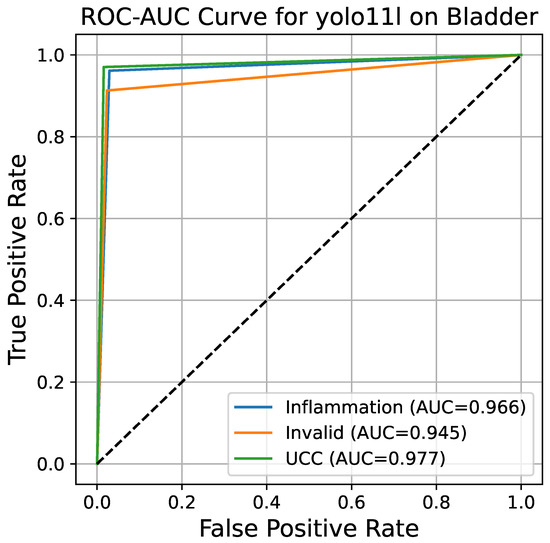

Figure 7 shows the ROC curve and the corresponding AUC for the YOLO11l model. The ROC curve plots the true positive rate (TPR, or sensitivity) against the false positive rate (FPR, or 1 − specificity) across classification thresholds. The overall AUC for the multi-class problem, computed with the One-vs.-Rest (OvR) method, is 0.972. This high value indicates that the model discriminates well between the three classes, far better than random guessing (AUC = 0.5). The nearly perfect curves for the inflammation and UCC classes, together with a slightly lower but still strong curve for the invalid class, support the model’s overall discriminative ability.
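A one-vs-rest AUC can be computed without tracing the curve, because ROC AUC equals the probability that a randomly chosen positive is scored above a randomly chosen negative (ties count one half). The sketch below macro-averages the per-class AUCs; whether the reported overall value is a macro or a weighted average is an assumption here, and the O(P × N) pairwise comparison suits illustration rather than very large test sets.

```python
import numpy as np


def binary_auc(y_true, scores) -> float:
    """ROC AUC via pairwise comparison of positive and negative scores."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]                    # every positive/negative pair
    return float(((diff > 0) + 0.5 * (diff == 0)).mean())


def ovr_macro_auc(labels, probs) -> float:
    """Macro-averaged one-vs-rest AUC; probs has shape (n_samples, n_classes)."""
    labels = np.asarray(labels)
    probs = np.asarray(probs, dtype=float)
    aucs = [binary_auc(labels == k, probs[:, k]) for k in range(probs.shape[1])]
    return float(np.mean(aucs))


print(binary_auc([1, 0, 1, 1, 0], [0.9, 0.8, 0.7, 0.6, 0.5]))  # 4 of 6 pairs ranked correctly
```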

Figure 7.

ROC curve for the YOLOv11-large model. The overall AUC score of 0.972, calculated using the One-vs-Rest (OvR) method, confirms the model’s outstanding ability to discriminate between the three classes of bladder histopathology.

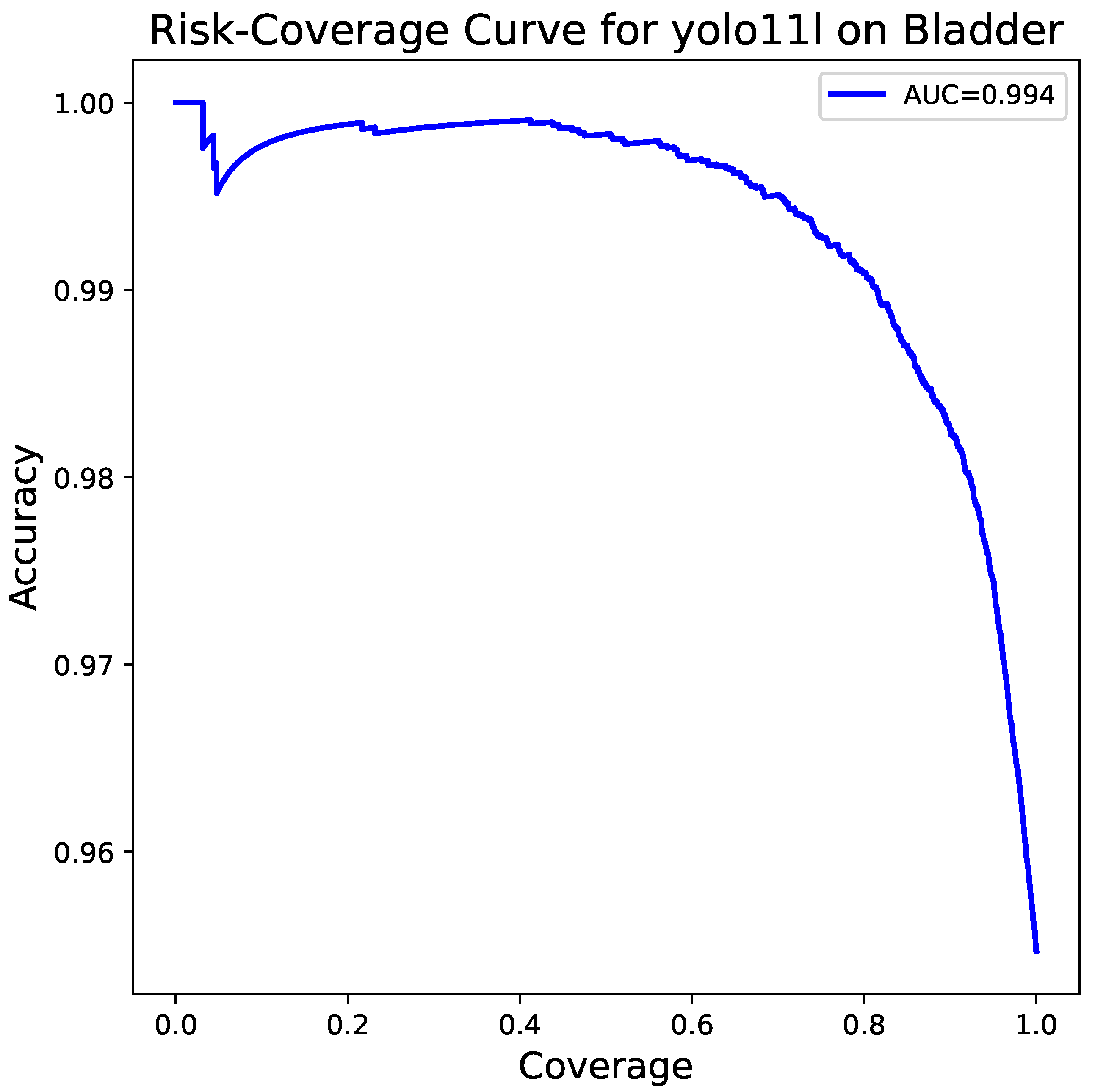

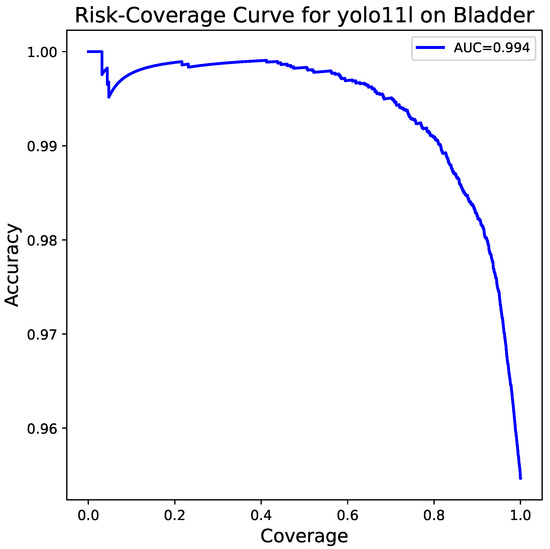

Finally, Figure 8 shows the risk–coverage curve, which plots the error rate (risk) on the samples the model accepts against the fraction of samples it accepts (coverage), with samples ranked by confidence. The area under the risk–coverage curve (AURC) is very small (0.006), equivalently 1 − AURC = 0.994, showing that the model remains accurate even when it covers a large portion of the data. This supports the model’s reliability and its potential use in clinical practice, where a high degree of certainty is required.
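A risk–coverage curve is obtained by ranking test samples by confidence and, for each coverage level (the fraction of most-confident samples the model answers), measuring the error rate on the accepted samples. A sketch using the discrete mean-over-ranks approximation of AURC (lower is better), assuming distinct confidences; the inputs are hypothetical.

```python
import numpy as np


def risk_coverage(confidences, correct):
    """Return coverage levels, selective risk at each level, and the AURC."""
    order = np.argsort(-np.asarray(confidences, dtype=float))  # most confident first
    errors = 1.0 - np.asarray(correct, dtype=float)[order]
    k = np.arange(1, len(errors) + 1)
    coverage = k / len(errors)
    risk = np.cumsum(errors) / k        # error rate among the k most confident samples
    aurc = float(risk.mean())           # average risk over all coverage levels
    return coverage, risk, aurc


cov, risk, aurc = risk_coverage([0.9, 0.8, 0.7, 0.6], [1, 1, 0, 1])
print(aurc)  # (0 + 0 + 1/3 + 1/4) / 4 ≈ 0.1458
```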

Figure 8.

Risk–coverage curve for the YOLOv11-large model. The area under the curve (AURC) of 0.006, i.e., 1 − AURC = 0.994, demonstrates the model’s reliability, with high accuracy maintained across a wide range of coverage levels.

In summary, our experiments support the use of the YOLOv11-large model for multi-class classification of bladder histopathology slides. It outperforms the previous methods and leverages the architectural elements of the YOLOv11 framework to produce precise, dependable, and well-calibrated predictions.

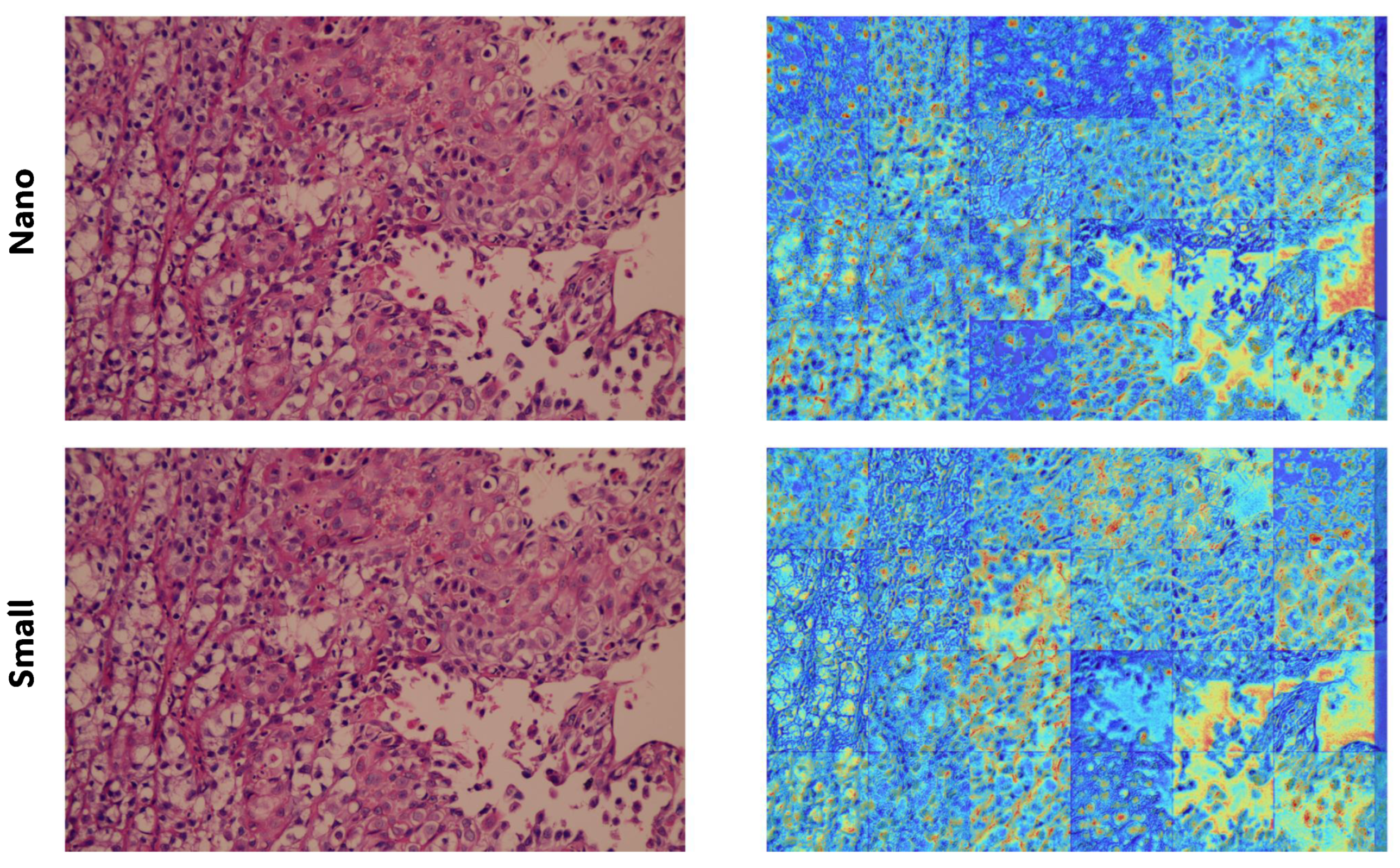

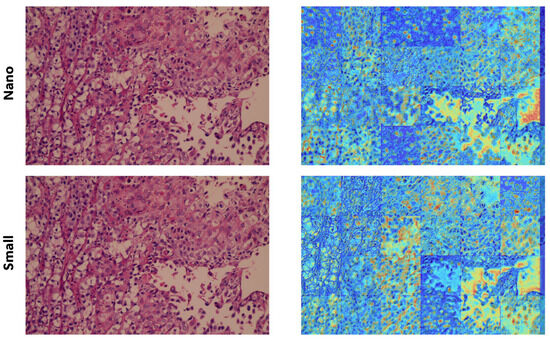

Additionally, Figure 9 shows how EigenCAM interprets a test sample. EigenCAM is applied to each tile of the large histopathology image to produce a visual explanation. The interpretation is computed on the final 13 layers of the YOLOv11 architecture, which enhances the descriptive quality of the resulting heatmaps.
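Because an activation map is coarser than the input tile, it must be upsampled before it is overlaid on the image. The NumPy sketch below uses nearest-neighbour upsampling and a simple red-channel alpha blend; the tile size, CAM resolution, and blending weight are hypothetical, and in practice OpenCV (bilinear resizing, colour maps) would typically be used instead.

```python
import numpy as np


def overlay_cam(tile_rgb: np.ndarray, cam: np.ndarray, alpha: float = 0.4) -> np.ndarray:
    """Blend a [0, 1] activation map onto an RGB uint8 tile whose size is a multiple of the map's."""
    h, w, _ = tile_rgb.shape
    sy, sx = h // cam.shape[0], w // cam.shape[1]
    if sy * cam.shape[0] != h or sx * cam.shape[1] != w:
        raise ValueError("tile size must be an integer multiple of the CAM size")
    # Nearest-neighbour upsampling: repeat each CAM cell over an sy x sx block.
    cam_up = np.repeat(np.repeat(cam, sy, axis=0), sx, axis=1)
    heat = np.zeros((h, w, 3))
    heat[..., 0] = cam_up * 255.0                         # encode activation in the red channel
    blended = (1.0 - alpha) * tile_rgb.astype(float) + alpha * heat
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)


tile = np.full((224, 224, 3), 200, dtype=np.uint8)        # hypothetical uniform grey tile
cam = np.zeros((7, 7))
cam[3, 3] = 1.0                                           # one highly activated cell
out = overlay_cam(tile, cam)
```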

Figure 9.

The EigenCAM interpretation on a testing histopathology sample using the YOLOv11.

4.1. Comparison with Established Classification Architectures

To establish the relative advantage of the proposed YOLOv11 framework, we compared it with several established deep learning classification architectures: ResNet-50, DenseNet-121, EfficientNet-B4, and Vision Transformer (ViT-Base). All models were trained and evaluated under identical conditions (same dataset, train/val/test split, augmentations, and hardware). The results are presented in Table 3. The YOLOv11-large model outperforms all baseline architectures on all key metrics. We attribute this advantage to its specialized architectural features (C3k2 and C2PSA), which enhance feature extraction and spatial attention and thus help it discriminate subtle pathological patterns better than generic classification backbones.

Table 3.

Performance comparison between YOLOv11-Large and established classification baselines.

4.2. Medical Importance of the Study

This study addresses an important unmet need in urologic oncology: efficient, accurate, and objective diagnosis of bladder cancer from histopathology slides. BC is among the ten most common malignancies worldwide, with high recurrence rates and significant mortality when it is not diagnosed early. Current diagnostic procedures depend on invasive interventions such as cystoscopy and on the subjective interpretation of H&E-stained tissue sections by pathologists; they are time-consuming, resource intensive, and subject to inter-observer variability.

The proposed YOLOv11-EigenCAM framework combines highly accurate automated diagnosis with visual interpretation. The model achieves an accuracy between 93.81% and 96.49% and reliably classifies tissue into three clinically relevant categories: inflammation, UCC, and invalid/low-quality samples. Such automation could shorten diagnostic turnaround times. It could also be clinically valuable for case triage, allowing pathologists to concentrate on high-risk or ambiguous samples.

Such AI-enabled applications could be integrated into digital pathology systems at clinical sites for pre-screening. Faster assessment of malignant lesions could speed up intervention, which is one way to improve patient survival. For example, the system could be deployed in high-volume pathology hubs, or in telepathology networks serving rural and underserved areas, to prioritize urgent cases, reduce diagnostic backlogs, and provide consistent assessments where expert pathologists are scarce.

EigenCAM visualizes which tissue regions are responsible for each of the model’s predictions. This interpretability can promote clinicians’ trust, support the education of trainees, and enable quality control through human–AI collaboration. Such transparency helps position AI as a trusted co-pilot rather than a substitute, consistent with the regulatory and ethical requirements for the clinical deployment of AI.

Beyond primary diagnosis, the strong performance metrics (specificity > 94%, Youden’s J > 0.88, Yule’s Q > 0.98) suggest further potential applications, such as treatment monitoring, recurrence prediction, and risk stratification. For example, the system could help evaluate therapeutic response or detect residual disease in serial biopsies or surgical specimens.

This work helps bridge the gap between cutting-edge deep learning and clinical practice. By pairing widely available H&E-stained slides with interpretable output, the YOLOv11-EigenCAM framework offers a scalable, cost-effective, and trustworthy approach to BC diagnostics that could support personalized medicine, improve patient outcomes, and reduce the burden on healthcare systems.

4.3. Slide-Level Aggregation and Clinical Relevance

To bridge the gap between patch-level performance and the clinical workflow, we implemented a simple slide-level aggregation strategy. For each WSI in the test set, the predictions of all its patches were combined by majority voting: the slide was assigned the most frequent predicted class among its patches. The resulting slide-level accuracy of 94.44% (Table 4) is slightly lower than the patch-level performance but shows that the model retains strong diagnostic capability at the clinically relevant scale. This approach provides a practical output for pathologists, who typically evaluate entire slides rather than individual patches. Future work can explore more sophisticated aggregation methods (e.g., weighted averaging based on patch confidence) to further improve slide-level accuracy.
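Majority voting, and the confidence-weighted alternative mentioned as future work, can be sketched as follows. Ties in the plain vote are broken by a fixed class priority that puts UCC first (erring toward flagging carcinoma); the tie-break rule used for Table 4 is not stated, so this priority, like the example data, is a hypothetical choice of the sketch.

```python
from collections import Counter, defaultdict

CLASS_ORDER = ["UCC", "inflammation", "invalid"]  # hypothetical tie-break priority


def majority_vote(patch_labels):
    """Slide label = most frequent patch label; ties go to the earliest class in CLASS_ORDER."""
    counts = Counter(patch_labels)
    best = max(counts.values())
    return next(c for c in CLASS_ORDER if counts.get(c, 0) == best)


def weighted_vote(patch_labels, confidences):
    """Slide label = class with the largest summed patch confidence."""
    totals = defaultdict(float)
    for label, conf in zip(patch_labels, confidences):
        totals[label] += conf
    return max(totals, key=totals.get)


labels = ["UCC", "inflammation", "inflammation", "inflammation", "UCC"]
confs = [0.98, 0.40, 0.45, 0.50, 0.97]              # hypothetical patch confidences
print(majority_vote(labels), weighted_vote(labels, confs))  # inflammation UCC
```

The example shows why weighting can matter: three low-confidence inflammation patches outvote two high-confidence UCC patches under plain majority voting, but not under confidence weighting.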

Table 4.

Performance at the Slide Level (Majority Voting).

4.4. Complexity Analysis and Real-Time Implementation

The proposed YOLOv11-EigenCAM framework was designed with both diagnostic accuracy and practical, computationally feasible clinical deployment in mind. Its complexity depends mainly on the YOLOv11 variant used; the nano and small variants in particular were considered for balancing performance against resource constraints.

YOLOv11-nano is a lightweight option with approximately 2.7 million parameters, suited to severely constrained edge devices or environments with little GPU memory, such as the 4 GB NVIDIA GPU used in this study. Its anchor-free design and optimized convolutional modules (C2f and C3) reduce computational demands while preserving solid feature extraction. YOLOv11-small (6.4 million parameters) offers higher accuracy at a higher computational cost and is better suited to server deployments with fewer constraints on processing power and memory.

Both variants used mosaic augmentation during training to improve generalization without significantly affecting inference time. At inference, YOLOv11 is expected to run in real time or near real time on standard hardware, processing histopathology tiles fast enough for clinical workflows. Although this study does not report exact FPS values, because whole-slide image analysis is performed on static images, the model’s design naturally supports rapid batch processing of tiled images, a critical requirement when scanning large digital slides.
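Because exact FPS values are not reported, one simple way to measure tile throughput on a given machine is to time batched calls to whichever prediction function is deployed. In the sketch below, `predict_batch` is a hypothetical callable (for example, a thin wrapper around the trained model); the dummy predictor only demonstrates the harness, and the batch size is an assumed value.

```python
import time
from typing import Callable, Sequence


def measure_throughput(predict_batch: Callable[[Sequence], list], tiles: Sequence,
                       batch_size: int = 32, warmup_batches: int = 1) -> float:
    """Return tiles processed per second, excluding warm-up batches."""
    batches = [tiles[i:i + batch_size] for i in range(0, len(tiles), batch_size)]
    for batch in batches[:warmup_batches]:          # warm-up (e.g. CUDA initialisation)
        predict_batch(batch)
    timed = batches[warmup_batches:]
    if not timed:
        raise ValueError("need more tiles than the warm-up batches cover")
    start = time.perf_counter()
    n_done = 0
    for batch in timed:
        predict_batch(batch)
        n_done += len(batch)
    elapsed = max(time.perf_counter() - start, 1e-12)  # guard against a zero interval
    return n_done / elapsed


# Demonstration with a dummy predictor that labels every tile as UCC.
rate = measure_throughput(lambda batch: ["UCC"] * len(batch), list(range(1000)))
```

Excluding warm-up batches matters on GPUs, where the first call typically includes one-off initialization costs that would otherwise understate steady-state throughput.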

EigenCAM adds little computational overhead at inference time. Unlike gradient-based techniques such as Grad-CAM, it does not require backpropagation; instead, it applies SVD to the activation maps of the last convolutional layer. It therefore needs less computation, requires no retraining or architectural change, and can be computed alongside the classification output. EigenCAM produces heatmaps for each tile within seconds (see Figure 9 for an example), so clinicians can quickly review visual explanations alongside the predictions without compromising diagnostic throughput.

For real-time use in pathology labs, the system could be deployed as a cloud-based or local server application connected to digital slide scanners. Histopathology images are preprocessed into non-overlapping tiles (as in this study), which are then classified by YOLOv11. The results can be visualized with EigenCAM overlays and aggregated into slide-level reports that mark areas of inflammation, carcinoma, or invalid tissue, helping pathologists concentrate on suspicious regions. This human-in-the-loop paradigm can improve diagnostic safety and reduce workloads.
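The tiling step can be sketched as a non-overlapping grid over an image array. This sketch assumes the slide region is already loaded into memory (whole-slide images are usually read region by region with a dedicated reader such as OpenSlide), simply drops incomplete border tiles, and uses a hypothetical tile size.

```python
import numpy as np


def tile_image(image: np.ndarray, tile: int = 224):
    """Split an (H, W, C) array into non-overlapping tile x tile patches.

    Returns the stacked tiles and their (row, col) top-left coordinates, so that
    per-tile predictions and EigenCAM maps can be placed back on the slide.
    Border regions smaller than a full tile are discarded.
    """
    h, w = image.shape[:2]
    coords = [(r, c) for r in range(0, h - tile + 1, tile)
                     for c in range(0, w - tile + 1, tile)]
    if not coords:
        raise ValueError("image is smaller than a single tile")
    tiles = np.stack([image[r:r + tile, c:c + tile] for r, c in coords])
    return tiles, coords


img = np.zeros((500, 700, 3), dtype=np.uint8)   # hypothetical slide region
img[224:448, 448:672] = 7                       # mark one tile's area
tiles, coords = tile_image(img)
print(tiles.shape, coords[-1])                  # (6, 224, 224, 3) (224, 448)
```

Keeping the coordinates alongside the tiles is what allows the slide-level report and EigenCAM overlays to be reassembled in the original slide geometry.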

The modular design of the framework allows future optimization through quantization, pruning, or export to TensorRT for faster inference on NVIDIA hardware. Because it is built on open-source tools such as Ultralytics YOLOv11 and the PyTorch CAM library, the framework can be scaled across institutions and adapted to new datasets or cancer subtypes.

5. Conclusions and Future Directions

This study demonstrates the strong potential of modern deep learning architectures for multiclass bladder cancer histopathology, with the large model achieving state-of-the-art accuracy and excellent robustness across inflammation, UCC, and invalid categories. Its architectural innovations (efficient feature extraction and spatial attention) enabled precise and well-calibrated predictions, supported by high AUPRC and ROC–AUC scores. Although minor ambiguities emerged between inflammation and invalid samples, the overall discriminative performance was clinically compelling. Future work will focus on expanding dataset diversity to strengthen generalizability further, integrating multimodal clinical and molecular data, and deploying the framework within real digital pathology workflows enhanced by EigenCAM-based visual explanations. We also plan to explore model compression and optimization strategies to facilitate deployment in resource-constrained environments. Importantly, clinical validation by expert pathologists is planned as a key component of our forthcoming evaluations, ensuring that the system’s predictions align with real-world diagnostic expectations.

Author Contributions

Conceptualization, A.A.M., Y.A., A.I.A. and M.B.; Methodology, A.A.M., Y.A. and H.Z.; software, H.Z., H.M.B. and M.A.E.; validation, A.A.M., M.B. and A.I.A.; formal analysis, Y.A., H.Z. and M.A.E.; resources, H.Z. and M.B.; data curation, A.A.M.; writing—original draft preparation, Y.A., H.Z. and A.I.A.; writing—review and editing, H.M.B., M.B. and M.A.E.; visualization, A.A.M.; supervision, M.B. and M.A.E.; project administration, M.A.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

This study did not involve any direct interaction with human participants or the collection of human biological material. All analyses were conducted using a publicly available, fully anonymized histopathology image dataset accessed via: https://doi.org/10.5061/dryad.0cfxpnw5q.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset is available at https://doi.org/10.5061/dryad.0cfxpnw5q. The data are released for research purposes and contain no personally identifiable information.

Acknowledgments

During the preparation of this manuscript, the authors used ChatGPT (OpenAI, GPT-5, 2025 version) for text refinement, including grammar, sentence structure, and clarity improvements. The authors reviewed and edited the output and take full responsibility for the content of this publication. No part of the research design, data analysis, results interpretation, or scientific conclusions relied on AI-generated content.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- He, K.; Meng, X.; Wang, Y.; Feng, C.; Liu, Z.; Li, Z.; Niu, Y. Progress of Multiparameter Magnetic Resonance Imaging in Bladder Cancer: A Comprehensive Literature Review. Diagnostics 2024, 14, 442.

- Audenet, F.; Yates, D.R.; Cussenot, O.; Rouprêt, M. The role of chemotherapy in the treatment of urothelial cell carcinoma of the upper urinary tract (UUT-UCC). Urol. Oncol. Semin. Orig. Investig. 2013, 31, 407–413.

- Ferro, M.; Falagario, U.G.; Barone, B.; Maggi, M.; Crocetto, F.; Busetto, G.M.; Giudice, F.d.; Terracciano, D.; Lucarelli, G.; Lasorsa, F.; et al. Artificial Intelligence in the Advanced Diagnosis of Bladder Cancer-Comprehensive Literature Review and Future Advancement. Diagnostics 2023, 13, 2308.

- Lubbad, M.; Karaboga, D.; Basturk, A.; Akay, B.; Nalbantoglu, U.; Pacal, I. Machine learning applications in detection and diagnosis of urology cancers: A systematic literature review. Neural Comput. Appl. 2024, 36, 6355–6379.

- Alsulami, A.A.; Albarakati, A.; Al-Ghamdi, A.A.M.; Ragab, M. Identification of anomalies in lung and colon cancer using computer vision-based Swin Transformer with ensemble model on histopathological images. Bioengineering 2024, 11, 978.

- Coleman, J.F.; Hansel, D.E. Utility of Diagnostic and Prognostic Markers in Urothelial Carcinoma of the Bladder. Adv. Anat. Pathol. 2009, 16, 67–78.

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

- Farsi, M.; ZainEldin, H.; Sayed, H.A.; El-Agamy, R.F.; Atlam, E.-S.; Alsaedi, S.A.; Alwateer, M.; Balaha, H.M.; Badawy, M.; Elhosseini, M.A. Deep Learning for Pathology: YOLOv8 with EigenCAM for Reliable Colorectal Cancer Diagnostics. Bioengineering 2025, 12, 1203.

- Saginala, K.; Barsouk, A.; Aluru, J.S.; Rawla, P.; Padala, S.A.; Barsouk, A. Epidemiology of Bladder Cancer. Med. Sci. 2020, 8, 15.

- Babjuk, M.; Burger, M.; Capoun, O.; Cohen, D.; Compérat, E.M.; Dominguez Escrig, J.L.; Gontero, P.; Liedberg, F.; Masson-Lecomte, A.; Mostafid, A.H.; et al. European Association of Urology Guidelines on Non-muscle-invasive Bladder Cancer (Ta, T1, and Carcinoma in Situ). Eur. Urol. 2022, 81, 75–94.

- Jiang, P.; Wu, S.; Qin, W.; Xie, Y. Complex Large-Deformation Multimodality Image Registration Network for Image-Guided Radiotherapy of Cervical Cancer. Bioengineering 2024, 11, 1304.

- Jyothi, P.; Singh, A.R. Deep learning models and traditional automated techniques for brain tumor segmentation in MRI: A review. Artif. Intell. Rev. 2022, 56, 2923–2969.

- Qi, W.; Xu, X.; Qian, K.; Schuller, B.W.; Fortino, G.; Aliverti, A. A review of AIoT-based human activity recognition: From application to technique. IEEE J. Biomed. Health Inform. 2024, 29, 2425–2438.

- Leung, J.H.; Karmakar, R.; Mukundan, A.; Thongsit, P.; Chen, M.M.; Chang, W.Y.; Wang, H.C. Systematic meta-analysis of computer-aided detection of breast cancer using hyperspectral imaging. Bioengineering 2024, 11, 1060.

- Solawetz, J. What Is YOLOv8? The Ultimate Guide. blog.roboflow.com, 2024. Available online: https://blog.roboflow.com/whats-new-in-yolov8/ (accessed on 4 October 2024).

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-time flying object detection with YOLOv8. arXiv 2023, arXiv:2305.09972. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar] [CrossRef]

- Xiao, B.; Nguyen, M.; Yan, W.Q. Fruit ripeness identification using YOLOv8 model. Multimed. Tools Appl. 2023, 83, 28039–28056.

- Pham, T.L.; Le, V.H. Ovarian Tumors Detection and Classification from Ultrasound Images Based on YOLOv8. J. Adv. Inf. Technol. 2024, 15, 264–275.

- Demir, R.; Koc, S.; Ozturk, D.G.; Bilir, S.; Ozata, H.I.; Williams, R.; Christy, J.; Akkoc, Y.; Tinay, I.; Gunduz-Demir, C.; et al. Artificial intelligence assisted patient blood and urine droplet pattern analysis for non-invasive and accurate diagnosis of bladder cancer. Sci. Rep. 2024, 14, 2488.

- Sarkar, S.; Min, K.; Ikram, W.; Tatton, R.W.; Riaz, I.B.; Silva, A.C.; Bryce, A.H.; Moore, C.; Ho, T.H.; Sonpavde, G.; et al. Performing Automatic Identification and Staging of Urothelial Carcinoma in Bladder Cancer Patients Using a Hybrid Deep-Machine Learning Approach. Cancers 2023, 15, 1673.

- Lee, M.C.; Wang, S.Y.; Pan, C.T.; Chien, M.Y.; Li, W.M.; Xu, J.H.; Luo, C.H.; Shiue, Y.L. Development of Deep Learning with RDA U-Net Network for Bladder Cancer Segmentation. Cancers 2023, 15, 1343.

- Sun, D.; Hadjiiski, L.; Gormley, J.; Chan, H.P.; Caoili, E.M.; Cohan, R.H.; Alva, A.; Gulani, V.; Zhou, C. Survival Prediction of Patients with Bladder Cancer after Cystectomy Based on Clinical, Radiomics, and Deep-Learning Descriptors. Cancers 2023, 15, 4372.

- Wu, E.; Hadjiiski, L.M.; Samala, R.K.; Chan, H.P.; Cha, K.H.; Richter, C.; Cohan, R.H.; Caoili, E.M.; Paramagul, C.; Alva, A.; et al. Deep Learning Approach for Assessment of Bladder Cancer Treatment Response. Tomography 2019, 5, 201–208.

- Zheng, Q.; Yang, R.; Ni, X.; Yang, S.; Xiong, L.; Yan, D.; Xia, L.; Yuan, J.; Wang, J.; Jiao, P.; et al. Accurate Diagnosis and Survival Prediction of Bladder Cancer Using Deep Learning on Histological Slides. Cancers 2022, 14, 5807.

- Wang, Y.; Ye, X. MSEDTNet: Multi-Scale Encoder and Decoder with Transformer for Bladder Tumor Segmentation. Electronics 2022, 11, 3347.

- Lorencin, I.; Smolic, K.; Segota, S.B.; Andelic, N.; Stifanic, D.; Musulin, J.; Markic, D.; Spanjol, J.; Car, Z. Utilization of Convolutional Neural Networks for Urinary Bladder Cancer Diagnosis Recognition From CT Imagery. In Proceedings of the 2021 IEEE 21st International Conference on Bioinformatics and Bioengineering (BIBE), Kragujevac, Serbia, 25–27 October 2021.

- Lorencin, I.; Andelic, N.; Spanjol, J.; Car, Z. Using multi-layer perceptron with Laplacian edge detector for bladder cancer diagnosis. Artif. Intell. Med. 2020, 102, 101746.

- Qaiser, T.; Lee, C.Y.; Vandenberghe, M.; Yeh, J.; Gavrielides, M.A.; Hipp, J.; Scott, M.; Reischl, J. Usability of deep learning and H&E images predict disease outcome-emerging tool to optimize clinical trials. NPJ Precis. Oncol. 2022, 6, 37.

- Mundhada, A.; Sundaram, S.; Swaminathan, R.; D’Cruze, L.; Govindarajan, S.; Makaram, N. Differentiation of urothelial carcinoma in histopathology images using deep learning and visualization. J. Pathol. Inform. 2023, 14, 100155.

- Yin, P.N.; KC, K.; Wei, S.; Yu, Q.; Li, R.; Haake, A.R.; Miyamoto, H.; Cui, F. Histopathological distinction of non-invasive and invasive bladder cancers using machine learning approaches. BMC Med. Inform. Decis. Mak. 2020, 20, 162.

- Lee, S.; Jue, M.; Lee, K.; Paulson, B.; Oh, J.; Cho, M.; Kim, J.K. Early-stage diagnosis of bladder cancer using surface-enhanced Raman spectroscopy combined with machine learning algorithms in a rat model. Biosens. Bioelectron. 2024, 246, 115915.

- Hosney, M.E.; Houssein, E.H.; Saad, M.R.; Samee, N.A.; Jamjoom, M.M.; Emam, M.M. Efficient bladder cancer diagnosis using an improved RIME algorithm with Orthogonal Learning. Comput. Biol. Med. 2024, 182, 109175.

- Jiao, P.; Zheng, Q.; Yang, R.; Ni, X.; Wu, J.; Chen, Z.; Liu, X. Prediction of HER2 Status Based on Deep Learning in H&E-Stained Histopathology Images of Bladder Cancer. Biomedicines 2024, 12, 1583.

- Ameen, Y.A.; Badary, D.M.; Abonnoor, A.E.I.; Hussain, K.F.; Sewisy, A.A. Which data subset should be augmented for deep learning? A simulation study using urothelial cell carcinoma histopathology images. BMC Bioinform. 2023, 24, 75.

- Ameen, Y.; Badary, D.; Hussain, K.; Sewisy, A. Hematoxylin-and-Eosin-Stained Bladder Urothelial Cell Carcinoma Versus Inflammation Digital Histopathology Image Dataset. 2023. Available online: https://datadryad.org/dataset/doi:10.5061/dryad.0cfxpnw5q (accessed on 10 January 2025).

- Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Bany Muhammad, M.; Yeasin, M. Eigen-CAM: Visual Explanations for Deep Convolutional Neural Networks. SN Comput. Sci. 2021, 2, 47.

- Muhammad, M.B.; Yeasin, M. Eigen-CAM: Class Activation Map using Principal Components. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

- Rahman, A.N.; Andriana, D.; Machbub, C. Comparison between Grad-CAM and EigenCAM on YOLOv5 detection model. In Proceedings of the 2022 International Symposium on Electronics and Smart Devices (ISESD), Bandung, Indonesia, 8–9 November 2022; pp. 1–5.

- Gildenblat, J.; de Cid, J.; Hjermitslev, O.; Lu, M.; Draelos, R.; Jackyjinjing; Nevermore, S.; Butera, L.; Zhang, Z.; Madhawa, K.; et al. PyTorch Library for CAM Methods. 2021. Available online: https://github.com/jacobgil/pytorch-grad-cam (accessed on 20 January 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.