1. Introduction

Depression constitutes a primary driver of global disability, affecting approximately 280 million individuals and contributing to over 13% of all disability-adjusted life-years [1,2]. Despite the availability of evidence-based interventions, a significant treatment gap exists, particularly in low- and middle-income countries (LMICs). In these regions, more than 75% of affected individuals remain untreated due to a systemic shortage of mental health professionals and the pervasive social stigma associated with psychiatric conditions. In Vietnam, cultural barriers and resource constraints often impede large-scale screening efforts, leading to late-stage diagnosis and exacerbating both individual suffering and the broader economic burden [3].

Conventional diagnostic frameworks, including the Hamilton Rating Scale for Depression (HAM-D) and the 9-item Patient Health Questionnaire (PHQ-9), remain the clinical gold standard; however, they are inherently limited by subjective interpretation and recall bias [4]. Furthermore, the administration of these instruments in primary-care settings is often hindered by the requirement for specialized training and significant time investment. While objective alternatives such as biochemical markers (e.g., salivary cortisol, blood gene-expression assays) and neuroimaging offer higher precision, their invasive nature and high operational costs render them impractical for widespread deployment in resource-limited settings [5]. This disparity underscores the urgent need for non-invasive, cost-effective, and objective screening modalities that can be integrated into routine clinical workflows.

The pursuit of objective diagnostic tools has evolved from biochemical assays to sophisticated neurological assessments. Recent innovations, such as the NeuroFeat framework, have demonstrated that adaptive feature engineering, specifically using the Logarithmic-Spatial Bound Whale Optimization Algorithm (L-SBWOA), can refine feature spaces to achieve reported classification accuracies as high as 99.22% [6]. Although deep learning models often demonstrate superior performance in such contexts, traditional machine learning approaches remain highly relevant due to their lower computational requirements and greater interpretability within clinical environments [7]. Despite their high accuracy, these neuroimaging-based frameworks require specialized hardware, which limits their scalability. Consequently, acoustic voice analysis has emerged as a compelling alternative that reconciles high-precision computational modeling with the need for accessible, non-invasive screening tools.

The field of vocal biomarkers is advancing rapidly, with contemporary research prioritizing the development of robust, multilingual models suitable for both clinical and remote monitoring [8,9]. A vocal biomarker is defined as an objective feature, or combination thereof, derived from an audio signal that correlates with clinical outcomes, thereby facilitating risk prediction and symptom monitoring [8]. Speech production involves complex neuromotor coordination that reflects subtle affective shifts; core depressive symptoms, such as psychomotor retardation and anhedonia, manifest through altered acoustic properties, including reduced fundamental frequency (F0) variability, prolonged pauses, diminished intensity, and spectral shifts [10,11,12,13]. Crucially, these vocal characteristics are largely involuntary, mitigating the risk of deliberate response bias inherent in self-report measures.

Acoustic investigations typically categorize potential biomarkers into prosodic features, perturbation measures, and spectral qualities. Clinical observations consistently characterize the speech of depressed individuals as slow, monotonous, and breathy [14,15]. Quantitative studies have identified reduced mean F0, increased pause duration, and lower speech rates as significant indicators [8]. Furthermore, perturbation metrics such as Jitter (frequency variation), Shimmer (amplitude variation), and the Noise-to-Harmonics Ratio are frequently utilized, with sustained vowel phonation studies suggesting that amplitude variability is highly discriminant in identifying depressive states [16]. Spectral features, particularly Mel-Frequency Cepstral Coefficients (MFCCs), have also shown promise. For instance, the second dimension (MFCC 2) has been identified as an efficient classifier, reflecting physiological changes in the vocal tract and laryngeal motor control [11].
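To make the perturbation measures concrete, the following minimal Python sketch computes local jitter and local shimmer under their common definition: the mean absolute difference between consecutive cycles divided by the mean value. It assumes that glottal periods and per-cycle peak amplitudes have already been extracted by a pitch tracker. The function name and the toy values are illustrative and are not taken from the cited studies.

```python
def local_perturbation(values):
    """Mean absolute difference between consecutive cycles, divided by the mean.

    Applied to glottal periods this gives local jitter; applied to per-cycle
    peak amplitudes it gives local shimmer (both as fractions, not percent).
    """
    if len(values) < 2:
        raise ValueError("need at least two cycles")
    diffs = [abs(b - a) for a, b in zip(values, values[1:])]
    mean_diff = sum(diffs) / len(diffs)
    mean_value = sum(values) / len(values)
    return mean_diff / mean_value


# Hypothetical cycles from a sustained vowel (illustrative values only).
periods_ms = [5.0, 5.1, 4.9, 5.0, 5.2, 5.0]        # glottal period per cycle
amplitudes = [0.80, 0.76, 0.82, 0.78, 0.81, 0.79]  # peak amplitude per cycle

jitter = local_perturbation(periods_ms)
shimmer = local_perturbation(amplitudes)
print(f"local jitter:  {jitter:.2%}")
print(f"local shimmer: {shimmer:.2%}")
```

Both measures share one formula and differ only in their input, which is why the sketch uses a single helper. Production toolkits typically add voicing detection and outlier handling that are omitted here.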

Despite these technological strides, the inherent variability of human speech, compounded by linguistic and cultural nuances, poses a significant challenge to the cross-linguistic generalizability of automated systems [9,17]. The phonetic structure of a language is a central confounder; for example, tonal languages like Vietnamese utilize pitch lexically, which differs fundamentally from the prosodic structures of non-tonal languages like English or Japanese [12,18]. Research has indicated that the predictive reliability of MFCCs varies across Chinese and Japanese cohorts, likely due to language-specific articulation patterns [11,19]. Moreover, while some markers like articulation rate show cross-linguistic consistency, others, such as pausing behavior, may be significant only in specific linguistic groups [20].

Recent methodological efforts have sought to identify translinguistic vocal markers. Large-scale longitudinal initiatives, such as the RADAR-MDD project spanning English, Dutch, and Spanish, have consistently linked depression severity to decreased speech rate and intensity, suggesting psychomotor impairment as a universal feature [20]. Advanced frameworks, including the multi-lingual Minimum Redundancy Maximum Relevance (ml-MRMR) algorithm and lightweight multimodal fusion networks, have been developed to enhance model generalization across diverse languages such as Turkish, German, and Korean [21,22]. Furthermore, investigations into non-verbal semantic patterns suggest shared experiential states of depression across different cultures [23].

A critical yet frequently underemphasized challenge is dataset heterogeneity and the resultant biases stemming from varied recruitment contexts. Systematic reviews highlight that environmental factors (e.g., recording quality), demographic variances (e.g., age, medication), and methodological inconsistencies (e.g., varying speech tasks) significantly influence model outcomes [8,24]. Specifically, comparing data from university volunteers with that of clinically diagnosed patients can introduce confounding variables related to symptom severity and motivational states [18]. To address these issues, recent studies have employed rigorous statistical designs, such as two-stage meta-analyses and Linear Mixed Effects Models, to account for site-specific variability and within-participant clustering [14,20].

While robust evidence supports the existence of stable vocal phenomena across diverse populations [14], most research remains focused on monolingual, culturally homogeneous cohorts. The generalizability of these biomarkers across the distinct linguistic landscapes of Southeast and East Asia remains largely unexplored [17,25,26]. In Vietnam, although preliminary feasibility studies suggest that voice analysis can distinguish depressed individuals [3,27], a systematic identification of the most discriminative acoustic parameters within the Vietnamese language is currently lacking. Furthermore, direct cross-cultural comparisons, particularly between tonal languages like Vietnamese and non-tonal/pitch-accent languages like Japanese, are absent from the literature, despite evidence that language-specific norms meaningfully influence biomarker performance [17].

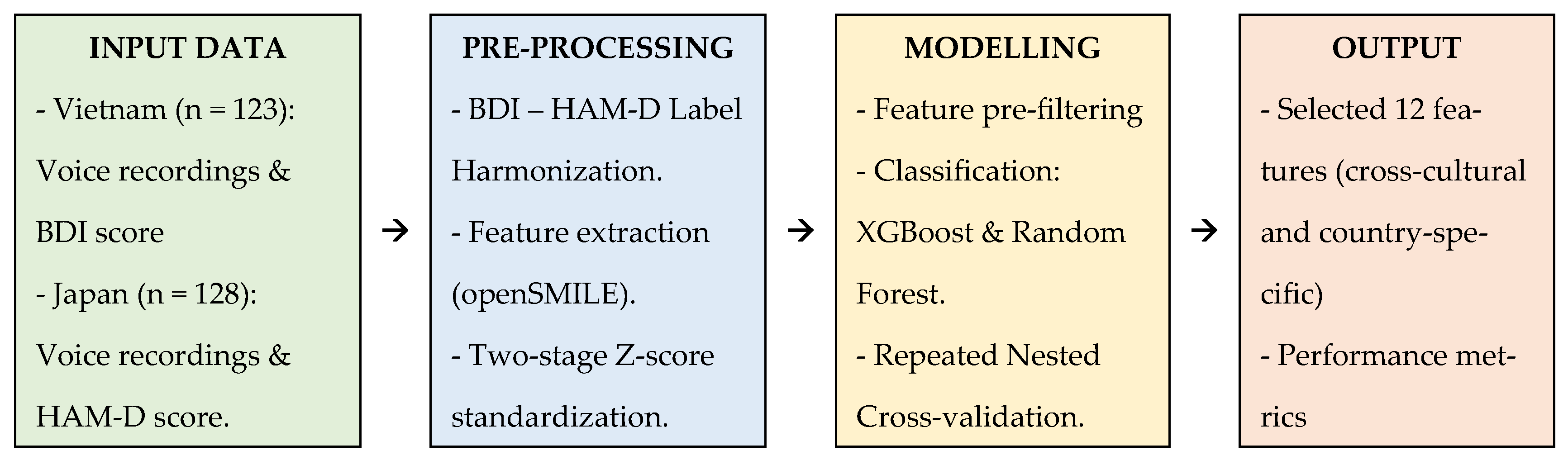

To address these empirical gaps, this study aims to: (1) systematically identify a robust set of Vietnamese-specific acoustic features for depression assessment; (2) establish a parallel set of Japanese-specific features; and (3) derive and validate a core set of cross-culturally consistent acoustic features using datasets from both nations. We hypothesize that a subset of acoustic features, primarily those reflecting physiological vocal tract dynamics and neuromuscular control rather than prosodic linguistic elements, will demonstrate consistent discriminative power across both Vietnamese and Japanese contexts. This research seeks to contribute to the development of culturally attuned yet universally applicable vocal biomarkers for mental health.

The remainder of this paper is organized as follows: Section 2 (Materials and Methods) details the datasets, feature extraction pipelines, and validation frameworks; Section 3 (Results) presents the selected feature sets and model performance metrics; Section 4 (Discussion) interprets these findings within the context of cross-linguistic research; and Section 5 (Conclusion) summarizes the clinical and technological implications.

4. Discussion

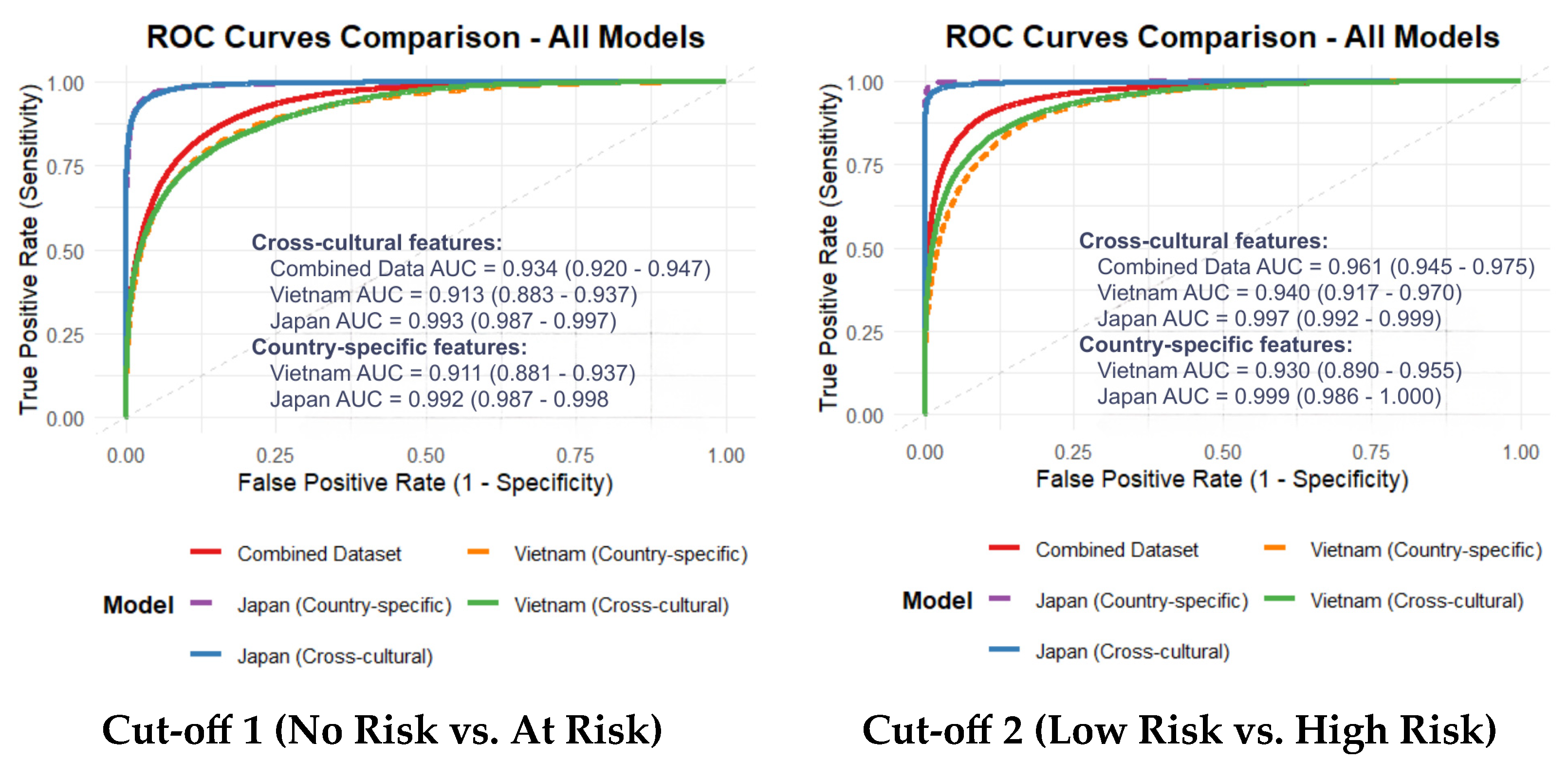

This study addresses a critical and persistent barrier in computational psychiatry: the lack of objective, scalable, and—most importantly—cross-culturally generalizable biomarkers for depression. Our primary contribution is the identification and validation of a compact 12-feature acoustic set, predominantly comprising spectral and cepstral descriptors. This feature set demonstrated robust and consistent classification performance across Vietnamese and Japanese cohorts. While language-specific models naturally attained the highest accuracy, the derived cross-cultural feature set maintained high discriminative power (AUC > 0.90), suggesting that specific acoustic manifestations of depression transcend linguistic and phonetic boundaries.

Our analysis further revealed subtle but meaningful nuances between the two languages. Formant-related features, which are sensitive to articulatory modulation, appeared more influential in the Vietnamese cohort. This may be attributed to the complex articulatory adjustments required for Vietnamese lexical tones. Conversely, energy-related features and higher-order MFCC dynamics were more prominent in the Japanese dataset, potentially reflecting reduced vocal effort and diminished articulatory variability. These findings suggest that while the underlying pathophysiology of depression may be universal, its acoustic expression is subtly modulated by language-specific phonetic demands.

4.1. Language-Specific Features and the Influence of Dataset Heterogeneity

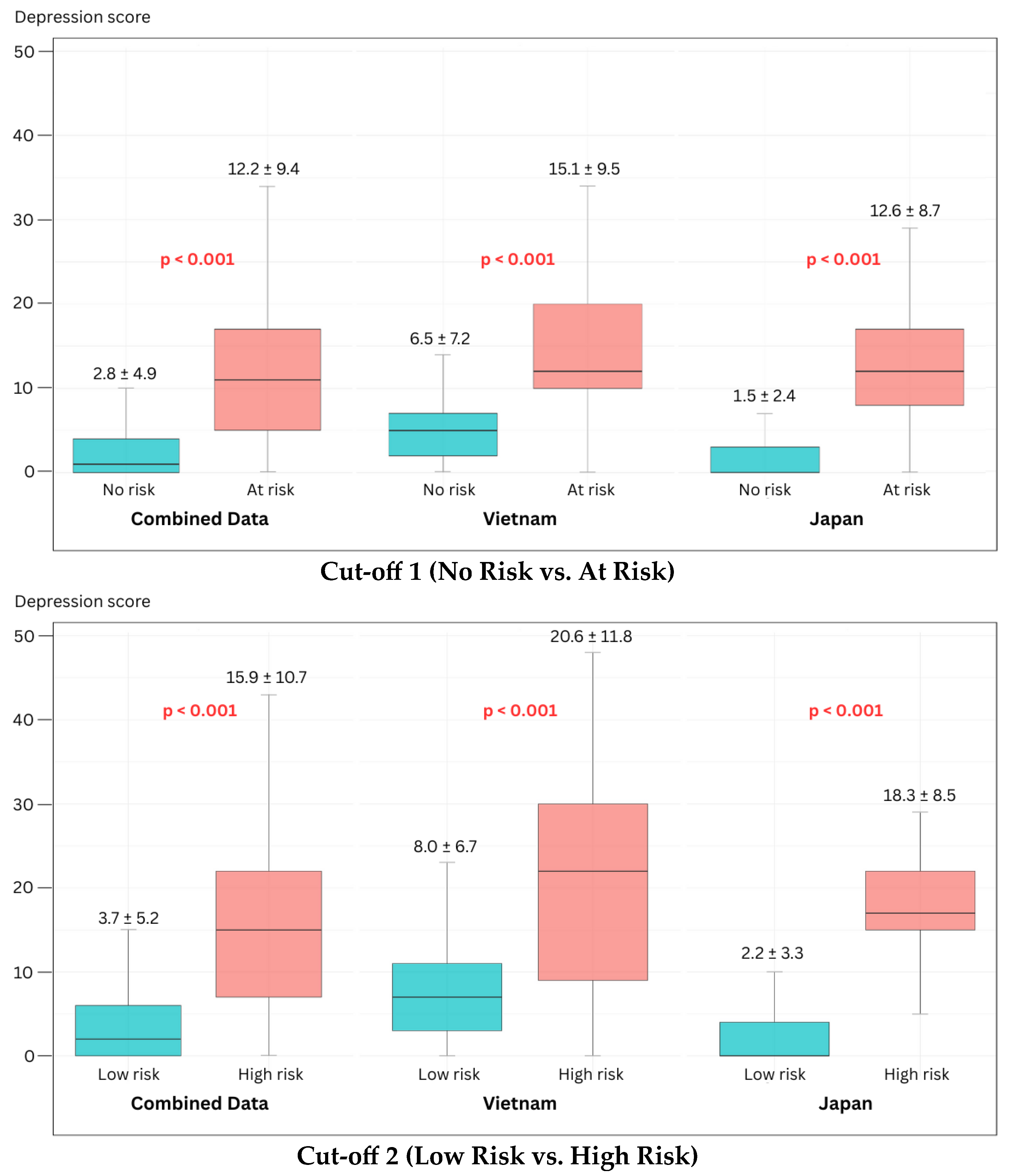

We first confirmed the feasibility of developing high-performance, language-specific models. The Vietnamese-specific models achieved strong performance (AUC 0.911–0.930), while the Japanese models exhibited near-perfect classification (AUC 0.992–0.999). This performance disparity likely reflects a “label purity bias”: the Japanese dataset consisted of clinically diagnosed MDD patients versus healthy controls, whereas the Vietnamese cohort utilized a community-clinical mixed sample with self-reported BDI scores. The inherent noise and potential cultural response bias in self-reports often result in lower model performance than clinician-assigned gold-standard labels, a phenomenon well documented in recent cross-lingual literature [9,29].

Across both datasets, the most discriminative features were consistently drawn from the MFCC, AudSpec, and PCM families. This corroborates extensive evidence indicating that spectral and cepstral descriptors are among the most resilient predictors of depression [10,11,30]. Specifically, the high ranking of MFCC 2 (`mfcc_sma[2]`) aligns with studies verifying its efficacy in capturing finer spectral details associated with vocal tract alterations and laryngeal tension [8]. Our results reinforce the findings of previous studies [20,21], extending the list of languages where physiologically rooted markers, such as spectral harmonicity and reduced articulation dynamics, serve as reliable indicators of psychomotor impairment.

4.2. Implications of the Cross-Cultural Feature Set and Robustness Analyses

The study’s core finding is the isolation of a compact set of 12 cross-cultural features that were not dominated by language-dependent prosodic elements (e.g., F0 statistics). The predominance of spectral (AudSpec) and cepstral (MFCC) features suggests that this set captures the fundamental physiological underpinnings of depression, such as neuromuscular slowing and reduced vocal tract coordination, rather than surface-level linguistic variation. SHAP analysis confirmed that features representing spectral shape and perceived sharpness (psySharpness) were the primary drivers of model decisions, likely capturing the “flat” or “muffled” vocal quality characteristic of depressive speech across cultures.

Notably, the cross-cultural model’s performance was comparable to the language-specific models within this two-country context. For the Vietnamese dataset, the cross-cultural AUC (0.913) was similar to the country-specific model (0.911), showing stable performance even at Cut-off 2. These results suggest that our feature selection approach potentially mitigates some linguistic variance by identifying features with consistent patterns across both Vietnamese and Japanese datasets. The robustness of this feature set is further supported by its consistent performance across different machine learning algorithms. While XGBoost was chosen as the primary model for its optimal balance of performance and efficiency, a comparative analysis with a Random Forest classifier revealed that the feature set maintained robust discriminative power, indicating that the predictive signal is inherent to the acoustics and not an artifact of a specific algorithm.

Supplementary analyses reinforce the validity of these findings. First, our domain-balanced feature selection approach yielded performance comparable to the data-driven RFE benchmark (AUC 0.934 vs. 0.933), while potentially offering better alignment with established biological constructs. Second, label harmonization analysis indicated that the identified acoustic signatures remained relatively stable across different diagnostic instruments (BDI vs. HAM-D), suggesting these markers may reflect clinical severity rather than scale-specific artifacts. Third, demographic residualization showed that age and sex differences did not substantially alter model performance, confirming that the selected features primarily represent variance associated with depressive symptoms.
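As an illustration of what demographic residualization involves, the sketch below regresses a single acoustic feature on an intercept, age, and binary-coded sex by ordinary least squares and keeps the residuals. This is a generic formulation under assumed covariate coding, not necessarily the exact procedure used in this study. The helper names and the toy data are hypothetical.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def residualize(feature, covariates):
    """Return the feature minus its OLS fit on an intercept plus covariates."""
    design = [[1.0] + list(row) for row in covariates]
    k = len(design[0])
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(design, feature)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    return [y - sum(b * x for b, x in zip(beta, r)) for r, y in zip(design, feature)]


# Hypothetical participants: (age in years, sex coded 0/1) and one feature value.
covariates = [(22, 0), (35, 1), (47, 0), (58, 1), (63, 1), (29, 0)]
feature = [4.1, 7.9, 6.4, 10.2, 10.9, 4.6]

residuals = residualize(feature, covariates)
print([round(r, 3) for r in residuals])
```

By construction the residuals are uncorrelated with the covariates in the sample, so a classifier trained on them cannot exploit linear age or sex effects. Nonlinear demographic effects would require a richer design matrix.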

The high AUCs (0.913–0.993) observed in our models align with a trend in the recent literature reporting exceptional classification accuracies for depression using vocal biomarkers [22,28]. However, as critically noted in systematic reviews, such high performance metrics, often derived from optimized within-dataset validation, can be misleading and frequently fail to generalize in external or cross-linguistic validation [8,9]. This underscores a fundamental challenge in computational psychiatry: distinguishing robust, generalizable biomarkers from statistical patterns that overfit to specific datasets.

The performance disparity between our Japanese (near-perfect AUC) and Vietnamese (high but lower AUC) cohorts illustrates this challenge. While superior “label purity” from clinician-rated diagnoses in the Japanese sample is a primary explanation, alternative interpretations related to cultural and methodological biases must be considered. The self-report nature of the BDI in the Vietnamese cohort introduces potential cultural response biases, where individuals may under-report or over-report symptoms due to stigma or differing cultural expressions of distress, thereby increasing “label noise” and attenuating model performance [9,23]. This heterogeneity in ground truth definition itself represents a key challenge for the field, complicating direct comparisons.
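The attenuating effect of label noise on measured performance can be illustrated with a small simulation. The sketch below scores the same synthetic classifier outputs against clean labels and against labels with a fraction randomly flipped, computing the AUC through the rank-sum (Mann-Whitney) identity. The score distributions, the 20% flip rate, and the sample size are assumptions chosen for illustration and do not model either dataset in this study.

```python
import random


def auc(scores, labels):
    """Area under the ROC curve via the rank-sum (Mann-Whitney U) identity."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1  # tied scores share their mean 1-based rank
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum_pos = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


rng = random.Random(0)
n_per_class = 1000
true_labels = [0] * n_per_class + [1] * n_per_class
# Synthetic model scores: unit-variance Gaussians separated by two units.
scores = [rng.gauss(2.0 * y, 1.0) for y in true_labels]

flip_rate = 0.20  # assumed fraction of mislabelled participants
noisy_labels = [1 - y if rng.random() < flip_rate else y for y in true_labels]

auc_clean = auc(scores, true_labels)
auc_noisy = auc(scores, noisy_labels)
print(f"AUC against clean labels: {auc_clean:.3f}")
print(f"AUC against noisy labels: {auc_noisy:.3f}")
```

The scores are identical in both evaluations, so any drop in AUC here comes purely from the reference labels. Noise in the training labels, which this sketch does not simulate, would be expected to reduce performance further.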

Furthermore, the risk of overfitting extends beyond labels to linguistic and recording artifacts. The field has documented instances where features seemingly robust in one linguistic context fail in another, as seen in the language-specificity of pausing behaviors in the RADAR-MDD study [20] or the significant performance drop of self-supervised learning features in cross-lingual tasks [29]. A truly balanced view acknowledges that high AUCs, while encouraging, are not definitive proof of a biomarker’s validity. They must be tempered by the recognition that our validation, while cross-cultural, remains limited to two languages and specific dataset characteristics. Therefore, while this study identifies a promising, physiologically informed feature set, its performance must be interpreted as a strong initial signal within a defined context, rather than as a fully validated universal solution. This finding aligns with the objectives of advanced methodologies like domain adaptation and multi-lingual feature selection [21,31], but definitive establishment of these features as language-invariant requires further validation across a broader range of languages and controlled settings.

4.3. Strengths and Limitations

A major strength of this study is the validation of a non-inferior, cross-cultural feature set that facilitates scalable, low-cost screening in resource-constrained settings like Vietnam. A vocal biomarker that does not require extensive language-specific recalibration could significantly accelerate the deployment of mobile health interventions in diverse communities.

However, several limitations warrant consideration. First, the “ground-truth mismatch” between self-report and clinician-rated instruments remains a confounding factor in direct severity comparisons. Second, despite standardization, differences in recording protocols and in the number of phrases between the datasets may introduce acoustic variability. Third, the use of scripted speech may not capture the full range of spontaneous affective expression. Fourth, the small sample size of the “High Risk” group (n = 33) limits the reliability of high-severity classification, so sensitivity metrics for severe cases should be interpreted with caution until validated in larger, prospective cohorts. This limitation is evident in our RFE benchmark analysis, where both the physiologically informed and the data-driven approaches showed suboptimal sensitivity (0.628–0.758) for Cut-off 2. These results suggest that while our core features are cross-culturally stable for general screening, further refinement with larger clinical samples is essential to improve the detection of severe depression. A further methodological consideration for future research is the formal design of falsifiability tests (for example, evaluating model performance on cohorts with comorbid non-psychiatric voice disorders or under acoustically adversarial conditions) to rigorously challenge the specificity and robustness of the proposed acoustic biomarkers beyond the current validation framework.
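The caution about the small High Risk group can be quantified with a confidence interval. The sketch below computes a Wilson score interval for a sensitivity estimated from 33 positive cases. It assumes that all 33 cases form the denominator and uses a hypothetical count of 25 detected cases (about 0.758), since the underlying counts are not stated in this section.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 for ~95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("require n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


n_high_risk = 33     # group size reported in the text
true_positives = 25  # hypothetical count giving a sensitivity of about 0.758

low, high = wilson_interval(true_positives, n_high_risk)
print(f"sensitivity = {true_positives / n_high_risk:.3f}, "
      f"95% CI = [{low:.3f}, {high:.3f}]")
```

Under these assumed counts the interval spans roughly 0.59 to 0.87, which shows how little a point estimate from 33 cases constrains the true sensitivity.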

4.4. Future Directions

Building on these findings, several critical research avenues are proposed to validate and translate the identified biomarkers. First, prospective multilingual trials are essential, incorporating a third, typologically distinct language family (e.g., Indo-European) with fully standardized recording and clinical assessment protocols to rigorously test the universality of the 12-feature set. Second, longitudinal studies are needed to determine if these features act as state-dependent markers sensitive to treatment response, thereby enhancing their utility for monitoring clinical trajectories. Third, technical robustness must be advanced through the implementation of domain adaptation techniques (e.g., adversarial training, self-supervised learning) to minimize performance shifts caused by linguistic and environmental variability. Crucially, future work must also incorporate falsifiability tests—such as evaluating model performance on cohorts with non-psychiatric voice disorders (e.g., laryngitis, dysarthria) or under acoustically adversarial conditions—to challenge the specificity of the features to depression and safeguard against overfitting. Finally, research should progress toward real-world integration, conducting feasibility and acceptability studies for deploying voice-based screening in primary care or telehealth settings. This integrated pathway, from rigorous validation to practical implementation, aims to advance the development of clinically reliable, generalizable, and equitable digital mental health tools.