Abstract

The electroencephalogram (EEG), widely used for measuring the brain’s electrophysiological activity, has been extensively applied in the automatic detection of epileptic seizures. However, several challenges remain unaddressed in prior studies on automated seizure detection: (1) Methods based on CNN and LSTM assume that EEG signals follow a Euclidean structure; (2) Algorithms leveraging graph convolutional networks rely on adjacency matrices constructed with fixed edge weights or predefined connection rules. To address these limitations, we propose a novel algorithm: Dynamic Graph Convolutional Network with Dilated Convolution (DGDCN). By leveraging a spatiotemporal attention mechanism, the proposed model dynamically constructs a task-specific adjacency matrix, which guides the graph convolutional network (GCN) in capturing localized spatial and temporal dependencies among adjacent nodes. Furthermore, a dilated convolutional module is incorporated to expand the receptive field, thereby enabling the model to capture long-range temporal dependencies more effectively. The proposed seizure detection system is evaluated on the TUSZ dataset, achieving AUC values of 88.7% and 90.4% on 12-s and 60-s segments, respectively, demonstrating competitive performance compared to current state-of-the-art methods.

1. Introduction

Epilepsy is a neurological condition that impacts over 50 million people worldwide [1]. The analysis of brain waves, captured through electroencephalography (EEG), plays a vital role in diagnosing epileptic seizures [2]. However, manually labeling seizure events is a time-consuming and labor-intensive task for medical professionals. This has led to the pressing need for the development of automated seizure detection methods utilizing deep learning techniques. In parallel with advances in deep learning, initiatives like the Seizure Community Open-Source Research Evaluation (SzCORE) framework have been proposed to establish standardized benchmarks and reproducible evaluation pipelines for EEG-based seizure detection algorithms [3]. Such frameworks promote fair comparisons across different methods and enhance their clinical applicability by ensuring that algorithms are validated on common standards. By using SzCORE or similar evaluation platforms, researchers can more objectively assess performance, which is crucial for translating these algorithms into reliable clinical tools.

In recent years, traditional machine learning algorithms such as convolutional neural network (CNN) [4], recurrent neural network (RNN) [5], and long short-term memory (LSTM) [6] have achieved remarkable success in automated EEG event detection. However, with rapid advancements in deep learning technologies, integrating cutting-edge methods into existing frameworks holds the potential to significantly enhance the accuracy of EEG event detection. Improvements can be pursued in several critical areas. For CNN-based approaches, there are notable limitations. First, CNN models primarily focus on capturing short-term EEG features, often failing to account for the long-term dependencies that are intrinsic to EEG signals. Given that EEG data exhibits strong long-term correlations, it is crucial to explore more advanced models capable of effectively extracting these dependencies. Second, the spatial configuration of EEG electrodes provides valuable insights, but CNN are inherently limited in capturing this information due to their reliance on a Euclidean framework. This approach does not align with the non-Euclidean, irregular arrangement of EEG electrodes on the scalp. Similarly, RNN and LSTM also face significant challenges. First, they struggle to effectively capture relationships across different channels, which is critical in EEG analysis. Second, these models are computationally intensive to train and exhibit low efficiency when processing long sequences, further limiting their applicability to EEG event detection. Addressing these limitations is essential for advancing EEG event detection models. Graph Neural Networks (GNN), in particular, offer a promising solution by effectively overcoming the constraints of CNN, RNN, and LSTM.

In the early stages of research, GNN primarily focused on modeling spatial information. Kipf et al. proposed a method that directly operates on graph structures and performs convolutions on graph data, known as graph convolutional networks (GCN) [7]. A notable feature of this network is its first-order property, enabling a single-layer GCN to process first-order neighbor information in a graph, while multi-layer GCN models can further integrate K-order neighbor information. However, classical GCNs are more suitable for static graph data, where nodes and edges remain fixed and do not change over time. In 2018, the DCRNN model was proposed, leveraging an RNN architecture and bidirectional random walks to capture both temporal and spatial correlations [8]. Subsequently, Tang et al. utilized the encoder component of the DCRNN model to achieve state-of-the-art results in cross-subject generalized epileptic seizure detection [9]. However, this model also exhibits inherent limitations when applied to seizure detection. Firstly, the RNN architecture suffers from unstable gradients and high memory requirements. More importantly, the static adjacency matrix in the model relies on fixed edge weights or connection rules. When noise or outliers are present in the data, this static dependency can amplify the impact of such noise, preventing the model from dynamically adapting to changes in the input data. Graph Attention Networks (GAT) were introduced to address the rigidity of fixed adjacency matrices by learning attention weights for each node’s neighbors [10]. However, GAT integrates attention implicitly during message passing and does not explicitly decouple spatial and temporal correlations—an important property for biomedical signals such as EEG. Moreover, GAT requires dense attention computation at each layer, which incurs significant computational overhead and limits scalability in large-scale or high-density EEG data. To address the aforementioned challenges, we propose a Dynamic Graph Convolutional Network with Dilated Convolution (DGDCN). The main contributions of our work can be summarized as follows:

- The proposed model utilizes a spatiotemporal attention mechanism to adaptively generate a task-specific adjacency matrix, which in turn guides GCN to better model localized spatial and temporal relationships among neighboring nodes. Additionally, a dilated convolutional module is integrated to broaden the receptive field, enabling the network to more effectively capture long-term temporal dependencies.

- The effectiveness of the proposed DGDCN has been thoroughly evaluated using the TUSZ database, where it consistently demonstrates competitive performance compared to other state-of-the-art approaches. This exceptional performance underscores the model’s capability to handle complex EEG data, making it a valuable tool for advancing both research and practical applications in the field.

2. Methods

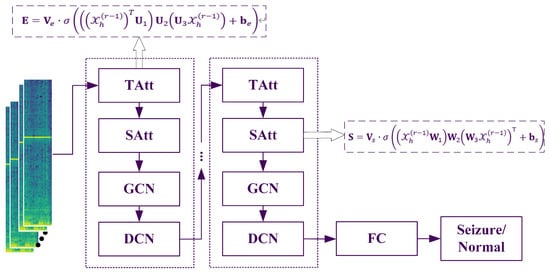

The proposed model incorporates a dynamic attention mechanism to effectively capture the evolving spatiotemporal correlations in epilepsy recognition networks. In parallel, a spatiotemporal convolution module is specifically designed to extract both spatial and temporal dependencies from neighboring nodes. Furthermore, the model employs a dilated convolutional network to stack multiple spatiotemporal blocks, enabling the extraction of more comprehensive and large-scale dynamic spatiotemporal correlations. The entire flowchart is illustrated in Figure 1.

Figure 1.

The flowchart of the proposed method (TAtt denotes the Time Attention module, SAtt represents the Space Attention module, and FC stands for the fully connected layer).

2.1. Temporal Attention

In the temporal dimension, there are correlations between the EEG states of preceding and succeeding time segments. Apart from the epileptic seizure state, the correlations in EEG induced by noise also exhibit slight differences. Similarly, an attention mechanism can be used to adaptively assign different levels of importance to the data [11].

Here, are learnable parameters. The learnable matrices , , and serve to project and combine spatial and feature information across time steps. Specifically, aggregates node-level activations, integrates spatial and channel information, and performs feature weighting across channels. These projections enable the attention mechanism to compute temporal correlations that reflect variations in EEG dynamics across different time steps. The temporal correlation matrix E is determined by the time-varying input data. Each element represents the strength of the correlation between times i and j. Finally, E is normalized using a softmax function. The normalized temporal attention matrix is directly applied to the input, resulting in , where can combine the correlation information to dynamically adjust the input.

2.2. Spatial Attention

In the spatial dimension, EEG signals from different channels influence one another, and this interaction is highly dynamic. An attention mechanism is employed here to adaptively capture the dynamic correlations between nodes in the spatial dimension. The spatial attention is computed as follows:

Let represent the input of the r-th spatial block at the r-th layer, where N is the number of channels in the input data at layer r. When , , and is the length of the temporal dimension at the r-th layer. are learnable parameters, and denotes the activation function, here chosen as the sigmoid function. The attention matrix S is computed based on the dynamics of the input data at the current layer. Each element in the matrix S indicates the correlation strength between EEG channels n and m. The softmax function is then applied to normalize S, ensuring that the attention weights across channels sum to 1. During subsequent convolutional operations, the normalized spatial attention matrix dynamically adjusts the influence between channels.

While conventional graph-based methods often enforce symmetry or sparsity constraints on the adjacency matrix, we do not explicitly apply such constraints to the spatial attention matrix . The learned is generally asymmetric, as it is computed in a data-driven manner without enforcing . This design allows the model to capture directed and potentially asymmetric dependencies between EEG channels, which may reflect meaningful patterns of information flow in neural systems. Furthermore, we do not apply thresholding or binarization to sparsify . In the context of EEG, each channel is part of a functionally integrated system, and even weak correlations between electrode pairs may carry subtle but informative neural signals. By retaining all pairwise weights, our model remains sensitive to both strong and weak dependencies, which may be crucial for decoding complex brain dynamics.

2.3. Spatiotemporal Convolution Based on GCN

The spatiotemporal attention module efficiently enables the network to adaptively focus on more valuable channel and temporal information. The input adjusted by the attention mechanism is fed into the spatiotemporal convolution module. The proposed spatiotemporal convolution module consists of graph convolutions in the spatial dimension, capturing spatial dependencies from neighboring nodes, and convolutions along the temporal dimension, leveraging temporal dependencies from adjacent time steps.

The Laplacian matrix of a graph is defined as , and it can further be normalized as , where A is the adjacency matrix, is the identity matrix, and is the degree matrix, a diagonal matrix formed by the node degrees . The eigenvalue decomposition of the Laplacian matrix is , where is a diagonal matrix of eigenvalues, and U is the Fourier basis of the graph. Taking the EEG signal at a specific time as an example, the signal over the entire graph is , and its graph Fourier transform is defined as . Based on the properties of the Laplacian matrix, U is an orthogonal matrix, so the corresponding inverse Fourier transform is . Graph convolution is implemented using a linear operator that is diagonalized in the Fourier domain, effectively replacing classical convolution operators. Based on this, the signal x on graph G is filtered using the kernel as follows:

The parameter is the direction of the polynomial coefficients. , where is the largest eigenvalue of the Laplacian matrix. The recursive definition of the Chebyshev polynomial is , where and . By using an approximation of the Chebyshev polynomial, this formulation allows extracting information from neighbors up to hops away for each node through the convolution kernel . The graph convolution module applies a rectified linear unit (ReLU) as the final activation function, expressed as: .

To dynamically capture correlations between source and destination nodes, for each term of the Chebyshev polynomial, the spatial attention matrix is incorporated. The resulting matrix is , where ⊙ denotes the Hadamard product (element-wise multiplication). Hence, the graph convolution formula becomes:

This definition is extended to multi-channel graph signals. For example, the input is , where each node’s feature vector contains channels at each time step. For the current graph G, convolution is applied using , where represents the learnable parameters of the kernel. Consequently, each node updates its information based on its neighbors within 0 to hops.

2.4. Dilated Convolutional Network

A dilated convolution is a convolutional operation that introduces gaps (or dilations) between the kernel elements, allowing the network to aggregate information from a broader context without increasing the number of parameters [12,13]. Specifically, with a dilation rate of d, the convolutional kernel skips elements between each sampled position. This mechanism effectively expands the receptive field, enabling the model to capture long-range dependencies. In the context of graph-based temporal modeling, dilated convolutions are particularly useful for incorporating information from distant time steps while maintaining computational efficiency.

The graph convolution operation captures the neighboring information of each node in the spatial dimension of the graph, followed by an aggregation step in the temporal dimension. By combining the information from neighboring time segments, the node signals are updated. The operation in the most recent component is expressed as follows:

where * represents a spatial convolution operation, contains the parameters of the convolution kernel, and its expansion rate is set to 2. The activation function of the convolution layer is ReLU.

We note that the input and output dimensions remain consistent throughout the main components of our model. The temporal attention and spatial attention + GCN modules are designed to preserve the original tensor shape, as they perform feature reweighting and aggregation without altering the spatial or temporal resolution. Furthermore, the DCN module is applied with appropriate padding to ensure that the temporal dimension remains unchanged. These design choices ensure that each module can be stacked multiple times without introducing dimensional inconsistencies, thereby simplifying model design and improving scalability.

In summary, the spatiotemporal convolution module effectively captures the spatiotemporal features of the data. With the spatiotemporal attention module, GCN and DCN form spatiotemporal blocks. Stacking multiple spatiotemporal blocks allows the model to capture dynamic spatiotemporal correlations at a broader scale. Finally, a fully connected layer is added, with ReLU serving as the activation function for the final fully connected layer.

3. Experimental Setup

3.1. Experimental Data

This study utilizes version 2.0.1 of the TUSZ (Temple University Seizure Corpus) database for experimental validation. Provided by the Temple University Neural Engineering Data Consortium, TUSZ is currently the largest publicly available epilepsy database [14]. The EEG recordings in this database were primarily collected between 2002 and 2013 (and subsequent years) at Temple University. Notably, TUSZ is one of the few epilepsy databases that continues to receive annual updates. The database contains data from a total of 675 patients, amounting to 5,312,996 s of recordings and 81 GB of data. It includes 7377 EDF files, data from 287 seizure patients, and 4,029 seizure events. All recordings were collected using 22-channel EEG equipment, with key channels including FP1-F7, F7-T3, T3-T5, T5-O1, FP2-F8, F8-T4, T4-T6, T6-O2, A1-T3, T3-C3, C3-CZ, CZ-C4, C4-T4, T4-A2, FP1-F3, F3-C3, C3-P3, P3-O1, FP2-F4, F4-C4, C4-P4, and P4-O2. To facilitate usage, the database is divided into three subsets: training (train), development (dev), and evaluation (eval). The total recording durations for these subsets are 3,277,229 s, 467,795 s, and 1,567,972 s, respectively. The proportion of seizure durations in these subsets is 5.34%, 5.09%, and 5.82%, respectively, and each subset contains data from distinct, non-overlapping patients. Typically, researchers use the training set for model training, the development set for hyperparameter tuning, and the evaluation set for assessing final model performance. One challenge of the TUSZ database is its high data diversity and significant noise, often leading to lower validation performance compared to other databases. However, since its subsets are pre-defined and independent of validation methods (e.g., leave-one-out or k-fold cross-validation), many researchers use TUSZ as a benchmark dataset for evaluating the performance of deep learning models. To support reproducibility and future research, we publicly release the implementation code at https://github.com/Open-EMG/DGDCN.git (accessed on 31 July 2025).

3.2. Data Preprocessing

To ensure consistency, EEG signals from the TUSZ, originally sampled at varying frequencies, are resampled to a uniform frequency of 200 Hz [14]. Given that seizures correlate with brain electrical activity in specific frequency bands, we preprocess the raw EEG signals by transforming them into the frequency domain [15]. Following prior studies, we compute the log-amplitudes of the fast Fourier transform (FFT) of the raw EEG data [9]. EEG recordings were divided into non-overlapping segments of 12 and 60 s. A segment was labeled as ictal if it contained at least one second of seizure activity, following a labeling strategy similar to [9]. Using both short (12 s) and long (60 s) segments allows us to evaluate the model under different temporal granularities, and captures both fine-grained ictal events and broader contextual patterns around seizure episodes. Specifically: (1) A sliding window of t seconds is applied over the EEG clips without overlap, where t matches the time step; (2) The FFT is computed for each t-s window using the “fft” function in the Scipy Python library, retaining the log-amplitudes of the non-negative frequency components as described in prior studies; (3) Finally, the EEG clips are z-normalized with respect to the mean and standard deviation of the training dataset. After preprocessing, each EEG clip is represented as , where (or for longer clips) represents the clip length, denotes the number of EEG channels/electrodes, and corresponds to the feature dimension derived from the Fourier transform. Each node in the graph corresponds to an EEG electrode, and the features associated with each node are frequency-domain representations of the EEG signals obtained through a Fourier transform. Specifically, for each time step, a 100-dimensional spectral feature vector is extracted for each of the 22 channels, capturing key frequency characteristics such as the power distribution across different bands. These representations are essential for modeling seizure-related dynamics. As a result, each node is associated with a sequence of spectral features over time, forming the structured input tensor used for subsequent spatiotemporal modeling.

3.3. Model Training

All models, including the baseline models, were implemented using the PyTorch library and trained on an NVIDIA GeForce RTX 4080 GPU with the Adam optimizer. The initial parameters were randomly initialized. The hyperparameter settings for DGDCN are as follows: the initial learning rate was set to 1e-4, the batch size was 20, and the number of terms for the Chebyshev polynomial was 3. All graph convolution layers used 64 convolutional kernels, and all temporal convolution layers also used 64 convolutional kernels. All models were trained using the cosine annealing learning rate scheduling strategy in PyTorch [16]. This strategy gradually updates the learning rate following a cosine waveform decay cycle. Additionally, in all experiments, early stopping was applied if the validation loss did not decrease for five consecutive iterations. To mitigate the impact of class imbalance between resting and ictal EEG segments, we applied two EEG-specific data augmentation techniques during training: (1) amplitude scaling within the range [0.8, 1.2], and (2) random reflection across the scalp midline.

3.4. Evaluating Indicator

For evaluation, in addition to accuracy, we report AUC, sensitivity, and specificity, which are more robust to imbalanced data distributions. AUC reflects the model’s ability to discriminate between classes across all decision thresholds, making it particularly suitable for seizure detection tasks with skewed class ratios. Accuracy measures the proportion of correctly classified samples to the total number of samples, reflecting the overall correctness of the model’s predictions.

where True Positive (TP) denotes a seizure-labeled EEG clip that is correctly predicted as a seizure; True Negative (TN) refers to a non-seizure EEG clip that is correctly predicted as non-seizure; False Positive (FP) represents a non-seizure EEG clip that is incorrectly classified as seizure; and False Negative (FN) indicates a seizure-labeled EEG clip that is incorrectly predicted as non-seizure. These definitions provide the basis for computing additional performance metrics such as sensitivity, specificity, and AUC.

Sensitivity indicates the model’s ability to identify positive (Seizure) cases, i.e., the proportion of actual positive samples correctly predicted as positive. It is particularly crucial in tasks where minimizing false negatives is a priority.

Specificity evaluates the model’s ability to identify negative (normal) cases, i.e., the proportion of actual negative samples correctly predicted as negative. It is particularly useful in reducing false positives and complementing sensitivity.

AUC represents the area under the Receiver Operating Characteristic (ROC) curve and serves as a comprehensive metric to evaluate the model’s ability to distinguish between positive and negative samples. An AUC value closer to 1 indicates a strong discriminative ability, while an AUC value of 0.5 suggests the model performs no better than random guessing. Unlike accuracy, AUC is robust to class imbalance.

Since the task of epilepsy detection involves imbalanced data and clinicians are primarily concerned with ensuring that all actual seizures are accurately identified, SEN and AUC are the most critical evaluation metrics for this task.

3.5. Benchmark Methods

To evaluate the effectiveness of the proposed model compared to other models, four baselines, including LSTM, CNN, EEGNET [17], and DCRNN [9], were selected. All baselines used the same data and preprocessing methods as DGDCN and were evaluated using the same metrics.

4. Results

This study conducted a comprehensive comparison between the proposed model and four benchmark methods: LSTM, CNN, EEGNET, and DCRNN. Table 1 presents the final performance evaluation results, covering accuracy, sensitivity, specificity, and AUC. From the table, it is evident that the DGDCN model consistently achieves competitive performance across all metrics compared to the benchmark methods. Notably, when processing 12-s data segments, DGDCN demonstrates superior performance across all evaluation criteria. Even when handling 60-s data segments, the model maintains its advantage in the two most critical metrics—sensitivity and AUC.

Table 1.

Comparison of average performance for different methods with segment lengths of 12 and 60 s.

For 12-s input segments, the proposed DGDCN model achieved an accuracy of 79.7%, sensitivity of 84.8%, specificity of 78.6%, and AUC of 88.7%. For 60-s input segments, the model achieved an accuracy of 73.5%, sensitivity of 94.3%, specificity of 68.7%, and AUC of 90.4%.

To evaluate the importance of each module, ablation experiments were conducted by removing individual modules from the model and testing its performance. The results of these experiments are summarized in Table 2.

Table 2.

Ablation Experiments.

1. Removal of the Temporal Attention Module: This corresponds to ignoring the correlations between different time segments. The results show that incorporating this module enhances both sensitivity and AUC. For 12-s data, sensitivity and AUC improved by 1.7% and 0.3%, respectively. Similarly, for 60-s data, these metrics increased by 1% and 0.2%, respectively.

2. Removal of the Spatial Attention Module: This involves disregarding the correlations between different spatial regions. The results indicate that removing this module leads to significant declines in sensitivity and specificity. For 12-s data, sensitivity dropped by 4.2%. For 60-s data, sensitivity and AUC decreased by 4.4% and 1.8%, respectively.

3. Removal of the Entire GCN Component: The results demonstrate that eliminating the GCN module results in a substantial reduction in sensitivity and AUC. For 12-s data, sensitivity and AUC decreased by 3.1% and 3%, respectively. For 60-s data, these metrics dropped by 3.4% and 0.7%, respectively.

4. Removal of the Entire DCN Component: The results show that removing the DCN module reduces sensitivity and AUC. For 12-s data, these metrics dropped by 0.7% and 0.2%, respectively. However, for 60-s data, sensitivity and AUC declined more drastically, by 9.1% and 2.2%, respectively. Notably, the performance degradation for 60-s data is more pronounced compared to 12-s data, further confirming that the dilated convolution within the DCN module enhances the model’s ability to process long sequence data effectively.

These results highlight the critical contributions of each module to the overall performance of the model, particularly in capturing spatiotemporal correlations and handling long-sequence data.

Further research explored the impact of the data augmentation module on the model’s performance, as detailed in Table 3. From the table, it can be observed that incorporating data augmentation enhances the model’s performance when processing long-sequence data. Without data augmentation, the sensitivity and AUC for 60-s data segments decrease notably by 5.7% and 1%, respectively. However, when the data augmentation module is applied, its effect on short-sequence data is less pronounced. While sensitivity improves slightly, the overall AUC metric shows a marginal decline.

Table 3.

Impact of Data Augmentation on Model Performance.

5. Discussion

The results presented in this study demonstrate the superior performance of the proposed DGDCN model over benchmark methods such as LSTM, CNN, EEGNET, and DCRNN. DGDCN consistently demonstrates favorable results across all evaluation metrics, especially in sensitivity and AUC. These findings indicate the model’s capability to effectively handle the complexities of EEG data, especially in imbalanced classification tasks such as epileptic seizure detection, where reducing false negatives (i.e., improving sensitivity) is crucial.

One of the key findings is the significant advantage of DGDCN in processing both short (12-s) and long (60-s) EEG segments. While CNN and EEGNET perform poorly across different segment lengths, likely due to their inability to capture long-term temporal dependencies, LSTM shows relatively stable AUC but struggles with structural inconsistencies in EEG signals. The ability of DGDCN to leverage graph-based architectures to model non-Euclidean relationships allows it to capture spatiotemporal dependencies more effectively. The comparison between DCRNN and DGDCN highlights the latter’s improved performance, particularly in handling long-sequence data, with notable increases in AUC.

Ablation experiments further validate the contributions of individual components in DGDCN. The removal of the TAtt leads to decreased sensitivity and AUC, confirming its role in enhancing temporal correlations. The SAtt also proves essential, as its removal lowers sensitivity and specificity, indicating its importance in capturing spatial dependencies in EEG signals. Eliminating the GCN module results in a notable reduction in both sensitivity and AUC, underscoring the necessity of graph-based modeling. Additionally, the removal of the DCN module causes the most substantial performance drop, particularly for 60-s data, reaffirming its role in handling long-sequence data effectively.

These findings emphasize that the DGDCN model successfully integrates dynamic spatiotemporal information using graph-based and dilated convolutional mechanisms, making it a robust solution for EEG-based seizure detection. The superior performance of DGDCN in long-sequence data processing suggests its potential applicability in real-world clinical settings, where EEG signals often vary in duration.

Further experiments also indicate that data augmentation enhances the model’s performance in processing long-sequence EEG data. Without augmentation, sensitivity and AUC decrease notably, emphasizing the importance of training data diversity in improving model robustness. Interestingly, although data augmentation significantly improved sensitivity in both the 12-s and 60-s settings, it resulted in a slight reduction in AUC for the 12-s segments. This may be due to the fact that shorter EEG windows contain less temporal variability, making them more sensitive to distortions introduced by augmentation. While such augmentation enhances the model’s ability to detect seizures overall, it may also increase uncertainty for borderline cases, thereby destabilizing the decision boundary and slightly reducing AUC.

Overall, the results confirm that DGDCN effectively addresses the challenges of spatiotemporal EEG classification, particularly in seizure detection. The model’s performance across various segment lengths, along with its use of key attention-based components, demonstrates promising potential for clinical deployment, showing results that are comparable to or better than traditional methods. Future work may focus on extending this approach to other neurological disorders and further optimizing its real-time applicability in medical settings.

Limitation

Recent evaluations by Koren et al. and Reus et al. have systematically assessed commercial seizure detection systems in real-world epilepsy monitoring unit settings [18,19]. While these tools demonstrate reasonable sensitivity and assist in seizure identification, their overall clinical reliability remains limited, particularly due to high false alarm rates, missed events, and poor robustness in noisy clinical environments. These findings underscore the importance of developing detection systems that are not only accurate on public datasets but also clinically viable in deployment scenarios.

Despite the promising performance of our proposed method on benchmark datasets, several limitations should be acknowledged. First, our current evaluation does not consider critical deployment-related factors such as detection latency, computational efficiency, and the ability to process continuous streaming EEG signals. Second, the system has not yet been validated in realistic clinical settings or integrated into hospital workflows, which poses challenges for practical adoption.

To address these limitations, future work should incorporate standardized benchmarking frameworks such as SzCORE. This platform offers unified evaluation protocols across multiple datasets and patient populations, enabling more objective comparisons and improving reproducibility. Integrating SzCORE could help verify our method’s generalizability under real-world conditions and facilitate its translation into clinical use.

Although our study is based on multi-channel EEG data, the proposed spatial-temporal attention framework is inherently flexible and can be adapted to settings with varying electrode layouts. First, the spatial attention matrix is learned from the input, which allows it to naturally scale with different channel configurations. Second, the dynamic graph construction mechanism enables the model to capture inter-channel dependencies even under sparse or individualized layouts. These properties support the potential clinical use of our method in reduced-channel EEG systems. Moreover, if the framework is combined with an internal electrode selection strategy, such as attention-based ranking or sparsity-regularized learning as proposed in related studies [20,21,22,23], it may not only reduce computational complexity but also enhance performance by concentrating on the most informative channels.

Lastly, while this study focuses on data-driven spatiotemporal modeling of EEG signals, true clinical validation also requires alignment with anatomical and electro-clinical information. For example, although the TUSZ dataset is widely used, it lacks metadata such as seizure onset zones or detailed semiology. Future research should incorporate richer clinical annotations to support more comprehensive and translationally meaningful validation.

6. Conclusions

In conclusion, this study addresses key limitations in automated seizure detection by introducing the Dynamic Graph Convolutional Network with Dilated Convolution, which effectively captures dynamic spatiotemporal correlations through a novel attention mechanism and adaptive adjacency matrix construction. The integration of a spatiotemporal convolution module and dilated convolutional network enables the extraction of broader temporal and spatial dependencies, leading to significant improvements in seizure detection performance. The proposed method achieves superior AUC scores of 88.7% and 90.4% on 12-s and 60-s EEG segments, respectively, on the TUSZ dataset, demonstrating its potential as a robust and advanced solution for epileptic seizure detection.

Author Contributions

Conceptualization, X.Z.; methodology, Y.G.; formal analysis, X.Z. and C.D.; investigation, X.Z., Y.G., and C.D.; writing—original draft preparation, X.Z. and Y.G.; writing—review and editing, C.D; funding acquisition, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Interdisciplinary Medical-Engineering Research Fund of Shanghai Jiao Tong University (Grant No. YG2025QNB04).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The Temple University Hospital EEG (TUH EEG) Corpus used in this study is publicly available at https://isip.piconepress.com/projects/nedc/html/tuh_eeg/ (accessed on 18 July 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- World Health Organization. The World Health Report 2001: Mental Health: New Understanding, New Hope. 2001. Available online: https://iris.who.int/handle/10665/42390 (accessed on 31 July 2025).

- Siddiqui, M.K.; Morales-Menendez, R.; Huang, X.; Hussain, N. A review of epileptic seizure detection using machine learning classifiers. Brain Inform. 2020, 7, 5. [Google Scholar] [CrossRef] [PubMed]

- Dan, J.; Pale, U.; Amirshahi, A.; Cappelletti, W.; Ingolfsson, T.M.; Wang, X.; Cossettini, A.; Bernini, A.; Benini, L.; Beniczky, S.; et al. SzCORE: Seizure Community Open-Source Research Evaluation framework for the validation of electroencephalography-based automated seizure detection algorithms. Epilepsia 2024. ahead of print. [Google Scholar] [CrossRef] [PubMed]

- Thuwajit, P.; Rangpong, P.; Sawangjai, P.; Autthasan, P.; Chaisaen, R.; Banluesombatkul, N.; Boonchit, P.; Tatsaringkansakul, N.; Sudhawiyangkul, T.; Wilaiprasitporn, T. EEGWaveNet: Multiscale CNN-based spatiotemporal feature extraction for EEG seizure detection. IEEE Trans. Ind. Inform. 2021, 18, 5547–5557. [Google Scholar] [CrossRef]

- Song, Z.; Deng, B.; Wang, J.; Yi, G.; Yue, W. Epileptic seizure detection using brain-rhythmic recurrence biomarkers and onasnet-based transfer learning. IEEE Trans. Neural Syst. Rehabil. Eng. 2022, 30, 979–989. [Google Scholar] [CrossRef] [PubMed]

- Hu, X.; Yuan, S.; Xu, F.; Leng, Y.; Yuan, K.; Yuan, Q. Scalp EEG classification using deep Bi-LSTM network for seizure detection. Comput. Biol. Med. 2020, 124, 103919. [Google Scholar] [CrossRef] [PubMed]

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907. [Google Scholar]

- Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion Convolutional Recurrent Neural Network: Data-Driven Traffic Forecasting. In Proceedings of the International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Tang, S.; Dunnmon, J.; Saab, K.K.; Zhang, X.; Huang, Q.; Dubost, F.; Rubin, D.; Lee-Messer, C. Self-Supervised Graph Neural Networks for Improved Electroencephalographic Seizure Analysis. In Proceedings of the International Conference on Learning Representations, Virtual, 25 April 2022. [Google Scholar]

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903. [Google Scholar]

- Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 922–929. [Google Scholar]

- Gao, Y.; Chen, X.; Liu, A.; Liang, D.; Wu, L.; Qian, R.; Xie, H.; Zhang, Y. Pediatric seizure prediction in scalp EEG using a multi-scale neural network with dilated convolutions. IEEE J. Transl. Eng. Health Med. 2022, 10, 4900209. [Google Scholar] [CrossRef] [PubMed]

- Sun, J.; Liu, Y.; Ye, Z.; Hu, D. A novel multiscale dilated convolution neural network with gating mechanism for decoding driving intentions based on EEG. IEEE Trans. Cogn. Dev. Syst. 2023, 15, 1712–1721. [Google Scholar] [CrossRef]

- Shah, V.; Von Weltin, E.; Lopez, S.; McHugh, J.R.; Veloso, L.; Golmohammadi, M.; Obeid, I.; Picone, J. The temple university hospital seizure detection corpus. Front. Neuroinform. 2018, 12, 83. [Google Scholar] [CrossRef] [PubMed]

- Tzallas, A.T.; Tsipouras, M.G.; Fotiadis, D.I. Epileptic seizure detection in EEGs using time–frequency analysis. IEEE Trans. Inf. Technol. Biomed. 2009, 13, 703–710. [Google Scholar] [CrossRef] [PubMed]

- Liu, Z. Super convergence cosine annealing with warm-up learning rate. In Proceedings of the CAIBDA 2022, 2nd International Conference on Artificial Intelligence, Big Data and Algorithms, VDE, Nanjing, China, 17–19 June 2022; pp. 1–7. [Google Scholar]

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A compact convolutional neural network for EEG-based brain–computer interfaces. J. Neural Eng. 2018, 15, 056013. [Google Scholar] [CrossRef] [PubMed]

- Koren, J.; Colabrese, K.; Hartmann, M.; Feigl, M.; Lang, C.; Hafner, S.; Nierenberg, N.; Kluge, T.; Baumgartner, C. Systematic comparison of Commercial seizure detection Software: Update equals Upgrade? Clin. Neurophysiol. 2025, 174, 178–188. [Google Scholar] [CrossRef] [PubMed]

- Reus, E.E.; Visser, G.; van Dijk, J.; Cox, F. Automated seizure detection in an EMU setting: Are software packages ready for implementation? Seizure-Eur. J. Epilepsy 2022, 96, 13–17. [Google Scholar] [CrossRef] [PubMed]

- Hartmann, M.; Koren, J.; Baumgartner, C.; Duun-Henriksen, J.; Gritsch, G.; Kluge, T.; Perko, H.; Fürbass, F. Seizure detection with deep neural networks for review of two-channel electroencephalogram. Epilepsia 2023, 64, S34–S39. [Google Scholar] [CrossRef] [PubMed]

- El-Dajani, N.; Wilhelm, T.F.L.; Baumann, J.; Surges, R.; Meyer, B.T. Patient-Independent Epileptic Seizure Detection with Reduced EEG Channels and Deep Recurrent Neural Networks. Information 2025, 16, 20. [Google Scholar] [CrossRef]

- Dokare, I.; Gupta, S. Optimized seizure detection leveraging band-specific insights from limited EEG channels. Health Inf. Sci. Syst. 2025, 13, 30. [Google Scholar] [CrossRef] [PubMed]

- Ferrara, R.; Giaquinto, M.; Percannella, G.; Rundo, L.; Saggese, A. Personalizing Seizure Detection for Individual Patients by Optimal Selection of EEG Signals. Sensors 2025, 25, 2715. [Google Scholar] [CrossRef] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).