Abstract

Patient no-shows significantly disrupt pediatric healthcare delivery, highlighting the necessity for precise predictive models, especially during the dynamic shifts caused by the SARS-CoV-2 pandemic. In outpatient settings, these no-shows result in medical resource underutilization, increased healthcare costs, reduced access to care, and decreased clinic efficiency and increased provider workload. The objective is to develop a predictive model for patient no-shows using data-driven techniques. We analyzed five years of historical data retrieved from both a scheduling system and electronic health records from a general pediatrics clinic within the WVU Health systems. This dataset includes 209,408 visits from 2015 to 2018, 82,925 visits in 2019, and 58,820 visits in 2020, spanning both the pre-pandemic and pandemic periods. The data include variables such as patient demographics, appointment details, timing, hospital characteristics, appointment types, and environmental factors. Our XGBoost model demonstrated robust predictive capabilities, notably outperforming traditional “no-show rate” metrics. Precision and recall metrics for all features were 0.82 and 0.88, respectively. Receiver Operator Characteristic (ROC) analysis yielded AUCs of 0.90 for all features and 0.88 for the top five predictors when evaluated on the 2019 cohort. Furthermore, model generalization across racial/ethnic groups was also observed. Evaluation on 2020 telehealth data reaffirmed model efficacy (AUC: 0.90), with consistent top predictive features. Our study presents a sophisticated predictive model for pediatric no-show rates, offering insights into nuanced factors influencing attendance behavior. The model’s adaptability to evolving healthcare delivery models, including telehealth, underscores its potential for enhancing clinical practice and resource allocation.

1. Introduction

Patient no-shows, defined as a patient failing to attend a scheduled appointment without prior notification, vary widely across healthcare settings, ranging from 12% to 80% [1]. No-shows are a major logistical and economic challenge for clinics and hospital systems, leading to significant revenue losses. No-shows cost the United States healthcare system over USD 150 billion annually, with individual physicians losing an average of USD 200 per unused time slot [2]. Whether patients show up or not, healthcare organizations and medical practices must still pay their staff and cover expenses of resources/facilities. For the provider, no-showed appointments decrease the volume of medical care that can be given. For patients, the increasing lengths of time needed to schedule follow-up appointments can be prohibitive of receiving proper care [3]. Patients who missed an appointment were up to 70% less likely to return within 18 months, particularly older patients with chronic illnesses who often discontinued care after a single missed visit [4].

Several studies have modeled strategies for reducing patient wait times for clinic appointments through artificial intelligence (AI)-enabled technologies and solutions [5]. These machine and deep learning approaches have utilized random forest [6,7], logistic regression [6,8], gradient boosting [9,10], ensemble-based models [11,12], deep neural networks [13,14], and various other approaches to predict patient no-shows. Studies have also examined the type of visit, whether primary care or specialty clinic, and varying demographic populations (e.g., United States, Saudi Arabia, Brazil, etc.) [5]. While outpatient scheduling with a healthcare provider is traditionally thought to account for the largest percentage of no-showed appointments, the use of no-show algorithms could also predict patient readmissions [15] and attrition from diagnostic visits (i.e., imaging and laboratory studies) [16]. These individualized approaches boost patient/provider satisfaction [17] and allow for a more efficient model of healthcare.

The factors affecting patient no-shows can vary slightly between studied populations but can be generalized into three major categories: (1) modifiable and unmodifiable patient characteristics (e.g., age, sex, race/ethnicity, BMI), (2) type of appointment scheduled (i.e., primary care or specialty clinic), and (3) patient behavior (e.g., previous no-show rate, time-to-appointment, travel distance, weather, etc.) [5]. Previous research indicates patients who miss appointments tend to be of lower socioeconomic status, and often have a history of failed/no-show appointments, government-provided health benefits, and psychosocial problems and are less likely to understand the purpose of the appointment [1,9,18]. In addition to forgetting appointments, issues such as trouble getting off work, trouble finding childcare, transportation, and cost can also limit patient compliance for an appointment. No-show rates also increase with increasing time between scheduling and the actual appointment [19]. In pediatrics, few studies have examined how no-show rates can be predicted using machine learning [20,21], with no pediatric studies exploring how the SARS-CoV-2 pandemic affects the ability of no-show rates to handle virtual appointments.

Our study assesses the ability of a machine learning model to predict pediatric no-show rates before and during the SARS-CoV-2 pandemic. We utilized electronic medical record (EMR) data for patients, including features related to modifiable and unmodifiable patient characteristics, appointment type, and patient behavior. We built our model on pre-pandemic outpatient appointment data and utilized pre-pandemic- and pandemic-derived external validation sets. We were able to effectively predict pediatric no-show rates in our validation sets and further explored the role of race/ethnicity in no-show rate prediction.

2. Methods

2.1. Ethical Approval

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of West Virginia University (protocol number: 2002907160, date of approval: 17 March 2020) for studies involving humans.

2.2. Study Population and Data Preprocessing

Data from medical appointments were gathered from an outpatient clinic within a prominent academic pediatric hospital (West Virginia University Hospitals), aimed at enhancing quality improvement efforts (Table 1). The dataset includes appointments from all clinic departments, covering both main campus and satellite locations, as well as various visit types. It also includes healthcare provider details and environmental factors such as weather conditions. To facilitate analysis, categorical data were transformed into multiple binary variables. For instance, the original “day of the week of the appointment” feature, ranging from 1 to 7 (representing Sunday to Saturday), was converted into seven binary indicators, each indicating the appointment’s occurrence on a specific day. Numerical features were normalized to a range of 0 to 1. The labels indicating appointment outcomes were binary, with 1 indicating a no-show and 0 denoting attendance. Notably, a single patient may have multiple records due to multiple appointments. The patient demographic largely comprises children, often accompanied by their parents or caregivers to appointments.

Table 1.

Details of the study population and influence of the variables across the show and no-show appointments. “*” represents a Cohen Effect Size ≥ 0.1.

2.3. Machine Learning Algorithm Development

Our current model leverages pediatric appointments from 2015–2018 (training/testing), 2019 (validation), and 2020 (external holdout) that were scheduled at West Virginia University Outpatient Clinics under the West Virginia University Hospital Systems in West Virginia. The datasets consist of 209,408 (2015–2018), 82,925 (2019), and 58,820 (2020) patient appointments. A total of 46 features were collected that included demographic factors, time of appointment, hospital variables, type of appointment scheduled, and environmental conditions.

Our machine learning model relies on a XGBoost framework that allows for adaptable weighting of variables and hyperparameter optimization [22]. To predict patient no-shows, we implemented the XGBoost algorithm using the XGBClassifier, chosen for its robust performance on complex datasets. The classifier was configured with a gradient boosting “gbtree” booster and a base score of 0.5. We set the learning rate to 0.3 and the maximum depth of trees to 6, ensuring the model was sufficiently detailed yet avoided overfitting. Each tree node considered all features due to a subsample rate and colsample parameters set to 1. The model utilized 100 estimators, with the optimization objective set to binary logistic, tailored for binary classification tasks.

For regularization, we applied L2 regularization (lambda = 1) and L1 regularization (alpha = 0) to balance model complexity and performance. The model automatically handled missing values by treating them as NaNs, ensuring flexibility in dealing with incomplete records. The model operated under a binary logistic objective, focusing on the probability of no-show events. A total of four parallel jobs were run (n_jobs set to 4), exploiting multi-core processing to expedite computation. The random state was anchored at 0 to ensure consistency and reproducibility across model runs. We employed an automatic predictor setting, which optimally selected the most efficient prediction method based on the data structure. The tuning of hyperparameters like max_depth, min_child_weight, subsample, and colsample_bytree was conducted through a methodical grid search to identify the optimal balance, enhancing model effectiveness without overfitting. This methodological approach was geared towards developing a robust predictive model that could effectively forecast patient no-show probabilities in pediatric outpatient settings, considering various patient and environmental factors.

2.4. Performance Evaluation Metrics

The model’s performance was evaluated using standard metrics: accuracy, precision, recall, and area under the ROC curve (AUROC). The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1-specificity) at different thresholds ranging from 0 to 1. The prediction scores (i.e., the predicted probabilities of no-shows) are compared at each threshold. A higher AUROC value (closer to 1) indicates better prediction quality. Similarly, the precision–recall curve (PRC) and area under the PRC (AUPRC) were calculated. Precision measures the accuracy of positive predictions, while recall measures the model’s ability to identify all actual positives. These metrics collectively provide insights into the model’s predictive accuracy and effectiveness.

2.5. SHAP Feature Analysis

We employed SHAP (SHapley Additive exPlanations) feature analysis to interpret the predictive model’s behavior and understand the importance of each feature in making predictions. SHAP values provide insights into how individual features contribute to the model’s output. By decomposing the model’s output for each prediction, SHAP enables us to understand the impact of each feature on the prediction outcome. In particular, SHAP summary plots are generated to visualize the overall feature importance and understand the relationship between specific features and the predicted outcome. Positive SHAP values indicate a feature that contributes to increasing the prediction, while negative values suggest a feature that decreases the prediction.

2.6. Intellectual Property/Data Availability

Our machine learning algorithm is covered by a provisional patent filed between Aspirations LLC and West Virginia University. This is distinctly unique from other filed patents, including the following: US0150242819A1 [2015]—utilizing advanced statistical techniques with no indication of accuracy or performance of the models. US20110208674A1 [2010]—a similar concept but within a ticket booking system. WO2018058189A1 [2016]—describes a supervised learning module that targets overbooking strategies, rather than uniquely identifying patients who are at risk of no-showing their appointment. Due to confidentiality agreements and the proprietary nature of the technology, the underlying data supporting this work are not publicly available.

2.7. Statistics

Baseline characteristics of the dataset were analyzed to provide insights into the demographic, clinic-based, insurance, and appointment-related attributes of the patient population. Descriptive statistics, including measures of central tendency and dispersion, were computed for numerical variables such as age and appointment duration, etc. Categorical variables, such as gender and appointment type, were summarized using frequency distributions.

To identify factors influencing the likelihood of patient no-shows, univariate and multivariate analyses were conducted. Univariate analyses involved assessing the association between each individual predictor variable and the outcome variable (completed vs. show) using appropriate statistical tests such as chi-square tests for categorical variables and t-tests or ANOVA for continuous variables.

3. Results

3.1. Baseline Characteristics and Influence of Variables on No-Show Rates

We retrospectively collected records from 161,822 hospital appointments made by 19,450 patients between 1 January 2015 and 31 December 2019 at pediatric clinics of all specialties in West Virginia University Hospitals [WVUH]. Figure 1 describes the workflow for the project. From our experience, the main factors driving the no-show rate were the days until the scheduled appointments. The longer the interval, the lower the likelihood of the appointment being completed. We also noted that same day appointments had low likelihood of patient no-shows. Appointment cancellations or reschedules were also strong predictors of no-shows. Interestingly, full-time employment status of the parent had a positive impact on adherence to the appointment in our pediatric clinics. Those with previous history of no-shows tended to have more chances of missing future appointments. Although not a major factor, some pediatric specialties exhibited higher appointment adherence rates, for example cardiology and nephrology, while others had higher chances of experiencing patient no-shows, for example hematology, neurology, and gastroenterology.

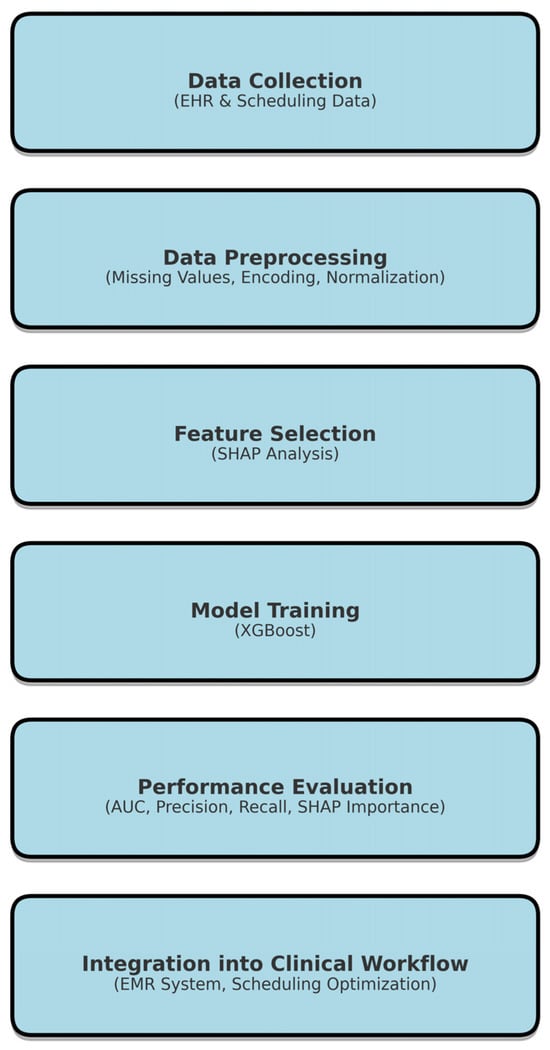

Figure 1.

Research framework for predicting patient no-shows using XGBoost. Sequential steps, including data collection from electronic health records (EHR), preprocessing techniques, feature selection using SHAP analysis, model training with XGBoost, performance evaluation using AUC and AUC metrics, and integration into clinical workflows for scheduling optimization. The structured process ensures efficient and interpretable predictive modeling for healthcare applications.

Gender, weather, race or ethnicity, and language preference did not significantly impact the no-show status for a scheduled appointment.

3.2. Performance of Model Predictions of No-Shows

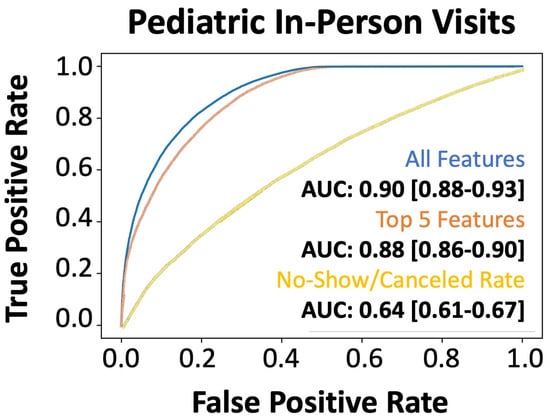

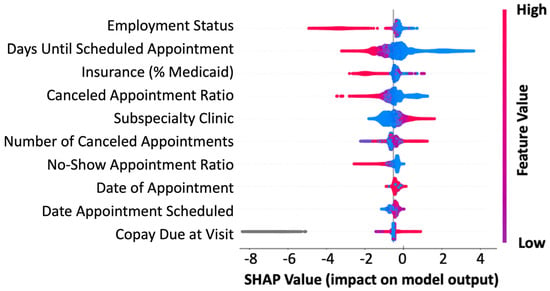

The machine learning model developed the no-show prediction probabilities. Table 2 highlights the superior prediction capacity for patient no-shows when using all 46 features collected (precision: 0.82, recall: 0.88) as well as the top 5 predictive features (precision: 0.81, recall: 0.84) in the validation dataset. This is further captured by the Receiver Operator Characteristic (ROC) area under the curve (AUC) for all features (AUC: 0.90) and the top five features (AUC: 0.88) (Figure 2). Additionally, we used the basic “no-show rate” alone to compute the likelihood of a patient being compliant with their visit (AUC: 0.64) (Figure 2). This “no-show rate” is a simple frequency: (total visits the patient has no-showed)/(total visits the patient has attended + total visits the patient has no-showed). This frequency is commonly employed by EMR systems to provide a baseline estimation if double booking or other alternative scheduling procedures should be enacted. To test if our algorithm can provide unbiased predictions across racial/ethnic groups, we subset the data. While Caucasians make up the primary patient population, our algorithm can efficiently generalize to other racial and ethnic populations, even when underrepresented (Table 3). From our analyses in the pediatric population, the no-show rate alone was insufficient to effectively predict patient compliance with their appointment. Additionally, features that were most important to the construction of the model were not within a single category, highlighting the complexity in interpretating patient no-shows (Figure 3). The most influential factors in predicting patient no-shows include employment status, appointment wait time, insurance type, cancellation history, and medical specialty (Table 4). Patients with full-time employment and shorter scheduling delays are more likely to attend, while those with Medicaid, frequent cancellations, and certain specialty appointments have higher no-show rates.

Table 2.

Model performance metrics on the 2019 validation data.

Figure 2.

Performance of the machine learning (XGBoost) model for predicting no-shows in the holdout test set. AUROC of xgboost machine learning model: all features (blue line), only the top five features (orange line), and the direct no-show/cancel rate (yellow line) in predicting no-shows for the 2019 holdout validation dataset.

Table 3.

Model training on the entire dataset followed by application and evaluation on individual racial and ethnic groups.

Figure 3.

Feature importance in predicting no-shows. SHAP summary plot for the top 10 features contributing to the XGBoost model. Each line represents a feature, and the abscissa is the SHAP value. Red dots represent higher feature values, blue dots represent lower feature values, and gray dots represent missing values.

Table 4.

Top five most predictive features based on SHAP analysis.

3.3. Evaluation of Model Generalizability and Adaptability to Unknown Healthcare Needs

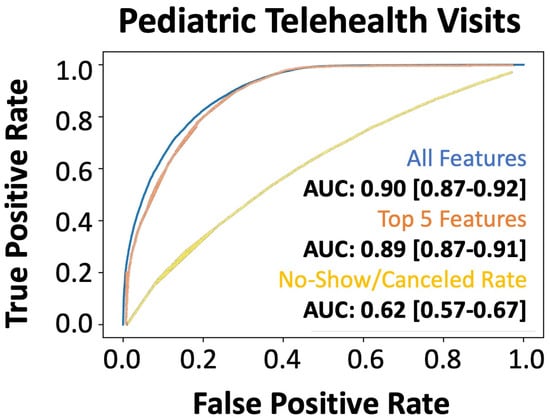

We also wanted to understand if our model was adaptable to the changing dynamics of healthcare needs and initiatives, such as those driven by coronavirus disease 2019 (COVID-19), which created an increase in no-contact telehealth appointments. The evaluation of our holdout dataset (2020), which contained 35% telehealth visits, revealed that our model provided superior predictions across all features (AUC: 0.90) and the topmost predictive features (AUC: 0.88) (Figure 4). Again, we showed that the traditional “no-show rate” computed in the EMR system was significantly inferior to our integrative approach (AUC: 0.62). Model robustness on the 2020 pediatric telemedicine dataset and the shared top features between the validation and external holdout datasets highlight the persistence of our machine learning model in generating accurate predictions of patient no-shows. Additionally, the preliminary data are from pediatric appointments, highlighting our algorithm’s ability to predict the no-show rate of the patient based primarily on external factors, such as transportation by the guardian/caregiver.

Figure 4.

Performance of the machine learning (XGBoost) model for predicting no-shows in the external validation dataset. AUROC of xgboost machine learning model: all features (blue line), only the top five features (orange line), and the direct no-show/cancel rate (yellow line) in predicting no-shows in the 2020 telemedicine dataset during the pandemic.

For our system at WVU, we learned that strategic double booking might be a solution. Most of our clinics had 20 min visit slots, so for a 4 h clinic session, our proposed double booking was focused towards the middle of the session with limits of two per 4 h and three for 8 h. Some of our specialists had 30 min for a return visit and 1 h for a new patient visit. For those schedules, our strategy was to overbook a follow-up visit in the new patient visit slot with a new patient who had waited over 3 months for the appointment, as the data suggested decreased probability of patient adherence to a scheduled appointment after 3 months wait time. We strongly recommended not overbooking at the beginning or end of a clinic session to maintain the flow of the clinic as well as considering the providers’ efficiency during the clinic session. As technology improves, all these measures might even become second nature in clinic patient scheduling.

4. Discussion

Our technology uses an AI-based machine learning algorithm to predict the probability of individual patients not showing up to an appointment on a given date and time. The algorithm uses a patient’s historical demographic data to proactively predict no-shows and employ strategic double booking to avoid disruptions and minimize costs to the clinic. Unlike other scheduling systems that employ advanced statistical techniques (such as logistic regression and Bayesian prediction), our platform leverages cutting-edge machine learning technology—a scalable, distributed gradient-boosted decision tree algorithm utilizing minimal feature input for easy implementation across all healthcare systems—that continues to learn and improve, thus increasing prediction accuracy over time.

Our model maintained high predictive performance (AUC: 0.90) for telehealth appointments, which is important for generalization to hybrid appointment styles. Importantly, when examining the top five features used for no-show prediction, none of these features are specific to in-person visits. This further highlights that there are innate factors involved in appointment adherence that are separate from accessible transportation, weather conditions, and other factors contributing to an in-person visit. The marginal difference in performance between all features and the top five features (AUC: 0.90 vs. 0.88) can be explained by the types of included features. For example, important features, such as “canceled appointment ratio”, had multiple highly correlated features, including the number of changed appointments, number of canceled appointments, number of appointments attended, and no-show count. These highly correlated features added little value to the model performance. Further, during COVID-19, the increased use of telehealth altered patient behavior. Traditional factors such as travel distance became irrelevant, while new variables (e.g., internet connectivity, prior telehealth experience) gained importance. As discussed, features innate to the experience of telehealth or in-person visits were less contributory to model development overall, therefore allowing the model to generalize well between both populations.

Of the work that has been performed to improve patient no-show prediction, many studies have failed to perform superiorly to the basic statistic of no-show rate displayed per patient [17]. While the no-show rate metric (AUC: 0.64) is a commonly used heuristic, it lacks contextual awareness. It does not factor in temporal variations (e.g., seasonal trends), socioeconomic variables, or patient behavior, limiting its predictive capability compared to our machine learning model. Of the studies that performed better than the basic no-show rate statistic, most algorithms have not shown superior performance in predicting patient no-shows (i.e., AUC < 0.85) and have also only been applied to very specific populations (i.e., a single population or visit type) [17]. While the current algorithms predicting patient no-shows reveal promise for clinical application [23,24], there is currently no validated machine learning algorithm incorporated into an EMR to provide real-time predictions. Additionally, due to the limited scope of most no-show prediction algorithms, racial bias within the algorithm can result in up to a 30% increase in wait time for black patients [25]; optimizing care for patients, regardless of gender, race/ethnicity, and socioeconomic status, requires a demonstration of the algorithm’s stability across these conditions to promote healthcare equity.

Clinics currently utilize scheduling protocols that dictate how patients get scheduled for each clinic and within different specialties. There is considerable variability per provider, per clinic, and sometimes even per site within a healthcare system. Epic Systems Corporation (Epic) Electronic Medical Record (EMR) has a basic statistic that displays the no-show rate per patient in the scheduling software, but there is little knowledge about the prospective performance of this information. However, this information is not currently utilized in improving the scheduling of appointments.

While the scheduling staff can see the historic no-show rate for each patient when they call for an appointment, they have no autonomy to actively modify scheduling to reduce patient no-shows and follow the guidance of clinic protocols. As such, commonly implemented approaches to avoid no-shows include appointment reminders and no-show fines, while approaches to reduce the impact of no-shows on the providers and the healthcare system include double booking. Double booking could offer an advantage to both the patient and provider if implemented in a strategic manner, including through combination with predictive AI algorithms. However, such automated “strategic double booking” would require a machine learning algorithm capable of dynamically updating based on each patient’s likelihood of missing an appointment. For example, at WVU, strategic double booking was observed to be an effective approach. In clinics with 20 min visit slots, double booking was typically concentrated in the middle of 4 h sessions, with a common limit of two patients for a 4 h session and three for an 8 h session. For specialists with 30 min return visits and 1 h new patient appointments, follow-up visit slots were often overbooked with new patients who had been waiting over 3 months, as data suggested a decrease in adherence following such delays. Additionally, overbooking at the start or end of clinic sessions was generally avoided to maintain clinic flow and optimize provider efficiency.

To ensure operational feasibility, the algorithm must optimize computational efficiency by leveraging parallel processing and scalable infrastructure to handle large EMR datasets in real time. Data collection challenges, such as missing values and inconsistent formats across healthcare systems, require robust preprocessing pipelines with imputation and standardization techniques. Seamless integration with EMR systems demands API-based interoperability, ensuring secure, real-time data exchange without disrupting clinical workflows. Additionally, model updates should be automated to adapt to evolving patient behaviors and scheduling trends while maintaining interpretability for clinical decision making. We outline additional approaches for clinical application of the algorithm in Table 5.

Table 5.

Optimized double booking and EMR integration strategies.

5. Limitations

Our study looked at the clinic population in the Appalachian region of West Virginia. Even though the financial diversity within the state was taken into consideration, there might be factors that were not obvious in our results due to limited ethnic and racial diversity in the state of West Virginia. Further, although the model performed well across all groups, slight performance variability was observed, particularly in underrepresented populations. Future work could help offset class imbalance through oversampling techniques (e.g., the Synthetic Minority Over-sampling Technique (SMOTE) [26]) or fairness-aware techniques such as adversarial debiasing [27] to improve model robustness.

Another limitation is the lack of additional machine/deep learning models for comparison. For example, some novel approaches include genetic algorithms or hybrid genetic algorithms combined with standard machine learning approaches such as Gaussian naive Bayes, support vector machines, and XGBoost [28]. Genetic algorithms optimize feature selection and hyperparameters through evolutionary searching but are computationally expensive due to iterative stochastic sampling. XGBoost, a gradient boosting framework, builds decision trees sequentially, optimizing errors with parallelization and regularization for efficiency. While genetic algorithms explore a broader solution space, they lack the structured learning efficiency of XGBoost, which can be faster and more scalable.

While our study provides valuable insights, it is important to acknowledge limitations such as potential data biases and the retrospective nature of the analysis. Importantly, the data used in this study are provided by a specific hospital or hospital system, and thus, in pediatric populations the generalizability of the research results may be limited. Future research can extend the data source to other hospitals and to other population and age ranges to cross-validate our results or could focus on prospectively collecting data and implementing interventions to evaluate their effectiveness in reducing patient no-show rates.

Our proposed solution of strategic overbooking itself has its limitations. It depends on the protocols shared with the call center to offer certain slots for overbooking. We believe that a model actively analyzing the patient no-show data and proposing slots in real time using patient no-show history might be a better solution to take away any end user bias or human errors in interpreting the protocol implementation.

6. Conclusions

No-shows in healthcare settings pose significant challenges for both providers and patients. Understanding the underlying factors driving these no-shows is crucial for developing effective interventions to reduce their occurrence and enhance clinic efficiency. This study proposes the implementation of a machine learning framework to predict patient no-shows, offering hospitals a proactive approach to optimize their outpatient appointment systems. By accurately anticipating potential no-show behavior, healthcare facilities can implement targeted strategies such as personalized appointment reminders, flexible scheduling options, and provider-specific interventions to mitigate the impact of no-shows on healthcare delivery. These proactive measures not only improve clinic efficiency but also enhance patient satisfaction, provider productivity, and overall healthcare outcomes. Future research will explore dynamic scheduling systems that adjust patient appointments based on predictive no-show risk and investigate fairness-aware ML techniques to mitigate demographic biases.

Author Contributions

J.N., T.N. and N.Y. contributed to the conception and design of this study. N.Y. organized the database. Q.A.H. and N.Y. performed the statistical analysis and developed models. Q.A.H. wrote the first draft of the manuscript. J.N., T.N. and N.Y. wrote sections of the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of West Virginia University (protocol number: 2002907160, date of approval: 17 March 2020) for studies involving humans.

Informed Consent Statement

Patient consent was waived due to the retrospective nature of the study.

Data Availability Statement

All data produced in the present study are available upon reasonable request to the authors.

Acknowledgments

The authors express their gratitude to all the patients, providers, administrators, clinic staff, schedulers, and researchers at West Virginia University Hospitals who contributed and/or supported our research.

Conflicts of Interest

Author T.N. was employed by the company Chicagi LLC. Additionally, Q.A.H. and T.N. served in advisory roles to Aspirations LLC. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Marbouh, D.; Khaleel, I.; Al Shanqiti, K.; Al Tamimi, M.; Simsekler, M.C.E.; Ellahham, S.; Alibazoglu, D.; Alibazoglu, H. Evaluating the Impact of Patient No-Shows on Service Quality. Risk Manag. Healthc. Policy 2020, 13, 509–517. [Google Scholar] [CrossRef]

- Gier, J. Missed Appointments Cost the U.S. Healthcare System $150B Each Year. Available online: https://www.hcinnovationgroup.com/clinical-it/article/13008175/missed-appointments-cost-the-us-healthcare-system-150b-each-year (accessed on 24 April 2023).

- Ansell, D.; Crispo, J.A.G.; Simard, B.; Bjerre, L.M. Interventions to reduce wait times for primary care appointments: A systematic review. BMC Health Serv. Res. 2017, 17, 295. [Google Scholar] [CrossRef]

- Hayhurst, C. No-Show Effect: Even One Missed Appointment Risks Retention. Available online: https://www.athenahealth.com/knowledge-hub/financial-performance/no-show-effect-even-one-missed-appointment-risks-retention (accessed on 6 June 2023).

- Salazar, L.H.A.; Parreira, W.D.; Fernandes, A.M.R.; Leithardt, V.R.Q. No-Show in Medical Appointments with Machine Learning Techniques: A Systematic Literature Review. Information 2022, 13, 507. [Google Scholar] [CrossRef]

- Qureshi, Z.; Maqbool, A.; Mirza, A.; Iqbal, M.Z.; Afzal, F.; Kanubala, D.D.; Rana, T.; Umair, M.Y.; Wakeel, A.; Shah, S.K. Efficient Prediction of Missed Clinical Appointment Using Machine Learning. Comput. Math. Methods Med. 2021, 2021, 2376391. [Google Scholar] [CrossRef]

- Salazar, L.H.A.; Leithardt, V.R.Q.; Parreira, W.D.; Fernandes, A.M.R.; Barbosa, J.L.V.; Correia, S.D. Application of Machine Learning Techniques to Predict a Patient’s No-Show in the Healthcare Sector. Future Int. 2021, 14, 3. [Google Scholar] [CrossRef]

- Moharram, A.; Altamimi, S.; Alshammari, R. Data Analytics and Predictive Modeling for Appointments No-show at a Tertiary Care Hospital. In Proceedings of the 2021 1st International Conference on Artificial Intelligence and Data Analytics (CAIDA), Riyadh, Saudi Arabia, 6–7 April 2021; pp. 275–277. [Google Scholar]

- Daghistani, T.; AlGhamdi, H.; Alshammari, R.; AlHazme, R.H. Predictors of outpatients’ no-show: Big data analytics using apache spark. J. Big Data 2020, 7, 108. [Google Scholar] [CrossRef]

- Fan, G.; Deng, Z.; Ye, Q.; Wang, B. Machine learning-based prediction models for patients no-show in online outpatient appointments. Data Sci. Manag. 2021, 2, 45–52. [Google Scholar] [CrossRef]

- Alshammari, A.; Almalki, R.; Alshammari, R. Developing a Predictive Model of Predicting Appointment No-Show by Using Machine Learning Algorithms. J. Adv. Inform. Technol. 2021, 12, 234–239. [Google Scholar] [CrossRef]

- Ahmadi, E.; Garcia-Arce, A.; Masel, D.T.; Reich, E.; Puckey, J.; Maff, R. A metaheuristic-based stacking model for predicting the risk of patient no-show and late cancellation for neurology appointments. IISE Trans. Healthc. Syst. Eng. 2019, 9, 272–291. [Google Scholar] [CrossRef]

- Srinivas, S.; Salah, H. Consultation length and no-show prediction for improving appointment scheduling efficiency at a cardiology clinic: A data analytics approach. Int. J. Med/Inform. 2021, 145, 104290. [Google Scholar] [CrossRef]

- Alshammari, R.; Daghistani, T.; Alshammari, A. The Prediction of Outpatient No-Show Visits by using Deep Neural Network from Large Data. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 533–539. [Google Scholar] [CrossRef]

- Shameer, K.; Johnson, K.W.; Yahi, A.; Miotto, R.; Li, L.I.; Ricks, D.; Jebakaran, J.; Kovatch, P.; Sengupta, P.P.; Gelijns, S.; et al. Predictive Modeling of Hospital Readmission Rates Using Electronic Medical Record-Wide Machine Learning: A Case-Study Using Mount Sinai Heart Failure Cohort. Pac. Symp. Biocomput. 2017, 22, 276–287. [Google Scholar] [CrossRef] [PubMed]

- Chong, L.R.; Tsai, K.T.; Lee, L.L.; Foo, S.G.; Chang, P.C. Artificial Intelligence Predictive Analytics in the Management of Outpatient MRI Appointment No-Shows. AJR Am. J. Roentgenol. 2020, 215, 1155–1162. [Google Scholar] [CrossRef] [PubMed]

- Mohammadi, I.; Wu, H.; Turkcan, A.; Toscos, T.; Doebbeling, B.N. Data Analytics and Modeling for Appointment No-show in Community Health Centers. J. Prim. Care Community Health 2018, 9, 2150132718811692. [Google Scholar] [CrossRef] [PubMed]

- Ellis, D.A.; McQueenie, R.; McConnachie, A.; Wilson, P.; Williamson, A.E. Demographic and practice factors predicting repeated non-attendance in primary care: A national retrospective cohort analysis. Lancet Public Health 2017, 2, e551–e559. [Google Scholar] [CrossRef]

- Festinger, D.S.; Lamb, R.J.; Marlowe, D.B.; Kirby, K.C. From telephone to office: Intake attendance as a function of appointment delay. Addict. Behav. 2002, 27, 131–137. [Google Scholar] [CrossRef]

- Chen, J.; Goldstein, I.H.; Lin, W.C.; Chiang, M.F.; Hribar, M.R. Application of Machine Learning to Predict Patient No-Shows in an Academic Pediatric Ophthalmology Clinic. AMIA Annu. Symp. Proc. 2020, 2020, 293–302. [Google Scholar] [PubMed]

- Liu, D.; Shin, W.Y.; Sprecher, E.; Conroy, K.; Santiago, O.; Wachtel, G.; Santillana, M. Machine learning approaches to predicting no-shows in pediatric medical appointment. NPJ Digit. Med. 2022, 5, 50. [Google Scholar] [CrossRef] [PubMed]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the KDD’16: 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar]

- Srinivas, S.; Ravindran, A.R. Optimizing outpatient appointment system using machine learning algorithms and scheduling rules. Expert Syst. Appl. 2018, 102, 245–261. [Google Scholar] [CrossRef]

- Srinivas, S.; Ravindran, A.R. Designing schedule configuration of a hybrid appointment system for a two-stage outpatient clinic with multiple servers. Health Care Manag. Sci. 2020, 23, 360–386. [Google Scholar] [CrossRef]

- Samorani, M.; Harris, S.L.; Blount, L.G.; Lu, H.; Santoro, M.A. Overbooked and Overlooked: Machine Learning and Racial Bias in Medical Appointment Scheduling. Manuf. Serv. Oper. Manag. 2021, 24, 2825–2842. [Google Scholar] [CrossRef]

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357. [Google Scholar] [CrossRef]

- Yang, J.; Soltan, A.A.S.; Eyre, D.W.; Yang, Y.; Clifton, D.A. An adversarial training framework for mitigating algorithmic biases in clinical machine learning. NPJ Digit. Med. 2023, 6, 55. [Google Scholar] [CrossRef] [PubMed]

- Kumar, A.; Dhanka, S.; Singh, J.; Ali Khan, A.; Maini, S. Hybrid machine learning techniques based on genetic algorithm for heart disease detection. Innov. Emerg. Technol. 2024, 11, 2450008. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).