Abstract

The lack of spatial pose information and the low positioning accuracy of the picking target are key factors limiting the performance of citrus-picking robots. This paper proposes a new method for automatic citrus harvesting that combines semantic segmentation and rotated object detection for monocular pose estimation. First, Faster R-CNN is used for grasp detection to identify candidate grasp frames. At the same time, a semantic segmentation network extracts the contour information of the citrus fruit to be harvested. Then, using the semantic segmentation results, the grasp frame with the highest confidence is selected for each target fruit, and a rough angle is estimated. The network then uses image-processing techniques and a camera-imaging model to further segment the mask image of the fruit and its attached branches and to fit the contour, the fruit centroid, the minimum bounding rectangle of the fruit, and its three-dimensional bounding box. The positional relationship between the citrus fruit and its attached branches is used to estimate the three-dimensional pose of the fruit. The effectiveness of the method was verified through citrus-planting experiments, and field picking experiments were then carried out in the natural environment of an orchard. The results showed that the success rate of citrus fruit recognition and positioning was 93.6%, the average attitude estimation angle error was 7.9°, and the success rate of picking was 85.1%. The average picking time was 5.6 s, indicating that the robot can effectively perform intelligent picking operations.

1. Introduction

Citrus is one of the most important fruit tree genera in the world, with a planting area and yield ranking among the top in the world []. In China, the citrus industry has always been a pillar of rural economic development []. However, currently, citrus harvesting in China still relies on high-cost and high-intensity manual harvesting []. The insufficient supply of agricultural labor and the huge labor expenditure are seriously hindering the development of the industry []. Therefore, utilizing intelligent devices to automatically harvest fruits is of great significance for liberating productivity and improving production efficiency []. Due to the irregular position and angle of citrus growth, as well as the influence of branches and fruit occlusion, the harvesting conditions are complex and variable, making it difficult for harvesting robots to accurately identify and locate citrus fruits in complex orchard environments [].

In recent years, robots have been widely used in industrial, agricultural, and other scenarios, and they play an increasingly important role. Grasping is the most common basic action of a robot and is indispensable for pick-and-place tasks. However, because of unstructured farm environments and other uncertainties, reliable grasping remains a huge challenge. In an unstructured field environment, a picking robot must not only accurately identify the target fruit in the scene but also precisely determine its location and orientation. This requires highly precise automation that can adapt to complex farm environments.

At present, machine vision detection technology is widely used in fruit detection tasks []. However, image-processing techniques based on color, shape, and texture require accurate feature information of the target fruit []. Sun proposed a multi-task learning model called FPENet that simultaneously locates the fruit navel point and predicts the fruit rotation vector [], introducing hyperparameters into the loss function so that the multiple tasks converge simultaneously []. A 2D image annotation tool was also designed, and a citrus pose dataset was constructed to support model training and algorithm evaluation []. Lin proposed a fruit detection and pose estimation method using low-cost red-green-blue-depth (RGB-D) sensors []. First, a state-of-the-art fully convolutional network segments the RGB images and outputs binary images of fruits and branches []. Then, based on the fruit binary image and the RGB-D depth image, Euclidean clustering groups the point cloud into a set of individual fruits []. Subsequently, a multiple three-dimensional (3D) line-segment detection method reconstructs the segmented branches []. Finally, the center position of each fruit and the information of its nearest branch are used to estimate the 3D pose of the fruit. Kim Taehyeong proposed a bottom-up 2D pose estimation method for multiple tomato fruit-bearing systems []: all components related to tomato pose are first detected in an image, and then the pose of each object is estimated. This method takes a human pose estimation network as the backbone and trains the model on four key points defined in that study: tomato center [], calyx [], abscission layer [], and branching point []. Rong Jiacheng aimed to accurately identify the position of tomato fruits and estimate the grasping posture to improve the success rate and efficiency of robot harvesting []. A specially designed robotic arm integrating adsorption and clamping was proposed, and an optimal sorting algorithm and a fruit nearest-neighbor positioning algorithm were developed []. Directional grasping and sequential picking control strategies were designed to reduce the impact of dense fruits on the precise grasping of the robotic arm [].

Overall, research on target detection and pose estimation of citrus fruits in natural environments is currently limited, and no mature intelligent harvesting technology for natural orchard environments has been reported []. In an unstructured citrus orchard, a reliable and robust citrus fruit detection algorithm is crucial for the development of harvesting robots. At the same time, fruit pose estimation is an important factor in guiding the end effector of the harvesting robot to approach the fruit for precise harvesting []. Therefore, to address the high accuracy requirements of citrus fruit recognition and localization and the difficulty of pose estimation in natural orchard planting environments, this article proposes a monocular pose estimation method based on semantic segmentation and rotated object detection. The method uses Faster R-CNN for grasp detection to obtain candidate grasp boxes, combines it with a semantic segmentation network to obtain the contour information of citrus fruits, and, based on the semantic segmentation results, selects the grasp box with the highest confidence for each citrus fruit to be grasped and completes the rough angle estimation, providing a technical basis for the development of citrus-picking robots [].

2. Related Work

2.1. Data Acquisition Platform

In this study, citrus fruits to be picked are the research object. All the pictures were taken in April 2024 in a natural citrus orchard at the Hunan Academy of Agricultural Sciences in Changsha, Hunan Province, China. The experimenters used Intel’s RealSense D435i (Intel Corporation, Santa Clara, CA, USA) as the image capture device. During collection, the experimenter held the camera parallel to the fruit tree at a distance of 0.3 to 0.6 m from the crown surface. The pixel resolution was fixed at 1280 × 720. Sample images are shown in Figure 1. To improve the robustness of model training, the acquired original images were screened and manually labeled with Labelme software 3.16.2, and the image data set was then randomly divided into a training set, a test set, and a verification set at a ratio of 8:1:1.
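The random 8:1:1 division described above can be sketched as follows. This is a minimal illustration, not the authors' script: the function name `split_dataset`, the fixed seed, and the file names are all hypothetical, since the text does not describe how the split was implemented.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Randomly split items into train/test/verification subsets by integer ratios."""
    shuffled = list(items)
    # A fixed seed (an assumption made here) keeps the split reproducible.
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_test = len(shuffled) * ratios[1] // total
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    # Whatever remains goes to the verification set, so no image is dropped.
    val = shuffled[n_train + n_test:]
    return train, test, val


# Hypothetical file names; the real dataset layout is not given in the text.
images = [f"citrus_{i:04d}.jpg" for i in range(100)]
train, test, val = split_dataset(images)
print(len(train), len(test), len(val))
```

Assigning the remainder to the last subset is one simple way to handle data set sizes that are not multiples of ten.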

Figure 1.

Example of citrus dataset. (a) Sunny day; (b) sunny backlight; (c) cloudy sky.

2.2. Network Model Construction

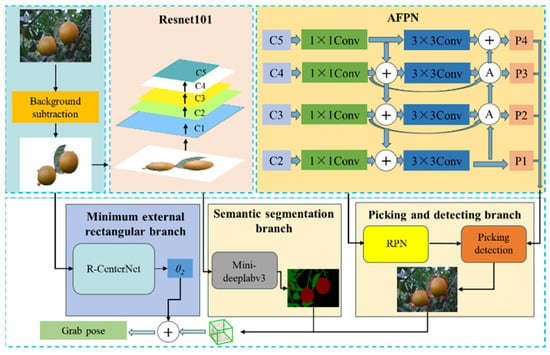

In this study, the two-dimensional grasp rectangle generated in the RGB color image is used as the reference for the grasping posture of the picking robot. The deflection angle of the detection frame is used as the working angle of the picking robot, and the pixel coordinates of the center point of the detection frame are converted into the three-dimensional coordinates of the corresponding space point in the base coordinate system of the picking robot. To suppress background interference and the influence of irrelevant branches and obtain accurate segmentation images of citrus fruits and their attached branches, a background difference method based on RGB color information can be used: once the background of the application scene has been obtained, the citrus fruits to be grasped are separated from the natural orchard background. Background suppression may introduce noise points along the outline of the object [], some of which can be removed by morphological operations. However, this method does not suppress the shadow of the object to be grasped in the scene. Therefore, the overall architecture of the network in this study is divided into two stages, as shown in Figure 2. Stage 1: First, the grasp detection algorithm Faster R-CNN is used to obtain a series of initial grasp frames. Then, the outline information of the citrus fruits to be grasped, obtained by semantic segmentation, is integrated with the geometric information of the initial grasp frames. For each citrus fruit to be grasped, the frames whose grasping position lies on the object are considered, the frame with the highest confidence among them is taken as the initial grasp frame, and the rough angle estimation is completed. Stage 2: The angle prediction branch is used to obtain a fine grasp angle for the citrus fruit to supervise the deflection angle of the grasp frame in stage 1.

- (1) Faster R-CNN model

Figure 2.

Citrus fruit picking pose-estimation network structure.

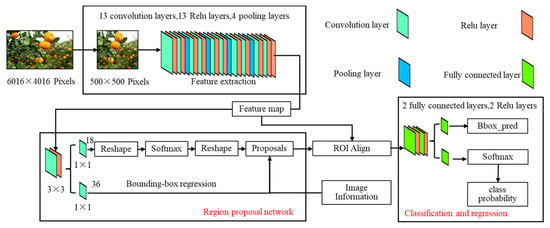

To suppress background interference and the influence of irrelevant branches and obtain accurate segmentation images of citrus fruits and their attached branches, ResNet-101 was used as the backbone network of the Faster R-CNN model to extract features. R-CNN, short for region-based CNN, was among the first algorithms to successfully apply deep learning to object detection. The Faster R-CNN model consists of a backbone network, a region proposal network, and a detection head. The backbone network extracts the features of the input image and generates the feature maps C1, C2, C3, C4, and C5. The feature pyramid network receives the feature maps {C2, C3, C4, C5} output by the backbone network and fuses the feature maps of different levels to generate P1, P2, P3, and P4. To reduce the loss of citrus fruit features as the network deepens, the feature pyramid network adopts AFPN, an attention-based top-down and bottom-up structure that achieves multi-scale feature fusion. The region proposal network uses the feature maps generated by AFPN to generate a series of candidate grasp frames and outputs them to the picking detection head. The picking detection head classifies the deflection angle of the grasp frame into 18 classes, while the pixel coordinates of the center point and the width and height of the grasp frame are obtained by regression. The network structure of Faster R-CNN is shown in Figure 3.
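To make the 18-class angle formulation concrete, the sketch below maps a continuous deflection angle to a class index and back. It assumes the 18 classes evenly divide a 180° range (10° per class), which is a common convention for grasp rectangles but is not stated in the text; the constant and function names are hypothetical.

```python
NUM_ANGLE_CLASSES = 18       # number of angle classes, from the text
ANGLE_RANGE_DEG = 180.0      # assumption: a grasp rectangle repeats every 180 degrees
BIN_WIDTH_DEG = ANGLE_RANGE_DEG / NUM_ANGLE_CLASSES


def angle_to_class(angle_deg):
    """Map a deflection angle in degrees to a class index in [0, 17]."""
    wrapped = angle_deg % ANGLE_RANGE_DEG
    # min() guards against floating-point wrap-around landing exactly on 180.0.
    return min(int(wrapped // BIN_WIDTH_DEG), NUM_ANGLE_CLASSES - 1)


def class_to_angle(class_index):
    """Return the center angle in degrees of a class bin (the rough angle estimate)."""
    return (class_index + 0.5) * BIN_WIDTH_DEG


rough_class = angle_to_class(37.0)
rough_angle = class_to_angle(rough_class)
print(rough_class, rough_angle)
```

Under this assumption, the classification output only resolves the angle to within one bin, which is consistent with the text describing the stage 1 result as a rough angle that a later branch refines.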

- (2) FPNs (Feature Pyramid Networks)

Figure 3.

Framework diagram of Faster R-CNN.

The low-level feature maps of an FPN mainly contain local location information of objects, such as edge lines, but lack sufficient semantic information. High-level feature maps, in contrast, mainly contain global semantic information such as details and contours, but their position information is coarse []. Usually, rich high-level semantic information yields better detection results. FPNs therefore fuse high-level features (low resolution, rich semantics) with low-level features (high resolution, weak semantics), so that the feature maps at every level of the pyramid contain rich semantic information. However, location information is very important for picking detection, so this study adds a bottom-up structure to the FPN and injects low-level features into the high-level features to obtain more accurate location information for citrus fruits. The attention feature pyramid network (AFPN) proposed in this paper is a top-down and bottom-up structure based on a self-attention mechanism. The structure of the AFPN is shown in Figure 3, where the A in AFPN denotes the cosine non-local attention module. In the AFPN, the attention module and the bottom-up structure capture fine information at different scales in the image and achieve multi-scale feature fusion, so that all layers of the pyramid share similar semantic features and accurate location information, which improves the accuracy of picking detection.

- (3) Semantic segmentation module

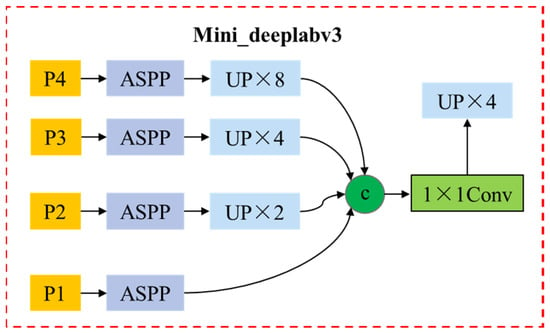

Conventional picking detection does not take the contour of the target citrus fruit into account: it uses the detection frame of the target fruit to select, from a series of picking frames, the frame that intersects it with the highest confidence as the optimal picking frame, without considering whether the picking location actually lies on the object []. Semantic segmentation can obtain the contour information of the citrus fruits to be grasped in the natural orchard scene. Using this contour information, the frame whose grasping position lies on the fruit and whose confidence is highest can therefore be selected from the series of grasp frames as the frame with the optimal grasping position. The semantic segmentation network structure of this paper is shown in Figure 4. The semantic segmentation branch works in three steps. First, the semantic segmentation module receives the output of the attention feature pyramid network (AFPN) as input. Second, it sends these features to Atrous Spatial Pyramid Pooling (ASPP) to obtain the outline information of the citrus fruit to be grasped []. Third, using this outline information, the frame with the highest confidence is selected for each fruit to be grasped from the series of grasp frames generated by Faster R-CNN as the initial grasp frame, and the rough angle estimation is completed.
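The selection rule described above can be sketched in a few lines. This is an illustrative simplification rather than the paper's implementation: it assumes "grasping position on the fruit" means that the frame center falls on a foreground pixel of the fruit mask, and the `GraspFrame` type, field names, and sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GraspFrame:
    cx: float          # center x in pixels
    cy: float          # center y in pixels
    angle_deg: float   # rough deflection angle of the frame
    confidence: float  # detector confidence


def select_grasp_frame(frames, fruit_mask):
    """Return the highest-confidence frame whose center lies on the fruit mask.

    fruit_mask is a row-major 2D list (mask[y][x]) of 0/1 values taken from the
    segmentation output for one fruit. Returns None if no frame qualifies.
    """
    best = None
    for frame in frames:
        x, y = int(round(frame.cx)), int(round(frame.cy))
        inside_image = 0 <= y < len(fruit_mask) and 0 <= x < len(fruit_mask[0])
        if not inside_image or not fruit_mask[y][x]:
            continue  # grasp position is off the fruit, so the frame is rejected
        if best is None or frame.confidence > best.confidence:
            best = frame
    return best


# Made-up 5x5 mask with the fruit occupying the central 3x3 block.
mask = [[1 if 1 <= x <= 3 and 1 <= y <= 3 else 0 for x in range(5)] for y in range(5)]
frames = [
    GraspFrame(cx=0.0, cy=0.0, angle_deg=10.0, confidence=0.95),  # off the fruit
    GraspFrame(cx=2.0, cy=2.0, angle_deg=30.0, confidence=0.80),
    GraspFrame(cx=3.0, cy=2.0, angle_deg=50.0, confidence=0.60),
]
best = select_grasp_frame(frames, mask)
print(best)
```

In this toy case the most confident frame is discarded because its center is on the background, which is exactly the failure mode that contour-aware selection is meant to avoid.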

- (4) Citrus fruit pose estimation module

Figure 4.

Mini DeepLabv3 network structure.

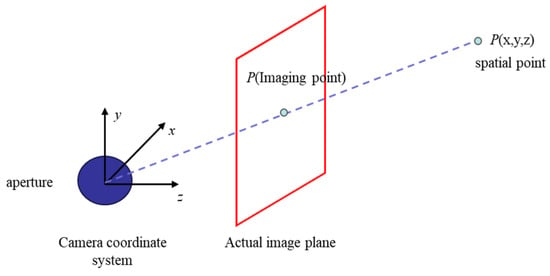

The spatial position and posture of a fruit can be determined from the three-dimensional bounding box of the citrus fruit, and obtaining this box requires the mapping between three-dimensional space and the two-dimensional image []. The relationship between actual three-dimensional space points and the imaging plane is usually calculated with the pinhole imaging principle. As shown in Figure 5, each pixel in the image is the point where the line through the aperture and the actual space point intersects the imaging plane, which maps the three-dimensional space point onto the two-dimensional image.

Figure 5.

Pinhole imaging model of citrus fruit in natural environment.

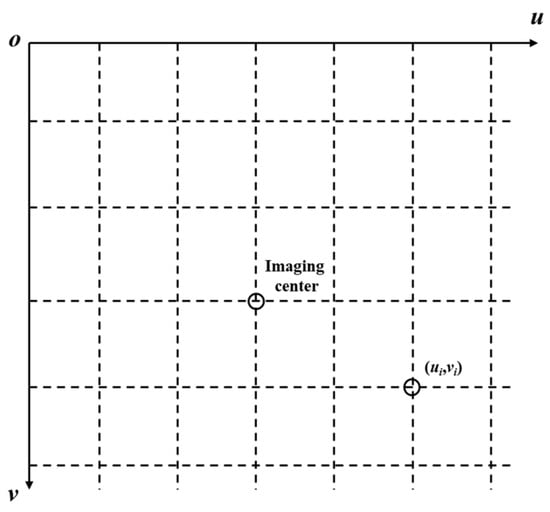

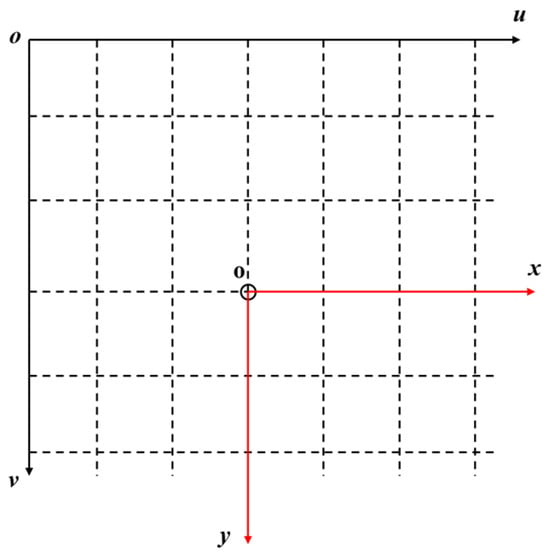

In practical applications, the image captured by the camera and the data read from it are expressed in pixels, usually on the uov plane of the pixel coordinate system, as shown in Figure 6. Pixel coordinates therefore need to be converted into image coordinates in the corresponding two-dimensional space; the image coordinate system is shown in Figure 7. Let the size of each pixel be dx and dy, and let the pixel corresponding to the origin o of the image coordinate system be (u0, v0). Given any pixel coordinate (u, v), its image coordinate (x, y) can then be calculated as follows:
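Under the definitions above, the standard pixel-to-image conversion takes the following form. This is a reconstruction from the stated symbols (dx, dy, u0, v0), assuming the image axes x and y are aligned with the pixel axes u and v:

```latex
\[
x = (u - u_0)\,dx, \qquad y = (v - v_0)\,dy
\]
```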

Figure 6.

Pixel coordinate system.

Figure 7.

Image coordinate system.

Furthermore, the spatial depth information makes it possible to convert the two-dimensional image coordinates into three-dimensional spatial coordinates. According to the spatial calculation model of the camera, combining it with Equation (1) gives the following:

where f is the focal length of the camera, (xp, yp, zp) are the three-dimensional space coordinates of point P, and zp is the depth of the actual space point P in the camera coordinate system, from which the space coordinates of the actual space point in the camera coordinate system can be calculated.

The focal length of the camera in the x and y axes is defined as fx and fy, respectively, and Equation (2) is simplified to obtain the relationship between pixel coordinates and spatial three-dimensional coordinates as follows:
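As a hedged sketch of this simplification: assuming the usual pinhole projection x = f·xp/zp, y = f·yp/zp and taking fx = f/dx and fy = f/dy (an assumption consistent with the symbol definitions, not a formula recovered from the original layout), the relationship between pixel coordinates and three-dimensional coordinates would read:

```latex
\[
u = f_x \frac{x_p}{z_p} + u_0, \qquad v = f_y \frac{y_p}{z_p} + v_0
\quad\Longleftrightarrow\quad
x_p = \frac{(u - u_0)\, z_p}{f_x}, \qquad y_p = \frac{(v - v_0)\, z_p}{f_y}
\]
```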

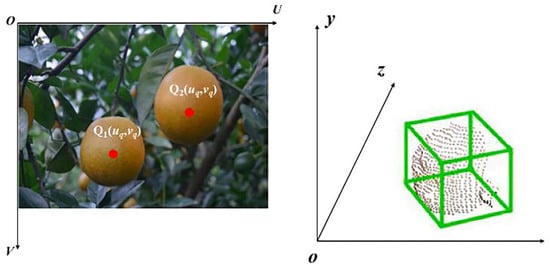

This realizes the one-to-one mapping between each pixel in the image and the actual three-dimensional space point. Based on the above principles, the three-dimensional bounding box of the citrus fruit is fitted, and the three-dimensional pose information of the citrus fruit is then obtained.
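The pixel-to-space mapping can be illustrated with a short sketch of the standard pinhole back-projection. The function names are hypothetical, and the intrinsic values used below (fx, fy, u0, v0) are made-up numbers for a 1280 × 720 image, not the calibration of the camera used in this study.

```python
def pixel_to_camera(u, v, depth, fx, fy, u0, v0):
    """Back-project pixel (u, v) with known depth into camera coordinates."""
    x = (u - u0) * depth / fx
    y = (v - v0) * depth / fy
    return x, y, depth


def camera_to_pixel(x, y, z, fx, fy, u0, v0):
    """Project a camera-frame point (x, y, z) with z > 0 back onto the pixel plane."""
    return fx * x / z + u0, fy * y / z + v0


# Hypothetical intrinsics, chosen only for illustration.
FX, FY, U0, V0 = 900.0, 900.0, 640.0, 360.0

# A pixel at the fruit centroid with a depth reading of 0.5 m (made-up values).
point = pixel_to_camera(730.0, 450.0, 0.5, FX, FY, U0, V0)
pixel = camera_to_pixel(*point, FX, FY, U0, V0)
print(point, pixel)
```

Projecting the recovered point back to the image returns the original pixel, which is a convenient sanity check when wiring a depth camera into this kind of pipeline.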

The position and attitude of the detected target can usually be determined by a 3D bounding box. P1–P8 are the eight vertices of the 3D bounding box of the citrus fruit, with coordinates (Xi, Yi, Zi) (i = 1, 2, …, 8). These coordinates can be obtained from the relative geometric position of Pi with respect to Qo; for example, the three-dimensional coordinates of point P1 are as follows:

3. Experimental Results and Analysis

3.1. Grab Data Set

In this paper, the network for grasp pose detection was trained and tested under the PyTorch deep learning framework, relying mainly on OpenCV 3.4.11, NumPy, Matplotlib, and so on. The proposed algorithm runs on the Ubuntu 20.04 operating system with an Intel Xeon E5700 V4@2.10 GHz CPU (Intel Corporation), 64 GB RAM, and an NVIDIA GeForce RTX 2080 GPU. The CUDA and cuDNN versions are 10.1 and 9.6.5, respectively. The mean intersection over union (mIoU) and mean pixel accuracy (mPA) were used as evaluation indexes for the performance of the citrus segmentation model, and the angle error between the estimated and actual growth pose was used as the evaluation index for citrus pose estimation.
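For readers unfamiliar with the two segmentation metrics, the sketch below computes mIoU and mPA from a pixel-level confusion matrix using their conventional definitions. How the paper aggregates the metrics (per class, per image, or both) is not specified, so this is only one plausible reading; the function name and the pixel counts are made up.

```python
def segmentation_metrics(confusion):
    """Compute (mIoU, mPA) from a square confusion matrix.

    confusion[i][j] is the number of pixels of true class i predicted as class j.
    """
    n = len(confusion)
    ious, accuracies = [], []
    for c in range(n):
        true_positive = confusion[c][c]
        true_total = sum(confusion[c])                        # pixels labeled c
        pred_total = sum(confusion[r][c] for r in range(n))   # pixels predicted c
        union = true_total + pred_total - true_positive
        if union > 0:
            ious.append(true_positive / union)
        if true_total > 0:
            accuracies.append(true_positive / true_total)
    return sum(ious) / len(ious), sum(accuracies) / len(accuracies)


# Made-up counts for three classes: background, fruit, branch.
confusion = [
    [90, 5, 5],
    [10, 80, 10],
    [0, 20, 80],
]
miou, mpa = segmentation_metrics(confusion)
print(round(miou, 4), round(mpa, 4))
```

IoU penalizes both missed pixels and false alarms for a class, whereas pixel accuracy only reflects how many of the labeled pixels were recovered, which is why the two metrics can rank models differently.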

3.2. Citrus Image Segmentation Experiment

Table 1 compares the AFPN model proposed in this paper with the MaskedFusion, FFB6D, PR-GCN, and PVN3D networks on the test set images. The proposed model performs significantly better than the other four network models in the detection and segmentation of citrus fruits and their attached growing branches. For fruit image segmentation, the mIoU is 89.96% and the mPA is 95.67%. For the attached growing branches, the mIoU is 78.62% and the mPA is 75.62%. Although several models segment citrus fruit images well, segmenting the branches to which the fruits are attached is difficult: PR-GCN and PVN3D are prone to mis-segmentation and loss of the image mask, and the contours of the citrus branches they produce are not smooth enough. The image segmentation results of AFPN are better than those of the other four models.

Table 1.

Comparison of Different Models for Sub-Image-Recognition Results.

3.3. Estimation of Citrus Fruit Growth Posture

First, the proposed algorithm was tested on the test set for citrus growth pose estimation to assess its stability. The pose estimation error is measured by the angle between the estimated growth pose and the actual (manually marked) growth pose: the smaller the angle, the smaller the error. The angle θi between the estimated growth attitude and the actual growth attitude is as follows:
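For reference, the angle between two vectors is conventionally computed from their normalized dot product. Writing the estimated attitude vector as \hat{\mathbf{v}}_i and the manually marked one as \mathbf{v}_i (symbol names assumed here for illustration, since the original notation is not shown), the definition would be:

```latex
\[
\theta_i = \arccos\!\left(
  \frac{\hat{\mathbf{v}}_i \cdot \mathbf{v}_i}
       {\lVert \hat{\mathbf{v}}_i \rVert \, \lVert \mathbf{v}_i \rVert}
\right)
\]
```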

where the two vectors compared are the estimated attitude of the citrus fruit and its actual growth posture. The growth attitude estimation results of some citrus fruits measured on site are shown in Figure 8. In the figure, the yellow dot is the fruit growth point, the red arrow indicates the attitude estimated by the algorithm, and the black arrow indicates the real attitude. The angle error of the algorithm is small, and the attitude estimation effect is good. Some attitude estimation failures also occurred in this study, as shown in Figure 9. The main reasons for large errors in attitude estimation are as follows: (1) When the citrus branches grow luxuriantly, the fruit-bearing branches are easily mis-segmented, which makes it difficult to determine the positional relationship between the fruit and its attached branches. (2) In this algorithm, the junction of the citrus fruit and its attached growing branch is defined as the fruit growth point and is taken as the starting point of the fruit pose estimation vector. Although this point is the point on the contour of the growing branch closest to the fruit centroid, it may not be the best starting point for the pose estimation vector.

Figure 8.

Examples of citrus 2D and 3D location information.

Figure 9.

Citrus pose estimation vector diagram.

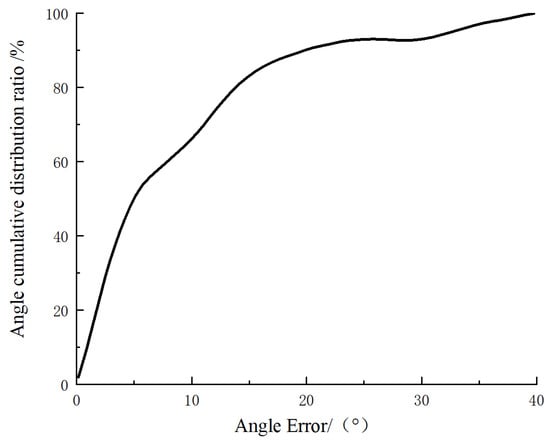

The cumulative distribution ratio of angle errors is shown in Figure 10. The proportion of angle errors within 10° is 66.6%, the proportion of angle errors within 20° is 90%, the average of all angle errors is 8.8°, and the standard deviation is 7.2°.
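Statistics of this kind can be reproduced from a list of per-fruit angle errors with a few lines of code. The sketch below is illustrative only: the function names are hypothetical, the sample errors are made up, and the standard deviation is computed as a population standard deviation, which the text does not specify.

```python
import math


def angle_between_deg(a, b):
    """Angle in degrees between two 3D vectors, via the normalized dot product."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cosine))


def summarize_errors(errors, thresholds=(10.0, 20.0)):
    """Return mean, population standard deviation, and share of errors within each threshold."""
    n = len(errors)
    mean = sum(errors) / n
    std = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    within = {t: sum(e <= t for e in errors) / n for t in thresholds}
    return mean, std, within


# Made-up angle errors in degrees, not data from the experiment.
sample_errors = [5.0, 15.0, 25.0, 5.0]
mean, std, within = summarize_errors(sample_errors)
print(mean, std, within)
```

The `within` dictionary corresponds to points on the cumulative distribution curve, such as the proportions reported at 10° and 20°.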

Figure 10.

Proportion of cumulative angular error distribution.

3.4. Field Picking Experiment

The harvesting experiment was carried out in the citrus orchard of the Changsha Academy of Agricultural Sciences in Hunan Province, where the branches of the fruit trees grew luxuriantly. The test took place from 1 PM to 6 PM, and the fruit trees used in the test were of the “Dafen No. 4” variety. The citrus-picking robot consists of a RealSense D455 depth camera, a robotic arm, an end effector, and a tracked mobile chassis, as shown in Figure 11.

Figure 11.

Field test environment of citrus picking robot.

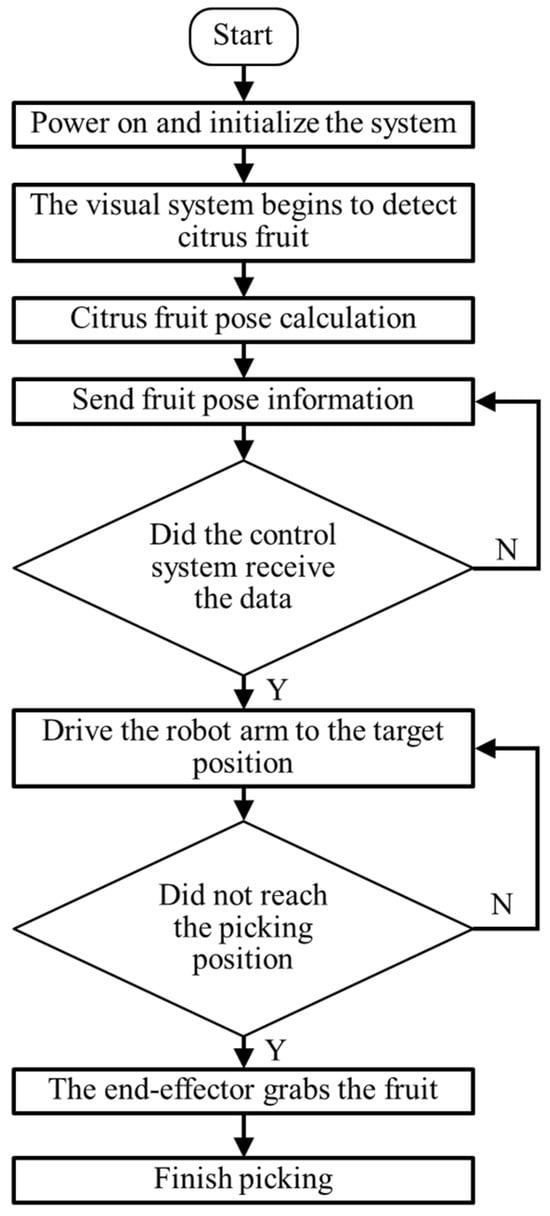

The workflow of the citrus-picking robot is shown in Figure 12. After the picking robot is powered on and initialized, it begins to move along the planting row in the citrus orchard. When a ripe red citrus fruit is detected within the field of view set for the robot camera, the robot stops moving, calculates the pose of the fruit, and sends the pose information to the robot control module. After path planning is completed, a control command is sent to move the robotic arm and pick the fruit. If the branches seriously block the fruit, picking of that fruit is not attempted for the time being, and obstacle-avoidance picking is left to further research. After the robotic arm reaches the target position according to the attitude information of the citrus fruit, the end effector grasps the fruit and rotates to pick it. Some fruit-picking failures also occurred in this experiment, mainly caused by the error of the attitude estimation angle and the instability of the mechanical claw. The picking test results are shown in Table 2: the recognition success rate of the citrus-picking robot in the natural field environment was 93.6%, and the average angle error of attitude estimation was 7.9°. Harvesting succeeded 1648 times, a success rate of 85.1%. The average picking time was 5.6 s, indicating that the robot could basically complete the intelligent picking operation. During the picking test, positioning failed mainly because some citrus fruits lay beyond the picking range of the end effector, while errors in the motion parameters of the robotic arm joints reduced the motion accuracy of the arm and led to picking failures.

Figure 12.

Robot flow chart.

Table 2.

Field performance test results of picking robots.

4. Conclusions

For the pose detection task of citrus-picking robots, a new pose estimation method based on semantic segmentation and rotated object detection was proposed. First, Faster R-CNN was used for grasp detection to identify candidate frames. Meanwhile, a semantic segmentation network was used to extract the contour information of citrus fruits. Then, the grasp frame with the highest confidence was selected for each target fruit using the semantic segmentation results, and a rough angle was estimated. Finally, field performance tests were carried out: the success rate of citrus fruit identification and positioning was 93.6%, the average attitude estimation angle error was 7.9°, and the success rate of picking was 85.1%. The average picking time was 5.6 s, indicating that the robot can effectively perform intelligent picking operations. Specific conclusions are as follows:

- (1) For the picking task of a citrus-picking robot, a monocular pose estimation method based on a semantic segmentation network and rotated object detection is proposed. In the upstream network comparison experiment on the data set we constructed, the accuracy of the grasp detection of the proposed network reached 95.67%.

- (2) The citrus-picking robot developed on the basis of the algorithm in this paper can accurately reach the target position and pick according to the control instructions. The success rate of picking in the natural environment of the orchard was 85.1%, and the average picking time was 5.6 s, which could meet the requirements for the mechanical picking of citrus.

- (3) Judging from the failures in the grasping experiment, the influence of the centroid of the citrus fruit and the friction between the end effector and the fruit should be considered in further picking and detection research; this is the focus of future improvement of the citrus fruit positioning and grasping algorithm.

Author Contributions

Software, X.X. and Y.W.; Validation, Y.J.; Formal analysis, H.W.; Investigation, B.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research is funded by Hunan Intelligent Agricultural Machinery Equipment Innovation Research and Development Project (Z2023260002414).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Kok, E.; Chen, C. Occluded apples orientation estimator based on deep learning model for robotic harvesting. Comput. Electron. Agric. 2024, 219, 108781.

- Chen, J.; Ma, A.; Huang, L.; Li, H.; Zhang, H.; Huang, Y.; Zhu, T. Efficient and lightweight grape and picking point synchronous detection model based on key point detection. Comput. Electron. Agric. 2024, 217, 108612.

- Zhang, J.; Xie, J.; Zhang, F.; Gao, J.; Yang, C.; Song, C.; Rao, W.; Zhang, Y. Greenhouse tomato detection and pose classification algorithm based on improved YOLOv5. Comput. Electron. Agric. 2024, 216, 108519.

- Zhang, K.; Chu, P.; Lammers, K.; Li, Z.; Lu, R. Active Laser-Camera Scanning for High-Precision Fruit Localization in Robotic Harvesting: System Design and Calibration. Horticulturae 2023, 10, 40.

- Ju, C.; Kim, J.; Seol, J.; Son, H.I. A review on multirobot systems in agriculture. Comput. Electron. Agric. 2022, 202, 107–136.

- Visentin, F.; Castellini, F.; Muradore, R. A soft, sensorized gripper for delicate harvesting of small fruits. Comput. Electron. Agric. 2023, 213, 108202.

- Li, Y.; Liao, J.; Wang, J.; Luo, Y.; Lan, Y. Prototype Network for Predicting Occluded Picking Position Based on Lychee Phenotypic Features. Agronomy 2023, 13, 2435.

- Sun, Q.; Zhong, M.; Chai, X.; Zeng, Z.; Yin, H.; Zhou, G.; Sun, T. Citrus pose estimation from an RGB image for automated harvesting. Comput. Electron. Agric. 2023, 211, 108022.

- Kim, T.; Lee, D.-H.; Kim, K.-C.; Kim, Y.-J. 2D pose estimation of multiple tomato fruit-bearing systems for robotic harvesting. Comput. Electron. Agric. 2023, 211, 108004.

- He, Z.; Wu, K.; Wang, F.; Jin, L.; Zhang, R.; Tian, S.; Wu, W.; He, Y.; Huang, R.; Yuan, L.; et al. Fresh Yield Estimation of Spring Tea via Spectral Differences in UAV Hyperspectral Images from Unpicked and Picked Canopies. Remote Sens. 2023, 15, 1100.

- Shi, Y.; Jin, S.; Zhao, Y.; Huo, Y.; Liu, L.; Cui, Y. Lightweight force-sensing tomato picking robotic arm with a “global-local” visual servo. Comput. Electron. Agric. 2023, 204, 107549.

- Jana, S.; Basak, S.; Parekh, R. Automatic fruit recognition from natural images using color and texture features. In 2017 Devices for Integrated Circuit (DevIC); IEEE: Piscataway, NJ, USA, 2017; pp. 620–624.

- Mai, X.; Zhang, H.; Jia, X.; Meng, M.Q.-H. Faster R-CNN with classifier fusion for automatic detection of small fruits. IEEE Trans. Autom. Sci. Eng. 2020, 17, 1555–1569.

- Wan, S.; Goudos, S. Faster R-CNN for multi-class fruit detection using a robotic vision system. Comput. Netw. 2020, 168, 107–126.

- Wang, Y.; He, Z.; Cao, D.; Ma, L.; Li, K.; Jia, L.; Cui, Y. Coverage path planning for kiwifruit picking robots based on deep reinforcement learning. Comput. Electron. Agric. 2023, 205, 107593.

- Xiao, X.; Huang, J.; Li, M.; Xu, Y.; Zhang, H.; Wen, C.; Dai, S. Fast recognition method for citrus under complex environments based on improved YOLOv3. J. Eng. 2022, 2022, 148–159.

- Bac, C.W.; Roorda, T.; Reshef, R.; Berman, S.; Hemming, J.; van Henten, E.J. Analysis of a motion planning problem for sweet-pepper harvesting in a dense obstacle environment. Biosyst. Eng. 2016, 146, 85–97.

- Wang, L.; Wang, Z.; Liu, M.; Ying, Z.; Xu, N.; Meng, Q. Full coverage path planning methods of harvesting robot with multi-objective constraints. J. Intell. Robot. Syst. 2022, 106, 17.

- Fang, Z.; Liang, X. Intelligent obstacle avoidance path planning method for picking manipulator combined with artificial potential field method. Ind. Robot: Int. J. Robot. Res. Appl. 2022, 49, 835–850.

- Yang, H.; Chen, L.; Ma, Z.; Chen, M.; Zhong, Y.; Deng, F.; Li, M. Computer vision-based high-quality tea automatic plucking robot using Delta parallel manipulator. Comput. Electron. Agric. 2021, 181, 105946.

- Ye, L.; Duan, J.; Yang, Z.; Zou, X.; Chen, M.; Zhang, S. Collision-free motion planning for the litchi-picking robot. Comput. Electron. Agric. 2021, 185, 106–151.

- Budijati, S.M.; Iskandar, B.P. Dynamic programming to solve picking schedule at the tea plantation. Int. J. Eng. Technol. 2018, 7, 285–290.

- Li, H.; Qi, C.; Gao, F.; Chen, X.; Zhao, Y.; Chen, Z. Mechanism design and workspace analysis of a hexapod robot. Mech. Mach. Theory 2022, 174, 104917.

- Cao, X.; Zou, X.; Jia, C.; Chen, M.; Zeng, Z. RRT-based path planning for an intelligent litchi-picking manipulator. Comput. Electron. Agric. 2019, 156, 105–118.

- Feng, Q.; Wang, G.; Wang, S.; Wang, X. Tomato’s mechanical properties measurement aiming for auto-harvesting. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2019; Volume 585, p. 012120.

- Zhang, Y.; Chen, Y.; Song, Y.; Zhang, R.; Wang, X. Finding the lowest damage picking mode for tomatoes based on finite element analysis. Comput. Electron. Agric. 2023, 204, 107536.

- Li, Z.; Yuan, X.; Wang, C. A review on structural development and recognition–localization methods for end-effector of fruit–vegetable picking robots. Int. J. Adv. Robot. Syst. 2022, 19, 17–30.

- Chen, S.; Xiong, J.; Jiao, J.; Xie, Z.; Huo, Z.; Hu, W. Citrus fruits maturity detection in natural environments based on convolutional neural networks and visual saliency map. Precis. Agric. 2022, 23, 1515–1531.

- Wang, L.; Zhao, B.; Fan, J.; Hu, X.; Wei, S.; Li, Y.; Qian, Z.; Wei, C. Development of a tomato harvesting robot used in greenhouse. Int. J. Agric. Biol. Eng. 2017, 10, 140–149.

- Xu, L.; Wang, Y.; Shi, X.; Tang, Z.; Chen, X.; Wang, Y.; Zou, Z.; Huang, P.; Liu, B.; Yang, N.; et al. Real-time and accurate detection of citrus in complex scenes based on HPL-YOLOv4. Comput. Electron. Agric. 2023, 205, 107590.

- Li, Y.; He, L.; Jia, J.; Lv, J.; Chen, J.; Qiao, X.; Wu, C. In-field tea shoot detection and 3D localization using an RGB-D camera. Comput. Electron. Agric. 2021, 185, 106149.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).