Abstract

Background: Computer-guided static implant surgery (CGSIS) is widely adopted to enhance the precision of dental implant placement. However, substantial heterogeneity in reported accuracy values complicates evidence-based clinical decision-making. This variance is likely attributable to a fundamental lack of standardization in the methodologies used to assess dimensional accuracy. Objective: This scoping review aimed to systematically map, synthesize, and analyze the clinical methodologies used to quantify the dimensional accuracy of CGSIS. Methods: The review was conducted in accordance with the PRISMA-ScR guidelines. A systematic search of PubMed/MEDLINE, Scopus, and Embase was performed from inception to October 2025. Clinical studies quantitatively comparing planned versus achieved implant positions in human patients were included. Data were charted on study design, guide support type, data acquisition methods, reference systems for superimposition, measurement software, and accuracy metrics. Results: The analysis of 21 included studies revealed extensive methodological heterogeneity. Two distinct reference systems predominated: post-operative CBCT (n = 12) and intraoral scanning with scan bodies (n = 6). A variety of proprietary and third-party software packages (e.g., coDiagnostiX, Geomagic, Mimics) were employed for superimposition, using different alignment algorithms. This heterogeneity in measurement approach was mirrored in widely varying reported values for the core accuracy metrics: global coronal deviation (range of reported means: 0.55–1.70 mm), global apical deviation (0.76–2.50 mm), and angular deviation (2.11–7.14°). The definitions of these metrics were also inconsistent, and they were summarized with different statistics (means with standard deviations or medians with interquartile ranges).
Conclusions: The comparability and synthesis of evidence on CGSIS accuracy are significantly limited by non-standardized measurement approaches. The reported ranges of deviation values largely reflect this methodological heterogeneity, together with differences in clinical conditions, and should not be read as a comparison of implant system performance. Our findings highlight an urgent need for a consensus-based minimum reporting standard for future clinical research in this field to ensure reliable and translatable evidence.

1. Introduction

1.1. Background and Rationale

The accurate three-dimensional positioning of dental implants is a crucial factor for long-term success, affecting functional, aesthetic, and biological outcomes [1,2,3]. Deviations from the intended position can lead to clinical complications, ranging from prosthetic and soft-tissue problems to serious outcomes when critical anatomical structures are involved [4,5,6,7]. Computer-guided static implant surgery (CGSIS) has been widely adopted to make implant placement more predictable. This technology transfers a virtual surgical plan to the operating field by means of a static surgical template, which is reported to improve accuracy and simplify the procedure [8]. However, the evidence base for CGSIS accuracy exhibits considerable heterogeneity, complicating clinical interpretation and comparison. Reported accuracy metrics, including global and angular deviation, vary substantially across studies [9,10,11]. Clinical variables contribute to this spread, but a major reason is the wide range of methods used to measure and report accuracy [12,13,14]. These include differences in the reference systems used to record the achieved implant position, the methods used to superimpose planned and achieved data, and the terminology and statistical reporting of key metrics. This methodological inconsistency represents a significant knowledge gap that obscures the actual efficacy of guided surgery systems. The main goals of this scoping review are to:

- Systematically outline the methodologies utilized to assess the accuracy of CGSIS in the literature.

- Synthesize the different reference systems and metrics used to report dimensional deviations.

- Examine how different methodological choices may influence reported accuracy values.

- Identify significant gaps and sources of diversity to facilitate future research.

1.2. Review Question and Objectives

Because the aim was to map the methodological landscape, identify key concepts, and examine the extent, range, and nature of methodological approaches, rather than to aggregate and appraise their quantitative results, a scoping review was selected as the most appropriate methodology. This approach is explicitly designed to clarify complex and heterogeneous fields in which varied study designs and methods are used, and in which a systematic review aimed at meta-analysis would be precluded by the very inconsistency it seeks to describe. The primary research question guiding this scoping review was: What methodologies are used in the clinical literature to assess the dimensional accuracy of computer-guided static implant surgery?

To address this question, the following specific objectives were formulated:

- To systematically map and categorize the clinical study designs employed in this domain, including randomized controlled trials, prospective cohorts, and retrospective analyses.

- To identify, classify, and compare the reference systems (e.g., CBCT, IOS) and measurement technologies used to capture the achieved implant position and perform the planned-to-achieved superimposition.

- To synthesize the definitions, terminology, and statistical reporting of core accuracy metrics, such as linear deviations (global, lateral, vertical) and angular deviation.

- To identify critical gaps and inconsistencies in the current methodological approaches and, based on the synthesized evidence, propose foundational elements for a standardized reporting framework to enhance the reliability and comparability of future clinical research on CGSIS accuracy.

By achieving these objectives, this review will provide a comprehensive map of the current methodological landscape, elucidate the sources of inconsistency that pervade the literature, and propose a pathway toward a more standardized, transparent, and clinically meaningful evidence base for this highly important technology.

2. Methods

2.1. Study Design

This scoping review was conducted in accordance with the Preferred Reporting Items for Systematic reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) guidelines [15]. The objective was to systematically map the literature concerning the methodologies used to assess the dimensional accuracy of computer-guided static implant surgery (CGSIS). The completed PRISMA-ScR checklist is provided in Supplementary File S1. A review protocol was not formally registered.

2.2. Eligibility Criteria

The inclusion and exclusion criteria were established a priori using the Population, Concept, and Context (PCC) framework, as recommended by the Joanna Briggs Institute (JBI) for scoping reviews [16]. The specific criteria are detailed in Table 1.

Table 1.

Study Inclusion and Exclusion Criteria.

2.3. Search Strategy

A systematic literature search was performed across three major electronic bibliographic databases from their inception until October 2025: PubMed/MEDLINE, Scopus, and Embase (via Ovid). These platforms were selected for their comprehensive coverage of the biomedical and dental literature. The search strategy employed a combination of controlled vocabulary (e.g., MeSH terms) and free-text keywords related to the core concepts of “computer-guided surgery”, “dental implants”, and “accuracy”. The full search syntax for each database is available in Supplementary File S1.

2.4. Study Selection Process

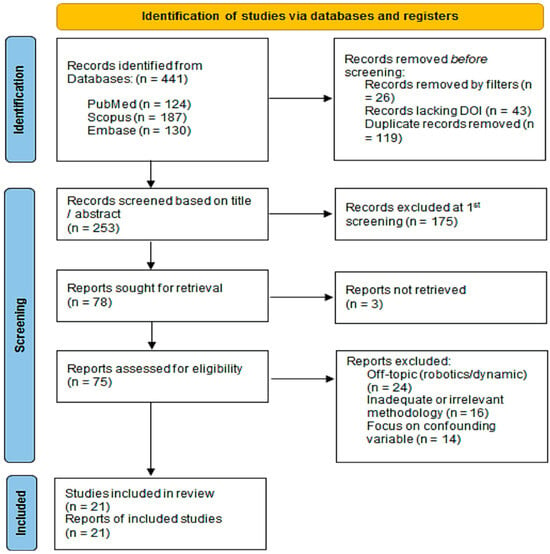

The study selection process, illustrated in the PRISMA flow diagram (see Section 3), was conducted in two sequential phases by two independent reviewers. All identified records were collated and de-duplicated using reference management software (Zotero 6) and Microsoft Excel 365/2021. Inter-rater agreement for the full-text screening phase was calculated, showing a high level of consistency (Cohen’s kappa = 0.87).
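For readers less familiar with the agreement statistic, Cohen's kappa corrects the observed proportion of agreement p_o for the agreement p_e expected by chance from each reviewer's marginal frequencies: κ = (p_o − p_e)/(1 − p_e). The Python sketch below implements this standard two-rater formula; the include/exclude decisions in the usage example are hypothetical and are not the review's screening data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters' categorical decisions on the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items on which both raters chose the same category
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over categories
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical full-text decisions for 10 records (reviewers disagree on the last one)
reviewer_1 = ["include"] * 4 + ["exclude"] * 6
reviewer_2 = ["include"] * 4 + ["exclude"] * 5 + ["include"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 2))  # 0.8
```

Here p_o = 0.9 and p_e = 0.5, so raw agreement of 90% corresponds to a kappa of 0.8 once chance agreement is removed.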

In the first phase, the titles and abstracts of 253 records were screened against the PCC criteria. The 175 records excluded at this stage mainly focused on interventions outside the scope of this review, such as dynamic navigation, robotic surgery, or freehand placement.

In the second phase, the full texts of the 78 potentially relevant studies were sought for retrieval; three could not be retrieved. The remaining 75 studies underwent full-text review to confirm eligibility against the predetermined criteria. Studies were excluded if they did not primarily evaluate CGSIS accuracy, lacked sufficient methodological detail on the measurement procedure, or belonged to an ineligible publication category (e.g., review articles). This process resulted in the inclusion of 21 studies that provided clinically based data on how dimensional accuracy is measured. Disagreements between the reviewers were resolved through discussion until consensus was reached.
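The screening counts above can be checked for internal consistency with simple arithmetic. The sketch below derives each intermediate PRISMA stage from the figures given in the text (441 identified, 175 excluded at title/abstract screening, 3 not retrieved, 21 included), assuming that 253 is the number of records screened, since 253 − 175 = 78 matches the number of full texts sought. The first derived value, 188 records removed before screening, is only an inferred difference: the text does not state how many records were removed as duplicates or for other reasons.

```python
def prisma_flow(identified, screened, excluded_at_screening, not_retrieved, included):
    """Derive intermediate PRISMA-style stage counts and reject impossible (negative) values."""
    counts = {
        "removed_before_screening": identified - screened,  # inferred, not reported
        "sought_for_retrieval": screened - excluded_at_screening,
    }
    counts["assessed_full_text"] = counts["sought_for_retrieval"] - not_retrieved
    counts["excluded_full_text"] = counts["assessed_full_text"] - included
    if any(value < 0 for value in counts.values()):
        raise ValueError(f"inconsistent flow counts: {counts}")
    return counts


flow = prisma_flow(identified=441, screened=253, excluded_at_screening=175,
                   not_retrieved=3, included=21)
print(flow)
# {'removed_before_screening': 188, 'sought_for_retrieval': 78,
#  'assessed_full_text': 75, 'excluded_full_text': 54}
```

The derived values of 78 full texts sought and 75 assessed agree with the counts stated in the text, which implies that 54 full-text articles were excluded.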

2.5. Data Charting Process

A standardized data charting form created in Microsoft Excel was used to systematically extract data from the 21 included studies. During the pilot phase, definitions and categories (e.g., “CBCT-to-CBCT”, “STL-to-STL via scan bodies”) were established for complex methodological items (e.g., “reference system for superimposition”) to ensure consistent extraction. The form was piloted on five randomly selected studies and refined iteratively for clarity and consistency. The two reviewers extracted the data independently, then compared their results and resolved any discrepancies.

The extracted data items were chosen to comprehensively address the review’s objective and included:

- Bibliographic and General Study Information: Author, year, study design, and sample size (patients, implants, guides).

- Surgical Protocol: Guide support type and planning software used.

- Core Assessment Methodology: This was the focus of extraction, detailing the data acquisition method for the achieved implant position (e.g., post-operative CBCT, intraoral scan), the reference system for superimposition (e.g., planned STL vs. post-op STL), the specific software used for measurement, and the key accuracy metrics reported (e.g., linear and angular deviations).
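Because the two reviewers extracted data independently and then reconciled their differences, the comparison step lends itself to a simple field-by-field check. The sketch below is hypothetical: the `ChartingRecord` fields only loosely mirror the data items listed above, and the study identifier and values are invented for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class ChartingRecord:
    """One row of a hypothetical charting form; field names are illustrative only."""
    study_id: str
    design: str             # e.g., "RCT", "prospective", "retrospective"
    guide_support: str      # e.g., "tooth", "mucosa", "bone"
    acquisition: str        # e.g., "post-op CBCT", "IOS + scan body"
    reference_system: str   # e.g., "CBCT-to-CBCT", "STL-to-STL via scan bodies"
    software: str           # name and version, as reported
    summary_statistic: str  # e.g., "mean ± SD", "median (IQR)"


def discrepancies(a: ChartingRecord, b: ChartingRecord) -> list[str]:
    """Names of the fields on which two independent extractions of one study disagree."""
    if a.study_id != b.study_id:
        raise ValueError("records refer to different studies")
    return [f.name for f in fields(ChartingRecord) if getattr(a, f.name) != getattr(b, f.name)]


r1 = ChartingRecord("S01", "RCT", "tooth", "IOS + scan body",
                    "STL-to-STL via scan bodies", "coDiagnostiX", "mean ± SD")
r2 = ChartingRecord("S01", "RCT", "tooth", "IOS + scan body",
                    "STL-to-STL via scan bodies", "coDiagnostiX", "median (IQR)")
print(discrepancies(r1, r2))  # ['summary_statistic']
```

Listing only the disagreeing fields keeps the reconciliation discussion focused on the items that actually need consensus.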

2.6. Critical Appraisal of Individual Sources

Consistent with the objective and methodological framework of a scoping review, which seeks to map the primary concepts, evidence types, and methodologies within a field, no formal critical appraisal of individual sources for risk of bias was performed. This approach aligns with established practice for scoping reviews. The JBI manual for scoping reviews, for instance, states that critical appraisal is generally not required, because the aim is to provide an overview of the available evidence. The PRISMA extension for scoping reviews (PRISMA-ScR) likewise does not mandate a critical appraisal step; instead, it focuses on charting and systematically presenting the characteristics of the evidence. The primary objective of this review was to identify and describe the methodological approaches employed in the literature, not to assess the strength or validity of individual findings. The analysis therefore focused on defining the characteristics, scope, and distribution of the assessment methodologies used in the included studies. The resulting overview of how researchers in the field conduct accuracy assessments can inform future primary research and more focused systematic reviews that include formal risk-of-bias assessments.

3. Results

3.1. Study Selection

Following the PRISMA-ScR guidelines, the database search identified 441 records. The subsequent screening and selection process is illustrated in Figure 1.

Figure 1.

Flow diagram of the study selection process according to PRISMA-ScR guidelines.

3.2. Characteristics of Included Studies

The 21 included studies, summarized in Table 2, were published between 2010 and 2025, reflecting the evolving nature of this field.

Table 2.

Characteristics of Included Studies.

The sample sizes varied considerably, ranging from 5 to 59 patients, 20 to 311 implants, and 5 to 60 surgical guides. A diversity of study designs was employed: 8 were randomized controlled trials (RCTs), 6 were prospective studies, and 7 were retrospective in design. The guide support types investigated were predominantly tooth-supported (n = 12 studies) and mucosa-supported (n = 13 studies), with four studies also including bone-supported guides; several studies therefore evaluated more than one support type. A wide array of planning software was used, with coDiagnostiX (Dental Wings) and the SimPlant/Simplant Pro suite (Materialise) being the most common.

The various study designs (RCTs, prospective and retrospective cohorts) differ in their degree of methodological control, which may influence the robustness of accuracy estimates. However, consistent with the scoping design, these designs were not formally appraised in this review.

3.3. Mapping of Methodological Approaches

3.3.1. Reference Systems for Data Acquisition and Superimposition

The methods used to capture the achieved implant position and superimpose it on the planned data fell into two fundamentally different approaches: CBCT-based and digital impression (IOS/STL)-based reference systems. Table 3 details this dichotomy together with the diversity of software used for analysis.

Table 3.

Methodologies for Accuracy Assessment.

The most common approach, used in 12 studies (57%), captured the 3D position of the placed implants with post-operative CBCT [18,20,23,24,25,26,29,30,31,35,36]. In these studies, the reference system typically consisted of superimposing the pre-operative CBCT (with the integrated virtual plan) onto the post-operative CBCT. Alignment was predominantly achieved by surface-based registration of stable anatomical structures (e.g., bone, teeth) with an Iterative Closest Point (ICP) algorithm, which performs a “point-to-point registration” between the two 3D datasets, as in studies using Mimics (version 27.0) or coDiagnostiX (version 9.12).
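To make the registration step concrete, the following is a minimal textbook sketch of point-to-point ICP in Python with NumPy. At each iteration every moving point is paired with its closest fixed point, and the least-squares rigid transform for those pairs is found with the SVD-based Kabsch method. It is not the implementation used by Mimics or coDiagnostiX, whose algorithms are not described in the included studies; clinical tools typically add an initial (often manual) pre-alignment, selection of the regions treated as stable, and outlier handling. The point cloud and the 1° / 0.2 mm misalignment in the usage example are synthetic.

```python
import numpy as np


def best_fit_rigid(src, dst):
    """Least-squares R, t such that R @ src[i] + t ≈ dst[i] for paired points (Kabsch/SVD)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)      # 3x3 cross-covariance of the centered pairs
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))   # d = -1 would mean a reflection; correct it
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c


def icp(moving, fixed, max_iter=50, tol=1e-12):
    """Point-to-point ICP aligning `moving` (N x 3) to `fixed` (M x 3); returns R, t, RMS."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = moving.copy()
    prev_rms = np.inf
    for _ in range(max_iter):
        # 1) Correspondences: nearest fixed point for each moving point (brute force)
        d2 = ((current[:, None, :] - fixed[None, :, :]) ** 2).sum(axis=2)
        matched = fixed[d2.argmin(axis=1)]
        # 2) Best rigid transform for these pairs; apply it and accumulate the total
        R, t = best_fit_rigid(current, matched)
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
        # 3) Stop once the RMS residual no longer improves
        rms = float(np.sqrt(((current - matched) ** 2).sum(axis=1).mean()))
        if prev_rms - rms < tol:
            break
        prev_rms = rms
    return R_total, t_total, rms


# Synthetic example: known small misalignment of a random point cloud (mm)
rng = np.random.default_rng(0)
fixed = rng.uniform(-10.0, 10.0, size=(100, 3))
theta = np.deg2rad(1.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.2, -0.1, 0.1])
moving = (fixed - t_true) @ R_true  # rows are R_true.T @ (p - t_true): the inverse transform
R_est, t_est, rms = icp(moving, fixed)
print(np.allclose(R_est, R_true, atol=1e-6), np.allclose(t_est, t_true, atol=1e-6))
```

Because ICP converges only to the nearest local optimum, its result depends on the starting alignment and on which surfaces are selected as stable. This is one way in which the choice of registration protocol can influence reported deviations.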

The second major approach, employed in 6 studies (29%), used digital surface data to determine the implant position [17,18,19,21,27,28]. An intraoral scanner (IOS; TRIOS, 3Shape, Copenhagen, Denmark) or a laboratory scanner captured the position of a scan body connected to the implant. The reference system was the superimposition of the STL file from the planning software (containing the virtual scan body) onto the STL file from the post-operative scan, relying on best-fit alignment of either the scan bodies themselves or the surrounding dentition/palate.

Three studies utilized alternative or hybrid methods. Schwindling et al. (2021) used a laboratory scan of a stone cast, while Cassetta et al. (2014) employed a sophisticated protocol to decompose total error into random and systematic components [22,32]. Sarhan et al. (2021) was unique in using fiducial markers embedded in a radiographic guide for CBCT-to-CBCT alignment [23].
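The idea of separating total error into systematic and random parts can be illustrated with a standard identity: for signed deviations along one axis, the mean squared error equals the squared mean (the bias, or systematic part) plus the population variance (the random part). The sketch below is a generic illustration under that assumption. It does not reproduce the protocol of Cassetta et al., and the deviation values are hypothetical.

```python
import math
from statistics import fmean, pvariance


def decompose_error(deviations):
    """Split signed deviations along one axis (mm) into systematic and random components.

    Relies on the identity: mean squared error = bias**2 + population variance.
    """
    bias = fmean(deviations)                               # systematic: mean signed error
    random_sd = math.sqrt(pvariance(deviations, mu=bias))  # random: spread about the bias
    mse = fmean(d * d for d in deviations)                 # total mean squared error
    return bias, random_sd, mse


# Hypothetical signed bucco-lingual deviations at the implant platform (mm)
bias, random_sd, mse = decompose_error([0.2, 0.4, 0.6, 0.8])
print(round(bias, 3), round(random_sd, 3), round(mse, 3))  # 0.5 0.224 0.3
```

In this example most of the total error is systematic (0.25 of 0.3 mm²), which would point to a consistent offset rather than scatter.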

3.3.2. Software and Analytical Techniques

A diverse ecosystem of software was employed for the core tasks of superimposition and measurement, falling into three categories:

- Proprietary Dental Planning Software: Used in 8 studies, these tools (e.g., coDiagnostiX, BlueSky Plan) offered integrated “treatment evaluation” modules, streamlining the workflow but potentially operating as a “black box” with undisclosed algorithms [19,20,23,25,26,29,35,36].

- Third-party Engineering and Inspection Software: Employed in 8 studies, packages like Geomagic (Wrap, Control X, DesignX), Mimics, and MeshLab provided high flexibility and transparency in the alignment process but required advanced technical expertise [17,18,22,24,27,28,32,37].

- Hybrid or Custom Approaches: Five studies used combinations of the above or custom algorithms, highlighting the lack of a turn-key solution [21,30,31,32,33].

3.3.3. Accuracy Metrics and Statistical Reporting

In synthesizing the data from the included studies, this review uses the term “coronal deviation” to refer to linear error measured at the implant platform (also referred to in the literature as the entry point, shoulder, or cervix). “Apical deviation” refers to linear error measured at the implant apex.
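Under these definitions, the three core metrics follow directly from the planned and achieved platform and apex coordinates once both are expressed in a common coordinate system after superimposition. The Python sketch below assumes exactly that (coordinates in mm, already registered). It computes the Euclidean distances at the platform and apex and the angle between the planned and achieved long axes; the coordinates in the example are hypothetical. Individual studies and software packages may define these points or axes differently, for example when the placed implant's length differs from the planned one.

```python
import math


def core_deviations(plan_platform, plan_apex, real_platform, real_apex):
    """Global coronal (mm), global apical (mm), and angular (deg) deviation for one implant."""
    coronal = math.dist(real_platform, plan_platform)       # 3D distance at the platform
    apical = math.dist(real_apex, plan_apex)                 # 3D distance at the apex
    u = [a - p for a, p in zip(plan_apex, plan_platform)]    # planned long axis
    v = [a - p for a, p in zip(real_apex, real_platform)]    # achieved long axis
    cos_angle = sum(x * y for x, y in zip(u, v)) / (math.hypot(*u) * math.hypot(*v))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain
    angular = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    return coronal, apical, angular


# Hypothetical 10 mm implant planned along -z, placed slightly shifted and tilted
print(core_deviations((0.0, 0.0, 0.0), (0.0, 0.0, -10.0),
                      (0.3, 0.4, 0.0), (1.3, 0.4, -10.0)))
# approximately (0.5, 1.36, 5.71)
```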

The synthesis of accuracy outcomes is presented here not for direct cross-study comparison, which the methodological heterogeneity precludes, but to document the consequences of this heterogeneity for reported results and to catalog the diversity in metric definitions and statistical reporting (Table 4). The data illustrate the wide range of reported accuracy values and the heterogeneity in statistical summaries (means vs. medians), which complicates cross-study comparison.

Table 4.

Reported Accuracy Outcomes.

The three core metrics were nearly universally reported:

- Global Coronal Deviation: Reported in all 21 studies, with mean values ranging from 0.55 mm to 1.70 mm [19,28].

- Global Apical Deviation: Also reported in all studies, with mean values ranging from 0.76 mm to 2.50 mm [33,34].

- Angular Deviation: Reported in 20 studies, with central values ranging from 2.11° (mean) to 11.11° (median) [23,35].

Beyond these core metrics, studies frequently decomposed deviations into directional components (e.g., mesio-distal, bucco-lingual, vertical/depth), with 12 studies reporting such data [19,20,24,25,26,29,30,32,33,34,36,37]. This practice provides more clinically actionable feedback but further adds to the complexity of cross-study comparison.
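One common way to obtain directional components, assumed here for illustration, is to project a deviation vector onto the planned implant axis. The projection gives a vertical (depth) component, and the remainder gives a lateral component. Splitting the lateral part further into mesio-distal and bucco-lingual directions would additionally require an arch-based coordinate frame, which this hypothetical sketch does not model. The sign convention (positive means deeper than planned) is also an assumption.

```python
import math


def split_deviation(plan_point, real_point, plan_platform, plan_apex):
    """Vertical (along planned axis) and lateral components of a 3D deviation, in mm."""
    axis = [a - p for a, p in zip(plan_apex, plan_platform)]
    length = math.hypot(*axis)
    unit = [c / length for c in axis]                    # unit vector, platform -> apex
    dev = [r - p for r, p in zip(real_point, plan_point)]
    vertical = sum(d * u for d, u in zip(dev, unit))     # > 0 means deeper than planned
    lateral = math.hypot(*(d - vertical * u for d, u in zip(dev, unit)))
    return vertical, lateral


# Hypothetical platform deviation: 0.5 mm lateral shift and 0.2 mm too deep
print(split_deviation((0.0, 0.0, 0.0), (0.3, 0.4, -0.2), (0.0, 0.0, 0.0), (0.0, 0.0, -10.0)))
# approximately (0.2, 0.5)
```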

A critical source of heterogeneity was the choice of descriptive statistics. The majority of studies (n = 15) reported data as Mean ± Standard Deviation. However, a substantial number (n = 6), particularly those with smaller sample sizes or investigating non-normal distributions, reported results as Median with Interquartile Range (IQR) or Quartiles (Q1, Q3) [19,23,26,29,30,32]. This fundamental difference in data summarization presents a major challenge for any meta-analytic synthesis.
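Reporting both summaries, as the framework in Section 4.3.1 proposes, is straightforward. The sketch below uses Python's standard `statistics` module on hypothetical, right-skewed deviation values; with one outlying implant, the mean is pulled well above the median. Quartile conventions also differ between software packages: `method="inclusive"` is assumed here, and the module's default `"exclusive"` method would give a different Q1 (0.6 instead of 0.7) for the same data.

```python
import statistics


def summarize(values):
    """Both common summaries of one accuracy metric: mean ± SD and median (Q1, Q3)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.fmean(values),
        "sd": statistics.stdev(values),  # sample SD (n - 1 denominator)
        "median": statistics.median(values),
        "q1": q1,
        "q3": q3,
        "iqr": q3 - q1,
    }


# Hypothetical global apical deviations (mm) for five implants, one outlier
summary = summarize([0.5, 0.7, 0.9, 1.1, 3.0])
print({k: round(v, 2) for k, v in summary.items()})
# {'mean': 1.24, 'sd': 1.01, 'median': 0.9, 'q1': 0.7, 'q3': 1.1, 'iqr': 0.4}
```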

In summary, the results paint a picture of a research field utilizing robust but highly disparate methodological pathways. The choice between CBCT-based or IOS/STL-based reference systems, proprietary or third-party software, and the reporting of means versus medians creates methodological heterogeneity that prevents meaningful comparison of accuracy values across studies.

4. Discussion

4.1. Summary of Evidence

This scoping review systematically mapped the methodologies used to assess the dimensional accuracy of computer-guided static implant surgery (CGSIS) across 21 clinical studies. The principal finding is profound methodological heterogeneity that permeates every stage of the assessment workflow. This lack of standardization is a fundamental issue that confounds the entire evidence base. Our analysis highlights significant variations in study designs, a core dichotomy in the reference systems for capturing the achieved implant position (CBCT-based versus digital impression-based), a diverse array of software for superimposition and measurement, and inconsistent definitions and statistical reporting of core accuracy metrics. Collectively, these methodological disparities offer a plausible, evidence-based explanation for much of the dispersion in accuracy values reported in the existing literature.

4.2. Interpretation of Key Findings

4.2.1. The Dichotomy of Reference Systems: Distinct Methodological Paradigms and Their Clinical Constructs

This review mapped two dominant, fundamentally different pathways for assessing clinical accuracy: post-operative CBCT and intraoral scanning (IOS) with scan bodies. Each answers a distinct clinical question, measures a different construct, and carries inherent limitations that shape its results in predictable ways. The substantial and systematic variation in reported numerical values should therefore be interpreted primarily as an illustration of these methodological differences, not as a basis for direct clinical comparison or inference.

The CBCT-based approach assesses the implant position at bone level. Although it visualizes the implant directly within the bone, its measurement accuracy is limited by non-standardized acquisition protocols and by imaging artifacts. Scatter artifacts from the implant and adjacent structures can obscure the true implant axis, a limitation that likely contributes to the elevated angular deviation values reported in studies such as Wang et al. (2024) and Sarhan et al. (2021) [18,23]. The dispersion in CBCT-derived data must therefore be interpreted in light of these inherent measurement constraints.

In contrast, the IOS-based approach benefits from the high precision of optical scanning, which is not susceptible to radiographic scatter [17,19,21,27,28]. It captures the prosthetic platform with high fidelity, as in Luongo et al. (2024) and Monaco et al. (2020), who reported lower mean global coronal deviations (0.73 mm and approximately 0.92 mm, respectively) [17,25]. However, the implant body itself is not imaged: the apical position is extrapolated from the position and orientation of the scan body using the implant's nominal geometry, which assumes that the scan body and implant form a rigid, correctly seated assembly. The method therefore measures prosthetically relevant accuracy but does not validate the bone-level position, which is important for assessing proximity to vital structures. The two systems answer different clinical questions, and their results are not directly comparable (Table 5).

Table 5.

Comparative framework of the two primary reference systems for assessing CGSIS accuracy.

Recent investigations show that each pathway contains further methodological layers. For example, Limones et al. (2024) demonstrated that within the IOS pathway, the alignment method (e.g., a single versus multiple scan bodies) can significantly alter the reported deviation values [38]. This underscores that much of the observed dispersion may be an artifact of the chosen assessment protocol. Furthermore, a non-radiologic digital registration method, which uses laboratory scans of physical casts with implant analogs, has been validated as comparable to CBCT-based assessment, offering a third, radiation-free pathway for certain cases [39,40,41,42,44].

An in vitro study by Pellegrino et al. (2022) directly compared three assessment methods (CBCT, laboratory scanner, IOS) and found that, although operator experience significantly influenced accuracy, the choice of evaluation method also yielded statistically different results. Methodology itself is therefore a variable that can confound performance comparisons [43].

These pathways are thus not interchangeable: their results represent distinct constructs (bone-level versus restorative-level accuracy) that should not be directly compared.

4.2.2. The Problem of Inconsistent Terminology and Statistical Reporting

There is also widespread conflation of the terms trueness (closeness of agreement between measured values and a true or reference value) and precision (closeness of agreement between repeated measurements), as defined in ISO 5725. Most clinical studies measure trueness, i.e., the deviation from the virtual plan. Without repeated measurements to assess precision, however, the reproducibility of a system's performance remains unknown. Consistent use of this standardized terminology would bring much-needed clarity.

Recent literature confirms the persistent use of heterogeneous metrics. For instance, Mittal et al. (2025) reported deviations as means and standard deviations, as did Song et al. (2025), who, however, framed their outcome as “trueness”, correctly adopting the ISO term [45,46,47]. This is a small step toward standardized terminology, although statistical reporting across the field remains inconsistent.
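In ISO 5725 terms, trueness is usually expressed as a bias (the mean of repeated measurements minus an accepted reference value) and precision as the standard deviation of those repeated measurements. The hypothetical sketch below computes both for repeated measurements of a single quantity. Treating the virtual plan as the reference value, and obtaining repeats (for example, by repeated superimpositions of the same case), are assumptions made for illustration.

```python
from statistics import fmean, stdev


def trueness_and_precision(repeated, reference):
    """Bias (trueness) and SD (precision) of repeated measurements of one quantity."""
    if len(repeated) < 2:
        raise ValueError("precision needs at least two repeated measurements")
    bias = fmean(repeated) - reference  # trueness: systematic offset from the reference
    spread = stdev(repeated)            # precision: scatter of the repeats around their mean
    return bias, spread


# Hypothetical: five repeated measurements (mm) of a quantity whose reference value is 1.0 mm
bias, spread = trueness_and_precision([1.0, 1.2, 1.1, 0.9, 1.3], reference=1.0)
print(round(bias, 2), round(spread, 3))  # 0.1 0.158
```

A study that reports only the single deviation from the plan provides one trueness estimate, but no information about the spread term.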

4.2.3. The Impact of Clinical Reality on Measured Accuracy

The extracted data suggest that clinical complexity is a major determinant of reported accuracy, a factor that must be interpreted within the methodological frameworks described above. Controlled scenarios in partially edentulous cases tended to yield lower deviations [17,19,27], whereas challenging clinical conditions, such as mucosa-supported guides in edentulous arches, were associated with higher reported deviations [18,23,30].

This pattern supports the view that clinical variables (e.g., guide fit, tissue resilience) are significant, measurable sources of error [32]. Recent studies have examined these variables further, showing that guide manufacturing method, sterilization, and surgical cantilever length have a quantifiable and significant impact on final deviation values [48,49]. The dispersion in the evidence base is therefore best understood as a combined function of (a) the inherent limitations of the chosen measurement pathway, (b) inconsistent data reporting, and (c) the genuine spectrum of clinical difficulty. Separating the clinical signal from methodological noise remains a central challenge in interpreting this body of literature.

4.3. Implications for Practice and Research

4.3.1. For Researchers: Proposed Framework

Based on the evidence mapped in this review, and to reduce the identified heterogeneity and foster a more cumulative body of evidence, we propose a conceptual framework for a Minimum Reporting Standard for future clinical studies on CGSIS accuracy. This framework is a proposal derived from the synthesis of current methodological inconsistencies, intended to stimulate discussion and consensus-building; it is not a formally endorsed guideline. Future studies should aim to report the following elements:

- Explicit Methodology Description: A detailed account of the reference system, including the specific hardware (CBCT machine, IOS model) and software (name and version) used for superimposition and measurement, including the alignment algorithm (e.g., ICP, best-fit).

- Core Metrics: At a minimum, reporting of the following three metrics for every implant:

  - Global 3D deviation at the implant platform (coronal);

  - Global 3D deviation at the implant apex (apical);

  - Three-dimensional angular deviation.

- Standardized Statistical Reporting: Providing both mean ± standard deviation and median with interquartile range (IQR) for all core metrics to accommodate both parametric and non-parametric understanding of the data.

- Adherence to ISO Terminology: Clearly stating that the study is assessing trueness and, where possible, designing studies to also evaluate precision.

- Reporting of Clinical Confounders: Key clinical variables known to affect accuracy, including but not limited to guide support type, edentulism status (fully vs. partially edentulous), surgical protocol (fully vs. partially guided), implant region (anterior/posterior), and use of anchor pins.
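A reporting framework of this kind can also be applied as a simple completeness check when auditing manuscripts. The sketch below is hypothetical: the field names are shorthand invented here for the elements listed above, and a study report is modelled as a plain dictionary.

```python
# Hypothetical keys mirroring the proposed minimum reporting elements
REQUIRED_FIELDS = {
    "reference_system", "hardware", "software_and_version", "alignment_algorithm",
    "coronal_deviation", "apical_deviation", "angular_deviation",
    "mean_sd", "median_iqr", "iso_terminology",
    "guide_support", "edentulism", "surgical_protocol", "implant_region", "anchor_pins",
}


def missing_items(report: dict) -> list[str]:
    """Framework elements that a study report omits or leaves empty, sorted by name."""
    return sorted(k for k in REQUIRED_FIELDS if report.get(k) in (None, "", []))


# Example: a report that gives no median/IQR and does not mention anchor pins
report = {key: "reported" for key in REQUIRED_FIELDS}
report["median_iqr"] = None
del report["anchor_pins"]
print(missing_items(report))  # ['anchor_pins', 'median_iqr']
```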

4.3.2. For Clinicians: A Critical Lens for Interpreting the Evidence

Clinicians must interpret published accuracy data with a critical understanding of the methodology employed. A reported accuracy value is not an absolute truth but a measurement contingent on the assessment protocol. When evaluating a system or study, clinicians should ask: Was the achieved position captured by CBCT or IOS? What was the guide support type? The answers to these questions provide essential context. For instance, a low deviation value from an IOS-based study in a tooth-supported case may not be transferable to the clinical reality of a fully edentulous, mucosa-supported rehabilitation. Understanding these methodological nuances is essential to forming realistic expectations and making evidence-based choices.

The emergence of dynamic navigation and robotic-assisted implant surgery (r-CAIS) provides an important new context. Multiple recent studies report that r-CAIS achieves significantly higher accuracy than static computer-assisted implant surgery (s-CAIS) [50,51,52,53]; for example, Chen et al. (2025) reported mean angular deviations of 1.51° for robotic versus 3.44° for static guided surgery [46]. Dynamic navigation has likewise shown high accuracy, for instance in a clinical study of fully edentulous jaws, although its assessment methodology faces similar standardization challenges [54]. When reading the literature, clinicians must now distinguish between these fundamentally different guided technologies, as the performance benchmarks are shifting.

4.4. Limitations of the Review

While this review provides a comprehensive map of the methodological landscape, certain limitations must be acknowledged. The search was restricted to three major databases, and although no language restrictions were applied, it is possible that relevant studies in other databases or in the gray literature were missed. The field of digital dentistry is evolving at a rapid pace; new planning software, scanning technologies, and analysis tools are continuously emerging. Furthermore, the eligibility criteria required accuracy to be the primary outcome with detailed methodological description. This necessarily excluded studies where accuracy was a secondary outcome or reported with less detail, which may limit the depiction of reporting practices in the broader clinical literature. This review represents a snapshot in time, and the methodological trends we identified may shift with future technological advancements. Finally, as a scoping review, our objective was to map the evidence rather than appraise the quality of individual studies, which means our conclusions speak to the collective practices of the field rather than the validity of any single finding.

5. Conclusions

This scoping review demonstrates that the clinical literature on the dimensional accuracy of computer-guided static implant surgery (CGSIS) is marked by extensive methodological heterogeneity. Inconsistent reference systems, diverse measurement software, and variable reporting of metrics and statistics hinder the direct comparison of accuracy values across studies. Consequently, the reported performance of CGSIS depends heavily on the assessment protocol used and does not reflect the technology's intrinsic capability alone. To support the generation of comparable, reliable, and clinically meaningful evidence, this review highlights the need for standardized methodology and reporting in CGSIS accuracy research and suggests key elements to inform future consensus-building efforts.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/dj14010043/s1, Supplementary File S1: PRISMA-ScR Checklist.

Author Contributions

Conceptualization, R.-I.V., A.M.D. and S.N.R.; methodology, R.-I.V., M.S.T., A.M.V., A.M. and A.M.D.; software, R.-I.V., A.M.D., I.G. and L.B.; validation, R.-I.V., M.S.T., N.I. and A.M.D.; formal analysis, R.-I.V., S.N.R. and A.M.D.; investigation, R.-I.V., A.M.D. and A.O.A.; resources, A.M.D., R.-I.V., E.-O.L. and S.N.R.; data curation, C.C.H., I.G. and N.I.; writing—original draft preparation, A.M.D., R.-I.V. and S.N.R.; writing—review and editing, R.-I.V., A.M.D., L.B. and A.M.V.; visualization, A.M.D., R.-I.V. and M.S.T.; supervision, R.-I.V., A.M., A.O.A. and A.M.D.; project administration, S.N.R., R.-I.V., A.M.D., C.C.H. and L.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| CGSIS | Computer-guided static implant surgery |
| PRISMA-ScR | Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews |
| CBCT | Cone-Beam Computed Tomography |
| IOS | Intraoral Scanner |
| STL | Standard Tessellation Language/Stereolithography |
| RCT | Randomized Controlled Trial |
| JBI | Joanna Briggs Institute |
| PCC | Population, Concept, Context |
| IQR | Interquartile Range |
| s-CAIS | Static computer-assisted implant surgery |
| r-CAIS | Robotic computer-assisted implant surgery |

References

- Yan, Y.; Lin, Y.; Wei, D.; Di, P. Three-Dimensional Analysis of Implant-Supported Fixed Prosthesis in the Edentulous Maxilla: A Retrospective Study. BMC Oral Health 2025, 25, 1223.

- Vartan, N.; Gath, L.; Olmos, M.; Plewe, K.; Vogl, C.; Kesting, M.R.; Wichmann, M.; Matta, R.E.; Buchbender, M. Accuracy of Three-Dimensional Computer-Aided Implant Surgical Guides: A Prospective In Vivo Study of the Impact of Template Design. Dent. J. 2025, 13, 150.

- Peitsinis, P.R.; Blouchou, A.; Chatzopoulos, G.S.; Vouros, I.D. Optimizing Implant Placement Timing and Loading Protocols for Successful Functional and Esthetic Outcomes: A Narrative Literature Review. J. Clin. Med. 2025, 14, 1442.

- Chen, S.T.; Buser, D.; Sculean, A.; Belser, U.C. Complications and Treatment Errors in Implant Positioning in the Aesthetic Zone: Diagnosis and Possible Solutions. Periodontol. 2000 2023, 92, 220–234.

- Kim, S.-G. Clinical Complications of Dental Implants. In Implant Dentistry—A Rapidly Evolving Practice; Turkyilmaz, I., Ed.; InTech: London, UK, 2011; ISBN 978-9533076584.

- Mistry, A.; Ucer, C.; Thompson, J.; Khan, R.; Karahmet, E.; Sher, F. 3D Guided Dental Implant Placement: Impact on Surgical Accuracy and Collateral Damage to the Inferior Alveolar Nerve. Dent. J. 2021, 9, 99.

- Monje, A.; Kan, J.Y.; Borgnakke, W. Impact of Local Predisposing/Precipitating Factors and Systemic Drivers on Peri-Implant Diseases. Clin. Implant Dent. Relat. Res. 2023, 25, 640–660.

- Liu, W.; Zhu, F.; Samal, A.; Wang, H. Suggested Mesiodistal Distance for Multiple Implant Placement Based on the Natural Tooth Crown Dimension with Digital Design. Clin. Implant Dent. Relat. Res. 2022, 24, 801–808.

- Ribas, B.R.; Nascimento, E.H.L.; Freitas, D.Q.; Pontual, A.D.A.; Pontual, M.L.D.A.; Perez, D.E.C.; Ramos-Perez, F.M.M. Positioning Errors of Dental Implants and Their Associations with Adjacent Structures and Anatomical Variations: A CBCT-Based Study. Imaging Sci. Dent. 2020, 50, 281.

- Kheiri, L.; Amid, R.; Kadkhodazadeh, M.; Kheiri, A. What Are the Outcomes of Dental Implant Placement in Sites with Oroantral Communication Using Different Treatment Approaches?: A Systematic Review. BMC Oral Health 2025, 25, 652.

- Wright, E.F. Persistent Dysesthesia Following Dental Implant Placement: A Treatment Report of 2 Cases. Implant Dent. 2011, 20, 20–26.

- Manor, Y.; Anavi, Y.; Gershonovitch, R.; Lorean, A.; Mijiritsky, E. Complications and Management of Implants Migrated into the Maxillary Sinus. Int. J. Periodontics Restor. Dent. 2018, 38, e112–e118.

- D’haese, J.; Ackhurst, J.; Wismeijer, D.; De Bruyn, H.; Tahmaseb, A. Current State of the Art of Computer-guided Implant Surgery. Periodontol. 2000 2017, 73, 121–133.

- Tia, M.; Guerriero, A.T.; Carnevale, A.; Fioretti, I.; Spagnuolo, G.; Sammartino, G.; Gasparro, R. Positional Accuracy of Dental Implants Placed by Means of Fully Guided Technique in Partially Edentulous Patients: A Retrospective Study. Clin. Exp. Dent. Res. 2025, 11, e70144.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

- Peters, M.D.; Godfrey, C.; McInerney, P.; Munn, Z.; Tricco, A.C.; Khalil, H. Scoping Reviews. In JBI Manual for Evidence Synthesis; Aromataris, E., Lockwood, C., Porritt, K., Pilla, B., Jordan, Z., Eds.; JBI: Adelaide, Australia, 2024; ISBN 9780648848820.

- Luongo, F.; Lerner, H.; Gesso, C.; Sormani, A.; Kalemaj, Z.; Luongo, G. Accuracy in Static Guided Implant Surgery: Results from a Multicenter Retrospective Clinical Study on 21 Patients Treated in Three Private Practices. J. Dent. 2024, 140, 104795.

- Wang, B.; Yang, J.; Siow, L.; Wang, Y.; Zhang, X.; Zhou, Y.; Yu, M.; Wang, H. Clinical Accuracy of Partially Guided Implant Placement in Edentulous Patients: A Computed Tomography-Based Retrospective Study. Clin. Oral Implants Res. 2024, 35, 31–39.

- Sun, Y.; Ding, Q.; Yuan, F.; Zhang, L.; Sun, Y.; Xie, Q. Accuracy of a Chairside, Fused Deposition Modeling Three-Dimensional-Printed, Single Tooth Surgical Guide for Implant Placement: A Randomized Controlled Clinical Trial. Clin. Oral Implants Res. 2022, 33, 1000–1009.

- Ngamprasertkit, C.; Aunmeungthong, W.; Khongkhunthian, P. The Implant Position Accuracy between Using Only Surgical Drill Guide and Surgical Drill Guide with Implant Guide in Fully Digital Workflow: A Randomized Clinical Trial. Oral Maxillofac. Surg. 2022, 26, 229–237.

- Orban, K.; Varga, E.; Windisch, P.; Braunitzer, G.; Molnar, B. Accuracy of Half-Guided Implant Placement with Machine-Driven or Manual Insertion: A Prospective, Randomized Clinical Study. Clin. Oral Investig. 2022, 26, 1035–1043.

- Schwindling, F.S.; Juerchott, A.; Boehm, S.; Rues, S.; Kronsteiner, D.; Heiland, S.; Bendszus, M.; Rammelsberg, P.; Hilgenfeld, T. Three-Dimensional Accuracy of Partially Guided Implant Surgery Based on Dental Magnetic Resonance Imaging. Clin. Oral Implants Res. 2021, 32, 1218–1227.

- Sarhan, M.M.; Khamis, M.M.; El-Sharkawy, A.M. Evaluation of the Accuracy of Implant Placement by Using Fully Guided versus Partially Guided Tissue-Supported Surgical Guides with Cylindrical versus C-Shaped Guiding Holes: A Split-Mouth Clinical Study. J. Prosthet. Dent. 2021, 125, 620–627.

- Chai, J.; Liu, X.; Schweyen, R.; Setz, J.; Pan, S.; Liu, J.; Zhou, Y. Accuracy of Implant Surgical Guides Fabricated Using Computer Numerical Control Milling for Edentulous Jaws: A Pilot Clinical Trial. BMC Oral Health 2020, 20, 288.

- Monaco, C.; Arena, A.; Corsaletti, L.; Santomauro, V.; Venezia, P.; Cavalcanti, R.; Di Fiore, A.; Zucchelli, G. 2D/3D Accuracies of Implant Position after Guided Surgery Using Different Surgical Protocols: A Retrospective Study. J. Prosthodont. Res. 2020, 64, 424–430.

- Kiatkroekkrai, P.; Takolpuckdee, C.; Subbalekha, K.; Mattheos, N.; Pimkhaokham, A. Accuracy of Implant Position When Placed Using Static Computer-Assisted Implant Surgical Guides Manufactured with Two Different Optical Scanning Techniques: A Randomized Clinical Trial. Int. J. Oral Maxillofac. Surg. 2020, 49, 377–383.

- Smitkarn, P.; Subbalekha, K.; Mattheos, N.; Pimkhaokham, A. The Accuracy of Single-Tooth Implants Placed Using Fully Digital-Guided Surgery and Freehand Implant Surgery. J. Clin. Periodontol. 2019, 46, 949–957.

- Younes, F.; Cosyn, J.; De Bruyckere, T.; Cleymaet, R.; Bouckaert, E.; Eghbali, A. A Randomized Controlled Study on the Accuracy of Free-Handed, Pilot-Drill Guided and Fully Guided Implant Surgery in Partially Edentulous Patients. J. Clin. Periodontol. 2018, 45, 721–732.

- Schnutenhaus, S.; Edelmann, C.; Rudolph, H.; Luthardt, R.G. Retrospective Study to Determine the Accuracy of Template-Guided Implant Placement Using a Novel Nonradiologic Evaluation Method. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2016, 121, e72–e79.

- Vercruyssen, M.; Cox, C.; Coucke, W.; Naert, I.; Jacobs, R.; Quirynen, M. A Randomized Clinical Trial Comparing Guided Implant Surgery (Bone- or Mucosa-Supported) with Mental Navigation or the Use of a Pilot-Drill Template. J. Clin. Periodontol. 2014, 41, 717–723.

- Verhamme, L.M.; Meijer, G.J.; Boumans, T.; Schutyser, F.; Bergé, S.J.; Maal, T.J.J. A Clinically Relevant Validation Method for Implant Placement after Virtual Planning. Clin. Oral Implants Res. 2013, 24, 1265–1272.

- Cassetta, M.; Di Mambro, A.; Giansanti, M.; Stefanelli, L.V.; Barbato, E. How Does an Error in Positioning the Template Affect the Accuracy of Implants Inserted Using a Single Fixed Mucosa-Supported Stereolithographic Surgical Guide? Int. J. Oral Maxillofac. Surg. 2014, 43, 85–92.

- Arısan, V.; Karabuda, Z.C.; Özdemir, T. Accuracy of Two Stereolithographic Guide Systems for Computer-Aided Implant Placement: A Computed Tomography-Based Clinical Comparative Study. J. Periodontol. 2010, 81, 43–51.

- Kraft, B.; Frizzera, F.; De Freitas, R.M.; De Oliveira, G.J.L.P.; Marcantonio Junior, E. Impact of Fully or Partially Guided Surgery on the Position of Single Implants Immediately Placed in Maxillary Incisor Sockets: A Randomized Controlled Clinical Trial. Clin. Implant Dent. Relat. Res. 2020, 22, 631–637.

- Alqutaibi, A.Y.; Al-Gabri, R.S.; Ibrahim, W.I.; Elawady, D. Trueness of Fully Guided versus Partially Guided Implant Placement in Edentulous Maxillary Rehabilitation: A Split-Mouth Randomized Clinical Trial. BMC Oral Health 2025, 25, 1680.

- D’Addazio, G.; Xhajanka, E.; Traini, T.; Santilli, M.; Rexhepi, I.; Murmura, G.; Caputi, S.; Sinjari, B. Accuracy of DICOM–DICOM vs. DICOM–STL Protocols in Computer-Guided Surgery: A Human Clinical Study. J. Clin. Med. 2022, 11, 2336.

- Cassetta, M.; Di Mambro, A.; Giansanti, M.; Stefanelli, L.V.; Cavallini, C. The Intrinsic Error of a Stereolithographic Surgical Template in Implant Guided Surgery. Int. J. Oral Maxillofac. Surg. 2013, 42, 264–275.

- Limones, A.; Cascos, R.; Molinero-Mourelle, P.; Abou-Ayash, S.; De Parga, J.A.M.V.; Celemin, A.; Gómez-Polo, M. Impact of the Superimposition Methods on Accuracy Analyses in Complete-Arch Digital Implant Investigation. J. Dent. 2024, 147, 105081.

- Fang, Y.; An, X.; Jeong, S.-M.; Choi, B.-H. Accuracy of Computer-Guided Implant Placement in Anterior Regions. J. Prosthet. Dent. 2019, 121, 836–842.

- Marquez Bautista, N.; Meniz-García, C.; López-Carriches, C.; Sánchez-Labrador, L.; Cortés-Bretón Brinkmann, J.; Madrigal Martínez-Pereda, C. Accuracy of Different Systems of Guided Implant Surgery and Methods for Quantification: A Systematic Review. Appl. Sci. 2024, 14, 11479.

- Floriani, F.; Jurado, C.A.; Cabrera, A.J.; Duarte, W.; Porto, T.S.; Afrashtehfar, K.I. Depth Distortion and Angular Deviation of a Fully Guided Tooth-Supported Static Surgical Guide in a Partially Edentulous Patient: A Systematic Review and Meta-Analysis. J. Prosthodont. 2024, 33, 10–24.

- Carini, F.; Coppola, G.; Saggese, V. Comparison between CBCT Superimposition Protocol and S.T.A.P. Method to Evaluate the Accuracy in Implant Insertion in Guided Surgery. Minerva Dent. Oral Sci. 2022, 71, 223–232.

- Pellegrino, G.; Lizio, G.; D’Errico, F.; Ferri, A.; Mazzoni, A.; Bianco, F.D.; Stefanelli, L.V.; Felice, P. Relevance of the Operator’s Experience in Conditioning the Static Computer-Assisted Implantology: A Comparative In Vitro Study with Three Different Evaluation Methods. Appl. Sci. 2022, 12, 9561.

- Reyes, A.; Turkyilmaz, I.; Prihoda, T.J. Accuracy of Surgical Guides Made from Conventional and a Combination of Digital Scanning and Rapid Prototyping Techniques. J. Prosthet. Dent. 2015, 113, 295–303.

- Song, W.; Deng, C.; Rao, C.; Luo, Y.; Yang, X.; Wu, Y.; Qu, Y.; Man, Y. Multifactorial Analysis of Trueness in Computer-Assisted Implant Surgery: A Retrospective Study. Clin. Oral Implants Res. 2025, 36, 1095–1105.

- Chen, N.; Wang, Y.; Zou, H.; Chen, Y.; Huang, Y. Comparison of Accuracy and Systematic Precision Between Autonomous Dental Robot and Static Guide: A Retrospective Study. Clin. Implant Dent. Relat. Res. 2025, 27, e70050.

- Mittal, S.; Kaurani, P.; Goyal, R. Comparison of Accuracy between Single Posterior Immediate and Delayed Implants Placed Using Computer Guided Implant Surgery and a Digital Laser Printed Surgical Guide: A Clinical Investigation. J. Prosthet. Dent. 2025, 134, 1133–1139.

- Seidel, A.; Zerrahn, K.; Wichmann, M.; Matta, R.E. Accuracy of Drill Sleeve Housing in 3D-Printed and Milled Implant Surgical Guides: A 3D Analysis Considering Machine Type, Layer Thickness, Sleeve Position, and Steam Sterilization. Bioengineering 2025, 12, 799.

- Miyashita, M.; Leepong, N.; Vichitkunakorn, P.; Suttapreyasri, S. Impact of Cantilever Length on the Accuracy of Static CAIS in Posterior Distal Free-End Regions. Clin. Implant Dent. Relat. Res. 2025, 27, e70020.

- Yu, M.; Luo, Y.; Li, B.; Xu, L.; Yang, X.; Man, Y. A Comparative Prospective Study on the Accuracy and Efficiency of Autonomous Robotic System Versus Dynamic Navigation System in Dental Implant Placement. J. Clin. Periodontol. 2025, 52, 280–288.

- Li, S.; Yi, C.; Yu, Z.; Wu, A.; Zhang, Y.; Lin, Y. Accuracy Assessment of Implant Placement with versus without a CAD/CAM Surgical Guide by Novices versus Specialists via the Digital Registration Method: An in Vitro Randomized Crossover Study. BMC Oral Health 2023, 23, 426.

- Nulty, A. A Literature Review on Prosthetically Designed Guided Implant Placement and the Factors Influencing Dental Implant Success. Br. Dent. J. 2024, 236, 169–180.

- Li, J.; Dai, M.; Wang, S.; Zhang, X.; Fan, Q.; Chen, L. Accuracy of Immediate Anterior Implantation Using Static and Robotic Computer-Assisted Implant Surgery: A Retrospective Study. J. Dent. 2024, 148, 105218.

- Wang, J.; Ge, Y.; Mühlemann, S.; Pan, S.; Jung, R.E. The Accuracy of Dynamic Computer Assisted Implant Surgery in Fully Edentulous Jaws: A Retrospective Case Series. Clin. Oral Implants Res. 2023, 34, 1278–1288.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2026 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.