Abstract

We propose a new iterative scheme without memory for solving nonlinear equations. The proposed scheme is based on a cubically convergent Hansen–Patrick-type method. An advantage of the proposed techniques is that they work even when the derivative is very small in the vicinity of the required root or when $f'(x) = 0$. In contrast, the previous modifications either diverge or fail to work in these cases. We also extend the same idea to an iterative method with memory. Numerical examples and comparisons with some existing methods are included to confirm the theoretical results. Furthermore, basins of attraction are presented to give a clear picture of the convergence of the proposed method and of some existing methods. Numerical experiments are performed on engineering problems, such as fractional conversion in a chemical reactor, Planck’s radiation law problem, the van der Waals problem and the trajectory of an electron between two parallel plates. The numerical results indicate that the proposed schemes are well suited to a variety of real-life problems, and the basins of attraction support this observation.

Keywords:

nonlinear equation; iterative method with memory; R-order of convergence; basin of attraction

MSC:

65H05; 65H99

1. Introduction

Determining the zeros of a nonlinear function promptly and accurately has become a crucial task in many branches of science and technology. The most widely used technique in this regard is Newton’s method [1], which converges linearly for multiple roots and quadratically for simple roots. Various higher-order schemes have also been presented in [2,3,4,5,6,7,8]. One of them is the Hansen–Patrick family [9] of order 3, given by

$$x_{n+1} = x_n - \frac{(\alpha + 1)\, f(x_n)}{\alpha f'(x_n) \pm \sqrt{f'(x_n)^2 - (\alpha + 1)\, f(x_n) f''(x_n)}}, \qquad (1)$$

where $\alpha \in \mathbb{R} \setminus \{-1\}$ and $n \geq 0$. This family comprises Euler’s method for $\alpha = 1$, Ostrowski’s square-root method for $\alpha = 0$, Laguerre’s method for $\alpha = 1/(\nu - 1)$ and Newton’s method as a limiting case ($\alpha \to \infty$). Despite its cubic convergence, the need for the second-order derivative limits its range of application. This has inspired many researchers to concentrate on multipoint methods [10], since they overcome the drawbacks of one-point iterative methods with respect to the convergence order. The main goal in developing new iterative methods is to achieve as high an order of convergence as possible with a fixed number of functional evaluations per iteration.
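As an illustration of the classical one-point family discussed above, the following is a minimal Python sketch of the Hansen–Patrick iteration in its textbook form. The function name `hansen_patrick`, the stopping rule on $|f(x_n)|$ and the rule for choosing the sign of the square root are assumptions made for this sketch; they are not taken from the paper.

```python
import cmath


def hansen_patrick(f, df, d2f, x0, alpha=1.0, tol=1e-12, max_iter=50):
    """Classical one-point Hansen-Patrick iteration (illustrative sketch).

    Returns the final approximation (as a complex number) and the number
    of iterations performed.
    """
    if alpha == -1:
        raise ValueError("alpha = -1 is excluded from the family")
    x = complex(x0)  # complex arithmetic keeps the square root well defined
    for k in range(max_iter):
        fx, dfx, d2fx = f(x), df(x), d2f(x)
        if abs(fx) < tol:  # assumed stopping rule: small residual
            return x, k
        root = cmath.sqrt(dfx * dfx - (alpha + 1) * fx * d2fx)
        # Assumed sign rule: align the square root with f'(x), so that the
        # denominator tends to (alpha + 1) * f'(x) near a simple root.
        if (root * dfx.conjugate()).real < 0:
            root = -root
        denom = alpha * dfx + root
        if denom == 0:
            raise ZeroDivisionError("denominator vanished; try another x0 or alpha")
        x = x - (alpha + 1) * fx / denom
    return x, max_iter
```

For example, calling `hansen_patrick(lambda x: x**3 - 2, lambda x: 3*x**2, lambda x: 6*x, 1.5, alpha=1.0)` applies Euler's member of the family to $x^3 - 2 = 0$. Note that each step needs $f$, $f'$ and $f''$, which is the cost the multipoint variants aim to avoid.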

Sharma et al. [11] modified Equation (1) as follows:

where and , are free parameters. Here, . Instead of , the authors calculated a second-order derivative of f at . Moreover, several developments of Hansen–Patrick-type methods have been presented and examined in [12] in order to eradicate the second-order derivative. Using some appropriate approximation for , authors in [12] presented the following method:

where and are free parameters. The main drawback of such methods is that they fail when $f'(x_n) = 0$ and diverge or fail when the derivative is very small in the vicinity of the required root. Our main goal is therefore to develop a method that is globally convergent.

On the other hand, it is sometimes possible to increase the convergence order with no further functional evaluation by making use of a self-accelerating parameter. Traub [1] referred to such methods as schemes with memory, since they use information from previous iterations to calculate the next iterate. Traub was the first to introduce this idea: he slightly altered the existing Steffensen method [13] and presented the first method with memory [1] as follows:

where is a self-accelerating parameter given as

This method has an order of convergence of 2.414. However, a better self-accelerating parameter may increase the order of convergence further.
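The idea of a self-accelerating parameter can be sketched in a few lines of Python. The form used below, $w_n = x_n + \gamma_n f(x_n)$, $x_{n+1} = x_n - f(x_n)/f[x_n, w_n]$ with $\gamma_n = -1/f[x_n, x_{n-1}]$, is the commonly quoted textbook version of Traub's Steffensen-type method with memory; the sign convention, the starting value `gamma0` and the stopping rule are assumptions of this sketch.

```python
def traub_steffensen_with_memory(f, x0, gamma0=-0.01, tol=1e-12, max_iter=50):
    """Steffensen-type iteration with a self-accelerating parameter (sketch).

    Returns the final approximation and the number of iterations performed.
    """
    x, x_prev, f_prev = x0, None, None
    gamma = gamma0  # assumed initial value of the accelerating parameter
    for k in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:  # assumed stopping rule: small residual
            return x, k
        if x_prev is not None:
            # Memory step: reuse the stored pair (x_{n-1}, f(x_{n-1})), so the
            # update of gamma costs no additional functional evaluation.
            gamma = -(x - x_prev) / (fx - f_prev)
        w = x + gamma * fx
        divided_difference = (f(w) - fx) / (w - x)  # f[x_n, w_n]
        x_prev, f_prev = x, fx
        x = x - fx / divided_difference
    return x, max_iter
```

Each iteration uses only two new evaluations, $f(x_n)$ and $f(w_n)$; the gain in order over the memoryless Steffensen method comes entirely from updating `gamma` with stored data.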

Using a secant approach, and by reusing information from the previous iteration, Traub refined a Steffensen-like method and presented the following method:

having R-order of convergence [14] at least 2.414.

In addition, a new approach of hybrid methods is being adopted for solving nonlinear problems which can be seen in [15,16].

To find the R-order of convergence of the proposed method with memory, we use Theorem 1, given by Traub.

The rest of the paper is organized as follows. Section 2 contains the development of a new iterative method without memory and the proof of its order of convergence. Section 3 covers the inclusion of memory to develop a new iterative method with memory and its error analysis. Numerical results for the proposed methods and comparisons with some of the existing methods to illustrate our theoretical results are given in Section 4. Section 5 depicts the convergence of the methods using basins of attraction. Lastly, Section 6 presents conclusions.

Theorem 1.

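Suppose that $(IM)$ is an iterative method with memory that generates a sequence $\{x_k\}$ (converging to the root ξ) of approximations to ξ. If there exist a nonzero constant ζ and nonnegative numbers $t_i$, $0 \le i \le m$, such that the inequality

$$|e_{k+1}| \le \zeta \prod_{i=0}^{m} |e_{k-i}|^{t_i}, \qquad e_k = x_k - \xi,$$

holds, then the R-order of convergence of the iterative method $(IM)$ satisfies the inequality

$$O_R((IM), \xi) \ge s^{*},$$

where $s^{*}$ is the unique positive root of the equation

$$s^{m+1} - \sum_{i=0}^{m} t_i\, s^{m-i} = 0.$$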
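To make the use of this theorem concrete, the sketch below computes the R-order bound numerically. It assumes the standard form of the result, in which an error relation $|e_{k+1}| \le \zeta \prod_{i=0}^{m} |e_{k-i}|^{t_i}$ leads to the polynomial $s^{m+1} - \sum_{i=0}^{m} t_i s^{m-i}$; the function name `r_order_lower_bound` and the use of bisection are choices made for this illustration.

```python
def r_order_lower_bound(t):
    """Unique positive root of s**(m+1) - sum(t[i] * s**(m-i)), found by bisection.

    `t` holds the nonnegative exponents t_0, ..., t_m of the error relation.
    """
    if not t or any(ti < 0 for ti in t) or sum(t) <= 0:
        raise ValueError("exponents must be nonnegative and not all zero")
    m = len(t) - 1

    def p(s):
        return s ** (m + 1) - sum(ti * s ** (m - i) for i, ti in enumerate(t))

    # The polynomial has one sign change in its coefficients, so it has exactly
    # one positive root; it is negative below that root and positive above it.
    lo, hi = 0.0, 1.0 + max(t)  # Cauchy bound guarantees p(hi) > 0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if p(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For instance, an error relation of the form $e_{k+1} \sim e_k^2\, e_{k-1}$ corresponds to `t = [2, 1]` and the polynomial $s^2 - 2s - 1$, whose positive root $1 + \sqrt{2} \approx 2.414$ matches the order quoted for Traub's method with memory.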

2. Iterative Method without Memory and Its Convergence Analysis

We aim to construct a new two-point Hansen–Patrick-type method without memory in this section.

Let $y_n = x_n - f(x_n)/f'(x_n)$ be the Newton iterate. Expanding $f(y_n)$ about the point $x_n$ by Taylor series, we get

Further, if we expand the function about by Taylor series, we have

Using previous developments, we have

As we can see, this estimation for uses 4 functional evaluations per iteration [8], , , and . To decrease the number of functional evaluations, King’s approximation [17] may be used which is

when , where is a free parameter.

Now, using this new approximation for in Equation (1), authors in [12] presented the following scheme:

where and are free parameters.

To extend the scheme to a method with memory, we introduce a parameter b into the scheme given by Equation (7) and propose the following modification:

where and are free parameters.

Next, we establish the convergence results for our proposed method without memory given by Equation (8).

Convergence Analysis

Theorem 2.

Suppose that be a real function suitably differentiable in a domain D. If is a simple root of and an initial guess is sufficiently close to ξ, then the iterative method given by Equation (8) converges to ξ with convergence order having the following error relation,

where and

Proof.

Expanding about by Taylor series, we have

Then,

Further, the Taylor’s expansion of is

3. Iterative Method with Memory and Its Convergence Analysis

We now extend the method given by Equation (8) to a method with memory, which improves the convergence order without any additional functional evaluation.

If we observe clearly, it can be seen from the error relation given in Equation (15), if , then the order of convergence of the presented scheme given by Equation (8) can possibly be improved, but this value can’t be reached because the values of and are not practically available. Instead, we can use approximations calculated by already available information [18]. So, to improve the convergence order, we give an estimation using first order divided difference [19], given by , where denotes a first-order divided difference.

So, by replacing b by in the method given by Equation (8), we obtain a new family with memory using the two previous iterations as follows:

where and are free parameters.

Next, we establish the convergence results for our proposed method with memory given by Equation (16).

Convergence Analysis

Theorem 3.

Suppose that be a real function suitably differentiable in a domain D. If is a simple root of and an initial guess is sufficiently close to ξ, then the iterative method given by Equation (16) converges to ξ with convergence order at least .

Proof.

Using Taylor series expansion about , we get

Then,

Now, using previous developments, we have

Now, we can see the lowest term of the error equation is , therefore, by Theorem 1, the unique positive root of the polynomial gives the R-order of the proposed scheme given by Equation (16), which is . This completes our proof. □

4. Numerical Results

We now investigate the numerical performance of the proposed scheme and compare it with some existing schemes, both with and without memory. All calculations were carried out in Mathematica 11.1 using multiple-precision arithmetic on an Intel(R) Pentium(R) CPU B960 @ 2.20 GHz running 64-bit Windows 7 Ultimate. We suppose that the initial value of must be selected prior to performing the iterations and a suitable be given. We have taken (or ) in our computations. In all the numerical values, refers to .

We are using the following functions for our computations:

- having one of the real zero .

- having one of the real zero 0.

- having one of the real zero 2.

- having one of the real zero .

- having one of the real zero .

The results are computed by using the initial guesses , , , 0 and for functions , , , and respectively. To check the theoretical order of convergence, we have calculated the computational order of convergence [20], (COC) using the following formula,

considering the last three approximations in the iterative procedure. The errors of approximations to the respective zeros of the test functions, and COC are displayed in Table 1 and Table 2.
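A computational order of convergence can be estimated from the last three iterates in several ways. The sketch below uses one commonly used residual-based estimate, $\log|f(x_n)/f(x_{n-1})| \,/\, \log|f(x_{n-1})/f(x_{n-2})|$; this particular variant, and the helper names `coc` and `newton_residuals`, are assumptions of the illustration and may differ from the formula used for the tables.

```python
import math


def coc(residuals):
    """Estimate the convergence order from the last three residuals f(x_k)."""
    if len(residuals) < 3:
        raise ValueError("at least three residuals are required")
    r_old, r_mid, r_new = (abs(r) for r in residuals[-3:])
    return math.log(r_new / r_mid) / math.log(r_mid / r_old)


def newton_residuals(f, df, x0, steps):
    """Residuals f(x_0), ..., f(x_steps) of Newton's method (demo helper)."""
    x, out = x0, [f(x0)]
    for _ in range(steps):
        x = x - f(x) / df(x)
        out.append(f(x))
    return out
```

Applied to Newton's method on $x^2 - 2$ from $x_0 = 1.5$, for example via `coc(newton_residuals(lambda x: x*x - 2, lambda x: 2*x, 1.5, 3))`, the estimate is close to the theoretical order 2. In double precision only a few iterations can be used before the residuals reach rounding level, which is one reason the experiments rely on multiple-precision arithmetic.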

Table 1.

Comparison of different methods without memory.

Table 2.

Comparison of different methods with memory.

We have demonstrated results for our special cases without memory by taking , and in Equation (8) denoted by , and , respectively. Further, for our special cases with memory by taking , and in Equation (16) denoted by , and , respectively.

For comparisons, we are considering the methods given below:

Hansen–Patrick’s family () [9]:

where and .

Sharma et al. method without memory () [11]:

where and and are free parameters.

Halley’s method () [3]:

where and .

Traub’s method with memory () [1]:

where is a self-accelerating parameter given as

Traub’s method with memory () [1]:

Remark 1.

In Table 1, for the function , as the derivative of the function becomes zero, the existing methods , and fail. Further, for the function , , and converge to undesired root ‘’. Further, in Table 2, for the function , and converge to the desired root but in 11 and 14 number of iterations, respectively as we can see the errors of approximations are large in these cases. Further, for the function , and both converge to undesired root ‘’.

Further, we are considering some real life problems which are as follows:

Example 1.

Firstly, we analyze the well-known Planck’s radiation law problem [21],

where λ is the wavelength of radiation, is the Planck’s constant, T is the absolute temperature of the blackbody, c is the speed of light and is the Boltzmann constant. It computes the energy density within an isothermal blackbody. We intend to obtain wavelength λ corresponding to maximum energy density .

To obtain maximum value of ψ, we take which gives

Let . Then, Equation (31) becomes

As we find the solutions of , we get the maximum wavelength of radiation λ. As stated in [22], the L.H.S. of Equation (32) is zero when . Further, . Thus, another root could appear close to . The desired zero is .
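The following sketch solves this example numerically. It assumes the standard reduction of the problem, in which the substitution $x = ch/(\lambda k T)$ turns the stationarity condition into $e^{-x} - 1 + x/5 = 0$, and it uses plain Newton's method rather than the proposed schemes. The function names are hypothetical, and the physical constants are the exact SI values.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 299792458.0      # speed of light, m / s
K_B = 1.380649e-23   # Boltzmann constant, J / K


def g(x):
    """Assumed reduced equation: exp(-x) - 1 + x / 5."""
    return math.exp(-x) - 1.0 + x / 5.0


def dg(x):
    return -math.exp(-x) + 0.2


def newton(f, df, x0, tol=1e-14, max_iter=50):
    """Plain Newton iteration, stopped when the step is below tol."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton's method did not converge")


# Start near x = 5 so that the iteration avoids the trivial root x = 0.
x_star = newton(g, dg, 5.0)


def peak_wavelength(temperature):
    """Wavelength (in metres) of maximum energy density at a temperature in kelvin."""
    return H * C / (x_star * K_B * temperature)
```

The nontrivial root agrees with the desired zero quoted above to double precision, and `peak_wavelength(T)` converts it back to a wavelength for a given temperature.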

Example 2.

Van der Waals equation of state [4],

The following nonlinear equation needs to be solved to obtain the volume V of the gas in terms of the other parameters,

Here, G is the universal gas constant, P is the pressure and T is the absolute temperature. If the parameters a and b of a specific gas are given, the values of n, P and T can be calculated. Using certain values, the following nonlinear equation can be obtained,

having three roots, out of which one is real and two are complex. Though our required zero is .

Example 3.

Fractional conversion in a chemical reactor [23],

Here, x denotes the fractional conversion of quantities in a chemical reactor. If x is less than zero or greater than one, the fractional conversion has no physical meaning. Hence, x is taken to be bounded in the region $0 \le x \le 1$. Moreover, the desired root is .

Example 4.

The path traversed by an electron in the air gap between two parallel plates considering the multi-factor effect is given by

where and are the position and velocity of the electron at time , m and are the mass and the charge of the electron at rest and is the RF electric field between the plates. If particular parameters are chosen, Equation (37) can be simplified as

The desired root of Equation (38) is .

Example 5.

The following nonlinear equation results from the embedment x of a sheet-pile wall,

The required zero of Equation (39) is .

We have also implemented our proposed schemes given by Equations (8) and (16) on the above-mentioned problems. Table 3 and Table 4 demonstrate the corresponding results. Further, Table 1 demonstrates COC for our proposed method without memory given by Equation (8) (, and ), method given by Equation (25) denoted by , the method given by Equation (26) denoted by and Halley’s method given by Equation (27) denoted by , respectively. Table 2 demonstrates COC for our proposed method with memory given by Equation (16) (, and ), method given by Equation (28) denoted by and the method given by Equation (29) denoted by , respectively.

Table 3.

Comparison of different methods without memory for real-life problems.

Table 4.

Comparison of different methods with memory for real life problems.

Remark 2.

5. Basins of Attraction

The basins of attraction of the root of are the sets of all initial points in the complex plane that converge to on the application of the given iterative scheme. Our objective is to use basins of attraction to compare several root-finding iterative methods in the complex plane in terms of their convergence and stability.

On this front, we have taken a grid of the rectangle . A color is assigned to each point on the basis of the convergence of the corresponding method starting from to the simple root and if the method diverges, a black color is assigned to that point. Thus, distinct colors are assigned to the distinct roots of the corresponding problem. It is decided that an initial point converges to a root when . The point is said to belong to the basins of attraction of . Likewise, the method beginning from the initial point is said to diverge if no root is located in a maximum of 25 iterations. We have used MATLAB R2021a software [24] to draw the presented basins of attraction.
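The procedure described above can be sketched in pure Python as follows. Only the cap of 25 iterations is taken from the text; the test function $z^3 - 1$, the rectangle $[-2, 2] \times [-2, 2]$, the grid size, the tolerance $10^{-4}$ and the use of Newton's method as the iteration are all assumptions chosen for illustration. The label $-1$ plays the role of the black color.

```python
import cmath
import math

# Hypothetical test problem: f(z) = z**3 - 1, whose zeros are the cube roots of unity.
ROOTS = [cmath.exp(2j * math.pi * k / 3) for k in range(3)]


def newton_step(z):
    """One Newton step for z**3 - 1; returns None where the derivative vanishes."""
    dz = 3 * z * z
    if dz == 0:
        return None
    return z - (z ** 3 - 1) / dz


def basins(step=newton_step, roots=ROOTS, n=41, lo=-2.0, hi=2.0,
           tol=1e-4, max_iter=25):
    """Label every grid point with the index of the root it reaches, or -1.

    Returns (labels, average iterations per point, percentage of failed points).
    """
    labels, total_iter, failed = [], 0, 0
    for r in range(n):
        y = lo + (hi - lo) * r / (n - 1)
        row = []
        for c in range(n):
            z = complex(lo + (hi - lo) * c / (n - 1), y)
            label, k = -1, 0
            while True:
                hits = [i for i, root in enumerate(roots) if abs(z - root) < tol]
                if hits:
                    label = hits[0]
                    break
                if k == max_iter:
                    break  # no root located within the iteration cap
                z_next = step(z)
                if z_next is None:
                    break  # the step is undefined at this point
                z, k = z_next, k + 1
            row.append(label)
            total_iter += k
            failed += label == -1
        labels.append(row)
    points = n * n
    return labels, total_iter / points, 100.0 * failed / points
```

Passing a different `step` function and root list lets the same loop be reused for other methods and test problems; the `labels` array can then be mapped to colors with any plotting tool.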

Furthermore, Table 5 and Table 6 list the average number of iterations denoted by Avg_Iter, percentage of non-converging points denoted by and the total CPU time taken by the methods to generate the basins of attraction.

Table 5.

Comparison of different methods without memory in terms of Avg_Iter, and CPU time.

Table 6.

Comparison of different methods with memory in terms of Avg_Iter, and CPU time.

To carry out the desired comparisons, we have considered the test problems given below:

Problem 1.

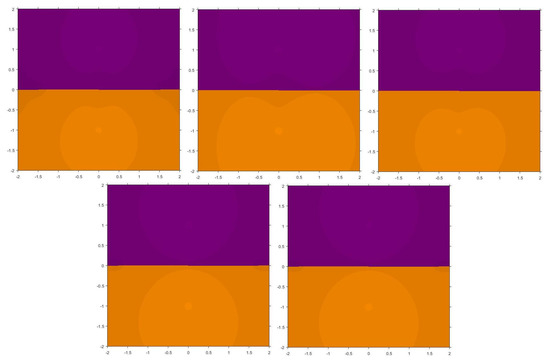

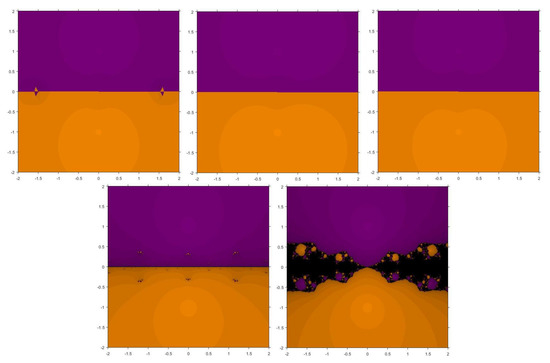

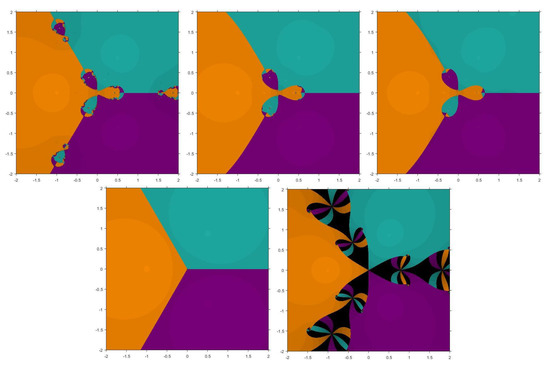

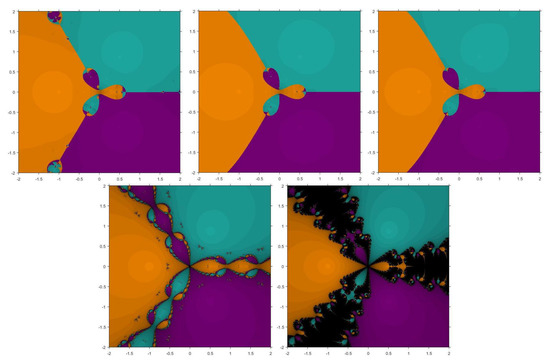

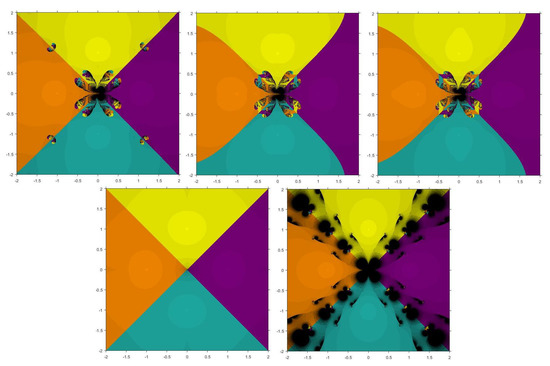

The first function considered is . The roots of this function are i, . The basins corresponding to our proposed method and the mentioned existing methods are shown in Figure 1 and Figure 2. It is observed that , , , , and converge to the root with no diverging points but , , and have some points painted as black.

Figure 1.

Basins of attraction for , , , , , respectively for .

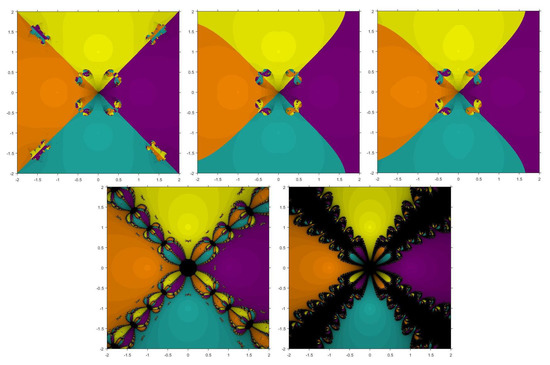

Figure 2.

Basins of attraction for , , , , , respectively for .

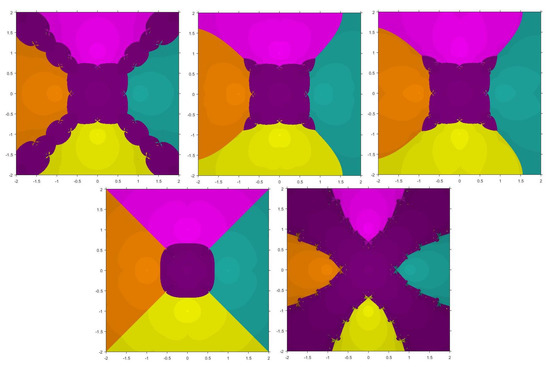

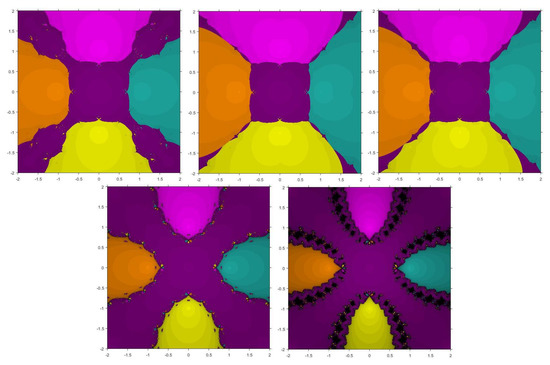

Problem 2.

Second function taken is having roots , , . Figure 3 and Figure 4 show the basins for in which it can be seen that , and have wider regions of divergence.

Figure 3.

Basins of attraction for , , , , , respectively for .

Figure 4.

Basins of attraction for , , , , , respectively for .

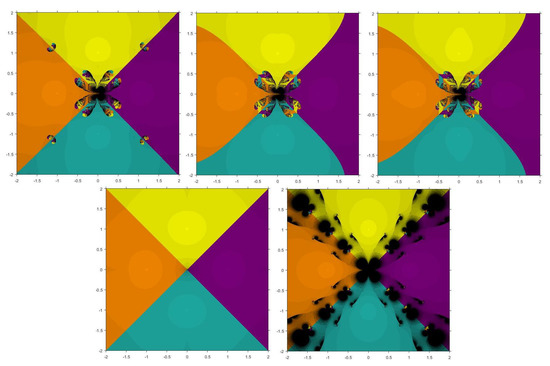

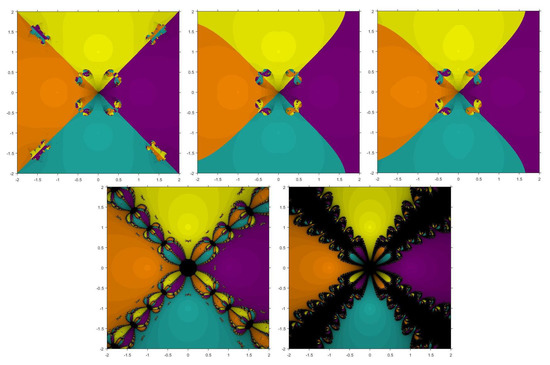

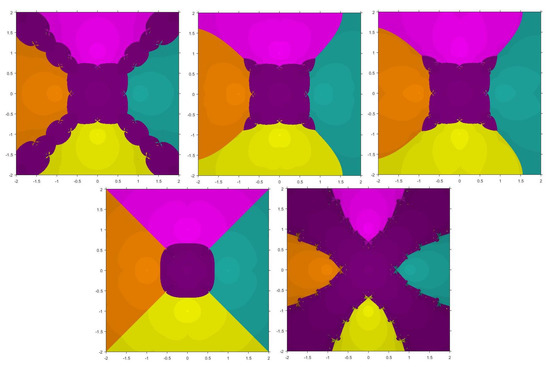

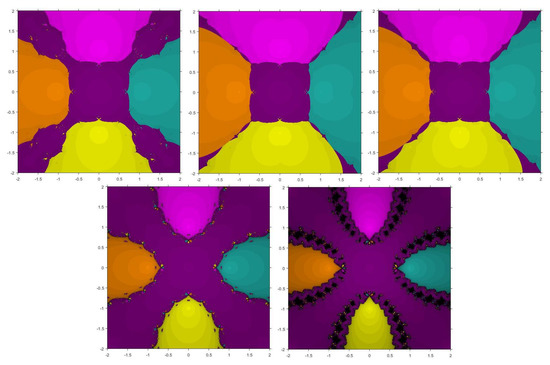

Problem 3.

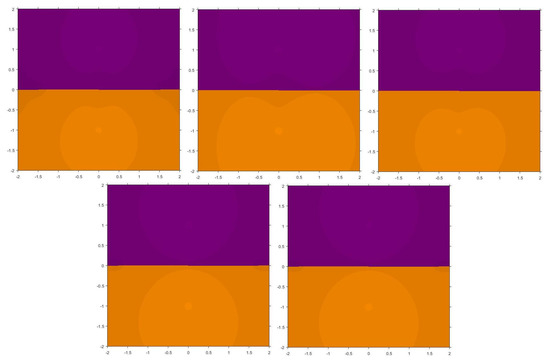

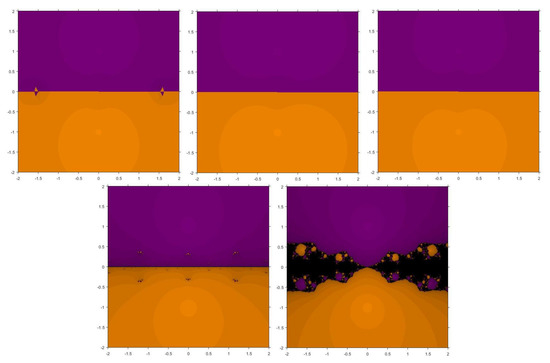

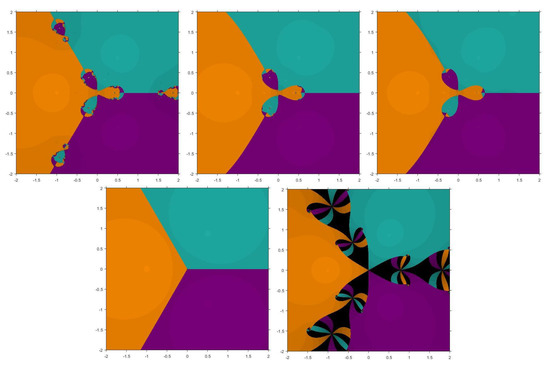

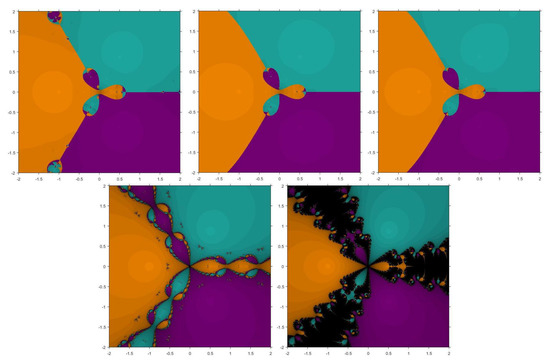

The third function considered is having roots , . Figure 5 and Figure 6 show that and have smaller basins. Although , , and have some diverging points, yet they converge faster than the existing methods.

Figure 5.

Basins of attraction for , , , , , respectively for .

Figure 6.

Basins of attraction for , , , , , respectively for .

Problem 4.

The fourth function we have taken is whose roots are 0, , . Figure 7 and Figure 8 show that , , , , , and depict convergence to the root for any initial point as they have no diverging points.

Figure 7.

Basins of attraction for , , , , , respectively for .

Figure 8.

Basins of attraction for , , , , , respectively for .

Remark 3.

Figures 1–8 and Tables 5 and 6 show that the existing methods have a slightly higher average number of iterations per point, since they have more divergent points than the proposed method. In particular, the proposed method with memory has a negligible number of divergent points in the specified mesh and hence larger basins of attraction. Consequently, the proposed method with memory converges faster than the existing methods.

6. Conclusions

A new Hansen–Patrick-type method with memory has been introduced. The proposed method has a higher order of convergence than the Hansen–Patrick and Traub methods, and this increase is achieved with no additional functional evaluation. For verification, we carried out numerical experiments on several test functions and real-life problems; the results confirm the improved convergence order. Furthermore, the basins of attraction of the proposed and some existing methods indicate that, over the specified region, the proposed method converges to the desired zeros for most initial points and does so faster. We conclude that the proposed method is a competitive choice for solving nonlinear equations.

Author Contributions

M.K.: Conceptualization; Methodology; Validation; H.S.: Writing—Original draft preparation; M.K. and R.B.: Writing—Review and Editing, Supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This project was funded by the Deanship of Scientific Research (DSR) at King Abdulaziz University, Jeddah, Saudi Arabia, under grant no. (KEP-MSc-58-130-1443). The authors, therefore, acknowledge with thanks DSR for technical and financial support.

Acknowledgments

The authors gratefully acknowledge DSR for technical and financial support. Technical support provided by the Seed Money Project (TU/DORSP/57/7290) by TIET, Punjab, India is also acknowledged with thanks.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Traub, J.F. Iterative Methods for the Solution of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA, 1964.

2. Amat, S.; Busquier, S.; Gutiérrez, J.M. Geometric constructions of iterative functions to solve nonlinear equations. J. Comput. Appl. Math. 2003, 157, 197–205.

3. Argyros, I.K. A note on the Halley method in Banach spaces. Appl. Math. Comput. 1993, 58, 215–224.

4. Argyros, I.K.; Kansal, M.; Kanwar, V.; Bajaj, S. Higher-order derivative-free families of Chebyshev–Halley type methods with or without memory for solving nonlinear equations. Appl. Math. Comput. 2017, 315, 224–245.

5. Cordero, A.; Moscoso-Martinez, M.; Torregrosa, J.R. Chaos and stability in a new iterative family for solving nonlinear equations. Algorithms 2021, 14, 101.

6. Gutiérrez, J.M.; Hernández, M.A. A family of Chebyshev–Halley type methods in Banach spaces. Bull. Austral. Math. Soc. 1997, 55, 113–130.

7. Jain, P.; Chand, P.B. Derivative free iterative methods with memory having R-order of convergence. Int. J. Nonlinear Sci. Numer. Simul. 2020, 21, 641–648.

8. Weerakoon, S.; Fernando, T.G.I. A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

9. Hansen, E.; Patrick, M. A family of root finding methods. Numer. Math. 1977, 27, 257–269.

10. Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

11. Sharma, J.R.; Guha, R.K.; Sharma, R. Some variants of Hansen–Patrick method with third and fourth order convergence. Appl. Math. Comput. 2009, 214, 171–177.

12. Kansal, M.; Kanwar, V.; Bhatia, S. New modifications of Hansen–Patrick’s family with optimal fourth and eighth orders of convergence. Appl. Math. Comput. 2015, 269, 507–519.

13. Zheng, Q.; Li, J.; Huang, F. An optimal Steffensen-type family for solving nonlinear equations. Appl. Math. Comput. 2011, 217, 9592–9597.

14. Ortega, J.M.; Rheinboldt, W.C. Iterative Solutions of Nonlinear Equations in Several Variables; Academic Press: New York, NY, USA, 1970.

15. Sihwail, R.; Solaiman, O.S.; Ariffin, K.A.Z. New robust hybrid Jarratt–Butterfly optimization algorithm for nonlinear models. J. King Saud Univ. Comput. Inform. Sci. 2022; in press.

16. Sihwail, R.; Solaiman, O.S.; Omar, K.; Ariffin, K.A.Z.; Alswaitti, M.; Hashim, I. A hybrid approach for solving systems of nonlinear equations using harris hawks optimization and Newton’s method. IEEE Access 2021, 9, 95791–95807.

17. King, R.F. A family of fourth order methods for nonlinear equations. SIAM J. Numer. Anal. 1973, 10, 876–879.

18. Soleymani, F.; Lotfi, T.; Tavakoli, E.; Haghani, F.K. Several iterative methods with memory using self-accelerators. Appl. Math. Comput. 2015, 254, 452–458.

19. Campos, B.; Cordero, A.; Torregrosa, J.R.; Vindel, P. Stability of King’s family of iterative methods with memory. J. Comput. Appl. Math. 2017, 318, 504–514.

20. Jay, I.O. A note on Q-order of convergence. BIT Numer. Math. 2011, 41, 422–429.

21. Jain, D. Families of Newton-like method with fourth-order convergence. Int. J. Comput. Math. 2013, 90, 1072–1082.

22. Bradie, B. A Friendly Introduction to Numerical Analysis; Pearson Education Inc.: New Delhi, India, 2006.

23. Shacham, M. Numerical solution of constrained nonlinear algebraic equations. Int. J. Numer. Methods Eng. 1986, 23, 1455–1481.

24. Zachary, J.L. Introduction to Scientific Programming: Computational Problem Solving Using Maple and C; Springer: New York, NY, USA, 2012.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).