Abstract

As third-generation neural network models, spiking neural P systems (SNP systems) offer distributed parallel computing with good performance. Artificial neural networks, which combine an understanding of biological neural networks with mathematical models, have also received widespread attention in recent years because of their powerful information processing capabilities. However, SNP systems have shortcomings in numerical calculation. To address the limitations of current SNP systems in processing real-valued data, this paper draws on the structure and data processing methods of neural networks and combines them with membrane computing to propose spiking neural membrane computing models (SNMC models). In SNMC models, the state of each neuron is a real number, and each neuron contains an input unit and a threshold unit. A new form of rule with time delay is also introduced for neurons: spike consumption is controlled by a nonlinear production function, and the produced spike is determined by comparing the value computed by the production function with a critical value. In addition, the Turing universality of the SNMC model as a number generator and acceptor is proved.

1. Introduction

Membrane computing, an important branch of natural computing, studies computing models inspired by the structure, function, and behavior of biological cells. At present, there are three main types of membrane computing models: cell-like P systems, tissue-like P systems, and neural P systems. In the past few years, research on neural P systems has mostly focused on spiking neural P systems (SNP systems), a type of computing model inspired by the way neurons in biological neural networks process information in the form of spikes. In 2006, Ionescu et al. first proposed the concept of spiking neural P systems [1], which have received extensive attention in recent years as third-generation neural network models. Artificial neural networks imitate the information processing function of the human brain's nervous system, using network topology to simulate how it processes complex information. They combine an understanding of biological neural networks with mathematical models to achieve powerful information processing capabilities, and they have a wide range of applications in pattern recognition, information processing, and image processing. Both membrane computing and artificial neural networks are thus inspired by biological neural networks and, in a certain sense, are connected.

The SNP systems have accumulated rich research results in theory and application, especially in theoretical research. By changing the rules, objects, synapses, and structures to expand systems, many new SNP systems have been established. The changes in rules are mainly reflected in the form of the rules, such as SNP systems with white hole rules [2], SNP systems with communication rules [3], SNP systems with polarizations [4], asynchronous SNP systems [5], SNP systems with inhibitory rules [6], SNP systems with astrocytes [7], nonlinear SNP systems [8], and numerical SNP systems [9]. Inspired by the inhibitory spike effect of communication between neurons, the concept of the anti-spike was introduced, and a type of SNP system with anti-spike was proposed [10,11,12]. With expansions on synapses, there are systems such as SNP systems with weights on synapses [13], SNP systems with multiple channels [14], SNP systems with the rule on the synapse [15,16], and SNP systems with scheduled synapses [17,18]. The improvement of the structure mainly lies in making the structure of the membrane system dynamically changeable, for example, self-organizing SNP systems with variable synapses [19] and SNP systems with neuron division and budding [20].

SNP systems have a well-distributed network structure, powerful parallel computing ability, dynamic characteristics, and nondeterminism. These characteristics give SNP systems good application prospects for many practical problems. Researchers have shown that SNP systems can be used for pattern recognition [21,22,23,24,25], for optimization problems in combination with algorithms [26,27,28], for clustering [29], automatic design [30], fault diagnosis [31,32,33,34], and arithmetic and logic operations [35,36,37,38], and that they can be implemented in software and hardware [39,40].

At present, research on membrane computing focuses mainly on theory, and further research on its applications is needed. How to use membrane computing to solve practical problems is therefore not only an important topic in membrane computing research but is also significant for the theoretical development of membrane computing and neural networks. Membrane computing resembles artificial neural networks in many respects; for example, both are highly parallel. Some scholars are therefore working on combining membrane computing with neural networks. For example, based on the self-organizing and self-adaptive characteristics of artificial neural networks, SNP systems with a plastic structure have been proposed [41,42,43,44]. Inspired by Eckhorn’s neuron model, coupled neural P systems have been proposed [45]. Inspired by the intersecting cortical model, dynamic threshold neural P systems have been proposed [46]. One application is the use of neural networks and neural P systems for image processing [47,48,49,50]. Notably, existing combinations of neural networks and neural P systems are either theoretical improvements based on a single characteristic of neural networks or improvements in rule structure based on the operating mechanism of a specific network model. Such work has research value and promise for the development of membrane computing, but further research is still needed.

Therefore, both the theory and application of membrane computing need to be further expanded. Artificial neural networks are currently widely used in classification, image processing, and pattern recognition, but there are few studies on membrane computing dealing with these problems. If they can be combined, the theory and application research of membrane computing can be further expanded. In this paper, based on the structure of the neural network and data processing method, combining it with membrane computing, spiking neural membrane computing models (SNMC models) are proposed. SNMC models retain the distributed parallel computing of membrane computing and also have the method and structure of data processing by artificial neural networks, which provides a new dynamic evolution model and enriches the computing model for membrane computing.

Although SNP systems have made great progress in recent years, they still have room for improvement, especially in data processing. In computer engineering and other computational fields, numerical information processing is important work. Traditional SNP systems, however, treat the number of spikes as symbolic data, which makes it difficult to process large amounts of numerical information. In the SNMC model, although the object is still a spike, the production function makes it possible to process numerical information.

In this paper, inspired by the MP neuron model, SNMC models are proposed. The SNMC model contains two data units and rules with a production function. The data units hold real values. The rules control the activation of neurons, and their formulation is inspired by a nonlinear activation function. The main difference between the SNMC model and an artificial neural network is that data flow in the SNMC model is carried out by rules and objects, whereas an artificial neural network computes only through mathematical models. The differences between SNMC models and existing SNP systems are as follows.

- (1) The forms of the rules are different: SNMC rules contain production functions. In addition, each neuron contains two data units, holding the input value and the threshold value, whereas SNP systems record only the number of spikes as an integer.

- (2) The execution steps of the rules are different. When a rule is executed, SNMC models go through production and comparison steps.

- (3) The synapses connecting neurons in SNMC models are divided into excitatory and inhibitory synapses, whose weights are positive and negative, respectively. This can be interpreted as follows: if a spike passes through an inhibitory synapse, the spike becomes negatively charged.

The structure of the rest of this paper is as follows. In Section 2, we give the concepts of SNP systems and the MP model. In Section 3, the definition of a new type of neural membrane computing model, called the SNMC model, is given; a detailed explanation of the definition is also given, and the working process of the model is explained through an example. In Section 4, through a simulation of a register machine, the Turing universality of the SNMC model is proven in the generating mode and the accepting mode, respectively. Finally, conclusions and future work are given in Section 5.

2. Related Works

In this section, SNP systems and the general mathematical model of the neural network are introduced. Some basic notions of membrane computing are also given.

2.1. Spiking Neural P Systems

Definition 1.

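An SNP system of degree $m \ge 1$ is regarded as a tuple

$$\Pi = (O, \sigma_1, \sigma_2, \ldots, \sigma_m, syn, in, out),$$

where

- (1) $O = \{a\}$ is the alphabet, and $a$ is a spike included in neurons;

- (2) $\sigma_1, \sigma_2, \ldots, \sigma_m$ represents neurons with the form $\sigma_i = (n_i, R_i)$, $1 \le i \le m$, where

- (a) $n_i \ge 0$ is the number of spikes in neuron $\sigma_i$;

- (b) $R_i$ is the finite set of rules, including spiking rules and forgetting rules. The form of spiking rules is $E/a^c \to a^p; d$, $c \ge p \ge 1$, where $d \ge 0$ indicates the time delay and $E$ indicates the regular expression over the alphabet $O$. The form of forgetting rules is $a^s \to \lambda$, $s \ge 1$. Additionally, $\lambda$ indicates that the neuron is empty, without spikes.

- (3) $syn \subseteq \{1, 2, \ldots, m\} \times \{1, 2, \ldots, m\}$ represents synapses that connect neurons. Additionally, $(i, j)$ indicates the synapse between neuron $\sigma_i$ and neuron $\sigma_j$, where $i \ne j$;

- (4) $in$ is the input neuron;

- (5) $out$ is the output neuron.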

An SNP system can be regarded as a digraph without self-loops, where V is the set of vertices (neurons) and A is the set of arcs (synapses). Spikes and rules are contained in neurons, and the number of spikes changes according to the rules in the neuron. If a spiking rule $E/a^c \to a^p; d$ is activated, the neuron contains at least $c$ spikes; $c$ spikes are consumed, and $p$ spikes are produced and sent to the connected neurons after $d$ time units. The parameter $d$ is the delay: the neuron is closed and refuses to accept external spikes until $d$ time units have passed. For instance, assume $d = 2$ and the rule in neuron $\sigma_i$ fires at step $t$; then $\sigma_i$ is closed in steps $t$ and $t+1$. The neuron reopens at step $t+2$ and receives spikes at the next step.

If a forgetting rule $a^s \to \lambda$ is activated, $s$ spikes are removed from the neuron. The input neuron reads spikes from the environment, and the output neuron outputs the results computed by the system.
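The closing behavior caused by a delay can be illustrated with a minimal Python sketch. The function names `closed_steps` and `reopen_step` and the concrete step numbers are assumptions introduced for illustration only; the sketch assumes the convention described above, in which a rule that fires at some step with a delay of d time units keeps the neuron closed for d consecutive steps starting at the firing step.

```python
from typing import List


def closed_steps(fire_step: int, delay: int) -> List[int]:
    """Steps during which the neuron is closed after a delayed rule fires."""
    return list(range(fire_step, fire_step + delay))


def reopen_step(fire_step: int, delay: int) -> int:
    """First step at which the neuron is open again."""
    return fire_step + delay


# A rule with delay 2 that fires at step 5 (illustrative values).
print(closed_steps(5, 2))  # [5, 6]
print(reopen_step(5, 2))   # 7
```

With a delay of 0 the list of closed steps is empty, which corresponds to a rule without delay.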

Register machines are known to characterize NRE, the family of recursively enumerable sets of numbers, and are therefore equivalent in computing power to Turing machines. In the universality proofs below, NRE is characterized mainly by simulating a register machine, denoted as a tuple $M = (m, H, l_0, l_h, I)$. Here, $m$ is the number of registers, $H$ is the set of instruction labels, $l_0$ is the start instruction, $l_h$ is the halting instruction, and $I$ is the instruction set. Each label in $H$ corresponds to exactly one instruction in $I$. The register machine contains the following three forms of instructions:

- (1) ADD instructions, such as $l_i: (\mathrm{ADD}(r), l_j, l_k)$, mean that the number stored in register $r$ is increased by 1, and the next instruction is chosen nondeterministically from $l_j$ and $l_k$.

- (2) SUB instructions, such as $l_i: (\mathrm{SUB}(r), l_j, l_k)$, have two outcomes depending on the number in register $r$. If the value stored in register $r$ is greater than 0, it is decreased by 1, and the next instruction executed is $l_j$. If the value stored in register $r$ is equal to 0, no operation is performed on $r$, and the next instruction executed is $l_k$.

- (3) The halting instruction $l_h: \mathrm{HALT}$ is used to halt the calculation.
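The three instruction forms above can be sketched as a small interpreter. This is a minimal illustration, not part of the proposed model: the function name `run_register_machine`, the tuple encoding of instructions, the label strings, and the step limit are all assumptions made for the example. The sample program moves the content of register 2 into register 1; both branches of its ADD instruction lead to the same label, so the run is deterministic.

```python
import random


def run_register_machine(program, start, halt, registers, max_steps=10_000, rng=None):
    """Run a register machine and return the final register contents.

    program maps a label to ("ADD", r, l_j, l_k) or ("SUB", r, l_j, l_k).
    """
    rng = rng or random.Random(0)
    regs = dict(registers)
    label = start
    for _ in range(max_steps):
        if label == halt:
            return regs
        op, r, l_j, l_k = program[label]
        if op == "ADD":
            regs[r] = regs.get(r, 0) + 1
            label = rng.choice([l_j, l_k])  # nondeterministic choice of successor
        elif op == "SUB":
            if regs.get(r, 0) > 0:
                regs[r] -= 1
                label = l_j                 # register was nonzero
            else:
                label = l_k                 # register was zero, nothing subtracted
        else:
            raise ValueError(f"unknown instruction {op!r}")
    raise RuntimeError("step limit reached before halting")


# Hypothetical program: move the content of register 2 into register 1.
program = {
    "l0": ("SUB", 2, "l1", "lh"),
    "l1": ("ADD", 1, "l0", "l0"),
}
result = run_register_machine(program, "l0", "lh", {1: 0, 2: 3})
print(result)  # {1: 3, 2: 0}
```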

2.2. Neural Network

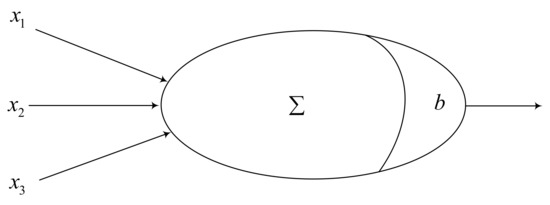

From a biological point of view, a neuron can be regarded as a small processing unit. Additionally, the neural network of the brain is made up of many neurons connected in a certain way. The simplified mathematical model of neurons is shown in Figure 1. The representation of the model can be regarded as Formula (1), which indicates the sum of input of neuron

where is the weight between neuron and neuron , is the input vector that comes from neuron , and is the threshold of neuron , the value of which can be set to positive or negative. In this way, it indicates that the neuron activates when the signal received by the neuron is greater than the threshold.

Figure 1. The structure of the MP neuron model.

This neuron model is called the MP neuron model. It is an abstract, simplified model constructed according to the structure and working principle of biological neurons. In our proposed models, its activation function is taken to be a nonlinear binary function, shown as Formula (2).
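As a rough illustration of the MP neuron described in this subsection, the following Python sketch computes the weighted sum of the inputs minus the threshold and applies a binary activation. The function name `mp_neuron` and the sample values are assumptions for the example, and the choice to output 1 only when the total is strictly greater than 0 is likewise an assumption, made to match the critical value 0 used later for SNMC models.

```python
def mp_neuron(inputs, weights, threshold):
    """Binary MP neuron: 1 if the weighted input exceeds the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs)) - threshold
    return 1 if total > 0 else 0


# Two inputs, one excitatory (weight 2) and one inhibitory (weight -1).
print(mp_neuron([1, 1], [2, -1], threshold=0))  # 1, since 2 - 1 - 0 = 1 > 0
print(mp_neuron([1, 1], [2, -1], threshold=1))  # 0, since 2 - 1 - 1 = 0
```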

3. Spiking Neural Membrane Computing Models

In this section, inspired by artificial neural networks, a new variant membrane computing model, called the spiking neural membrane computing model, is proposed. It is a combined model of neural network and spiking neural P systems and contains multiple neurons. Neurons are connected by synapses, and the synapses have weights, where the weights represent the relationship between neurons. To facilitate understanding and expression, we use an expression similar to SNP systems.

3.1. Definition

Definition 2.

The tuple of an SNMC model with a degreeis represented as

where

- (1)

- is the alphabet, and refers to the spike included in neurons.

- (2)

- is the set of neurons, and neuron has the form , where

- (a)

- is input data in neuron ;

- (b)

- is a threshold of neuron ;

- (c)

- is the production function, which is to compute the total real value of neuron . The total real value is the weighted sum of all inputs minus the threshold;

- (d)

- is the set of firing rules, with the form , . If , neuron is not producing spikes, denoted as .

- (3)

- is the weight on the synapse, which can be positive or negative. A positive weight means an excitatory synapse, and a negative weight means an inhibitory synapse.

- (4)

- is the set of synapses.

- (5)

- and are the input neuron and the output neuron, respectively. The input neuron converts the input data into spikes containing real values. The output neuron outputs the input data as a binary string composed of 0s and 1s.

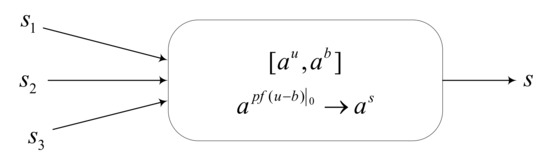

A spiking neural membrane computing model can be regarded as a digraph structure without self-circulation, where the nodes of the graph are represented by neurons, and the arcs represent the synaptic connections between neurons, as shown in Figure 2. The definition and description of SNMC models are given below. The neuron contains two kinds of data: a real input value and a threshold value. The way of transmitting data is determined by rules and synaptic connections.

Figure 2. The neuronal structure of an SNMC model.

How the SNMC model works is explained here. There are two types of synapses: one is the inhibitory synapse, and the other is the excitatory synapse. This can be embodied by the value of weights, where a positive weight means an excitatory synapse, and a negative weight means an inhibitory synapse. It also indicates the relationship between neurons. For example, the weight between and is , which means the synapse between them is an excitatory synapse, and neuron receives twice the value that neuron outputs.

There are two types of data units in each neuron: the input data unit and the threshold unit. The threshold can be 0, which means that the neuron has no threshold. Notably, the neurons in our proposed model contain spikes whose values are real numbers. The input data of a neuron is the linking input data plus the original data. The linking input data come from the connected neurons, and the original data are the values already present in the neuron itself. In this way, the real value of the spike in a neuron is the sum of the weighted values sent by the connected neurons plus the original value, as in Formula (3), which is expressed in terms of the synaptic weights between the neurons, the output values of the sending neurons, and the original data of the receiving neuron.

For the convenience of calculation in this article, only integers are involved, which can be interpreted as “integer spikes” in this paper. For instance, the real value 2 is shown as , which can be explained as two spikes in a neuron. Additionally, is explained by two spikes with a negative charge in the neuron. A negatively charged spike can annihilate one spike.
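The input value of a neuron and the annihilation of positively and negatively charged spikes can be sketched as a single weighted sum. The function name `input_value` and the sample weights are assumptions introduced for illustration; a negative weight stands for an inhibitory synapse, so a spike passing through it contributes a negatively charged spike.

```python
def input_value(weights, outputs, original=0):
    """Weighted sum of the values sent by connected neurons plus the original data."""
    return sum(w * y for w, y in zip(weights, outputs)) + original


# Two spikes arrive through an excitatory synapse (weight 2) and one negatively
# charged spike through an inhibitory synapse (weight -1): one spike remains.
print(input_value([2, -1], [1, 1]))        # 1
# The same excitatory input added to two spikes already in the neuron.
print(input_value([2], [1], original=2))   # 4
```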

The output state of the neuron is determined by the rules. At each step, each neuron contains at least one firing rule. Rules are applied sequentially within a neuron, while different neurons work in parallel. If, at some moment, a neuron has more than one applicable rule, it nondeterministically chooses one of them to apply. The way the rules are executed and interpreted is given below.

The rule contains two parts, including the production function and the outputting function. The production function is used to calculate the total real value of the current neuron, and the total effect on neuron is the input data minus the threshold, which will cause the state change of neuron . In addition, the neuron has a critical value, which is set to 0. Therefore, the execution steps of rules are divided into three steps: production, comparison, and outputting.

- (1)

- Production step. When neuron receives weighted spikes with real value from connected neurons at time , and the threshold is , the production function works to calculate the total real value by Formula (4).

- (2)

- Comparison step. In this step, the result computed by Formula (4) is compared with the critical value 0. It determines whether the real value output of the next step is 1 or 0.

- (3)

- Outputting step. According to the result of the comparison step, if it has , then , and the rule can be applied to output a spike with the real value of 1. If it has , then and the rule fires. Therefore, no spike can be sent to the connected neurons.
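The three steps above can be sketched in Python as follows. The class name `Neuron` and the method `fire` are hypothetical names introduced for illustration. The sketch ignores the regular-expression condition and time delays, outputs 1 only when the production value is strictly greater than the critical value 0, and resets the input unit after the outputting step while leaving the threshold unchanged, as described later in this section.

```python
from dataclasses import dataclass


@dataclass
class Neuron:
    input_value: float = 0.0
    threshold: float = 0.0

    def fire(self) -> int:
        # Production step: total real value is the input minus the threshold.
        total = self.input_value - self.threshold
        # Comparison step: compare the total with the critical value 0.
        spike = 1 if total > 0 else 0
        # Outputting step: the input is consumed, the threshold is kept.
        self.input_value = 0.0
        return spike


n = Neuron(input_value=2, threshold=1)
print(n.fire())                      # 1, since 2 - 1 > 0
print(n.input_value, n.threshold)    # 0.0 1

m = Neuron(input_value=1, threshold=1)
print(m.fire())                      # 0, since 1 - 1 is not greater than 0
```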

The firing of rules requires two conditions: (1) Assume the number of spikes contained in neuron is , belongs to the language set represented by the regular expression , and the number of spikes contained in neuron is greater than or equal to the number of spikes consumed, , i.e., . (2) The neuron can only be activated when it receives the signal sent by the connected neurons.

Additionally, and after the rule refers to time delay. means the time neuron receives spikes and represents the rule execution time (from the execution of the production steps to the outputting step). Before a delay of times, the neuron is in a closed state. If there is no delay, then the firing rule is abbreviated to . Moreover, the neuron fires and contains a spike with the real value of ; then, the real input value of the input unit is reset to 0, and the threshold unit is unchanged after the outputting step. In other words, once the rules fires in the neuron, the input value in the neuron is consumed. It is noted that if the input value of the SNMC model is a natural number, and there is no threshold in neurons and the weights are positive integers, then the SNP systems belong to a special case of our proposed SNMC models.

At each step, the configuration of the system is composed of the real values of input units and threshold units of all neurons, denoted as , where is the number of neurons. The initial configuration is denoted as . With the application of firing rules, the configuration of the system at a certain time is also changed. The transition from configuration at time to the configuration at time is denoted as . When the calculation reaches a certain configuration and there is no rule that can be activated, then the calculation stops, and this configuration is denoted as . The computational process of the system can be regarded as a transition of a series of configurations, which is ordered and finite, i.e., from the initial configuration to halting configuration .

When an SNMC model is working in a generating mode in the initial configuration, all the neurons in the model are empty except for neuron , which means that all registers are empty except for the number stored in Register 1. The calculation starts from instruction , stops when the end instruction is reached, and then the number stored in Register 1 is the generated number. The calculation result is associated with the firing time of neuron , which is calculated by the time interval between the two nonzero values, that is, the time it takes neuron to send the two spikes to the environment. Suppose that neuron sends the spike to the environment for the first time at time ; the environment receives the second spike coming from at time , and then the calculation result is . In addition, when an SNMC model works in the accepting mode, an input neuron is added to read the values from the environment and all neurons are empty at the beginning. The number is fed into the system in the form of an encoded spike train, and it means the time interval between the two firings of is the input number. For instance, the input number is , , and it is encoded as a spike train , where 1 represents spike and 0 is empty. The interval between two spikes is , which is the input number.
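The relation between a number and the interval between two spikes can be sketched as an encode and decode pair. The function names `encode` and `decode` are hypothetical, and the exact layout of the spike train is an assumption: the sketch takes the train for a number n to be one spike, n − 1 empty steps, and a second spike, so that the two firing times differ by n.

```python
def encode(n):
    """Encode n >= 1 as a spike train: 1, then n - 1 zeros, then 1 (assumed layout)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [1] + [0] * (n - 1) + [1]


def decode(train):
    """Return the interval between the first two spikes in the train."""
    times = [t for t, s in enumerate(train, start=1) if s == 1]
    if len(times) < 2:
        raise ValueError("the train must contain at least two spikes")
    return times[1] - times[0]


train = encode(3)
print(train)          # [1, 0, 0, 1]
print(decode(train))  # 3
```

The same `decode` function also reflects how a generated number is read off in the generating mode, as the interval between the first two spikes sent to the environment.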

The family of all sets of numbers is denoted as , . When , represents the result of the system calculation. Additionally, “2” indicates that only the first two firing times of neuron are considered. When , is the set of all the accepted numbers by . The sets generated and accepted by SNMC models are denoted by , where means two variables contained in each production function, is the number of neurons, and is the number of rules. Notice that when the number of neurons cannot be counted, it is denoted by a symbol, . In the following proof of the computing universality of SNMC models, the NRE, which is a family of numerically computable natural numbers, can be described mainly through simulating the register machine.

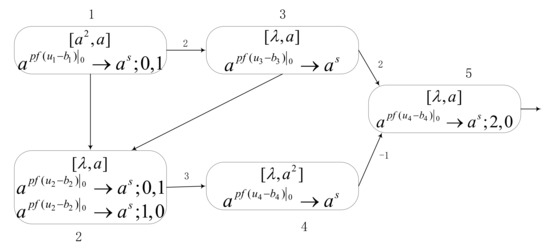

3.2. Illustrative Example

This example consists of 5 neurons and several synapses to explain the workflow of the system , as shown in Figure 3. Neuron contains a real input value 2 and a threshold value 1, and it exists in the neuron in the form of spikes . Suppose that at Time 1, neuron fires since , and a spike is generated at Time 2, that is,. Thus, neuron receives two spikes from neuron . At Time 3, generates one spike because its value is 1. Additionally, neuron contains two rules, of which one is selected for execution indeterminately. Hence, there are two cases that can happen depending on the rule selection in .

Figure 3. An example of the SNMC model. It is an explanation of the model workflow.

- (1)

- Assuming that the rule is applied, neuron receives a spike from at Time 2, and then the production function executes at Time 3. The value is obtained. Hence, at Time 4, neuron produces and sends an empty to neuron . At the same time, neuron also receives one spike from neuron ; the rule is used. Since its value is 0, neuron sends an empty to neuron . The neuron has not received any spikes, so it produces empty at Time 5, and neuron receives two spikes from neuron . At Time 6, its rule in neuron fires and its , so it produces one spike and sends it out at the same time.

- (2)

- Assuming that the rule is used, then neuron is in the closed state before Time 3 and does not receive any spikes. At Time 3, the production function of neuron performs and produces one spike to send to neurons and . Thus, at Time 3, neuron receives two spikes: one from neuron and the other from neuron . At Time 4, since in , it has , and produces a spike and sends it to neuron . Neuron receives three spikes, and at Time 5, its producing function can be calculated as , so . Meanwhile, neuron receives two spikes from neuron and one negative spike from neuron , so neuron contains one spike. In this way, no spike is generated and sent out at Time 6 because of .

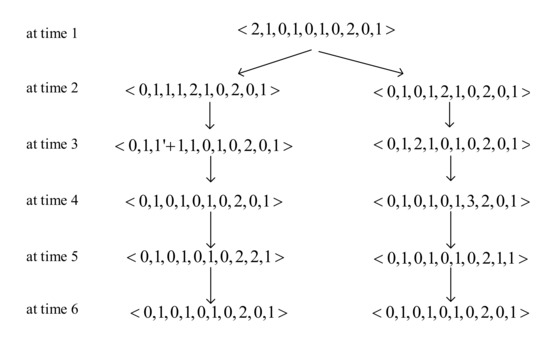

In order to conveniently display the changes of neurons at each time, a graph of configuration is given, as shown in Figure 4. The configuration in this figure is in the order of neuron , , , , and , and it is composed of the input unit and the threshold unit with the form of . When rules are still performing at a certain moment, the corresponding spikes are considered not to be consumed completely, denoted as “”.

Figure 4. The configuration of the example at each time.

4. Turing Universality of SNMC Models

In this section, the Turing universality of SNMC models as number generators and as number acceptors is proved.

4.1. Generating Mode

In generating mode, the most important neuron is contained. In this way, the Turing universality of SNMC models as a generator is investigated by simulating three instructions, including the ADD instruction, the SUB instruction, and the halt instruction. Thus, three modules, named ADD, SUB, and FIN modules, are used for the simulation. Assume that each neuron contains a certain initial threshold. It is stipulated that each neuron corresponding to an instruction has an initial threshold , and each neuron corresponding to the register has an initial threshold .

Theorem 1.

.

Proof of Theorem 1.

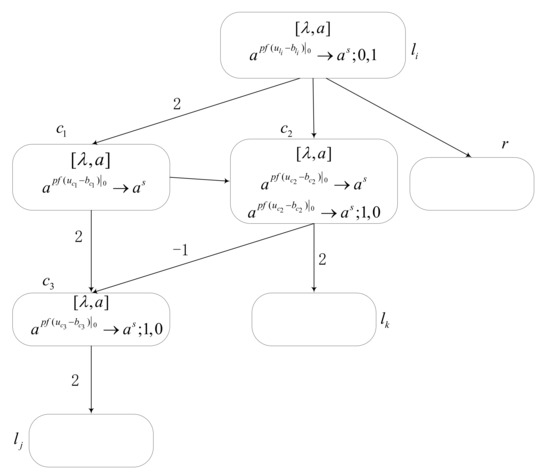

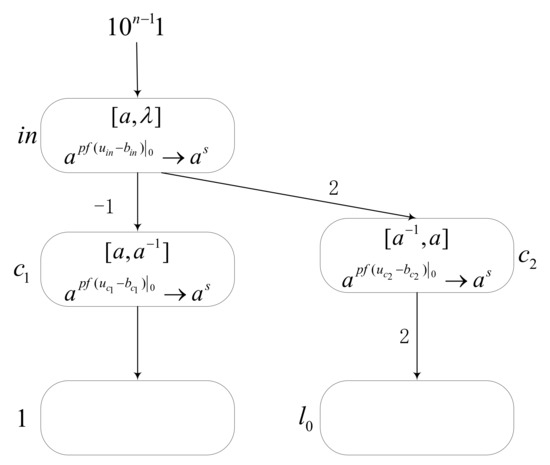

Module ADD is used to simulate ADD instructions , as shown in Figure 5. When register is increased by one, the spike is transmitted indeterminately to or . Assume that the configurations of the module ADD is associated with six neurons , , ,, and , respectively. Assume neuron receives two spikes at time , and the configuration of time is . At time , the rule in fires and the production function starts to compute. It has , thus neuron sends the produced spike to neurons , and respectively, at time . There are two rules within neuron , and the application of one of them is indeterminately selected. Therefore, two cases happen.

Figure 5. Module ADD. Its function is to simulate an ADD instruction.

- (1)

- If the rule is applied, neuron receives two spikes and receives one spike at time . The configuration becomes . In neuron , it has and sends one spike to and at time . But due to the delay of one time in , neuron receives the spike at the next time. Thus, the configuration of time is . At time , the rule fires and the production result in neuron is , such that the neuron produced empty, with in the outputting step. In this way, neuron is empty. Since the time delay in neuron , only receives the spikes sent by neurons and at time , so neuron receives two spikes from in total and . Calculate the value , and one spike is generated. Therefore, neuron receives two spikes at time and .

- (2)

- If the rule in neuron is activated, then at time , since there is the time delay in , only neuron receives two spikes and neuron receives one spike. The configuration of time is . The value in neuron is 1, which is greater than 0; thus, sends out a spike at time . Thus, neuron receives two spikes sent by neurons and at time , and . At the next time, neuron receives two spikes sent by neuron . Additionally, neuron receives two spikes from and one spike with a negative charge from neuron , so neuron has one spike at this time, and the configuration is . Therefore, no spike is sent to neuron in time because of in and .

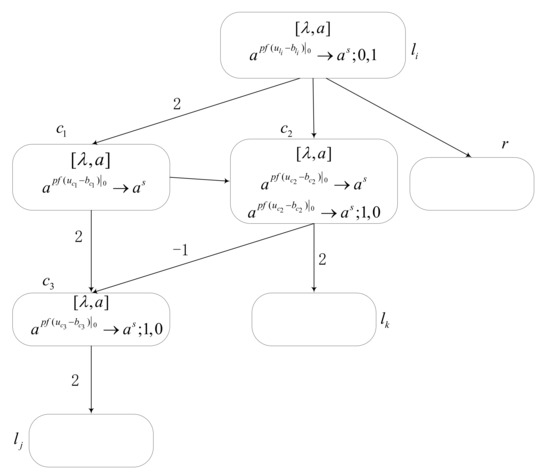

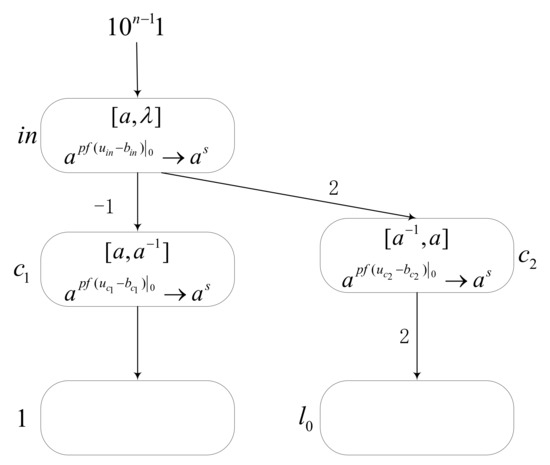

The module SUB, as shown in Figure 6, is used to simulate the SUB instruction in the register machine. The configuration of the module SUB is ; they correspond to the number of input units and threshold units of neurons , , , , , and . Assume that neuron receives two spikes at time , and the configuration is . At time , the production function starts to calculate a value that is equal to 1 (greater than 0), so one spike is generated at time and sent to and . At time , neuron receives two spikes, and neuron receives one spike, but it is unknown whether there is empty in neuron . According to the number of spikes contained in , the operation results are divided into the following two cases:

Figure 6. Module SUB. Its function is to simulate a SUB instruction.

- (1)

- If register of register machine stores a number , it means that neuron contains at least one spike. At time , neuron contains at least two spikes, and so . As its value is 1>0, one spike is generated and sent to neurons and , respectively, at time . At the same time, neuron generates one spike since . Thus, neuron receives two spikes, one from and the other from , and receives one spike because one negatively charged spike and one spike are annihilated. The configuration of time is . At the next time, since of neuron , a spike is generated and two spikes are sent to . Additionally, the value of neuron is 0, so no spike is generated; then, neuron is empty. In this way, the configuration of time is .

- (2)

- Suppose that no number is stored in register at the initial time; that is, neuron is empty. At time , one spike from is received by , and the rule fires. Thus, the configuration is . Since there is only one spike in , and its , neuron produces no spike at time . Meanwhile, the neuron receives one spike from and receives two spikes from . The configuration of time is . At time , the rule in is applied, and the calculation , so no spike is generated. Additionally, according to the rule, generates one spike and neuron obtains two spikes. Therefore, neuron has one spike and neuron is empty. In this way, .

It is notable that despite that it is possible to have multiple SUB instructions operating on the same register, no incorrect simulation of caused by the interference among SUB modules in takes place. Assume that SUB instructions and share the same register , so when the instruction works, we need to ensure that the work of instruction will not be affected in the next work. Assume that the neurons connected to register in instruction have and , which correspond to and shown in Figure 6. According to the simulation of the above module SUB, when there is no number stored in register , neuron does not generate any spike, so it will not affect instruction . When register is not empty, then neuron produces one spike. After passing through the synapse, neuron receives one spike and neuron receives a spike with a negative charge. According to the rules of neurons and , their value does not exceed 0, so no spike is generated. Therefore, instruction is not affected when instruction is simulated. In this way, the simulation of module SUB is proven correct.

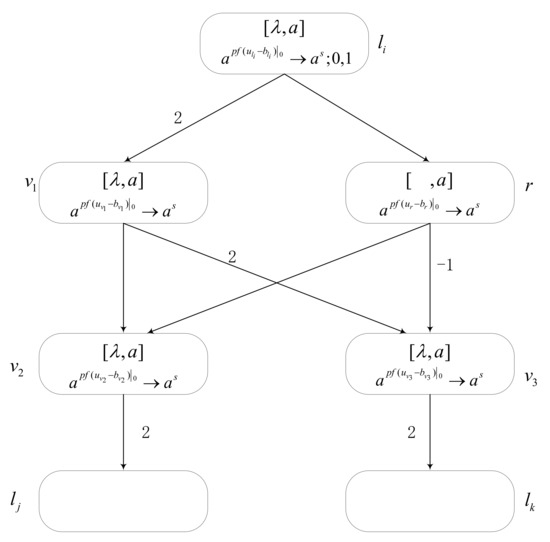

The function of module FIN is to output the computational result (shown in Figure 7). Suppose that the number in Register 1 is , that is, there are spikes in neuron . Additionally, neurons and contain a spike, respectively. Suppose that at time , neuron receives two spikes. As shown in Figure 7, we can see that the configuration is . Therefore, at time , from the value of (), one spike is generated and sent to neurons and . Neurons and both receive two spikes, and their rules are activated. Since the values of and are , at time , both neurons and generate one spike. Neurons and both receive two spikes from neuron , and neuron also receives two spikes from . Hence, at time until the calculation stops, neurons and will always repeat the operation as at time . At time , the rule of neuron is activated, and it has , so one spike is sent to neurons and , respectively. Neuron contains two spikes. Thus, at time , according to the rule in , one spike can be generated and sent to the environment. At the same time, neuron receives a spike obtained from neuron . It is worth noting that neuron always sends one spike every time after time to neuron and until the calculation stops.

Figure 7. Module FIN. Its function is to output the computation result.

At time , neuron receives a spike with a negative charge. Hence, neuron contains spikes at this time. However, according to the rule in neuron , the rule can fire if and only if the number of spikes in neuron is not more than 2. Hence, the rule is not activated until time , when neuron contains only two spikes. At time , since in neuron , it generates a spike and sends it to neuron . At the next step, the rule of fires. Since its value is greater than 0 and the rule execution has a one-step delay, one spike is generated and sent to neuron at time . At this time, neuron also gets a spike from neuron , so it contains two spikes. Therefore, at time , neuron generates one spike and sends it to the environment. The calculation result of the SNMC model is defined as the time interval between the first two nonzero values that the output neuron sends to the environment, that is, which is consistent with the number stored in Register 1. Therefore, the FIN module outputs the calculation result correctly.
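The output convention described above can be illustrated with a short sketch. It assumes the output neuron's emissions are recorded as one value per time step; the function name `interval_between_first_two_spikes` is hypothetical. The sketch returns only the raw interval between the first two nonzero values, because the exact relation between that interval and the number stored in Register 1 is given by the formula in the text and is not reproduced here.

```python
from typing import Optional, Sequence


def interval_between_first_two_spikes(train: Sequence[float]) -> Optional[int]:
    """Return t2 - t1 for the first two nonzero outputs.

    `train[t]` is the value sent to the environment at step t (an assumed
    recording format). Returns None if fewer than two nonzero values occur.
    """
    spike_times = [t for t, value in enumerate(train) if value != 0]
    if len(spike_times) < 2:
        return None
    return spike_times[1] - spike_times[0]


# Nonzero values at steps 1 and 4, so the interval is 3.
example_interval = interval_between_first_two_spikes([0, 1, 0, 0, 1, 0])
```

Any later nonzero values are ignored, which matches the definition in terms of the first two values only.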

In this way, the three modules correctly simulate the register machine, which establishes the computational power of SNMC models in the generating mode. □

4.2. Accepting Mode

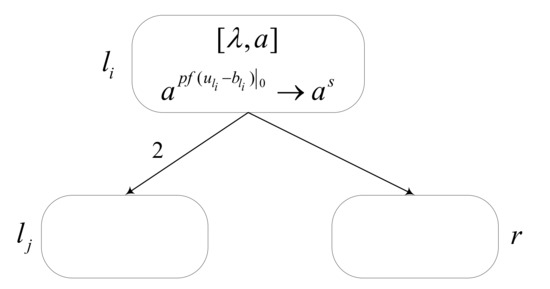

The computational power of an SNMC model in the accepting mode is obtained by simulating a deterministic register machine. We need to construct an SNMC model, including module INPUT, module SUB, and module ADD’, to simulate the deterministic register machine. Module SUB is the same as that in the generating mode, so its proof is not repeated here. Additionally, module ADD’ simulates the deterministic ADD instruction .
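For readers less familiar with the machine being simulated, the following is a minimal sketch of a deterministic register machine working in the accepting mode. It is not the SNMC construction itself. The tuple encoding of instructions, the function name `accepts`, and the `max_steps` budget are assumptions made for illustration. The input number is placed in the first register, and it is accepted if the machine reaches the halt instruction.

```python
from typing import List, Sequence, Tuple

# Assumed instruction encoding:
#   ("ADD", r, j)      add 1 to register r, then go to label j
#   ("SUB", r, j, k)   if register r > 0, subtract 1 and go to j; else go to k
#   ("HALT",)          stop; the input is accepted
Instruction = Tuple


def accepts(program: Sequence[Instruction], n: int,
            num_registers: int, max_steps: int = 10_000) -> bool:
    """Run the machine with n in the first register.

    Returns True if HALT is reached within max_steps. A run that exceeds
    the budget is reported as False, which only approximates non-halting.
    """
    registers: List[int] = [0] * num_registers
    registers[0] = n
    label = 0
    for _ in range(max_steps):
        instruction = program[label]
        if instruction[0] == "HALT":
            return True
        if instruction[0] == "ADD":
            _, r, next_label = instruction
            registers[r] += 1
            label = next_label
        elif instruction[0] == "SUB":
            _, r, if_nonzero, if_zero = instruction
            if registers[r] > 0:
                registers[r] -= 1
                label = if_nonzero
            else:
                label = if_zero
        else:
            raise ValueError(f"unknown instruction: {instruction!r}")
    return False


# Example program that accepts exactly the even numbers.
EVEN = [
    ("SUB", 0, 1, 2),  # 0: take one unit; if empty, the count was even
    ("SUB", 0, 0, 3),  # 1: take a second unit; if empty, the count was odd
    ("HALT",),         # 2: accept
    ("ADD", 1, 3),     # 3: loop forever (reject)
]
```

Because every label has exactly one successor for a given register content, the machine is deterministic, which is why the ADD instruction simulated by module ADD’ needs no nondeterministic choice.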

Theorem 2.

.

Proof of Theorem 2.

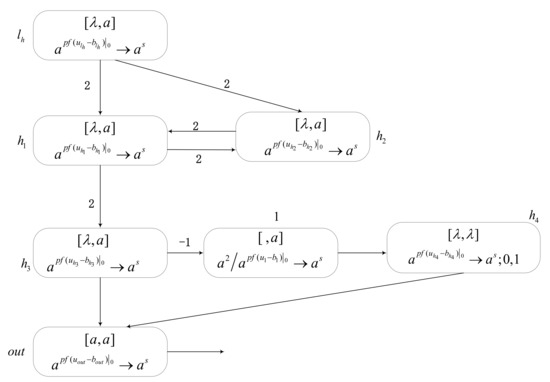

We only need to prove that module INPUT and module ADD’ can simulate the register machine. The function of module INPUT is to read a number, encoded as a spike train, into the model, as shown in Figure 8. Assume that number is to be read into the SNMC model by module INPUT. Firstly, encode into a spike train , where the time interval between two spikes is . When input neuron reads the symbol 1, it means that the input value is 1, and when the read symbol is 0, it means that the input value is 0. Then, use module INPUT to store the number in Register 1. If Register 1 stores number , it corresponds to neuron receiving spikes.

Figure 8. Module INPUT. Its function is to read the spike train to model .

At the initial moment, there is one spike in neuron , one spike in , and one spike with a negative charge in , i.e., the initial configuration is . Suppose that at time , neuron receives the first spike from the environment. Thus, there are two spikes in . According to the rule , the value is equal to , so a spike is generated. At time , neuron receives one spike with a negative charge, which annihilates the spike it contains. Hence, there is no spike in . According to the rule , calculate the value and . Hence, produces one spike and sends it to neuron at the next time. Neuron also receives two spikes from at time , one of which is annihilated by the negatively charged spike. At this time, has one spike. Additionally, calculate , so that neuron generates empty at time . Simultaneously, neuron receives the empty (0) from the environment and sends the empty to neurons and according to its rule.

Therefore, at time , neuron is activated again, and its . Thus, produces one spike and sends it to neuron . At the next time , neuron always accepts the empty, so neuron receives spikes until time . At time , obtains the second spike. Thus, according to the value , produces one spike. In this way, receives one spike with a negative charge, and receives two spikes. At time , neuron fires, and its , hence no spike is generated. Meanwhile, according to the rule in neuron which is , a spike is produced and two spikes are sent to neuron . So far, the module INPUT simulation is completed.
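The input encoding read by module INPUT can be sketched as a binary sequence with exactly two spikes. The helper name `encode_spike_train` is hypothetical. The sketch takes the interval between the two spikes as its argument, because the exact relation between the encoded number and that interval is given by the formula in the text and is not assumed here.

```python
from typing import List


def encode_spike_train(interval: int) -> List[int]:
    """Build a train with two spikes (1) separated by `interval` steps.

    Symbol 1 is read as input value 1 and symbol 0 as input value 0,
    following the description of the input neuron.
    """
    if interval < 1:
        raise ValueError("the two spikes must be at least one step apart")
    return [1] + [0] * (interval - 1) + [1]


train = encode_spike_train(3)  # [1, 0, 0, 1]: spikes at steps 0 and 3
```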

The module ADD’, used to simulate the deterministic ADD instruction , is shown in Figure 9. When neuron receives two spikes, one spike is produced and transmitted to neuron because of ; hence, neuron receives two spikes and neuron also gets one spike from neuron . Therefore, the operation of increasing register by 1 is successfully simulated by module ADD’.

Figure 9. Module ADD’. Its function is to simulate deterministic ADD instruction .

Based on the above proof, it is determined that the register machine can be correctly simulated by the SNMC model working in the accepting mode. Therefore, . □

5. Conclusions

Inspired by SNP systems and artificial neural networks, this paper presents a new membrane computing model called the spiking neural membrane computing (SNMC) model. The model is composed of multiple neurons connected by synapses. The weights on the synapses represent the relationships between neurons. Depending on the type of synapse, a weight can be positive or negative: an inhibitory synapse has a negative weight, and an excitatory synapse has a positive weight. Each neuron contains two data units, the input value unit and the threshold unit, both of which exist in the form of spikes. In this model, the activation of a rule in a neuron requires two conditions: the condition given by the regular expression must be met, and the neuron must have received signals from the neurons connected to it.

The operation of a rule is divided into three stages: the production step, the comparison step, and the outputting step. Note that when a generated positive real value is transmitted to a neuron through an inhibitory synapse, the neuron receives a negative value, which means that the neuron holds a spike with a negative charge. A spike with a negative charge and a spike with a positive charge cancel each other out. In addition, we proved the computing power of the SNMC model through Theorems 1 and 2. In the generating mode, the Turing universality of the SNMC model as a number generator is proven by simulating a nondeterministic register machine. In the accepting mode, its Turing universality as a number acceptor is proven by simulating a deterministic register machine.
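The three stages and the cancellation of charges can be illustrated with a minimal sketch. It is an assumption-laden illustration, not the model's formal definition: the production function is passed in by the caller rather than fixed, the critical value defaults to 0 with a strict comparison (following the proofs, where a value that does not exceed 0 produces no spike), and the names `fire` and `send` are hypothetical. Time delay and the consumption of the potential are not modelled.

```python
from typing import Callable, Dict


def fire(potential: float,
         production: Callable[[float], float],
         critical_value: float = 0.0) -> int:
    """Production and comparison steps of one rule application."""
    value = production(potential)                # production step
    return 1 if value > critical_value else 0    # comparison step


def send(output: int,
         weights: Dict[str, float],
         potentials: Dict[str, float]) -> None:
    """Outputting step: deliver the output through weighted synapses.

    A negative weight models an inhibitory synapse. Adding the signed
    value to the target's potential lets opposite charges cancel.
    """
    for target, weight in weights.items():
        potentials[target] = potentials.get(target, 0.0) + weight * output


# Neuron "a" already holds one positive spike; "b" is empty.
potentials = {"a": 1.0, "b": 0.0}
out = fire(2.0, lambda x: x - 1.0)              # value 1.0 exceeds 0
send(out, {"a": -1.0, "b": 1.0}, potentials)    # "a" is inhibited, "b" excited
```

After the call, the positive spike in "a" has been cancelled by the negatively charged one, while "b" holds one spike.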

The following are the advantages of the SNMC model:

- Weights and thresholds are introduced into the SNMC model, and the rule mechanism is improved compared with SNP systems, so the model combines the advantages of membrane computing and neural networks and can extend applications, particularly in processing real-valued information.

- The rules of the SNMC model involve a production function whose calculation method originates from the data processing methods of neural networks, so the SNMC model could be combined effectively with algorithms in the future.

- The computing power of the SNMC model has been proven, and it is a kind of Turing universal computational model.

The SNMC model extends current SNP systems and comprehensively considers their relevant elements, such as time delay, threshold, and weight. The way data are processed in SNMC models opens further possibilities for the development of SNP systems. We found that if the potential value of the SNMC model is a natural number, there is no threshold, and the weights are only positive integers, then most existing SNP systems are special cases of the proposed SNMC model. The main difference between SNMC models and current SNP systems lies in the operating mechanism of rules. For example, compared with SNP systems with weights and thresholds (WTSNP) [51], the SNMC model has different forms of rules, roles of thresholds, and operation mechanisms of rules. Additionally, the main difference from the numerical SNP system (NSNP) [9] is that the NSNP embeds rules in the form of numerical P system rules into the SNP system, and its objects are not spikes. The firing rules also operate differently. In the SNMC model, the potential value is consumed in the form of spikes, and one of two results, 0 or 1, is produced according to the comparison result of the production function. Additionally, the SNMC model works by mapping the production function to an activation function.

The main difference between the SNMC model and artificial neural networks is that the data flow of the SNMC model is completed by rules and objects, whereas artificial neural networks compute only through mathematical models. The proposed SNMC model not only retains the distributed parallel computing of membrane computing but also adopts the methods and structure of neural networks for data processing. Therefore, the model can be used in the future to deal with certain practical problems and expand the application of membrane computing. For example, further research can be carried out on image processing and algorithm design.

Author Contributions

Conceptualization, Q.R. and X.L.; methodology, Q.R.; validation, Q.R. and X.L.; formal analysis, Q.R.; investigation, Q.R.; resources, X.L.; writing—original draft preparation, Q.R.; writing—review and editing, Q.R. and X.L.; supervision, X.L.; project administration, X.L.; funding acquisition, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Nos. 61876101, 61802234, and 61806114), the Social Science Fund Project of Shandong (16BGLJ06, 11CGLJ22), the China Postdoctoral Science Foundation Funded Project (2017M612339, 2018M642695), the Natural Science Foundation of Shandong Province (ZR2019QF007), the China Postdoctoral Special Funding Project (2019T120607), and the Youth Fund for Humanities and Social Sciences, Ministry of Education (19YJCZH244).

Data Availability Statement

No data were used in this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ionescu, M.; Păun, G.; Yokomori, T. Spiking neural P systems. Fundam. Inform. 2006, 71, 279–308.

- Song, T.; Gong, F.; Liu, X.; Zhao, Y.; Zhang, X. Spiking neural P systems with white hole neurons. IEEE Trans. NanoBiosci. 2016, 15, 666–673.

- Wu, T.; Bîlbîe, F.-D.; Păun, A.; Pan, L.; Neri, F. Simplified and yet Turing universal spiking neural P systems with communication on request. Int. J. Neural Syst. 2018, 28, 1850013.

- Wu, T.; Pan, L. The computation power of spiking neural P systems with polarizations adopting sequential mode induced by minimum spike number. Neurocomputing 2020, 401, 392–404.

- Song, X.; Peng, H.; Wang, J.; Ning, G.; Sun, Z. Small universal asynchronous spiking neural P systems with multiple channels. Neurocomputing 2020, 378.

- Peng, H.; Li, B.; Wang, J.; Song, X.; Wang, T.; Valencia-Cabrera, L.; Pérez-Hurtado, I.; Riscos-Núñez, A.; Pérez-Jiménez, M.J. Spiking neural P systems with inhibitory rules. Knowl. Based Syst. 2020, 188, 105064.

- Aman, B.; Ciobanu, G. Spiking Neural P Systems with Astrocytes Producing Calcium. Int. J. Neural Syst. 2020, 30, 2050066.

- Peng, H.; Lv, Z.; Li, B.; Luo, X.; Wang, J.; Song, X.; Wang, T.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. Nonlinear spiking neural P systems. Int. J. Neural Syst. 2020, 30, 2050008.

- Wu, T.; Pan, L.; Yu, Q.; Tan, K.C. Numerical Spiking Neural P Systems. IEEE Trans. Neural Netw. Learn. Syst. 2020.

- Pan, L.; Păun, G. Spiking neural P systems with anti-spikes. Int. J. Comput. Commun. Control 2009, 4, 273–282.

- Wu, T.; Zhang, T.; Xu, F. Simplified and yet Turing universal spiking neural P systems with polarizations optimized by anti-spikes. Neurocomputing 2020, 414, 255–266.

- Song, X.; Wang, J.; Peng, H.; Ning, G.; Sun, Z.; Wang, T.; Yang, F. Spiking neural P systems with multiple channels and anti-spikes. Biosystems 2018, 169, 13–19.

- Pan, L.; Zeng, X.; Zhang, X.; Jiang, Y. Spiking Neural P Systems with Weighted Synapses. Neural Process. Lett. 2012, 35, 13–27.

- Peng, H.; Yang, J.; Wang, J.; Wang, T.; Sun, Z.; Song, X.; Luo, X.; Huang, X. Spiking neural P systems with multiple channels. Neural Netw. 2017, 95, 66–71.

- Cabarle, F.G.C.; de la Cruz, R.T.A.; Cailipan, D.P.P.; Zhang, D.; Liu, X.; Zeng, X. On solutions and representations of spiking neural P systems with rules on synapses. Inf. Sci. 2019, 501, 30–49.

- Jiang, S.; Fan, J.; Liu, Y.; Wang, Y.; Xu, F. Spiking neural P systems with polarizations and rules on synapses. Complexity 2020, 2020, 8742308.

- Cabarle, F.G.C.; Adorna, H.N.; Jiang, M.; Zeng, X. Spiking neural P systems with scheduled synapses. IEEE Trans. NanoBiosci. 2017, 16, 792–801.

- Bibi, A.; Xu, F.; Adorna, H.N.; Cabarle, F.G.C. Sequential Spiking Neural P Systems with Local Scheduled Synapses without Delay. Complexity 2019, 2019, 7313414.

- Wang, X.; Song, T.; Gong, F.; Zheng, P. Computational power of spiking neural P systems with self-organization. Sci. Rep. 2016, 6, 27624.

- Pan, L.; Păun, G.; Pérez-Jiménez, M.J. Spiking Neural P systems with neuron division and budding. Sci. China Inf. Sci. 2011, 54, 1596–1607.

- Song, T.; Pang, S.; Hao, S.; Rodríguez-Patón, A.; Zheng, P. A parallel image skeletonizing method using spiking neural P systems with weights. Neural Process. Lett. 2019, 50, 1485–1502.

- Gou, X.; Liu, Q.; Rong, H.; Hu, M.; Pirthwineel, P.; Deng, F.; Zhang, X.; Yu, Z. A Novel Spiking Neural P System for Image Recognition. Int. J. Unconv. Comput. 2021, 16, 121–139.

- Ma, T.; Hao, S.; Wang, X.; Rodriguez-Paton, A.A.; Wang, S.; Song, T. Double Layers Self-Organized Spiking Neural P Systems with Anti-Spikes for Fingerprint Recognition. IEEE Access 2019, 7, 177562–177570.

- Song, T.; Pan, L.; Wu, T.; Zheng, P.; Wong, M.L.D.; Rodriguez-Paton, A. Spiking Neural P Systems with Learning Functions. IEEE Trans. NanoBiosci. 2019, 18, 176–190.

- Chen, Z.; Zhang, P.; Wang, X.; Shi, X.; Wu, T.; Zheng, P. A computational approach for nuclear export signals identification using spiking neural P systems. Neural Comput. Appl. 2018, 29, 695–705.

- Zhu, M.; Yang, Q.; Dong, J.; Zhang, G.; Gou, X.; Rong, H.; Paul, P.; Neri, F. An Adaptive Optimization Spiking Neural P System for Binary Problems. Int. J. Neural Syst. 2020, 31, 2050054.

- Ramachandranpillai, R.; Arock, M. An adaptive spiking neural P system for solving vehicle routing problems. Arab. J. Sci. Eng. 2020, 45, 2513–2529.

- Zein, M.; Adl, A.; Hassanien, A.E. Spiking neural P grey wolf optimization system: Novel strategies for solving non-determinism problems. Expert Syst. Appl. 2019, 121, 204–220.

- Kong, D.; Wang, Y.; Wu, X.; Liu, X.; Qu, J.; Xue, J. A Grid-Density Based Algorithm by Weighted Spiking Neural P Systems with Anti-Spikes and Astrocytes in Spatial Cluster Analysis. Processes 2020, 8, 1132.

- Dong, J.; Stachowicz, M.; Zhang, G.; Matteo, C.; Rong, H.; Prithwineel, P. Automatic Design of Spiking Neural P Systems Based on Genetic Algorithms. Int. J. Unconv. Comput. 2021, 16, 201–216.

- Wang, T.; Wei, X.; Wang, J.; Huang, T.; Peng, H.; Song, X.; Cabrera, L.V.; Pérez-Jiménez, M.J. A weighted corrective fuzzy reasoning spiking neural P system for fault diagnosis in power systems with variable topologies. Eng. Appl. Artif. Intell. 2020, 92, 103680.

- Liu, W.; Wang, T.; Zang, T.; Huang, Z.; Wang, J.; Huang, T.; Wei, X.; Li, C. A fault diagnosis method for power transmission networks based on spiking neural P systems with self-updating rules considering biological apoptosis mechanism. Complexity 2020, 2020, 2462647.

- Wang, J.; Peng, H.; Yu, W.; Ming, J.; Pérez-Jiménez, M.J.; Tao, C.; Huang, X. Interval-valued fuzzy spiking neural P systems for fault diagnosis of power transmission networks. Eng. Appl. Artif. Intell. 2019, 82, 102–109.

- Peng, H.; Wang, J.; Ming, J.; Shi, P.; Perez-Jimenez, M.J.; Yu, W.; Tao, C. Fault Diagnosis of Power Systems Using Intuitionistic Fuzzy Spiking Neural P Systems. IEEE Trans. Smart Grid 2018, 9, 4777–4784.

- Wang, H.F.; Zhou, K.; Zhang, G.X. Arithmetic Operations with Spiking Neural P Systems with Rules and Weights on Synapses. Int. J. Comput. Commun. Control 2018, 13, 574–589.

- Zhang, G.; Rong, H.; Paul, P.; He, Y.; Neri, F.; Pérez-Jiménez, M.J. A Complete Arithmetic Calculator Constructed from Spiking Neural P Systems and its Application to Information Fusion. Int. J. Neural Syst. 2020, 31, 2050055.

- Frias, T.; Sanchez, G.; Garcia, L.; Abarca, M.; Diaz, C.; Sanchez, G.; Perez, H. A New Scalable Parallel Adder Based on Spiking Neural P Systems, Dendritic Behavior, Rules on the Synapses and Astrocyte-like Control to Compute Multiple Signed Numbers. Neurocomputing 2018, 319, 176–187.

- Rodríguez-Chavarría, D.; Gutiérrez-Naranjo, M.A.; Borrego-Díaz, J. Logic Negation with Spiking Neural P Systems. Neural Process. Lett. 2020, 52, 1583–1599.

- Carandang, J.P.; Villaflores, J.M.B.; Cabarle, F.G.C.; Adorna, H.N. CuSNP: Spiking neural P systems simulators in CUDA. Rom. J. Inf. Sci. Technol. 2017, 20, 57–70.

- Guo, P.; Quan, C.; Ye, L. UPSimulator: A general P system simulator. Knowl. Based Syst. 2019, 170, 20–25.

- Cabarle, F.G.C.; Adorna, H.N.; Pérez-Jiménez, M.J.; Song, T. Spiking neural P systems with structural plasticity. Neural Comput. Appl. 2015, 26, 1905–1917.

- Cabarle, F.G.C.; de la Cruz, R.T.A.; Zhang, X.; Jiang, M.; Liu, X.; Zeng, X. On string languages generated by spiking neural P systems with structural plasticity. IEEE Trans. NanoBiosci. 2018, 17, 560–566.

- Jimenez, Z.B.; Cabarle, F.G.C.; de la Cruz, R.T.A.; Buño, K.C.; Adorna, H.N.; Hernandez, N.H.S.; Zeng, X. Matrix representation and simulation algorithm of spiking neural P systems with structural plasticity. J. Membr. Comput. 2019, 1, 145–160.

- Yang, Q.; Li, B.; Huang, Y.; Peng, H.; Wang, J. Spiking neural P systems with structural plasticity and anti-spikes. Theor. Comput. Sci. 2020, 801, 143–156.

- Peng, H.; Wang, J. Coupled neural P systems. IEEE Trans. Neural Netw. Learn. Syst. 2018, 30, 1672–1682.

- Peng, H.; Wang, J.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. Dynamic threshold neural P systems. Knowl. Based Syst. 2019, 163, 875–884.

- Li, B.; Peng, H.; Wang, J.; Huang, X. Multi-focus image fusion based on dynamic threshold neural P systems and surfacelet transform. Knowl. Based Syst. 2020, 196, 105794.

- Li, B.; Peng, H.; Wang, J. A novel fusion method based on dynamic threshold neural P systems and nonsubsampled contourlet transform for multi-modality medical images. Signal Process. 2021, 178, 107793.

- Xue, J.; Wang, Z.; Kong, D.; Wang, Y.; Liu, X.; Fan, W.; Yuan, S.; Niu, S.; Li, D. Deep ensemble neural-like P systems for segmentation of central serous chorioretinopathy lesion. Inf. Fusion 2021, 65, 84–94.

- Li, B.; Peng, H.; Luo, X.; Wang, J.; Song, X.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. Medical Image Fusion Method Based on Coupled Neural P Systems in Nonsubsampled Shearlet Transform Domain. Int. J. Neural Syst. 2020, 31, 2050050.

- Wang, J.; Hoogeboom, H.J.; Pan, L.; Păun, G. Spiking Neural P Systems with Weights and Thresholds. Gheorge Paun 2009, 514–533. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.225.7268&rep=rep1&type=pdf#page=524 (accessed on 27 February 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).