Abstract

Many real-world problems in science, engineering, mechanics, and other fields are multi-objective optimization problems. Multi-objective optimization algorithms are used to obtain optimal solutions to such problems. Solving a multi-objective problem means finding a set of candidate solutions, each of which satisfies the required objectives and is not dominated by any other solution. In this paper, the population-based metaheuristic artificial electric field algorithm (AEFA) is extended to deal with multi-objective optimization problems. The proposed algorithm uses the concept of strength Pareto for fitness assignment and a fine-grained elitism selection mechanism to maintain population diversity. Furthermore, it integrates the shift-based density estimation approach with strength Pareto for density estimation, and it implements bounded exponential crossover (BEX) and the polynomial mutation operator (PMO) to prevent solutions from becoming trapped in local optima and to enhance convergence. The proposed algorithm is validated on several standard benchmark functions, and its performance is compared with that of existing multi-objective algorithms. The experimental results reveal that the proposed algorithm is highly competitive and maintains the desired balance between exploration and exploitation, which speeds up convergence towards the Pareto-optimal front.

1. Introduction

Most realistic problems in science and engineering involve diverse, competing objectives that must be optimized simultaneously. Such problems often have several distinct alternative solutions [1,2] rather than a single optimal solution; the preferred solutions are those that are not outperformed by any other solution in the decision space. Realistic multi-objective optimization problems (MOOPs) generally require a long time to evaluate each objective function and constraint. For such problems, stochastic methods have proven more effective and suitable than conventional methods. Evolutionary algorithms (EAs), which mimic natural biological selection and evolution, are an efficient approach to solving MOOPs: they can find multiple solutions simultaneously and explore an enormous search space in reasonable time. The genetic algorithm (GA) is a well-established bio-inspired EA, and it has been extended to MOOPs as the non-dominated sorting genetic algorithm II (NSGA-II) [3], which is recognized as an important advance in multi-objective evolutionary algorithms. Similarly, particle swarm optimization, another biologically motivated approach, has been extended to multi-objective particle swarm optimization (MOPSO) [4,5], bare-bones multi-objective particle swarm optimization (BBMOPSO) [6], and non-dominated sorting particle swarm optimization (NSPSO) [7] to solve multi-objective optimization problems. In a single run, these multi-objective approaches can produce several evenly distributed candidate solutions rather than a single solution.

Based on Coulomb’s law of electrostatic attraction and the laws of motion, a newer algorithm called the artificial electric field algorithm (AEFA) [8] was proposed. AEFA mimics the interaction of charged particles under electrostatic attraction and follows an iterative process. In a multi-dimensional search space, charged particles move towards heavier charges and converge to the most heavily charged particle. Owing to its strong exploration and exploitation capabilities, AEFA was shown to be more robust and effective than artificial bee colony (ABC) [8], ant colony optimization (ACO) [8], particle swarm optimization (PSO) [8], and biogeography-based optimization (BBO) [8]. AEFA has attracted growing attention from researchers, who have developed AEFA-based algorithms in several areas, such as parameter optimization [9], capacitor bank replacement [10], and scheduling [11]. However, to the best of our knowledge, no prior work has extended AEFA to solve MOOPs. This paper makes a modest contribution by improving AEFA to solve MOOPs. The proposed algorithm (1) adopts the concept of “strength Pareto” for the fitness refinement of the charged particles, (2) introduces a fine-grained elitism selection mechanism based on the shift-based density estimation (SDE) approach to improve population diversity and manage the external population set, (3) uses SDE with “strength Pareto” for density estimation, and (4) uses bounded exponential crossover (BEX) [12] and the polynomial mutation operator (PMO) [13,14] to improve global exploration, local exploitation, and the convergence rate.

This paper is organized in the following way: Section 2 covers an overview of the existing literature on multi-objective optimization, Section 3 describes the preliminaries and background algorithms, Section 4 describes the proposed multi-objective method in detail, Section 5 presents results and performance of the proposed algorithm in comparison to existing optimization techniques, and Section 6 sums up the findings of this research in concluding remarks.

2. Related Work

When multiple objectives cannot be optimized simultaneously by a single solution, the problem is formulated as a multi-objective optimization problem. Nobahari et al. [1] proposed a non-dominated sorting based gravitational search algorithm (NSGSA). Several multi-objective evolutionary algorithms (MOEAs) [15,16,17,18] have been proposed in recent years. These algorithms have proven their ability to find multiple Pareto-optimal solutions in a single run. Srinivas and Deb [19] proposed the non-dominated sorting genetic algorithm (NSGA), in which elitism was used to achieve better convergence. Zitzler and Thiele [20] introduced the strength Pareto evolutionary algorithm (SPEA) with elitist selection. SPEA maintains an external population that stores all the non-dominated solutions obtained in each generation. In each generation, the external and internal populations are combined, and each non-dominated solution in the combined set is assigned a fitness value based on the number of solutions it dominates. This fitness value determines the rank of each solution and directs the search towards non-dominated solutions. Rudolph [21] proposed a simple elitist [22] multi-objective EA based on a comparison between the parent population and the child population. At each iteration, the candidate parent solutions were compared with the non-dominated child solutions, and the resulting non-dominated set formed the parent population for the next iteration. Although this algorithm guaranteed convergence to the Pareto-optimal front, it suffered from a loss of population diversity. Population-based evolutionary algorithms must maintain both population diversity and convergence in multi-objective optimization [23]. In recent years, many significant contributions have centered on the development of hybrid algorithms [24] and many-objective optimization algorithms [25,26].
Zhao and Cao [27] proposed an external-memory-based multi-objective particle swarm optimization (MOPSO) algorithm in which, besides the initial search population, two external memories store the global and local best individuals, and a geographic-based technique maintains population diversity. Zhang and Li [28] combined traditional mathematical approaches with EAs and introduced a decomposition-based MOEA, MOEA/D [29]. Martinez and Coello [30] extended MOEA/D with a constraint-based selection mechanism to solve constrained MOPs. Gu et al. [31] proposed a dynamic weight design method based on the projections of non-dominated solutions to produce evenly distributed non-dominated solutions. Zhang [32] introduced a novel and efficient approach to non-dominated sorting in MOEAs. Chong and Qiu [33] proposed a novel opposition-based self-adaptive hybridized differential evolution algorithm to handle continuous MOPs. Cheng et al. [34] proposed a reference vector guided evolutionary algorithm for many-objective optimization in high-dimensional objective spaces, which maintains a balance between the diversity and convergence of solutions. Hassanzadeh and Rouhani [35] proposed a multi-objective gravitational search algorithm (MOGSA). Yuan et al. [36] extended the gravitational search algorithm (GSA) to a strength Pareto based multi-objective gravitational search algorithm (SPGSA). A review of the existing literature is presented in Table 1.

Table 1.

Summary of existing MOOPs.

Our Contribution

In this paper, an improved artificial electric field algorithm is proposed to solve multi-objective optimization problems. The concept of strength Pareto is used in the optimization process to refine fitness assignment. The shift-based density estimation (SDE) technique combined with a fine-grained elitism selection approach, and SDE combined with strength Pareto, are applied to improve population diversity and density estimation, respectively. The bounded exponential crossover (BEX) operator and the polynomial mutation operator (PMO) are used to reduce the possibility of solutions becoming trapped in local optima. The proposed algorithm is validated on different benchmark functions and compared with existing multi-objective optimization techniques.

3. Preliminaries and Background

This section briefly discusses the basic concepts of multi-objective optimization, artificial electric field algorithm, shift-based density estimation technique, and recombination and mutation operators.

3.1. Multi-Objective Optimization

In general, a multi-objective optimization problem (MOOP) involving $m$ objectives is defined over decision vectors

$$\vec{x} = (x_1, x_2, \ldots, x_n),$$

where $\vec{x}$ represents a solution to the MOOP and $n$ represents the dimension of the decision space. A MOOP can be a minimization problem, a maximization problem, or a combination of both. The target objectives $F(\vec{x})$ are defined as

Minimize/Maximize:

$$F(\vec{x}) = \big(f_1(\vec{x}), f_2(\vec{x}), \ldots, f_m(\vec{x})\big)$$

Subject to the constraints:

$$g_i(\vec{x}) \le 0, \quad i = 1, \ldots, p; \qquad h_j(\vec{x}) = 0, \quad j = 1, \ldots, q,$$

where $f_k(\vec{x})$ represents the $k$-th objective function of solution $\vec{x}$. A MOOP can have no constraints or one or more constraints; $g_i$ and $h_j$ are the $i$-th and $j$-th optional inequality and equality constraints, respectively, and $p$ and $q$ represent the total numbers of inequality and equality constraints, respectively. $F$ is then evaluated to find the solutions $\vec{x}^{*}$ that satisfy the desired optimization. Unlike single-objective optimization (SOO), a MOOP considers multiple conflicting objectives and produces a set of solutions in the search space. These solutions are compared using Pareto dominance [36], defined as follows (for minimization):

Considering a MOOP, a vector $\vec{u} = (u_1, \ldots, u_m)$ dominates a vector $\vec{v} = (v_1, \ldots, v_m)$, written $\vec{u} \prec \vec{v}$, if and only if

$$\forall k \in \{1, \ldots, m\}: u_k \le v_k \quad \text{and} \quad \exists k \in \{1, \ldots, m\}: u_k < v_k,$$

where $m$ represents the dimension of the objective space. A solution $\vec{x} \in U$ (the universe of feasible solutions) is called Pareto optimal if and only if no other solution in $U$ dominates it. Such solutions are said to be non-dominated, and together they form the Pareto-optimal set.
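To make the dominance definition concrete, the following minimal Python sketch checks Pareto dominance between objective vectors and filters a set down to its non-dominated members with a simple pairwise scan of cost O(N^2 m). It assumes that every objective is minimized, and the helper names `dominates` and `non_dominated` are hypothetical, not taken from the paper.

```python
from typing import List, Sequence


def dominates(u: Sequence[float], v: Sequence[float]) -> bool:
    """True if u Pareto-dominates v under minimization: u is no worse than v
    on every objective and strictly better on at least one."""
    no_worse = all(a <= b for a, b in zip(u, v))
    strictly_better = any(a < b for a, b in zip(u, v))
    return no_worse and strictly_better


def non_dominated(points: List[Sequence[float]]) -> List[Sequence[float]]:
    """Keep the points that no other point dominates (pairwise O(N^2 m) scan)."""
    return [
        p
        for i, p in enumerate(points)
        if not any(dominates(q, p) for j, q in enumerate(points) if j != i)
    ]


front = non_dominated([(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)])
print(front)  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

Here (3.0, 3.0) is dropped because (2.0, 2.0) is better on both objectives, while the remaining three points are mutually non-dominated.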

3.2. Artificial Electric Field Algorithm (AEFA)

The artificial electric field algorithm (AEFA) is a population-based metaheuristic algorithm that mimics Coulomb’s law of electrostatic attraction and Newton’s laws of motion. In AEFA, the candidate solutions of the given problem are represented as a collection of charged particles. The charge associated with each particle reflects the performance of the corresponding candidate solution. The electrostatic attraction pulls the particles towards one another, resulting in a global movement towards particles with heavier charges. Each candidate solution corresponds to the position of a charged particle, and the fitness function determines the particle’s charge and unit mass. The steps of AEFA are as follows:

Step 1. Initialization of Population.

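A population of $P$ candidate solutions (charged particles) is initialized as follows:

$$X_i = (x_i^1, x_i^2, \ldots, x_i^D), \quad i = 1, 2, \ldots, P,$$

where $x_i^d$ represents the position of the $i$-th charged particle in the $d$-th dimension, and $D$ is the dimension of the search space.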

Step 2. Fitness Evaluation.

A fitness function takes a candidate solution as input and returns a value that shows how well the candidate solution fits the considered problem. In AEFA, the performance of each charged particle during each iteration depends on its fitness value. For a minimization problem, the best and worst fitness are computed as follows:

$$best(t) = \min_{j \in \{1, \ldots, P\}} fit_j(t), \qquad worst(t) = \max_{j \in \{1, \ldots, P\}} fit_j(t),$$

where $fit_j(t)$ represents the fitness value of the $j$-th charged particle and $P$ represents the total number of charged particles in the population. $best(t)$ and $worst(t)$ represent the best and worst fitness, respectively, of all charged particles at time $t$.

Step 3. Computation of Coulomb’s Constant.

At time $t$, Coulomb’s constant is denoted by $K(t)$ and computed as follows:

$$K(t) = K_0 \exp\left(-\alpha \, \frac{iter}{maxiter}\right)$$

Here, $K_0$ represents the initial value and is set to 100, and $\alpha$ is a parameter set to 30. $iter$ and $maxiter$ represent the current iteration and the maximum number of iterations, respectively.

Step 4. Compute the Charge of Charged Particles.

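At time $t$, the charge of the $i$-th charged particle is represented by $q_i(t)$. It is computed based on the current population’s fitness as follows:

$$q_i(t) = \exp\left(\frac{fit_i(t) - worst(t)}{best(t) - worst(t)}\right),$$

where

$$Q_i(t) = \frac{q_i(t)}{\sum_{j=1}^{P} q_j(t)}.$$

Here, $fit_i(t)$ is the fitness of the $i$-th charged particle, and $Q_i(t)$ determines the total (normalized) charge acting on the $i$-th charged particle at time $t$.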
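Steps 2 to 4 can be summarized in a short Python sketch for a minimization problem. It uses the values $K_0 = 100$ and $\alpha = 30$ given in Step 3. The function names are hypothetical, and the equal-charge fallback for a population in which every particle has the same fitness (where the charge formula would divide by zero) is our own assumption, not part of the AEFA description above.

```python
import math
from typing import List


def coulomb_constant(iteration: int, max_iter: int,
                     k0: float = 100.0, alpha: float = 30.0) -> float:
    """K(t) = K0 * exp(-alpha * iter / maxiter): decays from K0 as the search proceeds."""
    return k0 * math.exp(-alpha * iteration / max_iter)


def charges(fitness: List[float]) -> List[float]:
    """Normalized charges Q_i from the fitness values (lower fitness is better)."""
    best, worst = min(fitness), max(fitness)
    if best == worst:
        # Assumption: identical fitness everywhere -> equal charges.
        return [1.0 / len(fitness)] * len(fitness)
    # q_i = exp((fit_i - worst) / (best - worst)) lies in [1, e]
    q = [math.exp((f - worst) / (best - worst)) for f in fitness]
    total = sum(q)
    return [qi / total for qi in q]  # Q_i = q_i / sum_j q_j


print(charges([1.0, 3.0]))  # approximately [0.731, 0.269]
```

The best particle always receives the raw charge $e$ and the worst receives 1, so after normalization fitter particles exert a proportionally stronger pull.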

Step 5. Compute the Electrostatic Force and Acceleration of the Charged Particles.

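- The electrostatic force exerted by the $j$-th charged particle on the $i$-th charged particle in the $d$-th dimension at time $t$ is computed as

$$F_{ij}^d(t) = K(t) \, \frac{Q_i(t) \, Q_j(t) \, \big(p_j^d(t) - X_i^d(t)\big)}{R_{ij}(t) + \varepsilon}, \qquad F_i^d(t) = \sum_{j=1, j \ne i}^{P} rand_j \, F_{ij}^d(t),$$

where $Q_i(t)$ and $Q_j(t)$ are the charges of the $i$-th and $j$-th charged particles at time $t$, $\varepsilon$ is a small positive constant, and $R_{ij}(t) = \lVert X_i(t) - X_j(t) \rVert_2$ is the distance between the two charged particles. $p_j^d(t)$ is the best position found so far by the $j$-th charged particle, and $X_i^d(t)$ is the current position of the $i$-th charged particle at time $t$. $F_i^d(t)$ is the net force exerted on the $i$-th charged particle by all other charged particles at time $t$, and $rand_j$ is a uniform random number generated in the interval [0, 1].

- The acceleration of the $i$-th charged particle at time $t$ in the $d$-th dimension is computed using Newton’s second law of motion as follows:

$$E_i^d(t) = \frac{F_i^d(t)}{Q_i(t)}, \qquad a_i^d(t) = \frac{Q_i(t) \, E_i^d(t)}{M_i(t)},$$

where $E_i^d(t)$ and $M_i(t)$ represent the electric field and the unit mass of the $i$-th charged particle at time $t$ in the $d$-th dimension, respectively.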

Step 6. Update velocity and position of charged particles.

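At time $t$, the velocity and position of the $i$-th charged particle in the $d$-th dimension are updated as follows:

$$V_i^d(t+1) = rand_i \cdot V_i^d(t) + a_i^d(t), \qquad X_i^d(t+1) = X_i^d(t) + V_i^d(t+1),$$

where $V_i^d(t)$ and $X_i^d(t)$ represent the velocity and position of the $i$-th charged particle in the $d$-th dimension at time $t$, respectively, and $rand_i$ is a uniform random number in the interval [0, 1].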
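Steps 5 and 6 can be sketched as one update of the whole population. This plain-Python illustration rests on stated assumptions: unit mass $M_i = 1$, one random weight $rand_j$ per pair $(i, j)$, one $rand_i$ per particle for the velocity update, and a hypothetical function name `aefa_step`. It is not the reference implementation of AEFA, and positions are not clipped to the search bounds.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]


def aefa_step(X: List[Vector], V: List[Vector], P: List[Vector], Q: List[float],
              K: float, rng: random.Random, eps: float = 1e-12
              ) -> Tuple[List[Vector], List[Vector]]:
    """Return updated (positions, velocities) after one AEFA iteration.

    X: current positions, V: velocities, P: best positions p_j found so far,
    Q: normalized charges, K: Coulomb's constant K(t).
    """
    n, dim = len(X), len(X[0])
    new_X, new_V = [], []
    for i in range(n):
        force = [0.0] * dim  # net force F_i^d
        for j in range(n):
            if j == i:
                continue
            r_ij = math.dist(X[i], X[j])  # Euclidean distance R_ij
            w = rng.random()  # rand_j in [0, 1)
            for d in range(dim):
                force[d] += w * K * Q[i] * Q[j] * (P[j][d] - X[i][d]) / (r_ij + eps)
        mass = 1.0  # assumption: unit mass M_i = 1
        field = [f / Q[i] for f in force]         # E_i^d = F_i^d / Q_i
        accel = [Q[i] * e / mass for e in field]  # a_i^d = Q_i E_i^d / M_i
        r_i = rng.random()
        vel = [r_i * v + a for v, a in zip(V[i], accel)]  # V(t+1)
        new_V.append(vel)
        new_X.append([x + v for x, v in zip(X[i], vel)])  # X(t+1)
    return new_X, new_V


rng = random.Random(0)
X = [[0.0], [1.0]]
new_X, new_V = aefa_step(X, [[0.0], [0.0]], P=X, Q=[0.5, 0.5], K=1.0, rng=rng)
print(new_X)  # the two particles move towards each other
```

With zero initial velocity, each particle's first move is exactly its acceleration, so the sign of the force alone decides the direction of travel.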

3.3. Shift-Based Density Estimation

To maintain good convergence and population diversity, shift-based density estimation (SDE) [37] was introduced. Unlike traditional density estimation approaches, when estimating the density of an individual $p$, SDE shifts the other individuals in the population to new positions. SDE compares the convergence of the current individual with that of each other individual on each objective: if another individual $q$ outperforms $p$ on an objective, the corresponding objective value of $q$ is shifted to the position of $p$ on that objective; otherwise, the objective value of $q$ remains unchanged. For minimization, the process is described as

$$f'_k(q) = \begin{cases} f_k(p), & \text{if } f_k(q) < f_k(p), \\ f_k(q), & \text{otherwise}, \end{cases} \qquad k = 1, \ldots, m,$$

where $f'_k(q)$ represents the shifted value of the $k$-th objective of $q$, and $F'(q) = (f'_1(q), \ldots, f'_m(q))$ represents the shifted objective vector of $q$. The steps followed in density estimation are explained in Algorithm 1.

Algorithm 1. Density Estimation for Non-Dominated Solutions

Input: non-dominated solutions. Output: density of each solution.
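The shifting rule can be illustrated with a short Python sketch. Because the body of Algorithm 1 is not reproduced here, the density formula is an assumption: following the SPEA2 convention, each solution's density is taken as $1/(\sigma_k + 2)$, where $\sigma_k$ is the distance to its $k$-th nearest neighbour after SDE shifting ($k = 1$ by default). Minimization is assumed, and the names `shift` and `sde_density` are hypothetical.

```python
import math
from typing import List, Sequence


def shift(p: Sequence[float], q: Sequence[float]) -> List[float]:
    """Shifted objective vector of q when estimating the density of p
    (minimization): objectives on which q beats p are moved to p's value."""
    return [fp if fq < fp else fq for fp, fq in zip(p, q)]


def sde_density(objs: List[Sequence[float]], k: int = 1) -> List[float]:
    """Density of each solution as 1 / (sigma_k + 2), where sigma_k is the
    distance to its k-th nearest neighbour after SDE shifting (assumed form)."""
    dens = []
    for i, p in enumerate(objs):
        dists = sorted(math.dist(p, shift(p, q)) for j, q in enumerate(objs) if j != i)
        sigma_k = dists[min(k, len(dists)) - 1] if dists else math.inf
        dens.append(1.0 / (sigma_k + 2.0))
    return dens


objs = [(0.0, 10.0), (5.0, 5.0), (5.1, 4.9), (10.0, 0.0)]
print(sde_density(objs))  # the two middle points get the highest density
```

The two nearly coincident middle points are each other's shifted nearest neighbour, so they receive a much higher density than the isolated extreme points.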

3.4. Recombination and Mutation Operators

In AEFA, movement (acceleration) of one charged particle towards another is determined by the total electrostatic force exerted by other particles on it. In this paper, bounded exponential crossover (BEX) [12] and polynomial mutation operator (PMO) [13,14] are used with AEFA to enhance the acceleration in order to increase convergence speed further.

3.4.1. Bounded Exponential Crossover (BEX)

The performance of a multi-objective optimization algorithm depends strongly on the solutions generated by its crossover operator. A suitable crossover operator produces solutions that reduce the possibility of becoming trapped in local optima. Bounded exponential crossover (BEX) generates offspring solutions within the variable bounds $[a, b]$. BEX involves an additional factor that depends on the interval $[a, b]$ and on the position of the parent solution. The steps to generate the offspring solutions $y_1$ and $y_2$ in $[a, b]$ from each pair of parents $x_1$ and $x_2$ are given in Algorithm 2.
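The following Python sketch shows one way to implement BEX for a single decision variable. It uses the commonly cited form in which a spread factor $\beta$ is drawn from an exponential distribution with scale parameter $\lambda$, truncated so that the offspring $x + \beta |x_1 - x_2|$ stays inside $[a, b]$. The value $\lambda = 0.5$, the inverse-CDF sampling derivation, and the function names are assumptions for illustration; they are not parameters reported in this paper, whose exact steps are given in Algorithm 2.

```python
import math
import random
from typing import Optional, Tuple


def bex_beta(x: float, a: float, b: float, dist: float, lam: float,
             rng: random.Random) -> float:
    """Draw beta so that x + beta * dist stays in [a, b] (inverse-CDF sampling)."""
    u, r = rng.random(), rng.random()
    scale = lam * dist
    if r <= 0.5:
        # Left side: beta in [-(x - a) / dist, 0], density proportional to exp(beta / lam)
        e = math.exp(-(x - a) / scale)
        return lam * math.log(max(e + u * (1.0 - e), 1e-300))  # guard against log(0)
    # Right side: beta in [0, (b - x) / dist], density proportional to exp(-beta / lam)
    e = math.exp(-(b - x) / scale)
    return -lam * math.log(1.0 - u * (1.0 - e))


def bex(x1: float, x2: float, a: float, b: float, lam: float = 0.5,
        rng: Optional[random.Random] = None) -> Tuple[float, float]:
    """Bounded exponential crossover of two parent values inside [a, b]."""
    rng = rng or random.Random()
    dist = abs(x1 - x2)
    if dist == 0.0:
        return x1, x2  # identical parents: no spread to sample from
    y1 = x1 + bex_beta(x1, a, b, dist, lam, rng) * dist
    y2 = x2 + bex_beta(x2, a, b, dist, lam, rng) * dist
    # Clamp to absorb floating-point rounding at the bounds
    return min(max(y1, a), b), min(max(y2, a), b)
```

Because the truncation is built into the sampling distribution, offspring never need to be repaired or rejected; the final clamp only absorbs rounding error.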

3.4.2. Polynomial Mutation Operator (PMO)

To avoid premature convergence in MOOPs, a mutation operator is used. In this paper, the polynomial mutation operator (PMO) is used with the proposed algorithm. PMO has two control parameters: the mutation probability of a parent solution ($p_m$) and the distribution index ($\eta_m$), which controls the magnitude of the expected mutation. For an individual $\vec{x} = (x_1, \ldots, x_n)$, PMO is computed as follows:

$$x'_k = x_k + \delta_k \, (x_k^{U} - x_k^{L}),$$

where $x'_k$ represents the $k$-th decision variable after mutation, and $x_k$ represents it before mutation. $x_k^{L}$ and $x_k^{U}$ represent the lower and upper bounds of the $k$-th decision variable, respectively. $\delta_k$ represents a small variation obtained by

$$\delta_k = \begin{cases} (2 r_k)^{1/(\eta_m + 1)} - 1, & \text{if } r_k < 0.5, \\ 1 - \big[2(1 - r_k)\big]^{1/(\eta_m + 1)}, & \text{otherwise}. \end{cases}$$

Here, $r_k$ is a random number in the interval [0, 1] that is compared with a predefined threshold (0.5 in the standard formulation), and $\eta_m$ represents the mutation distribution index.
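A minimal Python sketch of PMO as written above follows. The defaults $\eta_m = 20$ and $p_m = 1/n$ (with $n$ the number of decision variables) are common choices in the literature, used here as assumptions rather than as the settings of this paper. The final clipping to the variable bounds is an added safeguard, and the function name is hypothetical.

```python
import random
from typing import List, Optional, Sequence


def polynomial_mutation(x: Sequence[float], lower: Sequence[float], upper: Sequence[float],
                        eta_m: float = 20.0, p_m: Optional[float] = None,
                        rng: Optional[random.Random] = None) -> List[float]:
    """Return a mutated copy of x using polynomial mutation."""
    rng = rng or random.Random()
    n = len(x)
    p_m = 1.0 / n if p_m is None else p_m  # assumption: p_m = 1/n by default
    child = list(x)
    for k in range(n):
        if rng.random() >= p_m:
            continue  # variable k is not mutated
        r = rng.random()
        if r < 0.5:
            delta = (2.0 * r) ** (1.0 / (eta_m + 1.0)) - 1.0          # delta in [-1, 0)
        else:
            delta = 1.0 - (2.0 * (1.0 - r)) ** (1.0 / (eta_m + 1.0))  # delta in [0, 1)
        child[k] = x[k] + delta * (upper[k] - lower[k])
        child[k] = min(max(child[k], lower[k]), upper[k])  # keep within bounds
    return child
```

A larger $\eta_m$ concentrates $\delta_k$ near zero, so offspring stay closer to the parent; a smaller $\eta_m$ produces larger jumps.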

Algorithm 2. Bounded Exponential Crossover (BEX)

Input: parent solutions $x_1$ and $x_2$. Output: offspring solutions $y_1$ and $y_2$.

4. Proposed Algorithm

In this section, the proposed multi-objective optimization method is described in detail. The algorithm starts with parameter initialization. Then, a population of candidate solutions is generated. Next, fitness values are computed for each candidate solution, and the best solutions are selected. The population is iteratively updated until the termination condition is satisfied, and the resulting non-dominated solutions are returned. The proposed algorithm is presented in Algorithm 3, and the symbols used in it are explained in Table 2. The steps of the proposed algorithm are as follows.

Table 2.

Symbols used in the proposed algorithm.

4.1. Population Generation

The proposed algorithm maintains two populations: the searching population and the external population. The searching population, which initially contains the candidate solutions, is used to find non-dominated solutions, which are then stored in the external population. This process is performed iteratively.

Algorithm 3. Proposed Multi-Objective Optimization Algorithm

Input: searching population of fixed size; external population of fixed size. Output: non-dominated set of charged particles.

Begin: in each iteration, the non-dominated charged particles found in the searching population are copied into the external population while it has free capacity; otherwise, non-dominated solutions are deleted from the external population using Equation (17), as described in Section 4.3. Finally, each non-dominated solution is added to the output set.

4.2. Fitness Evaluation

The original AEFA is not directly suitable for MOOPs because of the way charge is defined: according to Equations (7) and (8), the charge of a particle depends on a single fitness value. Therefore, the multi-objective fitness assignment used in SPEA2 [38] is introduced to evaluate the charge in AEFA for two or more objectives. The multi-objective fitness of a charged particle $i$ in the proposed algorithm is calculated as follows:

$$F(i) = R(i) + D(i),$$

where $F(i)$ is the fitness value of the charged particle $i$, $R(i)$ is its raw fitness value, and $D(i)$ represents its additional density information. The raw fitness expresses the strength of each charged particle by assigning it a rank. Because more than one solution (charged particle) can have the same rank in a MOOP, an additional density measure is used to distinguish between them. The SDE technique is used to compute the additional density (crowding distance) $D(i)$.
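The fitness assignment can be sketched in Python as follows. The raw fitness $R(i)$ uses the usual SPEA2 definitions (strength $S(j)$ is the number of solutions that $j$ dominates, and $R(i)$ is the sum of $S(j)$ over all $j$ that dominate $i$). The density $D(i) = 1/(\sigma_k + 2)$ is computed from distances after SDE shifting. These details, $k = 1$, and the function name are assumptions made to illustrate the idea, not the paper's exact formulas. Lower $F(i)$ is better, and non-dominated solutions always have $F(i) < 1$.

```python
import math
from typing import List, Sequence


def dominates(u: Sequence[float], v: Sequence[float]) -> bool:
    """Pareto dominance under minimization."""
    return all(a <= b for a, b in zip(u, v)) and any(a < b for a, b in zip(u, v))


def spea2_sde_fitness(objs: List[Sequence[float]], k: int = 1) -> List[float]:
    """F(i) = R(i) + D(i) with SPEA2 raw fitness and an SDE-based density."""
    n = len(objs)
    # Strength S(j): how many solutions j dominates
    strength = [sum(dominates(objs[j], objs[t]) for t in range(n)) for j in range(n)]
    # Raw fitness R(i): total strength of the solutions that dominate i (0 if non-dominated)
    raw = [sum(strength[j] for j in range(n) if dominates(objs[j], objs[i])) for i in range(n)]
    fitness = []
    for i, p in enumerate(objs):
        # SDE shift for minimization: each objective of q becomes max(f(p), f(q))
        dists = sorted(
            math.dist(p, [max(fp, fq) for fp, fq in zip(p, q)])
            for j, q in enumerate(objs) if j != i
        )
        sigma_k = dists[min(k, len(dists)) - 1] if dists else math.inf
        fitness.append(raw[i] + 1.0 / (sigma_k + 2.0))  # D(i) = 1 / (sigma_k + 2)
    return fitness


print(spea2_sde_fitness([(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]))
```

Since $D(i) \le 0.5$, the density term only breaks ties among particles with equal raw fitness and never overrides the dominance-based rank.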

4.3. A Fine-Grained Elitism Selection Mechanism

Elitism selection is a critical process in multi-objective optimization because it determines which of the fittest solutions are carried into the next generation. The selection process therefore affects the output Pareto front: if a better solution is lost during selection, it may never be found again. Selecting solutions that dominate other solutions helps remove worse solutions. In this study, a modified fine-grained elitism selection method that uses the shift-based density estimation (SDE) approach is proposed to improve population diversity. Like the standard non-dominated selection mechanism, the proposed method selects non-dominated solutions, as follows:

Because the external population size is fixed, non-dominated solutions are copied into the external population set as long as its current size is less than this fixed size. Otherwise, surplus non-dominated solutions are discarded from the external population set. To discard solutions while maintaining diversity, the proposed method deletes the solution in the most crowded region, computed using the SDE approach described in Section 3.3. The steps used to compute the shared crowding distance are as follows.

- The distance between two adjacent particles $i$ and $j$ is computed as in Algorithm 1.

- For each particle, an additional density estimation (shared crowding distance) is computed as follows:

When the number of non-dominated solutions exceeds the external population size, the proposed method selects a charged particle from the external population using Equation (17) and deletes it. This process is repeated until the number of solutions in the external population equals its fixed size.

Here, the solution (charged particle) whose density is greater than the average shared crowding distance of all non-dominated solutions in the external population is considered the worst solution and is deleted from the external population set. The average shared crowding distance is then recomputed and used in the same manner until the external population is reduced to its fixed size.
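The truncation loop can be sketched in Python as below. Since Equation (17) and the shared crowding distance are not reproduced here, the sketch substitutes an SDE nearest-neighbour density, $1/(\sigma_1 + 2)$, as a stand-in. Among the solutions whose density exceeds the current average, it removes the most crowded one and then recomputes the densities and the average. Minimization and the function names are assumptions.

```python
import math
from typing import List, Sequence


def sde_density(objs: List[Sequence[float]]) -> List[float]:
    """Stand-in density: 1 / (sigma_1 + 2) with SDE-shifted nearest-neighbour distance."""
    dens = []
    for i, p in enumerate(objs):
        d = [math.dist(p, [max(fp, fq) for fp, fq in zip(p, q)])
             for j, q in enumerate(objs) if j != i]
        dens.append(1.0 / (min(d) + 2.0) if d else 0.0)
    return dens


def truncate_archive(archive: List[Sequence[float]], capacity: int) -> List[Sequence[float]]:
    """Delete crowded solutions one at a time until the archive fits its capacity."""
    archive = list(archive)
    while len(archive) > capacity:
        dens = sde_density(archive)
        avg = sum(dens) / len(dens)
        # Candidates: solutions denser than the current average (fallback: all)
        candidates = [i for i, d in enumerate(dens) if d > avg] or list(range(len(archive)))
        worst = max(candidates, key=lambda i: dens[i])  # most crowded candidate
        archive.pop(worst)  # densities and average are recomputed next pass
    return archive


kept = truncate_archive([(0.0, 10.0), (5.0, 5.0), (5.1, 4.9), (10.0, 0.0)], capacity=3)
print(kept)
```

Removing one solution per pass, rather than all above-average solutions at once, lets the densities of the neighbours of a deleted point relax before the next deletion.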

5. Experimental Results and Discussion

This section is further divided into three sub-sections. Section 5.1 discusses the performance comparison of the AEFA with existing evolutionary approaches. Section 5.2 gives a performance comparison of the proposed algorithm with existing multi-objective optimization algorithms, and Section 5.3 presents a sensitivity analysis of the proposed algorithm.

5.1. Performance Comparison of the AEFA With Existing Evolutionary Approaches

First, the performance of AEFA is evaluated on 10 benchmark functions: three unimodal (UM), three low-dimensional multimodal (LDMM), and four high-dimensional multimodal (HDMM) functions. All of them are minimization problems. The benchmark functions are described in Table 3. The performance of AEFA is then compared with that of existing evolutionary approaches. For the comparison, four statistical performance indices are considered: average best-so-far solution, mean best-so-far solution, variance of the best-so-far solution, and average run time. During each iteration, the algorithm updates the best-so-far solution and records the running time, and the performance indices are computed from the obtained best-so-far solutions and running times.

Table 3.

Benchmark functions used for performance comparison of evolutionary algorithms.

5.1.1. Parameter Setting

For the experimental analysis, the parameters, i.e., the population size, the maximum (initial) Coulomb’s constant, and the maximum number of iterations, are initialized as given in Table 4.

Table 4.

Parameters used to evaluate AEFA.

5.1.2. Results and Discussion

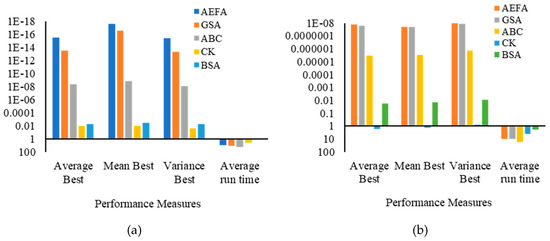

The performance of AEFA is compared with that of existing evolutionary optimization algorithms: the backtracking search algorithm (BSA) [36], cuckoo search algorithm (CK) [36], artificial bee colony (ABC) algorithm [36], and gravitational search algorithm (GSA) [36]. The results are presented in Table 5 and Figure 1. Figure 1 shows the differences between AEFA and the other algorithms based on the values of the performance measures on the benchmark functions. For better visualization, all results (except Figure 1d–h) are plotted on a logarithmic scale (base 10), where a larger logarithmic value represents a smaller value of the performance measure. The results in Table 5 and Figure 1 demonstrate that AEFA obtained the minimum values for all UM and LDMM functions. These values are competitive with those of GSA and better than those of ABC, CK, and BSA, which indicates that AEFA maintains a balance between exploration and exploitation and performs better than the existing approaches on these functions. However, on the HDMM functions (except F10), AEFA performs worse than ABC and BSA, which suggests that AEFA suffers from a loss of diversity, resulting in premature convergence on complex HDMM problems. The results therefore indicate that the original AEFA faces challenges in solving high-dimensional multimodal problems.

Table 5.

Performance comparison between AEFA and existing evolutionary optimization algorithms.

Figure 1.

Difference among algorithms based on the values obtained by performance measures on benchmark functions. (a) Performance measurement for F1 benchmark function. (b) Performance measurement for F2 benchmark function. (c) Performance measurement for F3 benchmark function. (d) Performance measurement for F4 benchmark function. (e) Performance measurement for F5 benchmark function. (f) Performance measurement for F6 benchmark function. (g) Performance measurement for F7 benchmark function. (h) Performance measurement for F8 benchmark function. (i) Performance measurement for F9 benchmark function. (j) Performance measurement for F10 benchmark function.

5.2. Performance Comparison of the Proposed Algorithm with Existing Multi-Objective Optimization Algorithms

As shown in Table 5, the original AEFA faces challenges on complex high-dimensional multimodal problems. The proposed algorithm addresses these challenges for MOOPs. It is evaluated on six benchmark functions, which are described in Table 6. Its performance is compared with that of existing multi-objective algorithms based on four performance measures: the generational distance metric (GD) [2], diversity metric (DM) [3], convergence metric (CM) [3], and spacing metric (SM) [39]. DM measures the extent of spread achieved by the obtained solutions. CM measures how closely the obtained solutions converge to the true Pareto-optimal front. GD measures the proximity of the obtained solutions to the true Pareto-optimal front. SM measures how evenly the obtained solutions are distributed among themselves. Furthermore, the proposed algorithm is compared with existing multi-objective algorithms with respect to the recombination and mutation operators.
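For reference, two of these metrics can be sketched in Python using their common definitions: $GD = \sqrt{\sum_i d_i^2}/n$, where $d_i$ is the Euclidean distance from the $i$-th obtained solution to the nearest point of a sampled true Pareto front, and Schott's spacing $SM = \sqrt{\sum_i (\bar{d} - d_i)^2/(n - 1)}$, where $d_i$ is the Manhattan distance to the nearest other obtained solution. These formulas and the function names are assumptions about the standard definitions, not code from the paper; lower values are better for both.

```python
import math
from typing import List, Sequence


def generational_distance(front: List[Sequence[float]],
                          reference: List[Sequence[float]]) -> float:
    """GD: root of summed squared nearest distances to the reference front, divided by n."""
    d = [min(math.dist(p, r) for r in reference) for p in front]
    return math.sqrt(sum(x * x for x in d)) / len(front)


def spacing(front: List[Sequence[float]]) -> float:
    """Schott's spacing: spread of nearest-neighbour (Manhattan) distances; needs n >= 2."""
    n = len(front)
    d = [
        min(sum(abs(a - b) for a, b in zip(front[i], front[j])) for j in range(n) if j != i)
        for i in range(n)
    ]
    mean = sum(d) / n
    return math.sqrt(sum((mean - di) ** 2 for di in d) / (n - 1))


pts = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
print(generational_distance(pts, pts), spacing(pts))
```

A front that lies on the reference set gives GD = 0, and perfectly even spacing gives SM = 0, which matches the reading of these metrics in the text above.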

Table 6.

Benchmark functions used for MOOP performance comparison.

5.2.1. Parameter Setting

For the experimental analysis, the parameters, i.e., the initial population size, external population size, maximum (initial) Coulomb’s constant, maximum number of iterations, initial crossover probability, final crossover probability, initial mutation probability, and final mutation probability, are initialized as presented in Table 7.

Table 7.

Parameters used in the proposed algorithm.

5.2.2. Results and Discussion

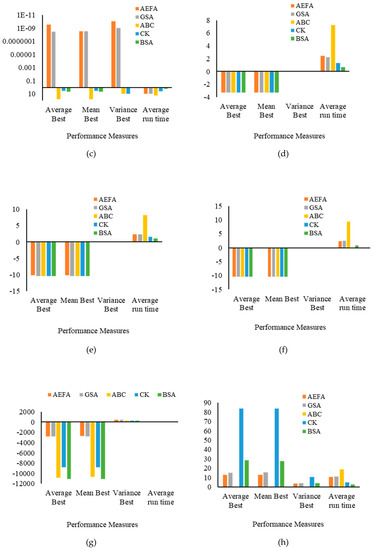

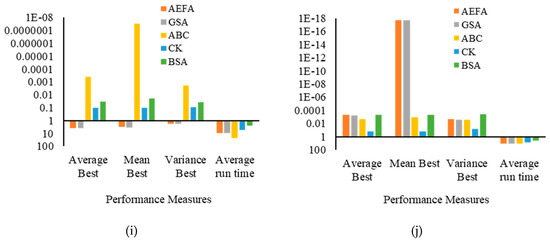

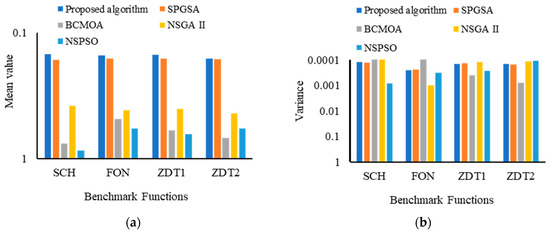

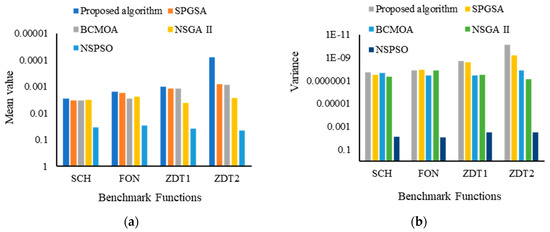

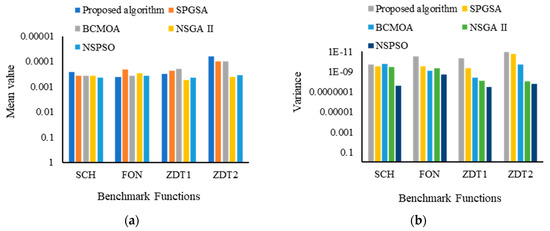

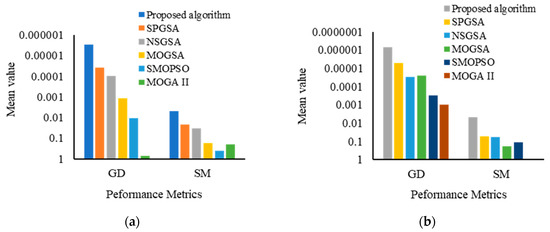

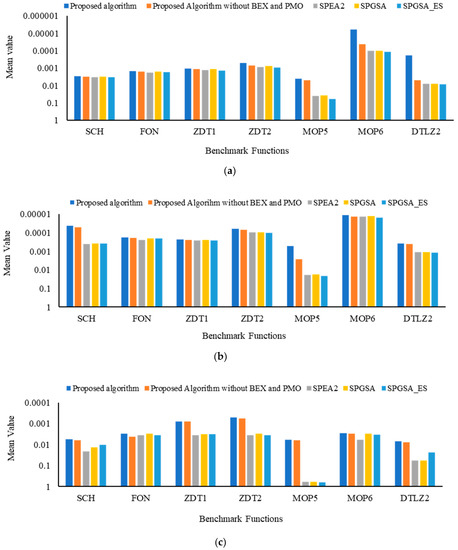

The performance of the proposed algorithm is analyzed in three steps: (1) the proposed algorithm is evaluated on the SCH, FON, and ZDT benchmarks and compared with NSGA-II [3], NSPSO [7], BCMOA [40], and SPGSA [36] based on the CM, DM, and GD metrics; (2) the proposed algorithm is evaluated on the MOP5 and MOP6 benchmarks and compared with NSGSA [36], MOGSA [36], SMOPSO [36], MOGA II [36], and SPGSA [36] based on the GD and SM metrics; and (3) the efficacy of the recombination and mutation operators is validated by evaluating the proposed algorithm on six benchmarks and comparing it with existing multi-objective algorithms. Experiments are repeated 10 times on each benchmark function, and the results are recorded as mean and variance to assess the robustness of the algorithm. The results are presented in Table 8, Table 9, Table 10, Table 11 and Table 12 and Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6. For better visualization, all results in Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6 are plotted on a logarithmic scale, where a higher logarithmic value corresponds to a smaller output value (mean and variance). The results in Table 8 and Figure 2 demonstrate that the proposed algorithm obtained lower CM values than NSGA-II, NSPSO, BCMOA, and SPGSA, which indicates that it achieves better convergence and keeps a good balance between exploration and exploitation on both low-dimensional and high-dimensional problems. Figure 3 and Table 9 show that the proposed algorithm obtained the lowest variance of the DM metric on all benchmark functions compared with NSGA-II, NSPSO, BCMOA, and SPGSA, which supports the efficacy and robustness of the proposed algorithm. The results in Table 10 (GD metric) and Figure 4 demonstrate that the proposed algorithm performed better than NSGA-II, NSPSO, BCMOA, and SPGSA.
The GD metric measures the proximity of the obtained solutions to the true Pareto-optimal front, so these results support the effectiveness of the proposed algorithm. Table 11 and Figure 5 demonstrate that the proposed algorithm achieves better GD and SM values than the compared algorithms. The GD values indicate that the proposed algorithm converges more accurately, and the SM values indicate that it produces more uniformly distributed non-dominated solutions on all benchmark functions. Table 12 and Figure 6a–c compare the proposed algorithm with SPEA2, SPGSA, SPGSA_NRM, and a variant of the proposed algorithm without the BEX and PMO operators. The results indicate that including the BEX and PMO operators significantly improves the optimization performance of the proposed algorithm relative to the compared algorithms. Conversely, without these operators, the proposed algorithm suffers from premature convergence. Therefore, all four metrics (Table 8, Table 9, Table 10 and Table 11) and Table 12 indicate that the proposed algorithm shows better efficacy, accuracy, and robustness in searching for the true Pareto front across the global search space than the existing algorithms. Furthermore, with the BEX and PMO operators, the proposed algorithm maintains the desired diversity while searching for the true Pareto front.

Table 8.

Performance comparison of the proposed algorithm with existing MOOPs based on SCH, FON, and ZDT functions using the CM metric.

Table 9.

Performance comparison of the proposed algorithm with existing MOOPs based on SCH, FON, and ZDT functions using the DM metric.

Table 10.

Performance comparison of the proposed algorithm with existing MOOPs based on SCH, FON, and ZDT functions using the GD metric.

Table 11.

Performance comparison of the proposed algorithm with existing MOOPs based on MOP5 and MOP6 functions using the GD/SM metric.

Table 12.

Performance comparison of the proposed algorithm with existing MOOPs based on recombination and mutation operators.

Figure 2.

Performance comparison of the proposed algorithm with existing MOOPs based on SCH, FON, and ZDT functions using the CM metric. (a) Performance measurement based on mean value; (b) performance measurement based on variance.

Figure 3.

Performance comparison of the proposed algorithm with existing MOOPs based on SCH, FON, and ZDT functions using the DM metric. (a) Performance measurement based on mean value; (b) performance measurement based on variance.

Figure 4.

Performance comparison of the proposed algorithm with existing multi-objective algorithms on the SCH, FON, and ZDT functions using the GD metric. (a) Performance measurement based on mean value; (b) performance measurement based on variance.

Figure 5.

Performance comparison of the proposed algorithm with existing multi-objective algorithms on the MOP5 and MOP6 functions using the GD and SM metrics. (a) Performance measurement for the MOP5 benchmark function; (b) performance measurement for the MOP6 benchmark function.

Figure 6.

Performance comparison of the proposed algorithm with existing multi-objective algorithms with respect to the recombination (BEX) and mutation (PMO) operators. (a) CM metric; (b) GD metric; (c) SM metric.

5.3. Sensitivity Analysis of the Proposed Algorithm

Sensitivity analysis determines how changes in the input parameters influence the robustness of the outcome. In this paper, the robustness of the proposed algorithm is evaluated in two steps: (1) sensitivity analysis 1 and (2) sensitivity analysis 2. Sensitivity analysis 1 is performed for different parameter values of the crossover operator (BEX), the mutation operator (PMO), and the initial value of Coulomb's constant. Sensitivity analysis 2 is performed for different values of the initial population size and the maximum number of iterations. The sensitivity analysis and the related parameter settings are described in detail in Table 13. Further, a combined performance score, described in Table 14, is used to measure the performance of the proposed algorithm.
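To make the operators whose parameters are varied in sensitivity analysis 1 more concrete, the following Python sketch shows single-variable versions of polynomial mutation and bounded exponential crossover. The polynomial mutation follows Deb's widely used formulation with distribution index `eta_m`. The BEX function reflects our reading of Thakur et al. (listed in the references): the child is the parent plus an offset of scale `lam * |x1 - x2|`, drawn on a randomly chosen side of the parent from an exponential distribution truncated so that the child stays inside the variable bounds. Which of these parameters correspond to the settings in Table 13 is an assumption, the function names are hypothetical, and the sketch assumes `lo < hi` with the parents inside `[lo, hi]`.

```python
import math
import random


def polynomial_mutation(x: float, lo: float, hi: float, eta_m: float,
                        rng: random.Random) -> float:
    """Deb's polynomial mutation of one variable in [lo, hi]; a larger eta_m
    keeps the mutant closer to x."""
    span = hi - lo
    d1, d2 = (x - lo) / span, (hi - x) / span
    r = rng.random()
    power = 1.0 / (eta_m + 1.0)
    if r < 0.5:
        val = 2.0 * r + (1.0 - 2.0 * r) * (1.0 - d1) ** (eta_m + 1.0)
        delta_q = val ** power - 1.0      # in [-d1, 0): move toward lo
    else:
        val = 2.0 * (1.0 - r) + 2.0 * (r - 0.5) * (1.0 - d2) ** (eta_m + 1.0)
        delta_q = 1.0 - val ** power      # in [0, d2): move toward hi
    return min(max(x + delta_q * span, lo), hi)


def bex_offspring(parent: float, other: float, lo: float, hi: float,
                  lam: float, rng: random.Random) -> float:
    """Hypothetical single-variable BEX sketch: child = parent + offset, where the
    offset has scale lam * |parent - other| and follows an exponential distribution
    truncated so that the child stays in [lo, hi]."""
    s = lam * abs(parent - other)
    if s == 0.0:
        return parent  # identical parents (or lam == 0): no spread to sample from
    if rng.random() <= 0.5:
        # Left side: truncated exponential on [lo, parent], densest near parent.
        u = 1.0 - rng.random()  # u in (0, 1] keeps the log argument positive
        a = math.exp(-(parent - lo) / s)
        child = parent + s * math.log(a + u * (1.0 - a))
    else:
        # Right side: truncated exponential on [parent, hi], densest near parent.
        u = rng.random()        # u in [0, 1) keeps the log argument positive
        b = math.exp(-(hi - parent) / s)
        child = parent - s * math.log(1.0 - u * (1.0 - b))
    return min(max(child, lo), hi)  # guard against floating-point round-off


rng = random.Random(42)
x1, x2 = 0.2, 0.7
children = (bex_offspring(x1, x2, 0.0, 1.0, 0.5, rng),
            bex_offspring(x2, x1, 0.0, 1.0, 0.5, rng))
mutants = [polynomial_mutation(c, 0.0, 1.0, 20.0, rng) for c in children]
print(children, mutants)
```

Sampling through the inverse of the truncated distribution, rather than sampling and then clipping, keeps every child feasible without piling probability mass onto the bounds.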

Table 13.

Parameter settings for sensitivity analysis.

Table 14.

Performance measure for sensitivity analysis.

Results and Discussion

The sensitivity of the mutation operator (PMO), the crossover operator (BEX), the initial population size, the maximum number of iterations, and the initial value of Coulomb's constant is analyzed using the GD and SM metrics, and the results are presented in Table 15, Table 16, Table 17, Table 18 and Table 19, respectively. Table 15 shows that the performance under different PMO settings varies from “Average” to “Good”, which means that suitable mutation parameter values must be selected to achieve optimum results. Table 16 shows that the performance under different BEX settings varies from “Average” to “Very Good”, which means that the proposed algorithm has low sensitivity to BEX. Table 17 shows that the performance under different population sizes varies from “Average” to “Very Good”, which means that the proposed algorithm is moderately sensitive to the population size. Table 18 shows that the performance is “Good” for all tested values of the maximum number of iterations, which means that the proposed algorithm is less sensitive to this parameter. Table 19 shows that the performance under different initial values of Coulomb's constant varies from “Good” to “Very Good”, which means that the proposed algorithm is less sensitive to this parameter. It is concluded from Table 15, Table 16, Table 17, Table 18 and Table 19 that the proposed algorithm works efficiently in all tested scenarios, which indicates its robustness.
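As we understand the original AEFA (Yadav, listed in the references), Coulomb's constant is not fixed but decays over the run as K(t) = K0 · exp(−α · t / T), where K0 is its initial value, α is a decay rate, and T is the maximum number of iterations. Assuming the multi-objective variant keeps this schedule, the sketch below shows how K0 enters: it sets the overall scale of the electric force, while the exponential factor drives the force toward zero late in the run. The values of `k0` and `alpha` used here are illustrative and are not the settings from Table 13; the function name is hypothetical.

```python
import math


def coulomb_constant(k0: float, alpha: float, t: int, max_iter: int) -> float:
    """AEFA-style schedule K(t) = K0 * exp(-alpha * t / max_iter)."""
    return k0 * math.exp(-alpha * t / max_iter)


# Illustrative settings only; not the K0 values tested in Table 13.
for k0 in (100.0, 500.0, 1000.0):
    schedule = [coulomb_constant(k0, 30.0, t, 100) for t in (0, 25, 50, 100)]
    print(k0, [f"{k:.3g}" for k in schedule])
```

Under this assumption, K0 multiplies every term of the schedule, so changing it rescales the force at all iterations by the same factor, whereas α controls how quickly the force shrinks as the search moves from exploration toward exploitation.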

Table 15.

Sensitivity analysis of mutation parameter (PMO).

Table 16.

Sensitivity analysis of crossover parameter (BEX).

Table 17.

Sensitivity analysis of initial population size.

Table 18.

Sensitivity analysis of the maximum number of iterations.

Table 19.

Sensitivity analysis of the initial value of Coulomb’s constant.

6. Conclusions and Future Work

In this paper, an improved population-based metaheuristic algorithm, AEFA, is proposed to handle multi-objective optimization problems. The proposed algorithm uses strength Pareto dominance theory to refine fitness assignment and an improved fine-grained elitism selection based on the shift-based density estimation (SDE) mechanism to maintain population diversity. Further, it combines the SDE technique with strength Pareto dominance theory for density estimation, and it implements bounded exponential crossover (BEX) and the polynomial mutation operator (PMO) to prevent solutions from being trapped in local optima and to enhance convergence. The experiments were performed in two steps. In the first step, AEFA was evaluated on different low-dimensional and high-dimensional benchmark functions, and its performance was compared with existing evolutionary optimization algorithms (EOAs) on four criteria: average best-so-far solution, mean best-so-far solution, variance of the best-so-far solution, and average run time. The experimental results show that AEFA performed better than the existing EOAs on low-dimensional benchmark functions, but on complex high-dimensional benchmark functions it suffered from loss of diversity and performed worse. In the second step, the proposed algorithm was evaluated on different benchmark functions, and its performance was compared with existing evolutionary multi-objective optimization algorithms (EMOOPs) using the diversity, convergence, generational distance, and spacing metrics. The experimental results show that the proposed algorithm not only finds the optimal Pareto front (non-dominated solutions) but also performs better than the existing multi-objective optimization techniques in terms of accuracy, robustness, and efficacy. In the future, the proposed work can be extended in several directions.
One direction is to extend the proposed algorithm to various real-world multi-objective optimization problems. The proposed algorithm could also be hybridized with other existing algorithms to solve complex problems.
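The fitness assignment summarized in the conclusions can be sketched as follows, assuming the SPEA2 formulation of Zitzler et al. combined with the shift-based density estimation of Li et al. (both listed in the references). Each individual's strength S(i) counts the individuals it dominates, its raw fitness R(i) sums the strengths of the individuals that dominate it, and its density D(i) = 1/(σ_k + 2) uses the distance σ_k to its k-th nearest neighbour with k = ⌊√N⌋, measured after SDE shifts each neighbour q to max(q_m, p_m) in every objective m. Fitness is R(i) + D(i) under minimization, and values below 1 mark non-dominated individuals. This is not the authors' implementation; the function names are hypothetical, and the population must contain at least two individuals.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dominates(a: Vector, b: Vector) -> bool:
    """Pareto dominance for minimization."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def sde_distance(p: Vector, q: Vector) -> float:
    """Shift-based distance: q is moved to max(q_m, p_m) in each objective before
    measuring, so neighbours that are better than p in some objective look closer."""
    shifted = [max(qm, pm) for pm, qm in zip(p, q)]
    return math.dist(p, shifted)


def spea2_sde_fitness(objs: List[Vector]) -> List[float]:
    """SPEA2 fitness R(i) + D(i) with SDE-based k-th nearest-neighbour density."""
    n = len(objs)
    strength = [sum(dominates(objs[i], objs[j]) for j in range(n)) for i in range(n)]
    raw = [sum(strength[j] for j in range(n) if dominates(objs[j], objs[i]))
           for i in range(n)]
    k = int(math.sqrt(n))
    fitness = []
    for i in range(n):
        dists = sorted(sde_distance(objs[i], objs[j]) for j in range(n) if j != i)
        sigma_k = dists[min(k, len(dists)) - 1]  # distance to k-th nearest neighbour
        fitness.append(raw[i] + 1.0 / (sigma_k + 2.0))
    return fitness


population = [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0), (3.0, 3.0)]
print(spea2_sde_fitness(population))  # approximately [0.25, 0.25, 0.25, 1.333]
```

In this small example, (3, 3) is dominated by (2, 2), so its raw fitness is 1 and its total fitness exceeds 1, while the three mutually non-dominated points keep fitness values below 1.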

Author Contributions

Conceptualization, Methodology, Formal analysis, Validation, Writing—Original Draft Preparation, H.P.; Supervision, R.R. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Nobahari, H.; Nikusokhan, M.; Siarry, P. Non-dominated sorting gravitational search algorithm. In Proceedings of the 2011 International Conference on Swarm Intelligence, Cergy, France, 14–15 June 2011; pp. 1–10.

- Van Veldhuizen, D.A.; Lamont, G.B. On measuring multiobjective evolutionary algorithm performance. In Proceedings of the 2000 Congress on Evolutionary Computation, La Jolla, CA, USA, 16–19 July 2000; Volume 1, pp. 204–211.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Zhao, B.; Cao, Y.J. Multiple Objective Particle Swarm Optimization Technique for Economic Load Dispatch. J. Zhejiang Univ. Sci. A 2005, 6, 420–427.

- Cagnina, L. A Particle Swarm Optimizer for Multi-Objective Optimization. J. Comput. Sci. Technol. 2005, 5, 204–210.

- Zhang, Y.; Gong, D.W.; Ding, Z. A bare-bones multi-objective particle swarm optimization algorithm for environmental/economic dispatch. Inf. Sci. 2012, 192, 213–227.

- Li, X. A non-dominated sorting particle swarm optimizer for multiobjective optimization. In Genetic and Evolutionary Computation Conference; Springer: Berlin/Heidelberg, Germany, 2003; pp. 37–48.

- Yadav, A. AEFA: Artificial electric field algorithm for global optimization. Swarm Evol. Comput. 2019, 48, 93–108.

- Demirören, A.; Hekimoğlu, B.; Ekinci, S.; Kaya, S. Artificial Electric Field Algorithm for Determining Controller Parameters in AVR system. In Proceedings of the 2019 International Artificial Intelligence and Data Processing Symposium (IDAP), Malatya, Turkey, 21–22 September 2019; pp. 1–7.

- Abdelsalam, A.A.; Gabbar, H.A. Shunt Capacitors Optimal Placement in Distribution Networks Using Artificial Electric Field Algorithm. In Proceedings of the 2019 the 7th International Conference on Smart Energy Grid Engineering (SEGE), Oshawa, ON, Canada, 12–14 August 2019; pp. 77–85.

- Shafik, M.B.; Rashed, G.I.; Chen, H. Optimizing Energy Savings and Operation of Active Distribution Networks Utilizing Hybrid Energy Resources and Soft Open points: Case Study in Sohag, Egypt. IEEE Access 2020, 8, 28704–28717.

- Thakur, M.; Meghwani, S.S.; Jalota, H. A modified real coded genetic algorithm for constrained optimization. Appl. Math. Comput. 2014, 235, 292–317.

- Gong, M.; Jiao, L.; Du, H.; Bo, L. Multiobjective immune algorithm with nondominated neighbor-based selection. Evol. Comput. 2008, 16, 225–255.

- Liu, Y.; Niu, B.; Luo, Y. Hybrid learning particle swarm optimizer with genetic disturbance. Neurocomputing 2015, 151, 1237–1247.

- Fonseca, C.M.; Fleming, P.J. Genetic algorithms for multi-objective optimization: Formulation, discussion and generalization. In Proceedings of the 5th International Conference on Genetic Algorithms, San Mateo, CA, USA, 1 June 1993; pp. 416–423.

- Horn, J.; Nafpliotis, N.; Goldberg, D.E. A niched Pareto genetic algorithm for multi-objective optimization. In Proceedings of the 1st IEEE Conference on Evolutionary Computation, Orlando, FL, USA, 27–29 June 1994; pp. 82–87.

- Tan, K.C.; Goh, C.K.; Mamun, A.A.; Ei, E.Z. An evolutionary artificial immune system for multi-objective optimization. Eur. J. Oper. Res. 2008, 187, 371–392.

- Zitzler, E. Evolutionary Algorithms for Multiobjective Optimization: Methods and Applications; Ithaca: Shaker, OH, USA, 1999; Volume 63.

- Srinivas, N.; Deb, K. Multi-Objective function optimization using non-dominated sorting genetic algorithms. Evol. Comput. 1995, 2, 221–248.

- Zitzler, E.; Thiele, L. Multiobjective optimization using evolutionary algorithms—A comparative case study. In International Conference on Parallel Problem Solving from Nature; Springer: Berlin/Heidelberg, Germany, 1998; pp. 292–301.

- Rudolph, G. Technical Report No. CI-67/99: Evolutionary Search under Partially Ordered Sets; Dortmund Department of Computer Science/LS11, University of Dortmund: Dortmund, Germany, 1999.

- Rughooputh, H.C.; King, R.A. Environmental/economic dispatch of thermal units using an elitist multiobjective evolutionary algorithm. In Proceedings of the IEEE International Conference on Industrial Technology, Maribor, Slovenia, 10–12 December 2003; Volume 1, pp. 48–53.

- Deb, K. Multi-Objective Optimization Using Evolutionary Algorithms; John Wiley & Sons: New York, NY, USA, 2001; Volume 16.

- Vrugt, J.A.; Robinson, B.A. Improved evolutionary optimization from genetically adaptive multimethod search. Proc. Natl. Acad. Sci. USA 2007, 104, 708–711.

- Deb, K.; Jain, H. An evolutionary many-objective optimization algorithm using reference-point-based nondominated sorting approach, part I: Solving problems with box constraints. IEEE Trans. Evol. Comput. 2013, 18, 577–601.

- Jain, H.; Deb, K. An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: Handling constraints and extending to an adaptive approach. IEEE Trans. Evol. Comput. 2013, 18, 602–622.

- Zhao, B.; Guo, C.X.; Cao, Y.J. A multiagent-based particle swarm optimization approach for optimal reactive power dispatch. IEEE Trans. Power Syst. 2005, 20, 1070–1078.

- Zhang, Q.; Li, H. MOEA/D: A multiobjective evolutionary algorithm based on decomposition. IEEE Trans. Evol. Comput. 2007, 11, 712–731.

- Ma, X.; Liu, F.; Qi, Y.; Li, L.; Jiao, L.; Liu, M.; Wu, J. MOEA/D with Baldwinian learning inspired by the regularity property of continuous multiobjective problem. Neurocomputing 2014, 145, 336–352.

- Martinez, S.Z.; Coello, C.A.C. A multi-objective evolutionary algorithm based on decomposition for constrained multi-objective optimization. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 429–436.

- Gu, F.; Liu, H.L.; Tan, K.C. A multiobjective evolutionary algorithm using dynamic weight design method. Int. J. Innov. Comput. Inf. Control 2012, 8, 3677–3688.

- Zhang, X.; Tian, Y.; Cheng, R.; Jin, Y. An efficient approach to nondominated sorting for evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 2014, 19, 201–213.

- Chong, J.K.; Qiu, X. An Opposition-Based Self-Adaptive Differential Evolution with Decomposition for Solving the Multiobjective Multiple Salesman Problem. In Proceedings of the 2016 IEEE Congress on Evolutionary Computation (CEC), Vancouver, BC, Canada, 24–29 July 2016; pp. 4096–4103.

- Cheng, R.; Jin, Y.; Olhofer, M.; Sendhoff, B. A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 2016, 20, 773–791.

- Hassanzadeh, H.R.; Rouhani, M. A multi-objective gravitational search algorithm. In Proceedings of the 2010 2nd International Conference on Computational Intelligence, Communication Systems and Networks, Liverpool, UK, 28–30 July 2010; pp. 7–12.

- Yuan, X.; Chen, Z.; Yuan, Y.; Huang, Y.; Zhang, X. A strength pareto gravitational search algorithm for multi-objective optimization problems. Int. J. Pattern Recognit. Artif. Intell. 2015, 29, 1559010.

- Li, M.; Yang, S.; Liu, X. Shift-based density estimation for Pareto-based algorithms in many-objective optimization. IEEE Trans. Evol. Comput. 2013, 18, 348–365.

- Zitzler, E.; Laumanns, M.; Thiele, L. TIK report 103: SPEA2: Improving the Strength Pareto Evolutionary Algorithm; Computer Engineering and Networks Laboratory (TIK), ETH Zurich: Zurich, Switzerland, 2001.

- Schott, J.R. Fault Tolerant Design Using Single and Multicriteria Genetic Algorithm Optimization; Air Force Institute of Technology, Wright-Patterson AFB: Cambridge, MA, USA, 1995.

- Guzmán, M.A.; Delgado, A.; De Carvalho, J. A novel multiobjective optimization algorithm based on bacterial chemotaxis. Eng. Appl. Artif. Intell. 2010, 23, 292–301.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).