Research on Intelligent Early Warning and Emergency Response Mechanism for Tunneling Face Gas Concentration Based on an Improved KAN-iTransformer

Abstract

1. Introduction

2. Project Background and Data Processing

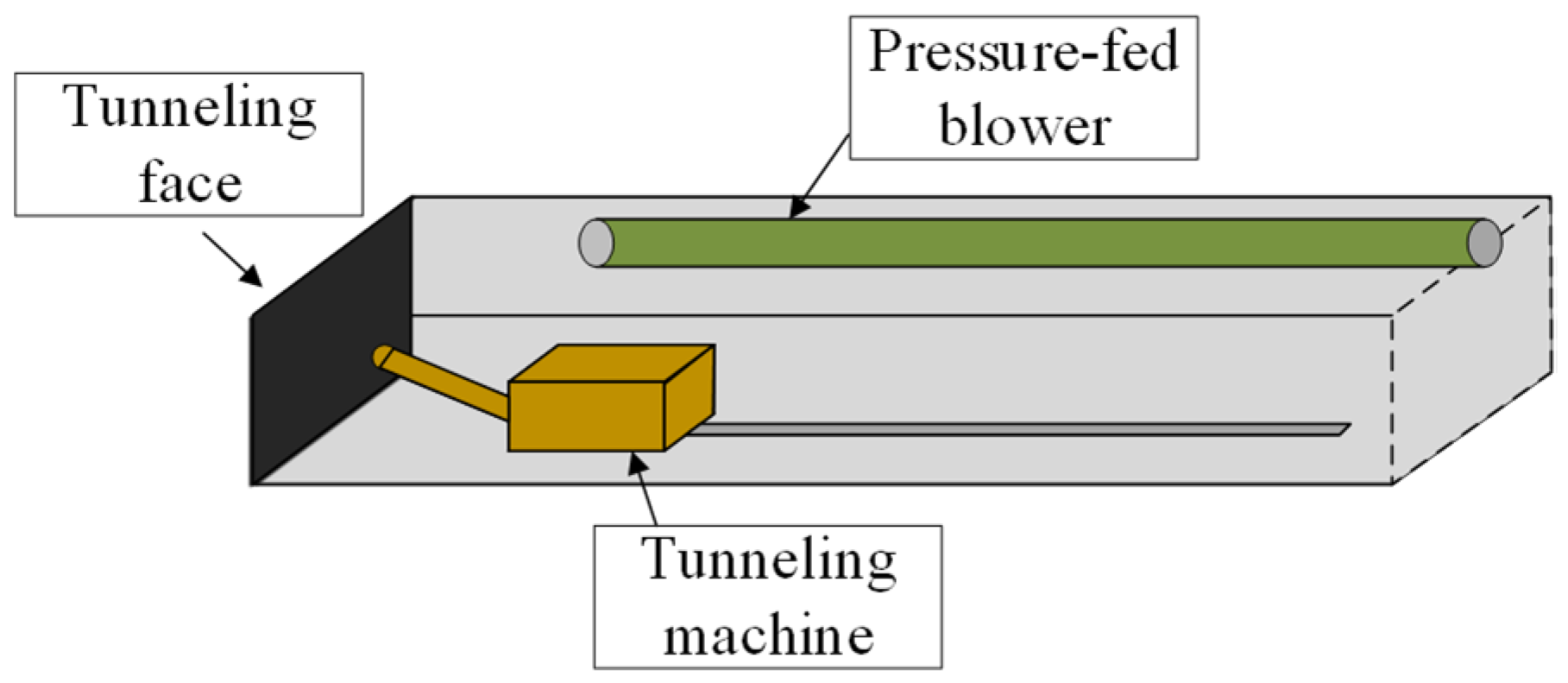

2.1. Project Background and Data Sources

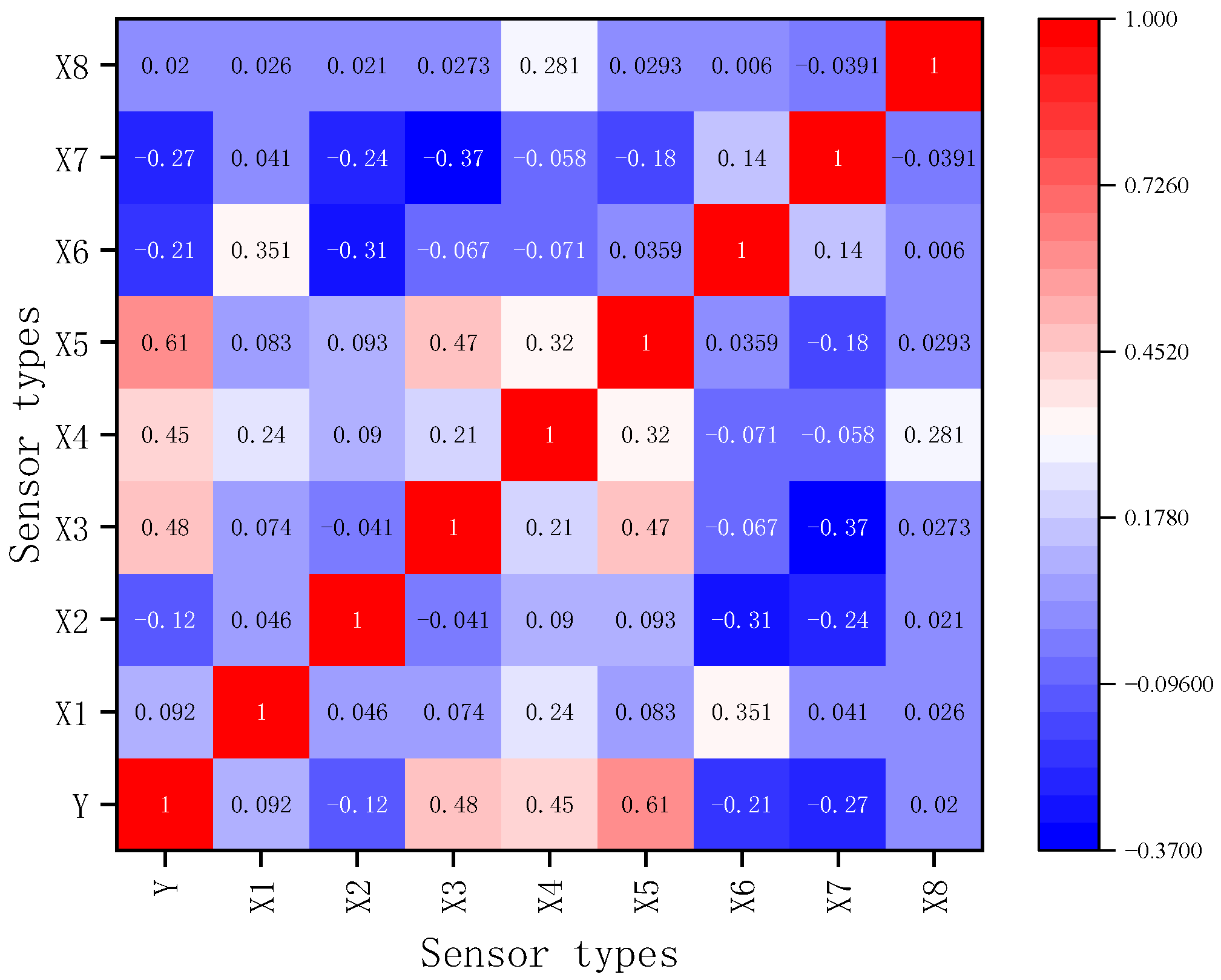

2.2. Feature Extraction

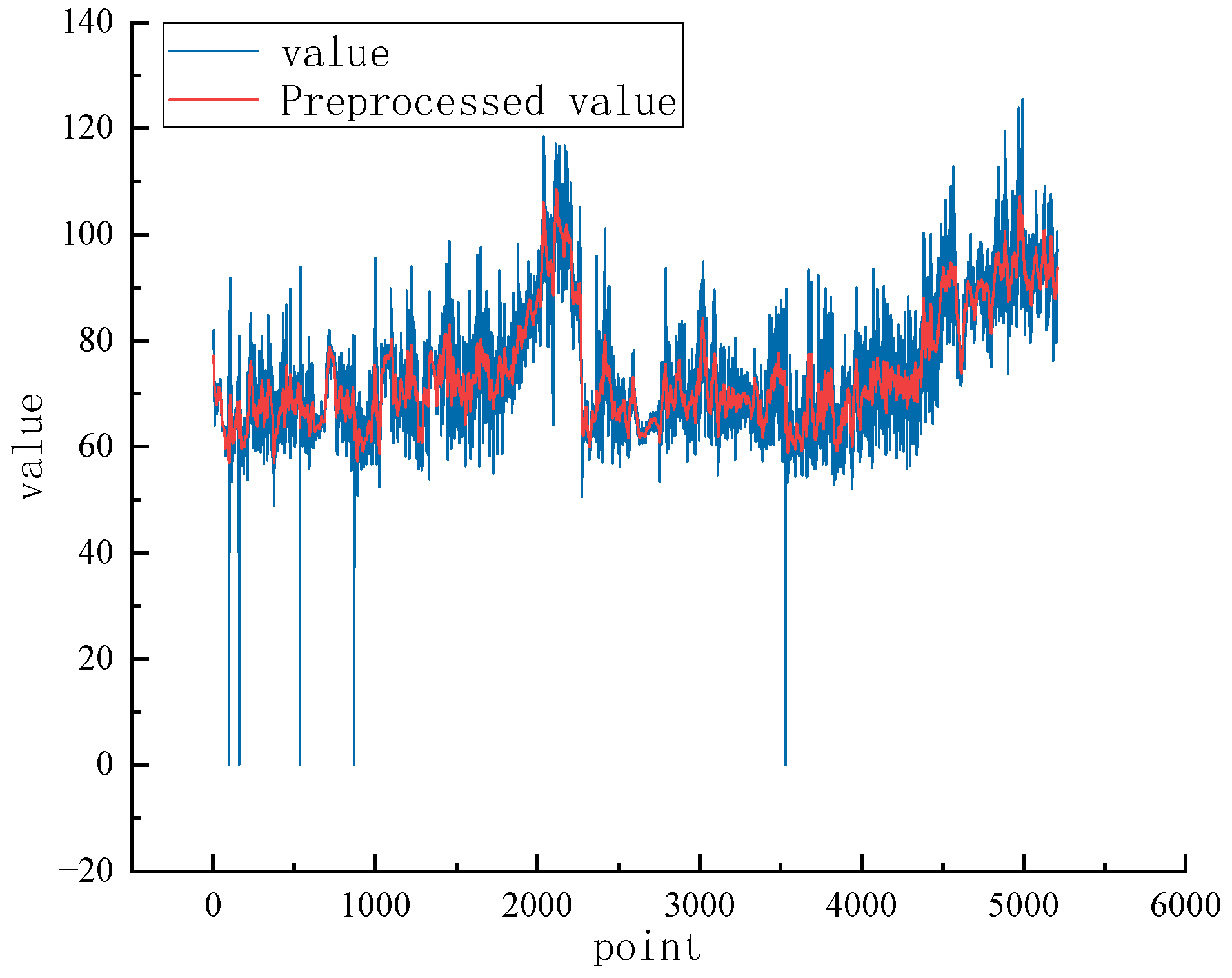

2.3. Data Preprocessing

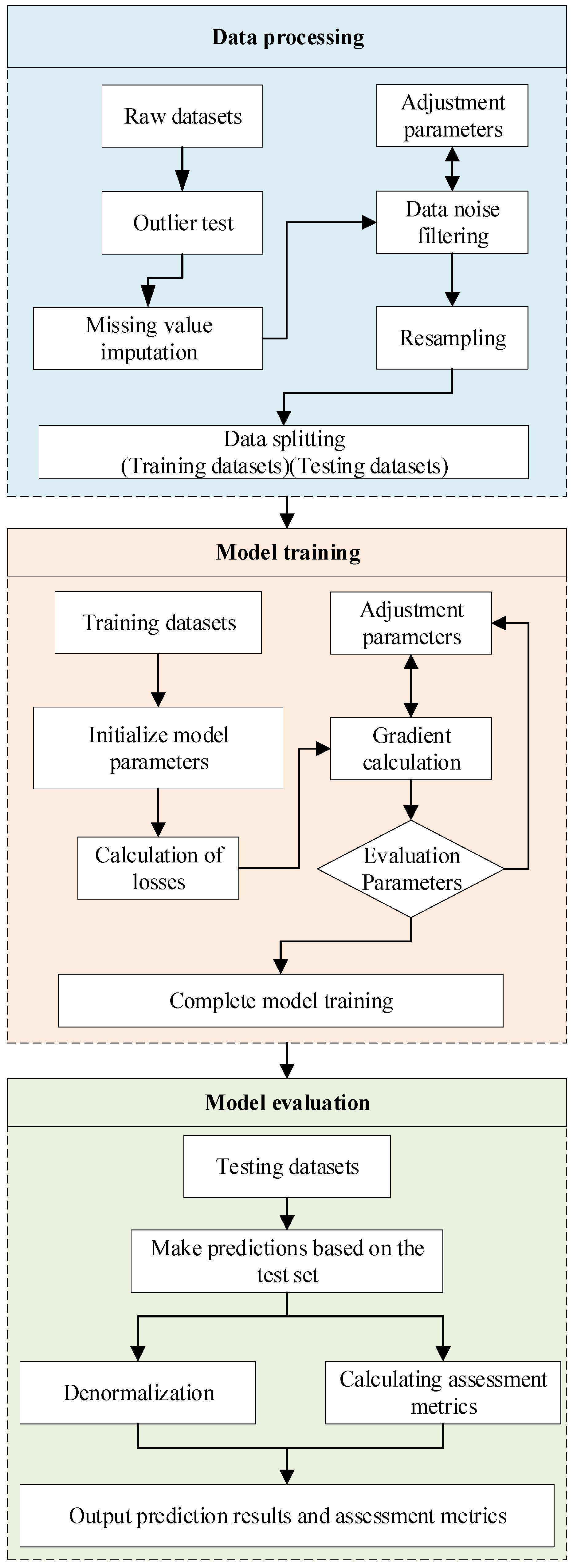

3. Gas Concentration Prediction Model Based on KAN-iTransformer

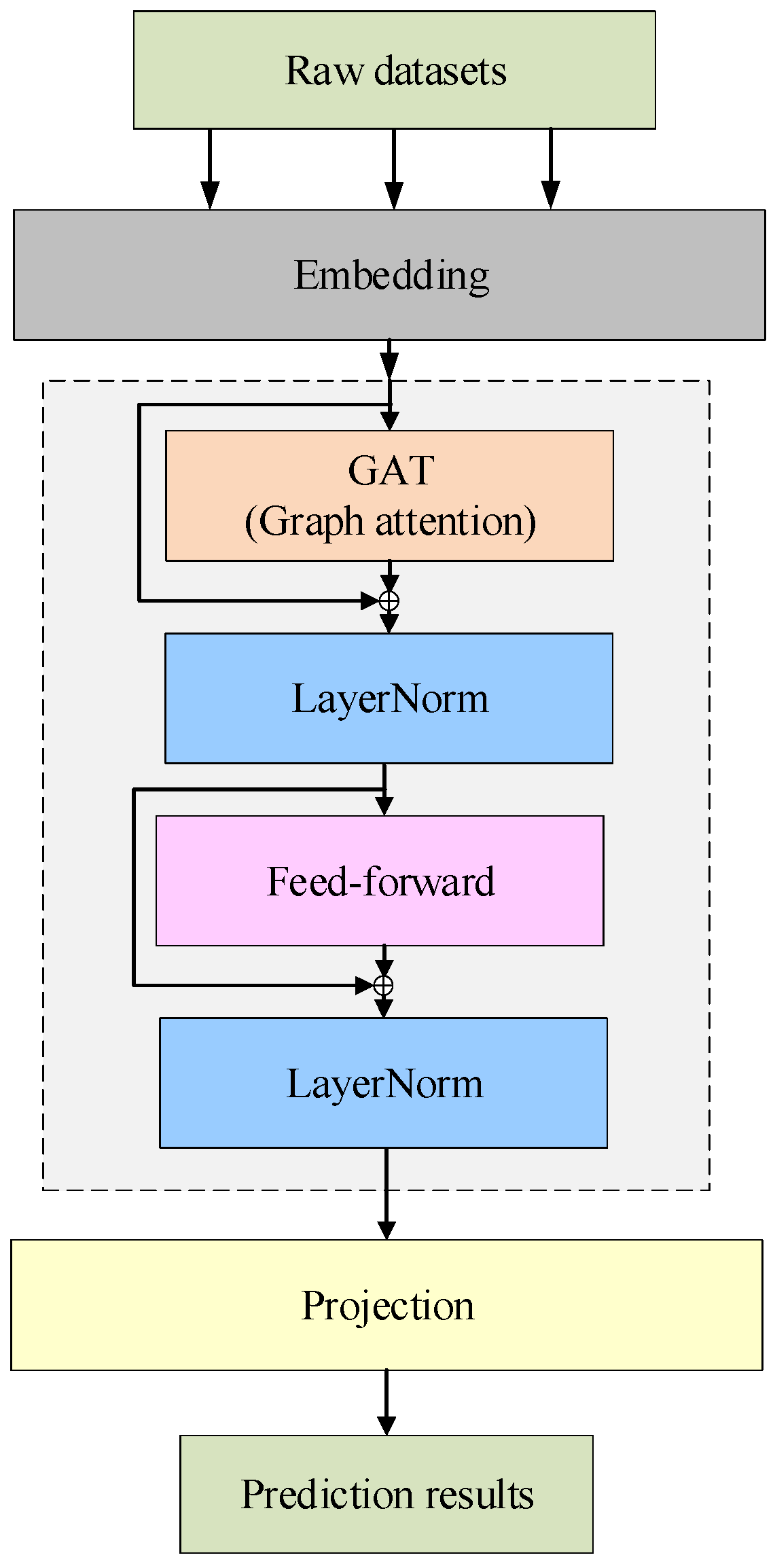

3.1. Overall Model Process

3.2. KAN Model Architecture

3.3. Improved iTransformer Model Architecture

3.3.1. Embedding Layer

3.3.2. Improved Inverted Transformer Module

3.3.3. Prediction Head

4. Experimental Results and Analysis

4.1. Model Evaluation and Experimental Configuration

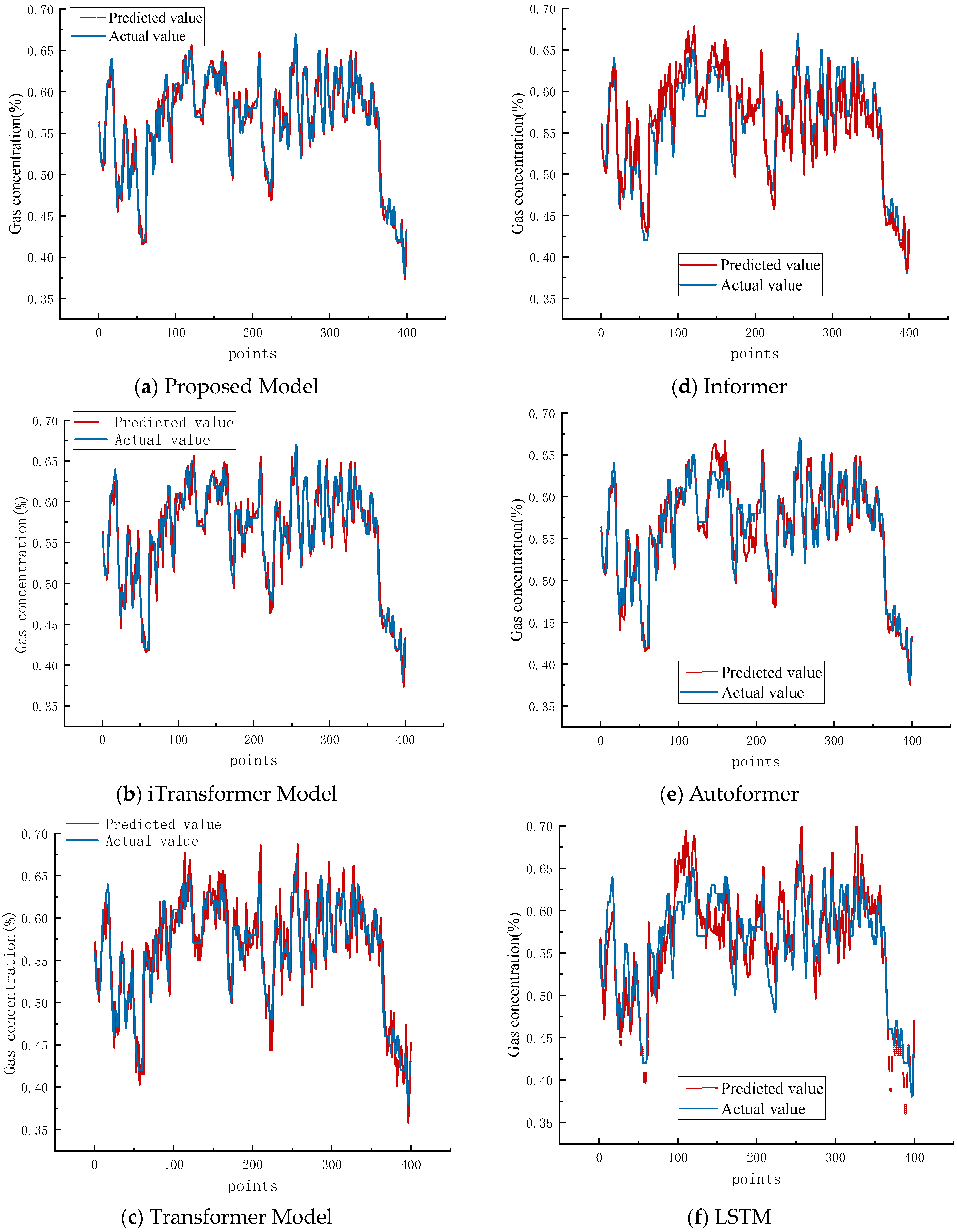

4.2. Comparative Analysis of Single-Step Forecasting Performance Across Models

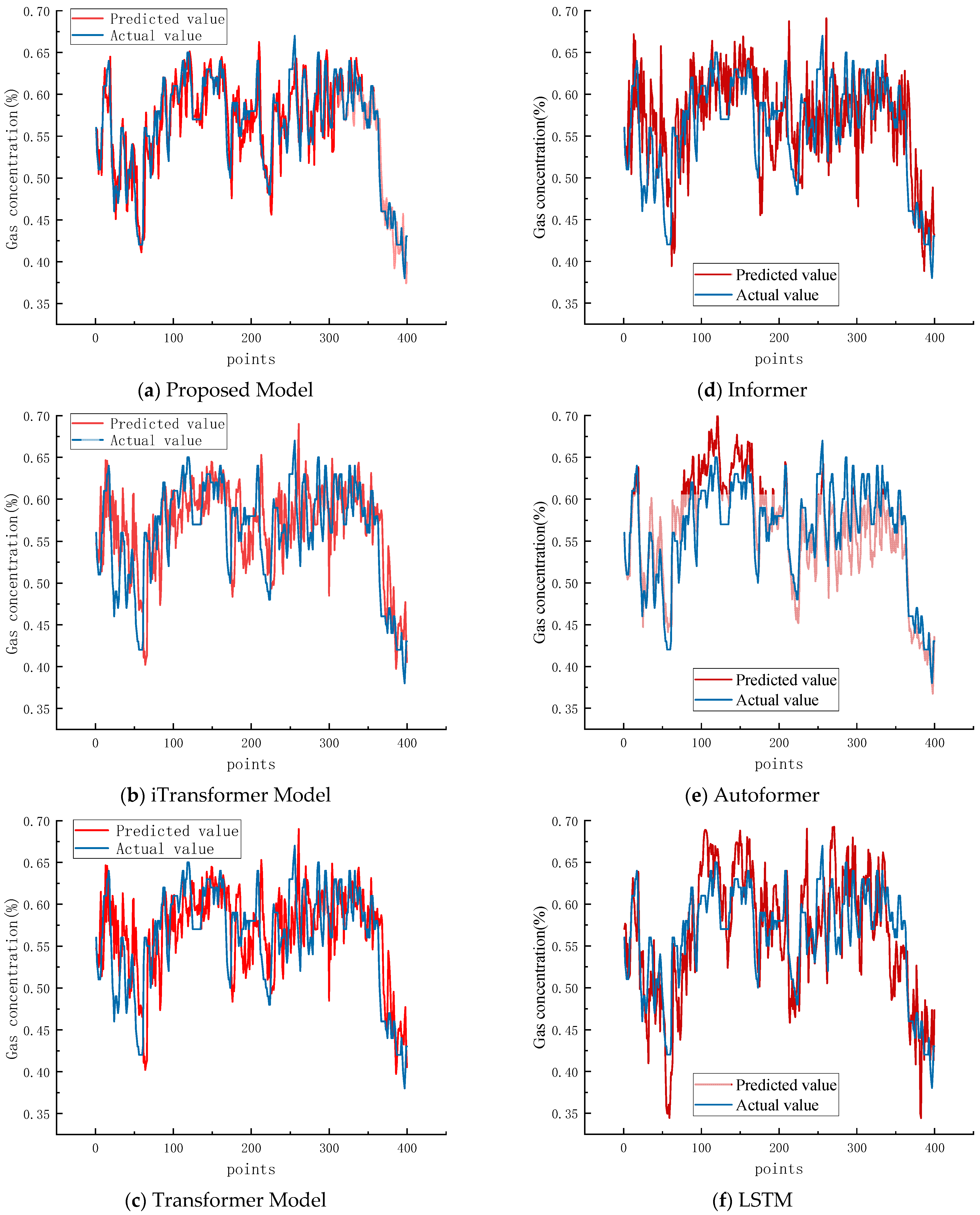

4.3. Comparative Analysis of Multi-Step Forecasting Performance Across Models

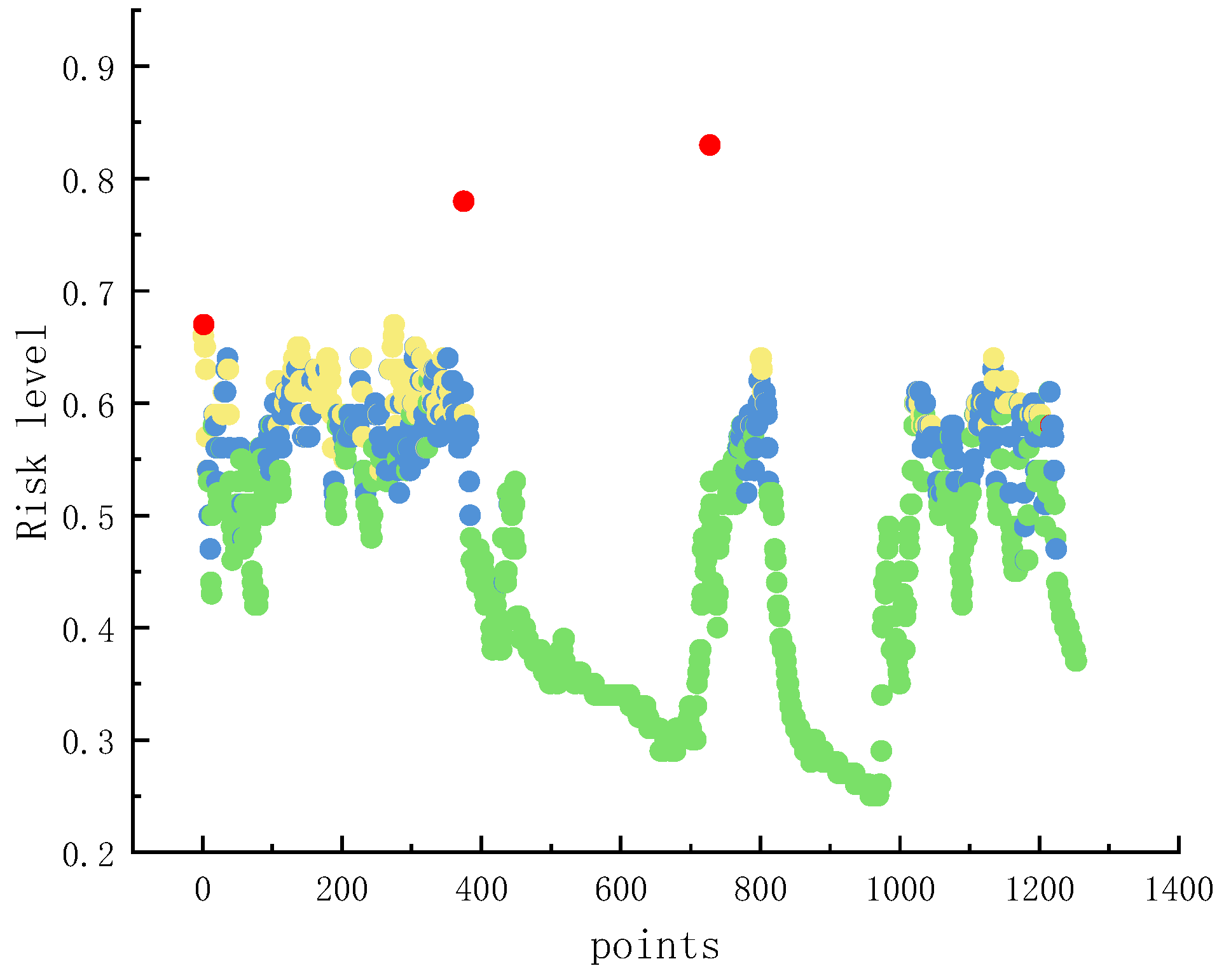

4.4. Emergency Response Mechanism

5. Discussion

5.1. Model Generalization

5.2. Model Limitations and Adaptability Under Extreme Conditions

6. Conclusions

- (1) This paper proposes a gas concentration prediction model for the tunneling face that combines a Kolmogorov-Arnold Network (KAN) with an improved iTransformer. The KAN replaces the fixed linear weights of a conventional layer with learnable univariate functions on each connection, which strengthens the model's ability to represent nonlinear relationships. The improved iTransformer captures long-term dependencies among multiple variables through inverted (variable-wise) token construction and a graph attention mechanism.

- (2) In single-step prediction, the proposed model achieves a mean squared error (MSE) of 0.000307, a mean absolute error (MAE) of 0.012921, a mean absolute percentage error (MAPE) of 2.321373%, and a coefficient of determination (R²) of 0.916450. Its MSE is 14.2% lower than that of iTransformer and 45.3% lower than that of Transformer. Against the remaining time series baselines, the MSE is reduced by 25.5% relative to Informer, 37.1% relative to Autoformer, and 66.5% relative to LSTM. These results indicate that the model captures transient changes and peak features more accurately than the compared models.

- (3) In single-step prediction, the model reaches an R² above 0.9, indicating close agreement between predicted and observed gas concentrations and allowing the model to track the dynamic evolution of gas concentration in real time. By combining prediction interval estimation with a graded early-warning mechanism, the model quantifies prediction uncertainty and supports dynamic, multi-level responses to potential gas over-limit events. Together, these capabilities provide a foundation for intelligent real-time early warning and decision-making in underground coal mine safety management.
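To make the KAN idea in item (1) concrete, the following is a minimal KAN-style layer in Python/NumPy. Each input-output connection carries its own learnable univariate function, and each output sums its incoming functions. This is an illustrative sketch, not the authors' implementation. For brevity it assumes Gaussian radial basis functions in place of the B-splines used in the original KAN formulation. The class name `RBFKANLayer`, the number of basis functions, and the normalized input range [-1, 1] are hypothetical choices.

```python
import numpy as np


class RBFKANLayer:
    """Minimal KAN-style layer (illustrative sketch, not the paper's code).

    Each edge i -> j applies its own learnable univariate function
        phi_ji(x) = sum_k coef[j, i, k] * exp(-((x - grid[k]) / h) ** 2)
    and output j sums phi_ji(x_i) over all inputs i. Gaussian RBFs stand in
    for the B-splines of the original KAN formulation (assumption).
    """

    def __init__(self, in_dim, out_dim, num_basis=8, x_min=-1.0, x_max=1.0, seed=0):
        rng = np.random.default_rng(seed)
        # Fixed basis centres spread over the (assumed) normalized input range.
        self.grid = np.linspace(x_min, x_max, num_basis)
        # Shared basis width: the spacing between neighbouring centres.
        self.h = (x_max - x_min) / (num_basis - 1)
        # Learnable coefficients: num_basis weights per (output, input) edge.
        self.coef = rng.normal(0.0, 0.1, size=(out_dim, in_dim, num_basis))

    def basis(self, x):
        # (batch, in_dim) -> (batch, in_dim, num_basis)
        return np.exp(-(((x[..., None] - self.grid) / self.h) ** 2))

    def forward(self, x):
        # Evaluate every edge function and sum over inputs i and bases k.
        return np.einsum("bik,oik->bo", self.basis(x), self.coef)


layer = RBFKANLayer(in_dim=9, out_dim=4)
out = layer.forward(np.zeros((2, 9)))
print(out.shape)  # (2, 4)
```

In this example, two samples of nine monitored variables (the Y and X1 to X8 columns of the data table, assumed normalized to [-1, 1]) are mapped to four outputs. The edge functions become learnable by training `coef`. A linear layer would instead learn only one scalar per connection.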
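Item (3) pairs interval estimation with graded warnings. The sketch below shows one possible approach under stated assumptions, since the paper's own interval method is not given here. The interval half-width is taken from a quantile of absolute residuals on a held-out calibration set, a split-conformal-style rule swapped in for illustration. The alert grade is then decided by the interval's upper bound rather than by the point forecast, so greater uncertainty escalates the response sooner. The threshold values and grade names in `LEVELS` are hypothetical placeholders, not regulatory limits. They would need to be replaced with the limits that apply at the working face.

```python
import numpy as np

# Hypothetical alert grades in % CH4 (placeholders, NOT regulatory limits),
# checked from the most to the least severe.
LEVELS = [(1.5, "red"), (1.0, "orange"), (0.8, "yellow")]


def residual_interval(point_pred, calib_true, calib_pred, alpha=0.1):
    """Symmetric (1 - alpha) interval from calibration residuals.

    Split-conformal-style rule used for illustration; the paper's own
    interval method may differ.
    """
    scores = np.abs(np.asarray(calib_true, float) - np.asarray(calib_pred, float))
    n = len(scores)
    # Finite-sample corrected quantile level, capped at 1.
    q_level = min(1.0, np.ceil((n + 1) * (1 - alpha)) / n)
    q = np.quantile(scores, q_level, method="higher")
    return point_pred - q, point_pred + q


def warning_level(upper_bound):
    """Grade on the interval's upper bound, so wider uncertainty escalates sooner."""
    for threshold, level in LEVELS:
        if upper_bound >= threshold:
            return level
    return "normal"


lo, hi = residual_interval(0.79, [0.50, 0.52, 0.49, 0.51], [0.51, 0.50, 0.50, 0.52])
print(warning_level(hi))  # yellow
```

Grading on the upper bound is a conservative design choice. Under these placeholder thresholds, a point forecast of 0.79% with an interval half-width of 0.02% is already treated as a yellow alert, even though the point forecast alone would not trigger one.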

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Liu, B.; Chang, H.; Li, Y.; Zhao, Y. Carbon emissions predicting and decoupling analysis based on the PSO-ELM combined prediction model: Evidence from Chongqing Municipality, China. Environ. Sci. Pollut. Res. 2023, 30, 78849–78864.

- Wei, C.; Li, C.; Ye, Q.; Li, Z.; Hao, M.; Wei, S. Modeling of gas emission in coal mine excavation workface: A new insight into the prediction model. Environ. Sci. Pollut. Res. 2023, 30, 100137–100148.

- Bi, S.; Shao, L.; Qi, Z.; Wang, Y.; Lai, W. Prediction of coal mine gas emission based on hybrid machine learning model. Earth Sci. Inform. 2023, 16, 501–513.

- Song, W.; Han, X.; Qi, J. Prediction of gas emission in the tunneling face based on LASSO-WOA-XGBoost. Atmosphere 2023, 14, 1628.

- Ji, P.; Shi, S.; Shi, X. Research on gas emission quantity prediction model based on EDA-IGA. Heliyon 2023, 9, e17624.

- Zeng, J.; Li, Q. Research on prediction accuracy of coal mine gas emission based on grey prediction model. Processes 2021, 9, 1147.

- Yang, Y.; Du, Q.; Wang, C.; Bai, Y. Research on the method of methane emission prediction using improved grey radial basis function neural network model. Energies 2020, 13, 6112.

- Zhang, J.; Cui, Y.; Yan, Z.; Huang, Y.; Zhang, C.; Zhang, J.; Guo, J.; Zhao, F. Time series prediction of gas emission in coal mining face based on optimized Variational mode decomposition and SSA-LSTM. Sensors 2024, 24, 6454.

- Lin, H.; Li, W.; Li, S.; Wang, L.; Ge, J.; Tian, Y.; Zhou, J. Coal mine gas emission prediction based on multifactor time series method. Reliab. Eng. Syst. Saf. 2024, 252, 110443.

- Liang, R.; Chang, X.; Jia, P.; Xu, C. Mine gas concentration forecasting model based on an optimized BiGRU network. ACS Omega 2020, 5, 28579–28586.

- Li, X.; Zheng, W.; Lu, G.; Kang, Y.; Xia, Y.; Zhou, Z. A new time series of gas outburst prediction model and application in coal mine and through-coal-seam tunnel mining face. IEEE Access 2025, 13, 115960–115971.

- Yang, H.; Wang, J.; Zhang, H. Research on Gas Multi-indicator Warning Method of Coal Mine tunneling face Based on MOA-Transformer. ACS Omega 2024, 9, 22136–22144.

- Qu, H.; Shao, X.; Gao, H.; Chen, Q.; Guang, J.; Liu, C. A Prediction Model for Methane Concentration in the Buertai Coal Mine Based on Improved Black Kite Algorithm–Informer–Bidirectional Long Short-Term Memory. Processes 2025, 13, 205.

- Lai, K.; Xu, H.; Sheng, J.; Huang, Y. Hour-by-Hour Prediction Model of Air Pollutant Concentration Based on EIDW-Informer—A Case Study of Taiyuan. Atmosphere 2023, 14, 1274.

- Pan, K.; Lu, J.; Li, J.; Xu, Z. A Hybrid Autoformer Network for Air Pollution Forecasting Based on External Factor Optimization. Atmosphere 2023, 14, 869.

- Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. KAN: Kolmogorov-Arnold Networks. arXiv 2024, arXiv:2404.19756.

- Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. iTransformer: Inverted Transformers Are Effective for Time Series Forecasting. arXiv 2023, arXiv:2310.06625.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; NIPS: San Diego, CA, USA, 2017; Volume 30.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

| Time Stamp | Y (%) | X1 (m) | X2 (%) | X3 (%) | X4 (A) | X5 (%) | X6 (m/s) | X7 (°C) | X8 (ppm) |
|---|---|---|---|---|---|---|---|---|---|
| 2025-03-19 03:07 | 0.49 | 32.5 | 0.11 | 0.28 | 52.8 | 0.32 | 3.81 | 27.40 | 4.00 |
| 2025-03-19 03:08 | 0.49 | 32.5 | 0.11 | 0.28 | 50.9 | 0.32 | 3.78 | 27.40 | 4.00 |
| 2025-03-19 03:09 | 0.51 | 32.5 | 0.11 | 0.26 | 50.2 | 0.33 | 3.80 | 27.40 | 4.00 |
| 2025-03-19 03:10 | 0.51 | 32.5 | 0.11 | 0.27 | 50.5 | 0.33 | 3.85 | 27.40 | 4.00 |
| 2025-03-19 03:11 | 0.51 | 32.5 | 0.11 | 0.28 | 50.2 | 0.33 | 3.86 | 27.40 | 4.00 |
| 2025-03-19 03:12 | 0.52 | 32.5 | 0.13 | 0.28 | 50.1 | 0.34 | 3.82 | 27.40 | 4.00 |
| 2025-03-19 03:13 | 0.52 | 32.5 | 0.13 | 0.28 | 49.9 | 0.34 | 3.89 | 27.40 | 4.00 |
| 2025-03-19 03:14 | 0.52 | 32.5 | 0.13 | 0.28 | 50.0 | 0.34 | 3.90 | 27.40 | 4.00 |
| 2025-03-19 03:15 | 0.54 | 32.5 | 0.11 | 0.31 | 50.2 | 0.32 | 3.91 | 27.40 | 4.00 |
| 2025-03-19 03:16 | 0.54 | 32.5 | 0.11 | 0.31 | 50.1 | 0.32 | 3.86 | 27.40 | 4.00 |
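The sample above is recorded at one-minute intervals, with the target Y alongside the auxiliary variables X1 to X8. The sketch below is an illustration, not the authors' preprocessing. It shows how such a table might be cut into supervised samples with a sliding window: a horizon of 1 gives single-step forecasting and a longer horizon gives multi-step forecasting. It then shows how an iTransformer-style inversion turns each variable's entire lookback window into a single token. The function names, the lookback of 6, and the random embedding matrix (a stand-in for a trained linear layer) are hypothetical.

```python
import numpy as np

# The ten sample rows from the table above; columns: Y, X1, ..., X8.
data = np.array([
    [0.49, 32.5, 0.11, 0.28, 52.8, 0.32, 3.81, 27.40, 4.00],
    [0.49, 32.5, 0.11, 0.28, 50.9, 0.32, 3.78, 27.40, 4.00],
    [0.51, 32.5, 0.11, 0.26, 50.2, 0.33, 3.80, 27.40, 4.00],
    [0.51, 32.5, 0.11, 0.27, 50.5, 0.33, 3.85, 27.40, 4.00],
    [0.51, 32.5, 0.11, 0.28, 50.2, 0.33, 3.86, 27.40, 4.00],
    [0.52, 32.5, 0.13, 0.28, 50.1, 0.34, 3.82, 27.40, 4.00],
    [0.52, 32.5, 0.13, 0.28, 49.9, 0.34, 3.89, 27.40, 4.00],
    [0.52, 32.5, 0.13, 0.28, 50.0, 0.34, 3.90, 27.40, 4.00],
    [0.54, 32.5, 0.11, 0.31, 50.2, 0.32, 3.91, 27.40, 4.00],
    [0.54, 32.5, 0.11, 0.31, 50.1, 0.32, 3.86, 27.40, 4.00],
])


def make_windows(series, lookback, horizon, target_col=0):
    """Slide a window over (T, N) data.

    Returns inputs of shape (samples, lookback, N) and targets of shape
    (samples, horizon) taken from the target column (Y by default).
    """
    n_samples = series.shape[0] - lookback - horizon + 1
    X = np.stack([series[s:s + lookback] for s in range(n_samples)])
    y = np.stack([series[s + lookback:s + lookback + horizon, target_col]
                  for s in range(n_samples)])
    return X, y


def invert_tokens(window):
    """iTransformer-style inversion: (lookback, N) -> (N, lookback).

    Each row is now one variable's full history, i.e. one token.
    """
    return window.T


def embed_tokens(tokens, d_model=16, seed=0):
    """Project each variable token to d_model features.

    The random matrix is a hypothetical stand-in for a trained linear layer.
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 0.1, size=(tokens.shape[1], d_model))
    return tokens @ W


X, y = make_windows(data, lookback=6, horizon=1)  # single-step samples
tokens = invert_tokens(X[0])                      # 9 tokens of length 6
emb = embed_tokens(tokens)
print(X.shape, y.shape, tokens.shape, emb.shape)  # (4, 6, 9) (4, 1) (9, 6) (9, 16)
```

Here the ten rows yield four single-step samples. In the inverted view, each of the nine tokens holds one variable's six-minute history, so attention operates across variables rather than across time steps.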

| Model | MSE | MAE | MAPE | R² |
|---|---|---|---|---|
| Improved iTransformer | 0.000307 | 0.012921 | 2.321373 | 0.916450 |
| iTransformer | 0.000358 | 0.014020 | 2.511322 | 0.882508 |
| Transformer | 0.000562 | 0.018397 | 3.320037 | 0.846822 |
| Informer | 0.000412 | 0.026127 | 4.6949 | 0.806608 |
| Autoformer | 0.000488 | 0.024723 | 4.4184 | 0.823428 |
| LSTM | 0.000917 | 0.037535 | 6.7352 | 0.708254 |
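The four metrics, and the relative MSE reductions quoted in the conclusions, follow from their standard definitions. The sketch below is an illustrative NumPy version. It assumes MAPE is reported in percent and that no target value is zero. `SINGLE_STEP_MSE` copies the MSE column of the table above, and the helper names are hypothetical. Because the table entries are rounded to six decimals, reductions recomputed from them can differ by a few tenths of a percentage point from figures computed on unrounded errors.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """MSE, MAE, MAPE (%) and R^2 from their standard definitions."""
    y_true = np.asarray(y_true, float)
    y_pred = np.asarray(y_pred, float)
    err = y_true - y_pred
    ss_res = np.sum(err ** 2)                       # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)  # total sum of squares
    return {
        "MSE": np.mean(err ** 2),
        "MAE": np.mean(np.abs(err)),
        "MAPE": 100.0 * np.mean(np.abs(err / y_true)),  # assumes no zero targets
        "R2": 1.0 - ss_res / ss_tot,
    }


# MSE column of the single-step results table above.
SINGLE_STEP_MSE = {
    "Improved iTransformer": 0.000307,
    "iTransformer": 0.000358,
    "Transformer": 0.000562,
    "Informer": 0.000412,
    "Autoformer": 0.000488,
    "LSTM": 0.000917,
}


def mse_reduction(baseline, proposed="Improved iTransformer", table=SINGLE_STEP_MSE):
    """Relative MSE reduction (%) of `proposed` with respect to `baseline`."""
    return 100.0 * (table[baseline] - table[proposed]) / table[baseline]


for name in SINGLE_STEP_MSE:
    if name != "Improved iTransformer":
        print(f"{name}: {mse_reduction(name):.1f}%")
```

`regression_metrics` accepts any pair of observed and predicted concentration series. `mse_reduction` only restates the comparison already reported in the table.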

| Model | MSE | MAE | MAPE | R² |
|---|---|---|---|---|
| Improved iTransformer | 0.000913 | 0.022867 | 4.117202 | 0.851230 |
| iTransformer | 0.002517 | 0.039033 | 7.183084 | 0.706521 |
| Transformer | 0.004784 | 0.052639 | 9.741005 | 0.303680 |
| Informer | 0.002978 | 0.046203 | 7.832372 | 0.664133 |
| Autoformer | 0.002728 | 0.041250 | 7.532246 | 0.689241 |
| LSTM | 0.004171 | 0.071535 | 11.35268 | 0.278823 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

An, L.; Kong, S.; Li, K. Research on Intelligent Early Warning and Emergency Response Mechanism for Tunneling Face Gas Concentration Based on an Improved KAN-iTransformer. Processes 2025, 13, 3748. https://doi.org/10.3390/pr13113748

Chicago/Turabian Style: An, Lei, Shaoqi Kong, and Kunjie Li. 2025. "Research on Intelligent Early Warning and Emergency Response Mechanism for Tunneling Face Gas Concentration Based on an Improved KAN-iTransformer" Processes 13, no. 11: 3748. https://doi.org/10.3390/pr13113748
