Abstract

The modified Rankin Scale (mRS) is a widely used outcome measure for assessing disability in stroke care; however, its administration is often affected by subjectivity and variability, leading to poor inter-rater reliability and inconsistent scoring. Originally designed for hospital discharge evaluations, the mRS has evolved into an outcome tool for disability assessment and clinical decision-making. Inconsistencies persist due to a lack of standardization and to cognitive biases during its use. This paper presents design principles for creating a standardized clinical assessment tool (CAT) for the mRS, grounded in human–computer interaction (HCI) and cognitive engineering principles. The design principles were informed in part by an anonymous online survey of clinicians across Canada that explored current administration practices, opinions, and challenges of the mRS. The proposed principles aim to reduce cognitive load, improve inter-rater reliability, and streamline the administration of the mRS. By focusing on usability and standardization, they seek to enhance scoring consistency and improve the reliability of outcome assessment in stroke care and research. Developing a standardized CAT for the mRS represents a significant step toward more accurate and consistent stroke disability assessments. Future work will focus on real-world validation with healthcare stakeholders and on exploring self-completed mRS assessments to further refine the tool.

1. Introduction

The modified Rankin Scale (mRS), a clinician-administered stroke assessment tool, has been central to stroke care since its original version was introduced in 1957 [1]. Initially developed to categorize the functional recovery of patients at hospital discharge, it is now applied far more broadly, and this expansion has exposed limitations in its effectiveness. The mRS is currently used in acute stroke assessment and follow-up assessments, but primarily as an outcome measure in clinical trials. It is typically administered around 90 days after the stroke, either in person or by phone. Its purpose has shifted from a categorical discharge tool to a long-term functional disability assessment that informs judgements of treatment success. With this shift, an update to the mRS is needed for it to remain effective.

The results of the mRS have real implications for clinical decision-making, patient care, and stroke research. Its administration currently lacks standardization, which undermines inter-rater reliability [2]. Without a standardized approach for gathering corroborative information, clinicians apply the mRS in different ways and reach scores through different cognitive processes. This reduces inter-rater reliability and may affect the scale’s overall reliability as a clinical outcome measure [3]. This study uses results from a preliminary online questionnaire completed by Canadian clinicians to understand current uses and challenges of the mRS.

This paper explores the principles required to design a better process for assigning an mRS grade to patients. By developing parameters and guidelines for a detailed clinical assessment tool (CAT), which will later be implemented as a simple and intuitive user interface following basic design principles, we aim to improve the inter-rater reliability of mRS scores across diverse clinical settings. The design principles for the new mRS tool were informed by our online survey results. This paper has two objectives: first, to analyze clinicians’ use of and experiences with current mRS administration through an anonymous online survey; and second, to propose design principles for a new CAT for the mRS, informed by these findings and the current literature and grounded in human–computer interaction (HCI) principles, to improve the inter-rater reliability and standardization of the mRS.

2. Modified Rankin Scale Overview and Related Works

The mRS is widely used in stroke care to assess patients after a stroke. Despite its frequent use, the scale’s accuracy and reliability have been questioned: studies report varying levels of inter-rater reliability [1,2,3], and its categories have been criticized as too broad [1,2]. Originally developed to categorize a patient’s functional recovery at hospital discharge, the mRS is now used primarily for long-term disability assessment [1]. The original Rankin Scale from 1957 was a single-item scale with five grades representing no disability and slight, moderate, moderately severe, and severe disability. It was modified once, in 1988, alongside an aspirin stroke prevention study. This modification added a grade of zero to indicate no symptoms and death as grade six, and altered the wording of grades one and two [4,5]. The mRS has since been criticized as ‘broad and poorly defined’, leaving room for subjective and personal perceptions. The wording of the mRS grade descriptions contributes to inter-observer variation between clinician administrators [6].

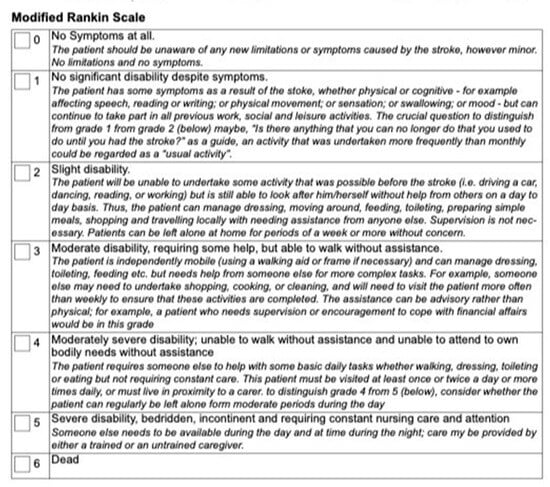

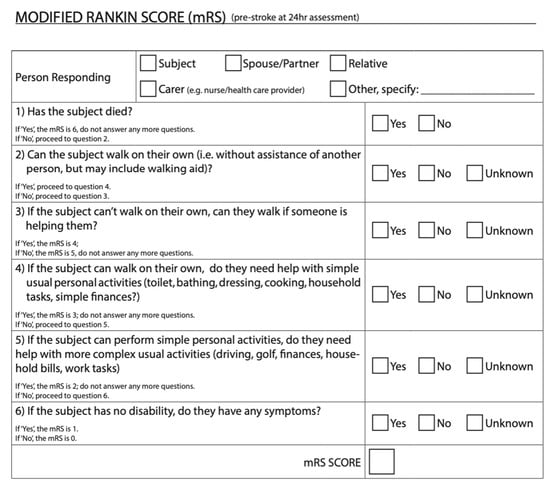

Figure 1 shows an example of the standard mRS grade definitions, with scoring indicators and guidelines italicized under each grade, from a clinical trial led by the University of Calgary [7]. Figure 2 shows a questionnaire version of the mRS from the ESCAPE-NA1 stroke trial [8]. These are two examples of the questionnaires and definitions used when the mRS serves as an outcome measure in stroke trials.

Figure 1.

Modified Rankin Scale (mRS) from the ESCAPE stroke trial [7]. This mRS was used as an outcome measure approximately 90 days post-stroke. The figure shows one version of the mRS administered to stroke survivors, with the scores and grades in bold and further guidelines italicized below.

Figure 2.

Modified Rankin Scale from the ESCAPE-NA1 stroke trial [9]. This mRS was used as an outcome measure approximately 90 days post-stroke. The figure shows one version of the mRS administered to stroke survivors. This version lists the scores in descending order, starting at six and working down to zero.

2.1. Current Modified Rankin Scale Collection Process

There are currently several ways to administer the mRS. Beyond the defined grades on the scale (Figure 1 and Figure 2), there is no technical standardization. The mRS is mainly used in stroke clinical trials, where its administration is typically specified by the trial protocol. For example, the 2018 ESCAPE-NA1 trial used a structured questionnaire with a yes/no system beginning with the highest score, 6 (see Figure 2) [9]. A second use of the mRS is in follow-up assessments. In this context it is typically applied less rigidly than in clinical trials and serves more as a guideline than as a structured questionnaire. Clinicians may adapt their approach, adding probing questions and corroborative information sources to select the score that most accurately reflects their patient’s recovery and disability. Lastly, the mRS is sometimes administered in acute stroke assessments. Because of these multiple questionnaires, guidelines, and administration methods, there is no clear standard for scoring.
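To make the descending yes/no structure concrete, the Python sketch below asks one question per grade, from 6 down to 1, and returns the first grade answered “yes” (or 0 if none are). It assumes the standard mRS grade definitions; the question wording, the `QUESTIONS` table, and the `score_descending` function are hypothetical illustrations, not the ESCAPE-NA1 questionnaire or any trial’s form.

```python
from typing import Callable

# Hypothetical yes/no prompts paraphrasing the standard mRS grade definitions,
# asked from the highest grade (6) downwards. Not the wording of any trial form.
QUESTIONS: list[tuple[int, str]] = [
    (6, "Has the patient died?"),
    (5, "Is the patient bedridden, incontinent and in need of constant nursing care?"),
    (4, "Is the patient unable to walk and to attend to bodily needs without assistance?"),
    (3, "Does the patient need some help with daily activities?"),
    (2, "Is the patient unable to carry out all previous activities?"),
    (1, "Does the patient have any symptoms from the stroke?"),
]


def score_descending(answer: Callable[[str], bool]) -> int:
    """Return the grade of the first question answered 'yes', or 0 if none are."""
    for grade, question in QUESTIONS:
        if answer(question):
            return grade
    return 0  # no symptoms at all


# Usage: simulate a patient whose true grade is 3. Such a patient would answer
# "yes" to every question at or below grade 3; the first "yes" reached is grade 3.
grade_of = {question: grade for grade, question in QUESTIONS}
print(score_descending(lambda q: grade_of[q] <= 3))  # prints 3
```

Stopping at the first “yes” is what makes this format structured: every administrator asks the same questions in the same order, so two raters given the same answers must arrive at the same grade. The remaining variability then lies in how each question is interpreted and answered.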

These ongoing challenges in mRS administration make the clinician’s scoring decision complex and variable. Decision-making during the assessment involves three parts. First, the clinician must understand the range of choice options and courses of action: recognizing the patient’s level of disability or recovery and then deciding on the most accurate score. Second, the clinician forms beliefs about objective states, processes, and events, including outcome states and the means to achieve them; the vague and subjective nature of the current mRS categories makes this step complex and leads to differences in interpretation across administrators. Third, decision-making considers the broader implications of the mRS score: clinicians may implicitly weigh its impact on patient care, treatment plans, research, or the healthcare system [10]. These challenges highlight the need for a clinical assessment tool (CAT) that standardizes the administration of the mRS, reduces subjectivity, and improves scoring reliability, while ensuring consistent application across both clinical and research settings.

2.2. Reliability of the Modified Rankin Scale

The lack of standardization in the mRS and its administration leaves room for interpretation, which leads to variability. This may stem from the cognitive load and individual decision-making the administrator takes on when scoring the mRS. For instance, a bias toward scoring positively or negatively, routine exposure to stroke disability, or a lack of corroborative information can all produce scoring discrepancies. Studies have shown that different medical professionals assign mRS grades differently; for example, one study found that physicians admitting patients to rehabilitation wards tended to rate disability higher than neurologists discharging patients from a stroke unit [2]. Multiple factors influence how a clinician interprets the administration process and scores the mRS [6], including personal and professional influences, diverse sources of information, and subjective judgement. This variability has led to support for a more standardized administration process for the mRS. A 2023 study of mRS reliability in stroke units and rehabilitation wards, which examined agreement between raters from each department, found an overall agreement of 70.5% (kappa = 0.55) [2]. A 2007 study found that a structured questionnaire improved inter-rater reliability, with kappa increasing from 0.56 to 0.78, and showed strong test–retest reliability (kappa = 0.81 to 0.95). The 2007 study shows that structured interviews can improve inter-rater reliability and should be considered as a way to mitigate inter-rater variability [1]. Because a kappa of 1 indicates perfect agreement and a kappa of 0 indicates agreement no better than chance, inter-rater kappa values should ideally be as close to 1 as possible. Creating a more standardized clinical assessment tool could reduce these varied interpretations of the mRS across settings and address the challenges clinicians currently face.
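The kappa statistic cited above corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e), where p_o is observed agreement and p_e is chance agreement. The Python sketch below computes unweighted Cohen’s kappa for two raters. The function name and the five-patient grades are hypothetical, and the cited studies may have used a weighted kappa, which is common for ordinal scales such as the mRS.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[int], rater_b: Sequence[int]) -> float:
    """Unweighted Cohen's kappa for two raters grading the same patients."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must grade the same non-empty set of patients")
    n = len(rater_a)
    # Observed agreement p_o: share of patients given identical grades.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement p_e: sum over grades of the product of marginal shares.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[g] * count_b[g] for g in count_a) / n**2
    if p_e == 1.0:
        raise ValueError("kappa is undefined when both raters use one identical grade")
    return (p_o - p_e) / (1 - p_e)


# Usage with made-up mRS grades for five patients (illustrative only).
rater_a = [0, 1, 2, 2, 3]
rater_b = [0, 1, 2, 3, 3]
print(round(cohens_kappa(rater_a, rater_b), 3))  # prints 0.737
```

Rearranging the formula, if the 2023 figures [2] are unweighted kappa, 70.5% agreement with κ = 0.55 implies p_e = (0.705 − 0.55) / (1 − 0.55) ≈ 0.34, so roughly 34% agreement would have been expected by chance alone.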

3. Methods: Online Survey

An anonymous online survey was conducted targeting clinicians across Canada who administer the mRS. The survey was built in Microsoft Forms and had 12 questions, with an option for additional feedback at the end. It combined open- and closed-ended questions, including multiple-choice and binary questions with spaces to explain answers. To collect demographic information, participants were asked their profession and how long they had been practicing. Professions were then broken down into specialties to gather more information: for example, a participant who selected physician was shown a follow-up question listing several types of physician, plus an “other” option. The remainder of the survey asked participants about current uses, challenges, and opinions of administering the mRS. To keep all answers anonymous and organized, Microsoft Forms randomly assigned each participant a number.

The survey content was designed to gather insights into clinicians’ current use of the mRS. Questions focused on issues identified in the literature and on current challenges, opinions, and uses of the mRS. No formal psychometric validation was performed, as the aim of the survey was to gather exploratory qualitative insights to inform the design principles.

Recruitment was performed by email: the Canadian Stroke Consortium sent out an email briefly explaining the online survey, with a link attached. Ethics approval was obtained from Dalhousie University’s Health Sciences Research Ethics Board (REB#: 2024-7443). The survey was anonymous, and consent was obtained through a comprehensive consent document presented before the survey began. Those who did not consent could not participate in the survey.

This exploratory survey targeted clinicians in Canada who are familiar with the mRS. The sample size was 20, which potentially covers approximately 80% of the 25 hospitals that participate in stroke trials nationally. This sample size follows established norms for exploratory qualitative research, in which smaller but targeted samples can yield meaningful insights and reach saturation; for interview-based qualitative research, saturation has been found to occur with between 9 and 17 participants in homogeneous populations such as ours [11,12]. The aim of the survey was to gather qualitative insights and design input rather than deductive conclusions, so no formal sample size calculation was conducted.

4. Results: Online Survey

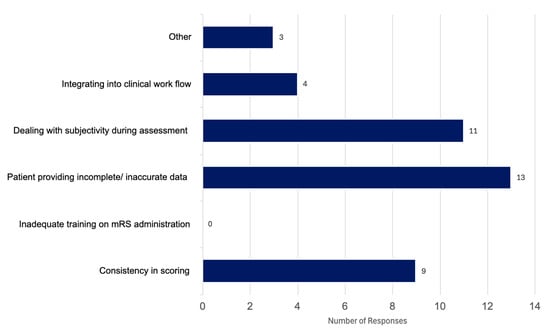

The survey was completed by 20 participants, a meaningful number given that 25 hospitals in Canada participate in stroke trials and therefore regularly collect 90-day mRS scores. Of the 20 participants, 12 were neurologists, 5 were nurses, and 3 were stroke coordinators (not nurses). All participants were familiar with administering the mRS: 55% used it weekly, 25% daily, and 20% monthly or rarely. Of the responses on context of use, 50% were for clinical trials, 27.5% for follow-up assessments, and 22.5% for acute stroke assessments. Of the selections on the main challenges of administering the mRS, 32.5% were patients providing incomplete or inaccurate information, 27.5% dealing with subjectivity during the assessment, 22.5% consistency of scoring, and 10% integrating the mRS into clinical workflow. Three participants (7.5%) selected “other”: two indicated there were no challenges, and one commented that the scale is “inadequate” (Figure 3). When asked to elaborate, participants mainly commented that standardizing the mRS and integrating it into workflows could improve consistency. Two participants described the assessment as straightforward, while the rest emphasized the need for a structured tool to ensure accurate scoring, and still noted external factors such as caregiver burden, patient safety, and cultural background as challenges for administration. When asked whether the current mRS is effective in capturing patient outcomes, 40% of participants said yes, 35% said no, and 25% selected “other”.

All participants who chose “other” noted that the scale can be helpful at times but is crude and does not capture a patient’s full recovery, for example:

“Patient function can vary due to stroke symptoms and comorbidities; factors such as fatigue or depression may lead to lower scores”;

“The tool is considered helpful, but is a crude measure that doesn’t adequately account for cognitive status”;

“The tool is easy to administer, but provides only a broad measure of recovery”;

“The tool is generally effective until patients reach a level of independent functioning, at which point important nuances in recovery and disability can be lost”;

“The tool sometimes reflects patient needs, but many important aspects are not captured by the mRS, limiting its ability to fully represent patient outcomes”.

Figure 3.

Results from our anonymous online survey looking at reported challenges in administering the current modified Rankin Scale (mRS); the question asked was “What are the main challenges you face when administering the mRS (select all that apply)”. This figure is based on responses from 20 Canadian clinicians.

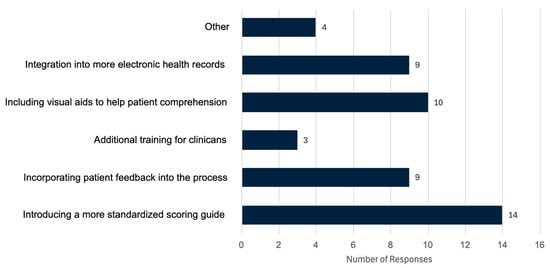

Participants were also asked how the mRS could be improved for both clinicians and patients (Figure 4). Of all selections, 28.6% were “introducing a more standardized scoring guide”, 20.41% “including visual aids to help patient comprehension”, 18.37% “incorporating patient feedback into the process”, 18.37% “integrating the mRS into more electronic health records”, 6.12% “additional training for clinicians”, and 8.16% “other”. Responses under “other” included:

“Changing to another similar scoring system that reflects more of the issues faced”;

“Frequently, patients’ caregiver, or family members need to administer it [the mRS] for objectivity, and the current mRS is very limited in capturing all needs, AI may be helpful”;

“Redevelopment to capture more nuance or maybe collaborations with other existing tests”.

Figure 4.

Results from our anonymous online survey looking at proposed improvements to the modified Rankin Scale (mRS); the question asked was “In your opinion, how do you think the mRS could be improved for both clinicians and patients? (select all that apply)”. This figure is based on responses from 20 Canadian clinicians.
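The challenges and improvements questions were select-all-that-apply, and the context-of-use figures follow the same pattern, so the reported percentages are best read as shares of all selections rather than of the 20 participants: each set sums to 100%, yet 27.5% of 20 participants would be 5.5 people. The Python sketch below assumes this reading and checks that totals of 40, 40, and 49 selections reproduce the reported figures. The per-option counts are back-calculated from the percentages for illustration; they are not reported in the survey results.

```python
def shares(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of all selections for each option of a multi-select question."""
    total = sum(counts.values())
    return {option: 100 * n / total for option, n in counts.items()}


# Counts back-calculated from the reported percentages; the paper reports only
# percentages, so treat these as a consistency check, not as raw data.
context_of_use = {"clinical trials": 20, "follow-up": 11, "acute stroke": 9}
challenges = {"incomplete or inaccurate information": 13, "subjectivity": 11,
              "scoring consistency": 9, "workflow integration": 4, "other": 3}
improvements = {"standardized scoring guide": 14, "visual aids": 10,
                "patient feedback": 9, "EHR integration": 9,
                "clinician training": 3, "other": 4}

for name, counts in [("context of use", context_of_use),
                     ("challenges", challenges),
                     ("improvements", improvements)]:
    rounded = {option: round(p, 2) for option, p in shares(counts).items()}
    print(f"{name}: {sum(counts.values())} selections, {rounded}")
```

Reading the figures this way matters for interpretation: 50% of context-of-use selections being clinical trials does not by itself mean that half of the participants use the mRS for trials.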

5. Designing an mRS Collection Tool: Principles for a Clinical Assessment Tool

Clinical assessment tools (CATs) are designed to support healthcare delivery by standardizing and simplifying the evaluation process, with a focus on ease of use and reliability. They aim to improve the assessment process by maintaining consistency and reducing subjectivity during administration. The main goal is to provide a structured framework for collecting, organizing, and interpreting assessment data accurately and reliably across all settings.

Research highlights the importance of standardizing clinical tools to reduce variability and enhance reliability. For example, one review examined Clinical Decision Support Systems (CDSSs), a type of clinical assessment tool focused on decision-making. The review found that CDSSs can improve clinician adherence to guidelines, but that their impact on patient outcomes is inconsistent: while some studies showed significant benefits, the overall effectiveness of CDSSs in clinical practice is difficult to determine because research on patient outcomes is limited [13]. Nevertheless, these findings highlight the importance of developing and evaluating structured clinical tools, such as CDSSs and CATs, which have the potential to enhance clinical workflows and decision-making [13].

These findings support the need for CATs that optimize the assessment process for tools such as the mRS, which require careful, consistent, and reliable scoring across clinicians and clinical settings. A CAT for the mRS needs to account for usability and standardization, and should include features that reduce subjective judgement and cognitive load during administration. Cognitive load refers to the mental effort required to process information; when excess information competes for limited cognitive resources, it burdens working memory and can reduce the accuracy of a clinical assessment [14].

6. Design Principles for a Clinical Assessment Tool for the Modified Rankin Scale

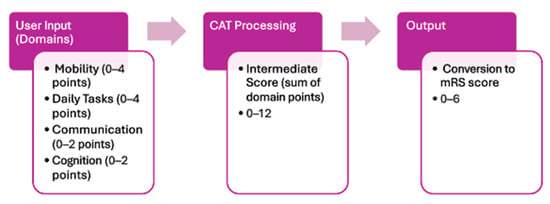

To improve the consistency and usability of the mRS, we propose a CAT designed using principles from human–computer interaction (HCI), cognitive engineering, and established usability heuristics. The basic model is shown in Figure 5: a simple user input is processed by the CAT, which then outputs an mRS grade. The CAT should guide users through the assessment with a structured process of unambiguous questions and a comprehensive scoring guide that leads to the existing mRS grades. Grounded in evidence-based guidelines, the CAT should reduce subjective interpretation, increase inter-rater reliability, and streamline decision-making. The design of the CAT is guided by core principles aimed at reducing cognitive load and ensuring a seamless user experience. These principles prioritize usability, efficiency, and accessibility to support clinicians and patients in accurately assessing post-stroke recovery across all settings [15].

Figure 5.

Process flow diagram of the clinical assessment tool (CAT) outlining the process to complete the CAT and obtain a modified Rankin Scale (mRS) score.

6.1. Domains

Incorporating more domains into the design of a CAT for the mRS should help achieve a more accurate grade. The mRS currently focuses mainly on mobility, with a secondary focus on basic daily tasks. Research shows that cognition and communication are highly affected after stroke, which is why they should be considered when administering the 90-day mRS. In the mRS CAT, four domains (mobility, daily tasks, communication, and cognition) would give a better understanding of the patient’s outcomes 90 days post-stroke while maintaining the current scale’s focus on mobility and daily tasks (Figure 5). A more holistic view of what an mRS score encompasses also supports accessibility and inclusion: adding categories such as cognition and communication would capture a more realistic picture of post-stroke outcomes. These domains are underrepresented in current mRS scoring yet affect a patient’s function and recovery, making their inclusion critical for equity and accuracy. This would in turn allow a more meaningful and accurate mRS score.

6.2. Images

The CAT should pair the text of each option with a simple, clear visual. These images should be easy to interpret, for example consistent black-and-white icon-style illustrations of the people and actions described in the text. Clear, intuitive visuals give users quick, accessible cues for selecting the appropriate option, which can accelerate decision-making and reduce cognitive load. Images also increase accessibility and inclusivity by making the options more approachable and understandable for users with varying cognitive or language abilities.

6.3. Phrasing

Phrasing within the CAT should be simple and written at a basic reading level to accommodate a diverse range of users. Design decisions should prioritize readability by using clear, direct language that minimizes ambiguity. Writing every option in the first person will further support comprehension by helping users identify with options quickly and efficiently during the assessment. This design principle emphasizes accessibility and clarity for the user, which in turn makes decision-making more efficient.

6.4. Providing Examples

Examples should accompany the more ambiguous options to reduce cognitive load and support accurate decision-making; in particular, each domain should include examples for its middle options. Examples should focus on typical daily tasks and activities relevant to users’ lives, providing context that enhances understanding and allows users to make selections confidently. They should also be easy to understand and applicable to a wide range of users. For the mRS specifically, examples should be relatable and written from a first-hand perspective. For instance, if a mobility option read “I have mobility restrictions”, the example could be “I have issues with balance, distance or speed when moving independently”, indicating that the person is independently mobile but has slight mobility issues. Such examples give users a deeper and more relatable understanding of the choices, which may decrease cognitive load and make the tool more efficient.

6.5. Number of Options

The number of options in each section is critical to avoid overwhelming the user. A concise set of distinct, meaningful options will reduce cognitive load, support efficient decision-making, and improve the overall usability of the tool. Each page needs enough information and choice to obtain an accurate score, without creating a time burden or overloading the user with too many options. Offering three to six options per domain, arranged to read from upper left to lower right in line with how most users read on- and offline, is an important step toward minimal cognitive load and easy scanning [14]. If new domains are added to a CAT for the mRS, we suggest keeping mobility and daily tasks, the current focus, as primary domains with more options and heavier scoring weights than any new domains. For example, if cognition and communication are added, they could have three to five options each, while mobility and daily tasks could have four to six. This keeps the tool recognizable to clinicians accustomed to focusing only on mobility and daily tasks.
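The option-count guidance above can be expressed as a simple configuration check. In this Python sketch the `Domain` class, the `check_domains` function, and the numeric weights are hypothetical; only the ranges (four to six options for mobility and daily tasks, three to five for added domains) and the rule that primary domains carry heavier weights come from the text. How weights would combine into an mRS grade is left open, as the paper does not specify it.

```python
from dataclasses import dataclass


@dataclass
class Domain:
    name: str
    options: list[str]  # first-person option texts, least to most severe
    primary: bool       # mobility and daily tasks are the primary domains
    weight: float       # hypothetical scoring weight (not specified in the paper)


# Option-count ranges suggested in the text: 4-6 for primary, 3-5 for added domains.
PRIMARY_RANGE = range(4, 7)
SECONDARY_RANGE = range(3, 6)


def check_domains(domains: list[Domain]) -> list[str]:
    """Return human-readable problems with a proposed domain configuration."""
    problems = []
    for d in domains:
        allowed = PRIMARY_RANGE if d.primary else SECONDARY_RANGE
        if len(d.options) not in allowed:
            problems.append(f"{d.name}: {len(d.options)} options, expected "
                            f"{allowed.start}-{allowed.stop - 1}")
    primary_weights = [d.weight for d in domains if d.primary]
    secondary_weights = [d.weight for d in domains if not d.primary]
    # Every primary domain should outweigh every added domain.
    if primary_weights and secondary_weights and min(primary_weights) <= max(secondary_weights):
        problems.append("primary domains should carry heavier weights than added domains")
    return problems


# Usage with placeholder option texts; cognition deliberately has one option too many.
domains = [
    Domain("mobility", [f"mobility option {i}" for i in range(5)], True, 2.0),
    Domain("daily tasks", [f"daily tasks option {i}" for i in range(4)], True, 2.0),
    Domain("communication", [f"communication option {i}" for i in range(3)], False, 1.0),
    Domain("cognition", [f"cognition option {i}" for i in range(6)], False, 1.0),
]
print(check_domains(domains))  # prints ['cognition: 6 options, expected 3-5']
```

A check like this could run whenever the tool’s content is edited, so that new domains or options cannot silently push a page past the intended range.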

6.6. Visual Esthetics

The overall visual esthetic of the CAT should be clean, minimalistic, and uncluttered to reduce distractions and cognitive load. Consistent use of images, spacing, and style will contribute to an intuitive, easily learnable interface that supports focus and enhances the assessment experience. A clear visual hierarchy is important: breaking the information into distinct sections such as title, question, and options lets users immediately separate and understand the relevant information on each page, making it easier to focus [14]. Each page or section should address one area of disability at a time, for example placing daily tasks and mobility in separate sections, so that there is no overlap and less confusion when gathering information. Similarly, structuring the CAT questions in ascending order, from least to most severe disability, will support more deliberate decisions about the most appropriate response and allow users to move on to the next question faster.

6.7. User Satisfaction and Engagement

Ensuring that the CAT creates a positive user experience will increase usability. The CAT should feel intuitive and keep the user in control throughout the process. Familiarity aids ease of use: an intuitive route lessens demands on attention and short-term memory, creating a more pleasant and comfortable user experience [14]. Responsiveness is key to user satisfaction; keeping the user informed with page numbers and a progress indicator is a good way to show active responsiveness [16]. A progress indicator reduces uncertainty by showing users how far they are through the CAT, and combined with an intuitive flow that keeps users in control, it will increase user satisfaction and autonomy. Immediate scoring with an explanation of results will reinforce trust in the CAT and help users understand their outcomes. A closing thank-you message in a simple, intuitive layout will also contribute to a more satisfying user experience.
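The page flow described in this section and in Section 6.6 (one domain per page, then immediate results with an explanation, then a thank-you message) can be sketched with a page list and a progress label. The Python functions `page_sequence` and `progress_label` and the label format are illustrative assumptions, not a specification of the tool.

```python
def page_sequence(domains: list[str]) -> list[str]:
    """One page per domain, then a results page and a thank-you page."""
    return [*domains, "results and explanation", "thank you"]


def progress_label(page_index: int, total_pages: int) -> str:
    """Page number plus a percentage progress indicator for the current page."""
    if not 0 <= page_index < total_pages:
        raise ValueError("page_index out of range")
    percent = round(100 * (page_index + 1) / total_pages)
    return f"Page {page_index + 1} of {total_pages} ({percent}%)"


# Usage: the four domains proposed in Section 6.1, one per page.
pages = page_sequence(["mobility", "daily tasks", "communication", "cognition"])
for i, page in enumerate(pages):
    print(f"{progress_label(i, len(pages))}: {page}")
```

This sketch counts the results and thank-you screens toward progress; a real interface might count only question pages so that the indicator reaches 100% when the last domain is answered.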

7. Impact

By creating a CAT for the mRS grounded in human–computer interaction (HCI) and cognitive engineering principles, we aim to reduce variability in administration and scoring. By reducing cognitive load and mitigating cognitive biases during administration, the CAT should help increase inter-rater reliability. Through its focus on usability, simplicity, and standardization, the CAT will guide clinicians through a structured, intuitive process for administering the mRS. A standardized CAT for the mRS could significantly enhance the efficiency and accuracy of clinical workflow in two main ways. First, it would create a standardized administration and scoring process across all clinical and research settings, reducing inconsistencies in administration. This would improve the reliability of the mRS as an outcome measure and lead to more consistent, uniform patient care; it could also influence treatment approaches, as these are shaped by clinical trials that use the mRS as an outcome measure. Second, a standardized CAT would streamline the assessment process by providing a structured, standardized protocol, reducing the administrative burden of the mRS. By designing a standardized CAT for the mRS around HCI principles and reduced cognitive load, we can enhance how the mRS is used across all settings and set a new standard for reliability and effectiveness in patient assessment.

8. Discussion

In this paper we outline how mRS administration could be advanced by a CAT that supports administrators’ decision-making with minimal cognitive burden. The CAT’s user-centric design, focusing on learnability, efficiency, reliability, and satisfaction, is the cornerstone for improving inter-rater reliability. Design choices such as clear navigation and immediate feedback underscore the CAT’s potential to become an indispensable tool in stroke assessment.

The proposed CAT differs from the current mRS in several key respects. The current mRS leaves its administration open to clinicians’ interpretation, whereas the CAT would use binary questions and predefined options to produce a more precise and reproducible grade. Standardization of the current mRS varies by administrator and, at times, by setting; the CAT is designed for high standardization, with automated scoring to minimize subjective judgement. The cognitive load of the current mRS can be high because its ambiguity and lack of prompts force subjective scoring decisions, whereas the proposed CAT reduces cognitive load by providing a clear decision guide. Lastly, the current mRS focuses mainly on mobility, with a slight secondary focus on basic daily tasks; the proposed CAT adds domains such as cognition and communication to give a more global understanding of post-stroke disability.

While this paper lays a foundation of design principles for a CAT, ongoing research and development are crucial. Subsequent phases will include user testing with real-world clinical teams, further refinement of the CAT based on user feedback, and examination of its impact on clinical outcomes. As mRS scoring becomes more standardized, the stroke care community can expect more consistent and accurate assessments, ultimately leading to better patient care and more reliable clinical research data. The CAT also opens the possibility of a patient-completed mRS. If these design principles make administering the mRS more intuitive and straightforward for clinicians, a natural next step is to explore an approach that allows stroke survivors to assess their own disability as part of their recovery. This would yield not only more data, but more first-hand data, collected by the stroke survivors or caregivers who are experiencing recovery directly.

8.1. Future Direction

The next step is to use these design principles to create a low-fidelity first iteration of a user interface for the modified Rankin Scale (mRS) clinical assessment tool (CAT). This will be the first step toward validating and standardizing the new tool. Once the first iteration is developed, a user study with stroke survivors and clinicians will be performed to gain insights into the efficacy and validity of the new tool. The aim is to establish the CAT as a benchmark tool across healthcare settings. The user interface will eventually aim to complement existing electronic health records and tools while providing guidance throughout the assessment, supporting information gathering and decision-making.

8.2. Limitations

The main limitation of this paper was the sample size of the online survey. Although the 20 participants were sufficient and provided valuable data, more participants would have yielded more data for our study. Keeping the survey anonymous was important and allowed opinions to be shared more readily; however, a more detailed demographic section, for example asking where participants worked, would have provided concrete evidence that participants came from across Canada.

9. Conclusions

This paper has provided an in-depth analysis of the current application of the modified Rankin Scale (mRS) in clinical settings and the need for standardization in its administration. We have explored the historical context and evolution of the mRS, and the challenges arising from its interpretative nature, which leads to inter-rater variability. Our analysis indicates that integrating a clinical assessment tool (CAT) informed by human–computer interaction (HCI) principles would enhance the reliability and accuracy of mRS assessments. A CAT designed in this way would align with the cognitive workflow of clinicians and offer an intuitive user experience, addressing the cognitive load and individual decision-making processes that contribute to variability in mRS scoring. A well-designed CAT informed by HCI could significantly reduce variability in mRS interpretation, leading to higher-quality assessments, improved patient outcomes, and more accurate data for stroke research.

Author Contributions

L.L. completed the study design, data collection, and initial writing for the manuscript. N.K. completed supervision, funding acquisition and manuscript review. All authors have read and agreed to the published version of the manuscript.

Funding

Funding for this work is provided by an NSERC Alliance grant ALLRP 580440 (Title: Designing and Deploying a National Registry to Reduce Disparity in Access to Stroke Treatment and Optimise Time to Treatment; PI: Noreen Kamal).

Institutional Review Board Statement

The online survey for this study was conducted in accordance with the Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans and was approved by the Health Sciences Research Ethics Board of Dalhousie University (REB#2024-7443, 12 December 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Online survey data cannot be publicly shared due to ethical restrictions; please contact the corresponding author for more information.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Banks, J.L.; Marotta, C.A. Outcomes validity and reliability of the modified Rankin scale: Implications for stroke clinical trials: A literature review and synthesis. Stroke 2007, 38, 1091–1096.

- Pożarowszczyk, N.; Kurkowska-Jastrzębska, I.; Sarzyńska-Długosz, I.; Nowak, M.; Karliński, M. Reliability of the modified Rankin Scale in clinical practice of stroke units and rehabilitation wards. Front. Neurol. 2023, 14, 1064642.

- Zhao, H.; Collier, J.M.; Quah, D.M.; Purvis, T.; Bernhardt, J. The Modified Rankin Scale in Acute Stroke Has Good Inter-Rater-Reliability but Questionable Validity. Cerebrovasc. Dis. 2010, 29, 188–193.

- Zeltzer, L. Modified Rankin Scale (MRS). Stroke Engine. Available online: https://strokengine.ca/en/assessments/modified-rankin-scale-mrs/#Purpose (accessed on 19 April 2008).

- Quinn, T.; Dawson, J.; Walters, M. Dr John Rankin; His Life, Legacy and the 50th Anniversary of the Rankin Stroke Scale. Scott. Med. J. 2008, 53, 44–47.

- Rethnam, V.; Bernhardt, J.; Johns, H.; Hayward, K.S.; Collier, J.M.; Ellery, F.; Gao, L.; Moodie, M.; Dewey, H.; Donnan, G.A.; et al. Look closer: The multidimensional patterns of post-stroke burden behind the modified Rankin Scale. Int. J. Stroke Off. J. Int. Stroke Soc. 2021, 16, 420–428.

- Cumming School of Medicine. ESCAPE Stroke. University of Calgary. Available online: https://cumming.ucalgary.ca/escape-stroke (accessed on 9 April 2025).

- Goyal, M.; Demchuk, A.M.; Menon, B.K.; Eesa, M.; Rempel, J.L.; Thornton, J.; Roy, D.; Jovin, T.G.; Willinsky, R.A.; Sapkota, B.L.; et al. Randomized Assessment of Rapid Endovascular Treatment of Ischemic Stroke. N. Engl. J. Med. 2015, 372, 1019–1030.

- Hill, M.D.; Goyal, M.; Menon, B.K.; Nogueira, R.G.; McTaggart, R.A.; Demchuk, A.M.; Graziewicz, M. Efficacy and safety of nerinetide for the treatment of acute ischaemic stroke (ESCAPE-NA1): A multicentre, double-blind, randomised controlled trial. Lancet 2020, 395, 878–887.

- Patel, V.L.; Kaufman, D.R.; Kannampallil, T.G. Diagnostic Reasoning and Decision Making in the Context of Health Information Technology. Rev. Hum. Factors Ergon. 2013, 8, 149–190.

- Sharma, S.K.; Mudgal, S.K.; Gaur, R.; Chaturvedi, J.; Rulaniya, S.; Sharma, P. Navigating Sample Size Estimation for Qualitative Research. J. Med. Evid. 2024, 5, 133–139.

- Hennink, M.; Kaiser, B.N. Sample sizes for saturation in qualitative research: A systematic review of empirical tests. Soc. Sci. Med. 2022, 292, 114523.

- Johnston, M.E.; Langton, K.B.; Haynes, R.B.; Mathieu, A. Effects of computer-based clinical decision support systems on clinician performance and patient outcome: A critical appraisal of research. Ann. Intern. Med. 1994, 120, 135–142.

- Patel, V.L.; Kaufman, D.R.; Kannampallil, T. Human-Computer Interaction, Usability, and Workflow. In Biomedical Informatics; Shortliffe, E.H., Cimino, J.J., Eds.; Springer: Cham, Switzerland, 2021.

- Lidwell, W.; Holden, K.; Butler, J. Universal Principles of Design: 125 Ways to Enhance Usability, Influence Perception, Increase Appeal, Make Better Design Decisions, and Teach Through Design (Revised and Updated); Rockport Publishers: Beverly, MA, USA, 2010.

- Johnson, J. Designing with the Mind in Mind: Simple Guide to Understanding User Interface Design Guidelines; Morgan Kaufmann: Cambridge, MA, USA, 2014.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).