CAMBSRec: A Context-Aware Multi-Behavior Sequential Recommendation Model

Abstract

1. Introduction

- (1) Self-attention lacks a sufficient inductive bias for sequential data. Although it can capture long-range dependencies, it may overlook fine-grained sequential patterns. It may also overfit the training data, which weakens generalization.

- (2) Because self-attention aggregates information across the entire sequence, it can wash out important fine-grained patterns and cause oversmoothing. This hinders the model’s ability to capture critical temporal dynamics and make accurate predictions.

- (3) Existing self-attention mechanisms in sequential recommendation typically achieve position awareness through absolute positional encoding, which assigns a learnable vector to each position. However, user behavior sequences can have diverse characteristics. For example, a user may purchase items of the same type several times within a session; in such cases, relative or context-dependent positions may matter more than absolute ones. Because traditional positional encoding ignores the contextual information of user behaviors, it fails to capture users’ dynamic interests effectively.
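Limitation (2) can be illustrated with a toy experiment. Under the simplifying assumption of one fixed, randomly generated attention matrix applied repeatedly with no residual connections or feed-forward layers, each output row is a convex combination of the input rows, so the gap between positions can only shrink from layer to layer; this low-pass behavior is what oversmoothing refers to. All names and sizes here are hypothetical, and this is not code from CAMBSRec.

```python
import math
import random
from typing import List

Matrix = List[List[float]]  # rows = sequence positions, columns = hidden features


def softmax_rows(scores: Matrix) -> Matrix:
    """Row-wise softmax, so every row is a probability distribution over positions."""
    out = []
    for row in scores:
        m = max(row)
        exps = [math.exp(s - m) for s in row]
        z = sum(exps)
        out.append([e / z for e in exps])
    return out


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def spread(x: Matrix) -> float:
    """Largest per-feature gap between positions: max over columns of (max - min)."""
    return max(max(col) - min(col) for col in zip(*x))


rng = random.Random(0)
n, d, layers = 8, 4, 50
x = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
# A fixed, hypothetical attention map; real models recompute it per layer from queries and keys.
attn = softmax_rows([[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)])

history = [spread(x)]
h = x
for _ in range(layers):
    h = matmul(attn, h)  # pure attention averaging, no residual or feed-forward path
    history.append(spread(h))

print(f"spread before: {history[0]:.3f}, after {layers} layers: {history[-1]:.2e}")
```

Residual connections and feed-forward layers slow this collapse in practice, which is why the effect is a tendency rather than a guarantee in real models.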

- (1) We design a context-aware multi-behavior sequential recommendation approach that models user behaviors and items separately. The method employs an inductive bias self-attention layer to capture long- and short-term dependencies in multi-behavior data, introduces a Fourier transform to balance long- and short-term interest preferences, and designs a high-pass filter to alleviate oversmoothing. In addition, a weighted binary cross-entropy loss balances the different behaviors, enabling fine-grained control over the weight of each behavior type.

- (2) For personalized recommendation, we design a positional encoding based on context similarity. Position information is determined by the dissimilarity between context items and the target item rather than by a fixed order, so related items share similar position codes. This enhances the semantic relevance of the position representation.

- (3) We conducted extensive experiments on three multi-behavior datasets, and the results demonstrate that our method achieves better results than five baseline methods on all three datasets.

2. Related Work

2.1. Sequential Recommendation

2.2. Multi-Behavior Recommendation

2.3. Positional Encoding

3. Problem Formulation

4. Methodology

4.1. Multi-Behavior Encoding

4.2. Position Encoding

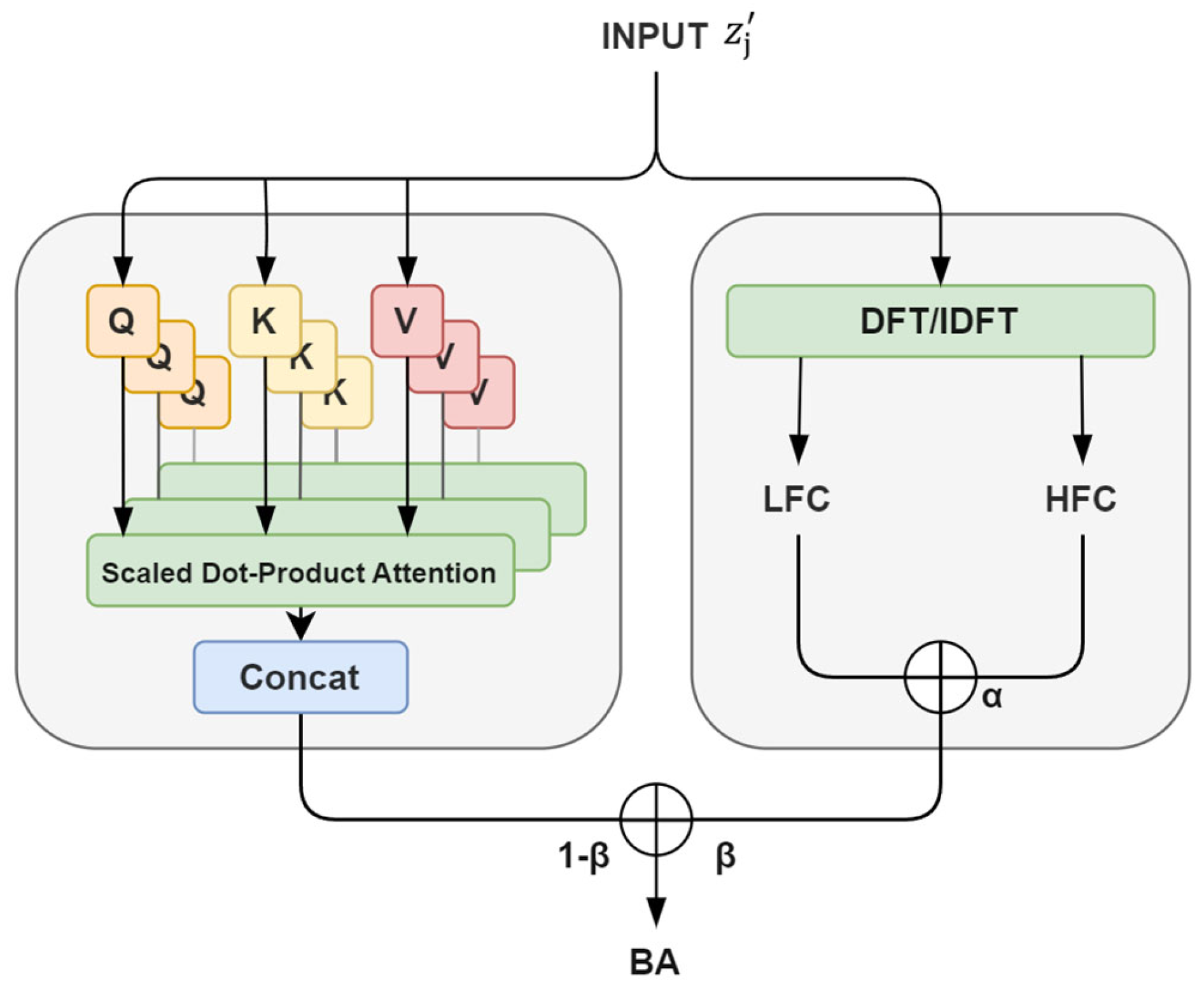
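Contribution (2) in the introduction derives positions from the dissimilarity between context items and the target item. The sketch below is one possible reading, modeled on contextual position encoding (CoPE; Golovneva et al., 2024): a hypothetical gate (1 - cos)/2 in [0, 1] scores how unlike the target each context item is, each item's position is the reverse cumulative sum of gates up to the most recent item, and a fractional position blends the two nearest learnable position vectors. The gate formula, the counting direction, and all function names are assumptions, not the paper's definitions.

```python
import math
from typing import List, Sequence

Vector = List[float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def dissimilarity_gates(context: List[Vector], target: Vector) -> List[float]:
    """Hypothetical gate in [0, 1]: 0 for an item aligned with the target, 1 for an opposite one."""
    return [(1.0 - cosine(item, target)) / 2.0 for item in context]


def contextual_positions(gates: List[float]) -> List[float]:
    """Position of item j = sum of gates from j to the most recent item (reverse cumulative sum)."""
    positions = [0.0] * len(gates)
    running = 0.0
    for j in range(len(gates) - 1, -1, -1):
        running += gates[j]
        positions[j] = running
    return positions


def interpolate_position(pos_table: List[Vector], p: float) -> Vector:
    """Map a fractional position to a blend of the two nearest learnable position vectors."""
    p = max(0.0, min(p, len(pos_table) - 1))  # clamp into the table's index range
    lo = math.floor(p)
    hi = min(lo + 1, len(pos_table) - 1)
    w = p - lo
    return [(1.0 - w) * a + w * b for a, b in zip(pos_table[lo], pos_table[hi])]


# Usage: items resembling the target add little to the running count, so they get nearby positions.
target = [1.0, 0.0]
context = [[0.0, 1.0], [1.0, 0.1], [1.0, 0.0]]
pos_table = [[float(i), -float(i)] for i in range(4)]  # stand-in for learned position embeddings
positions = contextual_positions(dissimilarity_gates(context, target))
encodings = [interpolate_position(pos_table, p) for p in positions]
print(positions)
```

Counting only "dissimilar" steps is what lets a run of related items share nearly the same position code, in contrast to an absolute encoding where every step advances the index by one.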

4.3. Inductive Bias Self-Attention Layer
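Contribution (1) in the introduction states that a Fourier transform balances long- and short-term interests and that a high-pass filter counters oversmoothing; the notation table lists LFC and HFC. The following is a minimal sketch in the spirit of BSARec (Shin et al., 2024), assuming that LFC keeps the sequence-axis frequencies up to a hypothetical `cutoff`, that HFC = x - LFC, and that a hypothetical scalar `beta` re-weights HFC (beta > 1 sharpens short-term detail, beta < 1 smooths it). A direct O(n²) DFT keeps the example dependency-free; a real model would use an FFT and learn beta.

```python
import cmath
import math
from typing import List

Matrix = List[List[float]]  # shape (seq_len, hidden_dim): one row per sequence position


def low_pass(x: Matrix, cutoff: int) -> Matrix:
    """LFC: keep DC plus frequencies 1..cutoff along the sequence axis, zero the rest."""
    n, d = len(x), len(x[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [x[t][j] for t in range(n)]
        # Forward DFT of one feature dimension over time steps.
        spec = [sum(col[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
                for k in range(n)]
        # Bins k and n - k are the same physical frequency; dropping both keeps the signal real.
        for k in range(n):
            if min(k, n - k) > cutoff:
                spec[k] = 0j
        # Inverse DFT; imaginary parts are only rounding noise, so keep the real part.
        for t in range(n):
            val = sum(spec[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            out[t][j] = val.real
    return out


def frequency_mix(x: Matrix, cutoff: int, beta: float) -> Matrix:
    """Return LFC + beta * HFC with HFC = x - LFC; beta > 1 emphasizes high frequencies."""
    lfc = low_pass(x, cutoff)
    return [[lfc[t][j] + beta * (x[t][j] - lfc[t][j]) for j in range(len(x[0]))]
            for t in range(len(x))]


# Usage: an alternating (purely high-frequency) signal is doubled when beta = 2.
seq = [[1.0], [-1.0], [1.0], [-1.0]]
print(frequency_mix(seq, cutoff=1, beta=2.0))
```

With beta = 1 the mix returns the input unchanged, so the layer can only move away from plain behavior if beta is tuned or learned.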

4.4. Model Training and Prediction
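Contribution (1) mentions a weighted binary cross-entropy loss that balances behavior types, and RQ2 studies how the weights are chosen. A minimal sketch, assuming each training sample pairs the score of an observed item with the score of one sampled negative item and carries the behavior type of the observed interaction. The per-behavior weights and the normalization by total weight are hypothetical choices, not values from the paper.

```python
import math
from typing import Dict, List, Tuple

Sample = Tuple[str, float, float]  # (behavior type, positive-item score, negative-item score)


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def weighted_bce(samples: List[Sample], weights: Dict[str, float]) -> float:
    """Behavior-weighted BCE over (positive, sampled negative) score pairs."""
    total, norm = 0.0, 0.0
    for behavior, pos_score, neg_score in samples:
        w = weights[behavior]
        # -log(sigmoid(pos)) = softplus(-pos);  -log(1 - sigmoid(neg)) = softplus(neg)
        total += w * (softplus(-pos_score) + softplus(neg_score))
        norm += w
    if norm == 0.0:
        raise ValueError("at least one sample must have a non-zero behavior weight")
    return total / norm


# Hypothetical weights for the Tianchi/Beibei behavior types: purchases count most.
weights = {"click": 0.2, "favorite": 0.5, "cart": 0.7, "buy": 1.0}
batch = [("click", 1.2, -0.3), ("buy", 0.4, 0.1), ("cart", 2.0, -1.5)]
print(f"weighted BCE: {weighted_bce(batch, weights):.4f}")
```

Setting a behavior's weight to zero removes its samples from the objective entirely, which is the kind of fine-grained control over weight ratios the introduction describes.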

5. Experiments

- Question 1: How does CAMBSRec perform compared with state-of-the-art multi-behavior and sequential models?

- Question 2: What is the impact of assigning different weights to different behaviors?

- Question 3: Is the proposed context-aware positional encoding effective?

- Question 4: How well does the attention mechanism with inductive bias perform?

- Question 5: How does CAMBSRec perform on sparse data?

5.1. Datasets and Experimental Setup

5.2. Model Performance (RQ1)

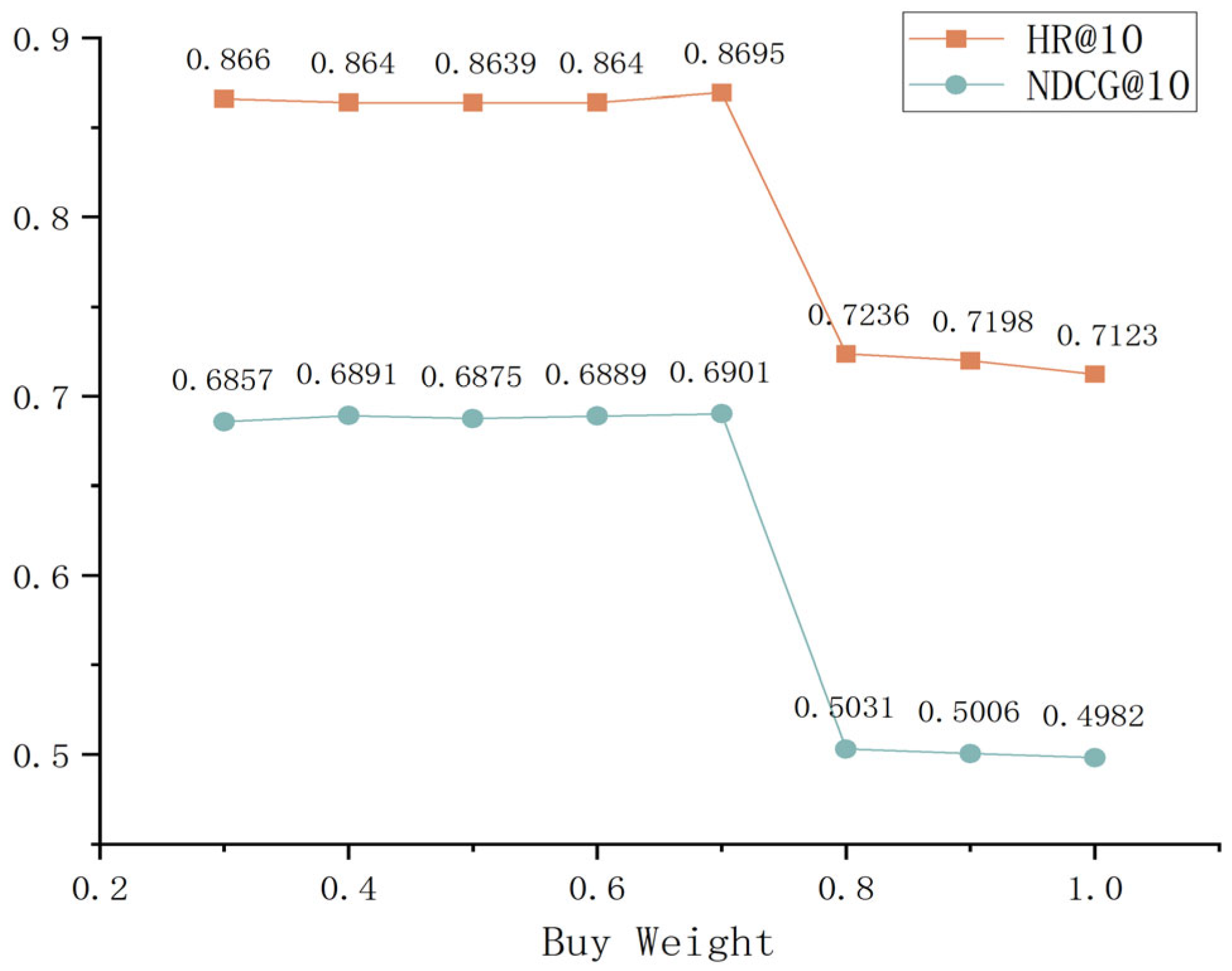

5.3. Impact of Behavior Weights (RQ2)

5.4. Impact of Context-Aware Positional Encoding (RQ3)

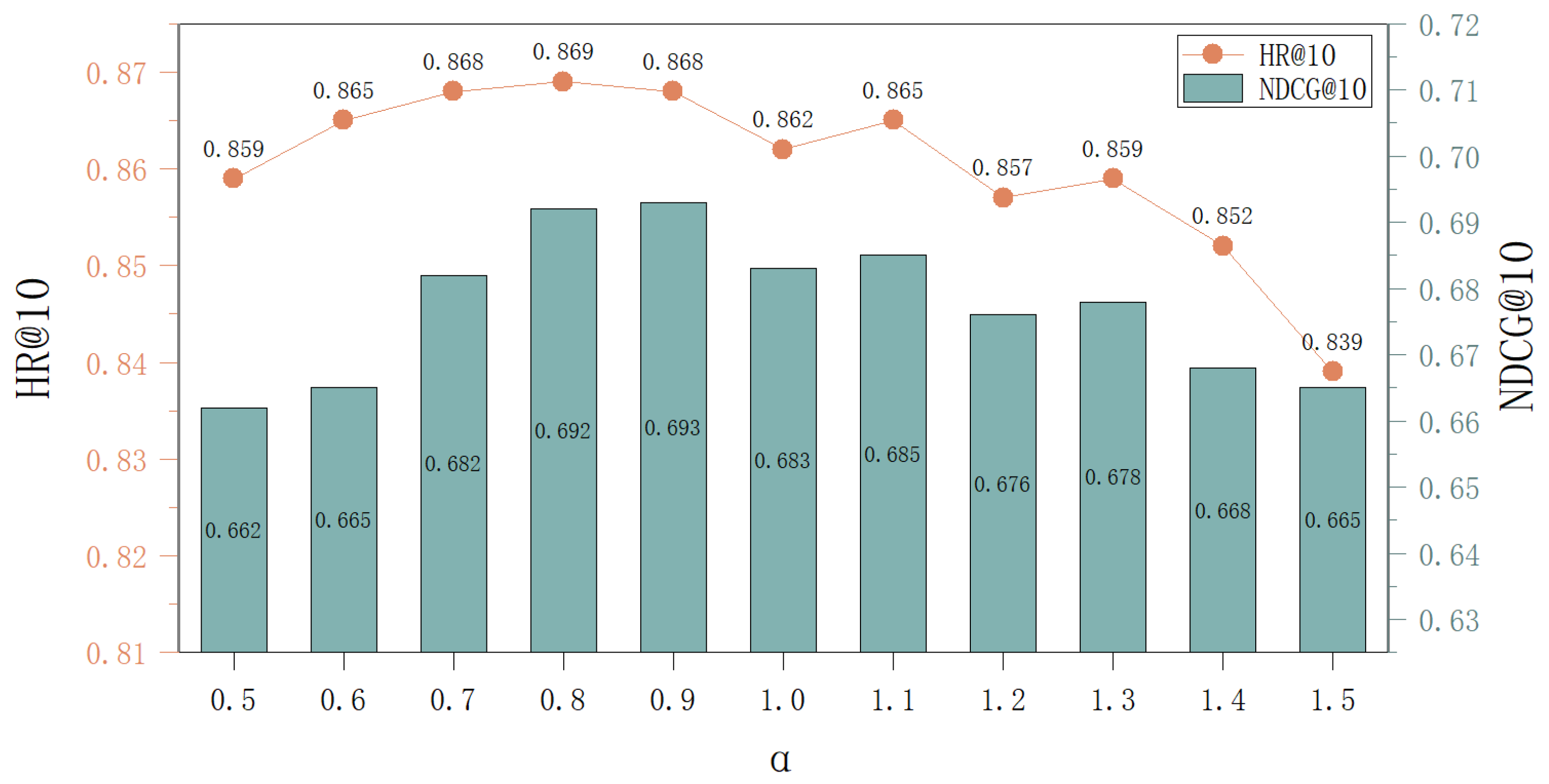

5.5. Impact of Inductive Bias (RQ4)

5.6. Performance in the Face of Sparse Data (RQ5)

6. Discussion

6.1. Comparison with Existing Studies

6.2. Complexity and Efficiency of Long Sequences

6.3. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Correction Statement


| Notation | Description |
|---|---|
| | Item Embedding, Behavior Embedding, and Joint Embedding |
| | Dissimilarity Gate Value |
| | Context-Aware Positional Encoding |
| LFC, HFC | Low-Frequency Components and High-Frequency Components |
| | Score Function |
| | Loss Function |

| Dataset | Users | Items | Interactions | Behavior Types |
|---|---|---|---|---|
| Tianchi | 6876 | 237,700 | 1,048,575 | {Click, Favorite, Cart, Buy} |
| Beibei | 21,716 | 7977 | 3,338,068 | {Click, Favorite, Cart, Buy} |
| MovieLens | 67,787 | 8704 | 9,922,014 | {Dislike, Neutral, Like} |
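RQ5 concerns sparse data, and the dataset statistics are enough to quantify sparsity. A quick back-of-the-envelope computation, assuming density is defined as interactions / (users × items). Because the interaction counts include every behavior record, a user-item pair with several behaviors is counted more than once, so this figure is an upper bound on the fraction of user-item pairs actually observed.

```python
from typing import Dict, Tuple

# (users, items, interactions) copied from the dataset statistics table.
DATASETS: Dict[str, Tuple[int, int, int]] = {
    "Tianchi": (6876, 237_700, 1_048_575),
    "Beibei": (21_716, 7977, 3_338_068),
    "MovieLens": (67_787, 8704, 9_922_014),
}


def density(users: int, items: int, interactions: int) -> float:
    """Interaction records per user-item cell (repeated multi-behavior records all count)."""
    return interactions / (users * items)


densities = {name: density(*stats) for name, stats in DATASETS.items()}
for name, (users, items, interactions) in DATASETS.items():
    print(f"{name}: density {densities[name]:.4%}, "
          f"{interactions / users:.1f} records per user, {interactions / items:.1f} per item")
```

Under this measure Tianchi (about 0.064%) is roughly 26 to 30 times sparser than Beibei (about 1.93%) and MovieLens (about 1.68%), largely because of its much larger item catalog.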

| Category | Method | Tianchi HR@10 | Tianchi NDCG@10 | Beibei HR@10 | Beibei NDCG@10 | MovieLens HR@10 | MovieLens NDCG@10 |
|---|---|---|---|---|---|---|---|
| Single-Behavior Sequential | SASRec | 0.658 | 0.484 | 0.423 | 0.382 | 0.815 | 0.573 |
| | TiSASRec | 0.646 | 0.496 | 0.493 | 0.393 | 0.786 | 0.543 |
| Multi-Behavior Sequential | MB-GCN | 0.836 | 0.603 | 0.633 | 0.384 | 0.750 | 0.489 |
| | MB-GMN | 0.848 | 0.633 | 0.648 | 0.386 | 0.744 | 0.469 |
| | MBHT | 0.834 | 0.654 | 0.623 | 0.358 | 0.829 | 0.615 |
| | CAMBSRec (ours) | 0.869 | 0.690 | 0.654 | 0.391 | 0.841 | 0.623 |
| Improvement (%) | | 4.37% | 5.42% | 0.93% | 1.24% | 1.51% | 1.18% |
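The results are reported as HR@10 and NDCG@10. Assuming the common leave-one-out protocol, in which each user has a single held-out target item ranked against a set of candidates (the exact candidate sampling is not described in this section), both metrics reduce to simple functions of the target's rank. The helper names below are hypothetical.

```python
import math
from typing import List, Tuple


def rank_of_target(scores: List[float], target: int) -> int:
    """0-based rank of the target; ties with other candidates count against it."""
    t = scores[target]
    return sum(1 for i, s in enumerate(scores) if i != target and s >= t)


def hr_at_k(rank: int, k: int = 10) -> float:
    """Hit ratio: 1 if the target appears in the top k, else 0."""
    return 1.0 if rank < k else 0.0


def ndcg_at_k(rank: int, k: int = 10) -> float:
    """With one relevant item, IDCG = 1, so NDCG@k = 1 / log2(rank + 2) inside the top k."""
    return 1.0 / math.log2(rank + 2) if rank < k else 0.0


# Usage: average per-user values over all test users (assumed aggregation).
users: List[Tuple[List[float], int]] = [([0.9, 0.1, 0.5], 0), ([0.2, 0.8, 0.4], 2)]
ranks = [rank_of_target(scores, target) for scores, target in users]
print("HR@10:", sum(hr_at_k(r) for r in ranks) / len(ranks))
print("NDCG@10:", sum(ndcg_at_k(r) for r in ranks) / len(ranks))
```

HR@10 only checks whether the target lands in the top ten, while NDCG@10 also rewards placing it near the top, which is why the two metrics can rank methods differently (as on Beibei).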

| Model | HR@10 | NDCG@10 | Change |
|---|---|---|---|
| Baseline | 0.869 | 0.690 | — |
| Baseline + TNS | 0.858 | 0.684 | ↓1.2%/↓0.9% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhuang, B.; Lan, Y.; Zhang, M. CAMBSRec: A Context-Aware Multi-Behavior Sequential Recommendation Model. Informatics 2025, 12, 79. https://doi.org/10.3390/informatics12030079

Zhuang B, Lan Y, Zhang M. CAMBSRec: A Context-Aware Multi-Behavior Sequential Recommendation Model. Informatics. 2025; 12(3):79. https://doi.org/10.3390/informatics12030079

Chicago/Turabian Style: Zhuang, Bohan, Yan Lan, and Minghui Zhang. 2025. "CAMBSRec: A Context-Aware Multi-Behavior Sequential Recommendation Model" Informatics 12, no. 3: 79. https://doi.org/10.3390/informatics12030079

APA Style: Zhuang, B., Lan, Y., & Zhang, M. (2025). CAMBSRec: A Context-Aware Multi-Behavior Sequential Recommendation Model. Informatics, 12(3), 79. https://doi.org/10.3390/informatics12030079