Toolkit for Inclusion of User Experience Design Guidelines in the Development of Assistants Based on Generative Artificial Intelligence

Abstract

1. Introduction

2. Background

3. Materials and Methods

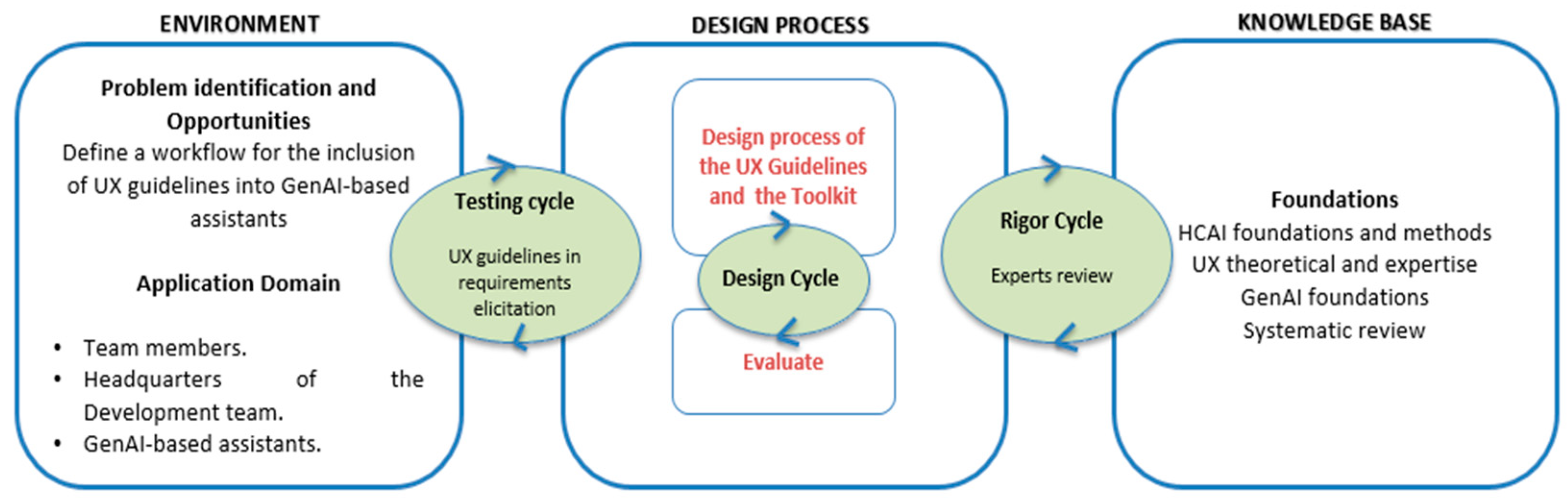

3.1. Methodology

3.2. UX Design Guidelines and Recommendations for GenAI-Based Assistants

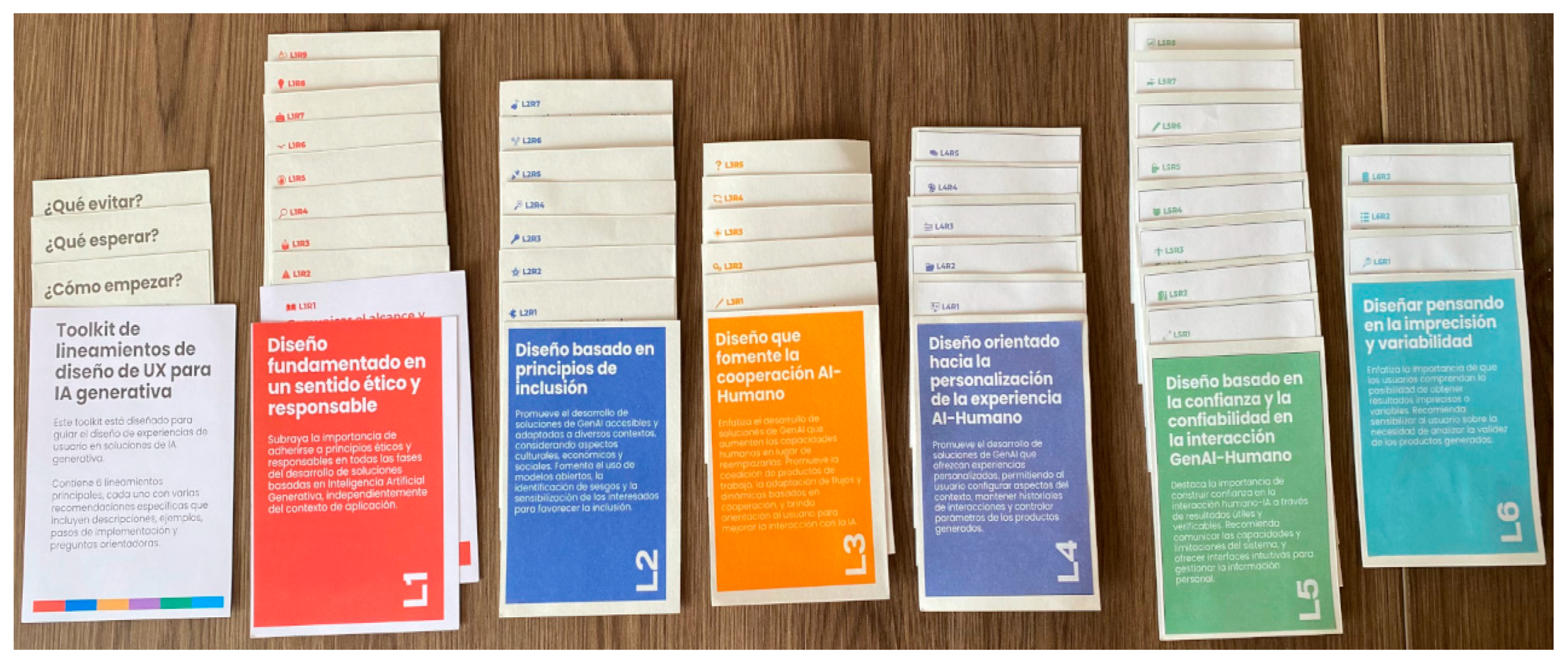

3.3. Toolkit for UX Design Guidelines for GenAI-Based Assistants

3.4. Evaluation

4. Results and Discussion

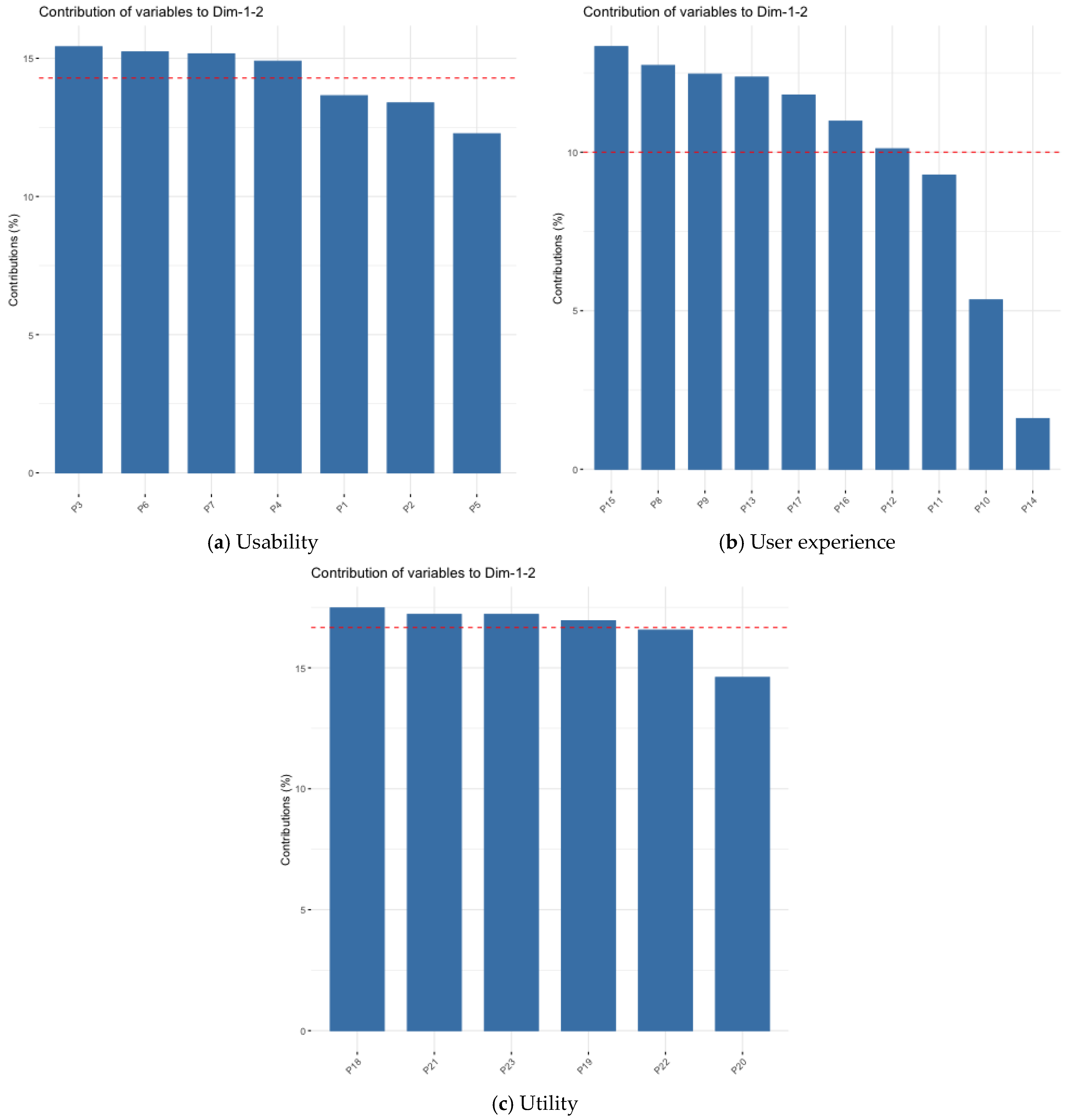

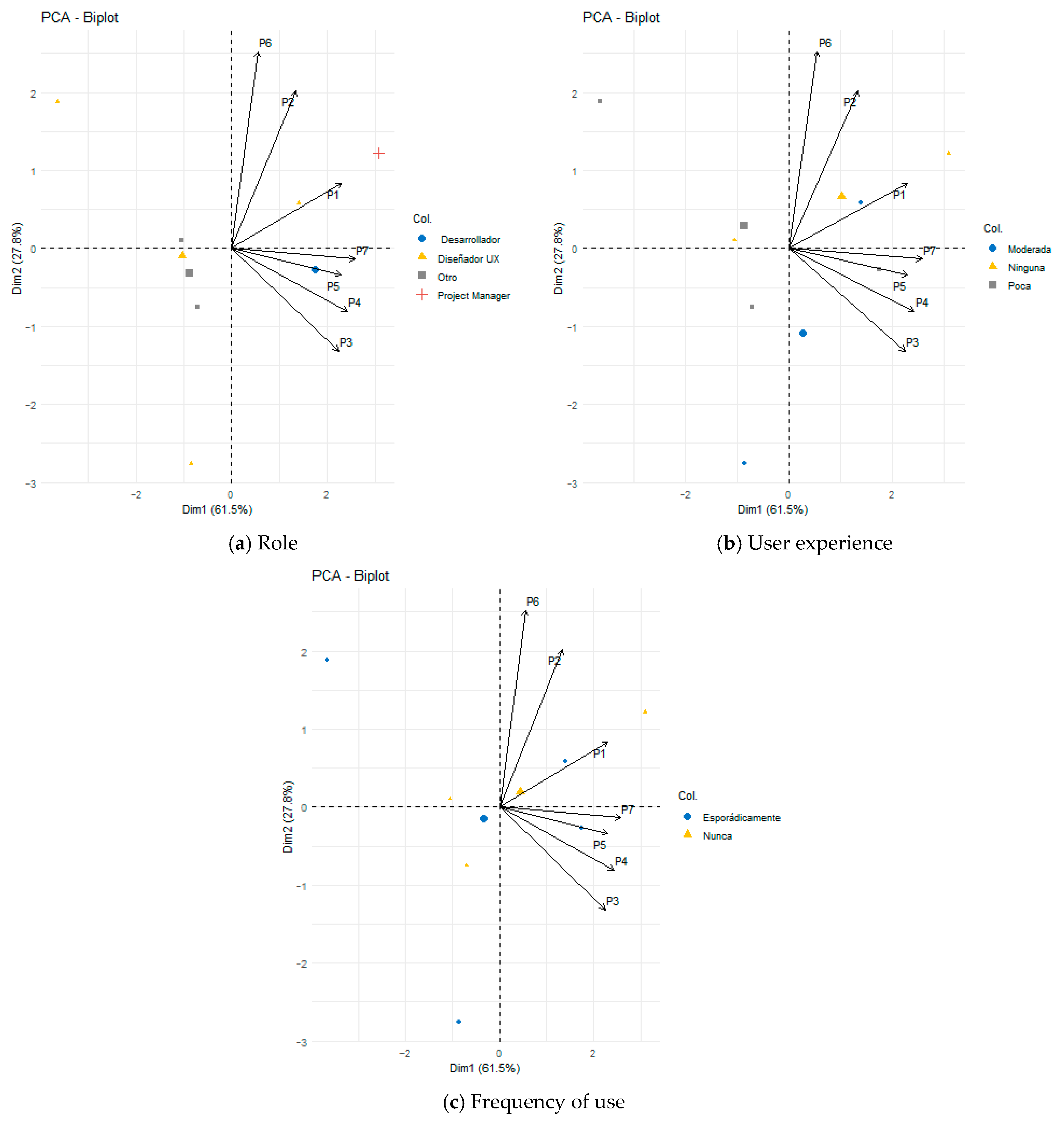

4.1. Quantitative Results

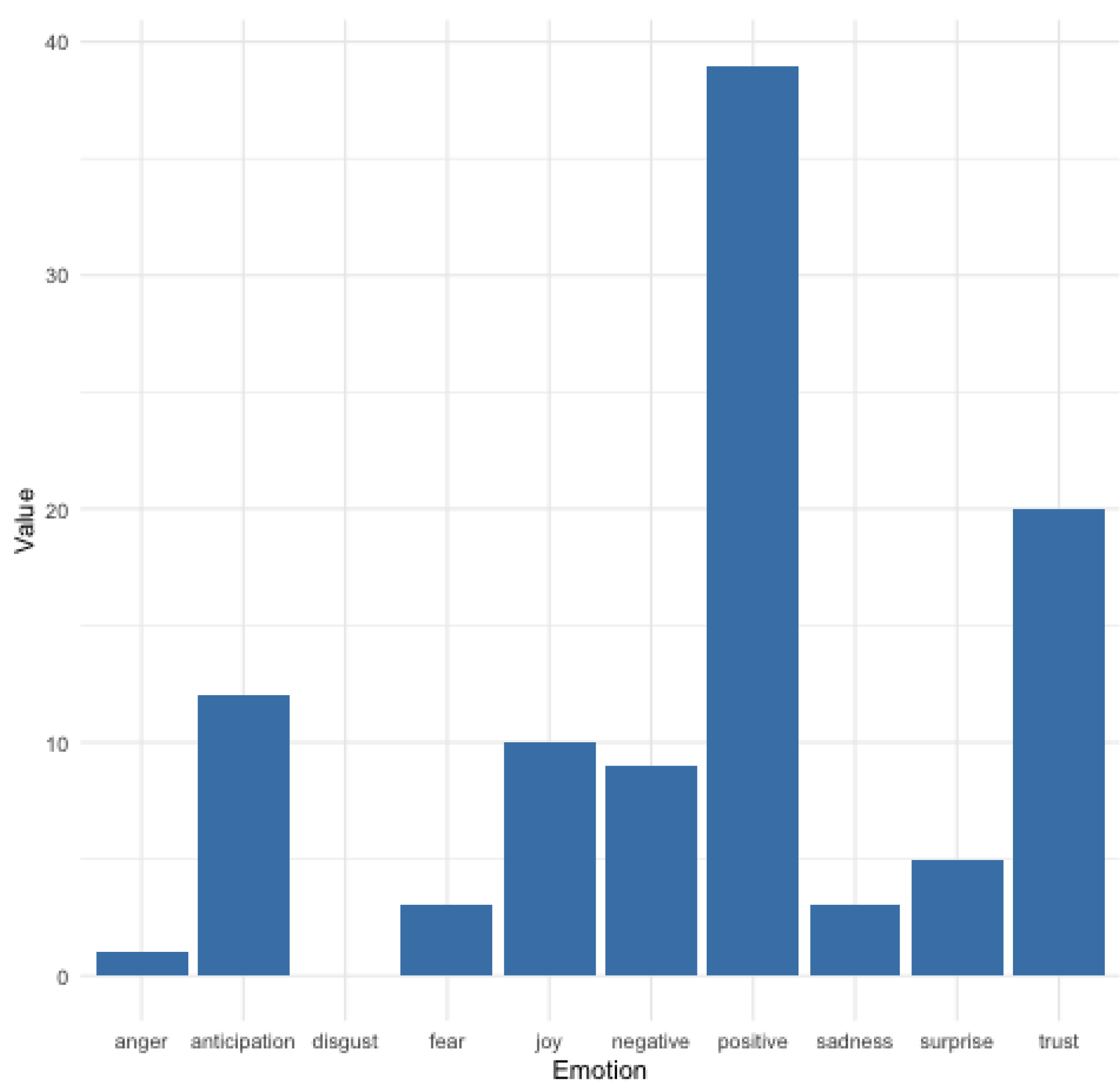

4.2. Qualitative Results

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chen, A.; Liu, L.; Zhu, T. Advancing the democratization of generative artificial intelligence in healthcare: A narrative review. J. Hosp. Manag. Health Policy 2024, 8, 12.

2. Dessimoz, C.; Thomas, P.D. AI and the democratization of knowledge. Sci. Data 2024, 11, 268.

3. Rajaram, K.; Tinguely, P.N. Generative artificial intelligence in small and medium enterprises: Navigating its promises and challenges. Bus. Horiz. 2024, 67, 629–648.

4. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 11.

5. Dix, A.; Finlay, J.; Abowd, G.D.; Beale, R. Human-Computer Interaction; Taylor & Francis, Inc.: Philadelphia, PA, USA, 2004.

6. Xu, W.; Dainoff, M. Enabling human-centered AI: A new junction and shared journey between AI and HCI communities. Interactions 2023, 30, 42–47.

7. Xu, W.; Gao, Z. Enabling human-centered AI: A methodological perspective. In Proceedings of the 2024 IEEE 4th International Conference on Human-Machine Systems (ICHMS), Ontario, ON, Canada, 15–17 May 2024.

8. Capel, T.; Brereton, M. What is Human-Centered about Human-Centered AI? A Map of the Research Landscape. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023.

9. Sison, A.J.G.; Daza, M.T.; Gozalo-Brizuela, R.; Garrido-Merchán, E.C. ChatGPT: More Than a “Weapon of Mass Deception” Ethical Challenges and Responses from the Human-Centered Artificial Intelligence (HCAI) Perspective. Int. J. Hum. Comput. Interact. 2023, 40, 4853–4872.

10. Casteleiro-Pitrez, J. Generative artificial intelligence image tools among future designers: A usability, user experience, and emotional analysis. Digital 2024, 4, 316–332.

11. Takaffoli, M.; Li, S.; Mäkelä, V. Generative AI in User Experience Design and Research: How Do UX Practitioners, Teams, and Companies Use GenAI in Industry? In Proceedings of the 2024 ACM Designing Interactive Systems Conference, Copenhagen, Denmark, 1–5 July 2024.

12. Peláez, C.; Solano, A.; Nuñez, J.; Castro, D.; Cardona, J.; Duque, J.; Espinosa, J.; Montaño, A.; De la Prieta, F. Designing User Experience in the Context of Human-Centered AI and Generative Artificial Intelligence: A Systematic Review. In Proceedings of the International Symposium on Distributed Computing and Artificial Intelligence, DCAI 2024, Salamanca, Spain, 26–29 June 2024.

13. Bingley, W.J.; Curtis, C.; Lockey, S.; Bialkowski, A.; Gillespie, N.; Haslam, S.A.; Worthy, P. Where is the human in human-centered AI? Insights from developer priorities and user experiences. Comput. Hum. Behav. 2023, 141, 107617.

14. Baabdullah, A.M.; Alalwan, A.A.; Algharabat, R.S.; Metri, B.; Rana, N.P. Virtual agents and flow experience: An empirical examination of AI-powered chatbots. Technol. Forecast. Soc. Chang. 2022, 181, 121772.

15. Gill, S.S.; Xu, M.; Patros, P.; Wu, H.; Kaur, R.; Kaur, K.; Buyya, R. Transformative effects of ChatGPT on modern education: Emerging Era of AI Chatbots. Internet Things Cyber-Phys. Syst. 2024, 4, 19–23.

16. Banh, L.; Strobel, G. Generative artificial intelligence. Electron. Mark. 2023, 33, 63.

17. Peruchini, M.; da Silva, G.M.; Teixeira, J.M. Between artificial intelligence and customer experience: A literature review on the intersection. Discov. Artif. Intell. 2024, 4, 4.

18. York, E. Evaluating ChatGPT: Generative AI in UX Design and Web Development Pedagogy. In Proceedings of the 41st ACM International Conference on Design of Communication, Orlando, FL, USA, 26–28 October 2023.

19. Liu, Y.; Siau, K. Generative Artificial Intelligence and Metaverse: Future of Work, Future of Society, and Future of Humanity. In AI-Generated Content, Proceedings of the First International Conference, AIGC 2023, Shanghai, China, 25–26 August 2023; Springer: Singapore, 2023.

20. Liu, F.; Zhang, M.; Budiu, R. AI as a UX Assistant. 27 October 2023. Available online: https://www.nngroup.com/articles/ai-roles-ux/ (accessed on 21 February 2024).

21. Qadri, R.; Shelby, R.; Bennett, C.L.; Denton, E. AI’s Regimes of Representation: A Community-centered Study of Text-to-Image Models in South Asia. In Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, Chicago, IL, USA, 12–15 June 2023.

22. Wang, X.; Attal, M.I.; Rafiq, U.; Hubner-Benz, S. Turning Large Language Models into AI Assistants for Startups Using Prompt Patterns. In Proceedings of the International Conference on Agile Software Development, Copenhagen, Denmark, 13–17 June 2022.

23. Shah, C.S.; Mathur, S.; Vishnoi, S.K. Continuance Intention of ChatGPT Use by Students. In Proceedings of the International Working Conference on Transfer and Diffusion of IT, Nagpur, India, 15–16 December 2023.

24. Li, J.; Cao, H.; Lin, L.; Hou, Y.; Zhu, R.; El Ali, A. User experience design professionals’ perceptions of generative artificial intelligence. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024.

25. Kim, P.W. A Framework to Overcome the Dark Side of Generative Artificial Intelligence (GAI) Like ChatGPT in Social Media and Education. IEEE Trans. Comput. Soc. Syst. 2023, 11, 5266–5274.

26. Asadi, A.R. LLMs in Design Thinking: Autoethnographic Insights and Design Implications. In Proceedings of the 2023 5th World Symposium on Software Engineering, Tokyo, Japan, 22–24 September 2023.

27. Sun, J.; Liao, Q.V.; Muller, M.; Agarwal, M.; Houde, S.; Talamadupula, K.; Weisz, J.D. Investigating explainability of generative AI for code through scenario-based design. In Proceedings of the 27th International Conference on Intelligent User Interfaces, Helsinki, Finland, 22–25 March 2022.

28. Oniani, D.; Hilsman, J.; Peng, Y.; Poropatich, R.K.; Pamplin, J.C.; Legault, G.L.; Wang, Y. Adopting and expanding ethical principles for generative artificial intelligence from military to healthcare. NPJ Digit. Med. 2023, 6, 225.

29. Shin, D.; Ahmad, N. Algorithmic nudge: An approach to designing human-centered generative artificial intelligence. Computer 2023, 56, 95–99.

30. Tlili, A.; Shehata, B.; Adarkwah, M.A.; Bozkurt, A.; Hickey, D.T.; Huang, R.; Agyemang, B. What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learn. Environ. 2023, 10, 15.

31. Fisher, J. Centering the Human: Digital Humanism and the Practice of Using Generative AI in the Authoring of Interactive Digital Narratives. In Proceedings of the International Conference on Interactive Digital Storytelling, Kobe, Japan, 11–15 November 2023.

32. Mao, Y.; Rafner, J.; Wang, Y.; Sherson, J. A Hybrid Intelligence Approach to Training Generative Design Assistants: Partnership Between Human Experts and AI Enhanced Co-Creative Tools. Front. Artif. Intell. Appl. 2023, 368, 108–123.

33. Guo, M.; Zhang, X.; Zhuang, Y.; Chen, J.; Wang, P.; Gao, Z. Exploring the Intersection of Complex Aesthetics and Generative AI for Promoting Cultural Creativity in Rural China after the Post-Pandemic. In AI-Generated Content, Proceedings of the First International Conference, AIGC 2023, Shanghai, China, 25–26 August 2023; Springer Nature: Singapore, 2023; pp. 313–331.

34. Geerts, G. A design science research methodology and its application to accounting information systems research. Int. J. Account. Inf. Syst. 2011, 12, 142–151.

35. Adikari, S.; McDonald, C.; Campbell, J. A design science framework for designing and assessing user experience. In Proceedings of the Human-Computer Interaction, Design and Development Approaches: 14th International Conference, HCI International, Orlando, FL, USA, 9–14 July 2011.

36. Hollander, M.; Wolfe, D. Nonparametric Statistical Methods: Solutions Manual to Accompany; Wiley-Interscience: Hoboken, NJ, USA, 1999.

37. IDEO. IDEO Method Cards: 51 Ways to Inspire Design; William Stout: San Francisco, CA, USA, 2003.

38. Friedman, B.; Hendry, D. The envisioning cards: A toolkit for catalyzing humanistic and technical imaginations. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 30 April–5 May 2012.

39. Friedman, B.; Hendry, D. Value Sensitive Design: Shaping Technology with Moral Imagination; MIT Press: Boston, MA, USA, 2019.

40. Sarsenbayeva, Z.; van Berkel, N.; Hettiachchi, D.; Tag, B.; Velloso, E.; Goncalves, J.; Kostakos, V. Mapping 20 years of accessibility research in HCI: A co-word analysis. Int. J. Hum.-Comput. Stud. 2023, 175, 103018.

41. Hassan, A. Factors Affecting the Use of ChatGPT in Mass Communication. In Emerging Trends and Innovation in Business and Finance; Springer Nature: Singapore, 2023; pp. 671–685.

42. Brandtzaeg, P.B.; You, Y.; Wang, X.; Lao, Y. “Good” and “Bad” Machine Agency in the Context of Human-AI Communication: The Case of ChatGPT. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 23–28 July 2023.

43. Rana, N.P.; Pillai, R.; Sivathanu, B.; Malik, N. Assessing the nexus of Generative AI adoption, ethical considerations and organizational performance. Technovation 2024, 135, 103064.

44. Creely, E. The possibilities, limitations, and dangers of generative AI in language learning and literacy practices. In Proceedings of the International Graduate Research Symposium 2023, Hanoi, Vietnam, 27–29 October 2023.

45. Weisz, J.D.; Muller, M.; He, J.; Houde, S. Toward General Design Principles for Generative AI Applications. 13 January 2023. Available online: https://arxiv.org/abs/2301.05578 (accessed on 17 February 2024).

46. Sarraf, S.; Kar, A.K.; Janssen, M. How do system and user characteristics, along with anthropomorphism, impact cognitive absorption of chatbots–Introducing SUCCAST through a mixed methods study. Decis. Support Syst. 2024, 178, 114132.

47. Perri, L. What’s New in the 2023 Gartner Hype Cycle for Emerging Technologies. 23 August 2023. Available online: https://www.gartner.com/en/articles/what-s-new-in-the-2023-gartner-hype-cycle-for-emerging-technologies (accessed on 1 July 2024).

48. Harley, H. Ideation in Practice: How Effective UX Teams Generate Ideas. 29 October 2017. Available online: https://www.nngroup.com/articles/ideation-in-practice/ (accessed on 19 November 2024).

49. Object Management Group. Essence–Kernel and Language for Software Engineering Methods; OMG: Needham, MA, USA, 2018.

50. Feng, K.K.; Li, T.W.; Zhang, A.X. Understanding collaborative practices and tools of professional UX practitioners in software organizations. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023.

51. Wagemans, J.; Elder, J.H.; Kubovy, M.; Palmer, S.E.; Peterson, M.A.; Singh, M.; von der Heydt, R. A century of Gestalt psychology in visual perception: I. Perceptual grouping and figure–ground organization. Psychol. Bull. 2012, 138, 1172–1217.

52. Johannesson, P.; Perjons, E. An Introduction to Design Science; Springer: Stockholm, Sweden, 2014.

53. R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2022.

54. Nunnally, J. Psychometric Theory, 2nd ed.; McGraw-Hill: New York, NY, USA, 1978.

55. Jolliffe, I.T. Principal component analysis for special types of data. In Principal Component Analysis; Springer: New York, NY, USA, 2002; pp. 338–372.

56. Shneiderman, B. Human-Centered AI; Oxford University Press: Oxford, UK, 2022.

57. Elliot, A.J.; Maier, M.A. Color psychology: Effects of perceiving color on psychological functioning in humans. Annu. Rev. Psychol. 2014, 65, 95–120.

58. Kress, G.; van Leeuwen, T. Colour as a semiotic mode: Notes for a grammar of colour. Vis. Commun. 2002, 1, 343–368.

59. Roy, R.; Warren, J.P. Card-based design tools: A review and analysis of 155 card decks for designers and designing. Des. Stud. 2019, 63, 125–154.

60. Petri, G.; von Wangenheim, C.G.; Borgatto, A.F. MEEGA+, systematic model to evaluate educational games. In Encyclopedia of Computer Graphics and Games; Springer International Publishing: Cham, Switzerland, 2024; pp. 1112–1119.

61. Minge, M.; Thüring, M. The meCUE questionnaire (2.0): Meeting five basic requirements for lean and standardized UX assessment. In Proceedings of the Design, User Experience, and Usability: Theory and Practice: 7th International Conference, DUXU 2018, Held as Part of HCI International 2018, Las Vegas, NV, USA, 15–20 July 2018.

| Code | UX Guideline | Description |

|---|---|---|

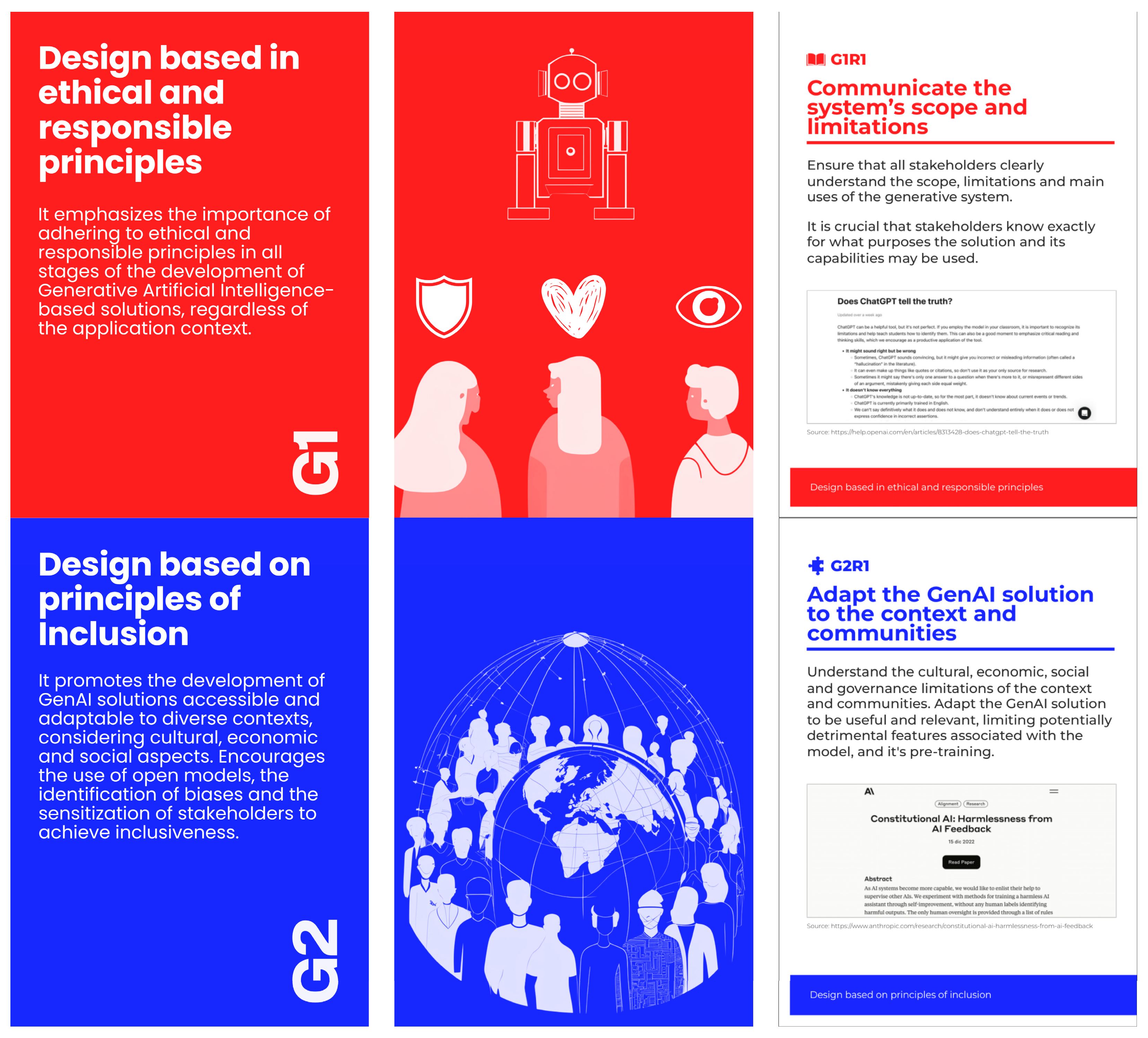

| G1 | Design Based on Ethical and Responsible Principles | This guideline should be considered a cross-cutting factor in the development of any solution based on GenAI, regardless of its application context [41]. Incorporating a GenAI-based solution or evolving an existing one should prompt us to question how that solution or its evolution will contribute to creating value for the UX [42]. A design grounded in ethical and responsible principles contributes to creating value in UX by enhancing the user’s perception of transparency in GenAI-based assistants. It enables mechanisms that clearly communicate the solution’s capabilities, limitations, and potential risks, prevents misunderstandings, and includes bias mitigation as a key element that requires traceability and control throughout the human–AI interaction process [43]. |

| G2 | Design Based on Principles of Inclusion | A design based on the principles of inclusion ensures that GenAI-based assistants can be used and understood by a diverse and representative audience. This is achieved by creating solutions adaptable to various accessibility needs, addressing several disabilities within the population. Furthermore, it emphasizes the importance of considering cultural and linguistic variations to ensure their utility for a global and diverse audience and mitigating potential biases and prejudices [44]. This approach promotes the reduction in barriers to the adoption of GenAI in disadvantaged contexts, particularly in emerging economies, recognizing the need to advance toward models whose development is not constrained by data derived predominantly from customs, cultures, and contexts belonging to industrialized countries [21]. |

| G3 | Design that Promotes AI–Human Collaboration | This guideline responds to the significant variety of human tasks that can be supported by GenAI-based solutions at various stages, tailored to specific needs throughout their execution [45]. Grounded in the principle of HCAI, this guideline adheres to the premise that AI solutions should exist to augment capabilities, not replace them. In this context, various types of human activities can be greatly enhanced through the mediation of GenAI-based solutions in their processes. |

| G4 | Design Oriented Toward Personalization of the AI–Human Experience | GenAI-based solutions offer the potential to usher in a new era of information technologies, with the capacity to scale toward a more natural and personalized human–computer experience. A key factor enabling this evolution is the anthropomorphism resulting from human–GenAI interaction [46]. It is crucial, however, to ensure that users remain aware that they are interacting with technology, not another human (a conscious being). This guideline highlights the challenge of value tension [38], which must be considered in UX design for such solutions. |

| G5 | Design Based on Trust and Reliability in GenAI–Human Interaction | In the context of emerging technologies, such as GenAI-based solutions, trust and reliability are critical factors for their adoption and evolution. Trust arises when a system delivers useful results, a high quality, and is verifiable [29]. It is essential for users to identify issues such as hallucinations, biases, and errors and evaluate whether these problems are manageable or acceptable. This capability allows users to adjust their expectations, maintain control, and ensure informed use of the system. In contrast, reliability refers to the system’s consistency and predictability under different conditions. A reliable system not only reduces errors but also strengthens user perception by demonstrating safe and consistent performance. Both concepts—trust and reliability—are essential pillars for ensuring effective and sustainable interaction in GenAI-based solutions. In this regard, the adoption of GenAI follows a cycle similar to Gartner’s Hype Cycle for emerging technologies. Currently, many GenAI solutions are at a peak of inflated expectations [47], where the initial enthusiasm of users contrasts with their difficulties in recognizing the limitations and potential risks of these technologies. However, as these solutions progress toward maturity, trust needs to be established in human–AI interactions to determine their long-term success and adoption. |

| G6 | Design with Consideration of Inaccuracy and Variability | The results generated by GenAI-based solutions are far from infallible. This may significantly deviate from the originally estimated or expected results. On the one hand, responses may be subject to inaccuracies due to the inherent limitations of the algorithms, data, and architectures underlying these solutions. On the other hand, users should be aware that GenAI systems can provide responses that vary in quality and form, even if the input provided by the user remains unchanged [45]. |
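
The variability described under G6 can be made concrete with a small sketch. It assumes, as an illustration only, that responses are produced by sampling from a probability distribution over candidate tokens, which is one common source of run-to-run variation in generative models. The vocabulary, the scores in `logits`, and the helper `sample_token` are hypothetical and are not taken from the toolkit.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Draw one index from temperature-scaled softmax weights."""
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

# Hypothetical next-word scores for one fixed, unchanged prompt.
vocab = ["reliable", "useful", "uncertain"]
logits = [2.0, 1.5, 0.5]

rng = random.Random(0)
draws = [vocab[sample_token(logits, 1.0, rng)] for _ in range(10)]
print(draws)  # the same input can yield different words across draws

# A very low temperature concentrates almost all weight on the top score.
print(vocab[sample_token(logits, 0.01, rng)])
```

Under these assumptions, an interface that exposes or fixes such sampling settings changes how much variation users see, which is why G6 asks designers to make that variation visible to users.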

| UX Guideline | Associated Recommendations |
|---|---|
| G1 | G1R1. Communicate the System’s Scope and Limitations: Ensure that stakeholders are aware of the system’s scope, limitations, and primary intended uses. Clearly specify the purposes of the GenAI system. The system should be prepared to assist users when they inquire about its capabilities. G1R2. Suggest Limits and Offer Assistance: The system should disclose its own limitations and deficiencies when inaccuracies are detected in the generated results. This can foster trust and reassurance in users. If the system fails, it should provide users with the option to be transferred to human customer support. G1R3. Communicate Possibilities of Emergent Behaviors: If your system allows for emergent behaviors, ensure that users understand that the GenAI-based system may provide feedback on topics for which it has not been explicitly trained. G1R4. Monitor and Mitigate Biases and Inappropriate Content: Test, monitor, and track the results generated from user interactions with your GenAI solution, paying attention to outcomes that may exhibit potential biases, prejudices, or even toxic content or misinformation. If such issues are identified, various mechanisms should be explored to mitigate their impact, both at the system level and through communication with stakeholders. G1R5. Document Limitations and Known Issues of the Model: Ensure that you identify and document the potential limitations and known issues associated with the model used for the development of your GenAI-based solution. Investigate whether there is verifiable information about similar solutions that have exhibited biases, prejudices, or negative stereotypes when using the same model. G1R6. Establish Ethical Boundaries and Detect Bias in Responses: Identify biased response patterns related to racial, gender, ethnic, or political identities. Set clear boundaries for the behavior of the AI model, particularly concerning sensitive content and language. Prioritize ethical guidelines that promote more holistic decision-making, incorporating ethics, morality, and fairness. G1R7. Raise Stakeholder Awareness of the Real Capabilities of AI: Educate stakeholders throughout the lifecycle, from the development to the eventual launch of the GenAI-based solution, providing a realistic and informed perspective on how the system functions. This will help them avoid misinformation, false expectations, and exaggerations about the capabilities of these types of solutions. G1R8. Clearly Communicate the Scope of the Solution: Clearly articulate the solution’s scope at the outset of the interaction to prevent user frustration when the system encounters requests that are beyond its capabilities. Ensure that the organization transparently informs users that they are engaged with a GenAI-based system. |
| G2 | G2R1. Adapt the GenAI Solution to the Context and Communities: Acknowledge communities and their context by understanding their cultural, economic, social, and governance limitations. Consider how to tailor your GenAI-based solution to be useful in this context and strive to mitigate any features of the underlying model and its pre-training that could undermine its primary purpose. G2R2. Assess the Need for Multimodality to Ensure Accessibility: Although incorporating multimodality into GenAI solutions naturally entails a significantly higher cost, its necessity within a specific context and for specific communities should be evaluated. Determine the potential modalities required to ensure accessibility for most stakeholders. If multimodality is not feasible in the initial versions due to various factors, ensure that the solution’s architecture is sufficiently modular, flexible, and economically viable to accommodate future evolutions of GenAI systems. G2R3. Use Open and Adaptable Models: Encourage the use of open models that allow for greater adaptability to a knowledge base aligned with the context and communities targeted by your GenAI solution. G2R4. Communicate Evidence of Biases and Prejudices in GenAI Responses: Upon identifying potential biases and prejudices in your GenAI solution’s responses that could affect or harm users, such as those related to gender ideology, sexual preferences, religious beliefs, or ethnic and social discrimination, promptly communicate these issues to stakeholders. If possible, implement corrective actions to evolve the solution to minimize this type of response. G2R5. Promote Inclusion and Consideration of GenAI Developers: Raise awareness among stakeholders to adopt an attitude of inclusion and consideration toward those responsible for developing the GenAI solution. Encourage an understanding of the limitations and risks inherent in the current use and adoption of such technologies. This approach fosters relationships that support the system’s evolution through a more inclusive design process that involves all stakeholders. G2R6. Provide Accessible Alternatives to Generated Content: Offer alternatives to generated content, such as text descriptions of images. These alternatives are essential to ensure that all users, regardless of their abilities, can access and understand the information provided by the AI. Adopt recognized accessibility standards, such as the Web Content Accessibility Guidelines (WCAG). G2R7. Ensure Accessibility Technologies and Navigation Options: Consider assistive technologies and alternative navigation options to ensure that the experience is accessible to users with functional differences. Ensure that multimodal information is accessible to all users by providing options such as subtitles for videos, audio descriptions for images, contrast and readable font settings, adjustable speed of the information being presented, and flexible interaction methods with different resources. |
| G3 | G3R1. Enable Co-Editing of Work Outputs: Ensure that your GenAI assistant promotes the co-editing of work outputs, allowing users to enhance them with the system’s assistance. G3R2. Identify Opportunities for Human–AI Collaboration: Adapt workflows and cooperative dynamics by identifying points of the value chain where the use of a GenAI assistant can provide significant advantages. Define work outputs amenable to generative processes, ensuring that the responsible use of the solution remains a core principle throughout its implementation. G3R3. Provide Guidance for Crafting Effective Prompts: Offer users guidance on how to improve the creation of prompts to generate work outputs that are more consistent and aligned with users’ needs. The solution should provide options and support to help users understand how to define a clear prompt, identify relevant keywords, and establish an appropriate overall structure for writing prompts, tailored to their needs and the solution’s capabilities. G3R4. Implement Real-Time Bidirectional Feedback: Incorporate functionalities in your solution that enable real-time bidirectional feedback during human–AI interactions, allowing for the continuous improvement of work outputs generated by the GenAI assistant. G3R5. Provide Contextual Help for New Features: Provide users with contextual assistance when they are about to use a feature for the first time. |
| G4 | G4R1. Enable Context and Process Flow Configuration: Provide users with the ability to configure factors related to the application context and process flow within the GenAI assistant, tailoring the generation of work outputs to meet their expectations. G4R2. Enable Interaction History Management: Allow users to retain their history of previous interactions with the GenAI assistant, providing them with the ability to manage these records by deleting or preserving those deemed relevant and necessary for their needs and goals. G4R3. Provide Control Over Work Output Parameters: Allow users to control parameters related to generative work outputs according to their needs. For example, users should be able to specify the number of images they wish to produce or the number of alternatives they wish to generate for a specific planning strategy, among other needs, depending on the context of the GenAI assistant solution. G4R4. Personalize the Experience Through Individual Parameters and Preferences: Facilitate human–AI interaction by promoting the personalization of the experience through a set of user-specific information and basic configuration parameters (e.g., personal information, colors, interface layout, images, audio, etc.). Additionally, enable the modeling of a structure for more personalized and user-centered generative response styles tailored to the needs of each user. G4R5. Adapt the Communication Style to Context and User Profile: Ensure that the GenAI assistant offers a communication style in human–GenAI interactions that is adaptable and consistent with both the application context and the specific characteristics of the user profile for whom the solution is intended. G4R6. Control and Monitor Anthropomorphization and Clarify the Nature of AI: Regulate and monitor the anthropomorphization in human–GenAI interactions, reminding users that they are not interacting with a human being (raising their awareness). As such, AI should not be attributed with intelligence, sensitivity, or empathy, and its primary purpose is to assist users more efficiently. |
| G5 | G5R1. Guide Users in Identifying Expected Benefits: Support the user in defining the specific benefits anticipated to arise from using a GenAI assistant in their processes, workflows, and work styles. In addition, help them identify the key stages where the solution should be applied to maximize its impact. G5R2. Disclose the Sources of Information Used: Inform stakeholders of the original sources of information on which the GenAI system bases the responses it provides to the user. G5R3. Set Realistic Expectations About Capabilities and Limitations: Communicate both the capabilities and limitations of GenAI-based solutions to stakeholders to align user expectations with the system’s actual performance. This approach helps build consistent trust in the system’s abilities and lays the foundation for future developments that can progressively enhance user confidence in the GenAI system. G5R4. Explain the Generation Processes and Mechanisms: Clearly and simply communicate to users the processes and mechanisms used by AI models that enable the generation of responses or work outputs. G5R5. Promote Safe Practices and Protect Sensitive Data: Inform users about the importance of not using sensitive data during interactions with the GenAI system to ensure data security and prevent practices such as phishing, privacy violations, confidential information leaks, and other security breaches. It is crucial that users are aware of how to interact safely with a GenAI-based assistant and understand the risks associated with the inadvertent introduction of sensitive data. G5R6. Enable Persistence of Information in Interaction Sessions: The solution should be capable of maintaining persistence in the information and elements generated during human–GenAI interaction sessions, thereby enhancing the optimization of prompt engineering. G5R7. Provide Users Control Over Personal Data: Offer intuitive interfaces within the GenAI assistant that allow users to manage their personal information. This includes options to opt out of personal data collection and mechanisms to ensure continuous and direct control over personal data. G5R8. Scale the Solution While Preserving Privacy and Quality: Implement mechanisms that allow the GenAI assistant to scale to a larger user base without compromising data privacy or the quality of the generated responses. |
| G6 | G6R1. Encourage Critical Evaluation of Generated Results: Raise user awareness about the importance of critically analyzing the work outputs generated by GenAI mediation and assessing their accuracy and validity. G6R2. Provide Multiple Generative Response Options: Whenever possible, offer users a set of generative response options produced by the solution, allowing them to select the one that best aligns with their interests and needs. G6R3. Provide Predefined Questions Aligned with the Scope: Consider offering users a predefined list of questions aligned with the solution’s scope, allowing users to quickly obtain information. However, note that predefined questions may limit the ability to explore all the system’s options. |
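
As one possible reading of recommendations G6R2 (offer several response options) and G4R3 (let users choose how many alternatives to generate), the sketch below collects a user-chosen number of candidate responses for a single prompt. It is a minimal illustration, not part of the toolkit: `generate_options`, `stub_generate`, and the three response styles are hypothetical, and `stub_generate` merely stands in for a real model call.

```python
from typing import Callable, List

def generate_options(prompt: str, n: int,
                     generate: Callable[[str, int], str]) -> List[str]:
    """Return up to n distinct candidate responses for one prompt."""
    if n < 1:
        raise ValueError("n must be at least 1")
    options: List[str] = []
    for seed in range(n):
        candidate = generate(prompt, seed)
        if candidate not in options:  # avoid showing duplicate options
            options.append(candidate)
    return options

def stub_generate(prompt: str, seed: int) -> str:
    """Hypothetical stand-in for a model call; output varies with seed."""
    styles = ["Short answer", "Step-by-step answer", "Answer with examples"]
    return f"{styles[seed % len(styles)]} to: {prompt}"

# The user asks for three alternatives (G4R3) and picks among them (G6R2).
options = generate_options("How do I reset my password?", 3, stub_generate)
for number, text in enumerate(options, start=1):
    print(f"Option {number}: {text}")
```

Passing the generator in as a parameter keeps the option-handling logic independent of any particular model or vendor API.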

| Factor | Code | Question |
|---|---|---|
| Usability | P1 | The visual design of the toolkit facilitates the rapid identification of guidelines for GenAI solutions. |
| Usability | P2 | The instructions and examples provided in the toolkit are clear and easily understandable. |
| Usability | P3 | Learning to use the toolkit was straightforward and intuitive for me. |
| Usability | P4 | I believe that most individuals can quickly learn to use this toolkit effectively. |
| Usability | P5 | The recommendations and guidelines provided by the toolkit are clear and comprehensible within the specific context of UX design for GenAI. |
| Usability | P6 | The fonts (size and style) used in the toolkit are easily readable. |
| Usability | P7 | The structure of the toolkit allows for easy navigation across different UX aspects specific to GenAI. |
| User Experience | P8 | I am satisfied with how the toolkit assisted me in addressing specific UX challenges. |
| User Experience | P9 | The use of the toolkit was a pleasant experience, free from frustration. |
| User Experience | P10 | I find the content of the toolkit to be relevant and useful for my work. |
| User Experience | P11 | The toolkit provides concrete and applicable examples of how to implement UX guidelines across various types of GenAI solutions. |
| User Experience | P12 | Using the toolkit enabled me to enhance the accuracy of the defined requirements. |
| User Experience | P13 | The guidelines and examples provided by the toolkit are useful and applicable to my specific needs. |
| User Experience | P14 | The toolkit promotes collaboration and discussion within the work team. |
| User Experience | P15 | If possible, I would use this toolkit on a daily basis to tackle UX design projects for GenAI. |
| User Experience | P16 | I would choose the toolkit for use in future projects related to GenAI. |
| User Experience | P17 | I prefer using the physical version of the toolkit over the digital version. |
| Utility | P18 | The toolkit helped me identify critical UX aspects that are unique to GenAI solutions. |
| Utility | P19 | The toolkit’s recommendations were directly applicable to the UX challenges encountered in the projects. |
| Utility | P20 | The use of the toolkit allowed me to save time in identifying and resolving GenAI-specific UX problems. |
| Utility | P21 | The toolkit provides valuable guidance on how to effectively communicate the capabilities and limitations of GenAI to end-users. |
| Utility | P22 | The toolkit was useful in anticipating and mitigating potential ethical issues in UX design for GenAI. |
| Utility | P23 | The toolkit is a valuable resource for enhancing UX in GenAI projects. |
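
The grouping of items into factors in the questionnaire above can be expressed as a small scoring routine. The sketch below is illustrative only: the item-to-factor mapping (P1 to P7, P8 to P17, P18 to P23) comes from the table, whereas the 1 to 5 agreement scale, the function name `factor_means`, and the two example respondents are assumptions and do not reproduce the study's data or analysis.

```python
from statistics import mean

# Item-to-factor mapping taken from the questionnaire table.
FACTOR_ITEMS = {
    "Usability": [f"P{i}" for i in range(1, 8)],         # P1 to P7
    "User Experience": [f"P{i}" for i in range(8, 18)],  # P8 to P17
    "Utility": [f"P{i}" for i in range(18, 24)],         # P18 to P23
}


def factor_means(responses: list[dict[str, int]]) -> dict[str, float]:
    """Average all item scores belonging to each factor across respondents."""
    return {
        factor: mean(r[item] for r in responses for item in items)
        for factor, items in FACTOR_ITEMS.items()
    }


# Hypothetical responses on an assumed 1-5 agreement scale (not study data).
example = [
    {f"P{i}": 4 for i in range(1, 24)},
    {f"P{i}": 5 for i in range(1, 24)},
]
print(factor_means(example))
```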

| Component | Usability (cumulative % of variance) | User Experience (cumulative % of variance) | Utility (cumulative % of variance) |
|---|---|---|---|
| 1 | 61.5 | 45.4 | 76.9 |
| 2 | 89.3 | 70.7 | 94.5 |
| 3 | 97.4 | 83.8 | 98.1 |
| 4 | 99.6 | 92.3 | 99.4 |
| 5 | 100.0 | 99.0 | 100.0 |
| 6 | 100.0 | 100.0 | 100.0 |
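
To make the cumulative figures easier to read, the short sketch below recovers each component's individual share of variance by differencing the cumulative column, and counts how many components are needed to reach a given share. The percentages are copied from the table; the helper names and the 80% threshold are illustrative assumptions, not a criterion stated by the authors.

```python
from itertools import accumulate

# Cumulative percentages of variance copied from the table.
CUMULATIVE = {
    "Usability": [61.5, 89.3, 97.4, 99.6, 100.0, 100.0],
    "User Experience": [45.4, 70.7, 83.8, 92.3, 99.0, 100.0],
    "Utility": [76.9, 94.5, 98.1, 99.4, 100.0, 100.0],
}


def per_component(cumulative: list[float]) -> list[float]:
    """Recover each component's own share by differencing the cumulative series."""
    previous = [0.0] + cumulative[:-1]
    return [round(c - p, 1) for c, p in zip(cumulative, previous)]


def components_needed(cumulative: list[float], threshold: float) -> int:
    """Smallest number of components whose cumulative share reaches the threshold."""
    return next(i for i, c in enumerate(cumulative, start=1) if c >= threshold)


for factor, series in CUMULATIVE.items():
    shares = per_component(series)
    # Summing the shares back up reproduces the cumulative column.
    assert [round(v, 1) for v in accumulate(shares)] == series
    # 80.0 is a hypothetical threshold chosen only for illustration.
    print(factor, shares, components_needed(series, 80.0))
```

Read this way, the first component alone accounts for well over half of the variance for Usability and Utility, while User Experience is spread across more components.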

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Peláez, C.A.; Solano, A.; Ospina, J.A.; Espinosa, J.C.; Montaño, A.S.; Castillo, P.A.; Duque, J.S.; Castro, D.A.; Nuñez Velasco, J.M.; De la Prieta, F. Toolkit for Inclusion of User Experience Design Guidelines in the Development of Assistants Based on Generative Artificial Intelligence. Informatics 2025, 12, 10. https://doi.org/10.3390/informatics12010010
