Abstract

Background/Objectives: The digitization of care opens up new opportunities to support family caregivers, who play a key role in home care. While telemedicine applications have already shown initial relief effects, AI-supported chatbots are increasingly coming into focus as an innovative form of digital support. The aim of this study was to build on an earlier study on the integration of telemedicine into home care and to conduct a complementary study on AI-based chatbots to analyze their acceptance, perceived benefits, and potential barriers from the perspective of family caregivers. Methods: The study comprises two consecutive online surveys with a total of n = 62 family caregivers. The first study assessed the use and acceptance of telemedicine systems; the second complementary survey examined attitudes toward AI-supported chatbots. Both questionnaires were developed based on a systematic literature review and in accordance with the Technology Acceptance Model (TAM). The dimensions of user-friendliness, data protection, communication support, emotional relief, and training needs were among those recorded. The data were evaluated using descriptive statistics, including comparative analyses between the two studies. Results: The results show that family caregivers generally have a positive attitude toward digital health solutions, but at the same time identify specific barriers. While technical barriers and privacy concerns dominated the telemedicine study, the AI results place greater emphasis on psychosocial factors. It also became clear that participants assumed that chatbots would be more acceptable if they were designed to be empathetic and dialogue-oriented. A comparison of the two data sets shows that the perceived benefits of digital systems are shifting from functional support to interactive, emotional support. 
Conclusions: The results suggest that AI-powered chatbots could offer significant added value to family caregivers by combining information sharing, emotional support, and self-reflection. In doing so, they expand the focus of traditional telemedicine to include a communicative and psychosocial dimension. Future research should examine the actual user experience and effectiveness of such systems in longitudinal and qualitative designs. Despite limitations in terms of sample representativeness and hypothetical usage estimates, the study makes an important contribution to the further development of digital care concepts and the ethically responsible integration of AI into home care.

1. Introduction

The ongoing digitization of healthcare and the growing need for flexible care models have significantly accelerated the development and implementation of telemedicine technologies in recent years [1,2,3]. Telemedicine offers the opportunity to optimize home care for people in need of care by facilitating access to medical services, overcoming time and space barriers, and improving continuity of care [4,5,6]. Especially in rural or underserved regions, telemedicine can help ensure access to health care and reduce the burden on nursing staff and family caregivers [7,8,9,10].

However, the successful implementation of these solutions requires an understanding of how family caregivers accept and use such technologies. Three groups of factors can significantly limit their use: technical barriers, such as inadequate infrastructure or a lack of digital skills; psychological barriers, such as uncertainty in using the technology or concerns about data protection; and social factors, such as a lack of support from family or professionals [10,11,12,13,14].

This study builds on an earlier investigation into the integration of telemedicine into home care, which identified specific barriers and acceptance factors [1,2,3,4,5]. Based on these findings, the present study was designed to shift the focus from technological infrastructure to communicative interaction. While telemedicine applications are primarily focused on the exchange of medical data and remote monitoring of health parameters, AI-supported chatbots represent a novel form of digital support based on dialogue-based interaction and artificial intelligence [7,8]. These systems can provide both informational and emotional support by responding to individual needs, enabling low-threshold counseling, and being available to family caregivers around the clock as digital conversation partners [9].

Research on telemedicine interventions is theoretically grounded in technology acceptance models. The Technology Acceptance Model (TAM) postulates that perceived usefulness and perceived ease of use are key predictors of the use of new technologies [15]. The Unified Theory of Acceptance and Use of Technology (UTAUT) adds that social influence, performance expectancy, effort expectancy, and facilitating conditions influence usage [16]. These models have been confirmed in numerous studies on the validation and acceptance of telemedicine among patients and family caregivers [17,18,19,20].

This paper pays particular attention to AI-supported chatbots as a support for telemedicine applications. Chatbots can provide both technical and emotional support to family caregivers, convey information in an understandable way, and improve communication with medical staff [21,22,23]. They thus offer the potential to overcome existing barriers, strengthen the self-efficacy of caregivers, and sustainably improve the quality of home care [24,25,26]. Previous studies suggest that AI-supported dialogue systems can strengthen the self-efficacy of family caregivers, facilitate information retrieval, and promote psychosocial relief [10]. Nevertheless, uncertainties remain regarding the acceptance, data protection, trust building, and emotional quality of such systems.

Against this background, the present study aims to examine the acceptance, perceived benefits, and potential applications of AI-supported chatbots in home care from the perspective of family caregivers. The study thus expands on previous findings from telemedicine research by adding a communicative and interactive dimension to digital support. In doing so, it contributes to a better understanding of how digital technologies can provide not only functional but also emotional and social relief for family caregivers.

The aim of this article is to systematically analyze the challenges, uses, and potential of telemedicine technologies for family caregivers. To this end, two empirical online surveys are combined:

Study 1: Investigation of the use of telemedicine and the challenges of its implementation.

Study 2: Focus on the integration of AI-supported chatbots to facilitate the use of telemedicine technologies and support family caregivers.

By combining empirical results with existing literature, practice-relevant recommendations for action will be derived and the limitations of previous implementations will be highlighted.

2. Materials and Methods

2.1. Study Design

This paper is based on a two-stage research design conducted as part of an ongoing study on digital support for family caregivers in home care. The aim of the research was to first examine the acceptance and barriers to telemedicine applications (Study 1) and then to investigate the perception and potential uses of AI-supported chatbots (Study 2).

Both surveys followed a quantitative-descriptive approach and were methodologically coordinated, but they were conducted separately and addressed different dimensions of digital support. While Study 1 focused on the technological and organizational integration of telemedicine systems, Study 2 focused on the communicative and interactive dimension of digital counseling through AI-based dialogue systems.

Combining both data sets provides an integrated understanding of how family caregivers perceive and evaluate digital technologies on a technical, emotional, and communicative level.

2.2. Target Group and Setting

The target group comprises family caregivers who are participating in a care course designed specifically for family members. The surveys were conducted as part of this course to ensure a comparable structure and enable a comparison of the results:

Survey 1: Investigation of the use of telemedicine and the challenges of its implementation (January 2025, 45 participants, 23 completed questionnaires, response rate: 51.1%).

Survey 2: Focus on the integration of AI-supported chatbots to facilitate the use of telemedicine technologies and support family caregivers (September 2025, 45 participants, 39 fully completed questionnaires, response rate: 86.7%).
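As a quick check of the figures above, the response rates follow directly from the number of completed questionnaires divided by the number of course participants, and the combined sample is the sum of the completed questionnaires (the symbol names are introduced here for illustration only):

```latex
\[
\text{RR}_{1} = \frac{23}{45} \approx 51.1\,\%, \qquad
\text{RR}_{2} = \frac{39}{45} \approx 86.7\,\%, \qquad
n_{\text{total}} = 23 + 39 = 62
\]
```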

Since caregiving courses can be repeated regularly, it is theoretically possible that individual participants took part in both studies, but this cannot be verified due to the anonymous nature of the surveys.

Inclusion criteria: Family caregivers who actively care for a loved one, are of legal age, and are willing to participate in the anonymous survey.

Exclusion criteria: Professional caregivers and individuals who do not provide home care.

Table 1 lists the demographic data of the participants from Study 1.

Table 1.

Demographic characteristics and caregiving experience of survey participants (study 1).

Table 2 lists the demographic data of the participants from Study 2.

Table 2.

Demographic characteristics and caregiving experience of survey participants (study 2).

Interpretation of Demographic Data

In Study 1, participants were predominantly female and had a wide age distribution, with most participants between 30 and 59 years old. The majority of participants had vocational training or a college degree. Caregiving experience varied, with a significant proportion of participants having more than three years of caregiving experience.

In Study 2, participants were also predominantly female, and the age distribution showed a concentration in the 40–49 age group. The majority of participants had vocational training or a university degree. These demographic data show that the participants in both studies were family caregivers who are actively involved in home care.

2.3. Development of the Surveys

- Phase 1: Theoretical conceptualization and literature review

The items for both surveys were developed on the basis of a systematic literature review on the topics of digital care support, telemedicine, acceptance research, and AI-based assistance systems. The literature review included scientific articles, current research results, and specialist literature in order to identify relevant challenges and theoretically sound solutions.

The following sources, among others, were used for telemedicine:

- Beckers and Strotbaum (2021) on the integration of telemedicine into nursing [1].

- Hübner and Egbert (2017) on telecare [2].

- Hoffmann et al. (2024) on needs-based telemedicine from the patient’s perspective [3].

- Juhra (2023) on telemedicine in orthopedics [4].

- Radic and Radic (2020) on user acceptance of digitally supported care [5].

- Gagnon et al. (2006) on the implementation of telemedicine in rural and remote regions [27].

- Krick et al. (2019) on the acceptance and effectiveness of digital care technologies [28].

- Weber et al. (2022) on challenges and solutions for digital technology in outpatient care [29].

- Kitschke et al. (2024) on determinants of telemedicine implementation in nursing homes [30].

The following sources, among others, were used for the AI-supported chatbots:

- Wang et al. (2021) on the development of emotional-affective chatbots [23].

- Zheng et al. (2025) on the adaptation of emotional support by LLM-supported chatbots [26].

- Perez et al. (2022) on the technology acceptance of mobile applications to support nursing staff [21].

- Schinasi et al. (2021) on attitudes and perceptions of telemedicine during the COVID-19 pandemic [22].

- Devaram (2020) on the emotional intelligence of empathetic chatbots for mental well-being [31].

- Yang et al. (2025) on ChatWise, a strategy-driven chatbot for improving cognitive support in older adults [32].

- Shi et al. (2025) on mapping the needs of caregivers and designing AI chatbots for mental support of Alzheimer’s and dementia caregivers [33].

- Anisha et al. (2024) on evaluating the potential and pitfalls of AI-powered conversational agents as virtual health caregivers [34].

- Fan et al. (2021) on the use of self-diagnosis health chatbots in real-world settings [35].

Key models of technology acceptance, such as the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT), were also taken into account [15,16].

- Phase 2: Item formulation

The items were predominantly formulated as five-point Likert scales (“does not apply at all”–“applies completely”) in order to capture intensities and perceptions in a differentiated manner. In addition, multiple-choice questions (e.g., on the use of support services) and free text fields were used to allow for individual reflections and personal additions.

- Study 1—Telemedicine

The questionnaire for the first survey comprised a total of 52 items relating to the following topics:

- General experience of using digital technologies: Recording participants’ previous experience with digital technologies and their use.

- Acceptance of and trust in telemedicine systems: Assessment of willingness to use telemedicine and trust in these technologies.

- Perceived barriers: Identification of obstacles such as data protection concerns, technical hurdles, and costs.

- Support and training needs: Determination of the need for technical support and training in the use of telemedicine.

Table 3 shows sample items and i-CVI values for Study 1.

Table 3.

List of sample items in Study 1.

The complete list of questionnaire items can be found in the Supplementary Materials.

- Study 2—AI-powered chatbots

Based on the results of Study 1, a second questionnaire was developed that focused on the dimensions of communication, interaction, trust, and emotional support. It comprised 15 items relating to the following topics:

- Communication and interaction: Assessment of the effectiveness and user-friendliness of chatbots in communication.

- Trust in chatbots: Assessment of participants’ trust in the reliability and security of chatbots.

- Emotional support: Investigation of the role of chatbots in providing emotional support and stress management.

- Data protection and security: Assessment of concerns regarding data protection and data security when using chatbots.

Table 4 shows sample items and i-CVI values for Study 2.

Table 4.

List of sample items in Study 2.

The complete list of questionnaire items can be found in the Supplementary Materials.

2.4. Validation

The surveys were validated in several steps and involved the same caregiving relatives and experts to ensure comparability:

- Study 1—Telemedicine

- Content validity: Three experts from the fields of nursing science, telemedicine, and digitization were consulted to review the items for content validity, comprehensibility, and completeness. The Content Validity Index (CVI) was calculated to assess the relevance of the items. The average CVI (S-CVI/Ave) was 0.88, indicating high content validity.

- Pretest/pilot study: A pretest with n = 8 relatives tested comprehensibility, response times, and technical feasibility. Items with low comprehensibility were adjusted.

- Reliability: Cronbach’s alpha for subscales:

- Context/experience: Cronbach’s alpha = 0.82.

- Acceptance/trust: Cronbach’s alpha = 0.87.

- Barriers: Cronbach’s alpha = 0.75.

- Training/support: Cronbach’s alpha = 0.80.

- Communication/collaboration: Cronbach’s alpha = 0.85 [36].

- Documentation of item development: All items were documented, including literature references, expert feedback, and qualitative interviews to ensure replicability and traceability.

- Study 2—AI-supported chatbots

- Content validity: The same three experts from the fields of nursing science, telemedicine, and digitization were consulted again to review the items for content validity, comprehensibility, and completeness. The Content Validity Index (CVI) was also calculated to assess the relevance of the items. The average CVI (S-CVI/Ave) was 0.91, indicating high content validity.

- Pretest/pilot study: A pretest with n = 8 relatives tested comprehensibility, response times, and technical feasibility. Items with low comprehensibility were adjusted.

- Reliability: Cronbach’s alpha for subscales:

- Communication/interaction: Cronbach’s alpha = 0.82.

- Trust in chatbots: Cronbach’s alpha = 0.81.

- Emotional support: Cronbach’s alpha = 0.79.

- Data protection/security: Cronbach’s alpha = 0.83 [36].

- Documentation of item development: All items were documented, including literature references, expert feedback, and qualitative interviews to ensure replicability and traceability.

Additional Information on the Experts

The surveys were validated by three experts with extensive professional qualifications in the relevant fields:

- Professor of Digital Medicine: An expert with a professorship in digital medicine, specializing in the integration and application of digital technologies in healthcare.

- Nursing expert: A specialist in nursing science with extensive experience in practical nursing and the implementation of telemedicine solutions.

- Health informatics expert: A specialist in health informatics who focuses on the development and evaluation of information systems in healthcare.

These experts contributed significantly to ensuring the content validity and comprehensibility of the survey items and guaranteed that the scales developed are theoretically sound and relevant to practice.

2.5. Subscales, Items, CVI, and Cronbach’s Alpha

The items were assigned to the respective subscales based on theoretical models of technology acceptance (TAM, UTAUT) and content criteria derived from the literature review. For each subscale, the content validity (Content Validity Index, CVI) and internal consistency (Cronbach’s Alpha) were determined, as was the theoretical reference to TAM and UTAUT.
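To make the content validity figures easier to follow, the sketch below shows how an item-level CVI (i-CVI) and the scale-level average (S-CVI/Ave) are commonly computed. It assumes the widespread convention of a four-point relevance scale on which ratings of 3 or 4 count as "relevant"; the source does not state which rating scale the experts used, and the ratings shown are hypothetical, not the study data.

```python
from statistics import mean


def item_cvi(ratings, relevant_from=3):
    """i-CVI: share of experts who rated the item as relevant.

    Assumes a 4-point relevance scale where 3 and 4 count as relevant
    (a common convention, not confirmed by the source).
    """
    return sum(r >= relevant_from for r in ratings) / len(ratings)


def scale_cvi_ave(rating_matrix):
    """S-CVI/Ave: mean of the i-CVI values over all items of a scale."""
    return mean(item_cvi(item) for item in rating_matrix)


# Hypothetical ratings: one row per item, one column per expert (three experts).
ratings = [
    [4, 4, 3],
    [4, 3, 2],
    [3, 4, 4],
    [4, 4, 4],
]

i_cvis = [item_cvi(row) for row in ratings]
s_cvi = scale_cvi_ave(ratings)
print([round(v, 2) for v in i_cvis], round(s_cvi, 2))
```

With three experts, a single item can only take the i-CVI values 0, 0.33, 0.67, or 1.0 under this convention, so averaged values arise only at the subscale or scale level.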

Table 5 shows the results for Study 1, which relates to the use of telemedicine technologies in home care. The content validity of the items was assessed by three experts from the fields of nursing science, telemedicine, and digitalization. The calculated i-CVI values for all subscales are between 0.83 and 1.0, indicating a very high level of agreement among the experts regarding the relevance of the items. The Cronbach’s alpha values range from 0.75 to 0.87, indicating good to very good internal consistency.

Table 5.

Subscale assignment including Cronbach’s alpha, i-CVI, and theoretical reference TAM and UTAUT (study 1).

The subscales of the second study (Table 6) relate to the use of AI-supported chatbots in nursing support. Here, too, very good values for content validity and reliability are evident. The i-CVI values are between 0.83 and 1.0 in all cases, which indicates a high degree of content fit for the items. The Cronbach’s alpha values range between 0.79 and 0.83, which is very good.

Table 6.

Subscale assignment including Cronbach’s alpha, i-CVI, and theoretical reference TAM and UTAUT (study 2).

The results confirm the high internal consistency and content validity of the subscales developed in both studies. This allows us to assume that the relevant dimensions have been assessed in a reliable and theoretically sound manner.
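For readers who want to retrace the reliability coefficient, the following minimal sketch computes Cronbach's alpha from the standard formula (number of items, sum of item variances, variance of the total score). The analyses in this paper were run in SPSS; this pure-Python version and its small response matrix are illustrative assumptions only.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    # Sample variance of each item (column) across respondents.
    item_vars = [variance(col) for col in zip(*responses)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical five-point Likert responses: five respondents, three items.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [3, 3, 4],
    [5, 4, 5],
    [1, 2, 2],
]

alpha = cronbach_alpha(responses)
print(round(alpha, 3))
```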

2.6. Statistical Analysis

The statistical analyses were performed using IBM SPSS 29. Because the samples were independent and the design was exploratory, the analyses were descriptive and comparative, and no inferential generalizations were made. Statistical tests such as t-tests, Mann–Whitney U tests, and chi-square tests were used solely to highlight differences and patterns in the data, not to draw general conclusions.

- Study 1: Use and acceptance of telemedicine.

The descriptive statistics for the first study showed that the mean values of the responses to the questions on the use and acceptance of telemedicine ranged between 2.1 and 2.7, with standard deviations between 1.02 and 1.15. The internal consistency of the subscales was assessed using Cronbach’s alpha and yielded good to very good values: Context/Experience (α = 0.82), Acceptance/Trust (α = 0.87), Barriers (α = 0.75), Training/Support (α = 0.80), and Communication/Collaboration (α = 0.85).

The t-tests and Mann–Whitney U tests showed no significant differences between the groups, suggesting that the acceptance of telemedicine is independent of age, gender, or educational level. For example, the t-test for comparing question 1 with question 50 yielded a t-value of −0.45 and a p-value of 0.65, while the Mann–Whitney U test yielded a U-value of 120.5 and a p-value of 0.67. The chi-square tests also showed no significant differences, such as the comparison of question 1 with question 50, which yielded a chi-square value of 2.34 and a p-value of 0.31. The effect sizes, measured using Cohen’s d and Cramer’s V, were consistently small to moderate, for example, Cohen’s d of 0.10 and Cramer’s V of 0.15 for the comparison of question 1 with question 50.
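The two effect size measures named above can be sketched as follows. This is a minimal illustration using the usual textbook definitions (Cohen's d with a pooled standard deviation, Cramér's V derived from a chi-square statistic); the function names and all input values are hypothetical and are not taken from the study data.

```python
import math
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d for two independent groups, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


def cramers_v(chi2, n, n_rows, n_cols):
    """Cramér's V from a chi-square statistic, sample size, and table dimensions."""
    return math.sqrt(chi2 / (n * (min(n_rows, n_cols) - 1)))


# Hypothetical Likert responses for two groups.
d = cohens_d([2, 3, 3, 4], [1, 2, 2, 3])

# Hypothetical chi-square result for a 2 x 3 contingency table with n = 100.
v = cramers_v(4.0, 100, 2, 3)

print(round(d, 3), round(v, 3))
```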

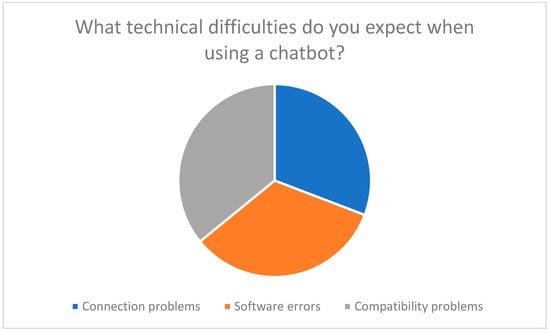

The results of the first study identified technical barriers such as connection problems and software errors, as well as concerns about data protection, as significant obstacles to the use of telemedicine. These findings underscore the need for a stable technical infrastructure and targeted training to promote the acceptance and use of telemedicine.

- Study 2: Use and acceptance of AI-powered chatbots.

In the second study, the mean values of the responses to the questions on the use and acceptance of AI-supported chatbots ranged between 3.1 and 3.9, with standard deviations between 0.85 and 1.06. The internal consistency of the subscales was also assessed using Cronbach’s alpha and yielded good to very good values: Communication/Interaction (α = 0.82), Trust in Chatbots (α = 0.81), Emotional Support (α = 0.79), and Data Protection/Security (α = 0.83).

Here, too, the t-tests and Mann–Whitney U tests showed no significant differences between the groups. For example, the t-test for comparing question 1 with question 13 yielded a t-value of −0.23 and a p-value of 0.82, while the Mann–Whitney U test yielded a U-value of 110.5 and a p-value of 0.75. The chi-square tests also showed no significant differences, such as the comparison of question 1 with question 13, which yielded a chi-square value of 1.34 and a p-value of 0.51. The effect sizes, measured using Cohen’s d and Cramer’s V, were also small to moderate; for example, Cohen’s d of 0.05 and Cramer’s V of 0.10 for the comparison of question 1 with question 13.
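The chi-square values and p-values reported for both studies can be cross-checked under one assumption that the text does not state: that each test had two degrees of freedom. In that special case the upper-tail probability has the closed form exp(−χ²/2), which the sketch below evaluates (the function name is hypothetical).

```python
import math


def chi2_p_value_df2(chi2):
    """Upper-tail p-value of a chi-square statistic with exactly 2 degrees of freedom.

    Assumption: df = 2 is not stated in the source; it is used here only
    because the survival function then reduces to exp(-chi2 / 2).
    """
    return math.exp(-chi2 / 2)


p_study1 = chi2_p_value_df2(2.34)  # chi-square value reported for Study 1
p_study2 = chi2_p_value_df2(1.34)  # chi-square value reported for Study 2

print(round(p_study1, 2), round(p_study2, 2))
```

Under this assumption the computed values round to the reported p-values of 0.31 and 0.51; with a different number of degrees of freedom the closed form would not apply.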

The results of the second study complemented the findings of the first study and showed that AI-supported chatbots can not only provide technical support but also offer emotional and social relief for family caregivers. This highlights the need to consider functional as well as emotional and social aspects when implementing new technologies.

Overall, the results of both studies show that the successful integration of digital technologies into home care requires a combination of technical infrastructure, social support, and targeted training. These findings contribute significantly to the further development of digital care concepts and underscore the need to focus future research on actual user experiences and the effectiveness of such systems in longitudinal and qualitative designs.

2.7. Limitations and Methodological Peculiarities

Several methodological limitations and peculiarities must be taken into account when interpreting the results of both studies.

First, the total sample size was relatively small, which limits the statistical power and the generalizability of the results to the broader population of family caregivers. In addition, there is a possible overlap between the participants in Study 1 and Study 2, as both surveys were conducted in the same caregiving course for family caregivers. Participants in the caregiving course have the option of repeating it at any time, which means that overlap cannot be ruled out. This potential overlap may have influenced certain response patterns or attitudes, even though participants were not explicitly identified in the different surveys.

Another limitation concerns possible temporal effects. The two surveys were conducted several months apart, during which time participants’ familiarity with digital technologies may have increased due to general societal trends or personal experiences. Consequently, some of the differences in attitudes between Study 1 (telemedicine) and Study 2 (AI-powered chatbots) may partly reflect such temporal changes rather than differences in the technologies under investigation.

Due to the limited sample size, no factor analysis was performed. Conducting an exploratory or confirmatory factor analysis requires a much larger data set in order to obtain reliable factor loadings and stable model estimates. Instead, the formation of the subscales was guided by theoretical modeling based on the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT), supplemented by expert feedback to ensure conceptual coherence. Internal consistency was assessed using Cronbach’s alpha for each subscale, which yielded satisfactory reliability for all dimensions.

In addition, an item analysis was performed for each question to provide differentiated insights into specific aspects of acceptance, barriers, and support needs. Each item was documented and validated individually, allowing for a differentiated interpretation of the data, even though no large-scale psychometric tests were performed.

Although these methodological limitations restrict the scope for far-reaching generalizations, the rigorous theoretical foundation, expert validation, and transparent documentation of the item development process provide a solid basis for further, larger studies.

2.8. Ethics

Both studies were conducted in accordance with the Declaration of Helsinki (2013) and approved by the Ethics Committee of the University of Witten/Herdecke (No. 184/2023, 22 August 2023). All participants gave their written or electronic consent prior to participation. Data protection and anonymity of responses were guaranteed.

3. Results

3.1. Technology Acceptance and Use of Digital Health Technologies

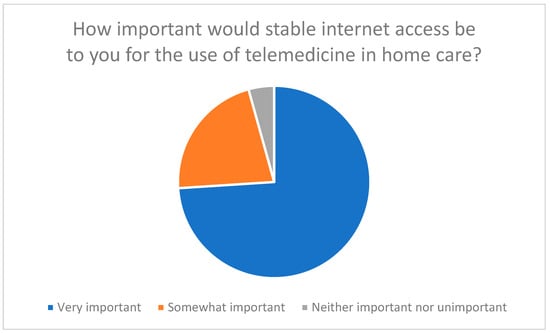

The integration of telemedicine technologies into home care requires a high level of acceptance on the part of family caregivers, as they have a significant influence on successful implementation and continued use. The survey results show that the availability of a stable internet connection and suitable end devices, such as smartphones, tablets, or computers, is considered essential. In the first survey, 74% of participants stated that stable internet access was very important for the use of telemedicine (see Figure 1).

Figure 1.

Assessment of the importance of stable internet access for the use of telemedicine in home care.

These results can be attributed to the constructs of the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT). The importance of stable internet access and suitable end devices shows that participants recognize the benefits of telemedicine technologies when the technical requirements are met (perceived usefulness). In addition, the need for stable technical infrastructure and user-friendly devices indicates that participants expect the use of these technologies to involve minimal effort (effort expectancy).

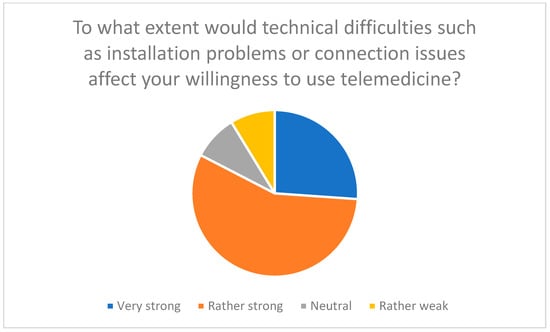

Technical difficulties, such as connection problems or software errors, represent a major barrier. 83% of respondents reported that such problems would significantly reduce their willingness to use telemedicine technologies (Figure 2). These findings confirm earlier studies that highlight the importance of a stable technical infrastructure for the success of telemedicine interventions [27,37,38].

Figure 2.

Influence of technical difficulties such as installation or connection problems on willingness to use telemedicine.

These technical difficulties increase the perceived effort and thus reduce the willingness to use the technology (effort expectancy). In addition, concerns about technical difficulties reflect participants’ expectations that the technologies should be easy to use (perceived ease of use).

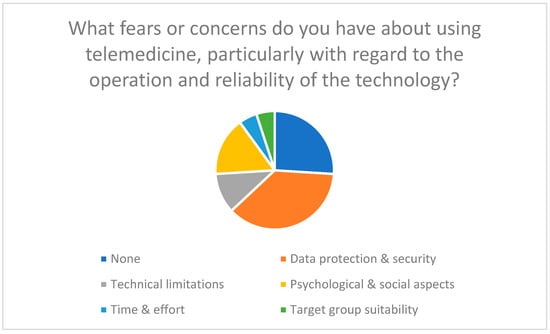

In addition to technical hurdles, concerns regarding data protection may influence acceptance (see Figure 3). These results are consistent with the findings of Meingast et al. (2006) [39] and other experts who point to the need for strict data protection measures [40,41,42].

Figure 3.

Fears and concerns regarding the use of telemedicine, particularly in relation to user-friendliness and reliability of the technology.

Support from family and friends plays a crucial role in the adoption of new technologies (social influence). Data protection concerns and mistrust of technology increase the perceived risk and reduce acceptance (perceived risk).

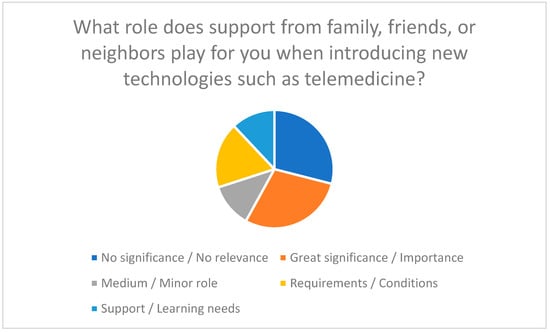

Social support also plays a crucial role in the introduction of new technologies. A lack of support from family or friends can reduce the acceptance of telemedicine solutions, while a supportive social environment facilitates motivation and use [16,42]. In the survey, participants stated that support from their personal environment facilitates the use of telemedicine (Figure 4).

Figure 4.

Importance of support from family, friends, or neighbors when introducing new technologies such as telemedicine.

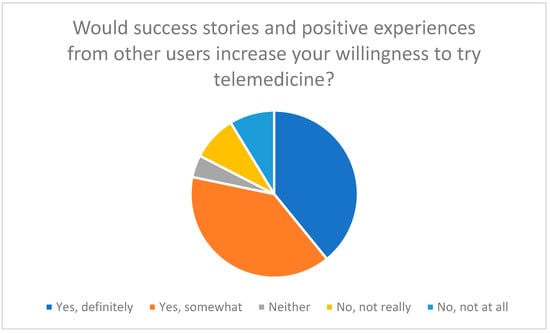

Despite these barriers, the results also identify key success factors. Intuitive usability, simple user interfaces, and the communication of positive user experiences can significantly increase acceptance. The survey clearly showed that the presentation of success stories significantly promotes willingness to use telemedicine (see Figure 5) [17].

Figure 5.

Influence of success stories and positive experiences of other users on willingness to try telemedicine.

Positive user experiences and success stories increase the perceived usefulness of the technologies.

Practical training, demonstrations, and ongoing technical support are also considered crucial (facilitating conditions). Hotlines, online tutorials, and personal support can improve the skills of family caregivers and reduce reservations about the technology [42,43,44]. Overall, the results underscore that the combination of technical infrastructure, social support, and targeted training can significantly increase the acceptance and use of telemedicine technologies.

3.2. Role of AI-Powered Chatbots

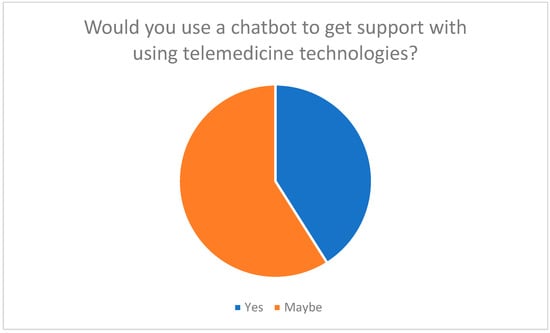

Participants rated AI-powered chatbots as potentially helpful in providing technical, emotional, and informational support when using telemedicine technologies. 41% of respondents said they would use a chatbot to receive help using telemedicine (see Figure 6).

Figure 6.

Willingness to use a chatbot to assist with the application of telemedicine technologies.

Participants assumed that chatbots could be useful for providing support with technical and emotional challenges (perceived usefulness). They also suggested that the hypothetical 24/7 availability of chatbots might reduce the perceived effort required to use such technologies (effort expectancy).

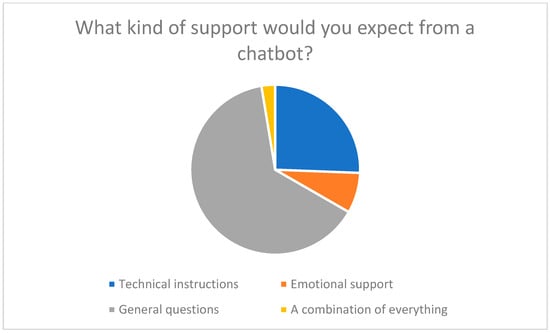

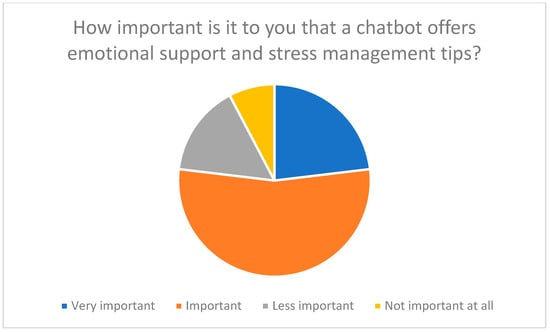

Respondents primarily expected the following functions: technical instructions, emotional support, and answers to general questions. 77% of participants rated emotional support as important (see Figure 7 and Figure 8). This suggests that chatbots could not only simplify operation but also break down psychological barriers by strengthening trust and self-efficacy [13,23,26].

Figure 7.

What kind of support would you expect from a chatbot? (Technical instructions, Emotional support, General questions, Other).

Figure 8.

The importance of emotional support from a chatbot for family caregivers.

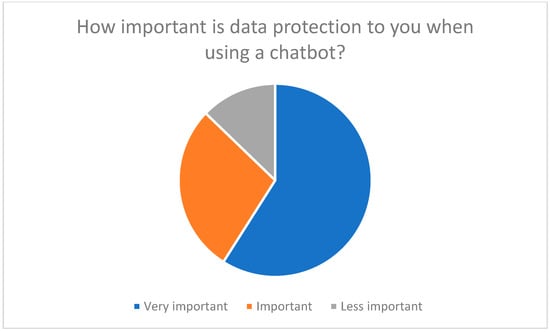

Another aspect is improved communication with the medical team. Chatbots could provide regular updates and clear information to avoid misunderstandings and improve the quality of care [23,26]. Participants emphasized that data protection is essential when using chatbots (see Figure 9), which underscores the need for transparent data protection information and secure communication channels [45,46].

Figure 9.

Concerns about data protection when using chatbots.

3.3. Functionality and Benefits of a Chatbot

The survey results show that an AI-powered chatbot should fulfill several core functions:

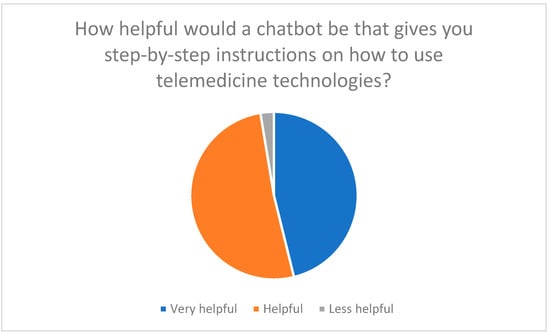

- Technical support

- Step-by-step instructions: 97% of participants expect clear and understandable instructions for installing and using telemedicine devices (Figure 10).

Figure 10. Expectation of step-by-step instructions from a chatbot.

Figure 10. Expectation of step-by-step instructions from a chatbot. - Troubleshooting: Technical support is considered essential to overcome expected difficulties with chatbots (Figure 11).

Figure 11. Technical difficulties when using a chatbot.

Figure 11. Technical difficulties when using a chatbot.

- 2.

- Emotional support

- Stress management: Chatbots could send reassuring messages, provide tips on stress management, and boost user confidence.

- Success stories: Positive experiences from other users promote motivation and trust in the technology.

- 3.

- Information provision

- General questions: Chatbots should answer basic questions about telemedicine to reduce uncertainty (see Figure 7).

- 4.

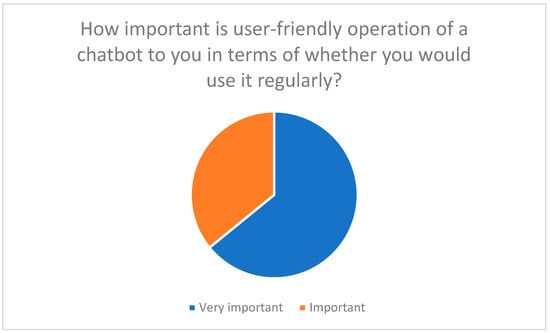

- User-friendliness

- Intuitive user interface: 100% of respondents emphasized the importance of ease of use (Figure 12).

Figure 12. The importance of an intuitive user interface for the acceptance of chatbots.

Figure 12. The importance of an intuitive user interface for the acceptance of chatbots. - Multilingualism and barrier-free communication: Improve access for different user groups, e.g., through voice control or text-to-speech [24].

The results suggest that respondents regard these functions as not merely desirable but necessary for overcoming barriers to the use of telemedicine and improving the quality of care.

3.4. Data Protection and Security

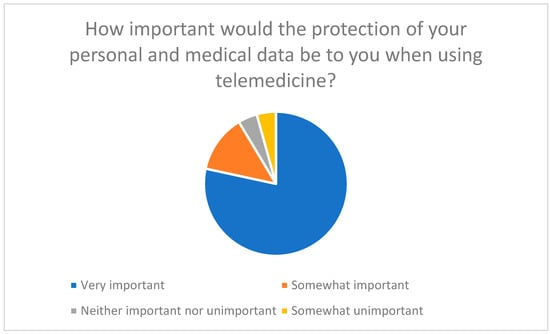

Data protection and data security are key concerns for respondents. 78% of participants rated the protection of their personal and medical data as very important (Figure 13).

Figure 13.

The importance of protecting personal and medical data when using telemedicine.

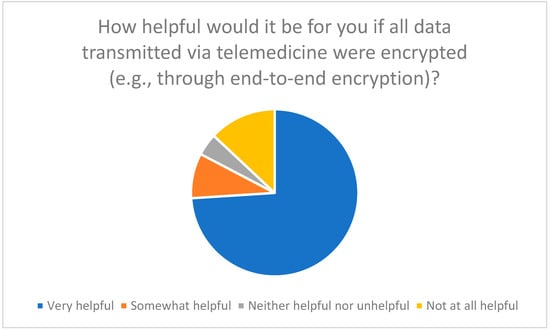

74% are in favor of data encryption and the use of secure communication channels (Figure 14).

Figure 14.

Assessment of the usefulness of data encryption (e.g., end-to-end encryption) when using telemedicine.

Data protection concerns and the need for secure communication channels reflect perceived risk. Transparent data protection policies and security measures are crucial for gaining user trust (facilitating conditions).

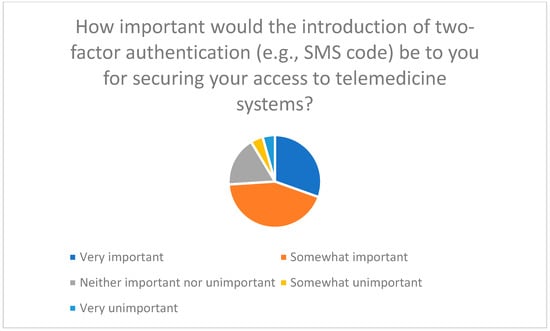

Transparent privacy policies and two-factor authentication were also considered essential (Figure 15). Regular security updates and reviews are seen as necessary to build trust and promote acceptance of telemedicine [45,46].

Figure 15.

Importance of introducing two-factor authentication to secure access to telemedicine systems.

Overall, concerns regarding data protection and technical stability are consistent across both telemedicine and AI-powered chatbots. Addressing these overlapping barriers with secure systems, intuitive interfaces, and supportive guidance is crucial for adoption.

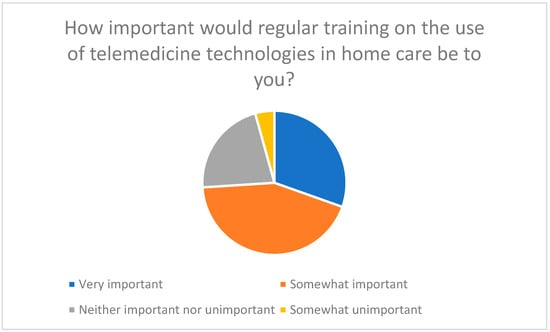

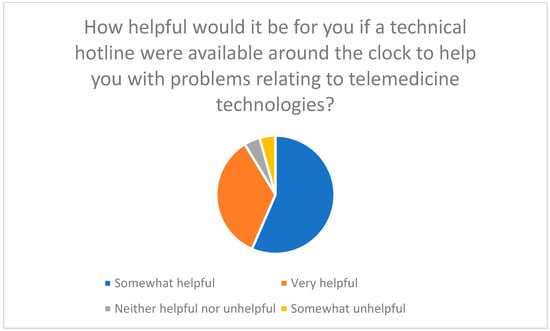

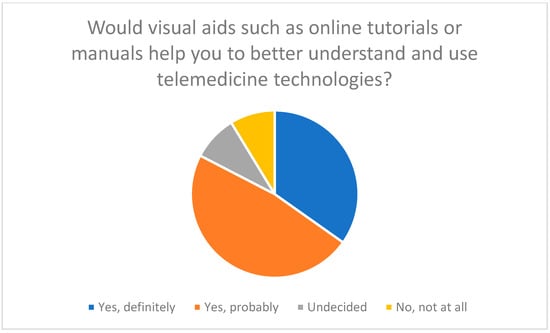

3.5. Application Support and Training

Regular training, hotlines, and visual aids are considered essential to improve technical skills and facilitate use of telemedicine and chatbots (Figure 16, Figure 17 and Figure 18).

Figure 16.

The importance of regular training on the use of telemedicine technologies in home care.

Figure 17.

Usefulness of a 24/7 technical hotline for telemedicine issues.

Figure 18.

Importance of visual aids to support the use of telemedicine technologies.

Training should also cover topics such as data protection and data security [39,40] in order to fully prepare users for safe use [41].

Combined with social support, targeted training and practical guidance are crucial for successful adoption across both telemedicine and chatbot technologies, highlighting the interplay of technical, social, and psychological dimensions.

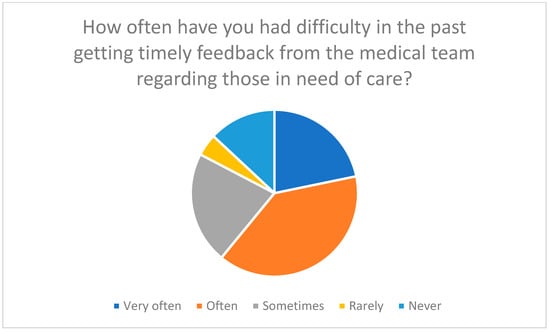

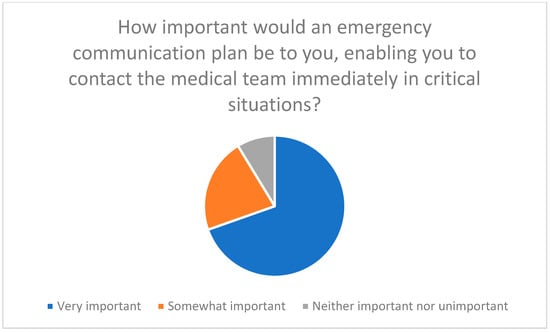

3.6. Communication with the Medical Team

Efficient communication with medical staff is essential; participants highlighted difficulties in timely feedback and the need for emergency communication plans (Figure 19 and Figure 20).

Figure 19.

Frequency of difficulties in receiving timely feedback from the medical team.

Figure 20.

Importance of an emergency communication plan that enables immediate contact with the medical team in critical situations.

Support from the medical team and improved communication increase acceptance of the technologies (social influence). Standardized communication protocols and emergency communication plans are crucial for improving the quality of care (facilitating conditions).

3.7. Summary of Results

The surveys show that the successful integration of telemedicine and AI-powered chatbots depends on several factors:

- Technical support: Step-by-step instructions and troubleshooting are essential.

- Emotional support: Stress management and motivation through chatbots increase acceptance.

- Provision of information: Clear answers and data protection information reduce uncertainty.

- User-friendliness: Intuitive operation and multilingualism facilitate use.

- Data protection and security: Transparent guidelines, encryption, and two-factor authentication build trust.

- Training and support: Continuous training and technical support improve skills and acceptance.

- Communication with the medical team: Standardized protocols and emergency communication plans increase the quality of care.

The results are consistent with the findings of existing literature [13,15,16,45,46] and indicate that AI-supported chatbots could offer valuable support to family caregivers [23,26].

The assignment of the results to the constructs of the Technology Acceptance Model (TAM) and the Unified Theory of Acceptance and Use of Technology (UTAUT) illustrates how various factors influence the acceptance and use of telemedicine technologies and AI-supported chatbots (Table 7). The results show that both technical and psychosocial aspects must be taken into account to ensure the successful implementation and use of these technologies.

Table 7.

Assignment of result areas to the TAM/UTAUT constructs.
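As an illustration of this kind of assignment, the following minimal sketch collects only the construct labels that the Results section states in parentheses and groups the result areas by construct. It is not a reproduction of Table 7. The names `RESULT_AREA_TO_CONSTRUCTS` and `areas_by_construct` are hypothetical, and the wording of each result area is a paraphrase.

```python
from collections import defaultdict
from typing import Dict, List

# In-text assignments from the Results section only (paraphrased);
# this is an illustrative structure, not the authors' Table 7.
RESULT_AREA_TO_CONSTRUCTS: Dict[str, List[str]] = {
    "Success stories and positive user experiences": ["perceived usefulness"],
    "Training, demonstrations, and technical support": ["facilitating conditions"],
    "Chatbot support with technical and emotional challenges": ["perceived usefulness"],
    "24/7 availability of chatbots": ["effort expectancy"],
    "Data protection concerns": ["perceived risk"],
    "Transparent data protection policies and security measures": ["facilitating conditions"],
    "Support from the medical team": ["social influence"],
    "Standardized protocols and emergency communication plans": ["facilitating conditions"],
}


def areas_by_construct(mapping: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert the mapping so each construct lists its result areas."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for area, constructs in mapping.items():
        for construct in constructs:
            grouped[construct].append(area)
    return dict(grouped)


if __name__ == "__main__":
    for construct, areas in areas_by_construct(RESULT_AREA_TO_CONSTRUCTS).items():
        print(f"{construct}: {len(areas)} result area(s)")
```

Grouping by construct makes it easy to see which constructs carry most of the reported result areas; in this paraphrased collection, facilitating conditions appears most often.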

4. Discussion

This study builds on an earlier study on the integration of telemedicine into home care and expands on it with a follow-up study on the use of AI-supported chatbots. While the first study identified structural, organizational, and technical barriers to the introduction of telemedicine systems in family households, the second survey focuses on the communicative and interactive dimension of digital support. Combining both data sets provides a more comprehensive picture of the digital transformation process in home care.

4.1. Technical Support and User-Friendliness

Technical difficulties, such as connection problems, are a common barrier for both telemedicine and chatbots. Chatbots may help overcome these hurdles through clear step-by-step instructions, thus enhancing usability [13,23,26,31,32,33,34,35,47].

4.2. Emotional Support and Stress Management

Chatbots are perceived as potentially providing not only functional but also emotional support, for example, by offering reassurance, stress management tips, or motivational success stories, which participants believe could enhance caregiver confidence and self-efficacy [13,23,26,31,32,33,34,35,48].

4.3. Information Provision and Data Protection

Data protection remains a key barrier for both telemedicine and chatbots. Transparent privacy information and secure communication channels are essential to build user trust [45,46,47].

4.4. Communication with the Medical Team

Chatbots could improve communication with the medical team by providing regular updates and facilitating emergency contacts, thereby supporting timely information exchange and high quality of care [22,23,25,32,48].

4.5. Comparison of the Results of Both Surveys

Comparing both surveys shows that while telemedicine adoption depends largely on technical infrastructure, chatbot acceptance additionally relies on comprehensibility, empathy, and trust. This illustrates the shift from technology as a tool to technology as a social interaction partner [23,26,31,32,33,34,35,47].

4.6. Innovation and Added Value of the Chatbot Component

AI-powered chatbots enable continuous, low-threshold interaction that combines informative and emotional support. However, family caregivers emphasize that chatbots should complement human contact, not replace it [13,23,26,31,32,33,34,35]. This ambivalence can be understood as an expression of dual expectations: family caregivers want digital support, but at the same time demand emotional authenticity and personal resonance [13,23,26,31,32,33,34,35,48].

Compared to telemedicine, which primarily strengthens the structural connection between home care and professional care [27,37,38], the use of chatbots could represent a person-centered advance. The technology addresses not only organizational but also psychosocial challenges [13,23,26,31,32,33,34,35]. It can help reduce cognitive and emotional stress by acting as a communicative link between people and the system. It is particularly noteworthy that, in these hypothetical scenarios, family caregivers expect chatbots to serve as companions in everyday care rather than as a primary source of medical information [23,26,31,32,33,34,35]. This shift in perspective points to a new phase of digitalization in the context of caregiving, one that is more focused on interaction and relationship building [13,23,26,31,32,33,34,35].

4.7. Practical Implications

The practical implementation of AI chatbots in home care should be participatory, including training for family caregivers, and ensure transparency, data protection, and emotional security. Trust in the functionality of the system is essential to realize the full potential of digital support [13,23,26,31,32,33,34,35,45,46,47].

4.8. Scientific Implications

Scientifically, the integration of AI chatbots expands the concept of digital caregiving competence to include emotional and communicative dimensions. Future research should examine the long-term effects on the well-being of family caregivers and identify design principles that promote trust, empathy, and transparency [13,23,26,31,32,33,34,35,48].

4.9. Summary of the Discussion

Overall, this study shows that digital support for family caregivers is undergoing a phase of qualitative transformation. The transition from purely functional telemedicine applications to AI-based, interactive systems marks a paradigm shift: from technical networking to communicative cooperation. The study thus makes an innovative contribution to the further development of digital care models in the home context and at the same time provides practice-relevant insights for the design of sustainable care support [13,23,26,31,32,33,34,35,48]. However, it is important to emphasize that the results are exploratory and descriptive and should serve as a basis for further research.

4.10. Critical Reflection and Limitations

Despite the insights gained, the study has several limitations. First, it is a cross-sectional online survey that only captures snapshots of attitudes and perceptions. Long-term studies would be necessary to examine how acceptance and user experiences with AI-based chatbots in everyday care develop over time.

Second, the sample is not representative of all family caregivers in Germany. People with a higher digital affinity may be overrepresented, which could lead to a certain bias toward technology-friendly attitudes. Future studies should therefore specifically include more heterogeneous groups, in particular older, less digitally experienced, or socially disadvantaged family caregivers.

Third, the assessments of the use of chatbots are based on hypothetical scenarios, as many respondents have not yet gained any practical experience with AI systems. Accordingly, the results should be interpreted as indicators of expectations and perceptions, not as evidence of actual usage behavior. Experimental or qualitative follow-up studies could provide valuable additions here.

Fourth, participants were selected based on their participation in a caregiving course, which may lead to selection bias. Participants may be more motivated and digitally savvy than the general population of family caregivers, which limits the generalizability of the results.

Finally, the study was limited to the perspective of family caregivers. The inclusion of professional caregivers, consultants, and developers could provide a more comprehensive understanding of how AI-supported systems can be integrated into existing care structures in the future.

In addition to these points, there are further limitations:

- Sample size and representativeness: The first survey had only 23 participants, while the second survey had 39 participants. These relatively small sample sizes limit the generalizability of the results. Future studies should include larger and more diverse samples to obtain more representative results.

- Time lag: The surveys were conducted at different times (January vs. September 2025). Learning effects and changes in participants’ experiences and attitudes during this interval may have influenced the results. It would be useful to conduct future studies closer together in time to minimize such effects.

- Geographical and cultural limitations: Both surveys were conducted with national participants, which limits the transferability of the results to international contexts. Cultural differences in the acceptance and use of telemedicine and chatbots could influence the results. Future research should include international samples to account for cultural differences.

- Self-reported data: The data are based on self-reported information from participants, which may lead to bias due to social desirability or memory effects. Supplementary objective data, such as usage statistics or observations, could increase the validity of the results.

- Technological development: Rapid technological development in the field of AI and telemedicine could cause the results to quickly become outdated. It is important to conduct ongoing research to keep pace with the latest developments and understand their impact on home care.
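To make the sample-size limitation concrete, the following minimal sketch computes a Wilson score interval for a reported percentage. It is an illustration only and not part of the study's analysis, which was purely descriptive. The function name `wilson_interval` is hypothetical, and the count of 16 out of 39 is an assumption back-calculated from the reported 41% and the 39 participants of the second survey; the actual number of valid answers to that item is not stated in the text.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion (z = 1.96 gives about 95%)."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    denom = 1 + z ** 2 / n
    centre = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return centre - half_width, centre + half_width


if __name__ == "__main__":
    # Assumed counts: 16 of 39 respondents (about 41%), see lead-in.
    low, high = wilson_interval(16, 39)
    print(f"95% Wilson interval: {low:.1%} to {high:.1%}")
```

Under these assumptions the interval spans roughly 27% to 57%, which illustrates why percentages from samples of this size are better read as indications than as precise estimates.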

Despite these limitations, the study offers important starting points for research and practice. It makes it clear that AI technologies in the care context must be understood primarily as communicative and social innovations rather than technical ones. The resulting change in perspective can make a decisive contribution to shaping digital transformation in care in a human-centered, participatory, and ethically responsible manner. The results underscore the need for further research to better understand and optimize the acceptance, use, and long-term impact of these technologies [49].

5. Conclusions

This study shows that the use of AI-powered chatbots in home care has the potential to significantly expand the current development of digital support systems. Building on the results of the previous telemedicine study, it is clear that the perspective of family caregivers is shifting from purely functional expectations to an interactive, dialogue-oriented understanding of digital support [13,23,26,31,32,33,34,35].

AI-powered chatbots could provide not only technical support, but also emotional and social relief. They could overcome technical barriers through clear instructions and problem solving and increase user-friendliness. In addition, they could offer emotional support through reassuring messages, stress management tips, and sharing success stories, which would boost users’ self-confidence and self-efficacy [13,23,26,31,32,33,34,35].

Privacy concerns were identified as a key barrier in both surveys [39,40,41]. Chatbots could strengthen user trust through transparent privacy information and secure communication channels [40,41]. The results show that chatbots could improve communication with the medical team by providing regular updates and standardized communication protocols [22,23,25,32].

In practice, this leads to the recommendation that AI systems should be specifically integrated into counseling and training programs for family caregivers. Politically and institutionally, framework conditions are needed that promote innovation without neglecting ethical and data protection principles. From a scientific perspective, the results underscore the need to expand the concept of “digital care competence” to include communicative and emotional dimensions. Future studies should investigate the extent to which such systems can strengthen the self-efficacy and well-being of family caregivers in the long term [13,23,26,31,32,33,34,35].

It is important to emphasize that the results of this study are exploratory and descriptive. The relatively small sample size and the possible overlap of participants between the two studies limit the generalizability of the results. In addition, the assessments of chatbot use are based on hypothetical scenarios, as many participants have not yet gained practical experience with these systems.

Overall, this study shows that digital support for family caregivers is undergoing a phase of qualitative transformation. The transition from purely functional telemedicine applications to AI-based, interactive systems marks a paradigm shift: from technical networking to communicative collaboration. The results underscore the need for further research to better understand and optimize the acceptance, use, and long-term impact of these technologies. Through the continuous development and adaptation of these technologies, the quality of home care can be improved and the burden on family caregivers can be reduced in the long term [13,23,26,31,32,33,34,35].

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/healthcare13233159/s1, Table S1: List of all items in Study 1; Table S2: List of all items in Study 2.

Author Contributions

K.-J.S. was responsible for conducting the study and writing the article, with T.O., J.P.E. and G.H. providing advisory support and reviewing the manuscript. The study was collaboratively planned by all authors, resulting in the joint preparation of the ethical approval. T.O., J.P.E. and G.H. offered guidance throughout the study, including methodological support and feedback on statistical analysis. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The ethics approval was granted by the Ethics Committee of the University of Witten-Herdecke under the reference number 184/2023 on 22 August 2023. The study was conducted in accordance with the Declaration of Helsinki of 1975, as revised in 2008.

Informed Consent Statement

The participants provided written informed consent.

Data Availability Statement

The data are contained within the article.

Acknowledgments

The authors would like to thank all participating patients, their families, and study participants for their valuable contributions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Beckers, R.; Strotbaum, V. Telepflege—Telemedizin in der Pflege; Springer: Berlin/Heidelberg, Germany, 2021; pp. 259–271.

2. Hübner, U.; Egbert, N. Telepflege; Springer: Berlin/Heidelberg, Germany, 2017; pp. 211–224.

3. Hoffmann, S.; Walter, C.; Engler, T. Bedarfsgerechte Telemedizin aus Patientinnenperspektive in der klinischen Gynäkologie. Geburtshilfe Frauenheilkd. 2024, 84, e143–e144.

4. Juhra, C. Telemedizin [Telemedicine]. Orthopädie 2023, 52, 560–566.

5. Radic, M.; Radic, D. Automatisierung von Dienstleistungen zur digital unterstützten Versorgung multimorbider Patienten—Eine qualitative Analyse der Nutzerakzeptanz. In Automatisierung und Personalisierung von Dienstleistungen. Forum Dienstleistungsmanagement; Bruhn, M., Hadwich, K., Eds.; Springer Gabler: Wiesbaden, Germany, 2020.

6. Nittari, G.; Khuman, R.; Baldoni, S.; Pallotta, G.; Battineni, G.; Sirignano, A.; Amenta, F.; Ricci, G. Telemedicine Practice: Review of the Current Ethical and Legal Challenges. Telemed. J. E-Health 2020, 26, 1427–1437.

7. Dockweiler, C. Akzeptanz der Telemedizin; Springer: Berlin/Heidelberg, Germany, 2016; pp. 257–271.

8. Antonacci, G.; Benevento, E.; Bonavitacola, S.; Cannavacciuolo, L.; Foglia, E.; Fusi, G.; Garagiola, E.; Ponsiglione, C.; Stefanini, A. Healthcare professional and manager perceptions on drivers, benefits, and challenges of telemedicine: Results from a cross-sectional survey in the Italian NHS. BMC Health Serv. Res. 2023, 23, 1115.

9. Eberly, L.A.; Khatana, S.A.M.; Nathan, A.S.; Snider, C.; Julien, H.M.; Deleener, M.E.; Adusumalli, S. Telemedicine Outpatient Cardiovascular Care During the COVID-19 Pandemic: Bridging or Opening the Digital Divide? Circulation 2020, 142, 510–512.

10. Hepburn, J.; Williams, L.; McCann, L. Barriers to and Facilitators of Digital Health Technology Adoption Among Older Adults With Chronic Diseases: Updated Systematic Review. JMIR Aging 2025, 8, e80000.

11. Dockweiler, C.; Wewer, A.; Beckers, R. Alters- und geschlechtersensible Nutzerorientierung zur Förderung der Akzeptanz telemedizinischer Verfahren bei Patientinnen und Patienten; Springer: Berlin/Heidelberg, Germany, 2016; pp. 299–321.

12. Woo, K.; Dowding, D. Factors Affecting the Acceptance of Telehealth Services by Heart Failure Patients: An Integrative Review. Telemed. J. E-Health 2018, 24, 292–300.

13. Peek, S.T.; Wouters, E.J.; van Hoof, J.; Luijkx, K.G.; Boeije, H.R.; Vrijhoef, H.J. Factors influencing acceptance of technology for aging in place: A systematic review. Int. J. Med. Inform. 2014, 83, 235–248.

14. Potaszkin, I.; Weber, U.; Groffmann, N. “Die süße Alternative” Smart Health: Akzeptanz der Telemedizin bei Diabetikern; Working Paper; 13; ISM—International School of Management: Münster, Germany, 2018; ISBN 978-3-96163-161-2.

15. Jockisch, M. Das Technologieakzeptanzmodell. In „Das ist gar kein Modell!”; Bandow, G., Holzmüller, H., Eds.; Gabler: Wiesbaden, Germany, 2010.

16. Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User Acceptance of Information Technology: Toward a Unified View. MIS Q. 2003, 27, 425–478.

17. Kruse, C.S.; Krowski, N.; Rodriguez, B.; Tran, L.; Vela, J.; Brooks, M. Telehealth and patient satisfaction: A systematic review and narrative analysis. BMJ Open 2017, 7, e016242.

18. Almathami, H.K.Y.; Win, K.T.; Vlahu-Gjorgievska, E. Barriers and Facilitators That Influence Telemedicine-Based, Real-Time, Online Consultation at Patients’ Homes: Systematic Literature Review. J. Med. Internet Res. 2020, 22, e16407.

19. Niu, S.; Hong, W.; Ma, Y. How Expectations and Trust in Telemedicine Contribute to Older Adults’ Sense of Control: An Empirical Study. Healthcare 2024, 12, 1685.

20. Groß, D.; Gründer, G.; Simonovic, V. Akzeptanz, Nutzungsbarrieren und ethische Implikationen neuer Medizintechnologien. In Studien des Aachener Kompetenzzentrums für Wissenschaftsgeschichte; Bd. 8; Kassel University Press: Kassel, Germany, 2010; ISBN 978-3-89958-931-3.

21. Perez, H.; Miguel-Cruz, A.; Daum, C.; Comeau, A.K.; Rutledge, E.; King, S.; Liu, L. Technology Acceptance of a Mobile Application to Support Family Caregivers in a Long-Term Care Facility. Appl. Clin. Inform. 2022, 13, 1181–1193.

22. Schinasi, D.A.; Foster, C.C.; Bohling, M.K.; Barrera, L.; Macy, M.L. Attitudes and Perceptions of Telemedicine in Response to the COVID-19 Pandemic: A Survey of Naïve Healthcare Providers. Front. Pediatr. 2021, 9, 647937.

23. Wang, W.; Cai, X.; Huang, C.H.; Wang, H.; Lu, H.; Liu, X.; Peng, W. Emily: Developing an emotion-affective open-domain chatbot with knowledge graph-based persona. arXiv 2021, arXiv:2109.08875.

24. Smith, A.C.; Thomas, E.; Snoswell, C.L.; Haydon, H.; Mehrotra, A.; Clemensen, J.; Caffery, L.J. Telehealth for global emergencies: Implications for Coronavirus Disease 2019 (COVID-19). J. Telemed. Telecare 2020, 26, 309–313.

25. Ladin, K.; Porteny, T.; Perugini, J.M.; Gonzales, K.M.; Aufort, K.E.; Levine, S.K.; Wong, J.B.; Isakova, T.; Rifkin, D.; Gordon, E.J.; et al. Perceptions of Telehealth vs In-Person Visits Among Older Adults With Advanced Kidney Disease, Care Partners, and Clinicians. JAMA Netw. Open 2021, 4, e2137193.

26. Zheng, X.; Li, Z.; Gui, X.; Luo, Y. Customizing emotional support: How do individuals construct and interact with LLM-powered chatbots. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI ’25), Yokohama, Japan, 26 April–1 May 2025; 20p.

27. Gagnon, M.P.; Duplantie, J.; Fortin, J.P. Implementing telehealth to support medical practice in rural/remote regions: What are the conditions for success? Implement. Sci. 2006, 1, 18.

28. Krick, T.; Huter, K.; Domhoff, D.; Schmidt, A.; Rothgang, H. Digital technology and nursing care: A scoping review on acceptance, effectiveness and efficiency studies of informal and formal care technologies. BMC Health Serv. Res. 2019, 19, 400.

29. Weber, K.; Frommeld, D.; Scorna, U.; Haug, S. (Eds.) Digitale Technik für ambulante Pflege und Therapie: Herausforderungen, Lösungen, Anwendungen und Forschungsperspektiven; Transcript Verlag: Bielefeld, Germany, 2022.

30. Kitschke, L.; Traulsen, P.; Waschkau, A.; Steinhäuser, J. Determinants of the implementation of telemedicine in nursing homes: A qualitative analysis from Schleswig-Holstein. Z. Evid. Fortbild. Qual. Gesundheitswesen 2024, 187, 1–7.

31. Devaram, S. Empathic Chatbot: Emotional Intelligence for Mental Health Well-being. arXiv 2020, arXiv:2012.09130.

32. Yang, Z.; Hong, J.; Pang, Y.; Zhou, J.; Zhu, Z. ChatWise: A strategy-guided chatbot for enhancing cognitive support in older adults. arXiv 2025, arXiv:2503.05740.

33. Shi, J.M.; Yoo, D.W.; Wang, K.; Rodriguez, V.J.; Karkar, R.; Saha, K. Mapping caregiver needs to AI chatbot design: Strengths and gaps in mental health support for Alzheimer’s and dementia caregivers. arXiv 2025, arXiv:2506.15047.

34. Anisha, S.A.; Sen, A.; Bain, C. Evaluating the Potential and Pitfalls of AI-Powered Conversational Agents as Humanlike Virtual Health Carers in the Remote Management of Noncommunicable Diseases: Scoping Review. J. Med. Internet Res. 2024, 26, e56114.

35. Fan, X.; Chao, D.; Zhang, Z.; Wang, D.; Li, X.; Tian, F. Utilization of Self-Diagnosis Health Chatbots in Real-World Settings: Case Study. J. Med. Internet Res. 2021, 23, e19928.

36. Tavakol, M.; Dennick, R. Making sense of Cronbach’s alpha. Int. J. Med. Educ. 2011, 2, 53–55.

37. Ezeamii, V.C.; Okobi, O.E.; Wambai-Sani, H.; Perera, G.S.; Zaynieva, S.; Okonkwo, C.C.; Ohaiba, M.M.; William-Enemali, P.C.; Obodo, O.R.; Obiefuna, N.G. Revolutionizing Healthcare: How Telemedicine Is Improving Patient Outcomes and Expanding Access to Care. Cureus 2024, 16, e63881.

38. Block, L.; Gilmore-Bykovskyi, A.; Jolliff, A.; Mullen, S.; Werner, N.E. Exploring dementia family caregivers’ everyday use and appraisal of technological supports. Geriatr. Nurs. 2020, 41, 909–915.

39. Meingast, M.; Roosta, T.; Sastry, S. Security and privacy issues with health care information technology. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 5453–5458.

40. Beck, E.; Böger, A.; Bogedaly, A.; Enzmann, T.; Goetz, C.; Harbou, K.; Oeff, M.; Schmidt, G.; Schrader, T.; Wikarski, D.; et al. Telemedizin und Kommunikation: Medizinische Versorgung über räumliche Distanzen; Medizinisch Wissenschaftliche Verlagsgesellschaft: Berlin, Germany, 2010.

41. Watt, J.A.; Fahim, C.; Straus, S.E.; Goodarzi, Z. Barriers and facilitators to virtual care in a geriatric medicine clinic: A semi-structured interview study of patient, caregiver and healthcare provider perspectives. Age Ageing 2022, 51, afab218.

42. Alodhialah, A.M.; Almutairi, A.A.; Almutairi, M. Telehealth Adoption Among Saudi Older Adults: A Qualitative Analysis of Utilization and Barriers. Healthcare 2024, 12, 2470.

43. Van Dyk, L. A Review of Telehealth Service Implementation Frameworks. Int. J. Environ. Res. Public Health 2014, 11, 1279–1298.

44. Kramer, B. Die Akzeptanz neuer Technologien bei Pflegenden Angehörigen von Menschen mit Demenz. Ph.D. Thesis, Universität Heidelberg, Heidelberg, Germany, 2016.

45. Hall, J.L.; McGraw, D. For telehealth to succeed, privacy and security risks must be identified and addressed. Health Aff. 2014, 33, 216–221.

46. Hudelmayer, A.; zur Kammer, K.; Schütz, J. Eine App für die informelle Pflege. Z. Gerontol. Geriatr. 2023, 56, 623–629.

47. Pool, J.; Akhlaghpour, S.; Fatehi, F.; Gray, L.C. Data privacy concerns and use of telehealth in the aged care context: An integrative review and research agenda. Int. J. Med. Inform. 2022, 160, 104707.

48. Starman, J. Barrieren der Inanspruchnahme von Unterstützungs- und Entlastungsleistungen für pflegende Angehörige und das Potenzial digitaler Technologien. Ph.D. Thesis, Universität Heidelberg, Heidelberg, Germany, 2024.

49. Ewers, M. Vom Konzept zur klinischen Realität: Desiderata und Perspektiven der Forschung über die technikintensive häusliche Versorgung in Deutschland. Pflege Ges. 2010, 15, 314–329.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).