Abstract

In this paper, we propose a new weak order 2.0 numerical scheme for solving stochastic differential equations with Markovian switching (SDEwMS). Using Malliavin calculus, we prove that the new scheme has a local weak convergence rate of order 3.0. Exploiting special properties of the Markov chain, we study how changes in the state space affect the convergence rate of the new scheme. Two numerical experiments are given to verify the theoretical results.

1. Introduction

In recent years, stochastic differential equations with Markovian switching (SDEwMS) have attracted the attention of many scholars. Compared with stochastic differential equations with jumps (SDEwJ), SDEwMS are applied not only in finance but also in many other fields, including control systems, biomathematics, chemistry, and mechanics. Zhou and Mamon [1] proposed an accessible implementation of interest rate models with Markov switching. Siu et al. [2] proposed a high-order Markov-switching model for risk measurement. He, Qi, and Kao [3] studied HMM-based adaptive attack-resilient control for Markov jump systems, with an application to an aircraft model. In this paper, we consider the following q-dimensional stochastic differential equations with Markovian switching:

where , the Brownian motion is independent of the Markov chain , and . The existence and uniqueness of solutions to SDEwMS have been studied extensively in recent years (see [4,5]). Many scholars have studied the stability of SDEwMS; for example, the stability of linear or semi-linear versions of Equation (1) was studied by Basak et al. [6] and Ji and Chizeck [7]. Mao [4] discussed the exponential stability of general nonlinear stochastic differential equations with Markovian switching. Yang, Yin and Li [8] analyzed the stability of numerical methods for jump diffusions and Markovian switching jump diffusions. Ma and Jia [9] studied the stability of linear Itô stochastic differential equations with countably infinite Markovian switchings.

Most SDEwMS do not have explicit solutions and therefore must be solved numerically. Yuan and Mao [10] first developed an Euler–Maruyama scheme for solving SDEwMS and estimated the error between the numerical and exact solutions under Lipschitz conditions. Mao, Yuan and Yin [11] proved that the strong rate of convergence of the Euler–Maruyama scheme is 0.5 under non-Lipschitz conditions. Subsequently, many scholars extended these results to the semi-implicit Euler scheme [12,13,14]. Furthermore, Li and Chang [15] and Chen et al. [16] developed Euler schemes for stochastic differential equations with Markovian switching and jumps. To obtain a higher convergence rate, many scholars have designed Milstein-type schemes. Fan and Jia [17] and Nguyen et al. [18] proposed Milstein schemes for solving SDEwMS and proved that their strong rate of convergence is 1.0. Based on the work of Nguyen et al. [18], Kumar et al. [19] provided improved conditions for proving the convergence theorem of the Milstein scheme. Recently, Zhao et al. [20] proposed a two-step Euler scheme for solving highly nonlinear SDEwMS.

Since a Markov chain can be regarded as a special Poisson jump process, numerical schemes for SDEwJ can also be applied to SDEwMS. Several authors have studied higher-order weak schemes for SDEwJ. For example, Buckwar and Riedler [21] proposed implicit order 2.0 Runge–Kutta schemes for jump-diffusion differential equations. Liu and Li [22] studied an effective higher-order weak scheme for stochastic differential equations with jumps, which, however, requires computing multiple stochastic integrals. Recently, Li et al. [23] proposed a new simplified weak order 2.0 scheme for solving stochastic differential equations with Poisson jumps.

Inspired by the high-order numerical methods in [21,22,23] and based on the classical weak order 2.0 Taylor scheme [24] for stochastic differential equations, we develop a new weak order 2.0 approximation scheme for SDEwMS and rigorously prove, by means of Malliavin calculus, that it has weak order 2.0 convergence. To compare accuracy and convergence rates, we also apply a Runge–Kutta scheme to SDEwMS. In addition, in view of the special properties of Markov chains, we investigate in detail how the state space of the Markov chain affects the convergence rate of the new scheme.

The main contributions of this paper are as follows:

- We propose a new weak order 2.0 scheme in which the multiple stochastic integrals are approximated by means of a compound Poisson process.

- Using the integration-by-parts formula of Malliavin calculus [25], we rigorously prove that the new scheme has local weak order 3.0 convergence. We also show that the constant in the error bound depends on the maximum state difference and on the upper bounds of the state values.

- We present two numerical experiments that confirm the theoretical convergence results and illustrate how the state space of the Markov chain affects the convergence rate.

- In the experiments, we compare the new scheme with other schemes, such as the Euler and Runge–Kutta schemes, and verify that it is effective and accurate.

Some notations to be used later are listed as follows:

- is the norm of a vector or matrix, defined by .

- is the set of k times continuously differentiable functions which, together with their partial derivatives of order up to k, have at most polynomial growth.

- is the σ-field generated by the diffusion process .

- C is a generic constant depending only on the upper bounds of the derivatives of f and g; C may differ from line to line.

2. Preliminaries

2.1. Markov Chain

In this paper, let be a complete probability space with a filtration satisfying the usual conditions. Let denote the transpose of a vector or matrix . Let be a right-continuous Markov chain on the probability space taking values in a finite state space with generator given by

where , and, for , . For the above Markov chain, is a Poisson random measure with intensity on , in which is the Lebesgue measure on , and is equipped with its Borel field E. Moreover, with , where are consecutive intervals of length satisfying

and so on.
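The interval construction above can be illustrated with a short sketch. Because the formulas are missing here, we assume the standard Skorokhod-type representation: the intervals Δ_12, Δ_13, …, Δ_1N, Δ_21, Δ_23, … are laid end to end on [0, ∞), Δ_ij has length γ_ij, and the jump function is h(i, y) = j − i if y ∈ Δ_ij and 0 otherwise, so that the chain jumps from i to j exactly when a point of the Poisson random measure carries a mark in Δ_ij. The function names and the 1-based state labels are hypothetical.

```python
def skorokhod_intervals(Gamma, tol=1e-12):
    """Lay the intervals Delta_ij (i != j) end to end on [0, inf); Delta_ij has length gamma_ij.

    Gamma is an N x N generator (rows sum to 0, off-diagonal entries >= 0).
    States are labelled 1..N; the order is (1,2), (1,3), ..., (1,N), (2,1), (2,3), ...
    """
    N = len(Gamma)
    for i, row in enumerate(Gamma):
        if abs(sum(row)) > tol or any(g < 0 for j, g in enumerate(row) if j != i):
            raise ValueError("not a valid generator")
    intervals, start = {}, 0.0
    for i in range(1, N + 1):
        for j in range(1, N + 1):
            if j != i:
                length = Gamma[i - 1][j - 1]
                intervals[(i, j)] = (start, start + length)  # half-open [start, start + length)
                start += length
    return intervals


def h(i, y, intervals):
    """Jump size of the chain in state i for a Poisson mark y: j - i if y is in Delta_ij, else 0."""
    for (a, j), (lo, hi) in intervals.items():
        if a == i and lo <= y < hi:
            return j - i
    return 0
```

For a generator whose first row is (−3, 1, 2), the intervals are Δ_12 = [0, 1) and Δ_13 = [1, 3), so a mark y = 2.0 received in state 1 moves the chain to state 3 (h = 2), while the same mark received in another state may leave the chain unchanged.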

Following [17], the discrete Markov chain with a given step size is simulated as follows. First, compute the one-step transition probability matrix

Let and generate a random number which is uniformly distributed in . Define

where we set as usual. Generate independently a new random number , which is again uniformly distributed in , and then define
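The simulation procedure above can be sketched in a few lines. As assumptions, the one-step transition matrix is taken to be P = e^{ΔΓ} (the usual choice for a chain with generator Γ), computed here by scaling and squaring a truncated Taylor series so that the sketch needs only the standard library; states are labelled 0, …, N − 1; and all helper names are hypothetical. Each step returns the smallest state whose cumulative transition probability exceeds the uniform draw, and the last state otherwise, which is how we read the inverse-transform rule described above.

```python
import math
import random


def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def expm(A, terms=20):
    """exp(A) by scaling and squaring a truncated Taylor series (adequate for small generators)."""
    n = len(A)
    norm = max(sum(abs(x) for x in row) for row in A)  # infinity norm of A
    s = max(0, math.ceil(math.log2(norm)) + 1) if norm > 0 else 0  # makes ||A / 2**s|| <= 1/2
    scaled = [[x / 2 ** s for x in row] for row in A]
    result = [[float(i == j) for j in range(n)] for i in range(n)]  # identity matrix
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in mat_mul(term, scaled)]  # scaled**k / k!
        result = [[r + t for r, t in zip(rr, tt)] for rr, tt in zip(result, term)]
    for _ in range(s):
        result = mat_mul(result, result)  # exp(A) = exp(A / 2**s) ** (2**s)
    return result


def next_state(P, i, xi):
    """Smallest state j with xi < P[i][0] + ... + P[i][j]; the last state otherwise."""
    cumulative = 0.0
    for j, p in enumerate(P[i][:-1]):
        cumulative += p
        if xi < cumulative:
            return j
    return len(P[i]) - 1


def simulate_chain(Gamma, dt, n_steps, r0, rng):
    """Discrete chain r_0, r_1, ..., r_n with one-step transition matrix exp(dt * Gamma)."""
    P = expm([[g * dt for g in row] for row in Gamma])
    path = [r0]
    for _ in range(n_steps):
        path.append(next_state(P, path[-1], rng.random()))  # fresh uniform draw at every step
    return path
```

In practice, a library routine such as `scipy.linalg.expm` would normally replace the hand-written matrix exponential; it is written out here only to keep the sketch dependency-free.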

2.2. Itô Formula

Given a multi-index with length , we denote the hierarchical set of multi-indices by and . We write and for the multi-indices obtained by deleting the first and the last component of , respectively. Denote by the multiple Itô integral recursively defined by

where the Itô coefficient functions are defined by

for all and . For , we define

Applied to Equation (1), for , , we define operators from to ,

for and . Then, the Itô formula can be presented as:

where is a compensated Poisson random measure.
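To make the multi-index notation concrete, the following sketch enumerates multi-indices, the operations of deleting the first or last component, a hierarchical set and its remainder set. It assumes the convention of Platen and Bruti-Liberati [24] that components range over {−1, 0, 1, …} (−1 for the Poisson random measure, 0 for time, 1 for the Brownian motion), the choice Γ = {α : l(α) ≤ 2} used by weak order 2.0 Taylor schemes, and the remainder set B(Γ) = {α ∉ Γ : −α ∈ Γ}. These are assumptions, since the exact sets used here are not recoverable from the text, and the function names are hypothetical.

```python
from itertools import product


def multi_indices(components, max_len):
    """All multi-indices (tuples) over `components` of length 0..max_len; () has length zero."""
    return [alpha for n in range(max_len + 1) for alpha in product(components, repeat=n)]


def minus_first(alpha):
    """-alpha: the multi-index obtained by deleting the first component."""
    return alpha[1:]


def minus_last(alpha):
    """alpha-: the multi-index obtained by deleting the last component."""
    return alpha[:-1]


def hierarchical_set(components, order):
    """Assumed weak Taylor choice: all multi-indices of length at most `order`."""
    return set(multi_indices(components, order))


def remainder_set(components, gamma):
    """B(gamma): nonempty multi-indices outside gamma whose -alpha lies in gamma."""
    max_len = max(len(alpha) for alpha in gamma) + 1
    return {alpha for alpha in multi_indices(components, max_len)
            if alpha and alpha not in gamma and minus_first(alpha) in gamma}
```

With components (0, 1), the set Γ contains 7 multi-indices and its remainder set consists of the 8 multi-indices of length 3.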

Assumption 1.

Assume that there exist two positive constants L and K such that

- (Lipschitz condition) for all and

- (Linear growth condition) for all

2.3. Malliavin Calculus

Suppose that H is a real separable Hilbert space with scalar product denoted by . The norm of an element is denoted by . Let denote an isonormal Gaussian process associated with the Hilbert space H on .

We call a row vector with for a multi-index of length and denote by v the multi-index of length zero . Let be the set of all multi-indices, i.e.,

Assume . We have the following definition

where

A random variable F is Malliavin differentiable if and only if , where the space is defined by completion with respect to the norm . Details on the Malliavin derivative for Poisson processes can be found in [25]. For any integer , is the domain of in , i.e., is the closure of the class of smooth random variables F with respect to the norm

Lemma 1 (Product rule).

Let , then , and we have

Lemma 2 (Chain rule and Duality formula).

Let and , and let φ be a real continuous function on . Then,
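A quick numerical sanity check of the duality formula is possible in the simplest Brownian setting. Taking H = L²([0, T]), u = 1_{[0,T]} and F = φ(W_T), with W a Brownian motion (our notation), one has δ(u) = W_T and ⟨DF, u⟩_H = T φ′(W_T), so the duality formula E[F δ(u)] = E[⟨DF, u⟩_H] reduces to the Gaussian integration-by-parts identity E[φ(W_T) W_T] = T E[φ′(W_T)]. The sketch below compares Monte Carlo estimates of both sides; it covers only the Brownian part, not the Poisson part treated in [25], and the function name is hypothetical.

```python
import math
import random


def duality_check(phi, dphi, T, n_samples, rng):
    """Monte Carlo estimates of both sides of E[phi(W_T) W_T] = T E[phi'(W_T)]."""
    lhs = rhs = 0.0
    for _ in range(n_samples):
        w = rng.gauss(0.0, math.sqrt(T))  # W_T ~ N(0, T)
        lhs += phi(w) * w                 # F * delta(u) with F = phi(W_T), delta(u) = W_T
        rhs += T * dphi(w)                # <DF, u>_H = T * phi'(W_T)
    return lhs / n_samples, rhs / n_samples
```

For φ = sin and T = 1, both estimates should be close to e^{−1/2} ≈ 0.607, since E[cos W_T] = e^{−T/2}.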

3. Main Results

First, we introduce a uniform time partition: assume , for . For simplicity of notation, we assume , which is the kth component of the exact solution . Assume , , ,

where multi-index . Then, it follows from the Itô–Taylor formula and the trapezoidal rule that

with the truncation error

where . Then, we propose the following weak order 2.0 numerical scheme for solving SDEwMS.

Scheme 1.

Remark 1.

For the multiple stochastic integrals in Scheme 1, we simulate and by the following steps.

- Step 1. Simulate a discrete Markov chain according to the definition of the Markov process.

- Step 2. Generate and , where is the number of jumps and is the switching time.

- Step 3. If , ; else let .

- Step 4. Compute the multiple stochastic integrals by the following equations: and
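Steps 2 and 3 require the number of switches and the switching times within a time step. Since the exact formulas are not shown here, the sketch below uses a swapped-in, standard technique: exact simulation of a continuous-time Markov chain with exponential holding times (rate −γ_ii in state i, then a jump to j ≠ i with probability γ_ij/(−γ_ii)). It also evaluates a time integral of a function of the chain along the resulting piecewise-constant path, the kind of quantity that such switching times make computable. This is not necessarily the construction used in Scheme 1, and all names are hypothetical.

```python
import random


def switches_in_step(Gamma, i, t0, dt, rng):
    """Exact simulation of a continuous-time Markov chain on [t0, t0 + dt) started in state i.

    Returns (number of switches, switching times, visited states); states are 0-based.
    """
    t, state = t0, i
    times, states = [], [i]
    while True:
        rate = -Gamma[state][state]  # total intensity of leaving `state`
        if rate <= 0:
            break  # absorbing state: no further switches
        t += rng.expovariate(rate)  # exponential holding time
        if t >= t0 + dt:
            break
        others = [j for j in range(len(Gamma)) if j != state]
        # jump to j != state with probability gamma_{state, j} / rate
        state = rng.choices(others, weights=[Gamma[state][j] for j in others])[0]
        times.append(t)
        states.append(state)
    return len(times), times, states


def integrate_along_chain(c, t0, dt, times, states):
    """Integral of c(r(s)) over [t0, t0 + dt] for the piecewise-constant chain path."""
    knots = [t0] + times + [t0 + dt]
    return sum(c(s) * (b - a) for s, a, b in zip(states, knots[:-1], knots[1:]))
```

For a symmetric two-state chain with leaving rate 5, the number of switches in a unit time step is Poisson distributed with mean 5, which gives a simple statistical check of the simulation.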

Convergence Theorem

In this section, we prove the local weak convergence of the new weak order 2.0 scheme. We first define weak convergence.

Definition 1.

(Weak convergence) Let , assume , for , and suppose that the drift and diffusion functions a and b belong to the space and satisfy Lipschitz conditions and linear growth bounds. If for each smooth function there exists a positive constant C such that

with , then we say that converges weakly with order β to as .
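For reference, the standard form of this definition (see, e.g., Platen and Bruti-Liberati [24]) can be written as follows. The symbols are ours, since the original ones are missing: Y^Δ is the approximation with maximum step size Δ, X the exact solution, T the terminal time and φ a test function; the precise test-function class used in this paper may differ.

```latex
For each $\varphi \in C_P^{2(\beta+1)}$ there exist $C > 0$, independent of $\Delta$, and $\Delta_0 > 0$ such that
\[
  \bigl|\mathbb{E}[\varphi(X_T)] - \mathbb{E}[\varphi(Y^{\Delta}_T)]\bigr| \le C\,\Delta^{\beta}
  \qquad \text{for all } \Delta \in (0, \Delta_0);
\]
in that case $Y^{\Delta}$ converges weakly with order $\beta > 0$ to $X$ at time $T$.
```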

Theorem 1.

(Local weak convergence) Suppose and satisfy Equations (11) and (17), respectively. If Assumption 1 holds and , is a smooth function, we have

where C is a generic constant depending only on K, the maximum state difference, and upper bounds of derivatives of functions and d.

Proof.

For ease of proof, using the multi-dimensional Taylor formula, we have

where

Assume is the kth component of the exact solution . To simplify notation, we assume , , , . By the Itô–Taylor expansion, the exact solution can be written as

and the numerical solution as

where . Note the fact that , and

subtracting (17) from (16), we deduce

Taking the Malliavin derivative with respect to , we obtain

for , which, combined with the chain rule, yields

for , where is a function not depending only on time t.

Since , we have

which gives . Taking the Malliavin derivative, we obtain a similar fact

for . Similarly, for , by the chain rule we obtain

Then, by Lemma 2 and Inequality (27), we have

Using Lemma 2 (chain rule and duality formula), we have

Combining Equation (19) with Inequalities (28) and (29), we obtain

where is a constant depending on K, the maximum state difference and the upper bounds of the derivatives of the functions . For , applying the Itô isometry, we have

For , we similarly obtain

The proof is completed. □

Remark 2.

If Assumption 1 holds, then under the conditions and we obtain local weak convergence of order 3.0 for Scheme 1. However, under weaker regularity conditions on the coefficients of , we can only obtain a lower order of local weak convergence for Scheme 1. For example, if and , then in Theorem 1 we can deduce
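The meaning of local weak order 3.0 can be illustrated on the classical simplified weak order 2.0 Taylor scheme [24] in a single, frozen regime i, with the hypothetical linear coefficients a(x) = μ_i x and b(x) = σ_i x. This is not Scheme 1, whose formula is not reproduced here; it only shows the mechanism. The three-point increment takes the values ±√(3Δ) with probability 1/6 each and 0 with probability 2/3, so one-step expectations can be computed exactly instead of by Monte Carlo. For φ(x) = x the scheme gives E[Y_1] = x(1 + μ_iΔ + μ_i²Δ²/2), while the exact value is x e^{μ_iΔ}, so the one-step weak error is x μ_i³Δ³/6 + O(Δ⁴).

```python
import math

# Three-point increment: dW_hat = k * sqrt(3 * dt) with probabilities 1/6, 2/3, 1/6.
THREE_POINT = ((-1.0, 1 / 6), (0.0, 2 / 3), (1.0, 1 / 6))


def weak2_step(x, dt, dW, a, da, dda, b, db, ddb):
    """One step of the simplified weak order 2.0 Taylor scheme (scalar, autonomous, frozen regime)."""
    ax, bx = a(x), b(x)
    return (x + ax * dt + bx * dW
            + 0.5 * bx * db(x) * (dW ** 2 - dt)
            + 0.5 * (da(x) * bx + ax * db(x) + 0.5 * ddb(x) * bx ** 2) * dW * dt
            + 0.5 * (ax * da(x) + 0.5 * dda(x) * bx ** 2) * dt ** 2)


def one_step_mean(phi, x, dt, coeffs):
    """Exact E[phi(Y_1)] given Y_0 = x, obtained by summing over the three-point law."""
    return sum(p * phi(weak2_step(x, dt, k * math.sqrt(3 * dt), *coeffs)) for k, p in THREE_POINT)


# Hypothetical frozen regime i with a(x) = mu_i * x and b(x) = sigma_i * x.
mu_i, sigma_i = 1.0, 0.3
coeffs = (lambda x: mu_i * x, lambda x: mu_i, lambda x: 0.0,
          lambda x: sigma_i * x, lambda x: sigma_i, lambda x: 0.0)


def local_error(dt, x=1.0):
    """One-step weak error for phi(x) = x against the exact mean x * exp(mu_i * dt)."""
    return abs(one_step_mean(lambda y: y, x, dt, coeffs) - x * math.exp(mu_i * dt))
```

Halving Δ should divide this one-step error by roughly 2³ = 8.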

4. Numerical Experiments

In this section, before presenting the numerical examples, we briefly illustrate the simulation of Markov chains and Brownian motions. Let the Markov chain take values in the state space with the transition probability matrix

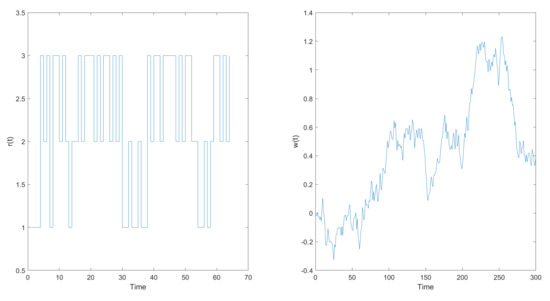

and, using (3) and (4), we obtain the simulated paths shown in Figure 1.

Figure 1.

(Left) A sample path (staircase plot) of a three-state Markov chain with 64 steps. (Right) A sample path of Brownian motion with 300 steps.

In our numerical experiments, we consider two one-dimensional SDEwMS. We choose the sample number , where is the number of sample paths. The global weak error is measured by:

and the average local weak error is measured by:

where , are . We let . Assume and are the numerical and exact solutions at time , respectively, where .
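Whatever the precise error measure, the convergence rates reported in the tables can be obtained from the errors at successive step sizes. The sketch below, with hypothetical function names, computes the observed order between consecutive refinements, CR = log(e_k / e_{k+1}) / log(Δ_k / Δ_{k+1}), and, as a common alternative, the least-squares slope of log e against log Δ. The step size is often halved between rows, but the formulas do not require it.

```python
import math


def convergence_rates(step_sizes, errors):
    """Observed order between consecutive runs: log(e_k / e_{k+1}) / log(h_k / h_{k+1})."""
    return [math.log(e0 / e1) / math.log(h0 / h1)
            for h0, h1, e0, e1 in zip(step_sizes, step_sizes[1:], errors, errors[1:])]


def fitted_rate(step_sizes, errors):
    """Least-squares slope of log(error) against log(step size)."""
    xs = [math.log(h) for h in step_sizes]
    ys = [math.log(e) for e in errors]
    x_bar, y_bar = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den
```

Errors that behave like C Δ² give rates close to 2.0 in both functions, and local errors that behave like C Δ³ give rates close to 3.0.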

Example 1.

We consider the Ornstein–Uhlenbeck (O-U) process with Markovian switching:

where is a one-dimensional Brownian motion. The Markov chain takes values in , and and are assumed to be independent. The coefficients f and g are given by

It is well known that Equation (34) has the explicit solution

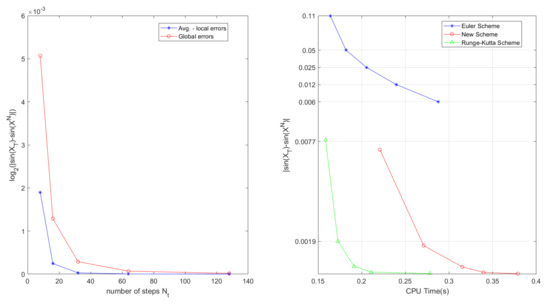

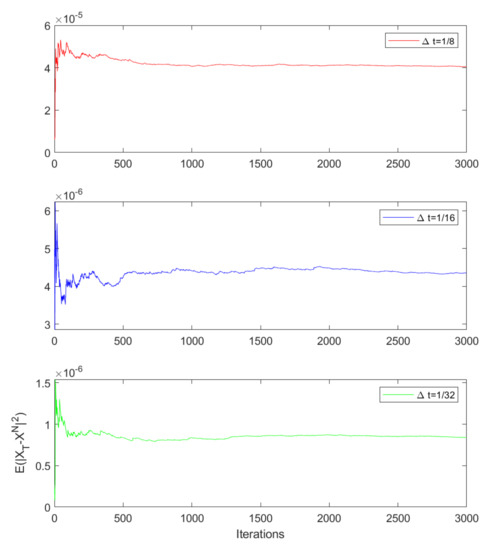

In Table 1, we set the time , where the terminal time is . CR denotes the convergence rate with respect to the time step . We compute the global errors and the average local errors (Avg.local errors) of the new scheme. The global convergence rate (Glo.CR) and the average local convergence rate (Avg.local CR) are of order 2.0 and 3.0, respectively. The local and global errors are also plotted in Figure 2 (left). In Table 2, we compare the new scheme with the Euler scheme and the Runge–Kutta scheme. The new scheme is the most accurate of the three, but it requires more CPU time than the Runge–Kutta scheme. The CPU times and errors of all schemes are shown in Figure 2 (right). In addition, Figure 3 shows the mean-square stability of the new scheme for three different time steps.
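The Euler scheme used for comparison in Table 2 is presumably the Euler–Maruyama method for SDEwMS [10], Y_{n+1} = Y_n + f(Y_n, r_n)Δ + g(Y_n, r_n)ΔW_n, where r_n is the discrete Markov chain. The sketch below implements this recursion; for Example 1 it takes, as hypothetical O-U coefficients, f(x, i) = θ_i(μ_i − x) and g(x, i) = σ_i. The parameter values, the transition matrix and the function name are illustrative assumptions, not those used in Table 2.

```python
import math
import random


def euler_maruyama_switching(f, g, x0, r0, P, T, n_steps, rng):
    """One Euler-Maruyama path for an SDEwMS, returning Y_N at time T.

    Y_{n+1} = Y_n + f(Y_n, r_n) dt + g(Y_n, r_n) dW_n, where P is the one-step
    transition matrix of the discrete chain r_n for the step dt = T / n_steps.
    """
    dt = T / n_steps
    x, r = x0, r0
    for _ in range(n_steps):
        dW = rng.gauss(0.0, math.sqrt(dt))
        x = x + f(x, r) * dt + g(x, r) * dW              # coefficients frozen at r_n
        r = rng.choices(range(len(P)), weights=P[r])[0]  # draw r_{n+1} given r_n
    return x


# Hypothetical two-regime O-U coefficients and transition matrix (not the values of Table 2).
theta, mu, sigma = [1.0, 2.0], [0.5, -0.5], [0.2, 0.4]
P = [[0.95, 0.05], [0.10, 0.90]]
rng = random.Random(2021)
samples = [euler_maruyama_switching(lambda x, i: theta[i] * (mu[i] - x), lambda x, i: sigma[i],
                                    1.0, 0, P, 1.0, 64, rng) for _ in range(2000)]
estimate = sum(samples) / len(samples)  # Monte Carlo estimate of E[Y_N]
```

Averaging φ(Y_N) over many such paths, and comparing with the same average of φ(X_T) from the explicit solution, gives the global weak error of the Euler scheme.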

Example 2.

We consider the following geometric model for SDEwMS:

where is a one-dimensional Brownian motion. The Markov chain takes values in , and and are assumed to be independent. The coefficients f and g are given by . It is well known that Equation (35) has the explicit solution

Table 1.

Errors and convergence rate of Scheme 1 with the parameters of in Example 1.

Figure 2.

(Left) The global error and Avg.local error of Scheme 1 for Example 1. (Right) Global errors versus CPU time for all schemes.

Table 2.

Errors and convergence rates of all schemes with the parameters of .

Figure 3.

Mean-square stability of the global errors of the new scheme for three time steps.

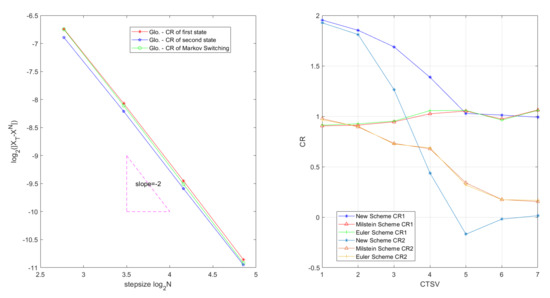

Table 3 shows that, for Example 2, the Glo.CR and Avg.local CR of the new scheme are also of order 2.0 and 3.0, respectively. Figure 4 (left) shows that the global errors differ between a single state and switching states. In Table 4, for all three schemes, we fix the upper bound of the state values and find that the convergence rate of the new scheme tends to order 1.0 as all state values become larger, while the convergence rates of the Milstein and Euler schemes remain stable at order 1.0. In Table 5, for all three schemes, we fix the state difference and find that the convergence rate of the new scheme declines rapidly as all state values become larger; it even becomes negative when the state values are extremely large. Meanwhile, the convergence rates of the Milstein and Euler schemes are very low when the state values are extremely large. Figure 4 (right) shows how the convergence rates change with the change times of state values (CTSV).
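To experiment with the effect of the state values described above, one can compare an exact solution with a numerical one along the same Markov chain and Brownian path. The sketch below assumes, hypothetically, that the geometric model of Example 2 is dX = μ(r)X dt + σ(r)X dW with regime-dependent constants μ_i and σ_i, and that the chain is held constant on each grid interval, as in the discrete simulation of Section 2.1; along such a path the exact solution is X_T = X_0 exp(Σ_n[(μ_{r_n} − σ_{r_n}²/2)Δ + σ_{r_n}ΔW_n]). For simplicity, it uses the Euler step rather than Scheme 1, the chain is assumed to start in state 0, and all names are hypothetical. Larger μ_i and σ_i enlarge the constants in the error expansion (the one-step mean error of the Euler step, for instance, is μ_i²Δ²/2 + O(Δ³)), which is one plausible way to see why the rates observed at fixed step sizes depend on the state values.

```python
import math
import random


def exact_and_euler(mu, sigma, x0, states, dWs, dt):
    """Return (exact X_T, Euler Y_N) for dX = mu[r] X dt + sigma[r] X dW along one path.

    states[n] is the chain state on [t_n, t_{n+1}) and dWs[n] the Brownian increment there.
    """
    log_x, y = math.log(x0), x0
    for r, dW in zip(states, dWs):
        log_x += (mu[r] - 0.5 * sigma[r] ** 2) * dt + sigma[r] * dW  # exact log-increment
        y += mu[r] * y * dt + sigma[r] * y * dW                      # Euler-Maruyama step
    return math.exp(log_x), y


def weak_error_euler(mu, sigma, P, x0, T, n_steps, n_paths, rng):
    """Monte Carlo estimate of |E[X_T] - E[Y_N]| using shared chain and Brownian paths."""
    dt = T / n_steps
    total = 0.0
    for _ in range(n_paths):
        r, states = 0, []
        for _ in range(n_steps):
            states.append(r)
            r = rng.choices(range(len(P)), weights=P[r])[0]  # one-step chain transition
        dWs = [rng.gauss(0.0, math.sqrt(dt)) for _ in range(n_steps)]
        x, y = exact_and_euler(mu, sigma, x0, states, dWs, dt)
        total += x - y
    return abs(total / n_paths)
```

Repeating `weak_error_euler` for several step sizes, and for scaled-up values of μ_i and σ_i, gives a small-scale analogue of the experiments in Tables 4 and 5.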

Table 3.

Errors and convergence rate of Scheme 1 with the parameters of in Example 2.

Figure 4.

(Left) The convergence rates for different states. (Right) The two ways of varying the state values and the corresponding variations in the convergence rates of the three schemes.

Table 4.

Groups of state values and the corresponding convergence rates of the three schemes with .

Table 5.

Groups of state values and the corresponding convergence rates of the three schemes with .

5. Conclusions

In this paper, we propose a new weak order 2.0 scheme for solving SDEwMS. Using the trapezoidal rule and the integration-by-parts formula of Malliavin calculus, we prove that the new scheme has local weak convergence of order 3.0. Two numerical experiments confirm the theoretical results. In addition, the first experiment compares the new scheme with other schemes, such as the Euler and Runge–Kutta schemes, in terms of convergence rate and accuracy, and the second experiment shows that changes in the Markov chain affect the convergence rates of all schemes. These experiments show that the new scheme is the most accurate of the schemes tested but requires more computing time. Moreover, the maximum state difference and the upper bounds of the state values affect the convergence rates of all schemes.

Author Contributions

Conceptualization, Y.L.; methodology, Y.L.; software, Y.W. and T.F.; validation, Y.L., Y.W., T.F. and Y.X.; formal analysis, Y.L., Y.W. and Y.X.; investigation, Y.L., Y.W. and Y.X.; resources, Y.L.; data curation, Y.L., Y.W. and T.F.; Writing—Original draft preparation, Y.L. and Y.W.; Writing—Review and editing, Y.L., Y.W., T.F. and Y.X.; visualization, T.F.; supervision, Y.L.; project administration, Y.L.; funding acquisition, Y.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China, grant number 11501366.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

We would like to thank the reviewers for their valuable comments, which helped us improve the paper considerably.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhou, N.; Mamon, R. An accessible implementation of interest rate models with Markov-switching. Expert Syst. Appl. 2012, 39, 4679–4689.

- Siu, T.; Ching, W.; Fung, E.; Ng, M.; Li, X. A high-order Markov-switching model for risk measurement. Comput. Math. Appl. 2009, 58, 1–10.

- He, H.; Qi, W.; Kao, Y. HMM-based adaptive attack-resilient control for Markov jump system and application to an aircraft model. Appl. Math. Comput. 2021, 392, 125668.

- Mao, X. Stability of stochastic differential equations with Markovian switching. Stoch. Process. Their Appl. 1999, 79, 45–67.

- Mao, W.; Mao, X. On the approximations of solutions to neutral SDEs with Markovian switching and jumps under non-Lipschitz conditions. Appl. Math. Comput. 2014, 230, 104–119.

- Basak, G.K.; Bisi, A.; Ghosh, M.K. Stability of a random diffusion with linear drift. J. Math. Anal. Appl. 1996, 202, 604–622.

- Ji, Y.; Chizeck, H.J. Controllability, stabilizability and continuous-time Markovian jump linear quadratic control. IEEE Trans. Automat. Control 1990, 35, 777–788.

- Yang, Z.; Yin, G.; Li, H. Stability of numerical methods for jump diffusions and Markovian switching jump diffusions. J. Comput. Appl. Math. 2015, 275, 197–212.

- Ma, H.; Jia, Y. Stability analysis for stochastic differential equations with infinite Markovian switchings. J. Math. Anal. Appl. 2016, 435, 593–605.

- Yuan, C.; Mao, X. Convergence of the Euler-Maruyama method for stochastic differential equations with Markovian switching. Math. Comput. Simul. 2004, 64, 223–235.

- Mao, X.; Yuan, C.; Yin, G. Approximations of Euler-Maruyama type for stochastic differential equations with Markovian switching, under non-Lipschitz conditions. J. Comput. Appl. Math. 2007, 205, 936–948.

- Rathinasamy, A.; Balachandran, K. Mean square stability of semi-implicit Euler method for linear stochastic differential equations with multiple delays and Markovian switching. Appl. Math. Comput. 2008, 206, 968–979.

- Zhou, S.; Wu, F. Convergence of numerical solutions to neutral stochastic delay differential equations with Markovian switching. J. Comput. Appl. Math. 2009, 229, 85–96.

- Yin, B.; Ma, Z. Convergence of the semi-implicit Euler method for neutral stochastic delay differential equations with phase semi-Markovian switching. Appl. Math. Model. 2011, 35, 2094–2109.

- Li, R.H.; Chang, Z. Convergence of numerical solution to stochastic delay differential equation with poisson jump and Markovian switching. Appl. Math. Comput. 2007, 184, 451–463.

- Chen, Y.; Xiao, A.; Wang, W. Numerical solutions of SDEs with Markovian switching and jumps under non-Lipschitz conditions. J. Comput. Appl. Math. 2019, 360, 41–54.

- Fan, Z.; Jia, Y. Convergence of numerical solutions to stochastic differential equations with Markovian switching. Appl. Math. Comput. 2017, 315, 176–187.

- Nguyen, S.; Hoang, T.; Nguyen, D.; Yin, G. Milstein-type procedures for numerical solutions of stochastic differential equations with markovian switching. SIAM J. Numer. Anal. 2017, 55, 953–979.

- Kumar, C.; Kumar, T.; Wang, W. On explicit tamed Milstein-type scheme for stochastic differential equation with Markovian switching. J. Comput. Appl. Math. 2020, 377, 112917.

- Zhao, J.; Yi, Y.; Xu, Y. Strong convergence of explicit schemes for highly nonlinear stochastic differential equations with Markovian switching. Appl. Math. Comput. 2021, 393, 125959.

- Buckwar, E.; Riedler, M.G. Runge-Kutta methods for jump-diffusion differential equations. J. Comput. Appl. Math. 2011, 236, 1155–1182.

- Liu, X.; Li, C. Weak approximations and extrapolations of stochastic differential equations with jumps. SIAM J. Numer. Anal. 2000, 37, 1747–1767.

- Li, Y.; Wang, Y.; Feng, T.; Xin, Y. A New Simplified Weak Second-order Scheme for Solving Stochastic Differential Equations With Jumps. Mathematics 2021, 9, 224.

- Platen, E.; Bruti-Liberati, N. Numerical Solution of Stochastic Differential Equations with Jumps in Finance; Springer: Berlin/Heidelberg, Germany, 2010; Volume 64.

- Di Nunno, G.; Øksendal, B.; Proske, F. Malliavin Calculus for Lévy Processes with Applications to Finance; Springer: Berlin/Heidelberg, Germany, 2009.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).