Abstract

In this note, given a matrix $A\in\mathbb{C}^{n\times n}$ (or a general matrix polynomial $P(\lambda)$, $\lambda\in\mathbb{C}$) and an arbitrary scalar $\lambda_0\in\mathbb{C}$, we show how to define a sequence $\{\mu_k\}_{k\in\mathbb{N}}$ which converges to some element of its spectrum. The scalar $\lambda_0$ serves as initial term ($\mu_0=\lambda_0$), while additional terms are constructed through a recursive procedure, exploiting the fact that each term $\mu_k$ of this sequence is in fact a point lying on the boundary curve of some pseudospectral set of $A$ (or $P(\lambda)$). Then, the next term in the sequence is detected in the direction which is normal to this curve at the point $\mu_k$. Repeating the construction for additional initial points, it is possible to approximate peripheral eigenvalues, localize the spectrum and even obtain spectral enclosures. Hence, as a by-product of our method, a computationally cheap procedure for approximate pseudospectra computations emerges. An advantage of the proposed approach is that it does not make any assumptions on the location of the spectrum. The fact that all computations are performed on some dynamically chosen locations on the complex plane which converge to the eigenvalues, rather than on a large number of predefined points on a rigid grid, can be used to accelerate conventional grid algorithms. Parallel implementation of the method or use in conjunction with randomization techniques can lead to further computational savings when applied to large-scale matrices.

1. Introduction

The theory of pseudospectra originates in numerical analysis and can be traced back to Landau [1], Varah [2], Wilkinson [3], Demmel [4], and Trefethen [5], motivated by the need to obtain insights into systems evolving in ways that the eigenvalues alone could not explain. This is especially true in problems where the underlying matrices or linear operators are non-normal or exhibit in some sense large deviations from normality. A better understanding of such systems can be gained through the concept of pseudospectrum, which, for a matrix $A\in\mathbb{C}^{n\times n}$ and a positive parameter $\epsilon>0$, was introduced as the subset of the complex plane that is bounded by the $\epsilon^{-1}$–level set of the norm of the resolvent $(\mu I-A)^{-1}$. A second definition stated in terms of perturbations characterizes the elements of this set as eigenvalues of some perturbation $A+E$ with $\|E\|\le\epsilon$. In this sense, the notion of pseudospectrum provides information that goes beyond eigenvalues, while retaining the advantage of being a natural extension of the spectral set. In fact, for different values of magnitude $\epsilon$, pseudospectrum provides a global perspective on the effects of perturbations; this is in stark contrast to the concept of condition number, where only the worst-case scenario is considered.

On one hand, pseudospectrum may be used as a visualization tool to reveal information regarding the matrix itself and the sensitivity of its eigenvalues. Applications within numerical analysis include convergence of nonsymmetric matrix iterations [6], backward error analysis of eigenvalue algorithms [7], and stability of spectral methods [8]. On the other hand, it is a versatile tool that has been used to obtain quantitative bounds on the transient behavior of differential equations in finite time, which may deviate from the long-term asymptotic behavior [9]. Important results involving pseudospectra have also been obtained in the context of spectral theory and spectral properties of banded Toeplitz matrices [10,11]. Although emphasis has been placed on the standard eigenproblem, attention has also been drawn to matrix pencils [12] and more general matrix polynomials [13,14] arising in vibrating systems, control theory, etc. For a comprehensive overview of this research field and its applications, the interested reader may refer to [15].

In this note, we propose an application of pseudospectral sets as a means to obtain eigenvalue estimates in the vicinity of some complex scalar. In particular, given a matrix (or a general matrix polynomial) and a scalar $\lambda_0\in\mathbb{C}$, we construct a sequence $\{\mu_k\}_{k\in\mathbb{N}}$ that converges to some element of its spectrum. The scalar $\lambda_0$ serves as initial term ($\mu_0=\lambda_0$), while additional terms are constructed through an iterative procedure, exploiting the fact that each term $\mu_k$ of this sequence is in fact a point lying on the boundary curve of some pseudospectral set. Then, the next term in the sequence is detected in the direction perpendicular to the tangent line at the point $\mu_k$. Repeating the construction for a tuple of initial points encircling the spectrum, several peripheral eigenvalues are approximated. Since the pseudospectrum may be disconnected, this procedure allows the identification of individual connected components and, as a by-product, a convenient and numerically efficient procedure for approximate pseudospectrum computation emerges. Moreover, this approach is clearly amenable to parallelization or randomization and can lead to significant computational savings when applied to problems involving large–scale matrices.

Our paper is organized as follows. In Section 2, we provide the necessary theoretical background on the method and provide examples for the constant matrix case. As confirmed by numerical experiments, the method can provide a sufficiently accurate pseudospectrum computation at a much-reduced computational cost, especially in cases where the spectrum is convexly independent (i.e., each eigenvalue does not lie in the convex hull of the others) or exhibits large eigenvalue gaps. A second application of the method on Perron-root approximation for non–negative matrices is presented. Then, Section 3 shows how the procedure may be modified to estimate the spectrum of more general matrix polynomials. Numerical experiments showcasing the application of the method on damped mass–spring and gyroscopic systems conclude the paper.

2. Eigenvalues via Pseudospectra

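Let $A\in\mathbb{C}^{n\times n}$ be a matrix with spectrum $\sigma(A)=\{\mu\in\mathbb{C}:\det(\mu I-A)=0\}$, where $\det(\cdot)$ denotes the determinant of a matrix. With respect to the $\|\cdot\|_2$–norm, the pseudospectrum of A is defined by

$$\sigma_\epsilon(A)=\{\mu\in\sigma(A+E):\ E\in\mathbb{C}^{n\times n},\ \|E\|_2\le\epsilon\}=\{\mu\in\mathbb{C}:\ s_{\min}(\mu I-A)\le\epsilon\},$$

where $s_{\min}(\cdot)$ denotes the smallest singular value of a matrix and $\epsilon>0$ is the maximum norm of admissible perturbations.

For every choice of increasing positive parameters $0<\epsilon_1<\epsilon_2<\epsilon_3<\cdots$, the corresponding closed, strictly nested sequence of pseudospectra

$$\sigma_{\epsilon_1}(A)\subset\sigma_{\epsilon_2}(A)\subset\sigma_{\epsilon_3}(A)\subset\cdots$$

is obtained. In fact, the respective boundaries satisfy the inclusions

$$\partial\sigma_{\epsilon_j}(A)\subseteq\{\mu\in\mathbb{C}:\ s_{\min}(\mu I-A)=\epsilon_j\},\quad j=1,2,\dots$$

It is also clear that, for any $\mu\in\sigma(A)$, $s_{\min}(\mu I-A)=0$.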
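The singular-value characterization of the pseudospectrum translates directly into a membership test. The following is a minimal Python sketch, assuming NumPy; the function names `smin` and `in_pseudospectrum` and the 2×2 test matrix are hypothetical choices made for illustration and do not come from the paper.

```python
import numpy as np

def smin(z, A):
    """Smallest singular value of z*I - A."""
    n = A.shape[0]
    # Singular values are returned in descending order, so the last one is the smallest.
    return np.linalg.svd(z * np.eye(n) - A, compute_uv=False)[-1]

def in_pseudospectrum(z, A, eps):
    """True if z belongs to the eps-pseudospectrum of A (2-norm)."""
    return smin(z, A) <= eps

# Hypothetical non-normal example with eigenvalues 1 and 2.
A = np.array([[1.0, 10.0],
              [0.0, 2.0]])
z = 1.5 + 0.5j

print(smin(z, A))                     # far smaller than the distance from z to the spectrum
print(in_pseudospectrum(z, A, 0.1))
```

For this non-normal matrix the smallest singular value at `z` is much smaller than the distance from `z` to the nearest eigenvalue, which is the kind of behavior that eigenvalues alone do not reveal.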

Our objective now is to exploit the properties of these sets to detect an eigenvalue of A in the vicinity of a given scalar $\mu_0\in\mathbb{C}$. This given point of interest may be considered to lie on the boundary of some pseudospectral set, i.e., there exists some non–negative parameter $\epsilon_0\ge 0$, such that

$$\mu_0\in\{\mu\in\mathbb{C}:\ s_{\min}(\mu I-A)=\epsilon_0\}\supseteq\partial\sigma_{\epsilon_0}(A).\tag{1}$$

Indeed, points satisfying the equality $s_{\min}(\mu I-A)=\epsilon$ for some $\epsilon>0$ and lying in the interior of $\sigma_\epsilon(A)$ are finite in number. Thus, in the generic case, we may think of the inclusion (1) as an equality.

We consider the real–valued function $g_A:\mathbb{C}\to\mathbb{R}_+$ with $g_A(z)=s_{\min}(zI-A)$. In the process of formulating a curve-tracing algorithm for pseudospectrum computation [16], Brühl analyzed $g_A$ and, identifying $\mathbb{C}\equiv\mathbb{R}^2$, noted that its differentiability is explained by the following Theorem in [17]:

Theorem 1.

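Let the matrix valued function $P(\chi):\mathbb{R}^d\to\mathbb{C}^{n\times n}$ be real analytic in a neighborhood of $\chi_0=(x_1^0,\dots,x_d^0)$ and let $\sigma_0$ be a simple nonzero singular value of $P(\chi_0)$ with $u_0$, $v_0$ its associated left and right singular vectors, respectively.

Then, there exists a neighborhood $\mathcal{N}$ of $\chi_0$ on which a simple nonzero singular value $\sigma(\chi)$ of $P(\chi)$ is defined with corresponding left and right singular vectors $u(\chi)$ and $v(\chi)$, respectively, such that $\sigma(\chi_0)=\sigma_0$, $u(\chi_0)=u_0$, $v(\chi_0)=v_0$ and the functions σ, u, v are real analytic on $\mathcal{N}$. The partial derivatives of $\sigma(\chi)$ are given by

$$\frac{\partial\sigma(\chi_0)}{\partial x_j}=\mathrm{Re}\left(u_0^*\,\frac{\partial P(\chi_0)}{\partial x_j}\,v_0\right),\quad j=1,\dots,d.$$

Hence, recalling (1) and assuming $\epsilon_0=s_{\min}(\mu_0I-A)$ is a simple singular value of the matrix $\mu_0I-A$, then

$$\nabla g_A(\mu_0)=\big(\mathrm{Re}(v_{\min}^*u_{\min}),\ \mathrm{Im}(v_{\min}^*u_{\min})\big),$$

where $u_{\min}$ and $v_{\min}$ denote the left and right singular vectors of $\mu_0I-A$ associated to $\epsilon_0$, respectively [16] (Corollary 2.2).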
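The gradient of the smallest singular value can be checked numerically. The sketch below, assuming NumPy, encodes the gradient of $z\mapsto s_{\min}(zI-A)$ as the complex number $v^*u$ (real part: derivative in $x$; imaginary part: derivative in $y$), which is what Theorem 1 gives for the matrix function $(x,y)\mapsto(x+\mathrm{i}y)I-A$, and compares it with central finite differences. The helper names, the random test matrix and the step `h` are hypothetical.

```python
import numpy as np

def smin_triplet(z, A):
    """Return (s_min, u, v): smallest singular value of z*I - A and its singular vectors."""
    n = A.shape[0]
    U, s, Vh = np.linalg.svd(z * np.eye(n) - A)
    # Columns of U are left singular vectors; rows of Vh are conjugated right singular vectors.
    return s[-1], U[:, -1], Vh[-1, :].conj()

def grad_smin(z, A):
    """Gradient of z -> s_min(z*I - A), stored as d/dx + 1j * d/dy."""
    _, u, v = smin_triplet(z, A)
    return np.vdot(v, u)  # v^* u (np.vdot conjugates its first argument)

# Hypothetical test data: a random real 5x5 matrix and a point in the complex plane.
rng = np.random.default_rng(0)
A = rng.standard_normal((5, 5))
z = 3.0 + 2.0j

# Central finite differences in the x and y directions, for comparison.
h = 1e-6
fd = ((smin_triplet(z + h, A)[0] - smin_triplet(z - h, A)[0])
      + 1j * (smin_triplet(z + 1j * h, A)[0] - smin_triplet(z - 1j * h, A)[0])) / (2 * h)

print(abs(fd - grad_smin(z, A)))  # small when s_min is a simple singular value
```

The joint phase ambiguity of the singular vector pair cancels in the product $v^*u$, so the result does not depend on how the SVD routine normalizes the vectors.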

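On the other hand, if $\lambda$ is an eigenvalue of A near $\mu_0$, it holds $|\mu_0-\lambda|\ge\epsilon_0$. The latter observation follows from the fact that

$$s_{\min}(\mu_0I-A)\le\mathrm{dist}(\mu_0,\sigma(A)),$$

where $\mathrm{dist}(\mu_0,\sigma(A))=\min_{\lambda\in\sigma(A)}|\mu_0-\lambda|$ and equality holds for normal matrices. So, the scalar

$$\mu_1=\mu_0-\epsilon_0\,\frac{v_{\min}^*u_{\min}}{|v_{\min}^*u_{\min}|}$$

can be considered to be an estimate of eigenvalue $\lambda$. In particular, $|\mu_1-\mu_0|=\epsilon_0$ and $\mu_1$ lies in the interior of $\sigma_{\epsilon_0}(A)$. Moreover, the sequence

$$\mu_{k+1}=\mu_k-s_{\min}(\mu_kI-A)\,\frac{v_k^*u_k}{|v_k^*u_k|},\quad k=0,1,2,\dots\tag{2}$$

converges to $\lambda$.

The above process requires the computation of the triplet

$$\big(s_{\min}(\mu_kI-A),\ u_k,\ v_k\big)$$

at every point $\mu_k$; see [18].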
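The recursive construction can be sketched in a few lines of Python, assuming NumPy. The update used here is an assumption about the form of the sequence: from the current point, move a distance equal to the current smallest singular value along the normalized negative gradient direction $v^*u/|v^*u|$. The function name, tolerance, iteration budget and test matrix are hypothetical.

```python
import numpy as np

def eigenvalue_sequence(A, z0, tol=1e-10, max_iter=200):
    """Points z_0, z_1, ... obtained by stepping s_min(z_k*I - A) along -v^*u/|v^*u|."""
    n = A.shape[0]
    identity = np.eye(n)
    z = complex(z0)
    points = [z]
    for _ in range(max_iter):
        # One full SVD per point gives the triplet (s_min, u, v).
        U, s, Vh = np.linalg.svd(z * identity - A)
        eps = s[-1]
        if eps <= tol:
            break  # z is (numerically) an eigenvalue
        g = Vh[-1, :] @ U[:, -1]  # v^* u: the gradient of s_min as a complex number
        if g == 0:
            break  # no usable normal direction at this point
        z = z - eps * g / abs(g)  # assumed step: length eps along the inward normal
        points.append(z)
    return np.array(points)

# Hypothetical mildly non-normal example with eigenvalues 1 and 3.
A = np.array([[1.0, 0.5],
              [0.0, 3.0]])
pts = eigenvalue_sequence(A, 5.0 + 2.0j)
print(len(pts), pts[-1])
```

Because each step has length at most the distance to the spectrum, a step never jumps past the nearest eigenvalue; for a normal matrix with a unique nearest eigenvalue a single step lands on it.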

Remark. To avoid the computational burden of computing the (left and right) singular vectors, a cheaper alternative would be to consider at each iteration () the canonical octagon with vertices

instead and simply compute

In this case, instead of (2), we can set

with such that
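A derivative-free variant in the spirit of this Remark can be sketched as follows, assuming NumPy. Since the exact vertices are not recoverable here, the sketch assumes a regular octagon centered at the current point with circumradius equal to the current smallest singular value, and moves to the vertex where the smallest singular value is lowest. All names and the test matrix are hypothetical.

```python
import numpy as np

def smin(z, A):
    """Smallest singular value of z*I - A (no singular vectors needed)."""
    return np.linalg.svd(z * np.eye(A.shape[0]) - A, compute_uv=False)[-1]

def octagon_sequence(A, z0, tol=1e-10, max_iter=200):
    """Move to the best of 8 octagon vertices at distance s_min from the current point."""
    z = complex(z0)
    eps = smin(z, A)
    points = [z]
    directions = np.exp(1j * np.pi * np.arange(8) / 4)  # 8 equally spaced unit directions
    for _ in range(max_iter):
        if eps <= tol:
            break
        vertices = z + eps * directions  # assumed octagon: radius eps around z
        values = [smin(w, A) for w in vertices]
        j = int(np.argmin(values))
        if values[j] >= eps:
            break  # no vertex improves on the current point
        z, eps = vertices[j], values[j]
        points.append(z)
    return np.array(points)

# Hypothetical normal example: for a diagonal matrix s_min is the distance to the spectrum.
A = np.diag([1.0, 3.0, -2.0 + 1.0j])
pts = octagon_sequence(A, 6.0 + 4.0j)
print(len(pts), pts[-1])
```

The trade-off is eight singular value evaluations per step without singular vectors, against one full triplet per step in the gradient-based construction.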

2.1. Numerical Experiments

2.1.1. Pseudospectrum Computation

The approximating sequences in (2) may be utilized to implement a computationally cheap procedure to visualize matrix pseudospectra, at least in cases where the order of the matrix is small or when its spectrum exhibits large eigenvalue gaps. Several related techniques for pseudospectrum computation have appeared in the literature. These fall largely into two categories: grid [14] and path-following algorithms [16,19,20,21]. Grid algorithms begin by evaluating the function $s_{\min}(zI-A)$ on a predefined grid on the complex plane and lead to a graphical visualization of the boundary $\partial\sigma_\epsilon(A)$ by plotting the $\epsilon$-contours of $s_{\min}(zI-A)$. This approach faces two severe challenges; namely, the requirement of a priori information on the location of the spectrum to correctly identify a suitable region to discretize, as well as the typically large number of grid points the computations have to be performed upon. Path-following algorithms, on the other hand, require an initial step to detect a starting point on the curve $\partial\sigma_\epsilon(A)$ and then proceed to compute additional boundary points for each connected component of $\sigma_\epsilon(A)$. The main drawbacks of this latter approach lie in the difficulty in performing the initial step and the need to correctly identify every connected component of $\sigma_\epsilon(A)$ in order to repeat the procedure and properly trace its boundary. Moreover, cases where pseudospectrum computation is required for a whole tuple of parameters $\epsilon$ drastically compromise the efficiency of path-following algorithms.

Our approach is to use the approximating sequences (2) to decrease the number of singular value evaluations and therefore speed up the computation of pseudospectra. The basic steps are outlined as follows:

- Select a tuple of initial points encircling the spectrum; for instance, these can be chosen on the circle .

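- Construct eigenvalue approximating sequences $\{\mu_k^{(j)}\}_k$ ($j=1,\dots,s$), as in (2). If $\epsilon_k^{(j)}$ ($k=0,1,\dots$) are such that $\mu_k^{(j)}\in\partial\sigma_{\epsilon_k^{(j)}}(A)$, the length of each sequence is determined so that its final value of $\epsilon_k^{(j)}$ does not exceed $\epsilon_{\min}$ for all $j$, where $\epsilon_{\min}$ is some prefixed parameter value. In other words, $\epsilon_{\min}$ indicates the tolerance with which the eigenvalues approached by the constructed sequences should be approximated and corresponds to the minimum parameter for which pseudospectra will be computed.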

- Classify the sequences into distinct clusters, according to the proximity of their final terms. This step may be performed using a k-means clustering algorithm, using a suitable criterion to evaluate the optimal number of groups.

- Compute

- If necessary, repeat the procedure for t additional points between the centroids of the detected clusters, constructing additional sequences, so that

- Detect boundary points of for any choice of parameters along the polygonal chains formed by the total of constructed sequences of points by interpolation.

- Fit closed spline curves passing through the respective sets of boundary points in for the various choices of to obtain sketches of the corresponding pseudospectra .
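Two of the steps above lend themselves to a short sketch, assuming NumPy: placing initial points on a circle, and locating by linear interpolation the point of a polygonal chain at which the recorded parameter value equals a target $\epsilon$. The function names and the toy data are hypothetical, and linear interpolation is one simple choice among several.

```python
import numpy as np

def initial_points(center, radius, count):
    """Equally spaced points on the circle |z - center| = radius."""
    angles = 2.0 * np.pi * np.arange(count) / count
    return center + radius * np.exp(1j * angles)

def boundary_point(path, eps_values, eps_target):
    """Linearly interpolate along a polygonal chain to the point where eps == eps_target.

    path[k] is the k-th point of a sequence and eps_values[k] the smallest
    singular value recorded there. Returns None if the target is never bracketed.
    """
    for k in range(len(path) - 1):
        e0, e1 = eps_values[k], eps_values[k + 1]
        if e0 != e1 and min(e0, e1) <= eps_target <= max(e0, e1):
            t = (eps_target - e0) / (e1 - e0)
            return path[k] + t * (path[k + 1] - path[k])
    return None

# Toy chain (hypothetical data): three points with decreasing parameter values.
path = np.array([4.0 + 0.0j, 2.0 + 0.0j, 1.0 + 0.0j])
eps_values = np.array([3.0, 1.0, 0.0])
print(boundary_point(path, eps_values, 2.0))  # halfway along the first segment
```

Collecting one such point per trajectory and per value of $\epsilon$ yields the point sets through which the closed curves of the last step are fitted.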

The proposed method successfully localizes the spectrum, initiating the procedure with a restricted number of points. Then, singular value computations are kept to a minimum by considering points only on the constructed sequences. Pseudospectrum components corresponding to peripheral eigenvalues not in the convex hull of the other eigenvalues are thus extremely easy to identify. This approach is also well suited to cases where the matrix has convexly independent spectrum; i.e., when $\lambda\notin\mathrm{conv}(\sigma(A)\setminus\{\lambda\})$ for every $\lambda\in\sigma(A)$. Moreover, it is clearly amenable to parallelization, which could lead to significant computational savings in cases of large matrices.

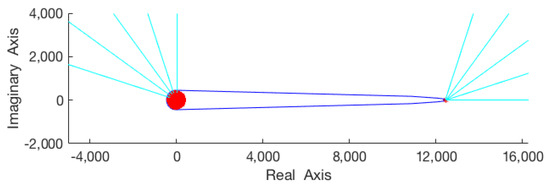

Application 1.

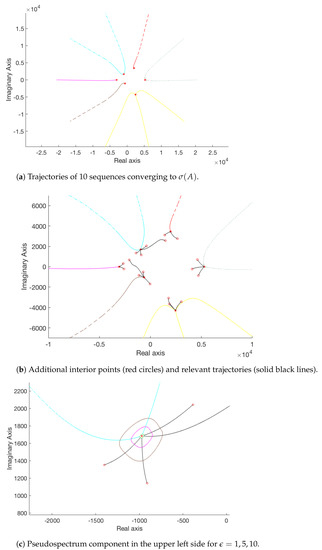

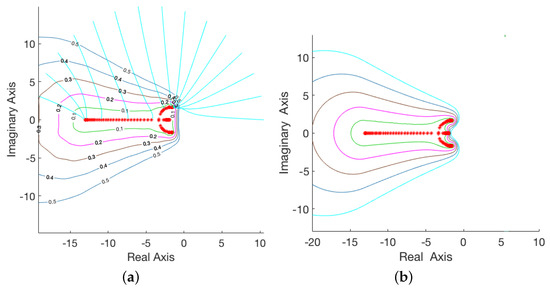

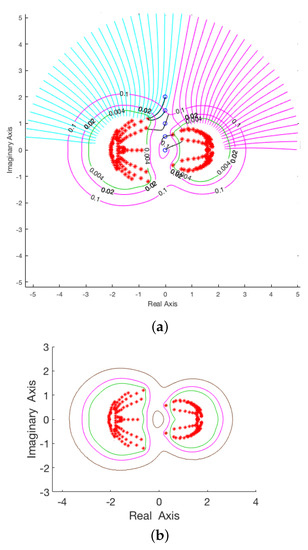

We consider a random matrix $A\in\mathbb{C}^{6\times 6}$, the sole constraint being that its eigenvalues are distant from each other; the real and imaginary parts of its entries follow the standardized normal distribution scaled by a constant factor. For the proposed procedure, we select ten initial points and exploit the fact that the corresponding sequences generated as in (2) converge to some element of $\sigma(A)$. The number of terms in each sequence is determined so that all final parameter values do not exceed the prefixed minimum parameter value. The sequences are organized into distinct clusters, grouping together those sequences which approximate the same element of $\sigma(A)$. This grouping is performed using a k-means clustering algorithm, where the optimal number of clusters is evaluated via the silhouette criterion and using a distance metric based on the sum of absolute differences between points. Since six different groups are identified, clearly all elements of $\sigma(A)$ have been sufficiently approximated by at least one of the sequences. For an illustration, refer to Figure 1a; different colors have been used to differentiate between polygonal chains corresponding to distinct clusters. The construction so far required 914 singular value computations. Having calculated all parameter values $\epsilon_k^{(j)}$ such that $\mu_k^{(j)}\in\partial\sigma_{\epsilon_k^{(j)}}(A)$ during the previous procedure, it is possible to interpolate between these known points along the trajectories formed by $\{\mu_k^{(j)}\}$ ($j=1,\dots,10$) to approximate boundary points of $\sigma_\epsilon(A)$ for selected values of $\epsilon$. Since all ten trajectories converge to eigenvalues from points encircling $\sigma(A)$, to obtain better pseudospectra approximations, it is necessary to repeat the procedure for additional suitably selected points. Hence, for each cluster we consider three additional points; see Figure 1b. In particular, denoting by $c_j$ ($j=1,\dots,6$) the centroids of the clusters, for each j we consider the three centroids which lie closest to $c_j$ and take the convex combinations

Then, additional sequences corresponding to these extra points are constructed so that the desired parameter values of ϵ for which pseudospectra should be computed (in this instance, the triple of ) may be interpolated within these trajectories, as for the ten initial ones. This imposes an extra cost of 1170 additional singular value computations (2084 in total). The resulting approximations of the pseudospectra components identified by the upper left corner trajectories for are depicted in greater detail in Figure 1c; the relevant eigenvalue is indicated by “*”.

Figure 1.

Pseudospectrum computation for random with spectral gaps, using 10 initial points.

An advantage of this procedure is that it does not require some a–priori knowledge of the initial region on the complex plane where the spectrum is located. In fact, the very nature of this specific example, whose spectrum covers a wide area , would render computations on a suitable grid impractical. Another way in which this method diverges from conventional grid algorithms is in that the computations are performed on a dynamically chosen set of points, iteratively selected as the corresponding trajectories converge to peripheral eigenvalues and identify the relevant pseudospectrum components, rather than on a large number of predefined points on a rigid grid.

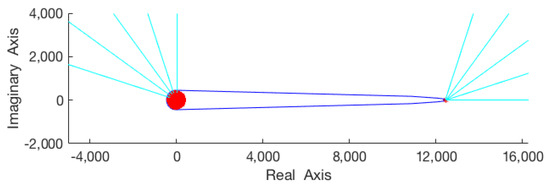

Application 2.

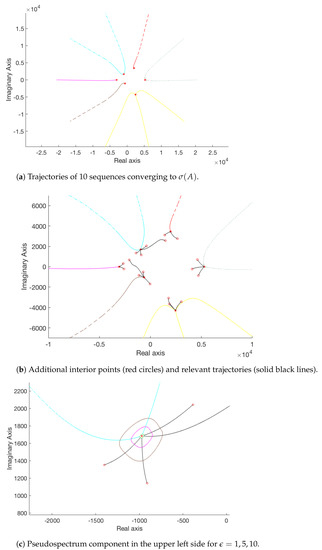

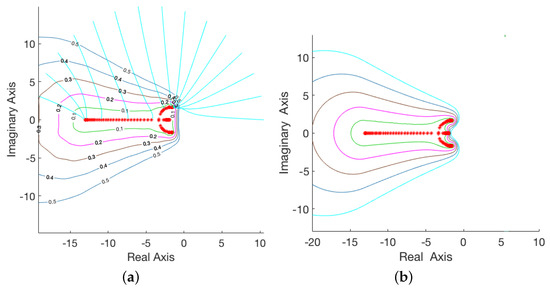

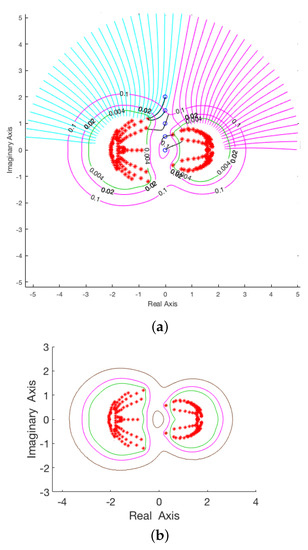

To demonstrate how the procedure works in cases of larger matrices, in this application we examine the matrix , where is a matrix from the Harwell-Boeing sparse matrix collection [22] related to a non–symmetric computational fluid dynamics problem. Here, the factor is used for scaling purposes and is related to the norm– order of the matrix under consideration. Initiating the procedure with 30 equidistributed points on the circle , the method required a total of 810 singular value computations for a minimum parameter value of ; the resulting pseudospectra visualizations for are depicted in Figure 2. For this example, we have opted not to introduce additional points.

Figure 2.

Pseudospectra computations for a non–symmetric sparse matrix of order 1024 from the Harwell-Boeing collection and .

Perron root computation. Applications of non–negative matrices, i.e., matrices with exclusively non–negative real entries, abound in such diverse fields as probability theory, dynamical systems, Internet search engines, tournament matrices etc. In this context, the dominant eigenvalue of non–negative matrices, also referred to as Perron root, is of central importance. Localization of the Perron root has been extensively studied in the literature; relevant bounds can be found in [23,24,25,26,27]. Its computation is typically carried out using the power method; the convergence rate of this approach depends on the relative magnitudes of the two dominant eigenvalues. Relevant methods have appeared in [28,29,30], among others. As a second application of the approximating sequences (2), the following experiment reports an elegant way of approximating Perron roots.

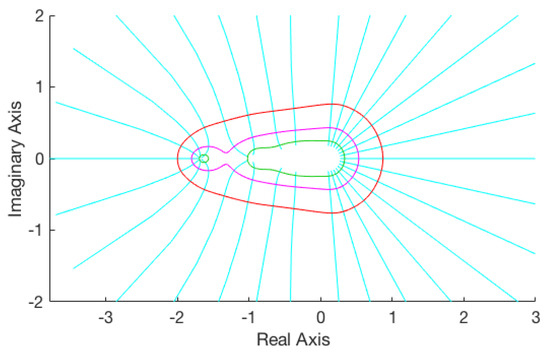

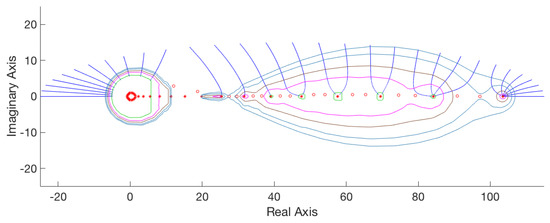

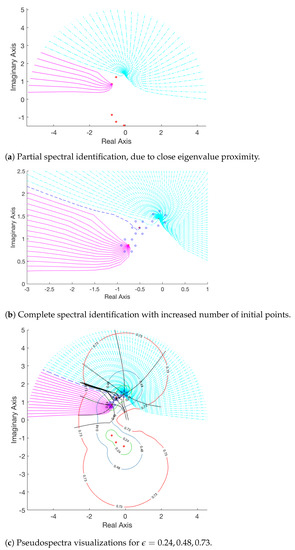

Application 3.

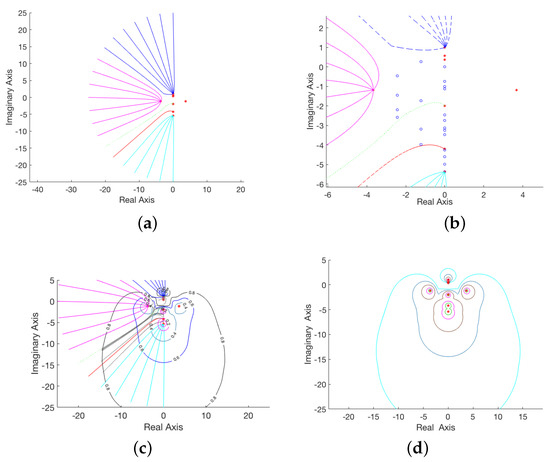

For this experiment, we considered a tuple of 50 non–negative matrices with uniformly distributed entries in . The symmetry of with respect to the real axis suggests that it suffices to restrict the computations exclusively to the closed upper half–plane. Hence, for each of the matrices , we initiated the construction of the sequences (2) from equidistributed initial terms (). As expected, the rightmost of these points formed sequences converging to the Perron root of , while each of the remaining ones approximated some other peripheral eigenvalue. In the generic case, the magnitude of the second highest eigenvalue of was much smaller than the Perron root. Figure 3 is illustrative of this separation; the blue curve traces the boundary of the numerical range of such a matrix, red points indicate its eigenvalues, while the cyan lines correspond to the trajectories of the constructed sequences. Denoting () those sequences approximating the Perron root (), then the relative error in each iteration , , decreases rapidly, even though the initial points () were chosen to be extremely remote from . Averages

of these relative approximation errors over the tuple of matrices for the first iterations are demonstrated in the first column of Table 1, verifying that a reliable estimate for the Perron root may in the generic case be obtained after the computation of as few as 3 terms in the corresponding trajectories.

Figure 3.

Indicative numerical range of non–negative matrix and 10 approximating trajectories.

Table 1.

Relative approximation errors for Perron root and other peripheral eigenvalues of non–negative matrices.

The remaining sequences converge to some other peripheral eigenvalues, reasonable approximations of which require a rather larger number of iterations, as reported in the second column of Table 1.

Application 3 suggests that any reasonable upper bound suffices to yield reliable estimations for the Perron root after computation of only 2–3 terms in the sequence (2).
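This observation can be tried out with a short Python sketch, assuming NumPy. The maximum row sum is used as the starting point, since it is a standard upper bound for the spectral radius of a non–negative matrix, and the update is the same assumed step as before (length $s_{\min}$ along the normalized negative gradient). The matrix size, random seed and function name are hypothetical and not those of the experiment.

```python
import numpy as np

def perron_estimates(A, steps=3):
    """A few terms of the sequence started from the maximum row sum of A."""
    n = A.shape[0]
    z = complex(np.max(A.sum(axis=1)))  # upper bound for the Perron root
    estimates = [z]
    for _ in range(steps):
        U, s, Vh = np.linalg.svd(z * np.eye(n) - A)
        g = Vh[-1, :] @ U[:, -1]  # v^* u, the gradient direction of s_min
        if s[-1] == 0 or g == 0:
            break
        z = z - s[-1] * g / abs(g)  # assumed step of length s_min
        estimates.append(z)
    return np.array(estimates)

# Hypothetical test matrix with entries uniformly distributed in [0, 1).
rng = np.random.default_rng(1)
A = rng.uniform(0.0, 1.0, size=(20, 20))
rho = max(abs(np.linalg.eigvals(A)))  # reference value of the Perron root

est = perron_estimates(A)
print(abs(est - rho) / rho)  # relative error of the starting point and of each term
```

The reference value `rho` is computed with a dense eigenvalue solver only to measure the error; the sequence itself needs one SVD per term.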

The previous experiment may seem excessively optimistic. Indeed, there can be instances when the situation is much more demanding.

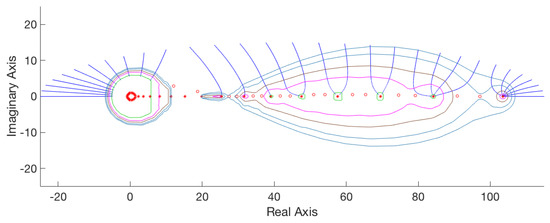

Application 4.

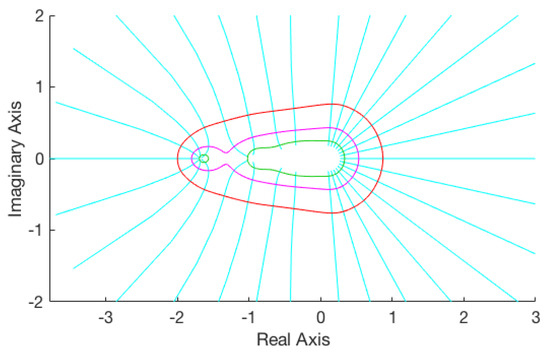

The Frank matrix is well–known to have ill-conditioned eigenvalues. For this application, we test the behavior of the proposed method on the Frank matrix of order 32, the normalized matrix of eigenvectors of which has condition number . Figure 4 depicts the resulting pseudospectra visualizations for , initiating the procedure from 30 points located on the upper semiellipse centered at with semi–major and semi–minor axes lengths equal to 70 and 15, respectively. The depicted trajectories were constructed, so that the final terms in each polygonal chain lie within . Then, according to the distances of the final terms of consecutive sequences, at most two additional points are introduced on the line segment connecting these respective final terms. The necessary iterations for the construction of the relevant sequences are reported in Table 2 for different numbers of initial points.

Figure 4.

Pseudospectra computation for the Frank matrix of order 32 and . Additional points selected between the endpoints of the initial sequences are denoted by red circles, while eigenvalues are denoted by red stars.

Table 2.

Number of iterations for different numbers of initial points ().

The approximating quality of the sequences is much compromised when compared to the generic case, requiring many more iterations, especially for the eigenvalues with smallest real parts; these are also the most ill-conditioned ones. In fact, the seven rightmost sequences converging to the Perron root (refer to Figure 4) display the fastest convergence, the second group of thirteen sequences leading to the intermediate eigenvalues being somewhat more compromised, while the leftmost sequences naturally exhibit even more diminished approximation quality. Mean relative approximation errors for these three groups are reported in Table 3.

Table 3.

Relative approximation errors for Perron root and other eigenvalues of the Frank matrix of order 32.

For the numerical experiments in this section, we have restricted ourselves to initial points encircling the spectrum. Another option would be to use our method in tandem with randomization techniques for the initial points selection.

3. Matrix Polynomials

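The derivation of eigenvalue approximating sequences may be readily extended to account for the general matrix polynomial case

$$P(\lambda)=A_m\lambda^m+A_{m-1}\lambda^{m-1}+\cdots+A_1\lambda+A_0,$$

where $\lambda\in\mathbb{C}$ and $A_j\in\mathbb{C}^{n\times n}$ ($j=0,1,\dots,m$), with $A_m\neq 0$. Recall that the spectrum of $P(\lambda)$ is the set of all its eigenvalues; i.e., $\sigma(P)=\{\lambda\in\mathbb{C}:\det P(\lambda)=0\}$. For a scalar $\lambda_0\in\sigma(P)$, the nonzero solutions $v_0\in\mathbb{C}^n$ to the system $P(\lambda_0)v_0=0$ are the eigenvectors of $P(\lambda)$ corresponding to $\lambda_0$.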

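The $\epsilon$–pseudospectrum of $P(\lambda)$ was introduced in [14] for a given parameter $\epsilon>0$ and a set of nonnegative weights $w=\{w_0,w_1,\dots,w_m\}$ as the set

$$\sigma_{\epsilon,w}(P)=\{\lambda\in\mathbb{C}:\ \det P_\Delta(\lambda)=0,\ \|\Delta_j\|\le\epsilon\,w_j,\ j=0,1,\dots,m\}\tag{3}$$

of eigenvalues of all admissible perturbations $P_\Delta(\lambda)$ of $P(\lambda)$ of the form

$$P_\Delta(\lambda)=(A_m+\Delta_m)\lambda^m+\cdots+(A_1+\Delta_1)\lambda+(A_0+\Delta_0),$$

where the norms of the matrices $\Delta_j\in\mathbb{C}^{n\times n}$ ($j=0,1,\dots,m$) satisfy the specified $(\epsilon,w)$-related constraints. In contrast to the constant matrix case, a whole tuple of perturbing matrices $\Delta_j$ is involved, which explains the presence of the additional parameter vector $w$ in the definition of $\sigma_{\epsilon,w}(P)$. However, considering for some $A\in\mathbb{C}^{n\times n}$ the pencil $P(\lambda)=I\lambda-A$, note that (3) reduces to the usual $\epsilon$–pseudospectrum of the matrix $A$ for the choice of $w=\{w_0,w_1\}=\{1,0\}$, since

$$\sigma_{\epsilon,w}(P)=\{\lambda\in\mathbb{C}:\ \det(I\lambda-(A-\Delta_0))=0,\ \|\Delta_0\|\le\epsilon\}=\sigma_\epsilon(A).$$

In the general case, the nonnegative weights allow freedom in how perturbations are measured; for example, in an absolute sense when $w_0=w_1=\cdots=w_m=1$, or in a relative sense when $w_j=\|A_j\|$ ($j=0,1,\dots,m$). On the other hand, the choice $\epsilon=0$ leads to $\sigma_{0,w}(P)=\sigma(P)$.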

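From a computational viewpoint, a more convenient characterization [14] (Lemma 2.1) for this set is given by

$$\sigma_{\epsilon,w}(P)=\{\lambda\in\mathbb{C}:\ s_{\min}(P(\lambda))\le\epsilon\,q_w(|\lambda|)\},$$

where $s_{\min}(P(\lambda))$ is the minimum singular value of the matrix $P(\lambda)$ and the scalar polynomial

$$q_w(\lambda)=w_m\lambda^m+w_{m-1}\lambda^{m-1}+\cdots+w_1\lambda+w_0$$

is defined in terms of the weights used in the definition (3) of $\sigma_{\epsilon,w}(P)$. In fact, since the eigenvalues of $P(\lambda)$ are continuous with respect to the entries of its coefficient matrices, the boundary of $\sigma_{\epsilon,w}(P)$ is expressed as

$$\partial\sigma_{\epsilon,w}(P)\subseteq\{\lambda\in\mathbb{C}:\ s_{\min}(P(\lambda))=\epsilon\,q_w(|\lambda|)\};\tag{4}$$

the equality $s_{\min}(P(\lambda))=\epsilon\,q_w(|\lambda|)$ is satisfied for some $\epsilon>0$ only for a finite number of points $\lambda$ in the interior of $\sigma_{\epsilon,w}(P)$.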
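The singular-value characterization for matrix polynomials can be illustrated with a short Python sketch, assuming NumPy. Membership is tested through the smallest singular value of $P(\lambda)$ against $\epsilon$ times the weight polynomial evaluated at $|\lambda|$, as in [14]. The coefficient ordering `[A_0, ..., A_m]`, the function names and the test pencil are hypothetical conventions chosen for this example.

```python
import numpy as np

def poly_eval(coeffs, lam):
    """Evaluate P(lam) = A_m lam^m + ... + A_1 lam + A_0 by Horner's rule.

    coeffs is the list [A_0, A_1, ..., A_m] of square coefficient matrices.
    """
    P = np.zeros(coeffs[0].shape, dtype=complex)
    for A in reversed(coeffs):
        P = P * lam + A
    return P

def q_w(weights, r):
    """Scalar weight polynomial w_m r^m + ... + w_1 r + w_0 for weights [w_0, ..., w_m]."""
    return sum(w * r**j for j, w in enumerate(weights))

def smin_poly(coeffs, lam):
    """Smallest singular value of P(lam)."""
    return np.linalg.svd(poly_eval(coeffs, lam), compute_uv=False)[-1]

def in_poly_pseudospectrum(coeffs, weights, lam, eps):
    """True if s_min(P(lam)) <= eps * q_w(|lam|)."""
    return smin_poly(coeffs, lam) <= eps * q_w(weights, abs(lam))

# Pencil I*lam - A with weights {1, 0}: the test reduces to the constant matrix case.
A = np.array([[1.0, 10.0],
              [0.0, 2.0]])
pencil = [-A, np.eye(2)]
lam = 1.5 + 0.5j
print(in_poly_pseudospectrum(pencil, [1.0, 0.0], lam, 0.1))
```

With weights `[1.0, 0.0]` the weight polynomial is identically one, so the pencil case agrees with the test $s_{\min}(\lambda I-A)\le\epsilon$ for a constant matrix.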

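Suppose now that we want to approximate an eigenvalue of a matrix polynomial $P(\lambda)$ which lies in the neighborhood of some point of interest $\mu_0$ on the complex plane. Expression (4) suggests that the derivation of a convergent sequence in Section 2 may be readily adapted for our purposes. Indeed, for every scalar $\mu_0\in\mathbb{C}\setminus\sigma(P)$, there exists some $\epsilon_0>0$, such that $\mu_0\in\partial\sigma_{\epsilon_0,w}(P)$ and then (4) implies $\epsilon_0=s_{\min}(P(\mu_0))/q_w(|\mu_0|)$. Moreover, assuming $s_{\min}(P(\mu_0))$ is a simple singular value of the matrix $P(\mu_0)$, we may invoke Theorem 1 to conclude that the function $g_P:\mathbb{C}\to\mathbb{R}_+$ with $g_P(z)=s_{\min}(P(z))$ is real analytic in a neighborhood of $\mu_0=x_0+\mathrm{i}y_0$. In fact,

$$\nabla g_P(x_0+\mathrm{i}y_0)=\left(\mathrm{Re}\Big(u_{\min}^*\,\frac{\partial P(x_0+\mathrm{i}y_0)}{\partial x}\,v_{\min}\Big),\ \mathrm{Re}\Big(u_{\min}^*\,\frac{\partial P(x_0+\mathrm{i}y_0)}{\partial y}\,v_{\min}\Big)\right),$$

where $u_{\min}$ and $v_{\min}$ denote the left and right singular vectors of $P(\mu_0)$ associated to $s_{\min}(P(\mu_0))$, respectively [13] (Corollary 4.2).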
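The gradient for the polynomial case can be verified numerically in the same way as for constant matrices. In the sketch below, assuming NumPy, the partial derivatives of $P(x+\mathrm{i}y)$ with respect to $x$ and $y$ are $P'(z)$ and $\mathrm{i}P'(z)$, so by Theorem 1 the gradient of $s_{\min}(P(z))$ is the complex conjugate of $u^*P'(z)v$ when stored as a complex number. The function names and the random quadratic test polynomial are hypothetical.

```python
import numpy as np

def poly_and_derivative(coeffs, z):
    """Return P(z) and P'(z) for coeffs = [A_0, A_1, ..., A_m] (Horner's rule)."""
    P = np.zeros(coeffs[0].shape, dtype=complex)
    dP = np.zeros(coeffs[0].shape, dtype=complex)
    for A in reversed(coeffs):
        dP = dP * z + P
        P = P * z + A
    return P, dP

def smin_poly(coeffs, z):
    """Smallest singular value of P(z)."""
    return np.linalg.svd(poly_and_derivative(coeffs, z)[0], compute_uv=False)[-1]

def grad_smin_poly(coeffs, z):
    """Gradient of z -> s_min(P(z)), stored as d/dx + 1j * d/dy."""
    P, dP = poly_and_derivative(coeffs, z)
    U, s, Vh = np.linalg.svd(P)
    u, v = U[:, -1], Vh[-1, :].conj()
    c = np.vdot(u, dP @ v)  # u^* P'(z) v
    # d/dx = Re(c) and d/dy = Re(1j * c) = -Im(c), i.e. the conjugate of c.
    return np.conj(c)

# Hypothetical random quadratic matrix polynomial of size 4.
rng = np.random.default_rng(2)
coeffs = [rng.standard_normal((4, 4)) for _ in range(3)]
z = 0.7 + 0.3j

# Central finite differences in the x and y directions, for comparison.
h = 1e-6
fd = ((smin_poly(coeffs, z + h) - smin_poly(coeffs, z - h))
      + 1j * (smin_poly(coeffs, z + 1j * h) - smin_poly(coeffs, z - 1j * h))) / (2 * h)

print(abs(fd - grad_smin_poly(coeffs, z)))  # small when s_min is simple
```

For the pencil $P(z)=zI-A$ the derivative is the identity and the expression reduces to $v^*u$, in agreement with the constant matrix case.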

As in the constant matrix case, moving from the initial point towards the interior of in the normal direction to the curve , the scalar

with can be considered to be an estimate of some eigenvalue . In this way, a convergent sequence to the eigenvalue is recursively defined with initial point and general term

Numerical Experiments

The steps outlined in Section 2.1.1 are readily modified using the sequences in (5) to yield spectral enclosures for matrix polynomials.

Application 5

([31], Example 3). We consider the matrix polynomial , where

describing a damped mass-spring system [14,32] and set non-negative weights , measuring perturbations of the coefficient matrices in an absolute sense. We initiate the procedure with 15 equidistributed initial points on the semicircle

and proceed to determine eigenvalue approximating sequences () according to (5), so that their final terms all lie in the interior of . This computation requires 722 iterations. As in the constant matrix case, interpolation between the values of such that along the trajectories formed by () results in approximations of for , as seen in Figure 5a. Note this yields a sufficiently accurate sketch of and is very competitive when compared to other methods. For instance, Figure 5b is obtained via the procedure in [31] applied to a grid on the relevant region . This latter approach is far more computationally intensive, requiring 71,575 iterations to visualize for the same tuple of parameters.

Figure 5.

Pseudospectrum computation for a damped mass-spring system. (a) Approximate pseudospectra visualization, interpolating along 15 trajectories of converging sequences. (b) Pseudospectra visualization, using the modified grid algorithm in [31].

In case a more detailed spectral localization is desired, our method may be adapted, as in Application 1, to identify individual pseudospectrum components. Our next experiment also serves to illustrate the fact that the number of initial trajectories that are attracted by the individual eigenvalues to form the related clusters is intimately connected to eigenvalue sensitivity.

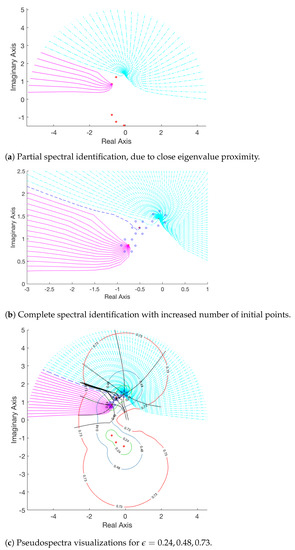

Application 6

([13], Example 5.1). We consider the mass-spring system from ([13], Ex. 5.2) defining the selfadjoint matrix polynomial

and set . As in Application 5, computations are restricted exclusively to the closed upper half-plane. However, the close proximity of the eigenvalues (indicated by “*” in Figure 6), as well as the fact that one eigenvalue pair is less sensitive than the other two, necessitates the use of many initial points. Indeed, as demonstrated in Figure 6a, initiating the procedure with 40 equidistributed initial points on the selected semicircle results in the least sensitive eigenvalue being under-represented in the resulting clusters. Correctly approximating all three elements of the spectrum in the upper half-plane requires as many as 80 points on the selected semicircle. The length of each sequence is determined so that all final parameter values do not exceed the prefixed minimum parameter value; this construction involved 1162 singular value computations. Using the squared Euclidean distance as the metric for computing the cluster evaluation criterion, three distinct groups are correctly identified, each converging to a different eigenvalue in the closed upper half-plane, as in Figure 6b. Note that the least sensitive eigenvalue ends up attracting only one of these sequences, the corresponding group being a singleton. To correctly sketch the boundaries of the pseudospectra for the triple ϵ = 0.24, 0.48, 0.73, we introduce six additional points for each cluster. Indeed, denoting by $c_1$, $c_2$, $c_3$ the centroids of the clusters, for each cluster $j=1,2,3$ we consider the vertices of a canonical hexagon centered at $c_j$ with a prescribed maximal diameter. These vertices are indicated by circles in Figure 6b and are used as starting points to construct the additional trajectories indicated by the black lines in Figure 6c. Note that it should be possible to interpolate all three selected parameters ϵ = 0.24, 0.48, 0.73 along these additional lines as well, which explains why most of these trajectories have also been extended in the opposite direction, modifying the definition of the sequences in (5) in each instance accordingly. The construction of the additional sequences requires 202 singular value computations (leading to a total of 1364 iterations), while the resulting approximations of pseudospectra boundaries for these values of ϵ are depicted in Figure 6c.

Figure 6.

Pseudospectra computations for a vibrating system.

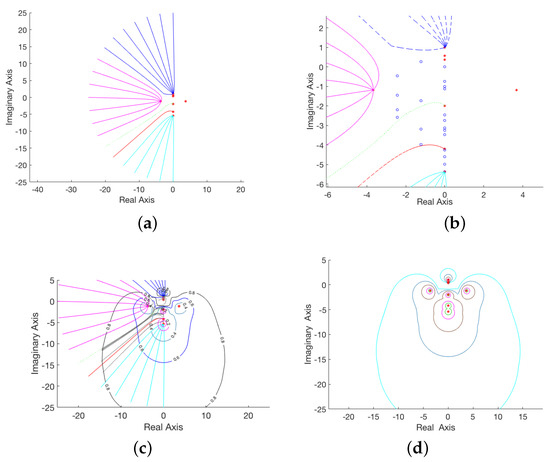

Application 7

([13], Example 5.3). This experiment tests the behavior of the method on a damped gyroscopic system described by the matrix polynomial

with

and B the nilpotent matrix having ones on its subdiagonal and zeros elsewhere. Note are positive and negative definite respectively, G is skew-symmetric, and the tridiagonal D is a damping matrix.

Starting with 50 points on

and then 5 additional points on the perpendicular bisector of the line segment defined by the two centroids of the resulting clusters (indicated by the blue circles), the resulting pseudospectrum approximation required 1212 iterations in total with and can be seen in the left part of Figure 7a. The algorithm in [31] applied to a grid on the region required 29,110 iterations for pseudospectra visualization for the same triple to obtain comparable results in Figure 7b.

Figure 7.

Comparison of pseudospectra computation for a damped gyroscopic system and ϵ = 0.004, 0.02, 0.1. (a) Computation using 50 initial points. (b) Computation using algorithm in [31].

We conclude this section, examining the behavior of the method on a non-symmetric example.

Application 8

([31], Example 2). We consider the gyroscopic system

and . Starting with 21 points on

and , five clusters are detected (Figure 8a) after 1140 iterations. Then, two additional points are introduced on each of the line segments defined by the centroids of the detected clusters (indicated by the blue circles in Figure 8b), causing the iterations to rise to the total number of 2662 in order to determine the 20 corresponding trajectories (indicated by grey lines in Figure 8c). The corresponding visualizations in Figure 8d, obtained via [31] applied to a grid on the region , required 88,462 iterations.

Figure 8.

Comparison of pseudospectra computation for a gyroscopic system and . (a) Cluster detection using 21 initial points. (b) Locations of additional points. (c) Pseudospectra visualizations interpolating along the trajectories of 21 initial and 20 additional points. (d) Pseudospectra visualization, using the modified grid algorithm in [31].

4. Concluding Remarks

In this note, we have shown how to define sequences which, beginning from arbitrary complex scalars, converge to some element of the spectrum of a matrix. This approach can be applied both to constant matrices and to more general matrix polynomials and can be used as a means to obtain estimates for those eigenvalues that lie in the vicinity of the initial term of the sequence. This construction is also useful when no information on the location of the spectrum is a priori known. In such cases, repeating the construction from arbitrary points, it is possible to detect peripheral eigenvalues, localize the spectrum and even obtain spectral enclosures.

As an application, in this paper we used this construction to compute the pseudospectrum of a matrix or a matrix polynomial. Thus, a useful technique for speeding up pseudospectra computations emerges, which is essential for applications. An advantage of the proposed approach is that it does not make any assumptions on the location of the spectrum. The fact that all computations are performed on some dynamically chosen locations on the complex plane which converge to the eigenvalues, rather than on a large number of predefined points on a rigid grid, can be seen as an improvement over conventional grid algorithms.

Parallel implementation of the method can lead to further computational savings when applied to large matrices. Another option would be to apply this method combined with randomization techniques for the selection of the initial points of the sequences. In the large-scale matrix case, this method may be helpful in obtaining a first impression of the shape and size of pseudospectrum and even computing a rough approximation. Then, if desired, this could be used in conjunction with local versions of the grid algorithm and small local meshes about individual areas of interest.

Author Contributions

All authors have contributed equally to the conceptualization of this paper, to the software implementation and to the original draft preparation. Funding acquisition and project administration: P.P. All authors have read and agreed to the submitted version of the manuscript.

Funding

This research is carried out/funded in the context of the project “Approximation algorithms and randomized methods for large-scale problems in computational linear algebra” (MIS 5049095) under the call for proposals “Researchers’ support with an emphasis on young researchers–2nd Cycle”. The project is co-financed by Greece and the European Union (European Social Fund, ESF) through the Operational Programme “Human Resources Development, Education and Lifelong Learning 2014–2020”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Landau, H.J. On Szegö’s eigenvalue distribution theorem and non–Hermitian kernels. J. Analyse Math. 1975, 28, 216–222.

- Varah, J.M. On the separation of two matrices. SIAM J. Numer. Anal. 1979, 16, 216–222.

- Wilkinson, J.H. Sensitivity of eigenvalues II. Utilitas Math. 1986, 30, 243–286.

- Demmel, W. A counterexample for two conjectures about stability. IEEE Trans. Aut. Control 1987, 32, 340–342.

- Trefethen, L.N. Approximation theory and numerical linear algebra. In Algorithms for Approximation, II (Shrivenham, 1988); Chapman & Hall: London, UK, 1990; pp. 336–360.

- Nachtigal, N.M.; Reddy, S.C.; Trefethen, L.N. How fast are nonsymmetric matrix iterations? SIAM J. Matrix Anal. Appl. 1992, 13, 778–795.

- Mosier, R.G. Root neighborhoods of a polynomial. Math. Comput. 1986, 47, 265–273.

- Reddy, S.C.; Trefethen, L.N. Lax–stability of fully discrete spectral methods via stability regions and pseudo–eigenvalues. Comput. Methods Appl. Mech. Eng. 1990, 80, 147–164.

- Higham, D.J.; Trefethen, L.N. Stiffness of ODEs. BIT Numer. Math. 1993, 33, 285–303.

- Davies, E.; Simon, B. Eigenvalue estimates for non–normal matrices and the zeros of random orthogonal polynomials on the unit circle. J. Approx. Theory 2006, 141, 189–213.

- Böttcher, A.; Grudsky, S.M. Spectral Properties of Banded Toeplitz Matrices; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2005.

- Van Dorsselaer, J.L.M. Pseudospectra for matrix pencils and stability of equilibria. BIT Numer. Math. 1997, 37, 833–845.

- Lancaster, P.; Psarrakos, P. On the pseudospectra of matrix polynomials. SIAM J. Matrix Anal. Appl. 2005, 27, 115–129.

- Tisseur, F.; Higham, N.J. Structured pseudospectra for polynomial eigenvalue problems with applications. SIAM J. Matrix Anal. Appl. 2001, 23, 187–208.

- Trefethen, L.N.; Embree, M. Spectra and Pseudospectra: The Behavior of Nonnormal Matrices and Operators; Princeton University Press: Princeton, NJ, USA, 2005.

- Brühl, M. A curve tracing algorithm for computing the pseudospectrum. BIT Numer. Math. 1996, 36, 441–454.

- Sun, J.-G. A note on simple non–zero singular values. J. Comput. Math. 1988, 62, 235–267.

- Kokiopoulou, E.; Bekas, C.; Gallopoulos, E. Computing smallest singular triples with implicitly restarted Lanczos biorthogonalization. Appl. Num. Math. 2005, 49, 39–61.

- Bekas, C.; Gallopoulos, E. Cobra: Parallel path following for computing the matrix pseudospectrum. Parallel Comput. 2001, 27, 1879–1896.

- Bekas, C.; Gallopoulos, E. Parallel computation of pseudospectra by fast descent. Parallel Comput. 2002, 28, 223–242.

- Trefethen, L.N. Computation of pseudospectra. Acta Numer. 1999, 9, 247–295.

- Duff, I.S.; Grimes, R.G.; Lewis, J.G. Sparse matrix test problems. ACM Trans. Math. Softw. 1989, 15, 1–14.

- Kolotilina, L.Y. Lower bounds for the Perron root of a nonnegative matrix. Linear Algebra Appl. 1993, 180, 133–151.

- Liu, S.L. Bounds for the greater characteristic root of a nonnegative matrix. Linear Algebra Appl. 1996, 239, 151–160.

- Duan, X.; Zhou, B. Sharp bounds on the spectral radius of a nonnegative matrix. Linear Algebra Appl. 2013, 439, 2961–2970.

- Xing, R.; Zhou, B. Sharp bounds on the spectral radius of nonnegative matrices. Linear Algebra Appl. 2014, 449, 194–209.

- Liao, P. Bounds for the Perron root of nonnegative matrices and spectral radius of iteration matrices. Linear Algebra Appl. 2017, 530, 253–265.

- Elsner, L.; Koltracht, I.; Neumann, M.; Xiao, D. On accurate computations of the Perron root. SIAM J. Matrix Anal. Appl. 1993, 14, 456–467.

- Lu, L. Perron complement and Perron root. Linear Algebra Appl. 2002, 341, 239–248.

- Dembélé, D. A method for computing the Perron root for primitive matrices. Numer. Linear Algebra Appl. 2021, 28, e2340.

- Fatouros, S.; Psarrakos, P. An improved grid method for the computation of the pseudospectra of matrix polynomials. Math. Comp. Model. 2009, 49, 55–65.

- Tisseur, F.; Meerbergen, K. The quadratic eigenvalue problem. SIAM Rev. 1997, 39, 383–406.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).