Abstract

In this paper, we present a family of fourth order iterative methods that use weight functions. The new family requires three function evaluations per iteration to attain fourth order accuracy. By the Kung–Traub conjecture, this family of methods is optimal, with an efficiency index of 1.587. Furthermore, we extend one of the methods to sixth and twelfth order methods whose efficiency indices are 1.565 and 1.644, respectively. Numerical examples demonstrate the performance of the proposed methods and verify the theoretical results. Further, we discuss the extraneous fixed points and basins of attraction for a few existing methods, such as Newton's method, and for the proposed family of fourth order methods. Finally, an application problem arising from Planck's radiation law is solved using our methods.

Keywords:

nonlinear equation; iterative methods; basins of attraction; extraneous fixed points; efficiency index

MSC:

65H05; 65D05; 41A25

1. Introduction

One of the best root-finding methods for solving a nonlinear scalar equation $f(x) = 0$ is Newton's method. In recent years, numerous higher order iterative methods have been developed and analyzed for solving nonlinear equations that improve on classical methods such as Newton's method (NM) and Halley's iteration method, which are given, respectively, by:

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)} \tag{1}$$

and:

$$x_{n+1} = x_n - \frac{2 f(x_n) f'(x_n)}{2 [f'(x_n)]^2 - f(x_n) f''(x_n)}. \tag{2}$$

The convergence order of Newton's method is two, and it is optimal with two function evaluations. Halley's iteration method has third order convergence with three function evaluations. Frequently, $f''(x)$ is difficult to calculate and computationally costly; therefore, $f''(x_n)$ in Equation (2) is approximated by a finite difference, while the convergence order and the total number of function evaluations are maintained [1]. One such third order method, obtained by approximating $f''(x_n)$ in Halley's iteration method, is given below:

In the past decade, a few authors have proposed third order methods with three function evaluations that are free from $f''$; see, for example, [2,3] and the references therein. The efficiency index (EI) of an iterative method is measured by $\mathrm{EI} = p^{1/d}$, where p is the local order of convergence and d is the number of function evaluations per full iteration cycle. Kung and Traub [4] conjectured that the order of convergence of any multi-point method without memory that uses d function evaluations cannot exceed the bound $2^{d-1}$, the "optimal order". Thus, the optimal order for three evaluations per iteration is four. Jarratt's method [5] is an example of an optimal fourth order method. Recently, several optimal and non-optimal multi-point methods have been developed in [6,7,8,9,10,11,12,13,14,15] and the references therein. A non-optimal method [16] has recently been rediscovered based on a quadrature formula; it can also be obtained from Equation (3) by a particular choice of its parameter. In fact, each iterative fixed-point method produces its own basins of attraction and fractal behavior, which can be used in the evaluation of algorithms [17]. Polynomiography is defined as the art and science of visualizing the approximation of zeros of complex polynomials, where the polynomiography images reflect the mathematical convergence properties of the iteration functions.
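For reference, here is a minimal Python sketch of Newton's method, $x_{n+1} = x_n - f(x_n)/f'(x_n)$, and Halley's iteration method, $x_{n+1} = x_n - 2f(x_n)f'(x_n)/(2[f'(x_n)]^2 - f(x_n)f''(x_n))$, in their standard forms. Newton uses two evaluations per step and Halley three ($f$, $f'$, $f''$). The test function $f(x) = x^3 - 2x - 5$, the starting point $x_0 = 2$ and the tolerance are illustrative choices of ours, not taken from the paper's experiments.

```python
def newton(f, fp, x0, tol=1e-14, max_iter=50):
    """Newton's method: x_{n+1} = x_n - f(x_n)/f'(x_n). Returns (root, iterations)."""
    x = x0
    for n in range(1, max_iter + 1):
        x_new = x - f(x) / fp(x)
        if abs(x_new - x) < tol:          # stop when the step is negligible
            return x_new, n
        x = x_new
    raise RuntimeError("Newton's method did not converge")


def halley(f, fp, fpp, x0, tol=1e-14, max_iter=50):
    """Halley's method: uses f, f' and f'' at x_n (three evaluations per step)."""
    x = x0
    for n in range(1, max_iter + 1):
        fx, fpx, fppx = f(x), fp(x), fpp(x)
        x_new = x - 2 * fx * fpx / (2 * fpx**2 - fx * fppx)
        if abs(x_new - x) < tol:
            return x_new, n
        x = x_new
    raise RuntimeError("Halley's method did not converge")


# Illustrative test problem (our choice): simple root near 2.0946
def f(x):
    return x**3 - 2 * x - 5

def fp(x):
    return 3 * x**2 - 2

def fpp(x):
    return 6 * x

root_nm, it_nm = newton(f, fp, 2.0)
root_hm, it_hm = halley(f, fp, fpp, 2.0)
print("Newton:", root_nm, "iterations:", it_nm)
print("Halley:", root_hm, "iterations:", it_hm)
```

Because Halley's method is third order, it typically needs fewer iterations than Newton's method from the same starting point, at the cost of one extra evaluation per step.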

This paper considers a new family of optimal fourth order methods, which is an improvement of the method given in [16]. We study the extraneous fixed points and basins of attraction for two particular members of the new family and for a few comparable existing methods. The rest of the paper is organized as follows. Section 2 presents the development of the methods, their convergence analysis and the extension of the new fourth order methods to sixth and twelfth order. Section 3 presents numerical examples and results for the new family of methods along with some comparable methods, including Newton's method. In Section 4, we obtain all extraneous fixed points of these methods. In Section 5, we study the basins of attraction for the proposed fourth order methods, Newton's method and some existing methods. Section 6 discusses an application to Planck's radiation law. Finally, Section 7 gives the conclusions of our work.

2. Development of the Methods and Convergence Analysis

Method (4) is of order three with three function evaluations per full iteration, giving EI = 1.442. To raise the order of this method without increasing the number of function evaluations, and so obtain an optimal method, we propose the following method without memory, which includes weight functions:

where and are two weight functions with and .

2.1. Convergence Analysis

The proofs of Theorems 1 and 2 were carried out with the help of Mathematica.

Theorem 1.

Let $f: D \subset \mathbb{R} \to \mathbb{R}$ be a sufficiently smooth function having continuous derivatives up to fourth order. If f has a simple root $x^*$ in the open interval D and $x_0$ is chosen in a sufficiently small neighborhood of $x^*$, then the family of Method (5) has local fourth order convergence when:

and it satisfies the error equation:

where and .

Proof.

Taylor expansion of and about gives:

and:

so that:

Again, using Taylor expansion of about gives:

Expanding the weight function and about 1 using Taylor series, we get:

Substituting Equations (13) and (14) into Equation (5) and imposing the conditions in Equation (6), we obtain:

Note that each choice of the two weight functions satisfying Condition (6) in Equation (15) gives rise to a new optimal fourth order method. Method (5) has efficiency index EI = 1.587, which is better than that of Method (4). Two members of the family of Method (5) satisfying Condition (6), with their corresponding weight functions, are given below:

By a suitable choice of the weight functions, we obtain a new proposed method, called PM1:

Its error equation is:

By another choice of the weight functions, we obtain a second new proposed method, called PM2:

Its error equation is:

Remark 1.

In this way, many other fourth order methods similar to PM1 and PM2 can be constructed. The methods PM1 and PM2 are equally good in the sense that they have the same order of convergence and the same efficiency index. Based on the analysis of their basins of attraction, we find that PM1 is marginally better than PM2; hence, we use PM1 to construct a higher order method, PM3.

2.2. Higher Order Methods

We extend the method PM1 to a new sixth order method, called PM3:

The following theorem establishes the convergence order of Method (18).

Theorem 2.

Let $f: D \subset \mathbb{R} \to \mathbb{R}$ be a sufficiently smooth function having continuous derivatives up to fourth order. If f has a simple root $x^*$ in the open interval D and $x_0$ is chosen in a sufficiently small neighborhood of $x^*$, then Method (18) has local sixth order convergence and satisfies the error equation:

Proof.

Taylor expansion of about gives:

Babajee et al. [7] improved a sixth order Jarratt method to a twelfth order method. Using their technique, we obtain a new twelfth order method, called PM4:

where, in order to save one function evaluation, the following approximation is used:

We state the following theorem without proof; the proof can be carried out with the help of Mathematica.

Theorem 3.

Let $f: D \subset \mathbb{R} \to \mathbb{R}$ be a sufficiently smooth function having continuous derivatives up to fourth order. If f has a simple root $x^*$ in the open interval D and $x_0$ is chosen in a sufficiently small neighborhood of $x^*$, then Method (21) has local twelfth order convergence and satisfies the error equation:

Remark 2.

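The efficiency indices of the methods PM3 (sixth order, four function evaluations) and PM4 (twelfth order, five function evaluations) are EI = $6^{1/4} \approx 1.565$ and EI = $12^{1/5} \approx 1.644$, respectively.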
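The efficiency indices quoted in this paper follow from $\mathrm{EI} = p^{1/d}$ together with the Kung–Traub bound $2^{d-1}$. The short Python check below recomputes them from the orders and evaluation counts stated in the text (Newton: order 2 with two evaluations; Method (4): order 3 with three; family (5): order 4 with three; PM3: order 6 with four; PM4: order 12 with five). It is only an arithmetic illustration; the helper names are ours.

```python
def efficiency_index(p, d):
    """Efficiency index EI = p**(1/d) for local order p and d evaluations per iteration."""
    return p ** (1.0 / d)


def kung_traub_bound(d):
    """Conjectured maximal order 2**(d-1) of a multipoint method without memory using d evaluations."""
    return 2 ** (d - 1)


# (label, order p, evaluations d) as stated in the text
methods = [
    ("Newton (1)", 2, 2),
    ("Method (4)", 3, 3),
    ("Family (5): PM1, PM2", 4, 3),
    ("PM3", 6, 4),
    ("PM4", 12, 5),
]

for name, p, d in methods:
    ei = efficiency_index(p, d)
    optimal = p == kung_traub_bound(d)  # order attains the Kung-Traub bound
    print(f"{name:22s} p={p:2d} d={d} EI={ei:.3f} optimal={optimal}")
```

Note that PM3 and PM4 do not attain the Kung–Traub bound for four and five evaluations (8 and 16), yet PM4 still has the largest efficiency index of the methods listed.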

2.3. Some Existing Fourth Order Methods

Consider the following fourth order optimal methods for the purpose of comparing results:

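Jarratt method (JM) [5]:

$$y_n = x_n - \frac{2}{3}\frac{f(x_n)}{f'(x_n)}, \qquad x_{n+1} = x_n - \frac{1}{2}\,\frac{3f'(y_n) + f'(x_n)}{3f'(y_n) - f'(x_n)}\,\frac{f(x_n)}{f'(x_n)}. \tag{22}$$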

Method of Sharifi-Babajee-Soleymani (SBS1) [12]:

Method of Sharifi-Babajee-Soleymani (SBS2) [12]:

Method of Soleymani-Khattri-Karimi (SKK) [15]:

Method of Singh-Jaiswal (SJ) [14]:

Method of Sharma-Kumar-Sharma (SKS) [13]:

Furthermore, consider the following non-optimal method of Divya Jain (DJ) [10]:

3. Numerical Examples

In this section, we give numerical results on some test functions to compare the efficiency of the proposed family of methods with some known methods. Numerical computations have been carried out in MATLAB with 500 significant digits. Depending on the precision of the computer, the iterative process is stopped when the prescribed stopping criterion is satisfied, where N is the number of iterations required for convergence; the total number of function evaluations is also recorded. The computational order of convergence (COC), denoted by ρ, is given by (see [18]):

$$\rho \approx \frac{\ln\left(|x_{k+1} - x_k| / |x_k - x_{k-1}|\right)}{\ln\left(|x_k - x_{k-1}| / |x_{k-1} - x_{k-2}|\right)}.$$

The test functions used in our study are commonly used in the literature [7,11]; they and their simple zeros are listed below:

From Tables 1 and 2, we observe that PM1 and PM2 converge in fewer iterations and with smaller errors than Methods (1) and (4). Compared with the other fourth order methods, PM1 and PM2 converge in fewer iterations for certain functions; for example, PM2 performs better than Method (25) for two of the test functions. In terms of the number of iterations required for convergence, PM1 and PM2 are equivalent to JM. Tables 3 and 4 display the total number of function evaluations and the computational order of convergence (COC) ρ for the methods considered in our study.
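The COC reported in Tables 3 and 4 is estimated from the last four iterates via $\rho \approx \ln(|x_{k+1}-x_k|/|x_k-x_{k-1}|)\,/\,\ln(|x_k-x_{k-1}|/|x_{k-1}-x_{k-2}|)$ [18]. The Python sketch below is not the authors' MATLAB code: it uses the standard-library `decimal` module with 200 significant digits (the paper uses 500) and applies the formula to Newton's method and to Jarratt's method in its standard form, $y_n = x_n - \tfrac{2}{3}f(x_n)/f'(x_n)$, $x_{n+1} = x_n - \tfrac{1}{2}\,\tfrac{3f'(y_n)+f'(x_n)}{3f'(y_n)-f'(x_n)}\,f(x_n)/f'(x_n)$. The test equation $x^2 - 2 = 0$, the start $x_0 = 1.5$ and the step counts are our illustrative choices; ρ should come out close to 2 and 4, respectively.

```python
from decimal import Decimal, getcontext

getcontext().prec = 200  # 200 significant digits (the paper works with 500)


def newton_step(f, fp, x):
    """One step of Newton's method."""
    return x - f(x) / fp(x)


def jarratt_step(f, fp, x):
    """One step of Jarratt's method (one f and two f' evaluations)."""
    fx, fpx = f(x), fp(x)
    y = x - Decimal(2) / Decimal(3) * fx / fpx
    fpy = fp(y)
    return x - (3 * fpy + fpx) / (6 * fpy - 2 * fpx) * fx / fpx


def iterates(step, f, fp, x0, n):
    """Return [x0, x1, ..., xn] produced by n steps of the given method."""
    xs = [x0]
    for _ in range(n):
        xs.append(step(f, fp, xs[-1]))
    return xs


def coc(xs):
    """COC from the last four iterates x_{k-2}, x_{k-1}, x_k, x_{k+1}."""
    a, b, c, d = xs[-4:]
    return (abs(d - c) / abs(c - b)).ln() / (abs(c - b) / abs(b - a)).ln()


# Illustrative test equation (our choice): x^2 - 2 = 0, simple root sqrt(2)
def f(x):
    return x * x - 2

def fp(x):
    return 2 * x

rho_nm = coc(iterates(newton_step, f, fp, Decimal("1.5"), 6))
rho_jm = coc(iterates(jarratt_step, f, fp, Decimal("1.5"), 4))
print(f"COC Newton  ~ {float(rho_nm):.4f}")
print(f"COC Jarratt ~ {float(rho_jm):.4f}")
```

High precision matters here: with ordinary double precision, the differences between consecutive fourth order iterates reach rounding level after only two or three steps, and the ratio of logarithms becomes meaningless.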

Table 1.

Comparison of the results for some known methods and proposed methods.

Table 2.

Comparison of the results for some known methods and proposed methods.

Table 3.

Total number of function evaluations and COC (ρ).

Table 4.

Total number of function evaluations and COC (ρ).

Table 5 displays the results for the fzero command in MATLAB, including the number of iterations needed to find an interval containing the root and the error after N iterations. For the fzero command, zeros are considered to be points where the function actually crosses, not just touches, the x-axis. It is observed that the present methods (PM1 and PM2) converge with fewer total function evaluations than fzero.

Table 5.

Results for the fzero command in MATLAB.

4. A Study on Extraneous Fixed Points

Definition 4.

A point $z_0$ is a fixed point of R if $R(z_0) = z_0$.

Definition 5.

A fixed point $z_0$ is called attracting if $|R'(z_0)| < 1$, repelling if $|R'(z_0)| > 1$ and neutral if $|R'(z_0)| = 1$. If $R'(z_0) = 0$, the point is superattracting.

All of the methods discussed above can be written in the form:

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}\, H_f(x_n). \tag{29}$$

By definition, a root $x^*$ of f is a fixed point of this method, since $f(x^*) = 0$. However, the points ξ at which $H_f(\xi) = 0$ are also fixed points of the method, since the second term on the right side of Equation (29) vanishes there. Hence, these points ξ are called extraneous fixed points.

Moreover, for a general iteration function given by:

$$R(z) = z - \frac{f(z)}{f'(z)}\, H_f(z), \tag{30}$$

the nature of the extraneous fixed points can be discussed, and it determines the convergence behavior of the iteration process. For more details on this aspect, the paper by Vrscay and Gilbert [19] is useful. In fact, they found that if the extraneous fixed points are attracting, then the method can give erroneous results. If the extraneous fixed points are repelling or neutral, then the method may not converge to a root near the initial guess.

In this section, we will discuss the extraneous fixed points of each method for the polynomial . As does not vanish in Theorem 6, there are no extraneous fixed points.

Theorem 7.

There are six extraneous fixed points for Jarratt Method (22).

Proof.

The extraneous fixed point of Jarratt method for which

are found. Upon substituting , we get the equation The extraneous fixed points are found to be All of these fixed points are repelling (since ). ☐
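The count in Theorem 7 can be checked numerically. The test polynomial is not legible in this version of the text; the sketch below assumes $p(z) = z^3 - 1$ (our assumption, consistent with the substitution $u = z^3$ and the count of six). For Jarratt's method in its standard form, the weight factor vanishes where $3p'(y) + p'(z) = 0$, which for this p reduces to $19u^2 + 7u + 1 = 0$ with $u = z^3$. The code takes the three cube roots of each solution, checks that every candidate is a fixed point of the iteration map R, and estimates $|R'|$ by a central difference; a value greater than 1 indicates a repelling point.

```python
import cmath
import math


# Assumed test polynomial (our assumption): p(z) = z^3 - 1
def p(z):
    return z**3 - 1

def dp(z):
    return 3 * z**2


def jarratt_map(z):
    """Iteration function R(z) of Jarratt's method applied to p."""
    y = z - (2 / 3) * p(z) / dp(z)
    weight = 0.5 * (3 * dp(y) + dp(z)) / (3 * dp(y) - dp(z))
    return z - weight * p(z) / dp(z)


# 3 p'(y) + p'(z) = 0 reduces to 19 u^2 + 7 u + 1 = 0 with u = z^3 (for this p)
disc = cmath.sqrt(7**2 - 4 * 19 * 1)
u_roots = [(-7 + disc) / 38, (-7 - disc) / 38]

# Each u has three complex cube roots, giving six candidate points
points = []
for u in u_roots:
    r, theta = abs(u) ** (1 / 3), cmath.phase(u)
    for k in range(3):
        points.append(cmath.rect(r, (theta + 2 * math.pi * k) / 3))

h = 1e-6  # step for the central-difference estimate of R'
for xi in points:
    residual = abs(jarratt_map(xi) - xi)  # close to 0 => xi is a fixed point of R
    deriv = (jarratt_map(xi + h) - jarratt_map(xi - h)) / (2 * h)
    kind = "repelling" if abs(deriv) > 1 else "attracting or neutral"
    print(f"xi = {xi:.6f}, |R(xi) - xi| = {residual:.1e}, |R'(xi)| = {abs(deriv):.4f} ({kind})")
```

Under this assumption, all six points give $|R'(\xi)| \approx 5.29$ (analytically $\sqrt{28}$), so they are repelling, in agreement with Theorem 7.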

Theorem 8.

There are fifty-two extraneous fixed points for Method (23).

Proof.

We found for Method (23),

The extraneous fixed points are found to be

All of these fixed points are repelling (since ). ☐

Theorem 9.

There are thirty-nine extraneous fixed points for Method (24).

Proof.

For Method (24),

The extraneous fixed points are at

All of these fixed points are repelling (since ). ☐

Theorem 10.

There are twenty-four extraneous fixed points for Method (25).

Proof.

We found for Method (25),

The extraneous fixed points are found to be

All of these fixed points are repelling (since ). ☐

Theorem 11.

There are eighteen extraneous fixed points for Method (26).

Proof.

For Method (26),

The extraneous fixed points are at

All of these fixed points are repelling (since ). ☐

Theorem 12.

There are twelve extraneous fixed points for Method (27).

Proof.

For Method (27),

The extraneous fixed points are at

All of these fixed points are repelling (since ). ☐

Theorem 13.

There are twenty-four extraneous fixed points for Method (16).

Proof.

For Method (16),

The extraneous fixed points are at

All of these fixed points are repelling (since ). ☐

Theorem 14.

There are thirty extraneous fixed points for Method (17).

Proof.

For Method (17),

The extraneous fixed points are at

All of these fixed points are repelling (since ). ☐

5. Basins of Attraction

Sections 2 and 3 dealt with functions whose roots lie in the real domain, that is, real-valued functions of a real variable. The study can be extended to functions defined in the complex plane having complex zeros. By the fundamental theorem of algebra, a polynomial of degree n with real or complex coefficients has n roots, which may or may not be distinct. In such a case, a complex initial guess is needed for convergence to complex zeros. To study functions on the complex domain with complex zeros, we need some basic definitions, which are found in [20,21,22]. Let $R: \hat{\mathbb{C}} \to \hat{\mathbb{C}}$ be a rational map on the Riemann sphere.

Definition 15.

For $z \in \hat{\mathbb{C}}$, we define its orbit as the set $\mathrm{orb}(z) = \{z, R(z), R^2(z), \ldots\}$.

Definition 16.

A point $z_0$ is a periodic point of period m if $R^m(z_0) = z_0$, where m is the smallest positive integer with this property.

Definition 17.

The Julia set of a nonlinear map R, denoted by $J(R)$, is the closure of the set of its repelling periodic points. The complement of $J(R)$ is the Fatou set $F(R)$.

Definition 18.

If O is an attracting periodic orbit of period m, we define its basin of attraction to be the open set $A \subset \hat{\mathbb{C}}$ consisting of all points z for which the successive iterates $R^n(z)$ converge towards some point of O.

Lemma 19.

Every attracting periodic orbit is contained in the Fatou set of R. In fact, the entire basin of attraction A of an attracting periodic orbit is contained in the Fatou set. However, every repelling periodic orbit is contained in the Julia set.

In the following subsections, we present polynomiographs obtained with MATLAB [23,24] for the proposed methods and for some existing methods. An iteration function is a mapping of the plane into itself. The common boundaries of the basins of attraction constitute the Julia set of the iteration function, and its complement is the Fatou set. This section illustrates how the proposed methods can be studied through polynomiography. Below, we describe the basins of attraction of Newton's method and of some higher order Newton-type methods for finding the complex roots of a cubic and a quartic polynomial.

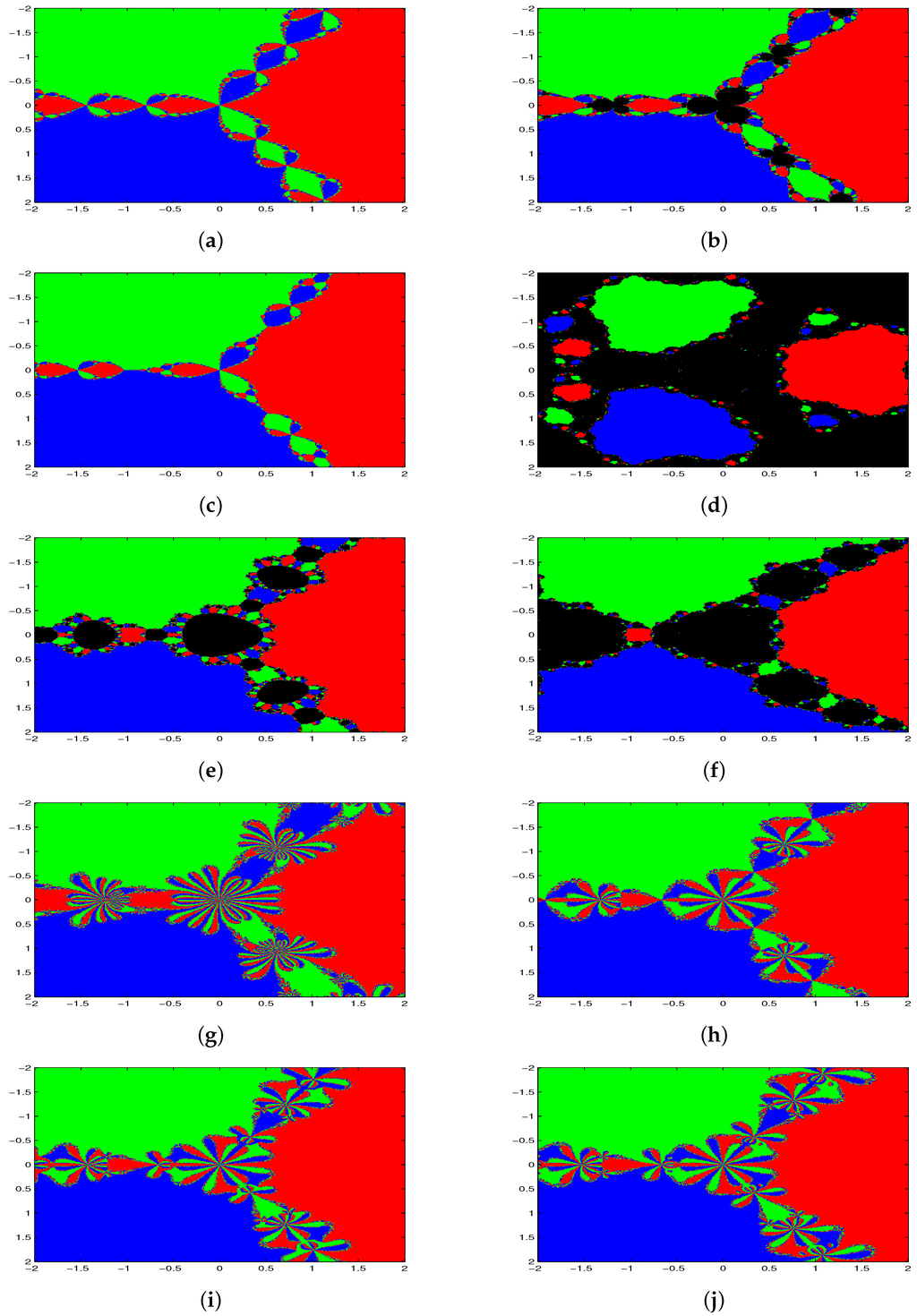

5.1. Polynomiographs of the Cubic Polynomial

We consider a square region of the complex plane containing 160,000 equally spaced grid points, arranged in 400 columns and 400 rows; these can be related to the pixels of a computer display representing a region of the complex plane [25]. Each grid point is used as an initial point $z_0$, and the number of iterations until convergence is counted for each point. We then draw the polynomiographs of the cubic polynomial, whose three roots are assigned the colors red, green and blue, respectively: a grid point receives the color of the root to which its iterates converge within at most 200 iterations under the stopping condition. In this way, the basin of attraction of each root is assigned a characteristic color. Initial points for which the iteration does not converge under this condition are colored black.
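A minimal Python sketch of this grid procedure, written for Newton's method. The text does not preserve the polynomial, the square region or the convergence condition, so we assume $p(z) = z^3 - 1$, the square $[-2, 2] \times [-2, 2]$ and a step tolerance of $10^{-6}$; these are illustrative choices, not the authors' settings. Any other method can be plugged in by passing its iteration map as `update`. Each grid point is labeled with the index of the root reached within 200 iterations, or -1 (black) otherwise.

```python
import cmath


def basin_labels(update, roots, n=400, box=2.0, max_iter=200, tol=1e-6):
    """Label the n x n grid on [-box, box] x [-box, box] by the index of the root
    reached by iterating `update`, or -1 ("black") if no convergence occurs."""
    step = 2 * box / (n - 1)
    labels = []
    for i in range(n):                      # rows: imaginary part, top to bottom
        row = []
        for j in range(n):                  # columns: real part, left to right
            z = complex(-box + j * step, box - i * step)
            label = -1
            for _ in range(max_iter):
                try:
                    z_new = update(z)
                except (ZeroDivisionError, OverflowError):
                    break                   # e.g. f'(z) = 0: treat as divergent
                if abs(z_new - z) < tol:
                    dists = [abs(z_new - r) for r in roots]
                    k = min(range(len(roots)), key=dists.__getitem__)
                    label = k if dists[k] < 1e-3 else -1  # must land on a root
                    break
                z = z_new
            row.append(label)
        labels.append(row)
    return labels


# Assumed example: Newton's method on p(z) = z^3 - 1
roots = [cmath.exp(2j * cmath.pi * k / 3) for k in range(3)]

def newton(z):
    return z - (z**3 - 1) / (3 * z**2)

labels = basin_labels(newton, roots, n=41)  # coarse 41 x 41 grid for a quick run
converged = sum(v >= 0 for row in labels for v in row)
print("converging points:", converged, "diverging points:", 41 * 41 - converged)
```

With the default `n=400`, the same call produces a 160,000-point grid as described above (slowly, in pure Python); counting the -1 labels gives divergent-point counts of the kind reported in Tables 6 and 7.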

Figure 1a–j shows the polynomiographs of the methods for the cubic polynomial. Divergent points occur for the method of Noor et al., SBS1, SBS2 and SKK, whereas all starting points converge for NM, JM, SJ, SKS, PM1 and PM2. In Table 6, we classify the number of converging and diverging grid points for each iterative method. Note that a point $z_0$ belongs to the Julia set if and only if the dynamics in a neighborhood of $z_0$ display sensitive dependence on the initial conditions, so that nearby initial conditions lead to wildly different behavior after a number of iterations. This is why some of the methods produce many divergent points.

Figure 1.

Polynomiographs of . (a) Newton’s method (NM) (1); (b) method of Noor et al. (4); (c) Jarratt method (JM) (22); (d) method of Sharifi et al. (SBS1) (23); (e) method of Sharifi et al. (SBS2) (24); (f) method of Soleymani et al. (SKK) (25); (g) method of Singh et al. (SJ) (26); (h) method of Sharma et al. (SKS) (27); (i) proposed method (PM1) (16); (j) proposed method (PM2) (17).

Table 6.

Comparison of convergent and divergent grids for polynomiographs of .

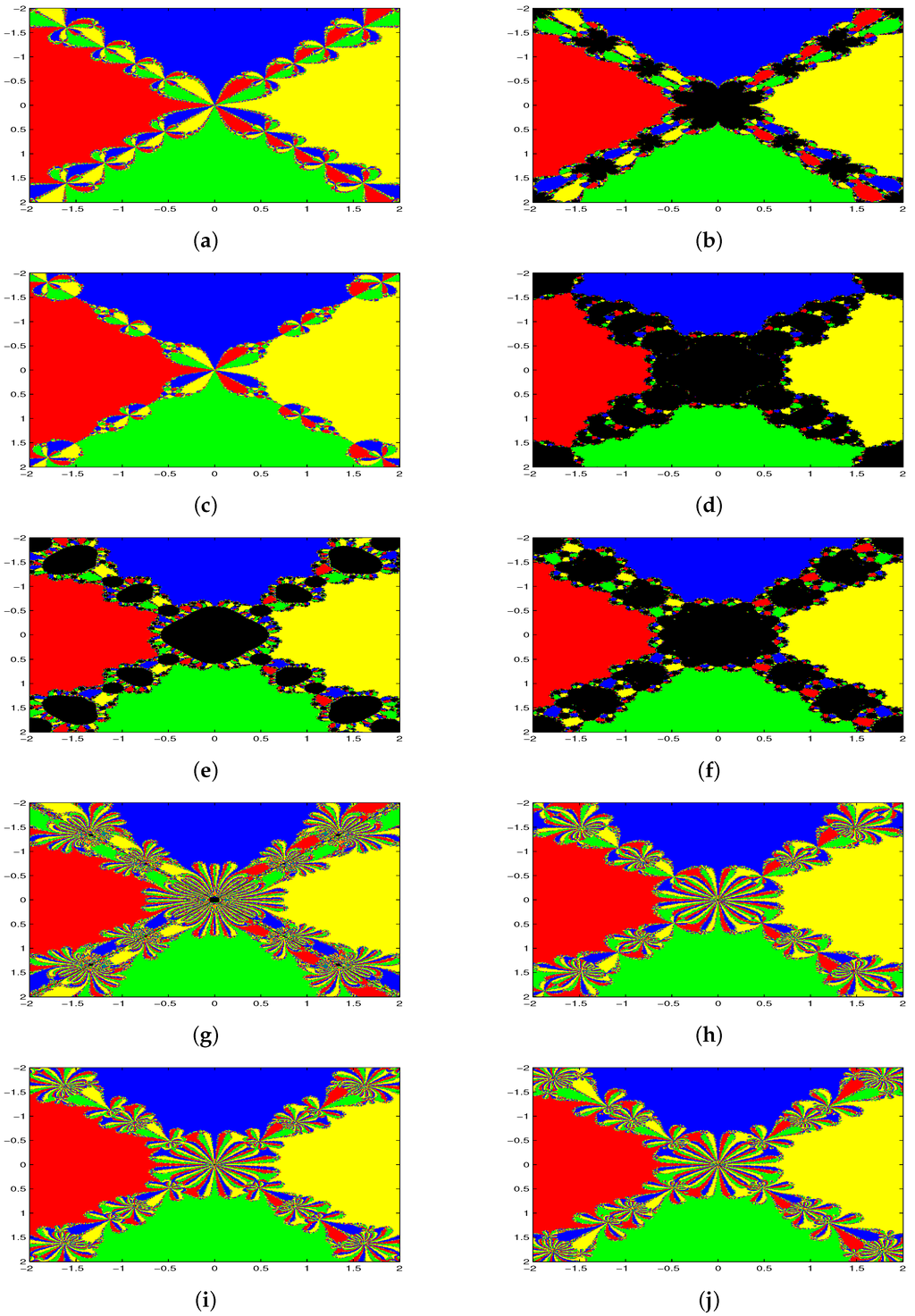

5.2. Polynomiographs of the Quartic Polynomial

Next, we draw the polynomiographs of the quartic polynomial, whose four roots are assigned the colors yellow, red, green and blue, respectively: a grid point receives the color of the root to which its iterates converge within at most 200 iterations under the stopping condition. Therefore, the basin of attraction of each root is assigned a corresponding color. Initial points for which the iteration does not converge under this condition are colored black.

Figure 2a–j shows the polynomiographs of the methods for the quartic polynomial. Divergent points occur for the method of Noor et al., SBS1, SBS2, SKK, SJ, SKS, PM1 and PM2, whereas all starting points converge for NM and JM. In Table 7, we classify the number of converging and diverging grid points for each iterative method. Furthermore, we observe that SKS, PM1 and PM2 diverge at fewer grid points than the method of Noor et al., SBS1, SBS2, SKK and SJ. Table 8 shows that the proposed methods are better than or equal to the other comparable methods with respect to the number of iterations, computational order of convergence and error. When started from real initial points, all of the methods converge to the real roots of the cubic and quartic polynomials.

Figure 2.

Polynomiographs of . (a) Newton’s method (NM) (1); (b) method of Noor et al. (4); (c) Jarratt method (JM) (22); (d) method of Sharifi et al. (SBS1) (23); (e) method of Sharifi et al. (SBS2) (24); (f) method of Soleymani et al. (SKK) (25); (g) method of Singh et al. (SJ) (26); (h) method of Sharma et al. (SKS) (27); (i) proposed method (PM1) (16); (j) proposed method (PM2) (17).

Table 7.

Comparison of convergent and divergent grids for polynomiographs of .

Table 8.

Results for polynomials , with real roots.

From this comparison based on the basins of attraction for the cubic and quartic polynomials, we can say that, in general, NM, JM, PM1 and PM2 are more reliable for solving nonlinear equations. Furthermore, the polynomiographs of the two polynomials show patterns that are symmetric with respect to the x-axis and y-axis, where the starting points lead to convergence to a real root or to a complex pair of roots of the respective polynomial.

6. An Application Problem

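To test our methods, we consider the following Planck's radiation law problem found in [10,26]:

$$\varphi(\lambda) = \frac{8\pi c h \lambda^{-5}}{e^{ch/(\lambda k T)} - 1}, \tag{31}$$

which gives the energy density within an isothermal blackbody. Here, λ is the wavelength of the radiation; T is the absolute temperature of the blackbody; k is Boltzmann's constant; h is Planck's constant; and c is the speed of light. Suppose we would like to determine the wavelength λ that corresponds to the maximum energy density φ(λ). From Equation (31), we get:

$$\varphi'(\lambda) = \frac{8\pi c h \lambda^{-6}}{e^{ch/(\lambda k T)} - 1}\left(\frac{(ch/(\lambda k T))\, e^{ch/(\lambda k T)}}{e^{ch/(\lambda k T)} - 1} - 5\right).$$

It can be checked that a maximum of φ occurs when $\varphi'(\lambda) = 0$, that is, when:

$$\frac{(ch/(\lambda k T))\, e^{ch/(\lambda k T)}}{e^{ch/(\lambda k T)} - 1} = 5.$$

Putting $x = ch/(\lambda k T)$, the above equation becomes:

$$1 - \frac{x}{5} = e^{-x}. \tag{32}$$

Define:

$$f(x) = e^{-x} - 1 + \frac{x}{5}. \tag{33}$$

The aim is to find a root of the equation $f(x) = 0$. The trivial root $x = 0$ is not considered. As argued in [26], the left-hand side of Equation (32) is zero for $x = 5$, while the right-hand side is then only $e^{-5} \approx 6.74 \times 10^{-3}$. Hence, another root of the equation $f(x) = 0$ is expected to occur near $x = 5$. The approximate root of Equation (33) is $x \approx 4.965114231744276$. Consequently, the wavelength of radiation λ at which the energy density is maximum is approximated as:

$$\lambda \approx \frac{ch}{4.965114231744276\, kT}.$$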
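A quick check of this root and of the resulting wavelength: the Python sketch below applies plain Newton iteration (not one of the proposed methods) to the reduced equation $f(x) = e^{-x} - 1 + x/5 = 0$, which follows from setting $\varphi'(\lambda) = 0$ in Planck's law with $x = ch/(\lambda k T)$, starting from $x_0 = 5$. It then evaluates $\lambda T = ch/(kx)$ using the SI values of h, c and k; the starting point, tolerance and the constants are our additions, not taken from the paper's tables.

```python
import math


def f(x):
    """Reduced Planck equation: f(x) = exp(-x) - 1 + x/5."""
    return math.exp(-x) - 1 + x / 5


def fp(x):
    """Derivative f'(x) = -exp(-x) + 1/5."""
    return -math.exp(-x) + 1 / 5


x = 5.0                       # starting guess near x = 5 (illustrative)
for _ in range(50):
    x_new = x - f(x) / fp(x)  # Newton step
    converged = abs(x_new - x) < 1e-13
    x = x_new
    if converged:
        break

# SI defining constants (exact values since the 2019 SI redefinition)
h = 6.62607015e-34            # Planck constant, J s
c = 299792458.0               # speed of light, m/s
k = 1.380649e-23              # Boltzmann constant, J/K
b = h * c / (k * x)           # lambda_max * T, in m K

print(f"x = {x:.15f}")
print(f"lambda_max * T = {b:.6e} m K")
```

The product $\lambda T$ obtained this way is the constant in Wien's displacement law, which gives an independent sanity check on the computed root.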

We apply the methods NM, DJ, PM1, PM2, PM3 and PM4 to solve Equation (33) and compare the results in Tables 9 and 10. From these tables, we note that the root is reached faster by PM4 than by the other methods, which is consistent with PM4 having the highest efficiency index, EI = 1.644.

Table 9.

Comparison of the results.

Table 10.

Comparison of the results.

Results for the fzero command in MATLAB for this application problem are given in Table 11.

Table 11.

Results for Planck's radiation law problem using fzero.

7. Conclusions

In this work, we have proposed a family of fourth order methods using weight functions. The fourth order methods are optimal in the sense of the Kung–Traub conjecture. Further, we have extended one of the methods to sixth and twelfth order methods with four and five function evaluations, respectively. The extraneous fixed points of the fourth order methods and of some existing methods have been discussed in detail. Based on the analysis of basins of attraction, our methods PM1 and PM2 are found to be superior to the methods of Noor et al. [16], SBS1, SBS2, SKK and SJ; in particular, the methods SBS1, SBS2 and SKK have many divergent points for both the cubic and the quartic polynomial. Moreover, PM1 and PM2 are better than the other compared methods, except Newton's method and Jarratt's method, which perform equally well. We have also tested our methods (PM1, PM2, PM3 and PM4), NM and DJ on Planck's radiation law problem, and the results show that PM4 is more efficient than the other compared methods.

Acknowledgments

The authors would like to thank the editors and referees for the valuable comments and for the suggestions to improve the readability of the paper.

Author Contributions

Both authors contributed equally and worked together to develop the present manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ezquerro, J.A.; Hernandez, M.A. A uniparametric halley-type iteration with free second derivative. Int. J. Pure Appl. Math. 2003, 6, 99–110.

- Babajee, D.K.R.; Dauhoo, M.Z. An Analysis of the Properties of the Variants of Newton’s Method with Third Order Convergence. Appl. Math. Comput. 2006, 183, 659–684.

- Chun, C.; Yong-Il, K. Several new third-order iterative methods for solving nonlinear equations. Acta Appl. Math. 2010, 109, 1053–1063.

- Kung, H.T.; Traub, J.F. Optimal order of one-point and multipoint iteration. J. Assoc. Comput. Mach. 1974, 21, 643–651.

- Jarratt, P. Some efficient fourth order multipoint methods for solving equations. BIT Numer. Math. 1969, 9, 119–124.

- Ardelean, G. A new third-order newton-type iterative method for solving nonlinear equations. Appl. Math. Comput. 2013, 219, 9856–9864.

- Babajee, D.K.R.; Madhu, K.; Jayaraman, J. A family of higher order multi-point iterative methods based on power mean for solving nonlinear equations. Afr. Mat. 2015.

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. Efficient three-step iterative methods with sixth order convergence for nonlinear equations. Numer. Algor. 2010, 53, 485–495.

- Cordero, A.; Hueso, J.L.; Martinez, E.; Torregrosa, J.R. A family of iterative methods with sixth and seventh order convergence for nonlinear equations. Math. Comput. Model. 2010, 52, 1490–1496.

- Jain, D. Families of newton-like methods with fourth-order convergence. Int. J. Comput. Math. 2013, 90, 1072–1082.

- Madhu, K.; Jayaraman, J. Class of modified Newton’s method for solving nonlinear equations. Tamsui Oxf. J. Inf. Math. Sci. 2014, 30, 91–100.

- Sharifi, M.; Babajee, D.K.R.; Soleymani, F. Finding the solution of nonlinear equations by a class of optimal methods. Comput. Math. Appl. 2012, 63, 764–774.

- Sharma, J.R.; Kumar, G.R.; Sharma, R. An efficient fourth order weighted-newton method for systems of nonlinear equations. Numer. Algor. 2013, 62, 307–323.

- Singh, A.; Jaiswal, J.P. Several new third-order and fourth-order iterative methods for solving nonlinear equations. Int. J. Eng. Math. 2014, 2014, 828409.

- Soleymani, F.; Khattri, S.K.; Karimi Vanani, S. Two new classes of optimal Jarratt-type fourth-order methods. Appl. Math. Lett. 2012, 25, 847–853.

- Noor, M.A.; Waseem, M. Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 2009, 57, 101–106.

- Kalantari, B. Polynomial Root-Finding and Polynomiography; World Scientific Publishing Co. Pte. Ltd.: Singapore, 2009.

- Cordero, A.; Torregrosa, J.R. Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

- Vrscay, E.R.; Gilbert, W.J. Extraneous fixed points, basin boundaries and chaotic dynamics for Schroder and Konig rational iteration functions. Numer. Math. 1988, 52, 1–16.

- Amat, S.; Busquier, S.; Plaza, S. Review of some iterative root-finding methods from a dynamical point of view. Sci. Ser. A Math. Sci. 2004, 10, 3–35.

- Blanchard, P. Complex Analytic Dynamics on the Riemann sphere. Bull. Am. Math. Soc. 1984, 11, 85–141.

- Scott, M.; Neta, B.; Chun, C. Basin attractors for various methods. Appl. Math. Comput. 2011, 218, 2584–2599.

- Chicharro, F.I.; Cordero, A.; Torregrosa, J.R. Drawing Dynamical and Parameters Planes of Iterative Families and Methods. Sci. World J. 2013.

- Introduction to Computational Engineering. Available online: http://www.caam.rice.edu (accessed on 10 May 2015).

- Soleymani, F.; Babajee, D.K.R.; Sharifi, M. Modified jarratt method without memory with twelfth-order convergence. Ann. Univ. Craiova Math. Comput. Sci. Ser. 2012, 39, 21–34.

- Bradie, B. A Friendly Introduction to Numerical Analysis; Pearson Education Inc.: New Delhi, India, 2006.

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).