Abstract

Anomaly detection in safety-critical systems often operates under severe label constraints, where only a small subset of normal and anomalous samples can be reliably annotated, while large unlabeled data streams are contaminated and high-dimensional. Deep one-class methods, such as deep support vector data description (DeepSVDD) and deep semi-supervised anomaly detection (DeepSAD), address this setting. However, they treat samples largely in isolation and do not explicitly leverage the manifold structure of unlabeled data, which can limit robustness and interpretability. This paper proposes Anomaly-Aware Graph-based Semi-Supervised Deep Support Vector Data Description (AAG-DSVDD), a boundary-focused deep one-class approach that couples a DeepSAD-style hypersphere with a label-aware latent k-nearest neighbor (k-NN) graph. The method combines a soft-boundary enclosure for labeled normals, a margin-based push-out for labeled anomalies, an unlabeled center-pull, and a k-NN graph regularizer on the squared distances to the center. The resulting graph term propagates information from scarce labels along the latent manifold, aligns anomaly scores of neighboring samples, and supports sample-level interpretability through graph neighborhoods, while test-time scoring remains a single distance-to-center computation. On a controlled two-dimensional synthetic dataset, AAG-DSVDD achieves a mean F1-score of across ten random splits, improving on the strongest baseline by about absolute F1. On three public benchmark datasets (Thyroid, Arrhythmia, and Heart), AAG-DSVDD attains the highest F1 on all datasets with F1-scores of , , and , respectively, compared to all baselines. In a multi-sensor fire monitoring case study, AAG-DSVDD reduces the average absolute error in fire starting time to approximately 473 s (about 30% improvement over DeepSAD) while keeping the average pre-fire false-alarm rate below and avoiding persistent pre-fire alarms. 
These results indicate that graph-regularized deep one-class boundaries offer an effective and interpretable framework for semi-supervised anomaly detection under realistic label budgets.

Keywords:

deep support vector data description (Deep SVDD); graph-based manifold regularization; multi-channel time-series anomaly detection; fire monitoring; semi-supervised anomaly detection

MSC:

68T07; 68T05; 68T09

1. Introduction

Anomaly detection is a core problem in machine learning with applications in industrial fault detection, ECG monitoring, intrusion detection, and early fire detection in complex non-fire environments [1,2,3,4,5]. Its goal is to learn a compact description of normal operating behavior and flag deviations as anomalies under severe class imbalance, where anomalies are rare and structurally diverse. Unlike standard supervised classification, anomaly detection is typically constrained by scarce and costly labels [6,7]. Confirming normal behavior may require expert review, additional quality-control tests, or prolonged observation, while obtaining labeled anomalies often entails controlled stress tests or hazardous experiments [8,9,10]. These limitations are especially pronounced in safety-critical fire monitoring, where realistic multi-sensor fire scenarios must be run in specialized facilities, yielding only a modest number of labeled fire and non-fire sequences compared to the continuous stream of unlabeled sensor data from routine operation [5].

Classical work on one-class classification framed anomaly detection as the problem of estimating the support of normal data. Support vector data description (SVDD) represents the normal class by enclosing the mapped training examples within a minimum-radius hypersphere in feature space, classifying points that fall outside this sphere as anomalies [11]. In contrast, the one-class support vector machine (OC-SVM) learns a maximum-margin hyperplane that separates the mapped data from the origin, thereby implicitly characterizing the region of normal behavior [12]. Both approaches yield compact decision regions with a clear geometric interpretation, but they are usually trained on labeled normal samples and do not leverage the often abundant pool of unlabeled observations present in many practical applications. When expert-validated normal labels are limited and expensive to obtain, such supervised one-class methods can suffer from poor sample efficiency and instability.

The emergence of deep learning has driven substantial progress in anomaly detection. Deep one-class classification (DeepSVDD) builds on the SVDD framework by integrating a deep encoder that projects the data into a latent space, which is then bounded by a hypersphere [13]. Expanding on this concept, several deep SVDD extensions have been developed, including autoencoder-based approaches, such as DASVDD [14], structure-preserving mappings that better retain neighborhood relationships [15], variational extensions leveraging VAE encoders [16], and contrastive learning objectives that enhance latent space representation and sharpen the decision boundary [17]. Deep semi-supervised anomaly detection (DeepSAD) extends this approach by leveraging a limited number of labeled anomalous samples to guide the deep one-class decision boundary when large amounts of unlabeled data are available [8]. In parallel, autoencoders and their extensions have been extensively employed to learn compact latent embeddings and reconstruction-based anomaly scores in industrial and temporal data scenarios [18,19], whereas self-supervised anomaly detection utilizes contrastive learning and related pretext objectives to improve representations when labeled data are scarce [20,21]. Nevertheless, a large proportion of deep anomaly detection approaches still rely on surrogate training objectives—such as reconstruction losses or generic self-supervised losses—that are only indirectly aligned with the final decision boundary, and typically incorporate unlabeled data through proxy tasks instead of imposing task-specific constraints directly on the boundary learner [22,23,24]. In addition, the resulting decision mechanisms are often difficult to interpret or connect to the underlying domain structure, which is a crucial limitation in safety-critical settings [25,26,27].

In safety-critical fire-monitoring applications, there has been a growing reliance on machine learning methods applied to multi-sensor data streams obtained from temperature, smoke, gas, and particulate sensors. Gas-sensor array platforms employing multivariate calibration in combination with pattern-recognition algorithms have shown the capability to identify early-stage fires while compensating for sensor drift [28]. In indoor settings, researchers have investigated deep multi-sensor fusion by employing lightweight convolutional neural networks applied to fused temperature, smoke, and carbon monoxide (CO) signals [29]. Concurrently, transfer-learning frameworks have been introduced to adjust models originally trained in small-scale environments for application in larger rooms equipped with distributed multi-sensor nodes, thereby enhancing early detection capabilities across diverse deployment scenarios [30]. Complementary approaches based on signal processing and one-class modeling include a wavelet-based multi-modeling framework that learns separate detectors tailored to specific fire scenarios in multi-sensor settings [5]. In related work, a multi-class SVDD equipped with a dynamic time warping kernel has been proposed to distinguish between several fire and non-fire classes in sensor networks [4]. However, these sensor-driven frameworks are typically trained in a fully supervised regime at the window level, under the assumption that every time window is labeled as fire or non-fire. In practical deployments, obtaining such labels requires expensive and hazardous staged experiments in dedicated facilities that yield only a limited set of observations. Meanwhile, deployed multi-sensor systems continuously generate large volumes of unlabeled time-series data during normal operation.

Graph-based semi-supervised learning exploits unlabeled data by building a similarity graph and enforcing smoothness of labels or scores through Laplacian penalties on neighboring nodes [31,32]. In anomaly detection, such graph-based methods have been applied at node, edge, and graph levels across diverse domains [33,34]. Within SVDD, graph-based semi-supervised SVDD augments the objective with a k-NN graph Laplacian to leverage the structure of unlabeled data [35], while manifold-regularized SVDD further enforces similar scores for neighboring samples to improve robustness to noisy labels [36]. Related CNN–SVDD architectures combine convolutional feature learning with SVDD and graph regularization for industrial condition monitoring, such as wind-turbine health assessment [37].

Despite these advancements, boundary-focused deep anomaly detection for high-dimensional, multi-channel time series remains limited under realistic labeling constraints. DeepSAD introduces deep representations and, in principle, can use a small labeled set [8]. In its standard form, however, the method incorporates unlabeled data only through point-wise losses on individual samples and includes no interaction terms that capture relationships among unlabeled instances in latent space. As a result, the manifold structure of unlabeled data is not directly reflected in the boundary-focused objective, which restricts how effectively information from scarce labels can propagate along the geometry of the unlabeled data. DeepSAD-based methods have also rarely been studied in safety-critical multi-sensor monitoring, where labels are costly and hazardous to obtain, unlabeled streams are abundant, and both missed detections and false alarms carry substantial operational and safety risks [4,5,28,29,30]. These gaps motivate extensions of DeepSAD that (i) preserve a boundary-focused one-class formulation while explicitly modeling manifold interactions among unlabeled samples, (ii) directly link anomaly scores of neighboring instances via the latent-space geometry, (iii) are tailored to semi-supervised label-budget constraints in multi-channel sensor monitoring, and (iv) provide sample-level interpretability by associating unlabeled samples with nearby labeled normal and anomalous patterns [25].

Motivated by these challenges, this paper introduces Anomaly-Aware Graph-based Semi-Supervised Deep SVDD (AAG-DSVDD), a boundary-focused deep one-class method for high-dimensional and multi-channel time series under realistic label budgets. Specifically:

1. We introduce AAG-DSVDD, a semi-supervised, center-based objective that maintains the DeepSVDD-style hypersphere formulation while explicitly modeling manifold relationships through a label-aware k-NN graph on latent representations. Unlabeled samples preferentially connect to reliable labeled neighbors, and a graph regularizer on their squared distances to the center couples their anomaly scores. This design propagates information from the limited labeled set along the manifold structure of the unlabeled data. The resulting graph-regularized latent representation also supports sample-level interpretability, since anomaly scores for unlabeled samples can be inspected, together with their nearest labeled neighbors in latent space, and the graph regularizer encourages nearby points with strong graph connections to attain similar anomaly scores.

2. We evaluate AAG-DSVDD on a controlled synthetic dataset and on three public benchmark datasets (Thyroid, Heart, and Arrhythmia) under semi-supervised label-budget constraints. On these benchmarks, we compare against four representative one-class baselines: OC-SVM, SVDD with an RBF kernel (SVDD-RBF), DeepSVDD, and DeepSAD. AAG-DSVDD consistently achieves higher F1-scores than these baselines, indicating more accurate separation between normal and anomalous samples.

3. We further assess AAG-DSVDD on a real multi-sensor fire-monitoring case study under comparable semi-supervised label-budget constraints, using the same set of baselines. In this application, the experimental results demonstrate faster fire-event detection and competitive pre-fire false-alarm rates compared to these baselines.

4. To the best of our knowledge, this constitutes the first AAG-DSVDD application to semi-supervised multi-sensor fire detection.

The remainder of this paper is organized as follows: Section 2 introduces the problem setting and DeepSAD. Section 3 presents the proposed AAG-DSVDD approach. Section 4 describes the experimental setup and results on a controlled synthetic dataset and three public benchmark datasets and presents an ablation study of AAG-DSVDD. Section 5 reports the multi-sensor fire-monitoring case study under semi-supervised label-budget constraints. Finally, Section 6 concludes the paper and outlines directions for future work.

2. Background and Problem Formulation

In this section, we formalize the semi-supervised anomaly detection setting considered in this work, introduce the notation used throughout the paper, and present the DeepSAD objective that serves as our baseline. We then discuss the limitations of DeepSAD in leveraging the geometry of unlabeled data and motivate the need for graph-based regularization.

2.1. Semi-Supervised Anomaly Detection Setting and Notation

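Let $\mathcal{X}$ denote the input space and $\mathcal{Z}$ the latent space induced by a parametric encoder

$$\phi(\cdot\,; W): \mathcal{X} \to \mathcal{Z},$$

where $W = \{W^{(1)}, \dots, W^{(H)}\}$ collects the weight matrices of all $H$ layers in the encoder network. For an input vector $x_i \in \mathcal{X}$, we write

$$z_i = \phi(x_i; W)$$

for its latent representation.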

We consider a semi-supervised one-class anomaly detection setting, in which only a small subset of samples is labeled, while the remaining majority is unlabeled and may contain both normal and anomalous instances. Formally, the training data consist of three subsets: a set of labeled normal samples:

$$\mathcal{D}_N = \{x_i : y_i = +1\},$$

a set of labeled anomalous samples:

$$\mathcal{D}_A = \{x_i : y_i = -1\},$$

and a set of unlabeled samples:

$$\mathcal{D}_U = \{x_i : y_i = 0\}.$$

We use the convention $y_i = +1$ for labeled normal samples, $y_i = -1$ for labeled anomalous samples, and $y_i = 0$ for unlabeled samples. We denote the numbers of observations by $n_N$, $n_A$, and $n_U$ for $\mathcal{D}_N$, $\mathcal{D}_A$, and $\mathcal{D}_U$, respectively, and write $n = n_N + n_A + n_U$ for the total number of training observations.

The objective of one-class anomaly detection is to learn a decision function $s: \mathcal{X} \to \mathbb{R}$ that assigns an anomaly score to each sample, where larger scores indicate stronger evidence of anomalous behavior. A threshold on $s(x)$ then yields binary anomaly decisions. In center-based one-class models, the decision function is defined through distances to a learned center $c \in \mathcal{Z}$. Specifically, for each sample $x_i$, we define its latent representation $z_i = \phi(x_i; W)$ and its squared Euclidean distance to the center

$$d_i^2 = \|z_i - c\|_2^2.$$

The scalar $d_i^2$ serves as the core anomaly score, optionally combined with a learned radius $R$ to form a decision rule, such as “normal if $d_i^2 \le R^2$” and “anomalous otherwise”.

In the semi-supervised setting considered here, the goal is to learn the encoder parameters W and the center vector $c$ (and, if used, the radius R) from the labeled and unlabeled training data, such that labeled normals are mapped close to the center, labeled anomalies are mapped far from the center, and unlabeled points are taken into account in a way that is robust to possible contamination and that exploits their geometric structure in latent space.
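The center-based decision rule described above can be illustrated with a minimal sketch. The function names and the toy values are hypothetical; embeddings are passed in directly rather than produced by an encoder.

```python
def squared_distance(z, c):
    """Squared Euclidean distance between an embedding z and the center c."""
    return sum((a - b) ** 2 for a, b in zip(z, c))


def is_anomalous(z, c, radius):
    """Flag z as anomalous when its squared distance exceeds radius**2."""
    return squared_distance(z, c) > radius ** 2


center = (0.0, 0.0)
embedding = (3.0, 4.0)          # squared distance to the center is 25
print(squared_distance(embedding, center))
print(is_anomalous(embedding, center, radius=5.0))   # on the boundary: normal
print(is_anomalous(embedding, center, radius=4.9))   # outside: anomalous
```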

2.2. Overview of DeepSAD

DeepSAD extends DeepSVDD to the semi-supervised setting by adding a label-dependent term for a small set of labeled examples while maintaining the same center-based hypersphere structure in latent space [8].

Using the notation from Section 2.1, we define the set of all labeled observations as $\mathcal{D}_L = \mathcal{D}_N \cup \mathcal{D}_A$ and note that it contains $n_L = n_N + n_A$ samples, while $\mathcal{D}_U$ contains $n_U$ unlabeled samples, with $n = n_L + n_U$ in total. The DeepSAD loss can then be written in our notation as the following optimization problem over W:

$$\min_{W} \; \frac{1}{n} \sum_{x_i \in \mathcal{D}_U} \|\phi(x_i; W) - c\|_2^2 + \frac{\eta}{n} \sum_{x_j \in \mathcal{D}_L} \left( \|\phi(x_j; W) - c\|_2^2 \right)^{y_j} + \frac{\lambda}{2} \sum_{\ell} \|W^{(\ell)}\|_F^2. \tag{1}$$

The first term in (1) is exactly the DeepSVDD loss applied to the unlabeled data, encouraging all unlabeled samples to lie close to the center in latent space. The second term is the semi-supervised extension: for labeled normals in $\mathcal{D}_L$ with $y_j = +1$, the exponent yields

$$\left( \|\phi(x_j; W) - c\|_2^2 \right)^{+1} = \|\phi(x_j; W) - c\|_2^2,$$

which reduces to a standard squared-distance penalty and encourages these samples to be mapped close to the center $c$. For labeled anomalies in $\mathcal{D}_L$ with $y_j = -1$, the exponent yields

$$\left( \|\phi(x_j; W) - c\|_2^2 \right)^{-1} = \frac{1}{\|\phi(x_j; W) - c\|_2^2},$$

an inverse squared-distance penalty that is minimized by pushing these samples far away from the center. The scalar $\eta > 0$ controls the weight of the labeled term relative to the unlabeled term, while $\lambda > 0$ is the strength of the weight decay applied to the weight matrices via the Frobenius norms $\|W^{(\ell)}\|_F^2$ of the encoder layers.

The anomaly score for any sample $x$ is defined as the Euclidean distance of its latent representation to the center:

$$s(x) = \|\phi(x; W) - c\|_2.$$

A threshold on $s(x)$ is then used to distinguish normal and anomalous samples. DeepSAD therefore preserves the center-based one-class decision mechanism while incorporating label information through the exponentiated term for $\mathcal{D}_L$ and the weighting parameter $\eta$, in combination with the unlabeled DeepSVDD term.
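To make the role of the label exponent concrete, the following sketch evaluates the data-dependent part of a DeepSAD-style loss on precomputed squared distances. It assumes the label convention +1 (labeled normal), -1 (labeled anomaly), and 0 (unlabeled); the function name, the `eps` stabilizer, and the toy numbers are illustrative assumptions, and the weight-decay term is omitted.

```python
def deepsad_data_loss(sq_dists, labels, eta=1.0, eps=1e-6):
    """Data term of a DeepSAD-style loss (weight decay omitted).

    sq_dists[i] is the squared distance of sample i to the center.
    labels[i] is +1 (labeled normal), -1 (labeled anomaly), or 0 (unlabeled).
    eps is an assumed stabilizer that avoids division by zero for anomalies.
    """
    n = len(sq_dists)
    # Unlabeled samples: plain squared distance (DeepSVDD term).
    unlabeled = sum(d for d, y in zip(sq_dists, labels) if y == 0)
    # Labeled samples: squared distance raised to the label (+1 or -1).
    labeled = sum((d + eps) ** y for d, y in zip(sq_dists, labels) if y != 0)
    return (unlabeled + eta * labeled) / n


# One unlabeled point, one labeled normal, one labeled anomaly.
near = deepsad_data_loss([1.0, 4.0, 0.25], [0, 1, -1], eta=1.0, eps=0.0)
far = deepsad_data_loss([1.0, 4.0, 4.0], [0, 1, -1], eta=1.0, eps=0.0)
print(near, far)  # moving the anomaly away from the center lowers the loss
```

In this toy case the anomaly at squared distance 0.25 contributes 1/0.25 = 4 to the labeled sum, whereas the same anomaly at squared distance 4 contributes only 0.25.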

2.3. Limitations of DeepSAD and Need for Graph-Based Regularization

Although DeepSAD is a flexible and effective framework for semi-supervised anomaly detection, its objective in (1) remains fundamentally point-wise: each sample contributes to the loss only through its own distance $\|\phi(x_i; W) - c\|_2^2$ and, for labeled samples, its own label $y_i$. The unlabeled term aggregates individual squared distances for $x_i \in \mathcal{D}_U$, and the labeled term aggregates squared distances raised to the label exponent for $x_j \in \mathcal{D}_L$, but there are no interaction terms coupling different samples or encoding a neighborhood graph, local density, or manifold structure in latent space. Consequently, unlabeled data influence the model only indirectly through penalties on individual points, rather than through constraints that explicitly relate nearby samples.

This strictly point-wise approach also restricts how information from the small labeled subset can spread into the unlabeled pool. In realistic semi-supervised settings, both labeled normal and anomalous examples are scarce, whereas unlabeled data densely occupy the regions in which a robust decision boundary must be learned. Ideally, unlabeled instances lying in areas dominated by labeled normals should naturally acquire lower anomaly scores, while those residing close to labeled anomalies should be driven toward higher scores. In DeepSAD, such behavior can only arise indirectly through the shared encoder parameters W; there is no explicit mechanism that couples the anomaly scores of nearby points or enforces consistency along the underlying data manifold.

Moreover, the absence of an explicit structure over samples constrains interpretability. DeepSAD provides a scalar anomaly score for each sample through its distance to the center, but it does not reveal how a given unlabeled sample is positioned relative to nearby labeled normals and anomalies in latent space. For many safety-critical applications, it is desirable not only to identify an observation as anomalous, but also to relate this decision to representative neighboring patterns that support or contextualize the score.

Taken together, these limitations motivate the incorporation of an explicit neighborhood structure into the DeepSAD framework, so that label information can propagate along the data manifold and anomaly scores are encouraged to be locally consistent and more interpretable. In the next section, we introduce a DeepSAD variant that realizes this idea.

3. Anomaly-Aware Graph-Based Semi-Supervised Deep SVDD

In this section, we introduce AAG-DSVDD, a DeepSAD-style extension that preserves the center-based hypersphere geometry in latent space while explicitly exploiting the geometry of labeled and unlabeled data. The method combines (i) a soft-boundary enclosure on labeled normals, (ii) a margin-based push-out on labeled anomalies, (iii) a center-pull on unlabeled samples, and (iv) a graph regularizer on the squared-distance field over a label-aware, locally scaled latent k-NN graph.

We retain the notation of Section 2.1: $x_i$ denotes an input vector, $z_i = \phi(x_i; W)$ its latent embedding, $c$ a latent center, and $y_i \in \{+1, 0, -1\}$ the label indicating a labeled normal, unlabeled, or labeled anomalous sample, respectively. We write

$$d_i^2 = \|z_i - c\|_2^2$$

for the squared distance of $z_i$ to the center, and we use the notation $(u)_+ = \max\{0, u\}$ for the positive part of u.

3.1. Latent Encoder, Center, and Radius

The AAG-DSVDD model uses a deep encoder:

$$\phi(\cdot\,; W): \mathcal{X} \to \mathcal{Z},$$

implemented either as a multilayer perceptron (MLP) or as a long short-term memory (LSTM)-based sequence encoder [38] for multi-channel time series. For each input $x_i$, we write

$$z_i = \phi(x_i; W)$$

for its latent representation.

In the latent space, we maintain a center vector $c \in \mathcal{Z}$ and a non-negative radius $R \ge 0$. The squared distance of $z_i$ to the center is

$$d_i^2 = \|z_i - c\|_2^2.$$

These distances form the basis of both the training objective and the anomaly score at test time, with the sign of $d_i^2 - R^2$ indicating whether an observation lies inside or outside the learned hypersphere. The practical initialization and updating of $c$ and R follow standard soft-boundary Deep SVDD practice and are detailed in Section 3.4.

3.2. Label-Aware Latent k-NN Graph with Local Scales

To exploit the geometry of the joint labeled–unlabeled sample, we construct a label-aware k-NN graph over latent embeddings with node-adaptive Gaussian weights. Let

$$Z = [z_1, \dots, z_n]^\top$$

denote the matrix of embeddings for all n training samples. For each node $i \in \{1, \dots, n\}$, we find its k-nearest neighbors in latent space and denote their indices by $\mathcal{N}_k(i)$, using Euclidean distance in $\mathcal{Z}$ and recording the corresponding distances $\delta_{ij} = \|z_i - z_j\|_2$ for $j \in \mathcal{N}_k(i)$.

We assign each node a local scale $\sigma_i > 0$ from the empirical distribution of its neighbor distances. Concretely, we compute the median of the nonzero distances in $\{\delta_{ij} : j \in \mathcal{N}_k(i)\}$ and use it as a robust estimate of the local density scale. This yields a self-tuning, node-adaptive Gaussian affinity:

$$a_{ij} = \exp\!\left( -\frac{\|z_i - z_j\|_2^2}{\sigma_i \sigma_j} \right), \quad j \in \mathcal{N}_k(i),$$

in the spirit of locally scaled kernels for spectral clustering [39]. Using $\sigma_i \sigma_j$ in the denominator adapts the effective kernel width to the local density around both endpoints.

We then apply a label-aware edge policy. For nodes with $y_i = 0$ (unlabeled), we retain all k-nearest neighbors in $\mathcal{N}_k(i)$, irrespective of their labels. In contrast, when $y_i \neq 0$ (labeled), we scan the neighbors of i in order of increasing distance and retain only those nodes j with $y_j = 0$ (unlabeled), until k neighbors are selected or the candidate list is exhausted. This construction ensures that outgoing edges from labeled points terminate in unlabeled nodes, allowing for label information to propagate into the unlabeled pool while reducing the risk of overfitting to the sparse labeled set.

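Let $A = [a_{ij}] \in \mathbb{R}^{n \times n}$ denote the resulting sparse affinity matrix, with $a_{ij} = 0$ for pairs $(i, j)$ that do not form edges. To keep the overall scale comparable across nodes of different degrees, we row-normalize $A$ to obtain

$$\widetilde{A} = D^{-1} A, \qquad D = \mathrm{diag}\Big( \sum_{j} a_{1j}, \dots, \sum_{j} a_{nj} \Big),$$

and then construct a symmetrized affinity matrix:

$$M = \tfrac{1}{2} \big( \widetilde{A} + \widetilde{A}^\top \big).$$

Finally, we define

$$L = I - M, \tag{5}$$

a symmetric matrix that encodes the latent k-NN graph and will later be used through the quadratic form $\mathbf{d}^\top L \, \mathbf{d}$ on the vector of squared distances $\mathbf{d} = (d_1^2, \dots, d_n^2)^\top$.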

This formulation is motivated by earlier research on graph-based semi-supervised learning and manifold regularization, in which the smoothness of a function defined on a weighted graph captures the geometry of labeled and unlabeled data [31,32,35].
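The graph construction of this subsection can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the function name is hypothetical, the symmetrization is taken to be the average of the row-normalized matrix and its transpose, the matrix used in the quadratic form is taken to be the identity minus that average, and a local scale of 1.0 is used as a fallback when a node has no nonzero neighbor distance.

```python
import math
from statistics import median


def build_label_aware_graph(Z, y, k):
    """Return (A, M, L) for embeddings Z and labels y in {+1, 0, -1}."""
    n = len(Z)
    dist = [[math.dist(Z[i], Z[j]) for j in range(n)] for i in range(n)]
    # Candidate neighbors of each node, nearest first, self excluded.
    order = [sorted((j for j in range(n) if j != i), key=lambda j, i=i: dist[i][j])
             for i in range(n)]
    # Local scale: median of the nonzero distances to the k nearest neighbors.
    sigma = []
    for i in range(n):
        nonzero = [dist[i][j] for j in order[i][:k] if dist[i][j] > 0]
        sigma.append(median(nonzero) if nonzero else 1.0)  # assumed fallback
    # Label-aware edges with locally scaled Gaussian weights.
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        if y[i] == 0:
            neighbors = order[i][:k]                              # unlabeled: keep all
        else:
            neighbors = [j for j in order[i] if y[j] == 0][:k]    # labeled: unlabeled only
        for j in neighbors:
            A[i][j] = math.exp(-dist[i][j] ** 2 / (sigma[i] * sigma[j]))
    # Row-normalize, symmetrize by averaging (assumption), then form I - M.
    P = []
    for row in A:
        total = sum(row)
        P.append([a / total if total > 0 else 0.0 for a in row])
    M = [[0.5 * (P[i][j] + P[j][i]) for j in range(n)] for i in range(n)]
    L = [[(1.0 if i == j else 0.0) - M[i][j] for j in range(n)] for i in range(n)]
    return A, M, L


# Toy example: a normal-side cluster and an anomaly-side cluster.
Z = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (1.0, 1.0), (1.1, 1.0)]
y = [1, 0, 0, -1, 0]
A, M, L = build_label_aware_graph(Z, y, k=2)
print(A[0][3], A[3][0])   # labeled nodes do not link to each other
print(A[1][0] > 0)        # unlabeled nodes may link to labeled neighbors
```

The pairwise distance table is quadratic in the number of samples, so a practical implementation would use an approximate or tree-based neighbor search instead.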

3.3. Loss Components and Overall Objective

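Let $\mathcal{D}_N$, $\mathcal{D}_A$, and $\mathcal{D}_U$ denote the sets of labeled normals, labeled anomalies, and unlabeled samples, with cardinalities $n_N$, $n_A$, and $n_U$, respectively. For each training sample $x_i$, we write $z_i = \phi(x_i; W)$ for its latent embedding and

$$d_i^2 = \|z_i - c\|_2^2$$

for its squared distance to the center. We collect these distances into the following vector:

$$\mathbf{d} = (d_1^2, \dots, d_n^2)^\top \in \mathbb{R}^{n}.$$

Throughout this subsection, we use the notation $(u)_+ = \max\{0, u\}$ for the hinge operator, and we write hinge-type penalties with an exponent $p \in \{1, 2\}$.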

AAG-DSVDD combines four primary components: a graph-based regularizer on the squared-distance field, a soft-boundary enclosure on labeled normals, a margin-based push-out on labeled anomalies, and an unlabeled center-pull, together with a soft-boundary radius term and standard weight decay.

3.3.1. Graph Regularizer on Squared Distances

Using the symmetric matrix L defined in (5), which encodes the latent k-NN graph, we define a smoothness penalty on the squared distances as follows:

$$\mathcal{R}_{\mathrm{graph}} = \mathbf{d}^\top L \, \mathbf{d},$$

where $\mathbf{d} = (d_1^2, \dots, d_n^2)^\top$ collects the squared distances $d_i^2$. Since $L = I - M$ with M the normalized, symmetrized affinity matrix from Section 3.2, we can rewrite

$$\mathcal{R}_{\mathrm{graph}} = \|\mathbf{d}\|_2^2 - \mathbf{d}^\top M \mathbf{d}.$$

For a given overall scale $\|\mathbf{d}\|_2^2$, minimizing $\mathcal{R}_{\mathrm{graph}}$ is equivalent to maximizing $\mathbf{d}^\top M \mathbf{d}$. Because $\mathbf{d}^\top M \mathbf{d} = \sum_{i,j} M_{ij} \, d_i^2 d_j^2$ and $M_{ij}$ is larger on pairs of nodes that are strongly connected in the latent k-NN graph, the term becomes large when neighboring nodes with high affinity carry similar squared distances. Thus, configurations in which $d_i^2$ varies smoothly over high-affinity regions of the graph are favored, whereas sharp changes in $d_i^2$ across strongly connected nodes tend to increase $\mathcal{R}_{\mathrm{graph}}$.

In effect, $\mathcal{R}_{\mathrm{graph}}$ encourages neighboring samples in latent space to have compatible anomaly scores $d_i^2$, allowing for information from the limited labeled normals and anomalies to diffuse into the unlabeled pool.
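The smoothing effect can be checked numerically on a two-node graph. The sketch below assumes the quadratic form is taken with the identity minus the symmetrized affinity matrix M and carries no extra normalization constant; the function name and the toy values are hypothetical.

```python
def graph_penalty(d, M):
    """Quadratic form d^T (I - M) d = ||d||^2 - d^T M d for a symmetric M."""
    n = len(d)
    coupling = sum(M[i][j] * d[i] * d[j] for i in range(n) for j in range(n))
    return sum(v * v for v in d) - coupling


# Two strongly connected nodes (off-diagonal affinity 1).
M = [[0.0, 1.0],
     [1.0, 0.0]]

smooth = [1.0, 1.0]   # neighbors share the same squared distance
rough = [1.4, 0.2]    # same overall scale (1.96 + 0.04 = 2), uneven split

print(graph_penalty(smooth, M))
print(graph_penalty(rough, M))
```

Both vectors have squared norm 2, but the uneven one has a smaller coupling term (2 x 1.4 x 0.2 = 0.56 instead of 2), so its penalty is larger.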

3.3.2. Labeled-Normal Soft-Boundary Enclosure

For labeled normals $x_i \in \mathcal{D}_N$, the model should treat the hypersphere of radius R as a soft boundary: most normal embeddings are expected to lie inside, while a small fraction of violations is tolerated. Using the squared distances $d_i^2$, we penalize such violations through a hinge-type term:

$$\mathcal{L}_N = \frac{1}{\nu \, n_N} \sum_{x_i \in \mathcal{D}_N} \left( d_i^2 - R^2 \right)_+^{p},$$

where $(\cdot)_+$ denotes the hinge operator, $\nu \in (0, 1]$ is a soft-boundary parameter, and $p$ controls the curvature of the penalty. Only labeled normals with $d_i^2 > R^2$ contribute to $\mathcal{L}_N$. When a normal sample lies inside the hypersphere ($d_i^2 \le R^2$), its contribution is zero, whereas normals outside the boundary incur a positive loss that grows as $(d_i^2 - R^2)^p$. Normalizing by $\nu \, n_N$ ensures that the penalty scales with the proportion of violating normals rather than their absolute count, so that the effective tightness of the hypersphere is controlled by $\nu$, as in soft-boundary Deep SVDD [13], and not by the number of labeled normal samples. In particular, smaller values of $\nu$ correspond to a tighter enclosure (fewer tolerated violations), while larger values allow for a greater fraction of labeled normals to lie near or beyond the boundary.

3.3.3. Labeled-Anomaly Margin Push-Out

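For labeled anomalies $x_i \in \mathcal{D}_A$, we enforce a clearance margin $m > 0$ beyond the hypersphere:

$$\mathcal{L}_A = \frac{\Omega}{n_A} \sum_{x_i \in \mathcal{D}_A} \left( R^2 + m - d_i^2 \right)_+^{p},$$

where $\Omega \ge 0$ is an anomaly weight coefficient. This term is active only when an anomaly lies inside the hypersphere or within the margin region $R^2 \le d_i^2 < R^2 + m$. Under such conditions, $\mathcal{L}_A$ pushes its embedding farther from the center, either by increasing $d_i^2$ or, through the joint optimization, discouraging increases in $R$ that would otherwise encapsulate it.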

3.3.4. Unlabeled Center-Pull

Unlabeled samples provide dense coverage of the data manifold but may contain contamination. To stabilize the global scale of the squared-distance field and keep the center anchored when $n_N$ is small, we apply a label-free pull-in on unlabeled distances:

$$\mathcal{L}_U = \frac{1}{n_U} \sum_{x_i \in \mathcal{D}_U} d_i^2.$$

This term reduces the mean of $d_i^2$ over the unlabeled pool toward $0$, complementing the local smoothness imposed by $\mathcal{R}_{\mathrm{graph}}$. Its influence is controlled by a coefficient $\eta_U \ge 0$.
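The three point-wise terms described in Sections 3.3.2 to 3.3.4 can be sketched on precomputed squared distances. The normalizations (division by the soft-boundary parameter times the number of labeled normals, by the number of labeled anomalies, and by the number of unlabeled samples), as well as all function and parameter names, are assumptions made for illustration.

```python
def positive_part(u):
    """Hinge operator: max(0, u)."""
    return max(0.0, u)


def normal_enclosure(sq_dists, radius, nu, p=2):
    """Soft-boundary penalty on labeled normals lying outside the hypersphere."""
    total = sum(positive_part(d - radius ** 2) ** p for d in sq_dists)
    return total / (nu * len(sq_dists))


def anomaly_pushout(sq_dists, radius, margin, weight=1.0, p=2):
    """Penalty on labeled anomalies that are not at least `margin` beyond radius**2."""
    total = sum(positive_part(radius ** 2 + margin - d) ** p for d in sq_dists)
    return weight * total / len(sq_dists)


def unlabeled_pull(sq_dists):
    """Mean squared distance of unlabeled samples to the center."""
    return sum(sq_dists) / len(sq_dists)


# Toy values with radius 1: one normal inside, one outside at squared distance 2.
print(normal_enclosure([0.5, 2.0], radius=1.0, nu=0.5, p=2))
# Anomalies at 0.5 (inside), 1.2 (in the margin band), 3.0 (clear of the margin).
print(anomaly_pushout([0.5, 1.2, 3.0], radius=1.0, margin=0.5, weight=2.0, p=1))
print(unlabeled_pull([1.0, 3.0]))
```

Only the normal outside the sphere and the two anomalies below the margin level contribute; the anomaly at squared distance 3.0 incurs no loss.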

3.3.5. Overall Objective

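Combining all components, the full AAG-DSVDD loss is

$$\mathcal{L} = R^2 + \mathcal{L}_N + \mathcal{L}_A + \eta_U \, \mathcal{L}_U + \lambda_G \, \mathcal{R}_{\mathrm{graph}} + \frac{\lambda}{2} \sum_{\ell} \|W^{(\ell)}\|_F^2, \tag{10}$$

where $\mathcal{L}_N$, $\mathcal{L}_A$, $\mathcal{L}_U$, and $\mathcal{R}_{\mathrm{graph}}$ are the labeled-normal, labeled-anomaly, unlabeled, and graph terms defined above, $\lambda_G \ge 0$ scales the graph regularizer, $\eta_U \ge 0$ controls the unlabeled center-pull, and $\lambda \ge 0$ is a weight-decay coefficient on the encoder parameters $W$. The explicit term $R^2$ discourages unnecessarily large hyperspheres; together with $\mathcal{L}_N$ and the soft-boundary radius update based on labeled normals, it yields behavior analogous to soft-boundary Deep SVDD, now coupled with anomaly margins, unlabeled pull-in, and graph-based smoothing. When $\lambda_G = 0$ and $\eta_U = 0$, the objective reduces to a soft-boundary Deep SVDD-like loss acting on labeled normals and labeled anomalies. Further setting the anomaly weight $\Omega = 0$ recovers the standard soft-boundary Deep SVDD formulation on labeled normals alone.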

The exponent $p$ shapes the hinge-type penalties in $\mathcal{L}_N$ and $\mathcal{L}_A$. For $p = 1$, violations are penalized linearly, yielding piecewise-constant gradients whenever the hinge is active. For $p = 2$, the penalty is quadratic in the violation, and the gradients scale linearly with its magnitude. In our experiments, we use $p = 2$, which empirically produces smoother, magnitude-aware updates and improves training stability, particularly in the presence of scarce labels and the additional graph regularizer.
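The difference between the linear and quadratic hinge can be seen from the derivative of the penalty with respect to the violation. In the sketch below, `u` stands for the argument of the hinge and `p` for the exponent; both names and the helper function are illustrative.

```python
def hinge_power_grad(u, p):
    """Derivative of max(0, u)**p with respect to u (taken as 0 for u <= 0)."""
    if u <= 0:
        return 0.0
    return float(p) * u ** (p - 1)


for violation in (0.1, 3.0):
    linear = hinge_power_grad(violation, p=1)      # constant slope once active
    quadratic = hinge_power_grad(violation, p=2)   # slope grows with the violation
    print(violation, linear, quadratic)
```

With the linear hinge, a small and a large violation produce the same gradient magnitude, whereas the quadratic hinge gives small violations small updates and large violations large ones.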

The different components of (10) interact to shape the decision boundary in a neighborhood-aware manner. The labeled-normal term $\mathcal{L}_N$ and the radius R establish a soft hypersphere around $c$ that encloses most labeled normals and treats their squared distances as a reference scale for normal behavior. The anomaly margin term $\mathcal{L}_A$ pushes labeled anomalies beyond $R^2$ by at least m, so that regions of latent space associated with anomalous patterns are encouraged to have larger $d_i^2$ and lie outside the hypersphere. The unlabeled center-pull $\mathcal{L}_U$ stabilizes the global level of the squared-distance field when labeled normals are few, preventing uncontrolled drift of $d_i^2$ for unlabeled samples. Finally, the graph regularizer $\mathcal{R}_{\mathrm{graph}}$ couples these effects along the latent k-NN graph by favoring configurations in which strongly connected neighbors have compatible squared distances. As a result, unlabeled samples that are well embedded in normal-dominated neighborhoods tend to inherit small $d_i^2$ and be enclosed by the decision boundary, whereas unlabeled samples whose neighborhoods are influenced by anomalies are pushed toward larger $d_i^2$ and are more likely to lie outside the hypersphere, receiving higher anomaly scores.

Taken together, this objective provides several practical advantages for semi-supervised anomaly detection under limited labels. First, it generalizes soft-boundary Deep SVDD and retains the DeepSAD-style use of labeled anomalies and unlabeled data. By suitable choices of the anomaly weight $\Omega$, the unlabeled coefficient $\eta_U$, and the graph weight $\lambda_G$, the practitioner can interpolate between purely one-class training on labeled normals, supervised separation of labeled anomalies, and graph-regularized semi-supervision on unlabeled data. Second, the label-aware graph term lets scarce anomaly labels influence whole latent neighborhoods rather than single points, which improves robustness in high-dimensional and partially labeled settings such as fire monitoring. Third, the additional structure appears only in the training loss. At test time, AAG-DSVDD keeps the simple distance-to-center scoring rule $s(x) = \|\phi(x; W) - c\|_2^2$, so inference remains lightweight and easy to interpret.

Finally, AAG-DSVDD also offers practitioners a practical way to obtain sample-level interpretation, which is important when most observations are unlabeled. Because the latent space has been shaped by the label-aware k-NN graph and its regularizer, nearby points with strong graph connections tend to share similar anomaly scores. A k-NN search in this space can therefore identify labeled neighbors for any unlabeled sample, whose labels and contextual descriptions provide an example-based interpretation of its anomaly score. In practice, this neighborhood inspection allows operators to see whether a high anomaly score is associated primarily with labeled anomalous patterns or with benign operating conditions, yielding a transparent, example-based explanation for each unlabeled sample.
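The neighborhood inspection described above amounts to a nearest-neighbor lookup restricted to labeled embeddings. The following sketch uses a brute-force search; the function name, the label convention (+1 normal, -1 anomaly), and the toy embeddings are assumptions for illustration.

```python
import math
from collections import Counter


def nearest_labeled_neighbors(z_query, Z_labeled, y_labeled, k=3):
    """Return indices of the k closest labeled embeddings and a label tally."""
    ranked = sorted(range(len(Z_labeled)),
                    key=lambda j: math.dist(z_query, Z_labeled[j]))[:k]
    tally = Counter(y_labeled[j] for j in ranked)
    return ranked, tally


# Two labeled normals near the origin, two labeled anomalies near (3, 3).
Z_labeled = [(0.0, 0.0), (0.2, 0.0), (3.0, 3.0), (3.2, 3.0)]
y_labeled = [1, 1, -1, -1]

indices, tally = nearest_labeled_neighbors((2.9, 3.1), Z_labeled, y_labeled, k=2)
print(indices, dict(tally))
```

For this query, both retrieved neighbors are labeled anomalies, which an operator could read as supporting a high anomaly score; a tally dominated by labeled normals would instead suggest a benign operating condition.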

3.4. Optimization and Anomaly Scoring

The encoder parameters W and the soft-boundary radius R are optimized by stochastic gradient methods with mini-batch training, combined with periodic closed-form updates of $R$ and periodic reconstruction of the latent k-NN graph. This procedure follows standard Deep SVDD practice while incorporating the additional semi-supervised and graph-based terms in (10). In our implementation, training runs for $T$ epochs with mini-batches of size B.

We first perform a short warm-up phase on labeled normals in . During warm-up, the encoder is trained for a few epochs to minimize the average squared norm of the embeddings:

which stabilizes the latent representation around the origin. After the warm-up phase, we determine the center as the empirical average of the embeddings corresponding to the labeled normal samples:

and then keep fixed. We employ a block-coordinate strategy for the soft-boundary radius. Following the standard training procedure of the soft-boundary Deep SVDD [3,13], we do not backpropagate through R. Instead, we treat as a scalar quantity that is updated independently of the gradient-based optimization. Using the current distances of the labeled normal samples, we set

Here the radius is set from the empirical (1 − ν)-quantile of these distances, where ν controls the tolerated fraction of normal violations. During training, R is held fixed within each epoch while W is updated, and after selected epochs we refresh R by reapplying the same quantile rule to the current squared distances of labeled normals. In practice, this refresh is performed at a fixed epoch interval, starting from a user-specified epoch. This yields a soft-boundary behavior, in which roughly a fraction ν of normals may lie on or outside the hypersphere, matching the interpretation of ν in the original soft-boundary Deep SVDD formulation [13].
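The center initialization and the quantile rule for the radius can be sketched as follows. This is a minimal sketch under stated assumptions: the helper names are hypothetical, the quantile uses a nearest-rank rule (the paper's exact quantile estimator is not recoverable from the text), and the rule is applied to squared distances, which is equivalent to applying it to distances because squaring is monotone on non-negative values.

```python
import math

def mean_center(embeddings):
    """Center = coordinate-wise mean of the labeled-normal embeddings."""
    n, dim = len(embeddings), len(embeddings[0])
    return [sum(z[j] for z in embeddings) / n for j in range(dim)]

def squared_distances(embeddings, center):
    """Squared Euclidean distance of each embedding to the center."""
    return [sum((a - b) ** 2 for a, b in zip(z, center)) for z in embeddings]

def quantile_radius_sq(sq_dists, nu):
    """Empirical (1 - nu)-quantile of squared distances (nearest-rank rule).

    The small epsilon guards against floating-point round-up in the rank.
    """
    ordered = sorted(sq_dists)
    rank = math.ceil((1.0 - nu) * len(ordered) - 1e-9)   # 1-based rank
    rank = min(max(rank, 1), len(ordered))
    return ordered[rank - 1]

# Toy squared distances of ten labeled normals (illustrative values).
toy_sq_dists = [float(v) for v in range(10)]
radius_sq = quantile_radius_sq(toy_sq_dists, nu=0.2)
outside = sum(1 for d in toy_sq_dists if d > radius_sq)   # normals left outside
```

With ν = 0.2 and ten labeled normals, two points fall strictly outside the refreshed radius, which matches the "tolerated fraction" reading of ν.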

Within each epoch, we sample mini-batches of size B from the combined training set of labeled and unlabeled samples. For each mini-batch, we compute the embeddings, their squared distances to the center, and the batch contributions of the labeled-normal, labeled-anomaly, and unlabeled terms. The graph term is not evaluated at the mini-batch level. Gradients of the resulting mini-batch loss are backpropagated through the encoder parameters W, while the radius R, the center, and the graph matrix L are treated as constants. We use the Adam optimizer [40] with weight decay to minimize (10) with respect to W, with the radius and center held fixed.

To integrate the graph regularizer, we periodically reconstruct the label-aware latent k-NN graph using the current embeddings. At the start of each graph-refresh interval, we first compute the embeddings of all training samples, then rebuild the label-aware k-NN graph with local scaling, and finally construct the symmetric matrix L, as defined in Section 3.2. This matrix L is kept fixed until the next refresh. During this interval, if the graph weight is nonzero, we perform a separate full-batch gradient update on the graph term based on the current embeddings, thereby updating W while keeping L unchanged. We do not backpropagate gradients through the graph construction process or the quantile updates of R. Consequently, the optimization procedure alternates between mini-batch updates on the labeled and unlabeled objectives and occasional full-batch updates that smooth the squared-distance field over the latent k-NN graph.
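To make the graph term concrete, the following sketch builds a plain k-NN graph with locally scaled Gaussian affinities and evaluates a smoothness penalty on the squared distances. Several choices here are assumptions rather than the paper's definitions: the local scale is taken as the distance to the k-th neighbor, the penalty is the unnormalized Laplacian form, and the label-aware edge restrictions of Section 3.2 are omitted. The function names are hypothetical.

```python
import math

def knn_local_scaling_graph(Z, k):
    """Symmetric k-NN affinities with local scaling.

    Returns a dict {(i, j): w_ij} with i < j, so it holds at most n * k edges.
    """
    n = len(Z)
    neighbors, sigma = [], []
    for i in range(n):
        order = sorted((math.dist(Z[i], Z[j]), j) for j in range(n) if j != i)[:k]
        neighbors.append(order)
        sigma.append(max(order[-1][0], 1e-12))   # local scale: k-th neighbor distance
    edges = {}
    for i in range(n):
        for dist_ij, j in neighbors[i]:
            w = math.exp(-dist_ij ** 2 / (sigma[i] * sigma[j]))
            key = (min(i, j), max(i, j))         # store each undirected edge once
            edges[key] = w
    return edges

def graph_smoothness(edges, sq_dists):
    """Sum over edges of w_ij * (d_i - d_j)^2, i.e. d^T (D - W) d."""
    return sum(w * (sq_dists[i] - sq_dists[j]) ** 2 for (i, j), w in edges.items())

# Two well-separated toy clusters in a 2-D latent space (illustrative values).
Z = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [1.0, 1.0], [1.1, 1.0], [1.0, 1.1]]
edges = knn_local_scaling_graph(Z, k=2)
smooth = graph_smoothness(edges, [0.0, 0.0, 0.0, 1.0, 1.0, 1.0])
rough = graph_smoothness(edges, [0.0, 1.0, 0.0, 1.0, 0.0, 1.0])
```

The penalty vanishes when neighboring points share the same squared distance and grows when connected points disagree. Because only the stored edges are visited, one evaluation costs a number of operations proportional to the edge count.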

Given the trained encoder parameters, radius, and center, we compute the anomaly score of any test sample as the signed squared radial distance:

By this convention, points outside the hypersphere have positive scores and are treated as anomalies, inliers have negative scores, and boundary points have a score of zero. A decision threshold on the score is chosen on a held-out validation set, for example, by targeting a desired false-alarm rate on validation normals or by maximizing a suitable detection metric. At test time, the graph is not required and AAG-DSVDD behaves as a standard center-based one-class model. Each input is processed by a single forward pass through the encoder to obtain its embedding, and the anomaly score is computed from the squared distance:

The AAG-DSVDD algorithm is summarized in Algorithm 1. The scoring and decision rule are summarized in Algorithm 2.
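The scoring rule can be written as a short function. This is a sketch of the signed squared radial distance described above, with hypothetical function names; the embedding `z` is assumed to be the output of the trained encoder, and a threshold of zero corresponds to the hypersphere itself.

```python
def anomaly_score(z, center, radius_sq):
    """Signed squared radial distance: ||z - c||^2 - R^2."""
    sq_dist = sum((zi - ci) ** 2 for zi, ci in zip(z, center))
    return sq_dist - radius_sq

def is_anomaly(score, threshold=0.0):
    """Flag a sample when its score exceeds the decision threshold."""
    return score > threshold

# Toy center and squared radius (illustrative values).
center, radius_sq = [0.0, 0.0], 1.0
inside = anomaly_score([0.5, 0.5], center, radius_sq)    # 0.5 - 1.0
outside = anomaly_score([1.0, 1.0], center, radius_sq)   # 2.0 - 1.0
```

No graph structure appears in this computation, which is why inference cost matches that of a standard center-based one-class model.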

Computational Complexity

From a computational standpoint, AAG-DSVDD shares the same per-epoch feed-forward and backpropagation cost as DeepSVDD and DeepSAD, since all three methods use the same encoder architecture and mini-batch training scheme. For n training samples and mini-batch size B, this per-epoch cost is proportional to roughly n/B mini-batch forward–backward passes through the encoder. The additional overhead in AAG-DSVDD comes from the graph regularization, and it is kept small by the locally connected structure of the label-aware latent k-NN graph. Although the graph matrix L has dimensions n × n, it is sparse: each node is connected to at most k neighbors, and edges originating from labeled samples are further restricted to connect only to unlabeled neighbors. Consequently, the number of non-zero entries in L scales linearly with n rather than quadratically, which allows the graph regularization term to be evaluated in O(nk) operations. Furthermore, the cost of the neighbor search, typically performed with spatial indexing, is amortized by rebuilding the graph only at the graph-refresh intervals, independent of the encoder’s gradient iterations. Finally, the graph is used solely for regularization during training. At inference, the model requires only a single forward pass through the encoder to compute the distance to the center, thereby maintaining the same fast inference speed as standard DeepSVDD and DeepSAD.

Regarding memory usage, the main additional cost compared to DeepSVDD and DeepSAD comes from storing the latent embedding matrix and the sparse graph matrix L, which has O(nk) non-zero entries. For the datasets examined in this paper, these requirements are easily accommodated on a single GPU or CPU. For larger-scale or streaming deployments, two mitigation strategies are particularly effective: (i) constructing the graph using only a subset of unlabeled samples or using mini-batch centroids, thereby reducing the effective n that determines the graph size; and (ii) lowering the graph refresh frequency or turning off the graph term after a fixed number of epochs once the latent representation has stabilized. These strategies enable users to balance graph accuracy against training overhead, while preserving the lightweight inference-time characteristics necessary for real-time fire monitoring.

Algorithm 1.

AAG-DSVDD (training).

Algorithm 2.

AAG-DSVDD scoring and optional threshold selection.

3.5. Calibration of AAG-DSVDD Hyperparameters

The hyperparameters of AAG-DSVDD fall into three groups: (i) encoder capacity, (ii) graph construction, and (iii) loss weights. Encoder capacity is controlled by the latent dimension, hidden-layer sizes, network depth, and the choice of encoder type (MLP or LSTM); together, these determine how flexible the representation is. The latent k-NN graph is governed by the neighborhood size k and the locally scaled Gaussian kernel used to build the affinity matrix. The learning objective includes the soft-boundary parameter ν, the anomaly weight and margin m for labeled anomalies, the unlabeled center-pull weight, the graph smoothness weight, the hinge exponent r, and the weight decay.

All hyperparameters are selected using a held-out validation set, following a simple protocol. First, we fix a small number of encoder architectures (latent dimensions and depths) to avoid overfitting the network structure. Second, for each encoder candidate, we perform a grid search over the scalar coefficients and structural graph parameters. Scalar hyperparameters, such as the soft-boundary parameter, the anomaly weight, the margin m, the unlabeled and graph weights, and the weight decay, are tuned on logarithmic or linear grids. Structural parameters, such as k, the latent dimension, and the depth, are chosen from discrete sets of candidate values. The final configuration is selected based on validation performance.

Within this validation-based tuning protocol, encoder choices determine the expressive capacity of the learned representation, while the remaining hyperparameters control how this representation is converted into a decision boundary. The loss coefficients directly shape the decision boundary. The soft-boundary parameter ν has the same role as in soft-boundary Deep SVDD. Smaller values tolerate fewer labeled normals outside the hypersphere and therefore enforce a stricter enclosure of the labeled normals. The anomaly weight and the margin m determine the strength and extent to which labeled anomalies are pushed away from the center. Larger values emphasize separation, but they can overreact to a small number of anomaly labels. The unlabeled weight determines how strongly the unlabeled pool influences the learned boundary. A high unlabeled weight lets the boundary track the overall unlabeled distribution, which is useful when contamination is low. A low unlabeled weight keeps the model closer to a purely labeled one-class setting. The graph weight scales the smoothness penalty on squared distances over the latent k-NN graph. A larger graph weight propagates supervision more strongly along the manifold but may oversmooth the boundary, often shrinking the effective decision region when the graph is dominated by normal and unlabeled samples. A smaller graph weight limits geometric coupling and allows for more locally adaptive decision boundaries. The neighborhood size k controls graph density and the scale of smoothing. A larger k yields broader and more global regularization. A smaller k produces more localized and fine-grained boundaries.

4. Experiments on Synthetic and Public Datasets

We first study AAG-DSVDD in a controlled two-dimensional setting, where the geometry of normals, anomalies, and the learned decision boundary can be visualized. We compare AAG-DSVDD against DeepSVDD [13], DeepSAD [8], OC-SVM with a radial basis function (RBF) kernel (OC-SVM) [12], and classical SVDD with an RBF kernel (SVDD-RBF) [11]. We then compare the same methods on widely used public anomaly detection datasets from the UCI repository. All methods operate in the same semi-supervised setting, where only a small fraction of the training normals and anomalies are labeled and the remaining training points form a contaminated unlabeled pool.

4.1. Synthetic Dataset Experiments

4.1.1. Synthetic Dataset Description

We generate a two-dimensional dataset with a curved normal manifold and off-manifold anomalies. Normal samples are obtained by first drawing a Gaussian vector with diagonal covariance and then applying a quadratic bending transform:

followed by global scaling and the addition of small Gaussian noise. The parameters are set to , , bend , and noise level . This procedure produces a nonlinearly curved, banana-shaped normal region.

Anomalies are drawn from a mixture of two Gaussian distributions located above and below the banana. Concretely, half of the anomalous points are sampled from

and the remaining half from

with small Gaussian noise added. In all experiments, we generate 1200 normal points and 800 anomalies, for a total of 2000 observations in .
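The generation procedure can be sketched as below. The class counts (1200 normals, 800 anomalies) come from the text, but every numeric parameter in the signature (`sigma1`, `sigma2`, `bend`, `scale`, `noise`, `offset`, `anomaly_std`) is a placeholder chosen for illustration, not a value from the paper. The specific bend, which adds a quadratic function of the first coordinate to the second, is likewise an assumed form of the transform.

```python
import random

def make_banana(n_normal=1200, n_anomaly=800, seed=0,
                sigma1=2.0, sigma2=0.5, bend=0.2, scale=1.0, noise=0.05,
                offset=4.0, anomaly_std=0.5):
    """Return (points, y_true) with y_true = 0 for normals and 1 for anomalies.

    All default parameter values are hypothetical placeholders.
    """
    rng = random.Random(seed)
    points, y_true = [], []
    for _ in range(n_normal):
        u1, u2 = rng.gauss(0.0, sigma1), rng.gauss(0.0, sigma2)
        x1, x2 = u1, u2 + bend * u1 ** 2            # assumed quadratic bend
        points.append((scale * x1 + rng.gauss(0.0, noise),
                       scale * x2 + rng.gauss(0.0, noise)))
        y_true.append(0)
    for i in range(n_anomaly):
        side = 1.0 if i < n_anomaly // 2 else -1.0  # half above, half below
        points.append((rng.gauss(0.0, anomaly_std) + rng.gauss(0.0, noise),
                       rng.gauss(side * offset, anomaly_std) + rng.gauss(0.0, noise)))
        y_true.append(1)
    return points, y_true

points, y_true = make_banana()
```

Fixing the seed makes each generated dataset reproducible, which is convenient when the whole pipeline is repeated over several seeds.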

The synthetic datasets are randomly partitioned at the observation level into training, validation, and test sets. We first reserve of the data as a test set. From the remaining , we select as a validation set using stratified sampling, so that the proportions of normal and anomalous points are preserved in each split. This yields approximately of the data for training, for validation, and for testing. Within the training set, we construct a semi-supervised labeling scheme by retaining 10% of the training normals as labeled normals and 10% of the training anomalies as labeled anomalies. All remaining training points are treated as unlabeled. This results in a setting where only a small subset of both classes is labeled, while the unlabeled pool contains a mixture of normals and anomalies, replicating realistic contamination in semi-supervised anomaly detection.
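The partitioning and labeling steps can be sketched as follows. The split fractions passed in the example (`test_frac=0.2`, `val_frac=0.2`) are placeholders, since the paper's exact percentages are not recoverable here; the 10% label budgets match the setting stated for the synthetic experiments. The encoding of labeled normals as +1, labeled anomalies as −1, and unlabeled points as 0 follows the usual DeepSAD-style convention and is an assumption, as are the function names.

```python
import random

def stratified_split(y_true, test_frac, val_frac, seed=0):
    """Return (train, val, test) index lists that preserve class proportions.

    val_frac is applied to what remains after the test set is removed.
    """
    rng = random.Random(seed)
    train, val, test = [], [], []
    for cls in sorted(set(y_true)):
        idx = [i for i, y in enumerate(y_true) if y == cls]
        rng.shuffle(idx)
        n_test = round(test_frac * len(idx))
        n_val = round(val_frac * (len(idx) - n_test))
        test += idx[:n_test]
        val += idx[n_test:n_test + n_val]
        train += idx[n_test + n_val:]
    return train, val, test

def semi_supervised_labels(train_idx, y_true, frac_normal, frac_anomaly, seed=0):
    """Map each training index to +1 (labeled normal), -1 (labeled anomaly), or 0."""
    rng = random.Random(seed)
    labels = {i: 0 for i in train_idx}
    for cls, frac, value in ((0, frac_normal, 1), (1, frac_anomaly, -1)):
        pool = [i for i in train_idx if y_true[i] == cls]
        for i in rng.sample(pool, round(frac * len(pool))):
            labels[i] = value
    return labels

# 1200 normals (0) and 800 anomalies (1); split fractions are placeholders.
y_true = [0] * 1200 + [1] * 800
train, val, test = stratified_split(y_true, test_frac=0.2, val_frac=0.2)
labels = semi_supervised_labels(train, y_true, frac_normal=0.1, frac_anomaly=0.1)
```

The true class of every point labeled 0 stays hidden from the model, so the unlabeled pool is contaminated by construction.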

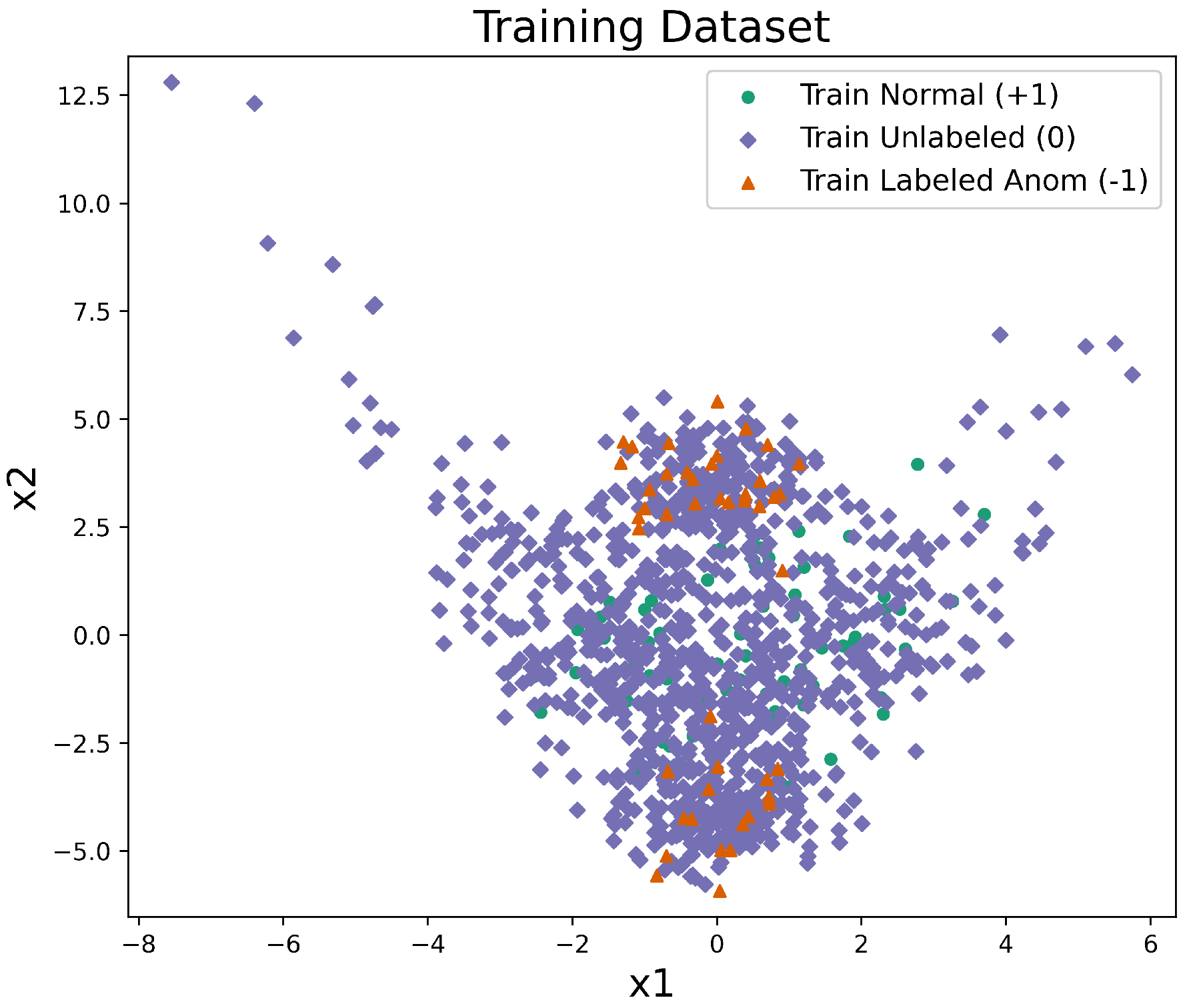

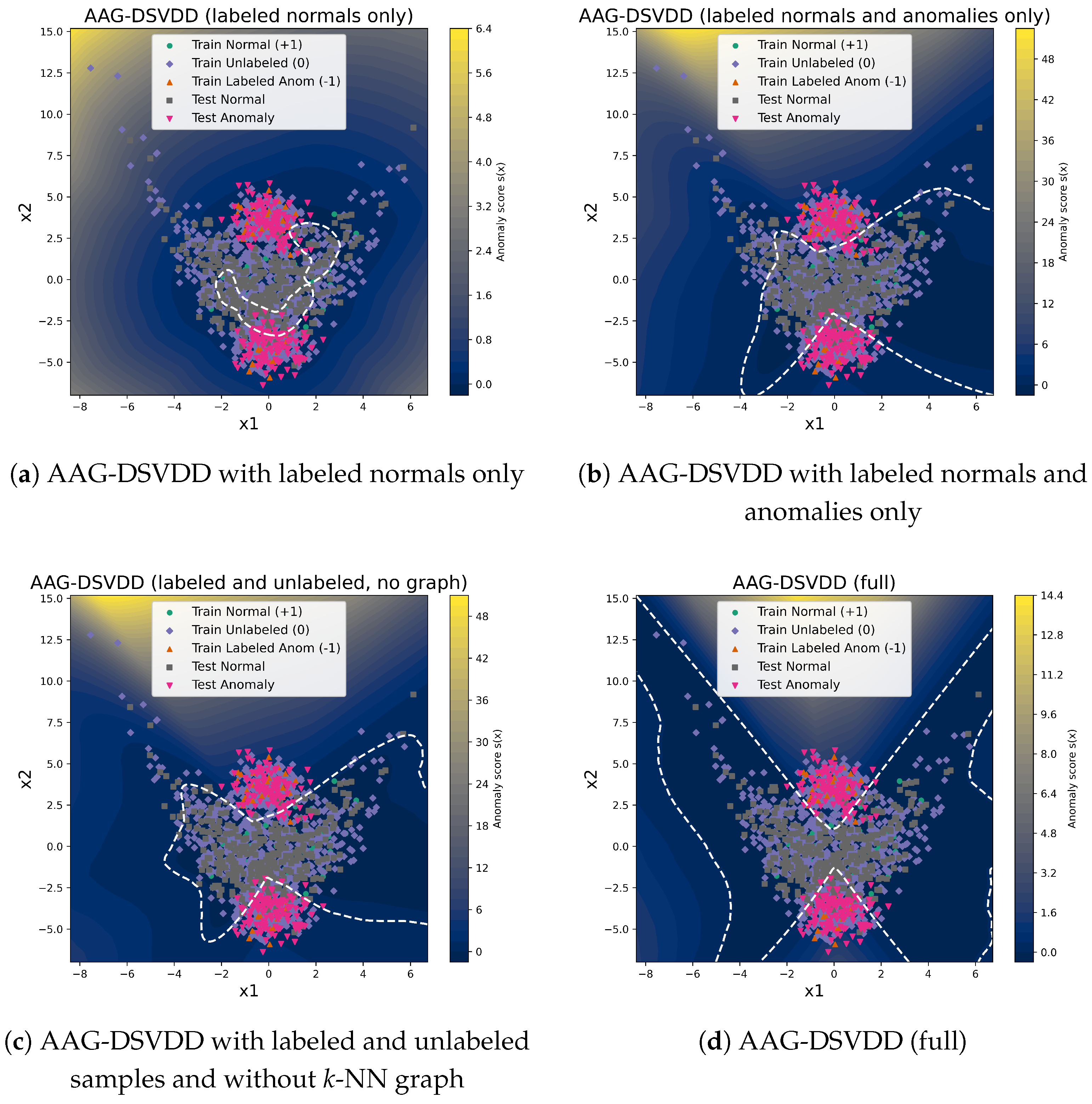

The same observation-level train/validation/test partitions and label budgets are used for all methods to ensure a fair comparison. For the deep methods, AAG-DSVDD and DeepSAD are trained on the full training set, using the labeled normals and labeled anomalies together with the unlabeled points. In contrast, DeepSVDD is trained only on the labeled normal samples, representing a supervised deep one-class baseline. The classical OC-SVM and SVDD-RBF baselines are trained on the training normals only, ignoring unlabeled and anomaly samples during training. The validation set is used exclusively for hyperparameter selection, while the test set is held out for final evaluation. A sample training dataset from an experiment is shown in Figure 1.

Figure 1.

Example training split for the synthetic banana dataset under the semi-supervised labeling scheme. Labeled normals are shown as green circles, labeled anomalies (label −1) as orange triangles, and unlabeled points as purple diamonds whose true class is hidden during training.

All experiments on the synthetic and public datasets were implemented on a Windows workstation equipped with a 13th Gen Intel Core i9-13900K (3.00 GHz), 64 GB of RAM, and an NVIDIA RTX 4090 GPU.

4.1.2. Evaluation Metrics for Synthetic Dataset Experiments

All methods output an anomaly score , with larger values indicating more anomalous points. For AAG-DSVDD, we use the signed squared radial distance:

DeepSVDD and DeepSAD use their standard distance-based scores in latent space, which have the same form up to the learned center and radius. For OC-SVM and SVDD-RBF, we use their native decision functions, with signs adjusted where necessary, so that higher values correspond to a higher degree of abnormality.

For each method and each random seed, we select a decision threshold on the validation set by scanning candidate thresholds and choosing the one that maximizes the F1-score on the validation split. On the held-out test set, an observation is then classified as anomalous if its score exceeds this threshold and normal otherwise. The anomaly class is treated as the positive class in all evaluations.
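The threshold scan can be sketched as follows. This is a minimal illustration with hypothetical function names; it assumes that the candidate thresholds are the observed validation scores plus one value below the minimum, and that a point is flagged when its score is strictly greater than the threshold. The paper's exact candidate grid and tie-breaking are not specified in the text.

```python
def f1_score(y_true, y_pred):
    """F1 with the anomaly class (label 1) as positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def select_threshold(val_scores, val_labels):
    """Return (threshold, F1) maximizing validation F1; first maximum wins."""
    candidates = [min(val_scores) - 1.0] + sorted(set(val_scores))
    best_tau, best_f1 = candidates[0], -1.0
    for tau in candidates:
        preds = [1 if s > tau else 0 for s in val_scores]
        f1 = f1_score(val_labels, preds)
        if f1 > best_f1:
            best_tau, best_f1 = tau, f1
    return best_tau, best_f1

# Toy validation scores and ground-truth labels (illustrative values).
val_scores = [-0.5, -0.2, 0.1, 0.4, 0.9]
val_labels = [0, 0, 0, 1, 1]
tau, best_f1 = select_threshold(val_scores, val_labels)
```

The selected threshold is then frozen and applied unchanged to the test scores.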

Given the resulting binary predictions, we compute the numbers of true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN) on the test set. From these we report precision, recall, F1-score, and accuracy:
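Writing TP, FP, TN, and FN for the numbers of true positives, false positives, true negatives, and false negatives, and taking the anomaly class as positive, the four metrics presumably follow their standard definitions:

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP}, &
\text{Recall} &= \frac{TP}{TP + FN}, \\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}, &
\text{Accuracy} &= \frac{TP + TN}{TP + FP + TN + FN}.
\end{align}
```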

To assess robustness to random initialization and sampling, we repeat the entire procedure over ten random seeds . For each method, we summarize performance by reporting the mean and standard deviation of the test F1-score across these seeds.

4.1.3. Hyperparameter Selection and Training Procedure

For the synthetic banana experiment, the hyperparameter settings for AAG-DSVDD, DeepSVDD, DeepSAD, OC-SVM, and SVDD-RBF were obtained through validation-based tuning and then kept fixed for all reported runs. Concretely, we first fixed the encoder architecture and then tuned the AAG-DSVDD hyperparameters on the validation split by a small grid search. Scalar coefficients in the objective () were selected from logarithmic or short linear grids. The neighborhood size of the latent k-NN graph was chosen from a discrete set of candidates. The final configuration corresponds to the setting that maximizes the validation F1-score subject to stable training behavior. The deep baselines were tuned in the same manner where DeepSVDD reuses the selected encoder and soft-boundary setting, and the labeled-term weight in DeepSAD is chosen from a short grid on the validation set. For OC-SVM and SVDD-RBF, we performed a small grid search over the one-class parameter and RBF kernel width around the scale heuristic and selected the configuration that maximizes validation F1. The one-class parameter was fixed at for all methods.

All deep methods use the same MLP encoder for fairness. The encoder maps to a 16-dimensional latent space through two hidden layers of sizes 256 and 128 with ReLU activations. AAG-DSVDD and DeepSAD are trained with the Adam optimizer. DeepSVDD is trained with the AdamW optimizer, which showed better performance on the validation split. All models are trained for 50 epochs, with mini-batches of size 128 and a learning rate of . The weight decay (decoupled weight decay in the case of AdamW) is set to for AAG-DSVDD and for DeepSVDD and DeepSAD.

For AAG-DSVDD we use the soft-boundary formulation of Section 3 with squared hinges (), soft-boundary parameter , anomaly weight , and margin . The unlabeled center-pull weight is set to , and the graph smoothness weight to , which provides a moderate level of graph-based regularization. The latent k-NN graph uses neighbors with the locally scaled Gaussian affinities of Section 3.2. The radius update schedule and graph-refresh schedule follow Section 3.4, with quantile-based updates of at level and reconstruction of the latent k-NN graph every two epochs.

DeepSVDD uses the same encoder architecture and soft-boundary parameter but is trained on labeled normals only, following the standard one-class setup. It omits anomaly margins, unlabeled pull-in, and graph regularization. DeepSAD is instantiated with the same encoder and optimizes the semi-supervised DeepSAD objective on the same labeled and unlabeled splits, with labeled-term weight . Unlabeled points contribute through the DeepSAD loss. Both AAG-DSVDD and DeepSAD exploit labeled anomalies, whereas DeepSVDD does not.

OC-SVM and SVDD-RBF are trained on the same training normals (ignoring anomaly labels) with an RBF kernel and . OC-SVM uses the scale heuristic for the kernel width (included in the scikit-learn OC-SVM library), while SVDD-RBF uses a fixed kernel width parameter . These methods serve as classical non-deep one-class baselines with fixed kernels in the input space, in contrast to the learned latent geometry of the deep methods.

4.1.4. Results and Analysis

Tables 1 and 2 compare the methods on the synthetic banana dataset. Table 1 reports the results of a single experiment. AAG-DSVDD achieves the best value on three of the four metrics: an F1 score of 0.89, an accuracy of 0.91, and a precision of 0.87. Its recall of 0.90 is lower than the 0.98 reached by DeepSAD, but DeepSAD attains a precision of only 0.49. DeepSVDD, OC-SVM, and SVDD-RBF also show imbalanced tradeoffs between recall and precision. DeepSAD and DeepSVDD favor recall and allow for many false alarms on normal samples, reducing their F1 scores and accuracies. OC-SVM and SVDD-RBF improve precision relative to the deep one-class baselines but still fall short of AAG-DSVDD in F1, recall, and accuracy.

Table 1.

Per-method test performance on the synthetic banana dataset under the semi-supervised setting. For each model F1, recall, precision, and accuracy are reported. Bold entries indicate the best value for each metric across all methods.

Table 2.

F1 performance on the synthetic banana dataset over 10 random seeds. The row “Mean ± Std” reports the average and standard deviation over seeds. The last two rows give one-sided paired t-test and Wilcoxon signed-rank p-values for the null hypothesis that each baseline has F1 at least as large as AAG-DSVDD. Bold values indicate the best performance for each seed and the best average performance.

Table 2 examines robustness in ten independent runs with different random seeds. AAG-DSVDD consistently achieves the highest F1 score, with a mean of 0.88 and a standard deviation of 0.02. The strongest baseline is SVDD-RBF, with a mean F1 of 0.76 and a standard deviation of 0.04. OC-SVM, DeepSVDD, and DeepSAD achieve mean F1 scores of 0.71, 0.62, and 0.65, respectively, with moderately greater variability. The paired t-tests and the Wilcoxon signed-rank tests in the bottom rows of Table 2 compare each baseline with AAG-DSVDD under the one-sided alternative that AAG-DSVDD has a higher F1. All t-test p-values are below and all Wilcoxon p-values equal . These results show that the improvements of AAG-DSVDD over each baseline are not only large in magnitude but also statistically significant across different random realizations of the dataset and the semi-supervised labels.

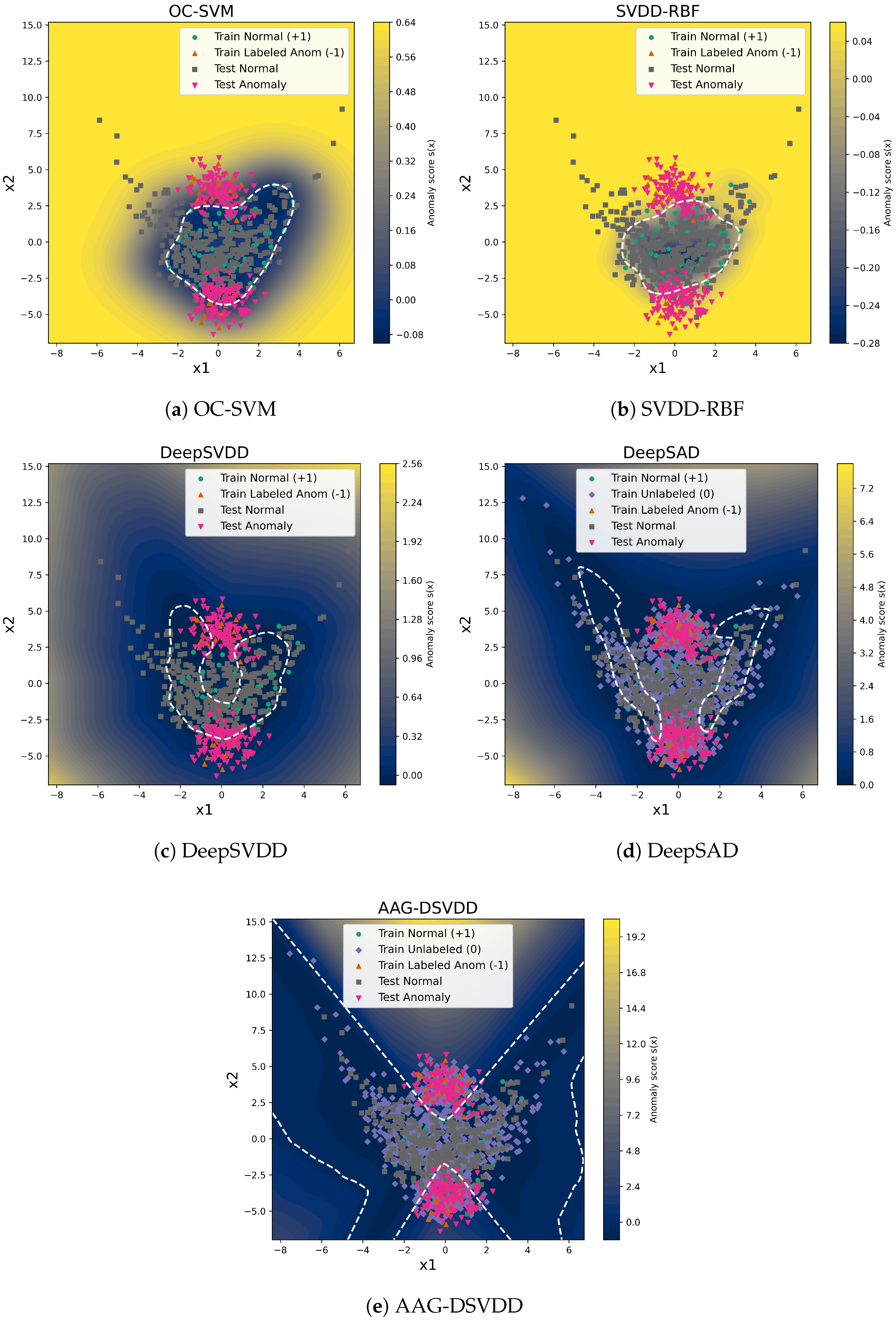

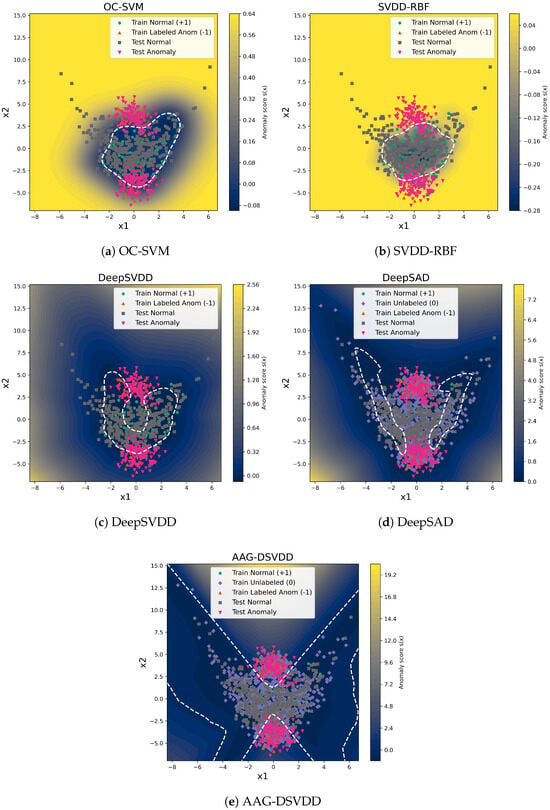

Figure 2 shows the decision boundaries obtained by all methods in one experiment. The observed trends are consistent with how the different objectives use labels and geometry. DeepSVDD is trained only on labeled normals and fits a soft-boundary hypersphere in latent space. With few labeled normals, the learned region stays concentrated around the densest part of the data, so many legitimate normals in sparser areas are rejected as anomalies, which drives up false positives and reduces precision, accuracy, and F1. DeepSAD introduces labeled anomalies and an unlabeled term, but still relies on distances to a single center. In this setting, it tends to enlarge the decision region to recover anomalous points, which explains its very high recall but also its low precision.

Figure 2.

Anomaly-score surfaces and decision boundaries on the synthetic banana dataset under the semi-supervised setting (10% labeled normals and 10% labeled anomalies). The background heat map shows the anomaly score (brighter regions indicate higher scores), and the white dashed contour marks the decision boundary corresponding to the F1-maximizing threshold on the validation set for each method.

OC-SVM and SVDD-RBF learn directly in the input space with fixed RBF kernels without representation learning or graph structure. They form smooth one-class boundaries around the global data cloud and achieve better precision than DeepSVDD and DeepSAD, yet they cannot adapt the feature space or exploit the unlabeled manifold structure, so they remain clearly dominated by AAG-DSVDD in F1 and accuracy. In contrast, AAG-DSVDD combines a DeepSVDD-style enclosure with a latent k-NN graph and anomaly-aware regularization. The graph term couples scores along the latent manifold so that unlabeled samples help stabilize the center and radius, while anomaly-weighted edges and push-out penalties move the boundary away from regions associated with anomalies. This produces a decision region that covers most normals and excludes anomaly clusters, consistent with the higher and more stable F1 scores observed for AAG-DSVDD in Table 1 and Table 2.

Note that all deep models in this comparison (DeepSVDD, DeepSAD, and AAG-DSVDD) produce one-class decision regions defined by a single hypersphere in latent space, with the radius set by the learned quantile on labeled normals. When these distance-based boundaries are visualized in the original input space (in Figure 2) after the nonlinear mapping of the encoder, the corresponding curves can appear highly curved or even disconnected, despite arising from a single hypersphere in the learned latent representation.

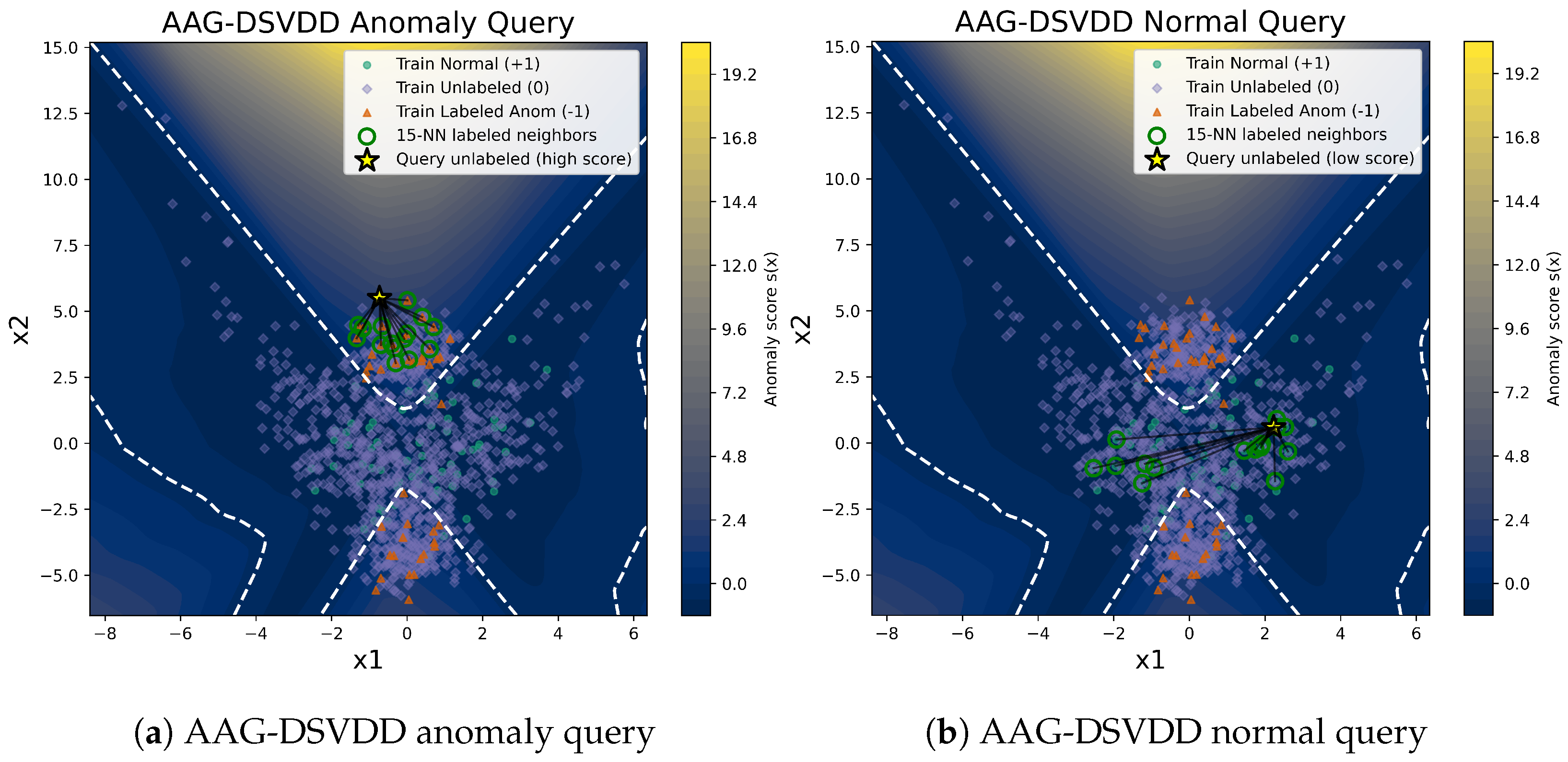

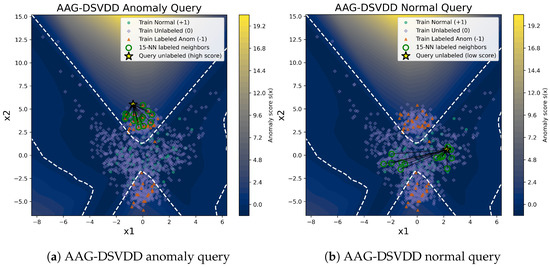

AAG-DSVDD also provides intuitive, example-based interpretation on this synthetic task. Figure 3 illustrates two representative unlabeled query points. One has a low anomaly score inside the learned normal region, and the other has a high anomaly score in the upper anomalous cluster. For each query, we perform a k-NN search in the latent space over the labeled training samples and overlay the resulting labeled neighbors in the input space together with the decision boundary. In the high-score query, the nearest neighbors are predominantly labeled anomalies concentrated in the upper anomalous region. This supports an anomalous interpretation and clarifies which abnormal pattern the model is matching. In the low-score query, the neighbors are labeled normals well inside the interior of the decision region. This indicates that the small score arises from similarity to benign operating conditions. These example-level views show that the graph-regularized latent representation and latent k-NN structure allow practitioners to inspect which labeled scenarios justify the anomaly score of an unlabeled sample instead of relying only on a scalar value.

Figure 3.

Sample-level interpretation for AAG-DSVDD on the synthetic dataset. The background shows the anomaly score , and the white dashed curve is the decision boundary. (a) Anomaly query: a high-scoring anomalous test point (yellow star) and its labeled neighbors (green circles), which are dominated by labeled anomalies in the upper cluster. (b) Normal query: a low-scoring normal test point and its labeled neighbors, which lie well inside the decision region and are dominated by labeled normals.

In the normal query of Figure 3, several labeled normal neighbors appear relatively far from the query in the two-dimensional input plane but remain nearest neighbors in latent space. This visually illustrates that the learned, graph-regularized latent representation reorganizes the data. Points that are distant in input space can become close in latent space; thus, the neighbors used for interpretation reflect the learned manifold structure rather than the raw Euclidean geometry of the original features.

4.2. Public Datasets Experiments

4.2.1. Public Datasets Description

We evaluate the proposed method on three real-valued benchmark datasets from the UCI Machine Learning Repository: Annthyroid, Arrhythmia, and Cleveland Heart Disease. A summary of their characteristics is given in Table 3.

Table 3.

Description of UCI public datasets used in the experiments.

In the Annthyroid dataset, the target variable distinguishes between three clinical classes: normal thyroid function, hypothyroidism, and hyperthyroidism. We regard the normal-function class as the normal class and treat both hypo- and hyperthyroid samples as anomalies. The Arrhythmia dataset contains sixteen rhythm categories: one “no arrhythmia” group and fifteen arrhythmia types. We consider the “no arrhythmia” class as normal and aggregate all fifteen arrhythmia classes into a single anomaly class. In the Cleveland Heart Disease dataset, the diagnosis variable takes five discrete values indicating absence or graded presence of coronary artery disease. We treat patients with diagnosis value 0 (no disease) as normal and patients with a diagnosis value greater than 0 (presence of disease) as anomalous.

4.2.2. Public Datasets Experiment Setup

The benchmark datasets are handled analogously to the synthetic data. For each dataset, we first construct binary labels according to the class definitions described above, where denotes a normal observation and denotes an anomaly. We then partition the observations into training, validation, and test sets using stratified sampling with respect to these binary labels. We first reserve of the data as a test set. From the remaining , we select as a validation set and use the rest for training, which yields approximately of the data for training, for validation, and for testing.

Within the training set, we design a semi-supervised labeling scheme by retaining of the normal training samples as labeled normals with and of the anomalous training samples as labeled anomalies with . All remaining training samples are considered unlabeled and assigned . The validation set is used for threshold selection and hyperparameter tuning. The test set is used for performance evaluation. Both the validation and test sets preserve their binary ground-truth labels . The resulting samples in each set are summarized in Table 4.

Table 4.

Split statistics and semi-supervised labels for UCI datasets.

4.2.3. Hyperparameter Selection on Public Datasets

For the public datasets, we follow the same validation-based hyperparameter selection strategy as in the synthetic banana experiment and enforce a common architecture and optimization setup across all deep methods. All deep models (AAG-DSVDD, DeepSVDD, and DeepSAD) use an MLP encoder that maps to a 16-dimensional latent space through two hidden layers of sizes 256 and 128 with ReLU activations. All deep models are trained with the Adam optimizer (DeepSVDD with AdamW) for 50 epochs, mini-batches of size 128, and learning rate . The weight decay is set to for AAG-DSVDD and for DeepSVDD and DeepSAD. The soft-boundary parameter in AAG-DSVDD and DeepSVDD is fixed at for all datasets. For the one-class baselines, OC-SVM and SVDD-RBF also use ; OC-SVM employs the scale heuristic for the RBF kernel width , while SVDD-RBF uses a dataset-specific RBF width .

Within this shared setup, we tune the remaining scalar hyperparameters of all methods by grid searches on the validation split. For AAG-DSVDD, we select the unlabeled pull-in weight , graph weight , anomaly weight , margin m, and latent neighborhood size k, choosing the configuration that maximizes the validation F1-score under stable training. DeepSVDD and DeepSAD reuse the common encoder and optimization settings and are tuned as in the synthetic experiment, with the DeepSAD labeled-term weight selected on the validation set. For OC-SVM and SVDD-RBF, we perform short grids over the one-class and kernel parameters and retain and the dataset-specific RBF widths reported in Table 5. The final dataset-specific values are summarized in Table 5.

Table 5.

Dataset-specific scalar hyperparameters for the public dataset experiments.

4.2.4. Results and Analysis on Public Datasets

Table 6 reports the test performance of all methods on the three public datasets, using the metrics defined in Section 4.1.2. Across all datasets, AAG-DSVDD attains the highest F1-score and therefore offers the best overall balance between missed detections and false alarms. On Thyroid and Heart, it also achieves the highest accuracy and the strongest precision while maintaining high recall. On Arrhythmia, AAG-DSVDD still yields the best F1-score (0.675) with very high recall (0.919) and moderate precision. In this setting, SVDD-RBF attains slightly higher accuracy and precision but at the cost of a clear drop in recall.

Table 6.

Test-set performance of all methods on the public datasets under the semi-supervised label-budget setting. For each dataset, we report F1, recall, precision, and accuracy.

The baselines show clear trade-offs. DeepSVDD is trained only on labeled normals and tends to fit a compact region around the densest normal patterns. Its recall and F1-score are very low on Thyroid and Heart because many less typical normals are incorrectly flagged as anomalies. DeepSAD introduces labeled anomalies and an unlabeled term, which strongly improves recall. At the same time, it often enlarges the decision region too much, which lowers precision and reduces F1 and accuracy, especially on Thyroid and Heart.

OC-SVM and SVDD-RBF operate directly in the input space with fixed RBF kernels and no learned representation or graph structure. They often achieve better precision than DeepSVDD and DeepSAD, especially on Arrhythmia and Heart. However, they do not reach the F1 or accuracy of AAG-DSVDD on Thyroid and Heart and still struggle to balance precision and recall on Arrhythmia. Their fixed kernel geometry limits adaptation to complex data manifolds in the semi-supervised setting.

AAG-DSVDD combines a DeepSVDD-style enclosure with anomaly-aware graph regularization on the latent k-NN graph. The graph term couples scores of neighboring points, so unlabeled samples help refine the center and radius. Anomaly-weighted edges and margin-based push-out penalties move the boundary away from regions associated with anomalous behavior. On Thyroid and Heart, this produces a decision region that captures most normals and separates anomalies more cleanly, which leads to higher precision, F1, and accuracy than all baselines. On Arrhythmia, AAG-DSVDD remains competitive in precision and preserves very high recall, and this combination yields the best F1-score among all methods.

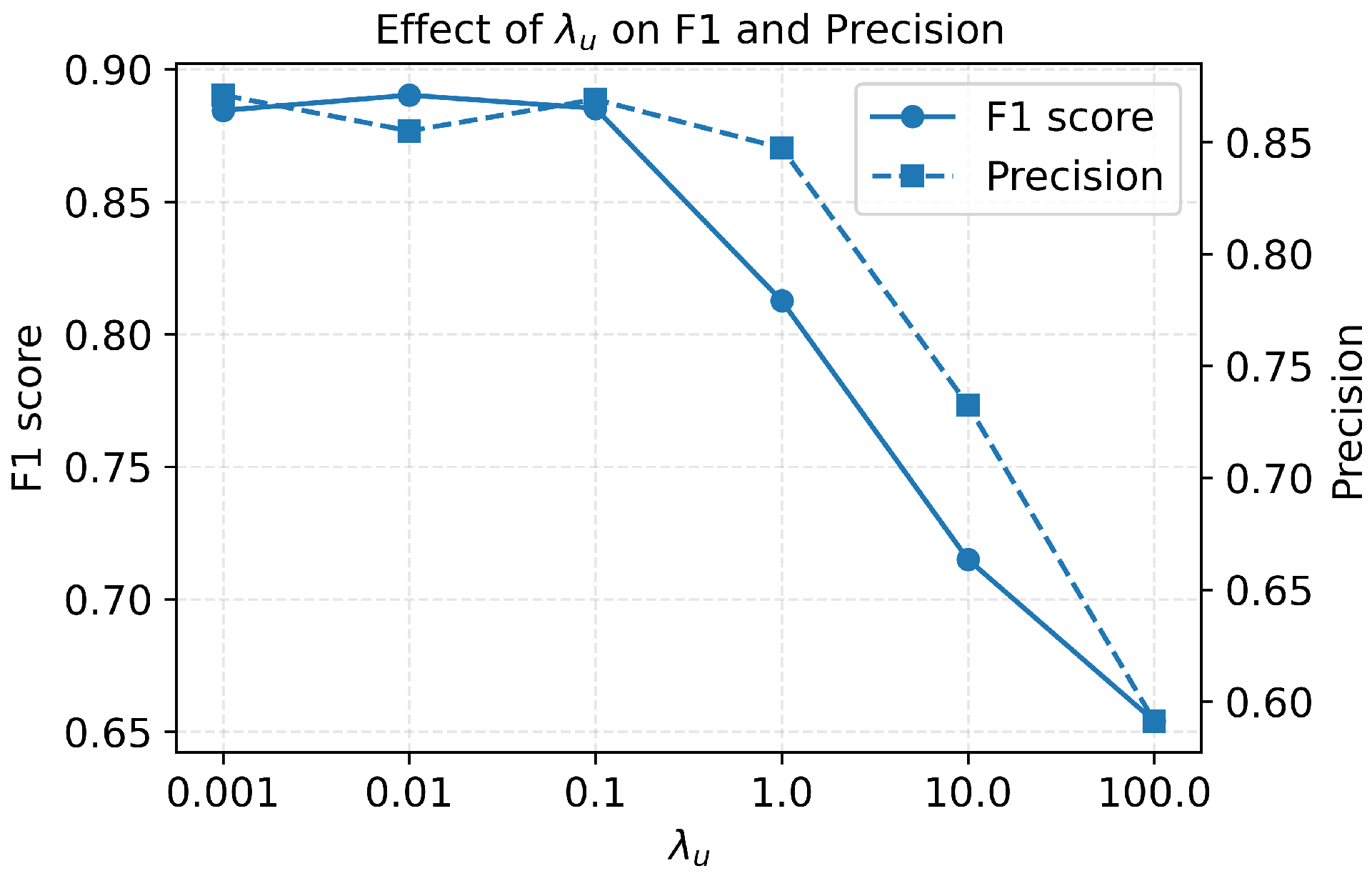

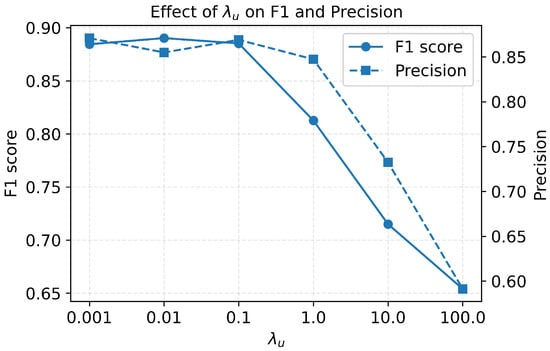

4.3. Effect of Graph Regularization Weight

To assess the impact of the graph regularizer, we varied on the synthetic banana dataset while keeping all other hyperparameters fixed. Figure 4 reports the resulting F1-score and precision. For small to moderate values (–), performance is stable as F1 remains around – and precision around –, indicating that the method benefits from graph-based smoothing but is not overly sensitive to the exact choice of in this range.

Figure 4.

Effect of the graph regularization weight on AAG-DSVDD performance on the synthetic banana dataset. The x-axis shows the values of , the left y-axis shows the F1-score, and the right y-axis shows the precision. The solid line plots the F1-score, whereas the dashed line plots precision. Performance is stable for small to moderate values and degrades once values become much larger.

As increases beyond 1, both F1 and precision degrade monotonically. At , the F1-score drops to about , and for very large regularization (), it falls below , with a corresponding precision below . This pattern is consistent with an over-smoothing effect. Excessively strong graph regularization pushes squared distances toward locally uniform values, obscuring the boundary between normal and anomalous regions. Based on these results, we use in the main synthetic experiments, a robust trade-off between leveraging the latent graph and avoiding over-regularization.

4.4. Ablation Study of AAG-DSVDD

We perform an ablation study on the synthetic banana dataset. The semi-supervised setting uses 10% labeled normals and 10% labeled anomalies in the training split. For this qualitative ablation on the banana data, we slightly adjust the AAG-DSVDD hyperparameters relative to Section 4.1.3 in order to obtain a clearer soft-boundary solution in the labeled-normal-only regime. We fix these settings for all four variants so that differences are due only to the loss terms. Table 7 summarizes the quantitative results.

Table 7.

Ablation of AAG-DSVDD on the synthetic banana dataset. F1, recall (Rec.), precision (Prec.), accuracy (Acc.), TP, FP, TN, and FN metrics are reported over the testing set.

In this ablation study, we use the soft-boundary formulation with squared hinges () and . The anomaly hinge weight is , and the margin is . The graph smoothness weight is , and the unlabeled pull-in weight is . The latent k-NN graph uses neighbors. The encoder has two hidden layers of sizes 256 and 128 and a 16-dimensional latent layer. We train with Adam, learning rate , weight decay , batch size 128, 50 epochs, and 2 warmup epochs.

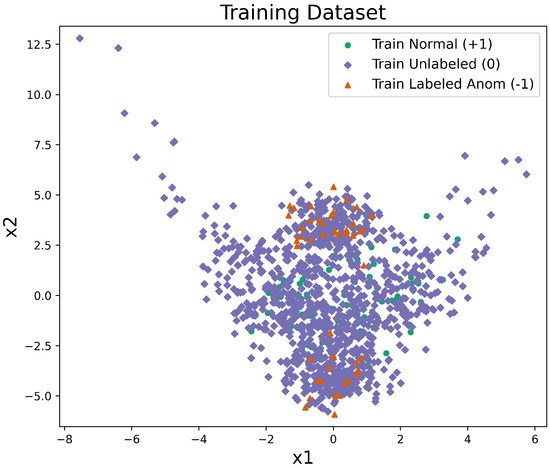

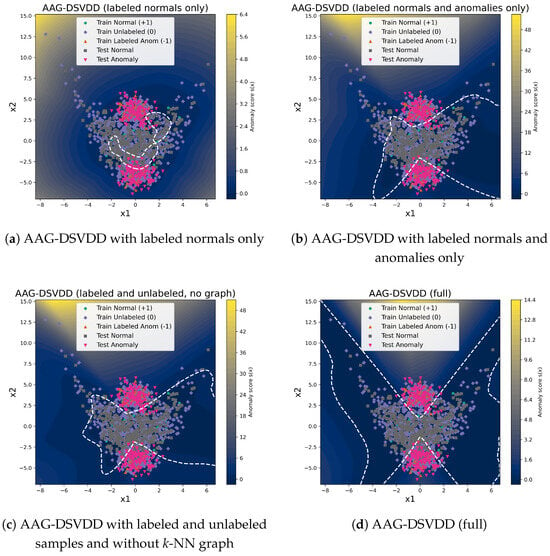

We study four variants. (i) AAG-DSVDD (with labeled normals only) keeps only the soft-boundary hinge on labeled normals and the radius term, and removes the anomaly hinge, the unlabeled pull, and the graph term. (ii) AAG-DSVDD (with labeled normals and anomalies only) activates the anomaly hinge on labeled anomalies but still disables the unlabeled pull and the graph term. (iii) AAG-DSVDD (with labeled and unlabeled observations, without k-NN graph) uses the full loss except for the k-NN graph term, whose weight is set to zero. (iv) AAG-DSVDD (full) uses all loss components.
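The four variants can be read as switches on the individual loss terms. The sketch below is a hedged illustration only: the label encoding (+1 normal, -1 anomaly, 0 unlabeled), the `1/nu` scaling of the normal hinge, the on/off weights of 1.0, and the names `VARIANTS` and `ablation_loss` are assumptions made for this example, not the settings or the exact objective used in the paper.

```python
import numpy as np

# Hypothetical on/off switches for each ablation variant (1.0 = term active).
VARIANTS = {
    "normals_only":      {"anomaly": 0.0, "unlabeled": 0.0, "graph": 0.0},
    "normals_anomalies": {"anomaly": 1.0, "unlabeled": 0.0, "graph": 0.0},
    "no_graph":          {"anomaly": 1.0, "unlabeled": 1.0, "graph": 0.0},
    "full":              {"anomaly": 1.0, "unlabeled": 1.0, "graph": 1.0},
}

def hinge_sq(x):
    """Squared hinge: max(0, x)^2."""
    return np.maximum(x, 0.0) ** 2

def mean_or_zero(x):
    """Mean of an array, or 0.0 when the array is empty."""
    return float(x.mean()) if x.size else 0.0

def ablation_loss(d2, labels, r2, margin, nu, graph_penalty, variant):
    """Combine the loss terms that are active in the chosen variant.

    d2: squared distances to the center; r2: squared radius;
    graph_penalty: precomputed k-NN smoothness term (scalar).
    """
    w = VARIANTS[variant]
    normal, anomaly, unlabeled = labels == 1, labels == -1, labels == 0
    loss = r2 + mean_or_zero(hinge_sq(d2[normal] - r2)) / nu               # enclosure + radius
    loss += w["anomaly"] * mean_or_zero(hinge_sq(r2 + margin - d2[anomaly]))  # push-out
    loss += w["unlabeled"] * mean_or_zero(d2[unlabeled])                   # center pull
    loss += w["graph"] * graph_penalty                                     # graph smoothing
    return loss
```

Because every term is non-negative, moving from variant (i) to variant (iv) can only add penalties to the same objective, which makes the comparison in Table 7 a comparison of loss terms rather than of architectures or optimizers.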

The labeled-normals-only variant behaves like a DeepSVDD-style soft-boundary model that sees only labeled normals. The anomaly hinge is inactive in this configuration. The objective therefore reduces to a hypersphere on labeled normals and corresponds to a zero-anomaly-label regime for AAG-DSVDD. On the banana dataset, it achieves F1 , recall , precision , and accuracy , with TP , FP , TN , and FN . In Figure 5a, the dashed contour forms a compact region around the dense central part of the banana-shaped normal distribution. It truncates the low-density outer regions and misses many normal points in the periphery.

Figure 5.

Decision boundaries of the AAG-DSVDD ablation variants on the synthetic banana dataset. The background shows the anomaly score surface , and the white dashed line marks the level set at the F1-maximizing threshold on the validation set. (a) Labeled normals only. (b) Labeled normals and anomalies only. (c) Labeled normals, anomalies, and unlabeled center pull. (d) Full AAG-DSVDD with labeled normals, labeled anomalies, unlabeled pull, and graph regularization.

AAG-DSVDD (with labeled normals and anomalies only) adds the anomaly hinge. Labeled anomalies are pushed outside the hypersphere and labeled normals are kept inside. On the banana dataset, this variant reaches F1 , recall , precision , and accuracy , with TP , FP , TN , and FN . The boundary in Figure 5b bends along the banana-shaped normal region and separates the two anomaly clusters more clearly than the labeled-normals-only model. Some false alarms remain near the far ends of the banana-shaped normal region where the density of normals is low.

AAG-DSVDD (with labeled and unlabeled, without k-NN graph) also turns on the unlabeled center-pull term while keeping the graph weight at zero. The unlabeled set is large and contains both normals and anomalies. The anomaly hinge prevents contaminated unlabeled anomalies from collapsing into the center. The center-pull still helps to align scores of unlabeled normals with the labeled core. On the banana dataset, this variant attains F1 , recall , precision , and accuracy , with TP , FP , TN , and FN . In Figure 5c, the dashed contour tracks the banana-shaped manifold more closely and removes many of the remaining false alarms between the normal region and the anomaly clusters.

The full AAG-DSVDD model adds the graph regularizer on squared distances over the latent k-NN graph. The graph term spreads label information along the manifold and smooths the score field. On the banana dataset, the full model achieves F1 , recall , precision , and accuracy , with TP , FP , TN , and FN . The contour in Figure 5d is smoother along the banana-shaped normal manifold and around the anomaly clusters than in the no-graph variant. The main effect of the graph is a consistent reduction in false positives while keeping recall high.

In summary, the overall ablation study highlights three effects. First, in the zero-anomaly-label regime AAG-DSVDD reduces to a DeepSVDD-style boundary with limited coverage of low-density normal regions. Second, adding the anomaly hinge and unlabeled pull substantially improves the boundary and already yields high F1 and recall under contamination. Third, the graph regularizer gives a smaller yet consistent gain by smoothing scores along the latent manifold and removing additional false alarms.

5. Case Study: Anomaly-Aware Graph-Based Semi-Supervised Deep SVDD for Fire Monitoring

In this section, we present a case study that applies the proposed AAG-DSVDD framework to real multi-sensor fire monitoring data. The goal is to detect the onset of hazardous fire conditions as early as possible while maintaining a low false-alarm rate. We first describe the fire-monitoring dataset and its preprocessing. We then detail the semi-supervised training protocol, baselines, and evaluation metrics used to assess detection performance in this realistic setting.

5.1. Fire-Monitoring Dataset Description

We evaluate the proposed method on a multi-sensor residential fire detection dataset derived from the National Institute of Standards and Technology (NIST) “Performance of Home Smoke Alarms” experiments [41]. In these experiments, a full-scale residential structure was instrumented and subjected to a variety of controlled fire and non-fire conditions. Sensor responses were recorded over time in systematically designed scenarios.

In our setting, each scenario is represented as a five-channel multivariate time series sampled at 1 Hz. At each time step t in scenario s, the observation vector contains measurements from five sensors in the following order: temperature, CO concentration, ionization smoke detector output, photoelectric smoke detector output, and smoke obscuration. Accordingly, each scenario s is stored as a matrix with one row per second of the scenario's duration and one column per sensor channel.

The dataset comprises multiple classes of fire scenarios. These include smoldering fires, such as the upholstery and mattress smoldering experiments, as well as heating and cooking fires and flaming fires. For each fire scenario, a true fire starting time (TFST) is annotated. The TFST indicates the earliest time at which the fire is deemed to have started, based on the original NIST records and the observed sensor responses. Table 8 describes the fire scenarios.

Table 8.

Fire scenarios used for training and testing in the AAG-DSVDD fire monitoring case study.

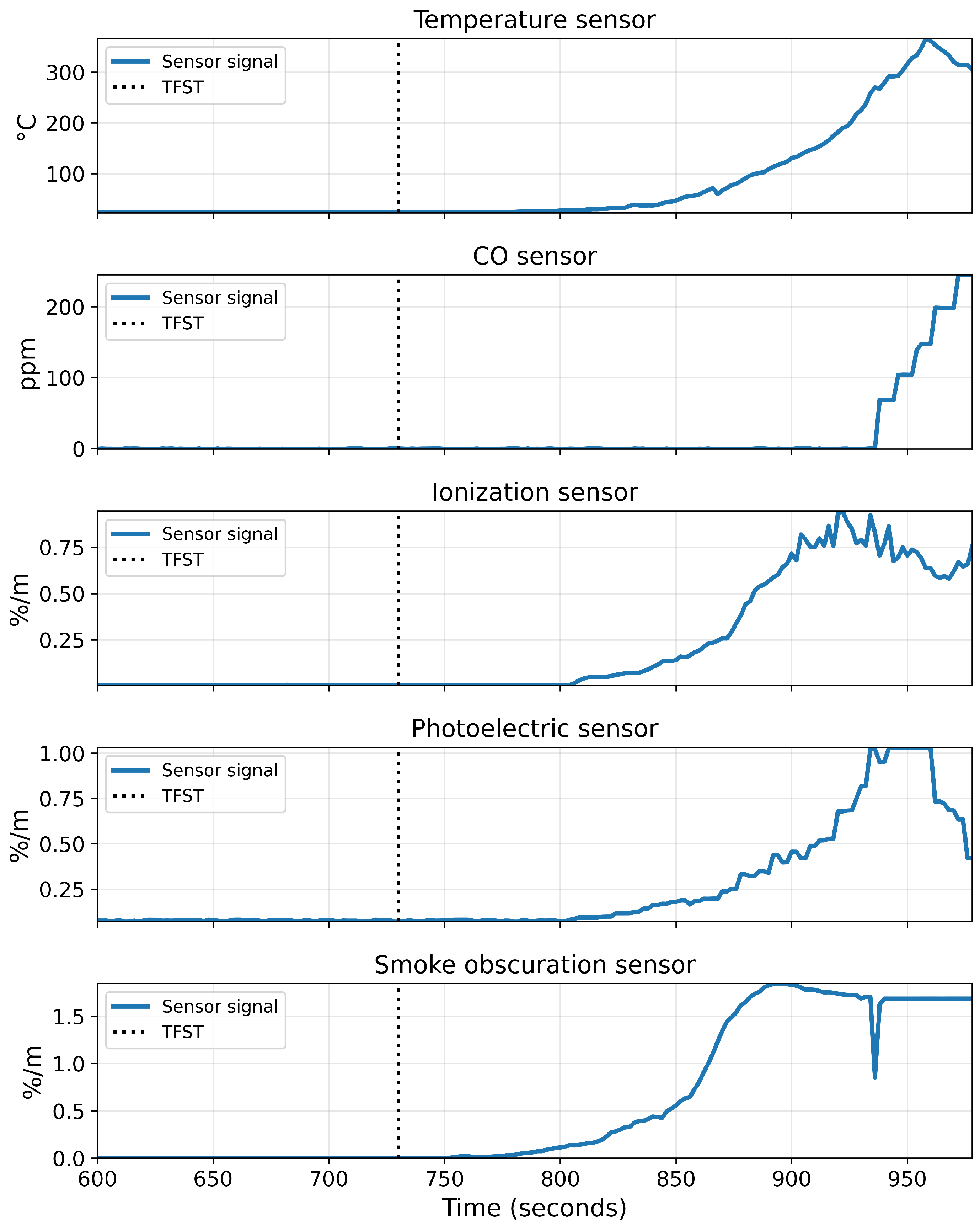

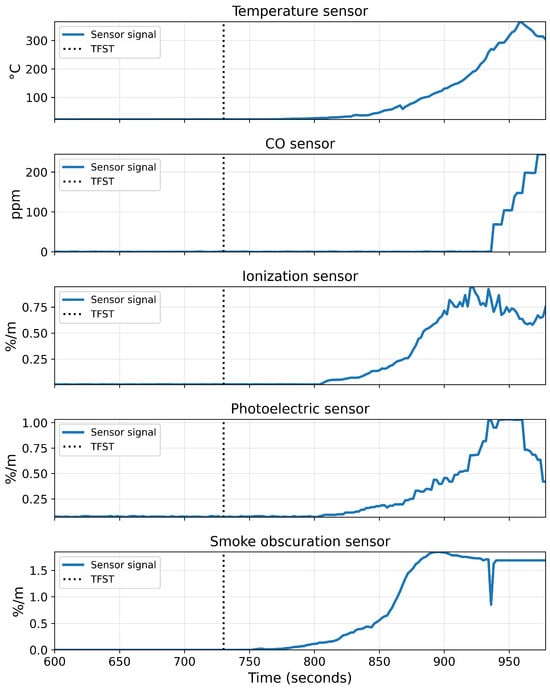

To build intuition about the multi-sensor fire dynamics, Figure 6 shows an excerpt from one fire scenario (Scenario 4 in Table 8). The plots show the five sensor channels sampled at 1 Hz: temperature (top), CO concentration, ionization smoke, photoelectric smoke, and smoke obscuration (bottom). The vertical dotted line marks the annotated TFST.

Figure 6.

Example of multi-sensor signals from a flaming-fire scenario. Each panel shows one sensor channel sampled at 1 Hz: temperature (top), CO concentration, ionization smoke, photoelectric smoke, and smoke obscuration (bottom). The vertical dotted line marks the annotated TFST.

Before TFST, all channels remain close to their baseline levels, with only small fluctuations attributable to noise. Shortly after TFST, the smoke obscuration begins to rise, followed by increases in the photoelectric and ionization detector outputs, and then a delayed but pronounced growth in CO concentration and temperature. This sequence reflects the physical development of the fire and highlights the temporal coupling between channels. It also motivates the use of windowed multi-channel inputs and a boundary-focused model that leverages the joint evolution of these signals rather than relying on any single sensor in isolation.

5.2. Window Construction and Experiment Setup

5.2.1. Sliding-Window Observations

We adopt a sliding-window observation structure similar to that used in previous real-time fire detection studies on this dataset. For each fire scenario s, let denote the five-dimensional sensor vector at second t, ordered as temperature, CO concentration, ionization detector output, photoelectric detector output, and smoke obscuration. We form an observation window of length w at time t as follows:

where w is the window length in seconds. Each scenario s thus produces a sequence of windows .

For each fire scenario s, an annotated TFST is given in seconds. We assign a binary label to each window based on the time of its last sample relative to this TFST. If the window ends at or after the TFST, it is labeled as fire. If the window ends strictly before the fire starts, it is labeled as normal. These end-of-window labels form the base labels on which we build the semi-supervised setting in the next subsection.

For notational consistency with Section 2.1, we subsequently denote each window and its binary label using the sample and label notation of that section. When we use an LSTM encoder, each window is treated as an ordered multivariate time series of length w with five channels. For methods that require fixed-length vector inputs, such as OC-SVM and kernel SVDD, we additionally define a flattened version of dimension 5w by stacking the entries of the window along the time and channel dimensions into a single vector.
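The window construction, end-of-window labeling, and flattening described in this subsection can be sketched as follows. This is a minimal illustration under stated assumptions: time steps are zero-indexed, a window at time t covers the w most recent samples ending at t, the series has at least w samples, and the encoding 1 = fire, 0 = normal is chosen for the example. The function name `make_windows` is hypothetical.

```python
import numpy as np

def make_windows(series, w, tfst):
    """Build unit-stride sliding windows with end-of-window labels.

    series: (T, 5) array sampled at 1 Hz, with T >= w.
    tfst:   true fire starting time, as a zero-based sample index.
    """
    series = np.asarray(series)
    ends = np.arange(w - 1, series.shape[0])        # last sample index of each window
    windows = np.stack([series[e - w + 1 : e + 1] for e in ends])  # (N, w, 5)
    labels = (ends >= tfst).astype(int)             # assumed encoding: 1 = fire, 0 = normal
    flat = windows.reshape(len(windows), -1)        # (N, 5w) input for OC-SVM / kernel SVDD
    return windows, flat, ends, labels
```

The three-dimensional `windows` array is the form an LSTM encoder would consume, while `flat` stacks time and channel dimensions into one vector per window for the classical baselines.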

5.2.2. Experiment Setup

We construct an LSTM-ready dataset of five-channel windows with separate training, validation, and test splits. The training set is derived from four fire scenarios (1, 2, 3, and 4). From each of these scenarios, we sample fixed-length windows of w seconds using the sliding-window construction described in Section 5.2.1. In each training scenario, we draw approximately 250 windows, with about 40% taken from the pre-TFST region and labeled as normal and about 60% taken from the post-TFST region and labeled as fire. This yields roughly 100 normal and 150 fire windows per training scenario, for a total of about 1000 training windows across all four scenarios. In all cases, the binary label of a window is determined by the label at its last time step. For the semi-supervised setting, we randomly retain 10% of the normal and fire windows as labeled samples, while the rest are treated as unlabeled.
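The per-scenario sampling and label budget can be sketched as below. The counts (250 windows, 40% normal, 10% labeled) come from the text. The function name `sample_scenario`, the fixed seed, the 0/1 label encoding, and the assumption that each scenario contains enough windows of both kinds are illustrative choices.

```python
import numpy as np

def sample_scenario(labels, n_total=250, normal_frac=0.4, labeled_frac=0.1, seed=0):
    """Subsample windows from one scenario and split them into labeled/unlabeled sets.

    labels: end-of-window labels for all windows (assumed 0 = normal, 1 = fire).
    Returns index arrays into the scenario's window list.
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    n_normal = int(round(n_total * normal_frac))
    # rng.choice without replacement returns indices in random order.
    picks = {
        "normal": rng.choice(np.flatnonzero(labels == 0), n_normal, replace=False),
        "fire": rng.choice(np.flatnonzero(labels == 1), n_total - n_normal, replace=False),
    }
    out, unlabeled = {}, []
    for name, idx in picks.items():
        n_lab = int(round(labeled_frac * idx.size))
        out["labeled_" + name] = idx[:n_lab]   # retained labels
        unlabeled.append(idx[n_lab:])          # labels hidden during training
    out["unlabeled"] = np.concatenate(unlabeled)
    return out
```

With these defaults, one scenario contributes 10 labeled normal windows, 15 labeled fire windows, and 225 unlabeled windows, so the unlabeled pool is contaminated with both classes.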

For evaluation, we generate dense sliding windows of length w with unit stride over complete scenarios. The validation windows are drawn from the same four scenarios (1, 2, 3, and 4), using all overlapping windows rather than the subsampled training set. The test windows are drawn from six additional fire scenarios (5, 6, 7, 8, 9, and 10) that do not appear in the training set. In all splits, a window is labeled as normal if its last time step occurs before the annotated TFST for that scenario and as fire otherwise.

During the evaluation, each window is passed through the encoder and the AAG-DSVDD model to obtain an anomaly score. A window is classified as fire when its score exceeds a global threshold, which is selected using held-out validation windows from the training scenarios. A scenario-level fire alarm is raised once q consecutive windows are classified as fire. The estimated fire starting time (EFST) is defined as the time at which the model has predicted fire for q consecutive windows.

The same window-level train/validation/test partitions and semi-supervised label budgets are used for all methods to ensure a fair comparison. For the deep methods, AAG-DSVDD and DeepSAD are trained on the full training window set, using labeled normal windows and labeled fire windows together with the remaining unlabeled windows. In contrast, DeepSVDD is trained only on the labeled normal windows, representing a supervised deep one-class baseline. The classical OC-SVM and SVDD-RBF baselines are trained on the normal training windows only, ignoring unlabeled and fire windows during training. Validation windows from the training scenarios are used exclusively for hyperparameter selection and for choosing the global decision threshold, while test windows from held-out scenarios are used only for final evaluation.
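Choosing a global decision threshold from validation windows can be sketched as follows. The selection criterion for the fire experiments is not stated here, so this example assumes F1 maximization over candidate thresholds, mirroring the F1-maximizing threshold used for the synthetic ablation. The names `f1_score` and `select_threshold` and the 0/1 label encoding are illustrative.

```python
import numpy as np

def f1_score(pred, labels):
    """F1 for boolean predictions against 0/1 labels (1 = fire)."""
    tp = np.sum(pred & (labels == 1))
    fp = np.sum(pred & (labels == 0))
    fn = np.sum(~pred & (labels == 1))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom > 0 else 0.0

def select_threshold(scores, labels):
    """Return the (threshold, F1) pair that maximizes validation F1.

    A window is predicted as fire when its score strictly exceeds the threshold.
    """
    scores, labels = np.asarray(scores, dtype=float), np.asarray(labels)
    # One candidate below all scores (everything flagged) plus each observed score.
    candidates = np.concatenate(([scores.min() - 1.0], np.unique(scores)))
    f1s = [f1_score(scores > tau, labels) for tau in candidates]
    best = int(np.argmax(f1s))
    return float(candidates[best]), float(f1s[best])
```

The threshold returned by `select_threshold` would then be frozen and applied unchanged to the windows of the held-out test scenarios.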

The fire monitoring experiments were carried out on a Windows workstation equipped with a 13th Gen Intel Core i9-13900K (3.00 GHz), 64 GB of RAM, and an NVIDIA RTX 4090 GPU.

5.2.3. Evaluation Metrics for Fire Monitoring Experiments

For each fire scenario s, we obtain a sequence of ground-truth window labels:

and the corresponding predicted labels:

where is the number of windows in that scenario, and denotes a predicted fire window.

For each scenario s, the TFST is provided by the dataset annotation and expressed in window indices. Equivalently, it can be written as the first time point at which the ground-truth label becomes fire:

Given the predicted labels, we declare that a scenario-level fire alarm has been raised once q consecutive windows are classified as fire. In our experiments, we use . The EFST is defined as the last index of the first run of q consecutive fire predictions, that is

This matches the implementation in which the alarm time is the index of the last window in the first run of q consecutive predicted fires.
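The alarm rule above can be sketched in a few lines. The function name `estimate_fire_start`, the zero-based window indices, and the convention that a predicted fire window is encoded as 1 are assumptions for this example. Returning `None` when no alarm is raised is also an illustrative choice.

```python
def estimate_fire_start(pred, q):
    """Return the index of the last window in the first run of q consecutive
    fire predictions, or None if no such run exists."""
    run = 0
    for k, p in enumerate(pred):
        run = run + 1 if p == 1 else 0   # an isolated non-fire window resets the run
        if run >= q:
            return k
    return None
```

For example, with predictions `[0, 1, 0, 1, 1, 1, 0]` and `q = 3`, the isolated detection at index 1 is ignored and the alarm is raised at index 5, the last window of the first run of three consecutive fire predictions.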

The Fire Starting Time Accuracy (FSTA) for scenario s measures the temporal deviation of the estimated start time from the true start time. It is defined as the absolute difference between the estimated and true fire starting times:

$$\mathrm{FSTA}_s = \left| \mathrm{EFST}_s - \mathrm{TFST}_s \right|$$

By this definition, $\mathrm{FSTA}_s$ quantifies the magnitude of the error in seconds, treating early false alarms ($\mathrm{EFST}_s < \mathrm{TFST}_s$) and late detections ($\mathrm{EFST}_s > \mathrm{TFST}_s$) symmetrically. Lower values indicate better performance.

To quantify false alarms in the pre-fire region, we compute the false alarm rate (FAR) for each scenario with an annotated TFST. Let

denote the set of pre-fire window indices. The per-scenario false alarm rate is

that is, the fraction of pre-TFST windows that are incorrectly classified as fire. In the reported tables, we express the FAR as a percentage.
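Both scenario-level metrics can be sketched directly from their verbal definitions. The names `fsta` and `false_alarm_rate`, the zero-based window indices, the encoding of a predicted fire window as 1, and the choice to return 0.0 when a scenario has no pre-fire windows are assumptions made for this illustration.

```python
import numpy as np

def fsta(efst, tfst):
    """Absolute deviation between estimated and true fire starting times."""
    return abs(efst - tfst)

def false_alarm_rate(pred, tfst):
    """Fraction of pre-TFST windows (index < tfst) predicted as fire."""
    pre_fire = np.asarray(pred)[:tfst]
    if pre_fire.size == 0:
        return 0.0   # assumption: no pre-fire windows means no false alarms
    return float(np.mean(pre_fire == 1))
```

With unit-stride windows on 1 Hz data, a difference of one window index corresponds to one second, so `fsta` can be read in seconds. Multiplying the output of `false_alarm_rate` by 100 gives the percentage form used in the tables.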