Abstract

In educational large-scale assessment studies, uniform differential item functioning (DIF) across countries often challenges the application of a common item response model, such as the two-parameter logistic (2PL) model, to all participating countries. DIF occurs when certain items provide systematic advantages or disadvantages to specific groups, potentially biasing ability estimates and secondary analyses. Identifying misfitting items caused by DIF is therefore essential, and several item fit statistics have been proposed in the literature for this purpose. This article investigates the performance of four commonly used item fit statistics under uniform DIF: the weighted root mean square deviation (RMSD), the weighted mean deviation (MD), the infit, and the outfit statistics. Analytical approximations were derived to relate the uniform DIF effect size to these item fit statistics, and the theoretical findings were confirmed through a comprehensive simulation study. The results indicate that distribution-weighted RMSD and MD statistics are less sensitive to DIF in very easy or very difficult items, whereas difficulty-weighted RMSD and MD exhibit consistent detection performance across all item difficulty levels. However, the sampling variance of the difficulty-weighted statistics is notably higher for items with extreme difficulty. Infit and outfit statistics were largely ineffective in detecting DIF in items of moderate difficulty, with sensitivity limited to very easy or very difficult items. To illustrate the practical application of these statistics, they were computed for the PISA 2006 reading study, and the distribution of the statistics across participating countries was descriptively examined. The findings guide selecting appropriate item fit statistics in large-scale assessments and highlight the strengths and limitations of different approaches under uniform DIF conditions.

Keywords:

item response model; 2PL model; differential item functioning; RMSD; MD; infit; outfit; item fit

MSC:

62H25; 62P25

1. Introduction

Item response theory (IRT) models [1,2,3,4,5,6] are multivariate statistical models designed to analyze a vector of discrete random variables. IRT models are widely applied in the social sciences, particularly in educational large-scale assessment (LSA; [7,8]) studies, where cognitive tasks are administered. The use of IRT is advantageous because it accommodates complex test designs [9] and addresses measurement error in estimated abilities for secondary analyses [10].

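This article focuses on dichotomous (i.e., binary) random variables. Let $\boldsymbol{X} = (X_1, \ldots, X_I)$ denote a vector of $I$ random variables $X_i \in \{0, 1\}$, commonly referred to as items or scored item responses. A unidimensional IRT model [11] defines a parametric model for the probability distribution $P(\boldsymbol{X} = \boldsymbol{x})$ for $\boldsymbol{x} = (x_1, \ldots, x_I) \in \{0, 1\}^I$ as

$$P(\boldsymbol{X} = \boldsymbol{x}; \boldsymbol{\delta}, \boldsymbol{\gamma}) = \int_{-\infty}^{\infty} \prod_{i=1}^{I} P_i(\theta; \boldsymbol{\gamma}_i)^{x_i} \left[ 1 - P_i(\theta; \boldsymbol{\gamma}_i) \right]^{1 - x_i} \phi(\theta; \mu, \sigma) \, d\theta , \tag{1}$$

where $\phi(\theta; \mu, \sigma)$ denotes the density of the normal distribution parameterized by the mean $\mu$ and standard deviation (SD) $\sigma$. The distribution parameters $\mu$ and $\sigma$ of the latent variable $\theta$, often referred to as a trait or ability variable, are collected in the vector $\boldsymbol{\delta} = (\mu, \sigma)$. The vector $\boldsymbol{\gamma} = (\boldsymbol{\gamma}_1, \ldots, \boldsymbol{\gamma}_I)$ comprises the item parameters for the item response functions (IRFs) $P_i(\theta; \boldsymbol{\gamma}_i) = P(X_i = 1 \mid \theta)$ for $i = 1, \ldots, I$. The IRF of the two-parameter logistic (2PL) model [12] is given by

$$P_i(\theta; \boldsymbol{\gamma}_i) = \Psi\big( a_i (\theta - b_i) \big) , \tag{2}$$

where $a_i$ and $b_i$ denote the item discrimination and item difficulty parameters, respectively, and $\Psi(x) = [1 + \exp(-x)]^{-1}$ is the logistic distribution function. In this formulation, the item parameter vector is $\boldsymbol{\gamma}_i = (a_i, b_i)$.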

The Rasch model [13,14] represents a special case of the 2PL model in which all item discrimination parameters are fixed to 1.

For a sample of $N$ individuals (also referred to as cases, subjects, students, or persons) with independent and identically distributed observations $\boldsymbol{x}_1, \ldots, \boldsymbol{x}_N$ drawn from the distribution of the random variable $\boldsymbol{X}$, the model parameters $\boldsymbol{\delta}$ and $\boldsymbol{\gamma}$ of the IRT model in (1) can be consistently estimated using marginal maximum likelihood estimation (MML; [15,16]), typically implemented with the expectation–maximization algorithm [17,18].

In LSA applications such as the Programme for International Student Assessment (PISA; [19]), a parametric model for the IRFs in (1) is typically specified. These studies involve a large number of countries, and it is generally assumed that the item parameters are identical across countries, implying parameter invariance. However, this assumption may be violated in practice because certain items can provide systematic advantages or disadvantages to specific countries. This phenomenon is known as differential item functioning (DIF; [20,21]), although alternative terms such as measurement bias or item bias are also used [22].

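As a result, the assumed IRF $P_i$ represents a (slight) misspecification of the true IRT model (1) for a given country (or group, henceforth) $g$. The multivariate random vector $\boldsymbol{X}$ in group $g$ is represented as

$$P_g(\boldsymbol{X} = \boldsymbol{x}) = \int \prod_{i=1}^{I} P_{i,g}(\theta)^{x_i} \left[ 1 - P_{i,g}(\theta) \right]^{1 - x_i} \phi(\theta; \mu_g, \sigma_g) \, d\theta \approx \int \prod_{i=1}^{I} P_i(\theta)^{x_i} \left[ 1 - P_i(\theta) \right]^{1 - x_i} \phi(\theta; \mu_g, \sigma_g) \, d\theta , \tag{3}$$

where $P_i$ denotes the assumed IRF and $P_{i,g}$ represents the IRF in the data-generating model for the group. In practical applications, it is typically expected that the approximation of $P_{i,g}$ by $P_i$ introduces only minimal distortion in estimating the distribution parameters $\mu_g$ and $\sigma_g$.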

The assessment of the adequacy of parametric IRFs (i.e., item fit; [23,24,25]) is a central topic in psychometrics. The discrepancy between the true IRF $P_{i,g}$ and the assumed parametric IRF $P_i$ should be quantified using an appropriate effect size measure. Ideally, statistical inference for this effect size should also be available. Of primary interest is the identification of misfitting items $i$ for which the assumed IRFs $P_i$ deviate substantially from $P_{i,g}$.

A wide range of item fit statistics has been proposed in the literature [25]. The present study focuses on the root mean square deviation (RMSD; [26,27,28,29,30,31,32,33,34,35,36,37,38]) and the related mean deviation (MD) statistic, as well as the infit and outfit statistics [39,40]. The motivation for examining these statistics arises from their operational use in current PISA studies. Item fit statistics are routinely analyzed in both the PISA field and main studies [41]. The field study generally involves relatively small sample sizes per item at the country level. In this context, the fit statistics are computed for each country and reported to participating PISA countries to identify potential issues with items, such as translation errors or country-specific test administration problems at the item level. The investigation of item fit statistics under small sample sizes is therefore particularly relevant, as the field study reports are expected to provide reliable information—that is, item fit statistics with sufficiently small sampling error. In the PISA main study, RMSD and MD item fit statistics are employed to identify items whose parameters deviate substantially from the assumed international item parameters defined across countries. Items flagged for DIF are then assigned country-specific parameters in the operational scaling procedure of the PISA main study [42]. Consequently, reliable DIF detection based on these item fit statistics is essential, as it influences decisions concerning the scaling model applied in official PISA reporting.

The performance of these item fit statistics is investigated under uniform DIF, that is, when the assumed item difficulties $b_i$ in the IRF $P_i$ of the 2PL model differ from the true item difficulties in the IRF $P_{i,g}$. The behavior of these statistics under uniform DIF is examined through analytical derivations and a simulation study. To the best of current knowledge, this study is the first to provide analytical approximations of these item fit statistics under DIF conditions.

The remainder of the article is structured as follows. Section 2 presents analytical results for various weighted RMSD and MD statistics under DIF. Section 3 provides analytical findings for the infit and outfit statistics under DIF. Results from a simulation study examining the performance of these item fit statistics under uniform DIF are reported in Section 4. An empirical example using PISA 2006 data is presented in Section 5. Finally, Section 6 concludes with a discussion.

2. Weighted RMSD and Weighted MD Under DIF

In this section, the definitions of weighted RMSD and weighted MD statistics, as proposed by Joo et al. [43], are reviewed. These item fit statistics are examined under uniform DIF in the 2PL model.

Assume that $P_i(\theta) = \Psi\big( a_i (\theta - b_i) \big)$ denotes the assumed IRF, while the IRF in the data-generating model is given by $P_{i,g}(\theta) = \Psi\big( a_i (\theta - b_i - e_i) \big)$. Here, $e_i$ represents the uniform DIF effect, also interpreted as a DIF effect size on the logit metric within the 2PL model. The RMSD and MD statistics assign weights to the deviations $P_{i,g}(\theta) - P_i(\theta)$ to provide a summarized measure of model–data discrepancy.

2.1. Weighted RMSD and Weighted MD

In the definitions of the weighted RMSD and weighted MD statistics, a weighting function $w_i$ for item $i$ is specified such that it integrates to 1; that is, $\int w_i(\theta) \, d\theta = 1$ (see [43]). The weighting function may be item-specific or common across items.

The weighted MD statistic is defined as

$$\mathrm{MD}_i = \int \left[ P_{i,g}(\theta) - P_i(\theta) \right] w_i(\theta) \, d\theta \tag{4}$$

and represents the weighted average of the IRF differences $P_{i,g}(\theta) - P_i(\theta)$. The MD statistic is signed and operates on the probability difference metric. Since $P_{i,g}$ and $P_i$ take values in $[0, 1]$, the MD statistic is bounded between $-1$ and 1, although substantial deviations from 0 are unlikely in practice. This statistic focuses on average differences implied by a misfitting IRF.

The RMSD statistic summarizes squared IRF differences by computing

$$\mathrm{RMSD}_i = \sqrt{ \int \left[ P_{i,g}(\theta) - P_i(\theta) \right]^2 w_i(\theta) \, d\theta } . \tag{5}$$

Unlike the MD statistic, differences between $P_{i,g}$ and $P_i$ that cancel out on average do not vanish under RMSD. Consequently, RMSD can reveal discrepancies that remain hidden in MD. In particular, the presence of nonuniform DIF [21] (i.e., DIF in item discriminations $a_i$) may be detected by RMSD but remain undetected by MD.

The MD and RMSD statistics are mathematically related. Jensen’s inequality [44] implies $[E(X)]^2 \le E(X^2)$ for any square-integrable random variable $X$, leading to

$$|E(X)| \le \sqrt{E(X^2)} . \tag{6}$$

Applying this result to $X = P_{i,g}(\theta) - P_i(\theta)$, where the expectation is taken with respect to the probability density $w_i$, yields

$$|\mathrm{MD}_i| \le \mathrm{RMSD}_i , \tag{7}$$

demonstrating that the absolute value of the MD statistic is bounded by the RMSD statistic.

2.2. Distribution-Weighted RMSD and MD Statistics

The RMSD and MD statistics were originally defined using a weighting function $w_i$ given by the normal density $\phi(\theta; \mu_g, \sigma_g)$ (see [28,32,36]), where $\mu_g$ and $\sigma_g$ denote the mean and SD of the $\theta$ distribution in the group. This approach is referred to as distribution-weighted (dist) item fit statistics for RMSD and MD. In this case, the weighting function is identical across items and depends solely on the location and shape of the $\theta$ distribution within the group.

To evaluate the integrals appearing in the RMSD and MD statistics, products of logit IRFs and normal densities must be computed. These integrals become easier to handle when the logit IRF is approximated by a probit IRF, resulting in expressions involving normal distribution functions and densities. In some cases, no closed-form solution exists, or the available closed form is too complex to interpret. In such situations, linear and quadratic Taylor expansions with respect to the uniform DIF effect $e_i$ are applied, enabling the examination of the behavior of the item fit statistics for $e_i$ values close to zero.

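The difference between the IRFs $P_{i,g}$ and $P_i$ can be approximated as

$$P_{i,g}(\theta) - P_i(\theta) \approx \Phi\!\left( \frac{a_i (\theta - b_i - e_i)}{D} \right) - \Phi\!\left( \frac{a_i (\theta - b_i)}{D} \right) \approx - \frac{a_i e_i}{D} \, \phi\!\left( \frac{a_i (\theta - b_i)}{D} \right) , \tag{8}$$

where $\phi$ denotes the standard normal density function. The first approximation in (8) replaces the logit IRFs with probit IRFs, expressed through the standard normal distribution function $\Phi$, using the constant $D = 1.702$ (see [45,46,47]). The absolute difference between the logit IRF and its probit approximation does not exceed 0.0095. The second approximation in (8) applies a first-order Taylor expansion in the uniform DIF effect $e_i$ to the difference of probit IRFs.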
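The quality of the logit-to-probit step can be checked numerically. The following minimal sketch, using only the Python standard library, evaluates the largest absolute difference between $\Psi(x)$ and $\Phi(x/D)$ on a fine grid; the grid range and resolution are illustrative assumptions, not part of the original analysis.

```python
import math

D = 1.702  # scaling constant of the logit-to-probit approximation


def logistic(x):
    """Logistic distribution function Psi(x)."""
    return 1.0 / (1.0 + math.exp(-x))


def std_normal_cdf(x):
    """Standard normal distribution function Phi(x)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def max_abs_difference(lo=-10.0, hi=10.0, steps=20000):
    """Largest |Psi(x) - Phi(x / D)| on an equally spaced grid (assumed range)."""
    width = (hi - lo) / steps
    return max(
        abs(logistic(lo + k * width) - std_normal_cdf((lo + k * width) / D))
        for k in range(steps + 1)
    )


max_diff = max_abs_difference()
print(round(max_diff, 4))
```

The printed value can be compared with the bound of 0.0095 stated in the text.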

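The distribution-weighted MD statistic can be approximately expressed as (see also [48])

$$\mathrm{MD}_{\mathrm{dist}} \approx - \frac{a_i e_i}{\sqrt{D^2 + a_i^2 \sigma_g^2}} \, \phi\!\left( \frac{a_i (b_i - \mu_g)}{\sqrt{D^2 + a_i^2 \sigma_g^2}} \right) , \tag{9}$$

where the approximations in (8) and (A1) from Appendix A were applied, $D = 1.702$ is the probit scaling constant, and $\phi$ is the standard normal density. Setting $a_i = 1$ and $\sigma_g = 1$ simplifies (9) to

$$\mathrm{MD}_{\mathrm{dist}} \approx - 0.507 \, e_i \, \phi\big( 0.507 \, (b_i - \mu_g) \big) . \tag{10}$$

Hence, it holds that

$$|\mathrm{MD}_{\mathrm{dist}}| \lesssim 0.202 \, |e_i| . \tag{11}$$

Furthermore, we have $\mathrm{MD}_{\mathrm{dist}} \to 0$ for $b_i - \mu_g \to \infty$, and likewise for $b_i - \mu_g \to -\infty$. This indicates that the absolute value of the MD statistic decreases as the assumed item difficulties $b_i$ deviate further from the group mean $\mu_g$.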

The distribution-weighted RMSD can be approximated as

$$\mathrm{RMSD}_{\mathrm{dist}} \approx a_i |e_i| \sqrt{ \frac{1}{\sqrt{2\pi} \, D \sqrt{D^2 + 2 a_i^2 \sigma_g^2}} \, \phi\!\left( \frac{\sqrt{2} \, a_i (b_i - \mu_g)}{\sqrt{D^2 + 2 a_i^2 \sigma_g^2}} \right) } , \tag{12}$$

based on the approximations in (8) and (A3). With $a_i = 1$ and $\sigma_g = 1$, (12) reduces to

$$\mathrm{RMSD}_{\mathrm{dist}} \approx 0.206 \, |e_i| \exp\big( -0.102 \, (b_i - \mu_g)^2 \big) . \tag{13}$$

The coefficient 0.206 in (13) is only slightly larger than the coefficient 0.202 in (11) for the MD. This indicates that the MD and RMSD are nearly equivalent when uniform DIF is the source of item misfit, at least at the population level. The simulation study in Section 4 further demonstrates that, in small to moderately sized samples, sample-based RMSD estimates are considerably larger than the corresponding MD estimates. Similar to the MD statistic, the RMSD decreases as the absolute difference $|b_i - \mu_g|$ between item difficulty and group mean increases.

The computation of the RMSD statistic in (12) relies on two key approximations. First, the logit IRF is approximated by a probit IRF. Second, the difference between the group-specific probit IRF and the assumed probit IRF is approximated using a linear Taylor expansion with respect to the uniform DIF effect $e_i$. An upper bound for the resulting approximation error in the computed RMSD statistic is provided in Appendix B.
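The two approximations can be compared against a direct numerical evaluation of the integrals. The sketch below is an illustration under stated assumptions: the data-generating IRF is taken to be $\Psi(a_i(\theta - b_i - e_i))$, the population integrals are computed with a trapezoidal rule over logit IRFs, and the closed forms follow the probit-based expressions for the distribution-weighted MD and RMSD. Function names, integration range, and step count are hypothetical choices.

```python
import math

D = 1.702  # probit scaling constant


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def normal_pdf(x, mean=0.0, sd=1.0):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def md_rmsd_numeric(a, b, e, mu, sigma, half_width=8.0, steps=4000):
    """Distribution-weighted MD and RMSD via trapezoidal integration."""
    lo = mu - half_width * sigma
    h = 2.0 * half_width * sigma / steps
    md = msd = 0.0
    for k in range(steps + 1):
        theta = lo + k * h
        # trapezoid weight times the normal density of the group
        wt = h * (0.5 if k in (0, steps) else 1.0) * normal_pdf(theta, mu, sigma)
        diff = logistic(a * (theta - b - e)) - logistic(a * (theta - b))
        md += diff * wt
        msd += diff * diff * wt
    return md, math.sqrt(msd)


def md_rmsd_approx(a, b, e, mu, sigma):
    """Closed-form approximations (probit IRF plus linear Taylor expansion)."""
    s1 = math.sqrt(D ** 2 + a ** 2 * sigma ** 2)
    md = -a * e / s1 * normal_pdf(a * (b - mu) / s1)
    s2 = math.sqrt(D ** 2 + 2.0 * a ** 2 * sigma ** 2)
    msd = (a * e) ** 2 * normal_pdf(math.sqrt(2.0) * a * (b - mu) / s2)
    msd /= math.sqrt(2.0 * math.pi) * D * s2
    return md, math.sqrt(msd)


for b in (0.0, 2.0):
    print(b, md_rmsd_numeric(1.0, b, 0.1, 0.0, 1.0), md_rmsd_approx(1.0, b, 0.1, 0.0, 1.0))
```

For a small DIF effect, the numerical and approximate values should agree closely, and both decrease in absolute value as the item difficulty moves away from the group mean.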

2.3. Difficulty-Weighted RMSD and MD

Section 2.2 shows that both MD and RMSD depend on the magnitude of $|b_i - \mu_g|$. This dependence can be critical when the group mean $\mu_g$ is much smaller than the item difficulties $b_i$ for most items, which poses challenges for detecting item misfit in low-performing countries in LSA studies [37]. Furthermore, distribution-weighted RMSD and MD are known to perform poorly in detecting uniform DIF for very easy or very difficult items, that is, when $|b_i - \mu_g|$ is large. To address these limitations, difficulty-weighted RMSD and MD statistics have been proposed in [43].

Difficulty-weighted (denoted by diff) MD and RMSD statistics (i.e., $\mathrm{MD}_{\mathrm{diff}}$ and $\mathrm{RMSD}_{\mathrm{diff}}$) use the normal density with mean $b_i$ and SD 1 as the weighting function. In this way, item misfit receives maximal weight at the assumed item difficulty $b_i$. By definition, the difficulty-weighted RMSD and MD statistics can be obtained as special cases of the distribution-weighted counterparts by setting $\mu_g = b_i$ and $\sigma_g = 1$ in Equations (9) and (12), yielding

$$\mathrm{MD}_{\mathrm{diff}} \approx - \frac{a_i e_i}{\sqrt{2\pi} \sqrt{D^2 + a_i^2}} , \tag{14}$$

$$\mathrm{RMSD}_{\mathrm{diff}} \approx \frac{a_i |e_i|}{\sqrt{2\pi \, D \sqrt{D^2 + 2 a_i^2}}} . \tag{15}$$

The item difficulty $b_i$ no longer appears in the expressions (14) and (15). Consequently, for $a_i = 1$, the approximate identities $\mathrm{MD}_{\mathrm{diff}} \approx -0.202 \, e_i$ and $\mathrm{RMSD}_{\mathrm{diff}} \approx 0.206 \, |e_i|$ hold, regardless of $b_i$, $\mu_g$, and $\sigma_g$.

2.4. Information-Weighted RMSD and MD

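As an alternative weighting function, Joo et al. [43] proposed using the item information function corresponding to $P_i$ to weight differences between the IRFs $P_{i,g}$ and $P_i$. The item information function for item $i$ is defined as

$$I_i(\theta) = a_i^2 \, \Psi\big( a_i (\theta - b_i) \big) \left[ 1 - \Psi\big( a_i (\theta - b_i) \big) \right] . \tag{16}$$

The factor $a_i^2$ cancels in the weighting function $w_i(\theta) = I_i(\theta) / \int I_i(u) \, du$, as this function must integrate to 1. Moreover, the product of logit functions in (16) can be reasonably well approximated by a normal density function as

$$\Psi(x) \left[ 1 - \Psi(x) \right] \approx \frac{1}{\kappa} \, \phi\!\left( \frac{x}{\kappa} \right) , \tag{17}$$

where the constant $\kappa = 1.596$ is obtained by matching the values of the functions on both sides of the approximation at $x = 0$. The difference between $\Psi(x)[1 - \Psi(x)]$ and its normal density approximation does not exceed 0.0115. Applying the approximation (17), the weighting function for item $i$ is given by

$$w_i(\theta) \approx \frac{a_i}{\kappa} \, \phi\!\left( \frac{a_i (\theta - b_i)}{\kappa} \right) . \tag{18}$$

Information-weighted (denoted by info) RMSD and MD statistics are obtained as special cases of distribution-weighted RMSD and MD statistics with $\mu_g = b_i$ and $\sigma_g = \kappa / a_i$. Using (9) and (12), the information-weighted MD and RMSD statistics are approximately

$$\mathrm{MD}_{\mathrm{info}} \approx - \frac{a_i e_i}{\sqrt{2\pi} \sqrt{D^2 + \kappa^2}} = -0.171 \, a_i e_i , \tag{19}$$

$$\mathrm{RMSD}_{\mathrm{info}} \approx \frac{a_i |e_i|}{\sqrt{2\pi \, D \sqrt{D^2 + 2 \kappa^2}}} = 0.182 \, a_i |e_i| . \tag{20}$$

Information-weighted RMSD and MD statistics share with difficulty-weighted statistics the property of providing an assessment of item misfit due to uniform DIF independent of $b_i$, $\mu_g$, and $\sigma_g$. However, they remain dependent on item discrimination $a_i$. For items with $a_i < \kappa$ (in particular, for $a_i = 1$), the difficulty-weighted statistics typically appear larger in absolute value than the corresponding information-weighted MD and RMSD statistics.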
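The constants in this subsection can be reproduced with a few lines of code. The sketch below is a hedged illustration: it derives the matching constant from the condition that the normal density approximation equals 0.25 at zero, checks the maximal approximation error on an assumed grid, and evaluates the per-unit-DIF coefficients of the difficulty-weighted and information-weighted statistics obtained from the probit-based closed forms. The helper names `md_coef` and `rmsd_coef` are hypothetical.

```python
import math

D = 1.702
PHI0 = 1.0 / math.sqrt(2.0 * math.pi)  # standard normal density at 0
KAPPA = 4.0 * PHI0                     # solves PHI0 / KAPPA = 0.25


def logistic_product(x):
    """Psi(x) * (1 - Psi(x)), the logistic density."""
    p = 1.0 / (1.0 + math.exp(-x))
    return p * (1.0 - p)


def normal_approx(x):
    """Normal density with SD KAPPA, matched to the logistic density at 0."""
    return PHI0 / KAPPA * math.exp(-0.5 * (x / KAPPA) ** 2)


# maximal absolute approximation error on a grid from -10 to 10 (assumed range)
max_gap = max(
    abs(logistic_product(k / 1000.0) - normal_approx(k / 1000.0))
    for k in range(-10000, 10001)
)


def md_coef(a, sd_weight):
    """|MD| per unit DIF for a normal weight centred at the item difficulty."""
    return a / (math.sqrt(2.0 * math.pi) * math.sqrt(D ** 2 + (a * sd_weight) ** 2))


def rmsd_coef(a, sd_weight):
    """RMSD per unit |DIF| for a normal weight centred at the item difficulty."""
    return a / math.sqrt(2.0 * math.pi * D * math.sqrt(D ** 2 + 2.0 * (a * sd_weight) ** 2))


for a in (0.5, 1.0, 2.0):
    diff = (md_coef(a, 1.0), rmsd_coef(a, 1.0))               # difficulty-weighted
    info = (md_coef(a, KAPPA / a), rmsd_coef(a, KAPPA / a))   # information-weighted
    print(a, [round(v, 3) for v in diff], [round(v, 3) for v in info])
```

Under these approximations, the difficulty-weighted coefficients exceed the information-weighted ones whenever the discrimination is below the matching constant, and the ordering reverses above it.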

2.5. Uniformly Weighted RMSD and MD

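Finally, Joo et al. [43] proposed a uniform density on a chosen interval $[\theta_L, \theta_U]$ with $\theta_L < \theta_U$ as a weighting function. The lower and upper bounds of the interval can, for instance, be set to the 1st and 99th percentiles of the group-specific $\theta$ distribution. For a standard normal distribution, this corresponds to $[-2.33, 2.33]$. The weighting function is defined as

$$w_i(\theta) = \frac{1}{\theta_U - \theta_L} \, 1_{[\theta_L, \theta_U]}(\theta) , \tag{21}$$

where $1_{[\theta_L, \theta_U]}$ denotes the indicator function, taking the value 0 outside $[\theta_L, \theta_U]$. As the IRF difference $P_{i,g} - P_i$ is typically small outside $[\theta_L, \theta_U]$, $w_i$ can be approximated by the improper constant weighting function $w_i(\theta) = 1 / (\theta_U - \theta_L)$, which does not integrate to 1.

RMSD and MD statistics using the weighting function (21) are referred to as uniformly weighted (denoted by unif) RMSD and MD statistics. For an improper weighting function equal to 1, Raju [49] provided the explicit expression

$$\int \left[ P_{i,g}(\theta) - P_i(\theta) \right] d\theta = - e_i , \tag{22}$$

which is termed the signed area statistic. Using this result, the uniformly weighted MD is approximately

$$\mathrm{MD}_{\mathrm{unif}} \approx - \frac{e_i}{\theta_U - \theta_L} . \tag{23}$$

It is evident that $\mathrm{MD}_{\mathrm{unif}}$ approaches zero as the interval length $\theta_U - \theta_L$ increases. Therefore, using the improper uniform weighting function equal to 1 for MD assessment is theoretically more appropriate, yielding a value of $-e_i$. This approach also allows direct estimation of the DIF effect size $e_i$, improving statistical efficiency when the signed area measure is computed. Raju [49] further derived

$$\int \left| P_{i,g}(\theta) - P_i(\theta) \right| d\theta = |e_i| , \tag{24}$$

which is referred to as the unsigned area statistic.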

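For the uniformly weighted RMSD statistic, the difference in IRFs is approximated using (8). Applying a linear Taylor approximation to the resulting integral yields

$$\mathrm{RMSD}_{\mathrm{unif}} \approx |e_i| \sqrt{ \frac{a_i}{2 \sqrt{\pi} \, D \, (\theta_U - \theta_L)} } . \tag{25}$$

For $a_i = 1$ and $[\theta_L, \theta_U] = [-2.33, 2.33]$, this simplifies to $\mathrm{RMSD}_{\mathrm{unif}} \approx 0.189 \, |e_i|$.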

2.6. Estimation of Weighted RMSD and Weighted MD

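Until this point, the variants of the RMSD and MD statistics have been described only at the population level, assuming that the group-specific IRF $P_{i,g}$ is known, as is the assumed IRF $P_i$. The observed IRF $\hat{P}_{i,g}$, a sample-based estimate of $P_{i,g}$, is defined for a theta point $\theta_t$ ($t = 1, \ldots, T$) as

$$\hat{P}_{i,g}(\theta_t) = \frac{\sum_{n=1}^{N} h_n(\theta_t) \, x_{ni}}{\sum_{n=1}^{N} h_n(\theta_t)} , \tag{26}$$

where $x_{ni}$ is the response of person $n$ to item $i$ and $h_n(\theta_t)$ is the posterior distribution of person $n$ at grid point $\theta_t$. The posterior distribution is typically obtained by fitting the IRT model via MML [15], so the quantities in (26) can be directly computed from software output. A discrete evaluation of the weighting function is defined as

$$w_{it} = \frac{w_i(\theta_t)}{\sum_{u=1}^{T} w_i(\theta_u)} . \tag{27}$$

The sample-based MD statistic is then defined as

$$\widehat{\mathrm{MD}}_i = \sum_{t=1}^{T} \left[ \hat{P}_{i,g}(\theta_t) - P_i(\theta_t) \right] w_{it} . \tag{28}$$

Following the derivations in [35], it holds that

$$E\big( \widehat{\mathrm{MD}}_i \big) \approx \sum_{t=1}^{T} \left[ P_{i,g}(\theta_t) - P_i(\theta_t) \right] w_{it} , \tag{29}$$

because $E\big( \hat{P}_{i,g}(\theta_t) \big) = P_{i,g}(\theta_t)$ (at least, asymptotically). Thus, on average, the MD statistic is largely unaffected by sampling errors in the estimate of $P_{i,g}$.

The sample-based RMSD is defined as

$$\widehat{\mathrm{RMSD}}_i = \sqrt{ \sum_{t=1}^{T} \left[ \hat{P}_{i,g}(\theta_t) - P_i(\theta_t) \right]^2 w_{it} } . \tag{30}$$

Along the lines of [35] (see also [48]), it follows that

$$E\big( \widehat{\mathrm{RMSD}}_i^{\,2} \big) \approx \sum_{t=1}^{T} \left[ P_{i,g}(\theta_t) - P_i(\theta_t) \right]^2 w_{it} + \sum_{t=1}^{T} \mathrm{Var}\big( \hat{P}_{i,g}(\theta_t) \big) \, w_{it} . \tag{31}$$

Equation (31) shows that the sampling variance of $\hat{P}_{i,g}(\theta_t)$ contributes explicitly to the expected value of the squared sample RMSD. As a result, the sample-based RMSD typically exceeds the population-based RMSD. The quantity $\mathrm{Var}\big( \hat{P}_{i,g}(\theta_t) \big)$ is larger for extreme $\theta_t$ points, reflecting higher variability of the observed IRF at the tails. This variance contribution can be minimized by choosing $w_{it}$ as precision weights, that is, the inverse variances associated with $\hat{P}_{i,g}(\theta_t)$.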
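The sample-based definitions translate directly into code once individual posterior probabilities on the grid are available. The following minimal sketch assumes complete data for a single item, a posterior matrix with one row per person and one column per grid point, and unnormalized weights that are rescaled to sum to 1; the function names and the toy inputs are hypothetical.

```python
import math


def observed_irf(responses, posterior):
    """Posterior-weighted proportion correct at each grid point."""
    n_grid = len(posterior[0])
    observed = []
    for t in range(n_grid):
        num = sum(x * h[t] for x, h in zip(responses, posterior))
        den = sum(h[t] for h in posterior)
        observed.append(num / den)
    return observed


def sample_md_rmsd(responses, posterior, assumed_irf, weights):
    """Sample-based weighted MD and RMSD for one item."""
    total = sum(weights)
    w = [v / total for v in weights]  # discrete weights summing to 1
    obs = observed_irf(responses, posterior)
    dev = [o - p for o, p in zip(obs, assumed_irf)]
    md = sum(d * wt for d, wt in zip(dev, w))
    rmsd = math.sqrt(sum(d * d * wt for d, wt in zip(dev, w)))
    return md, rmsd


# toy example: two persons, two grid points, degenerate posteriors
md, rmsd = sample_md_rmsd(
    responses=[1, 0],
    posterior=[[1.0, 0.0], [0.0, 1.0]],
    assumed_irf=[0.5, 0.5],
    weights=[1.0, 1.0],
)
print(md, rmsd)
```

In the toy example, the positive and negative deviations cancel in the MD but not in the RMSD, which mirrors the relation between the two statistics described in Section 2.1.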

3. Infit and Outfit Under DIF

In this section, the expected values of the item fit statistics infit and outfit under uniform DIF are examined. These statistics are primarily applied for model fit assessment in the Rasch model [14,39,40,50,51,52].

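First, the statistics are formally introduced. Let $n$ denote a case and $x_{ni}$ the item response of case $n$ on a dichotomous item $i$. Define the standardized residual [14,53,54] as

$$z_{ni} = \frac{x_{ni} - E_{ni}}{\sqrt{V_{ni}}} , \tag{32}$$

where $E_{ni}$ and $V_{ni}$ denote the expected value and variance of $X_{ni}$ under the assumption that $P_i$ is the true IRF.

Early studies used individual ability estimates $\hat{\theta}_n$ to compute $E_{ni}$ and $V_{ni}$, which are given by

$$E_{ni} = P_i(\hat{\theta}_n) \quad \text{and} \quad V_{ni} = P_i(\hat{\theta}_n) \left[ 1 - P_i(\hat{\theta}_n) \right] . \tag{33}$$

However, using $\hat{\theta}_n$ instead of the true ability $\theta_n$ may bias the distribution of $z_{ni}$ even if the model holds. To address this, Wu and Adams [53,55] proposed computing infit and outfit statistics based on individual posterior distributions $h_n(\theta)$ rather than $\hat{\theta}_n$ [53,54]. Accordingly, the expected value $E_{ni}$ and variance $V_{ni}$ are computed as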

In practice, $\theta$ is evaluated on a finite grid $\theta_t$ ($t = 1, \ldots, T$) and the posterior distribution is represented by probabilities $h_n(\theta_t)$. The quantities in (34) are then approximated by

The outfit statistic is defined as

$$\mathrm{Outfit}_i = \frac{1}{N} \sum_{n=1}^{N} z_{ni}^2 . \tag{36}$$

If the IRT model holds (i.e., no item misfit such as uniform DIF), the residuals $z_{ni}$ are approximately normally distributed, and $z_{ni}^2$ follows a chi-square distribution with one degree of freedom. The expected value of $z_{ni}^2$ is therefore 1. Consequently, deviations of the outfit statistic from 1, such as values below 0.7 or above 1.3, indicate potential item misfit [51,56,57].

The infit statistic weights the squared standardized residuals according to their variances:

$$\mathrm{Infit}_i = \frac{\sum_{n=1}^{N} V_{ni} \, z_{ni}^2}{\sum_{n=1}^{N} V_{ni}} . \tag{37}$$

Equivalently, it can be expressed as

$$\mathrm{Infit}_i = \frac{\sum_{n=1}^{N} (x_{ni} - E_{ni})^2}{\sum_{n=1}^{N} V_{ni}} . \tag{38}$$

As a weighted average of chi-square distributed variables $z_{ni}^2$, the infit statistic also has an expected value of 1 under the model, and cutoffs of 0.7 and 1.3 are frequently used to detect item misfit.
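Given expected values and variances for each case, both statistics reduce to simple averages of squared residuals. The sketch below is a minimal illustration with hypothetical inputs; how the expected values and variances are obtained (point estimates or posterior-based quantities) is left open.

```python
def outfit_infit(responses, expected, variance):
    """Outfit and infit for one item from per-case expectations and variances."""
    n = len(responses)
    sq_resid = [(x - e) ** 2 for x, e in zip(responses, expected)]
    # outfit: unweighted mean of squared standardized residuals
    outfit = sum(s / v for s, v in zip(sq_resid, variance)) / n
    # infit: variance-weighted mean, i.e., sum of squared residuals over sum of variances
    infit = sum(sq_resid) / sum(variance)
    return outfit, infit


# toy example with three cases (hypothetical values)
expected = [0.5, 0.5, 0.8]
variance = [p * (1.0 - p) for p in expected]
outfit, infit = outfit_infit([1, 0, 1], expected, variance)
print(round(outfit, 3), round(infit, 3))
```

Because the outfit divides each squared residual by its own variance, cases with expected values near 0 or 1 can dominate it, whereas the infit downweights such cases.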

Statistical inference for infit and outfit should be applied when stringent model fit assessment is required [50,54,58].

The following sections present the expected values of the outfit and infit statistics in the presence of uniform DIF.

3.1. Expected Value of Outfit Statistic

In this section, the expected value of the outfit statistic under uniform DIF is computed. The approach consists of evaluating the expected values of the squared residuals in the outfit statistic while letting N tend to infinity.

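First, note that

$$E\left[ \big( X_{ni} - P_i(\theta) \big)^2 \mid \theta \right] = P_{i,g}(\theta) \left[ 1 - P_{i,g}(\theta) \right] + \left[ P_{i,g}(\theta) - P_i(\theta) \right]^2 . \tag{39}$$

For a large number of items $I$ (i.e., estimated ability values $\hat{\theta}_n$ converge to true ability values $\theta_n$) and a large number of cases ($N \to \infty$), the outfit statistic converges to

$$\mathrm{Outfit}_i \to \int \frac{P_{i,g}(\theta) \left[ 1 - P_{i,g}(\theta) \right]}{P_i(\theta) \left[ 1 - P_i(\theta) \right]} \, \phi(\theta; \mu_g, \sigma_g) \, d\theta + \int \frac{\left[ P_{i,g}(\theta) - P_i(\theta) \right]^2}{P_i(\theta) \left[ 1 - P_i(\theta) \right]} \, \phi(\theta; \mu_g, \sigma_g) \, d\theta = T_1 + T_2 , \tag{40}$$

where $T_1$ and $T_2$ denote the two summands in (40). Using the normal density approximation (17) for the item information function, with the constant $\kappa = 1.596$, the first summand in the outfit statistic in (40) can be expressed as

$$T_1 \approx \int \exp\!\left( - \frac{a_i^2 \left[ (\theta - b_i - e_i)^2 - (\theta - b_i)^2 \right]}{2 \kappa^2} \right) \phi(\theta; \mu_g, \sigma_g) \, d\theta . \tag{41}$$

The integral on the right side of (41) has a closed form using the identity (A2) from Appendix A. A quadratic Taylor approximation of the obtained closed form around $e_i = 0$ yields

$$T_1 \approx 1 - \frac{a_i^2}{\kappa^2} (b_i - \mu_g) \, e_i + \left[ \frac{a_i^4 (b_i - \mu_g)^2}{2 \kappa^4} + \frac{a_i^4 \sigma_g^2}{2 \kappa^4} - \frac{a_i^2}{2 \kappa^2} \right] e_i^2 . \tag{42}$$

Next, the computation of $T_2$ is considered. Using the probit IRF approximation of the logit IRF difference and its linear Taylor expansion with respect to $e_i$ (see (8)) in (40), together with the normal density approximation (17) of the information function $I_i$, the integral can be expressed in closed form by applying the identity (A4) from Appendix A. A quadratic Taylor expansion of this result yields an approximation of the form

$$T_2 \approx c_2\big( (b_i - \mu_g)^2 \big) \, e_i^2 , \tag{43}$$

in which the nonnegative coefficient $c_2$ depends on $b_i$ and $\mu_g$ only through the squared difference $(b_i - \mu_g)^2$.

Overall, the expected value of the outfit statistic can be approximated as

$$E(\mathrm{Outfit}_i) \approx 1 - \frac{a_i^2}{\kappa^2} (b_i - \mu_g) \, e_i + c\big( (b_i - \mu_g)^2 \big) \, e_i^2 , \tag{44}$$

where $c$ is a function of the squared difference $(b_i - \mu_g)^2$, which can be derived from (42) and (43). For $a_i = 1$ and $\sigma_g = 1$, this reduces to

$$E(\mathrm{Outfit}_i) \approx 1 - 0.393 \, (b_i - \mu_g) \, e_i + c\big( (b_i - \mu_g)^2 \big) \, e_i^2 . \tag{45}$$

Since the outfit statistic cannot be negative, the approximation (45) is valid only for sufficiently small $|e_i|$. The linear term shows that the outfit decreases below 1 for positive DIF effects ($e_i > 0$) when $b_i > \mu_g$, i.e., for difficult items, and increases when $b_i < \mu_g$. Interestingly, for items with $b_i = \mu_g$, the linear term in (45) vanishes and only the quadratic term in $e_i$ remains. This implies that very large DIF effects are required to detect item misfit in the outfit statistic for items of moderate difficulty. In this respect, the outfit statistic behaves in the opposite way of distribution-weighted RMSD and MD statistics, which are more sensitive to uniform DIF when $b_i \approx \mu_g$.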

3.2. Expected Value of Infit Statistic

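Next, the expected value of the infit statistic is computed. First, consider the numerator in the infit statistic (38) and decompose it as

$$\frac{1}{N} \sum_{n=1}^{N} (x_{ni} - E_{ni})^2 \to \int P_{i,g}(\theta) \left[ 1 - P_{i,g}(\theta) \right] \phi(\theta; \mu_g, \sigma_g) \, d\theta + \int \left[ P_{i,g}(\theta) - P_i(\theta) \right]^2 \phi(\theta; \mu_g, \sigma_g) \, d\theta . \tag{46}$$

The denominator in (38) converges to

$$\frac{1}{N} \sum_{n=1}^{N} V_{ni} \to \int P_i(\theta) \left[ 1 - P_i(\theta) \right] \phi(\theta; \mu_g, \sigma_g) \, d\theta . \tag{47}$$

Using the approximation in (17), with the constant $\kappa = 1.596$, it follows that

$$\int P_i(\theta) \left[ 1 - P_i(\theta) \right] \phi(\theta; \mu_g, \sigma_g) \, d\theta \approx \frac{1}{\sqrt{\kappa^2 + a_i^2 \sigma_g^2}} \, \phi\!\left( \frac{a_i (b_i - \mu_g)}{\sqrt{\kappa^2 + a_i^2 \sigma_g^2}} \right) . \tag{48}$$

Similarly,

$$\int P_{i,g}(\theta) \left[ 1 - P_{i,g}(\theta) \right] \phi(\theta; \mu_g, \sigma_g) \, d\theta \approx \frac{1}{\sqrt{\kappa^2 + a_i^2 \sigma_g^2}} \, \phi\!\left( \frac{a_i (b_i + e_i - \mu_g)}{\sqrt{\kappa^2 + a_i^2 \sigma_g^2}} \right) . \tag{49}$$

Moreover,

$$\int \left[ P_{i,g}(\theta) - P_i(\theta) \right]^2 \phi(\theta; \mu_g, \sigma_g) \, d\theta \approx \frac{a_i^2 e_i^2}{\sqrt{2\pi} \, D \sqrt{D^2 + 2 a_i^2 \sigma_g^2}} \, \phi\!\left( \frac{\sqrt{2} \, a_i (b_i - \mu_g)}{\sqrt{D^2 + 2 a_i^2 \sigma_g^2}} \right) . \tag{50}$$

Taking the expected value of the fraction in the infit statistic and applying a Taylor approximation around $e_i = 0$ yields

$$E(\mathrm{Infit}_i) \approx 1 - \frac{a_i^2}{\kappa^2 + a_i^2 \sigma_g^2} (b_i - \mu_g) \, e_i + d\big( (b_i - \mu_g)^2 \big) \, e_i^2 , \tag{51}$$

where $d$ is a function of the squared difference $(b_i - \mu_g)^2$ that follows from (48), (49), and (50). Setting $a_i = 1$ and $\sigma_g = 1$ simplifies the expression (51) to

$$E(\mathrm{Infit}_i) \approx 1 - 0.282 \, (b_i - \mu_g) \, e_i + d\big( (b_i - \mu_g)^2 \big) \, e_i^2 . \tag{52}$$

Comparing the linear term of the outfit statistic (0.393, see (45)) with the corresponding linear term in the infit statistic (0.282, see (52)) shows that uniform DIF is expected to induce larger deviations from 1 in the outfit than in the infit statistic. Hence, the power to detect model misfit caused by uniform DIF may be higher for the outfit than for the infit statistic.

As with the outfit, positive uniform DIF effects yield infit statistics smaller than 1 when $b_i > \mu_g$ and larger than 1 when $b_i < \mu_g$. For $b_i = \mu_g$, values slightly above 1 are expected, because the linear term in (52) vanishes and the remaining quadratic term is positive.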
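The qualitative statements about outfit and infit can be checked without any of the approximations by integrating the large-sample limits numerically. The sketch below is an illustration under stated assumptions: abilities are treated as known, the data-generating IRF is $\Psi(a_i(\theta - b_i - e_i))$, and a trapezoidal rule over an assumed range replaces the integrals; the function name `expected_fit` is hypothetical.

```python
import math


def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))


def normal_pdf(x, mean=0.0, sd=1.0):
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def expected_fit(a, b, e, mu=0.0, sigma=1.0, half_width=8.0, steps=4000):
    """Large-sample outfit and infit under uniform DIF (trapezoidal rule)."""
    lo = mu - half_width * sigma
    h = 2.0 * half_width * sigma / steps
    outfit = numerator = denominator = 0.0
    for k in range(steps + 1):
        theta = lo + k * h
        wt = h * (0.5 if k in (0, steps) else 1.0) * normal_pdf(theta, mu, sigma)
        p_assumed = logistic(a * (theta - b))
        p_true = logistic(a * (theta - b - e))
        var_assumed = p_assumed * (1.0 - p_assumed)
        # expected squared residual around the assumed IRF
        sq_resid = p_true * (1.0 - p_true) + (p_true - p_assumed) ** 2
        outfit += wt * sq_resid / var_assumed
        numerator += wt * sq_resid
        denominator += wt * var_assumed
    return outfit, numerator / denominator


for b in (-2.0, 0.0, 2.0):
    out, inf = expected_fit(a=1.0, b=b, e=0.5)
    print(b, round(out, 3), round(inf, 3))
```

With a positive DIF effect, both statistics fall below 1 for the difficult item and rise above 1 for the easy item, stay close to 1 for the item located at the group mean, and the outfit deviates more strongly from 1 than the infit.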

4. Simulation Study

4.1. Method

The 2PL model was used for both data generation and analysis. The mean and SD of the normally distributed factor variable $\theta$ were set to 0 and 1, respectively. Non-normal distributions of $\theta$ were not simulated, as substantially different results were not expected in such conditions.

The simulation study included items to examine the behavior of the fit statistics under more typical conditions of longer tests, where ability estimates are relatively precise. Ten base items with known item discriminations and item difficulties were defined in the simulation and duplicated five times to create a test of 50 items. All item discriminations were fixed at 1, resulting in a test with average discrimination. The item difficulties were set to −2.00, −1.56, −1.11, −0.67, −0.22, 0.22, 0.67, 1.11, 1.56, and 2.00, producing a test with item difficulties uniformly distributed across a broad range of ability values. Exactly two out of the 50 items were simulated to exhibit uniform DIF, corresponding to 4% of the total items. A relatively small proportion of DIF items was selected to minimize the influence of DIF item misfit on the non-DIF items (i.e., items without DIF). Items j and for were specified to have uniform DIF in difficulties with values and , respectively. For instance, for , the first and tenth items were affected by DIF and assigned difficulties and . The DIF effect size was set to and , representing large DIF magnitudes [21,59].

Sample sizes N were set to 250, 500, 1000, 2000, and 4000, reflecting typical applications of item fit statistics in large-scale assessments using the 2PL model [8].

In each of the 5 (sample size $N$) × 5 (type of selected DIF items) × 2 (DIF effect size) simulation conditions, 750 replications were conducted. Item parameters were fixed at the parameters of the base items. Hence, the presence of DIF was ignored in the scaling step so that DIF could be detected by the item fit statistics, that is, the distribution-weighted and difficulty-weighted RMSD and MD statistics as well as the infit and outfit statistics. In this scaling step, only the mean $\mu$ and the SD $\sigma$ were estimated.
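The data-generating step of one replication can be sketched as follows. This is an illustration only, not the replication code: the sign pattern of the DIF effects (a shift of $-\delta$ for item $j$ and $+\delta$ for item $11 - j$), the placement of the two DIF items in the first block of ten, the value $\delta = 0.5$, and all function names are assumptions made for the example.

```python
import math
import random

BASE_B = [-2.00, -1.56, -1.11, -0.67, -0.22, 0.22, 0.67, 1.11, 1.56, 2.00]


def item_difficulties(j, delta):
    """Assumed and data-generating difficulties for the 50-item test.

    Assumption: items j and 11 - j (1-based, first block of ten) are
    shifted by -delta and +delta, respectively.
    """
    assumed = BASE_B * 5
    true = list(assumed)
    true[j - 1] -= delta
    true[(11 - j) - 1] += delta
    return assumed, true


def simulate_responses(n_persons, true_b, rng):
    """Dichotomous responses with all discriminations equal to 1."""
    data = []
    for _ in range(n_persons):
        theta = rng.gauss(0.0, 1.0)  # ability from N(0, 1)
        row = [
            1 if rng.random() < 1.0 / (1.0 + math.exp(-(theta - b))) else 0
            for b in true_b
        ]
        data.append(row)
    return data


rng = random.Random(1)
assumed_b, true_b = item_difficulties(j=1, delta=0.5)
data = simulate_responses(250, true_b, rng)
print(len(data), len(data[0]))
```

In the scaling step, the item parameters would then be fixed at `assumed_b`, so that the two shifted items are the only source of misfit.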

The uniformly weighted RMSD and MD fit statistics were not computed in this Simulation Study, as the appropriate bounds for the uniform distribution domain are not clearly defined. The information-weighted RMSD and MD fit statistics were also not evaluated in this simulation, as they are expected to behave similarly to the difficulty-weighted fit statistics, with additional scaling by item discriminations.

Means, SDs, and percentiles (5th, 25th, 50th, 75th, and 95th) were computed for all item fit statistics.

Analyses were conducted in R (Version 4.4.1; [60]) using the TAM package (Version 4.3-25; [61]) for 2PL model fitting. Custom R functions were written for RMSD and MD statistics, while infit and outfit statistics were computed with TAM::msq.itemfit(), which uses numerical integration rather than the stochastic integration in TAM::tam.fit(). Replication materials are available at https://zenodo.org/records/17241167 (accessed on 4 October 2025). Figures were created using the R package ggplot2 (Version 4.0.0; [62,63]).

4.2. Results

Table 1 reports the mean and SD of the item fit statistics for DIF items as a function of item difficulty , DIF effect size , and sample size N. For items with difficulties close to the mean (e.g., ), the distribution-weighted RMSD () exhibited mean and SD values very similar to the difficulty-weighted RMSD (). The empirical RMSD closely matched the approximate expected value of derived in Section 2. MD values reflected the sign of the uniform DIF . Consistent with Section 3, infit and outfit statistics did not indicate uniform DIF for items with near the mean, remaining close to 1 (e.g., for ).

Table 1.

Simulation Study: Mean and standard deviation (SD) of item fit statistics for items with differential item functioning (DIF) as a function of item difficulty $b_i$, DIF effect size, and sample size $N$.

For easier items (i.e., lower $b_i$), RMSD and absolute MD values weighted by the distribution were smaller than the difficulty-weighted counterparts. Notably, difficulty-weighted RMSD and MD exhibited similar means across large samples regardless of $b_i$, whereas $\mathrm{RMSD}_{\mathrm{dist}}$ and $\mathrm{MD}_{\mathrm{dist}}$ approached 0 for items with extreme difficulties. However, difficulty-weighted RMSD and MD exhibited substantially larger SDs, indicating that sampling error strongly affected these statistics for extreme items. The SD of these fit statistics decreased as the sample size increased. Bias due to sampling error in small to moderate samples was also more pronounced for difficulty-weighted RMSD.

As expected from the analytical results in Section 3, infit and outfit statistics detected uniform DIF for items with difficulties far from the mean. Deviations from 1 were asymmetric for items with negative versus positive DIF. For instance, for and , infit was 0.729 for and 1.404 for , highlighting potential limitations of symmetric cutoffs such as 0.8 and 1.2 in misfit detection. The SD of outfit was substantially larger than that of infit, particularly for items with extreme difficulties.

Figure 1 presents percentile plots (5th, 25th, 50th, 75th, and 95th) of distribution-weighted and difficulty-weighted RMSD statistics for DIF items as a function of item difficulty, with a DIF effect of . Variability in item fit statistics was markedly higher for small sample sizes (e.g., ). In particular, the sampling distribution of the difficulty-weighted RMSD () for the item with remained wide even for a large sample size of . This limitation may restrict the operational applicability of this statistic. Positive bias due to sampling error for at was also evident in small to moderate samples.

Figure 1.

Simulation Study: Percentile plot of RMSD item fit statistics for items with differential item functioning (DIF) showing the 5th–95th percentile range (black rectangle), the 25th–75th percentile range (gray-shaded rectangle) and the median (thick black line).

Table 2 reports the mean and SD for items without DIF under simulation conditions with , where the fourth and seventh items exhibited DIF. While MD statistics were centered around the expected value of 0, RMSD statistics showed positive values that deviated substantially from 0 in small to moderate sample sizes. Asymptotically, RMSD values are expected to approach 0; however, sampling error induced notable bias, particularly for items with extreme difficulty (). For instance, the mean of the difficulty-weighted RMSD for this item was 0.158 at . In contrast, infit and outfit statistics closely matched their expected value of 1.

Table 2.

Simulation Study: Mean and standard deviation (SD) of item fit statistics for items without differential item functioning (DIF) as a function of item difficulty $b_i$ and sample size $N$.

Figure 2 presents percentile plots of RMSD statistics for items without DIF. Similar to items with DIF, the sampling distribution of the difficulty-weighted RMSD ($\mathrm{RMSD}_{\mathrm{diff}}$) was wide, with sampling bias particularly pronounced for items with extreme difficulty. In contrast, the distribution-weighted RMSD ($\mathrm{RMSD}_{\mathrm{dist}}$) exhibited comparable variability across items with different difficulties.

Figure 2.

Simulation Study: Percentile plot of RMSD item fit statistics for items without differential item functioning (DIF) showing the 5th–95th percentile range (black rectangle), the 25th–75th percentile range (gray-shaded rectangle) and the median (thick black line).

5. Empirical Example

5.1. Method

This section employs the PISA 2006 dataset [64] for the reading domain. The dataset comprises participants from 26 selected countries that participated in PISA 2006. The complete PISA 2006 dataset is publicly available at https://www.oecd.org/en/data/datasets/pisa-2006-database.html (accessed on 4 October 2025) and can be accessed as the data.pisa2006Read dataset from the R package sirt (Version 4.2-133; [65]).

In PISA 2006, reading items were administered to a subset of students. The present analysis included only students who responded to at least one item from the reading domain. In total, 110,236 students were included, with country-specific sample sizes ranging from 2010 to 12,142 (mean = 4239.8, SD = 3046.7).

Student (sampling) weights were applied throughout the analysis. Within each country, weights were normalized to sum to 5000, ensuring equal contribution across countries. These normalized weights are also referred to as house weights.

Among the 28 reading items, some were originally scored polytomously but were recoded dichotomously for this empirical example, with only the highest category coded as correct. The remaining items were treated as dichotomous, consistent with the original PISA analysis.

In the first step, international item parameters were estimated using the 2PL model applied to the weighted pooled dataset across all countries. The resulting item discriminations and item difficulties are reported in Table 3. In the subsequent country-wise scaling step, only the country mean and country SD were estimated, while item parameters were fixed at their international values from the pooled analysis. Item fit statistics were then computed at the country level based on these fixed parameters, which corresponds to the assumption of full invariance.

Table 3.

Empirical Example, PISA 2006 Reading, Netherlands: International item parameters, DIF effect size and item fit statistics (with standard errors in parentheses).

In the next step, the country-specific distribution parameters (mean and SD) were fixed, and country-specific DIF effects were estimated by allowing item difficulties to vary freely while fixing item discriminations at their international values. The estimated DIF effect can be interpreted as an effect size on the logit scale of the 2PL model, whereas the additional item fit statistics represent alternative DIF quantifications.

The entire procedure was repeated 80 times using balanced repeated replicate weights [64], which allow estimation of standard errors (SE) for approximately normally distributed statistics [66,67]. Replicate weights were used to obtain standard errors for the RMSD, MD, infit, and outfit statistics and for the DIF effect size.
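A replicate-based standard error of this kind can be sketched as follows. The sketch assumes Fay's variant of balanced repeated replication with a factor of 0.5, which is the scheme documented for the PISA replicate weights; the text above only states that balanced repeated replicate weights were used, so the factor should be treated as an assumption. The function name and the toy replicate estimates are hypothetical.

```python
import math


def brr_standard_error(full_estimate, replicate_estimates, fay_factor=0.5):
    """Standard error from replicate estimates under Fay's BRR variant.

    variance = sum((theta_r - theta)^2) / (G * (1 - k)^2),
    with G replicates and Fay factor k (k = 0 gives standard BRR).
    """
    n_rep = len(replicate_estimates)
    sum_sq = sum((r - full_estimate) ** 2 for r in replicate_estimates)
    variance = sum_sq / (n_rep * (1.0 - fay_factor) ** 2)
    return math.sqrt(variance)


# Hypothetical example: 80 replicate RMSD estimates around a full-sample value of 0.10.
full_sample_rmsd = 0.10
replicates = [0.11] * 40 + [0.09] * 40
se_rmsd = brr_standard_error(full_sample_rmsd, replicates)
```

The same function would be applied separately to each statistic (for example, each item's RMSD or infit value), since every statistic is recomputed once per replicate weight.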

Items were flagged (i.e., indicated as misfitting) if certain cutoffs of the fit statistics were exceeded: absolute DIF effect sizes larger than 0.4, RMSD values larger than 0.08, and infit statistics smaller than 0.7 or larger than 1.3.
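The flagging rules can be written as a small helper. This is an illustrative sketch that assumes the first cutoff refers to the estimated DIF effect size and that the same RMSD cutoff applies to both weighting variants; the function name and the example values are hypothetical.

```python
def flag_item(dif_effect, rmsd, infit,
              dif_cut=0.4, rmsd_cut=0.08, infit_low=0.7, infit_high=1.3):
    """Return one boolean flag per statistic for a single item in one country."""
    return {
        "dif": abs(dif_effect) > dif_cut,
        "rmsd": rmsd > rmsd_cut,
        "infit": infit < infit_low or infit > infit_high,
    }


# Hypothetical item: large negative DIF effect, elevated RMSD, unremarkable infit.
flags = flag_item(dif_effect=-0.55, rmsd=0.09, infit=1.05)
```

Keeping the flags separate per statistic, rather than collapsing them into a single indicator, makes it possible to tabulate flagging patterns across statistics.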

5.2. Results

Item fit statistics for PISA 2006 reading results in the Netherlands (NLD) are first reported as an example. A total of 2666 students participated in this country, and results for the 28 reading items are presented. The estimated country mean for NLD was 0.093 (SE = 0.032), and the estimated SD was 1.019 (SE = 0.030). The SD of the estimated DIF effects was 0.432, commonly referred to as the DIF SD [68].

Table 3 reports the item fit statistics for NLD along with their estimated SEs. For instance, 9 of the 28 items exceeded the cutoff value of 0.08 for the distribution-weighted RMSD statistic. For 4 additional items (e.g., Item R067Q01), the difficulty-weighted RMSD exceeded the threshold, while the distribution-weighted RMSD did not. These items had difficulties that deviated substantially from 0. In most cases, items flagged by RMSD were also flagged by the corresponding MD statistic. For NLD, no further items were identified by infit or outfit beyond those already detected as misfitting by the DIF effect size, RMSD, or MD statistics.

This study did not attempt to provide substantive interpretations for why DIF occurred in some items for NLD. Although potential explanations such as translation issues or differences in opportunities-to-learn may be conjectured, clear substantive interpretations of DIF are generally the exception rather than the rule [69]. It is also relevant that current operational PISA practice removes items with country-specific DIF effects from group comparisons by assigning them unique item parameters in the scaling model [19,70]. As a result, PISA does not aim to explain DIF but instead treats it as construct-irrelevant [71], a stance that has been subject to criticism [72,73].

In the second part of this section, the distribution of item fit statistics across all countries is examined. The analysis is based on 724 cases (i.e., items crossed with countries, with some items excluded in certain countries for technical reasons in the official PISA dataset).

Figure 3 displays histograms of the distribution-weighted RMSD and the difficulty-weighted RMSD. On average, the distribution-weighted RMSD produced smaller values than its difficulty-weighted counterpart. Both distributions were notably skewed (skewness of 1.843 and 1.712 for the distribution-weighted and difficulty-weighted RMSD, respectively).

Figure 3.

Empirical Example, PISA 2006 Reading: Histogram of distribution-weighted RMSD (left panel) and difficulty-weighted RMSD (right panel) statistics.

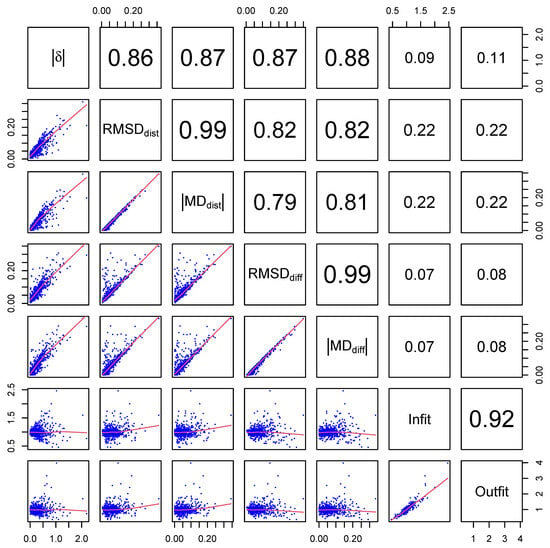

Figure 4 presents a correlation plot of the computed item fit statistics. Corresponding and were highly correlated (), whereas and showed a lower correlation of 0.82, indicating substantial differences between these measures. The DIF effect size and the RMSD and MD statistics were largely uncorrelated with infit or outfit. Notably, correlated slightly more with than with .

Figure 4.

Empirical Example, PISA 2006 Reading: Correlation plot of item fit statistics.

Finally, Table 4 presents the patterns of item flagging based on the DIF effect size, the distribution-weighted RMSD, the difficulty-weighted RMSD, and infit. Overall, 65.1% of items were not flagged, indicating that 34.9% were identified by at least one fit statistic. Specifically, 20.7% of items were flagged by the DIF effect size, indicating uniform DIF as the source of misfit. Additionally, 24.0% of items were flagged by the distribution-weighted RMSD, 32.7% by the difficulty-weighted RMSD, and 6.4% by infit.

Table 4.

Empirical Example, PISA 2006 Reading: Relative frequencies for patterns of flagged items based on the DIF effect size, the distribution-weighted and difficulty-weighted RMSD, and the infit statistic.

Only 3.0% of items were flagged by all four statistics. Moreover, 2.8% of items were flagged by but not by . Notably, 14.2% of items were flagged when , suggesting that the misfit could be due to nonuniform DIF or misspecification of the IRF functional form. Of these, 12.8% were additionally detected by , indicating limited incremental value of the infit statistic.
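The reported shares fit together arithmetically. As a reading aid, and assuming that the 14.2% refers to items flagged by at least one statistic but not by the DIF effect size, the figures quoted for Table 4 combine as

```latex
\begin{align*}
100\% - 65.1\% &= 34.9\% && \text{(flagged by at least one statistic)},\\
34.9\% - 20.7\% &= 14.2\% && \text{(flagged, but not by the DIF effect size)}.
\end{align*}
```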

Table 5 displays the percentages of flagged items at the country level for the 26 countries included in this study. The results indicate considerable variation in the proportion of detected DIF items across countries. For instance, the average percentage was 24.1% for the distribution-weighted RMSD statistic (), with an SD of , ranging from 7.1% to 37.0%. For the difficulty-weighted RMSD statistic (), the average percentage increased to 32.74% with an SD of 9.94%, ranging from 17.9% to 51.9%. These findings suggest that DIF may be viewed as a country-specific characteristic, with items in some countries exhibiting greater susceptibility to DIF than in others.

Table 5.

Empirical Example, PISA 2006 Reading: Percentages of flagged items based on the DIF effect size, the distribution-weighted and difficulty-weighted RMSD, and the infit statistic at the country level.

6. Discussion

This article aimed to provide analytical and simulation evidence on how the uniform DIF effect is reflected in the item fit statistics RMSD, MD, infit, and outfit.

Closed-form approximations were derived for the item fit statistics. The distribution-weighted RMSD and MD statistics, as originally proposed and now applied in operational practice [42], attain the largest absolute values when item difficulties align with the mean of the ability distribution. In this case, RMSD and the absolute MD statistics are approximately , whereas they decrease to around when . The dependency of the item fit statistic on item difficulty is removed by using the difficulty-weighted RMSD and MD statistics. However, the difficulty-weighted statistics have the disadvantage of exhibiting substantially larger variances than their distribution-weighted counterparts.
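The dependence on item difficulty can be illustrated numerically. The sketch below is not the closed-form approximation referred to above: it evaluates the RMSD integral on a grid for a hypothetical item with discrimination 1 and a uniform DIF effect of 0.5, assuming a standard normal ability distribution. Modeling the difficulty-weighted variant as a unit-variance normal weight centered at the item difficulty is an assumption made for illustration only.

```python
import math


def logistic(x):
    """Logistic function used by the 2PL item response function."""
    return 1.0 / (1.0 + math.exp(-x))


def normal_pdf(x, mean=0.0, sd=1.0):
    """Density of a normal distribution with the given mean and SD."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def weighted_rmsd(a, b, dif, weight_mean, n=2001, half_width=8.0):
    """RMSD between the 2PL IRF with difficulty b and the IRF with difficulty
    b + dif, weighted by a unit-variance normal density centered at weight_mean.
    The integral is approximated on an equally spaced grid."""
    step = 2.0 * half_width / (n - 1)
    num = 0.0
    den = 0.0
    for k in range(n):
        theta = weight_mean - half_width + k * step
        w = normal_pdf(theta, mean=weight_mean)
        diff = logistic(a * (theta - b - dif)) - logistic(a * (theta - b))
        num += w * diff * diff
        den += w
    return math.sqrt(num / den)


# Hypothetical item: discrimination 1, uniform DIF effect 0.5, ability ~ N(0, 1).
results = {}
for b in (0.0, 1.0, 2.0, 3.0):
    dist_w = weighted_rmsd(1.0, b, 0.5, weight_mean=0.0)  # weight at group mean
    diff_w = weighted_rmsd(1.0, b, 0.5, weight_mean=b)    # weight at item difficulty
    results[b] = (dist_w, diff_w)
    print(f"b = {b:.1f}  distribution-weighted = {dist_w:.4f}  "
          f"difficulty-weighted = {diff_w:.4f}")
```

Under these assumptions the distribution-weighted value shrinks as the difficulty moves away from the group mean, whereas the difficulty-centered value stays constant because the weight moves together with the item.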

Moreover, analytical approximations of the expected values of the outfit and infit statistics showed that they exhibit only slight deviations from 1 under uniform DIF when item difficulty is close to the group mean . For positive DIF effects, the expected values of the infit and outfit statistics fall below 1 for difficult items (i.e., ), while they exceed 1 for easy items.
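The direction of these deviations can be checked with a population-level sketch. It uses the usual mean-square definitions (outfit as the average squared standardized residual, infit as the ratio of summed squared residuals to summed model variances) and treats ability as known, so it ignores estimation error. The item parameters, the DIF effect of 0.5, and the standard normal ability distribution are hypothetical choices for illustration.

```python
import math


def logistic(x):
    """Logistic function used by the 2PL item response function."""
    return 1.0 / (1.0 + math.exp(-x))


def normal_pdf(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def expected_fit(a, b, dif, n=2001, half_width=8.0):
    """Approximate expected (outfit, infit) when responses follow the IRF with
    difficulty b + dif but residuals are computed from the IRF with difficulty b."""
    step = 2.0 * half_width / (n - 1)
    outfit_num = weight_sum = infit_num = infit_den = 0.0
    for k in range(n):
        theta = -half_width + k * step
        w = normal_pdf(theta)
        p_model = logistic(a * (theta - b))
        p_true = logistic(a * (theta - b - dif))
        model_var = p_model * (1.0 - p_model)
        # E[(X - p_model)^2] for X ~ Bernoulli(p_true)
        sq_resid = p_true * (1.0 - p_true) + (p_true - p_model) ** 2
        outfit_num += w * sq_resid / model_var
        weight_sum += w
        infit_num += w * sq_resid
        infit_den += w * model_var
    return outfit_num / weight_sum, infit_num / infit_den


outfit_easy, infit_easy = expected_fit(a=1.0, b=-2.0, dif=0.5)
outfit_mid, infit_mid = expected_fit(a=1.0, b=0.0, dif=0.5)
outfit_hard, infit_hard = expected_fit(a=1.0, b=2.0, dif=0.5)
```

In this sketch a positive DIF effect pushes both statistics below 1 for the difficult item and above 1 for the easy item, while the item located at the group mean stays close to 1.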

The simulation study indicated that distribution-weighted RMSD and MD statistics consistently detected uniform DIF, but only for items with difficulty levels near the mean. Notably, distribution-weighted RMSD produced substantially lower values for items with extreme difficulties than difficulty-weighted RMSD, complicating DIF detection for items with identical DIF effect sizes in the item difficulty parameter. In contrast, difficulty-weighted RMSD and MD were sensitive to uniform DIF even for items with difficulties far from 0. Researchers might therefore consider using the difficulty-weighted versions for such items. However, these statistics were also more affected by sampling variability, exhibiting elevated SDs and positive bias for items with extreme difficulties, particularly in small-to-moderate samples, which limits their effectiveness in practice. Consequently, sufficiently large sample sizes are required if uniform DIF for items with extreme difficulties is to be detected using the difficulty-weighted RMSD and MD statistics.

The results of the simulation study and analytical derivations further indicate that item infit and outfit statistics are not suitable for detecting uniform DIF, except in the case of items with extreme difficulties. However, in such cases, difficulty-weighted RMSD and MD fit statistics provide viable alternatives.

An anonymous reviewer noted that in countries with extremely low or high performance, distribution-weighted RMSD and MD statistics may behave differently even under the same extent of DIF [37]. In such cases, difficulty-weighted or information-weighted RMSD and MD statistics may be preferable. However, these statistics would also exhibit greater variability when the item difficulty substantially deviates from the group mean.

A substantial body of literature addresses methods for DIF detection [74,75]. These methods often assess DIF directly through deviations in item parameters, such as differences in item difficulties or discriminations in the 2PL model [76]. In contrast, the RMSD, MD, infit, and outfit statistics provide aggregated measures that summarize DIF across IRT model parameters into a single discrepancy metric. The choice of metric for DIF detection ultimately depends on the researcher’s objectives. This study also demonstrated that uniform DIF is challenging to detect for items with extreme difficulty when using distribution-weighted RMSD or MD statistics. However, it may be argued that DIF in items with extreme difficulties is of lesser practical importance than DIF in items with moderate difficulties, as the latter receive greater weights in likelihood-based estimation of group differences, whereas the former contribute less. Consequently, for researchers primarily concerned with the practical impact of DIF in likelihood-based estimation, distribution-weighted RMSD or MD statistics may be more appropriate than their difficulty-weighted counterparts.

The empirical application using PISA data demonstrated that the item fit statistics were far from perfectly correlated with one another. This highlights the importance of deciding which aspects of misfit are of interest. The assessment of standard errors should also be incorporated in operational practice, as item fit statistics can exhibit considerable variability, particularly in smaller samples, such as those in PISA field test studies.

This article focused exclusively on weighted RMSD item fit statistics as originally defined. RMSD has been shown to exhibit positive bias for small to moderate sample sizes. Future research could explore bias-corrected RMSD estimates to reduce such bias in finite samples (see [35]).

An additional limitation is the exclusive focus on uniform DIF, that is, DIF in item difficulties. Nonuniform DIF, defined as DIF in item discriminations, may also be relevant in empirical applications and represents a promising direction for future research, although evidence indicates that uniform DIF occurs more frequently in practice than nonuniform DIF [77,78,79]. The MD statistic is expected to be less effective for nonuniform DIF than the RMSD fit statistic. Item infit and outfit statistics are expected to show greater sensitivity to misspecified item discriminations [54], and therefore, they may be more suitable for detecting nonuniform DIF than uniform DIF.

An anonymous reviewer raised the question of what would happen if anchoring were not perfect and no fixed item parameters were used. In the simulation study, only two of the 50 items were assumed to exhibit uniform DIF, which is likely a lower proportion of misfitting items than would typically be found in practice. This choice was made to examine the behavior of the fit statistics under ideal conditions, in which the anchor item set shows no DIF and has minimal influence on the item under investigation. The behavior of the item fit statistics can be expected to deteriorate as the proportion of DIF items increases. More specifically, for the RMSD item fit statistic, the average RMSD for non-DIF items would increase notably above zero, whereas the average RMSD for DIF items would decrease as the proportion of DIF items increases [35]. Consequently, as the quality of the anchor item set decreases in terms of DIF absence, detecting DIF in truly affected items becomes more difficult. Moreover, average values of the item fit statistics are not expected to change substantially if item parameters are estimated rather than fixed, although the variability of the item fit statistics would increase when item parameters are estimated.

Finally, this study focused solely on the 2PL model. It has been argued that a potentially misfitting 2PL model (or even the Rasch model) may still be the preferred operational scaling model, even when a more complex IRT model holds [73,80]. Notably, misspecification in the IRF is also reflected in the RMSD statistic in addition to DIF. This property may help explain why RMSD is preferred over MD in applications such as the PISA study, as MD only partially offsets the effect of IRF misfit. If the group-specific item parameters of the 2PL model are interpreted as the best approximation that minimizes the Kullback–Leibler distance, it may be advantageous to compute an RMSD statistic directly on group-specific 2PL item parameters rather than relying on nonparametric estimation of group-specific IRFs.

Funding

This research received no external funding.

Data Availability Statement

Replication material for the Simulation Study in Section 4 can be found at https://zenodo.org/records/17241167 (accessed on 4 October 2025). The dataset data.pisa2006Read used in the empirical example in Section 5 is available from the R package sirt (https://doi.org/10.32614/CRAN.package.sirt; accessed on 4 October 2025).

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| 2PL | two-parameter logistic |
| DIF | differential item functioning |
| IRF | item response function |
| IRT | item response theory |
| LSA | large-scale assessment |
| MD | mean deviation |
| MML | marginal maximum likelihood |
| NLD | the Netherlands |
| PISA | Programme for International Student Assessment |
| RMSD | root mean square deviation |
| SD | standard deviation |
| SE | standard error |

Appendix A. Integral Identities for the Normal Distribution

Let denote the density of the standard normal distribution. Moreover, let , , b and d be real numbers. The following integral identities hold according to Owen [81]:

Note that (A3) is obtained from (A2) by setting and .

The computation of the above integrals is based on the identity

with real numbers A, B, C, and A is positive.
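Identities of this type can be verified numerically. The sketch below checks two well-known normal integrals of the kind tabulated by Owen, namely that the integral of Φ(a + bx)φ(x) over the real line equals Φ(a / sqrt(1 + b²)) and that the integral of φ(x)φ(a + bx) equals φ(a / sqrt(1 + b²)) / sqrt(1 + b²). Whether these coincide exactly with (A1) to (A3) is an assumption; the constants `a_val` and `b_val` are arbitrary test values.

```python
import math


def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def Phi(x):
    """Standard normal distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def integrate(f, lo=-10.0, hi=10.0, n=4001):
    """Trapezoidal rule on an equally spaced grid."""
    step = (hi - lo) / (n - 1)
    total = 0.5 * (f(lo) + f(hi))
    for k in range(1, n - 1):
        total += f(lo + k * step)
    return total * step


a_val, b_val = 0.7, 1.3
scale = math.sqrt(1.0 + b_val ** 2)

# Integral of Phi(a + b x) phi(x) dx versus its closed form.
lhs_cdf = integrate(lambda x: Phi(a_val + b_val * x) * phi(x))
rhs_cdf = Phi(a_val / scale)

# Integral of phi(x) phi(a + b x) dx versus its closed form.
lhs_pdf = integrate(lambda x: phi(x) * phi(a_val + b_val * x))
rhs_pdf = phi(a_val / scale) / scale
```

Closed forms of this kind are what make a probit approximation of the logistic IRF convenient, because integrals of normal distribution functions against normal densities stay within the normal family.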

Appendix B. Approximation Error in the Computation of the RMSD Statistic

A bound is now derived for the approximation error when computing the distribution-weighted RMSD statistic based on the probit IRF approximation and the Taylor approximation of the difference in the probit IRFs. To simplify notation, let and denote the true IRFs. Furthermore, as in (8), their respective probit IRF approximations are and . It was noted in Section 2 that the absolute difference does not exceed .

The approximation error of the linear Taylor approximation (8) of the difference with respect to the uniform DIF effect is now determined. This requires a bound on the remainder term of the Taylor approximation. The necessary second derivative of is

Hence, the approximation error in the Taylor expansion (8) is bounded by

where denotes the linear Taylor approximation of .

Let denote the square of the RMSD (i.e., the MSD statistic) obtained using the two approximations, formally defined as

The true value of the square of the RMSD statistic, without approximations, is

The following identity holds

Using (A6), this yields

Hence, a bound for the approximation error of the MSD statistic is obtained as

where denotes the upper bound of the approximation error in the computed MSD statistic.

Finally, a bound for the resulting approximation error of the RMSD statistic is derived. For positive x and real-valued e, the following inequality holds:

Let and denote the RMSD values obtained with and without approximation, respectively. Using (A12), the approximation error in the RMSD statistic satisfies

Appendix C. Country Labels for PISA 2006 Reading Study

The country labels used in Table 5 are as follows: AUS = Australia; AUT = Austria; BEL = Belgium; CAN = Canada; CHE = Switzerland; CZE = Czech Republic; DEU = Germany; DNK = Denmark; ESP = Spain; EST = Estonia; FIN = Finland; FRA = France; GBR = United Kingdom; GRC = Greece; HUN = Hungary; IRL = Ireland; ISL = Iceland; ITA = Italy; JPN = Japan; KOR = Korea; LUX = Luxembourg; NLD = Netherlands; NOR = Norway; POL = Poland; PRT = Portugal; SWE = Sweden.

References

1. Baker, F.B.; Kim, S.H. Item Response Theory: Parameter Estimation Techniques; CRC Press: Boca Raton, FL, USA, 2004.

2. Bock, R.D.; Moustaki, I. Item response theory in a general framework. In Handbook of Statistics: Psychometrics; Rao, C.R., Sinharay, S., Eds.; North Holland (Elsevier): Amsterdam, The Netherlands, 2007; Volume 26, pp. 469–513.

3. Bock, R.D.; Gibbons, R.D. Item Response Theory; Wiley: Hoboken, NJ, USA, 2021.

4. Chen, Y.; Li, X.; Liu, J.; Ying, Z. Item response theory—A statistical framework for educational and psychological measurement. Stat. Sci. 2025, 40, 167–194.

5. Tutz, G. A Short Guide to Item Response Theory Models; Springer: Cham, Switzerland, 2025.

6. Yen, W.M.; Fitzpatrick, A.R. Item response theory. In Educational Measurement; Brennan, R.L., Ed.; Praeger Publishers: Westport, CT, USA, 2006; pp. 111–154.

7. Lietz, P.; Cresswell, J.C.; Rust, K.F.; Adams, R.J. (Eds.) Implementation of Large-Scale Education Assessments; Wiley: New York, NY, USA, 2017.

8. Rutkowski, L.; von Davier, M.; Rutkowski, D. (Eds.) A Handbook of International Large-Scale Assessment: Background, Technical Issues, and Methods of Data Analysis; Chapman Hall/CRC Press: London, UK, 2013.

9. Frey, A.; Hartig, J.; Rupp, A.A. An NCME instructional module on booklet designs in large-scale assessments of student achievement: Theory and practice. Educ. Meas. 2009, 28, 39–53.

10. Braun, H.; von Davier, M. The use of test scores from large-scale assessment surveys: Psychometric and statistical considerations. Large-Scale Assess. Educ. 2017, 5, 17.

11. van der Linden, W.J. Unidimensional logistic response models. In Handbook of Item Response Theory: Models; van der Linden, W.J., Ed.; CRC Press: Boca Raton, FL, USA, 2016; Volume 1, pp. 11–30.

12. Birnbaum, A. Some latent trait models and their use in inferring an examinee’s ability. In Statistical Theories of Mental Test Scores; Lord, F.M., Novick, M.R., Eds.; MIT Press: Reading, MA, USA, 1968; pp. 397–479.

13. Rasch, G. Probabilistic Models for Some Intelligence and Attainment Tests; Danish Institute for Educational Research: Copenhagen, Denmark, 1960.

14. Bond, T.; Yan, Z.; Heene, M. Applying the Rasch Model; Routledge: New York, NY, USA, 2020.

15. Glas, C.A.W. Maximum-likelihood estimation. In Handbook of Item Response Theory: Statistical Tools; van der Linden, W.J., Ed.; CRC Press: Boca Raton, FL, USA, 2016; Volume 2, pp. 197–216.

16. Robitzsch, A. A note on a computationally efficient implementation of the EM algorithm in item response models. Quant. Comput. Methods Behav. Sc. 2021, 1, e3783.

17. Aitkin, M. Expectation maximization algorithm and extensions. In Handbook of Item Response Theory: Statistical Tools; van der Linden, W.J., Ed.; CRC Press: Boca Raton, FL, USA, 2016; Volume 2, pp. 217–236.

18. Bock, R.D.; Aitkin, M. Marginal maximum likelihood estimation of item parameters: Application of an EM algorithm. Psychometrika 1981, 46, 443–459.

19. OECD. PISA 2018; Technical Report; OECD: Paris, France, 2020; Available online: https://bit.ly/3zWbidA (accessed on 4 October 2025).

20. Holland, P.W.; Wainer, H. (Eds.) Differential Item Functioning: Theory and Practice; Lawrence Erlbaum: Hillsdale, NJ, USA, 1993.

21. Penfield, R.D.; Camilli, G. Differential item functioning and item bias. In Handbook of Statistics: Psychometrics; Rao, C.R., Sinharay, S., Eds.; North Holland (Elsevier): Amsterdam, The Netherlands, 2007; Volume 26, pp. 125–167.

22. Mellenbergh, G.J. Item bias and item response theory. Int. J. Educ. Res. 1989, 13, 127–143.

23. Douglas, J.; Cohen, A. Nonparametric item response function estimation for assessing parametric model fit. Appl. Psychol. Meas. 2001, 25, 234–243.

24. Sinharay, S.; Haberman, S.J. How often is the misfit of item response theory models practically significant? Educ. Meas. 2014, 33, 23–35.

25. Swaminathan, H.; Hambleton, R.K.; Rogers, H.J. Assessing the fit of item response theory models. In Handbook of Statistics: Psychometrics; Rao, C.R., Sinharay, S., Eds.; North Holland (Elsevier): Amsterdam, The Netherlands, 2007; Volume 26, pp. 683–718.

26. Buchholz, J.; Hartig, J. Comparing attitudes across groups: An IRT-based item-fit statistic for the analysis of measurement invariance. Appl. Psychol. Meas. 2019, 43, 241–250.

27. Buchholz, J.; Hartig, J. Measurement invariance testing in questionnaires: A comparison of three multigroup-CFA and IRT-based approaches. Psychol. Test Assess. Model. 2020, 62, 29–53. Available online: https://bit.ly/38kswHh (accessed on 4 October 2025).

28. Khorramdel, L.; Shin, H.J.; von Davier, M. GDM software mdltm including parallel EM algorithm. In Handbook of Diagnostic Classification Models; von Davier, M., Lee, Y.S., Eds.; Springer: Cham, Switzerland, 2019; pp. 603–628.

29. Kim, Y.K.; Cai, L.; Kim, Y. Evaluation of item fit with output from the EM algorithm: RMSD index based on posterior expectations. Educ. Psychol. Meas. 2025; Epub ahead of print.

30. Köhler, C.; Robitzsch, A.; Hartig, J. A bias-corrected RMSD item fit statistic: An evaluation and comparison to alternatives. J. Educ. Behav. Stat. 2020, 45, 251–273.

31. Köhler, C.; Robitzsch, A.; Fährmann, K.; von Davier, M.; Hartig, J. A semiparametric approach for item response function estimation to detect item misfit. Brit. J. Math. Stat. Psychol. 2021, 74, 157–175.

32. Kunina-Habenicht, O.; Rupp, A.A.; Wilhelm, O. A practical illustration of multidimensional diagnostic skills profiling: Comparing results from confirmatory factor analysis and diagnostic classification models. Stud. Educ. Eval. 2009, 35, 64–70.

33. Joo, S.H.; Khorramdel, L.; Yamamoto, K.; Shin, H.J.; Robin, F. Evaluating item fit statistic thresholds in PISA: Analysis of cross-country comparability of cognitive items. Educ. Meas. 2021, 40, 37–48.

34. Joo, S.; Ali, U.; Robin, F.; Shin, H.J. Impact of differential item functioning on group score reporting in the context of large-scale assessments. Large-Scale Assess. Educ. 2022, 10, 18.

35. Robitzsch, A. Statistical properties of estimators of the RMSD item fit statistic. Foundations 2022, 2, 488–503.

36. Sueiro, M.J.; Abad, F.J. Assessing goodness of fit in item response theory with nonparametric models: A comparison of posterior probabilities and kernel-smoothing approaches. Educ. Psychol. Meas. 2011, 71, 834–848.

37. Tijmstra, J.; Bolsinova, M.; Liaw, Y.L.; Rutkowski, L.; Rutkowski, D. Sensitivity of the RMSD for detecting item-level misfit in low-performing countries. J. Educ. Meas. 2020, 57, 566–583.

38. von Davier, M.; Bezirhan, U. A robust method for detecting item misfit in large scale assessments. Educ. Psychol. Meas. 2023, 83, 740–765.

39. Wright, B.D.; Stone, M.H. Best Test Design; Mesa Press: Chicago, IL, USA, 1979; Available online: https://bit.ly/38jnLMX (accessed on 4 October 2025).

40. Wu, M.; Tam, H.P.; Jen, T.H. Educational Measurement for Applied Researchers; Springer: Singapore, 2016.

41. Fährmann, K.; Köhler, C.; Hartig, J.; Heine, J.H. Practical significance of item misfit and its manifestations in constructs assessed in large-scale studies. Large-Scale Assess. Educ. 2022, 10, 7.

42. von Davier, M.; Yamamoto, K.; Shin, H.J.; Chen, H.; Khorramdel, L.; Weeks, J.; Davis, S.; Kong, N.; Kandathil, M. Evaluating item response theory linking and model fit for data from PISA 2000–2012. Assess. Educ. 2019, 26, 466–488.

43. Joo, S.; Valdivia, M.; Svetina Valdivia, D.; Rutkowski, L. Alternatives to weighted item fit statistics for establishing measurement invariance in many groups. J. Educ. Behav. Stat. 2024, 49, 465–493.

44. Held, L.; Sabanés Bové, D. Applied Statistical Inference; Springer: Berlin/Heidelberg, Germany, 2014.

45. Camilli, G. Origin of the scaling constant d = 1.7 in item response theory. J. Educ. Stat. 1994, 19, 293–295.

46. Camilli, G. The scaling constant D in item response theory. Open J. Stat. 2017, 7, 780–785.

47. Savalei, V. Logistic approximation to the normal: The KL rationale. Psychometrika 2006, 71, 763–767.

48. Robitzsch, A.; Lüdtke, O. A review of different scaling approaches under full invariance, partial invariance, and noninvariance for cross-sectional country comparisons in large-scale assessments. Psychol. Test Assess. Model. 2020, 62, 233–279. Available online: https://bit.ly/3ezBB05 (accessed on 4 October 2025).

49. Raju, N.S. The area between two item characteristic curves. Psychometrika 1988, 53, 495–502.

50. Joo, S.H.; Lee, P. Detecting differential item functioning using posterior predictive model checking: A comparison of discrepancy statistics. J. Educ. Meas. 2022, 59, 442–469.

51. Linacre, J.M. Understanding Rasch measurement: Estimation methods for Rasch measures. J. Outcome Meas. 1999, 3, 382–405. Available online: https://bit.ly/2UV6Eht (accessed on 4 October 2025).

52. van der Linden, W.J.; Hambleton, R.K. (Eds.) Handbook of Modern Item Response Theory; Springer: New York, NY, USA, 1997.

53. Adams, R.J.; Wu, M.L. The mixed-coefficients multinomial logit model: A generalized form of the Rasch model. In Multivariate and Mixture Distribution Rasch Models; von Davier, M., Carstensen, C.H., Eds.; Springer: New York, NY, USA, 2007; pp. 57–75.

54. Wu, M.; Adams, R.J. Properties of Rasch residual fit statistics. J. Appl. Meas. 2013, 14, 339–355.

55. Adams, R.; Wu, M. (Eds.) PISA 2000 Technical Report; OECD: Paris, France, 2003; Available online: https://tinyurl.com/y79c3kmp (accessed on 4 October 2025).

56. Lamprianou, I. Applying the Rasch Model in Social Sciences Using R and BlueSky Statistics; Routledge: New York, NY, USA, 2019.

57. Wilson, M. Constructing Measures: An Item Response Modeling Approach; Routledge: New York, NY, USA, 2004.

58. Silva Diaz, J.A.; Köhler, C.; Hartig, J. Performance of infit and outfit confidence intervals calculated via parametric bootstrapping. Appl. Meas. Educ. 2022, 35, 116–132.

59. Osterlind, S.J.; Everson, H.T. Differential Item Functioning; Sage: Newcastle upon Tyne, UK, 2009.

60. R Core Team. R: A Language and Environment for Statistical Computing; R Core Team: Vienna, Austria, 2024; Available online: https://www.R-project.org (accessed on 15 June 2024).

61. Robitzsch, A.; Kiefer, T.; Wu, M. TAM: Test Analysis Modules. R Package Version 4.3-25. 2025. Available online: https://cran.r-project.org/web/packages/TAM/index.html (accessed on 28 August 2025).

62. Wickham, H.; Chang, W.; Henry, L.; Pedersen, T.L.; Takahashi, K.; Wilke, C.; Woo, K.; Yutani, H.; Dunnington, D.; van den Brand, T.; et al. ggplot2: Create Elegant Data Visualisations Using the Grammar of Graphics. R Package Version 4.0.0. 2025. Available online: https://cran.r-project.org/web/packages/ggplot2/index.html (accessed on 11 September 2025).

63. Wickham, H. ggplot2: Elegant Graphics for Data Analysis; Springer: New York, NY, USA, 2016.

64. OECD. PISA 2006 Technical Report; OECD: Paris, France, 2009; Available online: https://bit.ly/38jhdzp (accessed on 4 October 2025).

65. Robitzsch, A. sirt: Supplementary Item Response Theory Models. R Package Version 4.2-133. 2025. Available online: https://cran.r-project.org/web/packages/sirt/index.html (accessed on 27 September 2025).

66. Kolenikov, S. Resampling variance estimation for complex survey data. Stata J. 2010, 10, 165–199.

67. Rao, J.N.K.; Wu, C.F.J. Resampling inference with complex survey data. J. Am. Stat. Assoc. 1988, 83, 231–241.

68. Longford, N.T.; Holland, P.W.; Thayer, D.T. Stability of the MH D-DIF statistics across populations. In Differential Item Functioning; Holland, P.W., Wainer, H., Eds.; Routledge: London, UK, 1993; pp. 171–196.

69. Ackerman, T.A.; Ma, Y. Examining differential item functioning from a multidimensional IRT perspective. Psychometrika 2024, 89, 4–41.

70. von Davier, M.; Khorramdel, L.; He, Q.; Shin, H.J.; Chen, H. Developments in psychometric population models for technology-based large-scale assessments: An overview of challenges and opportunities. J. Educ. Behav. Stat. 2019, 44, 671–705.

71. Camilli, G. The case against item bias detection techniques based on internal criteria: Do item bias procedures obscure test fairness issues? In Differential Item Functioning: Theory and Practice; Holland, P.W., Wainer, H., Eds.; Erlbaum: Hillsdale, NJ, USA, 1993; pp. 397–417.

72. Adams, R.J. Comments on Kreiner 2011: Is the Foundation Under PISA Solid? A Critical Look at the Scaling Model Underlying International Comparisons of Student Attainment; Technical Report; OECD: Paris, France, 2011; Available online: https://bit.ly/3wVUKo0 (accessed on 4 October 2025).

73. Robitzsch, A.; Lüdtke, O. Some thoughts on analytical choices in the scaling model for test scores in international large-scale assessment studies. Meas. Instrum. Soc. Sci. 2022, 4, 9.

74. Bauer, D.J. Enhancing measurement validity in diverse populations: Modern approaches to evaluating differential item functioning. Brit. J. Math. Stat. Psychol. 2023, 76, 435–461.

75. Kopf, J.; Zeileis, A.; Strobl, C. Anchor selection strategies for DIF analysis: Review, assessment, and new approaches. Educ. Psychol. Meas. 2015, 75, 22–56.

76. Lord, F.M. Applications of Item Response Theory to Practical Testing Problems; Erlbaum: Hillsdale, NJ, USA, 1980.

77. Boer, D.; Hanke, K.; He, J. On detecting systematic measurement error in cross-cultural research: A review and critical reflection on equivalence and invariance tests. J. Cross-Cult. Psychol. 2018, 49, 713–734.

78. He, J.; Barrera-Pedemonte, F.; Buchholz, J. Cross-cultural comparability of noncognitive constructs in TIMSS and PISA. Assess. Educ. 2019, 26, 369–385.

79. Rutkowski, L.; Svetina, D. Assessing the hypothesis of measurement invariance in the context of large-scale international surveys. Educ. Psychol. Meas. 2014, 74, 31–57.

80. Robitzsch, A. On the choice of the item response model for scaling PISA data: Model selection based on information criteria and quantifying model uncertainty. Entropy 2022, 24, 760.

81. Owen, D.B. A table of normal integrals. Commun. Stat. Simul. Comput. 1980, 9, 389–419.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).