Abstract

In this paper, we unify and extend linear quadratic pursuit–evasion games to dynamic equations on time scales. A mixed strategy for a single pursuer and a single evader is studied in two settings. In the open-loop setting, the corresponding controls are expressed in terms of a zero-input difference. In the closed-loop setting, the corresponding controls require a mixing feedback term when the system is rewritten in extended state form. Finally, we offer a numerical simulation.

Keywords:

dynamic equations on time scales; optimal control; pursuit–evasion games; Riccati equation

MSC:

49N75; 34N05

1. Introduction

The theory of deterministic pursuit–evasion games is attributed to Isaacs, who founded it single-handedly in the 1950s [1]. Isaacs first considered differential games as two-player zero-sum games. One early application was the formulation of missile guidance systems during his time with the RAND Corporation. Shortly thereafter, Kalman, among others, initiated the linear quadratic regulator (LQR) and the linear quadratic tracker (LQT) in the continuous and discrete cases (see Refs. [2,3,4,5]). Since then, pursuit–evasion games and optimal control have been closely related, each playing a fundamental role in control engineering and economics. One landmark paper that combined these concepts was written by Ho, Bryson, and Baron, who studied linear quadratic pursuit–evasion games (LQPEG) as regulator problems [6,7]. In particular, this work included a three-dimensional target interception problem. Since then, a number of papers have extended these results in the continuous and discrete cases. One of the issues that researchers have faced in the past is the discrete nature of these mixed strategies.

In 1988, Stefan Hilger initiated the theory of dynamic equations on time scales, which seeks to unify and extend discrete and continuous analysis [8]. As a result, we can generalize a process to account for both cases, or any combination of the two, provided we restrict ourselves to closed, nonempty subsets of the reals (a time scale). From a numerical viewpoint, this theory can be thought of as a generalized sampling technique that allows a researcher to evaluate processes with continuous, discrete, or uneven measurements. Since its inception, this area of mathematics has gained a great deal of international attention. Researchers have since found applications of time scales in heat transfer, population dynamics, and economics. For a more in-depth study of time scales, see Bohner and Peterson’s books [9,10].

A number of researchers have sought to combine this field with control theory. Several authors have contributed to generalizing the basic notions of controllability and observability (see Refs. [11,12,13,14,15]). Bohner first provided the conditions for optimality for dynamic control processes in Ref. [16]. DaCunha unified Lyapunov stability and Floquet theory in his dissertation [17]. Hilscher and Zeidan have studied optimal control for symplectic systems [18]. Additional contributions can be found in Refs. [19,20,21,22,23,24], among several others.

In this paper, we study a natural extension of the LQR and LQT previously generalized to dynamic equations on time scales (see Refs. [25,26]). Here, we consider the following separable dynamic systems

where represent the pursuer and evader states, respectively, and are the respective controls. In general, we can assume . Note that are the associated state matrices, while are the corresponding control matrices. Here, the pursuing state seeks to overtake the evading state at time , while the evading state seeks to escape. For convenience, we make the following assumptions. First, we assume that the matrices for both players are constant (i.e., the systems are linear time-invariant). However, the control schemes developed throughout can be adapted to the time-varying case in a similar fashion. Second, we assume that the pursuer and evader dynamic systems are both controllable and belong to the same time scale.

Next, we note our state equations are associated with the cost functional

where and diagonal, and , . Note that the goal of the pursuing state is to minimize (2), while the evading state seeks to maximize it. Since these states represent opposing players, evaluating this cost can be thought of as a minimax problem.

The pursuit–evasion framework remains an active area across multiple disciplines, as seen in Refs. [27,28,29,30,31,32,33]. There have also been other efforts to combine dynamic games with time scales calculus. Libich and Stehlík introduced macroeconomic policy games on time scales with inefficient equilibria in Ref. [34]. Martins and Torres considered player games where each player sought to minimize a shared cost functional. Mozhegova and Petrov introduced a simple pursuit problem in Ref. [35] and a dynamic analogue to the “Cossacks-robbers” game in Ref. [36]. Minh and Phuong have previously studied linear pursuit–evasion games on time scales in Ref. [37]. However, these results do not include a regulator/saddle point framework and are less complete than the results presented in this manuscript.

The organization of this paper is as follows. Section 2 presents core definitions and concepts of the time scales calculus. In Section 3, we derive the variational conditions needed for an optimal strategy to exist. In Section 4, we seek a mixed strategy when the final states are both fixed. In this setting, we can rewrite our cost functional (2) in terms of the difference in the Gramians of each system. In Section 5, we find a pair of controls in terms of an extended state. In Section 6, we offer some examples, including a numerical simulation. Finally, we provide some concluding remarks and future plans in Section 7.

In Table 1 below, we summarize the notation used throughout the manuscript.

Table 1.

Summary of notation used.

2. Time Scales Preliminaries

Here we offer a brief introduction to the theory of dynamic equations on time scales. For a more in-depth study of time scales, see Bohner and Peterson’s books [9,10].

Definition 1.

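A time scale $\mathbb{T}$ is an arbitrary nonempty closed subset of the real numbers. We let $\mathbb{T}^\kappa = \mathbb{T} \setminus \{\max \mathbb{T}\}$ if $\max \mathbb{T}$ exists; otherwise, $\mathbb{T}^\kappa = \mathbb{T}$.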

Example 1.

The most common examples of time scales are $\mathbb{R}$, $\mathbb{Z}$, $h\mathbb{Z}$ for $h > 0$, and $q^{\mathbb{N}_0}$ for $q > 1$.

Next, we introduce two time scales calculus concepts used throughout this paper.

Definition 2.

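We define the forward jump operator $\sigma: \mathbb{T} \to \mathbb{T}$ and the graininess function $\mu: \mathbb{T} \to [0, \infty)$ by

$$\sigma(t) := \inf\{s \in \mathbb{T} : s > t\} \quad \text{and} \quad \mu(t) := \sigma(t) - t.$$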
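For a finite (and hence isolated) time scale stored as a sorted array, $\sigma$ and $\mu$ can be read off directly. The following Python sketch is only an illustration: the helper names `forward_jump` and `graininess` and the sample points are hypothetical, and the maximum of $\mathbb{T}$ is handled with the usual convention $\inf\emptyset = \sup\mathbb{T}$, so that $\sigma(\max\mathbb{T}) = \max\mathbb{T}$ and $\mu(\max\mathbb{T}) = 0$.

```python
import numpy as np

def forward_jump(ts):
    """sigma(t) = inf{s in T : s > t} for each point of a finite, sorted time scale.

    Uses the convention inf(empty set) = sup T, so sigma(max T) = max T.
    """
    ts = np.asarray(ts, dtype=float)
    sigma = np.empty_like(ts)
    sigma[:-1] = ts[1:]   # next point to the right
    sigma[-1] = ts[-1]    # the maximum is its own forward jump
    return sigma

def graininess(ts):
    """mu(t) = sigma(t) - t."""
    ts = np.asarray(ts, dtype=float)
    return forward_jump(ts) - ts

# A hypothetical uneven, isolated time scale.
T = np.array([0.0, 0.5, 0.75, 1.5, 2.0])
print(forward_jump(T).tolist())  # [0.5, 0.75, 1.5, 2.0, 2.0]
print(graininess(T).tolist())    # [0.5, 0.25, 0.75, 0.5, 0.0]
```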

Below, we note how the forward jump operator is applied to functions.

Definition 3.

For any function $f: \mathbb{T} \to \mathbb{R}$, we define the function $f^\sigma: \mathbb{T} \to \mathbb{R}$ by $f^\sigma = f \circ \sigma$.

Next, we define the delta (or Hilger) derivative as follows.

Definition 4.

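Assume $f: \mathbb{T} \to \mathbb{R}$ and let $t \in \mathbb{T}^\kappa$. The delta derivative $f^\Delta(t)$ is the number (when it exists) such that, given any $\varepsilon > 0$, there is a neighborhood U of t such that

$$\left| [f(\sigma(t)) - f(s)] - f^\Delta(t)[\sigma(t) - s] \right| \le \varepsilon \left| \sigma(t) - s \right| \quad \text{for all } s \in U.$$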

In the next two theorems, we consider some properties of the delta derivative.

Theorem 1.

(See Ref. [9], Theorem 1.16). Suppose $f: \mathbb{T} \to \mathbb{R}$ is a function and let $t \in \mathbb{T}^\kappa$. Then we have the following:

- a.

- If f is differentiable at t, then f is continuous at t.

- b.

- If f is continuous at t, where t is right-scattered, then f is differentiable at t and $$f^\Delta(t) = \frac{f(\sigma(t)) - f(t)}{\mu(t)}.$$

- c.

- If f is differentiable at t, where t is right-dense, then $$f^\Delta(t) = \lim_{s \to t} \frac{f(t) - f(s)}{t - s}.$$

- d.

- If f is differentiable at t, then $$f(\sigma(t)) = f(t) + \mu(t) f^\Delta(t). \tag{3}$$

Note that (3) is called the “simple useful formula.”

Example 2.

Note the following examples.

- a.

- When $\mathbb{T} = \mathbb{R}$, then (if the limit exists) $$f^\Delta(t) = \lim_{s \to t} \frac{f(t) - f(s)}{t - s} = f'(t).$$

- b.

- When $\mathbb{T} = \mathbb{Z}$, then $$f^\Delta(t) = f(t+1) - f(t) = \Delta f(t).$$

- c.

- When $\mathbb{T} = h\mathbb{Z}$ for $h > 0$, then $$f^\Delta(t) = \frac{f(t+h) - f(t)}{h}.$$

- d.

- When $\mathbb{T} = q^{\mathbb{N}_0}$ for $q > 1$, then $$f^\Delta(t) = \frac{f(qt) - f(t)}{(q - 1)t}.$$
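On a finite isolated time scale every point except $\max\mathbb{T}$ is right-scattered, so Theorem 1(b) gives $f^\Delta(t) = (f(\sigma(t)) - f(t))/\mu(t)$; the $h\mathbb{Z}$ and $q^{\mathbb{N}_0}$ formulas in Example 2 are special cases. The sketch below (hypothetical helper `delta_derivative`, time scales truncated to finitely many points) checks this numerically for $f(t) = t^2$, for which the two formulas reduce to $2t + h$ and $(q + 1)t$.

```python
import numpy as np

def delta_derivative(f, ts):
    """Delta derivative of f at every point of a finite isolated time scale
    except max T, via f^Delta(t) = (f(sigma(t)) - f(t)) / mu(t).
    Valid because every such point is right-scattered."""
    ts = np.asarray(ts, dtype=float)
    t, sigma = ts[:-1], ts[1:]
    return (f(sigma) - f(t)) / (sigma - t)

f = lambda t: t**2

# T = hZ (a finite window): expect (f(t + h) - f(t)) / h = 2t + h.
h = 0.5
T_h = h * np.arange(0, 9)
print(np.allclose(delta_derivative(f, T_h), 2 * T_h[:-1] + h))   # True

# T = q^{N_0} (a finite window): expect (f(qt) - f(t)) / ((q - 1)t) = (q + 1)t.
q = 2.0
T_q = q ** np.arange(0, 6)
print(np.allclose(delta_derivative(f, T_q), (q + 1) * T_q[:-1]))  # True
```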

Next we consider the linearity property as well as the product rules.

Theorem 2.

(See Ref. [9], Theorem 1.20). Let $f, g: \mathbb{T} \to \mathbb{R}$ be differentiable at $t \in \mathbb{T}^\kappa$. Then we have the following:

- a.

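- For any constants α and β, the sum $\alpha f + \beta g: \mathbb{T} \to \mathbb{R}$ is differentiable at t with $$(\alpha f + \beta g)^\Delta(t) = \alpha f^\Delta(t) + \beta g^\Delta(t).$$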

- b.

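- The product $fg: \mathbb{T} \to \mathbb{R}$ is differentiable at t with $$(fg)^\Delta(t) = f^\Delta(t) g(t) + f(\sigma(t)) g^\Delta(t) = f(t) g^\Delta(t) + f^\Delta(t) g(\sigma(t)).$$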
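The product rule in Theorem 2(b) can be sanity-checked on an uneven isolated time scale, where both forms follow from the algebraic identity $f^\sigma g^\sigma - fg = (f^\sigma - f)g + f^\sigma(g^\sigma - g)$. The points and test functions below are arbitrary choices made for illustration.

```python
import numpy as np

# Hypothetical uneven isolated time scale and test functions.
T = np.array([0.0, 0.3, 1.0, 1.2, 2.5])
f = np.sin(T)
g = T**3 + 1.0

mu = np.diff(T)                       # graininess at every point except max T
dlt = lambda x: np.diff(x) / mu       # delta derivative at right-scattered points

lhs = dlt(f * g)                               # (fg)^Delta
rhs1 = dlt(f) * g[:-1] + f[1:] * dlt(g)        # f^Delta g + f^sigma g^Delta
rhs2 = f[:-1] * dlt(g) + dlt(f) * g[1:]        # f g^Delta + f^Delta g^sigma
print(np.allclose(lhs, rhs1), np.allclose(lhs, rhs2))  # True True
```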

Before introducing integration on time scales, we require our functions to be rd-continuous.

Definition 5.

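A function $f: \mathbb{T} \to \mathbb{R}$ is said to be rd-continuous on $\mathbb{T}$ when f is continuous at right-dense points (points $t \in \mathbb{T}$ with $\sigma(t) = t$) and has finite left-sided limits at left-dense points (points $t \in \mathbb{T}$ with $\sup\{s \in \mathbb{T} : s < t\} = t$). The class of rd-continuous functions is denoted by $C_{rd} = C_{rd}(\mathbb{T})$. The set of functions that are differentiable and whose derivative is rd-continuous is denoted by $C^1_{rd}$.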

Next, we define an antiderivative on time scales.

Theorem 3.

(See Ref. [9], Theorem 1.74). Any rd-continuous function $f: \mathbb{T} \to \mathbb{R}$ has an antiderivative F, i.e., $F^\Delta = f$ on $\mathbb{T}^\kappa$.

Now we introduce our fundamental theorem of calculus.

Definition 6.

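Let $f \in C_{rd}$ and let F be any function such that $F^\Delta(t) = f(t)$ for all $t \in \mathbb{T}^\kappa$. Then the Cauchy integral of f is defined by

$$\int_a^b f(t)\,\Delta t = F(b) - F(a) \quad \text{for } a, b \in \mathbb{T}.$$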

Example 3.

Let $a, b \in \mathbb{T}$ with $a < b$ and assume that $f \in C_{rd}$.

- a.

- When $\mathbb{T} = \mathbb{R}$, then $$\int_a^b f(t)\,\Delta t = \int_a^b f(t)\,dt.$$

- b.

- When $\mathbb{T} = \mathbb{Z}$, then $$\int_a^b f(t)\,\Delta t = \sum_{t=a}^{b-1} f(t).$$

- c.

- When $\mathbb{T} = h\mathbb{Z}$ for $h > 0$, then $$\int_a^b f(t)\,\Delta t = h \sum_{k=a/h}^{b/h - 1} f(kh).$$

- d.

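- When $\mathbb{T} = q^{\mathbb{N}_0}$ for $q > 1$, then $$\int_a^b f(t)\,\Delta t = (q - 1) \sum_{t \in [a, b)} t f(t).$$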
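On an isolated time scale the Cauchy integral reduces to a weighted sum, $\int_a^b f(t)\,\Delta t = \sum_{t \in [a, b)} \mu(t) f(t)$, which recovers items (b) to (d) of Example 3 (for $q^{\mathbb{N}_0}$, $\mu(t) = (q - 1)t$). The following sketch assumes a finite sorted time scale with $a, b \in \mathbb{T}$; the helper name `delta_integral` is hypothetical.

```python
import numpy as np

def delta_integral(f, ts, a, b):
    """Delta integral of f from a to b on a finite, sorted isolated time scale ts.

    On isolated points it equals the sum over t in [a, b) of mu(t) * f(t).
    """
    ts = np.asarray(ts, dtype=float)
    mu = np.append(np.diff(ts), 0.0)   # mu(max T) = 0
    mask = (ts >= a) & (ts < b)
    return float(np.sum(mu[mask] * f(ts[mask])))

Z = np.arange(0, 10)
print(delta_integral(lambda t: t, Z, 0, 4))   # 6.0, i.e., 0 + 1 + 2 + 3

h = 0.25
hZ = h * np.arange(0, 41)
print(delta_integral(lambda t: np.ones_like(t), hZ, 1.0, 3.0))   # 2.0
```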

Next, we introduce our regressivity condition, used throughout the manuscript.

Definition 7.

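An $m \times n$ matrix-valued function A on $\mathbb{T}$ is rd-continuous if each of its entries is rd-continuous. Furthermore, if $m = n$, A is said to be regressive (we write $A \in \mathcal{R}$) if

$$I + \mu(t) A(t) \text{ is invertible for all } t \in \mathbb{T}^\kappa.$$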
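For a constant matrix, regressivity only has to be checked for the distinct graininess values that occur on $\mathbb{T}^\kappa$; on $\mathbb{R}$, where $\mu \equiv 0$, every matrix is regressive. This hypothetical helper tests the invertibility of $I + \mu A$ through its smallest singular value; the tolerance is an arbitrary choice.

```python
import numpy as np

def is_regressive(A, mus, tol=1e-12):
    """True if I + mu*A is invertible for every graininess value in `mus`.

    Assumes A is constant; invertibility is judged by the smallest singular value.
    """
    I = np.eye(A.shape[0])
    return all(np.linalg.svd(I + mu * A, compute_uv=False).min() > tol for mu in mus)

A = np.array([[0.0, 1.0], [0.0, -2.0]])
print(is_regressive(A, [0.1, 0.25, 1.0]))   # True
print(is_regressive(A, [0.5]))              # False: I + 0.5*A has a zero row
```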

Note that for our purposes, the matrix exponential used in this paper is defined to be the solution to the dynamic equation below.

Theorem 4.

(See Ref. [9], Theorem 5.8). Suppose that A is regressive and rd-continuous, and let $t_0 \in \mathbb{T}$. Then the initial value problem

$$X^\Delta = A(t) X, \qquad X(t_0) = I,$$

where I is the identity matrix, has a unique matrix-valued solution X.

Definition 8.

The solution X from Theorem 4 is called the matrix exponential function on $\mathbb{T}$ and is denoted by $e_A(\cdot, t_0)$.

Next, we offer useful properties associated with the matrix exponential.

Theorem 5.

(See Ref. [9], Theorem 5.21). Let A be regressive and rd-continuous. Then for ,

- a.

- , hence ,

- b.

- ,

- c.

- ,

- d.

- ,

- e.

- .
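When $\mathbb{T}$ is isolated and A is constant, the initial value problem of Theorem 4 can be solved step by step with the simple useful formula (3), which gives $e_A(\sigma(t), s) = (I + \mu(t)A)\,e_A(t, s)$. Hence $e_A(t, s)$ is the ordered product of the factors $I + \mu(\tau)A$ over $\tau \in [s, t)$. The sketch below (hypothetical `matrix_exp_ts`, forward direction $t \ge s$ only) checks the semigroup property $e_A(t, r)\,e_A(r, s) = e_A(t, s)$ and $e_A(t, t) = I$.

```python
import numpy as np

def matrix_exp_ts(A, ts, i, j):
    """e_A(ts[j], ts[i]) for j >= i on a finite isolated time scale, constant A.

    Steps X^Delta = A X, X(ts[i]) = I forward with X(sigma(t)) = (I + mu(t) A) X(t).
    """
    n = A.shape[0]
    X = np.eye(n)
    for k in range(i, j):
        X = (np.eye(n) + (ts[k + 1] - ts[k]) * A) @ X
    return X

A = np.array([[0.0, 1.0], [-1.0, -0.5]])
T = np.array([0.0, 0.2, 0.5, 0.6, 1.1, 1.5])   # hypothetical uneven points

E_40 = matrix_exp_ts(A, T, 0, 4)
E_42 = matrix_exp_ts(A, T, 2, 4)
E_20 = matrix_exp_ts(A, T, 0, 2)
print(np.allclose(E_42 @ E_20, E_40))                     # True: semigroup property
print(np.allclose(matrix_exp_ts(A, T, 3, 3), np.eye(2)))  # True: e_A(t, t) = I
```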

Next we give the solution (state response) to our linear system using a variation of parameters.

Theorem 6.

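(See Ref. [9], Theorem 5.24). Let $A \in \mathcal{R}$ be an $n \times n$ matrix-valued function on $\mathbb{T}$ and suppose that $f: \mathbb{T} \to \mathbb{R}^n$ is rd-continuous. Let $t_0 \in \mathbb{T}$ and $x_0 \in \mathbb{R}^n$. Then the solution of the initial value problem

$$x^\Delta = A(t) x + f(t), \qquad x(t_0) = x_0,$$

is given by

$$x(t) = e_A(t, t_0) x_0 + \int_{t_0}^{t} e_A(t, \sigma(\tau)) f(\tau)\,\Delta\tau.$$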
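On an isolated time scale, the variation of parameters formula in Theorem 6 can be compared directly with stepping the equation forward via (3), $x(\sigma(t)) = x(t) + \mu(t)[A x(t) + f(t)]$. The following sketch makes that comparison for an arbitrary constant A, forcing term, and uneven set of points, all chosen only for illustration.

```python
import numpy as np

def e_A(A, ts, i, j):
    """e_A(ts[j], ts[i]) for j >= i, constant A, isolated time scale."""
    X = np.eye(A.shape[0])
    for k in range(i, j):
        X = (np.eye(A.shape[0]) + (ts[k + 1] - ts[k]) * A) @ X
    return X

A = np.array([[0.0, 1.0], [-2.0, -0.3]])
T = np.array([0.0, 0.1, 0.35, 0.4, 0.9, 1.0])   # hypothetical uneven points
x0 = np.array([1.0, 0.0])
forcing = lambda t: np.array([0.0, np.cos(t)])

# Direct recursion: x(sigma(t)) = x(t) + mu(t) (A x(t) + f(t)).
x = x0.copy()
for k in range(len(T) - 1):
    x = x + (T[k + 1] - T[k]) * (A @ x + forcing(T[k]))

# Variation of parameters: the delta integral is a sum of mu(tau) * integrand.
N = len(T) - 1
x_vop = e_A(A, T, 0, N) @ x0
for k in range(N):
    x_vop = x_vop + e_A(A, T, k + 1, N) @ forcing(T[k]) * (T[k + 1] - T[k])

print(np.allclose(x, x_vop))   # True
```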

3. Optimization of Linear Systems on Time Scales

In this section, we make use of variational methods on time scales as introduced by Bohner in Ref. [16]. First, note that the state equations in (1) are uncoupled. However, when establishing our conditions for a saddle point, we would prefer to use a state that combines the information of the pursuer and evader. For convenience, we rewrite (1) as

where z represents an extended state given by , , , and . Associated with (4) is the quadratic cost functional

where , , and , . To minimize (5), we introduce the augmented cost functional

where the so-called Hamiltonian H is given by

and represents a multiplier to be determined later.

Remark 1.

Our treatment of (1) differs from the argument used by Ho, Bryson, and Baron in Ref. [6]. In their paper, they appealed to state estimates of the pursuer and evader to evaluate the cost functional. Their argument was motivated by their study of pursuing and evading missiles, for which they considered the difference in altitude to be negligible. Because we rewrite (1) in extended form, we do not require such a restriction.
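Because the extended state stacks the two players, its matrices are block structured. The sketch below assumes the ordering $z = (x_p, x_e)$ with a block-diagonal state matrix and zero-padded control matrices; the names `extend`, `F`, `Gp`, `Ge` and the double-integrator data are hypothetical stand-ins for the paper's notation. It checks that the extended dynamics reproduce the two separate systems and shows one possible weight, $C^\top C$ with $C = [I, -I]$, that penalizes the separation $x_p - x_e$.

```python
import numpy as np

def extend(Ap, Bp, Ae, Be):
    """Stack pursuer and evader into z = [x_p; x_e] (assumed ordering).

    Returns F, Gp, Ge with z^Delta = F z + Gp u + Ge v.
    """
    n_p, n_e = Ap.shape[0], Ae.shape[0]
    F = np.block([[Ap, np.zeros((n_p, n_e))],
                  [np.zeros((n_e, n_p)), Ae]])
    Gp = np.vstack([Bp, np.zeros((n_e, Bp.shape[1]))])
    Ge = np.vstack([np.zeros((n_p, Be.shape[1])), Be])
    return F, Gp, Ge

# Hypothetical double-integrator players (position, velocity).
Ap = Ae = np.array([[0.0, 1.0], [0.0, 0.0]])
Bp = np.array([[0.0], [1.0]])
Be = np.array([[0.0], [0.5]])
F, Gp, Ge = extend(Ap, Bp, Ae, Be)

xp, xe = np.array([0.0, 1.0]), np.array([2.0, 0.0])
u, v = np.array([0.3]), np.array([-0.1])
z = np.concatenate([xp, xe])
zdelta = F @ z + Gp @ u + Ge @ v
print(np.allclose(zdelta, np.concatenate([Ap @ xp + Bp @ u, Ae @ xe + Be @ v])))  # True

# Hypothetical weight on the separation x_p - x_e.
C = np.hstack([np.eye(2), -np.eye(2)])
Sf = C.T @ C
print(z @ Sf @ z)   # 5.0, i.e., |x_p - x_e|^2 = 4 + 1
```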

Next, we provide necessary conditions for an optimal control. We assume that

for all such that .

In the following result, we determine the equations our extended state, costate, and controls must satisfy.

Lemma 1.

Proof.

First note that

Then

Then after rearranging terms, the first variation can be written as

Now in order for , we set each coefficient of independent increments , , , equal to zero. This yields the necessary conditions for a minimum of (5). Using the Hamiltonian (6), we have state and costate equations

and

Similarly, we have the stationary conditions

and

This concludes the proof. □

The following remark is useful in eliminating the costate later.

Remark 2.

Throughout this paper, we assume that is regressive. As a result, we can determine an optimal strategy if we know the value of the costate.

Finally, we provide sufficient conditions for locally optimal controls that ensure a saddle point.

Lemma 2.

Proof.

Taking the second derivative of , we have

If we assume that , , and satisfy the constraint

then the second variation is given by

Note that and while and . Thus, if and is fixed, then (11) is guaranteed to be positive. □

Next, we provide a definition of a saddle point for two competing players.

Here, the stationary conditions needed to ensure a saddle point are and (see Ref. [38]). For our purposes, this pair corresponds to a compromise from which neither player can deviate without being penalized by the other player. This compromise relies on the natural caveat that the pursuer and evader belong to the same time scale. In this paper, we do not claim that this saddle point is unique.

4. Fixed Final States Case

In this section, we seek an optimal strategy when the final states are fixed. In this setting, we write the equations for the pursuer and evader separately. Here we consider the state and costate equations for the pursuer

as well as those for the evader

associated with the cost functional

The following term is needed to establish an optimal control scheme when the final states are fixed.

Definition 10.

The initial state difference, , is the difference between the zero-input pursuing and evading states, i.e.,

Next, we determine an open-loop strategy for both players. Note that the following theorem mirrors Kalman’s generalized controllability criterion as found in Ref. [15], Theorem 3.2.

Theorem 7.

Proof.

Solving (11) for , we have

Now solving (19) with Theorem 6 at time , we have

Similarly, the final state for the evader can be written as

Taking the difference in the final states and rearranging, we have

The equation for v can be shown similarly. This concludes the proof. □

Next, we determine the optimal cost.

Theorem 8.

Proof.

Remark 3.

Suppose that the pursuer wants to use a strategy u that intercepts the evader (using strategy v) with minimal energy. Note that if and only if . From the classical definition of controllability, this implies that the pursuer captures the evader when the pursuer is “more controllable” than the evader. A sufficient condition for the pursuing state to intercept the evader is given by . Hence, this relationship is preserved when pursuit–evasion games are unified on time scales.
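The following sketch illustrates Remark 3 on an isolated time scale under explicit assumptions. The Gramians are taken as $\mathcal{G}_i = \int_{t_0}^{t_f} e_{A_i}(t_f, \sigma(\tau)) B_i R_i^{-1} B_i^\top e_{A_i}^\top(t_f, \sigma(\tau))\,\Delta\tau$, the zero-input difference d is evaluated at $t_f$, and both open-loop controls are assumed to have the classical Ho, Bryson, and Baron form $-R_i^{-1} B_i^\top e_{A_i}^\top(t_f, \sigma(t))(\mathcal{G}_p - \mathcal{G}_e)^{-1} d$. These formulas, the helper names `e_A` and `gramian`, and the data are assumptions of this sketch, not a transcription of Theorem 7. With identical players and a costlier evader control, $\mathcal{G}_p - \mathcal{G}_e$ is positive definite and the simulated states meet at $t_f$.

```python
import numpy as np

def e_A(A, ts, i, j):
    """e_A(ts[j], ts[i]) for j >= i: ordered product of (I + mu A) factors."""
    X = np.eye(A.shape[0])
    for k in range(i, j):
        X = (np.eye(A.shape[0]) + (ts[k + 1] - ts[k]) * A) @ X
    return X

def gramian(A, B, R, ts):
    """Assumed Gramian: sum over tau in [t0, tf) of
    mu(tau) e_A(tf, sigma(tau)) B R^{-1} B' e_A(tf, sigma(tau))'."""
    N = len(ts) - 1
    BRB = B @ np.linalg.solve(R, B.T)
    G = np.zeros_like(A)
    for k in range(N):
        E = e_A(A, ts, k + 1, N)
        G += (ts[k + 1] - ts[k]) * E @ BRB @ E.T
    return G

# Hypothetical data: identical double integrators, costlier evader control.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
Rp, Re = np.array([[1.0]]), np.array([[4.0]])
T = np.array([0.0, 0.4, 0.5, 1.1, 1.6, 2.0])
N = len(T) - 1
xp0, xe0 = np.array([0.0, 0.0]), np.array([1.0, 0.5])

Gp, Ge = gramian(A, B, Rp, T), gramian(A, B, Re, T)
print(np.all(np.linalg.eigvalsh(Gp - Ge) > 0))   # True: pursuer "more controllable"

d = e_A(A, T, 0, N) @ (xp0 - xe0)                # zero-input difference at tf
nu = np.linalg.solve(Gp - Ge, d)

xp, xe = xp0.copy(), xe0.copy()
for k in range(N):
    mu = T[k + 1] - T[k]
    lam = e_A(A, T, k + 1, N).T @ nu             # e_A(tf, sigma(t_k))' nu
    u = -np.linalg.solve(Rp, B.T @ lam)
    v = -np.linalg.solve(Re, B.T @ lam)
    xp = xp + mu * (A @ xp + B @ u)
    xe = xe + mu * (A @ xe + B @ v)
print(np.allclose(xp, xe))                       # True: interception at tf
```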

5. Free Final States Case

In this section, we develop an optimal control law in the form of state feedback. In considering the boundary conditions, note that is known (meaning ), while is free (meaning ). Thus, the coefficient on must be zero. This gives the terminal condition on the costate to be

Remark 4.

Now in order to solve this two-point boundary value problem, we make the assumption that z and λ satisfy

for all. This condition (23) is called a “sweep condition,” a term used by Bryson and Ho in Ref. [7]. Since the terminal condition , it is natural to assume that as well.

Next, we offer a form of our Riccati equation that S must satisfy. Here, the Riccati equation is used to update the pursuer’s and evader’s controls when expressed in feedback form.

Theorem 9.

Assume that S solves

Proof.

Next, we offer an alternative form of our Riccati equation.

Lemma 3.

Next, we define our Kalman gains as follows.

Definition 11.

Let be regressive. Then the matrix-valued functions

and

are called the pursuer feedback gain and evader feedback gain, respectively.
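For an isolated time scale, one way to compute S and the two gains numerically is a backward dynamic-programming sweep. Writing $\Phi = I + \mu F$, $G = [G_p \; G_e]$, and $\bar R = \operatorname{diag}(R_p, -R_e)$, the sketch below uses

$$S = \mu Q + \Phi^\top S^\sigma \Phi - \mu\,\Phi^\top S^\sigma G (\bar R + \mu G^\top S^\sigma G)^{-1} G^\top S^\sigma \Phi, \qquad \begin{bmatrix} u \\ v \end{bmatrix} = -(\bar R + \mu G^\top S^\sigma G)^{-1} G^\top S^\sigma \Phi\, z.$$

This is a standard discrete zero-sum recursion written here for illustration; it is not guaranteed to match the paper's Riccati equation or gains term by term, and the names `game_riccati`, `F`, `Gp`, `Ge` are hypothetical. It assumes $R_p + \mu G_p^\top S^\sigma G_p > 0$ and $R_e - \mu G_e^\top S^\sigma G_e > 0$ at every step.

```python
import numpy as np

def game_riccati(F, Gp, Ge, Q, Rp, Re, Sf, ts):
    """Backward sweep for a zero-sum LQ game on a finite isolated time scale.

    Dynamics: z(sigma(t)) = (I + mu F) z + mu (Gp u + Ge v).
    Stage cost: (mu/2)(z'Qz + u'Rp u - v'Re v); terminal cost: (1/2) z'Sf z.
    Returns S(t_k) at every point and gains with u = -Kp z, v = -Ke z.
    """
    n, mp, me = F.shape[0], Gp.shape[1], Ge.shape[1]
    G = np.hstack([Gp, Ge])
    Rbar = np.block([[Rp, np.zeros((mp, me))],
                     [np.zeros((me, mp)), -Re]])
    N = len(ts) - 1
    S = [None] * (N + 1)
    Kp, Ke = [None] * N, [None] * N
    S[N] = Sf
    for k in range(N - 1, -1, -1):
        mu = ts[k + 1] - ts[k]
        Phi = np.eye(n) + mu * F
        M = Rbar + mu * G.T @ S[k + 1] @ G              # assumed invertible
        K = np.linalg.solve(M, G.T @ S[k + 1] @ Phi)    # stacked [Kp; Ke]
        Kp[k], Ke[k] = K[:mp], K[mp:]
        Sk = mu * Q + Phi.T @ S[k + 1] @ Phi - mu * Phi.T @ S[k + 1] @ G @ K
        S[k] = 0.5 * (Sk + Sk.T)                        # remove round-off asymmetry
    return S, Kp, Ke

# Hypothetical single-integrator players with z = [x_p; x_e].
F = np.zeros((2, 2))
Gp, Ge = np.array([[1.0], [0.0]]), np.array([[0.0], [1.0]])
C = np.array([[1.0, -1.0]])                 # separation x_p - x_e
Q, Sf = np.zeros((2, 2)), C.T @ C
Rp, Re = np.array([[1.0]]), np.array([[4.0]])
T = np.array([0.0, 0.3, 0.5, 1.0, 1.4, 2.0])
S, Kp, Ke = game_riccati(F, Gp, Ge, Q, Rp, Re, Sf, T)
print(Kp[0].shape, Ke[0].shape)                  # (1, 2) (1, 2)
print(np.allclose(Kp[0] @ np.ones(2), 0.0))      # True: gains see only x_p - x_e
```

Because the weights depend only on $x_p - x_e$ in this toy setup, shifting both players by the same amount leaves the feedback unchanged, which is what the last line checks.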

Now we introduce our combined control scheme in extended state feedback form.

Proof.

Now combining like terms yields

Multiplying both sides by the inverse of and rearranging terms, we have

Next we rewrite our extended state equation under the influence of the pursuit–evasion control laws. This yields the closed-loop plant given by

which can be used to find an optimal trajectory for any given .

The following result is useful in establishing another form of the Riccati equation.

Lemma 4.

If is regressive and S is symmetric, then

Proof.

Thus, Equation (32) holds. □

Next, we rewrite the Riccati Equation (27) in the so-called (generalized) Joseph stabilized form (see Ref. [38]).

Theorem 11.

If is regressive and S is symmetric, then S solves the Riccati Equation (27) if and only if it solves

Proof.

The statement follows directly from Lemma 4. □

Note that each equation in this section can be computed and stored “offline,” meaning that its structure does not change when simulating results. Each variation of our Riccati equation also accounts for the gaps in time between decisions made by the pursuer and evader. Finally, we rewrite the optimal cost.

Theorem 12.

Proof.

From Theorem 12, if the current state and S are known, we can determine the optimal cost before we apply the optimal control or even calculate it. Table 2 below summarizes our results.

Table 2.

The LQPEG on .
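The observation after Theorem 12, that the optimal cost is available from the current state and S, can be checked numerically. The sketch below repeats a backward sweep in compact form so the block is self-contained, plays the feedback laws on a hypothetical uneven time scale, and compares the accumulated cost with $\tfrac{1}{2} z_0^\top S(t_0) z_0$; this quadratic form is an assumption about the statement of Theorem 12. It also perturbs each player's control in turn. When $R_p + \mu G_p^\top S^\sigma G_p > 0$ and $R_e - \mu G_e^\top S^\sigma G_e > 0$ (which hold for this data), a pursuer deviation should not lower the cost and an evader deviation should not raise it.

```python
import numpy as np

# Hypothetical setup: single-integrator players, z = [x_p; x_e].
F = np.zeros((2, 2))
Gp, Ge = np.array([[1.0], [0.0]]), np.array([[0.0], [1.0]])
C = np.array([[1.0, -1.0]])
Q, Sf = 0.5 * C.T @ C, C.T @ C
Rp, Re = np.array([[1.0]]), np.array([[4.0]])
T = np.array([0.0, 0.3, 0.5, 1.0, 1.4, 2.0])
N = len(T) - 1
G = np.hstack([Gp, Ge])
Rbar = np.diag([Rp[0, 0], -Re[0, 0]])

# Backward Riccati sweep; K[k] stacks the pursuer and evader gains.
S, K = [None] * (N + 1), [None] * N
S[N] = Sf
for k in range(N - 1, -1, -1):
    mu = T[k + 1] - T[k]
    Phi = np.eye(2) + mu * F
    K[k] = np.linalg.solve(Rbar + mu * G.T @ S[k + 1] @ G, G.T @ S[k + 1] @ Phi)
    S[k] = mu * Q + Phi.T @ S[k + 1] @ Phi - mu * Phi.T @ S[k + 1] @ G @ K[k]

def cost(z0, du=0.0, dv=0.0):
    """Play the feedback laws, optionally adding a constant deviation to u or v."""
    z, J = z0.copy(), 0.0
    for k in range(N):
        mu = T[k + 1] - T[k]
        w = -K[k] @ z + np.array([du, dv])
        u, v = w[:1], w[1:]
        J += 0.5 * mu * (z @ Q @ z + u @ Rp @ u - v @ Re @ v)
        z = z + mu * (F @ z + Gp @ u + Ge @ v)
    return J + 0.5 * z @ Sf @ z

z0 = np.array([0.0, 1.0])
J_star = cost(z0)
print(np.isclose(J_star, 0.5 * z0 @ S[0] @ z0))                 # True
print(cost(z0, du=0.2) >= J_star, cost(z0, dv=0.2) <= J_star)   # True True
```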

6. Examples

In this section, we offer examples in the free-final state case for various time scales. For the first three examples, we make use of Examples 2 and 3.

Example 4.

(The Continuous LQPEG). Let and consider

associated with the cost functional

Example 5.

(The h-difference LQPEG). Let and consider the extended state system

Note that, by using

we can rewrite the system as

Next, the associated cost functional takes the form

Then the state, costate, and stationary Equations (9) are given by

Example 6.

(The q-difference LQPEG). Let with and consider the extended state system

Now using

we can rewrite the system as

Then the associated cost functional is given by

Example 7.

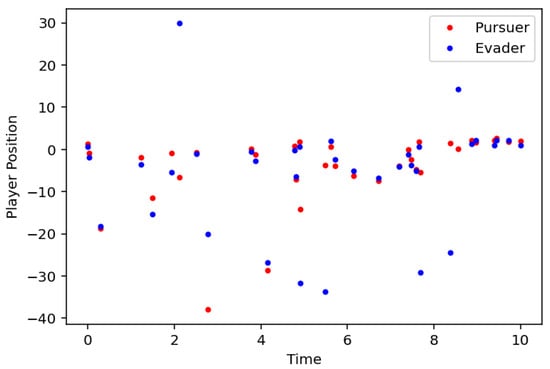

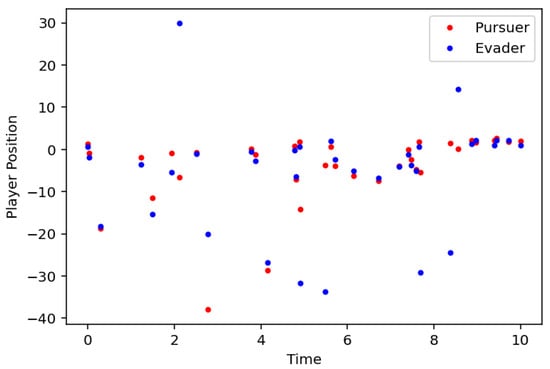

In this last example, we provide a numerical simulation of the LQPEG. Here, we sample a two-dimensional pursuer and evader on the same discrete but uneven time scale

Next, we consider the theoretical linear dynamic system

Note that the first component of each player represents its position, while the second corresponds to its velocity. For simplicity, only the position is observed. Here, we set the weights in (5) to be , , and . The LQPEG is then implemented in Python 3.12.11 using the formulations found in Table 2. As is typical, the Riccati equation is calculated component-wise to avoid lags when handling inverses. Compared with the classic discrete LQPEG, the feedback equations here account for the varying step sizes as the algorithm runs. This more accurately reflects the dynamics between the pursuer and evader when measurements are unevenly spaced. Further, this approach does not require “re-indexing” the time steps or implementing additional interpolations, as the classic discrete LQPEG design would.
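The remark that the feedback equations account for varying step sizes can be seen in the simplest case. For a position–velocity model with $A = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}$ (an assumed form, since the system matrices of this example are not reproduced here), each step uses its own graininess through $I + \mu(t)A$, so no re-indexing or interpolation is needed. The sample times and the helper `step` below are hypothetical.

```python
import numpy as np

A = np.array([[0.0, 1.0], [0.0, 0.0]])   # assumed position/velocity model
B = np.array([[0.0], [1.0]])

def step(x, u, mu):
    """One step of x^Delta = A x + B u on an isolated time scale:
    x(sigma(t)) = (I + mu(t) A) x(t) + mu(t) B u(t)."""
    return (np.eye(2) + mu * A) @ x + mu * (B @ u)

# Hypothetical uneven sample times; each step uses its own graininess.
T = np.array([0.0, 0.1, 0.4, 0.5, 1.0])
x = np.array([0.0, 1.0])                 # position 0, unit velocity
for t, t_next in zip(T[:-1], T[1:]):
    x = step(x, np.array([0.0]), t_next - t)
print(np.allclose(x, [1.0, 1.0]))        # True: position advanced by total elapsed time
```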

The plots for the pursuer’s and evader’s positions are given in Figure 1 below. The points of intersection can be thought of as a saddle point, where the pursuer and evader come to an arrangement. Note that saddle points are inherently unstable: any deviation by either player voids this arrangement.

Figure 1.

Two-dimensional LQPEG on an isolated, uneven .

7. Concluding Remarks and Future Work

In this project, we have established the LQPEG where the pursuer and evader belong to the same arbitrary time scale $\mathbb{T}$. One potential application of this work is when the pursuer represents a drone and the evader represents a missile guidance system whose signals are unevenly sampled. Here, the cost in part represents the wear and tear on the drone. A saddle point in this setting would represent a “live and let live” arrangement, where the drone is allowed to spy briefly on the missile guidance system and return home, but is not given the opportunity to preserve enough of its battery to outstay its welcome. Similarly, in finance, the pursuer and evader can represent competing companies, where a saddle point would correspond to an effort to coexist in which a hostile takeover or unnecessary expenditure of resources is avoided. We have sidestepped the setting where the pursuer and evader each belong to their own time scale and , respectively. However, these time scales can be merged using a sample-and-hold method as found in Refs. [39,40].

One potential extension of this work is the introduction of additional pursuers. In this setting, the cost must be adjusted to account for the closest pursuer, which can vary over the time scale. A second potential extension is to consider the setting in which one player is subject to a delay. Here, both players can still belong to the same time scale; however, this allows one player to act after the other, perhaps with some knowledge of the opposing player’s strategy. Finally, a third possible extension is to study such games in a stochastic setting. Here, we can discretize each player’s stochastic linear time-invariant system to a dynamic system on an isolated time scale, as found in Refs. [39,41]. However, the usual separability property is not preserved in this setting.

Author Contributions

D.F. and R.W. contributed to the analysis and writing/editing of the manuscript as well as the numerical example. N.W. contributed to the project conceptualization/analysis, writing/editing, and the funding of the project. All authors have read and agreed to the published version of the manuscript.

Funding

This project was partially supported by the National Science Foundation, grant DMS-2150226, the NASA West Virginia Space Grant Consortium, training grant #80NSSC20M0055, and the NASA Missouri Space Grant Consortium, grant #80NSSC20M0100.

Data Availability Statement

No new data were created or analyzed in this study.

Acknowledgments

The authors would like to thank Matthias Baur and Tom Cuchta for the use of their time scales Python package in producing the last example. The authors also thank the reviewers for their efforts in improving the quality of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| LQR | linear quadratic regulator |
| LQT | linear quadratic tracker |
| LQPEG | linear quadratic pursuit–evasion games |

References

- Isaacs, R. Differential Games: A Mathematical Theory with Applications to Warfare and Pursuit, Control and Optimization; John Wiley & Sons Inc.: New York, NY, USA, 1965; pp. xvii+384.

- Kalman, R.E. Contributions to the theory of optimal control. Bol. Soc. Mat. Mex. 1960, 5, 102–119.

- Kalman, R.E. When is a linear control system optimal? Trans. ASME Ser. D. J. Basic Eng. 1964, 86, 81–90.

- Kalman, R.E.; Koepcke, R.W. Optimal synthesis of linear sampling control systems using generalized performance indexes. Trans. ASME Ser. D. J. Basic Eng. 1958, 80, 1820–1826.

- Kalman, R.E. The theory of optimal control and the calculus of variations. In Mathematical Optimization Techniques; University of California Press: Berkeley, CA, USA, 1963; pp. 309–331.

- Ho, Y.C.; Bryson, A.E., Jr.; Baron, S. Differential games and optimal pursuit-evasion strategies. IEEE Trans. Autom. Control 1965, 10, 385–389.

- Bryson, A.E., Jr.; Ho, Y.C. Applied Optimal Control; Optimization, estimation, and control, Revised printing; Hemisphere Publishing Corp.: Washington, DC, USA, 1975; pp. xiv+481.

- Hilger, S. Ein Maßkettenkalkül mit Anwendung auf Zentrumsmannigfaltigkeiten. Ph.D. Thesis, Universität Würzburg, Würzburg, Germany, 1988.

- Bohner, M.; Peterson, A. Dynamic Equations on Time Scales; Birkhäuser Boston Inc.: Boston, MA, USA, 2001; pp. x+358.

- Bohner, M.; Peterson, A. (Eds.) Advances in Dynamic Equations on Time Scales; Birkhäuser Boston Inc.: Boston, MA, USA, 2003; pp. xii+348.

- Bartosiewicz, Z.; Pawłuszewicz, E. Linear control systems on time scales: Unification of continuous and discrete. In Proceedings of the 10th IEEE International Conference on Methods and Models in Automation and Robotics MMAR’04, Miedzyzdroje, Poland, 30 August–2 September 2004; pp. 263–266.

- Bartosiewicz, Z.; Pawłuszewicz, E. Realizations of linear control systems on time scales. Control Cybernet. 2006, 35, 769–786.

- Davis, J.; Gravagne, I.; Jackson, B.; Marks, R., II. Controllability, observability, realizability, and stability of dynamic linear systems. Electron. J. Diff. Equ. 2009, 2009, 1–32.

- Fausett, L.V.; Murty, K.N. Controllability, observability and realizability criteria on time scale dynamical systems. Nonlinear Stud. 2004, 11, 627–638.

- Bohner, M.; Wintz, N. Controllability and observability of time-invariant linear dynamic systems. Math. Bohem. 2012, 137, 149–163.

- Bohner, M. Calculus of variations on time scales. Dynam. Systems Appl. 2004, 13, 339–349.

- DaCunha, J.J. Lyapunov Stability and Floquet Theory for Nonautonomous Linear Dynamic Systems on Time Scales. Ph.D. Thesis, Baylor University, Waco, TX, USA, 2004.

- Hilscher, R.; Zeidan, V. Weak maximum principle and accessory problem for control problems on time scales. Nonlinear Anal. 2009, 70, 3209–3226.

- Bettiol, P.; Bourdin, L. Pontryagin maximum principle for state constrained optimal sampled-data control problems on time scales. ESAIM Control. Optim. Calc. Var. 2021, 27, 51.

- Zhu, Y.; Jia, G. Linear Feedback of Mean-Field Stochastic Linear Quadratic Optimal Control Problems on Time Scales. Math. Probl. Eng. 2020, 2020, 8051918.

- Ren, Q.Y.; Sun, J.P. Optimality necessary conditions for an optimal control problem on time scales. AIMS Math. 2021, 6, 5639–5646.

- Zhu, Y.; Jia, G. Stochastic Linear Quadratic Control Problem on Time Scales. Discret. Dyn. Nat. Soc. 2021, 2021, 5743014.

- Poulsen, D.; Defoort, M.; Djemai, M. Mean Square Consensus of Double-Integrator Multi-Agent Systems under Intermittent Control: A Stochastic Time Scale Approach. J. Frankl. Inst. 2019, 356, 9076–9094.

- Duque, C.; Leiva, H. Controllability of a semilinear neutral dynamic equation on time scales with impulses and nonlocal conditions. TWMS J. Appl. Eng. Math. 2023, 13, 975–989.

- Bohner, M.; Wintz, N. The linear quadratic regulator on time scales. Int. J. Difference Equ. 2010, 5, 149–174.

- Bohner, M.; Wintz, N. The linear quadratic tracker on time scales. Int. J. Dyn. Syst. Differ. Equ. 2011, 3, 423–447.

- Mu, Z.; Jie, T.; Zhou, Z.; Yu, J.; Cao, L. A survey of the pursuit–evasion problem in swarm intelligence. Front. Inf. Technol. Electron. Eng. 2023, 24, 1093–1116.

- Sun, Z.; Sun, H.; Li, P.; Zou, J. Cooperative strategy for pursuit-evasion problem in the presence of static and dynamic obstacles. Ocean. Eng. 2023, 279, 114476.

- Chen, N.; Li, L.; Mao, W. Equilibrium Strategy of the Pursuit-Evasion Game in Three-Dimensional Space. IEEE/CAA J. Autom. Sin. 2024, 11, 446–458.

- Venigalla, C.; Scheeres, D.J. Delta-V-Based Analysis of Spacecraft Pursuit–Evasion Games. J. Guid. Control. Dyn. 2021, 44, 1961–1971.

- Feng, Y.; Dai, L.; Gao, J.; Cheng, G. Uncertain pursuit-evasion game. Soft Comput. 2020, 24, 2425–2429.

- Bertram, J.; Wei, P. An Efficient Algorithm for Multiple-Pursuer-Multiple-Evader Pursuit/Evasion Game. In Proceedings of the AIAA Scitech 2021 Forum, Virtual, 11–15 & 19–21 January 2021.

- Ye, D.; Shi, M.; Sun, Z. Satellite proximate pursuit-evasion game with different thrust configurations. Aerosp. Sci. Technol. 2020, 99, 105715.

- Libich, J.; Stehlík, P. Macroeconomic games on time scales. Dyn. Syst. Appl. 2008, 5, 274–278.

- Petrov, N.; Mozhegova, E. On a simple pursuit problem on time scales of two coordinated evaders. Chelyabinsk J. Phys. Math. 2022, 7, 277–286.

- Mozhegova, E.; Petrov, N. The differential game “Cossacks–robbers” on time scales. Izv. Instituta Mat. Inform. Udmurt. Gos. Univ. 2023, 62, 56–70.

- Minh, V.D.; Phuong, B.L. Linear pursuit games on time scales with delay in information and geometrical constraints. TNU J. Sci. Technol. 2019, 200, 11–17.

- Lewis, F.L.; Vrabie, D.L.; Syrmos, V.L. Optimal Control; John Wiley & Sons, Inc.: New York, NY, USA, 2012.

- Poulsen, D.; Davis, J.; Gravagne, I. Optimal Control on Stochastic Time Scales. IFAC-PapersOnLine 2017, 50, 14861–14866.

- Siegmund, S.; Stehlík, P. Time scale-induced asynchronous discrete dynamical systems. Discret. Contin. Dyn. Syst. B 2017, 22, 1011–1029.

- Poulsen, D.; Wintz, N. The Kalman filter on stochastic time scales. Nonlinear Anal. Hybrid Syst. 2019, 33, 151–161.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).