1. Introduction

Strip steel occupies a pivotal position in industrial production and is widely used across global manufacturing sectors, including construction, automotive, machinery, and shipbuilding. However, various surface defects inevitably occur during the manufacturing and processing stages [1]. These defects not only affect the appearance of the product but also weaken its material properties, potentially leading to fatigue fractures and accelerated corrosion [2]. Therefore, accurate detection of strip steel surface defects is essential to ensuring product quality, improving production efficiency, and implementing effective production management. Initially, surface defect detection relied on manual visual inspection, which was heavily influenced by subjective factors and exhibited low efficiency [3].

Early defect detection methods primarily relied on traditional image processing and machine learning techniques. A significant branch of this research treats defect detection as a texture analysis problem. Methods such as those utilizing Gabor filters [4] or wavelet transforms [5] focus on extracting handcrafted features that describe the defect’s texture. After feature extraction, supervised algorithms like Support Vector Machines (SVM) [6] are commonly employed for classification. This approach, consisting of texture analysis and a supervised classifier, offers the advantage of lower computational cost and can be effective for specific, well-defined defect types. However, the performance of such methods heavily depends on predefined feature extraction rules [7], which limits their robustness and adaptability in handling complex textures or variable lighting conditions.

In another approach, researchers combined image processing with fuzzy logic [8]. These techniques typically first use image processing to extract a set of quantitative features from a potential defect, such as area or contrast. These quantitative features are then subjected to fuzzification, where continuous input variables are mapped into fuzzy sets. Finally, classification is performed using a fuzzy inference system, which applies fuzzy logic rules (often derived from expert knowledge) to handle the uncertainty and ambiguity in defect classification. While this approach can effectively model the ambiguity in defect classification, its success is critically dependent on the manual definition of fuzzy sets and rules. This dependence makes it challenging to adapt to new defect types or changes in production conditions.

In recent years, deep learning has demonstrated significant advantages in tasks such as industrial image analysis, defect feature extraction, and recognition. Single-stage detection frameworks, represented by You Only Look Once (YOLO) series models [9], exhibit remarkable speed advantages. Two-stage detection architectures, such as Faster Region-based Convolutional Neural Networks (Faster R-CNN) [10], achieve higher detection accuracy in complex scenarios through Region Proposal Networks (RPN). Transformer-based detection models [11] leverage global modeling capabilities to capture long-range dependencies, demonstrating stronger robustness and multi-scale adaptability in complex scenarios.

However, strip steel surface defect detection presents unique challenges to deep learning methods. First, defect images are characterized by sparse defect distributions with high background proportions. As shown in Figure 1, background regions typically occupy the majority of strip steel images. Analysis of the NEU-DET dataset reveals that, in approximately 60% of images, defect regions account for no more than 40% of the total pixels. This high background proportion poses a major challenge: detection algorithms must accurately extract sparse target features amidst abundant background information while avoiding interference from background noise. Second, defect features are diverse and complex. Strip steel surface defects not only vary significantly in shape and size but also exhibit randomness in spatial distribution and frequency, often appearing as irregular patterns in local regions. This imposes higher requirements for precise localization and feature extraction capabilities.

To address these challenges, researchers have proposed various deep learning solutions, which can be broadly categorized by their primary strategies. One category focuses on enhancing feature representation and fusion to distinguish defects from the background. For example, EFD-YOLOv4 [12] and CABF-FCOS [13] utilize attention mechanisms and bidirectional feature fusion to suppress background noise. A second category aims to improve model accuracy by refining geometric properties or data characteristics, such as STD2 [14], which uses Deformable Pooling to adapt to defect shape variations, and FDFNet [15], which enhances defect-background contrast. A third category prioritizes efficiency through lightweight network design, as seen in LAACNet [16].

However, a fundamental limitation underlies all these approaches: whether they enhance features, refine geometry, or lighten the network, they still perform convolution operations indiscriminately across the entire image. This means substantial computational resources are wasted on processing vast, featureless background regions, which not only leads to inefficiency but also risks introducing redundant background noise that can interfere with the learning of sparse defect features.

In contrast to these strategies, this study approaches the problem of strip steel surface defect detection from a new perspective. Instead of indirectly mitigating the effects of background regions through post hoc feature fusion or attention, we introduce supervision signals directly into the core operational logic of the model. By integrating these signals into the feature extraction, region proposal, and deformable convolution processes, our method proactively forces the model to focus its computational resources only on regions of interest. This paradigm shift directly tackles the root cause of inefficiency and feature interference posed by high background-to-defect ratios. This design not only avoids wasteful computation on redundant backgrounds but also enhances the model’s ability to learn the sparse features of defects, thereby simultaneously improving both computational efficiency and detection accuracy. Based on the above analysis, this paper proposes a supervised focused feature network (SFF-Net) based on the Faster R-CNN framework. The main contributions of this paper are as follows:

(1) Design of the Supervised Convolutional Network (SCN): To address the issues of sparse defect regions and redundant background regions in strip steel images, SCN leverages constructed supervision signals to achieve selective feature extraction. This method focuses the feature learning process on target regions and their contextual signals, ensuring effective extraction of critical region features while optimizing computational efficiency. By skipping redundant computations in background regions, SCN effectively reallocates computational resources to defect-related areas. This not only improves efficiency but also enhances the model’s ability to learn from sparse, defect-critical regions.

(2) Design of the Supervised Region Proposal Strategy (SRPS): Considering the variability in the location, shape, and scale of strip steel surface defects, SRPS utilizes constructed supervision signals to optimize sample allocation during the feature learning process. This strategy ensures that each annotated box corresponds to at least one high-quality candidate region. By intelligently optimizing the candidate region assignment, SRPS reduces redundant computations during training while ensuring high-quality coverage of defect regions. This approach increases the diversity of positive samples, minimizes the introduction of redundant negative samples, and improves both the quality and efficiency of region proposals.

(3) Design and Implementation of the Supervised Deformable Convolutional Layer (SDCN): To handle the irregular shape characteristics of strip steel surface defects, SDCN integrates the supervision mechanism with deformable convolution. This approach maintains adaptability to irregular defect shapes while optimizing the computational range. By dynamically adjusting the sampling regions based on supervision signals, SDCN enhances the model’s ability to represent complex and irregular defect features. Additionally, it reduces computational costs by focusing operations on defect-relevant regions rather than the entire feature map and improves the model’s detection performance for irregular defects.

The remainder of this paper is organized as follows. Section 2 reviews and discusses the related work. Section 3 provides a detailed explanation of the architecture and functionality of the supervised focused feature model. Section 4 presents and evaluates the experimental results. Section 5 concludes the paper and outlines future research directions.

3. Supervised Focused Feature Network

Our proposed SFF-Net model is based on the Faster R-CNN architecture. As shown in Figure 2, SFF-Net consists of three key components: a backbone based on Residual Network (ResNet50) [38] and Feature Pyramid Network (FPN) [39], an RPN, and a head. During the training phase, the backbone incorporates Supervised Convolution (SCN), enabling the model to naturally focus on local features within supervised ranges, reducing computational cost on redundant background areas and improving training efficiency. Additionally, Supervised Deformable Convolution (SDCN) is introduced to ResNet50. By implementing dynamic sampling within supervised ranges, SDCN enhances the model’s global feature extraction capability for complex defect shapes. In the RPN, our proposed Supervised Region Proposal Strategy (SRPS) utilizes supervised ranges to directly guide sample allocation for candidate regions, improving both the quality and efficiency of region proposals. These supervised operations are implemented based on constructed supervision regions, achieving precise focus in feature extraction and region proposal, enhancing the model’s training efficiency and detection performance.

3.1. Supervised Range Acquisition

Supervised range acquisition serves as a fundamental component of SFF-Net, directly impacting the effectiveness of subsequent supervised convolution operations and sample allocation by precisely defining focus areas for feature extraction and sample assignment. To balance contextual information with computational efficiency while avoiding potential feature information loss during training, we design a method for obtaining supervised ranges based on the characteristics of strip steel defect images. This method includes the acquisition of the key supervised range and the RPN-compatible supervised range, aimed at reducing redundant computations and improving feature extraction quality in critical areas.

The key supervised region aims to expand the annotated box area and introduce a suitable amount of contextual information, ensuring the integrity of target features. Specifically, for each bounding box $s$ in the dataset $S$, the smallest base anchor box $b^*$ in the base anchor box set $B$, with dimensions $(w^*, h^*)$ that exceed both the width and height of the target $s$, is selected. Using the center point $(x_c, y_c)$ of the bounding box as a reference, the expanded region $U$ is generated. The final key supervised region $U_{key}$ is defined as follows:

$$U_{key} = \bigcup_{i=1}^{n} f(s_i, w_i^*, h_i^*) \tag{1}$$

$$(w^*, h^*) = \big(W(b^*), H(b^*)\big), \quad b^* = \min \{\, b \in B : W(b) > W(s),\ H(b) > H(s) \,\} \tag{2}$$

where $n$ denotes the number of bounding boxes, $W(\cdot)$ and $H(\cdot)$ represent width and height functions, respectively, $f(\cdot)$ signifies the function used to update dimensions, and $w^*$ and $h^*$ represent the dimensions of the smallest anchor satisfying the conditions. To clearly illustrate the calculation process of $U_{key}$, Algorithm 1 presents its pseudocode.

| | Algorithm 1. Key supervised region acquisition |
| --- | --- |
| Input: | S: bounding boxes {s1, s2, …}; B: predefined base anchor boxes {b1, b2, …} |
| Output: | U_key (corresponding to Equation (1)) |
| 1 | U_key ← ∅ ⟵ Initialize the result set |
| 2 | for each bounding box s in S do: |
| 3 | FoundAnchorDims ← null |
| 4 | for each anchor box b in B do: |
| 5 | if width(b) > width(s) and height(b) > height(s) then: |
| 6 | FoundAnchorDims ← (width(b), height(b)) ⟵ Record the dimensions of b |
| 7 | break |
| 8 | end if |
| 9 | end for |
| 10 | (w*, h*) ← FoundAnchorDims ⟵ End of Equation (2) |
| 11 | if (w*, h*) is not null then: |
| 12 | (x_c, y_c) ← GetCenter(s) ⟵ Obtain the center point of current bounding box |
| 13 | U ← (x_c − w*/2, y_c − h*/2, x_c + w*/2, y_c + h*/2) |
| 14 | U_key ← U_key ∪ {U} |
| 15 | end if |
| 16 | end for |
| 17 | return U_key |
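The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: the box format `(x_min, y_min, x_max, y_max)`, the anchor format `(width, height)`, the reading of "smallest" as smallest area, and the function names are all assumptions made for the example. Clipping the expanded region to the image border is not shown.

```python
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)
Dims = Tuple[float, float]               # assumed format: (width, height)


def smallest_covering_anchor(box: Box, anchors: List[Dims]) -> Optional[Dims]:
    """Return the dimensions of the smallest anchor strictly larger than the box."""
    width, height = box[2] - box[0], box[3] - box[1]
    # Scan anchors by ascending area (an assumed ordering), so the first hit is the smallest.
    for a_w, a_h in sorted(anchors, key=lambda a: a[0] * a[1]):
        if a_w > width and a_h > height:
            return a_w, a_h
    return None


def key_supervised_regions(boxes: List[Box], anchors: List[Dims]) -> List[Box]:
    """Expand each box to its smallest covering anchor size around the box center."""
    regions = []
    for box in boxes:
        dims = smallest_covering_anchor(box, anchors)
        if dims is None:
            continue  # as in Algorithm 1, a box with no covering anchor adds no region
        c_x, c_y = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
        w_star, h_star = dims
        regions.append((c_x - w_star / 2, c_y - h_star / 2,
                        c_x + w_star / 2, c_y + h_star / 2))
    return regions
```

For a 20 × 40 box centered at (20, 30) and square anchors of side 32, 64, and 128, the sketch selects the 64 × 64 anchor and returns the region (−12, −2, 52, 62), which extends past the image border and would need clipping in practice.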

Since the RPN stage relies on anchors at fixed positions to generate candidate regions, and these anchors do not completely overlap with the bounding boxes, using only the key supervised region might lead to missing supervision signals. To ensure the proper functioning of supervised operations within the RPN module, the RPN-compatible supervised region $U_{rpn}$ is further determined.

$$a_{max} = \arg\max_{a_j \in A} \mathrm{IoU}(a_j, s)$$

$$U_{rpn} = \bigcup_{s \in S} \big( \{a_{max}\} \cup a \big), \quad a = \{\, a_{pos} : \mathrm{IoU}(a_{pos}, s) > \tau_{pos} \,\} \cup \{\, a_{neg} : \mathrm{IoU}(a_{neg}, s) < \tau_{neg} \,\} \tag{4}$$

where $a_{max}$ indicates the anchor box that obtains the maximum IoU with ground truth box $s$, and $a$ contains anchor boxes $a_{pos}$ meeting the positive sample threshold $\tau_{pos}$ and $a_{neg}$ meeting the negative sample threshold $\tau_{neg}$. We set $\tau_{pos}$ and $\tau_{neg}$ following the design of Faster R-CNN, and the number of negative samples is set to three times the number of target boxes, with negative samples being randomly selected to maintain the diversity and balance between positive and negative samples during training. To clearly illustrate the calculation process of $U_{rpn}$, Algorithm 2 presents its pseudocode.

| | Algorithm 2. RPN-compatible supervised range acquisition |
| --- | --- |
| Input: | S: bounding boxes {s1, s2, …}; A: all predefined base anchor boxes {a1, a2, …} |
| Output: | U_rpn (corresponding to Equation (4)) |
| 1 | U_rpn ← ∅ ⟵ Initialize RPN-compatible supervised region. |
| 2 | for each bounding box s in S do: |
| 3 | a_max ← FindAnchorWithMaxIoU(s, A) ⟵ Select anchor with the maximum IoU for s. |
| 4 | U_rpn ← U_rpn ∪ {a_max} ⟵ Add a_max as a positive sample to the supervised region. |
| 5 | for each anchor a in A do: |
| 6 | if a ≠ a_max then: |
| 7 | if IoU(a, s) > τ_pos then AddToPositiveSamples(U_rpn, a) |
| 8 | if IoU(a, s) < τ_neg then AddToNegativeSamples(U_rpn, a) |
| 9 | end if |
| 10 | end for |
| 11 | end for |
| 12 | return U_rpn ⟵ Output the final RPN-compatible supervised range. |
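A minimal Python sketch of the selection in Algorithm 2 is given below. It is an illustration under stated assumptions, not the authors' code: boxes use the format `(x_min, y_min, x_max, y_max)`, the thresholds `pos_thr` and `neg_thr` are supplied by the caller, an anchor counts as a negative candidate only if its IoU is below `neg_thr` for every ground truth box, and the 3:1 negative sampling described in the text is applied once at the end with a fixed seed.

```python
import random
from typing import List, Set, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def rpn_supervised_anchors(gt_boxes: List[Box], anchors: List[Box],
                           pos_thr: float, neg_thr: float,
                           neg_ratio: int = 3, seed: int = 0) -> Tuple[Set[int], Set[int]]:
    """Return (positive, negative) anchor indices forming the RPN-compatible range."""
    positives: Set[int] = set()
    for gt in gt_boxes:
        ious = [iou(gt, a) for a in anchors]
        # The best-matching anchor is always kept, even if it is below pos_thr.
        positives.add(max(range(len(anchors)), key=ious.__getitem__))
        positives.update(i for i, v in enumerate(ious) if v > pos_thr)
    # Assumption: negatives must fall below neg_thr for all ground truth boxes.
    candidates = [i for i, a in enumerate(anchors)
                  if i not in positives and all(iou(gt, a) < neg_thr for gt in gt_boxes)]
    rng = random.Random(seed)
    k = min(len(candidates), neg_ratio * len(gt_boxes))
    negatives = set(rng.sample(candidates, k))
    return positives, negatives
```

Because the result depends only on the annotations and the fixed anchor layout, it can be computed once per image before training and reused in every iteration.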

Finally, the supervised ranges are merged to obtain the comprehensive supervised range $U_{sup}$.

$$U_{sup} = U_{key} \cup U_{rpn}$$

This comprehensive supervised range $U_{sup}$, encoded as sparse matrices throughout the model training process, provides explicit operational guidance for SCN, SDCN, and SRPS. This method strikes a balance between preserving key information and controlling computational complexity, thereby enhancing the efficiency and accuracy of subsequent processing. In the experimental section, the effectiveness of this method will be further analyzed and verified through ablation experiments.
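One way to picture the sparse encoding is to rasterize the merged regions onto a feature-map grid and store only the covered cells. The sketch below is a hypothetical illustration: the set-of-cells representation, the `stride` parameter, and the function name are assumptions, and the paper does not specify the sparse format it uses.

```python
from typing import Iterable, Set, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def regions_to_sparse_mask(regions: Iterable[Box], height: int, width: int,
                           stride: int = 1) -> Set[Tuple[int, int]]:
    """Return the set of (row, col) feature-map cells covered by any region."""
    feat_h = -(-height // stride)  # ceiling division
    feat_w = -(-width // stride)
    cells: Set[Tuple[int, int]] = set()
    for x0, y0, x1, y1 in regions:
        # Clamp each region to the feature map so out-of-image parts are dropped.
        c0, c1 = max(0, int(x0 // stride)), min(feat_w - 1, int(x1 // stride))
        r0, r1 = max(0, int(y0 // stride)), min(feat_h - 1, int(y1 // stride))
        for r in range(r0, r1 + 1):
            for c in range(c0, c1 + 1):
                cells.add((r, c))  # overlapping regions merge naturally in a set
    return cells
```

For a 200 × 200 image at stride 16, the region (−12, −2, 52, 62) covers 16 of the 169 feature-map cells, so a convolution restricted to those cells visits under a tenth of the positions.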

3.2. Supervised Convolutional Backbone Network Combined with Deformable Convolutions

Traditional convolution operations suffer from low computational efficiency when processing strip steel defect images, where background regions are extensive and highly similar. To address this characteristic, we introduce a supervision mechanism based on traditional convolution and propose SCN. SCN constrains convolution operations within the comprehensive supervised ranges

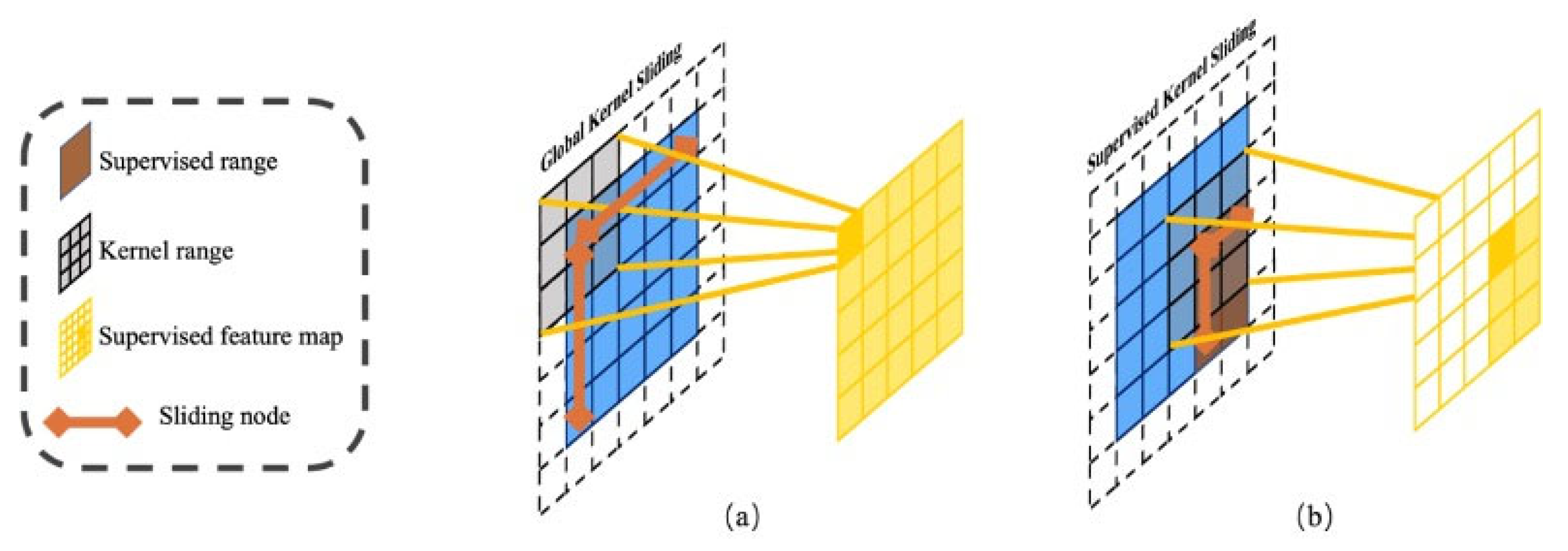

, avoiding redundant calculations on extensive similar background areas while concentrating computational resources on critical regions (defects and their surroundings) to optimize the model’s feature extraction capability and efficiency. A comparison between traditional convolution and supervised convolution is shown in

Figure 3.

Traditional convolution operations utilize a fixed convolutional kernel to traverse all pixels of the input image in a left-to-right and top-to-bottom sequence. The definition of traditional convolution is as follows:

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n)$$

where $p_0$ and $p_n$ represent the current point and its neighboring points, respectively, and $w(p_n)$ is the weight at $p_n$. $R$ is the convolution kernel, i.e., the receptive field of $x$.

The definition of SCN is as follows:

$$y(p_0) = \begin{cases} \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n), & p_0 \in U_{sup} \\ 0, & \text{otherwise} \end{cases} \tag{9}$$

where $p_0$ is the current convolution center position. If $p_0$ falls within the supervised range $U_{sup}$, then convolution is performed. Otherwise, this position is skipped, and the process continues to evaluate the next position. The core concept of supervised convolution lies in restricting convolution operations within the supervised range $U_{sup}$, skipping redundant computations in background regions to optimize computational efficiency and feature extraction capability. Compared to traditional convolution that traverses the entire feature map point by point, SCN more efficiently focuses on target region features by incorporating task-specific supervised range designs. For a clearer illustration of the SCN’s operational mechanism, the computational process defined in Equation (9) is detailed as pseudocode in Algorithm 3.

| | Algorithm 3. Supervised Convolution Process |
| --- | --- |
| Input | X (input feature map), W (convolution kernel weights), U_sup (comprehensive supervised range) |
| Output | Y (output feature map) |
| 1 | Y ← InitializeZeroFeatureMap(ExpectedOutputDimensions) |
| 2 | for each position p_0 in the output map Y do: |
| 3 | if the center position p_0 ∈ U_sup then: |
| 4 | InputPatch ← GetReceptiveFieldRegion(X, center = p_0, size = KernelSize(W)) |
| 5 | Y(p_0) ← Convolve(InputPatch, W) ⟵ Perform standard convolution |
| 6 | end if |
| 7 | end for |
| 8 | return Y |
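Algorithm 3 can be illustrated with a small pure-Python sketch. It is written for clarity rather than speed and makes several assumptions not stated in the paper: a single channel, zero padding with stride 1 so that the output has the input's size, and a dense boolean `mask` standing in for the supervised range. The second return value counts how many positions were actually evaluated.

```python
from typing import List, Tuple

Grid = List[List[float]]


def supervised_conv2d(x: Grid, w: Grid, mask: List[List[bool]]) -> Tuple[Grid, int]:
    """Zero-padded 2D convolution evaluated only where mask[i][j] is true."""
    height, width = len(x), len(x[0])
    k_h, k_w = len(w), len(w[0])
    pad_h, pad_w = k_h // 2, k_w // 2
    y = [[0.0] * width for _ in range(height)]  # skipped positions stay zero
    evaluated = 0
    for i in range(height):
        for j in range(width):
            if not mask[i][j]:
                continue  # outside the supervised range: skip the computation
            acc = 0.0
            for di in range(k_h):
                for dj in range(k_w):
                    ii, jj = i + di - pad_h, j + dj - pad_w
                    if 0 <= ii < height and 0 <= jj < width:
                        acc += w[di][dj] * x[ii][jj]
            y[i][j] = acc
            evaluated += 1
    return y, evaluated
```

With a 4 × 4 input and a mask that marks two positions, the sketch evaluates 2 kernel placements instead of 16, which is the source of the savings described above.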

Because of its fixed-shape kernel, traditional convolution may extract target features insufficiently when dealing with irregular defects. Deformable convolution transforms the fixed-shape convolution process into a variable convolution process that can adapt to the shape of the object, making it suitable for irregular defect detection [40]. The deformable convolution can be described as follows:

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x(p_0 + p_n + \Delta p_n)$$

where $\Delta p_n$ represents the predicted offset, obtained through a dedicated convolution layer.

To simultaneously achieve adaptive feature extraction and computational efficiency optimization, we combine the supervision mechanism with deformable convolution, proposing SDCN. The supervision mechanism ensures that deformation operations occur only in critical regions. The definition is as follows:

$$y(p_0) = \sum_{p_n \in R} w(p_n) \cdot x\big(\Pi_{U_{sup}}(p_0 + p_n + \Delta p_n)\big), \quad p_0 \in U_{sup}$$

where $\Pi_{U_{sup}}(\cdot)$ clips a sampling point to the supervised region $U_{sup}$. In contrast to standard deformable convolution, SDCN ensures that all deformation operations occur strictly within the supervised region $U_{sup}$. Specifically, after the model predicts the offset $\Delta p_n$, the final sampling point $p_0 + p_n + \Delta p_n$ is checked to ensure it lies within $U_{sup}$. If the sampling point falls outside the supervised region $U_{sup}$, it is clipped back to the nearest boundary of $U_{sup}$. This ensures that deformation operations remain focused on critical regions, while avoiding unnecessary sampling outside the supervised area.
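The clipping step can be sketched as follows, assuming for simplicity that the supervised region around the current position is a single axis-aligned rectangle `(x_min, y_min, x_max, y_max)`. Under that assumption, moving a point to the nearest boundary reduces to clamping each coordinate. The function names are illustrative only.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # assumed format: (x_min, y_min, x_max, y_max)


def clip_to_region(point: Point, region: Box) -> Point:
    """Move a point to the nearest location inside a rectangular region."""
    x, y = point
    x_min, y_min, x_max, y_max = region
    return min(max(x, x_min), x_max), min(max(y, y_min), y_max)


def sdcn_sampling_points(center: Point, kernel_offsets: List[Point],
                         predicted_offsets: List[Point], region: Box) -> List[Point]:
    """Compute deformable sampling points and clip them to the supervised region."""
    points = []
    for (dx, dy), (off_x, off_y) in zip(kernel_offsets, predicted_offsets):
        raw = (center[0] + dx + off_x, center[1] + dy + off_y)
        points.append(clip_to_region(raw, region))
    return points
```

A point that already lies inside the region is returned unchanged, so the clipping only alters offsets that would otherwise sample the background.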

SDCN is incorporated at the end of Stage 3, Stage 4, and Stage 5 modules in the backbone network. All parameters in the normal convolution layer are obtained from the training process of the whole detector. This multi-scale feature extraction strategy enables the model to simultaneously acquire target feature information at different resolutions, effectively enhancing the detection capability for defects of different scales.

3.3. Supervised Region Proposal Strategy

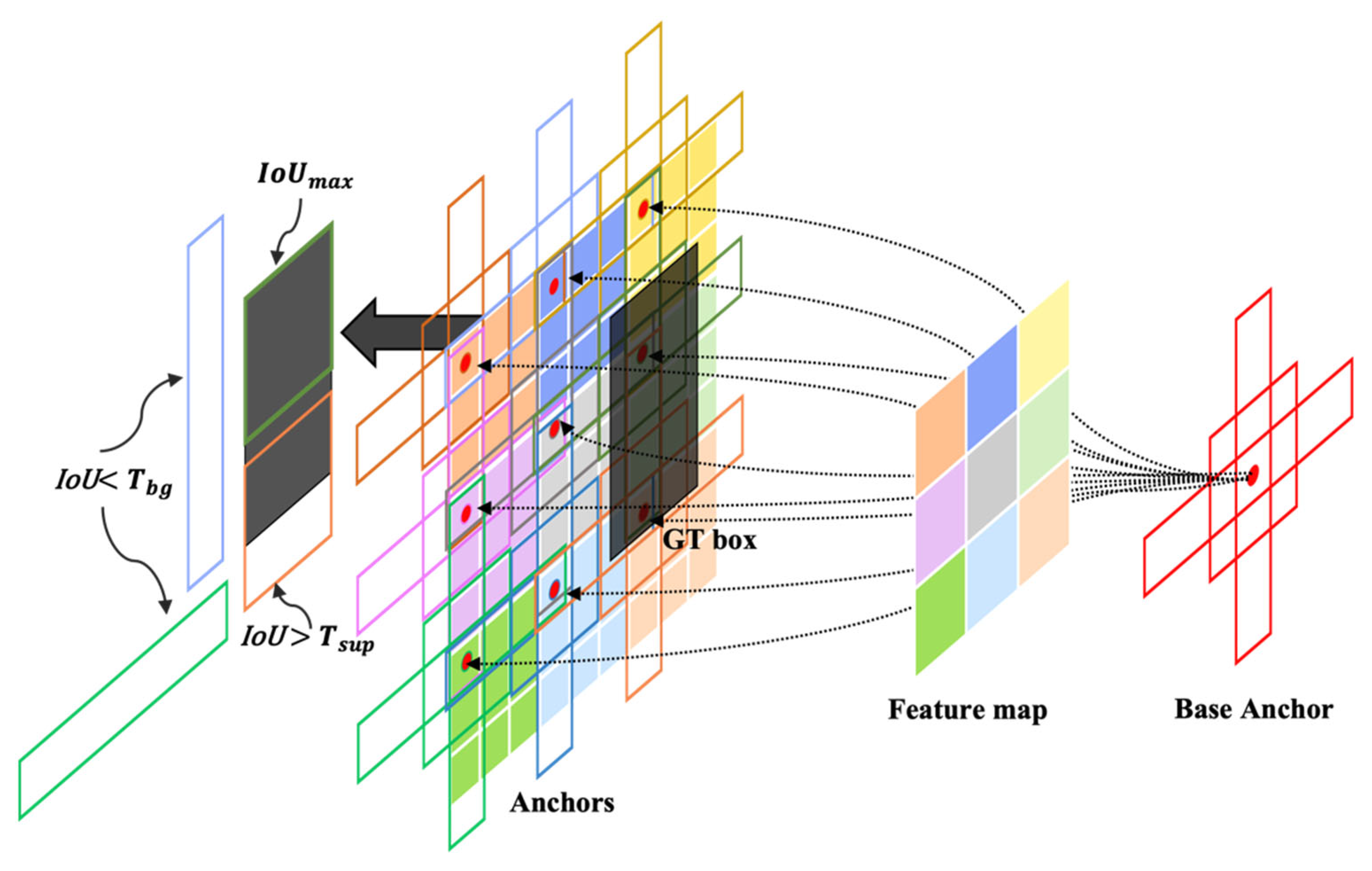

In object detection tasks, high-quality region proposals are crucial for robust detection performance. RPNs primarily rely on the IoU between candidate regions and bounding boxes to allocate positive and negative samples. However, this method faces two major issues in strip steel defect detection scenarios. First, a significant amount of computational resources is wasted because the IoU of anchor boxes in background regions is recomputed during each training iteration. Second, limited by the IoU threshold, some small or unusually shaped targets may not be correctly allocated as positive samples, which degrades detection performance. To address these challenges, as shown in Figure 4, we propose an SRPS that specifically optimizes the RPN sample allocation process, effectively saving computational resources and improving allocation efficiency.

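In conventional RPN, complete sample allocation is performed during each training iteration.

$$\mathrm{label}(a) = \begin{cases} 1, & \mathrm{IoU}(a, s) > \tau_{pos} \\ 0, & \mathrm{IoU}(a, s) < \tau_{neg} \\ \text{ignored}, & \text{otherwise} \end{cases}$$

where $\tau_{pos}$ and $\tau_{neg}$ denote the IoU thresholds for positive and negative samples, respectively. This approach not only incurs high computational costs but may also overlook some valuable potential samples.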

By contrast, SRPS optimizes the RPN sample allocation strategy by performing sample allocation with the RPN-compatible supervision range $U_{rpn}$ obtained in Section 3.1. $U_{rpn}$ includes the anchor box $a_{max}$ with the highest IoU for each ground truth box, anchor boxes $a_{pos}$ meeting the positive sample criteria, and proportionally selected negative sample anchor boxes $a_{neg}$. This pre-computed sample allocation process can be represented as:

$$T = \{\, a \mid a \in U_{rpn} \,\}$$

where $T$ denotes the set of samples involved in training, and $a$ denotes the anchor boxes that meet the conditions.

Through this optimization of RPN sample allocation strategy, SRPS effectively eliminates the need for repetitive IoU computation during each training iteration, reducing computational overhead in the RPN stage. Meanwhile, this pre-computed deterministic allocation mechanism ensures that each ground truth box has at least one anchor box with maximum IoU as a positive sample, and enhances sample diversity through reasonable sampling strategies.

By integrating the above supervisory operations, the model achieves efficient feature learning. Algorithm 4 provides a step-by-step overview of the complete training process for the proposed model, illustrating how the supervision mechanisms are integrated and interact across different stages.

| | Algorithm 4. SFF-Net Overall Training Process |
| --- | --- |
| Input | TrainingDataset, Anchors |
| Output | TrainedModel |
| 1. | Model ← Initialize() |
| 2. | SupervisionRangesCache ← DataPreprocessing(TrainingDataset, Anchors) ⟵ Apply Section 3.1 to acquire supervised ranges |
| 3. | for each training epoch do: |
| 4. | for each (image, targets) in TrainingDataset do: |
| 5. | U_sup ← SupervisionRangesCache[image] ⟵ Retrieve the supervised ranges for the current image |
| 6. | Featuremaps ← Model.Backbone(image, U_sup) ⟵ Apply Section 3.2 to extract features using SCN and SDCN. |
| 7. | proposals, L_rpn ← Model.RPN(Featuremaps, U_sup) ⟵ Apply Section 3.3 to perform sample assignment and calculate the RPN loss. |
| 8. | L_head ← Model.Head(Featuremaps, proposals, targets) ⟵ Calculate the detection head loss |
| 9. | L_total ← L_rpn + L_head |
| 10. | Model ← UpdateParameters(Model, L_total) |
| 11. | end for |
| 12. | end for |
| 13. | TrainedModel ← Model |
| 14. | return TrainedModel |

4. Experiments

4.1. Dataset

NEU-DET dataset was collected from an actual hot-rolled strip steel production line [

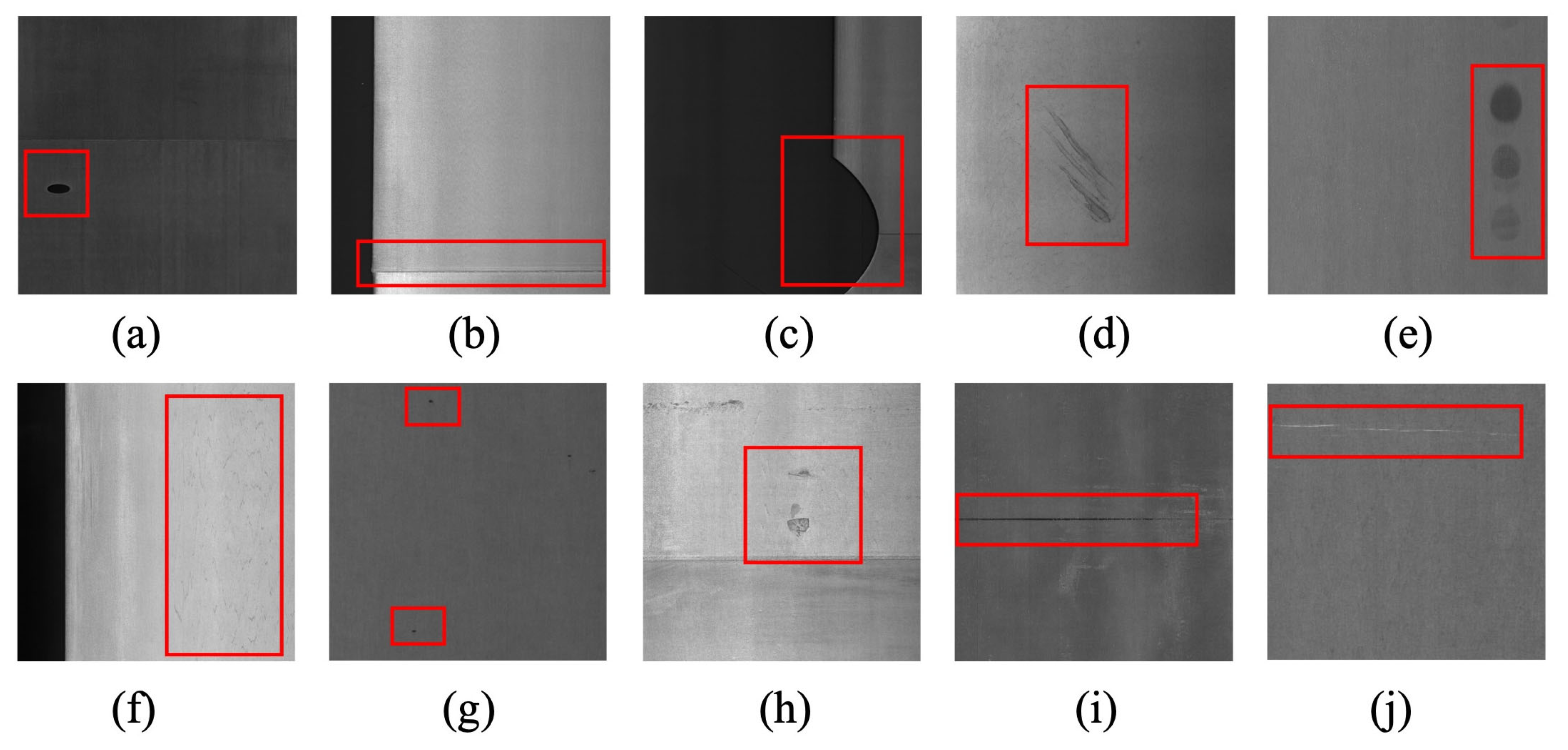

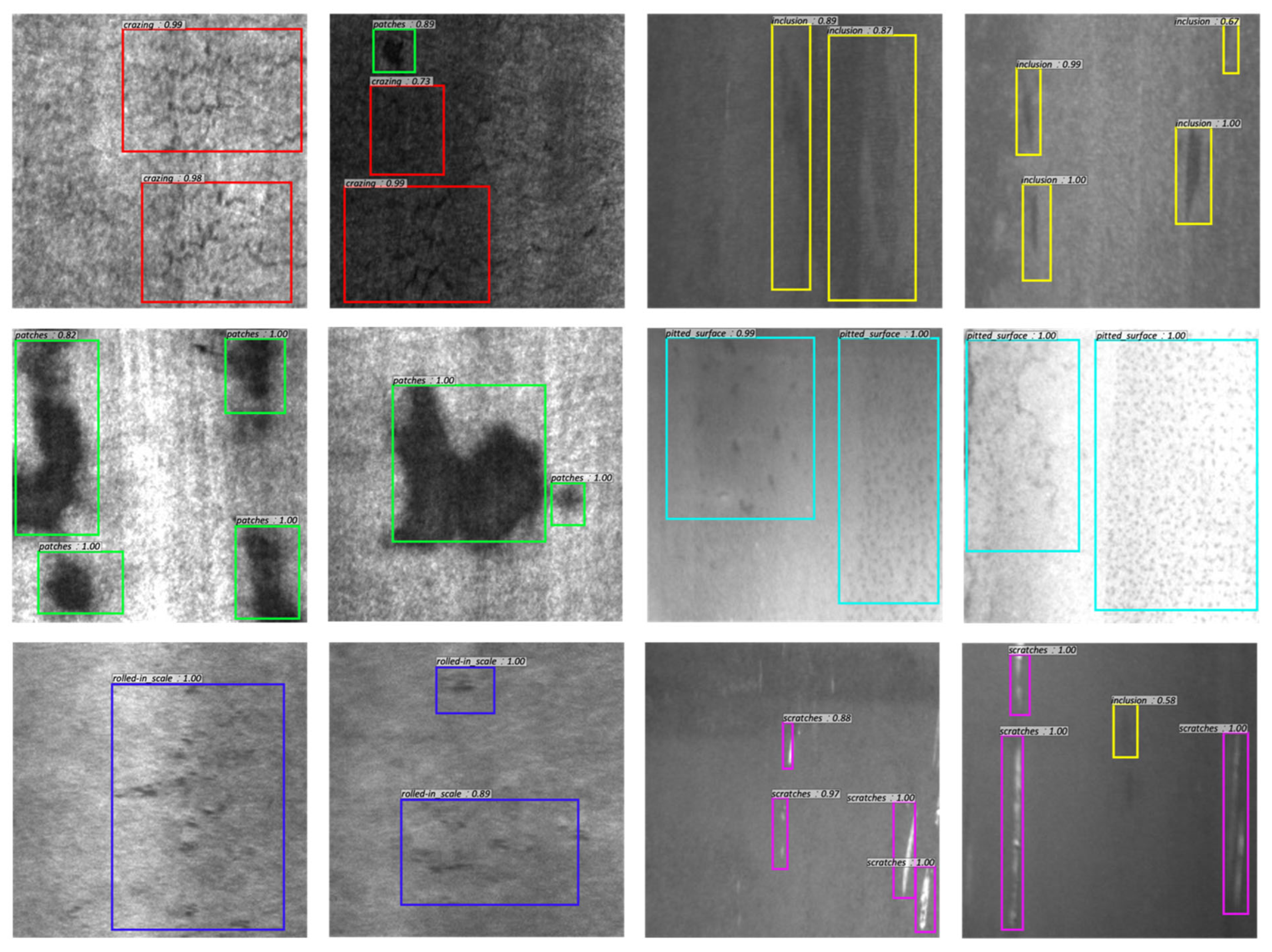

41]. As shown in

Figure 5, this dataset includes six types of typical defects: Crazing (Cr), Inclusion (In), Patches (Pa), Pitted Surface (Ps), Rolled-in Scale (Rs), and Scratches (Sc). Each defect category contains 300 grayscale images with a resolution of 200 × 200 pixels, resulting in a total of 1800 images. The dataset preserves challenging factors from industrial production environments, such as lighting condition variations, ambient light interference, and high-speed motion blur effects. These characteristics effectively reflect real-world challenges in industrial inspection and can validate algorithm adaptability in complex scenarios.

The GC10-DET dataset was also collected from actual industrial production lines [42]. The dataset comprises 2294 grayscale images with a resolution of 2048 × 1000 pixels, covering ten types of typical defects. As shown in Figure 6, these defects include Punching (pu), Welding Line (wl), Crescent Gap (cg), Water Spot (ws), Oil Spot (os), Silk Spot (ss), Inclusion (in), Roll Pit (rp), Crease (cr), and Waist Fold (wf). The dataset also preserves uncertainty factors from real production environments, such as uneven illumination, material surface reflectivity variations, motion blur, and operational condition differences. These characteristics make the GC10-DET dataset a true reflection of industrial production complexity, suitable for evaluating algorithm robustness and generalization capability in practical scenarios.

4.2. Implementation Details

The experiments are implemented in Python 3.8 with PyTorch 1.7.1 as the deep learning framework. Development is carried out in PyCharm 2021.3 on Ubuntu 18.04. The hardware configuration includes an Nvidia GeForce RTX 3090 GPU with 24 GB of VRAM. Five-fold cross-validation was employed to tune model hyperparameters and select the optimal model, with initial training parameters shown in Table 1. The reported results are the average performance over the five folds, ensuring the robustness of the evaluation.

Although both the NEU-DET and GC10-DET datasets preserve complex characteristics of real industrial environments, a series of data augmentation strategies was still employed during training to further enhance the model’s adaptability and robustness to uncertainty factors such as lighting variations and motion blur. These operations include horizontal flipping with 50% probability, random rotation within ±10°, random translation within ±10%, random brightness adjustment to simulate lighting variations, random noise addition, and random sharpening.

4.3. Evaluation Metrics

To comprehensively evaluate the model’s detection performance, multiple evaluation metrics were employed, with IoU = 0.5 set as the criterion for detection box validity. Specifically, a prediction is considered valid when the Intersection over Union (IoU) between the predicted box and the ground truth box exceeds 0.5. Based on this criterion, the following evaluation metrics were introduced:

$$Precision = \frac{TP}{TP + FP}, \qquad Recall = \frac{TP}{TP + FN}$$

where True Positive (TP) counts predictions that match a ground truth box with IoU above the threshold, False Positive (FP) counts predictions that fail to match any ground truth box at that threshold, and False Negative (FN) counts ground truth boxes that no prediction matches. To measure the overall detection accuracy across multiple categories, Mean Average Precision (mAP), the most commonly used comprehensive performance metric in object detection, was selected. It is defined as the mean of Average Precision (AP) across all categories:

$$AP = \int_0^1 P(R)\, dR, \qquad mAP = \frac{1}{n} \sum_{i=1}^{n} AP_i$$

where $P(R)$ represents Precision as a function of Recall, and $n$ denotes the total number of prediction categories. Additionally, F1-score was introduced as a supplementary metric to balance the relationship between Precision and Recall, defined as:

$$F1 = \frac{2 \times Precision \times Recall}{Precision + Recall}$$

To comprehensively evaluate the model’s computational efficiency, additional efficiency metrics were introduced. Params represents the total number of model parameters, measuring the model’s scale. Floating Point Operations (FLOPs) measure computational complexity, indicating the number of floating-point operations required for one forward inference. Frames Per Second (FPS) and Inference Time assess the model’s real-time performance, where FPS indicates the number of images the model can process per second, and Inference Time represents the time required to process a single image. Finally, Training Time Per Component was recorded to quantify the optimization effect of each module on model efficiency.
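The detection metrics of this subsection can be sketched in a few lines of Python. This is an illustrative sketch, not the evaluation code used in the experiments. In particular, `average_precision` integrates the precision-recall curve with the common all-point interpolation (precision made non-increasing in recall), which is an assumption because the interpolation scheme is not stated here. Detections are assumed to be pre-sorted by descending confidence and already labeled as hits or misses at IoU = 0.5.

```python
from typing import List, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision and Recall from detection counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of Precision and Recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def average_precision(is_tp: List[bool], num_gt: int) -> float:
    """Area under the precision-recall curve for one category."""
    if num_gt == 0:
        return 0.0
    tp = fp = 0
    curve = []  # (recall, precision) after each detection
    for hit in is_tp:
        if hit:
            tp += 1
        else:
            fp += 1
        curve.append((tp / num_gt, tp / (tp + fp)))
    ap, best = 0.0, 0.0
    for i in range(len(curve) - 1, -1, -1):
        recall, precision = curve[i]
        best = max(best, precision)  # best precision at this recall or higher
        prev_recall = curve[i - 1][0] if i > 0 else 0.0
        ap += (recall - prev_recall) * best
    return ap


def mean_average_precision(ap_per_class: List[float]) -> float:
    """Mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class) if ap_per_class else 0.0
```

As a consistency check against the values quoted in Section 4.4.1, `f1_score(80.8, 89.6)` rounds to 85.0 and `f1_score(82.1, 90.5)` rounds to 86.1, matching the F1-scores reported there for the corresponding Precision and Recall.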

4.4. Ablation Experiment

4.4.1. Impact of SRPS, SCN, and SDCN Modules

To comprehensively evaluate the contributions of each module in SFF-Net, we conducted a series of ablation experiments to quantify their individual and combined impacts on both detection performance and computational efficiency. These experiments were performed on the NEU-DET dataset, utilizing a Faster R-CNN framework with a ResNet50-FPN backbone as the baseline model.

Table 2 presents the detection performance of SFF-Net with various module configurations, illustrating the individual and combined contributions of SRPS, SCN, and SDCN. The results highlight the improvements achieved by integrating these modules into the baseline model.

SRPS enhancing sample assignment. SRPS addresses the limitations of the standard RPN by ensuring that each ground truth box is assigned at least one anchor box with the maximum IoU. This mechanism effectively mitigates the issue of insufficient positive samples and imbalanced assignments, particularly in scenarios with sparse defects and high background-to-defect ratios. Compared to the baseline, SRPS improved mAP from 75.3% to 75.9% and F1-score from 81.4% to 82.3%, with Recall increasing from 85.9% to 87.2%. These improvements demonstrate the enhanced target capture capability provided by SRPS, ensuring more balanced and effective sample assignments.

SRPS + SDCN adapting to irregular defects. The integration of SRPS and SDCN further refines the model’s ability to localize irregular and complex defect morphologies. SDCN dynamically adjusts sampling locations within supervised regions, enhancing feature extraction for defects with variable shapes and sizes. Adding SDCN to SRPS increased mAP from 75.9% to 77.4% and F1-score from 82.3% to 83.7%, with Recall improving from 87.2% to 88.3%. These results validate SDCN’s role in complementing SRPS by providing adaptive sampling capabilities, particularly for challenging defect geometries.

SRPS + SCN enhancing feature discrimination. The Supervised Convolutional Network (SCN) leverages supervised regions to focus convolution operations on defect-relevant areas, effectively suppressing background noise. However, SCN inherently depends on SRPS to define these supervised regions, as its operations are confined to regions pre-selected by SRPS. Adding SCN to the baseline and SRPS configuration boosted mAP to 79.5% and F1-score to 85.0%, with Precision increasing from 77.9% to 80.8% and Recall from 87.2% to 89.6%. These results demonstrate SCN’s strength in improving feature discrimination and detection accuracy by concentrating on defect-relevant features.

Full integration of SRPS, SCN, and SDCN. When all three modules were integrated, the model achieved its highest performance with mAP reaching 81.2%, F1-score increasing to 86.1%, and Recall improving to 90.5%. These results highlight the complementary nature of SRPS, SCN, and SDCN: SRPS ensures effective sample assignment, SCN enhances feature discrimination, and SDCN adapts to irregular defects.

Table 3 illustrates the computational efficiency of SFF-Net with different module configurations, highlighting the changes in performance and computational cost introduced by each module.

SRPS maintaining efficiency while optimizing sample assignment. The introduction of SRPS maintained the parameter count at 41.5 M and slightly reduced FLOPs to 91.3 G, while cutting training time by 1 min. This improvement reflects SRPS’s ability to optimize sample assignment mechanisms by focusing on supervised regions. By reducing redundant computations in the RPN stage, SRPS achieved these efficiency gains without compromising assignment accuracy or increasing computational overhead.

SRPS + SCN significant efficiency gains. The addition of SCN to the SRPS configuration brought notable computational benefits. SCN leverages supervised regions to skip redundant background calculations, making it particularly effective for strip steel defect detection tasks dominated by large, homogeneous backgrounds. SCN reduced FLOPs by 20%, shortened inference time by 24%, and decreased training time by 26% compared to the SRPS-only configuration. These improvements demonstrate SCN’s ability to reallocate computational resources to defect-relevant regions, significantly reducing redundancy and improving overall efficiency.

SRPS + SDCN balancing complexity and efficiency. The integration of SDCN with SRPS introduced a modest increase in computational cost, with parameters rising by 3.2 M and FLOPs by 0.8 G. Despite this, the inference time remained nearly unchanged at 22.3 ms, and training time increased marginally to 107 min. The additional complexity of deformable convolution operations was effectively managed by confining them to supervised regions. This limited the computational burden while enabling adaptive sampling for irregular defects. The performance improvements achieved by SDCN clearly outweighed its minor computational overhead, demonstrating its capability to balance accuracy and efficiency.

Full integration of SRPS, SCN, and SDCN. When all three modules were integrated, SFF-Net achieved the optimal trade-off between detection performance and computational efficiency. The parameter count increased to 44.7 M, and FLOPs reached 73.6 G, representing only a slight increase compared to the SCN-only configuration. The inference time remained low at 17.2 ms, and the training time increased slightly to 81 min, reflecting an effective balance between computational cost and accuracy. These results confirm that the combined impact of SRPS, SCN, and SDCN enables SFF-Net to deliver superior detection performance while maintaining computational efficiency. Compared to the baseline, SFF-Net achieved a 19.5% reduction in FLOPs, a 22.5% improvement in inference time, and a 23.5% decrease in training time.
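As a back-of-the-envelope consistency check, the percentages above can be inverted to recover the baseline values they imply. The sketch below assumes each percentage is a relative reduction with respect to the baseline (including the "improvement" in inference time); the helper `implied_baseline` is hypothetical, and the values it returns are derived estimates, not the figures reported in Table 3.

```python
def implied_baseline(final_value, reduction_pct):
    """Invert final = baseline * (1 - reduction) to recover the baseline."""
    return final_value / (1.0 - reduction_pct / 100.0)

# Figures quoted in the text for the full SFF-Net configuration.
full_model = {"flops_g": 73.6, "inference_ms": 17.2, "train_min": 81.0}
# Relative reductions versus the baseline, as quoted in the text.
reductions = {"flops_g": 19.5, "inference_ms": 22.5, "train_min": 23.5}

baseline = {k: implied_baseline(full_model[k], reductions[k]) for k in full_model}
for name, value in baseline.items():
    print(f"{name}: implied baseline ~ {value:.1f}")
```

Under this assumption the implied baseline FLOPs come out at roughly 91.4 G, just above the 91.3 G quoted for the SRPS-only configuration, which agrees with the statement that SRPS only slightly reduced FLOPs.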

In conclusion, SFF-Net achieves both detection performance improvement and computational efficiency optimization through the synergistic effect of three key modules. SRPS optimizes the sample assignment strategy to enhance target capture capability. SCN enables efficient computational resource utilization and improves feature representation accuracy. SDCN enhances modeling capability for irregular defects. Notably, these performance improvements were achieved while reducing computational overhead, demonstrating the effectiveness of supervision mechanisms in strip steel defect detection.

4.4.2. Role of Supervised Range

To comprehensively evaluate the impact of supervised range, this section compares three different size configurations: annotation size, supervised size, and larger size. The results are presented in Table 4, examining the comprehensive effects of supervised range on model performance and computational efficiency through quantitative and qualitative analysis.

In terms of performance metrics, the choice of supervised range significantly impacts detection effectiveness. Compared to annotation size, using supervised size improved the model’s mAP from 79.5% to 81.2% and raised its F1-score to 86.1%. Precision increased from 80.9% to 82.1%, and Recall from 88.4% to 90.5%, indicating that an appropriate expansion of the supervised range not only enhanced the model’s defect discrimination accuracy but also improved its target capture capability. To verify the rationality of the supervised range selection, we further experimented with a larger size configuration. Results showed that excessive expansion of the supervised range led to performance degradation, with mAP dropping to 80.3% and F1-score to 84.9%. Although this still represents an improvement over annotation size, it was notably inferior to the designed supervised range, confirming that the supervised range requires precise control rather than simply being made larger.
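The relationship between the quoted Precision, Recall, and F1-score can be checked with the standard harmonic-mean definition. This is a minimal sketch that assumes the reported F1-score is computed directly from the overall Precision and Recall given above; the paper may instead aggregate per class, in which case small deviations would be expected.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Precision and Recall (in %) quoted in the text.
supervised = f1_score(82.1, 90.5)   # supervised size
annotation = f1_score(80.9, 88.4)   # annotation size

print(round(supervised, 1))  # 86.1, matching the F1-score quoted for supervised size
print(round(annotation, 1))  # value implied for annotation size under this assumption
```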

From a computational efficiency perspective, adjustments to the supervised range produced varying impacts on computational resource consumption. Under annotation size, the model’s FLOPs were 72.9 G, with 16.4 ms inference time and 79 min training time. After adopting the designed supervised range, despite the expanded feature extraction range, FLOPs increased by only 0.7 G, inference time by 0.8 ms, and training time by 2 min, demonstrating that this range expansion is computationally economical. However, with the larger range, FLOPs increased to 75.3 G, inference time extended to 18.5 ms, and training time rose to 84 min, further confirming that an excessive supervised range leads to unnecessary computational burden.

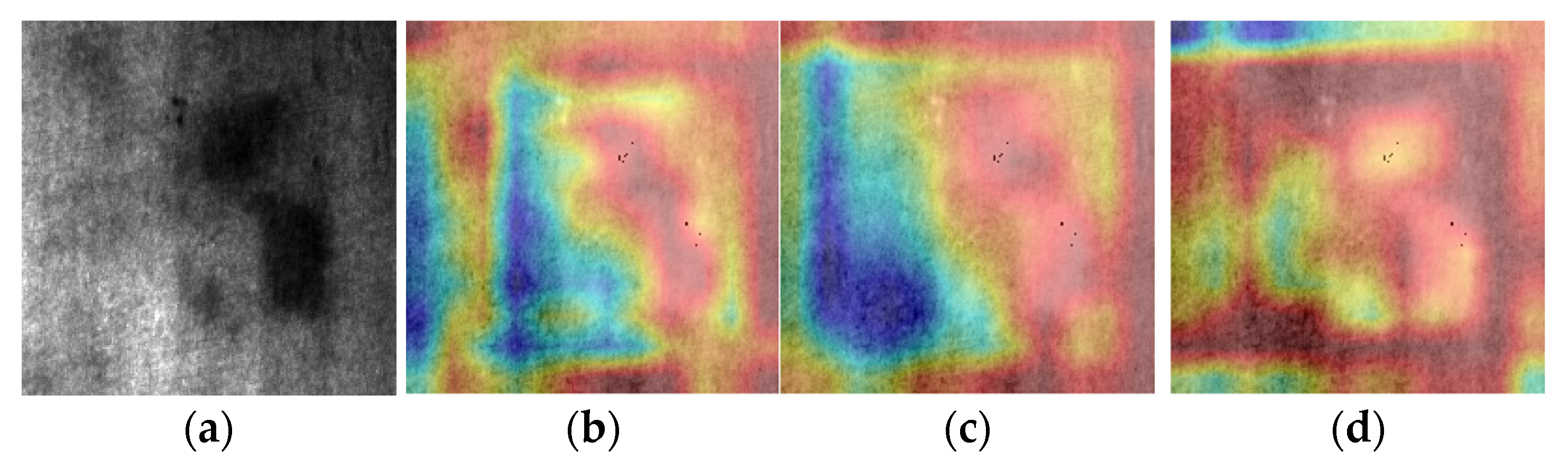

To intuitively understand the impact mechanism of different supervised ranges, Figure 7 displays the corresponding feature response heatmaps. Under the annotation size configuration, heatmaps mainly concentrated on the defect area itself, lacking response to surrounding information. This limitation explains its lower detection performance. With the designed supervised range, heatmaps maintained strong responses to defect areas while including moderate contextual information, reflected in comprehensive performance improvement. Under the larger range configuration, heatmaps showed overly dispersed features, suggesting that the performance degradation might stem from redundant information interference.

In conclusion, supervised range design requires a balance between feature extraction sufficiency and computational efficiency. Too small a range limits the model’s understanding of target context, while an excessive range introduces irrelevant information and increases computational burden. Experimental results demonstrate that our proposed supervised range design method maintains computational efficiency while improving detection performance.

4.5. Comparison with Other Methods

To comprehensively evaluate the performance of SFF-Net, we compared it with a variety of classic one-stage and two-stage models, as well as advanced models incorporating attention mechanisms or Transformer structures, on the NEU-DET and GC10-DET steel surface defect datasets. These models include SSD, Cascade R-CNN, Deformable DETR, CABF-FCOS, RDD-YOLO, YOLOv7, YOLOv8n, DGYOLOv8, RT-DETR-R18, DsP-YOLO, and STD2. The comparison experiments focus on detection accuracy (performance on different defect categories), computational efficiency (FPS), and model complexity (parameters and FLOPs), with an emphasis on analyzing the adaptability and performance differences between SFF-Net and other models.

Performance comparison on NEU-DET dataset. As shown in Table 5, SFF-Net achieved 81.2% mAP, outperforming most comparison models overall, particularly in handling large-scale defect categories with prominent features (e.g., Pa and Sc). Specifically, SFF-Net achieved 96.5% and 96.3% AP for the Pa and Sc categories, respectively, outperforming RDD-YOLO and DsP-YOLO, which incorporate advanced attention mechanisms. This performance improvement can be attributed to the SCN module’s ability to effectively focus on target regions and extract more discriminative features. By leveraging supervised mechanisms to optimize feature extraction, the SCN module significantly enhances detection precision. For defect categories with significant features (e.g., In and Ps), SFF-Net achieved APs of 88.2% and 89.9%, respectively, which, although slightly lower than CABF-FCOS’s performance on the In category, still maintains a high level overall. This indicates that SFF-Net has good generalization capability for medium-contrast defects with relatively prominent features.

However, for low-contrast defects or those with ambiguous textures (e.g., Cr and Rs), SFF-Net showed relatively weaker performance, achieving APs of 46.7% and 69.4%, which are lower than DsP-YOLO and DGYOLOv8. This gap highlights the limitations of SFF-Net in handling defects with low contrast or complex features. This may be due to the model’s supervised mechanisms lacking sufficient adaptability to complex backgrounds. For example, DsP-YOLO enhances attention to low-contrast regions via the CBAM module, while DGYOLOv8 incorporates a gated attention module (GAM) to improve feature selection for target regions. In comparison, SFF-Net lacks such specific optimizations in these scenarios, leading to relatively lower performance.

In terms of computational efficiency, SFF-Net achieved an inference speed of 58.1 FPS, significantly outperforming traditional two-stage models like Cascade R-CNN and high-complexity models like STD2. This demonstrates that SFF-Net effectively reduces unnecessary computational redundancy through the SCN module while maintaining high detection accuracy. Although SFF-Net’s speed is lower than that of lightweight models such as YOLOv8n, it achieved a 2.3% mAP improvement in overall detection performance, with particularly notable advantages in detecting complex defects. Additionally, SFF-Net’s parameter count is 44.7 M, and its FLOPs are 73.6 G, which are significantly lower than Cascade R-CNN and STD2. This demonstrates that SFF-Net achieves a better balance between performance and computational cost.
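The FPS figure can be related to the per-image inference time reported in the ablation study. The sketch below assumes single-image inference with no extra pipeline overhead, so that throughput is simply the reciprocal of latency; the helper names are hypothetical.

```python
def fps_from_latency_ms(latency_ms):
    """Frames per second for a given per-image latency in milliseconds."""
    return 1000.0 / latency_ms

def latency_ms_from_fps(fps):
    """Per-image latency in milliseconds for a given frames-per-second rate."""
    return 1000.0 / fps

# 17.2 ms is the inference time quoted for the full SFF-Net configuration.
print(round(fps_from_latency_ms(17.2), 1))   # 58.1, matching the FPS quoted above
print(round(latency_ms_from_fps(58.1), 1))   # 17.2
```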

Performance comparison on GC10-DET dataset. As shown in Table 6, SFF-Net achieved 72.5% mAP, slightly lower than RDD-YOLO (75.1%) and DsP-YOLO (76.3%), but still superior to YOLOv8n (68.7%) and Cascade R-CNN (66.7%). Specifically, SFF-Net performed exceptionally well on categories with prominent features (e.g., cg and wf), achieving APs of 99.1% and 91.6%, respectively, validating its effectiveness in detecting defects with clear features. This strong performance can be attributed to the SDCN module’s adaptive feature extraction capability, which optimizes feature representation during detection and significantly enhances detection accuracy.

For defect categories with complex structures (e.g., os and cr), SFF-Net achieved APs of 70.1% and 79.9%, significantly outperforming RT-DETR-R18 (59.6% and 35.2%) and YOLOv8n (56.6% and 42.5%). This demonstrates SFF-Net’s strong adaptability in handling defect detection tasks with complex backgrounds and diverse morphologies. However, for extremely small or low-contrast defects (e.g., in and rp), SFF-Net achieved APs of 30.5% and 42.4%, showing mixed results: while it outperforms Cascade R-CNN and YOLOv8n on the rp category, it lags behind the specialized DsP-YOLO in the same category (42.4% vs. 79.9%). This limitation suggests that SFF-Net’s supervised mechanism still requires further optimization to improve its ability to capture fine details in extreme scenarios. In terms of computational efficiency, SFF-Net achieved an inference speed of 56.0 FPS, which, although slower than YOLOv8n (208.3 FPS), still maintains a good balance between accuracy and efficiency. Compared to RDD-YOLO and DsP-YOLO, SFF-Net demonstrates greater advantages in detecting complex defect categories, while also achieving competitive inference speed.

Visualization analysis. Figure 8 and Figure 9 show detection result comparisons between SFF-Net and other algorithms on the NEU-DET and GC10-DET datasets. Results demonstrate SFF-Net’s stable detection capability on both datasets. For defects with prominent features, the model accurately localizes and classifies them, reliably detecting even low-contrast targets. For defects with complex backgrounds, while there is room for improvement in localization precision, basic detection requirements are met. This performance characteristic mainly benefits from the synergistic effect of SCN and SRPN, enabling the model to focus on complete defect regions while effectively reducing interference from redundant background regions and extracting more accurate target features. The introduction of SDCN enhanced model adaptability to various defect morphologies. These results validate that supervised network design is suitable for strip steel defect detection scenarios with relatively uniform backgrounds but diverse defect features, ensuring effective feature extraction while improving detection accuracy.

4.6. Analysis of Failure Cases

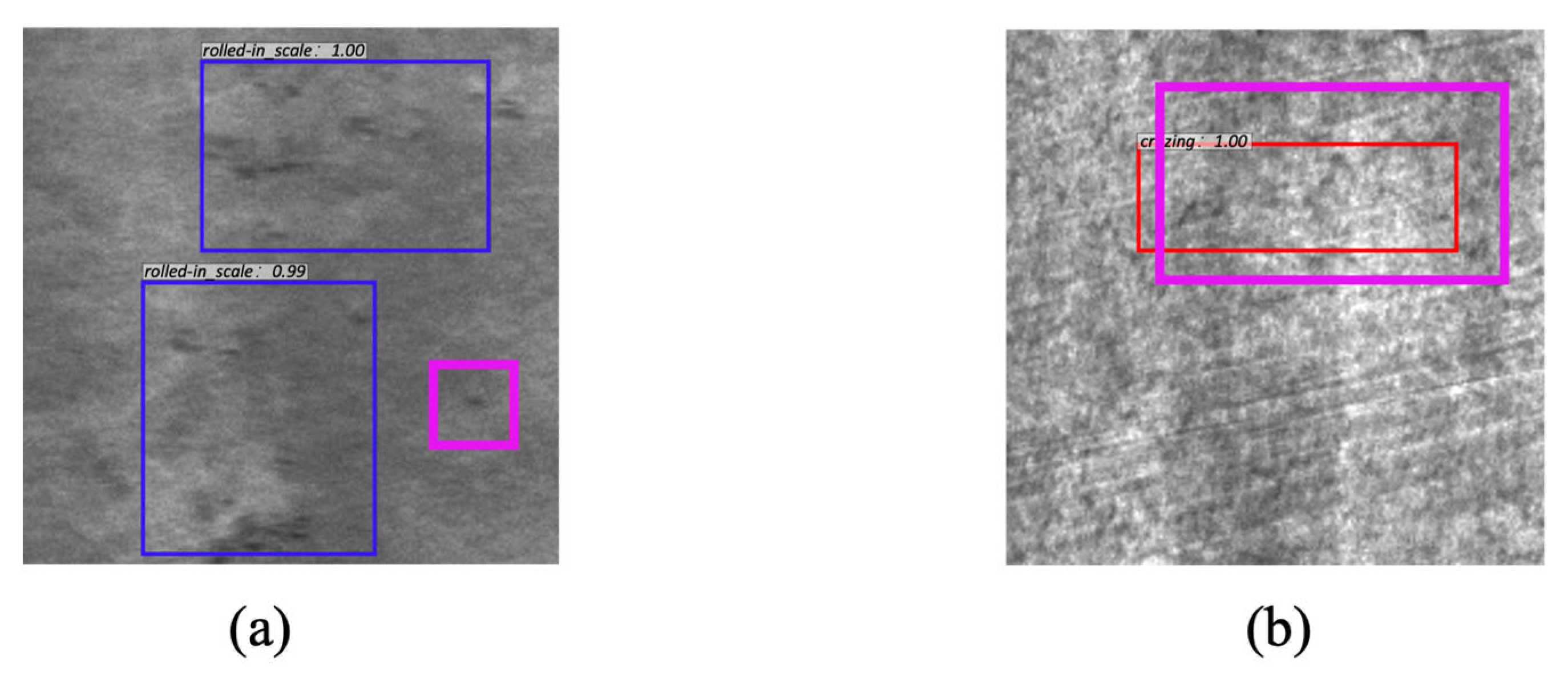

Although SFF-Net significantly improves detection performance for most defect categories, its performance on small defects and low-contrast defects (e.g., Cr and Rs in the NEU-DET dataset, and in and rp in the GC10-DET dataset) is relatively weaker. Specific failure cases are illustrated in Figure 10, where the pink bounding boxes indicate the annotated bounding boxes. The limitations of SFF-Net are discussed in the following paragraphs.

First, for small-sized defects, consecutive downsampling operations excessively weaken their features on deeper feature maps, ultimately resulting in missed detections. For instance, as shown in Figure 10a, the isolated defects located far from the defect clusters are difficult for the model to distinguish from the background due to their small size and blurred boundaries, resulting in missed detections. In the failure cases, the long axis of the defects is often less than 10 pixels, a scale that is easily overlooked by detection networks. Second, for low-contrast defects, their boundary features are inherently weak and are further diluted during the convolution and downsampling processes, making it challenging for the model to extract sufficient discriminative features, which results in localization errors or missed detections. As shown in Figure 10b, these defects are difficult for the model to accurately identify due to their extremely low contrast with the background.
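The effect of downsampling on a defect whose long axis is about 10 pixels can be made concrete with a short calculation. The strides 4, 8, 16, and 32 used below are an assumption (they are typical of ResNet/FPN-style backbones) and are not taken from the paper; the function name is likewise hypothetical.

```python
def footprint_on_feature_maps(extent_px, strides=(4, 8, 16, 32)):
    """Extent of an object, in feature-map cells, at each downsampling stride."""
    return {stride: extent_px / stride for stride in strides}

# A defect whose long axis is 10 pixels in the input image.
footprint = footprint_on_feature_maps(10)
for stride, cells in footprint.items():
    print(f"stride {stride:>2}: {cells:.4f} cells")
```

Under these assumed strides, the defect spans 2.5 cells at stride 4 but less than one cell at strides 16 and 32, which is consistent with the observation that its features are largely lost on deeper feature maps.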

Furthermore, while the SCN and SDCN modules in SFF-Net restrict convolution operations to supervised regions, enabling the model to focus more on feature extraction in target areas, this supervision mechanism does not actively enhance the weak signals of small or low-contrast defects. Consequently, the model’s perception capability for these types of defects remains insufficient.

5. Conclusions

This paper proposes a detection network, SFF-Net, based on supervised feature extraction to address challenges in strip steel surface defect detection, such as sparse target distribution, large uniform background regions leading to redundant computations, and low learning efficiency. The network incorporates the Supervised Convolutional Network (SCN), which confines convolution operations to supervised regions, reducing computational redundancy and improving the model’s learning efficiency. This design also enables the model to focus on defect-related areas. The Supervised Deformable Convolutional Layer (SDCN) is designed to better handle irregularly shaped defects by adaptively adjusting sampling positions within supervised regions, enhancing the model’s ability to capture complex and diverse defect features while maintaining computational efficiency. Finally, the Supervised Region Proposal Strategy (SRPS) optimizes the sample allocation process to ensure high-quality candidate regions.

Experiments conducted on the NEU-DET dataset demonstrate that SFF-Net achieves competitive performance in defect detection while effectively reducing computational overhead. Compared to the baseline, SFF-Net improves the mAP by 5.9% and reduces FLOPs by 19.5%, highlighting its improvements in learning efficiency and accuracy. To evaluate the generalization capability of SFF-Net in strip steel surface defect detection, additional experiments are conducted on the GC10-DET dataset, where SFF-Net exhibits consistent performance, particularly for defects with prominent features. Therefore, SFF-Net provides an effective solution for strip steel surface defect detection.

Despite its advantages, SFF-Net shows weaker performance on small and low-contrast defects, as observed in the Cr and Rs categories of the NEU-DET dataset and the in and rp categories of the GC10-DET dataset. This limitation arises from the dilution of small-scale and low-contrast features in deep feature maps and the inability of the SCN module to actively enhance weak signals. To address these limitations, future research will explore the integration of attention mechanisms or feature enhancement techniques that incorporate contextual information. These methods aim to help the model distinguish low-contrast defects and amplify weak signals, thereby improving the accuracy of detecting small and low-contrast defects.