Neural Network-Based Atlas Enhancement in MPEG Immersive Video

Abstract

1. Introduction

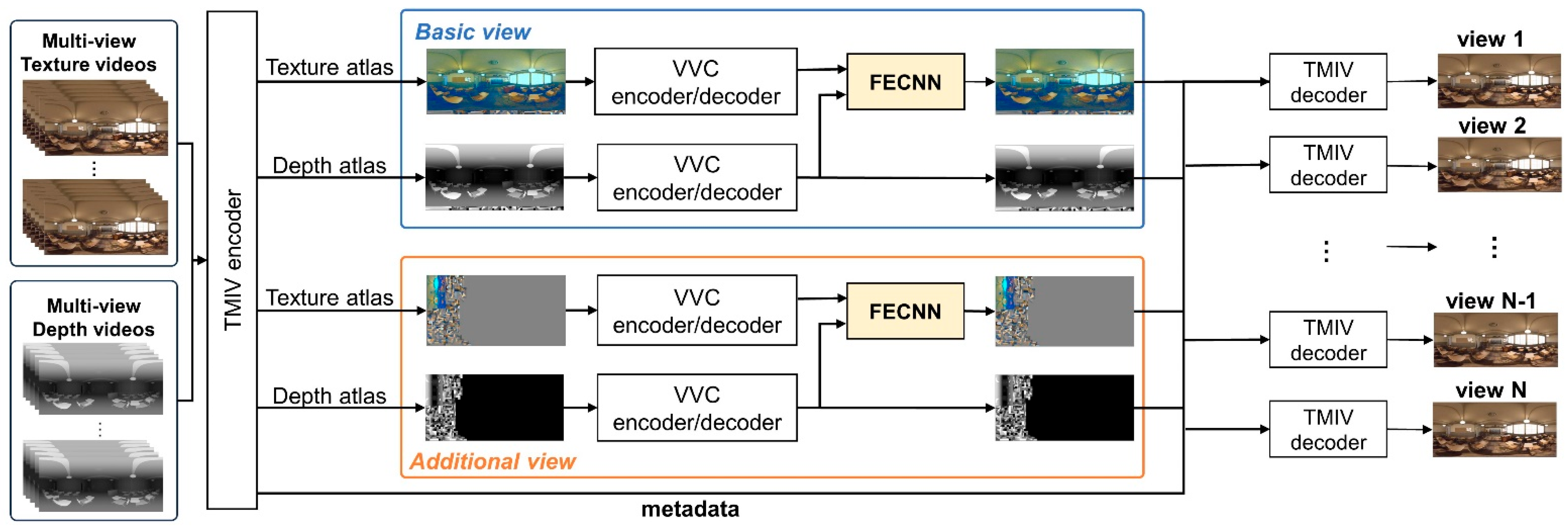

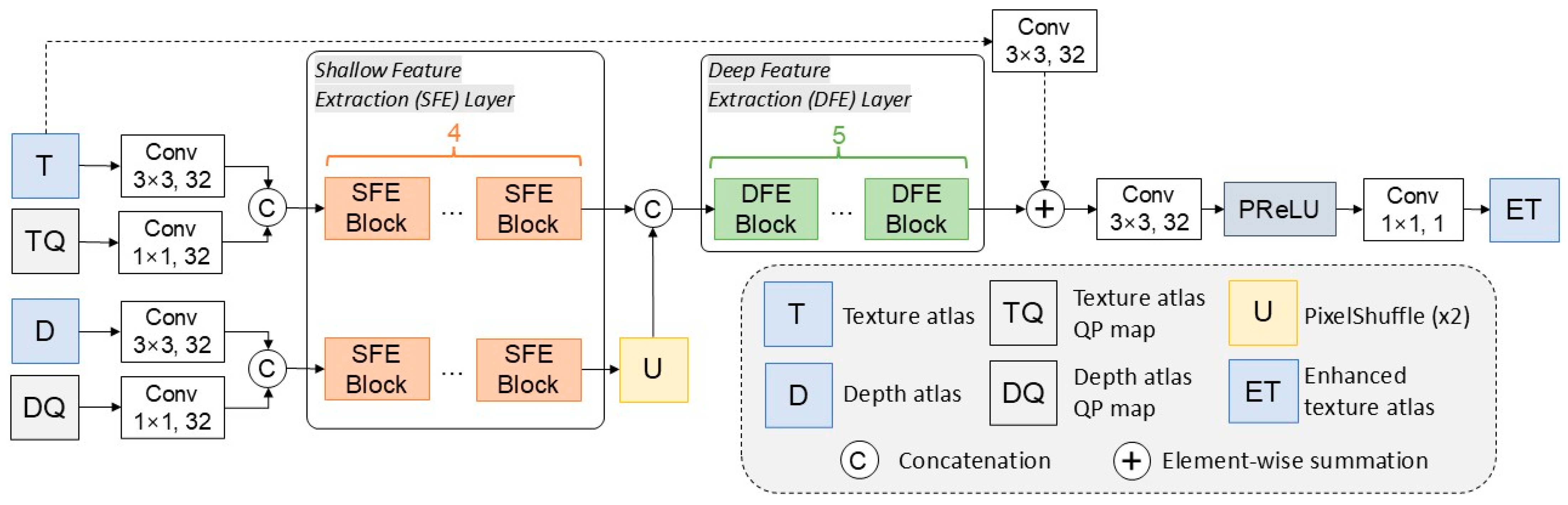

- The proposed FECNN newly takes the depth atlas and QP information as inputs to improve both coding performance and visual quality. To the best of our knowledge, this is the first study to use such a neural network to enhance the atlas quality of MIV.

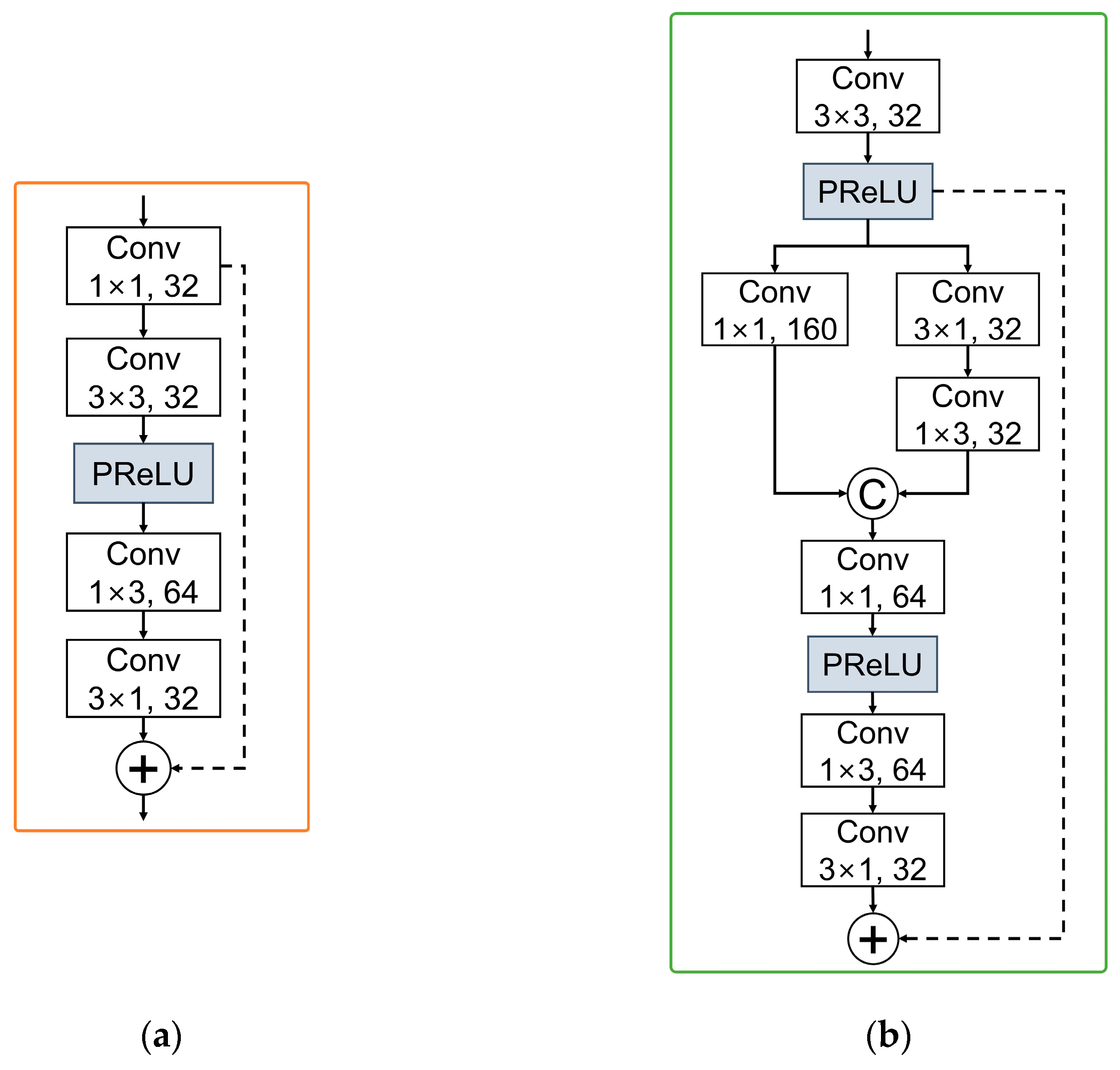

- To develop FECNN, we designed SFE and DFE blocks that improve the visual quality of the texture atlas relative to the existing TMIV. In particular, the network provides noticeable visual improvements around objects and patch boundary lines in the atlas videos.

- The proposed FECNN achieved average BDBR changes of −4.12% and −6.96% (i.e., bitrate savings) in the basic and additional views, respectively.
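The first contribution describes feeding the depth atlas and QP information to the network alongside the texture atlas. As a rough illustration only, the sketch below stacks the three sources into one multi-channel input. The normalisation by 63 (the upper QP bound in VVC), the constant QP plane, the 10-bit sample depth, the requirement that depth already matches the texture resolution, and the function name `build_network_input` are all assumptions made for this example; the text does not state how FECNN actually arranges its inputs.

```python
MAX_QP = 63  # assumption: VVC upper QP bound, used only for normalisation


def normalise_plane(plane, max_value):
    """Scale integer samples of a 2-D plane to the range [0, 1]."""
    return [[sample / max_value for sample in row] for row in plane]


def qp_plane(qp, height, width):
    """Constant plane carrying the normalised QP (hypothetical encoding)."""
    value = qp / MAX_QP
    return [[value] * width for _ in range(height)]


def build_network_input(texture_y, depth, qp, bit_depth=10):
    """Stack luma texture, depth, and QP planes as channels (C x H x W).

    Assumes the depth plane has already been resampled to the texture size.
    """
    height, width = len(texture_y), len(texture_y[0])
    if len(depth) != height or len(depth[0]) != width:
        raise ValueError("texture and depth planes must share one size")
    max_sample = (1 << bit_depth) - 1
    return [
        normalise_plane(texture_y, max_sample),
        normalise_plane(depth, max_sample),
        qp_plane(qp, height, width),
    ]
```

Carrying the QP as a full plane is one common way to let a single convolutional model adapt to several rate points; whether FECNN does this or conditions on QP differently is not specified here.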

2. Related Works

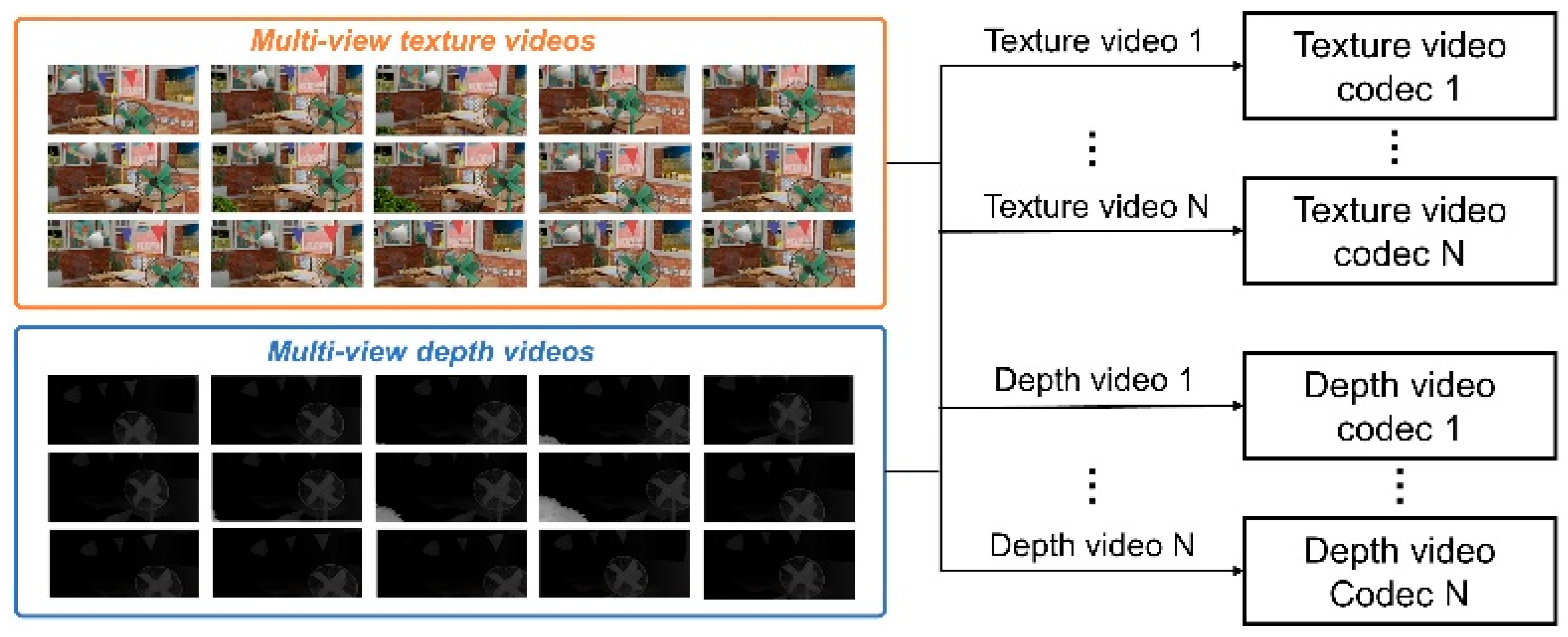

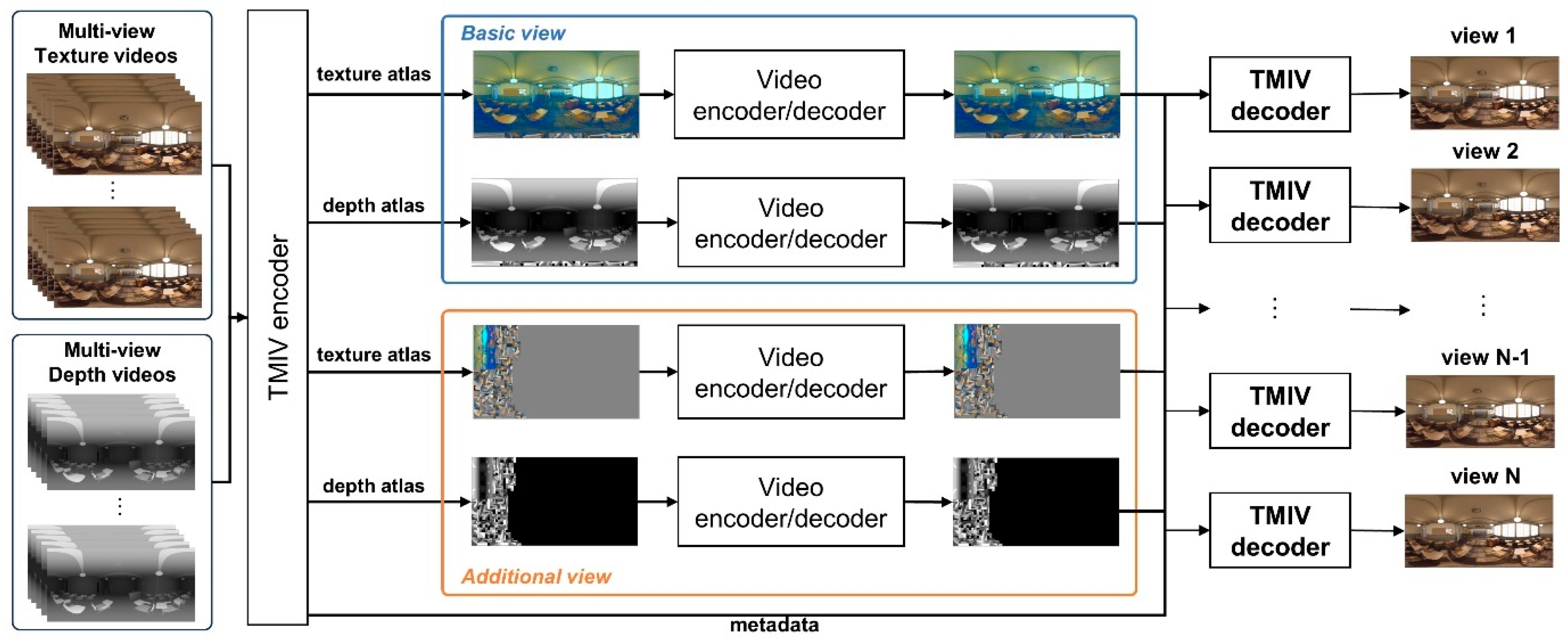

2.1. Previous Coding Methods for Immersive Video

2.2. Artifact Reduction of Video Coding

3. Proposed Method

3.1. Network Architecture

3.2. Network Training

4. Experimental Results

4.1. Experimental Environments

4.2. Performance Measurements

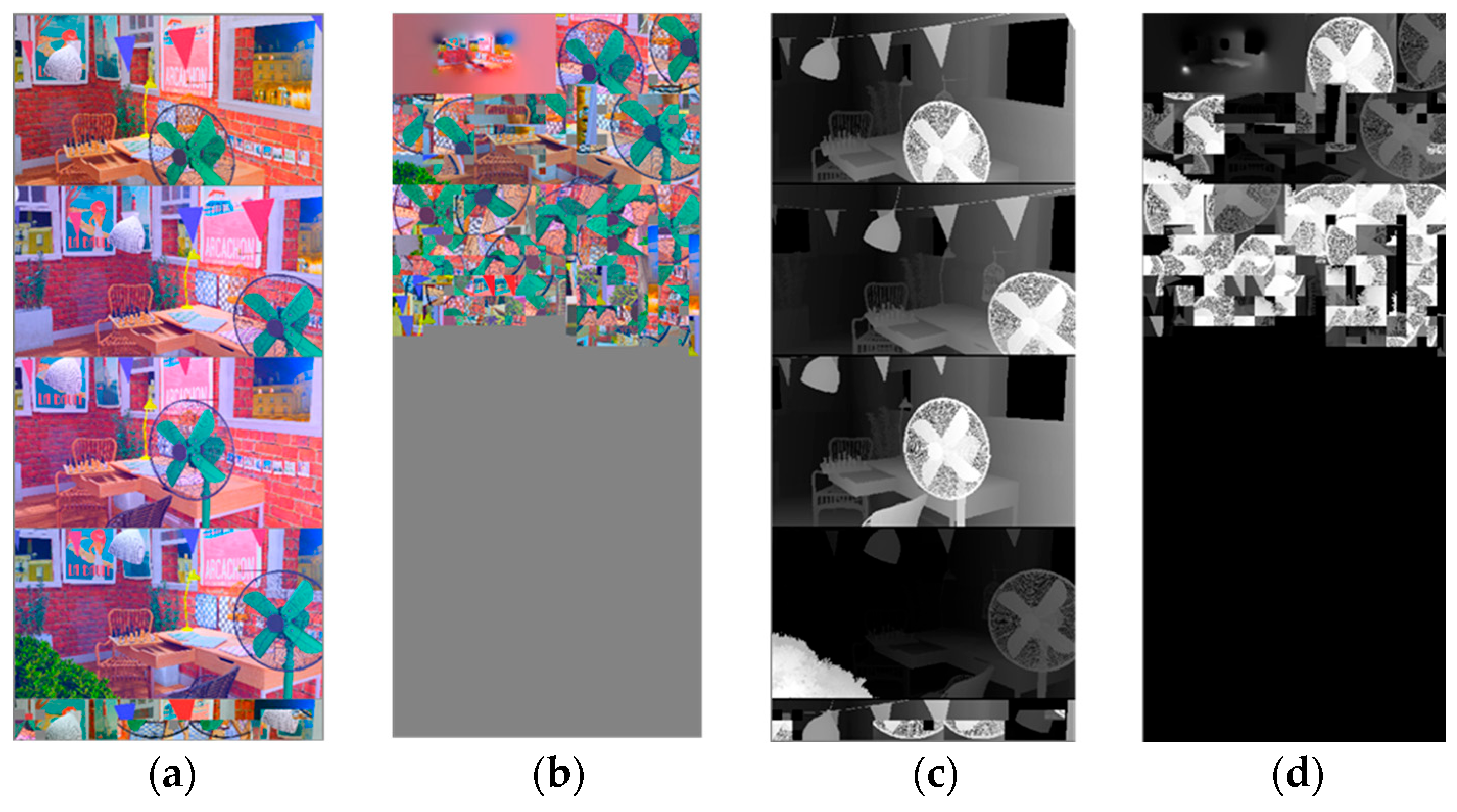

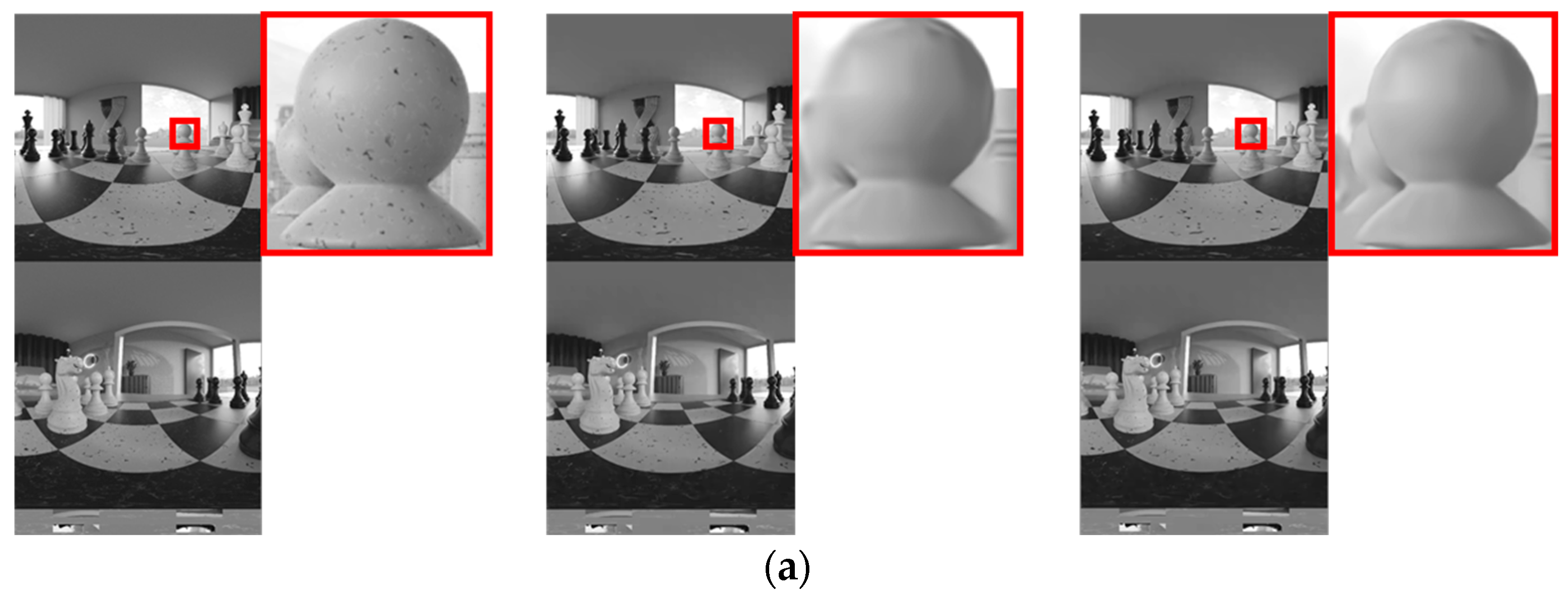

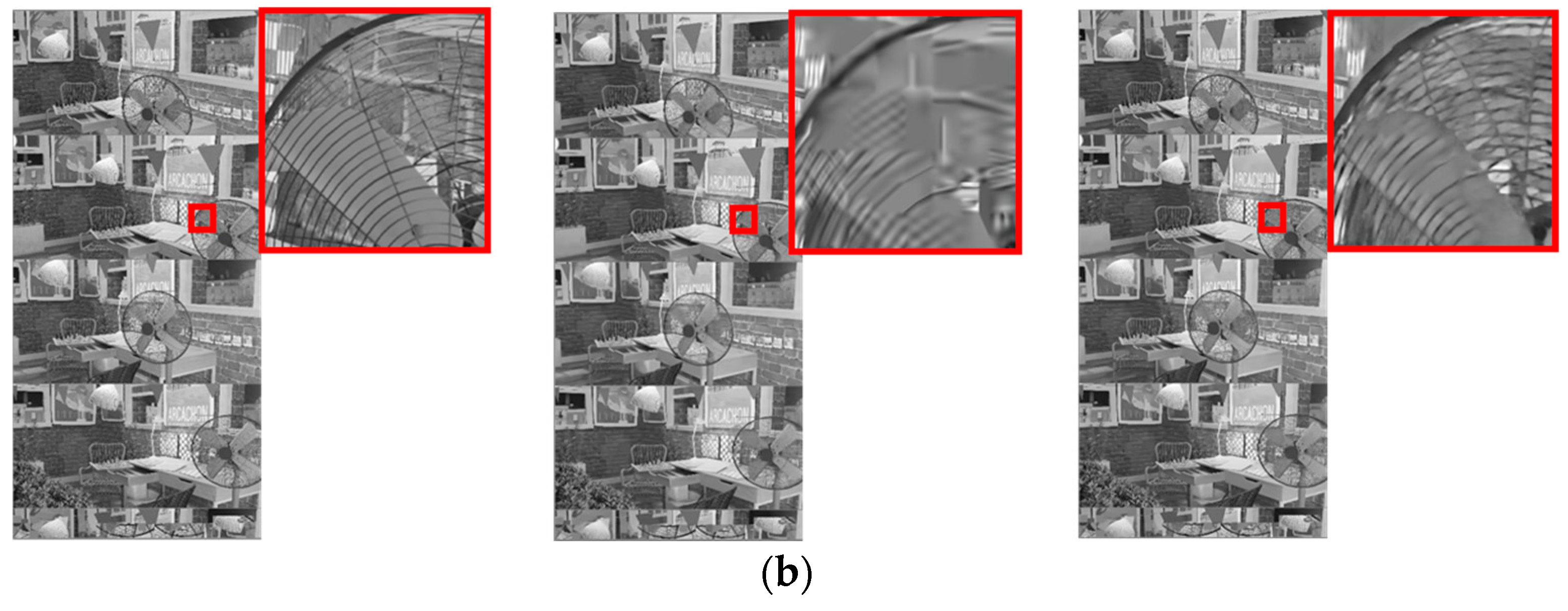

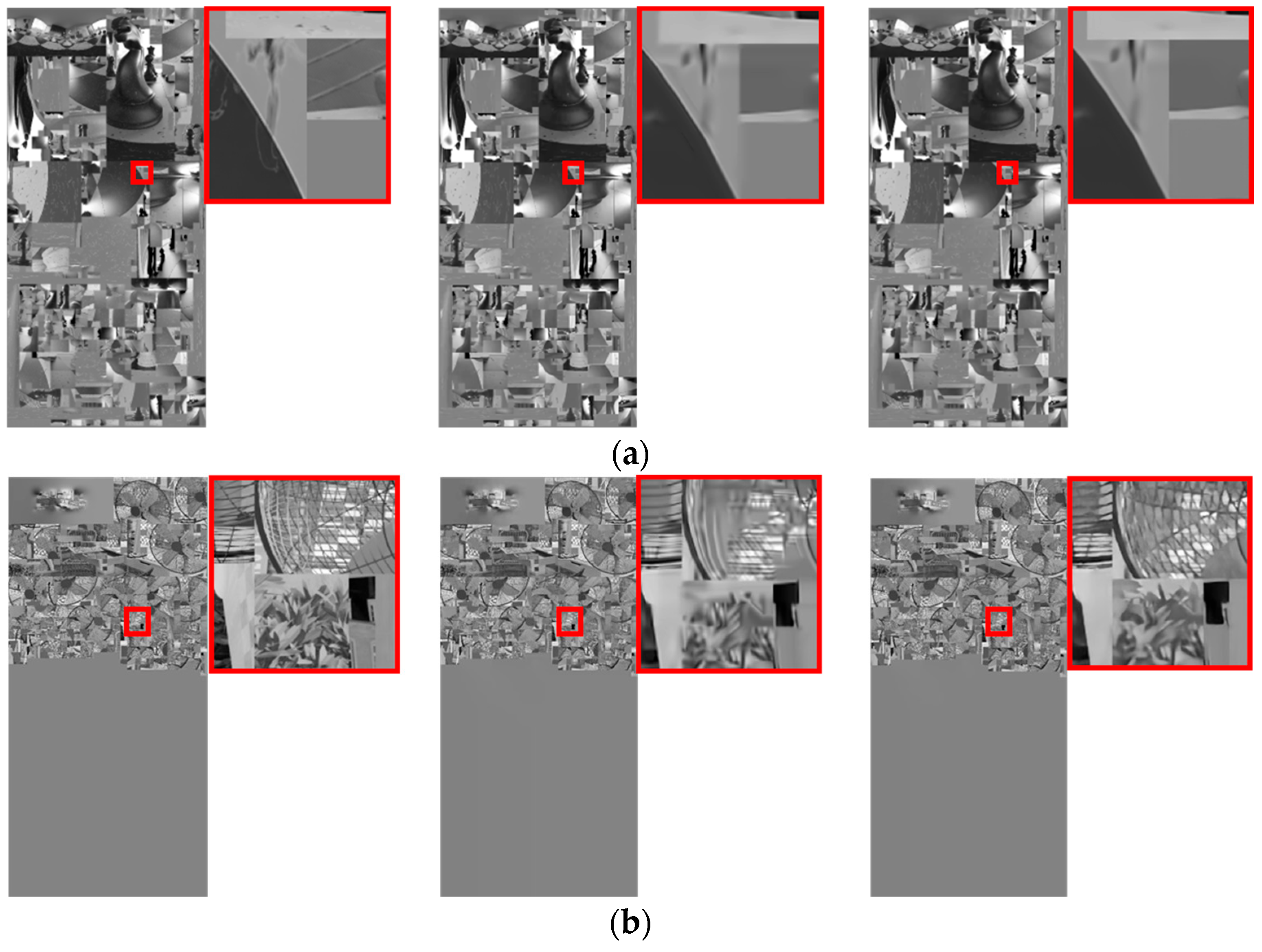

4.3. Visual Comparisons

4.4. Ablation Studies

- Performance analysis according to the use of chroma components as an input

- Determination of a suitable up-sampling method

- Optimal number of SFE and DFE blocks
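The up-sampling ablation compares de-convolution with pixel shuffle. For readers unfamiliar with the latter, the sketch below shows the sub-pixel rearrangement in plain Python: a tensor of shape (C·r², H, W) is rearranged into (C, H·r, W·r) without any arithmetic on the sample values. The channel ordering follows the convention used by PyTorch's `PixelShuffle`; the function name `pixel_shuffle` and the nested-list representation are choices made for this example, not details taken from the paper.

```python
def pixel_shuffle(tensor, r):
    """Rearrange a (C*r*r, H, W) nested list into (C, H*r, W*r)."""
    channels_in = len(tensor)
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    channels_out = channels_in // (r * r)
    height, width = len(tensor[0]), len(tensor[0][0])
    out = [
        [[0.0] * (width * r) for _ in range(height * r)]
        for _ in range(channels_out)
    ]
    for c in range(channels_out):
        for h in range(height):
            for w in range(width):
                for i in range(r):
                    for j in range(r):
                        # Each group of r*r input channels fills one
                        # r x r block of the output channel.
                        src = c * r * r + i * r + j
                        out[c][h * r + i][w * r + j] = tensor[src][h][w]
    return out
```

Because the operation only moves samples, all learnable work happens in the convolution that precedes it, which is one reason it is often considered as an alternative to a transposed convolution.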

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Boyce, J.M.; Doré, R.; Dziembowski, A.; Fleureau, J.; Jung, J.; Kroon, B.; Salahieh, B.; Vadakital, V.K.M.; Yu, L. MPEG immersive video coding standard. Proc. IEEE 2021, 109, 1521–1536.

2. Dziembowski, A.; Lee, G. Test model 17 for MPEG immersive video. ISO/IEC JTC 1/SC 29/WG 04, Document N0376. In Proceedings of the 147th MPEG Meeting, Geneva, Switzerland, 15–19 July 2023.

3. Sullivan, G.J.; Ohm, J.-R.; Han, W.-J.; Wiegand, T. Overview of the HEVC Standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

4. Bross, B.; Wang, Y.-K.; Ye, Y.; Liu, S.; Chen, J.; Sullivan, G.J.; Ohm, J.-R. Overview of the VVC standard and its applications. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 3736–3764.

5. Jeong, J.; Lee, S.; Ryu, E. Delta QP Allocation for MPEG Immersive Video. In Proceedings of the 13th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 19–21 October 2022; pp. 568–573.

6. Milovanovic, M.; Henry, F.; Cagnazzo, M. Depth patch Selection for Decoder-Side Depth Estimation in MPEG Immersive Video. In Proceedings of the Picture Coding Symposium (PCS), San Jose, CA, USA, 7–9 December 2022; pp. 343–347.

7. Lee, S.; Jeong, J.; Ryu, E. Group-Based Adaptive Rendering System for 6DoF Immersive Video Streaming. IEEE Access 2022, 10, 102691–102700.

8. Garus, P.; Henry, F.; Maugey, T.; Guillemot, C. Motion Compensation-based Low-complexity Decoder Side Depth Estimation for MPEG Immersive Video. In Proceedings of the 24th International Workshop on Multimedia Signal Processing (MMSP), Shanghai, China, 26–28 September 2022; pp. 1–6.

9. Jeong, J.; Lee, S.; Ryu, E. VVC Subpicture-Based Frame Packing for MPEG Immersive Video. IEEE Access 2022, 10, 103781–103792.

10. Mieloch, D.; Dziembowski, A.; Kloska, D.; Szydelko, B.; Jeong, J.; Lee, G. A New Approach to Decoder-Side Depth Estimation in Immersive Video Transmission. IEEE Trans. Broadcast. 2023, 69, 951–965.

11. Lim, S.; Kim, H.; Kim, Y. Adaptive Patch-Wise Depth Range Linear Scaling Method for MPEG Immersive Video Coding. IEEE Access 2023, 11, 133440–133450.

12. Dziembowski, A.; Mieloch, D.; Jeong, J.; Lee, G. Immersive Video Postprocessing for Efficient Video Coding. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 4349–4361.

13. Lee, Y.; Oh, K.; Lee, G.; Oh, B. High-Bit-Depth Geometry Representation and Compression MPEG Immersive Video System. IEEE Access 2024, 12, 189064–189072.

14. Oh, J.; Li, X.; Oh, K.; Lee, G.; Jang, E. Efficient Atlas Coding Strategy using cropping for Object-based MPEG Immersive Video. In Proceedings of the IEEE International Conference on Advanced Communications Technology (ICACT), Pyeong Chang, Republic of Korea, 16–19 February 2025; pp. 253–260.

15. Dai, Y.; Liu, D.; Wu, F. A Convolutional Neural Network Approach for Post-processing in HEVC Intra Coding. In Proceedings of the Multimedia Modeling (MMM), Reykjavik, Iceland, 4–6 January 2017; pp. 28–39.

16. Yu, L.; Chang, W.; Liu, Q.; Gabbouj, M. High-frequency guided CNN for video compression artifacts reduction. In Proceedings of the IEEE International Conference on Visual Communications and Image Processing (VCIP), Suzhou, China, 13–16 December 2022; pp. 1–5.

17. Hoang, T.M.; Zhou, J. B-DRRN: A Block Information Constrained Deep Recursive Residual Network for Video Compression Artifacts Reduction. In Proceedings of the Picture Coding Symposium (PCS), Ningbo, China, 12–15 November 2019; pp. 1–5.

18. Wang, M.; Wan, S.; Gong, H.; Ma, M. Attention-Based Dual-Scale CNN In-Loop Filter for Versatile Video Coding. IEEE Access 2019, 7, 145214–145226.

19. Zhao, H.; He, M.; Teng, G.; Shang, X.; Wang, G.; Feng, Y. A CNN-Based Post-Processing Algorithm for Video Coding Efficiency Improvement. IEEE Access 2019, 8, 920–929.

20. Das, T.; Choi, K.; Choi, J. High Quality Video Frames From VVC: A Deep Neural Network Approach. IEEE Access 2023, 11, 54254–54264.

21. Zhang, H.; Jung, C.; Zou, D.; Li, M. WCDANN: A Lightweight CNN Post-Processing Filter for VVC-based Video Compression. IEEE Access 2023, 11, 83400–83413.

22. Wang, H.; Chen, J.; Reuze, K.; Kotra, M.A.; Karczewicz, M. EE1-related: Neural Network-based in-loop filter with constrained computational complexity, JVET of ITU-T SG 16 WP 3 and ISO/IEC JTC 1/SC 29, document JVET-W0131. In Proceedings of the 23rd JVET Meeting, Teleconference, 7–16 July 2021.

23. Santamaria, M.; Yang, R.; Cricri, F.; Lainema, J.; Zhang, H.; Youvalari, G.R.; Hannuksela, M.M. EE1-1.11: Content-adaptive post-filter, JVET of ITU-T SG 16 WP 3 and ISO/IEC JTC 1/SC 29, document JVET-AC0055. In Proceedings of the 29th JVET Meeting, Teleconference, 11–20 January 2023.

24. Dziembowski, A.; Kroon, B.; Jung, J. Common test conditions for MPEG immersive video, ISO/IEC JTC 1/SC 29/WG 04, document N0372. In Proceedings of the 143rd MPEG Meeting, Geneva, Switzerland, 17–21 July 2023.

25. Shi, W.; Caballero, J.; Huszar, F.; Totz, J.; Aitken, A.-P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. Kroon, B. 3DoF+ Test Sequence ClassroomVideo. ISO/IEC JTC 1/SC 29/WG 11, document M42415. In Proceedings of the 122nd MPEG Meeting, San Diego, CA, USA, 16–20 April 2018.

28. Doré, R. Technicolor 3DoF+ Test Materials. ISO/IEC JTC 1/SC 29/WG 11, document M42349. In Proceedings of the 122nd MPEG Meeting, San Diego, CA, USA, 16–20 April 2018.

29. Ilola, L.; Vadakital, V.K.M.; Roimela, K.; Keränen, J. New Test Content for Immersive Video-Nokia Chess. ISO/IEC JTC 1/SC 29/WG 11, document M50787. In Proceedings of the 128th MPEG Meeting, Geneva, Switzerland, 7–11 October 2019.

30. Schenini, J.; Ilola, L.; Vadakital, V.K.M. A new computer graphics scene, Guitarist, suitable for MIV edition-2. ISO/IEC JTC 1/SC 29/WG 04, document M58080. In Proceedings of the 136th MPEG Meeting, Teleconference, 11–15 October 2021.

31. Boissonade, P.; Jung, J. Proposition of New Sequences for Windowed-6DoF Experiments on Compression, Synthesis and Depth Estimation. ISO/IEC JTC 1/SC 29/WG 11, document M43318. In Proceedings of the 123rd MPEG Meeting, Ljubljana, Slovenia, 16–20 July 2018.

32. Doré, R.; Briand, G.; Thudor, F. New Cadillac Content Proposal for Advanced MIV v2 Investigations. ISO/IEC JTC 1/SC 29/WG 04, document M57186. In Proceedings of the 135th MPEG Meeting, Teleconference, 12–16 July 2021.

33. Doré, R.; Briand, G. Interdigital Mirror Content Proposal for Advanced MIV Investigations on Reflection. ISO/IEC JTC 1/SC 29/WG 11, document M55710. In Proceedings of the 133rd MPEG Meeting, Teleconference, 11–15 January 2021.

34. Doré, R.; Briand, G.; Thudor, F. Interdigital Fan Content Proposal for MIV. ISO/IEC JTC 1/SC 29/WG 11, document M54732. In Proceedings of the 131st MPEG Meeting, Teleconference, 29 June–3 July 2020.

35. Doré, R.; Briand, G.; Thudor, F. Interdigital Group Content Proposal. ISO/IEC JTC 1/SC 29/WG 11, document M54731. In Proceedings of the 131st MPEG Meeting, Teleconference, 29 June–3 July 2020.

36. Thudor, F.; Doré, R. Dancing sequence for verification tests, ISO/IEC JTC 1/SC 29/WG 04, document M57751. In Proceedings of the 136th MPEG Meeting, Teleconference, 11–15 October 2021.

37. Doyen, D.; Langlois, T.; Vandame, B.; Babon, F.; Boisson, G.; Sabater, N.; Gendrot, R.; Schubert, A. Light Field Content from 16-camera Rig. ISO/IEC JTC 1/SC 29/WG 11, document M40010. In Proceedings of the 117th MPEG Meeting, Geneva, Switzerland, 16–20 January 2017.

38. Tapie, T.; Schubert, A.; Gendrot, R.; Briand, G.; Thudor, F.; Doré, R. Breakfast new natural content proposal for MIV. ISO/IEC JTC 1/SC 29/WG 04, document M56730. In Proceedings of the 134th MPEG Meeting, Teleconference, 26–30 April 2021.

39. Tapie, T.; Schubert, A.; Gendrot, R.; Briand, G.; Thudor, F.; Doré, R. Barn new natural content proposal for MIV. ISO/IEC JTC 1/SC 29/WG 04, document M56632. In Proceedings of the 134th MPEG Meeting, Teleconference, 26–30 April 2021.

40. Salahieh, B.; Marvar, B.; Nentedem, M.-M.; Kumar, A.; Popovic, V.; Seshadrinathan, K.; Nestares, O.; Boyce, J. Kermit Test Sequence for Windowed 6DoF Activities. ISO/IEC JTC 1/SC 29/WG 11, document M43748. In Proceedings of the 123rd MPEG Meeting, Ljubljana, Slovenia, 16–20 July 2018.

41. Mieloch, D.; Dziembowski, A.; Domanski, M. Natural Outdoor Test Sequences. ISO/IEC JTC 1/SC 29/WG 11, document M51598. In Proceedings of the 129th MPEG Meeting, Brussels, Belgium, 13–17 January 2020.

42. Domanski, M.; Dziembowski, A.; Grzelka, A.; Mieloch, D.; Stankiewicz, O.; Wegner, K. Multiview Test Video Sequences for Free Navigation Exploration Obtained using Pairs of Cameras. ISO/IEC JTC 1/SC 29/WG 11, document M38247. In Proceedings of the 115th MPEG Meeting, Geneva, Switzerland, 30 May–3 June 2016.

43. Bai, Y.; Li, S.; Yu, L. Test results of CBAbasketball sequence and challenges. ISO/IEC JTC 1/SC 29/WG 04, document M59558. In Proceedings of the 138th MPEG Meeting, Teleconference, 25–29 April 2022.

44. Mieloch, D.; Dziembowski, A.; Szydelko, B.; Kloska, D.; Grzelka, A.; Stankowski, J.; Domanski, M.; Lee, G.; Jeong, J. New natural content-MartialArts. ISO/IEC JTC 1/SC 29/WG 04, document M61949. In Proceedings of the 141st MPEG Meeting, Teleconference, 16–20 January 2023.

45. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference for Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015; pp. 1–15.

46. Bjontegaard, G. Calculation of average PSNR differences between RD curves. ITU-T SG 16 Q6 VCEG, document VCEG-M33. In Proceedings of the 13th VCEG Meeting, Austin, TX, USA, 2–4 April 2001.

47. Dziembowski, A.; Mieloch, D.; Stankowski, J.; Grzelka, A. IV-PSNR—The Objective Quality Metric for Immersive Video Applications. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7575–7591.

| Class | Sequences | Texture QP (RP1) | Texture QP (RP2) | Texture QP (RP3) | Texture QP (RP4) | Depth QP (RP1) | Depth QP (RP2) | Depth QP (RP3) | Depth QP (RP4) |
|---|---|---|---|---|---|---|---|---|---|
| A01 | Classroom Video | 26 | 30 | 38 | 51 | 7 | 10 | 16 | 27 |
| B01 | Museum | 29 | 40 | 47 | 51 | 9 | 18 | 23 | 27 |
| B02 | Chess | 18 | 27 | 35 | 45 | 1 | 7 | 14 | 22 |
| B03 | Guitarist | 22 | 24 | 29 | 39 | 3 | 5 | 9 | 17 |
| C01 | Hijack | 19 | 24 | 34 | 49 | 1 | 5 | 13 | 25 |
| C02 | Cyberpunk | 21 | 24 | 29 | 39 | 3 | 5 | 9 | 17 |
| J01 | Kitchen | 18 | 26 | 33 | 41 | 1 | 7 | 12 | 19 |
| J02 | Cadillac | 22 | 31 | 41 | 51 | 3 | 11 | 19 | 27 |
| J03 | Mirror | 26 | 33 | 42 | 51 | 7 | 12 | 19 | 27 |
| J04 | Fan | 32 | 38 | 45 | 51 | 11 | 16 | 22 | 27 |
| W01 | Group | 26 | 31 | 37 | 46 | 7 | 11 | 15 | 23 |
| W02 | Dancing | 20 | 24 | 28 | 40 | 2 | 5 | 8 | 18 |
| D01 | Painter | 24 | 32 | 43 | 51 | 5 | 11 | 20 | 27 |
| D02 | Breakfast | 25 | 30 | 35 | 43 | 6 | 10 | 14 | 20 |
| D03 | Barn | 25 | 30 | 35 | 42 | 6 | 10 | 14 | 19 |
| E01 | Frog | 29 | 34 | 40 | 46 | 9 | 13 | 18 | 23 |
| E02 | Carpark | 23 | 28 | 37 | 47 | 4 | 8 | 15 | 23 |
| E03 | Street | 21 | 25 | 32 | 41 | 3 | 6 | 11 | 19 |
| L01 | Fencing | 23 | 28 | 39 | 51 | 4 | 8 | 17 | 27 |
| L02 | CBABasketball | 24 | 27 | 31 | 43 | 5 | 7 | 11 | 20 |
| L03 | MartialArts | 24 | 27 | 31 | 43 | 5 | 7 | 11 | 20 |

| Content Categories | Class | Sequences | Resolution | Number of Source Views |
|---|---|---|---|---|
| Computer generated | A | Classroom Video | 4096 × 2048 | 15 |
| Computer generated | B | Museum | 2048 × 2048 | 24 |
| Computer generated | B | Chess | 2048 × 2048 | 10 |
| Computer generated | B | Guitarist | 2048 × 2048 | 46 |
| Computer generated | C | Hijack | 4096 × 2048 | 10 |
| Computer generated | C | Cyberpunk | 2048 × 2048 | 10 |
| Computer generated | J | Kitchen | 1920 × 1080 | 25 |
| Computer generated | J | Cadillac | 1920 × 1080 | 15 |
| Computer generated | J | Mirror | 1920 × 1080 | 15 |
| Computer generated | J | Fan | 1920 × 1080 | 15 |
| Computer generated | W | Group | 1920 × 1080 | 21 |
| Computer generated | W | Dancing | 1920 × 1080 | 24 |
| Natural | D | Painter | 2048 × 2048 | 16 |
| Natural | D | Breakfast | 1920 × 1080 | 15 |
| Natural | D | Barn | 1920 × 1080 | 15 |
| Natural | E | Frog | 1920 × 1080 | 13 |
| Natural | E | Carpark | 1920 × 1088 | 9 |
| Natural | E | Street | 1920 × 1088 | 9 |
| Natural | L | Fencing | 1920 × 1088 | 10 |
| Natural | L | CBABasketball | 2048 × 1088 | 34 |
| Natural | L | MartialArts | 1920 × 1080 | 15 |

| Hyper-Parameters | Options |
|---|---|
| Loss function | Mean Squared Error (MSE) |
| Optimizer | Adam |
| Number of epochs | 50 |
| Batch size | 32 |
| Learning rate | |
| Activation function | PReLU |
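The table above lists MSE as the loss function and PReLU as the activation. As a reminder of what these two terms compute, a minimal plain-Python sketch follows. The negative-side slope of 0.25 is PyTorch's default initial value and is only an assumption here; during training it would be a learned parameter. The function names are chosen for this example.

```python
def mse(prediction, target):
    """Mean squared error between two equally long sample sequences."""
    if len(prediction) != len(target) or not prediction:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - t) ** 2 for p, t in zip(prediction, target)]
    return sum(squared) / len(squared)


def prelu(x, slope=0.25):
    """Parametric ReLU: identity for x >= 0, scaled by `slope` otherwise."""
    return x if x >= 0 else slope * x
```

Unlike a plain ReLU, PReLU keeps a non-zero gradient for negative inputs, so negative residual values are attenuated rather than discarded.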

| Software | Version |
|---|---|
| TMIV | 17.0 |
| VVenC | 1.7.0 |
| VVdeC | 1.6.0 |
| PyTorch | 1.14.0 |
| CUDA | 11.6 |

| Class | Sequences | Proposed Method, Basic (BDBR-YUV) | Proposed Method, Additional (BDBR-YUV) | Ref. [20], Basic (BDBR-YUV) | Ref. [20], Additional (BDBR-YUV) | Ref. [22], Basic (BDBR-YUV) | Ref. [22], Additional (BDBR-YUV) |
|---|---|---|---|---|---|---|---|
| B02 | Chess | −2.51% | −4.85% | −1.56% | −3.96% | 1.74% | 0.89% |
| B03 | Guitarist | −1.98% | −3.37% | −0.25% | −2.54% | 0.68% | 0.20% |
| J02 | Cadillac | −3.13% | −7.25% | −1.32% | −4.29% | 0.21% | 0.11% |
| J04 | Fan | −8.88% | −13.19% | −1.25% | −5.40% | −0.83% | −1.02% |
| W01 | Group | −2.15% | −6.74% | −0.12% | −4.31% | −0.55% | −0.46% |
| D01 | Painter | −4.96% | −9.37% | −1.33% | −6.59% | −0.40% | −0.18% |
| E01 | Frog | −4.10% | −4.88% | −2.77% | −4.06% | −0.82% | −0.81% |
| L02 | CBABasketball | −5.24% | −6.01% | −4.36% | −5.20% | 0.07% | −0.19% |
| Average | | −4.12% | −6.96% | −1.62% | −4.54% | 0.01% | −0.18% |
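The BDBR-YUV values above are Bjontegaard delta bit rates. As a reminder of how such a figure is typically obtained from four rate points, the sketch below fits a cubic through (quality, log10 bitrate) pairs for an anchor and a test codec, integrates both over the shared quality interval, and converts the mean log-rate gap to a percentage. It is an illustrative re-implementation under stated assumptions: exactly four RD points per curve with distinct quality values, Newton interpolation in place of a least-squares cubic fit (the two coincide for four points), and hypothetical function names. How the Y, U, and V components are combined into a single YUV figure is not covered.

```python
import math


def newton_coefficients(xs, ys):
    """Divided-difference coefficients of the interpolating polynomial."""
    coeffs = list(ys)
    n = len(xs)
    for level in range(1, n):
        for i in range(n - 1, level - 1, -1):
            coeffs[i] = (coeffs[i] - coeffs[i - 1]) / (xs[i] - xs[i - level])
    return coeffs


def newton_eval(xs, coeffs, x):
    """Evaluate the Newton-form polynomial at x (nested multiplication)."""
    result = coeffs[-1]
    for k in range(len(coeffs) - 2, -1, -1):
        result = result * (x - xs[k]) + coeffs[k]
    return result


def simpson(func, lo, hi):
    """Simpson's rule on one interval; exact for cubic polynomials."""
    mid = 0.5 * (lo + hi)
    return (hi - lo) / 6.0 * (func(lo) + 4.0 * func(mid) + func(hi))


def bd_rate(anchor_rates, anchor_quality, test_rates, test_quality):
    """Average bitrate change (%) of test vs. anchor at equal quality."""
    log_a = [math.log10(r) for r in anchor_rates]
    log_t = [math.log10(r) for r in test_rates]
    coef_a = newton_coefficients(anchor_quality, log_a)
    coef_t = newton_coefficients(test_quality, log_t)
    # Integrate only over the quality range covered by both curves.
    lo = max(min(anchor_quality), min(test_quality))
    hi = min(max(anchor_quality), max(test_quality))
    if hi <= lo:
        raise ValueError("RD curves do not overlap in quality")
    int_a = simpson(lambda q: newton_eval(anchor_quality, coef_a, q), lo, hi)
    int_t = simpson(lambda q: newton_eval(test_quality, coef_t, q), lo, hi)
    avg_log_diff = (int_t - int_a) / (hi - lo)
    return (10.0 ** avg_log_diff - 1.0) * 100.0
```

A negative result means the test codec needs less bitrate than the anchor for the same quality. As a sanity check, a test curve whose rates are uniformly 10% lower than the anchor's at identical quality values yields a BD-rate of approximately −10%.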

| Class | Sequences | Proposed Method, Basic | Proposed Method, Additional | Ref. [20], Basic | Ref. [20], Additional |
|---|---|---|---|---|---|
| B02 | Chess | 0.9744 | 0.9636 | 0.9741 | 0.9628 |
| B03 | Guitarist | 0.9704 | 0.9689 | 0.9699 | 0.9685 |
| J02 | Cadillac | 0.9540 | 0.9421 | 0.9494 | 0.9365 |
| J04 | Fan | 0.8796 | 0.9226 | 0.8683 | 0.9149 |
| W01 | Group | 0.8785 | 0.8829 | 0.8763 | 0.8804 |
| D01 | Painter | 0.9136 | 0.9204 | 0.9084 | 0.9176 |
| E01 | Frog | 0.8674 | 0.8434 | 0.8652 | 0.8415 |
| L02 | CBABasketball | 0.9641 | 0.9587 | 0.9636 | 0.9580 |
| Average | | 0.9252 | 0.9253 | 0.9219 | 0.9225 |

| Methods | Parameters | FLOPs | Inference Time |
|---|---|---|---|
| Proposed Method | 5.01 MB | 31.62 T | 22,861.44 ms |
| Ref. [20] | 8.45 MB | 39.19 T | 14,364.18 ms |
| Ref. [22] | 0.34 MB | 1.54 T | 422.27 ms |

| Class | Sequences | BD-Rate Y-PSNR | BD-Rate IV-PSNR |
|---|---|---|---|
| B02 | Chess | −0.7% | 2.7% |
| B03 | Guitarist | −2.9% | 5.1% |
| J02 | Cadillac | −0.9% | 3.5% |
| J04 | Fan | 3.3% | 2.7% |
| W01 | Group | 0.3% | 1.3% |
| D01 | Painter | −3.2% | 4.4% |
| E01 | Frog | −1.9% | 5.1% |
| L02 | CBABasketball | −1.4% | 2.3% |
| Average | | −0.9% | 3.4% |

| Class | Sequences | w/ Chroma, Basic (BDBR-YUV) | w/ Chroma, Additional (BDBR-YUV) | w/o Chroma, Basic (BDBR-YUV) | w/o Chroma, Additional (BDBR-YUV) |
|---|---|---|---|---|---|
| B02 | Chess | −1.64% | −3.41% | −1.46% | −3.90% |
| B03 | Guitarist | −0.98% | −2.63% | −1.71% | −3.14% |
| J02 | Cadillac | −1.28% | −5.27% | −3.36% | −6.63% |
| J04 | Fan | −6.91% | −12.95% | −8.18% | −12.36% |
| W01 | Group | −1.85% | −5.93% | −1.96% | −6.45% |
| D01 | Painter | −3.19% | −7.82% | −4.71% | −8.47% |
| E01 | Frog | −3.14% | −4.49% | −3.81% | −4.73% |
| L02 | CBABasketball | −4.13% | −4.87% | −4.64% | −5.44% |
| Average | | −2.89% | −5.92% | −3.73% | −6.39% |

| Class | Sequences | De-Convolution, Basic (BDBR-YUV) | De-Convolution, Additional (BDBR-YUV) | Pixel Shuffle, Basic (BDBR-YUV) | Pixel Shuffle, Additional (BDBR-YUV) |
|---|---|---|---|---|---|
| B02 | Chess | −1.46% | −3.90% | −2.01% | −4.19% |
| B03 | Guitarist | −1.71% | −3.14% | −1.64% | −3.00% |
| J02 | Cadillac | −3.36% | −6.63% | −3.05% | −6.56% |
| J04 | Fan | −8.18% | −12.36% | −8.55% | −12.58% |
| W01 | Group | −1.96% | −6.45% | −1.80% | −6.38% |
| D01 | Painter | −4.71% | −8.47% | −4.92% | −8.57% |
| E01 | Frog | −3.81% | −4.73% | −3.81% | −4.63% |
| L02 | CBABasketball | −4.64% | −5.44% | −4.62% | −5.43% |
| Average | | −3.73% | −6.39% | −3.80% | −6.42% |

| Number of SFE Blocks | Number of DFE Blocks | Basic (BDBR-YUV) | Additional (BDBR-YUV) |
|---|---|---|---|
| 3 | 3 | −3.80% | −6.42% |
| 4 | 3 | −3.79% | −6.51% |
| 5 | 3 | −3.93% | −6.67% |
| 3 | 4 | −3.93% | −6.71% |
| 3 | 5 | −3.49% | −6.42% |
| 4 | 4 | −4.05% | −6.78% |
| 4 | 5 | −4.12% | −6.96% |
| 5 | 4 | −3.82% | −6.64% |
| 5 | 5 | −3.75% | −6.56% |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lee, T.; Yun, K.; Cheong, W.-S.; Jun, D. Neural Network-Based Atlas Enhancement in MPEG Immersive Video. Mathematics 2025, 13, 3110. https://doi.org/10.3390/math13193110
