Abstract

This research presents a generative AI mechanism designed to assist artists in finding inspiration and developing ideas during their creative process by leveraging their emotions as a driving force. The proposed iterative inspiration cycle, complete with feedback loops, helps artists digitally capture their creative emotions and use them as a guiding “vision” for creating artwork. Within the mechanism, the “Emotion Vision” images, generated from sketch line drawings and creative emotion prompts, are a medium designed to inspire artists. Experimental results demonstrate a positive inspirational effect, particularly in the creation of ‘Abstract Expressionism’ and ‘Impressionism’ artworks. In addition, we introduce the Emotion Vision Score metric, which quantifies the effectiveness of emotional inspiration. This metric evaluates how well “Emotion Vision” images inspire artists by balancing sketch intentions, creative emotions, and inspirational diversity, thus identifying the most effective images for inspiration. This novel mechanism integrates emotional intelligence into AI for art creation, allowing it to understand and replicate human emotion in its outputs. By enhancing emotional depth and ensuring consistency in generative AI, this research aims to advance digital art creation and contribute to the evolution of artistic expression through generative AI.

MSC: 68T42

1. Introduction

GAI (Generative Artificial Intelligence) is still in its early stages in art creation. Despite its significant rise in popularity across various AI platforms, many challenges affecting AI applications in the art world persist. One major problem is that AI-generated content can inadvertently introduce biases. For example, if the training data contain societal biases or cultural stereotypes, these can surface in AI-generated art, resulting in artworks that unintentionally reveal those biases.

Moreover, current GAI technologies face specific difficulties in producing creative art. The generated content may resemble existing works, some appearing as imitation and others as patchwork. These technologies especially struggle to capture the genuine emotions that human artists pour into their artworks. Instead of conveying an artist’s emotional narrative, the generated pieces may become an incoherent amalgamation of styles or feelings that fails to communicate a single idea. This can create a disconnect between the visual elements and the intended emotional expression, leaving viewers confused about what the artwork presents and means. From passion and affection to broader emotions, the artist’s creative journey needs emotions to engage and propel it. Emotions are not just an addition to the artistic process; they are often the source of inspiration and the primary force that shapes the form, content, and impact of the artwork.

To address these challenges, this work proposes a technological mechanism, “Emotional Vision for Artists’ Inspiration,” which helps artists find inspiration and develop ideas during their creative process. By treating emotions as the driving force, it helps artists capture their creative emotions and use them as guidance, or a vision, for creating masterpieces. The overall process is an iterative inspiration cycle with feedback loops that allow continuous refinement, demonstrating an incremental methodology for crafting artwork.

This approach aims to integrate emotional intelligence into the AI’s creative process, allowing it to understand and replicate the nuances of human emotion in its artwork. By enhancing the emotional depth and consistency of generative AI outputs, we believe this research could significantly advance digital art creation and contribute to the evolving landscape of artistic expression through AI technology.

2. Related Work

After ChatGPT launched in 2022, generative AI (GenAI/GAI) emerged as a significant advancement in AI development. Generative AI emulates aspects of human creativity; image generation in particular has received much attention, especially in artistic creation. GAI analyzes existing data and user input to produce new content, known as AIGC (Artificial Intelligence-Generated Content). The generated content can be used in various ways, such as article illustrations, wallpapers, graphic design, and web design.

Many people are now thinking about generative AI, worrying about it, and wondering whether it will take over the creative work that humans are so proud of. This question also shapes how image-editing companies perceive generative AI. Generative AI has the potential to either replace or assist human artistic creativity, which is an exciting and promising prospect [1]. With recent advances in deep learning, AI-generated art has improved dramatically: it can now blend photos into new images or transform pictures into different content. Moreover, some museums have begun to collect AI-created artwork, and various applications and tools have made generative AI increasingly influential in the art world.

However, a general GAI model may not be adequately suited to specialized tasks without further development. Post-training through fine-tuning, alignment, or instruction tuning can adapt a base model for specific applications: the base model is further trained on a small amount of manually labeled data, fine-tuned into a dedicated model, and then applied to a downstream task in a domain such as finance, design, or robotic control. The format of the input data also matters; for example, a text input (a prompt, sometimes called a “spell”) can be used to generate images automatically. Providing such input or guidelines to a generative model is called prompt writing or prompt engineering.

Prompt engineering (or spell writing, prompt writing) is the process of creating input (usually text) that instructs a generative AI to produce a desired interactive response. In other words, prompts are how we ask an AI to do something. Prompts should be customized based on the type of response you want to receive and the specifics of the generative AI you use. Different types of prompts include instructions, questions, data, examples, etc.

One does not need to be a machine learning engineer to write a prompt, but creating an effective one can be challenging. Inadequate prompts can lead to ambiguous or inaccurate responses, limiting the model’s ability to produce meaningful output [2]. Several factors influence the effectiveness of prompt engineering, including the model being used, its training data and configuration, word choice, writing style, tone, sentence structure, and surrounding context. Because getting a prompt right on the first attempt is difficult, prompt engineering is an iterative process that tolerates errors and supports continuous improvement, and it can be learned by anyone, including artists.

Emotions are among humans’ most potent creative energies and a special gift for artists. Emotions can showcase the skillfulness that is essential for fostering creativity [3]. Intentional emotional expression is essential for artistic creativity, as artists often seek to evoke specific feelings in their audience through the emotional tension embedded in their affect-infused artworks [4]. For example, Van Gogh’s work is filled with swirls and vortexes that evoke a profound, intense, passionate, and turbulent emotional atmosphere. The research in [5] defines “creative emotions” as the various feelings and motivations that play a significant role in the scientific creative process. Emotional elements are fundamental to artistic creativity: the strength of emotional feelings, such as joy, anger, and sadness, generates motivational intensity, which serves as the impetus for creativity [6]. Passion, motivation to create, deviation, aggression, courage, and love of learning are vital aspects of the creative emotional landscape. These emotions are not merely passive feelings but active drivers and components in generating new and valuable ideas and discoveries. From our viewpoint, an artist’s creative emotion is the mood, atmosphere, style, and feeling conveyed by an artist’s work, which may reflect the artist’s emotional state or the intended emotional impact on the viewer. Whether it is the emotion the artist felt while creating the work or the emotion the viewer feels, the focus is on the style or feeling conveyed by the artwork, which often implies an emotional interaction. In this work, we call this “creative emotion”, and we further extend the concept into an inspiration driver for the artist’s creative journey.

In a multivariate model of individual creative potential, emotional intelligence (EI) and emotional competence are identified as key emotional factors, alongside environmental, cognitive, and conative influences [7]. Emotional creativity (EC) refers to the cognitive abilities and personality traits associated with the originality of emotional experiences and expressions. Individuals with high intrinsic emotional creativity may adapt better and experience enhanced creative outcomes during crises such as the COVID-19 pandemic [8]. Positive emotional states can reduce cognitive switch costs and enhance performance on divergent thinking and insight problem-solving tasks. Elevated positive emotions have been shown to boost creative thinking, with stronger emotions fostering more original ideas. However, designing technology to capitalize on this connection remains a challenge. The study in [9] introduces a system that provides real-time feedback on the originality of ideas, a motivation similar to that of this work. Cognitive flexibility is a key reason why positive emotions enhance creativity. Creators need to understand how to activate specific emotions, such as happiness or anger, to boost their creativity for specific tasks. They also need to recognize that their emotions signal the effort required to achieve goals: positive moods indicate progress, while negative moods suggest that more effort is needed [10]. Emotional creativity has also been found to be associated with the following practices in creative performance: being sensitive to a problem, identifying it and collecting data about it, generating ideas, verifying solutions, selecting the best solution, testing it, and accepting it [11].

For AI applications in emotion recognition, the paper [10] introduces a novel method for speech emotion recognition using large language models (LLMs) without changing their architecture. It addresses the challenge of LLMs analyzing audio by converting speech features into clear text prompts. In our inspiration mechanism, we likewise use speech emotion recognition technology to generate emotion tags and then use these tags to convert an amateur sketch idea or vision into an inspirational medium. Sketch-based generation enables amateur sketches to yield precise images, living up to the commitment of “what you sketch is what you get” [12]. Understanding how visual artworks develop, stroke by stroke, from the sketch enhances our learning, appreciation, and interactive displays, and enriches our understanding of the creative journey from the initial sketch to the final artwork [13]. The work in [14] presents a new approach that enables concept mixing in image synthesis, driven by user sketches instead of textual prompts. Likewise, our inspiration mechanism introduces a sketch-to-painting phase to explore the sources of creativity.

Our research aims to establish a mechanism for artists’ inspiration and idea development, with emotions as the driving force, that helps artists capture their creative emotions and provides them as guidance or vision during the creative process. If textual prompts are the magic spell that makes generative AI produce dedicated, elaborate images, we aim to create a visualized emotional vision that inspires artists to craft masterpieces. The difference between the two concepts lies in their target: a textual prompt targets the generative model, whereas our mechanism targets the artist, who uses it as an assistant tool throughout the art creation journey. In the remainder of this article, we refer to this mechanism as “Emotional Vision for Artists’ Inspiration.”

3. Methods

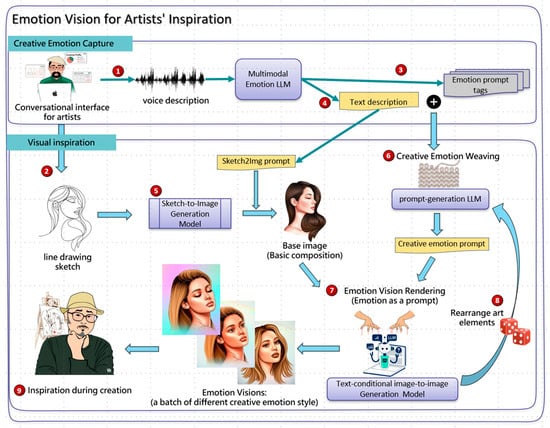

The inspiration mechanism primarily uses the artist’s line drawing sketch to generate an initial vision image for the current creation. The framework of the mechanism is illustrated in Figure 1, which uses a flowchart style annotated with the related concepts to depict the overall solution.

Figure 1.

The mechanism of Emotional Vision for Artists’ Inspiration.

3.1. The Inspiration Mechanism

The emotional vision generation mechanism, intended to inspire artists, consists of several sub-processes divided into two main stages: “Creative Emotion Capture” and “Visual Inspiration”.

3.1.1. Creative Emotion Capture Stage

- Step 1:

- An artist interacts with a conversational agent interface by providing a voice description of his or her creative intention or vision. The artist’s voice input was captured as an audio signal, which was then processed by a “multimodal emotion LLM”. The “multimodal emotion LLM” is not a single fine-tuned model but an LLM agent designed to interpret an artist’s vocal description. It executes two main tasks. The first is speech-to-text transcription, for which we can employ, for example, OpenAI’s Whisper model (large-v3). Whisper is recognized for its high accuracy and robustness in transcribing spoken language from various acoustic environments, making it well suited to capturing the artist’s natural speech. The second is emotion and content analysis: the transcribed text was analyzed to extract its literal content and emotional undertones as prompt tags. For this task, we can use OpenAI’s GPT-4 model, which the agent instructed to act as an “artistic emotion analyst.”

- Step 2:

- At the same time, the artist provides a line drawing sketch that captures their initial idea of composition. This sketch serves as a structural skeleton and visual guidance for the subsequent image processing.

- Step 3:

- The voice description (from step 1) was fed into a “multimodal emotion LLM” to computationally understand the emotional nuances conveyed in the artist’s voice. This LLM analyzed the voice signals to extract and categorize the expressed emotions into text descriptions and emotion tags.

- Step 4:

- The text descriptions and emotion tags (from step 3) were combined to create a richer, multi-faceted prompt that includes both specific emotional cues and creative descriptive content.
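The capture stage (Steps 1, 3, and 4) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors’ implementation: transcription is assumed to have already produced `transcript` (for instance, with the open-source `whisper` package’s `load_model("large-v3")` and `transcribe`), the GPT-4 call is abstracted as a hypothetical `chat` callable that takes role/content messages and returns the reply text, and the JSON reply format and all function names are hypothetical.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical LLM interface: a list of {"role", "content"} messages in, reply text out.
ChatFn = Callable[[List[Dict[str, str]]], str]

ANALYST_INSTRUCTIONS = (
    "You are an artistic emotion analyst. Read the artist's transcribed description and "
    'reply only with JSON of the form {"description": "...", "tags": ["#JoyAndElation", ...]}.'
)

@dataclass
class CaptureResult:
    description: str                               # literal content of the description
    tags: List[str] = field(default_factory=list)  # emotion tags such as "#FocusAndFlow"

def analyze_transcript(transcript: str, chat: ChatFn) -> CaptureResult:
    """Step 3 (sketch): ask the LLM for content and emotion tags, then parse the JSON reply."""
    messages = [
        {"role": "system", "content": ANALYST_INSTRUCTIONS},
        {"role": "user", "content": transcript},
    ]
    reply = json.loads(chat(messages))
    # Normalize so every tag carries exactly one leading '#'.
    tags = ["#" + str(t).lstrip("#") for t in reply.get("tags", [])]
    return CaptureResult(description=str(reply["description"]).strip(), tags=tags)

def combine_prompt(result: CaptureResult) -> str:
    """Step 4 (sketch): merge the text description and the emotion tags into one prompt."""
    return f"{result.description} Emotion tags: {' '.join(result.tags)}"
```

Injecting `chat` as a plain callable keeps the parsing and combination logic testable without network access; a real deployment would wrap the GPT-4 client call behind the same signature.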

3.1.2. Visual Inspiration Stage

- Step 5:

- The line drawing sketch (from step 2) was used as input to the “Sketch-to-Image Generation Model”, which uses a diffusion model to render a painted version of the sketch, the “Base Image”. The primary purpose is to translate the artist’s rough visual idea into a more concrete image without the artist having to actually paint the composition. The “Sketch-to-Image Generation Model” is also an LLM agent; it relies on a diffusion model conditioned on both the sketch and the text description to transform the artist’s line drawing into a fully rendered base image.

- Step 6:

- Creative Emotion Weaving: the combined emotion tags and text description (from step 4) were fed into a “prompt-generation LLM” to generate a fully developed prompt, the “creative emotion prompt”.

- Step 7:

- Emotion Vision Rendering: the “Base Image” (from step 5) and the “creative emotion prompt” (from step 6) were used as inputs, with “emotion as a prompt”, to generate a batch of “Emotion Vision” images. The “Text-Conditional Image-to-Image Generation Model” uses the emotional prompt to refine the “Base Image”, imbuing it with the desired emotional qualities. This model is an LLM agent built around an image-to-image (img2img) diffusion model that takes the “Base Image” as input and the “creative emotion prompt” as text conditioning to generate a new image. It refines the visual output so that it aligns with the intended emotional expression.

- Step 8:

- Rearrange art elements: generating “Emotion Vision” images is iterative and ultimately produces a batch of them. By rearranging the emotion tags and text descriptions according to a random seed, an AI agent can rewrite the “creative emotion prompt” with different art elements, controlling attributes such as color palettes, styles, or stroke expressions. Consequently, “Emotion Vision” images in different emotional styles were produced to maximize the inspirational effect.

- Step 9:

- Inspiration during creation: the AI agent presents the batch of “Emotion Vision” images on the interface to inspire the artist throughout the creative journey.
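The visual inspiration stage (Steps 5 to 9) can be read as a small orchestration loop. The sketch below assumes each model is wrapped as a plain callable; `sketch_to_image`, `weave`, `img2img`, and `rearrange` are hypothetical names standing in for the Sketch-to-Image model, the prompt-generation LLM, the img2img diffusion model, and the Step 8 rewriting agent. In practice the diffusion steps might be backed by something like an image-to-image pipeline from Hugging Face diffusers, but that choice is an assumption.

```python
import random
from typing import Any, Callable, List

Image = Any  # placeholder for whatever image type the models exchange

def visual_inspiration_stage(
    sketch: Image,
    text_description: str,
    seed_prompt: str,
    sketch_to_image: Callable[[Image, str], Image],  # Step 5 model (hypothetical)
    weave: Callable[[str], str],                     # Step 6 prompt-generation LLM (hypothetical)
    img2img: Callable[[Image, str], Image],          # Step 7 img2img model (hypothetical)
    rearrange: Callable[[str, random.Random], str],  # Step 8 rewriting agent (hypothetical)
    batch_size: int = 4,
    seed: int = 0,
) -> List[Image]:
    """Run Steps 5-8 and return the batch shown to the artist in Step 9."""
    rng = random.Random(seed)  # seeded so the same batch can be regenerated
    base_image = sketch_to_image(sketch, text_description)  # Step 5: "Base Image"
    emotion_prompt = weave(seed_prompt)                       # Step 6: "creative emotion prompt"
    batch = [img2img(base_image, emotion_prompt)]             # Step 7: first rendering
    while len(batch) < batch_size:
        variant = rearrange(emotion_prompt, rng)              # Step 8: new art-element mix
        batch.append(img2img(base_image, variant))
    return batch
```

The “Base Image” is rendered once and reused for every variant, so only the emotional prompt changes across the batch, which matches the intent of isolating the effect of the emotion prompt.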

3.2. Key Concept Design

3.2.1. Creative Emotion Capture

To capture the artist’s creative emotion, we used the artist’s voice description and a line drawing sketch to record the affective displays of the artist’s creative journey. Voice is the most instinctive and powerful medium for expressing a creator’s emotions. To capture the feelings accompanying the artist at a creative moment, we used a microphone to record vivid descriptions of the creation’s vision. Nuances in tone reveal profound emotional shifts, offering deep insight into the artist’s emotional state. This connection enriches our understanding of the creative process and lets us engage with the artist’s journey. We used an LLM to automatically capture the creative emotion in the voice description of the current artwork, as a multimodal LLM can recognize the affective elements in such a description.

Another emotional cue comes from a line drawing sketch in the initial stage of creation. Studying the stroke-based line drawing of artwork helps improve our learning, appreciation, and interaction with art [13]. If an artist uses a line drawing sketch to convey their creation’s vision, we can leverage Sketch-to-Image generative AI to amplify and substantiate their vision. The Sketch-to-Image generative AI is an innovative and mature tool that enables artists to transform basic sketches into realistic, sophisticated artwork. This AI tool offers a quick visual preview, guiding the transition from concept to final piece. It allows creators to focus more on refining their vision in the initial stages rather than getting lost in painting details. By using the Sketch-to-Image generative AI, artists can convert their draft drawings into fully developed pieces and foresee the emotional impact of their creations.

Throughout the creative process, the artist integrates the feelings or emotions they experience into the artwork as part of its elements. To classify the types of emotion involved in artistic creation, we reviewed related literature, including Flow Theory [15], Self-Determination Theory [16], Problem-Solving Models of Creativity [17], Psychoanalytic Theory and Art Therapy Principles [18], and Emergentist Theories of Creativity [19], and summarized the typical emotion types in Table 1.

Table 1.

The 13 types of creative emotion.

An artist’s emotions often coexist or change rapidly and dynamically during the creative process. For instance, frustration may give way to elation within moments. The intensity of these emotions also varies greatly; not every artist will experience each emotion with the same strength for every piece they create [17]. The specific blend of feelings is unique to each artist and artwork and can even differ from one period to the next. Ultimately, this makes the emotional landscape of creation a profoundly personal journey: a dynamically changing sequence of emotional states.

3.2.2. Creative Emotion Weaving

Weaving emotion into artwork means integrating the emotional atmosphere seamlessly into the creative process, so that it feels inherent to the work rather than simply attached. In the previous step, we used a multimodal emotion LLM to automatically convert the creative emotion in the voice descriptions into emotion prompt tags and text descriptions. In prompt engineering, prompt tags are special markers or keywords used within a prompt to structure it or to give the LLM specific instructions; the term can also refer to metadata tags used to organize and manage prompts externally. Based on Table 1, we define the following 13 creative emotion tags.

| #PassionAndLove, #ExcitementAndAnticipation, #CuriosityAndWonder, #FocusAndFlow, #FrustrationAndStruggle, #DoubtAndVulnerability, #DeterminationAndPerseverance, #JoyAndElation, #SatisfactionAndFulfillment, #SurpriseAndDiscovery, #ContemplationAndReflection, #CatharsisAndRelease, #CreativeAnxiety |
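Because each tag is written in CamelCase, the readable phrase used later in the art-director meta-prompt (e.g., ‘Determination and Perseverance’) can be recovered mechanically. A minimal Python sketch, assuming the 13 tags above form a closed vocabulary; the helper names `extract_tags` and `tag_to_phrase` are hypothetical:

```python
import re
from typing import List

# The 13 creative emotion tags defined above, treated as a closed vocabulary.
CREATIVE_EMOTION_TAGS = (
    "#PassionAndLove", "#ExcitementAndAnticipation", "#CuriosityAndWonder",
    "#FocusAndFlow", "#FrustrationAndStruggle", "#DoubtAndVulnerability",
    "#DeterminationAndPerseverance", "#JoyAndElation", "#SatisfactionAndFulfillment",
    "#SurpriseAndDiscovery", "#ContemplationAndReflection", "#CatharsisAndRelease",
    "#CreativeAnxiety",
)

def extract_tags(text: str) -> List[str]:
    """Return the known emotion tags found in free text, in order of first appearance."""
    seen: List[str] = []
    for tag in re.findall(r"#[A-Za-z]+", text):
        # Ignore hashtags outside the vocabulary and repeated tags.
        if tag in CREATIVE_EMOTION_TAGS and tag not in seen:
            seen.append(tag)
    return seen

def tag_to_phrase(tag: str) -> str:
    """Turn '#DeterminationAndPerseverance' into 'Determination and Perseverance'."""
    words = re.findall(r"[A-Z][a-z]*", tag.lstrip("#"))
    # Lower-case the connective 'And' so the phrase reads naturally.
    return " ".join("and" if w == "And" else w for w in words)
```

Filtering LLM output against a fixed vocabulary is one way to keep the tags consistent with Table 1 even when the model invents near-synonyms.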

Table 2 presents the theoretical justification for the 13 emotion tags, explaining how we derived them from established psychological and creative theories. It serves as a bridge connecting abstract psychological theories to the concrete, lived experience of an artist engaged in the creative journey.

Table 2.

Theoretical justification of the 13 emotion tags.

The prompt generation LLM utilizes a weaving process that combines emotion tags and text descriptions to create a final prompt for image generation. This weaving process is automated and mediated by the LLM, allowing it to translate high-level emotional concepts into a detailed set of visual instructions. For example, this process can be illustrated as follows:

- Step 1:

- Creative Seed Input.

The process begins with the raw seed input from the initial capture phase.

| Sketch Concept: “A lone, gnarled tree on a barren hill.” Text Description: “An artwork about isolation and resilience.” Selected Emotion Tag: #DeterminationAndPerseverance |

- Step 2:

- Initial, Unrefined Prompt.

A simple combination of the text inputs and tags would yield a basic prompt that lacks emotional depth:

| “A lone, gnarled tree on a barren hill. An artwork about isolation and resilience.” |

- Step 3:

- LLM-Mediated Refinement (The “Weaving”).

The system then programmatically constructs a meta-prompt to instruct the LLM to act as an art director.

| “You are an art director. Revise the following prompt to visually embody the feeling of ‘Determination and Perseverance’. Do not just add these words. Instead, describe specific changes to the light, color palette, line quality, and composition that would convey this emotion.” |

- Step 4:

- The Final, Creative Emotion Prompt.

The LLM processes this instruction and generates a much richer, operational prompt that provides concrete visual guidance to the diffusion model:

| Prompt: A solitary, ancient, gnarled tree stands defiantly on a wind-swept, barren hill under a dramatic, stormy sky. To convey determination and perseverance, the tree’s lines must be thick and deeply etched, showing its age and struggle. The light should be stark, with a single, powerful beam breaking through the dark clouds to illuminate the tree, creating a high-contrast, heroic effect. The color palette should be muted and earthy (deep browns, grays) but with a defiant splash of deep green leaves on a single branch. The composition should place the tree slightly off-center, as if it is bracing against an unseen force, emphasizing its enduring struggle and resilience. |

By embedding the prompt tags into the final prompt, we can create instructional and customized prompts for the next step of the text-conditional image-to-image generation LLM.
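The weaving example can be reproduced as a small template function. In the sketch below, the wording of the unrefined prompt and of the art-director meta-prompt is copied from Steps 2 and 3; appending the unrefined prompt after the instruction, the CamelCase-to-phrase conversion, and the `llm` callable (standing in for the prompt-generation LLM) are assumptions, and all function names are hypothetical.

```python
import re
from typing import Callable

def tag_to_phrase(tag: str) -> str:
    """'#DeterminationAndPerseverance' -> 'Determination and Perseverance'."""
    words = re.findall(r"[A-Z][a-z]*", tag.lstrip("#"))
    return " ".join("and" if w == "And" else w for w in words)

def unrefined_prompt(sketch_concept: str, text_description: str) -> str:
    """Step 2: naive concatenation of the seed inputs."""
    return f"{sketch_concept} {text_description}"

def meta_prompt(sketch_concept: str, text_description: str, tag: str) -> str:
    """Step 3: wrap the unrefined prompt in the art-director instruction."""
    return (
        "You are an art director. Revise the following prompt to visually embody "
        f"the feeling of ‘{tag_to_phrase(tag)}’. Do not just add these words. Instead, "
        "describe specific changes to the light, color palette, line quality, and "
        "composition that would convey this emotion.\n\n"
        f"Prompt: {unrefined_prompt(sketch_concept, text_description)}"
    )

def weave(sketch_concept: str, text_description: str, tag: str,
          llm: Callable[[str], str]) -> str:
    """Step 4: the LLM's reply becomes the creative emotion prompt."""
    return llm(meta_prompt(sketch_concept, text_description, tag))
```

With the seed inputs from Step 1, `meta_prompt(...)` asks for ‘Determination and Perseverance’ and ends with the unrefined prompt from Step 2, so the LLM receives both the instruction and the text it must revise.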

3.2.3. Emotion Vision Rendering

To convey various creative emotions effectively, the LLM introduces visual variety through a diverse set of painting techniques. Such diversity stems from the variety and range of artistic choices about the art elements within a single artwork. These elements include line, shape, form, space, color, value, and texture, which interact dynamically to enhance the artwork’s overall impact.

These fundamental components play a crucial role in the creation of art and convey profound meanings and insights [20]. These art elements include the following:

- Lines or edges can show direction, movement, and emotions.

- Shapes, composed of lines or color (e.g., circles, squares, or free-form shapes), represent physical entities as two-dimensional geometric areas.

- Form is the three-dimensional version of shape with shading, perspective, or depth to present a stereoscopic feeling.

- Space refers to the area around and within a piece of art; it can be filled with objects or left empty to create depth.

- Color presents a surface’s hue, saturation, and lightness, which can create feelings, add depth, and lead the viewer’s focus.

- Value helps create contrast, volume, and atmosphere in art, indicating how light or dark a color is.

- Texture describes the surface quality of a piece. It can be tangible or visual and add depth and insight.

Within a given artwork, the evaluation of variety involves a close examination of how different visual elements such as lines, shapes, colors, and textures interact with one another [1,20]. The key consideration is whether this interplay generates engaging interest and prevents a sense of monotony, or if it instead results in visual chaos [20]. In our design, we use “creative emotion weaving” to create the final prompt, including the art element description for image generation. An example of a prompt is below:

| Prompt: Imagine a sprawling, surreal landscape dominated by colossal, geometric forms made of shattered, iridescent glass. Use lines to depict both sharp, fractured edges and flowing, ethereal trails emanating from the broken structures. The space is vast and disorienting, with a strong sense of atmospheric perspective. The color palette should be predominantly cool (blues, greens, purples) in the distance, shifting to warm, vibrant hues (reds, oranges, yellows) near the viewer. Consider incorporating a single organic element (e.g., a lone, weathered tree or a swirling vortex of energy) to contrast with the rigid geometry. Think about the value shifts within the glass shards to create a sense of depth and realism, and contrast it with the almost flat texture in the background. Emotion Tag Focus: #CreativeAnxiety #FrustrationAndStruggle #DeterminationAndPerseverance #CatharsisAndRelease |

The “creative emotion prompt” describes the generated image in terms of different art elements, such as line, shape, form, space, color, value, and texture, to prompt the model to generate different “Emotion Vision” images. The art elements presented alongside the prompt tags can be selected randomly, showcasing different artists’ craft skills and feelings. As Figure 1 shows, step 8 randomly rearranges the art elements to construct another style of “Emotion Vision” images based on the woven emotion tags.
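Step 8’s random rearrangement of art elements can be illustrated with a seeded sampler. The sketch below is one assumed way to do it: the phrase attached to each of the seven art elements is a hypothetical example rather than a template from the mechanism, and each variant draws a random subset of elements and shuffles the order of the woven emotion tags.

```python
import random
from typing import Dict, List, Sequence

# Hypothetical phrasing for each art element (line, shape, form, space, color, value, texture).
ART_ELEMENT_HINTS: Dict[str, str] = {
    "line": "expressive, directional lines",
    "shape": "bold free-form shapes",
    "form": "shaded, three-dimensional forms",
    "space": "a deep, open sense of space",
    "color": "an emotionally charged color palette",
    "value": "strong light-dark contrast",
    "texture": "visible, tactile texture",
}

def prompt_variants(base_prompt: str, tags: Sequence[str], n: int,
                    k: int = 3, seed: int = 0) -> List[str]:
    """Build n prompt variants, each emphasizing k randomly chosen art elements."""
    rng = random.Random(seed)  # fixed seed gives a reproducible batch
    variants = []
    for _ in range(n):
        elements = rng.sample(sorted(ART_ELEMENT_HINTS), k)  # random subset of elements
        shuffled_tags = list(tags)
        rng.shuffle(shuffled_tags)                           # vary the tag emphasis order
        hints = "; ".join(f"{e}: {ART_ELEMENT_HINTS[e]}" for e in elements)
        variants.append(f"{base_prompt} Emphasize {hints}. "
                        f"Emotion Tag Focus: {' '.join(shuffled_tags)}")
    return variants
```

Seeding the generator makes a given batch reproducible, so an artist can return to a variant that proved inspiring, while different seeds yield different element mixes.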

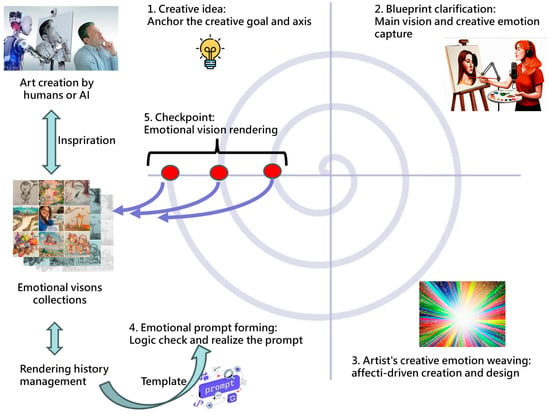

3.2.4. Iterative Inspiration Cycle

When applied to the art creation process, this mechanism demonstrates a cyclical, iterative methodology for crafting artwork that makes effective use of related AI cloud services. This iterative nature helps refine ideas and enhance their development throughout the creative journey. As shown in Figure 2, the “iterative inspiration cycle” outlines a process for creating artwork that involves several iterative steps:

Figure 2.

Iterative inspiration cycle.

- An initial creative idea forms in the artist’s mind.

- The artistic blueprint and the associated emotions are clarified through voice descriptions and sketch drawings.

- The artistic creation begins by focusing on embedding those emotions into the process.

- Using text-to-prompt AI, an emotional image prompt is generated and refined.

- At various checkpoints, prompt-to-image AI renders the “Emotion Vision” images.

- The accumulated “Emotion Vision” images are gathered into a collection.

This entire cycle is iterative, with feedback loops allowing for continuous refinement, drawing inspiration from a growing collection of “Emotion Vision” images, and forming a prompt template for further emotional image prompt generation.
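The feedback loop that turns the growing collection into a prompt template can be sketched as follows. This is a hypothetical illustration, not the described system: it assumes the collection stores each kept prompt with its emotion tags, and that the template for the next round simply foregrounds the most frequent tags so far.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class InspirationCollection:
    """Hypothetical store for the growing collection of kept "Emotion Vision" results."""
    items: List[Tuple[str, List[str]]] = field(default_factory=list)  # (prompt, emotion tags)

    def add(self, prompt: str, tags: List[str]) -> None:
        """Record a prompt (and its tags) whose image the artist chose to keep."""
        self.items.append((prompt, list(tags)))

    def template(self, top: int = 3) -> str:
        """Derive a prompt template from the most frequent tags collected so far."""
        counts = Counter(tag for _, tags in self.items for tag in tags)
        focus = " ".join(tag for tag, _ in counts.most_common(top))
        return "{description} Emotion Tag Focus: " + focus
```

The next round would fill the template with a fresh description via `str.format(description=...)`, so each cycle builds on the emotions the artist has already found useful.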

3.2.5. Metrics for Emotional Vision

Evaluating artwork is a complex process that combines objective analysis with subjective interpretation. Art critique draws on the principles of art, such as harmony, variety, and unity [20]. In our case, we provide “Emotion Vision” images to inspire artists during their creative journey. The first evaluation criterion is that an “Emotion Vision” image should align with the creator’s intention (or vision). The second is the diversity of inspiration: providing a wider range of “Emotion Vision” images benefits the creator by opening up more diverse possibilities for inspiration. In other words, the evaluation focuses on the alignment and diversity of “Emotion Vision” images.

In terms of alignment, art criticism often considers the artist’s intention and the work’s conceptual depth [21]. Intention alignment emphasizes the artist’s unique perspective, conceptual insight, and the overall artistic intent conveyed. The challenge we faced was choosing a metric to evaluate alignment with the artist’s intention. The CLIP score is a reference-free metric that can evaluate the correlation between a generated caption for an image and the actual content of the image, as well as the similarity between texts or between images. The CLIP score is given by Formula (1).
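Consistent with the description that follows (cosine similarity between the image embedding $E_i$ and the caption embedding $E_c$, with scores ranging from 0 to 100), Formula (1) presumably takes the form used by common CLIP-score implementations such as TorchMetrics:

$$
\mathrm{CLIPScore}(i, c) = \max\left(100 \cdot \frac{E_i \cdot E_c}{\lVert E_i \rVert \, \lVert E_c \rVert},\ 0\right) \tag{1}
$$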

The metric measures the cosine similarity between the visual CLIP embedding Ei of an image i and the textual CLIP embedding Ec of a caption c. The score ranges from 0 to 100, with values closer to 100 indicating better alignment. Because the CLIP score is not restricted to any specific data distribution, it can have difficulty identifying the informative portions of samples [22]. For instance, if an image contains words that also appear in the caption, it may yield a high CLIP score, even though this is not the kind of image–caption relationship that CLIP is intended to evaluate [23]. In our case, the image content is the “Emotion Vision” image, and the text caption is the original voice description in text form.
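Given precomputed embeddings (obtaining them from a CLIP image encoder and text encoder is outside this sketch), the score is simple arithmetic: the clipped, scaled cosine similarity max(100 · cos(E_i, E_c), 0). A minimal stdlib Python version, with the function name `clip_score` chosen for illustration:

```python
import math
from typing import Sequence

def clip_score(image_emb: Sequence[float], text_emb: Sequence[float]) -> float:
    """CLIP score from precomputed embeddings: max(100 * cos(E_i, E_c), 0)."""
    if len(image_emb) != len(text_emb):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm = math.sqrt(sum(a * a for a in image_emb)) * math.sqrt(sum(b * b for b in text_emb))
    if norm == 0.0:
        raise ValueError("embeddings must be non-zero")
    # Negative similarities are clipped to 0, so the score lies in [0, 100].
    return max(100.0 * dot / norm, 0.0)
```

Identical directions score 100, orthogonal or opposite directions score 0, and two vectors 45 degrees apart score about 70.71.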

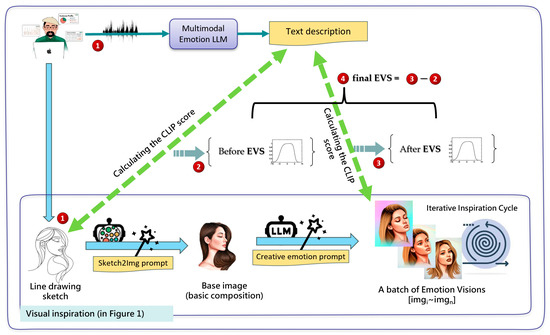

The diagram in Figure 3 illustrates the comparative workflow for calculating the EVS (Emotion Vision Score). (1) The process begins with the artist’s core intention, represented by the line drawing sketch and the text description. (2) The “Before EVS” is calculated by applying the full EVS formula to the CLIP score between these two initial inputs, establishing a baseline of alignment. (3) The “After EVS” is calculated by applying the full EVS formula to the CLIP score between a generated “Emotion Vision” image and the original text description, measuring alignment after the emotional prompt has been applied. (4) The final EVS is computed as the difference between the After EVS and the Before EVS, reflecting inspirational value and diversity.

Figure 3.

The measurement of Emotion Vision Score (EVS).

As Figure 3 shows, the “Emotion Vision” images are produced by text-conditional image-to-image generation driven by the emotion prompt derived from the original voice description together with the line drawing sketch, so the difference between an “Emotion Vision” image and the line drawing sketch is attributable to the emotional prompt. For comparison, we measure twice, before and after the emotional prompt is woven in, yielding the Before Emotion Vision Score and the After Emotion Vision Score. A higher score indicates that the artist’s sketch intentions are well preserved after the emotional prompt is woven in. Conversely, a lower score suggests that the emotional prompts disrupted and interfered with the artist’s sketch intentions during generation. However, a lower score also indicates greater divergence from the original line drawing sketch, which can be beneficial for inspiration and offer more possibilities in the art creation journey.

Specifically, CLIP scores closer to 100 indicate that the result is nearly identical to the original line drawing, so the emotional modification is barely noticeable and offers little inspiration. In contrast, scores closer to 0 indicate a significant departure from the original sketch, which fails to align with the artist’s intention but adds diversity. This presents a dilemma between aligning with the artist’s intention and embracing diversity. In summary, a middle range is considered reasonable and acceptable, as it balances the sketch intentions with the emotional prompt and provides effective inspiration.

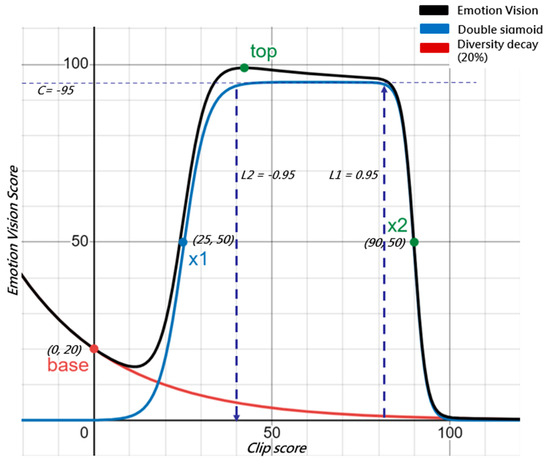

To reflect the above-mentioned phenomena in the inspirational effect of an “Emotion Vision” image, we transform the CLIP score into the “Emotion Vision Score” (EVS) using a double-sigmoid function plus a diversity decay function, as given in Formula (2).

The Emotion Vision Score is the average inspirational effect over n “Emotion Vision” images. The parameters of the double-sigmoid function, given in Formula (3), can be adjusted so that its curve fits the inspirational effect of the CLIP score. A double sigmoid is created by taking the difference of two individual sigmoid functions, which produces more complex curve shapes such as peaks (bell shapes), valleys, or S-curves with multiple inflection points [24]. The parameters of the double-sigmoid and diversity decay functions in Formulas (3) and (4) are as follows:

A: The overall amplitude or scaling factor of the peak.

k1: The steepness of the first (falling) sigmoid.

k2: The steepness of the second (rising) sigmoid.

x1: The midpoint of the first (falling) sigmoid.

x2: The midpoint of the second (rising) sigmoid (usually x1 > x2 to form a peak).

L1: The amplitude of the first sigmoid.

L2: The amplitude of the second sigmoid.

C: A vertical offset, shifting the entire curve up or down.

α: The ratio of the diversity amplitude to the overall amplitude A when the CLIP score is zero.

k: Diversity decay parameter.
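Formulas (2) to (4) are not reproduced in this text, so the following is only a sketch of one plausible form consistent with the parameter list above. It assumes the double sigmoid is a rising sigmoid at x2 minus a second rising sigmoid at x1 (x1 > x2), whose subtraction produces the falling flank, and it assumes the diversity term is D(x) = αA·e^(−kx), which equals αA at a CLIP score of zero. All function names and default parameter values are hypothetical, chosen for illustration rather than taken from the experiments.

```python
import math


def logistic(x: float, k: float, x0: float) -> float:
    """Standard logistic sigmoid with steepness k and midpoint x0."""
    return 1.0 / (1.0 + math.exp(-k * (x - x0)))


def double_sigmoid(x: float, A: float = 100.0, k1: float = 0.25, k2: float = 0.25,
                   x1: float = 80.0, x2: float = 20.0,
                   L1: float = 1.0, L2: float = 1.0, C: float = 0.0) -> float:
    """Assumed form of Formula (3).

    The second sigmoid (midpoint x2, steepness k2, amplitude L2) rises; the first
    sigmoid (midpoint x1 > x2, steepness k1, amplitude L1) is subtracted, which
    produces the falling flank of the peak.
    """
    rising = L2 * logistic(x, k2, x2)
    falling = L1 * logistic(x, k1, x1)
    return A * (rising - falling) + C


def diversity_decay(x: float, A: float = 100.0, alpha: float = 0.2, k: float = 0.1) -> float:
    """Assumed form of Formula (4): a bonus of alpha * A at x = 0 that decays exponentially."""
    return alpha * A * math.exp(-k * x)


def evs_from_clip(x: float, A: float = 100.0, alpha: float = 0.2, k: float = 0.1) -> float:
    """Per-image EVS for one CLIP score x (0-100): double sigmoid plus diversity bonus."""
    return double_sigmoid(x, A=A) + diversity_decay(x, A=A, alpha=alpha, k=k)


if __name__ == "__main__":
    for score in (0, 20, 50, 80, 100):
        print(score, round(evs_from_clip(score), 1))
```

With these illustrative defaults, the curve starts near 20 at a CLIP score of 0, peaks near 100 in the middle of the range, and falls close to 0 at 100, qualitatively matching the behavior described for Figure 4. Fitting the parameters to the narrow range in which real CLIP scores fall would move the peak accordingly.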

The curve relating the EVS to the CLIP score is presented in Figure 4, which illustrates how the effectiveness of conveying emotion varies with the CLIP score.

Figure 4.

The transform curve of Emotion Vision Score (EVS) to CLIP score.

Because each person experiences emotions differently, the EVS curve varies among individuals. In the case of Figure 4, the curve begins at point (0, 20): at zero similarity, the EVS is assumed to be about 20 (20% of the maximum) because of the stimulating effect of diversity. As the similarity (the CLIP score) increases, the EVS rises. Near the middle range, intention, alignment, and diversity are balanced, and the best inspirational effect is achieved. However, when the similarity reaches 80% (assumed), the images become too similar to the “Line Drawing Sketch,” and the emotional tension starts to decrease. At 100% similarity, the EVS drops to 0, indicating a lack of emotional passion. Several other parameters control the shape of the curve:

- Midpoints (x1, x2 in Formula (3)) control the location and width of the optimal inspiration peak. For instance, adjusting x1 shifts the point where inspiration begins to decline, defining the upper bound of the ideal similarity range.

- Steepness (k1, k2 in Formula (3)) controls the sharpness of the rise and fall of the EVS curve, modeling how “forgiving” or “strict” the criteria for inspiration are.

- Amplitude and Offset (A, C in Formula (3)) act as scaling and baseline parameters that map the function to a desired scoring range (e.g., 0–100).

- Diversity Ratio and Decay Rate (α, k in Formula (4)): α controls the initial “boost” from diversity at a CLIP score of zero, and k controls how rapidly this boost fades as similarity increases.
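The diversity term is described in this section as an exponential decay whose magnitude α is set relative to the peak score A. Assuming, for illustration, that it takes the form D(x) = αA·e^(−kx) (Formula (4) itself is not reproduced here), α fixes the bonus at zero similarity and k fixes a half-life measured in CLIP-score units:

```latex
D(x) = \alpha A\, e^{-kx}, \qquad D(0) = \alpha A, \qquad
\frac{D(x + x_{1/2})}{D(x)} = \frac{1}{2} \;\Longrightarrow\; x_{1/2} = \frac{\ln 2}{k}
```

For example, with an assumed A = 100 and α = 0.2, the bonus at a CLIP score of zero is 20, consistent with the starting point (0, 20) described for Figure 4; a hypothetical k = 0.1 would halve the bonus roughly every 6.9 CLIP-score units.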

Finally, the emotional inspiration effect is measured as the difference between the “After EVS” and the “Before EVS”. Importantly, we are not primarily concerned with image quality. Instead, we focus on how effectively the “Emotion Vision” images can inspire the artist’s creation.
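In compact notation (ours, not the paper's), let f be the per-image CLIP-to-EVS transform (the double sigmoid plus the diversity decay), S the line drawing sketch, T the text description, and V_1, …, V_n the generated Emotion Vision images (n = 13 in the experiments). The before/after comparison, with the averaging over n images described for Formula (2), can then be written as:

```latex
\mathrm{EVS}_{\text{before}} = f\big(\mathrm{CLIP}(S, T)\big), \qquad
\mathrm{EVS}_{\text{after}} = \frac{1}{n}\sum_{i=1}^{n} f\big(\mathrm{CLIP}(V_i, T)\big), \qquad
\Delta\mathrm{EVS} = \mathrm{EVS}_{\text{after}} - \mathrm{EVS}_{\text{before}}
```

A positive ΔEVS means that weaving in the emotion prompts raised the estimated inspirational value above the sketch-only baseline.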

3.2.6. Mathematical Modeling Aspects of the Emotion Vision Score (EVS)

The Emotion Vision Score (EVS) is not merely an adjustment of an existing metric, but a new mathematical construct designed to quantify the inspirational potential of AI-generated art. Its formulation is rooted in the observation that standard metrics, which often reward maximal similarity, are inadequate for evaluating creative output. The relationship between the similarity of a generated image to an artist’s initial concept and its inspirational value is fundamentally non-monotonic.

A simple linear or single sigmoid function is insufficient for modeling artistic inspiration because it fails to capture the nuanced judgment of a creator. The core theoretical insight is that inspiration is maximized within a specific, balanced range of similarity, with diminished value at the extremes. This insight rests on three observed phenomena:

- Low Similarity (CLIP score is low): When a generated image is too divergent from the artist’s sketch and textual description, it creates a conceptual disconnect. While it may be innovative, it does not honor the artist’s original intent and is unlikely to inspire the specific art project.

- High Similarity (CLIP score is high): When a generated image closely resembles the initial sketch, it offers no new perspective, creative leap, or unexpected development. It lacks transformative value and therefore also fails to inspire.

- Optimal Inspirational Range: The highest inspirational value lies in a “sweet spot” where the generated image maintains an optimal balance. It respects the structural and thematic essence of the original concept while introducing engaging, novel elements that advance the artist’s vision.

To mathematically model these phenomena, a standard symmetric function like a Gaussian curve is overly restrictive, as artistic judgment is rarely symmetric. A more flexible model is needed. We formally constructed the core of the EVS using a double-sigmoid function, defined in Formula (3), which is created by taking the difference between two distinct logistic (sigmoid) functions. The subtraction of these two functions creates a peak whose position, width, and asymmetry can be finely tuned via the parameters (x1, x2, k1, k2), providing a more flexible and realistic model of artistic evaluation than a simple bell curve. Based on Formula (3), the two terms can be interpreted as follows:

- The inspiration growth phase (L1 term): The first logistic function models the initial rise in inspirational value. As the CLIP score (x) moves away from zero, the image becomes more relevant to the artist’s intention, and its inspirational potential grows accordingly.

- The replication penalty phase (L2 term): The second logistic function models the decline in inspiration as the image becomes too derivative. As the CLIP score approaches 100, this term’s value increases, and, when subtracted from the first term, it creates a sharp downturn in the total score, penalizing mere replication.
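The asymmetry argument for the double sigmoid can be made concrete with a short derivation. Because Formula (3) is not reproduced in this text, assume f(x) = A[L2·σ(k2(x − x2)) − L1·σ(k1(x − x1))] + C, with σ(z) = 1/(1 + e^(−z)), index assignments taken from the parameter list given earlier (x2 and k2 for the rise, x1 and k1 for the fall), and midpoints far apart relative to 1/k1 and 1/k2. Near x2 the subtracted sigmoid is close to 0, and near x1 the rising sigmoid is close to 1, so each flank is governed by a single logistic. Since σ(ln 9) = 0.9 and σ(−ln 9) = 0.1, the 10–90% transition width of each flank follows directly:

```latex
\begin{aligned}
f(x) &\approx A L_2\,\sigma\big(k_2(x - x_2)\big) + C && (x \text{ near } x_2),\\
f(x) &\approx A\big[L_2 - L_1\,\sigma\big(k_1(x - x_1)\big)\big] + C && (x \text{ near } x_1),\\
w_{\text{rise}} &= \frac{2\ln 9}{k_2}, \qquad w_{\text{fall}} = \frac{2\ln 9}{k_1}.
\end{aligned}
```

Because the two widths depend on different parameters, choosing k1 ≠ k2 lets the curve rise and fall at different rates, which a Gaussian, with mirror-image flanks, cannot represent. For example, a hypothetical k2 = 0.25 gives a rising flank about 17.6 CLIP-score units wide.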

The double-sigmoid component effectively models value from similarity. However, it does not fully capture the “happy accidents” in art: instances of pure, unexpected novelty that can be highly inspirational. To address this issue, we introduce the diversity decay function (Formula (4)) as an essential element for rewarding novelty, particularly at lower CLIP scores. This function establishes an “inspirational floor” or “bonus,” ensuring that even images with low semantic similarity are not immediately discarded and assigning them a baseline value based on their potential to introduce diverse and unexpected ideas. The use of an exponential decay rests on a key assumption: the intrinsic value of raw diversity diminishes as the generated image becomes more aligned with the artist’s original vision. In other words, the “bonus” for novelty is most critical when an image deviates significantly from the initial concept. As the image becomes a better fit (i.e., as the CLIP score x increases), the need for an external diversity reward decreases, allowing the core double-sigmoid function to take over the evaluation. In Formula (4), the parameter α controls the magnitude of this bonus relative to the peak inspiration score A.

By combining these two functions, the EVS provides a comprehensive metric that balances the competing, yet complementary, demands of intentional alignment and creative novelty, thereby offering a more holistic and theoretically grounded measure of AI-generated artistic inspiration.

4. Experiments and Discussion

Our solution aims to inspire artists during their creative journey. In the experiments, we evaluate two aspects: the influence of the inspiration mechanism and the feasibility of our proposed evaluation metric, the Emotion Vision Score.

4.1. Experimental Setup

To prepare the experimental data, we use famous paintings from various representative art movements. We selected artworks from well-known art movements primarily because these movements embody significant cultural, technological, and societal shifts that still resonate today, particularly in creative expression shaped by different philosophies and ideals. The collected dataset covers seven major art movements, with approximately 42 pieces in each category, totaling 294. The seven major art movements are Impressionism, Post-Impressionism, Expressionism, Cubism, Surrealism, Abstract Expressionism, and Pop Art.

First, we use common image processing techniques to convert these artworks into sketch line drawings that capture the main brushstrokes. These sketches simulate the artist’s initial composition during the creative process, as illustrated by the “sketch line drawing” in Figure 3. Additionally, we leverage the sentiment analysis capabilities of large language models (LLMs) to create text summaries of these artwork images. These summaries emulate the descriptions that artists might provide about their creative process and are represented as the “Text Description” in Figure 3. The sketch line drawings are then converted into colorful painted versions, called the “Base Image,” using a sketch-to-image diffusion model, as shown in Figure 3. The “Base Image” then serves as the basis for the creative emotion weaving stage. In this stage, the “creative emotion prompts” consist of 13 creative emotion tags that condition an image-to-image diffusion model for text-conditional generation. These prompts infuse specific emotional characteristics into the base image, producing a batch of “Emotion Vision” images with different creative emotions.
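The data preparation steps described above can be outlined as the following Python skeleton. Each stage (`to_sketch`, `describe`, `sketch_to_image`, `weave_emotion`) is a hypothetical callable standing in for the image processing, LLM, and diffusion components, which are not named in this section; the emotion tag list is likewise a placeholder, since the 13 tags are not listed here.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ArtworkSample:
    """One artwork and the intermediate artefacts derived from it."""
    movement: str
    artwork: object                         # original painting (any image type)
    sketch: object = None                   # "Line Drawing Sketch"
    description: str = ""                   # "Text Description"
    base_image: object = None               # "Base Image" from sketch-to-image
    emotion_visions: List[object] = field(default_factory=list)


def prepare_sample(movement: str, artwork: object, emotion_tags: List[str],
                   to_sketch: Callable[[object], object],
                   describe: Callable[[object], str],
                   sketch_to_image: Callable[[object, str], object],
                   weave_emotion: Callable[[object, str], object]) -> ArtworkSample:
    """Run the (hypothetical) preparation stages for a single artwork."""
    sample = ArtworkSample(movement=movement, artwork=artwork)
    sample.sketch = to_sketch(artwork)             # main brushstrokes as line drawing
    sample.description = describe(artwork)         # LLM-written text summary
    # The Base Image is conditioned on both the sketch and the text description.
    sample.base_image = sketch_to_image(sample.sketch, sample.description)
    # One text-conditional image-to-image generation per creative emotion tag.
    sample.emotion_visions = [weave_emotion(sample.base_image, tag)
                              for tag in emotion_tags]
    return sample
```

Under this skeleton, processing the 294 artworks with 13 tags each would yield 294 × 13 = 3,822 Emotion Vision images.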

4.2. The Performance of Creative Inspiration

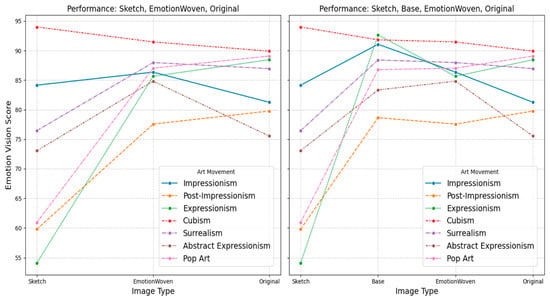

To assess the impact of the inspiration mechanism, we measure the “Before Emotion Vision Score” and the “After Emotion Vision Score” based on the CLIP score. For the “Before Emotion Vision Score”, we calculate the CLIP score between the “Text Description” and the “Line Drawing Sketch.” This provides a baseline for evaluating the inspirational effect before specific emotion prompts are applied. We also compute text-image CLIP scores for the “Base Image” and for the original artwork (serving as a label) for further comparison. For the “After Emotion Vision Score”, we calculate the CLIP scores between the “Text Description” and each of the “Emotion Vision” images. Because these images are generated from 13 creative emotion tags, this yields 13 CLIP scores, which quantify how well the generated “Emotion Vision” images align with the “Text Description.” Finally, we convert the 16 CLIP scores (13 for the Emotion Vision images, 1 for the sketch, 1 for the base image, and 1 for the original artwork) into EVS values using Formula (2). The line charts in Figure 5 show the statistical results of the EVS.

Figure 5.

The performance of Emotion Vision Score (EVS) on different art movements.
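The per-artwork bookkeeping behind Figure 5 can be sketched as follows: the 16 CLIP scores are mapped through a CLIP-to-EVS transform, the 13 emotion scores are averaged into the “EmotionWoven” value, and the results are then averaged per art movement. The transform is passed in as a parameter (`evs_fn`, a hypothetical name) so that any implementation of Formula (2) can be plugged in; the dictionary keys mirror the labels in Figure 5.

```python
from collections import defaultdict
from statistics import mean
from typing import Callable, Dict, Iterable, List, Tuple


def figure5_series(sketch: float, base: float, original: float,
                   emotion: List[float],
                   evs_fn: Callable[[float], float]) -> Dict[str, float]:
    """Map one artwork's 16 CLIP scores to the four EVS series of Figure 5."""
    if len(emotion) != 13:
        raise ValueError("expected 13 emotion-tag CLIP scores")
    return {
        "Sketch": evs_fn(sketch),
        "Base": evs_fn(base),
        # EmotionWoven is the mean EVS over the 13 Emotion Vision images.
        "EmotionWoven": mean(evs_fn(x) for x in emotion),
        "Original": evs_fn(original),
    }


def average_by_movement(
        rows: Iterable[Tuple[str, Dict[str, float]]]) -> Dict[str, Dict[str, float]]:
    """rows: (movement, series) pairs; returns the per-movement mean of each series."""
    grouped: Dict[str, Dict[str, List[float]]] = defaultdict(lambda: defaultdict(list))
    for movement, series in rows:
        for name, value in series.items():
            grouped[movement][name].append(value)
    return {movement: {name: mean(values) for name, values in series.items()}
            for movement, series in grouped.items()}
```

Averaging EVS values (rather than transforming an averaged CLIP score) follows the description of “EmotionWoven” as the average score of the 13 Emotion Vision images; because the transform is non-linear, the two orders would generally give different results.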

In the left chart of Figure 5, the “sketch” provides a baseline for comparing inspirational effects across art movements, although the movements start with very different scores due to variations in artistic craft, style, and technique. The “Expressionism” art movement receives a very low score, suggesting that it is not well suited to representation with sketch lines. In contrast, the “Cubism” art movement is highly suited to expression through sketch lines. “EmotionWoven” is the average score of the 13 Emotion Vision images; it scores higher than the “sketch” (line drawing sketch), indicating a stronger inspirational effect. Cubism is the exception: its geometric abstraction and multiple viewpoints may already contribute higher emotional tension at the sketch phase [25], so weaving emotion into its line drawing sketches instead disrupts their inspiring impact.

The right chart of Figure 5 adds the “Base” item, the painted version of the “sketch” image, for comparison. It shows a significant inspirational effect nearly identical to that of “EmotionWoven.” The nearly identical scores are reasonable, since the “Base” image is also generated from the “Text Description” prompt. This similarity also indicates that our mechanism does not impede the creative intention after the creative emotion tags are woven in. Additionally, the “Impressionism” and “Expressionism” art movements have higher inspirational scores on “Base” than on “EmotionWoven”. We interpret these observations as follows:

- Impressionism aims to capture the ephemeral qualities of light and atmosphere, often evoking subtle emotions such as tranquility, wonder, or nostalgia, which are closely tied to the depicted scene [25]. Therefore, weaving emotion into the “Base” image of Impressionism may compromise the color, light, and atmosphere display. Expressionism seeks to convey feelings and emotions directly. Artists often utilized intense and non-naturalistic colors, along with agitated brushstrokes, to express subjective experiences, anxieties, and psychological states [25]. Consequently, weaving emotion in the “Base” image of Expressionism may also interfere with its direct composition and color display.

- “Original” is the score of the original artwork, for which the “Abstract Expressionism” and “Impressionism” art movements score significantly lower than on “Base” and “EmotionWoven”. For Abstract Expressionism, the scores show an interesting dip at “Original” after peaking at “EmotionWoven”. We infer that Abstract Expressionism is particularly challenging for artists, as it emphasizes non-representational forms and spontaneous gestures, expressed through energetic brushstrokes, dripping paint, and bold colors or serene color fields, to convey complex, profound, and often subconscious feelings [25]. In other words, our mechanism, “EmotionWoven,” has a significant inspirational effect compared with the traditional art creation method, “Original,” especially for the “Abstract Expressionism” and “Impressionism” art movements.

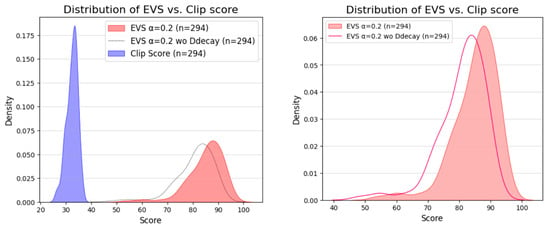

4.3. Emotion Vision Score Metric Analysis

The evaluation of the inspiration effect is based on the EVS metric. In the second part of the experimental analysis, we focus on this metric and its characteristics. To clarify these key points, we illustrate the distribution of the CLIP score and the EVS in Figure 6.

Figure 6.

The distribution of the CLIP score and the EVS.

In the left chart of Figure 6, the CLIP score shows a highly concentrated, low-scoring distribution with minimal variance, whereas the EVS shows a more spread-out, higher-scoring distribution that sits closer to 100, the upper end of the scale people are accustomed to. In many evaluation contexts, such as school grades, percentages, or quality ratings, scores closer to 100 are typically associated with higher quality, excellence, or successful outcomes. EVS values, mostly in the 70s to 90s, therefore align intuitively with common human practice for assessing performance, where higher numbers indicate better results.

There are two EVS distributions: one includes the diversity decay term with α = 0.2 (EVS α = 0.2), and the other omits it (EVS α = 0.2 wo Ddecay). As the right chart shows, including the diversity decay term noticeably reduces variance and compresses the distribution toward higher scores. This eliminates some of the lower scores found in the version without it, resulting in more refined inspirational suggestions for the artist. The diversity decay component acts as an adjustment that lowers scores for less diverse or less ideal items and raises scores for more diverse or more ideal ones. As a result, the EVS distribution becomes more refined than the version without diversity decay, while remaining more spread out than the CLIP score distribution. In the figure, the EVS distribution appears slightly negatively skewed, with a longer tail extending toward lower scores and a steeper drop-off toward higher scores: most scores cluster toward the high end, with only a few low scores forming the tail. This reinforces the idea that the EVS metric is generally robust, yielding promising results most of the time for maximizing inspirational suggestions. The long tail also makes outliers easy to identify, allowing a focused investigation into why these specific instances scored poorly and helping to pinpoint areas for targeted improvement, error correction, or a deeper understanding of the phenomena.
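The distributional properties discussed above (spread, and the negative skew of the EVS) can be checked with a few summary statistics. The sketch below uses only the Python standard library to compute the mean, the sample standard deviation, and the moment-based sample skewness g1 = m3 / m2^(3/2), where a negative value indicates a longer tail toward low scores. The function names are illustrative, and no values from the paper's data are used.

```python
from statistics import mean, stdev
from typing import Dict, List, Union


def skewness(values: List[float]) -> float:
    """Moment-based sample skewness g1 = m3 / m2**1.5 (negative = long low tail)."""
    n = len(values)
    if n < 3:
        raise ValueError("need at least three values")
    mu = sum(values) / n
    m2 = sum((v - mu) ** 2 for v in values) / n
    m3 = sum((v - mu) ** 3 for v in values) / n
    if m2 == 0:
        return 0.0  # all values identical: no spread, no skew
    return m3 / m2 ** 1.5


def summarize(name: str, values: List[float]) -> Dict[str, Union[str, float]]:
    """Mean, sample standard deviation, and skewness for one score distribution."""
    return {"name": name, "mean": mean(values), "std": stdev(values),
            "skew": skewness(values)}
```

Applying `summarize` to the CLIP scores and to each EVS variant would quantify the contrasts read off Figure 6: a smaller standard deviation for the concentrated CLIP distribution and a negative skew for the EVS.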

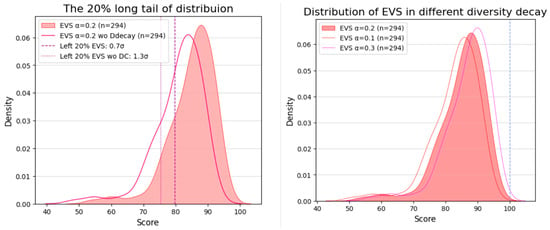

Another key point is the α ratio of the EVS “diversity decay” component. In Figure 7, the 20% long-tail portion of the EVS with α = 0.2 diversity decay ranges from 0 to 80, whereas that of the EVS without diversity decay spans 0 to 75. Setting α = 0.2 therefore shifts the range right by about 5 units. This setting strikes a balance, refining the range without exceeding the maximum of 100 by too much. With α = 0.3, the range shifts so far right that it exceeds 100, whereas with α = 0.1, the range shifts back left, offsetting the effect of the diversity decay.

Figure 7.

The α ratio of EVS’s “diversity decay” component.
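The α comparison in Figure 7 amounts to recomputing the EVS for each candidate α and reading off the upper end of the long-tail portion. The sketch below interprets the “20% long tail” as the lowest 20% of scores (our reading) and uses `statistics.quantiles` to find its upper bound. The transform `evs_fn(x, alpha)` is a hypothetical parameter, so any implementation of Formulas (2) to (4) can be supplied.

```python
from statistics import quantiles
from typing import Callable, Dict, Iterable, List


def lower_tail_bound(scores: List[float], fraction: float = 0.2) -> float:
    """Upper end of the lowest `fraction` of scores (fraction must be 1/m, e.g. 0.2)."""
    n_cuts = round(1 / fraction)
    # With n_cuts groups, the first cut point is the `fraction` quantile.
    return quantiles(scores, n=n_cuts, method="inclusive")[0]


def sweep_alpha(clip_scores: List[float], alphas: Iterable[float],
                evs_fn: Callable[[float, float], float]) -> Dict[float, float]:
    """For each alpha, transform all CLIP scores and report the 20% tail bound."""
    return {a: lower_tail_bound([evs_fn(x, a) for x in clip_scores]) for a in alphas}
```

Comparing the bounds for α = 0.1, 0.2, and 0.3 against the bound obtained without the diversity term would reproduce the kind of shift described above (about 5 units for α = 0.2), given the paper's actual transform and data.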

4.4. Limitations of the Experimental Design

This study’s primary limitation is the dataset’s nature and the absence of a direct human-in-the-loop evaluation. Our experimental dataset was derived by converting well-known artworks into sketch-and-description pairs. This approach, while indirect, was a deliberate choice for this initial phase of research for two reasons:

- Establishing a controlled and reproducible first step: The primary goal of the current work was to establish the feasibility and internal validity of the proposed mechanism, particularly focusing on the Emotion Vision Score (EVS). Human creativity is inherently subjective and variable, making it difficult to control in initial experiments. We could create a standardized, reproducible benchmark by using famous art movements with well-documented stylistic and emotional contexts (e.g., the angst of Expressionism and the geometry of Cubism). This allowed us to systematically test whether the EVS metric behaves as theoretically intended across diverse artistic styles before introducing the complexities of an on-site user study.

- Simulating the generalized creative process: Converting a finished artwork into a line-drawing sketch reasonably simulates an artist’s initial compositional idea. The analysis of the original artwork provides a proxy for the rich “Text Description” that an artist might provide about their vision. This allowed us to provide a proof of concept for the entire mechanism—from the initial concept to the inspirational output—and validate that the mechanism could generate significantly different results based on various emotional prompts.

However, we recognize that this simulation is not a substitute for real-world application. The true measure of an inspirational tool lies in its ability to assist living artists in their unique creative journeys.

4.5. Benchmarking of the EVS Metric

A primary challenge in benchmarking the EVS is the lack of a “gold standard” computational metric for artistic inspiration. Standard image metrics like SSIM (Structural Similarity Index Measure), LPIPS (Learned Perceptual Image Patch Similarity) [26,27], or even the CLIP score itself are designed to measure fidelity or semantic similarity, operating on the principle that higher similarity to a reference is always better.

In contrast, the EVS is based on the non-monotonic hypothesis that inspiration is maximized not by perfect replication but by finding an optimal balance between conceptual alignment and creative novelty. Consequently, directly comparing the EVS to these monotonic metrics is not a meaningful benchmark, as they measure fundamentally different qualities. Instead, validating the EVS should involve assessing whether it is theoretically sound, internally consistent, and, most importantly, predictive of human creative judgment. This study employed several forms of internal validation to establish the EVS’s credibility before a full human-subject benchmark:

- Face Validity: The mathematical formulation of the EVS, combining a double-sigmoid and a diversity decay function, was explicitly designed to model the established psychological principle that creativity flourishes in a “sweet spot” between the familiar and the novel. The function’s shape is a direct mathematical representation of this theory, giving it strong face validity.

- Convergent Validity with Domain Knowledge: The results presented in Figure 5 provide a form of convergent validation. The EVS metric generated scores that align with established knowledge from art history. For instance, “Cubism”, an art form defined by its geometric structure, received a high EVS even from the initial sketch, whereas “Abstract Expressionism”, which relies heavily on color and gesture, received a lower initial score but showed significant improvement after emotion weaving. This demonstrates that the EVS is sensitive to stylistic nuances in a way that is consistent with expert human understanding.

- Parameter Sensitivity Analysis: The analysis of the diversity decay component (α) in Figure 7 serves as a sensitivity analysis. It shows that the metric behaves predictably as its internal parameters are adjusted, confirming that it is a stable and well-behaved mathematical function.

5. Conclusions

Our research aims to establish a mechanism that helps artists find inspiration and develop ideas during their creative process. By focusing on emotions as the driving force, we intend to assist artists in capturing their creative emotions and using them as guidance or visions to help them create masterpieces. The overall process is an iterative inspiration cycle with feedback loops that allow continuous refinement, demonstrating an incremental methodology for crafting artwork.

Within the mechanism, several innovative designs have been proposed. We design the “Emotion Vision” images to inspire artists in their creative journeys. The “Emotion Vision” images are produced from sketch line drawings and creative emotion elements, helping the artist capture creative emotional energy digitally and intelligently. The experimental results show that our mechanism has a positive inspirational effect compared with the traditional art creation method, particularly for “Abstract Expressionism” and “Impressionism” artworks.

In addition, we propose a dedicated metric, the Emotion Vision Score, for measuring emotional inspiration, based not on image quality but on how effectively the “Emotion Vision” images can inspire artists. This metric balances sketch intentions with creative emotion and also considers the diversity of inspiration, helping the mechanism select the most effective “Emotion Vision” images.

Here, we use several cloud-based multimodal LLM services and generative AI models to build the complete inspiration mechanism. This usage pattern raises interesting questions. The various LLMs are like different human beings: the personality or properties of each LLM can produce different effects on creativity and inspiration. As we begin to rely on them to help create artwork, the boundary between originality and intelligence in the art world becomes the next topic that needs to be discussed. In addition, the actual effectiveness of an inspirational mechanism can be assessed by how well it helps living artists in their creative journeys. A thorough human-in-the-loop evaluation is the necessary next step to validate these findings. This future research will connect our computational metrics with the direct, subjective experience of artistic inspiration.

In summary, this novel approach integrates emotional intelligence into AI for art creation, enabling it to understand and replicate human emotion in its outputs. By enhancing emotional depth and generative AI consistency, this research aims to advance digital art creation and contribute to the evolution of artistic expression through AI technology.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to copyright restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| GAI | Generative Artificial Intelligence |
| LLM | Large Language Model |
| EVS | Emotion Vision Score |

References

- Zhou, E.; Lee, D. Generative artificial intelligence, human creativity, and art. PNAS Nexus 2024, 3, pgae052.

- Boonstra, L. Prompt Engineering (White Paper). Available online: https://www.kaggle.com/whitepaper-prompt-engineering (accessed on 23 April 2025).

- Deonna, J.; Teroni, F. The creativity of emotions. Philos. Explor. 2025, 28, 165–179.

- Galanos, T.; Liapis, A.; Yannakakis, G.N. AffectGAN: Affect-Based Generative Art Driven by Semantics. In Proceedings of the 2021 9th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), Nara, Japan, 28 September–1 October 2021; IEEE: New York, NY, USA, 2021; pp. 1–7.

- Rothenberg, A. Creative Emotions and Motivations. In Flight from Wonder: An Investigation of Scientific Creativity; Oxford University Press: Oxford, UK, 2014; pp. 59–72.

- Naa Anyimah Botchway, C. Emotional Creativity. In Creativity; Brito, S.M., Thomaz, J., Eds.; IntechOpen: London, UK, 2022.

- Sundquist, D.; Lubart, T. Being Intelligent with Emotions to Benefit Creativity: Emotion across the Seven Cs of Creativity. J. Intell. 2022, 10, 106.

- Čábelková, I.; Dvořák, M.; Smutka, L.; Strielkowski, W.; Volchik, V. The predictive ability of emotional creativity in motivation for adaptive innovation among university professors under COVID-19 epidemic: An international study. Front. Psychol. 2022, 13, 997213.

- Rooij, A.; Corr, P.J.; Jones, S. Creativity and Emotion: Enhancing Creative Thinking by the Manipulation of Computational Feedback to Determine Emotional Intensity. In Proceedings of the 2017 ACM SIGCHI Conference on Creativity and Cognition, Singapore, 27–30 June 2017; pp. 148–157.

- Wu, Z.; Gong, Z.; Ai, L.; Shi, P.; Donbekci, K.; Hirschberg, J. Beyond silent letters: Amplifying llms in emotion recognition with vocal nuances. arXiv 2024, arXiv:2407.21315.

- Alzoubi, A.M.A.; Qudah, M.F.A.; Albursan, I.S.; Bakhiet, S.F.A.; Alfnan, A.A. The Predictive Ability of Emotional Creativity in Creative Performance Among University Students. SAGE Open 2021, 11, 215824402110088.

- Koley, S.; Bhunia, A.K.; Sekhri, D.; Sain, A.; Chowdhury, P.N.; Xiang, T.; Song, Y.Z. It’s All About Your Sketch: Democratising Sketch Control in Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024.

- Prudviraj, J.; Jamwal, V. Sketch & Paint: Stroke-by-Stroke Evolution of Visual Artworks. arXiv 2025, arXiv:2502.20119.

- Chatterjee, S. DiffMorph: Text-less Image Morphing with Diffusion Models. arXiv 2024, arXiv:2401.00739.

- Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper & Row: New York, NY, USA, 1990.

- Deci, E.L.; Ryan, R.M. Self-determination theory. In Handbook of Theories of Social Psychology; Sage Publications Ltd.: Thousand Oaks, CA, USA, 2012; Volume 1, pp. 416–436.

- Finke, R.A.; Ward, T.B.; Smith, S.M. Creative Cognition: Theory, Research, and Application; MIT Press: Cambridge, MA, USA, 1996.

- Hogan, S. Art Therapy Theories: A Critical Introduction; Routledge: Abingdon, UK, 2015.

- Gero, J.S. Creativity, emergence and evolution in design. Knowl.-Based Syst. 1996, 9, 435–448.

- Adeyekun, A.J. The Elements and Principles of Art. 2019, p. 1. Available online: https://www.academia.edu/100095283/THE_ELEMENTS_AND_PRINCIPLES_OF_ART (accessed on 23 April 2025).

- Clarke, A.; Hulbert, S.; Summers, F. Towards a Fair, Rigorous and Transparent Fine Art Curriculum and Assessment Framework. Arts 2018, 7, 81.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J. Learning transferable visual models from natural language supervision. In Proceedings of the 38th International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 8748–8763.

- Zhou, M.; Wang, Z.; Zheng, H.; Huang, H. Long and short guidance in score identity distillation for one-step text-to-image generation. arXiv 2024, arXiv:2406.01561.

- Roper, L. Using Sigmoid and Double-Sigmoid Functions for Earth-States Transitions. 2000. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=718b9fdd2ed27d0193179ec0eb01a2ac622d25e3 (accessed on 23 April 2025).

- Meecham, P.; Sheldon, J. Modern Art: A Critical Introduction, 2nd ed.; Routledge: Abingdon, UK, 2005.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 586–595.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).