Abstract

In this paper, we propose a sparse partial envelope model that performs response variable selection efficiently under the partial envelope model. We discuss its theoretical properties, including consistency, the oracle property and the asymptotic distribution of the sparse partial envelope estimator. Both the large-sample situation and the high-dimensional situation are considered. Numerical experiments demonstrate that the sparse partial envelope estimator has excellent response variable selection performance in both situations. Moreover, simulation studies and real data analysis suggest that the sparse partial envelope estimator is considerably more competitive than the standard estimator, the oracle partial envelope estimator, the active partial envelope estimator and the sparse envelope estimator in both the large-sample situation and the high-dimensional situation.

Keywords:

response variable selection; dimension reduction; partial envelope model; Grassmann manifold; oracle property

MSC:

62B05; 62H12; 62R07

1. Introduction

Consider the multivariate linear regression model with stochastic predictor vector $X \in \mathbb{R}^{p}$ and multivariate response vector $Y \in \mathbb{R}^{r}$,

$$Y = \mu + \beta X + \varepsilon, \qquad (1)$$

where $\mu \in \mathbb{R}^{r}$ is the unknown intercept, $\beta \in \mathbb{R}^{r \times p}$ is the unknown regression coefficient matrix, and the error vector $\varepsilon$ has mean zero and unknown covariance matrix $\Sigma$ and is independent of X. The data comprise n independent realizations of Y, which are observed at corresponding values of X. The multivariate linear regression model (1) is a cornerstone of multivariate statistics, and studying the relationship between X and Y through the regression coefficient matrix $\beta$ is its main focus. In this article, we consider not only the relationship between X and Y but also response variable selection. Even if a response has zero coefficients, it can still improve the estimation efficiency of the nonzero coefficients. In this paper, we suppose that since one of the main focuses of this article is response variable selection.

Cook et al. [] introduced the response envelope model, which is based on the idea that a projection of the response vector Y may be immaterial to the purpose of estimating , while still contributing extraneous variation that makes the estimator of more variable. Envelope estimation accounts for such extraneous variation, which can make the estimator of much more efficient. Building on the results of Cook et al. [], many authors have extended the idea of envelopes to more general settings and have proposed new models to obtain greater efficiency gains (Su and Cook [,,], Cook et al. [], Su et al. [], Cook and Zhang [,,], Khare et al. [], Li and Zhang [], Zhang and Li [], Pan et al. [], Zhu and Su []).

Su et al. [] proposed the sparse envelope model, which carries out variable selection on the responses while maintaining the efficiency gains provided by the envelope model, and discussed response variable selection in both the standard multivariate linear regression and the envelope setting. They also established consistency and the oracle property and derived the asymptotic distribution of the sparse envelope estimator. Zhu and Su [] proposed envelope-based sparse partial least squares (SPLS) by exploiting a connection between envelope models and partial least squares (PLS). They established the consistency, oracle property and asymptotic normality of the envelope-based SPLS estimator in both the large-sample scenario and the high-dimensional scenario. In addition, they developed envelope-based SPLS estimators in the setting of generalized linear models and discussed their theoretical properties, including consistency, the oracle property and the asymptotic distribution.

In this article, building on the work of Su et al. [], we propose a novel sparse partial envelope model that performs response variable selection and improves parameter estimation efficiency. Our major contributions are as follows. First, we study the theoretical properties of the sparse partial envelope estimator. We establish $\sqrt{n}$-consistency, asymptotic normality and the oracle property in the large-sample situation and investigate the rate of convergence and selection consistency in the high-dimensional situation. Second, through simulation studies, we find that the sparse partial envelope model has excellent response variable selection performance in both small r, large n situations and small n, large r situations. Furthermore, the sparse partial envelope estimator is much more efficient than the standard estimator, the oracle partial envelope estimator, the active partial envelope estimator and the sparse envelope estimator in small r, large n situations. Third, via real data analysis, we find that the sparse partial envelope model has a clear efficiency gain over the sparse envelope model in small n, large r situations. In short, the sparse partial envelope model is more flexible than the sparse envelope model.

The rest of the article is organized as follows. Section 2 reviews the envelope model and the partial envelope model. Section 3 introduces the sparse partial envelope model. Section 4 presents the theoretical properties of the sparse partial envelope estimators. Simulation studies are carried out in Section 5. A real data analysis is given in Section 6. Some remarks are given in Section 7. The proofs of theorems and propositions are provided in the Appendix.

2. Review of the Envelope Model and Partial Envelope Model

The envelope model (Cook et al. []) is designed to discover the smallest subspace that satisfies the following two conditions:

The sign ‘∼’ represents identically distributed, and the sign ‘⨿’ represents statistically independent. The symbol projects onto the subspace and . Property (a) implies that the distribution of does not rely on X, so has no information about . Property (b) implies that is conditionally independent of given X, and thus, cannot transmit information about by virtue of a link with . The entire immaterial information in Y can be obtained by detecting the smallest subspace that satisfies the requirements in (2). Let . Cook et al. [] showed that conditions (2) are equal to the following two conditions:

Condition (2b) holds if and only if and are uncorrelated given X, and it is equivalent to claiming that is a reducing subspace of . Conditions (3) indicate that we can obtain all of the immaterial information by selecting to be the intersection of all reducing subspaces of that include , which is called the -envelope of and denoted by or shortened to .
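The reducing-subspace property can be checked numerically. The following is a minimal sketch, assuming the usual envelope notation (the symbols `Gamma`, `Gamma0`, `Omega`, `Omega0`, `Sigma`, `eta` and the dimensions are illustrative choices, not taken from the text above): when the error covariance decomposes along a subspace and its orthogonal complement, the two projected parts of the response are uncorrelated, and a coefficient matrix whose columns lie in that subspace has no component in the complement.

```python
import numpy as np

rng = np.random.default_rng(0)
r, u = 6, 2  # hypothetical response dimension and envelope dimension

# Random orthogonal matrix: the first u columns play the role of Gamma,
# the remaining r - u columns play the role of its completion Gamma0.
O, _ = np.linalg.qr(rng.standard_normal((r, r)))
Gamma, Gamma0 = O[:, :u], O[:, u:]


def random_spd(k):
    """Return a random k x k symmetric positive definite matrix."""
    B = rng.standard_normal((k, k))
    return B @ B.T + k * np.eye(k)


# Covariance that decomposes along span(Gamma) and its orthogonal complement.
Omega, Omega0 = random_spd(u), random_spd(r - u)
Sigma = Gamma @ Omega @ Gamma.T + Gamma0 @ Omega0 @ Gamma0.T

P = Gamma @ Gamma.T      # projection onto span(Gamma)
Qc = np.eye(r) - P       # projection onto the orthogonal complement

# Reducing subspace: the cross-covariance between the two projected
# parts vanishes, and Sigma splits into the two diagonal pieces.
cross_cov = P @ Sigma @ Qc
decomposition_gap = Sigma - (P @ Sigma @ P + Qc @ Sigma @ Qc)

# A coefficient matrix with columns in span(Gamma) has no immaterial part.
eta = rng.standard_normal((u, 3))
beta = Gamma @ eta
immaterial_signal = Qc @ beta

print(np.abs(cross_cov).max(), np.abs(decomposition_gap).max(),
      np.abs(immaterial_signal).max())
```

All three printed quantities should be zero up to floating-point error, which is the numerical counterpart of saying that the subspace reduces the covariance matrix and contains the span of the coefficient matrix.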

Let , and let and denote semi-orthogonal basis matrices for and separately. Via imposing conditions (3) on the standard model (1), the coordinate form of the envelope model can be expressed as below:

where , is the coordinates of with respect to , and and are both positive definite matrices. Su and Cook [] extended the envelope model to the partial envelope model. The partial envelope focuses on the coefficients that correspond to the predictors of interest. They partition X into two sets of predictors and , , , and partition the columns of correspondingly into and . Then, model (1) can be rewritten as , where corresponds to the coefficients of interest. The -envelope for is considered, leaving as an unrestricted parameter. This generates the parametric structure and , where denotes the projection onto , called the partial envelope for . This is the same as the envelope structure, except that the partial envelope is associated with instead of the larger space . To distinguish it from the partial envelope, is referred to as the full envelope. Because , the partial envelope is contained in the full envelope, . Similar descriptions of the envelope model and the partial envelope model can be found in Zhang et al. [], Zhang et al. [], and Zhang and Huang [].

Let , and let be a semi-orthogonal matrix with , and its columns form a basis for . Let be an orthogonal matrix and be the coordinates of related to the basis matrix . Then, a coordinate version of the partial envelope model can be written as follows:

where and are both positive definite matrices, and they serve as coordinates of and separately which are related to the basis matrices for and . For a more in-depth description, represents the population residuals from the multivariate linear regression of on . The predictor X is centered. In this way, the linear model can be re-parameterized as , where is a linear combination of and . Next, denotes the population residuals from the regression of Y on alone. A linear model which contains alone is written as . The partial envelope is identical to the full envelope for in the regression of on . In other words, the partial envelope can be interpreted in terms of , which is applied to the regression of on . The predictors are centered, so the maximum likelihood estimator of is simply . The estimators of the remaining parameters require the estimator of . See Zhang et al. [] for a similar characterization.
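The residual-based interpretation above rests on a standard least squares fact (the Frisch-Waugh-Lovell theorem): the coefficient block for the predictors of interest in the full regression equals the coefficient from regressing the residuals of Y on the residuals of the predictors of interest, both taken with respect to the remaining predictors. The sketch below illustrates this on simulated data; the names `X1`, `X2`, `beta1`, `beta2` and all dimensions are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n, r, p1, p2 = 200, 4, 2, 3  # hypothetical sample size and dimensions

X1 = rng.standard_normal((n, p1))                      # predictors of interest
X2 = rng.standard_normal((n, p2)) + 0.5 * X1[:, [0]]   # correlated covariates
beta1 = rng.standard_normal((r, p1))
beta2 = rng.standard_normal((r, p2))
Y = X1 @ beta1.T + X2 @ beta2.T + rng.standard_normal((n, r))

# Center everything so that the intercept drops out.
Yc = Y - Y.mean(axis=0)
X1c = X1 - X1.mean(axis=0)
X2c = X2 - X2.mean(axis=0)


def residuals(T, Z):
    """Residuals from the least squares regression of T on Z."""
    coef, *_ = np.linalg.lstsq(Z, T, rcond=None)
    return T - Z @ coef


# Full regression of Y on (X1, X2): keep the block for X1.
B_full, *_ = np.linalg.lstsq(np.hstack([X1c, X2c]), Yc, rcond=None)
beta1_full = B_full[:p1].T

# Residual-on-residual regression after removing X2 from both sides.
R_Y = residuals(Yc, X2c)
R_X1 = residuals(X1c, X2c)
B_res, *_ = np.linalg.lstsq(R_X1, R_Y, rcond=None)
beta1_res = B_res.T

print(np.abs(beta1_full - beta1_res).max())
```

The two estimates agree up to rounding, which is why the partial envelope can be studied through the regression of one set of residuals on the other.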

The estimator of the partial envelope is acquired by solving the following optimization problem:

where denotes an Grassmann manifold, which is the set of all -dimensional subspaces in an r-dimensional space, denotes the sample covariance matrix of the residuals from the least squares regression of on , and denotes the sample covariance matrix of . Because the optimization is carried out over , (6) is a Grassmann manifold optimization problem, and its objective function is non-convex. Because the estimation of involves manifold optimization, it can be slow in high-dimensional settings. To address these issues, Cook et al. [] converted the problem into a non-manifold optimization through a reparameterization of . In general, , which is composed of the first rows of , is assumed to be nonsingular. Then,

where and . Note that characterizes since A depends on only through . Because A is unconstrained, under this parameterization, the optimization problem in (6) is converted to the following unconstrained non-manifold optimization problem:

Once we obtain from (7), , and then the partial envelope estimator of is , where is the ordinary least squares estimator of . By the results of Su and Cook [], the partial envelope estimator is as efficient as or more efficient than .
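The reparameterization can be illustrated numerically. The sketch below assumes the standard envelope-type objective, a sum of two log-determinants of quadratic forms in the basis matrix; the exact matrices in (6) and (7) are not reproduced here, so `S1` and `S2` are generic positive definite stand-ins, and `Gamma`, `G1`, `G2`, `A`, `C_A` are illustrative names. It checks that the unconstrained matrix A spans the same subspace as the original basis and that the constrained and unconstrained objectives take the same value.

```python
import numpy as np

rng = np.random.default_rng(2)
r, u = 6, 2  # hypothetical dimensions

# A semi-orthogonal basis and its two row blocks.
Gamma, _ = np.linalg.qr(rng.standard_normal((r, u)))
G1, G2 = Gamma[:u], Gamma[u:]        # G1 is assumed nonsingular
A = G2 @ np.linalg.inv(G1)           # unconstrained (r - u) x u parameter
C_A = np.vstack([np.eye(u), A])      # Gamma = C_A @ G1

# Same subspace: the two projection matrices coincide.
P_gamma = Gamma @ Gamma.T
P_CA = C_A @ np.linalg.solve(C_A.T @ C_A, C_A.T)


def random_spd(k):
    """Return a random k x k symmetric positive definite matrix."""
    B = rng.standard_normal((k, k))
    return B @ B.T + k * np.eye(k)


def logdet(M):
    """Log-determinant of a positive definite matrix."""
    _, value = np.linalg.slogdet(M)
    return value


def manifold_objective(G, S1, S2):
    """Assumed envelope-type objective for a semi-orthogonal basis G."""
    return logdet(G.T @ S1 @ G) + logdet(G.T @ np.linalg.inv(S2) @ G)


def unconstrained_objective(A, S1, S2):
    """The same objective written in terms of the unconstrained A."""
    C = np.vstack([np.eye(A.shape[1]), A])
    return (-2.0 * logdet(C.T @ C)
            + logdet(C.T @ S1 @ C)
            + logdet(C.T @ np.linalg.inv(S2) @ C))


S1, S2 = random_spd(r), random_spd(r)
f_manifold = manifold_objective(Gamma, S1, S2)
f_free = unconstrained_objective(A, S1, S2)
print(f_manifold, f_free)
```

The extra term involving the log-determinant of `C.T @ C` compensates for the fact that `C_A` is not semi-orthogonal, so a general-purpose unconstrained optimizer over A can replace the manifold search.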

3. Sparse Partial Envelope Model

We first define active responses and inactive responses. Following Su et al. [], a response variable is called inactive if its corresponding row of consists of zeros, and active if its corresponding row of contains nonzero values. Because , the regression coefficients of the inactive responses are zero. However, an active response may also have zero regression coefficients. Properties of the active responses were studied by Su et al. [] (Proposition 1) in the context of the sparse response envelope. Analogous results hold for the sparse partial envelope model: if the regression coefficients of an active response are all zero, then the response must be related to a response that has nonzero regression coefficients. This shows that an active response with zero regression coefficients still provides information for estimating the nonzero regression coefficients. This is a distinctive feature of response variable selection.

Without loss of generality, we can write , and let q denote the dimension of , where denotes the active responses and denotes the inactive responses. The subscripts and are attached to a quantity when it is associated with the active and inactive responses, respectively. For instance, and denote the numbers of active and inactive responses, and . Then, , and for the partial envelope model (5) have the following sparse structure:

where is a semi-orthogonal matrix, is its completion, is an orthogonal matrix, and denotes the coefficients for the active responses and the zero matrix has the dimension . Because , the inactive responses do not occur in the material part.

We call (5) the sparse partial envelope model if , and have the sparse structure (8). Its estimator of is the sparse partial envelope estimator. Under the sparse partial envelope model, also has a sparse structure, and we denote the coefficients for the active responses as and the zero matrix has the dimension . The completion of has the general form , where is a completion with a block diagonal structure, and R represents a rotation of the orthogonal basis. The dimension of should be at most the dimension of , so . When , there is no immaterial information in the active responses for the sparse envelope model, and , but as long as , there is still immaterial information in the active responses for the sparse partial envelope model. When , there are no inactive responses and all rows in are nonzero. Then, the sparse partial envelope model is equivalent to the partial envelope model.

The parameterization of A maintains the sparse structure of . In other words, indicates that a row in is composed of all zeros if and only if the corresponding row in A is composed of all zeros. Hence we can determine the inactive responses from the sparsity structure of A. In order to make the estimator of a sparse estimator, the row-wise sparsity in A is induced by adding an adaptive group lasso penalty to the objective function in (7):

where is the norm of a vector, denotes the ith row of A, is the tuning parameter and the s are the adaptive weights. According to Zou [], we set , where is a tuning parameter and is a -consistent estimator of . The tuning parameter can be selected from a small candidate set such as (Zou []).
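The penalty term can be sketched as follows. This is a minimal illustration, assuming the adaptive weight of a row is the norm of the corresponding row of an initial estimate raised to a negative power, in the spirit of Zou's adaptive lasso; the function names, the tuning values and the small matrices are hypothetical and chosen only so the arithmetic is easy to follow.

```python
import numpy as np


def adaptive_weights(A_init, gamma):
    """Row-wise adaptive weights from an initial estimate.

    Rows of A_init with a small norm receive a large weight. A row that is
    exactly zero would give an infinite weight, so the initial estimate is
    assumed to have no exactly-zero rows.
    """
    return np.linalg.norm(A_init, axis=1) ** (-gamma)


def group_lasso_penalty(A, lam, weights):
    """Adaptive group lasso penalty: lam * sum_i weights[i] * ||a_i||_2."""
    return lam * np.sum(weights * np.linalg.norm(A, axis=1))


def zero_rows(A, tol=1e-10):
    """Indices of rows of A that are numerically zero (inactive responses)."""
    return np.where(np.linalg.norm(A, axis=1) <= tol)[0]


# Illustrative initial estimate with row norms 5, 0.5 and 1.
A_init = np.array([[3.0, 4.0],
                   [0.3, 0.4],
                   [0.0, 1.0]])
weights = adaptive_weights(A_init, gamma=1.0)

# Illustrative penalized solution in which the second row was shrunk to zero.
A_hat = np.array([[3.0, 4.0],
                  [0.0, 0.0],
                  [0.0, 2.0]])
penalty = group_lasso_penalty(A_hat, lam=0.5, weights=weights)
inactive = zero_rows(A_hat)
print(weights, penalty, inactive)
```

Because the weight of a row grows as its initial norm shrinks, rows that look inactive in the initial estimate are penalized more heavily, which is what drives entire rows of A to zero.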

If r grows to infinity with n, we denote r by . When , and are both singular. This is problematic because the objective function in (9) relies on and the optimization algorithm employed to solve (9) needs . These issues can be resolved by employing sparse permutation invariant covariance estimation (SPICE; Rothman et al. []) to obtain estimators of and . We choose SPICE because it is the only such estimator that does not need a sparsity structure for the target parameter in order to establish the consistency of its estimator; in the sparse partial envelope model, and may not involve zero elements. We denote the SPICE estimators of and by and . Then, we obtain and by inverting and , substitute and for and in (9), and the objective function becomes

The optimizations of (9) and (10) are similar to the optimization problems discussed in Su et al. [] and Zhu and Su []. Once we obtain from (9) or (10), can be formed by employing an orthogonal basis of , and is taken as a complement of . The sparse partial envelope estimators of and are

The estimators for the constituent parameters are and . Apart from the fact that and have the special structures in (8), the sparse partial envelope estimators have the same form as the partial envelope estimators. Because the sparse partial envelope estimator is asymptotically equivalent to the maximum likelihood estimator of the oracle partial envelope model (see Section 4), we can employ likelihood-based procedures such as the Akaike information criterion, the Bayesian information criterion or likelihood ratio testing to select . For selecting and , we prefer cross-validation over the Bayesian information criterion and other likelihood-based procedures.

4. Theoretical Properties of the Sparse Partial Envelope Estimator

In this section, we investigate the theoretical properties of the sparse partial envelope estimator. Theorems 1–3 provide the consistency and oracle properties of the sparse partial envelope estimator in the large-sample scenario, in which r is fixed and n tends to infinity. Theorems 4 and 5 give the selection consistency and convergence rate in the scenario in which both and n tend to infinity. Set , and let and .

Theorem 1.

Theorem 1 establishes the $\sqrt{n}$-consistency of the sparse partial envelope estimators of and . Notice that although the objective function for the sparse partial envelope estimator originates from the normal likelihood, we do not require normality to establish the $\sqrt{n}$-consistency of and .

Proof of Theorem 1.

We denote the objective function in (9) as . In order to prove Theorem 1, we will demonstrate that for any small , there exists a sufficiently large constant C, such that

If (12) holds, then there exists a local minimizer of such that . Hence, is a $\sqrt{n}$-consistent estimator of A. Because is a function of A only, is a $\sqrt{n}$-consistent estimator of . Since , and is a $\sqrt{n}$-consistent estimator of , it follows that is a $\sqrt{n}$-consistent estimator of .

Now, we only need to prove (12). We compute by employing the Taylor expansion. Because the form of is a little complex, we write it into four parts

We first center on and then expand ,

where and are the first and second directional derivatives (Dattorro []). The first directional derivative is

The second directional derivative is

Let

then

Substitute and into the expansion for , and we can acquire

Now, we expand . The first directional derivative is

Let and be the variance matrix of , the variance matrix of and the covariance matrix of and in population, and let and be the corresponding sample versions. Then, taking advantage of Cook and Setodji [],

where is the centred data matrix of , whose ith row is . Because and ,

where by means of the central limit theorem, each element in , , and converges in distribution to a normal random variable with mean 0. As

we can expand as

The second equality is because , so

and

By the Cauchy–Schwarz inequality for the matrix trace (Magnus and Neudecker []),

The second directional derivative of is

Substitute and into the expansion for , and we obtain

Notice that and have a similar structure, except that is replaced by . Let . By means of the central limit theorem, converges in distribution to a normal random variable with mean 0. Then, we implement the same expansion to and obtain

Now, we expand . Let be the ith row of , then

The second inequality is based on the Taylor expansion at . When as , . Combine the results for , , and , and we have

Let be the smallest eigenvalue of M. The matrix M appears in (5.7) of Cook et al. []. By Shapiro [], M is a positive definite matrix and . Then, we have

where is the smallest eigenvalue of . When for sufficiently large C, the terms with order dominate the terms with order . When for sufficiently large C, conclusion (12) follows. □

Theorem 2.

Suppose that the conditions in Theorem 1 hold, and further suppose that . Then, for .

Theorem 2 establishes the selection consistency of the sparse partial envelope estimator and shows that the inactive responses are identified by the sparse partial envelope model with the probability tending to 1.

Proof of Theorem 2.

We prove Theorem 2 by contradiction. Assume that for . Let be the ith column of . The first derivative of concerning should be 0 evaluated at the local minimum . The first derivative of concerning is

Since , and are $\sqrt{n}$-consistent estimators of , and A, we have and , and therefore

Then,

On the other hand, let v be the element in that has the largest absolute value; then , where denotes the absolute value. Because we have , there is at least one element in that tends to infinity. Together with (13), this contradicts (14). Hence, for , we have . □

Next, we define the oracle partial envelope estimator and study its properties. Under the partial envelope model, the inactive response involves information on through its covariance with the active response. Then, the oracle partial envelope model is defined as

The oracle partial envelope model (15) is analogous to the sparse partial envelope models (5) and (8), and the difference between them is that, in (15), we know q and which rows in and are formed from only zeros. A subscript ‘O’ is attached if an estimator is the oracle partial envelope estimator. Let be the sample covariance matrix of the residuals from the regression of on , and let be the upper left block of . Let . Under the basis , we denote the coordinates as , where

Proposition 1 provides the maximum likelihood estimator and its asymptotic distribution. Let . The symbol ‘’ denotes a convergence in the distribution.

Proposition 1.

Suppose that the oracle partial envelope model (15) holds and the errors are normally distributed. Then, the maximum likelihood estimator of under the oracle partial model is , where

Furthermore,

where , and .

By means of Proposition 1, we can find that occurs in the objective function for and hence influences . The active partial envelope model that involves only the active partial responses is defined as follows:

Proposition 2.

Suppose that the conditions in Proposition 1 hold. Then, the maximum likelihood estimator of under the active partial envelope model is , where

Furthermore,

where , and .

From Proposition 2, we see that , so , and the oracle partial envelope model (15) is more efficient than the active partial envelope model (16) in estimating . Hence, containing also improves efficiency in the oracle partial envelope model.

Proof of Proposition 1 and Proposition 2.

Proposition 2 follows from the standard theory of the partial envelope model in Su and Cook []. Next, we prove Proposition 1. In this part of the proof, we do not use the normality of the errors but only require that the errors have finite fourth moments. The derivation of the maximum likelihood estimator of is analogous to the derivation of the maximum likelihood estimator of under the partial envelope model in Su and Cook []. We use Proposition 4.1 in Shapiro [] to obtain the asymptotic variance. First, we match our notation with Shapiro’s and check the assumptions of Proposition 4.1 in Shapiro []. As in the proof of Theorem 2 in Su and Cook [], we can verify that when the errors have finite fourth moments, x is asymptotically normally distributed. We use Shapiro’s to denote our . Let l be the log-likelihood function in (14) and let be its maximum value. The minimum discrepancy function is defined as . Since is obtained from the normal likelihood function, it satisfies the four conditions in Section 3 of Shapiro []. We use Shapiro’s to denote our . Hence, the function g connects and , and is twice differentiable. All the conditions of Proposition 4.1 in Shapiro [] are therefore satisfied. Let be the estimator of under the oracle partial envelope model (15); then is asymptotically normally distributed with zero mean and some covariance matrix.

We now use the normality of the errors to provide closed-form expressions for the asymptotic variance of . Proposition 4.1 in Shapiro [] shows that the asymptotic variance has the form , where ‘†’ denotes the Moore–Penrose inverse, J is the Fisher information, and H is the Jacobian matrix

where , and is a commutation matrix (Magnus and Neudecker []). After some algebra, we can obtain the closed form for the asymptotic variance of :

in distribution, where , and . Notice that we ignore , and in the J and H matrices. This does not influence the results since they are not contained in the parameterization of and , and their maximum likelihood estimates are asymptotically independent of the estimates of and . □

Next, we continue to discuss the theoretical properties of the sparse partial envelope estimator.

Theorem 3.

Suppose that the conditions in Theorem 2 hold. Then, as , is asymptotically normally distributed with mean zero and asymptotic variance is equal to that of . Further, assume that the errors are normally distributed, then we have a closed form for the asymptotic variance V: , where .

From Theorem 3, we can see that the sparse partial envelope estimator is asymptotically normal, with the same asymptotic variance as the oracle partial envelope estimator. Together with Theorem 2, it shows that the sparse partial envelope estimator enjoys the oracle property: the sparse partial envelope model identifies the inactive responses with probability tending to 1 and estimates the coefficients for the active responses with the same efficiency as the oracle partial envelope model.

Proof of Theorem 3.

Let denote the nonzero rows in the sparse partial envelope estimator , and let denote the nonzero rows in the oracle partial envelope estimator. Suppose we can prove for a sequence ; then with . Hence, , so in probability. By Slutsky’s theorem, has the same asymptotic distribution as . Therefore, if we can prove for , the conclusion of Theorem 3 follows. Since , . For the choice of , we can take .

We set B to be a matrix, and

The objective function to estimate B is

where is the ith row of B. Since the sparse partial envelope model enjoys the selection consistency, . For the sake of proving , it is sufficient to demonstrate that for any small , there exists a sufficiently large constant C, such that

If (17) holds, then we have for . Now, we prove (17). Following the proof of Theorem 1, we expand and calculate . The objective function can be partitioned into four parts: . The first directional derivatives of and at are

Because is a minimizer of ,

Then, .

The computation on the second directional derivatives of and at and the expansion of are parallel to those in Theorem 1. Combining all those terms together, we have

where includes the nonzero rows in A and . According to the definition of , we have , so the second term is dominated by the first term. If the trace in the first term is positive, then we can establish (17), and we have

where is the smallest eigenvalue of , and is the smallest eigenvalue of . The derivation of the last inequality is identical to the derivation of a similar inequality at the end of the proof of Theorem 1. □

Now, we discuss the convergence rate and selection consistency of the sparse partial envelope estimator when tends to infinity with n. We make the same assumptions as Su et al. []:

- (A1) There exist positive constants and such that and , where and are the largest and smallest eigenvalues of .

- (A2) The samples of are independent and identically sampled from a sub-Gaussian distribution, for example, for some constant and every . Samples of X are independent and identically distributed, and follows a sub-Gaussian distribution, for example, for some constant and every . Let and denote the number of nonzero off-diagonal elements in the lower triangle of and , respectively, and let . We employ to denote the Frobenius norm of a matrix.

Theorem 4.

Theorem 4 shows that the convergence rate of the sparse partial envelope estimator is limited by the convergence rates of and . If a different inverse covariance matrix estimator with a faster convergence rate is used, then the convergence rate of the sparse partial envelope estimator improves accordingly. Assumptions (A1) and (A2) are needed for the consistency of and .

Proof of Theorem 4.

We first show that

where denotes the max norm of a matrix, which is the maximum of the absolute values of all elements in the matrix. In order to build (18) and (19), it is sufficient to demonstrate that there exist positive constants and such that

Let Q be an m-dimensional random vector with mean and covariance matrix that follows a sub-Gaussian distribution. Assuming that are n independent and identically distributed samples of Q, then and

From Lemma 1 in Ravikumar et al. [], there exist positive constants such that

where , and denotes the absolute value. Let for some . By the union bound, there exists a positive constant such that

with probability tending to 1 as .

Let with mean , which has a block diagonal covariance matrix with diagonal blocks being and . Then, there exists the constant such that . For fixed , there exists the constant such that . So, we have

Because , for some , we have

Since , we have

If , then , where is the matrix max norm. Exploiting this fact, for some , we have

and hence, (18) and (19) hold.

Let . The objective function in (10) can be denoted as . Theorem 4 holds if for any small , there exists a sufficiently large constant C such that

Let . Following the computations as in the proof of Theorem 1, we calculate the Taylor expansion of at A and obtain

Notice that

Let be the spectral norm of a matrix. For two matrices and , . So,

and . Then,

Now, we have

So,

Collecting all these inequalities and results together, we apply these inequalities to the terms in the first four lines of , then

where , and . Based on the proof at the end of Theorem 1, there exists the positive constant by Theorem 1 such that

Then, with sufficiently large C, the second order term of dominates the first-order term, and with the probability tending to 1. Therefore, (21) holds, and . Since is a simple and continuous function of A, then .

Because

there exists the constant such that

Since , then

Hence, the sparse partial envelope estimator converges to with rate . □

Theorem 5.

Assume that the conditions in Theorem 4 hold, as and . Then, as , and as .

Theorem 5 establishes the selection consistency of the sparse partial envelope estimator. When grows with n, the sparse partial envelope estimator correctly identifies active and inactive responses with the probability tending to 1.

Proof of Theorem 5.

Let

then is the smallest norm of the non-sparse rows in A. Because

then with the probability tending to 1. This means for . For , . Hence, the sparse partial envelope estimator identifies the nonzero rows with the probability tending to 1.

For , assuming , we take the derivative of concerning and evaluate at , and we have

Since , then we have

But

Because , this is a contradiction. Hence, we have for as . □

5. Simulation Study

In this section, we report the numerical performance of the sparse partial envelope estimator in parameter estimation and response variable selection. We consider both the large-sample setting and the high-dimensional setting. In the first situation, we set , , , , and . The matrix was obtained by orthogonalizing a matrix of independent uniform variates. Then, we employed the structure in (8) to construct , and . The elements in were independent random variables. The errors were simulated independently from distributions, and the error covariance matrix followed the structure , where and was a block diagonal matrix with the upper left block being and the lower right block being . The coefficient was a matrix of independent normal variates. The predictors were generated from a multivariate normal distribution with mean 0 and covariance matrix , and the elements in were generated from independent random variables. The sample sizes were 50, 150, 350, 750, 1350, and 2150, and we generated 200 replications for each sample size. For each sample size, the standard deviation of each element in over the replications was computed; we call these the actual standard deviations of the elements in . The bootstrap standard errors were obtained by calculating the standard deviations over 200 bootstrap samples, as a way to estimate the actual standard deviations. Because of the excessively long computation time, the results for the sparse envelope model were based on 20 replications.
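The bootstrap standard errors described above can be sketched as follows. This is only an illustration under stated assumptions: the text does not say how the bootstrap samples were drawn, so resampling of observation pairs is assumed here, and the ordinary least squares estimator is swapped in for the sparse partial envelope estimator to keep the example self-contained. The function names `ols_coef` and `bootstrap_se` and all simulation settings are hypothetical.

```python
import numpy as np


def ols_coef(X, Y):
    """Ordinary least squares coefficient matrix (r x p) with an intercept."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    B, *_ = np.linalg.lstsq(Xc, Yc, rcond=None)
    return B.T


def bootstrap_se(X, Y, estimator, n_boot=200, seed=0):
    """Elementwise bootstrap standard errors of estimator(X, Y).

    Rows (observations) are resampled with replacement, the estimator is
    recomputed on each resample, and the standard deviation over the
    resamples is returned for every element of the estimate.
    """
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    draws = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resampled row indices
        draws.append(estimator(X[idx], Y[idx]))
    return np.std(np.stack(draws), axis=0, ddof=1)


# Illustrative data: 150 observations, 2 predictors, 3 responses.
rng = np.random.default_rng(3)
n, p, r = 150, 2, 3
X = rng.standard_normal((n, p))
beta = rng.standard_normal((r, p))
Y = X @ beta.T + rng.standard_normal((n, r))

se = bootstrap_se(X, Y, ols_coef, n_boot=200, seed=4)
print(se)
```

Any estimator with the same call signature could be passed in place of `ols_coef`, so the same routine would apply to an envelope-type estimator if one were available.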

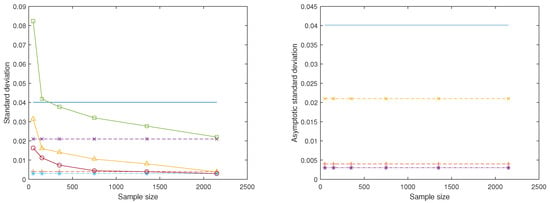

For each replication, we fitted the standard model (1), the oracle partial envelope model (15), the active partial envelope model (16) and the sparse partial envelope model (5) with the structure (8). Then, we obtained their estimators of and computed the estimation standard deviation for each element in . The results for a randomly selected element in are summarized in Figure 1. For better visibility, we only display the asymptotic standard deviation of the standard model. From the left panel of Figure 1, we can see that the actual standard deviations of the three models, from largest to smallest, are those of the active partial envelope model, the oracle partial envelope model and the sparse partial envelope model. From the right panel of Figure 1, we can see that the asymptotic standard deviations of the four models, from largest to smallest, are those of the standard model, the active partial envelope model, the oracle partial envelope model and the sparse partial envelope model. In summary, the sparse partial envelope estimator is more efficient than the standard estimator, the oracle partial envelope estimator and the active partial envelope estimator. The ratio of the asymptotic standard deviation of the standard estimator to that of the sparse partial envelope estimator is 11.68. The ratio of the asymptotic standard deviation of the oracle partial envelope estimator to that of the sparse partial envelope estimator is 1.27. The ratio of the asymptotic standard deviation of the active partial envelope estimator to that of the sparse partial envelope estimator is 6.22. The difference between the sparse partial envelope estimator and the oracle partial envelope estimator becomes quite small as the sample size n increases, which confirms the oracle property stated in Theorem 3.

Figure 1.

Comparison of the standard deviations for four model estimators. The − line marks the asymptotic standard deviation of the standard model. The lines ‘’ and with + mark the actual and asymptotic standard deviations of the oracle partial envelope model. The lines ‘’ and with × mark the actual and asymptotic standard deviations of the active partial envelope model. The lines ‘’ and with * mark the actual and asymptotic standard deviations of the sparse partial envelope model.

We also studied the variable selection performance of the sparse partial envelope estimator in terms of the true positive rate (TPR) and the true negative rate (TNR) over 200 repeated samples. The true positive rate is calculated by , where is the number of active responses correctly chosen, and the true negative rate is calculated by , where is the number of inactive responses correctly identified. Table 1 reports the variable selection results in the first situation under the large-sample setting. Compared with the sparse envelope estimator, the sparse partial envelope estimator has a better selection performance in this case. The proposed sparse partial envelope estimation procedure correctly identifies all active responses, and the true negative rate tends to 1 as n increases, which is consistent with the selection consistency stated in Theorem 2.
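The two selection rates can be sketched in Python as follows. This assumes the usual definitions, namely that TPR is the number of active responses correctly chosen divided by the number of truly active responses, and TNR is the number of inactive responses correctly identified divided by the number of truly inactive responses. The function name `selection_rates` and the example indicator vectors are hypothetical.

```python
import numpy as np

def selection_rates(true_active, selected_active):
    """Return (TPR, TNR) from boolean indicators over the responses.

    true_active[i]     -- True if response i is truly active
    selected_active[i] -- True if the fitted model selects response i as active
    """
    true_active = np.asarray(true_active, dtype=bool)
    selected_active = np.asarray(selected_active, dtype=bool)
    n_active = true_active.sum()
    n_inactive = (~true_active).sum()
    # Active responses that were selected, and inactive responses that were not.
    tp = (true_active & selected_active).sum()
    tn = (~true_active & ~selected_active).sum()
    tpr = tp / n_active if n_active else float("nan")
    tnr = tn / n_inactive if n_inactive else float("nan")
    return tpr, tnr

# Hypothetical example: 3 active and 5 inactive responses, one false selection.
truth = [1, 1, 1, 0, 0, 0, 0, 0]
chosen = [1, 1, 1, 1, 0, 0, 0, 0]
tpr, tnr = selection_rates(truth, chosen)
```

In a simulation study, these rates would be averaged over the replications for each sample size.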

Table 1.

Comparison of selection performances of the sparse partial envelope estimator and the sparse envelope estimator in the first situation.

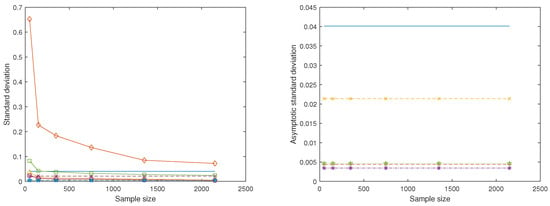

In the second situation, we let , and the other parameters were the same as those in the first situation. When , there is no immaterial information in the active responses for the sparse envelope model, and , but as long as , there is still immaterial information in the active responses for the sparse partial envelope model. In this sense, the sparse partial envelope model is more flexible than the sparse envelope model. Figure 2 plots the standard deviations of a chosen element in and when . The left panel of Figure 2 shows that the actual standard deviations of the three models, from largest to smallest, are those of the active partial envelope model, the oracle partial envelope model and the sparse partial envelope model. The right panel of Figure 2 shows that the asymptotic standard deviations of the four models, from largest to smallest, are those of the standard model, the active partial envelope model, the oracle partial envelope model and the sparse partial envelope model. In summary, the sparse partial envelope estimator is more efficient than the standard estimator, the oracle partial envelope estimator and the active partial envelope estimator. The ratios of the asymptotic standard deviations of the standard, oracle partial envelope, active partial envelope and sparse envelope estimators to that of the sparse partial envelope estimator are 11.68, 1.27, 6.22 and 1.35, respectively. As the sample size n increases, the difference between the sparse partial envelope estimator and the oracle partial envelope estimator also diminishes.

Figure 2.

Comparison of the standard deviations for five model estimators. The − line marks the asymptotic standard deviation of the standard model. The lines ‘’ and with + mark the actual and asymptotic standard deviations of the oracle partial envelope model. The lines ‘’ and with × mark the actual and asymptotic standard deviations of the active partial envelope model. The lines ‘’ and ⋯ with ✩ mark the actual and asymptotic standard deviations of the sparse envelope model. The lines ‘’ and with * mark the actual and asymptotic standard deviations of the sparse partial envelope model.

Table 2 reports the variable selection results in the second situation. In Table 2, the variable selection performance of the sparse partial envelope estimator is the same as that of the sparse envelope estimator. Table 3 compares the computing time in minutes of the standard model, the oracle partial envelope model, the active partial envelope model, the sparse partial envelope model and the sparse envelope model. The sparse partial envelope model and the sparse envelope model are significantly slower than the standard model, the oracle partial envelope model and the active partial envelope model, and the sparse envelope model is the slowest of the five.

Table 2.

Comparison of selection performances of the sparse partial envelope estimator and the sparse envelope estimator in the second situation.

Table 3.

Comparison of computing time in minutes for the five models in the second situation.

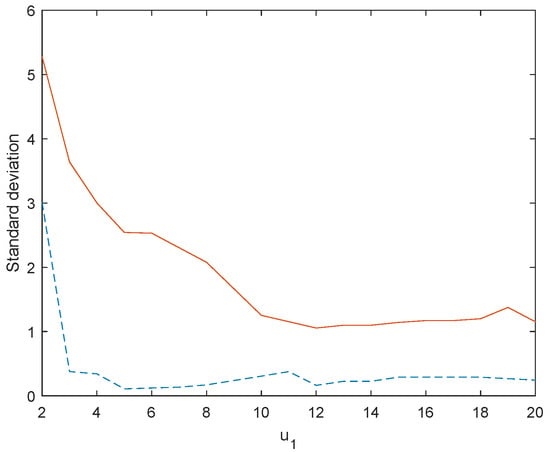

In the third situation, we set , , , , and and varied from 2 to 20. The matrix was obtained by orthogonalizing a matrix of independent standard normal variates. Accordingly, we used the structure in (8) to build , and . The elements in were independent normal variates with mean 0 and variance 0.16, and the error covariance matrix had the structure with and . The predictors were generated from a multivariate normal distribution with mean 0 and covariance matrix , and the predictors were generated from a multivariate normal distribution with mean 0 and covariance matrix . The standard deviation of a randomly chosen element in is displayed under different in Figure 3. When is small, the immaterial part is larger, so we anticipate a more substantial efficiency gain from the sparse partial envelope estimator. Moreover, the standard deviation of the sparse partial envelope model is significantly smaller than that of the standard model, which indicates that the sparse partial envelope estimator is much more efficient than the standard estimator.
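The orthogonalization step can be sketched in Python with a QR decomposition, as below. This is an illustration under assumptions rather than the construction used in the simulations: the dimensions `r` and `u`, the names `Gamma`, `Gamma0`, `Omega` and `Omega0`, the scalar variances, and the generic envelope form of the covariance (material part plus immaterial part) are chosen here for illustration and are not taken from the settings above.

```python
import numpy as np

rng = np.random.default_rng(1)
r, u = 6, 2  # hypothetical response dimension and envelope dimension

# Orthogonalize a matrix of independent standard normal variates.
A = rng.standard_normal((r, r))
Q, _ = np.linalg.qr(A)              # Q is an r x r orthogonal matrix

# Split the orthogonal basis into a semi-orthogonal basis and its complement.
Gamma, Gamma0 = Q[:, :u], Q[:, u:]

# Assumed envelope-type covariance: small variance on the material part,
# larger variance on the immaterial part (both values are hypothetical).
Omega = 0.5 * np.eye(u)
Omega0 = 5.0 * np.eye(r - u)
Sigma = Gamma @ Omega @ Gamma.T + Gamma0 @ Omega0 @ Gamma0.T
```

Under this assumed form, a smaller `u` leaves more of the total variation in the immaterial part, which is the mechanism behind the larger efficiency gains discussed above.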

Figure 3.

Comparison of the standard deviations for the sparse partial envelope estimator (dashed) and the standard estimator (solid).

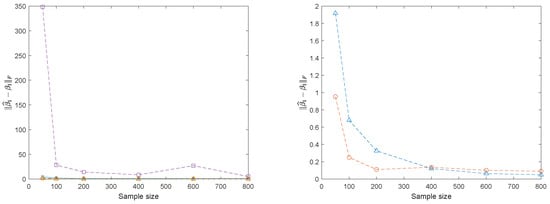

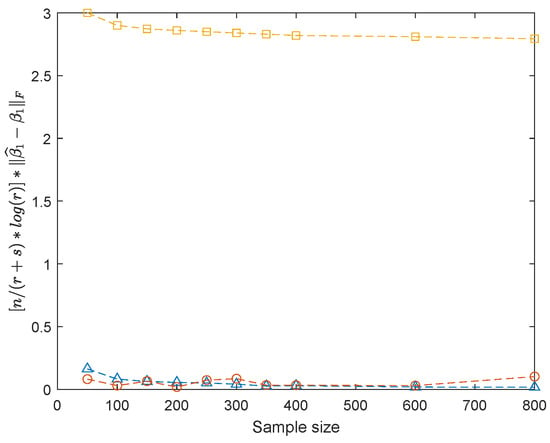

In the fourth situation, we had and set , , , , and . The first rows in were and the remaining rows in were . Then, we employed the structure in (8) to construct , and . The elements in were independent random variables, and the error covariance matrix followed the structure , where and was a block diagonal matrix with the upper left block being and the lower right block being . The coefficient was a matrix of independent normal variates. The predictors and were generated from independent random variables. The sample sizes were 50, 100, 200, 400, 600, and 800, and we generated 200 replications for each sample size. Table 4 shows the variable selection performance of the sparse partial envelope estimator in the fourth situation under the high-dimensional setting. Figure 4 plots the average of over 200 replications versus the sample size n, and Figure 5 describes the convergence of , which is indicated in Theorem 4. The left panel of Figure 4 provides a comparison of among the standard estimator, the sparse partial envelope actual estimator and the sparse partial envelope bootstrap estimator, and the right panel highlights the comparison between the sparse partial envelope actual estimator and the sparse partial envelope bootstrap estimator. Figure 5 likewise provides a comparison of among the standard estimator, the sparse partial envelope actual estimator and the sparse partial envelope bootstrap estimator. The bootstrap estimator of is computed as the average over 200 bootstrap samples: for each bootstrap sample, we obtained the sparse partial envelope estimator and calculated . Figures 4 and 5 both show that is a good approximation to and that is much smaller than .

Table 4.

The selection performance of the sparse partial envelope estimator in the fourth situation under the high-dimensional setting.

Figure 4.

Comparison of the standard estimator (dashed with □), the sparse partial envelope actual estimator (dashed with ◯) and bootstrap estimator (dashed with ▵).

Figure 5.

Comparison of the standard estimator (dashed with □), the sparse partial envelope actual estimator (dashed with ◯) and bootstrap estimator (dashed with ▵).

In the fifth situation, we still had and set , , , , and . The first rows in were and the remaining rows in were . The other parameters were the same as those in the fourth situation. The sample sizes were 50, 100, 200, 400, 600, and 800, and we generated 200 replications for each sample size. Table 5 shows the variable selection performance of the sparse partial envelope estimator in the fifth situation under the high-dimensional setting.

Table 5.

The selection performance of the sparse partial envelope estimator in the fifth situation under the high-dimensional setting.

6. Real Data Analysis

This section presents a real data example that illustrates aspects of the sparse partial envelope model in the case of . The example comes from full-scale experiments at a paper factory in Norway. The quality of paper is typically described by several variables and is therefore highly multivariate. Furthermore, the production of paper is a very complex process that relies on a host of variables, some of which can be controlled and others not. The experiment was carried out at the paper plant Saugbruksforeningen, Norway. The data consist of 30 observations (rows) and 41 variables (columns), so we have observations. See Aldrin (1996) for a more detailed description.

In this experiment, the goal is to find the influence of 9 predictor variables on the quality of the paper, measured by 32 response variables. The response variables correspond to columns 1 to 32, which describe various qualities of the paper, so . The predictor variables correspond to columns 33 to 41. The first three predictor variables are in column 33, in column 34 and in column 35, respectively, and were varied systematically in the experiment. The next three predictor variables, which correspond to columns 36 to 38, are given by , and . The last three predictor variables, which correspond to columns 39 to 41, are given by , and . Thus, we have . The predictors , , , , and are treated as the main predictors, so . The sparse partial envelope model was fitted to the data, and cross-validation suggested . The model identified all the response variables as active responses. We estimated and in the sparse partial envelope model by the average of 200 bootstrap samples and also estimated in the sparse envelope model by the average of 200 bootstrap samples. The ratio of the estimated to is 2.34, and the ratio of the estimated in the sparse envelope model to in the sparse partial envelope model is 1.73. Both ratios demonstrate a clear efficiency gain from the sparse partial envelope model.

To assess the prediction performance, we randomly split the data into two parts of equal size. Half of the data are used as the training set and the other half are used as the testing set. The prediction error is computed as

Then, the prediction error is averaged over 100 random splits, and the average prediction error is reported. The sparse envelope model has a prediction error of 11.8162, and the sparse partial envelope model has a prediction error of 2.8131, which is a 76% reduction.
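The random-split procedure can be sketched in Python as follows. This is only an illustration under loudly stated assumptions: ordinary least squares stands in for the envelope fits, the data are synthetic (only the dimensions 30, 9 and 32 mirror the paper data), and the error metric used here, the root mean squared Euclidean norm of the test residuals, is an assumed choice and not necessarily the prediction error defined above. All function names are hypothetical.

```python
import numpy as np

def fit_ols(X, Y):
    """Least-squares fit with intercept; returns (x_mean, y_mean, B) with B of shape p x r."""
    x_mean, y_mean = X.mean(axis=0), Y.mean(axis=0)
    B, *_ = np.linalg.lstsq(X - x_mean, Y - y_mean, rcond=None)
    return x_mean, y_mean, B

def predict(model, X):
    x_mean, y_mean, B = model
    return y_mean + (X - x_mean) @ B

def split_prediction_error(X, Y, n_splits, rng):
    """Average test error over random half/half splits of the rows."""
    n = X.shape[0]
    half = n // 2
    errors = []
    for _ in range(n_splits):
        perm = rng.permutation(n)
        train, test = perm[:half], perm[half:]
        model = fit_ols(X[train], Y[train])
        resid = Y[test] - predict(model, X[test])
        # Assumed metric: root mean squared Euclidean norm of residual vectors.
        errors.append(np.sqrt(np.mean(np.sum(resid ** 2, axis=1))))
    return float(np.mean(errors))

rng = np.random.default_rng(2)
n, p, r = 30, 9, 32                       # dimensions only; the data are synthetic
X = rng.standard_normal((n, p))
Y = X @ rng.standard_normal((p, r)) + rng.standard_normal((n, r))
avg_error = split_prediction_error(X, Y, n_splits=100, rng=rng)

# Relative reduction implied by the two reported prediction errors.
reduction = (11.8162 - 2.8131) / 11.8162  # about 0.76
```

The last line reproduces the arithmetic behind the reported 76% reduction from the two prediction errors stated above.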

7. Discussion

In this article, we propose a sparse partial envelope model under the multivariate linear regression model, which performs response variable selection and improves parameter estimation efficiency. We establish its theoretical properties, including consistency, an oracle property and the asymptotic distribution of the sparse partial envelope estimator, and we consider both the large-sample scenario and the high-dimensional scenario. Finally, we provide simulation studies and a real data analysis to support the theory. In this paper, the predictor and response variables in the sparse partial envelope model are both vector-valued. An interesting topic for further study is to extend the sparse partial envelope model to matrix-valued or tensor-valued predictors and responses.

Author Contributions

Conceptualization, Y.W.; methodology, Y.W.; software, J.Z.; validation, Y.W. and J.Z.; formal analysis, Y.W.; investigation, Y.W. and J.Z.; resources, Y.W.; data curation, J.Z.; writing—original draft preparation, Y.W. and J.Z.; writing—review and editing, Y.W. and J.Z.; visualization, J.Z.; supervision, Y.W.; project administration, J.Z.; funding acquisition, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Natural Science Research Start-up Foundation of Recruiting Talents of the Nanjing University of Posts and Telecommunications (Grant No. NY223064).

Data Availability Statement

The dataset is publicly available and can be downloaded from Aldrin (1996).

Acknowledgments

We thank the editor, associate editor, and reviewers for their constructive comments that have improved this paper substantially.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Cook, R.D.; Li, B.; Chiaromonte, F. Envelope models for parsimonious and efficient multivariate linear regression. Stat. Sin. 2010, 20, 927–1010.

- Su, Z.; Cook, R.D. Partial envelopes for efficient estimation in multivariate linear regression. Biometrika 2011, 98, 133–146.

- Su, Z.; Cook, R.D. Inner envelopes: Efficient estimation in multivariate linear regression. Biometrika 2012, 99, 687–702.

- Su, Z.; Cook, R.D. Estimation of multivariate means with heteroscedastic errors using envelope models. Stat. Sin. 2013, 23, 213–230.

- Cook, R.D.; Helland, I.S.; Su, Z. Envelopes and partial least squares regression. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2013, 75, 851–877.

- Su, Z.; Zhu, G.; Chen, X.; Yang, Y. Sparse envelope model: Efficient estimation and response variable selection in multivariate linear regression. Biometrika 2016, 103, 579–593.

- Cook, R.D.; Zhang, X. Foundations for envelope models and methods. J. Am. Stat. Assoc. 2015, 110, 599–611.

- Cook, R.D.; Zhang, X. Algorithms for envelope estimation. J. Comput. Graph. Stat. 2016, 25, 284–300.

- Cook, R.D.; Zhang, X. Fast envelope algorithms. Stat. Sin. 2018, 28, 1179–1197.

- Khare, K.; Pal, S.; Su, Z. A Bayesian approach for envelope models. Ann. Stat. 2017, 45, 196–222.

- Li, L.; Zhang, X. Parsimonious tensor response regression. J. Am. Stat. Assoc. 2017, 112, 1131–1146.

- Zhang, X.; Li, L. Tensor envelope partial least-squares regression. Technometrics 2017, 59, 426–436.

- Pan, Y.; Mai, Q.; Zhang, X. Covariate-adjusted tensor classification in high dimensions. J. Am. Stat. Assoc. 2019, 114, 1305–1319.

- Zhu, G.; Su, Z. Envelope-based sparse partial least squares. Ann. Stat. 2020, 48, 161–182.

- Zhang, J.; Huang, Z.; Xiong, Y. Efficient estimation of reduced-rank partial envelope model in multivariate linear regression. Random Matrices Theory Appl. 2021, 10, 2150024.

- Zhang, J.; Huang, Z.; Jiang, Z. Groupwise partial envelope model: Efficient estimation in multivariate linear regression. Commun. Stat.-Simul. Comput. 2023, 52, 2924–2940.

- Zhang, J.; Huang, Z. Scale invariant and efficient estimation for groupwise scaled envelope model. J. Korean Stat. Soc. 2024, 53, 1027–1048.

- Zhang, J.; Huang, Z.; Zhu, L.X. Scaled partial envelope model in multivariate linear regression. Stat. Sin. 2023, 33, 663–683.

- Cook, R.D.; Forzani, L.; Su, Z. A note on fast envelope estimation. J. Multivar. Anal. 2016, 150, 42–54.

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

- Rothman, A.J.; Bickel, P.J.; Levina, E.; Zhu, J. Sparse permutation invariant covariance estimation. Electron. J. Stat. 2008, 2, 494–515.

- Dattorro, J. Convex Optimization & Euclidean Distance Geometry; Meboo Publishing: Palo Alto, CA, USA, 2016.

- Cook, R.D.; Setodji, C.M. A model-free test for reduced rank in multivariate regression. J. Am. Stat. Assoc. 2003, 98, 340–351.

- Magnus, J.R.; Neudecker, H. Matrix Differential Calculus with Applications in Statistics and Econometrics; John Wiley & Sons: Hoboken, NJ, USA, 2019.

- Shapiro, A. Asymptotic theory of overparameterized structural models. J. Am. Stat. Assoc. 1986, 81, 142–149.

- Magnus, J.R.; Neudecker, H. The commutation matrix: Some properties and applications. Ann. Stat. 1979, 7, 381–394.

- Ravikumar, P.; Wainwright, M.J.; Raskutti, G.; Yu, B. High-dimensional covariance estimation by minimizing ℓ1-penalized log-determinant divergence. Electron. J. Stat. 2011, 5, 935–980.

- Aldrin, M. Moderate projection pursuit regression for multivariate response data. Comput. Stat. Data Anal. 1996, 21, 501–531.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).