Abstract

The ‘black box’ nature of machine learning (ML) approaches makes it challenging to understand how most artificial intelligence (AI) models make decisions. Explainable AI (XAI) aims to provide analytical techniques to understand the behavior of ML models. XAI utilizes counterfactual explanations that indicate how variations in input features lead to different outputs. However, existing methods must also highlight the importance of features to provide more actionable explanations that would aid in the identification of key drivers behind model decisions—and, hence, more reliable interpretations—ensuring better accuracy. The method we propose utilizes feature weights obtained through adaptive feature weight genetic explanation (AFWGE) with the Pearson correlation coefficient (PCC) to determine the most crucial group of features. The proposed method was tested on four real datasets with nine different classifiers for evaluation against a nonweighted counterfactual explanation method (CERTIFAI) and the original feature values’ correlation. The results show significant enhancements in accuracy, precision, recall, and F1 score for most datasets and classifiers; this indicates the superiority of the feature weights selected via AFWGE with the PCC over CERTIFAI and the original data values in determining the most important group of features. Focusing on important feature groups elaborates the behavior of AI models and enhances decision making, resulting in more reliable AI systems.

Keywords:

explainable artificial intelligence; counterfactual explanation; genetic algorithm; machine learning; feature importance; Pearson correlation

MSC:

68-06; 68W50; 68T05; 00B25

1. Introduction

The decision-making process of the majority of AI models is difficult to understand since they employ ‘black box’ machine learning (ML) methodologies. Users mostly find it hard to trust these systems due to their lack of clarity. Moreover, the inferences of intelligent models from instances cannot be monitored in detail because the training dataset is usually too large, and the learned model is too complicated for users [1]. As a result of these difficulties, researchers have become interested in explainable artificial intelligence (XAI). By offering analytical techniques to investigate and comprehend the behavior of the model, this field of research aims to validate important ML system properties that developers optimize, such as robustness, causality, usability, and trust. Explainable ML is concerned with providing post hoc explanations for existing black box models [2]. Explainability can answer explanatory questions such as ‘what’ questions (e.g., what event happened?), ‘how’ questions (e.g., how did that event happen?), and ‘why’ questions (e.g., why did that event happen?) [3].

A counterfactual explanation is a commonly used explanation approach that determines how the output changes when input features are changed [4]. Counterfactual explanations can provide simple explanations that reflect human cognition and can encourage spontaneous causal reasoning about the result of a specific model [5]. Moreover, they encourage analyzing the features of data instances and capturing the relationships between them and their impact on the outcomes [6]. Recent studies provide counterfactual explanations by perturbing input data to understand the limitations and biases of AI models and their training data rather than changing their predictions [7]. These explanations should change only a few features, making them easy for end users to understand and follow, to improve human trust in AI models [8]. In fact, counterfactual explanations serve as significant mechanisms for assisting users in understanding automated systems and their decision-making processes. When the purpose of XAI is to enhance human–machine team performance, rather than just to explain model predictions, it is essential to study the user’s understanding of these decisions. For that, a more user-centered and psychologically grounded approach to XAI is required to study how explanations change the way users understand AI models [9].

For the AI model itself, providing counterfactual explanations that highlight the most important features, as well as the group of features that affects the decision, not only enhances the explainability of AI models for users but also boosts their performance. However, to the best of our knowledge, none of the proposed explanation techniques provide counterfactuals that include the importance of individual features or groups of features in the explanation. Our proposed method, thus, aims to bridge this gap by utilizing the feature weights from counterfactual explanations to identify the most crucial group of features that may have a significant impact on the decision-making process. Feature selection (FS) is one of the important steps in ML that keeps only the most meaningful group of features of a dataset while excluding others considered either irrelevant or redundant. This process enhances the performance of an ML model by increasing its accuracy, decreasing the complexity of the computational process, and reducing the amount of overfitting that might occur during training [10,11]. Some approaches that are frequently used for FS are correlation-based methods, which evaluate the associations between features in order to determine the most important groups of features. The Pearson correlation coefficient (PCC) is a widely used method for FS in ML, often integrated with other techniques, such as genetic algorithms (GAs), to enhance performance [12]. Therefore, in the current research, we used the PCC together with the feature weights obtained through adaptive feature weight genetic explanation (AFWGE) [13] to identify the most important group of features underlying a model’s decision. AFWGE is a novel technique that embeds feature weights while evolving and optimizing the counterfactual explanations, aiming to produce more accurate explanations than their nonweighted counterparts, as used in [14]. It is therefore worth exploring the potential of this technique in identifying the most important group of features that collectively have a significant impact on the decision-making process, which was the primary objective of this study.

The remaining parts of this paper are organized as follows: Section 2 provides an overview of related work, while Section 3 describes the formulation of the problem. Then, the proposed approach is explained in Section 4, followed by the provision of comprehensive experimental results in Section 5. Finally, Section 6 presents a summary and recommendations for future research.

2. Related Work

This section provides an overview of the most significant studies on counterfactual explanations and feature selection methods. The review focuses on foundational and recent works in these fields, highlighting their contributions and limitations. A summary of these works is presented in Table 1 for clarity and comparison.

2.1. Counterfactual Explanation

A counterfactual explanation is a post hoc explanation for potentially sophisticated black box models. These methods seek to explain how a fixed model leads to a particular prediction by perturbing variables to measure how the prediction changes. The counterfactual explanation describes the change to an input data point that would change a model’s prediction of it. Counterfactual explanations were proposed as a new approach in [15] to clarify automated choices, solving a number of concerns brought up by other studies regarding algorithmic interpretability and responsibility.

It was suggested [16] that an individual may modify a model’s decision by changing the feasible input variables. Multiple tests were conducted using an integer programming procedure for a credit scoring process. For data input, three real datasets, GiveMeCredit, German, and Credit [17], were utilized in each test. Differences were found in the difficulty of obtaining the given counterfactual condition across genders, indicating a substantial variation in the counterfactual generation cost. The authors of [14] presented a unified methodology to understand classification models and assess their robustness and fairness in the CERTIFAI tool. They used a unique GA to provide counterfactual explanations for the robustness, transparency, interpretability, and fairness of AI models. CERTIFAI was used in experiments to estimate the reliability of traditional classifiers such as decision trees (DTs), support vector machines (SVMs), and multilayer perceptron (MLP). Moreover, this tool was applied to assess the interpretability and fairness of such classifiers on the UCI Adult dataset. The study findings show how the proposed GA can give users convincing counterfactual explanations.

GeCo was introduced in [18] to seek optimal counterfactual explanations with the fewest modifications. GeCo also uses GAs to prioritize counterfactuals based on the number of modifications. The tests included a comparison of GeCo with five competitive tools, MACE [19], DiCE [20], WIT [21], CERTIFAI, and SimCF [22], using four real datasets. The datasets were Credit [17], Adult, Allstate, and Yelp. GeCo efficiently generated a plausible counterfactual explanation in a linear runtime that is almost as optimal as the ideal explanation in terms of distance. A new formulation of the optimization problem of counterfactual generation is presented in [23]. This formulation results in a single sparse counterfactual explanation that is efficiently produced through the normalization of continuous features and the selection of predictive features using a custom GA. The effectiveness of the proposed technique was assessed using credit-scoring datasets: German and Home Loan Equity. The assessment was based on a comparison of the generated counterfactual explanations with the provided explanations by credit-scoring specialists and the explanations of CERTIFAI [14] as a comparison. The results show that the presented method generates more sparse counterfactuals than CERTIFAI. In [6], a framework is proposed to adjust explanations based on user emotions, creating a more personalized, context-aware interaction with AI systems. By integrating emotional features, this approach aims to enhance user trust and satisfaction, making AI explanations more accessible and intuitive. This work underscores the need for a balance between factual accuracy and empathetic design in XAI, offering insights that align well with human-centered AI methodologies.

Most of the suggested explanation methods only estimate the importance of certain features using the model’s outcomes. After counterfactuals are generated, the importance of a feature is usually measured as the number of modifications of that feature in the generated counterfactuals [14]. With the exception of [13], none of the explanation methods manage to identify the importance of individual features or groups of features within the explanations themselves. In contrast, the authors of [13] introduced an adaptive feature weight genetic explanation (AFWGE) method. This novel approach for generating counterfactual explanations uses a GA with embedded feature weights as a reliable indicator of feature importance, providing valuable insights into understanding and interpreting the mechanisms underlying the model. By embedding feature weights as part of the counterfactual solution, AFWGE optimizes feature values and their weights alongside each other during the evolutionary process of the GA to generate more accurate counterfactual decisions. The empirical results on four datasets (Adult [24], Diagnostic Wisconsin Breast Cancer [25], Pima Indians Diabetes [26], and Iris [27]) show that AFWGE significantly enhanced counterfactual generation performance, producing more efficient counterfactual explanations than CERTIFAI [14], which does not include feature weights in the counterfactual explanations. The results indicate that the counterfactual distances produced via AFWGE are significantly shorter than those of CERTIFAI. Similarly, the number of feature changes with AFWGE is significantly smaller than with CERTIFAI. Additionally, AFWGE changes features according to weights, and the correlations of these weights are closer to the correlations of the dataset features than the correlations of the number of feature changes exhibited by CERTIFAI. Moreover, AFWGE generates counterfactual explanations that fulfill fundamental properties: validity, proximity, sparsity, plausibility, and actionability of explanations.

2.2. Feature Selection (FS) Using the Pearson Correlation Coefficient (PCC)

One of the helpful phases in data modeling is feature selection, which aids in eliminating noisy, redundant, and irrelevant data. The goal is to create a predictive model with fewer input variables. It eliminates features from the model that might be redundant or useless predictors. Reducing the input features may lead to an improvement in the model’s performance in addition to lowering the computational cost of modeling. Additionally, we can increase the model’s generality by removing weak predictors [10].

The features of a model may be selected in many ways. One way to find the most relevant and significant features is to use the correlation between the features. Correlation-based feature selection (CFS) works well with higher-dimensional datasets. The CFS approach selects relevant features based only on the fundamental properties of the data, without the use of ML algorithms [28]. The features that are selected via CFS yield a better level of accuracy in comparison to other methods [29]. In a dataset, it is common for some features to have a strong correlation with others. The presence of such strongly correlated features results in duplicate information that does not help enhance the model’s performance. The correlation among features is calculated using the CFS approach, and highly correlated features, which carry similar information, are not included [30]. Assuming that features p1 and p2 have a strong correlation, indicating that they contain similar types of information, a classification model that incorporates both p1 and p2 has an equivalent level of predictive capability to a model that includes either p1 or p2 individually.

A new FS method is introduced in [31], where a three-step process is used to reduce the number of features: First, remove redundant features using pairwise correlation. Then, let different methods select their own feature sets independently. After that, make sure that the individual feature sets have the same size. The individual feature sets are blended using both union and quorum procedures. Network anomaly detection was evaluated on both combined and individual sets with classifiers such as random forest (RF), DT, K-nearest neighbor (K-NN), Gaussian naïve Bayes, and logistic regression (LR). The experimental results on the UNSW-NB15 dataset indicated that the RF algorithm, when using both the union and quorum feature sets, achieved F1 scores of 99% and 99.02%, respectively.

The authors of [32] presented a gene FS technique that utilizes ReliefF [33] and a PCC algorithm. First, this technique uses ReliefF to evaluate the feature weight, then it sorts the features based on the weight and filters out genes that are not important based on a predetermined threshold. Next, any redundancies in the chosen feature subsets are removed using the PCC approach. To validate the proposed FS approach, three gene expression datasets were used for testing (Colon, Leukemia, and Prostate) with two classifiers, K-NN and SVM. In the experiment, the proposed approach achieved improved classification accuracy by successfully removing irrelevant and redundant genes.

The method introduced in [34] for selecting features consists of two stages. In the first stage, the PCC is used to group features by finding the features that are correlated and then dividing them into groups based on how correlated they are (high, medium, or low). In the second stage, the Triplet FS (TFS) method is introduced to prevent collinearity among features. This way, the features are selected based on the different correlations between each group. Lastly, the features in the triplet group are selected, and the LR approach is used to identify the disease. With an accuracy of 95.4%, an FPR of 1%, an FNR of 4%, a sensitivity of 97%, and a specificity of 96%, the classifier was able to differentiate between benign and malignant types on the Breast Cancer dataset. Combining the PCC method with the TFS algorithm raised the accuracy, sensitivity, and specificity of breast cancer diagnosis. In [35], the authors focused on enhancing the accuracy of breast cancer diagnostics through machine learning techniques. They proposed a four-layered data exploration approach, including feature distribution analysis, correlation assessment, feature elimination, and hyperparameter optimization, to identify robust features for classifying tumors as malignant or benign. They developed and evaluated predictive models using the Wisconsin Diagnostic Breast Cancer (WDBC) and Breast Cancer Coimbra Dataset (BCCD). The results demonstrated that the polynomial SVM achieved 99.3% accuracy; LR, 98.06%; and KNN, 97.35% on the WDBC dataset, indicating the effectiveness of their approach.

The authors of [12] provided a hybrid FS method combining the PCC with a GA. The classifier decides whether someone has an illness using the selected features as input. The chosen datasets (heart disease, diabetes, and hepatitis) were obtained from the UCI repository. The tests were intended to evaluate the performance of the GA and the PCC. The suggested approach had respective accuracies of 96.87%, 89.53%, and 97.03% on the hepatitis, diabetes, and heart disease datasets. The results revealed that the hybrid GA with PCC efficiently identifies relevant features, leading to superior accuracy for medical datasets. Another hybrid FS methodology, the ScC model, is introduced in [36]. Stability (measuring how constant or stable the feature is) and correlation are employed as the criteria for eliminating data instances, specifically, instances with high stability and low correlation values. The suggested ScC model selects the most relevant features to maximize the accuracy of the classification. Four distinct classification algorithms were used to gauge the effectiveness of ScC. The performance of the proposed approach was evaluated using nine different benchmark datasets: Austra, Heart Disease, Phishing, Sonar, Iono, South-German-Credit, Spam-Base, Messidor, and Pop-Failure. Three state-of-the-art dimensionality-reduction methods were considered for comparison with the ScC method: PCA, rough set (RS), and weight-guided (WG). The results indicated that the ScC method reduces a given dataset with high predictive accuracy.

Two enhanced feature selection approaches using correlation and clustering are provided in [37]. In the correlation-based approach, feature correlation is used to determine a relationship type for each feature by selecting features based on a specific threshold. In the clustering-based version, the correlation data are analyzed using clustering to determine features that stand in for the whole cluster. The selected group of features of the two approaches was evaluated against the ReliefF feature selection approach by comparing the models’ performances. The two proposed approaches produced superior results, where their selected features increased the learning models’ accuracy.

The PCC is effective in eliminating redundant features and improving classification accuracy when combined with other methods such as ReliefF and GAs [12,32,34]. In these studies, it achieved higher accuracy and better feature reduction than conventional FS methods. Moreover, the PCC was successfully used for gene expression analysis [32], medical diagnosis [12], and network anomaly detection [31], demonstrating its flexibility and efficiency.

The current study aimed to employ the feature weights obtained via the AFWGE method [13] with the commonly used PCC [29] to identify and manage redundancy among features. AFWGE is an approach for generating counterfactual explanations of AI models where a custom GA is employed, incorporating adaptive feature weights to enhance the algorithm’s performance. AFWGE makes use of experimentally determined feature weights. These weights take specific values in the algorithm, where they are equally initialized and then evolved adaptively during the search. Consequently, search accuracy, exploration, exploitation, convergence speed, and overall algorithm behavior are guided by these adaptive weights. In fact, these weights have a significant impact on the behavior of the method since they are also optimized together with the main optimization of the counterfactual solution. In the current study, we advanced AFWGE by integrating the feature weights it generates with the commonly used PCC to manage feature redundancy effectively. Here, the feature weights computed by AFWGE serve as a reliable indicator of feature importance, guiding the PCC computations to evaluate the correlations among features and identify the most crucial feature groups. This approach not only reduces redundancy but also enhances interpretability, providing deeper insights into the underlying mechanisms of the AI model.

The weights for the features computed via AFWGE guide the computations of the PCC efficiently for evaluating the correlations between features and identifying the most crucial group of features. Thus, highly correlated feature weights are finally reduced to one feature. This ensures that the finally obtained set of features is nonredundant and contains the most influential ones that increase the efficiency of the learning model to avoid overfitting. Incorporating feature weights with the PCC provides a finer and more effective process for FS, which eventually improves model performance and explainability. Interestingly, this study highlights the role of AFWGE feature weights as reliable indicators of feature importance, providing valuable insights into understanding and interpreting the underlying mechanisms of the model.

Table 1.

Summary of the literature review.

| Category | Method | Model | Property | Reference |
|---|---|---|---|---|
| Counterfactual explanation | Integer programming | LR | Produce flip-sets | [16] |
| Counterfactual explanation | GA | DT, SVM, MLP | Assess interpretability | [14] |
| Counterfactual explanation | GA | MLP | Real-time performance | [18] |
| Counterfactual explanation | GA | RF, artificial neural networks | Select predictive features using RF | [23] |
| Counterfactual explanation | Emotion lexicons | Large language models | Emotional features for human-centered AI | [6] |
| Counterfactual explanation | GA | MLP | Feature weights obtained through adaptive feature weight genetic explanation | [13] |
| Feature selection | Ensemble learning | RF, DT, K-NN, Gaussian naïve Bayes, LR | Union and quorum procedures | [31] |
| Feature selection | PCC and ReliefF | K-NN, SVM | Removing irrelevant and redundant genes | [32] |
| Feature selection | PCC and TFS | LR | Lowering the number of false positive and false negative reports | [34] |
| Feature selection | 4-layered data exploration | SVM, LR, KNN | Improving diagnostic accuracy | [35] |
| Feature selection | PCC and GA | K-NN | Hybrid GA with PCC efficiently identifies relevant features | [12] |
| Feature selection | Stability and correlation | LR, fast and large margin, RF, gradient-boosted trees | Pick optimal reduction with high stability and low correlation | [36] |
| Feature selection | Correlation and clustering | LR, artificial neural networks | Superior to ReliefF | [37] |

3. Problem Formulation

The feature selection problem is concerned with finding the best subgroup of features from a dataset to train the learning model, where this subgroup improves performance compared with using all features or at least provides the same performance as using all features, which can be mathematically described as follows:

Let $X \in \mathbb{R}^{n \times p}$ be the dataset with $n$ samples and $p$ features, and let $y$ be the target variable. Define $F = \{f_1, f_2, \ldots, f_p\}$ as the set of all features. We want to select a subgroup $S \subseteq F$ that optimizes the performance of the learning model. Let $X_S$ denote the subset of $X$ containing only the features in $S$. Let $P(X_S, y)$ be the performance metric (e.g., accuracy, precision, recall, and F1 score) of the learning model trained on $X_S$ and evaluated on $y$. The feature selection problem can be formulated as an optimization problem [38]:

$$\max_{S \subseteq F} \; P(X_S, y) \tag{1}$$

$$\text{subject to} \quad P(X_S, y) \geq P(X, y) - \epsilon \tag{2}$$

where $\epsilon$ is a small tolerance value that allows subgroup $S$ to perform slightly worse than using all features $F$. This formulation seeks the subset $S$ that maximizes the performance of the learning model with a guarantee that it is at least as good as when using all features within tolerance $\epsilon$.

Subgroup $S$ must contain the features most relevant to the prediction problem that are not redundant. Feature $f_i$ is relevant if a change in the feature’s value results in a change in the value of the predicted class variable $y$. Feature $f_i$ is strongly relevant if the use of $f_i$ in the predictive model eliminates the ambiguity in the classification of instances. Feature $f_i$ is weakly relevant if $f_i$ becomes strongly relevant when a subset of the features is removed from the set of available features. This implies that a feature is irrelevant if it is neither strongly relevant nor weakly relevant [39]. For redundancy, feature $f_i$ is redundant relative to class variable $y$ and a second feature $f_j$ if $f_i$ has stronger predictive power for $f_j$ than for the class variable $y$ [40]. However, to make feature selection decisions, several researchers have defined a redundant feature as one that has a high correlation with all the other features [41].
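The formulation in this section can be read as a constrained search over feature subsets. The following is a minimal sketch of that reading, not the procedure used in this study: it enumerates every subset exhaustively (feasible only for a handful of features), and the names `select_features` and `toy_perf`, the tie-break in favor of smaller subsets, and the toy scoring rule are all hypothetical.

```python
from itertools import combinations


def select_features(features, perf, eps=0.0):
    """Return (subset, score) maximizing perf subject to perf(S) >= perf(F) - eps."""
    features = tuple(features)
    baseline = perf(features)  # performance with all features
    best, best_score = features, baseline
    for k in range(1, len(features) + 1):
        for subset in combinations(features, k):
            score = perf(subset)
            feasible = score >= baseline - eps
            # Assumed tie-break: prefer the smaller subset at equal score.
            better = score > best_score or (
                score == best_score and len(subset) < len(best)
            )
            if feasible and better:
                best, best_score = subset, score
    return best, best_score


def toy_perf(subset):
    """Hypothetical stand-in for training and scoring a classifier."""
    informative = {"a", "b"}
    chosen = set(subset)
    return 0.6 + 0.15 * len(chosen & informative) - 0.01 * len(chosen - informative)


best, score = select_features(["a", "b", "c", "d"], toy_perf, eps=0.01)
print(best, round(score, 2))
```

In practice, `perf` would train and evaluate a classifier on the selected columns, and the exhaustive loop would be replaced by a heuristic such as the correlation-based reduction described in Section 4.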

4. Proposed Method

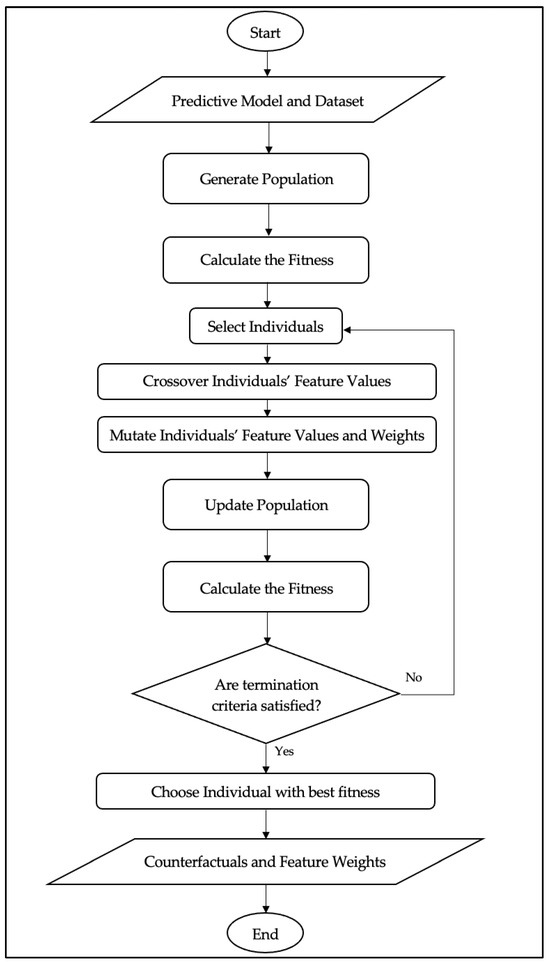

To optimize the feature selection and identify the most important group of features, we compute the PCC of the feature weights that are produced via the adaptive feature weight genetic explanation (AFWGE) in the generated counterfactuals, as explained in detail in [13]. AFWGE is a method that extends the standard GA with adaptive feature weights, allowing for improved counterfactual explanations. A standard GA starts from a population of random solutions, usually called chromosomes. Every chromosome represents one potential solution to the problem under study. The solutions are iteratively updated by applying operations such as selection, crossover, and mutation, with the goal of optimizing a fitness function. The process repeats until some termination condition is met, such as when a certain number of generations is reached. AFWGE follows this structure but introduces adaptive feature weights that evolve together with counterfactual solutions.

AFWGE aims to analyze the explainability of a predictive model, given the model as a black box and the data instances. First, AFWGE initializes the population of chromosomes, with each chromosome composed of two parts: the feature values and the weights assigned to those features (Figure 1). The feature weights are initialized uniformly and evolve adaptively throughout the search process in order to influence the algorithm’s behavior. Next, the solutions are rated using an objective function, under which a solution is ranked as better if it lies at a smaller distance from the original instance. This is followed by the selection step, where a subset of the fittest chromosomes in the population is selected for crossover. During the crossover phase, pairs of chromosomes exchange feature values over selected features to create offspring. Mutation is then applied to the offspring, and both feature values and weights are mutated. After mutation, the population is filtered to remove all chromosomes that return the same prediction as the original instance. This ensures that only chromosomes whose predictions differ from that of the original instance remain in the population, so that the algorithm focuses on constructing valid counterfactual explanations. The process iterates until it meets a stop condition, such as when the best fitness score has not improved over five generations. Finally, AFWGE provides a list of the counterfactual explanations with the final feature weights. The analysis highlights the role of feature weights as reliable indicators of feature importance, providing valuable insights for understanding and interpreting the mechanisms underlying the model.
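The loop described above can be illustrated with a toy genetic search. This sketch is not the AFWGE implementation from [13]; it only mirrors the order of the steps (initialize, rank by distance, select, crossover, mutate values and weights, filter invalid chromosomes, stop after five stale generations). Several choices are assumptions made for illustration: weights act as per-feature mutation probabilities, weights are exchanged together with values during crossover, parents are carried over so the population never empties, and the function name `toy_weighted_ga` and all numeric settings are hypothetical.

```python
import numpy as np


def toy_weighted_ga(predict, x0, low, high, pop_size=60, max_gen=100,
                    patience=5, seed=0):
    """Toy counterfactual search with chromosomes = (feature values, weights)."""
    rng = np.random.default_rng(seed)
    p = x0.size
    y0 = predict(x0[None, :])[0]  # prediction for the original instance

    def keep_valid(vals, wts):
        # Drop chromosomes that receive the same prediction as x0.
        mask = predict(vals) != y0
        return vals[mask], wts[mask]

    vals = rng.uniform(low, high, size=(pop_size, p))
    wts = np.full((pop_size, p), 1.0 / p)  # weights start equal
    vals, wts = keep_valid(vals, wts)
    if len(vals) == 0:
        raise ValueError("no valid counterfactual in the initial population")

    best_fit, stale = np.inf, 0
    for _ in range(max_gen):
        # Rank: a smaller distance to the original instance is better.
        dist = np.linalg.norm(vals - x0, axis=1)
        order = np.argsort(dist)
        vals, wts, dist = vals[order], wts[order], dist[order]
        if dist[0] < best_fit:
            best_fit, stale = dist[0], 0
        else:
            stale += 1
            if stale >= patience:
                break
        # Selection: the fitter half become parents.
        n_par = max(1, len(vals) // 2)
        par_v, par_w = vals[:n_par], wts[:n_par]
        # Crossover: each gene is taken from one of two random parents.
        a = rng.integers(0, n_par, size=pop_size)
        b = rng.integers(0, n_par, size=pop_size)
        swap = rng.random((pop_size, p)) < 0.5
        child_v = np.where(swap, par_v[a], par_v[b])
        child_w = np.where(swap, par_w[a], par_w[b])
        # Mutation: assumed rule, feature j mutates with probability w_j.
        mutate = rng.random((pop_size, p)) < child_w
        noise = rng.normal(0.0, 0.1, (pop_size, p))
        child_v = np.clip(child_v + mutate * noise, low, high)
        child_w = np.abs(child_w + rng.normal(0.0, 0.02, (pop_size, p))) + 1e-9
        child_w /= child_w.sum(axis=1, keepdims=True)
        # Filter: parents are retained (assumption) alongside valid offspring.
        vals, wts = keep_valid(np.vstack([par_v, child_v]),
                               np.vstack([par_w, child_w]))

    order = np.argsort(np.linalg.norm(vals - x0, axis=1))
    return vals[order], wts[order]


# Hypothetical black box: class 1 when the first two features sum above 1.
predict = lambda X: (X[:, 0] + X[:, 1] > 1.0).astype(int)
x0 = np.array([0.2, 0.2, 0.5])
cfs, final_weights = toy_weighted_ga(predict, x0, 0.0, 1.0)
print(cfs[0], final_weights[0])
```

The returned `final_weights` array has one row per counterfactual and one column per feature, which is the shape assumed when correlating feature weights later in this section.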

Figure 1.

AFWGE flowchart.

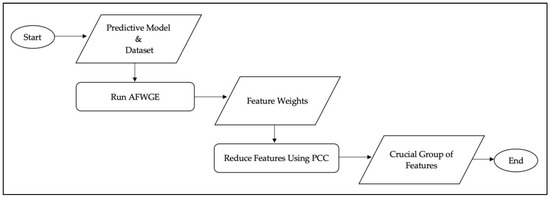

Therefore, given a black box predictive model and a dataset as inputs, the AFWGE method produces counterfactual explanations together with the final feature weights (Figure 2). These feature weights are then used as the input to correlation-based redundancy reduction, where the correlation between the features is computed using the PCC [42] to obtain the most crucial group of features. This technique calculates the linear relationship between the weights of each pair of features, thus detecting possible redundancy. If two features are found to have high absolute PCC values with each other, then we retain the one that has lower absolute PCC values with the other features and eliminate the other. The PCC is represented by Equation (3):

$$\rho(X,Y) = \frac{\operatorname{cov}(X,Y)}{\sigma_X \sigma_Y} = \frac{E\left[(X-\mu_X)(Y-\mu_Y)\right]}{\sigma_X \sigma_Y} \tag{3}$$

Figure 2.

Process flow diagram of the proposed method.

The PCC, computed using Equation (3), is used to assess the linear correlation between two variables, X and Y. The function cov(X, Y) is the covariance of X and Y; σX and σY are the standard deviations of X and Y, respectively; μX and μY are the respective means; and E is the expected value.

We can obtain a formula for ρ(X,Y) by substituting the estimates of the covariances and variances based on a sample input into the formula above. Given paired data {(x1,y1), (x2,y2), …, (xn,yn)}, consisting of n pairs, ρ(X,Y) is defined as per Equation (4), where n is the sample size; xi and yi are the individual sample points indexed with i; and x̄ and ȳ are the sample means of variables X and Y, respectively.

$$\rho(X,Y) = \frac{\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i-\bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i-\bar{y})^2}} \tag{4}$$
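As a quick check of the sample formula, the sketch below computes the coefficient directly from paired data and compares it with NumPy's built-in `np.corrcoef`. The function name `pearson` and the example values are illustrative only.

```python
import numpy as np


def pearson(x, y):
    """Sample Pearson correlation of two equal-length 1-D sequences."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()  # deviations from the sample mean of X
    dy = y - y.mean()  # deviations from the sample mean of Y
    return float((dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum()))


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 8.1, 9.8]
print(pearson(x, y), np.corrcoef(x, y)[0, 1])
```

Both calls should agree up to floating-point rounding; the formula is undefined when either variable is constant, because the denominator is then zero.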

ρ(X,Y) ranges from +1 to −1, where a value of +1 indicates that X is completely positively linearly correlated to Y. Conversely, a value of 0 signifies that X is not linearly correlated to Y at all. Finally, a value of −1 indicates that X is completely negatively linearly correlated to Y. X and Y are typically considered to exhibit an extremely strong correlation with each other when ρ(X,Y) is greater than 0.8. Moreover, X and Y exhibit a strong correlation to each other when ρ(X,Y) is greater than 0.6 [43].
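The redundancy rule described in this section (for a highly correlated pair, keep the feature with lower absolute PCC to the remaining features) can be sketched as follows. This is one possible reading rather than the exact procedure of this study: the 0.8 threshold, the use of the mean absolute PCC as the comparison, the greedy strongest-pair-first order, and the name `reduce_redundancy` are assumptions. The input is assumed to hold one row per counterfactual and one non-constant column per feature weight.

```python
import numpy as np


def reduce_redundancy(weights, names, threshold=0.8):
    """Greedily drop one feature from each pair with |PCC| above threshold."""
    corr = np.abs(np.corrcoef(weights, rowvar=False))
    np.fill_diagonal(corr, 0.0)  # ignore self-correlation
    kept = list(range(len(names)))
    while len(kept) > 1:
        sub = corr[np.ix_(kept, kept)]
        i, j = np.unravel_index(np.argmax(sub), sub.shape)
        if sub[i, j] <= threshold:
            break  # no remaining pair is highly correlated
        others = [k for k in range(len(kept)) if k not in (i, j)]
        # Mean |PCC| of each pair member with the other kept features.
        mean_i = sub[i, others].mean() if others else 0.0
        mean_j = sub[j, others].mean() if others else 0.0
        kept.pop(i if mean_i > mean_j else j)
    return [names[k] for k in kept]


# Hypothetical weight columns: f1 and f2 are nearly collinear.
t = np.arange(10.0)
alt = np.where(t % 2 == 0, 1.0, -1.0)
weights = np.column_stack([t, t + 0.5 * alt, alt])
print(reduce_redundancy(weights, ["f1", "f2", "f3"]))
```

Here `f1` and `f2` exceed the threshold with each other, and `f1` is the one more correlated with `f3`, so `f1` is dropped and `f2` and `f3` remain.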

5. Computational Experiments

We empirically tested the usefulness of our proposed method for users, model developers, and regulators in two steps: first, training and testing the classifier with the original data (containing all the features); second, training and testing the classifier with the updated dataset containing only the set of crucial features obtained via our proposed method. In the following subsections, we explain the experimental setup and the implementation environment used to test our approach, followed by the results obtained.

5.1. Experimental Setup

5.1.1. Datasets

We took the same four real datasets used in AFWGE [13] and CERTIFAI [14]: (1) the Adult dataset [24], used to predict whether the income of an adult exceeds 50,000 USD/year using U.S. census data from 1994; (2) the Diagnostic Wisconsin Breast Cancer dataset [25] to diagnose whether a breast tumor is malignant or benign; (3) Pima Indians Diabetes [26] to predict whether or not a patient has diabetes, based on certain diagnostic measurements, where all patients are women at least 21 years old and of Pima Indian heritage; and (4) the Iris dataset [27] to predict the type of the Iris plant from three types. Table 2 provides the details of the datasets considered for the experiments.

Table 2.

Characteristics of the datasets.

5.1.2. Rival Methods

The aim of the experiments was to select the most important group of features underlying a counterfactual explanation by computing the PCC for the feature weights from AFWGE and then finding the most important group of features, as explained in Section 4. After that, the dataset was updated by taking the important group features that were obtained and excluding the rest. Next, we trained and tested the classifier on the updated dataset.

As previously mentioned, both CERTIFAI and AFWGE use custom GAs. However, the main difference between them is that AFWGE assesses feature importance by incorporating feature weights as part of the solution and dynamically evolving them alongside feature values throughout the evolutionary process, while CERTIFAI assesses feature importance by computing the number of feature changes in the produced counterfactual explanations. Therefore, for each dataset, we compared each classifier’s performance using three different feature groups:

- Original data feature groups: The important group of features identified using the PCC for feature values from Original Data;

- AFWGE feature groups: The important group of features identified using the PCC for the feature weights produced via AFWGE [13];

- CERTIFAI feature groups: The important group of features identified using the PCC for the number of feature changes, as produced via CERTIFAI [14].

5.1.3. Evaluation Metrics

To measure the performance of each classifier, we used the following widely accepted performance statistics: accuracy, precision, recall, and F1 score.
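As a minimal sketch, the four metrics can be computed from confusion-matrix counts as shown below. The function name `classification_metrics` and the counts are hypothetical, and the binary setting is an assumption (how the metrics are averaged for the three-class Iris dataset is not specified here).

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall and F1 from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=30)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```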

5.1.4. The Classification Models

We chose nine ML classifiers to evaluate the same four datasets: Adult, Breast Cancer, Pima Indians Diabetes, and Iris. These included simple learners, namely LR, linear and radial SVM (L-SVM and R-SVM), and DT, as well as an ensemble learner (RF, which uses a set of homogeneous DTs as its base classifiers). Additionally, we used AdaBoost, K-NN, Gradient Boosting, and MLP.

5.1.5. Setup

We used the scikit-learn library within the Python implementation environment on macOS. The classifier parameters were left at the scikit-learn defaults. All datasets were evaluated with the same model configurations. To obtain the feature weights selected via AFWGE and the number of feature changes of CERTIFAI, we reimplemented AFWGE and CERTIFAI in Python 3.10 on a Mac Studio (Apple, Cupertino, CA, USA) with an M1 Ultra and 128 GB RAM, using the same four real datasets used in AFWGE and CERTIFAI with the same parameters as in [13]. We implemented all three methods for finding the most important group of features, using either AFWGE, CERTIFAI, or Original Data, in Python 3.10. All experiments were run on a Mac with a 1.4 GHz Quad-Core Intel Core i5 and 8 GB RAM. For the PCC threshold, we ran multiple tests with values of 0.6, 0.7, 0.8, 0.9, 0.95, and 0.99. These threshold values were chosen to systematically evaluate the impact of varying levels of correlation on feature reduction. They provided a balanced range from high to extremely high correlation, allowing us to explore the trade-off between feature redundancy and model interpretability. Studies aiming to improve model performance also indicated better results with a PCC threshold of 0.6 or higher, which is a commonly used threshold in feature selection studies [44]. While these thresholds performed well across the datasets used in this study, their applicability may vary depending on the dataset characteristics, such as feature dimensionality and the degree of correlation among features. Therefore, selecting an optimal threshold for a dataset may require more analysis to understand its correlation structure.
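The setup described above can be sketched as follows: nine scikit-learn classifiers are instantiated with default parameters and evaluated once on all features and once on a reduced feature subset. This is an illustrative sketch rather than the code used in the experiments. The mapping of L-SVM and R-SVM to `SVC` with linear and RBF kernels, the 70/30 stratified split, the random seed, and the column subset `selected_columns` are all assumptions; in particular, the subset is a hypothetical placeholder and not the group reported in Table 9.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def make_classifiers():
    """Nine classifiers with scikit-learn defaults (only the SVM kernel is set)."""
    return {
        "L-SVM": SVC(kernel="linear"),   # assumption: linear-kernel SVC
        "R-SVM": SVC(kernel="rbf"),      # assumption: RBF-kernel SVC
        "LR": LogisticRegression(),
        "DT": DecisionTreeClassifier(),
        "RF": RandomForestClassifier(),
        "AdaBoost": AdaBoostClassifier(),
        "K-NN": KNeighborsClassifier(),
        "Gradient Boosting": GradientBoostingClassifier(),
        "MLP": MLPClassifier(),
    }


def evaluate(X_train, X_test, y_train, y_test, columns):
    """Test accuracy of every classifier when restricted to the given columns."""
    scores = {}
    for name, clf in make_classifiers().items():
        clf.fit(X_train[:, columns], y_train)
        scores[name] = accuracy_score(y_test, clf.predict(X_test[:, columns]))
    return scores


X, y = load_iris(return_X_y=True)
# Assumption: a 70/30 stratified split with a fixed seed.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

all_columns = [0, 1, 2, 3]
selected_columns = [0, 2]  # hypothetical subset, NOT the group from Table 9

baseline = evaluate(X_train, X_test, y_train, y_test, all_columns)
reduced = evaluate(X_train, X_test, y_train, y_test, selected_columns)
for name in baseline:
    print(f"{name}: all={baseline[name]:.3f} selected={reduced[name]:.3f}")
```

Default settings may trigger convergence warnings for LR or MLP on some datasets; the sketch leaves them untouched to mirror the default-parameter configuration.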

5.2. Experimental Results

This section presents the outcomes of training and testing the classifiers using the three different methods for calculating the most important group of features and comparing them according to the aforementioned evaluation criteria. First, we present the PCC results of each method. Then, we assess and compare the classifier metrics, each in a separate subsection: accuracy, precision (Appendix A), recall (Appendix B), and F1 score. Next, we provide an extensive evaluation of the proposed method’s performance. Finally, we provide insights into the relationship between the collective importance of feature groups and the importance of individual features.

5.2.1. PCC Results

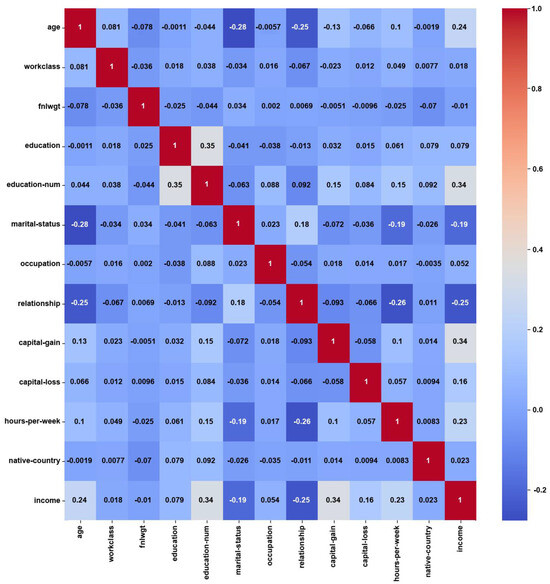

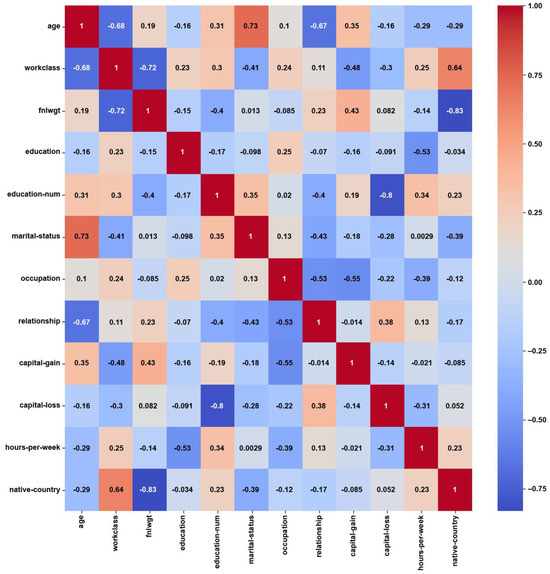

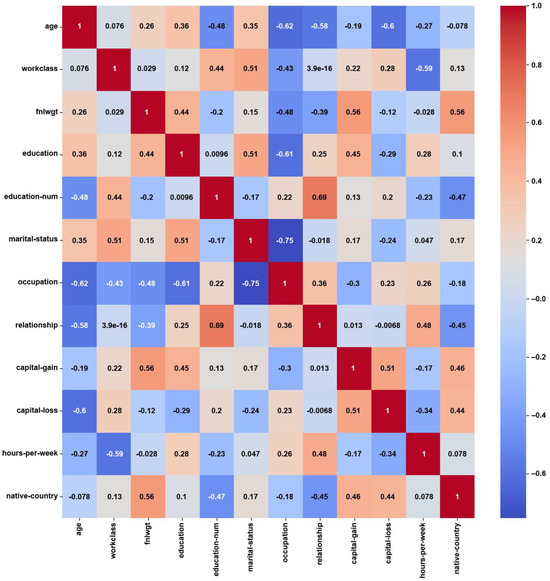

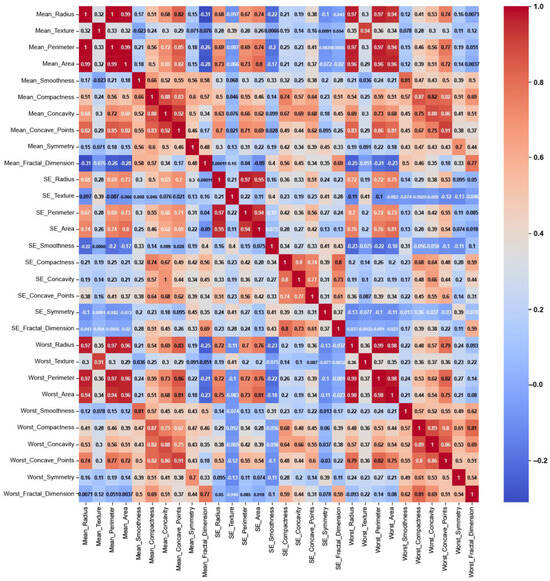

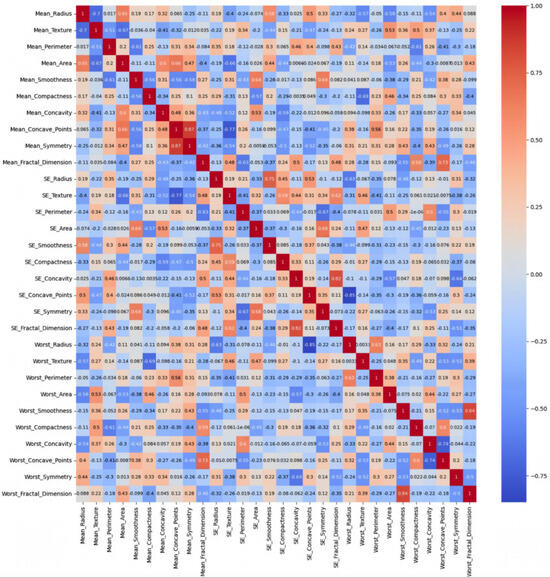

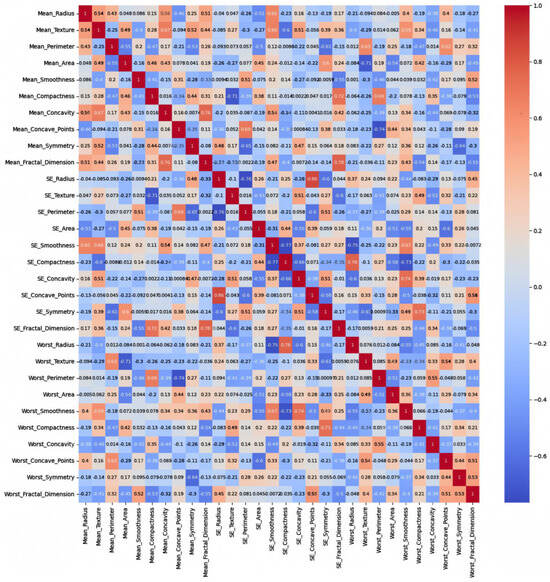

Examining the heatmaps of the Pearson correlation coefficients for the Adult, Breast Cancer, Pima Indians Diabetes, and Iris datasets was crucial for understanding the relationships between features in each dataset. These heatmaps are visual representations of the strength and direction of the linear relationship between two features. These give insights into the relevance of features and possible redundancies. Moreover, the heatmaps demonstrate how the different inputs used to compute the PCC for each method can impact the correlation values for each dataset, as shown next. This comparison highlights how the AFWGE feature weights serve as indicators of feature correlations compared to the number of feature changes in CERTIFAI and the original feature values.

- Adult:

For the Adult dataset, the heatmap in Figure 3 shows weak correlations, with maximum PCC values of around ±0.3, which might have been caused by the notable class imbalance. About 75% of the instances belong to the ‘income ≤ 50 K’ class, while the remaining 25% belong to the ‘income > 50 K’ class. On the other hand, the feature weights by AFWGE (Figure 4) and the number of feature changes by CERTIFAI (Figure 5) show higher correlations, with maximum PCC values of around ±0.8 and ±0.7, respectively. These high correlations are not due to the unified numerical type of features (i.e., the weights by AFWGE or the number of changes by CERTIFAI); rather, the feature weights from AFWGE and the number of feature changes from CERTIFAI seem to encode categorical features more efficiently and to express all features in a form that allows correlations to be interpreted accurately.

Figure 3.

Adult heatmap of Original Data PCC using feature values from Original Data.

Figure 4.

Adult heatmap of AFWGE PCC using the feature weights produced by AFWGE.

Figure 5.

Adult heatmap of CERTIFAI PCC using the number of feature changes produced by CERTIFAI.

- Breast Cancer:

This dataset is balanced across its two classes (malignant and benign), consists entirely of numerical features related to medical measurements, and is likely to show stronger and more meaningful correlations. The heatmap of the Original Data PCC using the feature values from the Breast Cancer dataset in Figure 6 shows strong correlations, with maximum PCC values of around 0.9 and PCC values of at least 0.7 for most features; these account for 21% of all feature correlation pairs (92 pairs out of 435). Ambiguity is introduced by the high correlations among the features since feature selection methods might struggle to distinguish which feature to keep when multiple features are highly correlated. This can lead to uncertain decisions about which features are truly significant, potentially leading to the exclusion of valuable features or the inclusion of redundant ones [37]. On the other hand, the feature weights of AFWGE (Figure 7) and the number of feature changes of CERTIFAI (Figure 8) show how these high correlations are filtered by eliminating a number of them. Feature correlation pairs with PCC values of at least 0.7 were eliminated by AFWGE (15 pairs out of 435) and CERTIFAI (20 pairs out of 435). This filtration prevents ambiguity in the feature selection process.

Figure 6.

Breast Cancer heatmap of Original Data PCC using feature values from Original Data.

Figure 7.

Breast Cancer heatmap of AFWGE PCC using the feature weights produced by AFWGE.

Figure 8.

Breast Cancer heatmap of CERTIFAI PCC using the number of feature changes produced by CERTIFAI.

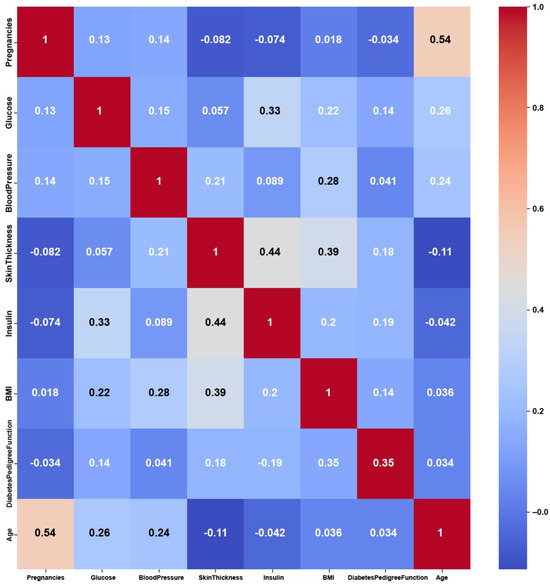

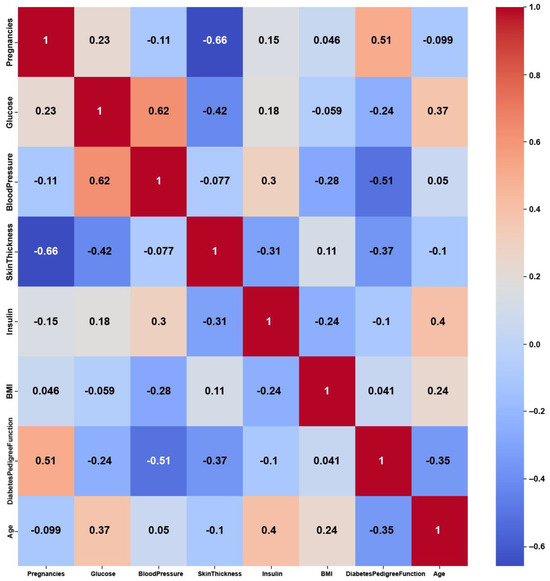

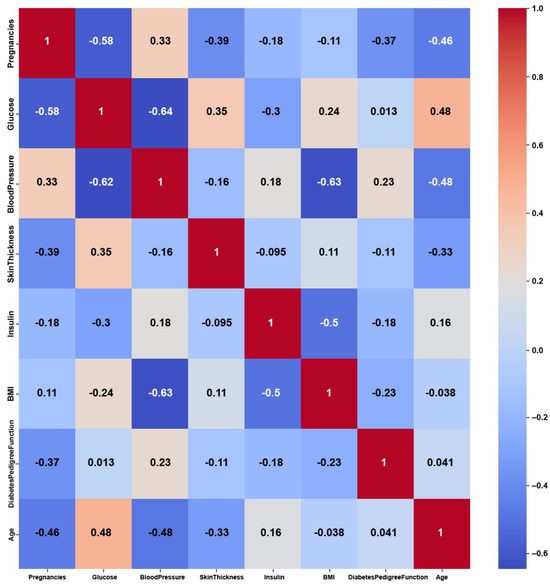

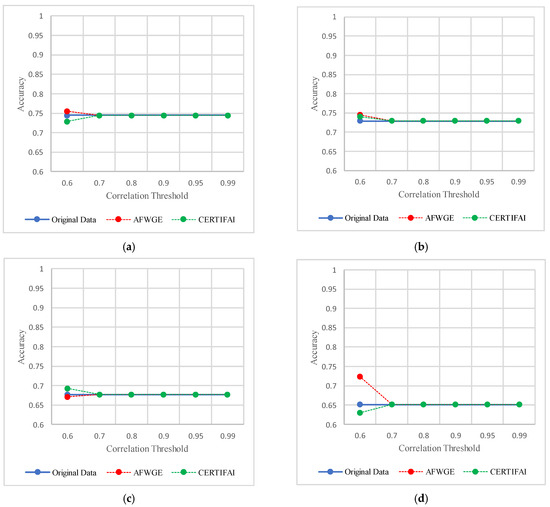

- Pima Indians Diabetes:

The Pima Indians Diabetes dataset consists of numerical features, yet it shows weak correlations among these features, as shown in Figure 9, with maximum PCC values of around 0.5. The weak correlations might have been due to dataset imbalance, with 35% of the instances labeled as diabetic and 65% labeled as nondiabetic. Although the features might not be strongly correlated with each other, they are still relevant for predicting diabetes. On the other hand, the feature weights of AFWGE (Figure 10) and the number of feature changes of CERTIFAI (Figure 11) show higher correlations than Original Data. However, they still do not have strong PCC values, i.e., not exceeding 0.6 for either AFWGE or CERTIFAI.

Figure 9.

Pima Indians Diabetes heatmap of Original Data PCC using feature values from Original Data.

Figure 10.

Pima Indians Diabetes heatmap of AFWGE PCC using the feature weights produced by AFWGE.

Figure 11.

Pima Indians Diabetes heatmap of CERTIFAI PCC using the number of feature changes produced by CERTIFAI.

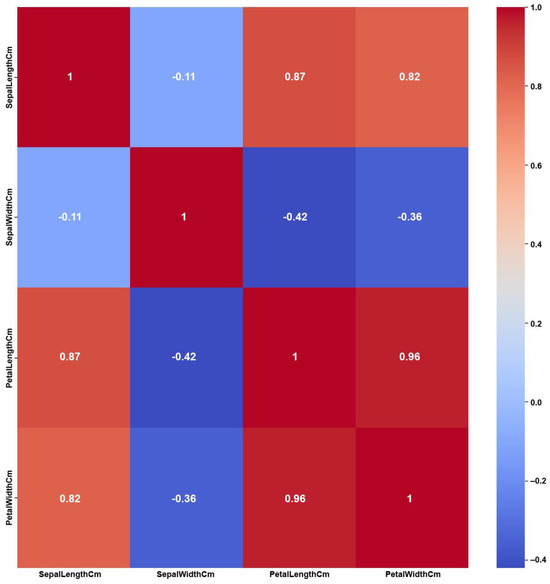

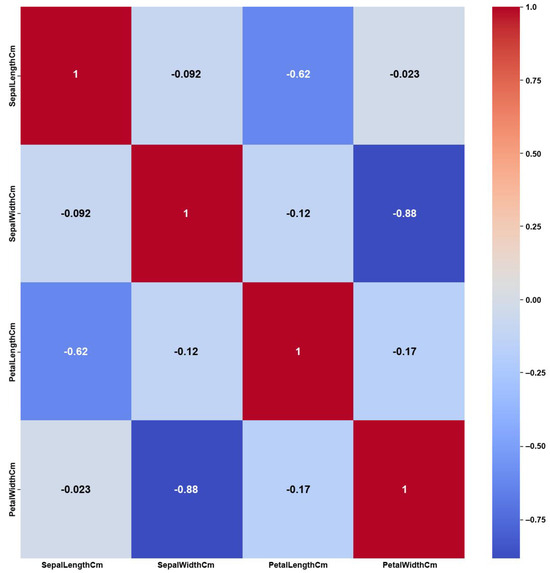

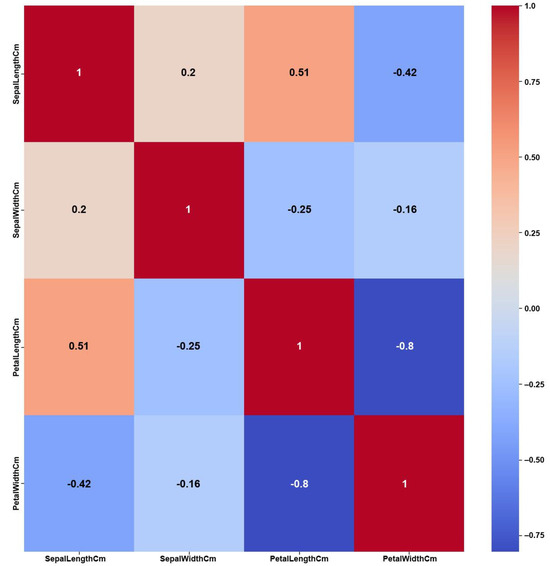

- Iris:

The Iris dataset, which is simple, with a small number of features and balanced classes, shows clear and strong correlations among the features, as shown in the Original Data heatmap (Figure 12). The figure shows a maximum PCC value of around 0.9 and PCC values of at least 0.8 for most of the features, i.e., 50% of all feature correlation pairs (three pairs out of six). Again, as previously discussed in the Breast Cancer case, this large number of high feature correlations can lead to ambiguous decisions in feature selection. However, the feature weights of AFWGE (Figure 13) and the number of feature changes by CERTIFAI (Figure 14) show how these high correlations are filtered by eliminating a number of them, which helps to prevent ambiguity in the feature selection process. Feature correlation pairs with PCC values of at least 0.8 were eliminated in AFWGE and in CERTIFAI (i.e., from three pairs to one pair out of six).

Figure 12.

Iris heatmap of Original Data PCC using feature values from Original Data.

Figure 13.

Iris heatmap of AFWGE PCC using the feature weights produced by AFWGE.

Figure 14.

Iris heatmap of CERTIFAI PCC using the number of feature changes produced by CERTIFAI.

5.2.2. Accuracy

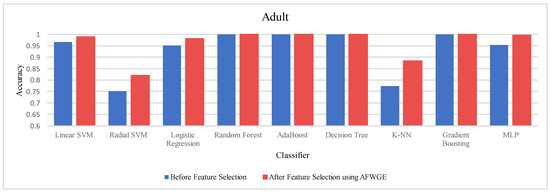

- Adult dataset:

When comparing the performance using all features against the performance using only the important group of features across the three methods—Original Data, CERTIFAI, and AFWGE—on the Adult dataset, we obtained distinct outcomes, as shown in Table 3. For Original Data, there was no change in the accuracy of any of the nine classifiers. In contrast, the AFWGE method achieved enhanced accuracies for the L-SVM, R-SVM, LR, K-NN, and MLP classifiers, while the accuracies for RF, AdaBoost, DT, and Gradient Boosting remained unchanged. Meanwhile, the CERTIFAI method did not affect the accuracies of most classifiers; however, it led to an improvement in the K-NN classifier’s accuracy and a reduction in the MLP classifier’s accuracy.

Table 3.

The percentage of average accuracy change when using each method on the Adult dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is indicated in bold.

The percentage change in accuracy across Original Data, AFWGE, and CERTIFAI reflects how each of the resulting groups of features affects the model’s ability to classify instances correctly. When comparing the values in Table 3, we can identify which method performs best and which performs worst. A positive percentage change in accuracy (highlighted in purple) indicates improvement, while a negative percentage change (highlighted in orange) indicates a reduction. The method with the highest percentage change (in bold) had the most significant positive impact on accuracy. In Table 3, the group of features selected by AFWGE shows the highest positive changes across multiple classifiers.

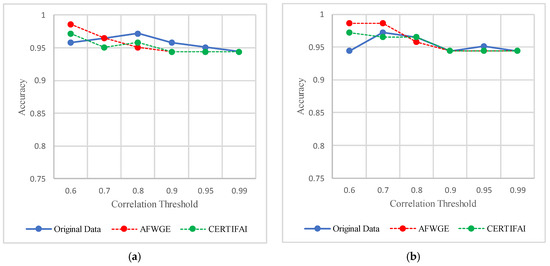

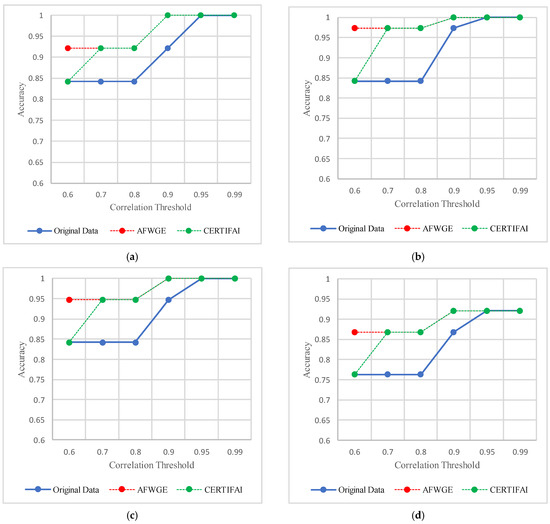

The threshold values for the PCCs were 0.6 and 0.7 for selecting the crucial group of features. The performance in terms of accuracy was the best for the most affected classifiers with the selected features at these thresholds, as shown in Figure 15. This increase in accuracy suggests that the features chosen at these PCC thresholds capture the main characteristics of the data, leading to the enhanced predictive performance of the classifiers.

Figure 15.

Classifier accuracies on the Adult dataset for three rival methods using different PCC threshold values. (a) Linear SVM. (b) LR. (c) K-NN. (d) MLP.

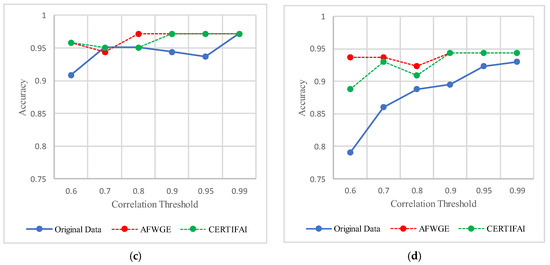

Moreover, we conducted a Wilcoxon signed rank test to see if there was a statistically significant difference between the classifiers’ accuracies before and after using the group of features selected by AFWGE. The Wilcoxon test uses the following null (h0) and alternative (hA) hypotheses: h0: the average value of accuracies is equal between the two groups; hA: the average value of accuracies is not equal between the two groups. The test indicates that there was, indeed, a significant difference (at a significance level of α = 0.05); thus, the null hypothesis was rejected. This is verification that the average value of accuracies after using the selected feature group of AFWGE is significantly better than using all the Adult dataset features (Figure 16).

Figure 16.

Classifier accuracies on the Adult dataset before and after using the group of features selected by AFWGE.
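The paired comparison described above can be sketched with SciPy's `scipy.stats.wilcoxon`, which implements the Wilcoxon signed rank test for paired samples. The accuracy values below are hypothetical and are not the results reported in the tables; the two-sided alternative is SciPy's default and is assumed to match hA.

```python
from scipy.stats import wilcoxon

# Hypothetical paired accuracies for nine classifiers (illustrative values only).
acc_all_features = [0.801, 0.812, 0.795, 0.850, 0.843, 0.820, 0.778, 0.861, 0.834]
acc_selected = [0.812, 0.829, 0.801, 0.853, 0.857, 0.828, 0.802, 0.863, 0.853]

alpha = 0.05
# Two-sided test on the paired differences between the two accuracy lists.
result = wilcoxon(acc_all_features, acc_selected)
reject_h0 = result.pvalue < alpha
print(f"statistic={result.statistic}, p-value={result.pvalue:.4f}, "
      f"reject h0: {reject_h0}")
```

Note that pairs with a zero difference (unchanged accuracy) are discarded under SciPy's default `zero_method="wilcox"`, which reduces the effective sample size.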

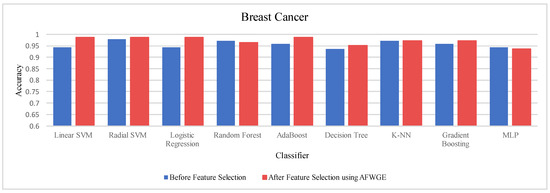

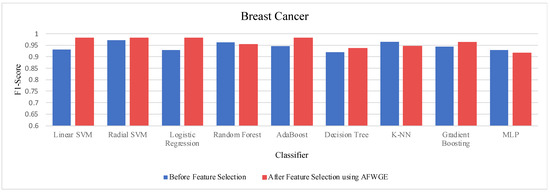

- Breast Cancer dataset:

Table 4 shows the performance results of the three methods (Original Data, CERTIFAI, and AFWGE) on the Breast Cancer dataset. The results in Table 4 reveal the following findings: For Original Data, all nine classifiers, except L-SVM and LR, dropped in accuracy. In contrast, using AFWGE resulted in better accuracies for the L-SVM, R-SVM, LR, AdaBoost, DT, and Gradient Boosting classifiers, while that for the K-NN classifier remained the same. However, there was a resultant decrease in accuracy for the RF and MLP classifiers. When using the CERTIFAI method, the L-SVM, LR, DT, and Gradient Boosting classifiers showed enhanced accuracy, while there was a decrease in the accuracy of the R-SVM, RF, AdaBoost, K-NN, and MLP classifiers.

Table 4.

The percentage of average accuracy change using each method on the Breast Cancer dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is in bold.

Again, from Table 4, the percentage changes in accuracy for the Original Data, AFWGE, and CERTIFAI methods reveal how each method’s resulting group of features impacts the model’s ability to classify instances correctly. From this table, it is obvious that the group of features of AFWGE shows the highest positive changes across multiple classifiers.

For the Breast Cancer dataset, the most important group of features was selected when the PCC threshold was equal to 0.6, 0.7, and 0.8. The selected features at these thresholds provided the best performance in terms of accuracy for the most affected classifiers, as shown in Figure 17. The selected features at these PCC thresholds capture the important characteristics of the data, enhancing the accuracy performance of the classifiers.

Figure 17.

Classifier accuracies on the Breast Cancer dataset using three rival methods with different PCC threshold values. (a) Linear SVM. (b) LR. (c) K-NN. (d) MLP.

Again, the results were subjected to a Wilcoxon signed rank test to check for a statistically significant difference between the classifiers’ accuracies before and after using AFWGE’s selected group of features. Again, the test indicated that there was a significant difference at a significance level of α = 0.05; thus, the null hypothesis was rejected. This is verification that the average value of accuracy after using AFWGE’s selected crucial features is significantly better than that using all the Breast Cancer dataset features (Figure 18).

Figure 18.

Classifier accuracies on the Breast Cancer dataset before and after using the group of features selected by AFWGE.

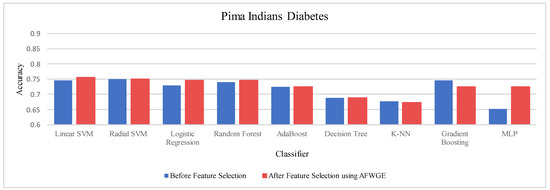

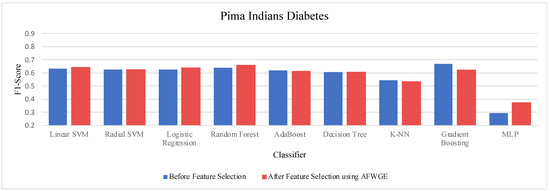

- Pima Indians Diabetes dataset:

Table 5 presents the accuracy results on the Pima Indians Diabetes dataset for the three compared methods. When evaluating the results in Table 5, we observed distinct patterns. For Original Data, there was no change in the accuracy for any of the nine classifiers. In contrast, AFWGE improved the accuracies of the L-SVM, LR, RF, and MLP classifiers, though it had no effect on the R-SVM, AdaBoost, or DT classifiers. However, the accuracies of the K-NN and Gradient Boosting classifiers went down. For the CERTIFAI approach, the accuracy for the LR, AdaBoost, and K-NN classifiers increased, and the accuracy of the L-SVM, R-SVM, RF, DT, Gradient Boosting, and MLP classifiers decreased.

Table 5.

The percentage of average accuracy change using each method for the Pima Indians Diabetes dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is in bold.

Again, from Table 5, the most important group of features of AFWGE shows the highest positive changes across multiple classifiers for the Pima Indians Diabetes dataset, indicating the best performance among the three rival methods.

As shown in Figure 19, the selected features at a PCC threshold of 0.6 provided the best performance in terms of accuracy for the most affected classifiers.

Figure 19.

Classifier accuracies on the Pima Indians Diabetes dataset using three rival methods with different PCC threshold values. (a) Linear SVM. (b) LR. (c) K-NN. (d) MLP.

Once more, we performed a Wilcoxon signed rank test between the classifiers’ accuracies before and after using the most important group of features of AFWGE. The results, again, showed that there was a significant difference at α = 0.05; thus, the null hypothesis was rejected. We can, therefore, conclude that the average value of accuracy after AFWGE is significantly better than using all the Pima Indians Diabetes dataset’s features (Figure 20).

Figure 20.

Classifier accuracies on the Pima Indians Diabetes dataset before and after using the group of features selected by AFWGE.

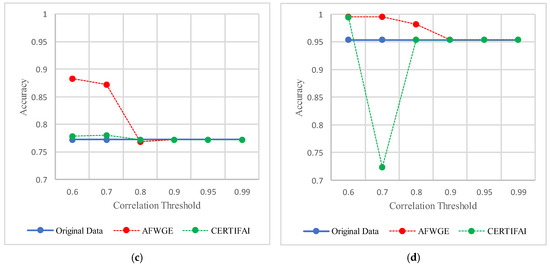

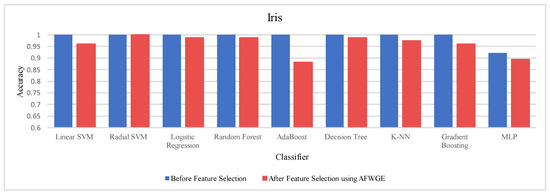

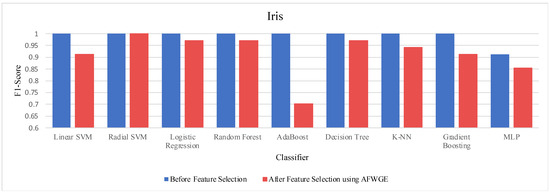

- Iris dataset:

Prior to utilizing the selected group of features, all classifiers employed on the Iris dataset demonstrated exceptionally high accuracies, nearly equal to 1.0, which often indicates overfitting. Certain characteristics of the Iris dataset, such as its small size, low dimensionality, and distinct class separability, can contribute to overfitting. When the Original Data method was used, the accuracies of all nine classifiers were reduced, as shown in Table 6. Similarly, the AFWGE method resulted in a moderate decrease in accuracies for all nine classifiers, with the exception of the R-SVM classifier, which maintained the same accuracy. The CERTIFAI method also led to reduced accuracies for all nine classifiers. Nevertheless, the results in Table 6 demonstrate that the most important group of features of AFWGE exhibited the minimum reduction in accuracy across multiple classifiers.

Table 6.

The percentage of average accuracy change using each method for the Iris dataset for each classifier, where a negative change is in orange, and the best for each classifier is in bold.

As shown in Figure 21, the most important group of features was selected when the PCC threshold was equal to 0.9, 0.95, and 0.99. At these PCC thresholds, the reduction in accuracy was limited, while overfitting was effectively minimized, demonstrating that the selected features retained the essential characteristics of the data without allowing the classifiers to overfit. Therefore, the selected features are the most important features, supporting both generalizability and interpretability.

Figure 21.

Classifier accuracies on the Iris dataset using the three rival methods with different PCC threshold values. (a) Linear SVM. (b) LR. (c) K-NN. (d) MLP.

We ran another Wilcoxon signed rank test to check whether there was a statistically significant difference between the classifiers’ accuracies before and after using the most important group of features of AFWGE. Once again, the findings demonstrated a significant difference at a significance level of α = 0.05; thus, the null hypothesis was rejected. Therefore, it can be concluded that the average value of the accuracies after using AFWGE is significantly better than using all the Iris dataset features since it reduces overfitting (Figure 22).

Figure 22.

Classifier accuracies on the Iris dataset before and after using the group of features selected by AFWGE.

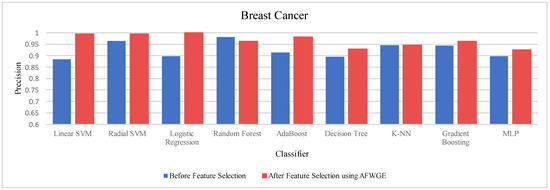

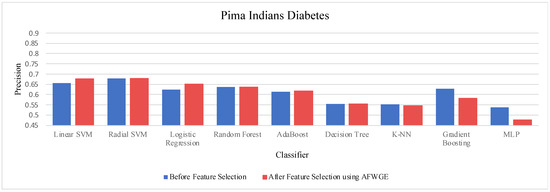

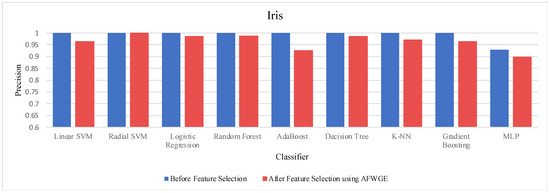

5.2.3. Precision

The results demonstrate a clear improvement in classifiers’ performance when using the selected group of features, particularly with the AFWGE method. This highlights the effectiveness of AFWGE in enhancing feature selection. The details and comprehensive analysis of these results are provided in the Appendix (See Appendix A).

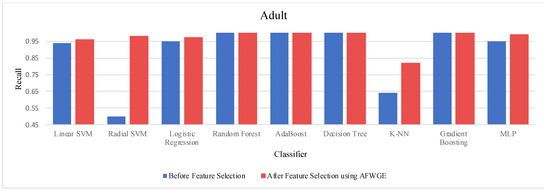

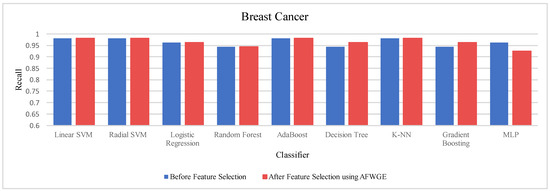

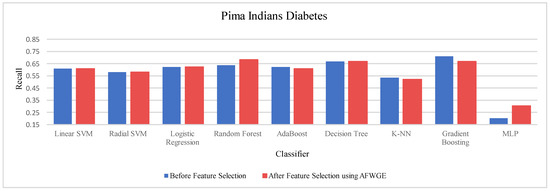

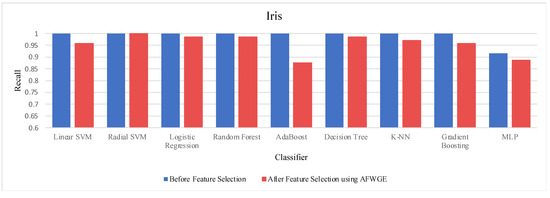

5.2.4. Recall

According to the results, using the selected set of features, especially with the AFWGE method, significantly improves classifier performance. This demonstrates how effectively AFWGE improves feature selection. The results are thoroughly analyzed and detailed in the Appendix (See Appendix B).

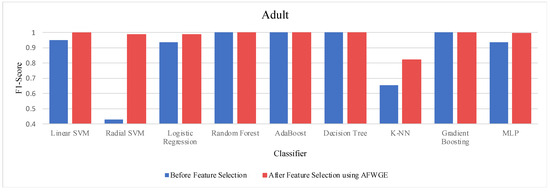

5.2.5. F1-Score

The results show that classifier performance is much enhanced by using the selected group of features, especially with the AFWGE method. This illustrates that AFWGE enhances feature selection effectively. The results are presented in the Appendix with analysis (See Appendix C).

5.3. Results: Analysis and Discussion

The detailed results presented above show how the classifiers’ performance improved using the resulting selected group of features, especially when using the AFWGE method. The important group of features obtained through the weights of AFWGE with the PCC improved all performance metrics for all the tested classifiers. Since AFWGE provided the highest positive percentage change for all the classifiers’ performance metrics, we used the Wilcoxon test to evaluate the significance of AFWGE’s superiority over the Original Data and CERTIFAI methods. The results of this testing are presented in Table 7, where the method(s) with statistically significant performance is (are) indicated in each case.

Table 7.

Superior method(s) based on the Wilcoxon test for each performance criterion for all nine classifiers.

Moreover, a summary of the statistical significance results using the Wilcoxon test to indicate whether performance was significantly enhanced when using the group of features selected by AFWGE is shown in Table 8. From this table, it is demonstrated that AFWGE provided a significant improvement across all four performance metrics for all nine classifiers on the four datasets, except for precision and recall on the Pima Indians Diabetes dataset. Nonetheless, on this dataset, the Wilcoxon test indicated that AFWGE provided a significant improvement in the F1 score, which is defined as the harmonic mean of precision and recall and is derived from the confusion matrix; thus, it can completely describe the outcome of a classification task [45].

Table 8.

Summary of the Wilcoxon test for all performance criteria used to assess the enhancement in performance before and after using the selected group of features for the AFWGE method and each dataset. Yes denotes a significant enhancement, and No denotes no significant enhancement.

In fact, out of the four datasets, only the Pima Indians Diabetes dataset has an imbalance toward the negative class (nondiabetic), since 65% of the instances are classified as not having diabetes, and 35% are classified as having diabetes. An ML model’s ability to accurately detect instances of the minority class (diabetic) can be negatively affected by this imbalance if the model becomes biased toward the majority class (nondiabetic). The overall accuracy and F1 score may have improved for this reason; these measures can conceal weak performance on minority classes. Because the majority class is larger and dominates the measure, accuracy might improve. Since the F1 score is the harmonic mean of precision and recall, it can increase even if one of the two components decreases, provided that the other improves sufficiently. Precision and recall place more emphasis on correctly identifying the minority class. Therefore, if the model’s performance worsens when predicting the positive class (diabetic), this has a stronger effect on precision and recall [46].
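As a purely illustrative calculation with hypothetical values (not results from this study), suppose that precision P drops from 0.60 to 0.55 while recall R rises from 0.50 to 0.70. The F1 score still increases, which shows how it can improve even though one of its components has worsened:

```latex
F_1 = \frac{2PR}{P + R}, \qquad
F_1^{\text{before}} = \frac{2(0.60)(0.50)}{0.60 + 0.50} = \frac{0.60}{1.10} \approx 0.545, \qquad
F_1^{\text{after}} = \frac{2(0.55)(0.70)}{0.55 + 0.70} = \frac{0.77}{1.25} = 0.616
```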

Finally, it is worth noting that lower metric results do not necessarily mean worse performance. For example, on the Iris dataset, most performance criteria were approximately equal to one (i.e., 100%) before using the selected group of features, which indicated overfitting. In fact, FS is a widely recognized task in ML, which has the aim of reducing the chances of overfitting a model on a dataset [47]. Therefore, the performance criteria with the Iris dataset were reduced significantly using the selected group of features.

5.4. Analysis of the Importance of a Group of Features vs. Individual Feature Importance

The distinction between the contribution of each individual feature (feature importance) and the contribution of a group of features was also significant in the current analysis. Table 9 shows the selected group of features for each dataset obtained via the AFWGE method with the PCC, as explained previously. Mostly, correlation analysis is performed to identify hidden patterns in data (e.g., as in [37]) to distinguish the most important group of features, i.e., the group of features that collectively have a crucial impact on performance. For example, as can be seen for the Pima Indians Diabetes dataset, the feature importance ranking using AFWGE [13], LIME (local interpretable model-agnostic explanations), and LPI (local permutation importance) explanations [48] of “Blood Pressure” is higher than for “Insulin”. However, the most important group of features of AFWGE inferred from our FS results, as shown in Table 9, does not reveal the same information. From this table, we can see that the best performance of the classifiers on the Pima Indians Diabetes dataset included “Insulin” instead of “Blood Pressure”, although the latter had higher importance than “Insulin” as an individual feature (as mentioned above).

Table 9.

The most important group of features selected by AFWGE on each dataset.

Thus, we inferred that the process of determining which group of features in a learning model’s dataset is the most important for performance is distinct from the process of simply calculating the importance of each individual feature and selecting the highest among them. The concept of feature importance focuses on the contribution of individual features to the predictions made by the model. On the other hand, determining which features constitute an important group requires an understanding of how different combinations of features interact with one another and collectively influence the model’s performance. As previously mentioned, an example is the case of the Pima Indians Diabetes dataset, where “Insulin” was included in the most important group of features instead of “Blood Pressure”, even though it had a lower weight as an individual feature. It seems that “Insulin”, together with the other selected important features, influenced the decision of the model and compensated for the absence of “Blood Pressure” in the selected feature set. When using the PCC, we could identify the feature groups that best captured the most important patterns to gain a deeper understanding of the data’s fundamental structure. For instance, in high-stakes domains such as healthcare or finance, users can rely on AFWGE-derived feature weights to focus on the most relevant relationships among the features, making counterfactual explanations more actionable and aligned with domain-specific requirements.

The utilization of the counterfactual explanations of AFWGE, with its embedded feature weights, contributes to the effectiveness of the PCC approach. Thus, it is possible to identify the most important group of features more effectively by combining these weights with correlation analysis. AFWGE provides interpretability by adaptively learning feature weights throughout the optimization process. This continuous adaptation allows AFWGE to capture the dynamic influence of each feature within the counterfactual generation process. Furthermore, the incorporation of the PCC allows the method to reduce feature redundancy, providing more stable and relevant insights for end users to understand feature relationships and dependencies. Thus, our proposed method helps end users focus on the most critical features to recognize which features most significantly influence the model’s predictions rather than being confused by redundant or less informative ones.

This helps to ensure that the selected groups of features are both individually relevant and collectively significant in improving the learning model’s performance.

6. Conclusions

Feature selection (FS) is an important process in machine learning (ML), where the goal is to find and select the most relevant features from a dataset to enhance the model’s performance and reduce overfitting. Our proposed method leverages the feature weights obtained using the adaptive feature weight genetic explanation (AFWGE) method, combined with the Pearson correlation coefficient (PCC), to identify the most important group of features, thereby optimizing FS and enhancing model performance. Extensive testing was performed using the proposed approach on four real-world datasets that encompass both continuous and categorical features, each with nine separate classifiers. By adopting a comprehensive approach, we were able to evaluate the effectiveness of the proposed method with different models and with various types of data.

The results demonstrated that the important group of features identified by AFWGE, together with the PCC, offers a significant improvement in terms of accuracy, precision (Appendix A), recall (Appendix B), and F1 score on most datasets and for most classifier models. Moreover, the results demonstrated the superiority of weights from AFWGE over the number of feature changes from CERTIFAI and the Original Data values with the PCC in enhancing the model’s performance.

This study aimed to improve the interpretability of AI models through the selection of crucial features. Machine learning interpretability is becoming increasingly important, especially as ML algorithms grow more complex. In many cases, less performant but explainable models are preferred over more performant black box models, highlighting the need for transparency in decision making. This is why research around XAI has recently become a growing field achieving significant advances. Would users, model developers, and regulators feel confident using a machine learning model if they cannot explain what it does? XAI techniques in FS, such as AFWGE, aim to address this by explaining why certain features are selected or considered important by the model, helping to clarify the relationship between input features and the model’s decision-making process. This is where our AFWGE feature weights with the PCC can be highly beneficial; they provide interpretability by indicating the influence of each feature on the target, making the approach applicable to any ML model. Focusing on the most important group of features for reversing the decision and producing the desired outcome will help individuals, model developers, and regulators not only to enhance the model’s behavior but also to understand it and make more informed decisions. This effort will help bridge the gap between model accuracy and explainability, leading to more trustworthy AI systems.

Future work will involve extending the assessment of the proposed method against various existing XAI methods, with additional testing on higher-dimensional and noisier datasets to ensure the method’s robust applicability and performance across diverse scenarios. Moreover, the AFWGE method incurred increased processing times, primarily due to the adaptive nature of the feature weights and the repeated evaluations required for optimization. Thus, one of the future goals is to optimize computational efficiency, possibly by leveraging external libraries to parallelize the process. Additionally, we plan to improve the accuracy of the techniques and investigate the performance of hybrid approaches to identify the crucial group of features by integrating AFWGE with other XAI techniques, such as LIME or SHAP.

Author Contributions

Conceptualization, E.A. and M.H.; methodology, E.A. and M.H.; software, E.A.; validation, E.A. and M.H.; formal analysis, E.A. and M.H.; investigation, E.A.; resources, E.A.; data curation, E.A.; writing—original draft preparation, E.A.; writing—review and editing, M.H.; visualization, E.A. and M.H.; supervision, M.H.; project administration, M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are openly available from the UCI Machine Learning Repository and Kaggle: 10.24432/C5XW20, 10.24432/C5DW2B, www.kaggle.com/datasets/uciml/pima-indians-diabetes-database (accessed on 1 July 2024), and 10.24432/C56C76 [24,25,26,27].

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Precision

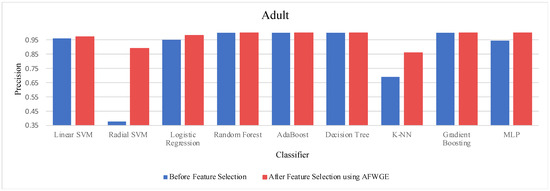

- Adult Dataset:

In analyzing the precision of the classifiers using the three methods, we observed the results presented in Table A1. With the Original Data method, there was no change in the precision of any of the nine classifiers. The AFWGE method, however, led to enhanced precision for the L-SVM, R-SVM, LR, K-NN, and MLP classifiers, while the precision for the RF, AdaBoost, DT, and Gradient Boosting classifiers remained unchanged. Like the Original Data method, the CERTIFAI method produced no change in the precision of any of the nine classifiers. In Table A1, the most important group of features selected by AFWGE shows the highest positive changes across multiple classifiers.

Table A1.

The percentage of average precision change using each method on the Adult dataset for each classifier, where a positive change is in purple, and the best for each classifier is in bold.

| Classifier | Original Data | AFWGE | CERTIFAI |
|---|---|---|---|
| L-SVM | 0.0% | 1.45% | 0.0% |
| R-SVM | 0.0% | 136.91% | 0.0% |
| LR | 0.0% | 3.0% | 0.0% |
| RF | 0.0% | 0.0% | 0.0% |
| AdaBoost | 0.0% | 0.0% | 0.0% |
| DT | 0.0% | 0.0% | 0.0% |
| K-NN | 0.0% | 24.53% | 0.0% |
| Gradient Boosting | 0.0% | 0.0% | 0.0% |
| MLP | 0.0% | 5.6% | 0.0% |
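
The entries in Table A1 are percentage changes. Because a value such as 136.91% cannot be an absolute difference between two precisions in [0, 1], they are presumably expressed relative to the baseline precision. A minimal sketch of this arithmetic, under that assumption and with purely hypothetical precision values, is as follows:

```python
def percent_change(before, after):
    """Relative change of a metric, in percent of its baseline value."""
    if before == 0:
        raise ValueError("baseline metric is zero; relative change is undefined")
    return (after - before) / before * 100.0


# Hypothetical precision values, chosen only to illustrate the arithmetic.
baseline_precision = 0.30  # assumed: all features used
selected_precision = 0.72  # assumed: selected feature group only

change = percent_change(baseline_precision, selected_precision)
print(f"{change:.2f}%")  # 140.00%
```

Under this reading, a change above 100% means that the precision more than doubled relative to a low baseline.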

Once again, the Wilcoxon signed rank test for the precision results before and after using the most important group of features selected by AFWGE indicated there was a significant difference at a significance level of α = 0.05; thus, the null hypothesis was rejected. Therefore, the average precision achieved after using the features selected by AFWGE was significantly better than that using all the Adult dataset’s features (Figure A1).
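
For readers who wish to reproduce this kind of comparison, the sketch below implements an exact two-sided Wilcoxon signed rank test for paired per-classifier scores using only the Python standard library. The handling of zero differences (discarded) and ties (average ranks) is a common convention that we assume here, and the precision values are hypothetical; they are not the values behind Figure A1.

```python
def wilcoxon_signed_rank(before, after):
    """Exact two-sided Wilcoxon signed rank test for paired samples.

    Zero differences are discarded and tied |differences| receive average ranks.
    Returns (W, p), where W = min(W+, W-).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks over ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    # Exact null distribution: each rank is signed + or - with probability 1/2.
    # Ranks are doubled so that averaged (half-integer) ranks stay integral.
    doubled = [int(round(2 * r)) for r in ranks]
    counts = {0: 1}
    for r in doubled:
        nxt = {}
        for s, c in counts.items():
            nxt[s] = nxt.get(s, 0) + c          # this rank takes a negative sign
            nxt[s + r] = nxt.get(s + r, 0) + c  # this rank takes a positive sign
        counts = nxt

    lower_tail = sum(c for s, c in counts.items() if s <= 2 * w + 1e-9) / 2 ** n
    return w, min(1.0, 2 * lower_tail)


# Hypothetical precisions of nine classifiers (illustrative values only).
precision_all = [0.70, 0.62, 0.71, 0.80, 0.78, 0.75, 0.66, 0.79, 0.73]
precision_selected = [0.74, 0.69, 0.76, 0.79, 0.80, 0.78, 0.72, 0.87, 0.82]

w, p = wilcoxon_signed_rank(precision_all, precision_selected)
print(f"W = {w}, p = {p:.4f}, reject H0 at 0.05: {p < 0.05}")
```

With only nine pairs, the exact null distribution is cheap to enumerate, which avoids relying on the normal approximation for such a small sample.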

Figure A1.

Classifier precisions on the Adult dataset before and after using the group of features selected by AFWGE.

- Breast Cancer Dataset:

In evaluating the precision of the classifiers for the Breast Cancer dataset, we found varied results, as shown in Table A2. For the Original Data method, the precisions of all nine classifiers were reduced, except for those of the L-SVM, LR, and AdaBoost classifiers, which showed enhanced precision. The AFWGE method resulted in enhanced precision for all nine classifiers, except for the RF classifier, which experienced a reduction, and the K-NN classifier, which showed no change. With the CERTIFAI method, the precisions of the L-SVM, LR, AdaBoost, DT, and Gradient Boosting classifiers were enhanced, while the precisions of the R-SVM, RF, K-NN, and MLP classifiers were reduced. Once again, the results in Table A2 demonstrate that the group of features selected by AFWGE exhibited the highest positive changes across multiple classifiers.

Table A2.

The percentage of average precision change using each method on the Breast Cancer dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is in bold.

| Classifier | Original Data | AFWGE | CERTIFAI |
|---|---|---|---|
| L-SVM | 4.09% | 12.49% | 2.29% |
| R-SVM | −1.29% | 3.12% | −0.08% |
| LR | 1.38% | 11.54% | 2.69% |
| RF | −2.81% | −1.89% | −0.37% |
| AdaBoost | 0.42% | 7.41% | 0.43% |
| DT | −1.54% | 3.78% | 0.59% |
| K-NN | −4.0% | 0.0% | −1.85% |
| Gradient Boosting | −0.87% | 1.96% | 0.29% |
| MLP | −4.67% | 3.12% | −1.46% |

The Wilcoxon signed rank test for the precision results before and after the group of features selected by AFWGE showed, once again, that there was a significant difference at α = 0.05. Thus, it was verified that using the group of features selected by AFWGE significantly enhanced the average precision as compared to using all the Breast Cancer dataset’s features (Figure A2).

Figure A2.

Classifier precisions on the Breast Cancer dataset before and after using the group of features selected by AFWGE.

- Pima Indians Diabetes Dataset:

Table A3 shows the precision results on the Pima Indians Diabetes dataset. It can be observed from this table that the Original Data method did not produce any change in the precision for any of the nine classifiers. The AFWGE method, on the other hand, resulted in enhanced precision for the L-SVM, LR, and AdaBoost classifiers; the precisions of the K-NN, Gradient Boosting, and MLP classifiers were reduced; and no change was observed for the R-SVM, RF, or DT classifiers. With the CERTIFAI method, the precisions of the LR, AdaBoost, K-NN, and MLP classifiers were enhanced, whereas the precisions of the L-SVM, R-SVM, RF, DT, and Gradient Boosting classifiers were reduced. Again, Table A3 demonstrates that using the group of features selected by AFWGE achieved high positive precision changes across multiple classifiers.

Table A3.

The percentage of average precision change using each method on the Pima Indians Diabetes dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is in bold.

| Classifier | Original Data | AFWGE | CERTIFAI |
|---|---|---|---|
| L-SVM | 0.0% | 3.23% | −0.18% |
| R-SVM | 0.0% | 0.0% | −0.28% |
| LR | 0.0% | 4.55% | 1.01% |
| RF | 0.0% | 0.0% | −1.29% |
| AdaBoost | 0.0% | 0.55% | 0.34% |
| DT | 0.0% | 0.0% | −0.38% |
| K-NN | 0.0% | −1.23% | 1.44% |
| Gradient Boosting | 0.0% | −7.31% | −0.61% |
| MLP | 0.0% | −11.36% | 1.56% |

Nevertheless, the Wilcoxon signed rank test for the precisions before and after using the group of features selected by AFWGE this time showed that there was no significant difference at a significance level of α = 0.05; thus, we failed to reject the null hypothesis. Hence, it could not be verified that the average precision after using the group of features selected by AFWGE was significantly better than that using all the Pima Indians Diabetes dataset’s features (Figure A3).

Figure A3.

Classifier precisions for the Pima Indians Diabetes dataset before and after using AFWGE’s selected group of features.

- Iris Dataset:

On the Iris dataset, once again, before using any important group of features, all the applied classifiers exhibited very high precision (almost equal to 1.0, indicating overfitting). When using the Original Data method, however, the precision of all nine classifiers was reduced, as shown in Table A4. Similarly, the AFWGE method resulted in reduced precisions for all nine classifiers, except for the R-SVM classifier, which maintained the same precision. The CERTIFAI method also led to reduced precision for all nine classifiers. Nevertheless, as shown in Table A4, the group of features selected by AFWGE produced the smallest negative changes across multiple classifiers.

Table A4.

The percentage of average precision change using each method on the Iris dataset for each classifier, where a negative change is in orange, and the best for each classifier is in bold.

| Classifier | Original Data | AFWGE | CERTIFAI |
|---|---|---|---|
| L-SVM | −9.66% | −3.57% | −5.2% |
| R-SVM | −8.47% | 0.0% | −2.82% |
| LR | −8.93% | −1.39% | −3.75% |
| RF | −12.04% | −1.28% | −4.73% |
| AdaBoost | −18.41% | −7.5% | −10.3% |
| DT | −18.52% | −1.39% | −6.94% |
| K-NN | −9.68% | −2.9% | −4.84% |
| Gradient Boosting | −12.81% | −3.57% | −6.25% |
| MLP | −11.16% | −3.28% | −5.54% |

The Wilcoxon signed rank test for this experiment showed, once again, that the results were significantly different at α = 0.05. Therefore, we concluded that the precision after using the selected features from AFWGE was significantly better than that using all the Iris dataset’s features due to reduced overfitting (Figure A4).

Figure A4.

Classifier precisions for the Iris dataset before and after using the group of features selected by AFWGE.

Appendix B

Recall

- Adult Dataset:

In evaluating the recall of the classifiers shown in Table A5 for the Adult dataset, we observed no change in the recall for any of the nine classifiers using the Original Data method. On the other hand, AFWGE led to enhanced recall for the L-SVM, R-SVM, LR, K-NN, and MLP classifiers, while the recall for the RF, AdaBoost, DT, and Gradient Boosting classifiers remained unchanged. With the CERTIFAI method, the recalls of the L-SVM and LR classifiers were enhanced, the recalls of the K-NN and MLP classifiers were reduced, and there was no change in the recall of the R-SVM, RF, AdaBoost, DT, or Gradient Boosting classifiers. Again, we found that using the most important group of features selected by AFWGE exhibited the highest positive changes across multiple classifiers with respect to the recall.

Table A5.

The percentage of average recall change using each method on the Adult dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is in bold.

| Classifier | Original Data | AFWGE | CERTIFAI |
|---|---|---|---|
| L-SVM | 0.0% | 2.11% | 1.53% |
| R-SVM | 0.0% | 96.1% | 0.0% |
| LR | 0.0% | 2.53% | 0.59% |
| RF | 0.0% | 0.0% | 0.0% |
| AdaBoost | 0.0% | 0.0% | 0.0% |
| DT | 0.0% | 0.0% | 0.0% |
| K-NN | 0.0% | 27.83% | −0.05% |
| Gradient Boosting | 0.0% | 0.0% | 0.0% |
| MLP | 0.0% | 4.34% | −4.05% |

To determine if there was a statistically significant difference between the classifiers’ recalls before and after using the most important group of features selected by AFWGE, we once again carried out a Wilcoxon signed rank test. As before, the results indicated a significant difference at a significance level of α = 0.05. Thus, it was concluded that the average recall when using the most important group of features selected by AFWGE was significantly better than that using all the Adult dataset’s features (Figure A5).

Figure A5.

Classifier recalls on the Adult dataset before and after using the group of features selected by AFWGE.

- Breast Cancer Dataset:

In assessing the recall of the classifiers using the three methods on the Breast Cancer dataset shown in Table A6, we noted that for the Original Data method, the recall of all nine classifiers was reduced, except for the LR and Gradient Boosting classifiers, which exhibited improvements. The AFWGE method showed no change in the recall for most classifiers, except for the DT and Gradient Boosting classifiers, which experienced enhancements, while the MLP classifier’s recall was reduced. For the CERTIFAI method, the recalls of the LR, RF, DT, and Gradient Boosting classifiers were enhanced, whereas the recalls of the L-SVM, R-SVM, AdaBoost, K-NN, and MLP classifiers were reduced. It is obvious from the aforementioned results that the most important group of features selected by AFWGE had a positive effect across multiple classifiers.

Table A6.

The percentage of average recall change using each method on the Breast Cancer dataset for each classifier, where a positive change is in purple, a negative change is in orange, and the best for each classifier is in bold.

| Classifier | Original Data | AFWGE | CERTIFAI |

|---|---|---|---|

| L-SVM | −0.63% | 0.0% | −0.31% |

| R-SVM | −1.89% | 0.0% | −0.31% |

| LR | 1.28% | 0.0% | 0.32% |

| RF | −0.33% | 0.0% | 0.33% |

| AdaBoost | −5.35% | 0.0% | −1.26% |

| DT | −1.63% | 1.96% | 0.65% |

| K-NN | −3.46% | 0.0% | −0.63% |

| Gradient Boosting | 0.65% | 1.96% | 0.65% |