Abstract

In this paper, an improved hybrid genetic-hierarchical algorithm for the solution of the quadratic assignment problem (QAP) is presented. The algorithm is based on genetic search combined with the hierarchical (hierarchicity-based multi-level) iterated tabu search procedure. The paper makes two main scientific contributions: (i) an enhanced two-level hybrid primary (master)-secondary (slave) genetic algorithm is proposed; (ii) an augmented universalized multi-strategy perturbation (mutation process), integrated within a multi-level hierarchical iterated tabu search algorithm, is implemented. The proposed scheme enables an efficient balance between intensification and diversification in the search process. Computational experiments have been conducted on QAP instances of sizes up to 729. The experimental results demonstrate the outstanding performance of the proposed approach, especially for small- and medium-sized instances. Nearly 90% of the runs resulted in (pseudo-)optimal solutions. Three new best-known solutions have been achieved for very hard, challenging QAP instances.

Keywords:

combinatorial optimization; heuristic algorithms; genetic algorithms; tabu search; quadratic assignment problem

MSC: 68T20

1. Introduction

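The quadratic assignment problem (QAP) [1] can be stated as follows: Given two positive-integer square matrices $\mathbf{A} = (a_{ij})_{n \times n}$ and $\mathbf{B} = (b_{kl})_{n \times n}$, and the set $\Pi_n$ of all possible permutations of the integers from $1$ to $n$, find a permutation $p \in \Pi_n$ that minimizes the following function:

$$z(p) = \sum_{i=1}^{n} \sum_{j=1}^{n} a_{ij}\, b_{p(i)p(j)}. \tag{1}$$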
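As a quick illustration of the objective function above, the following Python sketch evaluates $z(p)$ directly in $O(n^2)$ time. It uses 0-based indices (so $p$ is a permutation of $0, \ldots, n-1$); the function name `qap_objective` and the small matrices are ours, chosen only for illustration.

```python
from typing import Sequence

Matrix = Sequence[Sequence[int]]


def qap_objective(p: Sequence[int], a: Matrix, b: Matrix) -> int:
    """z(p) = sum_i sum_j a[i][j] * b[p[i]][p[j]] with 0-based indices; O(n^2)."""
    n = len(p)
    return sum(a[i][j] * b[p[i]][p[j]] for i in range(n) for j in range(n))


# Toy instance: A plays the role of flows, B the role of distances.
A = [[0, 1, 2], [1, 0, 3], [2, 3, 0]]
B = [[0, 5, 1], [5, 0, 4], [1, 4, 0]]
print(qap_objective([0, 1, 2], A, B))  # 38
print(qap_objective([2, 0, 1], A, B))  # 48
```

Evaluating $z$ from scratch like this is what the difference formulas below avoid during the neighbourhood search.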

The quadratic assignment problem was first introduced by Koopmans and Beckmann [2] in 1957 but still attracts the attention of many researchers. Many practical applications can be formulated as quadratic assignment problems, including office assignment, planning a complex of buildings, design (construction) of electronic devices, finding the tightest cluster, the grey-pattern problem, the turbine-balancing problem, the arrangement of microarray layouts, configuration of an airport, scheduling parallel production lines, and assigning runners in a relay team. (A more detailed description of these particular applications can be found in [3].)

One concrete example of an application of the QAP is the placement of electronic components on printed circuit boards. In this case, the entries of the matrix $\mathbf{A}$ are associated with the number of connections between the pairs of components, while the entries of the matrix $\mathbf{B}$ correspond to the distances between the fixed positions on the board. The permutation $p = (p(1), p(2), \ldots, p(n))$ can then be interpreted as a configuration for the arrangement of components in the positions, where the element $p(i)$ indicates the number of the position to which the $i$-th component is assigned. Then, $z(p)$ can be thought of as the total weighted length (cost) of the connections between the components when all $n$ components are placed into the corresponding $n$ positions.

The QAP is also an important theoretical mathematical task; it has been proven to be NP-hard [4]. The quadratic assignment problem can be solved exactly by exact solution approaches (such as branch-and-bound algorithms [5], reformulation-linearization techniques [6,7], or semidefinite programming [8]), but only when the problem size is quite limited. For this reason, heuristic and metaheuristic algorithms (methods) are widely applied for the approximate solution of the QAP.

Historically, the local search (LS) (also known as neighbourhood search) principle-based algorithms were the first heuristic algorithms that were examined on the QAP in the early 1960s [9,10]. Later, several efficient improvements of the LS algorithms for the QAP were proposed (see, for example, [11,12,13]). It seems that the most efficient enhancement of LS algorithms is breakout local search [14,15].

The other neighbourhood search principle-based class of heuristics includes the simulated annealing [16] and tabu search algorithms [17,18,19,20,21]. In particular, the robust tabu search algorithm proposed by Taillard in 1991 [17] is still one of the most successful heuristic algorithms for the QAP in terms of efficacy, simplicity, and elegance.

The population-based heuristic algorithms operate on sets of solutions rather than on single solutions, and this fact seems to be of crucial importance for the QAP. Within this category, genetic algorithms have been shown to be among the most powerful heuristic algorithms for the QAP. This is especially true for the hybrid genetic (memetic) algorithms [22,23,24,25,26,27,28,29,30,31,32,33].

Estimation of distribution algorithms and differential evolution algorithms, which are also population-based, have been used to try to solve the QAP as well [34,35,36].

Several other types of heuristic algorithms (known as nature-/bio-inspired algorithms) have also been examined: the ant colony optimization [37], particle swarm optimization [38], artificial bee colony algorithm [39,40,41], hyper-heuristic method [42], electromagnetism-like optimization [43], cat swarm optimization [44], biogeography-based optimization [45], slime mould algorithm [46], firefly optimization algorithm [47,48], flower pollination algorithm [49], teaching–learning-based optimization [50], golden ball heuristic [51], chicken swarm optimization [52], optics-inspired optimization [53], antlion optimization algorithm [54,55], water-flow algorithm [56], crow search algorithm [57], artificial electric field algorithm [58], and Harris hawks optimization algorithm [59].

Also, some specific tailored heuristic approaches can be mentioned, e.g., [60,61,62,63,64,65,66].

The following are some of the recent articles on (meta)heuristic algorithms for the QAP: [27,28,32,57,59,67,68,69].

In [27], the authors suggest a new original principle for parent selection in genetic algorithms, which is inspired by natural biological evolutionary processes. The essence is that parents of one gender choose mates with certain characteristics favoured over others. This rule is quite simple, delicate, and can be utilized in many variants of genetic algorithms.

The authors of [28] propose a hybrid genetic algorithm that combines a so-called elite genetic algorithm and a tabu search procedure. The algorithm employs two sorts of elite crossover procedures (two-exchange mutation and tabu search-based crossover), which help balance exploitation and exploration during the search process.

In [32], the researchers investigate a special hybrid heuristic algorithm, which blends three well-known metaheuristics, namely the scatter search, critical event tabu search, and random key-based genetic algorithm. This scheme results in a highly competitive algorithm, which is especially efficient for large-scale problems, providing many well-known solutions for such problems.

Then, [57] introduces an accelerated system for solving the QAP that uses CUDA programming. The program is executed on both a CPU and a graphics processing unit (GPU): the task is divided between them, and the CPU program calls the program that runs on the GPU, where many instructions are run simultaneously.

In [59], a so-called Harris hawk optimization algorithm is proposed, which is a nature (bio)-inspired heuristic optimization algorithm. Such algorithms are also known as metaphor-based since they use various metaphors, rather than the direct, definite names. For example, the Harris hawk optimization algorithm has its source of inspiration in the hunting behaviour of cooperative birds (hawks). The behaviour is imitated by adopting the corresponding mathematical formalism. In addition, the algorithm uses the classical tabu search procedure to enhance the performance of the algorithm.

One of the most recent and effective algorithms is the so-called frequent pattern-based search, which, in fact, is based on the breakout local search and, in addition, exploits the information of the frequent patterns (fragments) of the solutions [67].

In [68], a greedy genetic-hybridization approach is presented. In the proposed algorithm, an initial population is obtained using the best solution of the greedy algorithm. Standard genetic operators are then applied to the population members. The resulting solution is used as an initial assignment of the greedy algorithm. The algorithm is also applicable to the optimal network design.

We also mention [69], which investigates the heuristic evolutionary optimization algorithm. The algorithm is based on the data-mining ideas. The algorithm efficiently mines the itemsets composed of frequent items and also exploits the pseudo-utility characteristic. The algorithm balances local and global searches.

In addition, one can point out some nature-inspired algorithms (including mathematics-inspired algorithms), which, although not straightforwardly linked to the QAP, still possess certain potentialities to be applied to this problem by employing some transformation/discretization mechanisms (transfer functions) (see, for example, [70,71,72,73,74,75]).

For more thorough discussions on the heuristic algorithms for the QAP, the reader is referred to [76,77,78].

In this work, our main ambition is to further improve the performance of the genetic-hierarchical algorithm presented in [29] by further capitalizing on the principle of hierarchy and, at the same time, universalizing and enhancing the perturbation (mutation) mechanism. On top of that, we try to counteract the most severe drawbacks of heuristic algorithms, namely the slow convergence speed and the stagnation of the search process. Overall, our genetic algorithm incorporates ideas heavily based on hierarchical principles; hence, it is called a “hierarchical algorithm”. (Notice that, generally, the concept of “hierarchy”, albeit in various other contexts, is not fundamentally new and is considered in a few works (see, for example, [79,80,81,82,83]).)

The scientific contribution of the paper is twofold:

- The enhanced two-level (two-layer) hybrid primary (master)-secondary (slave) genetic algorithm is proposed, in particular, in the context of the quadratic assignment problem;

- The augmented universalized multi-strategy perturbation (mutation process)—which is integrated within a multi-level (k-level) hierarchical iterated tabu search algorithm (HITS)—is implemented.

In particular, in the first stage, a population of outstanding quality (super population) is created by means of a cloned primary genetic algorithm, i.e., a secondary (slave) algorithm. Then, in the second phase, the created population is improved by the primary (master) genetic algorithm, which adopts the hierarchical iterated tabu search algorithm combined with the universal, versatile, and flexible perturbation procedure.

2. An Improved Hybrid Genetic-Hierarchical Algorithm for the QAP

2.1. Preliminaries

Before describing the principle of functioning of our improved genetic-hierarchical algorithm, we provide some (basic) definitions for the sake of more clarity.

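Let $p$ ($p \in \Pi_n$) be a permutation, and let $p(i)$ and $p(j)$ ($i, j = 1, \ldots, n$, $i \ne j$) be two elements of the permutation $p$. Then, we define $p^{ij}$ as follows: $p^{ij}(i) = p(j)$; $p^{ij}(j) = p(i)$; $p^{ij}(k) = p(k)$, $k \ne i, j$. A two-exchange neighbourhood function $N_2: \Pi_n \to 2^{\Pi_n}$ assigns to each $p \in \Pi_n$ a set of neighbouring solutions $N_2(p) \subseteq \Pi_n$, defined in the following way: $N_2(p) = \{p' : p' \in \Pi_n,\ \delta(p, p') = 2\}$, where $\delta(p, p')$ denotes the (Hamming) distance between the solutions $p$ and $p'$, i.e., the number of positions in which they differ.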

The solution $p^{ij}$ can be obtained from the existing solution $p$ by performing the pairwise interchange move, which swaps the $i$th and $j$th elements of the solution. Thus, $p^{ij} \in N_2(p)$.
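The definitions of $p^{ij}$, the Hamming distance, and the two-exchange neighbourhood can be sketched in Python as follows (0-based indices; the helper names are illustrative, not the authors'). Every neighbour differs from $p$ in exactly two positions, and there are $n(n-1)/2$ of them.

```python
from itertools import combinations
from typing import List

Perm = List[int]


def swap(p: Perm, i: int, j: int) -> Perm:
    """Return p^{ij}: a copy of p with the i-th and j-th elements interchanged."""
    q = list(p)
    q[i], q[j] = q[j], q[i]
    return q


def hamming(p: Perm, q: Perm) -> int:
    """Number of positions in which two permutations differ."""
    return sum(x != y for x, y in zip(p, q))


def two_exchange_neighbourhood(p: Perm) -> List[Perm]:
    """All n(n-1)/2 neighbours of p, i.e., the permutations at distance exactly 2."""
    return [swap(p, i, j) for i, j in combinations(range(len(p)), 2)]


print(len(two_exchange_neighbourhood([0, 1, 2, 3])))  # 6
```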

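Let $p$ and $p^{ij}$ be two neighbouring solutions. Then, the difference in the objective function values, $\Delta(p, i, j) = z(p^{ij}) - z(p)$, which is obtained when the two elements $p(i)$ and $p(j)$ of the current permutation have been interchanged, is calculated according to this formula (also see [84]):

$$\Delta(p,i,j) = (a_{ii} - a_{jj})(b_{p(j)p(j)} - b_{p(i)p(i)}) + (a_{ij} - a_{ji})(b_{p(j)p(i)} - b_{p(i)p(j)}) + \sum_{k=1,\, k \ne i,j}^{n} \left[ (a_{ki} - a_{kj})(b_{p(k)p(j)} - b_{p(k)p(i)}) + (a_{ik} - a_{jk})(b_{p(j)p(k)} - b_{p(i)p(k)}) \right]. \tag{2}$$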

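Furthermore, one can use memory (RAM) to store the values $\Delta(p, i, j)$ ($i = 1, \ldots, n-1$, $j = i+1, \ldots, n$), as proposed by Frieze et al. (1989) [84] and Taillard (1991) [17]. Note that, after the exchange of the elements $p(i)$ and $p(j)$, the values $\Delta(p, u, v)$ must be updated. Denoting by $\pi = p^{ij}$ the permutation obtained after the exchange, the new values are computed, for $u, v \notin \{i, j\}$, according to the following formula:

$$\Delta(\pi,u,v) = \Delta(p,u,v) + (a_{iu} - a_{iv} + a_{jv} - a_{ju})(b_{\pi(i)\pi(v)} - b_{\pi(i)\pi(u)} + b_{\pi(j)\pi(u)} - b_{\pi(j)\pi(v)}) + (a_{ui} - a_{vi} + a_{vj} - a_{uj})(b_{\pi(v)\pi(i)} - b_{\pi(u)\pi(i)} + b_{\pi(u)\pi(j)} - b_{\pi(v)\pi(j)}). \tag{3}$$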

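If $u = i$, or $u = j$, or $v = i$, or $v = j$, then Formula (2) must be applied instead. Thus, the complexity of recalculating all the values $\Delta(\pi, u, v)$ is $O(n^2)$: $O(n^2)$ pairs are updated in constant time each, and only $O(n)$ pairs require the $O(n)$ computation from scratch.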
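To make the difference formula and its incremental update concrete, here is a minimal Python sketch, assuming 0-based indices and integer matrices. It keeps the matrix of differences (called `xi` here) in memory, refreshes it after each swap (recomputing from scratch only the pairs that share an index with the swapped pair), and cross-checks every entry against a brute-force evaluation of $z$. All function names are hypothetical; the sketch checks the algebra rather than reproducing the authors' implementation.

```python
import random
from typing import List

Matrix = List[List[int]]


def objective(p: List[int], a: Matrix, b: Matrix) -> int:
    """Brute-force z(p), used only for checking."""
    n = len(p)
    return sum(a[i][j] * b[p[i]][p[j]] for i in range(n) for j in range(n))


def delta(p: List[int], a: Matrix, b: Matrix, i: int, j: int) -> int:
    """Delta(p, i, j) = z(p^{ij}) - z(p) computed in O(n) time (i != j)."""
    pi, pj = p[i], p[j]
    d = ((a[i][i] - a[j][j]) * (b[pj][pj] - b[pi][pi])
         + (a[i][j] - a[j][i]) * (b[pj][pi] - b[pi][pj]))
    for k, pk in enumerate(p):
        if k != i and k != j:
            d += ((a[k][i] - a[k][j]) * (b[pk][pj] - b[pk][pi])
                  + (a[i][k] - a[j][k]) * (b[pj][pk] - b[pi][pk]))
    return d


def delta_matrix(p: List[int], a: Matrix, b: Matrix) -> Matrix:
    """All Delta(p, u, v) with u < v, computed from scratch in O(n^3)."""
    n = len(p)
    xi = [[0] * n for _ in range(n)]
    for u in range(n - 1):
        for v in range(u + 1, n):
            xi[u][v] = delta(p, a, b, u, v)
    return xi


def swap_and_update(p: List[int], xi: Matrix, a: Matrix, b: Matrix, i: int, j: int) -> None:
    """Swap p[i] and p[j] in place and refresh xi in O(n^2) total time."""
    p[i], p[j] = p[j], p[i]  # from here on, p is the new permutation pi = p^{ij}
    n = len(p)
    for u in range(n - 1):
        for v in range(u + 1, n):
            if u in (i, j) or v in (i, j):
                xi[u][v] = delta(p, a, b, u, v)  # overlapping pair: O(n) recomputation
            else:  # disjoint pair: O(1) incremental update
                xi[u][v] += (
                    (a[i][u] - a[i][v] + a[j][v] - a[j][u])
                    * (b[p[i]][p[v]] - b[p[i]][p[u]] + b[p[j]][p[u]] - b[p[j]][p[v]])
                    + (a[u][i] - a[v][i] + a[v][j] - a[u][j])
                    * (b[p[v]][p[i]] - b[p[u]][p[i]] + b[p[u]][p[j]] - b[p[v]][p[j]])
                )


def check(n: int = 7, seed: int = 1, steps: int = 20) -> bool:
    """Random asymmetric instance: compare xi with brute force after every swap."""
    rng = random.Random(seed)
    a = [[rng.randint(0, 9) for _ in range(n)] for _ in range(n)]
    b = [[rng.randint(0, 9) for _ in range(n)] for _ in range(n)]
    p = list(range(n))
    rng.shuffle(p)
    xi = delta_matrix(p, a, b)
    for _ in range(steps):
        i, j = rng.sample(range(n), 2)
        swap_and_update(p, xi, a, b, i, j)
        z0 = objective(p, a, b)
        for u in range(n - 1):
            for v in range(u + 1, n):
                q = p[:]
                q[u], q[v] = q[v], q[u]
                if xi[u][v] != objective(q, a, b) - z0:
                    return False
    return True
```

Calling `check()` on a few random instances is a cheap way to catch sign or index errors in hand-derived update formulas.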

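Moreover, if the matrix $\mathbf{A}$ and/or the matrix $\mathbf{B}$ is symmetric, then the formulas become simpler. Assume that only the matrix $\mathbf{B}$ is symmetric. Then, one can transform the asymmetric matrix $\mathbf{A}$ into the symmetric matrix $\mathbf{A}'$ by summing up the corresponding entries of $\mathbf{A}$, i.e., $a'_{ij} = a_{ij} + a_{ji}$, $i = 1, \ldots, n$, $j = 1, \ldots, n$, $i \ne j$ [20,85]. The simplified formula is as follows:

$$\Delta(p,i,j) = (a_{ii} - a_{jj})(b_{p(j)p(j)} - b_{p(i)p(i)}) + \sum_{k=1,\, k \ne i,j}^{n} (a'_{ik} - a'_{jk})(b_{p(j)p(k)} - b_{p(i)p(k)}). \tag{4}$$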

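In a similar way, Formula (3) also becomes simpler [20,85]:

$$\Delta(\pi,u,v) = \Delta(p,u,v) + (a'_{iu} - a'_{iv} + a'_{jv} - a'_{ju})(b_{\pi(i)\pi(v)} - b_{\pi(i)\pi(u)} + b_{\pi(j)\pi(u)} - b_{\pi(j)\pi(v)}). \tag{5}$$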

If $\{u, v\} \cap \{i, j\} \ne \emptyset$, then Formula (4) must be applied.
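The symmetric-case simplification can be checked in the same way. The sketch below assumes that only $\mathbf{B}$ is symmetric, builds $\mathbf{A}'$ with $a'_{ij} = a_{ij} + a_{ji}$ ($i \ne j$, diagonal kept), and compares the simplified difference formula (4) and the simplified update (5) against brute-force differences. Helper names are illustrative only.

```python
import random
from typing import List

Matrix = List[List[int]]


def objective(p: List[int], a: Matrix, b: Matrix) -> int:
    n = len(p)
    return sum(a[i][j] * b[p[i]][p[j]] for i in range(n) for j in range(n))


def symmetrize(a: Matrix) -> Matrix:
    """A' with a'_ij = a_ij + a_ji for i != j; the diagonal is kept unchanged."""
    n = len(a)
    return [[a[i][j] + a[j][i] if i != j else a[i][i] for j in range(n)] for i in range(n)]


def delta_sym(p: List[int], a: Matrix, a_sym: Matrix, b: Matrix, i: int, j: int) -> int:
    """Simplified Delta(p, i, j); valid only when b is symmetric."""
    pi, pj = p[i], p[j]
    d = (a[i][i] - a[j][j]) * (b[pj][pj] - b[pi][pi])
    for k, pk in enumerate(p):
        if k != i and k != j:
            d += (a_sym[i][k] - a_sym[j][k]) * (b[pj][pk] - b[pi][pk])
    return d


def update_sym(old: int, p_new: List[int], a_sym: Matrix, b: Matrix,
               i: int, j: int, u: int, v: int) -> int:
    """New Delta(p^{ij}, u, v) from the old Delta(p, u, v), for {u, v} disjoint from {i, j}."""
    return old + (a_sym[i][u] - a_sym[i][v] + a_sym[j][v] - a_sym[j][u]) * (
        b[p_new[i]][p_new[v]] - b[p_new[i]][p_new[u]]
        + b[p_new[j]][p_new[u]] - b[p_new[j]][p_new[v]])


def check(n: int = 7, seed: int = 3) -> bool:
    rng = random.Random(seed)
    a = [[rng.randint(0, 9) for _ in range(n)] for _ in range(n)]  # asymmetric A
    b = [[0] * n for _ in range(n)]
    for r in range(n):
        for c in range(r, n):
            b[r][c] = b[c][r] = rng.randint(0, 9)  # symmetric B
    a_sym = symmetrize(a)
    p = list(range(n))
    rng.shuffle(p)

    def brute(perm: List[int], u: int, v: int) -> int:
        q = perm[:]
        q[u], q[v] = q[v], q[u]
        return objective(q, a, b) - objective(perm, a, b)

    pairs = [(u, v) for u in range(n - 1) for v in range(u + 1, n)]
    if any(delta_sym(p, a, a_sym, b, u, v) != brute(p, u, v) for u, v in pairs):
        return False
    i, j = 1, 4  # perform one exchange, then test the simplified update
    p_new = p[:]
    p_new[i], p_new[j] = p_new[j], p_new[i]
    return all(update_sym(brute(p, u, v), p_new, a_sym, b, i, j, u, v) == brute(p_new, u, v)
               for u, v in pairs if not {u, v} & {i, j})
```

Compared with the general case, each update needs one product instead of two, which is where the speed-up comes from.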

An additional time-saving trick can be used. Suppose that we dispose of three-dimensional matrices and . Also, let , and let , , …, n. As a result, we can apply the following effective formula for calculation of the difference in the values of the objective function () [20,29]:

Similarly, the formula for the recalculation of the difference in the objective function values, , also becomes much more compact and faster [20,29]:

2.2. (General) Structure of the Algorithm

Essentially, our genetic algorithm consists of two main parts: the primary (master) genetic algorithm and the secondary (slave) genetic algorithm, which is de facto operationalized as a clone of the primary (master) algorithm. Each of them, in turn, incorporates the hierarchical iterated tabu search algorithm integrated with the perturbation (mutation) procedure. The principal idea of the algorithm is similar to that of the hybrid genetic algorithm [29], where a global explorative (diversified) search cooperates with a local exploitative (intensified) search. In particular, the exploitative search is performed by the hierarchical iterated tabu search procedure (see Section 2.7), and diversification is ensured through the enhanced perturbation (mutation) mechanism (see Section 2.7.2).

In our genetic algorithm, the solution (permutation) elements are directly linked to the genes of the individuals’ chromosomes, so no encoding is required. The objective function value is associated with the fitness of individuals. The population of individuals, $P$, has a three-tuple list-like representation, i.e., it is organized as a structured list of size $PS$, where $PS$ is the population size:

$$P = \{\langle p_1, z_1, \Xi_1 \rangle, \langle p_2, z_2, \Xi_2 \rangle, \ldots, \langle p_{PS}, z_{PS}, \Xi_{PS} \rangle\}.$$

Every member of the population (i.e., an element of the list) is a triplet of the form $\langle p_i, z_i, \Xi_i \rangle$, where $p_i$ ($i = 1, \ldots, PS$) is the $i$th solution of the population, $z_i = z(p_i)$ denotes the value of the objective function corresponding to $p_i$, and $\Xi_i$ is the $i$th matrix that contains the pre-computed differences in the objective function values (i.e., $\Xi_i(k, l) = \Delta(p_i, k, l)$, $k = 1, \ldots, n-1$, $l = k+1, \ldots, n$). Storing the differences directly in RAM speeds up the genetic algorithm because the difference matrix no longer needs to be re-computed from scratch every time. So, even if the construction of the initial population requires $O(n^3)$ time, the time complexity of the remaining genetic algorithm is nearly proportional to $n^2$. (Notice that the memory complexity of our algorithm is proportional to the product of the initial population size factor (coefficient), the population size $PS$, and $n^2$ (see Section 2.3). This raises no issues for modern computers.)

The basic components of the genetic algorithm are as follows: (1) construction of initial population; (2) parent selection; (3) crossover procedure; (4) improvement of the offspring by the hierarchical iterated tabu search algorithm; (5) population replacement.

The top-level pseudocode of the genetic algorithm is provided in Algorithm 1.

Remark. If there is no population change, then the current generation is recognized as an idle generation. If the number of consecutive idle generations exceeds the predefined limit, $L_{idle\_gen}$, then the genetic algorithm is restarted from a new population.

| Algorithm 1 Top-level pseudocode of the hybrid genetic-hierarchical algorithm |

```
Hybrid_Genetic_Hierarchical_Algorithm;
// input: n (problem size), A, B (data matrices)
// output: p✸ (the best found solution)
// parameters: PS (population size), G (total number of generations), DT (distance threshold),
//   Lidle_gen (idle generations limit), CrossVar (crossover operator variant),
//   PerturbVar (perturbation/mutation variant)
begin
  create the initial population P of size PS;
  p✸ ← GetBestMember(P); // initialization of the best so far solution
  for i ← 1 to G do begin // main loop
    sort the members of the population P in the ascending order of the values of the objective function;
    select parents p′, p″ ∈ P for the crossover procedure;
    perform the crossover operator on the solution-parents p′, p″ and produce the offspring p‴;
    apply the improvement procedure Hierarchical_Iterated_Tabu_Search to the offspring p‴,
      get the (improved) offspring p✩;
    if z(p✩) < z(p✸) then p✸ ← p✩; // the best found solution is memorized
    if idle generations detected then restart from a new population
    else obtain the new population P from the union of the existing parents' population
      and the offspring, P ∪ {p✩} (such that |P| = PS)
  endfor;
  return p✸
end.
```

Notes. 1. The subroutine GetBestMember returns the best solution of the particular population. 2. The crossover operator is performed considering the crossover variant parameter CrossVar. 3. The hierarchical iterated tabu search procedure is executed with regard to the perturbation (mutation) variant parameter PerturbVar. 4. The population management is organized bearing in mind the distance threshold value DT.

2.3. Initial Population Creation

The genetic algorithm starts with a pre-initialization stage, i.e., the construction of the pre-initial (“primordial”) population, whose size is the population size, $PS$, multiplied by a predefined parameter (coefficient), the initial population size factor, that controls the size of the pre-initial population. Every solution of the “primordial” population is created by a secondary (slave) genetic algorithm (a clone of the master genetic algorithm) in such a way that the best-evolved solution of the slave algorithm becomes the current incumbent solution in the initial population. The idea is simply to generate a starting population of superior quality.

The slave genetic algorithm, in turn, uses the greedy randomized adaptive search procedure (GRASP) [86] to create its starting solutions. These solutions are created one at a time, and every solution is unique due to the random nature of GRASP. In addition, every solution created by GRASP is improved by the hierarchical iterated tabu search algorithm. The process continues until the required number of solutions has been constructed and improved.

After the pre-initial population is improved, it is truncated (culled): the worst members of the primordial population are discarded, and only the best $PS$ members survive for future generations. This approach is similar to that proposed in [87,88].

There is a special trick. If the improved solution p✩ is better than the current best-found solution, then the improved solution replaces the best-found solution. Otherwise, it is checked whether the minimum mutual distance between the new solution p✩ and the current population solutions is greater than the predetermined distance threshold, DT. If this is the case, then the new solution joins the population. Otherwise, the new solution is discarded and a random solution is included instead.

Remark. The distance threshold DT is connected to the size of the problem, $n$: it is set proportionally to $n$ by means of a distance-threshold factor, which is up to the user’s choice.

2.4. Parent Selection

In our genetic algorithm, the parents are selected using a rank-based selection rule [89].

2.5. Crossover Operator

In our algorithm, the crossover operator is applied to two selected parents to produce one offspring, which is then improved by the HITS algorithm. The crossover operator is applied at every generation; that is, the crossover probability is equal to 1. We have two types of permutation-oriented crossover operators: cohesive crossover [22] and universal crossover [90]. The first operator is problem-dependent and uses problem-specific information, while the second one is of a purely general nature. The parameter CrossVar decides which crossover operator is used. The computational time complexity of the crossover operators is low, so they do not contribute much to the overall time budget of the genetic algorithm. More details on these crossover operators can be found in [22,90].

2.6. Population Replacement

We have implemented a modified variant of the well-known population update rule [91]. Our rule respects not only the quality of the solutions but also the distances between them. The idea is to preserve the minimum distance threshold, DT, between population members. (This rule is also used for the initial population construction (see Section 2.3).) If the minimum (mutual) distance between the newly obtained offspring and the individuals of the population is less than DT, then the offspring is omitted, except when the offspring is better than the best individual of the population. Otherwise, the offspring enters the current population, but only if it is better than the worst population member, which is then removed. If the new offspring is better than the best individual of the population, then the offspring replaces the best individual. This replacement strategy ensures that only individuals that are diverse enough survive for further generations.
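The replacement rule described above can be sketched as follows. This is an illustration under our own assumptions: an individual is a `(permutation, objective value)` pair (the stored difference matrix is omitted), distances are Hamming distances, and the hypothetical function `replace` mutates the population in place and reports whether the offspring was admitted.

```python
from typing import List, Tuple

Individual = Tuple[List[int], int]  # (permutation, objective value)


def hamming(p: List[int], q: List[int]) -> int:
    return sum(x != y for x, y in zip(p, q))


def replace(population: List[Individual], child: Individual, dt: int) -> bool:
    """Distance-aware replacement; returns True if the child enters the population."""
    p_child, z_child = child
    best = min(range(len(population)), key=lambda k: population[k][1])
    worst = max(range(len(population)), key=lambda k: population[k][1])
    if z_child < population[best][1]:
        population[best] = child  # better than the best: replaces the best, no distance check
        return True
    d_min = min(hamming(p_child, p) for p, _ in population)
    if d_min < dt:
        return False  # too close to an existing member: omitted
    if z_child < population[worst][1]:
        population[worst] = child  # diverse enough and better than the worst
        return True
    return False
```

Note that the best-so-far solution is memorized separately in Algorithm 1, so overwriting the best population member here does not lose it.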

2.7. Improvement of Solutions—Hierarchical Iterated Tabu Search

Every produced offspring is subject to improvement by the hierarchical iterated tabu search, which can also be thought of as a multi-layered tabu search procedure where the basic idea is the cyclic (multiple) reuse of the tabu search algorithm. A similar idea of the multi-reuse of iterative/local searches was utilized in some other algorithms (like the hierarchical iterated local search [79], iterated tabu search [92], and multilevel tabu search [93]).

The $k$-level ($k$-layer) hierarchical iterated tabu search algorithm consists of three main ingredients: (1) call of the $(k-1)$-level hierarchical iterated tabu search procedure; (2) selection (acceptance) of the solution for perturbation; (3) perturbation of the selected solution. The “edge case” is $k = 0$, which means the self-contained and autonomous TS algorithm. In most cases, small values of $k$ are enough.

The perturbed solution serves as an input for the $(k-1)$-level procedure, which returns an optimized solution, and so on. The solution acceptance rule is very simple: we always accept the most recently found improved solution.

The overall iterative process continues until a predefined number of iterations has been performed (see Algorithm 2). The best solution found in the course of this process is regarded as the final solution of HITS. The resulting time complexity of HITS is proportional to $n^2$, although the proportionality coefficient may be quite large. The overall complexity of the IHGHA algorithm is determined by $n^2$ together with the initial population size factor, the population size, the number of generations, the number of iterations of the hierarchical iterated tabu search, and the number of iterations of the self-contained tabu search procedure. (The concrete values of these parameters can be found in Table 1 in Section 3.1.)

| Algorithm 2 Pseudocode of the multi-level (k-level) hierarchical iterated tabu search algorithm |

```
Hierarchical_Iterated_Tabu_Search;
// input: p (current solution)
// output: p✩ (the best found solution)
// parameters: k (current level, k > 0), Q⟨k⟩, Q⟨k−1⟩, …, Q⟨0⟩ (numbers of iterations)
begin
  p✩ ← p;
  for q⟨k⟩ ← 1 to Q⟨k⟩ do begin
    apply the (k − 1)-level hierarchical iterated tabu search algorithm to p and get p∇;
    if z(p∇) < z(p✩) then p✩ ← p∇; // the best found solution is memorized
    if q⟨k⟩ < Q⟨k⟩ then begin
      p ← Candidate_Acceptance(p∇, p✩);
      apply the perturbation process to p
    endif
  endfor
end.
```

Note. The tabu search procedure (see Algorithm 3) is in the role of the 0-level (i.e., the self-contained) tabu search algorithm.
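The recursion in Algorithm 2 can be sketched in Python as follows. For a self-contained demo, the 0-level procedure is a simple first-improvement pairwise-interchange descent standing in for the tabu search of Algorithm 3, and the perturbation is two random swaps; both stand-ins, as well as the names `hits` and `demo`, are our assumptions rather than the authors' code.

```python
import random
from typing import Callable, List

Perm = List[int]


def hits(p: Perm, k: int, iters: List[int], z: Callable[[Perm], int],
         ts: Callable[[Perm], Perm], perturb: Callable[[Perm], Perm],
         accept_best: bool = False) -> Perm:
    """k-level hierarchical iterated search in the spirit of Algorithm 2.

    iters[k] plays the role of Q<k>; level 0 delegates to `ts`, which stands
    for the self-contained tabu search (its own iteration budget lives inside it).
    """
    if k == 0:
        return ts(p)
    best = list(p)
    for it in range(1, iters[k] + 1):
        cand = hits(p, k - 1, iters, z, ts, perturb, accept_best)
        if z(cand) < z(best):
            best = cand  # the best found solution is memorized
        if it < iters[k]:
            # candidate acceptance: last improved solution (default) or best so far
            p = perturb(best if accept_best else cand)
    return best


def demo(n: int = 8, seed: int = 0):
    rng = random.Random(seed)
    a = [[rng.randint(0, 9) for _ in range(n)] for _ in range(n)]
    b = [[rng.randint(0, 9) for _ in range(n)] for _ in range(n)]

    def z(p: Perm) -> int:
        return sum(a[i][j] * b[p[i]][p[j]] for i in range(n) for j in range(n))

    def descent(p: Perm) -> Perm:
        """Stand-in for tabu search: first-improvement pairwise-interchange descent."""
        p, zp, improved = list(p), z(p), True
        while improved:
            improved = False
            for i in range(n - 1):
                for j in range(i + 1, n):
                    p[i], p[j] = p[j], p[i]
                    zq = z(p)
                    if zq < zp:
                        zp, improved = zq, True
                    else:
                        p[i], p[j] = p[j], p[i]  # undo a non-improving swap
        return p

    def perturb(p: Perm) -> Perm:
        """Two random pairwise interchanges on a copy of p."""
        q = list(p)
        for _ in range(2):
            i, j = rng.sample(range(n), 2)
            q[i], q[j] = q[j], q[i]
        return q

    p0 = list(range(n))
    rng.shuffle(p0)
    result = hits(p0, 2, [0, 3, 3], z, descent, perturb)
    return z(p0), z(result), result
```

With `k = 2` and `iters = [0, 3, 3]`, the stand-in descent is called 3 × 3 = 9 times, which illustrates how the effort multiplies across levels.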

| Algorithm 3 Pseudocode of the tabu search algorithm |

```
Tabu_Search;
// input: n (problem size), p (current solution), Ξ (difference matrix)
// output: p• (the best found solution, along with the corresponding difference matrix)
// parameters: τ (total number of tabu search iterations), h (tabu tenure), α (randomization coefficient),
//   Lidle_iter (idle iterations limit), HashSize (maximum size of the hash table)
begin
  clear tabu list TabuList and hash table HashTable;
  p• ← p; q ← 1; q′ ← 1; secondary_memory_index ← 0; improved ← FALSE;
  while (q ≤ τ) or (improved = TRUE) do begin // main cycle
    Δ′min ← ∞; Δ″min ← ∞; v′ ← 1; w′ ← 1;
    for i ← 1 to n − 1 do
      for j ← i + 1 to n do begin // n(n − 1)/2 neighbours of p are scanned
        Δ ← Ξ(i, j);
        forbidden ← iif(((TabuList(i, j) ≥ q) or (HashTable((z(p) + Δ) mod HashSize) = TRUE))
                        and (random() ≥ α), TRUE, FALSE);
        aspired ← iif(z(p) + Δ < z(p•), TRUE, FALSE);
        if ((Δ < Δ′min) and (forbidden = FALSE)) or (aspired = TRUE) then begin
          if Δ < Δ′min then begin
            Δ″min ← Δ′min; v″ ← v′; w″ ← w′;
            Δ′min ← Δ; v′ ← i; w′ ← j
          endif
          else if Δ < Δ″min then begin
            Δ″min ← Δ; v″ ← i; w″ ← j
          endif
        endif
      endfor;
    if Δ″min < ∞ then begin // archiving the second solution: p, Ξ, v″, w″
      secondary_memory_index ← secondary_memory_index + 1;
      SecondaryMemory(secondary_memory_index) ← p, Ξ, v″, w″
    endif;
    if Δ′min < ∞ then begin // replacement of the current solution and recalculation of the values of Ξ
      swap the elements p(v′) and p(w′);
      recalculate the values of the matrix Ξ;
      if z(p) < z(p•) then begin p• ← p; q′ ← q endif; // the best so far solution is memorized
      TabuList(v′, w′) ← q + h; // the elements p(v′), p(w′) become tabu
      HashTable(z(p) mod HashSize) ← TRUE // z(p) already reflects the performed move
    endif;
    improved ← iif(Δ′min < 0, TRUE, FALSE);
    if (improved = FALSE) and (q − q′ > Lidle_iter) and (q < τ − Lidle_iter) then begin
      // retrieving a solution from the secondary memory
      random_access_index ← random(β × secondary_memory_index, secondary_memory_index);
      p, Ξ, v″, w″ ← SecondaryMemory(random_access_index);
      swap the elements p(v″) and p(w″);
      recalculate the values of the matrix Ξ;
      clear tabu list TabuList;
      TabuList(v″, w″) ← q + h; // the elements p(v″), p(w″) become tabu
      q′ ← q
    endif;
    q ← q + 1
  endwhile
end.
```

Notes. 1. The immediate-if function iif(x, y1, y2) returns y1 if x = TRUE; otherwise, it returns y2. 2. The function random() returns a pseudo-random number uniformly distributed in [0, 1). 3. The function random(x1, x2) returns a pseudo-random number between x1 and x2. 4. The values of the matrix Ξ are recalculated according to Formula (7). 5. β denotes the random access parameter.

2.7.1. Tabu Search Algorithm

The 1-level HITS algorithm (i.e., the ITS algorithm) uses a self-contained tabu search (TS) procedure. It is this procedure that is wholly responsible for the direct improvement of a particular solution and plays the role of intensification in the search process. Briefly speaking, the TS procedure analyses the whole neighbourhood of the incumbent solution and accepts the non-tabu (i.e., non-prohibited) move that most improves (or least degrades) the objective function. To avoid cycling, returning to recently visited solutions is forbidden for some time (the tabu tenure).

Going into more detail, the tabu list (list of prohibitions), TabuList, is operationalized as a matrix of size $n \times n$, where $n$ is the problem size. Suppose that, in the course of the algorithm, the $i$-th and $j$-th elements of the permutation have been interchanged. Then, the tabu list (matrix) entry TabuList$(i, j)$ memorizes the current iteration number, $q$ ($1 \le q \le \tau$), plus the tabu tenure, $h$ ($h > 0$), i.e., the number of the future iteration starting at which the corresponding elements may again be interchanged. (Thus, TabuList$(i, j) = q + h$. Initially, all the values of TabuList are equal to zero. The value of $h$ depends on the problem size, $n$.) We also use the hash table, HashTable. So, the formal tabu criterion can be defined as follows:

$$\mathit{tabu}(p, i, j) = \begin{cases} \mathrm{TRUE}, & \text{if } \mathit{TabuList}(i, j) \ge q \ \lor\ \mathit{HashTable}\big((z(p) + \Delta(p, i, j)) \bmod \mathit{HashSize}\big) = \mathrm{TRUE}, \\ \mathrm{FALSE}, & \text{otherwise}, \end{cases}$$

where $p$ is the current solution, $i$ and $j$ are the element indices ($i = 1, \ldots, n-1$, $j = i+1, \ldots, n$), and HashSize denotes the hash table size. It should be noted that, in our algorithm, the tabu status is disregarded at random moments with a (very) small probability. This strategy increases the number of non-prohibited solutions and does not suppress the search directions too much. In this case, the modified tabu criterion is defined in the following way:

$$\mathit{forbidden}(p, i, j) = \mathit{tabu}(p, i, j) \land (\varsigma \ge \alpha),$$

where $\varsigma$ is a uniform random real number within the interval $[0, 1)$, and $\alpha$ denotes the (small) randomization parameter.

The aspiration criterion is used alongside the tabu criterion: the tabu status is ignored if a predefined condition is satisfied, namely, if the currently attained solution is better than the best solution found so far. The formalized aspiration criterion is defined according to the following equation: $\mathit{aspired}(p, i, j) = \mathrm{TRUE}$ if $z(p) + \Delta(p, i, j) < z^{\bullet}$, and $\mathrm{FALSE}$ otherwise, where $z^{\bullet}$ denotes the best value of the objective function found so far.

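With the tabu criterion and the aspiration criterion defined, the move (acceptance) criterion is defined as follows: $\mathit{accepted}(p, i, j) = \big(\Delta(p, i, j) < \Delta_{\min} \land \lnot\, \mathit{forbidden}(p, i, j)\big) \lor \mathit{aspired}(p, i, j)$, where $\Delta_{\min}$ (denoted Δ′min in Algorithm 3) is the minimum difference in the objective function values found so far at the current iteration. (At the beginning of each iteration, $\Delta_{\min} = \infty$.)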

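The best move is determined by the following formula: $(v', w') = \arg\min_{(i, j):\ \mathit{accepted}(p, i, j)} \Delta(p, i, j)$, where $p$ is the current solution, $i = 1, \ldots, n-1$, $j = i+1, \ldots, n$.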
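The interplay of the tabu, aspiration, and move criteria within a single iteration can be sketched as follows. This minimal sketch assumes 0-based indices, a precomputed difference matrix `xi`, and a Python `set` of hashed objective values in place of the boolean hash table; `select_move` is a hypothetical name, and the second-best bookkeeping for the secondary memory is omitted.

```python
import math
import random
from typing import List, Optional, Set, Tuple


def select_move(n: int, z_p: int, xi: List[List[int]], tabu_list: List[List[int]],
                hash_table: Set[int], q: int, best_z: int, alpha: float,
                hash_size: int, rng: random.Random) -> Optional[Tuple[int, int, int]]:
    """Return (v', w', Delta'_min) for the current iteration, or None if no move qualifies."""
    best, d_min = None, math.inf
    for i in range(n - 1):
        for j in range(i + 1, n):
            d = xi[i][j]
            tabu = tabu_list[i][j] >= q or (z_p + d) % hash_size in hash_table
            forbidden = tabu and rng.random() >= alpha  # tabu status lifted with probability alpha
            aspired = z_p + d < best_z  # aspiration: would beat the best value found so far
            if d < d_min and (not forbidden or aspired):  # move criterion
                best, d_min = (i, j), d
    return None if best is None else (best[0], best[1], d_min)


xi = [[0, -5, -3], [0, 0, 2], [0, 0, 0]]
tabu = [[0, 10, 0], [0, 0, 0], [0, 0, 0]]  # pair (0, 1) is tabu until iteration 10
print(select_move(3, 100, xi, tabu, set(), 5, 90, 0.0, 1000, random.Random(0)))  # (0, 2, -3)
```

In the usage line, the most improving pair (0, 1) is tabu and not aspired, so the next best admissible pair is chosen; raising `best_z` to 96 would make (0, 1) aspired and selected.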

Furthermore, our TS algorithm utilizes an additional memory, a secondary memory (a similar approach is used in [94]). The purpose of this memory is to archive high-quality solutions that were evaluated as very good but were not selected. Thus, if the best solution stays unchanged for more than $L_{idle\_iter}$ iterations, then the entire tabu list is wiped out and the search is reset to one of the “second” solutions in the secondary memory. (Here, the idle iterations limit $L_{idle\_iter}$ is set relative to the number of iterations of the TS algorithm, $\tau$, by means of an idle iterations limit factor.)

The pseudo-code of the tabu search algorithm is shown in Algorithm 3.

Remark 1.

The TS procedure is finished as soon as the total number of TS iterations, $\tau$, has been executed.

Remark 2.

After finishing the tabu search procedure, the obtained solution is subjected to perturbation, which is described in the next section.

2.7.2. Perturbation (Mutation) Process

Roughly speaking, a perturbation is a stochastic (smaller or larger) move, or a sequence of consecutive moves, within a particular neighbourhood. Well-known examples of perturbations include classical random swap moves (shuffling) and complex destruction(-reconstruction) moves.

It is strongly believed that the perturbation component of iterative heuristic algorithms has a very significant impact on their overall efficacy. This component is one of the crucial factors in revealing new, undiscovered regions and directions in the solution space during the search process, and this fact is acknowledged in several important research works [95,96,97,98,99,100,101,102,103,104,105,106]. For example, in [96], it is ascertained that “perturbation is an effective strategy used to jump out of a local optimum and to search a new promising region”. In [97], it is confirmed that perturbations can really help escape traps in (deep) local optima, which can occur in tabu search algorithms. Combinations of several perturbation schemes have also been tried [99,100,101,102,103,104]. For example, the authors of [102,103] use both weak and strong perturbations because they observed that weak perturbations alone are not sufficient for the algorithms to continue their search successfully. Therefore, they propose employing strong perturbations to jump out of local optima traps and bring the search to unexplored, distant regions of the search space. In [105], the authors use another term (“shake procedure”) for the perturbation procedure, but the essence remains the same.

The perturbation is characterized by a strength (mutation rate) parameter, which may be static or dynamic and can be defined as the number of moves performed during the perturbation process. This parameter is, in fact, the most influential quantitative factor in the perturbation process, and the perturbation strength greatly affects the search progress. The larger the perturbation strength, the larger the number of elements affected by the perturbation (the more disrupted the solution becomes, and the larger the distance between the unaffected solution and the perturbed one), and vice versa. However, a perturbation strength that is too large turns the search into a random-restart-like search, whereas a perturbation strength that is too small will not be able to jump out of local optima or will simply fall into a cyclic process [106].

Our perturbation process is, therefore, adaptive, in the sense that the perturbation strength depends on the size of the particular problem instance: instances of different sizes receive different perturbation strengths. To adapt the perturbation strength to the instance size n, the actual perturbation strength is computed from n and the perturbation strength factor ω.

Formally, the perturbation procedure can be described as an operator with two arguments, the incumbent solution and the perturbation strength, which transforms the incumbent solution into a new solution belonging to the neighbourhood of the incumbent defined by that strength.
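A perturbation operator of this kind can be sketched in Python as a sequence of random pairwise interchanges, with a Hamming-distance check of how far the result moves from the incumbent. This is an illustrative sketch, not the authors' C# code: the mapping from ω to a number of moves (`perturbation_strength`, here ⌊ω·n⌋ clamped to [1, n]) is an assumption, and all function names are hypothetical.

```python
import math
import random
from typing import List


def perturbation_strength(n: int, omega: float) -> int:
    """Number of random interchanges for an instance of size n.

    ASSUMPTION (illustrative only): strength = floor(omega * n), clamped to
    [1, n]; the paper's exact formula is not reproduced here.
    """
    return max(1, min(n, math.floor(omega * n)))


def uniform_random_perturbation(p: List[int], strength: int,
                                rng: random.Random) -> List[int]:
    """Return a copy of p after `strength` random pairwise interchanges."""
    q = list(p)
    for _ in range(strength):
        i, j = rng.sample(range(len(q)), 2)  # two distinct positions
        q[i], q[j] = q[j], q[i]
    return q


def hamming_distance(p: List[int], q: List[int]) -> int:
    """Number of positions at which two permutations differ."""
    return sum(a != b for a, b in zip(p, q))


rng = random.Random(42)
p = list(range(20))
k = perturbation_strength(len(p), 0.25)  # 5 moves under the assumed rule
q = uniform_random_perturbation(p, k, rng)
print(k, hamming_distance(p, q))         # distance is at most 2 * k
```

Because each interchange changes exactly two positions, the distance between the incumbent and the perturbed permutation can never exceed twice the number of moves, which is why the move count is a natural measure of strength.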

In our genetic algorithm, the perturbation process is integrated within the hierarchical iterated tabu search algorithm. Specifically, the perturbation is applied to a chosen (improved) solution, which is selected according to a predetermined candidate solution-acceptance rule. (In our algorithm, we used two rules: (1) accept every new, i.e., the last, improved solution; (2) accept the best-so-far solution.) The perturbed solution serves as the input for the tabu search procedure.
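To make the two acceptance rules concrete, here is a minimal Python sketch of a perturb-then-improve loop. It is not the hierarchical iterated tabu search itself: a plain first-improvement 2-swap descent stands in for tabu search, the number of random interchanges is fixed, and the names (`iterated_search`, `rule="last"` / `rule="best"`) are hypothetical.

```python
import random
from typing import List, Tuple

Matrix = List[List[int]]


def qap_cost(a: Matrix, b: Matrix, p: List[int]) -> int:
    """QAP objective: sum over i, j of a[i][j] * b[p[i]][p[j]]."""
    n = len(p)
    return sum(a[i][j] * b[p[i]][p[j]] for i in range(n) for j in range(n))


def swap_descent(a: Matrix, b: Matrix, p: List[int]) -> Tuple[List[int], int]:
    """Stand-in for tabu search: first-improvement 2-swap descent."""
    p = list(p)
    cost = qap_cost(a, b, p)
    improved = True
    while improved:
        improved = False
        for i in range(len(p) - 1):
            for j in range(i + 1, len(p)):
                p[i], p[j] = p[j], p[i]
                c = qap_cost(a, b, p)
                if c < cost:
                    cost, improved = c, True   # keep the improving swap
                else:
                    p[i], p[j] = p[j], p[i]    # undo it
    return p, cost


def iterated_search(a: Matrix, b: Matrix, p: List[int], iterations: int,
                    rule: str, rng: random.Random,
                    moves: int = 2) -> Tuple[List[int], int]:
    """Perturb-and-improve loop with a candidate-acceptance rule.

    rule == "last": perturb the most recent improved solution.
    rule == "best": perturb the best-so-far solution.
    """
    if rule not in ("last", "best"):
        raise ValueError("rule must be 'last' or 'best'")
    current, cost = swap_descent(a, b, p)
    best, best_cost = list(current), cost
    for _ in range(iterations):
        q = list(current if rule == "last" else best)
        for _ in range(moves):                 # random pairwise interchanges
            i, j = rng.sample(range(len(q)), 2)
            q[i], q[j] = q[j], q[i]
        current, cost = swap_descent(a, b, q)  # new (last) improved solution
        if cost < best_cost:
            best, best_cost = list(current), cost
    return best, best_cost
```

The "last" rule lets the search drift away from the best solution (more diversification), while the "best" rule keeps restarting from the elite solution (more intensification).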

We use two main kinds of perturbation procedures: (1) random perturbation and (2) quasi-greedy (greedy-random) perturbation. In turn, two types of random perturbation are proposed: (i) uniform (steady) random perturbation and (ii) Lévy perturbation. In both cases, the perturbation is based on random pairwise interchanges of the elements of the permutation. In the first case, the perturbation rate factor ω is fixed (immutable). In the second case, ω follows the Lévy distribution law [107,108]: at each iteration t, the current value of ω is updated by means of a Lévy-distributed step s, and a wrap function (operator) “wraps” the resulting number back into the admissible interval. The step size s can be evaluated using the following formula:

$$s = \frac{u}{|v|^{1/\beta}},$$

where u and v are zero-mean Gaussian random variables with variances $\sigma_u^2$ and $\sigma_v^2$, respectively. Here,

$$\sigma_u = \left[\frac{\Gamma(1+\beta)\,\sin(\pi\beta/2)}{\Gamma\!\left(\frac{1+\beta}{2}\right)\beta\,2^{(\beta-1)/2}}\right]^{1/\beta},$$

where β is a parameter [109,110] and Γ is the Gamma function, which can be computed in practice as described in [111]. Note that all random perturbations are quite aggressive and introduce a considerable amount of diversification into the search process.
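The step-size formula above is Mantegna's algorithm for Lévy-stable steps. The Python sketch below implements it and uses it to drive ω. The values of β, the scaling factor `alpha`, the interval bounds, the update rule in `next_omega`, and the modular semantics of `wrap` are all assumptions for illustration, not the settings used in the paper; σ_v is taken as 1, as is usual for Mantegna's algorithm.

```python
import math
import random


def mantegna_sigma_u(beta: float) -> float:
    """Standard deviation of u in Mantegna's algorithm (sigma_v = 1)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta: float, rng: random.Random) -> float:
    """Levy step s = u / |v|**(1/beta), u ~ N(0, sigma_u^2), v ~ N(0, 1)."""
    u = rng.gauss(0.0, mantegna_sigma_u(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def wrap(x: float, lo: float, hi: float) -> float:
    """ASSUMED semantics: wrap x around modularly into [lo, hi)."""
    return lo + (x - lo) % (hi - lo)


def next_omega(omega: float, alpha: float, beta: float, rng: random.Random,
               lo: float, hi: float) -> float:
    """HYPOTHETICAL update: omega <- wrap(omega + alpha * s, lo, hi)."""
    return wrap(omega + alpha * levy_step(beta, rng), lo, hi)


rng = random.Random(2024)
omega = 0.3
for t in range(5):
    omega = next_omega(omega, alpha=0.05, beta=1.5, rng=rng, lo=0.1, hi=0.9)
    print(t, round(omega, 4))
```

Most Lévy steps are small, so ω usually changes little, but the heavy tail occasionally produces a large jump, which is what makes this perturbation variant aggressive.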

We have also designed three variants of the quasi-greedy (greedy-random) perturbation. All of them are based on a “softly” (partially) randomized tabu search, in which the tabu search process is intertwined with random moves (the terms “tabu search-driven perturbation” and “tabu shaking” [98] can also be used). The tabu-based perturbation is very similar to the ordinary tabu search procedure. Two solutions, the best non-tabu solution and the second-best non-tabu solution, are found by exploring the whole neighbourhood of the current solution. Then exactly one of these solutions is accepted, with the choice governed by a probability called the switch probability, whose value is set by the user. The three variants (quasi-greedy perturbations 1, 2, and 3) differ in the setting of the switch probability. Notice that the tabu-based perturbations are rather weak and provide only a small amount of diversification. Still, they are quite promising because they take solution quality into account and avoid excessive deterioration of the solutions.
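A single step of such a tabu-based quasi-greedy perturbation could look as follows. This Python sketch assumes a 2-swap neighbourhood, a tabu list that forbids re-swapping the same pair of positions for `tenure` steps, and the convention that the second-best move is taken with probability `p_switch` (the best move otherwise); these choices and all names are illustrative assumptions. The full objective is recomputed for clarity, rather than using the usual O(n) swap delta.

```python
import random
from typing import Dict, List, Tuple

Matrix = List[List[int]]


def qap_cost(a: Matrix, b: Matrix, p: List[int]) -> int:
    """QAP objective: sum over i, j of a[i][j] * b[p[i]][p[j]]."""
    n = len(p)
    return sum(a[i][j] * b[p[i]][p[j]] for i in range(n) for j in range(n))


def quasi_greedy_step(a: Matrix, b: Matrix, p: List[int],
                      tabu: Dict[Tuple[int, int], int], it: int, tenure: int,
                      p_switch: float, rng: random.Random) -> List[int]:
    """One tabu-based quasi-greedy move (illustrative sketch).

    Scans the whole 2-swap neighbourhood of p, ranks the non-tabu moves by
    cost, and applies the second-best one with probability p_switch, the
    best one otherwise. tabu[(i, j)] is the last iteration at which swapping
    positions i and j is forbidden.
    """
    n = len(p)
    ranked = []  # (cost, i, j) for every non-tabu neighbour
    for i in range(n - 1):
        for j in range(i + 1, n):
            if tabu.get((i, j), -1) >= it:
                continue  # move is still tabu
            q = list(p)
            q[i], q[j] = q[j], q[i]
            ranked.append((qap_cost(a, b, q), i, j))
    if not ranked:
        return list(p)  # all moves tabu: leave p unchanged
    ranked.sort()
    use_second = len(ranked) > 1 and rng.random() < p_switch
    _, i, j = ranked[1] if use_second else ranked[0]
    q = list(p)
    q[i], q[j] = q[j], q[i]
    tabu[(i, j)] = it + tenure  # forbid re-swapping i, j for `tenure` steps
    return q
```

A full quasi-greedy perturbation would call this step repeatedly with an increasing iteration counter `it` and remember the best solution visited.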

All five perturbation procedures described above can be combined in many different ways to achieve the most appropriate balance between exploration and exploitation in the search process. We argue that these procedures are among the most influential and sensitive factors for the efficiency of our genetic algorithm (as can be seen in the next section). The top-level pseudocode (in a Backus–Naur-like syntax) of the perturbation process is presented in Algorithm 4, which is a template for a family of multi-type perturbation procedures. Algorithm 4 admits a large number of variations: Table A1 in Appendix A enumerates 92 of them, where variations 1–29 apply each perturbation once and variations 30–92 include multiple (cyclic) perturbations. This offers a high degree of flexibility and versatility to the perturbation process, and researchers can choose the variation that best suits their needs. Such an approach is related to what are known as multi-strategy algorithms [112] and to the automatic design of algorithms (see, for example, [113,114]).

| Algorithm 4 High-level (abstract) pseudocode of the universalized multi-type (multi-strategy) perturbation procedure |

| Universalized_Multi-Type_Perturbation; // input: p: current solution // output: (best) obtained perturbed/reconstructed solution // parameters: ω: perturbation strength (mutation rate) factor, Nperturb: number of perturbation iterations, PerturbVar: perturbation variant begin p_best ← EMPTY_SOLUTION; [apply uniform random perturbation | Lévy perturbation; get permuted solution p̃; p ← p̃;] for i ← 1 to Nperturb do begin [apply uniform random perturbation | Lévy perturbation; get permuted solution p̃; p ← p̃;] [apply quasi-greedy perturbation 1 | 2 | 3; get permuted solution p̃; memorize best permuted solution as p_best;] [apply uniform random perturbation | Lévy perturbation; get permuted solution p̃; p ← p̃;] endfor; [apply uniform random perturbation | Lévy perturbation; get permuted solution p̃;] if p_best is not EMPTY_SOLUTION then p ← p_best end. |

Notes. 1. The uniform random perturbation and Lévy perturbation procedures are applied to the incumbent solution according to the particular perturbation strength (mutation rate) factor ω. 2. All scenarios of Algorithm 4 are managed by means of the switch parameter PerturbVar.
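Algorithm 4 can be read as a template whose bracketed steps are optional slots. The Python sketch below mirrors that structure with callables (None meaning the step is omitted), so a choice of slots plus Nperturb corresponds to one variation in Table A1. It is an interpretation of the pseudocode, not the authors' implementation; in particular, it assumes that the quasi-greedy step updates the current solution and that the memorized best quasi-greedy result, if any, is returned.

```python
from typing import Callable, List, Optional

Perm = List[int]
Perturb = Callable[[Perm], Perm]


def multi_type_perturbation(p: Perm, n_perturb: int,
                            cost: Callable[[Perm], float],
                            pre: Optional[Perturb] = None,
                            inner_before: Optional[Perturb] = None,
                            quasi_greedy: Optional[Perturb] = None,
                            inner_after: Optional[Perturb] = None,
                            post: Optional[Perturb] = None) -> Perm:
    """Template mirroring the optional, bracketed steps of Algorithm 4.

    Each slot is None (step omitted) or a perturbation callable, e.g. a
    uniform random, Levy, or quasi-greedy perturbation.
    """
    best: Optional[Perm] = None  # plays the role of EMPTY_SOLUTION
    if pre is not None:
        p = pre(p)
    for _ in range(n_perturb):
        if inner_before is not None:
            p = inner_before(p)
        if quasi_greedy is not None:
            p = quasi_greedy(p)
            if best is None or cost(p) < cost(best):
                best = list(p)  # memorize the best quasi-greedy result
        if inner_after is not None:
            p = inner_after(p)
    if post is not None:
        p = post(p)
    return best if best is not None else p
```

For instance, supplying only `quasi_greedy` with `n_perturb > 1` gives a repeated quasi-greedy perturbation in the spirit of the MQGP entries of Table A1.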

3. Computational Experiments

3.1. Experiment Setup

The improved hybrid genetic-hierarchical algorithm is coded in the C# programming language. The computational experiments were conducted on an x86-series personal computer with a 3.1 GHz four-core Intel processor, 8 GB of RAM, and a 64-bit MS Windows operating system. No parallel processing is used: each run of the algorithm is assigned to a single core.

In the experiments, we used benchmark instances from the public electronic library of QAP instances, QAPLIB [115,116], as well as from the papers by De Carvalho et al. (2006) [117] and Drezner et al. (2005) [118] (see also [119]). (The best-known solutions are taken from QAPLIB and [26,27,32,119,120,121,122,123].) Overall, 140 instances were examined, with sizes of up to 729.

As the main quantitative performance criteria of the algorithm, we adopt the average deviation of the objective function, $\bar{\theta}$, and the number of best-known (pseudo-optimal) solutions found. The average deviation is calculated by the following formula: $\bar{\theta} = 100\,(\bar{z} - z_{\mathrm{BKV}})/z_{\mathrm{BKV}}\ [\%]$, where $\bar{z}$ is the average objective function value and $z_{\mathrm{BKV}}$ denotes the best-known value of the objective function. Both criteria are calculated over a series of independent runs of the algorithm (the number of runs is listed in Table 1).
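Both performance criteria are straightforward to compute from the final objective values of the individual runs. A minimal Python sketch, with hypothetical function names and made-up run results:

```python
from statistics import mean
from typing import Sequence


def average_deviation(costs: Sequence[float], bkv: float) -> float:
    """Percentage average deviation of run results from the best-known value."""
    return 100.0 * (mean(costs) - bkv) / bkv


def count_best_known(costs: Sequence[float], bkv: float) -> int:
    """Number of runs whose final objective value equals the best-known value."""
    return sum(1 for c in costs if c == bkv)


runs = [100, 100, 103, 101]          # hypothetical final costs of four runs
print(average_deviation(runs, 100))  # 1.0
print(count_best_known(runs, 100))   # 2
```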

In each run, the algorithm, with a fixed set of control parameters, is applied to a particular benchmark instance, and the genetic algorithm starts from a new, distinct random initial population. A run terminates when the prescribed total number of generations (Table 1) has been reached or when the best-known (pseudo-optimal) solution has been found.
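The per-run protocol (fresh random initial population, stop at the generation limit or as soon as the best-known value is reached) can be sketched as below. `generation` is a hypothetical callback standing in for one generation of the hybrid genetic algorithm (selection, crossover, and the hierarchical tabu search), and seeding each run differently is one assumed way of obtaining distinct initial populations.

```python
import random
from typing import Callable, List, Tuple

Perm = List[int]


def run_once(seed: int, n: int, pop_size: int, max_generations: int,
             bkv: float, cost: Callable[[Perm], float],
             generation: Callable[[List[Perm], random.Random], List[Perm]]
             ) -> Tuple[float, int]:
    """One run: new random initial population, then evolve until the
    generation limit is reached or the best-known value is found."""
    rng = random.Random(seed)  # distinct seed -> distinct initial population
    population = [rng.sample(range(n), n) for _ in range(pop_size)]
    best = min(cost(p) for p in population)
    gen = 0
    while gen < max_generations and best > bkv:
        population = generation(population, rng)  # hypothetical GA step
        best = min(best, min(cost(p) for p in population))
        gen += 1
    return best, gen
```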

The particular values of the control parameters used in the genetic algorithm are shown in Table 1.

Table 1.

Values (ranges of values) of the control parameters of the improved hybrid genetic algorithm used in the experiments.

| Parameters | Values | Remarks |
|---|---|---|
| Population size | | |
| Initial population size factor | | |
| Number of generations | | |
| Distance threshold | | |
| Idle generations limit | | |
| Total number of iterations of hierarchical iterated tabu search, Q | | |
| Number of iterations of tabu search, τ | | |
| Tabu tenure, h | h > 0 | |
| Randomization coefficient for tabu search | | |
| Idle iterations limit | | |
| Perturbation (mutation) rate factor, ω | | |
| Switch probability | | |
| Perturbation variant (variation), PerturbVar | | |
| Number of runs of the algorithm | | |

3.2. Main Results: Comparison of Algorithms and Discussion

The results of the experiments are presented in Table 2, which reports, for each instance, the best-known value of the objective function, the average deviation of the objective function, and the average CPU time per run of the algorithm.

Table 2.

Results of IHGHA for the set of 140 instances of QAPLIB [115,116,117,119].

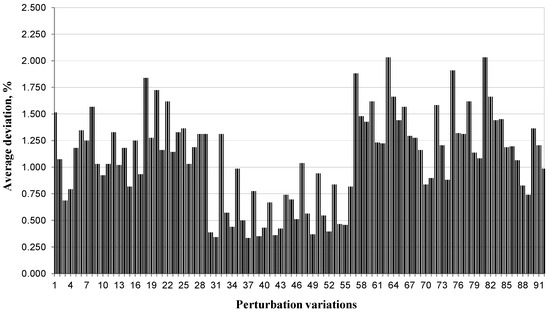

These results were obtained by selecting the most suitable variations of the perturbation process. (The sensitivity of the results to the perturbation variation, observed in preliminary experiments, is shown in Figure A1 in Appendix A; it indicates that the performance of IHGHA depends heavily on the perturbation process.) Only the best results are presented in Table 2; the results of the preliminary experiments are omitted for brevity. The results in Table 2 demonstrate the excellent performance and reliability of the proposed genetic algorithm in terms of both solution quality and computational resources.

As for comparisons with other heuristic algorithms for the QAP, our results (see Table 2) are clearly better than those obtained by the previous version of the hybrid genetic-hierarchical algorithm (see Table 9 in [29]).

We also compared our algorithm with other state-of-the-art heuristic algorithms for the QAP, in particular, the frequent pattern-based search algorithm [67] and the most recent versions of the hybrid genetic algorithm [27,118]. The comparisons are shown in Table 3, Table 4, Table 5 and Table 6. These results again illustrate the superior efficacy of IHGHA: they are in our favour with respect to all the competitors considered here. This is especially evident in the run times of our algorithm for the small- and medium-sized problems. (Notice that the difference in run time between the algorithms can be very large for some instances; see, for example, the comparison of IHGHA and HGA for the instances tai27e∗, tai45e∗, tai75e∗, and tai125e∗.)

Table 3.

Comparison of the results of IHGHA and frequent pattern-based search (FPBS) algorithm [67] (part I, part II).

Table 4.

Comparison of the results of IHGHA and hybrid genetic algorithm (HGA) [118] (part I, part II).

Table 5.

Comparison of the results of IHGHA and hybrid genetic algorithm with biologically inspired parent selection (HGA-BIPS) [27].

Table 6.

Comparison of the results of IHGHA and hybrid genetic algorithm with biologically inspired parent selection (HGA-BIPS) [27].

In the final analysis, our main observations are as follows:

- Based on Table 2, we achieved a 100% success rate for almost all of the examined instances. These instances are solved to (pseudo-)optimality within very reasonable computation times, which are, to our knowledge, record-breaking in many cases. The only exceptions are a handful of instances (namely, bl100, bl121, bl144, ci144, tai80a, tai100a, tho150, tai343e∗, and tai729e∗). Among these, bl100, bl121, bl144, tai100a, tai343e∗, and tai729e∗ are exceptionally difficult for heuristic algorithms and still call for new, breakthrough algorithmic ideas.

- The best-known solution was, in total, found in runs out of runs ( of the runs). We also found the best-known solution at least once out of runs for instances out of ( of the instances). And we achieved an average deviation of less than for instances out of ( of the instances). The cumulative average deviation over instances is equal to .

- On top of this, we were successful in achieving three new best-known solutions for the instances bl100, bl121, and bl144, which are presented in Table 7, Table 8 and Table 9.

Table 7. History of discovering the best-known solutions for the QAP instance bl100.

Table 8. History of discovering the best-known solutions for the QAP instance bl121.

Table 9. History of discovering the best-known solutions for the QAP instance bl144.

Overall, the results in Tables 2–9 demonstrate that our algorithm is successful with respect to three essential aspects: (i) effectiveness in obtaining a zero percentage average deviation for most of the examined problems; (ii) the ability to reach (pseudo-)optimal or near-optimal solutions within extremely short running times for small- and medium-sized instances; (iii) the potential to achieve best-known/record-breaking solutions for hard instances. These results confirm the usefulness of the proposed two-layer architecture of the hybrid genetic algorithm and the relevance of our multi-type perturbation process, which is integrated within the hierarchical tabu search algorithm and appears to be very well suited to our hybrid genetic algorithm.

Still, there is room for further improvement. Parameter adjustment (calibration) is one of the key factors in increasing the efficacy of the algorithm, and it can be performed either manually or automatically.

In manual calibration, the experience and competence acquired by the designer and/or user play an extremely important role. Identifying the most sensitive and influential parameters is also of the highest importance.

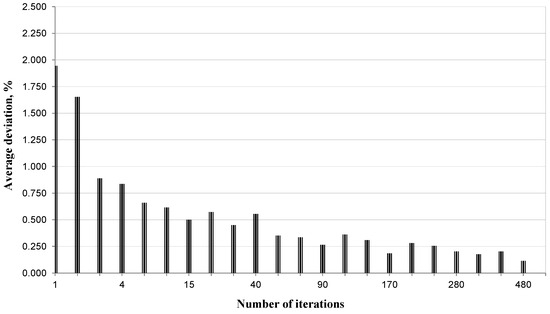

As a representative example, we show how the performance of the algorithm can be increased simply by changing the number of iterations of the algorithm. The results are presented in Figure A2 in Appendix A.

Regarding the automatic parameter adaptation, we believe that this could be one of the very promising future research directions.

Last but not least, the performance of IHGHA can be improved even further by a simple, distributed-like parallelization in which autonomous, independent clones of the algorithm are assigned to separate cores of the computer. That is, instead of running a single copy of the algorithm several times in sequence, the independent copies are run once, simultaneously, on as many cores, each with a different seed for the random number generator. The anticipated effect is a reduction in the total run time by a factor of up to the number of cores used.
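The distributed-like scheme amounts to running independent, differently seeded copies in separate processes. Below is a minimal Python sketch with `concurrent.futures`, where `toy_solver` is a hypothetical stand-in for a full IHGHA run. Processes rather than threads are used by default because CPython threads do not execute Python bytecode in parallel; an executor can be passed in instead (for example, a thread pool for quick tests).

```python
import random
from concurrent.futures import Executor, ProcessPoolExecutor
from typing import Callable, List, Optional


def toy_solver(seed: int) -> float:
    """Hypothetical stand-in for one complete, independently seeded run."""
    rng = random.Random(seed)
    return min(rng.random() for _ in range(10_000))


def run_independent_copies(solver: Callable[[int], float], seeds: List[int],
                           executor: Optional[Executor] = None) -> List[float]:
    """Run one copy of `solver` per seed concurrently; results follow seed order."""
    if executor is None:
        # Separate processes, one copy per core (up to the pool size).
        with ProcessPoolExecutor(max_workers=max(1, len(seeds))) as pool:
            return list(pool.map(solver, seeds))
    return list(executor.map(solver, seeds))
```

With the default process pool on platforms that start workers with the spawn method (Windows and, by default, macOS), `run_independent_copies` should be called from inside an `if __name__ == "__main__":` block, and the solver must be a module-level function so that it can be pickled.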

4. Concluding Remarks

In this paper, we have presented the improved version of the hybrid genetic-hierarchical algorithm (IHGHA) for the solution of the well-known combinatorial optimization problem—the quadratic assignment problem. The algorithm was extensively examined on QAP benchmark instances of various categories.

The main points regarding the presented algorithm are as follows:

- Two-level scheme of the hybrid primary (master)-secondary (slave) genetic algorithm is proposed;

- The multi-strategy perturbation process—which is integrated within the hierarchical iterated tabu search algorithm—is introduced.

This multi-type perturbation process plays a highly significant role in our hybrid genetic algorithm owing to its flexibility and versatility, and we believe that it may be of interest and help to researchers working to advance heuristic algorithms.

The obtained results confirm the excellent efficiency of the proposed algorithm. A set of more than a hundred QAP instances with sizes of up to 729 is solved very effectively, especially the small- and medium-sized instances. Three new record-breaking solutions have been achieved for extraordinarily hard QAP instances, and we hope that our algorithm can serve as a benchmark for new heuristic algorithms for the QAP.

As for future work, it would be worthwhile to investigate the automatic (adaptive) selection of the perturbation procedure variants in the hierarchical tabu search of the genetic algorithm.

Author Contributions

Conceptualization, A.M.; Methodology, A.M.; Software, A.M. and A.A.; Validation, A.O. and D.V.; Formal analysis, A.M.; Investigation, A.M. and A.A.; Resources, A.M.; Data curation, A.M.; Writing—original draft, A.M.; Writing—review & editing, A.O., D.V. and G.Ž.; Visualization, D.V.; Supervision, A.M.; Project administration, A.M.; Funding acquisition, A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Variations of the perturbation procedure.

| Perturbation Variations | | | |
|---|---|---|---|
| 1—URP | 24—LP, QGP 1, URP | 47—URP, MQGP 3, URP | 70—M(URP, QGP 2), LP |
| 2—LP | 25—LP, QGP 1, LP | 48—URP, MQGP 1, LP | 71—M(URP, QGP 3), LP |
| 3—QGP 1 | 26—LP, QGP 2, URP | 49—URP, MQGP 2, LP | 72—M(LP, QGP 1), LP |
| 4—QGP 2 | 27—LP, QGP 2, LP | 50—URP, MQGP 3, LP | 73—M(LP, QGP 2), LP |
| 5—QGP 3 | 28—LP, QGP 3, URP | 51—LP, MQGP 1, URP | 74—M(LP, QGP 3), LP |
| 6—URP, QGP 1 | 29—LP, QGP 3, LP | 52—LP, MQGP 2, URP | 75—M(QGP 1, URP) |
| 7—URP, QGP 2 | 30—MQGP 1 | 53—LP, MQGP 3, URP | 76—M(QGP 2, URP) |
| 8—URP, QGP 3 | 31—MQGP 2 | 54—LP, MQGP 1, LP | 77—M(QGP 3, URP) |
| 9—LP, QGP 1 | 32—MQGP 3 | 55—LP, MQGP 2, LP | 78—M(QGP 1, LP) |
| 10—LP, QGP 2 | 33—URP, MQGP 1 | 56—LP, MQGP 3, LP | 79—M(QGP 2, LP) |
| 11—LP, QGP 3 | 34—URP, MQGP 2 | 57—M(URP, QGP 1) | 80—M(QGP 3, LP) |
| 12—QGP 1, URP | 35—URP, MQGP 3 | 58—M(URP, QGP 2) | 81—URP, M(QGP 1, URP) |
| 13—QGP 1, LP | 36—LP, MQGP 1 | 59—M(URP, QGP 3) | 82—URP, M(QGP 2, URP) |
| 14—QGP 2, URP | 37—LP, MQGP 2 | 60—M(LP, QGP 1) | 83—URP, M(QGP 3, URP) |
| 15—QGP 2, LP | 38—LP, MQGP 3 | 61—M(LP, QGP 2) | 84—URP, M(QGP 1, LP) |
| 16—QGP 3, URP | 39—MQGP 1, URP | 62—M(LP, QGP 3) | 85—URP, M(QGP 2, LP) |
| 17—QGP 3, LP | 40—MQGP 2, URP | 63—M(URP, QGP 1), URP | 86—URP, M(QGP 3, LP) |
| 18—URP, QGP 1, URP | 41—MQGP 3, URP | 64—M(URP, QGP 2), URP | 87—LP, M(QGP 1, URP) |
| 19—URP, QGP 1, LP | 42—MQGP 1, LP | 65—M(URP, QGP 3), URP | 88—LP, M(QGP 2, URP) |
| 20—URP, QGP 2, URP | 43—MQGP 2, LP | 66—M(LP, QGP 1), URP | 89—LP, M(QGP 3, URP) |
| 21—URP, QGP 2, LP | 44—MQGP 3, LP | 67—M(LP, QGP 2), URP | 90—LP, M(QGP 1, LP) |
| 22—URP, QGP 3, URP | 45—URP, MQGP 1, URP | 68—M(LP, QGP 3), URP | 91—LP, M(QGP 2, LP) |
| 23—URP, QGP 3, LP | 46—URP, MQGP 2, URP | 69—M(URP, QGP 1), LP | 92—LP, M(QGP 3, LP) |

Notes. URP—uniform (steady) random perturbation, LP—Lévy perturbation, QGP—quasi-greedy perturbation, MQGP—multiple (cyclic) quasi-greedy perturbation, M(URP, QGP)—multiple (cyclic) uniform (steady) random and quasi-greedy perturbation, M(LP, QGP)—multiple (cyclic) Lévy and quasi-greedy perturbation, M(QGP, URP)—multiple (cyclic) quasi-greedy and uniform (steady) random perturbation, M(QGP, LP)—multiple (cyclic) quasi-greedy and Lévy perturbation.

Figure A1.

Percentage average deviation of solutions vs. perturbation variations for the QAP instance bl49 using short runs of IHGHA.

Figure A2.

Percentage average deviation of solutions versus number of iterations for the QAP instance bl49.

References

- Çela, E. The Quadratic Assignment Problem: Theory and Algorithms; Kluwer: Dordrecht, The Netherlands, 1998.

- Koopmans, T.; Beckmann, M. Assignment problems and the location of economic activities. Econometrica 1957, 25, 53–76.

- Drezner, Z. The quadratic assignment problem. In Location Science; Laporte, G., Nickel, S., Saldanha da Gama, F., Eds.; Springer: Cham, Switzerland, 2015; pp. 345–363.

- Sahni, S.; Gonzalez, T. P-complete approximation problems. J. ACM 1976, 23, 555–565.

- Anstreicher, K.M.; Brixius, N.W.; Gaux, J.P.; Linderoth, J. Solving large quadratic assignment problems on computational grids. Math. Program. 2002, 91, 563–588.

- Hahn, P.M.; Zhu, Y.-R.; Guignard, M.; Hightower, W.L.; Saltzman, M.J. A level-3 reformulation-linearization technique-based bound for the quadratic assignment problem. INFORMS J. Comput. 2012, 24, 202–209.

- Date, K.; Nagi, R. Level 2 reformulation linearization technique-based parallel algorithms for solving large quadratic assignment problems on graphics processing unit clusters. INFORMS J. Comput. 2019, 31, 771–789.

- Ferreira, J.F.S.B.; Khoo, Y.; Singer, A. Semidefinite programming approach for the quadratic assignment problem with a sparse graph. Comput. Optim. Appl. 2018, 69, 677–712.

- Armour, G.C.; Buffa, E.S. A heuristic algorithm and simulation approach to relative location of facilities. Manag. Sci. 1963, 9, 294–304.

- Buffa, E.S.; Armour, G.C.; Vollmann, T.E. Allocating facilities with CRAFT. Harvard Bus. Rev. 1964, 42, 136–158.

- Murthy, K.A.; Li, Y.; Pardalos, P.M. A local search algorithm for the quadratic assignment problem. Informatica 1992, 3, 524–538.

- Pardalos, P.M.; Murthy, K.A.; Harrison, T.P. A computational comparison of local search heuristics for solving quadratic assignment problems. Informatica 1993, 4, 172–187.

- Angel, E.; Zissimopoulos, V. On the quality of local search for the quadratic assignment problem. Discret. Appl. Math. 1998, 82, 15–25.

- Benlic, U.; Hao, J.-K. Breakout local search for the quadratic assignment problem. Appl. Math. Comput. 2013, 219, 4800–4815.

- Aksan, Y.; Dokeroglu, T.; Cosar, A. A stagnation-aware cooperative parallel breakout local search algorithm for the quadratic assignment problem. Comput. Ind. Eng. 2017, 103, 105–115.

- Misevičius, A. A modified simulated annealing algorithm for the quadratic assignment problem. Informatica 2003, 14, 497–514.

- Taillard, E.D. Robust taboo search for the QAP. Parallel Comput. 1991, 17, 443–455.

- Misevicius, A. A tabu search algorithm for the quadratic assignment problem. Comput. Optim. Appl. 2005, 30, 95–111.

- Fescioglu-Unver, N.; Kokar, M.M. Self controlling tabu search algorithm for the quadratic assignment problem. Comput. Ind. Eng. 2011, 60, 310–319.

- Misevicius, A. An implementation of the iterated tabu search algorithm for the quadratic assignment problem. OR Spectrum 2012, 34, 665–690.

- Shylo, P.V. Solving the quadratic assignment problem by the repeated iterated tabu search method. Cybern. Syst. Anal. 2017, 53, 308–311.

- Drezner, Z. A new genetic algorithm for the quadratic assignment problem. INFORMS J. Comput. 2003, 15, 320–330.

- Misevicius, A. An improved hybrid genetic algorithm: New results for the quadratic assignment problem. Knowl.-Based Syst. 2004, 17, 65–73.

- Benlic, U.; Hao, J.-K. Memetic search for the quadratic assignment problem. Expert Syst. Appl. 2015, 42, 584–595.

- Ahmed, Z.H. A hybrid algorithm combining lexisearch and genetic algorithms for the quadratic assignment problem. Cogent Eng. 2018, 5, 1423743.

- Drezner, Z.; Drezner, T.D. The alpha male genetic algorithm. IMA J. Manag. Math. 2019, 30, 37–50.

- Drezner, Z.; Drezner, T.D. Biologically inspired parent selection in genetic algorithms. Ann. Oper. Res. 2020, 287, 161–183.

- Zhang, H.; Liu, F.; Zhou, Y.; Zhang, Z. A hybrid method integrating an elite genetic algorithm with tabu search for the quadratic assignment problem. Inf. Sci. 2020, 539, 347–374.

- Misevičius, A.; Verenė, D. A hybrid genetic-hierarchical algorithm for the quadratic assignment problem. Entropy 2021, 23, 108.

- Ryu, M.; Ahn, K.-I.; Lee, K. Finding effective item assignment plans with weighted item associations using a hybrid genetic algorithm. Appl. Sci. 2021, 11, 2209.

- Silva, A.; Coelho, L.C.; Darvish, M. Quadratic assignment problem variants: A survey and an effective parallel memetic iterated tabu search. Eur. J. Oper. Res. 2021, 292, 1066–1084.

- Wang, H.; Alidaee, B. A new hybrid-heuristic for large-scale combinatorial optimization: A case of quadratic assignment problem. Comput. Ind. Eng. 2023, 179, 109220.

- Ismail, M.; Rashwan, O. A Hierarchical Data-Driven Parallel Memetic Algorithm for the Quadratic Assignment Problem. Available online: https://ssrn.com/abstract=4517038 (accessed on 22 January 2024).

- Arza, E.; Pérez, A.; Irurozki, E.; Ceberio, J. Kernels of Mallows models under the Hamming distance for solving the quadratic assignment problem. Swarm Evol. Comput. 2020, 59, 100740.

- Pradeepmon, T.G.; Panicker, V.V.; Sridharan, R. A variable neighbourhood search enhanced estimation of distribution algorithm for quadratic assignment problems. OPSEARCH 2021, 58, 203–233.

- Hameed, A.S.; Aboobaider, B.M.; Mutar, M.L.; Choon, N.H. A new hybrid approach based on discrete differential evolution algorithm to enhancement solutions of quadratic assignment problem. Int. J. Ind. Eng. Comput. 2020, 11, 51–72.

- Gambardella, L.M.; Taillard, E.D.; Dorigo, M. Ant colonies for the quadratic assignment problem. J. Oper. Res. Soc. 1999, 50, 167–176.

- Hafiz, F.; Abdennour, A. Particle swarm algorithm variants for the quadratic assignment problems—A probabilistic learning approach. Expert Syst. Appl. 2016, 44, 413–431.

- Dokeroglu, T.; Sevinc, E.; Cosar, A. Artificial bee colony optimization for the quadratic assignment problem. Appl. Soft Comput. 2019, 76, 595–606.

- Samanta, S.; Philip, D.; Chakraborty, S. A quick convergent artificial bee colony algorithm for solving quadratic assignment problems. Comput. Ind. Eng. 2019, 137, 106070.

- Peng, Z.-Y.; Huang, Y.-J.; Zhong, Y.-B. A discrete artificial bee colony algorithm for quadratic assignment problem. J. High Speed Netw. 2022, 28, 131–141.

- Adubi, S.A.; Oladipupo, O.O.; Olugbara, O.O. Evolutionary algorithm-based iterated local search hyper-heuristic for combinatorial optimization problems. Algorithms 2022, 15, 405.

- Wu, P.; Hung, Y.-Y.; Yang, K.-J. A Revised Electromagnetism-Like Metaheuristic for the Quadratic Assignment Problem. Available online: https://www.researchgate.net/profile/Peitsang-Wu/publication/268412372_A_REVISED_ELECTROMAGNETISM-LIKE_METAHEURISTIC_FOR_THE_QUADRATIC_ASSIGNMENT_PROBLEM/links/54d9e45f0cf25013d04353b9/A-REVISED-ELECTROMAGNETISM-LIKE-METAHEURISTIC-FOR-THE-QUADRATIC-ASSIGNMENT-PROBLEM.pdf (accessed on 22 January 2024).

- Riffi, M.E.; Bouzidi, A. Discrete cat swarm optimization for solving the quadratic assignment problem. Int. J. Soft Comput. Softw. Eng. 2014, 4, 85–92.

- Lim, W.L.; Wibowo, A.; Desa, M.I.; Haron, H. A biogeography-based optimization algorithm hybridized with tabu search for the quadratic assignment problem. Comput. Intell. Neurosci. 2016, 2016, 5803893.

- Houssein, E.H.; Mahdy, M.A.; Blondin, M.J.; Shebl, D.; Mohamed, W.M. Hybrid slime mould algorithm with adaptive guided differential evolution algorithm for combinatorial and global optimization problems. Expert Syst. Appl. 2021, 174, 114689.

- Guo, M.-W.; Wang, J.-S.; Yang, X. An chaotic firefly algorithm to solve quadratic assignment problem. Eng. Lett. 2020, 28, 337–342.

- Rizk-Allah, R.M.; Slowik, A.; Darwish, A.; Hassanien, A.E. Orthogonal Latin squares-based firefly optimization algorithm for industrial quadratic assignment tasks. Neural Comput. Appl. 2021, 33, 16675–16696.

- Abdel-Baset, M.; Wu, H.; Zhou, Y.; Abdel-Fatah, L. Elite opposition-flower pollination algorithm for quadratic assignment problem. J. Intell. Fuzzy Syst. 2017, 33, 901–911.

- Dokeroglu, T. Hybrid teaching–learning-based optimization algorithms for the quadratic assignment problem. Comput. Ind. Eng. 2015, 85, 86–101.

- Riffi, M.E.; Sayoti, F. Hybrid algorithm for solving the quadratic assignment problem. Int. J. Interact. Multimed. Artif. Intell. 2017, 5, 68–74.

- Semlali, S.C.B.; Riffi, M.E.; Chebihi, F. Parallel hybrid chicken swarm optimization for solving the quadratic assignment problem. Int. J. Electr. Comput. Eng. 2019, 9, 2064–2074.

- Badrloo, S.; Kashan, A.H. Combinatorial optimization of permutation-based quadratic assignment problem using optics inspired optimization. J. Appl. Res. Ind. Eng. 2019, 6, 314–332.

- Kiliç, H.; Yüzgeç, U. Tournament selection based antlion optimization algorithm for solving quadratic assignment problem. Eng. Sci. Technol. Int. J. 2019, 22, 673–691.

- Kiliç, H.; Yüzgeç, U. Improved antlion optimization algorithm for quadratic assignment problem. Malays. J. Comput. Sci. 2021, 34, 34–60.

- Ng, K.M.; Tran, T.H. A parallel water flow algorithm with local search for solving the quadratic assignment problem. J. Ind. Manag. Optim. 2019, 15, 235–259.

- Kumar, M.; Sahu, A.; Mitra, P. A comparison of different metaheuristics for the quadratic assignment problem in accelerated systems. Appl. Soft Comput. 2021, 100, 106927.

- Yadav, A.A.; Kumar, N.; Kim, J.H. Development of discrete artificial electric field algorithm for quadratic assignment problems. In Proceedings of the 6th International Conference on Harmony Search, Soft Computing and Applications. ICHSA 2020. Advances in Intelligent Systems and Computing, Istanbul, Turkey, 16–17 July 2020; Nigdeli, S.M., Kim, J.H., Bekdaş, G., Yadav, A., Eds.; Springer: Singapore, 2021; Volume 1275, pp. 411–421.

- Dokeroglu, T.; Ozdemir, Y.S. A new robust Harris Hawk optimization algorithm for large quadratic assignment problems. Neural Comput. Appl. 2023, 35, 12531–12544.

- Acan, A.; Ünveren, A. A great deluge and tabu search hybrid with two-stage memory support for quadratic assignment problem. Appl. Soft Comput. 2015, 36, 185–203.

- Chmiel, W.; Kwiecień, J. Quantum-inspired evolutionary approach for the quadratic assignment problem. Entropy 2018, 20, 781.

- Drezner, Z. Taking advantage of symmetry in some quadratic assignment problems. INFOR Inf. Syst. Oper. Res. 2019, 57, 623–641.

- Dantas, A.; Pozo, A. On the use of fitness landscape features in meta-learning based algorithm selection for the quadratic assignment problem. Theor. Comput. Sci. 2020, 805, 62–75.

- Öztürk, M.; Alabaş-Uslu, Ç. Cantor set based neighbor generation method for permutation solution representation. J. Intell. Fuzzy Syst. 2020, 39, 6157–6168.

- Alza, J.; Bartlett, M.; Ceberio, J.; McCall, J. Towards the landscape rotation as a perturbation strategy on the quadratic assignment problem. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, GECCO ’21, Lille, France, 10–14 July 2021; Chicano, F., Ed.; ACM: New York, NY, USA, 2021; pp. 1405–1413.

- Amirghasemi, M. An effective parallel evolutionary metaheuristic with its application to three optimization problems. Appl. Intell. 2023, 53, 5887–5909.

- Zhou, Y.; Hao, J.-K.; Duval, B. Frequent pattern-based search: A case study on the quadratic assignment problem. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 1503–1515.

- Baldé, M.A.M.T.; Gueye, S.; Ndiaye, B.M. A greedy evolutionary hybridization algorithm for the optimal network and quadratic assignment problem. Oper. Res. 2021, 21, 1663–1690.

- Ni, Y.; Liu, W.; Du, X.; Xiao, R.; Chen, G.; Wu, Y. Evolutionary optimization approach based on heuristic information with pseudo-utility for the quadratic assignment problem. Swarm Evol. Comput. 2024, 87, 101557.

- Abdel-Basset, M.; Mohamed, R.; Saber, S.; Hezam, I.M.; Sallam, K.M.; Hameed, I.A. Binary metaheuristic algorithms for 0–1 knapsack problems: Performance analysis, hybrid variants, and real-world application. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102093.

- Alvarez-Flores, O.A.; Rivera-Blas, R.; Flores-Herrera, L.A.; Rivera-Blas, E.Z.; Funes-Lora, M.A.; Nino-Suárez, P.A. A novel modified discrete differential evolution algorithm to solve the operations sequencing problem in CAPP systems. Mathematics 2024, 12, 1846.

- El-Shorbagy, M.A.; Bouaouda, A.; Nabwey, H.A.; Abualigah, L.; Hashim, F.A. Advances in Henry gas solubility optimization: A physics-inspired metaheuristic algorithm with its variants and applications. IEEE Access 2024, 12, 26062–26095.

- Zhang, J.; Ye, J.-X.; Lin, J.; Song, H.-B. A discrete Jaya algorithm for vehicle routing problems with uncertain demands. Syst. Sci. Control Eng. 2024, 12, 2350165.

- Zhang, Y.; Xing, L. A new hybrid improved arithmetic optimization algorithm for solving global and engineering optimization problems. Mathematics 2024, 12, 3221.

- Zhao, S.; Zhang, T.; Cai, L.; Yang, R. Triangulation topology aggregation optimizer: A novel mathematics-based meta-heuristic algorithm for engineering applications. Expert Syst. Appl. 2024, 238 Pt B, 121744.

- Loiola, E.M.; De Abreu, N.M.M.; Boaventura-Netto, P.O.; Hahn, P.; Querido, T. A survey for the quadratic assignment problem. Eur. J. Oper. Res. 2007, 176, 657–690.

- Abdel-Basset, M.; Manogaran, G.; Rashad, H.; Zaied, A.N.H. A comprehensive review of quadratic assignment problem: Variants, hybrids and applications. J. Amb. Intel. Hum. Comput. 2018, 9, 1–24.

- Achary, T.; Pillay, S.; Pillai, S.M.; Mqadi, M.; Genders, E.; Ezugwu, A.E. A performance study of meta-heuristic approaches for quadratic assignment problem. Concurr. Comput. Pract. Exp. 2021, 33, 1–29.

- Hussin, M.S.; Stützle, T. Hierarchical iterated local search for the quadratic assignment problem. In Hybrid Metaheuristics, HM 2009, Lecture Notes in Computer Science; Blesa, M.J., Blum, C., Di Gaspero, L., Roli, A., Sampels, M., Schaerf, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5818, pp. 115–129.

- Battarra, M.; Benedettini, S.; Roli, A. Leveraging saving-based algorithms by master–slave genetic algorithms. Eng. Appl. Artif. Intell. 2011, 24, 555–566.

- Liu, S.; Xue, J.; Hu, C.; Li, Z. Test case generation based on hierarchical genetic algorithm. In Proceedings of the 2014 International Conference on Mechatronics, Control and Electronic Engineering, MEIC 2014, Shenyang, China, 15–17 November 2014; Atlantis Press: Dordrecht, The Netherlands, 2014; pp. 278–281.

- Ahmed, A.K.M.F.; Sun, J.U. A novel approach to combine the hierarchical and iterative techniques for solving capacitated location-routing problem. Cogent Eng. 2018, 5, 1463596.

- Misevičius, A.; Palubeckis, G.; Drezner, Z. Hierarchicity-based (self-similar) hybrid genetic algorithm for the grey pattern quadratic assignment problem. Memet. Comput. 2021, 13, 69–90.

- Frieze, A.M.; Yadegar, J.; El-Horbaty, S.; Parkinson, D. Algorithms for assignment problems on an array processor. Parallel Comput. 1989, 11, 151–162.

- Merz, P.; Freisleben, B. Fitness landscape analysis and memetic algorithms for the quadratic assignment problem. IEEE Trans. Evol. Comput. 2000, 4, 337–352.

- Li, Y.; Pardalos, P.M.; Resende, M.G.C. A greedy randomized adaptive search procedure for the quadratic assignment problem. In Quadratic Assignment and Related Problems. DIMACS Series in Discrete Mathematics and Theoretical Computer Science; Pardalos, P.M., Wolkowicz, H., Eds.; AMS: Providence, RI, USA, 1994; Volume 16, pp. 237–261.

- Drezner, Z. Compounded genetic algorithms for the quadratic assignment problem. Oper. Res. Lett. 2005, 33, 475–480.

- Berman, O.; Drezner, Z.; Krass, D. Discrete cooperative covering problems. J. Oper. Res. Soc. 2011, 62, 2002–2012.

- Tate, D.M.; Smith, A.E. A genetic approach to the quadratic assignment problem. Comput. Oper. Res. 1995, 22, 73–83.

- Misevičius, A.; Kuznecovaitė, D.; Platužienė, J. Some further experiments with crossover operators for genetic algorithms. Informatica 2018, 29, 499–516.

- Sivanandam, S.N.; Deepa, S.N. Introduction to Genetic Algorithms; Springer: Heidelberg, Germany; New York, NY, USA, 2008.

- Smyth, K.; Hoos, H.H.; Stützle, T. Iterated robust tabu search for MAX-SAT. In Advances in Artificial Intelligence: Proceedings of the 16th Conference of the Canadian Society for Computational Studies of Intelligence, Halifax, NS, Canada, 11–13 June 2003; Lecture Notes in Artificial Intelligence; Xiang, Y., Chaib-Draa, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2003; Volume 2671, pp. 129–144.

- Mehrdoost, Z.; Bahrainian, S.S. A multilevel tabu search algorithm for balanced partitioning of unstructured grids. Int. J. Numer. Meth. Engng. 2016, 105, 678–692.

- Dell’Amico, M.; Trubian, M. Solution of large weighted equicut problems. Eur. J. Oper. Res. 1998, 106, 500–521.

- Durmaz, E.D.; Şahin, R. An efficient iterated local search algorithm for the corridor allocation problem. Expert Syst. Appl. 2023, 212, 118804.

- Polat, O.; Kalayci, C.B.; Kulak, O.; Günther, H.-O. A perturbation based variable neighborhood search heuristic for solving the vehicle routing problem with simultaneous pickup and delivery with time limit. Eur. J. Oper. Res. 2015, 242, 369–382.

- Lu, Z.; Hao, J.-K.; Zhou, Y. Stagnation-aware breakout tabu search for the minimum conductance graph partitioning problem. Comput. Oper. Res. 2019, 111, 43–57.

- Sadati, M.E.H.; Çatay, B.; Aksen, D. An efficient variable neighborhood search with tabu shaking for a class of multi-depot vehicle routing problems. Comput. Oper. Res. 2021, 133, 105269.

- Qin, T.; Peng, B.; Benlic, U.; Cheng, T.C.E.; Wang, Y.; Lü, Z. Iterated local search based on multi-type perturbation for single-machine earliness/tardiness scheduling. Comput. Oper. Res. 2015, 61, 81–88.

- Canuto, S.A.; Resende, M.G.C.; Ribeiro, C.C. Local search with perturbations for the prize-collecting Steiner tree problem in graphs. Networks 2001, 38, 50–58.

- Shang, Z.; Zhao, S.; Hao, J.-K.; Yang, X.; Ma, F. Multiple phase tabu search for bipartite boolean quadratic programming with partitioned variables. Comput. Oper. Res. 2019, 102, 141–149.

- Lai, X.; Hao, J.-K. Iterated maxima search for the maximally diverse grouping problem. Eur. J. Oper. Res. 2016, 254, 780–800.

- Ren, J.; Hao, J.-K.; Rodriguez-Tello, E.; Li, L.; He, K. A new iterated local search algorithm for the cyclic bandwidth problem. Knowl.-Based Syst. 2020, 203, 106136.

- Avci, M. An effective iterated local search algorithm for the distributed no-wait flowshop scheduling problem. Eng. Appl. Artif. Intell. 2023, 120, 105921.

- Lai, X.; Hao, J.-K. Iterated variable neighborhood search for the capacitated clustering problem. Eng. Appl. Artif. Intell. 2016, 56, 102–120.

- Li, R.; Hu, S.; Wang, Y.; Yin, M. A local search algorithm with tabu strategy and perturbation mechanism for generalized vertex cover problem. Neural Comput. Appl. 2017, 28, 1775–1785.

- Lévy, P. Théorie de l’Addition des Variables Aléatoires; Gauthier-Villars: Paris, France, 1937.

- Pavlyukevich, I. Lévy flights, non-local search and simulated annealing. J. Comput. Phys. 2007, 226, 1830–1844.

- Chambers, J.M.; Mallows, C.L.; Stuck, B.W. A method for simulating stable random variables. J. Am. Stat. Assoc. 1976, 71, 340–344.

- Mantegna, R.N. Fast, accurate algorithm for numerical simulation of Lévy stable stochastic processes. Phys. Rev. E 1994, 49, 4677–4683.

- Nemes, G. New asymptotic expansion for the Gamma function. Arch. Math. 2010, 95, 161–169.

- Li, W.; Li, H.; Wang, Y.; Han, Y. Optimizing flexible job shop scheduling with automated guided vehicles using a multi-strategy-driven genetic algorithm. Egypt. Inform. J. 2024, 25, 100437.

- López-Ibáñez, M.; Mascia, F.; Marmion, M.-E.; Stützle, T. A template for designing single-solution hybrid metaheuristics. In Proceedings of the Companion Publication of the 2014 Annual Conference on Genetic and Evolutionary Computation, GECCO Comp ’14, Vancouver, BC, Canada, 12–16 July 2014; Igel, C., Ed.; ACM: New York, NY, USA, 2014; pp. 1423–1426.

- Meng, W.; Qu, R. Automated design of search algorithms: Learning on algorithmic components. Expert Syst. Appl. 2021, 185, 115493.

- Burkard, R.E.; Karisch, S.; Rendl, F. QAPLIB—A quadratic assignment problem library. J. Glob. Optim. 1997, 10, 391–403.

- QAPLIB—A Quadratic Assignment Problem Library—COR@L. Available online: https://coral.ise.lehigh.edu/data-sets/qaplib/ (accessed on 10 November 2023).

- De Carvalho, S.A., Jr.; Rahmann, S. Microarray layout as a quadratic assignment problem. In German Conference on Bioinformatics, GCB 2006, Lecture Notes in Informatics—Proceedings; Huson, D., Kohlbacher, O., Lupas, A., Nieselt, K., Zell, A., Eds.; Gesellschaft für Informatik: Bonn, Germany, 2006; Volume P-83, pp. 11–20.

- Drezner, Z.; Hahn, P.M.; Taillard, E.D. Recent advances for the quadratic assignment problem with special emphasis on instances that are difficult for metaheuristic methods. Ann. Oper. Res. 2005, 139, 65–94.

- Taillard—QAP. Available online: http://mistic.heig-vd.ch/taillard/problemes.dir/qap.dir/qap.html (accessed on 30 November 2023).

- Misevičius, A. Letter: New best known solution for the most difficult QAP instance “tai100a”. Memet. Comput. 2019, 11, 331–332.

- Drezner, Z. Tabu search and hybrid genetic algorithms for quadratic assignment problems. In Tabu Search; Jaziri, W., Ed.; In-Tech: London, UK, 2008; pp. 89–108.

- Drezner, Z.; Marcoulides, G.A. On the range of tabu tenure in solving quadratic assignment problems. In Recent Advances in Computing and Management Information Systems; Athens Institute for Education and Research: Athens, Greece, 2009; pp. 157–168.

- Drezner, Z.; Misevičius, A. Enhancing the performance of hybrid genetic algorithms by differential improvement. Comput. Oper. Res. 2013, 40, 1038–1046.