Abstract

Analysis of matrix variate data is becoming increasingly common in the literature, particularly in clustering and classification. It is well known that real data, including real matrix variate data, often exhibit high levels of asymmetry. A common way to address this issue is to introduce a tail or skewness parameter into a symmetric distribution. In this regard, we introduce a new distribution, the matrix variate skew-t (MVST) distribution, which offers flexibility in modeling both heavy tails and skewness. We then investigate various characterizations and probabilistic properties of the MVST distribution in detail and extend it to a finite mixture model. To estimate the parameters of the MVST distribution, we develop an EM-type algorithm that computes maximum likelihood (ML) estimates of the model parameters. To validate the effectiveness and usefulness of the developed models and associated methods, we perform empirical experiments using simulated data as well as three real data examples, including an application in skin cancer detection. Our results demonstrate the efficacy of the developed approach in handling asymmetric matrix variate data.

Keywords:

ECME algorithm; image segmentation; mixture models; matrix variate distributions; skewed distributions; truncated normal distribution; truncated t distribution

MSC:

60E05; 62E15; 62F10; 60B20

1. Introduction

The advent of modern data-collection technologies, such as electronic sensors, cell phones and web browsers, has resulted in an abundance of multivariate data sources. Many of these data can be represented as matrix variate (three-way) data, with two ways associated with the row and column dimensions of each matrix variate observation and the third representing subjects (see [1]). Matrix variate data arise in many application domains, for example as spatial multivariate data, longitudinal data on multiple response variables or spatio-temporal data. For this reason, statistical methods that can effectively exploit three-way data have become increasingly popular. The matrix variate normal (MVN) distribution is one of the most commonly used matrix variate elliptical distributions. However, for many real phenomena, the tails of the MVN distribution are lighter than required, which directly affects the fit of the corresponding model. In robust statistical analysis in particular, heavy-tailed distributions, such as the slash and t distributions, are essential. The matrix variate t (MVT) distribution was discussed by [2], who also studied some of its distributional properties.

Symmetric models often lack flexibility and robustness when dealing with highly asymmetric data. A well-established remedy is to add a tail or skewness parameter to a symmetric distribution. Several formulations have been discussed in the literature in the form of continuous mixtures of normal variables, in which a mixing variable operates on the mean, on the variance, or on both the mean and the variance of a multivariate normal variable. A general formulation that encompasses a large number of existing constructions of this type was presented in [3]. Given a real-valued function and a positive-valued function, a generalized mixture of a p-variate normal distribution is given by

where the equality holds in distribution, U and W are univariate random variables, and all the random components are mutually independent.

The representation in (1) can be extended to the matrix variate case as

where the two matrices represent the location and skewness, respectively, and the random components are mutually independent. It is worth noting that the univariate nature of the two functions simplifies the stochastic representation in (2), leading to more tractable properties for the resulting random matrix and easier parameter estimation. Furthermore, after the matrices are rearranged into vectors (denoted by vec(·)), representation (2) can be written in the form of (1) as

In this work, we introduce a new, simple matrix variate skew-t (MVST) distribution based on (2) and study its finite mixtures (FM-MVST) in detail, for the clustering and classification of asymmetric and heavy-tailed matrix variate data. The simplicity of both the density function and the stochastic representation of the proposed model leads to a convenient strategy for parameter estimation using the expectation–conditional maximization either (ECME; [4]) algorithm, a variant of the EM algorithm [5]. In addition, using simulated and real datasets, we show how the proposed ECME algorithm can be implemented to compute the ML estimates of the parameters of the finite mixture of the proposed model.

The rest of this paper is organized as follows. Section 2 reviews related previous research. Section 3 presents the formulation of the MVST distribution and develops an ECME algorithm for ML estimation of the model parameters. In Section 4, the finite mixture of MVST distributions is defined, and the implementation of the ECME algorithm for fitting the FM-MVST model is presented. The proposed methods are illustrated by two simulation studies in Section 5 and by the analysis of three real datasets in Section 6. Finally, some concluding remarks and possible avenues for future work are outlined in Section 7.

2. Related Studies

To handle highly asymmetric data with more flexibility, the functions in (2) can also be taken to be p-dimensional random variables. This, however, complicates both the density function and parameter estimation. For instance, ref. [6] extended the scale and shape mixtures of multivariate skew normal distributions to the matrix variate setting and studied special cases and their properties. Here, we keep both the proposed model and the associated estimation procedure simple by restricting attention to the univariate case of these functions. Note, however, that the proposed model is distinct from the model presented in [6] and cannot be viewed as a special case of it.

Based on (2), various cases have been introduced. For example, ref. [7] introduced a matrix variate skew-t distribution using the following matrix variate normal variance–mean mixture representation:

where the two matrices represent the location and skewness, respectively, and the mixing variable W follows an inverse gamma distribution. Herein, we refer to the distribution of a random matrix with representation (4) as the MVSTIG distribution; its density function is given by

where

and is the modified Bessel function of the third kind with index x. From (4), some other matrix variate skew distributions can be introduced by assuming different distributions for W. For more details, one may refer to [8].
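To make representation (4) concrete, the following Python sketch draws from the MVSTIG distribution under our assumption that (4) takes the form given in [7], Y = M + W A + √W V, where V is a zero-mean matrix normal variable with row scale matrix Σ and column scale matrix Ψ, and W ~ IG(ν/2, ν/2) is independent of V. The function names `rmatnorm` and `rmvstig` and the symbols M, A, Σ, Ψ and ν are our own notation, not necessarily the paper's.

```python
import numpy as np


def rmatnorm(rng, Sigma, Psi, size):
    """Draw `size` zero-mean matrix normal matrices with row scale Sigma (n x n)
    and column scale Psi (q x q), so that vec(V) ~ N(0, Psi kron Sigma)."""
    A = np.linalg.cholesky(Sigma)          # Sigma = A A^T
    B = np.linalg.cholesky(Psi)            # Psi = B B^T
    Z = rng.standard_normal((size, Sigma.shape[0], Psi.shape[0]))
    return A @ Z @ B.T                     # matmul broadcasts over the first axis


def rmvstig(rng, M, A_skew, Sigma, Psi, nu, size):
    """Sketch of Y = M + W*A + sqrt(W)*V with W ~ InvGamma(nu/2, nu/2) (assumed form of (4))."""
    # If G ~ Gamma(shape=nu/2, rate=nu/2), then W = 1/G ~ InvGamma(nu/2, nu/2).
    G = rng.gamma(shape=nu / 2.0, scale=2.0 / nu, size=size)
    W = 1.0 / G
    V = rmatnorm(rng, Sigma, Psi, size)
    return M + W[:, None, None] * A_skew + np.sqrt(W)[:, None, None] * V
```

Because E(W) = ν/(ν − 2) for ν > 2, the sample mean of many draws should be close to M + ν/(ν − 2) A, which gives a quick sanity check of the sampler.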

Ref. [9] introduced a new family of matrix variate distributions, based on the matrix variate mean mixture of normal (MVMMN) distributions, as

Based on (6), three special cases, namely the restricted matrix variate skew-normal (RMVSN), exponentiated MVMMN (MVMMNE) and mixed-Weibull MVMMN (MVMMNW) distributions, have been studied by taking W to follow half-normal, exponential and Weibull distributions, respectively. Several other skew matrix variate distributions have also been discussed in the literature; see [10,11,12].
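Representation (6) can be sketched in the same way, assuming it is the mean mixture Y = M + W Λ + V of [9], with V a zero-mean matrix normal variable and W independent of V. The half-normal choice corresponds to the RMVSN case; the unit rate of the exponential and the unit scale and shape 2 of the Weibull are illustrative assumptions, as is the hypothetical function name `rmvmmn`.

```python
import numpy as np


def rmvmmn(rng, M, Lam, Sigma, Psi, size, mixing="half-normal", weibull_shape=2.0):
    """Sketch of the mean mixture Y = M + W*Lam + V, with V matrix normal (assumed form of (6))."""
    if mixing == "half-normal":        # RMVSN case
        W = np.abs(rng.standard_normal(size))
    elif mixing == "exponential":      # MVMMNE case (unit rate assumed)
        W = rng.exponential(scale=1.0, size=size)
    elif mixing == "weibull":          # MVMMNW case (unit scale assumed)
        W = rng.weibull(weibull_shape, size=size)
    else:
        raise ValueError(f"unknown mixing distribution: {mixing}")
    A = np.linalg.cholesky(Sigma)      # row scale factor
    B = np.linalg.cholesky(Psi)        # column scale factor
    V = A @ rng.standard_normal((size,) + M.shape) @ B.T
    return M + W[:, None, None] * Lam + V
```

With the unit-rate exponential, E(W) = 1, so the draws should average to M + Λ; with the half-normal, they should average to M + √(2/π) Λ.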

One common statistical challenge faced by researchers is identifying sub-populations or clusters within multivariate data. Recently, researchers have explored the use of finite-mixture models for matrix variate data in applications such as image analysis, genetics and neuroscience. These models offer a flexible framework for capturing complex patterns in the data and can provide insights into the underlying sub-populations or clusters; see [8,13,14,15,16,17,18].

3. Methodology

3.1. The Model

A random matrix is said to have a matrix variate skew-t (MVST) distribution, with a location matrix, two scale matrices, a shape matrix and a flatness parameter, if its probability density function (pdf) is

where denotes all the model parameters, and denotes the cumulative distribution function (cdf) of Student's t distribution with degrees of freedom. The MVST distribution reduces to the RMVSN distribution [9] as the degrees of freedom tend to infinity.

Moreover, the MVST distribution possesses the stochastic representation

where , and . Herein, represents a doubly truncated normal distribution defined in the interval , and denotes the indicator function of set . It is important to highlight that the MVSTIG distribution, represented by (4), utilizes a single mixing random variable. In contrast, the proposed MVST distribution, represented by (8), incorporates two mixing random variables, W and U, which can significantly increase the model’s flexibility.
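The truncated normal distribution in (8) and its first two moments are the building blocks of the E-step quantities that follow. Below is a minimal standard-library sketch of the mean and variance of N(μ, σ²) truncated to (a, b), using the standard closed-form expressions. Infinite bounds are allowed, so a = 0, b = ∞ gives the half-normal-type case. The function names are hypothetical.

```python
import math


def _phi(x):
    """Standard normal pdf; equals 0 at +/- infinity."""
    if math.isinf(x):
        return 0.0
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def _Phi(x):
    """Standard normal cdf via the error function (handles +/- infinity)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def truncnorm_moments(mu, sigma, a=-math.inf, b=math.inf):
    """Mean and variance of N(mu, sigma^2) truncated to the interval (a, b)."""
    alpha = (a - mu) / sigma
    beta = (b - mu) / sigma
    Z = _Phi(beta) - _Phi(alpha)           # probability mass of the interval
    if Z <= 0.0:
        raise ValueError("truncation interval has (numerically) zero probability")
    pa, pb = _phi(alpha), _phi(beta)
    # x * phi(x) -> 0 as x -> +/- infinity, so these terms vanish for infinite bounds.
    apa = 0.0 if math.isinf(alpha) else alpha * pa
    bpb = 0.0 if math.isinf(beta) else beta * pb
    ratio = (pa - pb) / Z
    mean = mu + sigma * ratio
    var = sigma ** 2 * (1.0 + (apa - bpb) / Z - ratio ** 2)
    return mean, var
```

For example, truncating N(0, 1) to (0, ∞) gives mean √(2/π) ≈ 0.798 and variance 1 − 2/π ≈ 0.363.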

From (8), it is easy to show that

where ⊗ is the Kronecker product and denotes the p-variate restricted skew-t distribution (see [19,20]).
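The Kronecker structure of the scale matrix in (9) rests on the identity vec(A Z Bᵀ) = (B ⊗ A) vec(Z), where vec stacks columns. If Σ = A Aᵀ, Ψ = B Bᵀ and Z has i.i.d. standard normal entries, then cov(vec(A Z Bᵀ)) = (B ⊗ A)(B ⊗ A)ᵀ = Ψ ⊗ Σ. The numerical check below uses arbitrary matrices of our own choosing; NumPy's column-major reshape (`order="F"`) plays the role of vec.

```python
import numpy as np


def vec(X):
    """Stack the columns of X into one vector (column-major order)."""
    return X.reshape(-1, order="F")


rng = np.random.default_rng(0)
n, q = 3, 2
A = np.tril(rng.standard_normal((n, n))) + 3 * np.eye(n)   # arbitrary n x n factor
B = np.tril(rng.standard_normal((q, q))) + 3 * np.eye(q)   # arbitrary q x q factor
Z = rng.standard_normal((n, q))

# Identity 1: vec(A Z B^T) = (B kron A) vec(Z)
lhs = vec(A @ Z @ B.T)
rhs = np.kron(B, A) @ vec(Z)
print(np.allclose(lhs, rhs))                        # True

# Identity 2 (mixed-product rule): (B kron A)(B kron A)^T = Psi kron Sigma
Sigma, Psi = A @ A.T, B @ B.T
K = np.kron(B, A)
print(np.allclose(K @ K.T, np.kron(Psi, Sigma)))    # True
```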

The stochastic representation given in (8) not only facilitates random number generation, but also enables the implementation of the EM algorithm for determining the maximum likelihood (ML) estimates of the parameters of the MVST distribution. This leads to the hierarchical representation

where and W are treated as latent variables. Then, has the joint pdf as

where and are the pdfs of and , respectively, and denotes the pdf of the gamma distribution with mean .
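The stochastic representation (8) also makes random number generation straightforward. The sketch below assumes the restricted skew-t construction of the type discussed in [19,20], written in matrix form as Y = M + W^(−1/2)(|U₀| Λ + V), with U₀ ~ N(0, 1), W ~ Gamma(ν/2, rate ν/2) and V a zero-mean matrix normal variable with row scale Σ and column scale Ψ, all independent. Under this construction vec(Y) follows a restricted skew-t law with location vec(M), scale Ψ ⊗ Σ and skewness vec(Λ), which is our reading of (9); the exact parameterization of (8) may differ, and the names `rmvst` and `mvst_mean` are hypothetical.

```python
import math

import numpy as np


def rmvst(rng, M, Lam, Sigma, Psi, nu, size):
    """Sketch: Y = M + W^{-1/2} (|U0| * Lam + V), an assumed restricted skew-t construction."""
    n, q = M.shape
    U = np.abs(rng.standard_normal(size))                      # |U0| ~ TN(0, 1; (0, inf))
    W = rng.gamma(shape=nu / 2.0, scale=2.0 / nu, size=size)   # Gamma(nu/2, rate nu/2)
    A = np.linalg.cholesky(Sigma)
    B = np.linalg.cholesky(Psi)
    V = A @ rng.standard_normal((size, n, q)) @ B.T            # vec(V) ~ N(0, Psi kron Sigma)
    s = 1.0 / np.sqrt(W)
    return M + s[:, None, None] * (U[:, None, None] * Lam + V)


def mvst_mean(M, Lam, nu):
    """Mean implied by the assumed construction (finite for nu > 1)."""
    # E(W^{-1/2}) for W ~ Gamma(nu/2, rate nu/2), and E|U0| = sqrt(2/pi).
    e_w = math.sqrt(nu / 2.0) * math.gamma((nu - 1.0) / 2.0) / math.gamma(nu / 2.0)
    return M + e_w * math.sqrt(2.0 / math.pi) * Lam
```

Comparing the sample mean of many draws with `mvst_mean` is a simple check that the sampler and the assumed representation agree.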

Integrating out W and , respectively, from (10), we obtain the joint pdfs

where , and

where denotes the cdf of the standard normal distribution.

Dividing (10) by (11), we obtain

Additionally, dividing (10) by (12), we obtain

From (7) and (12), it is easy to see that

where

Furthermore, by using (7) and (11), it can be shown that

where represents a doubly truncated t distribution with degrees of freedom defined in the interval . From the conditional density in (15), we find

where

Additionally, using the law of iterated expectations, we can obtain

and

where

3.2. Parameter Estimation via the ECME Algorithm

Suppose constitutes a set of -dimensional observed samples of size N arising from the MVST model. In the EM framework, the latent variables are and . With these, the complete data are given by .

According to (10), the log likelihood function of corresponding to the complete data , excluding additive constants and terms that do not involve parameters of the model, is given by

In the kth iteration, the E step requires the calculation of the so-called Q function, which is the conditional expectation of (21), given the observed data and the current estimate , where the superscript (k) denotes the updated estimates at the kth step of the iterative process. To evaluate the Q function, we then need the following conditional expectations:

which have explicit expressions as given earlier, but also the expectation

which is difficult to evaluate explicitly. We therefore adopt the ECME algorithm, which replaces the conditional maximization of the Q function (CMQ) step for the flatness parameter with a conditional maximization of the actual log likelihood (CML) step, thereby avoiding the need to compute the expectation in (23).

The CMQ steps update the parameter estimates one at a time, in the order given below, by maximizing the Q function obtained in the E step. After some algebraic manipulation, the CMQ and CML steps can be summarized as follows:

- CMQ step 1: Fixing , we update by maximizing (24) with respect to , leading to

- CMQ step 2: Fixing , and , we then update by maximizing (24) over , yielding

- CMQ step 3: Fixing , and , we update by maximizing (24) over , yielding

- CMQ step 4: Fixing , we obtain by maximizing (24) over , yielding
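CMQ steps 2 and 3 update the two scale matrices in turn, each with the other held fixed. To illustrate that alternating structure only, the sketch below implements weighted "flip-flop" updates for a matrix normal model, in which each residual Rᵢ = Yᵢ − M carries a weight wᵢ standing in for an E-step quantity such as a conditional expectation of W. These weighted updates are an assumption for illustration and are not the paper's exact CMQ formulas, which also involve the skewness terms; with all weights equal to 1 they reduce to the classical matrix normal flip-flop algorithm. The function name is hypothetical.

```python
import numpy as np


def flip_flop(R, w=None, n_iter=50, tol=1e-8):
    """Alternating updates of the row scale Sigma (n x n) and column scale Psi (q x q).

    R : array of shape (N, n, q) holding the residual matrices Y_i - M.
    w : optional array of shape (N,) with positive weights (all ones by default).
    """
    N, n, q = R.shape
    w = np.ones(N) if w is None else np.asarray(w, dtype=float)
    Sigma, Psi = np.eye(n), np.eye(q)
    for _ in range(n_iter):
        Psi_inv = np.linalg.inv(Psi)
        # Sigma update with Psi fixed: sum_i w_i R_i Psi^{-1} R_i^T / (N q)
        Sigma_new = np.einsum("i,iab,bc,idc->ad", w, R, Psi_inv, R) / (N * q)
        Sigma_inv = np.linalg.inv(Sigma_new)
        # Psi update with Sigma fixed: sum_i w_i R_i^T Sigma^{-1} R_i / (N n)
        Psi_new = np.einsum("i,iba,bc,icd->ad", w, R, Sigma_inv, R) / (N * n)
        # Only the Kronecker product is identifiable, so monitor it for convergence.
        converged = np.allclose(np.kron(Psi_new, Sigma_new), np.kron(Psi, Sigma), atol=tol)
        Sigma, Psi = Sigma_new, Psi_new
        if converged:
            break
    return Sigma, Psi
```

Convergence is monitored on Ψ ⊗ Σ rather than on the individual matrices because, as discussed under identifiability, only their Kronecker product is determined by the data.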

An update of can be achieved by directly maximizing the constrained actual log likelihood function. This gives rise to the following CML step:

- CML step: Update by optimizing the following constrained log likelihood function:
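The CML step is a one-dimensional maximization of the actual log likelihood over the flatness parameter, with the other parameters held at their current values. The sketch below shows a generic golden-section search on a bounded interval and, for illustration only, applies it to a univariate Student-t log likelihood with known location 0 and scale 1; in the FM-MVST case the objective would instead be the observed-data mixture log likelihood. The search interval, the toy objective and the function names are our assumptions, and golden-section search presumes the objective is unimodal on the interval.

```python
import math
import random


def golden_section_max(f, lo, hi, tol=1e-6, max_iter=200):
    """Maximize a unimodal function f on [lo, hi] by golden-section search."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0          # 1/phi, about 0.618
    a, b = lo, hi
    c = b - invphi * (b - a)
    d = a + invphi * (b - a)
    fc, fd = f(c), f(d)
    for _ in range(max_iter):
        if b - a < tol:
            break
        if fc > fd:                  # maximum lies in [a, d]; old c becomes new d
            b, d, fd = d, c, fc
            c = b - invphi * (b - a)
            fc = f(c)
        else:                        # maximum lies in [c, b]; old d becomes new c
            a, c, fc = c, d, fd
            d = a + invphi * (b - a)
            fd = f(d)
    return (a + b) / 2.0


def t_loglik(nu, x):
    """Log likelihood of Student-t data x (location 0, scale 1) at degrees of freedom nu."""
    const = math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2) - 0.5 * math.log(nu * math.pi)
    return sum(const - (nu + 1) / 2 * math.log1p(xi * xi / nu) for xi in x)


# Usage: simulate t data (t = Z / sqrt(chi2_nu / nu)) and estimate nu.
random.seed(1)
nu_true = 4.0
x = [random.gauss(0.0, 1.0) / math.sqrt(random.gammavariate(nu_true / 2, 2.0) / nu_true)
     for _ in range(5000)]
nu_hat = golden_section_max(lambda v: t_loglik(v, x), 0.5, 100.0)
```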

4. Fitting Finite Mixtures of MVST Distributions

4.1. The Model

We consider N independent random matrices observed from a G-component mixture of MVST distributions, whose pdf is given by

where , and is the set containing all the parameters of the considered mixture model. To pose this mixture model as an incomplete data problem, we introduce allocation variables, where a particular element equals 1 if the corresponding observation belongs to group g and 0 otherwise. The allocation vector of each observation then follows a multinomial distribution with one trial and cell probabilities equal to the mixing proportions. The hierarchical representation in (9), originally designed for a single distribution, can be extended to the mixture modeling framework as follows:

It then follows from the hierarchical structure in (25) that, excluding additive constants, the complete data log likelihood function based on the observed data and the latent data is

Computing the expected value of (26) in the E step, given the current parameter estimate, requires several conditional expectations, including

and

the latter of which is difficult to compute; as in the preceding section, we avoid it by using the CML step. Consequently, the conditional expectation of the complete data log likelihood is obtained as

Thus, the implementation of the ECME algorithm proceeds as follows:

- E step: Given , compute , , and given in (27), for and ;

- CM step 1: Calculate

- CM step 2: Update as

- CM step 3: Update as

- CM step 4: Update as

- CM step 5: Update as

- CML step: Update by optimizing the constrained log likelihood function as
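In the E step, the allocation variables enter through the posterior membership probabilities τᵢg = πg f(Yᵢ; θg) / Σₕ πₕ f(Yᵢ; θₕ), and the standard finite-mixture update of the mixing proportions averages these probabilities over i. The sketch below computes them from per-component log densities with the log-sum-exp trick for numerical stability, accumulates the observed-data log likelihood, and returns the MAP labels used for clustering. Whether CM step 1 takes exactly this form is our assumption; the log densities are treated as given inputs, and all function names are hypothetical.

```python
import math


def e_step(log_dens, pi):
    """Return (tau, loglik), where tau[i][g] is the posterior probability that
    observation i belongs to component g, given log f(Y_i; theta_g) in log_dens[i][g]."""
    tau, loglik = [], 0.0
    for row in log_dens:
        terms = [math.log(p) + ld for p, ld in zip(pi, row)]
        m = max(terms)                                    # shift for log-sum-exp
        log_norm = m + math.log(sum(math.exp(t - m) for t in terms))
        tau.append([math.exp(t - log_norm) for t in terms])
        loglik += log_norm                                # observed-data log likelihood
    return tau, loglik


def update_pi(tau):
    """Mixing proportions as the average posterior membership (standard mixture update)."""
    N, G = len(tau), len(tau[0])
    return [sum(row[g] for row in tau) / N for g in range(G)]


def map_labels(tau):
    """Maximum a posteriori (MAP) label of each observation (first index wins ties)."""
    return [max(range(len(row)), key=row.__getitem__) for row in tau]
```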

4.2. Initialization

Reasonable starting values are important for speeding up convergence. To start the ECME algorithm for fitting the FM-MVST model, an intuitive scheme is to create an initial partition of the data into G components using the K-means algorithm [21,22]. This yields a valid estimate of the allocation variables, which, in turn, yields initial mixing proportions. Then, within each group, we compute the sample mean, the covariance matrix of rows and the covariance matrix of columns as initial estimates for the location matrix and the two scale matrices, as follows:

where the indices j and r refer to the j-th column and r-th row, respectively, of each observation and of the corresponding sample mean. The initial component skewness matrices are filled with values drawn at random from a fixed interval. Finally, we initialize the flatness parameters at a small value, such as 5 or 10.
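The initialization can be sketched as follows: run K-means on the vectorized matrices, then, within each cluster, compute the sample mean matrix and the two scale matrices. We use the common estimators Σ̂ = Σᵢ (Yᵢ − M̂)(Yᵢ − M̂)ᵀ / (N_g q) and Ψ̂ = Σᵢ (Yᵢ − M̂)ᵀ(Yᵢ − M̂) / (N_g n), which average the outer products of the centered columns and rows, respectively; the exact normalizing constants in the paper are not shown here and may differ. The small Lloyd's K-means with farthest-point seeding and the function names are our own choices; in practice a library implementation would normally be used.

```python
import numpy as np


def kmeans(X, G, n_iter=100):
    """Lloyd's algorithm on the rows of X (N x d) with farthest-point seeding."""
    centers = [X[0]]
    for _ in range(1, G):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d2)])        # next seed: point farthest from the seeds
    centers = np.array(centers, dtype=float)
    labels = None
    for _ in range(n_iter):
        dist = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = dist.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break                               # assignments stopped changing
        labels = new_labels
        for g in range(G):
            if np.any(labels == g):             # keep the old center if a cluster empties
                centers[g] = X[labels == g].mean(axis=0)
    return labels


def initial_estimates(Y, labels, G):
    """Per-cluster mixing proportion, mean matrix, n x n and q x q scale matrices."""
    N, n, q = Y.shape
    out = []
    for g in range(G):
        Yg = Y[labels == g]
        Ng = Yg.shape[0]
        M = Yg.mean(axis=0)
        R = Yg - M
        Sigma = np.einsum("iab,icb->ac", R, R) / (Ng * q)   # sum_i R_i R_i^T
        Psi = np.einsum("iba,ibc->ac", R, R) / (Ng * n)     # sum_i R_i^T R_i
        out.append({"pi": Ng / N, "M": M, "Sigma": Sigma, "Psi": Psi})
    return out
```

The skewness matrices and flatness parameters would then be initialized separately, as described above.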

4.3. Identifiability

Model identifiability is key to obtaining unique and consistent parameter estimates. For mixtures of MVST distributions, the estimates of the two scale matrices are unique only up to a strictly positive multiplicative constant: multiplying one of them by c > 0 and dividing the other by c leaves their Kronecker product, and hence the likelihood, unchanged. To resolve this issue, a constraint must be imposed, such as setting the trace of one scale matrix equal to n [13] or the alternative constraint used in [23]. Herein, we set the first diagonal element of one scale matrix to 1 [8]. This scaling can be applied at each iteration or at convergence, and either choice has minimal impact on the final estimates and classifications. To obtain the final parameter estimates, the constrained scale matrix is divided by its first diagonal element, and the other scale matrix is multiplied by that same element.
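A minimal sketch of this rescaling follows. Because (cB) ⊗ (A/c) = B ⊗ A for any c > 0, the step leaves the likelihood unchanged. Which of the two scale matrices carries the constraint is not recoverable from this text, so the argument names are generic and the function name is hypothetical: the matrix passed first is the one normalized to have (1,1) element equal to 1.

```python
import numpy as np


def rescale_pair(A, B):
    """Divide A by its (1,1) element and multiply B by the same factor."""
    c = A[0, 0]
    if c <= 0:
        raise ValueError("the (1,1) element of a scale matrix must be positive")
    return A / c, B * c


A = np.array([[4.0, 1.0], [1.0, 3.0]])
B = np.array([[2.0, 0.5, 0.0], [0.5, 1.0, 0.2], [0.0, 0.2, 1.0]])
A2, B2 = rescale_pair(A, B)
print(A2[0, 0])                                     # 1.0
print(np.allclose(np.kron(B2, A2), np.kron(B, A)))  # True: Kronecker product unchanged
```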

5. Empirical Study

5.1. Finite-Sample Properties of ML Estimators

Here, we conducted a simulation study to examine the accuracy of the parameter estimates obtained with the proposed ECME algorithm. We generated 500 Monte Carlo samples of sizes , 500, 1000 and 2000 from the two-component FM-MVST model under the two scenarios (low- and moderate-dimensional) described in Appendix A. Scenario I used 3 × 4 matrices (3 rows and 4 columns, 12 elements per observation), whereas Scenario II used 10 × 2 matrices (10 rows and 2 columns, 20 elements per observation). The larger number of elements in Scenario II allowed us to examine how accurately the proposed algorithm recovers the true parameters in a moderate-dimensional setting.

The accuracy of the parameter estimates was assessed by the average root mean squared error (RMSE) over the elements of each estimated parameter. The results in Table 1 indicate that the proposed estimation method performs well: in both scenarios, the RMSE values decreased toward zero as the sample size increased, as expected for consistent ML estimators obtained by the proposed ECME algorithm.
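Under our reading that the RMSE is computed element-wise across the Monte Carlo replications and then averaged over the elements of a parameter matrix, the summary can be sketched as below; the exact definition used for Table 1 is not given in this text, and the function name is hypothetical.

```python
import numpy as np


def average_rmse(estimates, truth):
    """estimates: array of shape (R, ...) with R replicated estimates; truth: shape (...)."""
    est = np.asarray(estimates, dtype=float)
    err2 = (est - np.asarray(truth, dtype=float)) ** 2
    rmse_per_element = np.sqrt(err2.mean(axis=0))   # RMSE of each element over replications
    return float(rmse_per_element.mean())           # average over the elements
```

In a mixture setting, the component labels of each replication must first be aligned with those of the true model, because of label switching.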

Table 1.

Average RMSE based on 500 replications for the evaluation of ML estimates.

5.2. Comparison of Classification Accuracy

To examine the classification accuracy of the FM-MVST model, we generated samples of 1000 observations from each of the scenarios given in Appendix A. In each scenario, we compared the FM-MVST model described in Section 4 with finite mixtures of matrix variate normal (FM-MVN) and matrix variate t (FM-MVT) distributions, which are available in the R package MixMatrix. We also implemented the EM algorithm described in [8] to fit finite mixtures of MVSTIG (FM-MVSTIG) distributions. Additionally, finite mixtures of RMVSN (FM-RMVSN) distributions were fitted as a sub-model of the FM-MVST model.

Model performance was assessed by comparing the classification accuracy and model selection criteria of all the fitted models. For classification accuracy, we report the adjusted Rand index (ARI; [24]), which equals 1 when the two partitions match perfectly, and the misclassification rate (MCR) of the maximum a posteriori (MAP) clustering for each model. ARI measures the agreement between two partitions of the data after correcting for chance, whereas MCR is the proportion of misclassified observations and thus offers a more direct assessment of classification performance. We also report the Bayesian information criterion (BIC; [25]) as a model selection criterion. BIC balances goodness of fit against model complexity, penalizing the number of parameters to guard against overfitting, which is particularly relevant when comparing clustering models.
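The three summaries can be sketched in plain Python as follows. The ARI uses the Hubert and Arabie formula [24]. The MCR is taken as the smallest error rate over one-to-one relabelings of the estimated clusters. BIC is written in the "smaller is better" form −2ℓ + m log N; the paper's sign convention is not shown here, so this choice is an assumption. The parameter count for FM-MVST is also our assumption: per component, the location and skewness matrices (nq each), two symmetric scale matrices minus one identifiability constraint, and one flatness parameter, plus G − 1 mixing proportions. All function names are hypothetical.

```python
import math
from collections import Counter
from itertools import permutations


def comb2(k):
    """Number of unordered pairs among k items."""
    return k * (k - 1) / 2.0


def adjusted_rand_index(a, b):
    """Hubert-Arabie adjusted Rand index between two labelings of the same items."""
    n = len(a)
    sum_ij = sum(comb2(v) for v in Counter(zip(a, b)).values())
    sum_a = sum(comb2(v) for v in Counter(a).values())
    sum_b = sum(comb2(v) for v in Counter(b).values())
    expected = sum_a * sum_b / comb2(n)
    max_index = 0.5 * (sum_a + sum_b)
    if max_index == expected:        # degenerate case, e.g. both labelings trivial
        return 1.0
    return (sum_ij - expected) / (max_index - expected)


def misclassification_rate(true, pred):
    """Smallest error rate over one-to-one relabelings of the predicted clusters."""
    true_labels, pred_labels = sorted(set(true)), sorted(set(pred))
    if len(pred_labels) > len(true_labels):
        raise ValueError("more predicted clusters than true classes")
    best = len(true)
    for perm in permutations(true_labels, len(pred_labels)):
        mapping = dict(zip(pred_labels, perm))
        best = min(best, sum(mapping[p] != t for p, t in zip(pred, true)))
    return best / len(true)


def bic(loglik, n_params, n_obs):
    """BIC in the 'smaller is better' convention: -2 loglik + m log N."""
    return -2.0 * loglik + n_params * math.log(n_obs)


def n_params_fm_mvst(n, q, G):
    """Assumed free-parameter count for a G-component FM-MVST with n x q observations."""
    per_comp = 2 * n * q + n * (n + 1) // 2 + q * (q + 1) // 2 - 1 + 1
    return G * per_comp + (G - 1)
```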

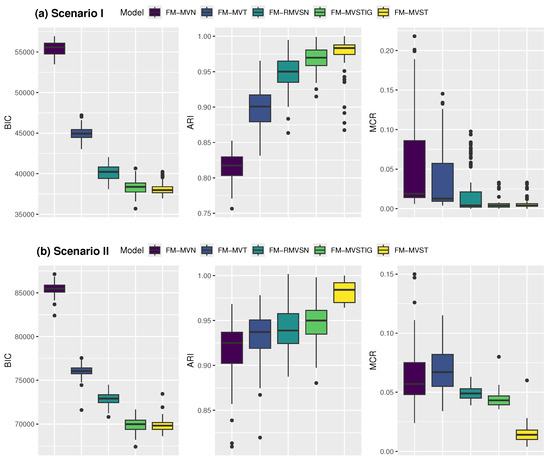

We ran 100 replications for each scenario and computed the classification accuracy and model selection criteria for each replication. Table 2 presents the average BIC, ARI and MCR values along with their standard errors (Std), and the results are illustrated by the box plots in Figure 1. As expected, the model selection criteria favored the true model from which the data were generated. This result was consistent across replications, which supports the reliability of these criteria in practical applications.

Table 2.

Simulation results, based on 100 replications, for performance comparison of five mixture models in two scenarios.

Figure 1.

Box plots of BIC, ARI and MCR values for the competing models in two scenarios: (a) Scenario I and (b) Scenario II.

6. Real Data Analysis

In this section, we illustrate the results of applying the proposed methodology to three well-known real datasets.

6.1. Landsat Data

The first application concerned the Landsat data (LSD), originally obtained by NASA and available at the UCI Machine Learning Repository (http://archive.ics.uci.edu/ml, accessed on 1 September 2024). Multi-spectral satellite imagery provides multiple observations over a spatial grid, resulting in matrix-valued observations. Each line of the LSD consists of four spectral values for each of the nine pixels in a 3 × 3 neighborhood of a satellite image, so each line corresponds to an observation matrix of four spectral values by nine pixels. Every observation matrix in the LSD is classified into one of six categories: red soil, cotton crop, gray soil, damp gray soil, soil with vegetation stubble and very damp gray soil. For our analysis, we concentrated on three categories, red soil, gray soil and soil with vegetation stubble, with sizes of 461, 397 and 237, respectively.

Table 3 presents a summary of the ML fitting results, including the maximized log likelihood values, BIC, ARI and MCR of the five fitted models. The log likelihood value for the FM-MVN model was lower than that for the FM-MVT model, indicating a poorer fit. The skewed models (FM-MVST, FM-MVSTIG and FM-RMVSN), in turn, outperformed their symmetric counterparts, and the FM-MVST model performed best overall. The estimated tailedness parameters were , and , indicating that the matrix observations exhibit long-tailed behavior.

Table 3.

Summary results from fitting various models to the LSD data.

6.2. Apes Data

The second application considered the apes dataset included in the shapes R package [26]. The description of the dataset, taken from [27], is as follows. In an investigation to assess the cranial differences between the sexes of apes, 29 male and 30 female adult gorillas (Gorilla), 28 male and 26 female adult chimpanzees (Pan) and 30 male and 24 female adult orangutans (Pongo) were studied. Eight landmarks were chosen in the midline plane of each skull. These were anatomical landmarks located by an expert biologist. The dataset is stored as a list with two components: an array of the coordinates of the eight landmarks in two dimensions for each skull (an 8 × 2 observation matrix for each of the N = 167 skulls), and a vector of group labels.

All the competing models were fitted with G = 6 components, and their fitting results are reported in Table 4. The FM-MVN model clearly provided the worst fit, whereas FM-MVST was the best model. As in the previous analysis, this may indicate that the components of FM-MVN were not sufficiently skewed and heavy-tailed to model the data adequately. The estimated tailedness parameters were , , , , and , highlighting the presence of clusters with markedly heavy-tailed behavior.

Table 4.

Summary results from fitting various models to the apes data.

6.3. Melanoma Data

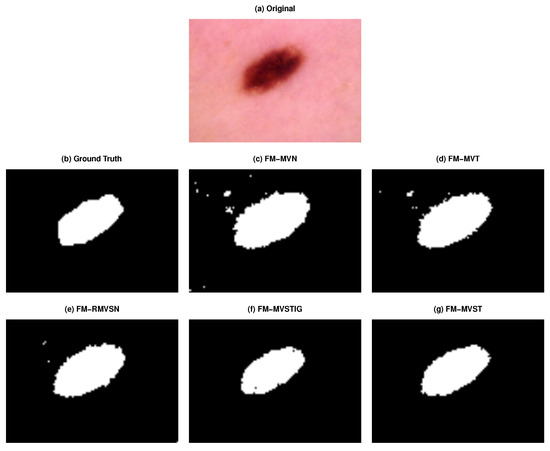

The performance of the FM-MVST model in skin cancer detection was demonstrated in the third and final application. The objective of the skin cancer detection project was to develop a framework for analyzing and assessing the risk of melanoma, using dermatological photographs taken with a standard consumer-grade camera. Segmentation of the lesion is a crucial step for developing a skin cancer detection framework. The objective, then, was to find the border of the skin lesion. It was important that this step was performed accurately, because many features used to assess the risk of melanoma are derived based on the lesion border. The set of images included images extracted from the public databases DermIS and DermQuest, along with manual segmentations (ground truth) of the lesions, available at https://uwaterloo.ca/vision-image-processing-lab/research-demos/skin-cancer-detection, accessed on 1 September 2024.

A skin image in pixels is displayed in Figure 2a. The next objective was to segment the image into two classes (lesion and background). For each pixel, we considered the three RGB intensities (red, green and blue) together with a grayscale intensity and arranged these four values as a matrix. Treating each pixel as a matrix observation, we then grouped the pixels into two clusters, each assumed to follow a different distribution.
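The grayscale formula and the matrix arrangement used for each pixel are not recoverable from this text. As a sketch only, assuming the ITU-R BT.601 luma weights (0.299, 0.587, 0.114) for the grayscale intensity and a 2 × 2 arrangement [[R, G], [B, gray]], the per-pixel observation matrices could be built as follows; the function name is hypothetical.

```python
import numpy as np


def pixel_matrices(rgb):
    """Turn an (H, W, 3) RGB image into an (H*W, 2, 2) stack of per-pixel matrices.

    Assumptions: gray = 0.299 R + 0.587 G + 0.114 B (ITU-R BT.601 luma), and each
    pixel is arranged as [[R, G], [B, gray]]."""
    rgb = np.asarray(rgb, dtype=float)
    gray = rgb @ np.array([0.299, 0.587, 0.114])              # (H, W)
    feats = np.concatenate([rgb, gray[..., None]], axis=-1)   # (H, W, 4): R, G, B, gray
    return feats.reshape(-1, 2, 2)                            # row-major 2 x 2 per pixel
```

The resulting stack can be passed directly to a two-component matrix variate mixture, and the MAP labels reshaped back to H × W to obtain the segmentation mask.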

Figure 2.

Segmentation of lesion: (a) original, (b) ground truth, and (c–g) segmented images obtained using different models.

Table 5 shows that the FM-MVST model provided the best fit in terms of BIC, as well as the lowest misclassification rate for the binary classification of the pixels. The estimates of the tailedness parameters were and , indicating that heavy-tailed t distributions are appropriate. The advantage of the FM-MVST model is also visible in Figure 2c–g, which shows the segmentations produced by the fitted models in grayscale: the models differ in how they identify the lesion area, and the proposed model yields a clearer boundary and a more consistent lesion region.

Table 5.

Summary results from fitting various models to the melanoma data.

Notably, all the model selection criteria applied to the three datasets strongly favored the proposed FM-MVST model. Because the datasets differ considerably in dimension and structure, this underscores the flexibility of the FM-MVST model, which captured the skewness and leptokurtosis present in the data better than the alternative models. Its adaptability across these diverse datasets suggests that it can be a useful tool for data analysis in a range of applications.

7. Concluding Remarks

We have introduced a new family of matrix variate distributions that can capture skewness and heavy tails simultaneously. The MVST model is based on a stochastic representation that enabled us to develop an ECME algorithm for the maximum likelihood estimation of the model parameters. We evaluated the effectiveness and efficiency of the proposed algorithm through two simulation studies. Additionally, we used the proposed approach to analyze three real datasets, demonstrating its capability in modeling asymmetric matrix variate data. Future developments of this approach could include accommodating censored data, determining the optimal number of mixture components for the clustering problem and using different distributions for the variables W and U in the stochastic representation. Another interesting extension would be to incorporate the FM-MVST distribution into a mixture-of-regressions framework. We are currently investigating these problems and hope to report our findings in a future paper.

Author Contributions

Conceptualization, A.J.; Methodology, A.M. and A.J.; Software, A.M.; Investigation, A.J.; Writing—original draft, A.M.; Writing—review & editing, N.B.; Supervision, N.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

The parameters used to generate the data in Sections 5.1 and 5.2 are given in the following table. Here, 1_p denotes the vector of length p with all entries equal to 1, and I_p denotes the p-dimensional identity matrix.

Table A1.

The parameters used in the generation of data (scenarios I and II).


| Scenario | Parameter | Component 1 | Component 2 |
|---|---|---|---|
| I | Mixing proportion | 0.3 | 0.7 |
| I | Flatness parameter | 3 | 5 |
| II | Mixing proportion | 0.4 | 0.6 |
| II | Flatness parameter | 4 | 4 |

References

- Kroonenberg, P.M. Applied Multiway Data Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2008.

- Dickey, J.M. Matricvariate generalizations of the multivariate t distribution and the inverted multivariate t distribution. Ann. Math. Stat. 1967, 38, 511–518.

- Arellano-Valle, R.B.; Azzalini, A. A formulation for continuous mixtures of multivariate normal distributions. J. Multivar. Anal. 2021, 185, 104780.

- Liu, C.; Rubin, D.B. The ECME algorithm: A simple extension of EM and ECM with faster monotone convergence. Biometrika 1994, 81, 633–648.

- Dempster, A.P.; Laird, N.M.; Rubin, D.B. Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc. Ser. B (Methodol.) 1977, 39, 1–22.

- Rezaei, A.; Yousefzadeh, F.; Arellano-Valle, R.B. Scale and shape mixtures of matrix variate extended skew normal distributions. J. Multivar. Anal. 2020, 179, 104649.

- Gallaugher, M.P.; McNicholas, P.D. A matrix variate skew-t distribution. Stat 2017, 6, 160–170.

- Gallaugher, M.P.; McNicholas, P.D. Finite mixtures of skewed matrix variate distributions. Pattern Recognit. 2018, 80, 83–93.

- Naderi, M.; Bekker, A.; Arashi, M.; Jamalizadeh, A. A theoretical framework for Landsat data modeling based on the matrix variate mean-mixture of normal model. PLoS ONE 2020, 15, e0230773.

- Chen, J.T.; Gupta, A.K. Matrix variate skew normal distributions. Statistics 2005, 39, 247–253.

- Domínguez-Molina, J.A.; González-Farías, G.; Ramos-Quiroga, R.; Gupta, A.K. A matrix variate closed skew-normal distribution with applications to stochastic frontier analysis. Commun. Stat. Theory Methods 2007, 36, 1691–1703.

- Zhang, L.; Bandyopadhyay, D. A graphical model for skewed matrix-variate non-randomly missing data. Biostatistics 2020, 21, e80–e97.

- Viroli, C. Finite mixtures of matrix normal distributions for classifying three-way data. Stat. Comput. 2011, 21, 511–522.

- Thompson, G.Z.; Maitra, R.; Meeker, W.Q.; Bastawros, A.F. Classification with the matrix-variate-t distribution. J. Comput. Graph. Stat. 2020, 29, 668–674.

- Tomarchio, S.D.; Punzo, A.; Bagnato, L. Two new matrix-variate distributions with application in model-based clustering. Comput. Stat. Data Anal. 2020, 152, 107050.

- Tomarchio, S.D.; Gallaugher, M.P.; Punzo, A.; McNicholas, P.D. Mixtures of matrix-variate contaminated normal distributions. J. Comput. Graph. Stat. 2022, 31, 413–421.

- Tomarchio, S.D. Matrix-variate normal mean-variance Birnbaum–Saunders distributions and related mixture models. Comput. Stat. 2024, 39, 405–432.

- Naderi, M.; Tamandi, M.; Mirfarah, E.; Wang, W.L.; Lin, T.I. Three-way data clustering based on the mean-mixture of matrix-variate normal distributions. Comput. Stat. Data Anal. 2024, 199, 108016.

- Lin, T.I.; Wu, P.H.; McLachlan, G.J.; Lee, S.X. A robust factor analysis model using the restricted skew-t distribution. Test 2015, 24, 510–531.

- Lee, S.X.; McLachlan, G.J. Finite mixtures of canonical fundamental skew t-distributions: The unification of the restricted and unrestricted skew t-mixture models. Stat. Comput. 2016, 26, 573–589.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Davis, CA, USA, 21 June–18 July 1965; University of California Press: Berkeley, CA, USA, 1967.

- Lloyd, S. Least squares quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137.

- Sarkar, S.; Zhu, X.; Melnykov, V.; Ingrassia, S. On parsimonious models for modeling matrix data. Comput. Stat. Data Anal. 2020, 142, 106822.

- Hubert, L.; Arabie, P. Comparing partitions. J. Classif. 1985, 2, 193–218.

- Schwarz, G. Estimating the dimension of a model. Ann. Stat. 1978, 6, 461–464.

- Dryden, I.L. shapes Package; Version 1.2.6; Contributed package; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Dryden, I.; Mardia, K. Statistical Shape Analysis: With Applications in R; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 2016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).