Abstract

This paper investigates the properties of the change in persistence detection for observations with infinite variance. The innovations are assumed to be in the domain of attraction of a stable law with index . We provide a new test statistic and show that its asymptotic distribution under the null hypothesis of non-stationary series is a functional of a stable process. When the change point in persistence is not known, the consistency is always given under the alternative, either from stationary to non-stationary or vice versa. The proposed tests can be used to identify the direction of change and do not over-reject against constant series, even in relatively small samples. Furthermore, we also consider the change point estimator which is consistent and the asymptotic behavior of the test statistic in the case of near-integrated time series. A block bootstrap method is suggested to determine critical values because the null asymptotic distribution contains the unknown tail index, which results in critical values depending on it. The simulation study demonstrates that the block bootstrap-based test is robust against change in persistence for heavy-tailed series with infinite variance. Finally, we apply our methods to the two series of the US inflation rate and USD/CNY exchange rate, and find significant evidence for persistence changes, respectively, from to and from to .

Keywords:

persistence change; infinite variance; near-integrated time series; change point estimator; block bootstrap

MSC:

62F03; 62F05

1. Introduction

There is growing evidence to show that the parameters of autoregressive models fitted to many economic, financial, and hydrological time series are not fixed across time; see Busetti [1], Chen [2] and Belaire [3]. Being able to correctly characterize a time series into its separate stationary and non-stationary components has important implications for effective model building and accurate forecasting in economics and finance, especially concerning government budget deficits and inflation rates (e.g., Sibbertsen [4]). A number of testing procedures were proposed depending on whether the initial regime is or . Kim [5] proposed regression-based ratio tests of the constant null against the alternative of a single change in persistence, either from to or vice versa. Leybourne [6] discussed testing for the null hypothesis that the series is throughout the sample versus the alternative that it switches from to and vice versa. When the direction of change is unknown, Leybourne [7] considered standardized cumulative sums of squared subsample residuals that are used to identify the direction of change and do not spuriously reject when the series is a constant process. Since then, there have been further studies on the persistence change. For example, Cerqueti [8] presented panel stationary tests against changes in persistence, and Kejriwal [9] provided a bootstrap test for multiple persistence changes in a heteroskedastic time series. For more research on persistence change, we refer to Jin [10], Jin [11], Wingert [12], and Grote [13].

The above tests are designed to detect a change in persistence with finite variance and do not consider heavy-tailed series with infinite variance. However, Mittnik [14] argued that many types of data in economics and finance are heavy-tailed. It is of greater practical significance to test for the change point with heavy-tailed observations. Therefore, many scholars have paid more attention to the detection of change in persistence under heavy-tailed time series models. In the case of the process null hypothesis, Han [15] used the ratio test statistic to consider the change-point detection with heavy-tailed innovations and proved its consistency in the presence of a persistence change. For the problem of the null hypothesis of the process and the alternative hypothesis involving a change in persistence switching from to , Qin [16] applied a Dickey–Fuller-type ratio test statistic to infinite variance observations. Related to online monitoring issues, Chen [17] adopted a kernel-weighted quasi-variance test to monitor the persistence change in heavy-tailed series. For more persistence change estimation and detection in heavy-tailed sequences with infinite variance, see Yang [18], Jin [19], Jin [20], and Wang [21].

However, the existing test methods on heavy-tailed sequences consider the tail index just belonging to , without , and suppose beforehand that the direction of persistence change is known. In this paper, we propose a new test statistic to test the null hypothesis against the alternative of a change in persistence, either or . The innovations are assumed to be stationary time series with heavy-tailed univariate marginal distributions, which are in the domain of attraction of -stable law with . We take into account two types of time series models, a pure AR(1) model and an AR(1) model with a constant or a constant plus linear time trend. Recently, the asymptotic inference for a least squares (LS) estimate when the autoregressive parameter is close to 1 (i.e., the series is nearly non-stationary) has been receiving considerable attention in the statistics and econometric literature, such as Chan [22] and Cheng [23]. Therefore, we are also interested in deriving the asymptotic behavior of the proposed test in the context of the near-unit root. The critical values of the asymptotic distribution depend on the unknown tail index . To solve this problem, a block bootstrap approximation method suggested by Paparoditis [24] is used to determine the critical values, and then its validity is proved. The performance of the bootstrap-based test in small samples is evaluated via an extensive Monte Carlo study. Finally, the feasibility of our proposed test procedures is illustrated through empirical analysis.

Although the main results of this paper bear some formal analogy with Leybourne [7], it offers a number of important new implications. First, it extends the work of persistence change detection with heavy-tailed sequences to the case wherein the innovation process is in the domain of attraction of a stable law with index . Thus, we can perform the examination of structural change in persistence even if the mean of real data does not exist. Second, under the circumstances of infinite variance, both for non-stationary and nearly non-stationary series, the order of is T. This is somewhat intriguing as this order was originally motivated by the consideration of the magnitude of the observed Fisher’s information number in the finite variance case, as can be seen in Chan [25]. Third, as is well known, Kim [5] proved the ratio type test statistic diverges at the rate of , but it can only be applied to the alternative hypothesis involving a persistence change, . The Dickey–Fuller type ratio test proposed by Leybourne [26] correctly rejects the null of no persistence change, and the tail in which the rejection occurs can also be used to identify the direction of change. The deficiency cannot be ignored since the divergence rate is less than . However, it is satisfying that the divergence rate of our proposed test statistic can reach . In addition, we do not need to assume that the direction of any possible change is known and the test almost never rejects in the left (right) tail when there is a change from to [–].

This paper is organized as follows. Section 2 introduces the model, some necessary assumptions, and the test statistic. Section 3 details the large sample properties of the tests under both the constant persistence model of the non-stationary and the persistence change models. Moreover, the asymptotic distributions under and the near-unit root are given, and consistent change-point proportion estimators under an alternative hypothesis are also established. The algorithm of the block bootstrap is presented in Section 4. Monte Carlo simulations are presented in Section 5 to assess the validity of our proposed test procedures in finite samples. Section 6 applies our procedures to two time-series data sets. We conclude the paper in Section 7. All proofs of the theoretical results are gathered in Appendix A.

2. Materials and Methods

As a model for a change in persistence, we adopt the following data-generating process (DGP):

where are unknown parameters and p is an integer greater than zero. is a p-order autoregressive sequence, and innovation lies in the domain of attraction of a stable law that is taken to satisfy the following, quite general, dependent process assumption.

Assumption 1.

(i) is an independent and identically distributed sequence. (ii) Assume that all the characteristic roots of lie outside the unit circle. (iii) is in the domain of attraction of a stable law of order and we have if and has a distribution symmetric about 0 if . The normal distribution corresponds to .

Remark 1.

Under Assumption 1, Phillips [27] has already proved that the scaled partial sums admit a functional central limit theorem, viz., , where and the random variable is a Lévy process. Similarly, from the studies by Ibragimov [28] and Resnick [29], it can be concluded that , where the random variable is a κ-stable process. The exact definition of a κ-stable process appearing in the following is not necessarily known, but the quantities can be represented as for some slowly varying function .

Within Model 1, the sequence is a process if , while it is a process if . We consider four possible scenarios. The first of these is that is throughout the sample period; that is, . We denote this . The second, denoted by , is that displays a change in persistence from to behavior at time , where denotes the integer part of its argument; that is, for and for in the context of Model 1 or Model 2. The third, denoted , is that is changing to at time . In contrast to the second case, it is for and for . The final possibility is that is throughout; that is, , , . We denote this . Here, the change-point proportion is assumed to be in , an interval in , which is symmetric around 0.5. Without loss of generality, we can make and .
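To make the four scenarios concrete, the following minimal sketch simulates a series whose autoregressive coefficient switches at the integer part of the change-point proportion times T. It is an illustration under stated assumptions rather than the DGP used in this paper: it uses a pure AR(1) recursion without deterministic terms, symmetric κ-stable innovations drawn with the Chambers–Mallows–Stuck method, and the hypothetical names `symmetric_stable` and `simulate_ar1_switch`.

```python
import math
import random


def symmetric_stable(kappa, rng):
    """Draw one symmetric kappa-stable variate (Chambers-Mallows-Stuck), 0 < kappa <= 2."""
    u = rng.uniform(-math.pi / 2, math.pi / 2)
    w = rng.expovariate(1.0)
    if kappa == 1.0:
        return math.tan(u)  # Cauchy case
    return (math.sin(kappa * u) / math.cos(u) ** (1.0 / kappa)
            * (math.cos((1.0 - kappa) * u) / w) ** ((1.0 - kappa) / kappa))


def simulate_ar1_switch(T, tau, rho_before, rho_after, kappa, seed=0):
    """Simulate y_t = rho_t * y_{t-1} + eps_t with a coefficient switch at int(tau * T).

    Assumption: y_0 = 0 and no constant or trend (a simplified stand-in for Model 1).
    """
    rng = random.Random(seed)
    k = int(tau * T)  # last observation of the first regime
    y, prev = [], 0.0
    for t in range(1, T + 1):
        rho = rho_before if t <= k else rho_after
        prev = rho * prev + symmetric_stable(kappa, rng)
        y.append(prev)
    return y


# Example: stationary regime (rho = 0.5) followed by a unit root regime (rho = 1).
series = simulate_ar1_switch(T=300, tau=0.5, rho_before=0.5, rho_after=1.0, kappa=1.5)
```

Setting `rho_before` and `rho_after` to the same value gives the two constant-persistence scenarios, and swapping them gives the opposite direction of change.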

In practice, because both the location and direction of the change-point proportion are unknown in advance, we follow the approach of Leybourne [26], who considers a (two-tailed) test which rejects for large or small values of the statistic formed from the minimized Dickey–Fuller ratio statistic. In order to improve the performance of testing the null hypothesis against the alternative hypothesis or , the modified ratio test statistic is defined by

where , are LS estimates based on and , respectively, and . For convenience, let for , for .

In the next section, we provide representations for the asymptotic distribution of the statistic under the constant null and prove that a test that rejects the large (small) values of is consistent under (). As a by-product of this, a consistent estimator of is provided. Furthermore, it is shown that the asymptotic distribution of under or the near-integrated time series degenerates and this renders the test conservative against the constant process or near-integrated time series.

3. Results

We will establish the asymptotic null distribution of the proposed test. Throughout, we use ‘→’ to denote the weak convergence of the associated probability measures, and use to denote a stable process with the tail index .

Theorem 1.

Suppose that is generated by Model 1 under and let Assumption 1 hold. Then, provided that , we have

where

Furthermore, if we are interested in the model with a constant or a constant plus linear time trend, the following data-generating process is suggested:

In Model 2, the deterministic kernel is either a constant or a constant plus linear time trend , where and . is a p-order autoregressive process as defined in Model 1. Similarly, satisfies Assumption 1, while there is an additional restriction on the tail index . This is because the LS estimates of and are expressed as and , whose consistency is destroyed if , resulting in the loss of validity of the subsequent block bootstrap method. Through some algebraic calculation, we can derive the following results under Model 2.

Lemma 1.

Suppose that is generated by Model 2 under and let Assumption 1 hold. Let the superscript denote the de-meaned , and the de-meaned and de-trended cases, respectively. Then, provided that ,

where and are defined in Appendix A.

Remark 2.

Theorem 1 and Lemma 1 indicate that, under , although the asymptotic distributions are functionals of the κ-stable process, they take a different form in each model. Moreover, as shown in (2) and (3), the explicit form of the asymptotic distribution is complicated and non-standard, and depends on the unknown tail index κ. Therefore, the block bootstrap method is used to determine the critical values, which will be introduced in Section 4 below.

In Theorem 2, we detail the large sample behavior of test under the persistence change alternative hypotheses and and give consistent estimators of . The results stated hold for both the Model 1 and Model 2 cases.

Theorem 2.

Let be generated by Model 1 or Model 2 and Assumption 1 hold. Then,

(i) Under , we have and ;

(ii) Under , we have and ,

where signifies convergence in probability.

Remark 3.

The results in Theorem 2 imply that a consistent test of against () is obtained from the upper-tail (lower-tail) distribution of test Ξ. A rejection in the upper (lower) tail is indicative of a change from to (from to ) because Ξ is consistent at the rate of under and tends towards 0 with the rate under . This means that the tail that the test rejects will also correctly identify the true direction of change. Therefore, even if the direction of change is unknown, as will typically be the case in practice, it is clear from Theorem 2 that Ξ can also be employed as a two-tailed persistence change test. In addition, the modified numerator (denominator) of test Ξ yields a consistent estimator of the change-point fraction .
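The two-tailed use of Ξ described in Remark 3 amounts to a simple decision rule. The sketch below is illustrative only: the function name `two_tailed_decision` and the returned labels are hypothetical, and the critical values are assumed to be supplied by the user (for instance, from a bootstrap approximation).

```python
def two_tailed_decision(stat, lower_cv, upper_cv):
    """Classify a test statistic against lower- and upper-tail critical values.

    The tail in which the rejection occurs indicates the direction of change;
    which direction each tail corresponds to follows from Theorem 2.
    """
    if lower_cv > upper_cv:
        raise ValueError("lower_cv must not exceed upper_cv")
    if stat > upper_cv:
        return "reject: upper tail"
    if stat < lower_cv:
        return "reject: lower tail"
    return "do not reject"
```

A value between the two critical values leaves the constant-persistence null unrejected, which is also the expected outcome for a series that is stationary throughout, since the statistic then stays between the two tails.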

In Theorem 3, we now establish the behavior of test under the constant process ; again, this result applies to both Model 1 and Model 2 cases.

Theorem 3.

Let be generated by Model 1 or Model 2 and Assumption 1 hold. Then, under , we obtain .

Remark 4.

The straightforward result is that the test Ξ will be conservative when run at conventional significance levels (say, the level or smaller) and will never reject under in large samples. That is because, under , the relevant critical values are lower (in the left-hand tail) and higher (in the right-hand tail) than the value 1.

In this paper, we need to consider the case that , where is a real number, that is, the sequence is the near-unit root process. For a Gaussian nearly non-stationary AR(1) model, it is shown that the asymptotic distribution of the LS estimate of obtained by Chan [30] can be expressed as a functional of the Ornstein–Uhlenbeck process. Based on the above research, the following Theorem 4 gives the asymptotic distribution of under the heavy-tailed near unit root.

Theorem 4.

Let be generated by Model 1 and Assumption 1 hold. Then, under , there is

where

and satisfies , .

Similarly, the asymptotic distribution under Model 2 with can also be directly obtained.

Lemma 2.

Suppose is generated by Model 2 under and let Assumption 1 hold. Considering only the case with , we have

where and are defined in Appendix A.

Remark 5.

Note that, when , Theorem 4 is the result of Theorem 1; thus, Theorem 4 provides an asymptotic result for the infinite variance near-integrated AR(1) model. It can be seen that the asymptotic distribution is not only related to the κ-stable process, but also to parameter γ. Likewise, the conclusion of Lemma 2 is equal to that of Lemma 1 when . It should be noted that Lemma 2 only gives the asymptotic distribution for the case of . For , it is similar to Lemma 1, and is not described in detail here. In Section 5, we will give the rejection rates of the proposed test statistic under different γ to verify that our statistic is conservative in the case of the near-unit root and will not accept the assumption of persistence change.

4. Block Bootstrap Approximation

The key implication of Theorem 1 and Lemma 1 is that, under a heavy-tailed sequence, the asymptotic null distributions of the persistence change tests depend on the tail index . In practice, the stable index is often unknown and a complicated computation procedure is usually involved in estimating it. A cursory way to estimate is proposed by Mandelbrot [31], but it is not sufficiently accurate. To avoid the nuisance parameter , we propose the following block bootstrap test.

Step 1. First calculate the centered residuals

for , where is the LS estimate of based on the observed data .

Step 2. Choose a positive integer and let be drawn i.i.d. with the distribution uniform on the set ; here, we take , where denotes the integer part, although different choices for K are also possible. The procedure constructs a bootstrap pseudo-series , where , as follows:

where , .

Step 3. Compute the statistic and

where , are LS estimates based on and , respectively, and .

Step 4. Repeating step 2 and step 3 a great number of times (e.g., P times), we obtain the collection of pseudo-statistics .

Step 5. Compute the bootstrap quantile of the empirical distribution of being greater than T, denoted by . We reject the null hypothesis if , because the empirical distribution of is an approximation to the sampling distribution of under the null hypothesis and we can say that is true.
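The resampling steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact procedure of this paper: it treats the pure AR(1) case without deterministic terms, starts the pseudo-series at the first observation, imposes the unit root by cumulating the resampled residuals, and takes the critical values as simple empirical quantiles with half of the level in each tail. All function names are hypothetical, and `toy_ratio_statistic` is only a stand-in used to exercise the code; it is not the statistic Ξ.

```python
import math
import random


def ls_ar1(y):
    """LS estimate of rho in y_t = rho * y_{t-1} + e_t (no intercept)."""
    num = sum(y[t] * y[t - 1] for t in range(1, len(y)))
    den = sum(y[t - 1] ** 2 for t in range(1, len(y)))
    return num / den


def centered_residuals(y):
    """Residuals from the LS fit, centered at their sample mean."""
    rho = ls_ar1(y)
    res = [y[t] - rho * y[t - 1] for t in range(1, len(y))]
    mean = sum(res) / len(res)
    return [e - mean for e in res]


def bootstrap_series(y, b, rng):
    """Build a pseudo-series by cumulating randomly chosen residual blocks of length b."""
    res = centered_residuals(y)
    y_star = [y[0]]
    while len(y_star) < len(y):
        start = rng.randrange(0, len(res) - b + 1)  # uniform block start
        for e in res[start:start + b]:
            if len(y_star) == len(y):
                break
            y_star.append(y_star[-1] + e)  # unit root imposed under the null
    return y_star


def bootstrap_critical_values(y, statistic, b, n_boot=199, level=0.05, seed=0):
    """Lower and upper empirical quantiles of the bootstrap statistics."""
    rng = random.Random(seed)
    stats = sorted(statistic(bootstrap_series(y, b, rng)) for _ in range(n_boot))
    lo_idx = max(0, math.floor(level / 2 * n_boot))
    hi_idx = min(n_boot - 1, math.ceil((1 - level / 2) * n_boot) - 1)
    return stats[lo_idx], stats[hi_idx]


def toy_ratio_statistic(y):
    """Stand-in statistic (NOT the paper's): ratio of second-half to first-half
    sums of squared first differences."""
    d = [y[t] - y[t - 1] for t in range(1, len(y))]
    half = len(d) // 2
    return sum(v * v for v in d[half:]) / sum(v * v for v in d[:half])
```

In use, the observed statistic would be compared with the two returned values, rejecting in the upper tail if it exceeds the second and in the lower tail if it falls below the first. Resampling whole blocks rather than single residuals is what lets the pseudo-series retain short-range dependence in the innovations.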

Remark 6.

The block bootstrap is a central part of the residual-based block bootstrap procedure; note, however, that the block bootstrap is not directly applied to the data, nor to its first differences; rather, the pseudo-series is obtained by integrating the randomly selected blocks of centered residuals . Compared with other sampling methods, the block bootstrap method has more advantages for dependent sequences. The reason is that each block retains the dependence of the sequence, but the blocks are independent of each other. So, this resampling procedure can accurately reproduce the sampling distribution of the test statistic under the null hypothesis. As in Arcones [32], we present Assumption 2 to ensure the convergence in probability of the bootstrap distribution.

Assumption 2.

As , and .

Theorem 5.

If Assumption 1, Assumption 2, and hold, then under the Model 1, we can derive

where is the same as in Theorem 1, which will not be shown in detail here.

Remark 7.

Theorem 5 just gives the convergence of under the infinite variance case and guarantees that the block bootstrap method has the same asymptotic distribution under the null hypothesis so that an accurate critical value can be obtained. We omit the block bootstrap method under Model 2, which is similar to Model 1. In the next section, we will demonstrate the feasibility of the block bootstrap in small samples through numerical simulation.

5. Numerical Results

In this section, we use Monte Carlo simulation methods to investigate the finite sample size and power properties of the tests based on the block bootstrap developed in Section 3 and Section 4. Our simulation study is based on samples of sizes 300 and 500, with 3000 replications at the nominal level. Since the optimal block bootstrap size, b, is difficult to select, we take based on the experience, as can be seen in Paparoditis [24], which satisfies Assumption 2 and C is a constant. Here, we set and the choice of the best length is not the focus of this article, but its effectiveness has been explained in the aforementioned literature.

We consider the data generated by the following autoregressive integrated moving average process

where and is independent and identically distributed (i.i.d.) in the domain of the attraction of a stable law of order . The design parameter .

First of all, Table 1 reports the partial size and power when the innovation process is i.i.d. with , and , , and . Here, represents the proposed test statistic in this paper, and Q represents the statistic used by Qin [16]. The empirical size and power, calculated as the rejection frequency of the tests under and , are provided for the stable index . It can be seen from Table 1 that all empirical sizes of and Q tend towards the significance level of 5%. However, the power values of the are better than those of Q. Especially when the tail index is small, such as when , the power values of Q are less than 0.02, which is not enough to reject the null hypothesis, but the power value of the can reach 0.5. This is enough to show the advantages of the proposed test statistic. Their power values decrease as decreases because a smaller indicates more outliers. Similarly, their power values decrease with the increase in , but it can be seen that Q is more sensitive to the change in . Therefore, it can be concluded that the proposed statistic in this paper has a more robust test performance for the persistence change under the heavy tail. Next, we present our numerical simulation results in detail.

Table 1.

Empirical rejection frequencies of and Q.

5.1. Properties of the Tests under the H1, H0, and Near-Unit Root

In this section, we investigate the finite sample size properties of the tests when data are generated by (7) with the constant parameter. Table 2 and Table 3 report the empirical rejection frequencies of the and , for and , respectively. Here, is the right-tailed test and is the left-tailed test, based on the upper tail and lower tail , respectively.

Table 2.

Properties of the tests under the , , and near-unit root.

Table 3.

Empirical rejection frequencies for .

We can see the following regularities from the results in Table 2 and Table 3. Firstly, when (null hypothesis, ), the empirical size tends towards the significance level of 5%. As the tail index decreases, the empirical size does not fluctuate greatly; for example, when , the empirical size of the is 0.0473, 0.0403, and 0.044 for , which still tends towards a nominal level. Notice that the empirical size under is better than that under or , which indicates that the dependency of the innovation process has some influence on the test. Secondly, for the vast majority of the entries in Table 2 and Table 3 pertaining to (cases where , ), the empirical rejection frequencies of both the upper and lower tails -test are seen to lie well below the nominal level, as predicted by the asymptotic unbiasedness result of Theorem 3. The empirical rejection frequencies increase gradually with the decrease in the tail index, and the empirical rejection frequencies of are generally higher than those of , but still lower than the nominal level. It is worth noting that even when , the empirical rejection frequencies are much smaller than the nominal level, which indicates that the proposed test statistic is conservative under the process. However, when , and , empirical rejection frequencies are severely distorted, such as when and empirical rejection frequency of is 0.514. What is interesting is that this phenomenon only occurs in the case of , and there is no distortion for . Finally, in the case of near-integrated time series, the empirical rejection frequencies are not high enough to reject the null hypothesis. Especially when , the empirical rejection frequencies tend to the significance level, which confirms the conclusion of this paper, that is, when is smaller, the sequence is closer to the process (null hypothesis). Similarly, with the increase in , the empirical rejection frequencies of the gradually increase, while those of the gradually decrease.
In summary, the straightforward result is that a test based on will be conservative when run at conventional significance levels and will never reject under a near-integrated time series in large samples, regardless of whether the innovation process is i.i.d. or dependent.

5.2. Properties of the Tests under the H10 and H01

In this section, we report the empirical rejection frequencies of the test when the data are generated according to the switch DGP (7) with , and , . We consider the following values of the autoregressive and breakpoint parameters: and , respectively. We only present the results of and is similar.

Table 4 and Table 5 report the resulting empirical rejection frequencies for the upper-tail and lower-tail -tests (all tests were run at the nominal 5% level) for samples of size and , respectively. Table 6 and Table 7 report the Monte Carlo sample mean and sample standard deviation of the corresponding persistence change-point estimators, and , respectively. Here, and are estimators of the true breakpoint under and , respectively.

Table 4.

Empirical rejection frequencies under for .

Table 5.

Empirical rejection frequencies under for .

Table 6.

Monte Carlo mean and standard error of , .

Table 7.

Monte Carlo mean and standard error of , .

From Table 4 and Table 5, the following conclusions can be obtained on power properties. As expected, the smaller the , the smaller the power values of , but even , the power values of are still enough to reject the null hypothesis; for example, when , , and , the power values of are 0.5557, 0.482, and 0.4097 for . However, although the empirical rejection frequencies of increase with the increase in ‘outliers’, they are also all less than 0.02, that is, the lower-tail -test will not reject the null hypothesis under . This suggests that the tests may be reliable for identifying the direction of persistence change, even in small samples or . The empirical power of drops significantly as the size of the change decreases, a conclusion shared with other change point test procedures. It is also worth noting that the power is always higher with a larger , which occurs because a larger means that a greater proportion of the sample contains the component. It is clear from the different values of that the sensitivity of rejection frequencies to whether the innovation process is independent does not vary considerably. This shows that the proposed test statistic is robust for different -values under the alternative hypothesis. As the sample size T increases, power values become higher and higher in each case of the tests, which is consistent with the results of Theorem 2. The above conclusions also confirm the effectiveness of the block bootstrap method.

Turning to the results for the breakpoint estimators and in Table 6 and Table 7, respectively, a number of comments seem appropriate. First of all, it is clear that and appear to converge on the true breakpoint, , as would be expected in Theorem 2. Secondly, the smaller the tail index, the larger the standard deviation. It is not surprising that a smaller tail index implies more ‘outliers’. Moreover, () performs best for (). This is also unsurprising as this case provides the sharpest distinction in the cases considered between the and phases of the process. Finally, an interesting finding is that performs significantly better than for , slightly better than for , and slightly worse than for .

Although not reported, we also consider the power properties under the corresponding switch DGP. These experiments yield qualitatively similar results to those observed in Table 4 and Table 5 for -tests on switching the upper and lower tail and switching for (). This is because this model can also be viewed as a process with a switch from to at when the data are taken in reverse order.

To summarize, the conclusions to be drawn from the results in this section seem clear. The test procedures introduced in Section 3 and Section 4 provide a functional method to effectively detect to or to persistence change for infinite variance sequences. In addition, although the proposed test statistic is based on the null hypothesis, the empirical size is still controlled well when a series is or a near-integrated time series throughout, and empirical power has good performance compared to Q’s. From the above research, we can also conclude that, for the block bootstrap method, is a reasonable choice in most cases to effectively control the empirical size and obtain a satisfactory empirical power.

6. Empirical Applications

Persistence changes appear frequently, especially in financial time series. In this section, we illustrate the proposed test statistic with two examples of time series data, namely data on the US inflation rate and the USD/CNY exchange rate, which come from multpl.com and the website of the Federal Reserve Bank of St. Louis, respectively. At the 5% nominal level, we find clear evidence of change from stationary to non-stationary or non-stationary to stationary in these two series.

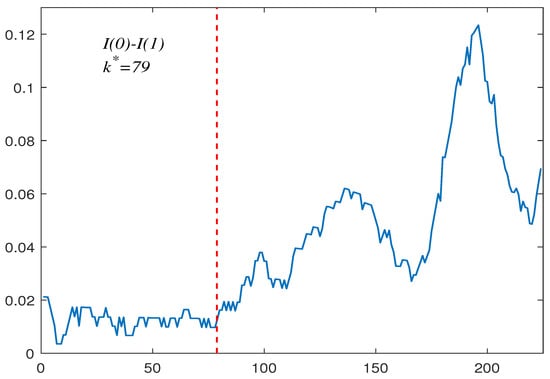

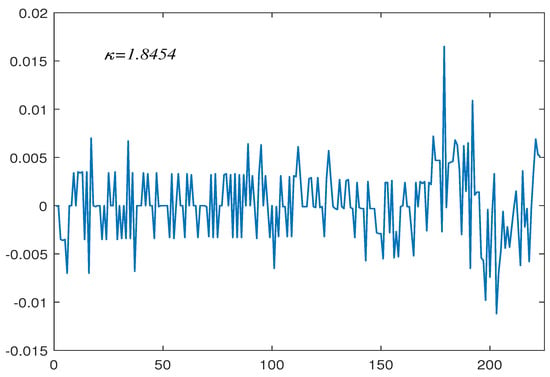

The first group contains 224 monthly observations of the US inflation rate from September 1958 to April 1977. Figure 1 reports the observations in this set. Chen [33] considered this dataset using a bootstrap test of change in persistence, and concluded that the data contain a change from to . Moreover, they also derived that the estimated change period from to is May 1965. In this paper, we apply our proposed block bootstrap-based method to verify this conclusion. First, we perform a first-order difference on the data shown in Figure 1 to obtain Figure 2. The differenced data were fitted using software provided by John [34] to obtain a tail index of and its corresponding upper- and lower-tail critical values are and , respectively. As suspected, the existence of a change in persistence is confirmed to be . This indicates that the data undergo a change from to . The estimated change period from to is (May 1965) (see the vertical line in Figure 1). This coincides well with the result obtained by Chen [2].

Figure 1.

US inflation rate data .

Figure 2.

First−order difference in inflation rate data.

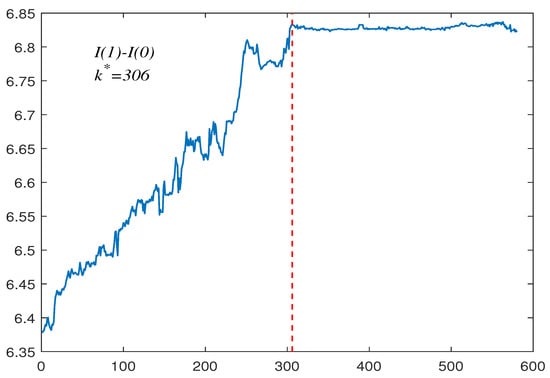

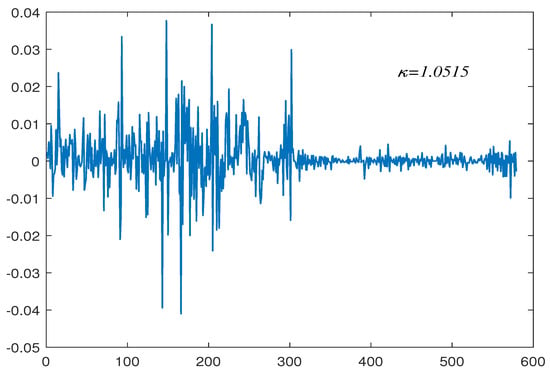

The second group contains 580 observations of the USD/CNY exchange rate from 13 May 2009 to 31 August 2011 (see Figure 3). We again fit its first-order difference data in the same way with a tail index of . This also reflects that the data contain a lot of outliers. Its corresponding upper- and lower-tail critical values are and , respectively. The presence of a persistence change is confirmed as , which also indicates that the data have undergone a change from to . In this example, on the basis of the estimated break fraction (15 June 2010), it is reasonable to split the whole sample into two regions, where is and is . To make our conclusion more reliable, we also applied the test proposed by Kim [5], and we found that, on 16 June 2010, the data change from to , which further demonstrates the effectiveness of the method we proposed.

Figure 3.

USD/CNY exchange rate data, .

Evidently, one may question whether this rejection signal was caused by a persistence change or by the outliers. To address this doubt and make our conclusion more reliable, we also applied our procedure to test the first-order difference data in Figure 2 and Figure 4. The proposed procedure, with the same parameter choices, does not reject the null hypothesis. This result again suggests that the original data undergo a persistence change and the first-order difference data are a stationary sequence. In addition, we divide the two sets of data into two segments according to the estimated break fraction and test them separately, finding that neither of them rejects the null hypothesis. This indicates that there is only one persistence change in both sets of data.

Figure 4.

First-order difference in the exchange rate data.

In the context of the analysis of real-world data, besides change point models, several other ways of analyzing financial time series are possible. For example, Cherstvy [35], Yu [36], and Kantz [37] introduced three strategies for the analysis of financial time series based on time-averaged observables, which contained the time-averaged mean-squared displacement as well as the aging and delay time methods for the varying fractions of the financial time series. They found that the observed features of the financial time series dynamics agree well with their analytical results for the time-averaged measurables for geometric Brownian motion, underlying the famed Black–Scholes–Merton model. This is useful for financial data analysis and for disclosing new universal features of stock market dynamics.

7. Conclusions

In this paper, we propose a new statistic to test for a persistence change with heavy-tailed innovations when neither the direction nor the location of the change is assumed to be known. We derive the asymptotic distribution of the test statistic under the , which is a complicated functional of the -stable process. We also demonstrate that this test is consistent against changes in persistence, either from to or from to , and prove the consistency of the breakpoint estimators. In particular, to determine the critical values for the null distribution of the test statistic, which contains the unknown tail index , we adopt an approach based on the block bootstrap, which is a variation on the sampling methodology. The robustness of the block bootstrap method is verified by numerical simulation, and the resulting test shows no tendency to over-reject against constant or nearly integrated time series. Empirical applications suggest that our procedures work well in practice. In conclusion, the proposed test statistic based on the block bootstrap constitutes a practical tool for detecting changes in persistence with heavy-tailed innovations.
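The idea of obtaining critical values from a block bootstrap can be illustrated with the following Python sketch. Several points are assumptions rather than the procedure of this paper: `ratio_statistic` is a Kim-type ratio of squared partial sums used purely as a stand-in for the proposed statistic, the resampling is a plain moving block bootstrap of centred first differences that are then cumulated to mimic a non-stationary null, and the block length, number of replications, and break fraction are arbitrary illustrative choices.

```python
import numpy as np

def ratio_statistic(y, tau=0.5):
    """Stand-in statistic (assumption): ratio of scaled sums of squared
    partial sums of the de-meaned second and first subsamples."""
    y = np.asarray(y, dtype=float)
    k = int(np.floor(tau * len(y)))
    first, second = y[:k], y[k:]
    s1 = np.cumsum(first - first.mean())
    s2 = np.cumsum(second - second.mean())
    num = np.sum(s2 ** 2) / len(second) ** 2
    den = np.sum(s1 ** 2) / len(first) ** 2
    return num / den

def moving_block_resample(u, block_len, rng):
    """Draw overlapping blocks of length block_len with replacement and
    concatenate them into a series of the same length as u."""
    u = np.asarray(u, dtype=float)
    n = len(u)
    n_blocks = -(-n // block_len)  # ceiling division
    starts = rng.integers(0, n - block_len + 1, size=n_blocks)
    idx = (starts[:, None] + np.arange(block_len)[None, :]).ravel()[:n]
    return u[idx]

def bootstrap_critical_values(y, block_len=10, n_boot=499, alpha=0.05, seed=0):
    """Lower- and upper-tail bootstrap critical values at total level alpha."""
    rng = np.random.default_rng(seed)
    u = np.diff(np.asarray(y, dtype=float))
    u = u - u.mean()  # centre the differences before resampling
    stats = np.empty(n_boot)
    for b in range(n_boot):
        # Cumulate resampled differences to build a pseudo-series
        # that is non-stationary by construction.
        y_star = np.cumsum(moving_block_resample(u, block_len, rng))
        stats[b] = ratio_statistic(y_star)
    return np.quantile(stats, alpha / 2), np.quantile(stats, 1 - alpha / 2)
```

A two-sided decision would then compare the statistic computed on the observed series with the two returned quantiles. Because the critical values come from the resampled data, no estimate of the tail index is needed at this step, which is the motivation given in the text for using the bootstrap.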

Author Contributions

Methodology, S.Z.; Software, H.J.; Writing—review & editing, M.S. All authors have read and agreed to the published version of the manuscript.

Funding

The authors gratefully acknowledge financial support from NNSF (Nos. 62273272, 71473194) and SNSF (No. 2020JM-513).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Proof of Theorem 1.

For the present, assume is fixed. We start with the following statistic

where are the LS estimates based on and , respectively.

Firstly, for the numerator of

where . It is easily shown that

By using the conclusion about stochastic integral from Knight [38], we can derive

and

Thus, it leads to

and

For the denominator of , we have

where

Similarly, we obtain

and

Thus, it follows

By the continuous mapping theorem, the desired result follows:

□

Proof of Lemma 1.

Before presenting the proof, we should define the following random processes, where for ,

and for ,

First consider the proof for the case of de-meaned data. Under Model 2, we obtain

for the numerator of the statistic and

for the denominator of the statistic, where and are the LS estimates based on and , respectively. It can be shown that

For the numerator of the statistic, we obtain

This is because

and

Similarly, we have

Next, consider the proof for the de-meaned and de-trended case. Note that, unlike the de-meaned case, under the null, for the numerator of the statistic and for the denominator of the statistic. Here, , , and , are the LS estimates based on and , respectively.

With the same algebraic calculation, it is shown that

The rest of the proof technique is similar to the de-meaned case, and we can obtain

□

For the remainder of Appendix A, we omit the corresponding proofs under Model 2, which are straightforward but tedious and follow the same logic as those presented under Model 1.

Proof of Theorem 2.

We first consider the part of Theorem 2 concerning the result under .

Firstly, we consider the numerator of the statistic. When , is made up of and , and we have

Furthermore, we can derive

and

This is because and under the process. The first term was proved by Phillips [27]. For the second term, since , we have . Moreover, because from Phillips [27] and , it follows that .

So, we can obtain

Then, for the numerator of , we obtain

When , is . Similarly, we can obtain

and

Therefore, it is easily shown that

Then, consider the denominator of the statistic. When , is . Hence, we have

When , is made up of the and processes, and we have

and

So, we can obtain

Let , then we have the following result:

where C is a constant because, at , we have

Moreover, (A16) holds because Phillips [27] showed that , and when there is .

Since

one can apply an argument similar to Lemma 3 of Amemiya [39]. Let be an open interval in containing . We denote by the complement of G in . Then, is compact and exists. In fact, . Let and be the event for all ’. Then, it can be shown that the event implies that which in turn implies that . Therefore, . Since by the uniform convergence in (A15), we have , which implies that . The stated result for under then follows immediately.

Next, consider the results in part (ii) relating to . Under this alternative, it is easily seen that . For , is the process. Hence, we have

When , is made up of and . It is easy to show that

and

So, we obtain

Then, we have the following result:

Proof of Theorem 3.

For the numerator and denominator of the statistic, we have

where was defined in (A16). Similarly, for , we have

we have

Proof of Theorem 4.

Under Model 1, we have

which were proved by Chan [30].

Due to , we can derive

Then, Theorem 4 follows immediately. Furthermore, the proofs of the results about in Lemma 2 are similar and are therefore omitted here. However, we need the following definitions:

□

Before giving the proof of Theorem 5, we need to introduce the following two lemmas.

Lemma A1.

Let

where for . If Assumption 1 and Assumption 2 hold, then

Proof.

Without loss of generality, we assume that and for , by construction of the block bootstrap method, we have

where and . The fact that

and . In the following, we only consider the first term on the right-hand side of (A25). We first show that, uniformly in r,

in probability. To establish (A26), use the definitions of to verify that

We then have

Similarly,

Let

Then, we have . Since when , we obtain

Lemma A2.

Let

where for . If Assumption 1 and Assumption 2 hold, then

The proof is similar to Lemma A1.

Proof of Theorem 5.

For the numerator, note that

By Lemmas A1 and A2, we can easily obtain the formulas

and

So, it is straightforward to show that

The denominator is dealt with analogously. By an application of the continuous mapping theorem, we obtain

□

References

- Busetti, F.; Taylor, A.R. Tests of stationarity against a change in persistence. J. Econom. 2004, 123, 33–66.

- Chen, W.; Huang, Z.; Yi, Y. Is there a structural change in the persistence of WTI–Brent oil price spreads in the post-2010 period? Econ. Model. 2015, 50, 64–71.

- Belaire-Franch, J. A note on the evidence of inflation persistence around the world. Empir. Econ. 2019, 56, 1477–1487.

- Sibbertsen, P.; Willert, J. Testing for a break in persistence under long-range dependencies and mean shifts. Stat. Pap. 2012, 53, 357–370.

- Kim, J.Y. Detection of change in persistence of a linear time series. J. Econom. 2000, 95, 97–116.

- Leybourne, S.; Kim, T.H.; Taylor, A.R. Detecting multiple changes in persistence. Stud. Nonlinear Dyn. Econom. 2007, 11.

- Leybourne, S.; Taylor, R.; Kim, T.H. Cusum of squares-based tests for a change in persistence. J. Time Ser. Anal. 2007, 28, 408–433.

- Cerqueti, R.; Costantini, M.; Gutierrez, L.; Westerlund, J. Panel stationary tests against changes in persistence. Stat. Pap. 2019, 60, 1079–1100.

- Kejriwal, M. A robust sequential procedure for estimating the number of structural changes in persistence. Oxf. Bull. Econ. Stat. 2020, 82, 669–685.

- Jin, H.; Zhang, S. Spurious regression between long memory series due to mis-specified structural break. Commun. Stat. Simul. Comput. 2018, 47, 692–711.

- Jin, H.; Zhang, S.; Zhang, J. Modified tests for change point in variance in the possible presence of mean breaks. J. Stat. Comput. Simul. 2018, 88, 2651–2667.

- Wingert, S.; Mboya, M.P.; Sibbertsen, P. Distinguishing between breaks in the mean and breaks in persistence under long memory. Econ. Lett. 2020, 193, 1093338.

- Grote, C. Issues in Nonlinear Cointegration, Structural Breaks and Changes in Persistence. Ph.D. Thesis, Leibniz Universität Hannover, Hannover, Germany, 2020.

- Mittnik, S.; Rachev, S.; Paolella, M. Stable paretian modeling in finance: Some empirical and theoretical aspects. In A Practical Guide to Heavy Tails; Birkhäuser: Boston, MA, USA, 1998; pp. 79–110.

- Han, S.; Tian, Z. Bootstrap testing for changes in persistence with heavy-tailed innovations. Commun. Stat. Theory Methods 2007, 36, 2289–2299.

- Qin, R.; Liu, Y. Block bootstrap testing for changes in persistence with heavy-tailed innovations. Commun. Stat. Theory Methods 2018, 47, 1104–1116.

- Chen, Z.; Tian, Z.; Zhao, C. Monitoring persistence change in infinite variance observations. J. Korean Stat. Soc. 2012, 41, 61–73.

- Yang, Y.; Jin, H. Ratio tests for persistence change with heavy tailed observations. J. Netw. 2014, 9, 1409.

- Jin, H.; Zhang, S.; Zhang, J. Spurious regression due to the neglected of non-stationary volatility. Comput. Stat. 2017, 32, 1065–1081.

- Jin, H.; Zhang, J.; Zhang, S. The spurious regression of AR(p) infinite variance series in presence of structural break. Comput. Stat. Data Anal. 2013, 67, 25–40.

- Wang, D. Monitoring persistence change in heavy-tailed observations. Symmetry 2021, 13, 936.

- Chan, N.H.; Zhang, R.M. Inference for nearly nonstationary processes under strong dependence with infinite variance. Stat. Sin. 2009, 19, 925–947.

- Cheng, Y.; Hui, Y.; McAleer, M.; Wong, W.K. Spurious relationships for nearly non-stationary series. J. Risk Financ. Manag. 2021, 14, 366.

- Paparoditis, E.; Politis, D.N. Residual-based block bootstrap for unit root testing. Econometrica 2003, 71, 813–855.

- Chan, N.H.; Wei, C.Z. Asymptotic inference for nearly nonstationary AR(1) processes. Ann. Stat. 1987, 15, 1050–1063.

- Leybourne, S.J.; Kim, T.H.; Robert Taylor, A. Regression-based tests for a change in persistence. Oxf. Bull. Econ. Stat. 2006, 68, 595–621.

- Phillips, P.C.; Solo, V. Asymptotics for linear processes. Ann. Stat. 1992, 20, 971–1001.

- Ibragimov, I.A. Some limit theorems for stationary processes. Theory Probab. Appl. 1962, 7, 349–382.

- Resnick, S.I. Point processes, regular variation and weak convergence. Adv. Appl. Probab. 1986, 18, 66–138.

- Chan, N.H. Inference for near-integrated time series with infinite variance. J. Am. Stat. Assoc. 1990, 85, 1069–1074.

- Mandelbrot, B. The variation of some other speculative prices. J. Bus. 1967, 40, 393–413.

- Arcones, M.A.; Giné, E. The bootstrap of the mean with arbitrary bootstrap sample size. Ann. IHP Probab. Stat. 1989, 25, 457–481.

- Chen, Z.; Jin, Z.; Tian, Z.; Qi, P. Bootstrap testing multiple changes in persistence for a heavy-tailed sequence. Comput. Stat. Data Anal. 2012, 56, 2303–2316.

- Nolan, J.P. Numerical calculation of stable densities and distribution functions. Commun. Stat. Stoch. Models 1997, 13, 759–774.

- Cherstvy, A.G.; Vinod, D.; Aghion, E.; Chechkin, A.V.; Metzler, R. Time averaging, ageing and delay analysis of financial time series. New J. Phys. 2017, 19, 063045.

- Yu, Z.; Gao, H.; Cong, X.; Wu, N.; Song, H.H. A Survey on Cyber-Physical Systems Security. IEEE Internet Things J. 2023, 10, 21670–21686.

- Kantz, H.; Schreiber, T. Nonlinear Time Series Analysis; Cambridge University Press: Cambridge, UK, 2004.

- Knight, K. Limit theory for autoregressive-parameter estimates in an infinite-variance random walk. Can. J. Stat. 1989, 17, 261–278.

- Amemiya, T. Regression analysis when the dependent variable is truncated normal. Econometrica 1973, 41, 997–1016.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).