Abstract

Multiple-input multiple-output (MIMO) linear time-invariant (LTI) systems incur enormous computational costs in high-dimensional problems. To address this problem, we propose a novel approach for reducing the dimensionality of MIMO systems. The method leverages the Takenaka–Malmquist basis and incorporates the strictly complex-valued relevance vector machine (SCRVM). We refer to this method as covariance-free maximum likelihood (CoFML). The proposed method avoids the explicit computation of the covariance matrix; instead, CoFML solves multiple linear systems to obtain the posterior statistics it requires from the covariance. This is achieved by exploiting a preconditioning matrix and an estimation rule for the diagonal elements of a matrix. We provide theoretical justification for this approximation and show why our method scales well in high-dimensional settings. By employing the CoFML algorithm, we approximate MIMO systems in parallel, resulting in significant savings in computational time. The effectiveness of this method is demonstrated through three well-known examples.

Keywords:

model order reduction; rational approximation; Takenaka–Malmquist; sparse Bayesian learning

MSC:

93A30; 41A20; 93C05

1. Introduction

A reduction in dimensionality is crucial in multiple-input multiple-output (MIMO) system models due to the high computational costs associated with high-dimensional problems. A MIMO linear time-invariant (LTI) system with l outputs and k inputs can be represented by a matrix of transfer functions in the following form [1,2,3,4,5,6]:

Here, , m is the system order, and , .

The n-th approximate model is given by the following [1,2,3,4,5,6]:

where , n is the reduced system order, and , .

Numerous techniques have been developed for model reduction in linear time-invariant systems. These techniques encompass a wide range of approaches, including linear matrix inequalities [7], error minimization [8], magnitude and phase criteria [9], balanced truncation [10,11], rational interpolation [12], the Krylov method [13], adaptive Fourier decomposition (AFD) [14], Routh approximations, and Padé-type model reductions [15,16]. However, these methods incur substantial computational costs and require prior knowledge of the actual system, which is typically unknown in practice. Consequently, there is a persistent demand for fast and efficient methods to reduce the order of LTI systems. To address this gap, this study explores novel MIMO model reduction approaches that are computationally efficient and do not rely on explicit knowledge of the underlying system, with the aim of reliably reducing the order of LTI systems while preserving their essential dynamic characteristics.

The AFD and SCRVM methods are effective in reducing LTI systems. Both rely on a rational orthogonal basis, also called the Takenaka–Malmquist (TM) system [17]. For the open right-half plane $\mathbb{C}_+ = \{s \in \mathbb{C} : \operatorname{Re}(s) > 0\}$, the TM system is defined as follows:

where and are the real parts of the complex values. The system (with ) forms a basis of the Hardy space $H^2$ of the right-half plane if and only if

The shifted Cauchy kernel, denoted as , is a component of the TM system. These systems are commonly used in model reduction due to their parameter-based linear model structure [18,19,20,21,22].
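The TM system can be explored numerically. The following Python/NumPy sketch evaluates a finite TM system on the imaginary axis and checks its orthonormality by quadrature. It assumes the standard right-half-plane form $B_n(s) = \frac{\sqrt{2\operatorname{Re} a_n}}{s + \bar a_n}\prod_{k=1}^{n-1}\frac{s - a_k}{s + \bar a_k}$ with the $H^2$ inner product $\frac{1}{2\pi}\int_{-\infty}^{\infty} f(j\omega)\overline{g(j\omega)}\,d\omega$; the helper names `tm_basis` and `tm_gram` and the pole values are illustrative only.

```python
import numpy as np


def tm_basis(s, poles):
    """Evaluate the TM functions B_1, ..., B_n at the points s.

    Assumed right-half-plane form:
        B_n(s) = sqrt(2 Re a_n) / (s + conj(a_n)) * prod_{k<n} (s - a_k) / (s + conj(a_k))
    Returns an array of shape (len(s), len(poles)).
    """
    s = np.atleast_1d(np.asarray(s, dtype=complex))
    poles = np.asarray(poles, dtype=complex)
    if np.any(poles.real <= 0):
        raise ValueError("every a_k must lie in the open right half-plane")
    basis = np.empty((s.size, poles.size), dtype=complex)
    blaschke = np.ones_like(s)  # running product of (s - a_k) / (s + conj(a_k))
    for n, a in enumerate(poles):
        basis[:, n] = np.sqrt(2.0 * a.real) / (s + np.conj(a)) * blaschke
        blaschke = blaschke * (s - a) / (s + np.conj(a))
    return basis


def tm_gram(poles, num=20000):
    """Approximate the Gram matrix (1/2pi) * integral of B_i(jw) * conj(B_j(jw)) dw.

    The substitution w = tan(theta) maps the real line onto (-pi/2, pi/2),
    where the midpoint rule is applied on a uniform grid.
    """
    h = np.pi / num
    theta = -np.pi / 2 + (np.arange(num) + 0.5) * h
    w = np.tan(theta)
    # Fold the Jacobian dw/dtheta = 1 + w^2 into the sampled basis values.
    basis = tm_basis(1j * w, poles) * np.sqrt(1.0 + w**2)[:, None]
    return basis.T @ basis.conj() * h / (2.0 * np.pi)


gram = tm_gram([1.0, 2.0 + 1.0j, 0.5 + 0.5j])
print(np.round(gram.real, 6))  # should be close to the 3 x 3 identity
```

The Blaschke factor $(s - a_1)/(s + \bar a_1)$ makes every later function vanish at $a_1$, which is what produces the orthogonality.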

In recent years, the relevance vector machine (RVM) has emerged as a robust framework for solving sparse coding problems and providing uncertainty quantification [23,24,25]. RVM, also called sparse Bayesian learning (SBL), was proposed by Tipping [26] in 2001. RVM has achieved significant success in hyperspectral image classification [27,28,29] and reconstruction [30]. It has also found applications in various fields such as direction-of-arrival (DOA) estimation [31,32,33], classification [25], compressive sensing [34], feature selection [35], signal processing [36,37,38], image reconstruction [30,39], and financial prediction [40].

The RVM offers computational efficiency by progressively “pruning” irrelevant vectors during inference, which reduces the time spent inverting the covariance matrix. This advantage is particularly valuable for high-dimensional problems. However, when dealing with large-scale datasets, RVM faces challenges due to the cost of the iterative process, which scales as $O(TM^3)$ in time and $O(M^2)$ in space, where T is the number of iterations and M is the number of parameters. For complex-valued data, both the computation time and the storage requirement increase further. Several methods reduce the cost of the iterative process, such as iteratively reweighted least squares (IRLS) [41], approximate message passing (AMP) [42], and variational inference (VI) [43]. A popular method that does not require matrix inversion, inverse-free sparse Bayesian learning (IFSBL) [33,43], is often faster in practice: it bypasses matrix inversion by maximizing a relaxed evidence lower bound (ELBO) within a variational EM scheme, yielding an efficient, fast-converging SBL. However, these methods still lack scalability at very high dimensions M and recover the sparse codes less accurately. Researchers have proposed approximate inference algorithms and acceleration techniques such as CoFEM [44], which offer significant advantages in both scalability and accuracy over other SBL approaches. Unfortunately, CoFEM handles only real-valued data. This paper introduces a novel method, covariance-free maximum likelihood (CoFML), that brings a CoFEM-like approach to complex-valued data. CoFML omits explicit covariance matrix calculations and provides unbiased estimates of the required posterior statistics through the solution of linear systems and advanced numerical linear algebra, resulting in a fast, accurate, and sparse approximate model.

The paper is organized as follows: Section 2 presents an innovative approach, which we call CoFML, that improves the computational efficiency of the strictly complex-valued relevance vector machine (SCRVM) algorithm in high-dimensional problems. Section 3 provides a theoretical analysis of CoFML. Numerical examples are presented in Section 4, followed by conclusions in Section 5.

2. Reduction in MIMO Systems

2.1. Strictly Complex-Valued Relevance Vector Machine Inference

We expand the MIMO LTI system in (1) row by row into L SISO LTI systems , with denoting the l-th system of , where and . We define the single-input signal and the single-output signal as , where N is the number of samples. The transfer function of the l-th system is represented as follows:

where , and . The noise vector is assumed to be complex Gaussian-distributed, with . Here, , includes both the real and imaginary components of the complex noise, and denotes the variance. Notably, these systems share the same denominator, so we have . By considering the real and imaginary parts separately and assuming independence among , the likelihood function for the complete data set can be expressed as follows:

where , , , , and is the imaginary part of a complex number.

The above notation represents a -dimensional Gaussian distribution over with a mean of and variance of . For simplicity, the implicit conditioning on the set of input vectors is omitted in Equation (6) and subsequent expressions during inference.

The prior distribution for the coefficients is modeled as a zero-mean Gaussian distribution:

where , , and . To enforce sparsity with equal sparsity patterns for the real and imaginary parts, we set for . For convenience, we define , so that where is the zero matrix. Additionally, we assign Gamma distributions [24] as hyperpriors for and the noise variance :

We set [26] to ensure flat priors.

By using Bayes’ rule, the posterior covariance and mean are given by

and

respectively. To facilitate subsequent inference, we introduce the notation , where . We define , leading to .
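For reference, the sketch below computes these posterior statistics in the standard sparse Bayesian form, which we assume is what the two expressions above reduce to for complex data: $\Sigma = (A + \beta\Phi^H\Phi)^{-1}$ and $\mu = \beta\Sigma\Phi^H y$, with $A = \operatorname{diag}(\alpha)$ and $\beta = 1/\sigma^2$ the noise precision. The function `posterior` and the synthetic data are hypothetical; the explicit inverse is exactly the $O(M^3)$ step that CoFML is designed to avoid.

```python
import numpy as np


def posterior(Phi, y, alpha, beta):
    """Posterior covariance and mean of the weights in complex sparse Bayesian learning.

    Assumed model: y = Phi @ w + noise, noise ~ CN(0, I / beta), w_i ~ CN(0, 1 / alpha_i).
    Returns (Sigma, mu) with Sigma = (A + beta Phi^H Phi)^{-1} and mu = beta Sigma Phi^H y.
    """
    precision = np.diag(alpha) + beta * Phi.conj().T @ Phi  # Hermitian positive definite
    Sigma = np.linalg.inv(precision)                         # the O(M^3) step CoFML avoids
    mu = beta * Sigma @ Phi.conj().T @ y
    return Sigma, mu


rng = np.random.default_rng(0)
N, M = 40, 8
Phi = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
w_true = np.zeros(M, dtype=complex)
w_true[[1, 5]] = [2.0 - 1.0j, -1.5j]  # a sparse complex weight vector
y = Phi @ w_true + 0.01 * (rng.standard_normal(N) + 1j * rng.standard_normal(N))
Sigma, mu = posterior(Phi, y, alpha=np.full(M, 1e-3), beta=1e4)
```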

To infer the SCRVM, we use the augmented vector , , and the augmented matrix [45]. The composite and augmented vectors and matrices are related by the simple relations , , , and , where , and . Hence, we have , , and . Using these simple transformations, we obtain the following:

where

and

where and .
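The composite–augmented relations can be checked numerically. In the convention of [46], a complex vector $v$ with composite real form $v_r = [\operatorname{Re} v; \operatorname{Im} v]$ has augmented form $[v; v^*] = T v_r$ with $T = \begin{bmatrix} I & jI \\ I & -jI \end{bmatrix}$ and $T^H T = 2I$, so a strictly linear map $y = \Phi w$ has the augmented matrix $\operatorname{blkdiag}(\Phi, \Phi^*) = \tfrac{1}{2} T \Phi_r T^H$. The NumPy sketch below verifies these identities on random data; the variable names are ours and may not match the symbols above.

```python
import numpy as np

rng = np.random.default_rng(1)
N, M = 5, 3
Phi = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
w = rng.standard_normal(M) + 1j * rng.standard_normal(M)
y = Phi @ w


def t_matrix(n):
    """T = [[I, jI], [I, -jI]] maps [Re v; Im v] to the augmented vector [v; conj(v)]."""
    I = np.eye(n)
    return np.block([[I, 1j * I], [I, -1j * I]])


def composite(v):
    """Stack real and imaginary parts: [Re v; Im v]."""
    return np.concatenate([v.real, v.imag])


def augmented(v):
    """Stack the vector and its conjugate: [v; conj(v)]."""
    return np.concatenate([v, v.conj()])


# Composite (real) representation of the linear map y = Phi w.
Phi_r = np.block([[Phi.real, -Phi.imag], [Phi.imag, Phi.real]])
# Augmented representation: block-diagonal with Phi and its conjugate.
Phi_aug = np.block([[Phi, np.zeros_like(Phi)], [np.zeros_like(Phi), Phi.conj()]])
T_N, T_M = t_matrix(N), t_matrix(M)
```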

To simplify the inference, we introduce the notation and (with ), yielding the following:

and

Therefore, the distribution of Equation (10) can also be rewritten as follows:

Then, we also have

where is a Hermitian positive definite matrix [46].

Hence, the joint probability density function of the MIMO LTI system can be written as follows:

Maximizing this likelihood [24] with respect to the hyperparameters, we obtain the following:

and

where , is the i-th element of (14), and is the trace of a matrix.
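The observation that makes a covariance-free scheme possible is that updates of this kind need only $\mu$ and the diagonal of $\Sigma$. The sketch below uses one common EM form for complex sparse Bayesian learning, $\alpha_i \leftarrow 1/(|\mu_i|^2 + \Sigma_{ii})$ and $\beta^{-1} \leftarrow (\|y - \Phi\mu\|^2 + \operatorname{tr}(\Phi\Sigma\Phi^H))/N$, which we assume is representative of the updates above under flat hyperpriors. It replaces the trace by $\beta^{-1}\sum_i (1 - \alpha_i\Sigma_{ii})$, which follows from $\Sigma^{-1} = A + \beta\Phi^H\Phi$. The function name `em_update` is hypothetical.

```python
import numpy as np


def em_update(Phi, y, mu, diag_sigma, alpha, beta):
    """One EM hyperparameter update that uses only mu and diag(Sigma).

    alpha_i <- 1 / (|mu_i|^2 + Sigma_ii)
    1/beta  <- (||y - Phi mu||^2 + tr(Phi Sigma Phi^H)) / N,
    where tr(Phi Sigma Phi^H) = (1/beta) * sum_i (1 - alpha_i Sigma_ii)
    because beta Sigma Phi^H Phi = I - Sigma A.
    """
    N = y.size
    new_alpha = 1.0 / (np.abs(mu) ** 2 + diag_sigma)
    trace_term = np.sum(1.0 - alpha * diag_sigma) / beta  # no full Sigma needed
    resid = np.linalg.norm(y - Phi @ mu) ** 2
    new_beta = N / (resid + trace_term)
    return new_alpha, new_beta
```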

2.2. An Estimator for the Diagonal of Covariance Matrix

We adopt the technique in [47] to estimate the diagonal components of .

Proposition 1.

Let ) be random probe vectors, where each comprises independent and identically distributed elements such that for all . Moreover, assume that is independent of . For each . Let , where Λ is given by (13) and . Consider the estimator , where, for each ,

Then, the provides an unbiased estimate of .

Proof.

The expected value of is given by the following:

because the for all , and we have . This completes the proof. □

In particular, we assume that are independent Rademacher variables, which means . Then, we can simplify (20) to the following form:

Theorem 1.

Let be the estimator given by Equation (21), satisfying the conditions of Proposition 1. Then, is one of the optimal estimators for .

Proof.

Using Proposition 1 and the calculation method of [44], which confirms , we can calculate the variance of as follows:

where . In the numerator, due to the independence of and when , we observe that . Consequently, serves as an optimal estimator for . This completes the proof. □
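A minimal sketch of the Rademacher estimator in (21): it needs only a routine that multiplies the posterior covariance by a block of probe vectors, which in CoFML would come from linear solves rather than an explicit inverse. Here a random Hermitian positive definite matrix stands in for the inverse covariance, and `estimate_diagonal` is a hypothetical name.

```python
import numpy as np


def estimate_diagonal(apply_sigma, dim, num_probes, rng):
    """Unbiased Rademacher estimate of diag(Sigma).

    apply_sigma(V) must return Sigma @ V for a matrix V whose columns are probes.
    Because v * v = 1 elementwise for +/-1 probes, the general ratio estimator
    reduces to a plain average of v * (Sigma v) over the probes.
    """
    V = rng.choice([-1.0, 1.0], size=(dim, num_probes))
    return np.mean(V * apply_sigma(V), axis=1).real  # Hermitian => real diagonal


rng = np.random.default_rng(3)
M = 6
G = rng.standard_normal((M, M)) + 1j * rng.standard_normal((M, M))
precision = G @ G.conj().T + M * np.eye(M)  # Hermitian positive definite stand-in
Sigma = np.linalg.inv(precision)            # computed only for comparison
# The estimator itself only sees Sigma @ V through a linear solve.
d_hat = estimate_diagonal(lambda V: np.linalg.solve(precision, V), M, 20000, rng)
print(np.c_[d_hat, Sigma.diagonal().real])
```

The error of each entry shrinks like $1/\sqrt{K}$ in the number of probes $K$, and only the off-diagonal entries of the same row contribute to it, consistent with the variance expression above.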

We then transform the inversion problem into the solution of linear systems; that is, we solve the linear equation , where and . Furthermore, these systems can be solved concurrently by considering the matrix equation , where the inputs and are defined as follows:

By labeling the columns of the solution matrix as

our desired quantities for CoFML, and , can be calculated using (21). Then, we perform the update in (18) as

where .
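Stacking the probes and the right-hand side of the mean equation lets a single multiple-right-hand-side solve deliver both $\mu$ and the diagonal estimate. The sketch assumes the column layout $[v_1, \dots, v_K, \beta\Phi^H y]$ (probes first, mean right-hand side last), which may differ from the ordering in the matrices above, and uses `np.linalg.solve` as a stand-in for the CG solver discussed next; `cofml_statistics` is a hypothetical name.

```python
import numpy as np


def cofml_statistics(precision, rhs_mean, num_probes, rng):
    """Return (mu, diag_estimate) from one multiple-right-hand-side solve.

    precision : Hermitian positive definite inverse covariance, shape (M, M)
    rhs_mean  : right-hand side of the mean equation, e.g. beta * Phi^H y
    """
    M = precision.shape[0]
    probes = rng.choice([-1.0, 1.0], size=(M, num_probes))
    B = np.column_stack([probes, rhs_mean])  # shape (M, K + 1)
    X = np.linalg.solve(precision, B)        # stand-in for a parallel CG solver
    mu = X[:, -1]                            # last column: posterior mean
    diag_estimate = np.mean(probes * X[:, :-1], axis=1).real
    return mu, diag_estimate
```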

We then choose the conjugate gradient (CG) algorithm to solve the multiple linear systems . This requires replacing the matrix–vector multiplication of single-system CG with a matrix–matrix multiplication.

Lemma 1.

Consider the CG algorithm applied to solve for , where is a positive definite matrix, and . Here, and represent the i-th column of and , respectively. Let be the initial solution, be the exact solution, and denote the solution obtained by the CG algorithm at the k-th step. We can establish the following relationship:

where denotes the norm induced by the positive definite matrix for any vector , and [48]. From (23), we observe that when is ill-conditioned (), the convergence of the CG algorithm tends to be slow. In SBL iterations, many diagonal elements of are driven towards infinity [26], which results in a large value of K. To address this issue, we incorporate a preconditioned matrix into the CG algorithm, as discussed in the following section.
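To make the dependence on conditioning concrete, the snippet below evaluates the standard CG bound $\|x_k - x^*\|_A \le 2\left(\frac{\sqrt{\kappa}-1}{\sqrt{\kappa}+1}\right)^k \|x_0 - x^*\|_A$, which we assume is the content of (23), and returns the smallest $k$ that guarantees a relative error reduction $\varepsilon$. The function name is hypothetical.

```python
import math


def cg_iterations_bound(kappa, eps):
    """Smallest k with 2 * ((sqrt(kappa) - 1) / (sqrt(kappa) + 1))**k <= eps."""
    if kappa < 1:
        raise ValueError("condition number must be >= 1")
    rho = (math.sqrt(kappa) - 1.0) / (math.sqrt(kappa) + 1.0)
    if rho == 0.0:
        return 1  # kappa == 1: a single CG step reaches the solution
    return max(0, math.ceil(math.log(eps / 2.0) / math.log(rho)))


for kappa in (1e2, 1e4, 1e8):
    print(f"kappa = {kappa:.0e}: k <= {cg_iterations_bound(kappa, 1e-6)}")
```

For a fixed $\varepsilon$, the guaranteed iteration count grows roughly like $\sqrt{\kappa}$, which is why diverging $\alpha_i$ slow down plain CG.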

3. Preconditioned Conjugate Gradient Method for SCRVM

3.1. Preconditioned Matrix

Here, we solve the equivalent system rather than , where , , and . The matrix is called the preconditioned matrix. Before presenting the convergence proof of the preconditioned conjugate gradient method, we outline the parallel conjugate gradient (PCG) algorithm in Algorithm 1.

Algorithm 1. Parallel conjugate gradient (PCG) algorithm.
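One way to realize a parallel preconditioned CG of this kind is sketched below in NumPy. Each right-hand side keeps its own CG recurrence (a column-wise scheme, not a block-Krylov method), but the products with the system matrix for all active columns are computed as one matrix–matrix multiplication. The stopping test uses the relative residual, since the exact solution is unknown in practice. The Jacobi preconditioner diag(A) is our assumption, not necessarily the preconditioned matrix of Algorithm 1, and `parallel_pcg` is a hypothetical name.

```python
import numpy as np


def parallel_pcg(A, B, precond_diag, tol=1e-8, max_iter=None):
    """Solve A X = B for all columns of B with diagonally preconditioned CG.

    A            : Hermitian positive definite matrix, shape (M, M)
    B            : right-hand sides, shape (M, J)
    precond_diag : positive diagonal of the preconditioner (e.g. diag(A))
    Each column runs its own CG recurrence, but the products with A for all
    still-active columns are computed as one matrix-matrix multiplication.
    """
    M = A.shape[0]
    max_iter = max_iter or 10 * M
    X = np.zeros(B.shape, dtype=complex)
    R = B.astype(complex)                   # residual B - A X for X = 0
    Z = R / precond_diag[:, None]           # preconditioned residual
    P = Z.copy()                            # search directions
    rz = np.sum(R.conj() * Z, axis=0)       # per-column <r, z>
    b_norm = np.linalg.norm(B, axis=0)
    b_norm[b_norm == 0] = 1.0
    for _ in range(max_iter):
        # Stop on the relative residual, since the exact solution is unknown.
        active = np.linalg.norm(R, axis=0) > tol * b_norm
        if not np.any(active):
            break
        Pa = P[:, active]
        APa = A @ Pa                        # one matrix-matrix product
        step = rz[active] / np.sum(Pa.conj() * APa, axis=0)
        X[:, active] += Pa * step
        R[:, active] -= APa * step
        Za = R[:, active] / precond_diag[:, None]
        rz_new = np.sum(R[:, active].conj() * Za, axis=0)
        P[:, active] = Za + Pa * (rz_new / rz[active])
        rz[active] = rz_new
    return X
```

Converged columns are frozen, so a zero or already-solved right-hand side does not cause a division by zero.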

In practice, however, cannot be obtained in Algorithm 1, so the condition is used in place of . We refer to the resulting Algorithm 1 as CoFML. After running Algorithm 1, we obtain the approximate model (2). In particular, when and the basis consists of real numbers, the l-th system of the second-order approximating model is given by the following:

To ensure that the steady-state value of the l-th reduced system equals that of the original system (1), we use the following constraint:
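One simple way to impose such a constraint, assumed here for illustration, is to rescale the numerator of the reduced model so that its DC gain matches that of the original system, since for a stable system $G(0)$ is the final value of the unit-step response. The helper `match_dc_gain` is hypothetical and works with coefficients in descending powers of $s$ (the `np.polyval` convention); the constraint used above may be formulated differently, for example in terms of the expansion coefficients.

```python
import numpy as np


def match_dc_gain(num_red, den_red, num_full, den_full):
    """Scale the reduced numerator so that G_red(0) == G_full(0).

    Polynomials are coefficient arrays in descending powers of s.
    Assumes both denominators are nonzero at s = 0.
    """
    dc_full = np.polyval(num_full, 0.0) / np.polyval(den_full, 0.0)
    dc_red = np.polyval(num_red, 0.0) / np.polyval(den_red, 0.0)
    if dc_red == 0:
        raise ValueError("reduced model has zero DC gain; cannot rescale")
    return np.asarray(num_red, dtype=float) * (dc_full / dc_red)


# Example: the full model 2 / ((s + 1)(s + 2)) has DC gain 1.
num_full, den_full = [2.0], np.polymul([1.0, 1.0], [1.0, 2.0])
num_red = match_dc_gain([0.9], [1.0, 1.0], num_full, den_full)
```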

3.2. Convergence of CoFML

When the values of are small and , some values typically approach infinity, effectively “pruning” the associated “nuisance” parameters, while the values for remain finite [26]. We designate these retained parameters as the “true” parameters.

Definition 1

(CoFML Convergence). In Algorithm 1, let . CoFML is said to satisfy -convergence if the indices can be partitioned into a “nuisance” set, denoted as , and a “true” set, denoted as , such that is finite when , while when .
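The partition can be illustrated numerically under the posterior form $\Sigma = (A + \beta\Phi^H\Phi)^{-1}$ assumed earlier: as $\alpha_i$ grows for the nuisance indices, their posterior variances vanish, and the true-index block of $\Sigma$ approaches the covariance computed with the nuisance columns removed. The index sets and numbers below are arbitrary illustrations.

```python
import numpy as np

rng = np.random.default_rng(6)
N, M, beta = 30, 6, 10.0
Phi = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
true_set = np.array([0, 2, 3])      # indices whose alpha stays finite
nuisance_set = np.array([1, 4, 5])  # indices whose alpha is driven to infinity


def posterior_cov(alpha):
    """Sigma = (diag(alpha) + beta Phi^H Phi)^{-1} (explicit inverse, for illustration)."""
    return np.linalg.inv(np.diag(alpha) + beta * Phi.conj().T @ Phi)


# Covariance of the reduced problem with the nuisance columns removed (alpha_T = 1).
Phi_T = Phi[:, true_set]
Sigma_T = np.linalg.inv(np.eye(true_set.size) + beta * Phi_T.conj().T @ Phi_T)

for big in (1e2, 1e5, 1e8):
    alpha = np.ones(M)
    alpha[nuisance_set] = big
    Sigma = posterior_cov(alpha)
    gap = np.abs(Sigma[np.ix_(true_set, true_set)] - Sigma_T).max()
    worst = Sigma.diagonal().real[nuisance_set].max()
    print(f"alpha_nuisance = {big:.0e}: block gap = {gap:.2e}, nuisance variance = {worst:.2e}")
```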

Here, we denote the resulting matrices with the appropriate “true” and “nuisance” columns removed as and , respectively. By leveraging the expression for the inverse of a partitioned matrix, we can demonstrate the following relationship:

Building upon the derivation above, we can establish the convergence theorem for the preconditioned matrix.

Theorem 2

(PCG Convergence). Let and represent the inverse-covariance matrix and the preconditioned matrix, respectively, at the t-th iteration of Algorithm 1. Let , , and be the initial solution, be the exact solution, and be the solution obtained by the algorithm at the k-th step and t-th iteration. Then, given -convergence, it follows that

where .

Proof.

From Lemma 1, we have the following residual:

where and . From (26), our goal is to bound .

Let . So, we obtain the following:

where .

Equation (27) shows that if is an eigenvalue of , then the eigenvalue of is . Recall that is similar to if there exists an invertible matrix such that ; similar matrices have the same eigenvalues [49]. Consider , and , so the eigenvalue of is . Since the modulus of any eigenvalue of a matrix does not exceed its spectral norm, we have

It follows that and , so we have

where we first let on both sides of (26) and then substitute (28) to obtain (25). This completes the proof. □
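The practical content of Theorem 2 is that preconditioning removes the ill-conditioning caused by diverging $\alpha_i$. The sketch below compares the condition number of $\Sigma^{-1} = A + \beta\Phi^H\Phi$ with that of the symmetrically scaled matrix $D^{-1/2}\Sigma^{-1}D^{-1/2}$, using the Jacobi choice $D = \operatorname{diag}(\Sigma^{-1})$ as a stand-in for the preconditioned matrix of Algorithm 1 (an assumption on our part).

```python
import numpy as np

rng = np.random.default_rng(7)
N, M, beta = 50, 10, 10.0
Phi = rng.standard_normal((N, M)) + 1j * rng.standard_normal((N, M))
gram = beta * Phi.conj().T @ Phi


def condition_numbers(alpha):
    """Return (kappa(S), kappa(D^{-1/2} S D^{-1/2})) for S = diag(alpha) + gram, D = diag(S)."""
    S = np.diag(alpha) + gram
    d = 1.0 / np.sqrt(S.diagonal().real)
    S_pre = d[:, None] * S * d[None, :]  # symmetric Jacobi scaling
    return np.linalg.cond(S), np.linalg.cond(S_pre)


for big in (1e0, 1e4, 1e8):
    alpha = np.ones(M)
    alpha[M // 2:] = big  # half of the prior precisions diverge, as during pruning
    k_raw, k_pre = condition_numbers(alpha)
    print(f"alpha_nuisance = {big:.0e}: kappa = {k_raw:.2e}, preconditioned = {k_pre:.2f}")
```

As the nuisance $\alpha_i$ grow, the unpreconditioned condition number grows with them, while the scaled matrix approaches a block structure whose nuisance block is close to the identity.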

3.3. Computational Complexities of CoFML

Once the above convergence conditions are satisfied, we analyze the overall complexity of the algorithm. In each of the T iterations of CoFML, at most steps of conjugate gradient (CG) are required, resulting in an overall time complexity of and space complexity of for each of the L systems.

4. Examples

This section provides three examples to illustrate the algorithm described above. The l-th system impulse response is defined as follows:

where is a high-order transfer function, and Q is chosen to be greater than the largest real part among the poles of . The l-th system impulse response energy (IRE) is calculated as follows:

We now consider three well-known examples, with , respectively.
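If the IRE is taken to be $\int_0^\infty g_l(t)^2\,dt$, it can be computed for a stable, strictly proper rational transfer function without simulating the impulse response: realize $G$ in controllable canonical form $(A, B, C)$, solve the Lyapunov equation $AP + PA^T + BB^T = 0$, and read off $\mathrm{IRE} = CPC^T$. The NumPy-only sketch below assumes that definition; `ire` is a hypothetical name, and the Kronecker-product Lyapunov solve is suitable only for small orders.

```python
import numpy as np


def ire(num, den):
    """Impulse response energy int_0^inf g(t)^2 dt of G(s) = num(s) / den(s).

    num, den: polynomial coefficients in descending powers of s.
    Assumes G is strictly proper and stable (all poles in the open left half-plane).
    """
    num = np.atleast_1d(np.asarray(num, dtype=float))
    den = np.atleast_1d(np.asarray(den, dtype=float))
    n = den.size - 1
    if n < 1 or num.size > n:
        raise ValueError("G(s) must be strictly proper with order >= 1")
    num, den = num / den[0], den / den[0]  # make the denominator monic
    b = np.zeros(n)
    b[n - num.size:] = num                 # pad the numerator to length n
    # Controllable canonical realization (A, B, C) of G(s).
    A = np.zeros((n, n))
    A[:-1, 1:] = np.eye(n - 1)
    A[-1, :] = -den[1:][::-1]
    B = np.zeros((n, 1))
    B[-1, 0] = 1.0
    C = b[::-1].reshape(1, n)
    # Solve A P + P A^T + B B^T = 0 through its Kronecker-product form.
    I = np.eye(n)
    K = np.kron(A, I) + np.kron(I, A)
    P = np.linalg.solve(K, -(B @ B.T).ravel()).reshape(n, n)
    return float((C @ P @ C.T)[0, 0])


print(ire([1.0], [1.0, 1.0]))       # g(t) = exp(-t), energy 1/2
print(ire([1.0], [1.0, 3.0, 2.0]))  # g(t) = exp(-t) - exp(-2t), energy 1/12
```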

Example 1.

We first consider a SISO LTI system [16,50,51] as follows:

where , and .

In this example, we use frequency-domain measurements within the interval and basis functions within the interval . By applying the CoFML method, we obtain , and take the real part of . Then, the 2nd partial sum is given by the following:

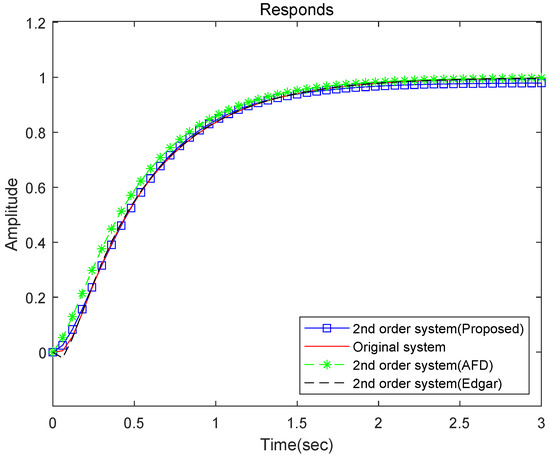

Figure 1 compares the step response of the original system with those of the reduced models obtained by CoFML and other methods, while Table 1 compares the IRE of the different techniques. Figure 1 and Table 1 show that the CoFML method is effective.

Figure 1.

Step responses of the original and reduced models.

Table 1.

IRE of the reduced models.

Example 2.

The next example, studied in [53], is a fourth-order system, where

Here, we use frequency-domain measurements in the interval and basis functions in the interval . Using the CoFML method, we obtain , and take the real part of . Then, the 2nd partial sum is given by the following:

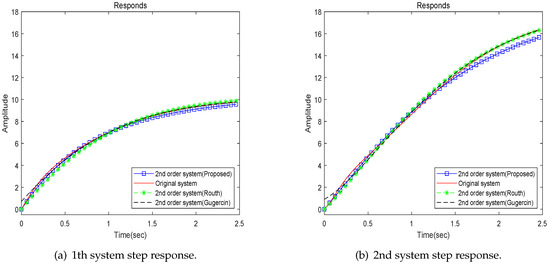

Figure 2 shows the step responses of the original and reduced models, while Table 2 compares the IRE of the different methods. Figure 2 and Table 2 show that the CoFML model is adequate.

Figure 2.

Step responses of the original and reduced models.

Table 2.

IRE of the reduced models.

Example 3.

We finally consider the transfer function studied in [54]:

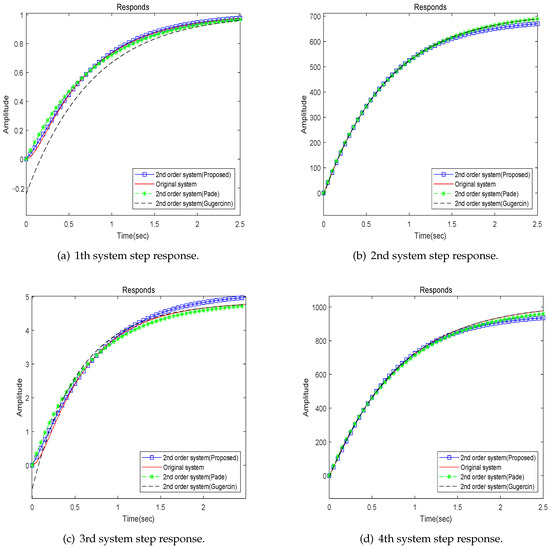

In this example, we use frequency-domain measurements within the interval and basis functions within the interval . By applying the CoFML method, we obtain , and take the real part of . With these values, the 2nd partial sum is given by the following:

Figure 3 shows the step responses of the original system and of the reduced models obtained using different methods, while Tables 3 and 4 compare the IRE of the different methods. From Figure 3 and Tables 3 and 4, we observe that the CoFML method is effective.

Figure 3.

Step responses of the original and reduced models.

Table 3.

IRE of the reduced models.

Table 4.

IRE of the reduced models.

5. Conclusions

In this paper, we developed the CoFML method to accelerate SCRVM. By replacing the explicit inversion in SCRVM with unbiased estimates of the diagonal elements of the covariance matrix, CoFML achieved better time and space efficiency than existing SCRVM techniques, especially when the number of unknowns M was large. We also theoretically analyzed the convergence of the CoFML algorithm, including convergence under preconditioned matrices. In addition, the results on three well-known examples demonstrated that CoFML outperforms existing model reduction methods for MIMO systems, even with a small data set. Moreover, CoFML applies to any scenario involving complex sparse Bayesian methods in which covariance computation is required. This versatility opens up opportunities for its use in fields such as compressed sensing, direction-of-arrival (DOA) estimation, multi-target tracking, and beyond.

Author Contributions

Methodology, W.X.; writing—original draft, J.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research is supported by the Science Foundation of Shaoguan University (No. SY2021KJ11) and the Scientific Computing Research Innovation Team of Guangdong (No. 2021KCXTD052).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ogata, K. Discrete-Time Control Systems; Prentice-Hall, Inc.: Upper Saddle River, NJ, USA, 1995.

- d’Azzo, J.J.; Houpis, C.D. Linear Control System Analysis and Design: Conventional and Modern; McGraw-Hill Higher Education: New York, NY, USA, 1995.

- Khalil, I.S.; Doyle, J.C.; Glover, K. Robust and Optimal Control; Prentice Hall: Upper Saddle River, NJ, USA, 1996; Volume 2.

- Goodwin, G.C.; Graebe, S.F.; Salgado, M.E. Control System Design; Prentice Hall: Upper Saddle River, NJ, USA, 2001; Volume 240.

- Lathi, B.P.; Green, R.A. Linear Systems and Signals; Oxford University Press: New York, NY, USA, 2005; Volume 2.

- Albertos, P.; Antonio, S. Multivariable Control Systems: An Engineering Approach; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006.

- Geromel, J.; Kawaoka, F.; Egas, R. Model reduction of discrete time systems through linear matrix inequalities. Int. J. Control 2004, 77, 978–984.

- Mittal, A.; Prasad, R.; Sharma, S. Reduction of linear dynamics systems using an error minimization technique. J.-Inst. Eng. India Part Electr. Eng. Div. 2004, 84, 201–206.

- Sandberg, H.; Lanzon, A.; Anderson, B.D. Model approximation using magnitude and phase criteria: Implications for model reduction and system identification. Int. J. Robust Nonlinear Control-IFAC-Affil. J. 2007, 17, 435–461.

- Gugercin, S.; Sorensen, D.; Antoulas, A. A modified low-rank Smith method for large-scale Lyapunov equations. Numer. Algorithms 2003, 32, 27–55.

- Penzl, T. Algorithms for model reduction of large dynamical systems. Linear Algebra Its Appl. 2006, 415, 322–343.

- Gugercin, S.; Antoulas, A.C.; Beattie, C. H2 model reduction for large-scale linear dynamical systems. SIAM J. Matrix Anal. Appl. 2008, 30, 609–638.

- Magruder, C.; Beattie, C.; Gugercin, S. Rational Krylov methods for optimal 2 model reduction. In Proceedings of the 49th IEEE Conference on Decision and Control (CDC), Atlanta, GA, USA, 15–17 December 2010; pp. 6797–6802.

- Mi, W.; Qian, T.; Wan, F. A fast adaptive model reduction method based on Takenaka–Malmquist systems. Syst. Control Lett. 2012, 61, 223–230.

- Freund, R.W. Padé–Type Model Reduction of Second-Order and Higher-Order Linear Dynamical Systems. In Dimension Reduction of Large-Scale Systems: Proceedings of the Workshop held in Oberwolfach, Germany, 19–25 October 2003; Benner, P., Sorensen, D.C., Mehrmann, V., Eds.; Lecture Notes in Computational Science and Engineering; Springer: Berlin/Heidelberg, Germany, 2004.

- Parmar, G.; Mukherjee, S.; Prasad, R. System reduction using eigen spectrum analysis and Padé approximation technique. Int. J. Comput. Math. 2007, 84, 1871–1880.

- Walsh, J.L. Interpolation and Approximation by Rational Functions in the Complex Domain; American Mathematical Soc.: Providence, RI, USA, 1935; Volume 20.

- Heuberger, P.S.C.; Hof, P.M.J.V.D.; Bosgra, O.H. A generalized orthonormal basis for linear dynamical systems. In Proceedings of the 32nd IEEE Conference on Decision and Control, New Orleans, LA, USA, 13–15 December 1995.

- Ward, N.F.D.; Partington, J.R. Rational wavelet decompositions of transfer functions in Hardy–Sobolev classes. Math. Control Signals Syst. 1995, 8, 257–278.

- Akçay, H.; Ninness, B. Orthonormal basis functions for modelling continuous-time systems. Signal Process. 1999, 77, 261–274.

- Akçay, H.; Heuberger, P. A frequency-domain iterative identification algorithm using general orthonormal basis functions. Automatica 2001, 37, 663–674.

- Hof, P.V.D.; Wahlberg, B.; Heuberger, P.; Ninness, B.; Bokor, J.; e Silva, T.O. Modelling and Identification with Rational Orthogonal Basis Functions. IFAC Proc. Vol. 2000, 33, 445–455.

- Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning; Springer: Berlin/Heidelberg, Germany, 2006; Volume 4.

- Berger, J.O. Statistical Decision Theory and Bayesian Analysis; Springer: Berlin/Heidelberg, Germany, 1985.

- Luo, J.; Vong, C.M.; Wong, P.K. Sparse Bayesian extreme learning machine for multi-classification. IEEE Trans. Neural Netw. Learn. Syst. 2013, 25, 836–843.

- Tipping, M.E. Sparse Bayesian learning and the relevance vector machine. J. Mach. Learn. Res. 2001, 1, 211–244.

- Demir, B.; Erturk, S. Hyperspectral image classification using relevance vector machines. IEEE Geosci. Remote Sens. Lett. 2007, 4, 586–590.

- Mianji, F.A.; Zhang, Y. Robust hyperspectral classification using relevance vector machine. IEEE Trans. Geosci. Remote Sens. 2011, 49, 2100–2112.

- Liu, X.; Chen, X.; Li, J.; Zhou, X.; Chen, Y. Facies identification based on multikernel relevance vector machine. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7269–7282.

- Ospina-Acero, D.; Marashdeh, Q.M.; Teixeira, F.L. Relevance vector machine image reconstruction algorithm for electrical capacitance tomography with explicit uncertainty estimates. IEEE Sens. J. 2020, 20, 4925–4939.

- Zhang, J.; Qiu, T.; Luan, S. An efficient real-valued sparse Bayesian learning for non-circular signal’s DOA estimation in the presence of impulsive noise. Digit. Signal Process. 2020, 106, 102838.

- Dai, J.; So, H.C. Real-valued sparse Bayesian learning for DOA estimation with arbitrary linear arrays. IEEE Trans. Signal Process. 2021, 69, 4977–4990.

- Lu, J.; Yang, Y.; Yang, L. An efficient off-grid direction-of-arrival estimation method based on inverse-free sparse Bayesian learning. Appl. Acoust. 2023, 211, 109521.

- Ji, S.; Dunson, D.; Carin, L. Multitask compressive sensing. IEEE Trans. Signal Process. 2008, 57, 92–106.

- Cheng, H.; Chen, H.; Jiang, G.; Yoshihira, K. Nonlinear feature selection by relevance feature vector machine. In Proceedings of the International Workshop on Machine Learning and Data Mining in Pattern Recognition, Leipzig, Germany, 18–20 July 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 144–159.

- Wipf, D.P.; Rao, B.D. Bayesian learning for sparse signal reconstruction. In Proceedings of the 2003 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP’03), Hong Kong, 6–10 April 2003; Volume 6, p. VI–601.

- Huang, K.; Aviyente, S. Sparse representation for signal classification. Adv. Neural Inf. Process. Syst. 2006, 19.

- Zhang, Z.; Rao, B.D. Sparse signal recovery with temporally correlated source vectors using sparse Bayesian learning. IEEE J. Sel. Top. Signal Process. 2011, 5, 912–926.

- Bilgic, B.; Goyal, V.K.; Adalsteinsson, E. Multi-contrast reconstruction with Bayesian compressed sensing. Magn. Reson. Med. 2011, 66, 1601–1615.

- Hossain, A.; Nasser, M. Recurrent support and relevance vector machines based model with application to forecasting volatility of financial returns. J. Intell. Learn. Syst. Appl. 2011, 3, 230.

- Wipf, D.; Nagarajan, S. Iterative Reweighted ℓ1 and ℓ2 Methods for Finding Sparse Solutions. IEEE Trans. Signal Process. 2010, 4, 317–329.

- Fang, J.; Zhang, L.; Li, H. Two-Dimensional Pattern-Coupled Sparse Bayesian Learning via Generalized Approximate Message Passing. IEEE Trans. Image Process. 2016, 25, 2920–2930.

- Duan, H.; Yang, L.; Fang, J.; Li, H. Fast inverse-free sparse Bayesian learning via relaxed evidence lower bound maximization. IEEE Signal Process. Lett. 2017, 24, 774–778.

- Lin, A.; Song, A.H.; Bilgic, B.; Ba, D. Covariance-free sparse Bayesian learning. IEEE Trans. Signal Process. 2022, 70, 3818–3831.

- Boloix-Tortosa, R.; Murillo-Fuentes, J.J.; Velázquez, I.S.; Pérez-Cruz, F. Complex-Valued Kernel Methods for Regression. arXiv 2016, arXiv:1610.09915.

- Schreier, P.J.; Scharf, L.L. Statistical Signal Processing of Complex-Valued Data: The Theory of Improper and Noncircular Signals; Cambridge University Press: Cambridge, MA, USA, 2010.

- Bekas, C.; Kokiopoulou, E.; Saad, Y. An estimator for the diagonal of a matrix. Appl. Numer. Math. 2007, 57, 1214–1229.

- Young, D.M. Iterative Solution of Large Linear Systems; Elsevier: Amsterdam, The Netherlands, 2014.

- Strang, G. Linear Algebra and Its Applications; Pearson Education India: Bangalore, India, 2012.

- Mukherjee, S. Order Reduction of Linear Systems using Eigenspectrum Analysis. J. Inst. Eng. (India) Electr. Eng. Div. 1996, 77, 76–79.

- Therapos, C.P.; Diamessis, J.E. A new method for linear system reduction. J. Frankl. Inst. 1984, 317, 359–371.

- Edgar, T.F. Least squares model reduction using step response. Int. J. Control 1975, 22, 261–270.

- Hutton, M.; Friedland, B. Routh approximations for reducing order of linear, time-invariant systems. IEEE Trans. Autom. Control 1975, 20, 329–337.

- Shamash, Y. Linear system reduction using Pade approximation to allow retention of dominant modes. Int. J. Control 1975, 21, 257–272.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).