Abstract

Knowledge graph embedding (KGE) has been identified as an effective method for link prediction, which involves predicting missing entities or relations from existing ones. KGE is an important approach to knowledge representation and, as such, has been widely used to drive intelligent applications such as question-answering systems, recommendation systems, and relation extraction. Models based on convolutional neural networks (CNNs) have achieved good results in link prediction. However, as the coverage of knowledge graphs expands, the increasing volume of information significantly limits the performance of these models. This article introduces ConvAMC, a triple-attention-based multi-channel CNN model for the KGE task. In the embedding representation module, entities and relations are embedded into a complex space, and the embeddings are reshaped in an alternating pattern. This approach helps capture richer semantic information and enhances the expressive power of the model. In the encoding module, a multi-channel approach is employed to extract more comprehensive interaction features. A triple attention mechanism and max pooling layers ensure that interactions between spatial dimensions and output tensors are captured during the subsequent tensor concatenation and reshaping, which preserves local and detailed information. Finally, the feature vectors are transformed into embedding prediction targets through the Hadamard product of the feature mapping and reshaping matrices. Extensive experiments were conducted to compare ConvAMC with state-of-the-art (SOTA) models on three benchmark datasets. The proposed model outperforms all compared models on all evaluation metrics on two of the datasets and achieves advanced link prediction results on most evaluation metrics on the third.

Keywords:

knowledge graph embedding (KGE); link prediction; convolutional neural network (CNN); multi-channel convolution; triple attention

MSC:

68W11; 94-04

1. Introduction

Knowledge graphs consist of valid triplets of the form (head entity, relation, tail entity) and are represented as directed graphs that depict real-world entities and the relationships between them: nodes represent entities, and edges represent the relations between entities. As an effective knowledge resource, knowledge graphs are widely applied in artificial intelligence tasks, including recommendation systems [1], question-answering systems [2], and information retrieval systems. Large-scale knowledge graphs, such as YAGO [3], Freebase [4], DBpedia [5], and WordNet [6], store vast amounts of structured data. However, many valid triplets are lost during the construction of a knowledge graph, which degrades performance in downstream tasks.

A primary task on knowledge graphs is to predict missing entities or relations. An effective method currently used for this link prediction task is knowledge graph embedding (KGE), which embeds entities and relations into a vector space where additive or multiplicative operations are performed on them. A scoring function then evaluates whether the predicted entities or relations conform to objective facts. Existing KGE models can broadly be categorized into translation-based models, semantic matching models, and neural-network-based models. Typical translation-based models include TransE [7], TransH [8], and TransR [9]. These models use distance as a criterion to measure the semantic similarity between entities and relations and are characterized by additive operations. The main representatives of semantic matching models are DistMult [10] and ComplEx [11], which capture relational semantics through matrix multiplication and are typical multiplicative models. Building on these approaches, more powerful models such as RotatE [12] and TuckER [13] have been developed.

With the success of neural networks in the field of knowledge graphs, convolutional neural networks (CNNs) have attracted researchers' attention. For instance, ConvE [14] applies a two-dimensional (2D) convolution to extract features from a matrix whose elements represent entity and relation information. However, it uses only a single input channel, which limits the interaction between entities and relations, and its reshaping and concatenation process is not easily interpretable. HypER [15] enhances the interaction between entities and relations by using entities as the input to the convolution and relations as filters. In M-DCN [16], multi-scale filters are dynamically generated in the convolutional layer, with the filter weights tied to each relation, to learn different features between input embeddings. Although these models have optimized CNNs and achieved commendable results, their feature interaction remains limited and their extraction of detailed information is insufficient, which constrains their performance.

The scale of real-world knowledge graphs is increasing, which places higher demands on models, especially on their computational efficiency and scalability. As the data volume of knowledge graphs grows rapidly, handling ever larger numbers of entities and relations has become a major challenge. The time and space complexity of many existing models may grow prohibitively on large-scale datasets, motivating the search for more efficient models. Distributed computing frameworks offer another way to process large-scale knowledge graph data.

Therefore, this article proposes ConvAMC, a highly expressive KGE model. First, entities and relations are embedded as a whole to preserve as much of their original semantic information as possible. They are then reshaped and concatenated in an alternating manner to extract additional feature interactions. Second, multi-channel convolution is used to learn different features between the input embeddings and the output feature maps. To capture local features and detailed information during the interaction between entities and relations, a max pooling layer is inserted in each convolutional channel; this preserves key feature information while reducing the model's parameters and computational load, which helps prevent overfitting. To better capture the interactions between spatial dimensions and input tensor channels during multi-channel convolution, an attention module is introduced. This module applies a triple attention mechanism in each convolutional channel, focusing specifically on the entities and relations of triplets to extract their interaction features more comprehensively, while the computational cost added across channels remains within an acceptable range. Finally, the feature mapping tensors obtained from the multi-channel convolution are concatenated and reshaped again, then mapped to a complex space after a 2D convolution, and the Hadamard product of the entity and relation vectors yields the scores of the predicted triplets.

The main contributions of this article can be summarized as follows:

- A multi-channel CNN is proposed to ensure interaction between entities and relations across multiple channels, with a max pooling layer added to each convolutional channel to capture more local features.

- The CNN structure is optimized by introducing a triple attention mechanism into the multi-channel convolutional process, enhancing the extraction of global information about entities and relations across the convolutional channels.

- The feature mapping tensors obtained from the multi-channel convolution are combined in the form of a Hadamard product: the traditional inner product operation in the complex space is abandoned in favor of the more comprehensive Hadamard product to achieve better predictions.

In extensive experiments, ConvAMC is compared with state-of-the-art (SOTA) models; the link prediction results show that ConvAMC achieves better embedding performance and leads on most metrics.

The rest of this article is organized as follows. In Section 2, related work on KGE is briefly reviewed and analyzed. The research problem is formally defined and the details of ConvAMC are introduced in Section 3. Experimental results achieved by the proposed model on three public datasets are presented in Section 4. Finally, conclusions and future work directions are presented in Section 5.

2. Related Work

The use of KGE models to predict missing entities and relations in knowledge graphs has received widespread attention, leading to a range of classic KGE models. As mentioned in the Introduction, KGE models can be divided into three main categories: translation-based models, semantic matching models, and neural-network-based models.

A typical representative of translation-based models is TransE [7], which views the relation between entities in a fact triplet as a translation in a low-dimensional vector space. For a given fact triplet $(h, r, t)$, TransE learns vectors $\mathbf{h}$, $\mathbf{r}$, and $\mathbf{t}$ and aims to maintain $\mathbf{h} + \mathbf{r} \approx \mathbf{t}$ for valid triplets. The loss function is optimized as follows:

$$ \mathcal{L} = \sum_{(h,r,t) \in S} \sum_{(h',r,t') \in S'} \left[ \gamma + \lVert \mathbf{h} + \mathbf{r} - \mathbf{t} \rVert_{L_1/L_2} - \lVert \mathbf{h}' + \mathbf{r} - \mathbf{t}' \rVert_{L_1/L_2} \right]_+ $$

where $\lVert \cdot \rVert_{L_1/L_2}$ denotes the L1 or L2 norm, $(h', r, t')$ denotes an invalid triplet ($S$ and $S'$ being the sets of valid and invalid triplets), γ denotes the margin hyperparameter, and $[x]_+ = \max(0, x)$ extracts the larger of 0 and $x$. TransE is fast and efficient for link prediction, but it is only suitable for one-to-one relations and does not handle complex relations well. Consequently, many embedding models have extended it. TransH [8] introduces relation-specific hyperplanes, a simple approach that overcomes the shortcomings of TransE on complex relations while keeping almost the same model complexity. TransR [9] builds on TransH by assuming that entities and relations lie in different semantic spaces. TransD [17] represents each entity with two vectors and constructs triplet-specific mapping matrices for the subsequent projections, significantly improving performance. These extensions have enhanced the performance of TransE in various ways.
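The translation-based loss described above can be checked on toy vectors. The sketch below is a minimal plain-Python illustration, assuming each valid triplet is paired with exactly one corrupted triplet; the function names `transe_distance` and `margin_loss` and the example vectors are our own, not part of any library.

```python
import math

def transe_distance(h, r, t, norm=1):
    """Distance between h + r and t under the L1 (norm=1) or L2 (norm=2) norm."""
    diff = [hi + ri - ti for hi, ri, ti in zip(h, r, t)]
    if norm == 1:
        return sum(abs(x) for x in diff)
    return math.sqrt(sum(x * x for x in diff))

def margin_loss(valid, corrupted, gamma=1.0, norm=1):
    """Sum of max(0, gamma + d(valid) - d(corrupted)) over paired triplets.

    Assumption: each valid triplet is paired with exactly one corrupted triplet.
    """
    total = 0.0
    for (h, r, t), (h2, r2, t2) in zip(valid, corrupted):
        d_pos = transe_distance(h, r, t, norm)
        d_neg = transe_distance(h2, r2, t2, norm)
        total += max(0.0, gamma + d_pos - d_neg)
    return total

# A valid triplet with h + r == t, and a corrupted tail that is only slightly off.
valid = [([0.0, 0.0], [1.0, 0.0], [1.0, 0.0])]
corrupted = [([0.0, 0.0], [1.0, 0.0], [1.0, 0.5])]
print(margin_loss(valid, corrupted))  # 0.5: the corrupted triplet lies inside the margin
```

Pushing the corrupted tail further away (for example to [0, 1], at L1 distance 2 from h + r) drives the loss to zero, since the margin γ = 1 is then satisfied.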

KGE methods based on semantic matching use tensors to represent entities and relations. These models apply tensor decomposition to model relations and define a scoring function as a bilinear product of the subject and object entity vectors with a full-rank relation matrix. RESCAL [18] is one of the earliest semantic matching models and scores triplets with such a bilinear function. However, RESCAL tends to overfit because of the large number of parameters it requires during training. In response, DistMult [10], a special case of RESCAL, replaces the full-rank relation matrix with a diagonal matrix, which effectively reduces the number of parameters and the likelihood of overfitting. However, this constraint limits DistMult to symmetric relations, making it unable to model asymmetric ones. ComplEx [11] extends the embedding space of DistMult to the complex space and uses the complex conjugate of the object embedding in its score; this introduces asymmetry into the tensor decomposition, removes DistMult's restriction to symmetric relations, and improves the capability to handle various relation patterns. Inspired by Euler's formula, RotatE [12] projects entities and relations into a complex vector space and interprets relations as rotations between entities, with triplet scores computed using the Hadamard product. RotatE is scalable and excels at inferring and modeling various relation patterns, including symmetric relations, making it a powerful model. However, its performance on commutative and non-commutative composition patterns still needs improvement.
Therefore, based on RotatE, Rotate3D was proposed in [19]; it maps entities into a 3D space and represents relations as rotations. By exploiting the composition property of 3D rotations, Rotate3D naturally preserves the order of relations and outperforms RotatE in modeling both commutative and non-commutative composition patterns.
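For reference, the multiplicative scores discussed above can be written in a few lines of Python using built-in complex numbers. This sketch follows the standard published score forms of DistMult, ComplEx, and RotatE (omitting RotatE's margin constant); the function names are ours. The two printed comparisons show why the complex conjugate matters: DistMult scores a triplet and its reverse identically, whereas ComplEx does not.

```python
import cmath

def distmult_score(h, r, t):
    """DistMult: sum_i h_i * r_i * t_i, which is symmetric in h and t."""
    return sum(hi * ri * ti for hi, ri, ti in zip(h, r, t))

def complex_score(h, r, t):
    """ComplEx: Re(sum_i h_i * r_i * conj(t_i)) with complex-valued embeddings."""
    return sum(hi * ri * complex(ti).conjugate() for hi, ri, ti in zip(h, r, t)).real

def rotate_score(h, phases, t):
    """RotatE without the margin constant: -sum_i |h_i * r_i - t_i|, with r_i = exp(i * theta_i)."""
    r = [cmath.exp(1j * theta) for theta in phases]
    return -sum(abs(hi * ri - ti) for hi, ri, ti in zip(h, r, t))

# DistMult cannot tell (h, r, t) from (t, r, h); ComplEx can.
print(distmult_score([1, 2], [3, 4], [5, 6]), distmult_score([5, 6], [3, 4], [1, 2]))  # 63 63
print(complex_score([1j], [1j], [1]), complex_score([1], [1j], [1j]))  # -1.0 1.0
```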

Current KGE research has gradually shifted toward neural-network-based models. ConvE [14] was a significant breakthrough in applying CNNs to KGE: it exploits the characteristics of 2D CNNs, processing input features with 2D convolutional filters to produce output vectors, and then obtains scores for predicted triplets from the inner product of these output vectors with the tail entity embeddings. ConvKB [20] holds that one-dimensional (1D) convolutions are beneficial for capturing global relationships and therefore applies 1D convolutional filters to the embeddings to score triplets. HypER [15] uses a hypernetwork to generate a set of relation-specific 1D convolutional filters that convolve the head entity embeddings. ConvR [21] addresses the limited interactions in ConvE by using adaptive relation-specific filters instead of global filters. AcrE [22] employs a novel CNN structure that uses various types of filters to extract interaction features between entities and relations. In M-DCN [16], filters with different weights are generated in the convolutional layer, each dynamically tied to a relation, so that the model can handle diverse types of relations. CTKGC [23] combines a 2D CNN with a translation-based model, positing that the multiplicative approximation of entities and relations is equivalent to the factual representation of the object. ConvEICF [24] enhances and optimizes four critical aspects of CNNs in link prediction tasks. ConvHLE [25] introduces a network that convolves high- and low-level feature interactions, effectively capturing entity and relation information and generating more informative vector representations.
In addition, to overcome the large storage cost caused by the many embedding parameters of knowledge graphs in practical applications, researchers have proposed KGE distillation models such as DualDE [26], which compresses KGEs into a low-dimensional space by considering the interaction between teacher and student models, effectively transferring the teacher's knowledge to the student, greatly reducing the number of parameters, and improving efficiency and performance.

CNN-based models are recognized as having better feature extraction and expressive capabilities than the other two categories. However, their parameters do not scale with the expansion of datasets. Consequently, when facing increasingly large knowledge graph datasets, these models can only extract features at a single level, leaving room for improvement in extracting deeper local information.

Table 1 summarizes the scoring functions, embedding spaces, and relation parameters of several classic and advanced KGE models, where $d_e$ and $d_r$ denote the embedding dimensions of entities and relations, respectively; $\mathbb{R}$ and $\mathbb{C}$ denote the Euclidean space and the complex space, respectively; $*$ denotes a convolution operation; $\hat{\mathbf{h}}$, $\hat{\mathbf{r}}$, and $\hat{\mathbf{t}}$ denote the reshaping and concatenation of the head entity, relation, and tail entity within a triplet; $\overline{\mathbf{t}}$ denotes the conjugate of the tail entity in the complex space; $\mathrm{Re}(\cdot)$ denotes the real part; $f$ denotes a nonlinear function; and $\cdot$ denotes the dot product.

Table 1.

Comparison of scoring functions, embedding spaces, and relationship parameters of different KGE models.

We start from the mathematical definition of convolution:

$$ (f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau, \qquad (f * g)[n] = \sum_{m} f[m]\, g[n - m] $$

The essence of convolution is thus flipping, multiplying, and adding. In CNNs, the loss function gradually decreases as training iterations increase, so that the model's parameters converge to stable values. The output of a convolutional layer can be represented as follows:

$$ Y = W * X + b $$

where $X$ denotes the input feature map, $W$ denotes the convolution kernel weights, $b$ denotes the bias, and $*$ denotes the convolution operation.
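The flip, shift, multiply, and add steps of discrete convolution, and the output of a single-channel 2D convolutional layer (input feature map, kernel weights, bias), can be sketched in plain Python as follows. As in most deep learning libraries, the 2D layer is written as cross-correlation (the kernel is not flipped), which is equivalent to convolution with a flipped kernel; the function names are illustrative.

```python
def conv1d_full(f, g):
    """Discrete convolution (f * g)[n] = sum_m f[m] * g[n - m]: flip g, shift, multiply, add."""
    out = [0] * (len(f) + len(g) - 1)
    for n in range(len(out)):
        for m in range(len(f)):
            if 0 <= n - m < len(g):
                out[n] += f[m] * g[n - m]
    return out

def conv2d_layer(x, w, b):
    """Single-channel layer output Y = W * X + b as 'valid' cross-correlation with stride 1."""
    kh, kw = len(w), len(w[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    y = [[0.0] * ow for _ in range(oh)]
    for i in range(oh):
        for j in range(ow):
            acc = b
            for u in range(kh):
                for v in range(kw):
                    acc += w[u][v] * x[i + u][j + v]
            y[i][j] = acc
    return y

print(conv1d_full([1, 2], [3, 4]))  # [3, 10, 8]
print(conv2d_layer([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, 1]], 1))  # [[7, 9], [13, 15]]
```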

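During the training of the proposed model, we use gradient descent to optimize the model's parameters $\theta$, as follows:

$$ \theta_{t+1} = \theta_t - \eta\, \nabla_{\theta} \mathcal{L}(\theta_t) $$

where $\eta$ denotes the learning rate and $\mathcal{L}$ denotes the loss function.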
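As a minimal sketch of this update rule, assuming a toy one-parameter loss L(θ) = (θ − 3)² whose minimizer is known, plain gradient descent can be written as follows; the learning rate and step count are illustrative.

```python
def gradient_descent(grad, theta0, lr=0.1, steps=100):
    """Iterate theta <- theta - lr * grad(theta) and return the final parameter."""
    theta = theta0
    for _ in range(steps):
        theta = theta - lr * grad(theta)
    return theta

# Toy loss L(theta) = (theta - 3)^2 with gradient 2 * (theta - 3).
theta_star = gradient_descent(lambda th: 2.0 * (th - 3.0), theta0=0.0)
print(theta_star)  # very close to 3.0
```

Each step multiplies the error θ − 3 by 1 − 2·lr, so with lr = 0.1 it shrinks by a factor of 0.8 per step. With lr = 1.5 the same loop diverges, because the factor becomes −2; convergence arguments of this kind assume a sufficiently small learning rate.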

In the convolutional channels of the proposed ConvAMC model, the loss function is bounded and continuous with respect to the model's parameters, and its gradients are likewise bounded; with a sufficiently small learning rate, this supports the convergence of the CNN layers.

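The triple attention mechanism, used to enhance the information flow and feature expression of the proposed model, involves three types of attention, namely self-attention, context-attention, and inter-attention, with the following attention weight computations:

$$ A_{\mathrm{self}} = \mathrm{SoftMax}\left(\frac{Q_s K_s^{\top}}{\sqrt{d_k}}\right), \quad A_{\mathrm{ctx}} = \mathrm{SoftMax}\left(\frac{Q_c K_c^{\top}}{\sqrt{d_k}}\right), \quad A_{\mathrm{inter}} = \mathrm{SoftMax}\left(\frac{Q_i K_i^{\top}}{\sqrt{d_k}}\right) $$

where $Q_s$, $Q_c$, and $Q_i$ denote the query matrices and $K_s$, $K_c$, and $K_i$ denote the key matrices of the respective attention types, and $d_k$ denotes the dimension of the keys.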
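The three weight computations described above can be sketched as scaled dot-product attention weights, SoftMax(Q Kᵀ / √d_k), computed once per attention type. This is an illustrative reading that assumes each type (self, context, inter) uses the standard scaled dot-product form; how the queries and keys are derived for each type is not specified here, so the toy matrices and the helper names are placeholders.

```python
import math

def softmax(z):
    """Numerically stable SoftMax: subtract the max before exponentiating."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def attention_weights(Q, K):
    """Row-wise SoftMax(Q K^T / sqrt(d_k)) for query rows Q and key rows K."""
    d_k = len(K[0])
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
              for q_row in Q]
    return [softmax(row) for row in scores]

def triple_attention_weights(qk_pairs):
    """One weight matrix per attention type, e.g. {'self': (Q, K), 'context': ..., 'inter': ...}."""
    return {name: attention_weights(Q, K) for name, (Q, K) in qk_pairs.items()}

# Hypothetical toy queries and keys; in a real model they would come from channel features.
W = triple_attention_weights({
    "self": ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]),
    "context": ([[1.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]),
    "inter": ([[0.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]),
})
print(W["inter"])  # [[0.5, 0.5]]
```

Every row of every weight matrix sums to 1, which is the normalization property the stability argument relies on.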

The stability of the triple attention mechanism stems from the normalization operation, in which the SoftMax function converts its input into a probability distribution whose weights sum to 1, as per the following formula:

$$ \mathrm{SoftMax}(z)_i = \frac{e^{z_i}}{\sum_{j} e^{z_j}} $$

If the input is bounded, then the output is also bounded.

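During training, stability also depends on how the gradient of the loss function changes with respect to the attention weights $\alpha = \mathrm{SoftMax}(z)$:

$$ \frac{\partial \mathcal{L}}{\partial \alpha} = \frac{\partial \mathcal{L}}{\partial y} \cdot \frac{\partial y}{\partial \alpha} $$

where $y$ denotes the final output. Because the derivative of the SoftMax function, $\partial \alpha_i / \partial z_j = \alpha_i(\delta_{ij} - \alpha_j)$, is bounded (each entry has magnitude at most 1/4), small changes in the input do not cause drastic fluctuations in the output. This keeps the gradient stable and, in turn, keeps the triple attention mechanism used by the proposed model stable.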
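The bound on the SoftMax derivative can be checked numerically. The entries of the SoftMax Jacobian are α_i(δ_ij − α_j); since α_i(1 − α_i) ≤ 1/4 and, for i ≠ j, α_i α_j ≤ 1/4 whenever α_i + α_j ≤ 1, no entry exceeds 1/4 in magnitude, whatever the input. The following plain-Python sketch (helper names are ours) computes the Jacobian and checks this.

```python
import math

def softmax(z):
    """Numerically stable SoftMax."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def softmax_jacobian(z):
    """J[i][j] = d alpha_i / d z_j = alpha_i * (delta_ij - alpha_j), with alpha = SoftMax(z)."""
    a = softmax(z)
    n = len(a)
    return [[a[i] * ((1.0 if i == j else 0.0) - a[j]) for j in range(n)] for i in range(n)]

# Even for widely spread inputs, every Jacobian entry stays within [-1/4, 1/4].
J = softmax_jacobian([100.0, -50.0, 3.0, 0.0])
print(max(abs(x) for row in J for x in row) <= 0.25)  # True
```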

3. Proposed Model

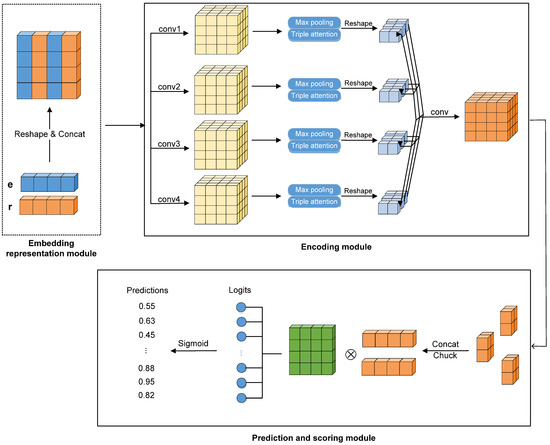

This section describes in detail ConvAMC, the novel CNN-based KGE model proposed in this article. As shown in Figure 1, its structure comprises three main modules: an embedding representation module, an encoding module, and a prediction and scoring module.

Figure 1.

The end-to-end structure of the proposed ConvAMC model (e denotes the entity embedding; r denotes the relation embedding; Reshape and Concat denote the reshaping and concatenation operations, respectively; conv denotes the convolution operation; Chunk denotes the splitting operation; ⊙ denotes the Hadamard product operation).


The embedding representation module initializes all entities and relations to form a K-dimensional embedding matrix of entities and relations for subsequent processing and computation. The encoding module processes this embedding matrix through multi-channel convolution and a triple attention mechanism to obtain the feature mapping matrix of entities and relations, which serves as the input to the prediction and scoring module. There, the matrix is projected into the complex space and split into real and imaginary parts according to the embedding dimensions of entities and relations. A scoring function then yields a score for each candidate entity, and the candidates are ranked by score in descending order.

3.1. Embedding Representation Module

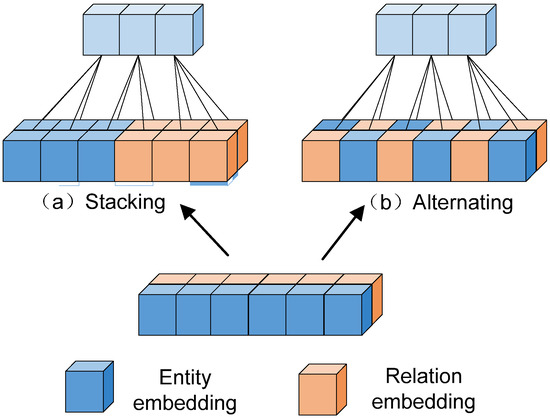

In knowledge graphs, triplets exist in forms such as text, numerical values, and symbols, which cannot be computed on directly. Therefore, $K$-dimensional embeddings are initialized for all entities $e \in \mathcal{E}$ and relations $r \in \mathcal{R}$, yielding vector representations $\mathbf{e}, \mathbf{r} \in \mathbb{R}^{K}$. To establish the connections between entities and relations more accurately, the entity and relation embeddings are treated as a whole to capture more semantic information. During reshaping, the traditional stacking method is abandoned in favor of an alternating reshaping method that enhances the interaction between entities and relations and captures more latent information. As shown in Figure 2, the alternating reshaping method connects an entity and a relation, starting from the following vector [16]:

$$ \mathbf{x} = \mathbf{e} \oplus \mathbf{r} \in \mathbb{R}^{2K} $$

where $\oplus$ denotes the concatenation of entities and relations as a whole. After alternating reshaping, the following matrix is obtained:

$$ \mathbf{X} = [\mathbf{x}] = \begin{bmatrix} e_{1} & \cdots & e_{n} \\ r_{1} & \cdots & r_{n} \\ e_{n+1} & \cdots & e_{2n} \\ r_{n+1} & \cdots & r_{2n} \\ \vdots & & \vdots \end{bmatrix} \in \mathbb{R}^{m \times n} $$

where $[\cdot]$ denotes the reshaping operation and $m \times n = 2K$. 2D convolution operations are then employed to generate more interaction features between the combined entity and relation, articulating the connections between them more clearly.

Figure 2.

Two reshaping methods: (a) stacking; (b) alternating.
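The difference between the stacking and alternating reshaping methods in Figure 2 can be illustrated on toy vectors. The sketch assumes that each row of the reshaped matrix takes a contiguous block of n entries from either the entity or the relation, and that the alternating layout switches between them row by row; the exact layout used by ConvAMC may differ, and the helper names are ours.

```python
def stack_reshape(e, r, n):
    """Stacking: all entity rows first, then all relation rows (n entries per row)."""
    rows_e = [e[i:i + n] for i in range(0, len(e), n)]
    rows_r = [r[i:i + n] for i in range(0, len(r), n)]
    return rows_e + rows_r

def alternate_reshape(e, r, n):
    """Alternating: entity and relation rows interleave (e-row, r-row, e-row, ...)."""
    if len(e) != len(r) or len(e) % n != 0:
        raise ValueError("e and r must have equal length divisible by n")
    rows = []
    for i in range(0, len(e), n):
        rows.append(e[i:i + n])
        rows.append(r[i:i + n])
    return rows

e = ["e1", "e2", "e3", "e4"]
r = ["r1", "r2", "r3", "r4"]
print(stack_reshape(e, r, 2))      # [['e1', 'e2'], ['e3', 'e4'], ['r1', 'r2'], ['r3', 'r4']]
print(alternate_reshape(e, r, 2))  # [['e1', 'e2'], ['r1', 'r2'], ['e3', 'e4'], ['r3', 'r4']]
```

In the stacked layout, a k × k kernel (k ≥ 2) sees both entity and relation entries only near the single boundary between the two halves; in the alternating layout every pair of adjacent rows contains an entity row and a relation row, so every kernel position covers both.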

3.2. Encoding Module

In the encoding module, a linear transformation is used for the interaction between entities and relations to best match the mapping relationships between head entities, relations, and tail entities. First, entity features and relation features are concatenated into a long feature vector, which is fed into a linear layer to derive new embedding representations for entities and relations. The concatenated feature representation is mapped to the complex space to better capture the complex relationships between entities and relations. The entity-relation interaction is computed as follows:

$$ \mathbf{z} = \mathbf{W}(\mathbf{e} \oplus \mathbf{r}) + \mathbf{b} $$

where $\oplus$ denotes concatenation, and $\mathbf{W}$ and $\mathbf{b}$ denote the weight matrix and bias of the linear layer. After the interaction between entities and relations, the result is reshaped to dimensions $m \times n$, where $m$ and $n$ denote the dimensions of the input 2D matrix such that $m \times n$ equals the dimension of $\mathbf{z}$. Convolution is then used to extract additional feature information for modeling the entities and relations.
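The interaction step can be sketched as concatenation, a linear layer, and a row-major reshape. The weight matrix, the bias, and the assumption that the linear layer preserves the length of the concatenated vector (so that an identity matrix is a valid example) are illustrative; the complex-space mapping is not modeled in this sketch.

```python
def linear(x, W, b):
    """y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def reshape(v, m, n):
    """Row-major reshape of a length m * n list into an m x n matrix."""
    if len(v) != m * n:
        raise ValueError("m * n must equal the vector length")
    return [v[i * n:(i + 1) * n] for i in range(m)]

def interact(e, r, W, b, m, n):
    """Concatenate e and r, apply the linear layer, and reshape for 2D convolution."""
    z = linear(e + r, W, b)  # list concatenation plays the role of e (+) r
    return reshape(z, m, n)

identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
print(interact([1.0, 2.0], [3.0, 4.0], identity, [0.5] * 4, 2, 2))  # [[1.5, 2.5], [3.5, 4.5]]
```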

During convolution, the features extracted by a single convolutional channel contain less detail but more comprehensive semantic information. To capture multi-level feature information and further improve the quality of entity-relation interactions, four 2D convolutional channels, each with a max pooling layer, are employed. The new feature matrices generated by the entity-relation interaction serve as the inputs to the convolutional channels, allowing detailed features to be better extracted across channels. The max pooling layers then extract the most prominent features of the feature matrices, significantly reducing the size of the feature tensor, alleviating the computational burden, and compensating for the lack of detail in the features extracted by convolution.

Max pooling is applied in each convolutional channel to compute the channel attention, and a multi-layer perceptron (MLP) is used to extract channel features. The interaction features of entities and relations after max pooling are represented as follows:

$$ \mathbf{F}' = \sigma\big(\mathrm{MLP}(\mathrm{MaxPool}(\mathbf{F}))\big) $$

where $\mathbf{F}$ denotes the feature map of a convolutional channel and $\sigma$ denotes the tanh function.
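Assuming the MLP has one hidden ReLU layer and a tanh output (the widths and weights below are illustrative, not taken from the paper), the max-pooled channel features can be computed as follows.

```python
import math

def max_pool2d(x, k=2):
    """Non-overlapping k x k max pooling (stride k); incomplete border windows are dropped."""
    return [[max(x[i + u][j + v] for u in range(k) for v in range(k))
             for j in range(0, len(x[0]) - k + 1, k)]
            for i in range(0, len(x) - k + 1, k)]

def mlp_tanh(v, W1, b1, W2, b2):
    """tanh(W2 relu(W1 v + b1) + b2): one hidden ReLU layer with a tanh output (assumed layout)."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v)) + b) for row, b in zip(W1, b1)]
    return [math.tanh(sum(w * h for w, h in zip(row, hidden)) + b) for row, b in zip(W2, b2)]

fmap = [[1, 2, 0, 1],
        [3, 4, 1, 0],
        [0, 0, 5, 6],
        [1, 2, 7, 8]]
pooled = max_pool2d(fmap)                  # [[4, 1], [2, 8]]
flat = [x for row in pooled for x in row]  # [4, 1, 2, 8]
out = mlp_tanh(flat, W1=[[0.25, 0.25, 0.25, 0.25]], b1=[0.0], W2=[[1.0], [-1.0]], b2=[0.0, 0.0])
print(out)  # two values of opposite sign, each inside (-1, 1)
```

The tanh output keeps every feature inside (−1, 1), regardless of how large the pooled activations are.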

Max pooling, however, discards some information, which is not conducive to prediction tasks. To address this issue, a triple attention mechanism is employed in each convolutional channel. The channel attention used in CBAM yields significant performance improvements, but these gains do not stem from enhanced interaction between channels, and the dimensionality reduction it prioritizes during channel attention computation is superfluous for capturing local dependencies between channels. In contrast, the triple attention mechanism used in the proposed model emphasizes capturing the interactions between spatial dimensions and input tensor channels without reducing dimensionality. This eliminates the indirect correspondence between channels and weights at negligible additional computational cost, further enhancing the effectiveness of feature extraction. The feature extraction results of the four convolutional channels are as follows:

$$ \mathbf{F}_k = T\big(M(C_k(\mathbf{X}))\big), \quad k = 1, 2, 3, 4 $$

where $\mathbf{X}$ denotes the input matrix, $C_k$ denotes the $k$-th convolutional channel, $\mathbf{F}_k$ denotes the interaction features extracted by the $k$-th convolutional channel, $T$ denotes the triple attention mechanism, and $M$ denotes the max pooling operation. Padding is applied in the convolutional channels to simplify computation during convolution and to ensure accurate feature extraction.

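Subsequently, the processed feature maps of the convolutional channels are concatenated, reshaped, and fused through a fully connected layer, as follows:

$$ \mathbf{H} = \mathrm{FC}\big(\mathrm{re}(\mathrm{concat}(\mathbf{F}_1, \mathbf{F}_2, \mathbf{F}_3, \mathbf{F}_4))\big) $$

where $\mathbf{F}_k$ denotes the output of the $k$-th convolutional channel, $\mathrm{re}(\cdot)$ denotes a reshape operation, $\mathrm{concat}(\cdot)$ denotes a concatenation operation, and $\mathrm{FC}(\cdot)$ denotes the fully connected layer.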
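The per-channel computation (convolution, max pooling, attention) and the subsequent concatenation, reshaping, and fully connected fusion can be sketched in plain Python as follows. The channel order (convolution, then max pooling, then attention), the zero padding, the toy kernels, and the identity stand-in for the triple attention module are all assumptions made for illustration; the module's internals are not given here.

```python
def conv2d_same(x, w, b=0.0):
    """Cross-correlation with an odd-sized kernel and zero padding; output has x's size."""
    k, p = len(w), len(w) // 2
    H, W = len(x), len(x[0])

    def at(i, j):
        return x[i][j] if 0 <= i < H and 0 <= j < W else 0.0  # zero padding

    return [[b + sum(w[u][v] * at(i + u - p, j + v - p) for u in range(k) for v in range(k))
             for j in range(W)] for i in range(H)]

def max_pool2d(x, k=2):
    """Non-overlapping k x k max pooling with stride k."""
    return [[max(x[i + u][j + v] for u in range(k) for v in range(k))
             for j in range(0, len(x[0]) - k + 1, k)]
            for i in range(0, len(x) - k + 1, k)]

def channel(x, kernel, attention):
    """One channel under the assumed order: convolution, max pooling, attention."""
    return attention(max_pool2d(conv2d_same(x, kernel)))

def fuse(feature_maps, W_fc, b_fc):
    """concat -> reshape (flatten) -> fully connected layer."""
    flat = [v for fmap in feature_maps for row in fmap for v in row]
    return [sum(w * f for w, f in zip(row, flat)) + b for row, b in zip(W_fc, b_fc)]

def identity_attention(fmap):
    """Hypothetical placeholder for the triple attention module."""
    return fmap

x = [[1.0] * 4 for _ in range(4)]
kernels = [[[0, 0, 0], [0, c, 0], [0, 0, 0]] for c in (1, 2, 3, 4)]  # four toy channels
maps = [channel(x, kern, identity_attention) for kern in kernels]
print(fuse(maps, W_fc=[[1.0] * 16], b_fc=[0.0]))  # [40.0]
```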

Regularization is applied through dropout on the max pooling operation and the triple attention mechanism in each channel, covering the feature maps after convolution and the hidden layers after the fully connected projection; adding dropout can significantly improve the scoring techniques commonly used in KGE. Batch normalization (BN) is applied after each layer to stabilize training, regularize the model, and speed up convergence. The Adam optimizer, a fast and computationally efficient gradient-based optimizer, is used to minimize the loss function; together, these operations help reduce computational overhead. The processed feature mapping matrix is obtained as follows:

$$ \mathbf{F}_{\mathrm{out}} = T\big(M(C(\mathbf{X}))\big) $$

where $\mathbf{X}$ denotes the input matrix, $C$ represents the multi-channel convolutional processing, $M$ represents the max pooling operation, and $T$ represents the triple attention mechanism.
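Generic versions of the two regularizers mentioned above, inverted dropout and training-mode batch normalization, are sketched below for a 1D batch of scalars. These are textbook formulations rather than ConvAMC's exact configuration, and the rates and inputs are illustrative.

```python
import math
import random

def dropout(x, p, rng):
    """Inverted dropout: zero each entry with probability p and scale survivors by 1 / (1 - p)."""
    if p == 0.0:
        return list(x)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in x]

def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization: standardize with batch statistics, then scale and shift."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in x]

dropped = dropout([1.0] * 10, p=0.5, rng=random.Random(0))  # entries are 0.0 or 2.0
normed = batch_norm([1.0, 2.0, 3.0, 4.0])                   # approximately zero mean, unit variance
```

Scaling the surviving entries by 1 / (1 − p) keeps the expected activation unchanged, so no rescaling is needed at inference time.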

Finally, the feature interactions obtained through the multiple convolutional channels, max pooling operations, and triple attention mechanism are concatenated and reshaped through fully connected layers, and the output matrix of the 2D convolution is used as the input to the prediction and scoring module.

The overall mathematical model of ConvAMC can be summarized as follows:

3.3. Prediction and Scoring Module

In the prediction and scoring module, a scoring function is employed to obtain scores for all candidate objects. The interaction features between the head entity and the relation are extracted and used to compute the semantic similarity to every candidate. The candidate with the highest score, indicating the best match, is selected as the final prediction result.

During encoding, the feature extraction result undergoes a linear transformation so that its dimensions match those of the predicted entity, and the tensor is then reshaped into a vector. The reshaped feature vector is combined according to the Hadamard product formula to produce the output of the convolutional channel. This output is split into two parts according to the dimensions of the entity and relation embeddings, corresponding to the real part ($\mathrm{Re}$) and the imaginary part ($\mathrm{Im}$) of the feature vector in the complex space. A matrix is then used to project the parameterized linear transformation into the complex space. The prediction results are obtained by the Hadamard product of the real and imaginary parts of the entity and the relation, and each prediction score is computed with the Sigmoid function. The highest-scoring candidate is selected as the prediction result using the following scoring function:

The design of our scoring function is mainly inspired by the Hadamard product: the feature interaction matrix of entities and relations, processed through multi-channel convolution, max pooling, and the triple attention mechanism, is divided into a real part and an imaginary part in the complex space. Because the complex Hadamard product combines the real and imaginary parts of entities and relations element by element, the way these parts are obtained can be reformulated, which improves the model's efficiency in handling symmetric and antisymmetric relations in knowledge graphs.
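As an illustrative sketch under a ComplEx-style assumption (the real part of a conjugate Hadamard product, which is not necessarily ConvAMC's exact formula), the scoring and ranking step could look as follows: the output feature vector is split into real and imaginary halves, multiplied element-wise with the conjugate of each candidate entity embedding, summed, and passed through the Sigmoid; candidates are then ranked from high to low. `split_complex`, `sigmoid`, and `score_candidates` are hypothetical helpers.

```python
import math

def split_complex(v):
    """Interpret a real vector of length 2d as d complex numbers: first half real, second imaginary."""
    d = len(v) // 2
    return [complex(re, im) for re, im in zip(v[:d], v[d:])]

def sigmoid(x):
    """Overflow-safe logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def score_candidates(features, candidates):
    """Assumed score: Sigmoid(Re(sum_i f_i * conj(t_i))); returns scores and a descending ranking."""
    f = split_complex(features)
    scores = []
    for cand in candidates:
        t = split_complex(cand)
        s = sum(fi * ti.conjugate() for fi, ti in zip(f, t)).real
        scores.append(sigmoid(s))
    ranking = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return scores, ranking

features = [1.0, 0.0, 0.0, 1.0]            # f = [1, 1j]
candidates = [[1.0, 0.0, 0.0, 1.0],        # same direction as f
              [-1.0, 0.0, 0.0, -1.0],      # opposite direction
              [0.0, 0.0, 0.0, 0.0]]        # zero vector
scores, ranking = score_candidates(features, candidates)
print(ranking)  # [0, 2, 1]
```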

3.4. Loss Function

When training the proposed model, the scores of valid triplets are expected to be 1 and those of invalid triplets 0. The model parameters are trained with the cross-entropy loss function, and label smoothing is used for better results. The loss function is defined as follows:

$$ \mathcal{L} = -\sum_{(h,r,t) \in \mathcal{G}} \log p(h,r,t) - \sum_{(h,r,t) \in \mathcal{G}'} \log\big(1 - p(h,r,t)\big) $$

where $\mathcal{G}$ and $\mathcal{G}'$ denote, respectively, the sets of valid and invalid triplets in the training set, and $p(h,r,t)$ denotes the Sigmoid score of a triplet.
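The training objective can be sketched as a mean binary cross-entropy over Sigmoid scores, with label smoothing applied to the 0/1 targets. The smoothing form y ← (1 − ε)y + ε/N, with N the number of candidates, is a common choice assumed here and not necessarily ConvAMC's exact variant; ε and the toy scores are illustrative.

```python
import math

def smooth_labels(labels, eps):
    """Label smoothing over N candidates: y <- (1 - eps) * y + eps / N (assumed form)."""
    n = len(labels)
    return [(1.0 - eps) * y + eps / n for y in labels]

def bce_loss(probs, labels, eps=0.0, tiny=1e-12):
    """Mean binary cross-entropy between Sigmoid scores and (optionally smoothed) 0/1 labels."""
    targets = smooth_labels(labels, eps)
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, tiny), 1.0 - tiny)  # clip so that log(0) is never evaluated
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(probs)

# One valid candidate (label 1) and three invalid ones (label 0).
labels = [1, 0, 0, 0]
print(bce_loss([0.9, 0.1, 0.1, 0.1], labels))
print(bce_loss([0.9, 0.1, 0.1, 0.1], labels, eps=0.1))
```

With ε > 0, even a perfectly confident prediction incurs a positive loss, which discourages the saturated outputs that label smoothing is meant to prevent.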

4. Experiments and Results

4.1. Datasets

Extensive link prediction experiments were conducted with ConvAMC on three knowledge graph benchmark datasets: FB15k-237 [28], WN18RR [14], and YAGO3-10 [29]. Detailed information about these datasets is shown in Table 2. As indicated in [14,28], the WN18 and FB15k datasets contain many inverse relations, which makes predicting most triplets trivially easy. Consequently, the subsets WN18RR and FB15k-237 were created to remove these inverse relations.

Table 2.

Statistics of the datasets used in the experiments.

FB15k [7] is extracted from Freebase and includes 14,951 entities and 1345 relation types, covering real-world information about movies, celebrities, sports teams, awards, and other topics. WN18 [7] is extracted from WordNet and includes 40,943 entities and 18 relation types; its entities mainly represent word senses, and its relations represent lexical relationships between words, such as hypernymy and hyponymy. FB15k-237 is a subset of FB15k with inverse relations removed, containing 14,541 entities and 237 relation types. WN18RR is a subset of WN18 with inverse relations removed, containing 40,943 entities and 11 relation types. YAGO3-10 is a subset of YAGO containing 123,182 entities and 37 relation types, mainly describing attributes of people such as professions, names, and identity information; each entity participates in multiple relations.

4.2. Evaluation Metrics

To evaluate the embedding performance of ConvAMC comprehensively and objectively from different perspectives, four main metrics were used, namely the mean reciprocal rank (MRR), Hits@10, Hits@3, and Hits@1, defined as follows:

$$ \mathrm{MRR} = \frac{1}{|S|} \sum_{i=1}^{|S|} \frac{1}{\mathrm{rank}_i}, \qquad \mathrm{Hits@}k = \frac{1}{|S|} \sum_{i=1}^{|S|} \mathbb{I}\left(\mathrm{rank}_i \le k\right) $$

where $|S|$ denotes the number of triplets in the test set, $\mathrm{rank}_i$ denotes the rank of the correct tail entity of the $i$-th triplet among all candidate entities, and $\mathbb{I}(\cdot)$ is the indicator function, equal to 1 if its condition holds and 0 otherwise. MRR is the arithmetic mean of the reciprocal ranks over all test triplets, which makes it smooth and less susceptible to outliers. Higher MRR and Hits@k values indicate better link prediction performance and more effective KGE; all these metrics range from 0 to 1.
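The two metrics can be computed from the ranks of the correct entities in a few lines. In this sketch, `rank_of` breaks ties in favor of the correct candidate, which is an assumption (tie-handling conventions vary), and the rank list is a toy example.

```python
def rank_of(scores, true_idx):
    """1-based rank of the correct candidate; ties are broken optimistically in its favor."""
    return 1 + sum(1 for s in scores if s > scores[true_idx])

def mrr(ranks):
    """Mean reciprocal rank over the 1-based ranks of the correct entities."""
    return sum(1.0 / r for r in ranks) / len(ranks)

def hits_at_k(ranks, k):
    """Fraction of test triplets whose correct entity is ranked within the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)

ranks = [1, 2, 4, 10]
print(mrr(ranks), hits_at_k(ranks, 1), hits_at_k(ranks, 3), hits_at_k(ranks, 10))
```

Benchmarks on these datasets usually report filtered ranks, where other known valid triplets are removed from the candidate list before ranking; that filtering step is not shown here.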

4.3. SOTA Models for Comparison

ConvAMC was compared with various SOTA models in three groups: (1) translation-based models, such as TransE [7] and RotatE [12]; (2) semantic matching models, such as DistMult [10], ComplEx [11], and TuckER [13], the last of which improves on the earlier ones and is more competitive in KGE; and (3) neural-network-based models, including ConvE [14], InteractE [30], CTKGC [23], LTE-ConvE [31], ConvHLE [25], and ConvEICF [24].

4.4. Experimental Setting

The hyperparameters of ConvAMC were selected via grid search on the validation set. The selection ranges for the hyperparameters were as follows: batch size, default embedding dimension for entities and relations , learning rate , weight decay ratio , label smoothing ratio , input layer dropout rate , embedding layer dropout rate, and feature mapping dropout rate and , with a convolution kernel size of 3 × 3.

For optimal performance, the best hyperparameter configuration was selected separately for each of the FB15k-237, WN18RR, and YAGO3-10 datasets.
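The grid search described above can be sketched as follows. This is a minimal illustration, assuming a hypothetical `evaluate` callback that trains the model with one configuration and returns its validation MRR; the search space in `GRID` is a hypothetical placeholder, not the ranges used for ConvAMC.

```python
import itertools
from typing import Callable, Dict, List, Tuple

# Hypothetical search space; the ranges actually used for ConvAMC are not reproduced here.
GRID: Dict[str, List[float]] = {
    "lr": [0.001, 0.003],
    "label_smoothing": [0.0, 0.1],
    "input_dropout": [0.2, 0.3],
}


def grid_search(evaluate: Callable[[Dict[str, float]], float],
                grid: Dict[str, List[float]]) -> Tuple[Dict[str, float], float]:
    """Evaluate every combination in `grid` and return the best one with its score."""
    names = list(grid)
    best_cfg: Dict[str, float] = {}
    best_score = float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        cfg = dict(zip(names, values))
        score = evaluate(cfg)  # e.g. validation MRR after training with `cfg`
        if score > best_score:  # strict '>' keeps the first configuration on ties
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


if __name__ == "__main__":
    # Toy stand-in for "train the model, then measure validation MRR".
    def toy_evaluate(cfg: Dict[str, float]) -> float:
        return -abs(cfg["lr"] - 0.003) - cfg["input_dropout"]

    print(grid_search(toy_evaluate, GRID))
```

The number of configurations grows as the product of the grid sizes (2 × 2 × 2 = 8 here), so each additional hyperparameter multiplies the training cost.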

Additionally, batch normalization was applied after each operation to reduce overfitting and accelerate convergence. The Adam optimizer was used to minimize the model's loss, and label smoothing was applied to the target labels to reduce the overfitting caused by saturation of the nonlinear output.
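The label smoothing step can be illustrated with the following sketch. It assumes the 1-N scoring and binary cross-entropy setup popularized by ConvE [14], in which every candidate entity receives a target of 1 or 0 and the targets are smoothed as (1 − ε)·y + 1/N, N being the number of entities; this follows the form used in the public ConvE code, and whether ConvAMC uses exactly the same form is an assumption. The Adam update itself is left to a deep learning framework and is not shown.

```python
import math
from typing import List, Sequence


def smooth_labels(targets: Sequence[float], epsilon: float) -> List[float]:
    """Assumed ConvE-style smoothing: (1 - eps) * y + 1 / N over N candidate entities."""
    n = len(targets)
    return [(1.0 - epsilon) * y + 1.0 / n for y in targets]


def bce_with_logits(logits: Sequence[float], targets: Sequence[float]) -> float:
    """Mean binary cross-entropy over all candidate entities (1-N scoring).

    Uses the numerically stable form max(z, 0) - z * y + log(1 + exp(-|z|)).
    """
    total = 0.0
    for z, y in zip(logits, targets):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)


if __name__ == "__main__":
    n_entities = 20
    hard = [1.0] + [0.0] * (n_entities - 1)  # the correct tail entity is index 0
    soft = smooth_labels(hard, epsilon=0.1)   # about 0.95 for the true entity, 0.05 elsewhere
    logits = [4.0] + [-4.0] * (n_entities - 1)
    print(bce_with_logits(logits, hard), bce_with_logits(logits, soft))
```

With smoothed targets, very confident logits are penalized more than with hard 0/1 targets, which discourages the output from saturating.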

4.5. Results and Analysis

4.5.1. Link Prediction

To validate the effectiveness of the proposed ConvAMC model, it was compared with recent SOTA link prediction models on three commonly used datasets, namely WN18RR, FB15k-237, and YAGO3-10. The results are shown in Table 3, Table 4 and Table 5 and analyzed below. Most of the results were taken from the original papers, cited in the tables; a small portion of the data is missing because it was not reported in the original publications. The best results in the tables are displayed in bold.

Table 3.

Link prediction results on the WN18RR dataset.

Table 4.

Link prediction results on the FB15k-237 dataset.

Table 5.

Link prediction results on the YAGO3-10 dataset.

The experimental results show that ConvAMC surpasses the SOTA models on all metrics on two of the datasets, FB15k-237 and YAGO3-10. On FB15k-237, ConvAMC outperforms the best SOTA model by 7.3% in MRR, 10.3% in Hits@1, 8.5% in Hits@3, and 4.7% in Hits@10. Similarly, on YAGO3-10, ConvAMC leads on all metrics, with improvements of 7.2% in MRR, 8.4% in Hits@1, 11.2% in Hits@3, and 9.3% in Hits@10 over the best-performing SOTA model. On WN18RR, ConvAMC ranks first on Hits@1, with an improvement of 4.0%. However, it ranks second on Hits@3, fifth on Hits@10, and only tenth on MRR.

Overall, ConvAMC performs particularly well on the strict metrics Hits@1 and Hits@3, which reward ranking the correct entity at or near the top; in particular, it ranks first on Hits@1 on all three datasets, indicating an advantage in link prediction accuracy. Compared with the semantic matching models, which are easy to train, and with the translation-based models, ConvAMC leads on all metrics except MRR and Hits@10 on WN18RR, where it slightly underperforms RotatE and, on some metrics, TuckER and ComplEx. On the other two datasets, ConvAMC leads on every metric. Compared with other CNN-based models, ConvAMC leads on all metrics to varying degrees, most clearly on Hits@1 and Hits@3. These results demonstrate the proposed model's advantage in link prediction accuracy.

The effectiveness and versatility of ConvAMC in link prediction can be attributed mainly to three design choices. First, entities and relations are embedded jointly, which preserves the original semantic connections between them as they are fused into a 2D matrix. When this 2D matrix is generated for the CNN, the embeddings of entities and relations are reshaped and concatenated, which can distort semantic information. To address this issue, four convolutional channels are used to reshape and concatenate the embeddings of entities and relations and to extract interaction information between the channels, giving the reshaped 2D matrix richer interaction features. Second, because multi-channel convolution may lose certain detailed information, max pooling layers and a triple attention mechanism are used to highlight feature information within the 2D matrix, making the interaction features between channels more complete and prominent and thereby providing more information for the subsequent prediction. Third, a complex embedding space has been chosen: vectors in the complex space consist of real and imaginary parts, which can reflect different attributes of entities and relations and thus carry richer semantic information.
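To make the embedding step more concrete, the sketch below shows one hypothetical way of turning complex-valued entity and relation embeddings into four 2D input channels: each channel pairs either the real or the imaginary part of the entity with either the real or the imaginary part of the relation, and the two vectors are interleaved element by element (an alternating arrangement) before being reshaped into a matrix. The function names and the exact channel layout are illustrative assumptions; the text above states only that complex embeddings, an alternating arrangement, and four channels are used.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def interleave(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Alternate the entries of a and b: a0, b0, a1, b1, ..."""
    if len(a) != len(b):
        raise ValueError("vectors must have equal length")
    out: List[float] = []
    for x, y in zip(a, b):
        out.extend((x, y))
    return out


def reshape(v: Sequence[float], rows: int, cols: int) -> Matrix:
    """Row-major reshape of a flat vector into a rows x cols matrix."""
    if len(v) != rows * cols:
        raise ValueError("size mismatch")
    return [list(v[i * cols:(i + 1) * cols]) for i in range(rows)]


def complex_channels(ent: Sequence[complex], rel: Sequence[complex],
                     rows: int, cols: int) -> List[Matrix]:
    """Hypothetical 4-channel input: (Re e, Re r), (Re e, Im r), (Im e, Re r), (Im e, Im r)."""
    ent_parts = ([z.real for z in ent], [z.imag for z in ent])
    rel_parts = ([z.real for z in rel], [z.imag for z in rel])
    return [reshape(interleave(pe, pr), rows, cols)
            for pe in ent_parts for pr in rel_parts]


if __name__ == "__main__":
    e = [1 + 2j, 3 + 4j]  # toy 2-dimensional complex entity embedding
    r = [5 + 6j, 7 + 8j]  # toy 2-dimensional complex relation embedding
    for channel in complex_channels(e, r, rows=2, cols=2):
        print(channel)
```

Interleaving places entity and relation components side by side in every row, so a convolution window always covers both, whereas simply stacking the two vectors would let most windows see only one of them.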

4.5.2. Symmetric Relationship Modeling

The WN18RR and FB15k-237 datasets contain a large number of entities connected by diverse relations, including symmetric ones; hence, they can be used to validate the ability of the proposed model to handle symmetric relationships. A relation $r$ is symmetric if, whenever the triplet $(h, r, t)$ holds, the triplet $(t, r, h)$ also holds. To test this, the real and imaginary parts of the entity and relation embedding vectors were swapped in the Hadamard product: the real parts of the entity and relation embeddings were used in place of the imaginary parts, and vice versa. The resulting prediction scores were then compared with those of the unmodified model to determine whether the difference lies within an acceptable error range. Mathematically, this assesses whether the commutative property of the Hadamard product is satisfied. ConvAMC's ability to model symmetric relationships was evaluated using MRR, Hits@1, Hits@3, and Hits@10 as metrics. The obtained experimental results are presented in Table 6.

Table 6.

ConvAMC’s link prediction results after swapping real and imaginary parts of the embedding vectors for entities and relations.

As evident from Table 6, ConvAMC can successfully model symmetric relationships within an acceptable error margin (0.3%) across all four evaluation metrics used. By swapping the real and imaginary parts of the embedding vectors for entities and relations in the complex space, the following formulae have been satisfied:

Based on the above formulae, one can infer the following:

Based on the experimental results in Table 6 and the above mathematical reasoning, the proposed model can effectively handle symmetric relationships between entities.
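The algebra behind this check can be verified numerically. The sketch below, with hypothetical helper names, confirms that the Hadamard product of complex vectors is commutative and that swapping real and imaginary parts maps each component z to i·conj(z), so the product of the swapped vectors equals −conj(e ∘ r) and has the same component-wise magnitudes as e ∘ r. It illustrates only these properties of the Hadamard product; it does not reproduce ConvAMC's scoring function, whose behavior under the swap is what Table 6 evaluates empirically.

```python
from typing import List, Sequence


def swap_re_im(v: Sequence[complex]) -> List[complex]:
    """Swap real and imaginary parts of each component: x + iy -> y + ix."""
    return [complex(z.imag, z.real) for z in v]


def hadamard(a: Sequence[complex], b: Sequence[complex]) -> List[complex]:
    """Element-wise (Hadamard) product of two complex vectors."""
    return [x * y for x, y in zip(a, b)]


if __name__ == "__main__":
    e = [1 + 2j, -0.5 + 3j]    # toy entity embedding
    r = [2 - 1j, 0.25 + 0.5j]  # toy relation embedding
    # Commutativity of the Hadamard product: e ∘ r == r ∘ e.
    print(hadamard(e, r) == hadamard(r, e))
    # swap(z) = i * conj(z), hence swap(e) ∘ swap(r) = -conj(e ∘ r).
    swapped = hadamard(swap_re_im(e), swap_re_im(r))
    print(all(abs(s + p.conjugate()) < 1e-12
              for s, p in zip(swapped, hadamard(e, r))))
```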

4.5.3. Ablation Study

A set of ablation experiments was conducted on the FB15k-237 and WN18RR datasets to investigate the effectiveness of each main module of the proposed ConvAMC model; the results are shown in Table 7. First, to study the role of the triple attention mechanism, it was removed from the multi-channel convolutional process (referred to as 'without TA'). Next, to explore the advantages of multi-channel convolution in extracting multi-level information, a single-channel convolution was used instead (referred to as 'without MC'). Finally, to examine the role of max pooling in the feature extraction process of the multi-channel convolution, the max pooling layer was removed (referred to as 'without MP').

Table 7.

Ablation study results.

To present the specific contribution of each module more clearly, the original scoring function was modified for each ablation experiment by removing (i) the triple attention mechanism, as shown in (23); (ii) the max pooling operation, as shown in (24); and (iii) the multi-channel convolution, as shown in (25):

Our ablation design is inspired by TeAST [39], which uses empirical and analytical methods to demonstrate the effectiveness of each model component. First, we removed the triple attention mechanism from the encoding module, as per (23). According to the results in Table 7, the values of all four metrics decreased on both datasets, indicating that using a triple attention mechanism in multi-channel convolution is beneficial: it helps the model capture the interaction between spatial dimensions and input tensor channels, providing more interaction information for the feature mapping matrix of entities and relations. Next, we removed the max pooling operation from the encoding module, as per (24); again, the values of all four metrics decreased on both datasets. This indicates that using max pooling to compute the channel attention on each convolutional channel is effective, as it reduces the size of the feature tensor and extracts the most salient feature information from the entity-relation interaction matrices. Finally, we replaced the multi-channel convolution in the encoding module with an ordinary single-channel convolution, as per (25). The values of all metrics again decreased on both datasets, which indicates that multi-channel convolution is effective in the encoding module: it extracts interaction features of entities and relations at different levels from multiple input channels and then concatenates and reshapes them into a new mapping matrix with more complete semantic information, which helps the model improve its accuracy in subsequent prediction tasks.
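The multi-channel convolution and max pooling steps examined in this ablation can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions: each channel has a single hypothetical kernel (learned in practice), pooling uses non-overlapping 2 × 2 windows, and the triple attention mechanism, batch normalization, and nonlinearities are omitted. Removing the `max_pool2d` call corresponds to the 'without MP' variant, and passing a single channel corresponds to the 'without MC' variant.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def conv2d_valid(x: Matrix, k: Matrix) -> Matrix:
    """2D cross-correlation without padding (what deep learning 'conv' layers compute)."""
    kh, kw = len(k), len(k[0])
    h, w = len(x), len(x[0])
    return [[sum(x[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(w - kw + 1)]
            for i in range(h - kh + 1)]


def max_pool2d(x: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [[max(x[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(x[0]) - size + 1, size)]
            for i in range(0, len(x) - size + 1, size)]


def multi_channel_features(channels: Sequence[Matrix], kernels: Sequence[Matrix]) -> List[float]:
    """Convolve each channel with its own kernel, pool, then flatten and concatenate."""
    feats: List[float] = []
    for x, k in zip(channels, kernels):
        pooled = max_pool2d(conv2d_valid(x, k))
        feats.extend(v for row in pooled for v in row)
    return feats


if __name__ == "__main__":
    x = [[float(4 * i + j + 1) for j in range(4)] for i in range(4)]  # toy 4 x 4 channel
    k = [[1.0] * 3 for _ in range(3)]  # hypothetical 3 x 3 kernel (learned in practice)
    print(multi_channel_features([x] * 4, [k] * 4))  # four channels, one value each
```

With a 4 × 4 input, a 3 × 3 kernel yields a 2 × 2 feature map, which 2 × 2 max pooling reduces to a single value per channel; this shrinking of the feature tensor is the size reduction attributed to max pooling above.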

According to the results in Table 7, compared with the full ConvAMC model, the 'without TA' variant shows decreases of 3.5%, 2.4%, 3.6%, and 4.7% in MRR, Hits@1, Hits@3, and Hits@10, respectively, on WN18RR, and of 6.7%, 8.8%, 8.5%, and 5.1%, respectively, on FB15k-237, indicating that introducing the triple attention mechanism into multi-channel CNNs effectively enhances link prediction performance. For the 'without MP' variant, the MRR, Hits@1, Hits@3, and Hits@10 values decrease by 2.8%, 2.2%, 3.0%, and 3.3%, respectively, on WN18RR, and by 5.9%, 7.0%, 7.7%, and 4.0%, respectively, on FB15k-237, demonstrating that the max pooling layer added to the multi-channel convolution process enhances the extraction of local information. For the 'without MC' variant, these values decrease by 0.5%, 1.3%, 3.2%, and 3.9%, respectively, on WN18RR, and by 7.3%, 8.1%, 8.8%, and 4.5%, respectively, on FB15k-237, confirming the advantages of multi-channel CNNs in extracting multi-level information.

Overall, the ablation results show that removing each module has a relatively minor impact on link prediction on the WN18RR dataset and a more significant impact on the FB15k-237 dataset. As noted in Table 2, FB15k-237 contains a greater variety and complexity of relations with fewer entities. This suggests that ConvAMC is better suited to knowledge graphs with a larger number of more complex relations, as it can extract additional feature interactions between channels when extracting entity and relation information. Moreover, compared with single-channel convolution, the additional computational cost of multi-channel convolution remains within an acceptable range.

4.5.4. Influence of Convolutional Kernel Size

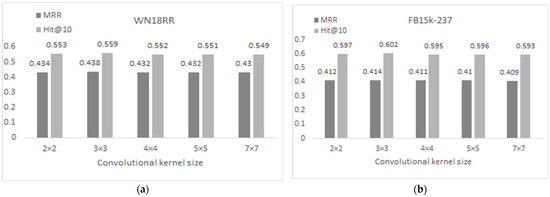

To investigate the influence of the convolution kernel size on the link prediction performance of the proposed model, corresponding experiments were conducted on the FB15k-237 and WN18RR datasets with the following kernel sizes: {2 × 2, 3 × 3, 4 × 4, 5 × 5, 7 × 7}. The obtained MRR and Hits@10 results are depicted in Figure 3.

Figure 3.

The influence of the convolutional kernel size on ConvAMC’s link prediction performance. (a) WN18RR results; (b) FB15k-237 results.

Overall, the kernel size has a minimal impact on the FB15k-237 dataset, with MRR and Hits@10 varying only within ranges of 1.2% and 1.5%, respectively. As Figure 3 shows, the 3 × 3 kernel performs best in terms of both MRR and Hits@10. The smaller 2 × 2 kernel performs better than the 4 × 4 and 5 × 5 kernels. When the kernel size increases to 7 × 7, both MRR and Hits@10 decline noticeably. On the WN18RR dataset, the impact of the kernel size is slightly greater than on FB15k-237, with both MRR and Hits@10 varying within a range of 1.8%. Again, the 3 × 3 kernel performs best, with the 2 × 2 kernel slightly outperforming the 4 × 4 and 5 × 5 kernels, and both MRR and Hits@10 drop significantly when the kernel size is increased to 7 × 7.

When the kernel is smaller than 3 × 3, each convolution window covers too few embedding elements for the multi-channel convolutional process to extract comprehensive interaction information. When the kernel size exceeds 3 × 3, by contrast, more fine-grained information is overlooked during convolution, and the information loss is largest at 7 × 7, which has the greatest impact on model performance. Choosing an appropriate kernel size therefore ensures that local interactions between embeddings are captured well, while keeping the kernel small limits the number of parameters and helps avoid overfitting.
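The trade-off described above can be quantified with the standard formula for the output size of a convolution, (H + 2p − k) / s + 1 per dimension, which for an unpadded, stride-1 convolution reduces to (H − k + 1) × (W − k + 1). The sketch below assumes a hypothetical 20 × 20 reshaped input and four channels with one k × k filter each; these sizes are illustrative, not the configuration used for ConvAMC.

```python
from typing import Tuple


def conv_output_size(h: int, w: int, k: int, stride: int = 1,
                     padding: int = 0) -> Tuple[int, int]:
    """Spatial size of a k x k convolution output (standard formula)."""
    return ((h + 2 * padding - k) // stride + 1,
            (w + 2 * padding - k) // stride + 1)


if __name__ == "__main__":
    H, W, CHANNELS = 20, 20, 4  # hypothetical reshaped input size and channel count
    for k in (2, 3, 4, 5, 7):
        oh, ow = conv_output_size(H, W, k)
        weights = CHANNELS * k * k  # one k x k filter per channel, bias ignored
        print(f"{k}x{k}: output {oh}x{ow} = {oh * ow} positions, {weights} weights")
```

For this input, the 3 × 3 kernel gives an 18 × 18 feature map with 36 weights, whereas the 7 × 7 kernel gives a 14 × 14 map with 196 weights, each output position summarizing 49 inputs instead of 9.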

5. Conclusions and Future Work

Single-level feature interaction between entities and relations is a common approach in most KGE models, but it has certain limitations. To address this issue, this article has introduced a triple-attention-based multi-channel CNN model, named ConvAMC, which facilitates multi-level feature interactions between entities and relations. During the feature interaction process, the model focuses on capturing local and detailed information, thereby enriching its expressive capabilities. Notably, ConvAMC performs particularly well on knowledge graph datasets containing a variety of complex relationships and also offers advantages in link prediction accuracy. The performance of the proposed model has been comprehensively evaluated on three benchmark datasets, showing that it surpasses the SOTA models across all evaluation metrics on two of them. Experiments on symmetric relationship modeling have demonstrated that the proposed model is also effective in handling symmetric relationships. Additionally, the impact of the convolution kernel size on the link prediction performance of the model has been studied. In the future, we will extend and optimize the model and apply it to inference and querying over large-scale knowledge graphs in social networks, recommendation systems, and search engines. We also plan to construct and analyze a multimodal knowledge graph by combining other data types.

However, the proposed ConvAMC model has its own limitations. Although max pooling layers and a triple attention mechanism have been utilized to reduce the computational overhead, the multi-channel CNN design inevitably incurs considerable time and memory costs; therefore, the proposed model has no advantage in terms of training time. Additionally, ConvAMC focuses on the interaction process and the acquisition of local information during the multi-channel convolution of entities and relations, whereas the semantic information carried by the names of entities and relations also merits study. In future work, we plan to further reduce the time and space complexity of the proposed model through other means. As sampling useful negative triplets is equally important in link prediction tasks, we also plan to explore the use of recent generative adversarial networks (GANs) to generate negative triplets. At the same time, we are considering expanding the functionality of the model, with the main goal of handling temporal knowledge graphs or integrating it with federated learning frameworks to increase its practicality and generalization.

Author Contributions

Conceptualization, L.S. and Z.J.; methodology, W.L., L.S., Y.W. and C.D.; validation, Z.J., I.G. and W.L.; formal analysis, C.D., I.G. and Y.W.; investigation, Y.W. and C.D.; data curation, W.L., Y.W. and C.D.; writing—original draft preparation, W.L.; writing—review and editing, I.G., L.S. and Z.J.; supervision, L.S.; project administration, Z.J. and I.G. All authors have read and agreed to the published version of the manuscript.

Funding

This publication has emanated from research conducted with the financial support of the National Key Research and Development Program of China under grant no. 2017YFE0135700, and the European Union-NextGenerationEU, through the National Recovery and Resilience Plan of the Republic of Bulgaria (project DUECOS BG-RRP-2.004-0001-C01).

Data Availability Statement

The original data utilized in this study are openly available in the Convolutional 2D Knowledge Graph Embeddings resources (ConvE) at https://github.com/TimDettmers/ConvE, accessed on 9 May 2024.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Saxena, S.; Sangani, R.; Prasad, S.; Kumar, S.; Athale, M.; Awhad, R.; Vaddina, V. Large-Scale Knowledge Synthesis and Complex Information Retrieval from Biomedical Documents. In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data), Osaka, Japan, 17–20 December 2022; pp. 2364–2369.

- Mouromtsev, D.; Wohlgenannt, G.; Haase, P.; Pavlov, D.; Emelyanov, Y.; Morozov, A. A Diagrammatic Approach for Visual Question Answering over Knowledge Graphs. In The Semantic Web: ESWC 2018 Satellite Events, Proceedings of the 15th International Conference, ESWC 2018, Heraklion, Crete, Greece, 3–7 June 2018; Gangemi, A., Gentile, A.L., Giovanni Nuzzolese, A., Rudolph, S., Maleshkova, M., Paulheim, H., Pan, J.Z., Alam, M., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11155, pp. 34–39.

- Suchanek, F.M.; Kasneci, G.; Weikum, G. Yago: A Core of Semantic Knowledge. In Proceedings of the 16th International Conference on World Wide Web, Banff, AB, Canada, 8–12 May 2007; pp. 697–706.

- Bollacker, K.; Evans, C.; Paritosh, P.; Sturge, T.; Taylor, J. Freebase: A Collaboratively Created Graph Database for Structuring Human Knowledge. In Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, BC, Canada, 9–12 June 2008; pp. 1247–1250.

- Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. DBpedia: A Nucleus for a Web of Open Data. In Proceedings of the International Semantic Web Conference, Busan, Republic of Korea, 11–15 November 2007.

- Miller, G.A. WordNet: A Lexical Database for English. In Human Language Technology: Proceedings of a Workshop, Plainsboro, NJ, USA, 21–24 March 1993.

- Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-Relational Data. Adv. Neural Inf. Process. Syst. 2013, 26, 2787–2795.

- Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge Graph Embedding by Translating on Hyperplanes. In Proceedings of the AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014.

- Lin, Y.; Liu, Z.; Sun, M.; Liu, Y.; Zhu, X. Learning Entity and Relation Embeddings for Knowledge Graph Completion. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

- Yang, B.; Yih, S.W.-t.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the International Conference on Learning Representations (ICLR), San Diego, CA, USA, 7–9 May 2015.

- Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, É.; Bouchard, G. Complex Embeddings for Simple Link Prediction. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 2071–2080.

- Sun, Z.; Deng, Z.-H.; Nie, J.-Y.; Tang, J. RotatE: Knowledge Graph Embedding by Relational Rotation in Complex Space. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 6–9 May 2019; pp. 1–18.

- Balažević, I.; Allen, C.; Hospedales, T.M. TuckER: Tensor Factorization for Knowledge Graph Completion. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 5185–5194.

- Dettmers, T.; Minervini, P.; Stenetorp, P.; Riedel, S. Convolutional 2D Knowledge Graph Embeddings. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Balažević, I.; Allen, C.; Hospedales, T.M. Hypernetwork Knowledge Graph Embeddings. In Proceedings of the Artificial Neural Networks and Machine Learning–ICANN 2019: Workshop and Special Sessions: 28th International Conference on Artificial Neural Networks, Munich, Germany, 17–19 September 2019; pp. 553–565.

- Zhang, Z.; Li, Z.; Liu, H.; Xiong, N.N. Multi-Scale Dynamic Convolutional Network for Knowledge Graph Embedding. IEEE Trans. Knowl. Data Eng. 2020, 34, 2335–2347.

- Ji, G.; He, S.; Xu, L.; Liu, K.; Zhao, J. Knowledge Graph Embedding via Dynamic Mapping Matrix. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Beijing, China, 26–31 July 2015; pp. 687–696.

- Nickel, M.; Tresp, V.; Kriegel, H.-P. A Three-Way Model for Collective Learning on Multi-Relational Data. In Proceedings of the 28th International Conference on Machine Learning (ICML), Bellevue, WA, USA, 28 June–2 July 2011; pp. 809–816.

- Gao, C.; Sun, C.; Shan, L.; Lin, L.; Wang, M. Rotate3D: Representing Relations as Rotations in Three-Dimensional Space for Knowledge Graph Embedding. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Virtual Event, 19–23 October 2020; pp. 385–394.

- Nguyen, D.Q.; Nguyen, T.D.; Nguyen, D.Q.; Phung, D. A Novel Embedding Model for Knowledge Base Completion Based on Convolutional Neural Network. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), New Orleans, LA, USA, 1–6 June 2018.

- Jiang, X.; Wang, Q.; Wang, B. Adaptive Convolution for Multi-Relational Learning. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 978–987.

- Ren, F.; Li, J.; Zhang, H.; Liu, S.; Li, B.; Ming, R.; Bai, Y. Knowledge Graph Embedding with Atrous Convolution and Residual Learning. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 13–18 September 2020; pp. 1532–1543.

- Feng, J.; Wei, Q.; Cui, J.; Chen, J. Novel Translation Knowledge Graph Completion Model Based on 2D Convolution. Appl. Intell. 2022, 52, 3266–3275.

- Zhang, F.; Qiu, P.; Shen, T.; Cheng, J. Multi-Aspect Enhanced Convolutional Neural Networks for Knowledge Graph Completion. In ECAI 2023; Frontiers in Artificial Intelligence and Applications; IOS Press: Amsterdam, The Netherlands, 2023; pp. 2994–3001.

- Wang, J.; Zhang, Q.; Shi, F.; Li, D.; Cai, Y.; Wang, J.; Li, B.; Wang, X.; Zhang, Z.; Zheng, C. Knowledge Graph Embedding Model with Attention-Based High-Low Level Features Interaction Convolutional Network. Inf. Process. Manag. 2023, 60, 103350.

- Zhu, Y.; Zhang, W.; Chen, M.; Chen, H.; Cheng, X.; Zhang, W.; Chen, H. DualDE: Dually Distilling Knowledge Graph Embedding for Faster and Cheaper Reasoning. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, Virtual Event, 21–22 February 2022; pp. 1516–1524.

- Kazemi, S.M.; Poole, D. Simple Embedding for Link Prediction in Knowledge Graphs. arXiv 2018, arXiv:1802.04868.

- Toutanova, K.; Chen, D. Observed Versus Latent Features for Knowledge Base and Text Inference. In Proceedings of the 3rd Workshop on Continuous Vector Space Models and Their Compositionality, Beijing, China, 31 July 2015; pp. 57–66.

- Mahdisoltani, F.; Biega, J.; Suchanek, F.M. YAGO3: A Knowledge Base from Multilingual Wikipedias. In Proceedings of the Conference on Innovative Data Systems Research, Asilomar, CA, USA, 4–7 January 2015.

- Vashishth, S.; Sanyal, S.; Nitin, V.; Agrawal, N.; Talukdar, P. InteractE: Improving Convolution-Based Knowledge Graph Embeddings by Increasing Feature Interactions. Proc. AAAI Conf. Artif. Intell. 2020, 34, 3009–3016.

- Zhang, Z.; Wang, J.; Ye, J.; Wu, F. Rethinking Graph Convolutional Networks in Knowledge Graph Completion. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 798–807.

- Demir, C.; Ngomo, A.-C.N. Convolutional Complex Knowledge Graph Embeddings. In The Semantic Web: 18th International Conference, ESWC 2021, Virtual Event, 6–10 June 2021; pp. 409–424.

- Liu, X.; Tan, H.; Chen, Q.; Lin, G. RAGAT: Relation Aware Graph Attention Network for Knowledge Graph Completion. IEEE Access 2021, 9, 20840–20849.

- Li, J.; Su, X.; Zhang, F.; Gao, G. TransERR: Translation-based Knowledge Graph Embedding via Efficient Relation Rotation. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation (LREC-COLING 2024), Turin, Italy, 20–25 May 2024; pp. 16727–16737.

- Rossi, A.; Barbosa, D.; Firmani, D.; Matinata, A.; Merialdo, P. Knowledge Graph Embedding for Link Prediction: A Comparative Analysis. ACM Trans. Knowl. Discov. Data 2021, 15, 1–49.

- Le, T.; Le, N.; Le, B. Knowledge Graph Embedding by Relational Rotation and Complex Convolution for Link Prediction. Expert Syst. Appl. 2023, 214, 119122.

- Le, T.; Huynh, N.; Le, B. RotatHS: Rotation Embedding on the Hyperplane with Soft Constraints for Link Prediction on Knowledge Graph. In Proceedings of the Computational Collective Intelligence: 13th International Conference, ICCCI 2021, Rhodes, Greece, 29 September–1 October 2021; pp. 29–41.

- Chami, I.; Wolf, A.; Juan, D.-C.; Sala, F.; Ravi, S.; Ré, C. Low-Dimensional Hyperbolic Knowledge Graph Embeddings. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Virtual Event, 5–10 July 2020; pp. 6901–6914.

- Li, J.; Su, X.; Gao, G. TeAST: Temporal Knowledge Graph Embedding via Archimedean Spiral Timeline. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15460–15474.


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).