Abstract

This paper presents a novel composite heuristic algorithm for global optimization that integrates the merits of the water cycle algorithm (WCA) and the gravitational search algorithm (GSA). To strengthen both exploration and exploitation and to balance them, a modified WCA is first proposed that introduces the concept of a basin: the positions of solutions, in addition to their fitness, are taken into account when assigning the sea, the rivers and their streams, and the number of guiding solutions is adaptively reduced during the search. Furthermore, the enhanced WCA is adaptively combined with gravitational search to generate new solutions, and the choice between the two is based on their historical performance within a fixed stage. Moreover, a binomial crossover operation is applied after the water cycle search or the gravitational search to further improve the search capability of the algorithm. Finally, the proposed algorithm is compared with six well-established meta-heuristic algorithms on the IEEE CEC2014 test suite, and the numerical results indicate that it is very competitive.

Keywords:

global optimization; water cycle algorithm; gravitational search; niching technique; binomial crossover operation

MSC:

68W50; 68T20; 90C59; 90C26

1. Introduction

With the development of science and technology, global optimization problems arise in almost all fields of application and have attracted great interest from researchers [1,2,3,4,5]. To deal with these real-world problems satisfactorily, many meta-heuristic algorithms inspired by natural phenomena, animal behaviour, human activities and physical principles have been proposed, such as particle swarm optimization (PSO) [6], the genetic algorithm (GA) [7], differential evolution (DE) [8], ant colony optimization [9], the fireworks algorithm (FA) [10], the joint operations algorithm [11], the squirrel search algorithm [12] and the gaining–sharing knowledge-based algorithm [13]. Further nature-inspired approaches are reviewed in [14]. These approaches have proven to be accurate, reasonably fast and robust optimizers, and have been applied in many fields, including pattern recognition [15], feature selection [16], image processing [17], multitask optimization [18], multimodal optimization [19] and data clustering [20]. However, they do not guarantee convergence to the global optimum, especially for complicated problems [21,22]. Hence, it is necessary to study more effective variants of them.

Since the performance of each heuristic optimization algorithm depends heavily on its balance between exploration and exploitation of the search space, many variants of classical algorithms and many hybrid algorithms have been developed by incorporating new mechanisms or combining them with other approaches [23,24,25,26,27,28,29,30,31,32,33,34,35,36]. For example, Yan and Tian [25] presented an enhanced adaptive DE variant that makes full use of the information of the current individual, its best neighbour and a randomly selected neighbour to predict the promising region around it. Li et al. [26] proposed a novel integrated DE algorithm that divides the population into leader and adjoint subpopulations based on a leader–adjoint model and adopts a different mutation strategy for each. For PSO, Xu et al. [27] developed a strategy learning framework that learns an optimal combination of the update strategies of different PSO variants, thereby integrating their advantages. For the GSA, Sun et al. [23] improved the search performance by letting each agent learn from its unique neighbourhood and the historical global best agent, which avoids premature convergence and high runtime consumption. Moreover, by integrating the benefits of GA and PSO, Yu et al. [24] developed a hybrid GAPSO algorithm and applied it to the integration of process planning and scheduling. To further improve the search ability of the fireworks optimization method, Zheng et al. [29] incorporated differential evolution operators into its framework and proposed a hybrid fireworks optimization method. By introducing the search operations of differential evolution into the cultural algorithm and into PSO, Awad et al. [30] and Zuo et al. [28], respectively, proposed new hybrid algorithms. Furthermore, Taib et al. [34] incorporated a local pattern search method into the water cycle algorithm to enhance its convergence speed and presented a hybrid water cycle algorithm for data clustering. Li et al. [36] introduced a reinforcement learning technique into the PSO framework and proposed an enhanced PSO variant for global optimization problems, in which a neighbourhood differential mutation operator is also employed to increase the diversity of the algorithm. Thus, designing new strategies and combining different approaches or algorithms remain effective and popular ways to enhance the performance of an algorithm.

In the last decade, the gravitational search algorithm (GSA) [37] and the water cycle algorithm (WCA) [38] were proposed for optimization problems, based on Newton's laws of gravity and motion and on the water cycle, respectively. In the GSA, the agents of the population are treated as objects whose performance is measured by their masses, and they attract each other through gravitational forces. In the WCA, the population is divided into streams, rivers and the sea according to their fitness values, and the rivers and the sea act as leaders that guide the other points towards better positions, thus avoiding search in inappropriate regions. Since their introduction, both algorithms have attracted increasing attention from researchers and have been applied in different fields [39,40,41,42,43,44,45,46,47,48,49,50,51,52,53]. For instance, to strengthen the search performance of the WCA, Sadollah et al. [39] further developed the concept of evaporation and incorporated evaporation procedures into the original WCA, while Heidari et al. [40] adopted chaotic maps to set its parameters. By simulating the real-world percolation process, Qiao et al. [44] designed a percolation operator and developed an improved WCA for clustering analysis. Osaba et al. [45] added a solution mapping technique to the WCA and put forward a discrete WCA variant for the symmetric and asymmetric travelling salesman problems, and Chen et al. [52] introduced two different surrogate modes and proposed a novel water cycle algorithm for high-dimensional expensive optimization. Furthermore, to alleviate the low precision and slow convergence of the WCA, Ye et al. [53] presented an enhanced WCA based on quadratic interpolation, in which a new non-linear adjustment strategy is designed for the distance control parameters, mutation operations are performed probabilistically, and a quadratic interpolation operator is introduced to improve the local exploitation capability of the algorithm. A more detailed review of the improvements and applications of the WCA can be found in [48]. On the other hand, for the GSA, Mirjalili and Gandomi [41] presented a chaotic GSA by using chaotic maps to control the gravitational constant, whereas Lei et al. [43] proposed a hierarchical gravitational search algorithm by adaptively adjusting the gravitational constant and introducing a population topology. To alleviate the stagnation of the GSA, Oshi [50] put forward an enhanced GSA variant that incorporates chaos-embedded opposition-based learning into the basic GSA and uses a sine–cosine-based chaotic map to set the gravitational constant. More detailed descriptions of recent GSA variants can be found in [49]. Even though these WCA and GSA variants have proven more effective than the original versions, GSA variants still tend to fall into local optima when agents are guided only by a few superior agents, and become very costly when agents are guided by too many agents. WCA variants, in turn, have a weaker exploration ability, since the movement of streams towards their rivers and then towards the sea is similar to the mutation in differential evolution [54]. Therefore, it is necessary to develop improved versions that balance exploration and exploitation effectively.

Based on the above discussion, this paper presents a novel hybrid heuristic algorithm (HMWCA) that combines the water cycle algorithm and the gravitational search algorithm. To enhance the search capability of the algorithm and obtain a better balance between exploration and exploitation, a modified WCA is first developed by designing a crude niching technique, using it to assign the sea, rivers and their corresponding streams, and adaptively reducing the total number of rivers and the sea. Then, the resulting water cycle search and the gravitational search are integrated, with the choice between them made according to their historical performance over a fixed number of iterations. Moreover, to further improve the search effectiveness of the algorithm, the binomial crossover operation is applied after the gravitational search or modified water cycle search to adjust the transmission of search information. As a result, the HMWCA can not only balance exploration and exploitation effectively but also save computing resources during the search. Finally, numerical experiments are carried out to evaluate the HMWCA against six well-known meta-heuristic algorithms on the 30 CEC2014 benchmark functions [55] with different dimensions, and the experimental results show that the proposed algorithm is very competitive. The HMWCA is also applied to spread-spectrum radar polyphase code design (SSRPPCD) [56].

The main contributions and novelties of this paper, relative to existing work, are as follows.

- (1) A crude niching technique is designed and used to define the sea, rivers and their corresponding streams in the WCA, and a non-linear setting is introduced to adjust the total number of rivers and the sea during the search. The resulting modified WCA aims at a better balance between exploration and exploitation.

- (2) To further strengthen the adaptivity and robustness of the algorithm across search stages and problems, the resulting water cycle search and the gravitational search are integrated according to their historical performance over a fixed number of iterations.

- (3) The binomial crossover operation is additionally applied after the gravitational search or modified water cycle search, which further improves control over the transmission of search information.

Together, these components give the proposed algorithm a more promising search performance.

The remainder of this paper is organized as follows. Section 2 briefly describes the classical gravitational search algorithm and water cycle algorithm. The proposed algorithm is presented in Section 3. Section 4 provides and discusses the experimental results on benchmark functions. Finally, conclusions are drawn in Section 5.

2. The Classical GSA and WCA

In this section, we briefly describe the classical gravitational search algorithm and water cycle algorithm.

2.1. Gravitational Search Algorithm

According to the law of gravity and mass interaction, Rashedi et al. [37] proposed a new heuristic optimization algorithm, the GSA. In the GSA, agents are considered as objects, and their performance is measured by their masses. All objects attract each other by gravitational forces, which causes a global movement towards the objects with heavier masses. For a minimization problem $\min_{X \in \Omega} f(X)$, the concrete steps of the GSA can be described as follows. Herein, $\Omega \subseteq \mathbb{R}^D$ is the search space and $D$ is its dimension.

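Consider a system with $N$ objects, where $X_i = (x_i^1, x_i^2, \ldots, x_i^D)$ ($i = 1, 2, \ldots, N$) is the position of the $i$th object and $x_i^d$ is its $d$th dimension. The gravitational force from agent $j$ on agent $i$ at a specific time $t$ is defined as:

$$F_{ij}^d(t) = G(t)\,\frac{M_{pi}(t) \times M_{aj}(t)}{R_{ij}(t) + \varepsilon}\,\bigl(x_j^d(t) - x_i^d(t)\bigr),$$

where $M_{aj}(t)$ is the active gravitational mass related to agent $j$, $M_{pi}(t)$ is the passive gravitational mass related to agent $i$, $\varepsilon$ is a small constant, $R_{ij}(t) = \lVert X_i(t) - X_j(t) \rVert_2$ is the Euclidean distance between agents $i$ and $j$, and $G(t)$ is the gravitational constant at time $t$.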

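The total force acting on agent $i$ in the $d$th dimension is a randomly weighted sum of the $d$th components of the forces exerted by the other agents:

$$F_i^d(t) = \sum_{j=1,\, j \neq i}^{N} rand_j\, F_{ij}^d(t),$$

where $rand_j$ is a random number in the interval [0,1]. Then, the acceleration is given by

$$a_i^d(t) = \frac{F_i^d(t)}{M_{ii}(t)},$$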

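where $M_{ii}(t)$ is the inertial mass of object $i$. The velocity and position of agent $i$ are, respectively, calculated by

$$v_i^d(t+1) = rand_i \times v_i^d(t) + a_i^d(t)$$

and

$$x_i^d(t+1) = x_i^d(t) + v_i^d(t+1),$$

where $i = 1, 2, \ldots, N$, $d = 1, 2, \ldots, D$, and $rand_i$ is a random number drawn from the uniform distribution on [0,1].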

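Moreover, the masses of the objects are calculated by the following equations:

$$m_i(t) = \frac{fit_i(t) - worst(t)}{best(t) - worst(t)}, \qquad M_i(t) = \frac{m_i(t)}{\sum_{j=1}^{N} m_j(t)},$$

where $M_{ai} = M_{pi} = M_{ii} = M_i$, $fit_i(t)$ represents the fitness value of the $i$th object at time $t$, and $best(t)$ and $worst(t)$ are defined by:

$$best(t) = \min_{j \in \{1, \ldots, N\}} fit_j(t), \qquad worst(t) = \max_{j \in \{1, \ldots, N\}} fit_j(t).$$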
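To make the classical GSA update concrete, the sketch below implements one iteration in plain Python for a minimization problem: masses from fitness values, randomly weighted attraction from all other agents, and then the velocity and position updates. It is a minimal illustration, not the reference implementation of [37]; the function name `gsa_step`, the value of `eps` and the caller-supplied gravitational constant `G` are assumptions. Because the passive mass of agent i equals its inertial mass in the classical GSA, the two cancel, so the acceleration is accumulated directly.

```python
import math
import random


def gsa_step(X, V, fitness, G, eps=1e-10, rng=random):
    """One iteration of the classical GSA for a minimization problem (sketch).

    X, V:    lists of N positions / velocities, each a list of D floats
    fitness: objective values of the N current positions
    G:       gravitational constant G(t) for this iteration
    Returns the new positions and velocities.
    """
    N, D = len(X), len(X[0])
    best, worst = min(fitness), max(fitness)
    # Raw masses in [0, 1]: the best agent gets 1, the worst gets 0.
    if best == worst:
        m = [1.0] * N
    else:
        m = [(f - worst) / (best - worst) for f in fitness]
    total = sum(m)
    M = [mi / total for mi in m]  # normalized masses (active = passive = inertial)

    new_X, new_V = [], []
    for i in range(N):
        # a_i = F_i / M_ii; the passive mass M_pi = M_ii cancels out.
        acc = [0.0] * D
        for j in range(N):
            if j == i:
                continue
            R = math.dist(X[i], X[j])  # Euclidean distance R_ij
            w = rng.random() * G * M[j] / (R + eps)  # rand_j * G * M_aj / (R_ij + eps)
            for d in range(D):
                acc[d] += w * (X[j][d] - X[i][d])
        r = rng.random()  # rand_i for the velocity update
        v = [r * V[i][d] + acc[d] for d in range(D)]
        new_V.append(v)
        new_X.append([X[i][d] + v[d] for d in range(D)])
    return new_X, new_V
```

Since every agent interacts with every other agent, one call costs on the order of N²D operations, which is the computational burden of the GSA referred to later in the text.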

2.2. Water Cycle Algorithm

The water cycle algorithm (WCA) is a meta-heuristic optimizer inspired by real-world observations of the water cycle and of how rivers and streams flow downhill towards the sea [38]. As in Section 2.1, a minimization problem $\min_{X \in \Omega} f(X)$ is considered, where $\Omega$ is the search space and $D$ is its dimension. The main components of the WCA are described as follows.

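In the WCA, after the population is initialized, the best individual is chosen as the sea, a number of the next-best individuals are chosen as rivers, and the remaining individuals are streams. Thus, the population can be written as

$$\text{Population} = \begin{bmatrix} \text{Sea} \\ \text{River}_1 \\ \vdots \\ \text{River}_{N_{sr}-1} \\ \text{Stream}_{N_{sr}+1} \\ \vdots \\ \text{Stream}_{N_{pop}} \end{bmatrix},$$

where $N_{pop}$ is the population size, while the total number of rivers and the sea, $N_{sr}$, and the number of streams, $N_{stream}$, are computed by

$$N_{sr} = \text{Number of rivers} + 1$$

and

$$N_{stream} = N_{pop} - N_{sr},$$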

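respectively. Moreover, the cost of a raindrop is calculated as follows:

$$C_n = Cost_n - Cost_{N_{sr}+1},$$

where $n = 1, 2, \ldots, N_{sr}$. Then the corresponding flow intensities of the rivers and the sea are calculated by

$$NS_n = \text{round}\left\{ \left| \frac{C_n}{\sum_{i=1}^{N_{sr}} C_i} \right| \times N_{stream} \right\},$$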

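where $NS_n$ is the number of streams flowing to a specific river or the sea. During the search process, the streams flowing to the sea and rivers, and the rivers flowing to the sea, are, respectively, updated by the following equations:

$$X_{stream}(t+1) = X_{stream}(t) + rand \times C \times \bigl(X_{sea}(t) - X_{stream}(t)\bigr),$$

$$X_{stream}(t+1) = X_{stream}(t) + rand \times C \times \bigl(X_{river}(t) - X_{stream}(t)\bigr),$$

$$X_{river}(t+1) = X_{river}(t) + rand \times C \times \bigl(X_{sea}(t) - X_{river}(t)\bigr),$$

where $t$ represents the current iteration number, $rand$ is a uniformly distributed random number in [0,1], and $C$ is a constant. In particular, when a new position is better than that of its corresponding river or sea, their positions are exchanged.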
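The stream allocation and the flow moves of the classical WCA can be sketched as follows (plain Python, minimization). The helper names are hypothetical, the default `C=2.0` is an assumed setting, one random number is drawn per dimension, and giving the rounding remainder to the sea is an implementation choice made here so that the counts always add up to the number of streams.

```python
import random


def flow_intensity(sorted_costs, n_sr):
    """Streams NS_n assigned to the sea (index 0) and to each river (1..n_sr-1).

    sorted_costs: objective values of the whole population in ascending order
                  (minimization), so sorted_costs[0] is the sea.
    n_sr:         total number of rivers plus the sea.
    """
    n_stream = len(sorted_costs) - n_sr
    # C_n = Cost_n - Cost_{N_sr+1}: cost of each leader relative to the best stream
    rel = [sorted_costs[n] - sorted_costs[n_sr] for n in range(n_sr)]
    total = sum(rel)
    if total == 0:  # degenerate case: leaders no better than the best stream
        rel, total = [1.0] * n_sr, float(n_sr)
    ns = [0] + [round(abs(rel[n] / total) * n_stream) for n in range(1, n_sr)]
    # Assumed implementation choice: the rounding remainder goes to the sea.
    ns[0] = n_stream - sum(ns[1:])
    return ns


def flow_towards(x, leader, C=2.0, rng=random):
    """X(t+1) = X(t) + rand * C * (X_leader(t) - X(t)), one rand per dimension.

    Used for a stream moving to its river or the sea, and for a river moving to the sea.
    """
    return [xi + rng.random() * C * (li - xi) for xi, li in zip(x, leader)]


# Example: 10 individuals, the sea plus 2 rivers, 7 streams to distribute.
print(flow_intensity([1, 2, 3, 4, 5, 6, 7, 8, 9, 10], 3))  # [4, 2, 1]
```

The better a leader is relative to the best stream, the larger its share of streams, so the sea receives the most.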

Furthermore, evaporation, one of the most important procedures in the WCA, is used to prevent premature convergence, and its detailed procedure is as follows.

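(a) If $\lVert X_{sea} - X_{river}^i \rVert < d_{max}$, $i = 1, 2, \ldots, N_{sr} - 1$, then the raining process is executed for the corresponding streams by

$$X_{stream}^{new} = LB + rand \times (UB - LB),$$

where $LB$ and $UB$ are the lower and upper bounds of the search space, respectively.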

(b) If the streams flowing to the sea satisfy the above evaporation condition, then let

$$X_{stream}^{new} = X_{sea} + \sqrt{\mu} \times randn(1, D),$$

where the coefficient $\mu$ represents the concept of variance and indicates the range of the search region around the best solution, $randn$ is a normally distributed random number, and $d_{max}$ is a small number close to zero that controls the search intensity. To enhance the search near the sea, $d_{max}$ decreases adaptively as follows:

$$d_{max}(t+1) = d_{max}(t) - \frac{d_{max}(t)}{T},$$

where $T$ is the maximum number of iterations.
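A minimal sketch of the two evaporation cases and the shrinking threshold, again in plain Python. The function names are hypothetical and `mu=0.1` is an assumed default; only the distance test stated in case (a) is implemented, and the caller decides which river and streams to pass in.

```python
import math
import random


def evaporate_and_rain(sea, river, streams, d_max, lb, ub, rng=random):
    """Case (a): if the river is closer to the sea than d_max, its streams are
    replaced by new raindrops drawn uniformly in [lb, ub]; otherwise unchanged."""
    if math.dist(sea, river) < d_max:
        return [[l + rng.random() * (u - l) for l, u in zip(lb, ub)] for _ in streams]
    return streams


def rain_near_sea(sea, mu=0.1, rng=random):
    """Case (b): X_new = X_sea + sqrt(mu) * randn(1, D); mu=0.1 is an assumed default."""
    return [s + math.sqrt(mu) * rng.gauss(0.0, 1.0) for s in sea]


def update_d_max(d_max, T):
    """d_max(t+1) = d_max(t) - d_max(t) / T, with T the maximum number of iterations."""
    return d_max - d_max / T
```

Each update multiplies the threshold by (1 − 1/T), so after T iterations it equals d_max(0)(1 − 1/T)^T, roughly d_max(0)/e for large T; the evaporation test therefore becomes stricter as the search proceeds.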

Overall, although the GSA and WCA have shown promising performance and have been applied to practical optimization problems [37,38], the WCA easily becomes trapped in local optima, since the streams and rivers always flow towards a few superior individuals, while the GSA requires a large amount of computing resources, since each object is attracted by the sum of the forces exerted by all other objects. Therefore, it is necessary to develop a more promising optimizer.

3. Proposed Algorithm

As mentioned above, the GSA has a strong global search ability, while the WCA performs better in local search. Consequently, we develop a novel hybrid algorithm (HMWCA) that makes full use of their respective advantages to balance exploration and exploitation.

3.1. Modified WCA

Although the WCA has been widely studied and successfully applied to various real-world optimization problems [33,34,35,36,38,39,40,44,45,46,47,48,52,53], it still easily suffers from premature convergence, especially on complicated functions. One possible reason is that the numbers of rivers, streams and the sea in the original WCA are fixed throughout the search once they are formed at the start of the algorithm, so the structure cannot adapt to the changing search state. Moreover, only the fitness values of the population are taken into account when forming the sea, rivers and their streams, which may lead to premature convergence because superior individuals are generally crowded together. To overcome these shortcomings, a modified WCA (MWCA) is proposed that considers both the fitness values and the positions of the population when forming the sea, rivers and streams, and that adjusts the total number of rivers and the sea adaptively.

As pointed out in [57], niching techniques can divide the whole population into smaller niches according to both fitness values and distances, and thereby maintain population diversity during the search. Here, a specific niching method, clustering for speciation [57], is modified to form the sea, rivers and their corresponding streams in the WCA. Accordingly, the flow intensity of each river and the sea is regarded as the cluster size in clustering for speciation.

To enhance the global search capability, the flow intensities of the rivers and the sea are set as follows:

and

where the floor operation returns the largest integer less than or equal to A. For clarity, the detailed procedure by which the modified niching approach generates the sea, rivers and streams is shown in Algorithm 1.

Algorithm 1. Modified niching approach.
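The body of Algorithm 1 is not reproduced here, so the following sketch shows only the basic clustering-for-speciation idea it builds on [57]: the best remaining individual becomes a seed (the sea first, then the rivers), and the individuals closest to it in Euclidean distance join its basin. The basin sizes are passed in as an assumed input (in the MWCA they would come from the flow-intensity setting), the function name is hypothetical, and the authors' own modifications are not modelled.

```python
import math


def clustering_for_speciation(pop, fitness, sizes):
    """Partition `pop` into basins, returned as (seed index, member indices).

    pop:     list of positions (lists of floats)
    fitness: objective values (minimization)
    sizes:   number of individuals in each basin, seed included
    """
    remaining = sorted(range(len(pop)), key=lambda i: fitness[i])
    basins = []
    for size in sizes:
        if not remaining:
            break
        seed = remaining.pop(0)  # best remaining individual leads the basin
        # the nearest remaining individuals (by position) join this basin
        remaining.sort(key=lambda i: math.dist(pop[i], pop[seed]))
        members = remaining[:size - 1]
        # leftover individuals are re-ranked by fitness for the next seed
        remaining = sorted(remaining[size - 1:], key=lambda i: fitness[i])
        basins.append((seed, members))
    return basins


pop = [[0.0], [0.1], [0.2], [5.0], [5.1], [5.2]]
fitness = [0.0, 3.0, 4.0, 1.0, 5.0, 6.0]
print(clustering_for_speciation(pop, fitness, [3, 3]))  # [(0, [1, 2]), (3, [4, 5])]
```

In the example, individuals 1 and 2 join the sea's basin because they lie next to it, even though they rank below individual 3 by fitness; this is the sense in which positions, and not only fitness, shape the basins.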

Moreover, to enhance exploitation in the later search stage, the total number of rivers and the sea is adjusted adaptively as follows:

where the rounding operation returns the nearest integer to A, and t and T are the current iteration number and the maximum number of iterations, respectively. Clearly, the total number of rivers and the sea decreases gradually as the iterations proceed, so its initial value plays an important role in the performance of the algorithm. Too small an initial value increases the chance of becoming trapped in local optima, while too large a value may reduce the algorithm's ability to exploit each promising region efficiently. Its value is therefore chosen according to the sensitivity experiments in Section 4.1. Whenever the total number of rivers and the sea changes, the sea, rivers and streams are reassigned by Algorithm 1.
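The reassignment rule (rebuild the basins only when the rounded total number of rivers and the sea changes) can be sketched as below. The paper's schedule equation is not reproduced here, so `n_sr_schedule` uses a purely hypothetical quadratic decay from an initial value `n0` down to 1; only the change-detection logic reflects the text, and both function names are illustrative.

```python
def n_sr_schedule(t, T, n0):
    """HYPOTHETICAL schedule (not the paper's equation): decays from n0 to 1."""
    return max(1, round(n0 * (1 - t / T) ** 2))


def reassignment_iterations(T, n0):
    """Iterations at which the basins would be rebuilt because N_sr changed."""
    events = []
    prev = n_sr_schedule(0, T, n0)
    for t in range(1, T + 1):
        cur = n_sr_schedule(t, T, n0)
        if cur != prev:
            events.append(t)  # here the niching step (Algorithm 1) would be re-run
            prev = cur
    return events


print(len(reassignment_iterations(100, 5)))  # 4 rebuilds: 5 -> 4 -> 3 -> 2 -> 1
```

Because the niching step runs only at these few iterations, its sorting and distance costs are incurred a small number of times, which matters for the complexity analysis in Section 3.4.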

By integrating the above changes into the classical WCA, a WCA variant (MWCA) is developed, and its framework is described in Algorithm 2. Unlike the classical WCA [38], both the fitness values and the positions of individuals are considered when forming the sea, rivers and their streams (see Steps 4–7 in Algorithm 1), and the total number of rivers and the sea is dynamically reduced during the search. Therefore, the MWCA helps to maintain population diversity while enhancing convergence. It is also worth noting that the modified niching method is closer to a real river system, in which streams flow to the nearest river. For convenience, a subpopulation consisting of the sea or a river and its corresponding streams is called a basin in the following.

Algorithm 2. The framework of the MWCA.

3.2. The Hybridization of MWCA and GSA

As described in the previous subsection, the MWCA can maintain population diversity and has a strong search capability. However, the sea, rivers and their streams are updated only by Equations (14)–(16), and these update rules are similar to the mutation in DE, which easily leads to premature convergence [40]. To overcome this shortcoming, a hybrid approach is designed that exploits the stronger exploration ability of the gravitational search to further enhance the search ability of the algorithm. Its concrete procedure is described below.

To balance exploration and exploitation effectively, the water cycle search and the gravitational search are applied alternately to update the population, based on their historical performance over a stage consisting of a fixed number of iterations. In detail, for each basin, the performance of the current operation is evaluated by its number of successful updates within the stage. Similar to [58], if this number satisfies the success condition, meaning that the operation performs well, the operation continues to be executed for this basin in the next stage; otherwise, the other operation is used. Clearly, the stage length is a key factor in the performance of the hybrid approach: a long stage cannot respond to the search state in time, while a short stage cannot measure performance precisely. Its value was chosen based on the experiments in Section 4.1.
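A per-basin switch between the two search operators, driven by the success count over a stage, might look like the following sketch. The class and constant names are hypothetical, a "success" is assumed to mean that an updated solution improved on its predecessor, and `min_successes` is a placeholder for the paper's acceptance condition, whose exact form is not given here.

```python
WATER, GRAVITY = "water_cycle", "gravitational"


class BasinOperatorSelector:
    """Chooses the search operator for one basin, stage by stage (hypothetical helper).

    Successes are counted over `stage_len` iterations. At the end of a stage the
    current operator is kept if the count reaches `min_successes` (a placeholder
    for the paper's condition); otherwise the other operator is used next.
    """

    def __init__(self, stage_len, min_successes, start=WATER):
        self.stage_len = stage_len
        self.min_successes = min_successes
        self.current = start
        self.successes = 0
        self.iters = 0

    def record(self, n_improved):
        """Register one iteration with `n_improved` successful updates and return
        the operator to use in the next iteration."""
        self.successes += n_improved
        self.iters += 1
        if self.iters == self.stage_len:  # end of stage: evaluate, then reset
            if self.successes < self.min_successes:
                self.current = GRAVITY if self.current == WATER else WATER
            self.successes = 0
            self.iters = 0
        return self.current
```

Keeping one selector per basin lets different regions of the search space use different operators at the same time, which is how the text describes the per-basin evaluation.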

Moreover, to reduce computational cost, unlike the traditional gravitational search algorithm [37], each object is attracted only by superior objects, as measured by their fitness values. Furthermore, to balance exploration and exploitation effectively and to accelerate convergence in the later search stage, the number of attracting objects is set as follows:

Clearly, the number of attracting objects decreases gradually as the iterations proceed. Hence, each object is attracted by almost all objects in the early search stage and by only a few superior objects in the later stage. This setting supports exploration early in the search and convergence later on.
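The reduced-cost attraction can be sketched by letting only the k best agents exert forces. The HMWCA's own schedule for k is not reproduced here; as a stand-in, the sketch uses the linear decrease from N to 1 that the original GSA [37] applies to its Kbest set, and the function names are hypothetical. Restricting the inner loop to k attractors reduces the cost of one agent's acceleration from order N·D to order k·D.

```python
import math
import random


def kbest(t, T, N):
    """Stand-in schedule (from the original GSA): linear decrease from N to 1."""
    return max(1, round(N - (N - 1) * t / T))


def attracting_agents(fitness, k):
    """Indices of the k best agents (minimization); only these exert forces."""
    return sorted(range(len(fitness)), key=lambda i: fitness[i])[:k]


def acceleration(i, X, M, attractors, G, eps=1e-10, rng=random):
    """Acceleration of agent i when only the agents in `attractors` attract it.

    M holds the normalized masses; the passive mass of agent i cancels with its
    inertial mass, so it does not appear.
    """
    acc = [0.0] * len(X[i])
    for j in attractors:
        if j == i:
            continue  # an agent does not attract itself
        w = rng.random() * G * M[j] / (math.dist(X[i], X[j]) + eps)
        for d in range(len(acc)):
            acc[d] += w * (X[j][d] - X[i][d])
    return acc
```

Early on, k is close to N and the update behaves like the full GSA; near the end, only the best few agents pull the others, which concentrates the search.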

Compared with the original WCA and GSA [37,38], the proposed hybrid approach can not only balance exploration and exploitation more effectively but also reduce the computational cost.

3.3. The Crossover Operation

Since the update rules for streams and rivers in the WCA are similar to the mutation in differential evolution, and in DE the mutation operator is always followed by a crossover operation, a crossover operation is added to the proposed method after the water cycle search or gravitational search.

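Specifically, the binomial crossover [54] is employed, and an adaptive method [59] is introduced to set its crossover rate. For a solution $X_i(t)$ (a river or a stream) at iteration $t$, once a new position $V_i(t)$ has been generated by the water cycle search or gravitational search, the final new position $U_i(t)$ is created by

$$u_i^d(t) = \begin{cases} v_i^d(t), & \text{if } rand \le CR_i \text{ or } d = j_{rand}, \\ x_i^d(t), & \text{otherwise}, \end{cases}$$

where $d = 1, 2, \ldots, D$, $CR_i$ is the crossover rate, $v_i^d(t)$ denotes the $d$th dimension of $V_i(t)$, and $j_{rand}$ is a random integer within $\{1, 2, \ldots, D\}$, which ensures that $U_i(t)$ receives at least one component from $V_i(t)$. Moreover, the value of $CR_i$ is generated by

$$CR_i = \mathcal{N}(\mu_{CR}, \sigma),$$

where $\mathcal{N}(\mu_{CR}, \sigma)$ is the normal distribution with standard deviation $\sigma$ and mean $\mu_{CR}$, which is updated by

$$\mu_{CR} = (1 - c) \cdot \mu_{CR} + c \cdot \text{mean}_{WA}(S_{CR}).$$

Herein, $\text{mean}_{WA}(\cdot)$ is the weighted arithmetic mean [59], and $S_{CR}$ denotes the set of all successful $CR$ values at iteration $t$. Furthermore, similar to [59], the learning rate $c$ and the initial value of $\mu_{CR}$ are set following [59].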
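The binomial crossover and the adaptive crossover rate can be sketched as follows. The formulas follow the description above, but the defaults `sigma=0.1` and `c=0.1` are assumptions, and the weights of the weighted arithmetic mean are assumed to be proportional to the fitness improvement of each successful solution (as in SHADE-style adaptation); the exact choices of [59] may differ. The function names are illustrative.

```python
import random


def binomial_crossover(x, v, cr, rng=random):
    """u_d = v_d if rand < CR or d == j_rand, else x_d.

    x: current solution (river or stream); v: position produced by the water
    cycle search or gravitational search. j_rand guarantees that at least one
    component comes from v. (rand < CR has the same probability as rand <= CR.)
    """
    j_rand = rng.randrange(len(x))
    return [vd if (rng.random() < cr or d == j_rand) else xd
            for d, (xd, vd) in enumerate(zip(x, v))]


def sample_cr(mu_cr, sigma=0.1, rng=random):
    """CR ~ N(mu_CR, sigma), clipped to [0, 1]; sigma=0.1 is an assumed value."""
    return min(1.0, max(0.0, rng.gauss(mu_cr, sigma)))


def update_mu_cr(mu_cr, s_cr, improvements, c=0.1):
    """mu_CR <- (1 - c) * mu_CR + c * mean_WA(S_CR).

    s_cr: CR values of this iteration's successful solutions; improvements:
    their fitness gains, used as (assumed) weights of the arithmetic mean.
    """
    if not s_cr:
        return mu_cr  # no successful CR values: keep the current mean
    total = sum(improvements)
    if total > 0:
        weights = [imp / total for imp in improvements]
    else:
        weights = [1.0 / len(s_cr)] * len(s_cr)
    mean_wa = sum(w * cr for w, cr in zip(weights, s_cr))
    return (1 - c) * mu_cr + c * mean_wa
```

With a small `c`, the mean crossover rate drifts slowly towards the values that recently produced improvements, so a single lucky success does not dominate the setting.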

In summary, the binomial crossover operation with an adaptive crossover rate is incorporated into the proposed algorithm to update all solutions during the search. This operation can further adjust the search ability of the algorithm and adaptively control the transmission of search information.

By integrating the above components, we obtain the framework of the proposed algorithm, described in Algorithm 3.

As Algorithm 3 shows, the population is first divided into basins by the modified niching method. Next, the objects in each basin are updated by the hybrid approach and the binomial crossover, after which the evaporation condition is checked. Finally, is updated and the condition for forming new basins is checked; if it is satisfied, the population is divided again. Unlike the classical WCA [38], the sea, rivers and streams are formed by the modified niching method to enhance population diversity, and the population is updated by the hybrid approach and the binomial crossover operation to balance exploration and exploitation. Therefore, the HMWCA is expected to escape from local optima more easily and to locate the global optimum more reliably.

Algorithm 3. The framework of the HMWCA.

3.4. Complexity Analysis

In this subsection, the computational complexity of the HMWCA is analysed. The main differences between the HMWCA and the original WCA [38] are the modified niching method, the hybridization of the water cycle search and gravitational search, and the binomial crossover operation. According to [38], the complexity of the original WCA is .

The modified niching method must sort the population by fitness and calculate the distances between the sea or rivers and the rest of the population; note that it is performed only times during the whole search process. The complexities of these two steps are and , respectively, so the complexity of the modified niching method is . Meanwhile, according to [37,38], the complexities of the water cycle search and gravitational search are and , respectively, and the complexity of the criterion for alternating between them is . Hence, the complexity of the hybridization of the water cycle search and gravitational search is . Moreover, according to [60], the complexity of the binomial crossover operation is .

Overall, the total complexity of the HMWCA is , which can be simplified as . Note that in the HMWCA the gravitational search is applied only alternately with the water cycle search, and each object is attracted only by superior objects, whereas the complexity of the original GSA is . Therefore, the complexity of the HMWCA is acceptable.

4. Numerical Experiments

In this section, the performance of the proposed HMWCA is evaluated on the 30 well-known benchmark functions F1–F30 from CEC2014 with both and [55]. The sensitivity of the parameters of the HMWCA is examined on eight typical functions: unimodal functions and , simple multimodal functions and , hybrid functions and , and composition functions and . Moreover, four variants of the HMWCA are designed and compared to show the effects of the proposed components. Finally, the HMWCA is compared with six well-known optimization algorithms, namely the original WCA [38], two competitive WCA variants (ER_WCA [39] and CWCA [40]), the original GSA [37] and two other well-known heuristic algorithms (CLPSO [22] and HSOGA [61]), to evaluate its efficiency.

In these experiments, the maximum number of function evaluations was set to 60,000 for all problems in both dimensions, and each problem was run independently 25 times. For fair comparison, the population size of the HMWCA was set to 50, the same as in the original WCA and its two variants, ER_WCA and CWCA.

4.1. The Sensitivities of Parameters and

In this subsection, the sensitivity of the parameters of the HMWCA is examined through a series of tuning experiments. In particular, to obtain reasonable values of and , the HMWCA was run on the eight typical functions listed above, varying and from 4 to 7 and from 5 to 20 in steps of 1 and 5, respectively. Table 1 reports the experimental results, where "Mean Error" and "Std Dev" represent the mean and standard deviation of the error values obtained over 25 runs, respectively, and the best result for each function is marked in bold (the same applies below).

Table 1.

Experimental results of the HMWCA with different values for and .

From Table 1, , , , and obtain the best values when = 15 and = 5, obtains the best values when = 10, = 10 and 15, obtains the best values when = 10 and = 8, while obtains the best values when = 15 and = 8. It can also be seen that no best values are obtained when = 5 or 20 and only two are obtained when = 10, while the HMWCA obtains 4, 2 and 2 best values when = 5, 8 and 10, respectively. Hence, should be neither too small nor too large, and 15 and 5 are reasonable choices for and , respectively, for most functions. Therefore, we set = 15 and = 5 in this paper.

4.2. The Effectiveness of the Proposed Components in the HMWCA

To clearly show the effects of the modified niching method, the hybridization of the water cycle search and gravitational search, and the binomial crossover operation on the performance of the HMWCA, we first designed four variants of the HMWCA as follows.

- (1) HMWCA1: the sea, rivers and streams are formed by the method of the original WCA [38] instead of the modified niching method.

- (2) HMWCA2: the population is updated only by the water cycle search during the whole search process.

- (3) HMWCA3: the binomial crossover operation is not applied after the water cycle search or gravitational search at each generation.

- (4) HMWCA4: the total number of rivers and the sea is kept fixed during the search process; similar to the original WCA [38] and its variants [39,40], it is set to 4.

Then, the HMWCA was compared with these four variants on all CEC2014 problems with D = 30. The experimental results are listed in Table 2, and the rank of each algorithm on each problem is used to measure its performance.

Table 2.

Experimental results of the HMWCA and its four variants on the functions with D = 30.

From Table 2, for the unimodal functions F1–F3, the HMWCA obtains the best results for and the HMWCA2 obtains the best results for and , possibly because the gravitational search weakens the local search ability. For the simple multimodal functions F4–F16, the HMWCA obtains the best results, except that the HMWCA2 achieves the best result for and the HMWCA1 achieves the best result for . In particular, the HMWCA obtains top results for comparable to those of the HMWCA1, HMWCA2 and HMWCA3. For the hybrid functions F17–F22, the HMWCA has the best results, except that the HMWCA2 achieves the best results for and . For the composition functions F23–F30, the HMWCA obtains the best values for , and , the HMWCA2 obtains the best values for , and , and the HMWCA3 attains the best values for and . Furthermore, the HMWCA1, HMWCA2, HMWCA3, HMWCA4 and HMWCA obtain total ranks of 75, 67, 107, 141 and 44 over all problems, i.e., average ranks of about 2.50, 2.23, 3.57, 4.70 and 1.47, respectively. These results indicate that the modified niching method, the hybridization of the water cycle search and gravitational search, the binomial crossover operation and the adaptive adjustment of the total number of rivers and the sea all help to enhance the search performance of the HMWCA.
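The rank aggregation for the ablation study is simple arithmetic: each total rank is divided by the number of functions (30) to give an average rank, and a lower value is better. The snippet below reproduces this from the reported totals; it is a plain-Python illustration, and the variable names are introduced here.

```python
# Total ranks of the HMWCA and its four variants over the 30 CEC2014
# functions with D = 30, as reported in the text.
total_rank = {"HMWCA1": 75, "HMWCA2": 67, "HMWCA3": 107, "HMWCA4": 141, "HMWCA": 44}
N_FUNCTIONS = 30

# Average rank = total rank / number of functions (lower is better).
average_rank = {name: total / N_FUNCTIONS for name, total in total_rank.items()}
ordering = sorted(average_rank, key=average_rank.get)

for name in ordering:
    print(f"{name:7s} total = {total_rank[name]:3d}  average = {average_rank[name]:.2f}")
```

The largest gap is between the HMWCA and the HMWCA4, which keeps the number of rivers and the sea fixed.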

4.3. Comparisons and Discussion

In this subsection, to evaluate the performance of the HMWCA, it is compared with six well-known optimization algorithms, namely the original WCA [38], two competitive WCA variants (ER_WCA [39] and CWCA [40]), the original GSA [37] and two other well-known heuristic algorithms (CLPSO [22] and HSOGA [61]), on the CEC2014 problems with both and . Among these algorithms, ER_WCA [39] is an improved version of the WCA [38] that balances exploration and exploitation by defining a new concept of evaporation rate for different rivers and streams. CWCA [40] is a well-known WCA variant that incorporates chaotic patterns into the stochastic processes of the WCA. CLPSO [22] is an improved PSO that uses the personal historical best information of all particles to update each particle's velocity, and HSOGA [61] is a hybrid self-adaptive orthogonal genetic algorithm with a self-adaptive orthogonal crossover operator.

In our experiments, to ensure fair comparisons, the maximum number of function evaluations was set to 60,000 for all the above algorithms, and the population size of the HMWCA was set to 50, consistent with the traditional WCA, ER_WCA and CWCA. The other parameters involved are listed in Table 3. The parameters of the remaining three algorithms (the GSA, CLPSO and HSOGA) were set as in their original papers.

Table 3.

Parameter settings.

Furthermore, the average error (Mean Error) and standard deviation (Std Dev) of the function errors over 25 independent runs were recorded to measure performance, and Wilcoxon’s rank sum test () and ranking were applied to the experimental results to draw statistically sound conclusions. Table 4 and Table 5 report the experimental results for all problems with and , respectively, where the best result for each function is marked in bold, and “+”, “−” and “≈” denote that the HMWCA performs better than, worse than, or equivalently to the corresponding algorithm, respectively.
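The per-function statistics and the “+”, “−” and “≈” marks can be reproduced roughly as follows. This is a minimal sketch that implements the two-sided Wilcoxon rank-sum test with the large-sample normal approximation (tied values receive average ranks, no tie correction to the variance); it assumes a significance level of 0.05, since the level used in the paper is not shown here, and decides the direction of a significant difference by comparing mean errors. In practice a library routine such as SciPy's `scipy.stats.ranksums` would usually be used instead.

```python
import math
from statistics import mean, stdev
from typing import List, Tuple


def mean_and_std(errors: List[float]) -> Tuple[float, float]:
    """Mean Error and Std Dev over independent runs (sample standard deviation)."""
    return mean(errors), stdev(errors)


def rank_sum_pvalue(x: List[float], y: List[float]) -> float:
    """Two-sided Wilcoxon rank-sum test, normal approximation; ties get average ranks."""
    pooled = sorted((v, i) for i, v in enumerate(x + y))  # (value, original index)
    ranks = [0.0] * len(pooled)
    pos = 0
    while pos < len(pooled):
        end = pos
        while end + 1 < len(pooled) and pooled[end + 1][0] == pooled[pos][0]:
            end += 1
        for k in range(pos, end + 1):
            ranks[pooled[k][1]] = (pos + end) / 2 + 1
        pos = end + 1
    n1, n2 = len(x), len(y)
    w = sum(ranks[:n1])  # rank sum of the first sample
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def compare(hmwca: List[float], other: List[float], alpha: float = 0.05) -> str:
    """'+' if HMWCA errors are significantly lower, '-' if significantly higher, else '≈'."""
    if rank_sum_pvalue(hmwca, other) >= alpha:
        return "≈"
    return "+" if mean(hmwca) < mean(other) else "-"


if __name__ == "__main__":
    a = [1.0 + 0.01 * i for i in range(25)]  # hypothetical HMWCA errors (25 runs)
    b = [2.0 + 0.01 * i for i in range(25)]  # hypothetical competitor errors
    print(compare(a, b), compare(b, a), compare(a, a))  # + - ≈
```

Counting the marks over all 30 functions gives the better/equivalent/worse tallies reported for Tables 4 and 5.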

Table 4.

Experimental results of the HMWCA and six well-known algorithms on the functions with .

Table 5.

Experimental results of the HMWCA and six well-known algorithms on the functions with .

From Table 4, we can see that for unimodal functions –, the HMWCA obtains the best results for all functions except , on which the ER_WCA has the best result and the HMWCA ranks third while remaining significantly better than the CWCA, GSA, CLPSO and HSOGA. For simple multimodal functions –, the HMWCA obtains the best results for most functions, including , , , – and , whereas CLPSO attains the best results for , , and , the GSA achieves the best results for , and , and the CWCA has the best result for . For hybrid functions –, the HMWCA obtains the best results for , and , the ER_WCA has the best results for and , and CLPSO has the best result for . For composition functions –, the HMWCA obtains the best results for and –, the GSA obtains the best results for –, and CLPSO achieves the best result for . In summary, according to the Wilcoxon’s rank sum tests reported in Table 4, the HMWCA is better than the WCA, CWCA, ER_WCA, GSA, CLPSO and HSOGA on 29, 25, 27, 24, 23 and 29 test functions, equivalent on 0, 2, 0, 1, 0 and 0 test functions, and worse on 1, 3, 3, 5, 7 and 1 test functions, respectively. Moreover, the average ranks of the WCA, CWCA, ER_WCA, GSA, CLPSO, HSOGA and HMWCA over all functions are , , , , , and , respectively.

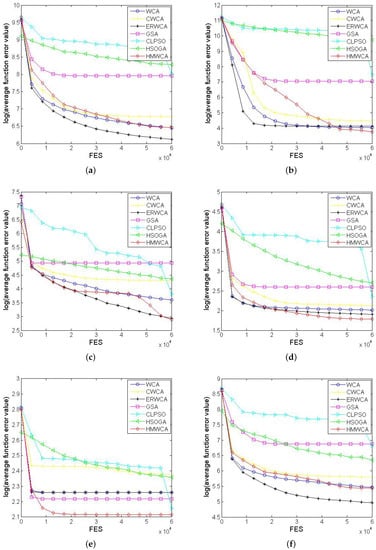

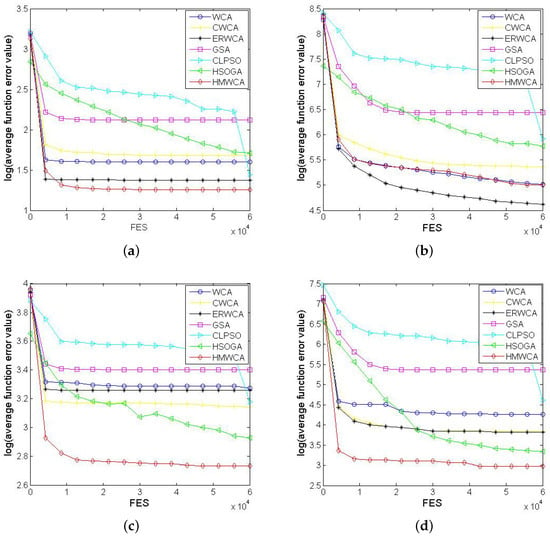

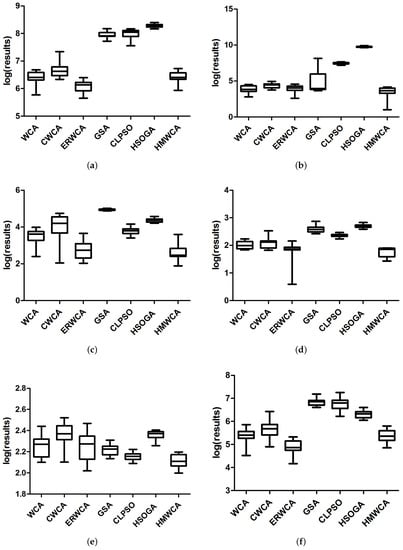

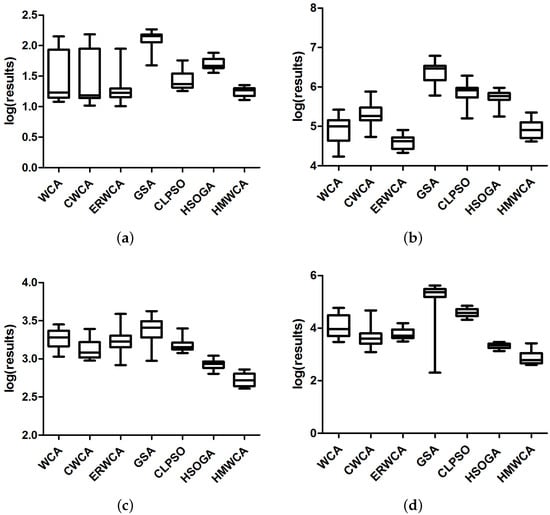

For clarity, the evolution curves of the compared algorithms on ten functions, namely , , , , , , , , and , are plotted in Figure 1 and Figure 2, and their results over 25 independent runs are compared in Figure 3 and Figure 4. These figures show that the HMWCA performs more promisingly than the other algorithms. In detail, for unimodal functions and , the HMWCA shows a better exploration ability than the WCA and ER_WCA, but a worse one than the GSA. Meanwhile, for complex functions, the HMWCA performs better on most functions, especially , , and . These results confirm the effectiveness of the HMWCA.
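Evolution curves such as those in Figures 1 and 2 are usually drawn from the best-so-far error logged during a run. The sketch below is a hypothetical evaluation wrapper (not the authors' code), assuming that the error is f(x) minus the known optimum value of the test function and that the curve is sampled every fixed number of function evaluations; plain random search on a sphere function stands in for the optimizer.

```python
import math
import random
from typing import Callable, List, Sequence


class CurveRecorder:
    """Hypothetical wrapper: counts evaluations and logs the best-so-far error."""

    def __init__(self, func: Callable[[Sequence[float]], float], f_opt: float,
                 max_fes: int, sample_every: int):
        self.func, self.f_opt = func, f_opt
        self.max_fes, self.sample_every = max_fes, sample_every
        self.fes = 0
        self.best_error = math.inf
        self.curve: List[float] = []  # best-so-far error at each checkpoint

    def __call__(self, x: Sequence[float]) -> float:
        if self.fes >= self.max_fes:
            raise RuntimeError("evaluation budget exhausted")
        value = self.func(x)
        self.fes += 1
        self.best_error = min(self.best_error, value - self.f_opt)
        if self.fes % self.sample_every == 0:
            self.curve.append(self.best_error)
        return value


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


if __name__ == "__main__":
    rng = random.Random(1)
    rec = CurveRecorder(sphere, f_opt=0.0, max_fes=1000, sample_every=100)
    while rec.fes < rec.max_fes:  # random search as a placeholder optimizer
        rec([rng.uniform(-100.0, 100.0) for _ in range(10)])
    print(len(rec.curve))  # 10 checkpoints
```

Averaging `curve` over independent runs and plotting it on a logarithmic axis gives curves of the kind shown in the figures.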

Figure 1.

The evolution curves of the seven studied algorithms for functions (a) , (b) , (c) , (d) , (e) and (f) with .

Figure 2.

The evolution curves of the seven studied algorithms for functions (a) , (b) , (c) , (d) with .

Figure 3.

The comparisons of the results of the seven studied algorithms for the functions (a) , (b) , (c) , (d) , (e) and (f) with .

Figure 4.

The comparisons of the results of the seven studied algorithms for the functions (a) , (b) , (c) , (d) with .

From Table 5, we can see that for unimodal functions –, the HMWCA is significantly better than the CWCA, GSA, CLPSO and HSOGA, while the ER_WCA achieves the best results for all of these functions. This might be because the gravitational search reduces the exploitation ability of the HMWCA. For simple multimodal functions –, the HMWCA obtains the best results for , and , the ER_WCA obtains the best results for and , the CWCA, GSA and WCA have the best results for , and , respectively, and CLPSO obtains the best results for , and . In particular, the HMWCA and GSA both obtain the best result for , while the CWCA and CLPSO both have the best result for . For hybrid functions –, the HMWCA obtains the best results for , and , while the ER_WCA has the best results for , and . For composition functions –, the HMWCA obtains the best results for and , the GSA has the best results for and , the HSOGA obtains the best results for –, and the CWCA obtains the best result for . According to the Wilcoxon’s rank sum tests reported in Table 5, the HMWCA is better than the WCA, CWCA, ER_WCA, GSA, CLPSO and HSOGA on 22, 24, 21, 24, 22 and 26 test functions, equivalent on 0, 0, 0, 1, 0 and 0 test functions, and worse on 8, 6, 9, 5, 8 and 4 test functions, respectively. Furthermore, the average ranks of the WCA, CWCA, ER_WCA, GSA, CLPSO, HSOGA and HMWCA over all functions are , , , , , and , respectively. Therefore, the HMWCA is a more promising optimizer than the compared algorithms on this test suite.

4.4. Algorithm Efficiency

In this subsection, the HMWCA is compared with the WCA and GSA in terms of running time on six typical functions, namely , , , , and . This experiment was conducted in MATLAB R2015a on a personal computer (Pentium(R) Dual-Core CPU at 3.06 GHz and 4 GB memory), and the average running time over 25 independent runs was recorded to evaluate efficiency. The maximum number of function evaluations was used as the termination condition and set to 60,000 for all algorithms. Table 6 reports the results, where the time is the CPU time consumed until the termination condition is met.
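The timing protocol above (average CPU time over 25 independent runs, each stopped after 60,000 function evaluations) could be reproduced along the following lines. The paper's experiments were run in MATLAB; this Python sketch only illustrates the measurement, using `time.process_time` for CPU time, and the `random_search` function is a hypothetical stand-in for the optimizer under test.

```python
import random
import time
from statistics import mean
from typing import Callable, List, Sequence

Objective = Callable[[Sequence[float]], float]

MAX_FES = 60_000  # termination condition used in the paper
RUNS = 25         # number of independent runs


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


def random_search(func: Objective, dim: int, max_fes: int, seed: int) -> float:
    """Placeholder optimizer: uniform sampling in [-100, 100]^dim until the budget is spent."""
    rng = random.Random(seed)
    best = float("inf")
    for _ in range(max_fes):
        x = [rng.uniform(-100.0, 100.0) for _ in range(dim)]
        best = min(best, func(x))
    return best


def average_cpu_time(optimizer: Callable[[Objective, int, int, int], float],
                     func: Objective, dim: int, runs: int = RUNS,
                     max_fes: int = MAX_FES) -> float:
    """Mean CPU time (seconds) of `runs` independent runs, each with its own seed."""
    times: List[float] = []
    for seed in range(runs):
        start = time.process_time()
        optimizer(func, dim, max_fes, seed)
        times.append(time.process_time() - start)
    return mean(times)
```

For example, `average_cpu_time(random_search, sphere, dim=30)` runs the full 25 × 60,000-evaluation protocol; CPU time rather than wall-clock time is measured so that other processes on the machine affect the result less.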

Table 6.

The average CPU time of the HMWCA, WCA and GSA.

From Table 6, we can see that the HMWCA is faster than the GSA but slower than the WCA. This is because the water cycle search and gravitational search are applied alternately during the search process according to their performance, and a gravitational search iteration, which computes the attraction between pairs of agents, is more expensive than a water cycle iteration. As a result, the HMWCA costs more time than the WCA but less than the GSA. Thus, the HMWCA has an acceptable computational cost.
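The extra cost of the gravitational search comes from its force computation: in the standard GSA [37], each agent is attracted by up to all other agents, so one iteration needs O(N²D) operations for N agents in D dimensions, whereas moving each stream toward its guide in the WCA needs O(ND). A minimal sketch of the standard GSA acceleration step, assuming minimization, all agents acting as attractors, and a given gravitational constant `G`; it is not the HMWCA's implementation.

```python
import math
import random
from typing import List

Vector = List[float]


def gsa_accelerations(pos: List[Vector], fit: List[float], G: float,
                      rng: random.Random, eps: float = 1e-12) -> List[Vector]:
    """Accelerations of all agents in the standard GSA (minimization).

    Every other agent acts as an attractor, so the nested loops cost O(N^2 * D)
    per iteration, compared with O(N * D) for a water cycle position update.
    """
    n, dim = len(pos), len(pos[0])
    best, worst = min(fit), max(fit)
    if best == worst:
        masses = [1.0 / n] * n  # all agents equally fit
    else:
        raw = [(f - worst) / (best - worst) for f in fit]  # best -> 1, worst -> 0
        total = sum(raw)
        masses = [m / total for m in raw]  # normalized masses M_j
    acc = [[0.0] * dim for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            r = math.dist(pos[i], pos[j])  # Euclidean distance R_ij
            # Dividing the force by agent i's mass leaves G * M_j / (R_ij + eps).
            coef = rng.random() * G * masses[j] / (r + eps)
            for d in range(dim):
                acc[i][d] += coef * (pos[j][d] - pos[i][d])
    return acc
```

The velocity and position updates that follow are linear in N, so the double loop dominates, which is consistent with the ordering of CPU times in Table 6.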

4.5. Application

Herein, the HMWCA is further applied to a practical problem, the spread-spectrum radar polyphase code design (SSRPPCD) [56]. This problem aims to select an appropriate waveform and can be modelled as the following min–max nonlinear, nonconvex optimization problem.

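$$\min_{x \in X} f(x) = \max\{\varphi_1(x), \ldots, \varphi_{2m}(x)\}, \qquad X = \{x \in \mathbb{R}^n \mid 0 \le x_j \le 2\pi,\ j = 1, \ldots, n\},$$

where $m = 2n - 1$,

$$\varphi_{2i-1}(x) = \sum_{j=i}^{n} \cos\Big(\sum_{k=|2i-j-1|+1}^{j} x_k\Big), \quad i = 1, \ldots, n,$$

$$\varphi_{2i}(x) = 0.5 + \sum_{j=i+1}^{n} \cos\Big(\sum_{k=|2i-j|+1}^{j} x_k\Big), \quad i = 1, \ldots, n-1,$$

and $\varphi_{m+i}(x) = -\varphi_i(x)$ for $i = 1, \ldots, m$.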

Clearly, the objective function is piecewise smooth and has numerous local optima. Table 7 lists the numerical results of the HMWCA, WCA, CWCA and ER_WCA over 25 runs with n = 30 and a maximum of 60,000 function evaluations, where the best result among them is marked in bold. From Table 7, one can see that the HMWCA has the best results in terms of the “Best”, “Worst” and “Average” values, while it performs worse than the CWCA and ER_WCA in terms of the standard deviation. Therefore, the HMWCA is more promising for this problem.
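For readers who want to evaluate candidate codes directly, the following is a minimal Python sketch of the SSRPPCD objective. It follows the min–max formulation in the CEC 2011 problem definitions [56] (m = 2n − 1, the odd and even φ terms built from cosines of partial sums of x, and φ_{m+i} = −φ_i); the index bounds in `ssrppcd` are transcribed from that formulation and should be checked against the report, and this is not the authors' code.

```python
import math
import random
from typing import Sequence


def ssrppcd(x: Sequence[float]) -> float:
    """Spread-spectrum radar polyphase code design objective (to be minimized)."""
    n = len(x)
    m = 2 * n - 1

    def partial_sum(lo: int, hi: int) -> float:
        # Sum of x_k for k = lo..hi, using the 1-based indices of the formulation.
        return sum(x[k - 1] for k in range(lo, hi + 1))

    phi = [0.0] * (2 * m + 1)  # phi[1..2m]; index 0 is unused
    for i in range(1, n + 1):  # odd terms phi_{2i-1}
        phi[2 * i - 1] = sum(math.cos(partial_sum(abs(2 * i - j - 1) + 1, j))
                             for j in range(i, n + 1))
    for i in range(1, n):  # even terms phi_{2i}
        phi[2 * i] = 0.5 + sum(math.cos(partial_sum(abs(2 * i - j) + 1, j))
                               for j in range(i + 1, n + 1))
    for i in range(1, m + 1):  # mirrored terms phi_{m+i} = -phi_i
        phi[m + i] = -phi[i]
    return max(phi[1:])


if __name__ == "__main__":
    rng = random.Random(0)
    x = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(30)]  # n = 30 as in Table 7
    print(ssrppcd(x))
```

Because every φ_i appears together with −φ_i, the objective equals the largest |φ_i| and is never negative; the max over many cosine sums is what makes the landscape piecewise smooth and highly multimodal.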

Table 7.

Numerical results of the HMWCA on the SSRPPCD.

5. Conclusions

To integrate the advantages of the WCA and GSA, this paper proposed the HMWCA, which hybridizes a modified WCA with the GSA, for global optimization. To effectively balance exploitation and exploration, a modified niching technique was first designed and used to assign the sea, rivers and their corresponding streams in the WCA, and the total number of the sea and rivers was adjusted adaptively during the search process. Moreover, the gravitational search and water cycle search were combined and applied alternately to explore or exploit the search space according to their performance over a certain number of iterations. Furthermore, to improve the search capability, the binomial crossover operation was applied after the water cycle search or gravitational search, with its parameter set by an adaptive method. Finally, the HMWCA was compared with six well-known optimization algorithms on the 30 IEEE CEC2014 benchmark functions with different dimensions, and applied to the spread-spectrum radar polyphase code design (SSRPPCD) problem. The experimental results show that the proposed algorithm is very competitive.
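The binomial crossover mentioned above is the standard operator from differential evolution. A minimal sketch, assuming the trial vector mixes the candidate produced by the water cycle or gravitational step (`mutant`) with the current solution (`target`) under a crossover rate `cr`; the paper sets this rate adaptively, which is not reproduced here, so `cr` is simply passed in.

```python
import random
from typing import List, Sequence


def binomial_crossover(target: Sequence[float], mutant: Sequence[float],
                       cr: float, rng: random.Random) -> List[float]:
    """Return a trial vector: take mutant[j] with probability cr, else target[j].

    One randomly chosen dimension j_rand always comes from the mutant, so the
    trial vector differs from the target in at least one component.
    """
    dim = len(target)
    j_rand = rng.randrange(dim)
    return [mutant[j] if (rng.random() <= cr or j == j_rand) else target[j]
            for j in range(dim)]


if __name__ == "__main__":
    rng = random.Random(42)
    print(binomial_crossover([0.0] * 6, [1.0] * 6, cr=0.5, rng=rng))
```

A small `cr` keeps the trial close to the current solution (exploitation), while a large `cr` passes most of the new candidate through (exploration), which is why adapting it during the search is useful.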

Future research should focus on applying the HMWCA to constrained optimization problems and to further real-world optimization problems to test its performance more thoroughly. Further work should also design other improved optimization approaches by hybridising the WCA with other heuristic algorithms.

Author Contributions

Conceptualization, M.T. and J.L.; methodology, M.T.; software, M.T.; validation, J.L., W.Y. and J.Z.; formal analysis, M.T.; investigation, M.T.; resources, W.Y.; data curation, J.Z.; writing—original draft preparation, M.T.; writing—review and editing, W.Y. and J.Z.; visualization, M.T.; supervision, M.T.; project administration, J.L. and J.Z.; funding acquisition, J.L. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Natural Science Basic Research Program of the Shaanxi Province of China No. 2022JQ-624, the Fund of Science and Technology Department of Shaanxi Province No. 2023-JC-QN-0097, and the Scientific Research Startup Foundation of Xi’an Polytechnic University (BS202052).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated and analysed during the current study are available from the corresponding author on reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sha, D.Y.; Hsu, C.Y. A new particle swarm optimization for the open shop scheduling problem. Comput. Oper. Res. 2008, 35, 3243–3261.

2. Zhang, B.; Pan, Q.K.; Meng, L.L.; Lu, C.; Mou, J.H.; Li, J.Q. An automatic multi-objective evolutionary algorithm for the hybrid flowshop scheduling problem with consistent sublots. Knowl.-Based Syst. 2022, 238, 107819.

3. Das, R.; Akay, B.; Singla, R.K.; Singh, K. Application of artificial bee colony algorithm for inverse modelling of a solar collector. Inverse Probl. Sci. Eng. 2017, 25, 887–908.

4. Omran, M.G.; Engelbrecht, A.P.; Salman, A.A. Differential evolution methods for unsupervised image classification, 2008. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC 2005), Edinburgh, UK, 2–4 September 2005.

5. Zhang, Z.; Han, Y. Discrete sparrow search algorithm for symmetric traveling salesman problem. Appl. Soft Comput. 2022, 118, 108469.

6. Kennedy, J.; Eberhart, R. Particle Swarm Optimization, 1995. In Proceedings of the Icnn95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995.

7. Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology. In Control and Artificial Intelligence; University of Michigan Press: Ann Arbor, MI, USA, 1975.

8. Storn, R. Differential evolution-a simple and efficient heuristic for global optimization over continuous space. J. Glob. Optim. 1997, 11, 341.

9. Colorni, A. Distributed optimization by ant colonies. In Proceedings of the First European Conference on Artificial Life, Paris, France, 11–13 December 1991.

10. Ying, T.; Zhu, Y. Fireworks Algorithm for Optimization, 2010. In Proceedings of the First International Conference, ICSI 2010, Beijing, China, 12–15 June 2010.

11. Sun, G.; Zhao, R.; Lan, Y. Joint operations algorithm for large-scale global optimization. Appl. Soft Comput. 2016, 38, 1025–1039.

12. Jain, M.; Singh, V.; Rani, A. A novel nature-inspired algorithm for optimization: Squirrel search algorithm. Swarm Evol. Comput. 2018, 44, 148–175.

13. Wagdy, A.; Khater, A.; Hadi, A.A. Gaining-sharing knowledge based algorithm for solving optimization problems Algorithm. Int. J. Mach. Learn. Cybern. 2020, 11, 1501–1529.

14. Ma, Z.Q.; Wu, G.H.; Suganthan, P.N.; Song, A.J.; Luo, Q.Z. Performance assessment and exhaustive listing of 500+ nature-inspired metaheuristic algorithms. Swarm Evol. Comput. 2023, 77, 101248.

15. Ilonen, J.; Kamarainen, J.K.; Lampinen, J. Differential Evolution Training Algorithm for Feed-Forward Neural Networks. Neural Process. Lett. 2003, 17, 93–105.

16. Bello, R.; Gomez, Y.; Nowe, A.; Garcia, M.M. Two-Step Particle Swarm Optimization to Solve the Feature Selection Problem, 2007. In Proceedings of the International Conference on Intelligent Systems Design & Applications, Rio de Janeiro, Brazil, 20–24 October 2007.

17. Cuevas, E.; Zaldivar, D.; Perez-Cisneros, M. A novel multi-threshold segmentation approach based on differential evolution optimization. Expert Syst. Appl. 2010, 37, 5265–5271.

18. Li, J.Y.; Zhan, Z.H.; Tan, K.C.; Zhang, J. A Meta-knowledge transfer-based differential evolution for multitask optimization. IEEE Trans. Evol. Comput. 2022, 26, 719–734.

19. Liao, Z.W.; Mi, X.Y.; Pang, Q.S.; Sun, Y. History archive assisted niching differential evolution with variable neighborhood for multimodal optimization. Swarm Evol. Comput. 2023, 76, 101206.

20. Chen, J.X.; Gong, Y.J.; Chen, W.N.; Li, M.T.; Zhang, J. Elastic differential evolution for automatic data clustering. IEEE Trans. Cybern. 2021, 51, 4134–4147.

21. Hrstka, O.; Kučerová, A. Improvement of real coded genetic algorithm based on differential operators preventing premature convergence. Adv. Eng. Softw. 2004, 35, 237–246.

22. Liang, J.J.; Qin, A.K.; Suganthan, P.N.; Baskar, S. Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans. Evol. Comput. 2006, 10, 281–295.

23. Sun, G.; Zhang, A.; Wang, Z.; Yao, Y.; Ma, J.; Couples, G.D. Locally informed Gravitational Search Algorithm. Knowl.-Based Syst. 2016, 104, 134–144.

24. Yu, M.; Zhang, Y.; Chen, K.; Zhang, D. Integration of process planning and scheduling using a hybrid GA/PSO algorithm. Int. J. Adv. Manuf. Technol. 2015, 78, 583–592.

25. Yan, X.Q.; Tian, M.N. Differential evolution with two-level adaptive mechanism for numerical optimization. Knowl.-Based Syst. 2022, 241, 108209.

26. Li, Y.Z.; Wang, S.H.; Yang, H.Y.; Chen, H.; Yang, B. Enhancing differential evolution algorithm using leader-adjoint populations. Inf. Sci. 2023, 622, 235–268.

27. Xu, H.Q.; Gu, S.; Fan, Y.C.; Li, X.S.; Zhao, Y.F.; Zhao, J.; Wang, J.J. A strategy learning framework for particle swarm optimization algorithm. Inf. Sci. 2023, 619, 126–152.

28. Zuo, X.; Li, X. A DE and PSO based hybrid algorithm for dynamic optimization problems. Soft Comput. 2014, 18, 1405–1424.

29. Zheng, Y.J.; Xu, X.L.; Ling, H.F.; Chen, S.Y. A hybrid fireworks optimization method with differential evolution operators. Neurocomputing 2015, 148, 75–82.

30. Awad, N.H.; Ali, M.Z.; Suganthan, P.N.; Reynolds, R.G. CADE: A hybridization of Cultural Algorithm and Differential Evolution for numerical optimization. Inf. Sci. 2017, 378, 215–241.

31. Lynn, N.; Suganthan, P.N. Ensemble particle swarm optimizer. Appl. Soft Comput. 2017, 55, 533–548.

32. Shehadeh, H.A. A hybrid sperm swarm optimization and gravitational search algorithm (HSSOGSA) for global optimization. Neural Comput. Appl. 2021, 33, 11739–11752.

33. Chen, H. Hierarchical Learning Water Cycle Algorithm. Appl. Soft Comput. 2020, 86, 105935.

34. Taib, H.; Bahreininejad, A. Data clustering using hybrid water cycle algorithm and a local pattern search method. Adv. Eng. Softw. 2021, 153, 102961.

35. Veeramani, C.; Senthil, S. An improved Evaporation Rate-Water Cycle Algorithm based Genetic Algorithm for solving generalized ratio problems. RAIRO-Oper. Res. 2020, 55, S461–S480.

36. Li, W.; Liang, P.; Sun, B.; Sun, Y.; Huang, Y. Reinforcement learning-based particle swarm optimization with neighborhood differential mutation strategy. Swarm Evol. Comput. 2023, 78, 101274.

37. Saryazdi, N.P. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

38. Eskandar, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm—A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110, 151–166.

39. Sadollah, A.; Eskandar, H.; Bahreininejad, A.; Kim, J.H. Water cycle algorithm with evaporation rate for solving constrained and unconstrained optimization problems. Appl. Soft Comput. 2015, 30, 58–71.

40. Heidari, A.A.; Abbaspour, R.A.; Jordehi, A.R. An efficient chaotic water cycle algorithm for optimization tasks. Neural Comput. Appl. 2017, 28, 57–85.

41. Mirjalili, S.; Gandomi, A.H. Chaotic gravitational constants for the gravitational search algorithm. Appl. Soft Comput. 2017, 53, 407–419.

42. Wang, H. A hierarchical gravitational search algorithm with an effective gravitational constant. Swarm Evol. Comput. 2019, 46, 118–139.

43. Lei, Z.; Gao, S.; Gupta, S.; Cheng, J.; Yang, G. An aggregative learning gravitational search algorithm with self-adaptive gravitational constants. Expert Syst. Appl. 2020, 152, 113396.

44. Qiao, Y. A simple water cycle algorithm with percolation operator for clustering analysis. Soft Comput. 2019, 23, 4081–4095.

45. Osaba, E.; Del, J.; Sadollah, A.; Nekane, B.M. A discrete water cycle algorithm for solving the symmetric and asymmetric traveling salesman problem. Appl. Soft Comput. 2018, 71, 277–290.

46. Kudkelwar, S. An application of evaporation-rate-based water cycle algorithm for coordination of over-current relays in microgrid. Sadhana Acad. Proc. Eng. Sci. 2020, 45, 237.

47. Wang, J.; Zhang, H.; Luo, H. Research on the construction of stock portfolios based on multiobjective water cycle algorithm and KMV algorithm. Appl. Soft Comput. 2022, 115, 108186.

48. Nasir, M.; Sadollah, A.; Choi, Y.H.; Kim, J.H. A comprehensive review on water cycle algorithm and its applications. Neural Comput. Appl. 2020, 32, 17433–17488.

49. Mittal, H.; Tripathi, A.; Pandey, A.C.; Pal, R. Gravitational search algorithm: A comprehensive analysis of recent variants. Multimed. Tools Appl. 2021, 80, 7581–7608.

50. Oshi, S.K. Chaos embedded opposition based learning for gravitational search algorithm. Appl. Intell. 2023, 53, 5567–5586.

51. Wang, Y.R.; Gao, S.C.; Yu, Y.; Cai, Z.H.; Wang, Z.Q. A gravitational search algorithm with hierarchy and distributed framework. Knowl.-Based Syst. 2021, 218, 106877.

52. Chen, C.H.; Wang, X.J.; Dong, H.C.; Wang, P. Surrogate-assisted hierarchical learning water cycle algorithm for high-dimensional expensive optimization. Swarm Evol. Comput. 2022, 75, 101169.

53. Ye, J.; Xie, L.; Wang, H. A water cycle algorithm based on quadratic interpolation for high-dimensional global optimization problems. Appl. Intell. 2023, 53, 2825–2849.

54. Price, K.V.; Storn, R.M.; Lampinen, J.A. Differential Evolution—A Practical Approach to Global Optimization. Nat. Comput. 2005, 141, 2.

55. Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem definitions and evaluation criteria for the CEC 2014 special session and competition on single objective real-parameter numerical optimization. In Computational Intelligence Laboratory, Zhengzhou University, Zhengzhou China and Technical Report; Nanyang Technological University: Singapore, 2013.

56. Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems; Jadavpur University: Kolkata, India; Nanyang Technological University: Singapore, 2011.

57. Sheng, W.; Swift, S.; Zhang, L.; Liu, X. A weighted sum validity function for clustering with a hybrid niching genetic algorithm. IEEE Trans. Syst. Man, Cybern. Part B (Cybern.) 2005, 35, 1156–1167.

58. Udit, H.; Swagatam, D.; Dipankar, M. A cluster-based differential evolution algorithm with external archive for optimization in dynamic environments. IEEE Trans. Cybern. 2013, 43, 881–897.

59. Zhang, J.Q.; Sanderson, A.C. JADE: Adaptive Differential Evolution With Optional External Archive. IEEE Trans. Evol. Comput. 2009, 13, 945–958.

60. Epitropakis, M.G.; Plagianakos, V.P.; Vrahatis, M.N. Balancing the exploration and exploitation capabilities of the differential evolution algorithm. In Proceedings of the 2008 IEEE Congress on Evolutionary Computation (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–6 June 2008.

61. Jiang, Z.Y.; Cai, Z.X.; Wang, Y. Hybrid Self-Adaptive Orthogonal Genetic Algorithm for Solving Global Optimization Problems. J. Softw. 2010, 21, 1296–1307.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).