Abstract

The stability problem of stochastic networks with proportional delays and unsupervised Hebbian-type learning algorithms is studied. Applying the Lyapunov functional method, a stochastic analysis technique and the Itô formula, we obtain sufficient conditions for global asymptotic stability. We also derive an estimate of the second moment. Two numerical examples illustrate the main results.

MSC:

34D23; 92B20

1. Introduction

In 2007, Gopalsamy [1] investigated a Hopfield-type neural network with an unsupervised Hebbian-type learning algorithm and constant delays:

where denotes the state of the ith neuron; represents the resetting feedback rate of neuron i; represents the synaptic vector; denotes the uptake of the input signal; ; denotes the synaptic weights; and are disposable scaling constants; is an external input signal vector; and is the neuronal activation function. Let

We rewrite (1) as

Adding random disturbance terms and proportional delays to system (2), we obtain the following stochastic network:

where , and represent stochastic perturbations, denotes an n-dimensional Brownian motion with the natural filtration on a complete probability space ; and is a proportional delay factor with . The other terms have the same meanings as in systems (1) and (2). The initial conditions of system (3) are given as follows:

where and are continuous and bounded functions on .

One way to extend the structure of Hopfield-type networks is to study higher-order or second-order interactions of neurons. Learning algorithms have been widely used in the neural network literature. Huang et al. [2] studied attractivity and stability problems for networks with Hebbian-type learning and variable delays. Gopalsamy [3] proposed a neural network model with crisp somatic activations and fuzzy synaptic modifications that incorporates a Hebbian-type unsupervised learning algorithm. Chu and Nguyen [4] discussed Hebbian learning rules and their application in neural networks. The authors of [5] investigated a type of fuzzy network with Hebbian-type unsupervised learning on time scales and obtained stability results via the Lyapunov functional method. For more results on high-order networks, see, e.g., [6,7,8,9,10].
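To make the Hebbian-type learning component concrete, the following Python sketch integrates one neuron's synaptic vector under a decay-plus-Hebbian rule of the assumed form $\dot m_l = -\alpha m_l + \beta f(x) s_l$, holding the postsynaptic activity $f(x)$ fixed. The exact rule in system (1) may differ; this form, the parameter values and the helper `hebbian_step` are illustrative assumptions. Under this assumption the scalar $S = m^\top s$ obeys $\dot S = -\alpha S + \beta |s|^2 f(x)$, which suggests how a vector learning equation can collapse to a scalar one, and both quantities settle at the predicted steady states.

```python
# Sketch: decay-plus-Hebbian learning for one neuron's synaptic vector m.
# ASSUMED rule (illustrative, not necessarily the exact form in system (1)):
#     dm_l/dt = -alpha * m_l + beta * y * s_l,   with y = f(x) held fixed.
import math


def hebbian_step(m, s, y, alpha, beta, dt):
    """One explicit Euler step of the assumed Hebbian rule (hypothetical helper)."""
    return [ml + dt * (-alpha * ml + beta * y * sl) for ml, sl in zip(m, s)]


def run(m0, s, y, alpha, beta, dt=1e-3, steps=20_000):
    m = list(m0)
    for _ in range(steps):
        m = hebbian_step(m, s, y, alpha, beta, dt)
    return m


s = [0.6, -0.8, 0.5]          # fixed input signal vector
y = math.tanh(0.7)            # fixed postsynaptic activity f(x)
alpha, beta = 1.5, 0.9
m = run([0.0, 0.0, 0.0], s, y, alpha, beta)

# Predicted steady states under the assumed rule:
m_star = [beta * y * sl / alpha for sl in s]            # m -> (beta*y/alpha) s
S = sum(ml * sl for ml, sl in zip(m, s))                # S = m^T s
S_star = beta * sum(sl * sl for sl in s) * y / alpha    # S -> beta*|s|^2*y/alpha
print(m, m_star)
print(S, S_star)
```

The explicit Euler step has the same fixed point as the continuous rule, so the simulated vector agrees with `m_star` up to the exponentially small transient.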

In the real world, network systems are inevitably affected by random factors, so studying the dynamic behavior of stochastic network systems has important theoretical and practical value. In recent decades, high-order stochastic systems and networks have received increasing attention. Liu, Wang and Liu [11] investigated the dynamic properties of stochastic high-order neural networks with Markovian jumping parameters and mixed delays using an LMI approach. Using fuzzy logic system approximation, Xing, Peng and Cao [12] studied fuzzy tracking control for high-order stochastic nonlinear systems. In [13], a stochastic nonlinear system with actuator failures was studied. More recently, further dynamic properties of high-order stochastic systems have been studied; see, e.g., [14,15,16,17].

Motivated by the above work, this paper studies a type of stochastic network with proportional delays and an unsupervised Hebbian-type learning algorithm. We study the dynamic behavior of system (3) using stochastic analysis techniques and the Lyapunov functional method. Because system (3) contains both random terms and proportional delays, constructing a suitable Lyapunov function is difficult. In this article, we take both of these terms fully into account and construct a new Lyapunov function from which the stability results follow conveniently. The main innovations of this paper are as follows:

- (1) There are few results on stochastic networks with proportional delays and unsupervised Hebbian-type learning algorithms. This paper extends both the results and the methods available for this class of systems.

- (2) The proportional delays and random terms are taken into account when constructing the Lyapunov function, which therefore differs from the corresponding ones in [4,5].

- (3) Unlike existing approaches, we combine inequality techniques, stochastic analysis techniques and the Itô formula to handle the proportional delays and the unsupervised Hebbian-type learning algorithm. In particular, we construct a new function and obtain stochastic stability results for system (3) using the stability theory of stochastic differential systems and inequality techniques. Furthermore, using stochastic analysis techniques and the Itô formula, we obtain an estimate of the second moment.

The rest of this paper is organized as follows. Section 2 presents some basic definitions and lemmas. In Section 3, we use the Lyapunov function method to establish global asymptotic stability and an estimate of the second moment for (3). Section 4 gives two examples that illustrate the main results. Finally, Section 5 gives some conclusions.

Throughout the paper, the following assumptions hold.

(H1)

There are constants such that

(H2)

There are constants such that

2. Preliminaries

Definition 1.

If satisfies

then is an equilibrium point of (2). If and , then systems (3) and (2) have the same equilibrium point .

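Let $C^{2,1}(\mathbb{R}^n\times\mathbb{R}_+;\mathbb{R}_+)$ denote the family of all non-negative functions $V(x,t)$ on $\mathbb{R}^n\times\mathbb{R}_+$, where $\mathbb{R}_+=[0,\infty)$, that are continuously differentiable once in $t$ and twice in $x$.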

Definition 2

([18]). Consider the stochastic differential system

Denote the operator as

where
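For reference, the standard form of this diffusion operator in [18], for a generic system $dx(t) = f(x(t),t)\,dt + g(x(t),t)\,dB(t)$ and $V \in C^{2,1}$, is recalled below together with the quadratic case, which is the usual starting point for Lyapunov arguments of the kind used in Section 3 (the particular $V$ used there may differ). Here $|g|$ denotes the trace (Frobenius) norm.

```latex
\mathcal{L}V(x,t) = V_t(x,t) + V_x(x,t)\,f(x,t)
  + \tfrac{1}{2}\,\operatorname{trace}\!\left[g^{\top}(x,t)\,V_{xx}(x,t)\,g(x,t)\right]

% Quadratic example: V(x) = x^{\top}x, so V_t = 0, V_x = 2x^{\top}, V_{xx} = 2I:
\mathcal{L}V(x,t) = 2x^{\top}f(x,t) + \operatorname{trace}\!\left[g^{\top}(x,t)\,g(x,t)\right]
                  = 2x^{\top}f(x,t) + \lvert g(x,t)\rvert^{2}
```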

Definition 3

([19]). Let an n-dimensional open region containing the origin be defined by . If there is a positive definite function such that , then the function is said to have an infinitesimal upper bound.

Definition 4

([19]). If is positive definite and for , then the function is called an infinite positive definite function.

Definition 5.

If is a solution of system (3) and is any solution of system (3) satisfying

then is called stochastically globally asymptotically stable.

Lemma 1

([19]). If is negative definite, where , then the zero solution of system (5) is globally asymptotically stable.
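As a hedged illustration of how negative definiteness of $\mathcal{L}V$ can be checked in practice, consider the hypothetical linear test system $dx = Ax\,dt + Bx\,dB(t)$ with a scalar Brownian motion and $V(x) = x^\top x$. Then $\mathcal{L}V(x) = x^\top (A + A^\top + B^\top B)x$, so $\mathcal{L}V$ is negative definite exactly when $-(A + A^\top + B^\top B)$ is positive definite, which the sketch tests with a Cholesky factorization. The matrices and function names are illustrative assumptions, not part of system (3).

```python
# Hedged sketch: check negative definiteness of LV for V(x) = x^T x and the
# HYPOTHETICAL linear SDE dx = A x dt + B x dB(t) (scalar Brownian motion).
# For this system LV(x) = 2 x^T A x + |B x|^2 = x^T (A + A^T + B^T B) x.
import math


def generator_matrix(A, B):
    """Return M = A + A^T + B^T B, so that LV(x) = x^T M x."""
    n = len(A)
    return [[A[i][j] + A[j][i] + sum(B[k][i] * B[k][j] for k in range(n))
             for j in range(n)] for i in range(n)]


def is_positive_definite(S):
    """Cholesky attempt: succeeds with positive pivots iff symmetric S is PD."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            r = S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if r <= 0.0:
                    return False
                L[i][i] = math.sqrt(r)
            else:
                L[i][j] = r / L[j][j]
    return True


def lv_negative_definite(A, B):
    M = generator_matrix(A, B)
    return is_positive_definite([[-v for v in row] for row in M])


def lv(x, A, B):
    """Evaluate LV(x) directly as 2 x^T A x + |B x|^2 (for cross-checking)."""
    n = len(x)
    Ax = [sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
    Bx = [sum(B[i][j] * x[j] for j in range(n)) for i in range(n)]
    return 2 * sum(xi * ai for xi, ai in zip(x, Ax)) + sum(b * b for b in Bx)


A_stable = [[-2.0, 1.0], [0.0, -3.0]]
A_unstable = [[0.1, 0.0], [0.0, -1.0]]
B = [[0.5, 0.0], [0.0, 0.5]]
print(lv_negative_definite(A_stable, B))    # True
print(lv_negative_definite(A_unstable, B))  # False
```

For `A_stable`, $-(A + A^\top + B^\top B) = \begin{pmatrix} 3.75 & -1 \\ -1 & 5.75 \end{pmatrix}$ is positive definite; for `A_unstable` the (1,1) entry of $A + A^\top + B^\top B$ is $0.45 > 0$, so the test fails, as expected.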

A detailed proof of the existence of solutions for system (3) can be found in [1].

Theorem 1

([1]). Suppose that assumptions (H1) and (H2) hold and

Then, system (2) has a unique equilibrium point.

From Definition 1, the conditions of Theorem 1 also guarantee the existence of a unique equilibrium point for system (3).
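Theorem 1 guarantees a unique equilibrium but does not say how to compute it. One common numerical approach, sketched below under stated assumptions, is Banach fixed-point iteration for a generic Hopfield-type equilibrium equation $a_i x_i = \sum_j w_{ij} f(x_j) + I_i$ with an $L$-Lipschitz activation. If $\rho = \max_i \sum_j |w_{ij}| L / a_i < 1$, the map $x \mapsto \operatorname{diag}(a)^{-1}(W f(x) + I)$ is a contraction in the max-norm, so the iteration converges to the unique fixed point. The weights, inputs and the $\tanh$ activation are hypothetical, and $\rho < 1$ is not claimed to be the condition of Theorem 1.

```python
# Hedged sketch: Banach fixed-point iteration for a HYPOTHETICAL Hopfield-type
# equilibrium equation  a_i x_i = sum_j w_ij f(x_j) + I_i,  f = tanh (Lipschitz 1).
import math


def equilibrium(a, W, I, f=math.tanh, lipschitz=1.0, tol=1e-12, max_iter=10_000):
    n = len(a)
    # Contraction factor of x -> diag(a)^{-1} (W f(x) + I) in the max-norm.
    rho = max(sum(abs(W[i][j]) * lipschitz for j in range(n)) / a[i] for i in range(n))
    if rho >= 1.0:
        raise ValueError(f"contraction factor {rho:.3f} >= 1; no convergence guarantee")
    x = [0.0] * n
    for _ in range(max_iter):
        fx = [f(v) for v in x]
        x_new = [(sum(W[i][j] * fx[j] for j in range(n)) + I[i]) / a[i] for i in range(n)]
        if max(abs(u - v) for u, v in zip(x_new, x)) < tol:
            return x_new
        x = x_new
    raise RuntimeError("fixed-point iteration did not converge")


a = [3.0, 4.0]
W = [[1.0, -0.5], [0.8, 1.2]]
I = [0.5, -1.0]
x_star = equilibrium(a, W, I)   # here rho = 0.5
residual = [a[i] * x_star[i] - sum(W[i][j] * math.tanh(x_star[j]) for j in range(2)) - I[i]
            for i in range(2)]
print(x_star, residual)
```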

3. Stability of Equilibrium

Theorem 2.

Suppose that all conditions of Theorem 1 are satisfied. Then, system (3) has an equilibrium point which is stochastically globally asymptotically stable, provided that

and

Proof.

Due to Theorem 1, system (3) has a unique equilibrium point . Let and . By (3), we have

where

Let

and

By (9), we get

and

where is an identity matrix. It follows from (10), (11) and Definition 2 that

Using the inequality and (12), we get

It follows from (6), (7) and (13) that . Therefore, is negative definite. It is easy to see that is positive definite. We claim that has an infinitesimal upper bound. In fact, in view of assumption (H), we get

By Definition 3, has an infinitesimal upper bound. It follows from (9) that

Thus, for . Therefore, by Definition 4, is an infinite positive definite function with respect to the second variable . By Lemma 1, the equilibrium point of (3) is stochastically globally asymptotically stable. □

We further study the properties of solutions of system (3) and discuss the estimation of the second moment.
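Second-moment estimates of this kind can be sanity-checked by simulation. The sketch below uses the Euler–Maruyama scheme with Monte Carlo averaging for the hypothetical scalar test equation $dx = -a x\,dt + \sigma x\,dB(t)$. By the Itô formula, $d(x^2) = (-2a+\sigma^2)x^2\,dt + 2\sigma x^2\,dB$, so the exact second moment is $E|x(t)|^2 = |x_0|^2 e^{(-2a+\sigma^2)t}$ and the estimate can be compared with the truth. It does not reproduce the bound of Theorem 3; the test equation, parameter values and function name are assumptions.

```python
# Hedged sketch: Monte Carlo estimate of E|x(T)|^2 via Euler-Maruyama for the
# HYPOTHETICAL test SDE dx = -a x dt + sigma x dB(t) (not system (3)).
import math
import random


def em_second_moment(x0, a, sigma, T, n_steps, n_paths, seed=0):
    rng = random.Random(seed)
    dt = T / n_steps
    sqrt_dt = math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        x = x0
        for _ in range(n_steps):
            dB = rng.gauss(0.0, sqrt_dt)          # Brownian increment ~ N(0, dt)
            x += -a * x * dt + sigma * x * dB     # Euler-Maruyama step
        total += x * x
    return total / n_paths


x0, a, sigma, T = 1.0, 1.0, 0.5, 1.0
estimate = em_second_moment(x0, a, sigma, T, n_steps=100, n_paths=20_000)
exact = x0 ** 2 * math.exp((-2 * a + sigma ** 2) * T)   # exp(-1.75), about 0.1738
print(estimate, exact)
```

The remaining gap between `estimate` and `exact` combines Monte Carlo noise (about 1% here) with a small time-discretization bias of the scheme.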

Theorem 3.

Suppose that (H1) and (H2) are satisfied and that there exists a positive constant such that

Then, for any solution of system (3) that satisfies initial condition (4), we obtain that

where

Proof.

By (3), we have

Multiplying both sides of (16) by and using (15) yields

Integrating both sides of (17) over , we get

Thus,

Using the Schwarz inequality and the properties of the Itô integral, we get

Using (18) and (19) yields

On the other hand, from the second equation of (3), we have

Integrating both sides of (21) over , we get

Arguing as above and using (22), we derive

From (20) and (23), we derive

The proof of Theorem 3 is completed. □
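The step that combines the Schwarz inequality with the properties of the Itô integral presumably rests on the Itô isometry, $E\big(\int_0^T h(s)\,dB(s)\big)^2 = \int_0^T h^2(s)\,ds$ for deterministic (more generally, adapted and square-integrable) $h$. The sketch below checks it numerically for the illustrative kernel $h(s) = \sigma e^{-a(T-s)}$, the kind of integrand produced by multiplying by an exponential factor and integrating. The kernel and parameter values are assumptions. For the left-point sums used here the identity holds exactly in expectation, $E\big(\sum_k h(s_k)\Delta B_k\big)^2 = \sum_k h(s_k)^2\Delta t$, which in turn approximates $\sigma^2(1-e^{-2aT})/(2a)$.

```python
# Hedged sketch: numerical check of the Ito isometry for an ILLUSTRATIVE
# deterministic integrand h(s) = sigma * exp(-a (T - s)) on [0, T].
import math
import random


def ito_integral_samples(h, T, n_steps, n_paths, seed=1):
    """Left-point (Ito) sums  sum_k h(s_k) * dB_k  over [0, T], one per path."""
    rng = random.Random(seed)
    dt = T / n_steps
    sqrt_dt = math.sqrt(dt)
    hs = [h(k * dt) for k in range(n_steps)]     # integrand at left endpoints s_k
    samples = [sum(hk * rng.gauss(0.0, sqrt_dt) for hk in hs) for _ in range(n_paths)]
    discrete_isometry = sum(hk * hk for hk in hs) * dt   # exact E[(sum)^2]
    return samples, discrete_isometry


a, sigma, T = 1.0, 1.0, 1.0


def h(s):
    return sigma * math.exp(-a * (T - s))


samples, discrete_isometry = ito_integral_samples(h, T, n_steps=100, n_paths=20_000)
mc_second_moment = sum(v * v for v in samples) / len(samples)
continuous_isometry = sigma ** 2 * (1 - math.exp(-2 * a * T)) / (2 * a)
print(mc_second_moment, discrete_isometry, continuous_isometry)
```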

4. Examples

Example 1.

Consider the following Hopfield-type stochastic network with an unsupervised Hebbian-type learning algorithm and proportional delays:

where

After a simple calculation, we have

and

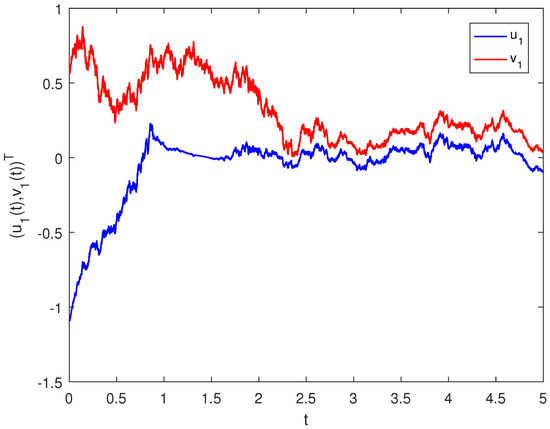

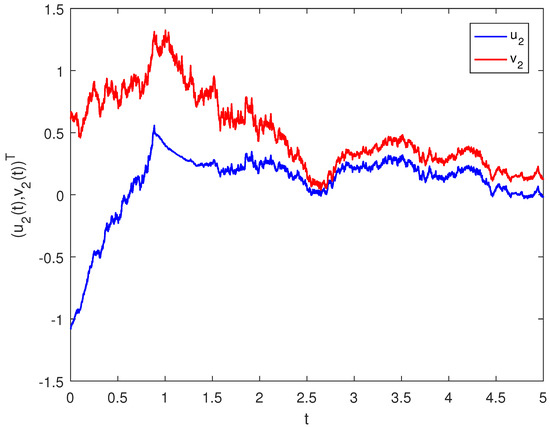

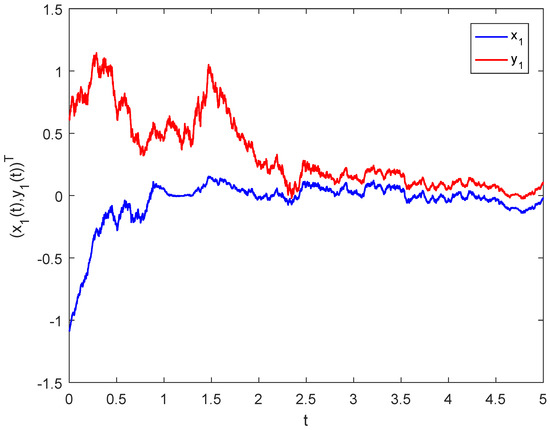

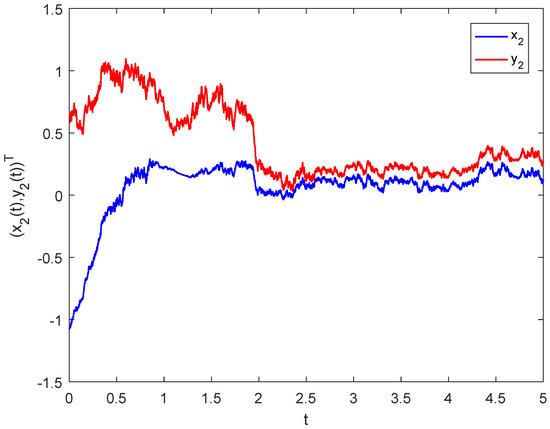

Thus, all conditions of Theorems 1 and 2 are satisfied, and system (24) has an equilibrium point , which is stochastically globally asymptotically stable. Figures 1 and 2 show that the solution of (24) approaches the equilibrium at .
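The numerical scheme behind Figures 1 and 2 is not specified. One common way to simulate an equation with a proportional delay, sketched below, is the Euler–Maruyama scheme on a uniform grid in which the delayed state $x(qt_n)$ is obtained by linear interpolation of already computed values; since $0<q<1$, $qt_n$ never exceeds $t_n$. The scalar test system $dx = [-a x(t) + b\tanh(x(qt))]\,dt + \sigma x(t)\,dB(t)$, its parameters and the start time $t_0 = 0$ (so only $x(0)$ is needed as initial data) are hypothetical choices, not system (24).

```python
# Hedged sketch: Euler-Maruyama for a HYPOTHETICAL scalar SDE with a
# proportional delay q in (0, 1):
#     dx(t) = [-a x(t) + b tanh(x(q t))] dt + sigma x(t) dB(t),  t >= 0.
# Its equilibrium is x* = 0, and the noise term vanishes there.
import math
import random


def simulate_proportional_delay(x0, a, b, sigma, q, T, h, seed=0):
    rng = random.Random(seed)
    n_steps = int(round(T / h))
    x = [x0]                                  # x[k] approximates x(k h)
    for n in range(n_steps):
        # Delayed argument q*t_n = (q*n) h lies in [0, t_n]; interpolate
        # linearly between grid values that are already known.
        pos = q * n
        k = int(pos)
        frac = pos - k
        x_delay = x[k] + frac * (x[k + 1] - x[k]) if k < n else x[k]
        drift = -a * x[n] + b * math.tanh(x_delay)
        dB = rng.gauss(0.0, math.sqrt(h))     # Brownian increment ~ N(0, h)
        x.append(x[n] + drift * h + sigma * x[n] * dB)
    return x


path = simulate_proportional_delay(x0=1.0, a=2.0, b=0.5, sigma=0.2, q=0.5, T=20.0, h=0.01)
print(len(path), path[-1])
```

Because the delayed state is read from the solution itself rather than from a fixed-length history buffer, the memory grows with $t$; this is the characteristic cost of proportional (unbounded) delays compared with constant ones.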

Figure 1.

Simulation results for the solution in system (24).

Figure 2.

Simulation results for the solution in system (24).

Example 2.

To further illustrate Theorems 1 and 2, consider the following instance of system (3):

where

From the expressions of and , we obtain

Thus, assumptions (H1) and (H2) hold. After a simple calculation, we have

and

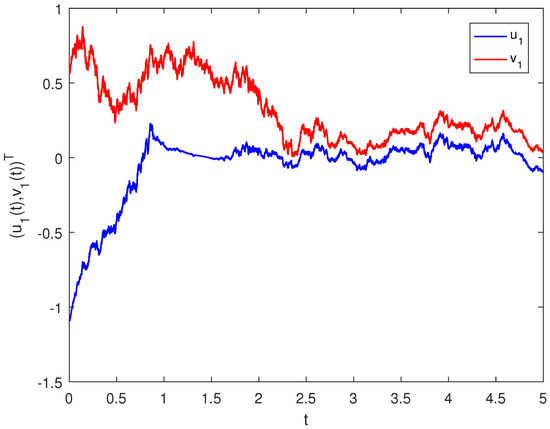

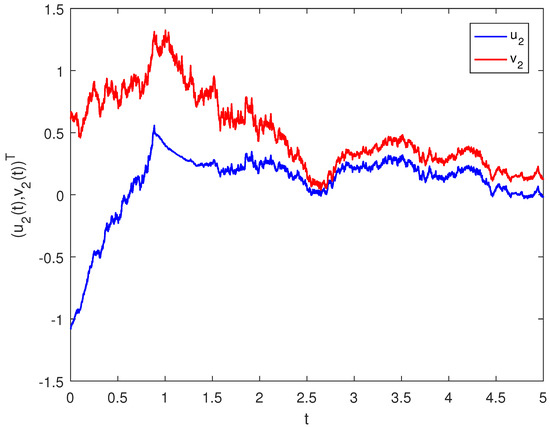

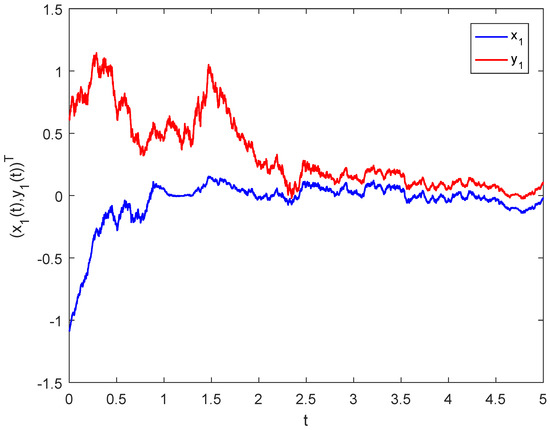

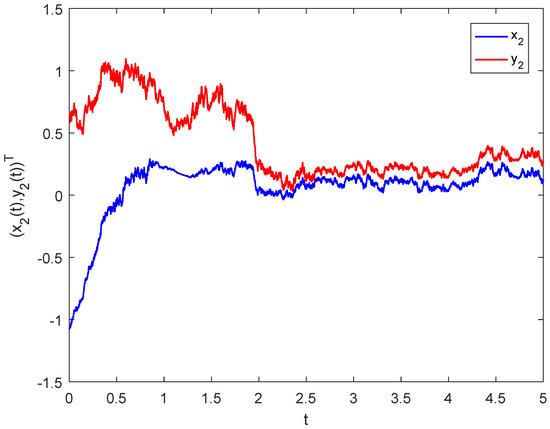

Therefore, all conditions of Theorems 1 and 2 are satisfied, and system (25) has an equilibrium point , which is stochastically globally asymptotically stable. Figures 3 and 4 illustrate this behavior.

Figure 3.

Simulation results for the solution in system (25).

Figure 4.

Simulation results for the solution in system (25).

5. Conclusions

Using the Lyapunov functional method and stochastic analysis techniques, we derive sufficient conditions that ensure the global asymptotic stability of system (3). We also give an estimate of the second moment for the solutions of system (3). Two numerical examples illustrate the obtained results.

Several extensions of system (3) merit further study, such as network models with impulsive effects, networks on time scales, and networks with fuzzy terms.

Author Contributions

Methodology, X.W. and X.C.; Writing—original draft, F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No. 11971197).

Data Availability Statement

Data are contained within the article.

Acknowledgments

The authors would like to thank the editor and the referees for their valuable comments and suggestions that improved the quality of our paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gopalsamy, K. Learning dynamics in second order networks. Nonlinear Anal. Real World Appl. 2007, 8, 688–698.

- Huang, Z.; Feng, C.; Mohamad, S.; Ye, J. Multistable learning dynamics in second-order neural networks with time-varying delays. Int. J. Comput. Math. 2011, 88, 1327–1346.

- Gopalsamy, K. Learning dynamics and stability in networks with fuzzy synapses. Dyn. Syst. Appl. 2006, 15, 657–671.

- Chu, D.; Nguyen, H. Constraints on Hebbian and STDP learned weights of a spiking neuron. Neural Netw. 2021, 135, 192–200.

- Chen, J.; Huang, Z.; Cai, J. Global Exponential Stability of Learning-Based Fuzzy Networks on Time Scales. Abstr. Appl. Anal. 2015, 2015, 83519.

- Huang, Z.; Raffoul, Y.; Cheng, C. Scale-limited activating sets and multiperiodicity for threshold-linear networks on time scales. IEEE Trans. Cybern. 2014, 44, 488–499.

- Cao, J.; Liang, J.; Lam, J. Exponential stability of high order bidirectional associative memory neural networks with time delays. Phys. D 2004, 199, 425–436.

- Kosmatopoulos, E.; Polycarpou, M.; Christodoulou, M.; Ioannou, P. High order neural network structures for identification of dynamical systems. IEEE Trans. Neural Netw. 1995, 6, 422–431.

- Zhang, Z.; Liu, K. Existence and global exponential stability of a periodic solution to interval general bidirectional associative memory (BAM) neural networks with multiple delays on time scales. Neural Netw. 2011, 24, 427–439.

- Kamp, Y.; Hasler, M. Recursive Neural Networks for Associative Memory; Wiley: New York, NY, USA, 1990.

- Liu, Y.; Wang, Z.; Liu, X. An LMI approach to stability analysis of stochastic high-order Markovian jumping neural networks with mixed time delays. Nonlinear Anal. Hybrid Syst. 2008, 2, 110–120.

- Xing, J.; Peng, C.; Cao, Z. Event-triggered adaptive fuzzy tracking control for high-order stochastic nonlinear systems. J. Frankl. Inst. 2022, 359, 6893–6914.

- Wang, J.; Liu, Z.; Chen, C.; Zhang, Y. Event-triggered fuzzy adaptive compensation control for uncertain stochastic nonlinear systems with given transient specification and actuator failures. Fuzzy Sets Syst. 2019, 365, 1–21.

- Yang, G.; Li, X.; Ding, X. Numerical investigation of stochastic canonical Hamiltonian systems by high order stochastic partitioned Runge–Kutta methods. Commun. Nonlinear Sci. Numer. Simul. 2021, 94, 105538.

- Wang, H.; Zhu, Q. Output-feedback stabilization of a class of stochastic high-order nonlinear systems with stochastic inverse dynamics and multidelay. Int. J. Robust Nonlinear Control. 2021, 13, 5580–5601.

- Xie, X.; Duan, N.; Yu, X. State-Feedback Control of High-Order Stochastic Nonlinear Systems with SiISS Inverse Dynamics. IEEE Trans. Autom. Control. 2011, 56, 1921–1936.

- Fei, F.; Jenny, P. A high-order unified stochastic particle method based on the Bhatnagar-Gross-Krook model for multi-scale gas flows. Comput. Phys. Commun. 2022, 274, 108303.

- Mao, X. Stochastic Differential Equations and Applications; Horwood: Buckinghamshire, UK, 1997.

- Forti, M.; Tesi, A. New conditions for global stability of neural networks with application to linear and quadratic programming problems. IEEE Trans. Circuits Syst. 1995, 42, 354–366.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).