Abstract

This paper provides a real application of a popular swarm-intelligence optimisation method. The aim is to analyse the efficiency of various settings of the marine predator algorithm (MPA). Four crucial numerical parameters of the MPA are statistically analysed to propose the most efficient setting for solving engineering problems: the population size N, the particle velocity parameter P, the Lévy flight parameter β, and the fish aggregating device (FAD) probability. In total, 193 different settings, including fixed values and dynamic changes of the MPA parameters, are experimentally compared on 13 engineering problems. Standard statistical approaches are employed to highlight significant differences among the MPA settings. The setting of two MPA parameters (P and FADs) significantly influences MPA performance. Three newly proposed MPA settings significantly outperform the original variant. The best results are provided by the MPA variant with a dynamic linear change of P from 0.5 to 0. This parameter influences the velocity of prey and predator individuals in all three stages of the MPA search process. The results for decreasing values of P show that reducing the velocity of individuals during the search provides good performance. Further, lower efficiency of the MPA was detected for higher FAD values. This means that less frequent use of fish aggregating devices (FADs) can increase the solvability of engineering problems. Regarding population size, lower values of N provided significantly better results than higher values.

Keywords:

marine predator algorithm; swarm intelligence algorithms; engineering problems; optimisation; parameter tuning; experimental comparison

MSC:

62-08; 62-11; 68T05; 68T20; 65K10

1. Introduction

In many areas of human life, an optimal system setting is required to significantly decrease time and cost demands. A popular area of real-world optimisation is represented by engineering tasks, such as product design, optimal shape design, resource scheduling, space mapping, etc. [1]. Better solutions to engineering problems have an impact on the economic and ecological aspects of resource use. There are many approaches to solving engineering optimisation problems, and the group of efficient optimisers inspired by natural systems and the behaviour of their members (hunting, collecting food, reproducing, building nests, etc.) is called swarm intelligence (SI) algorithms. These methods model both the behaviour of individual particles and the relationships between the particles [2].

The history of SI algorithms began in 1995, when the particle swarm optimisation (PSO) algorithm was proposed [3]. During almost three decades of development, many SI algorithms have been designed. Besides PSO, popular SI algorithms include the grey wolf optimiser [4], artificial bee colony [5], cuckoo search [6], firefly algorithm [7], self-organising migrating algorithm [8], and many others. The efficiency of selected SI optimisation methods has been analysed and compared in many experimental studies [9,10,11,12]. This paper analyses the performance of a recently proposed SI metaheuristic inspired by the widespread foraging strategy of marine predators, called the marine predator algorithm (MPA).

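Before studying the efficiency of MPA, a definition of the global optimisation (GO) problem is provided. The GO problem is represented by the objective (goal) function $f(\mathbf{x})$, $\mathbf{x} = (x_1, x_2, \dots, x_D) \in \mathbb{R}^D$, which is defined on the D-dimensional search space $\Omega = \prod_{j=1}^{D} [a_j, b_j]$, $a_j < b_j$, bounded by the lower limits $a_j$ and the upper limits $b_j$. Then, the point $\mathbf{x}^*$ is called the global minimum of the problem if $f(\mathbf{x}^*) \le f(\mathbf{x})$ for all $\mathbf{x} \in \Omega$.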

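Regarding real-world engineering problems, the search space is constrained by equality (h) or inequality (g) limits given by:

$$g_i(\mathbf{x}) \le 0, \quad i = 1, \dots, n_g, \qquad h_j(\mathbf{x}) = 0, \quad j = 1, \dots, n_h.$$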

These constraints define a feasible area in which the solutions should be located. A solution is feasible only if it satisfies all the constraints. Notice that, for equality constraints, a positive tolerance area is taken as the acceptable region, i.e., $|h_j(\mathbf{x})| - \varepsilon \le 0$ with a small $\varepsilon > 0$. Finally, the solutions are evaluated by the objective function f and penalised when they leave the feasible area.
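To make the penalisation concrete, the following sketch turns a constrained problem into a single penalised objective. The paper does not state the penalty scheme, so the static penalty `f(x) + rho * violation(x)`, the weight `rho`, the tolerance `eps`, the helper names, and the toy problem are all assumptions chosen for illustration.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Constraint = Callable[[Vector], float]


def violation(x: Vector, ineq: List[Constraint], eq: List[Constraint],
              eps: float = 1e-4) -> float:
    """Sum of constraint violations; 0.0 means x is feasible.

    Inequalities hold when g(x) <= 0, equalities when |h(x)| - eps <= 0.
    """
    total = sum(max(0.0, g(x)) for g in ineq)
    total += sum(max(0.0, abs(h(x)) - eps) for h in eq)
    return total


def penalised(f: Callable[[Vector], float], ineq: List[Constraint],
              eq: List[Constraint], rho: float = 1e6,
              eps: float = 1e-4) -> Callable[[Vector], float]:
    """Hypothetical static penalty: f(x) + rho * violation(x)."""
    def fp(x: Vector) -> float:
        return f(x) + rho * violation(x, ineq, eq, eps)
    return fp


# Toy problem (illustrative only): minimise x0 + x1
# subject to x0 * x1 >= 1 (inequality) and x0 - x1 = 0 (equality).
def f(x: Vector) -> float:
    return x[0] + x[1]


def g1(x: Vector) -> float:
    return 1.0 - x[0] * x[1]      # g1(x) <= 0  <=>  x0 * x1 >= 1


def h1(x: Vector) -> float:
    return x[0] - x[1]            # h1(x) = 0


fp = penalised(f, [g1], [h1])
print(fp([1.0, 1.0]))   # feasible point, no penalty added
print(fp([0.5, 0.5]))   # g1 violated by 0.75, heavily penalised
```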

This paper aims to study the control parameters of the MPA to achieve good performance on real-world engineering problems. The MPA was selected for this experiment because it achieved very promising results in preliminary experiments compared with well-known swarm intelligence algorithms. The original MPA uses three numerical control parameters, and its authors recommended a particular combination of their values. Here, 193 different settings of four MPA parameters (including the population size N) are implemented, evaluated, and compared statistically to draw conclusions. The achieved results provide researchers with insight into the efficiency of the MPA settings when solving engineering problems. Moreover, the newly proposed dynamic changes of the MPA parameters outperform the original MPA settings.

The rest of the paper is organised as follows. A brief description of the marine predator algorithm and its control parameters is given in Section 2 and Section 3. Several enhanced MPA variants and their applications are described in Section 2.2. The experimental settings, engineering problems, and results of the comparison are discussed in Section 4 and Section 5. Conclusions and recommendations are given in Section 6.

2. MPA: Marine Predator Algorithm

In 2020, Faramarzi et al. introduced a new nature-inspired swarm-intelligence metaheuristic, called the marine predator algorithm (MPA), which uses Lévy flight and Brownian motion to model the movements of predators in the ocean [13]. The main idea of the MPA is to simulate the typical foraging pattern of underwater predators (e.g., tuna, sunfish, and sharks). Many researchers have found that the moving and food-searching strategy of marine predators is simulated most accurately by Lévy flight, which consists mostly of many small steps. Moreover, Brownian motion enables ‘smooth’ walking in some stages of the particles’ search process. Brownian motion samples random steps from the Gaussian distribution. The Lévy flight provides the steps of a random walk drawn from the Lévy distribution, which is characterised by the flight length and a power-law exponent:

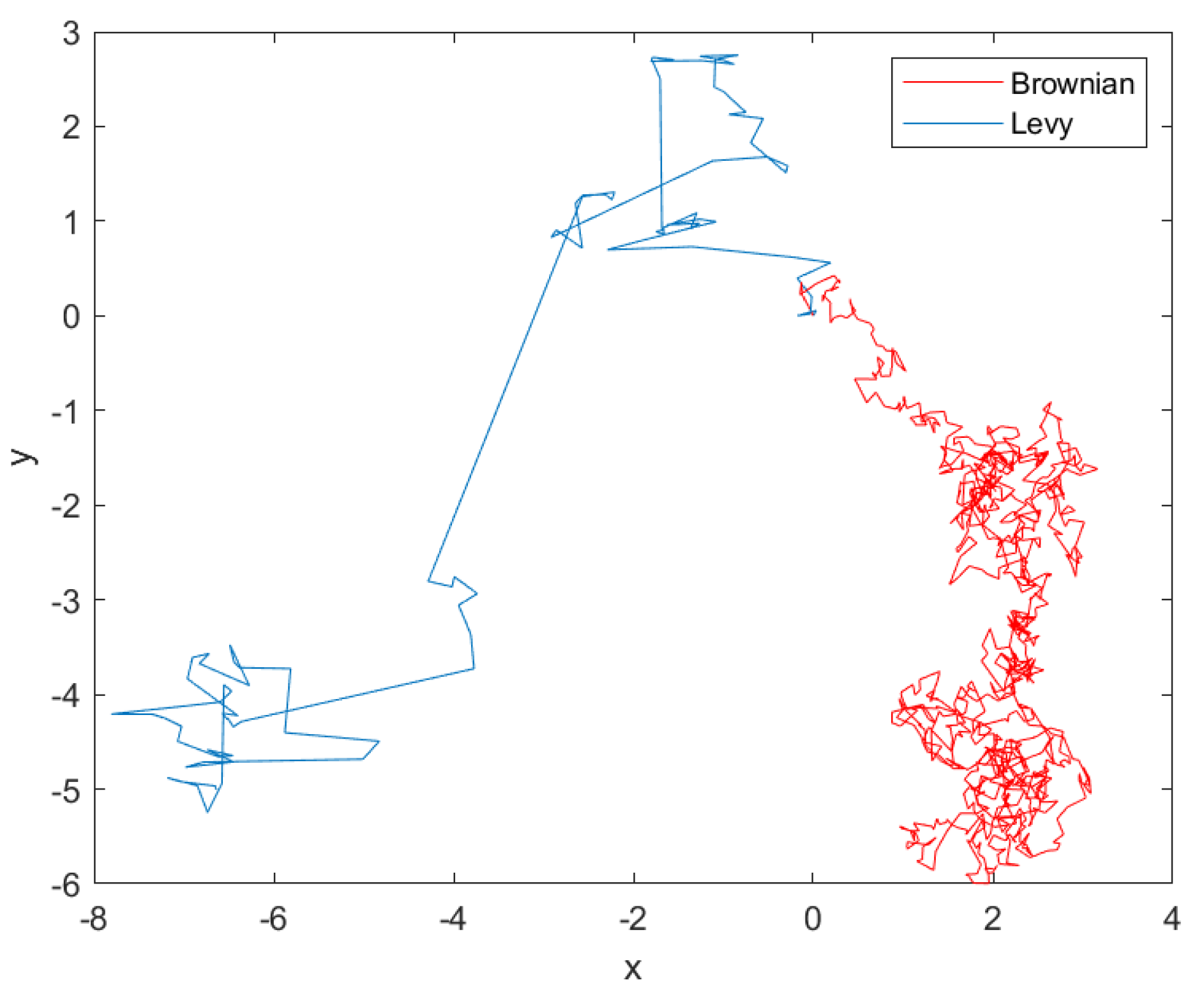

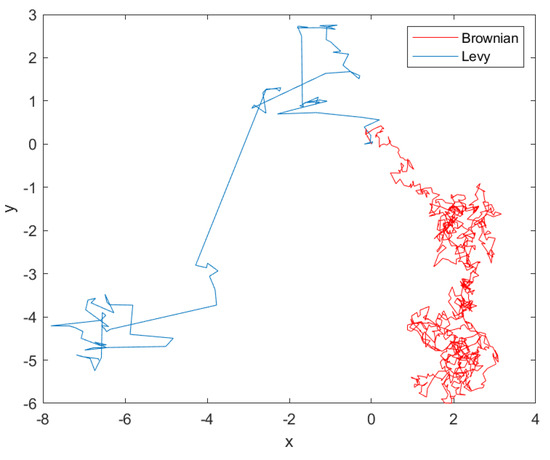

Brownian motion and Lévy flight produce a random movement of elements in the predefined search space. The main difference between the two techniques is that Brownian motion produces steps of similar length, whereas Lévy flight allows occasional much longer steps (see Figure 1).

Figure 1.

Trajectory of steps for Brownian motion and Lévy flight.

Both methods started from the same position and took steps in different directions. The step size of Brownian motion is more consistent (rather ‘smooth’), whereas Lévy flight produces steps with various sizes.
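The difference between the two step distributions can be reproduced numerically. The sketch below assumes Mantegna's algorithm for Lévy-stable steps with index `beta` (valid for 0 < beta < 2; this generator is a common choice in MPA implementations but is an assumption here) and standard normal steps for Brownian motion; the seed and sample size are arbitrary.

```python
import math
import random


def mantegna_sigma(beta: float) -> float:
    """Scale of the numerator in Mantegna's algorithm for Levy index beta."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta: float, rng: random.Random) -> float:
    """One heavy-tailed step u / |v|**(1/beta), u ~ N(0, sigma^2), v ~ N(0, 1)."""
    u = rng.gauss(0.0, mantegna_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def brownian_step(rng: random.Random) -> float:
    """One Brownian step drawn from the standard normal distribution."""
    return rng.gauss(0.0, 1.0)


rng = random.Random(1)
n = 10_000
levy = [abs(levy_step(1.5, rng)) for _ in range(n)]
brown = [abs(brownian_step(rng)) for _ in range(n)]
print(f"median |Levy| = {sorted(levy)[n // 2]:.2f}, max |Levy| = {max(levy):.1f}")
print(f"median |Brownian| = {sorted(brown)[n // 2]:.2f}, max |Brownian| = {max(brown):.1f}")
```

With `beta = 1.5`, the median step lengths of the two samples are of the same order, while the longest Lévy step is typically much larger than the longest Brownian step, which is the behaviour illustrated in Figure 1.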

2.1. Three Phases of MPA

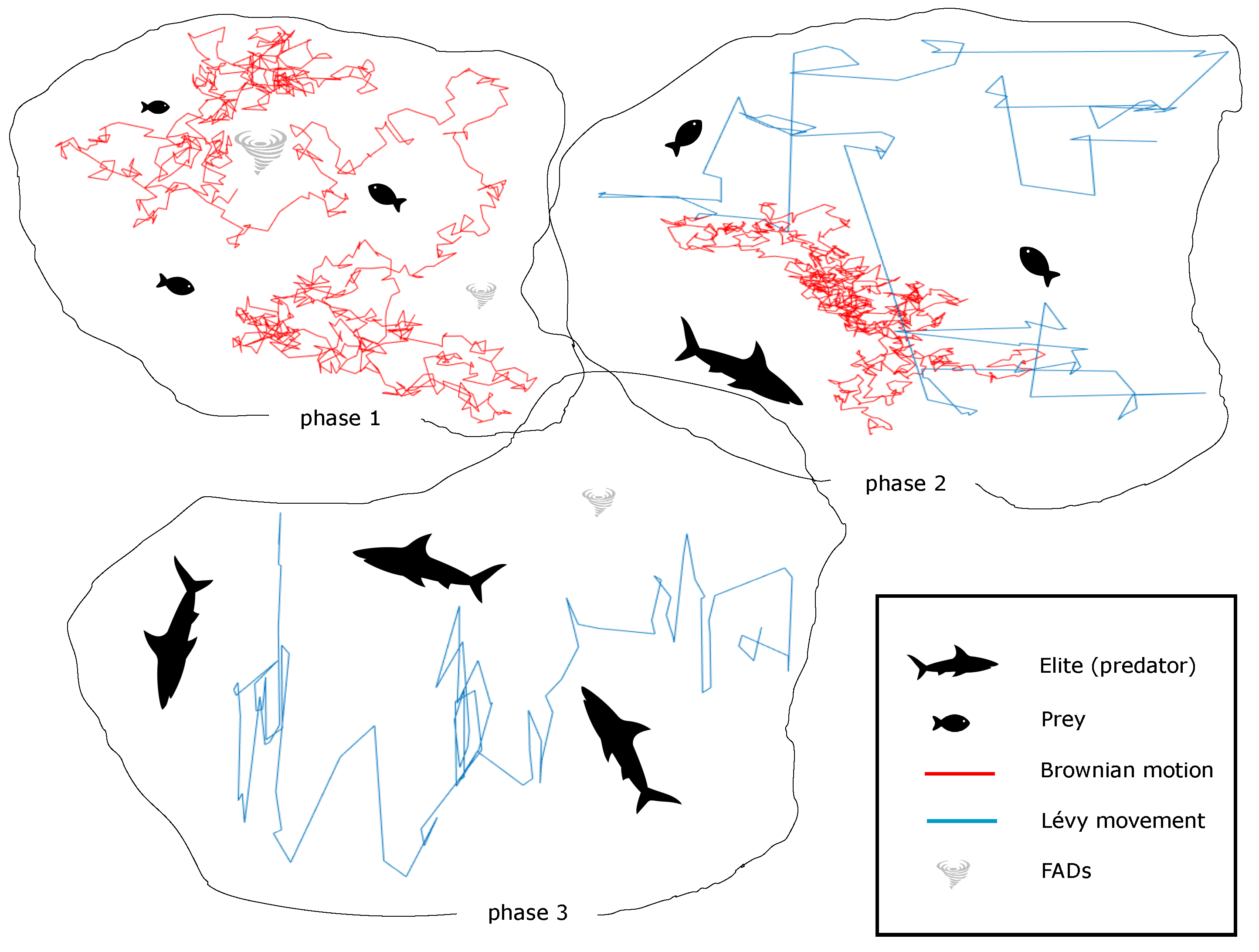

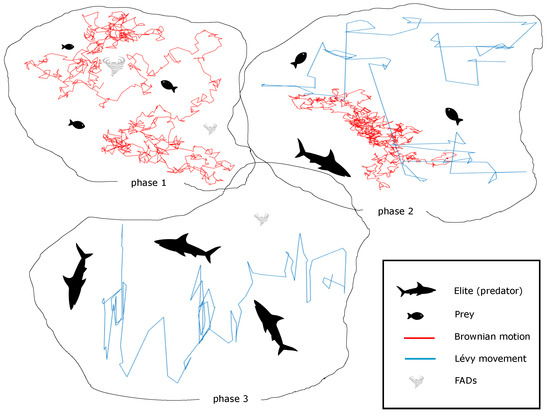

The MPA belongs to the nature-inspired, swarm-intelligence, population-based optimisation algorithms. Like most population-based algorithms, MPA starts with a population initialised randomly in the search space $\Omega$. Besides the population (swarm) of predators, the elite predator position ($\mathbf{x}_{\mathrm{best}}$) is stored for use in the moving process. After initialisation, the process of updating the predators’ positions starts. This process consists of three phases of predator and prey movement, as depicted in Figure 2.

Figure 2.

Three phases of the marine predator algorithm (MPA).

2.1.1. First Phase

The first phase starts the predators’ moving process. In this phase, the highest foraging velocity (speed) is achieved. The idea is to simulate exploration, in which areas of potentially good food sources are detected. This phase takes place in the first third of the optimisation process, i.e., while the number of function evaluations (FES) is lower than one third of the total number of function evaluations allocated for the run (maxFES), $\text{FES} < \frac{1}{3}\,\text{maxFES}$. The new position of the particle is then computed from its current position ($\mathbf{x}_i$) and a step $\mathbf{s}_i$:

$$\mathbf{s}_i = \mathbf{R}_B \otimes \left(\mathbf{x}_{\mathrm{best}} - \mathbf{R}_B \otimes \mathbf{x}_i\right), \qquad \mathbf{x}_i \leftarrow \mathbf{x}_i + P\,\mathbf{R} \otimes \mathbf{s}_i, \tag{3}$$

where $P$ is the input control parameter, $\mathbf{R}_B$ is the vector of random numbers from Brownian motion, $\mathbf{R}$ is a vector of uniform random numbers from $[0, 1]$, $\otimes$ denotes element-wise multiplication, and $\mathbf{x}_{\mathrm{best}}$ represents the best particle position in the history of the run.
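The phase switching and the first-phase update of Equation (3) can be sketched as follows. Positions are plain Python lists, `elite` stands for the best position found so far, and the function names are hypothetical; boundary handling, the FADs step, and the update of the elite are deliberately omitted.

```python
import random
from typing import List


def mpa_phase(fes: int, max_fes: int) -> int:
    """Return 1, 2 or 3 depending on which third of the budget is in use."""
    if fes < max_fes / 3:
        return 1
    if fes < 2 * max_fes / 3:
        return 2
    return 3


def phase1_update(x: List[float], elite: List[float], p: float,
                  rng: random.Random) -> List[float]:
    """Equation (3): Brownian step towards the elite, scaled by P and uniform R."""
    new_x = []
    for xj, ej in zip(x, elite):
        rb = rng.gauss(0.0, 1.0)                    # component of R_B
        step = rb * (ej - rb * xj)                  # s_ij = R_B (elite_j - R_B x_ij)
        new_x.append(xj + p * rng.random() * step)  # x_ij + P * R_j * s_ij
    return new_x


rng = random.Random(0)
print([mpa_phase(f, 300) for f in (0, 99, 100, 199, 200, 299)])
print(phase1_update([1.0, -2.0], [0.0, 0.0], p=0.5, rng=rng))
```

The same Brownian component is used twice inside one step, so each coordinate is pulled towards a randomly rescaled copy of the elite rather than towards the elite itself.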

2.1.2. Second Phase

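The second phase occurs when the current number of function evaluations is in the second third of the run, $\frac{1}{3}\,\text{maxFES} \le \text{FES} < \frac{2}{3}\,\text{maxFES}$. This phase simulates the situation in which prey and predator move at a similar velocity because both are searching for food. In this phase, both exploration and exploitation take place: half of the population explores the search space and the other half is used for exploitation. Specifically, the particles in the first half of the population ($i = 1, \dots, N/2$) are updated using Lévy flight:

$$\mathbf{s}_i = \mathbf{R}_L \otimes \left(\mathbf{x}_{\mathrm{best}} - \mathbf{R}_L \otimes \mathbf{x}_i\right), \qquad \mathbf{x}_i \leftarrow \mathbf{x}_i + P\,\mathbf{R} \otimes \mathbf{s}_i, \tag{4}$$

and the second half of the population ($i = N/2 + 1, \dots, N$) is updated employing Brownian motion:

$$\mathbf{s}_i = \mathbf{R}_B \otimes \left(\mathbf{R}_B \otimes \mathbf{x}_{\mathrm{best}} - \mathbf{x}_i\right), \qquad \mathbf{x}_i \leftarrow \mathbf{x}_{\mathrm{best}} + P \cdot CF\, \mathbf{s}_i, \tag{5}$$

where $\mathbf{R}_L$ and $\mathbf{R}_B$ are vectors of random numbers from Lévy flight and Brownian motion, $\mathbf{x}_{\mathrm{best}}$ is the position of the best solution, $P$ is the MPA control parameter, and the adaptive parameter controlling the predator’s step is $CF = \left(1 - \frac{\text{FES}}{\text{maxFES}}\right)^{2\,\text{FES}/\text{maxFES}}$.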

2.1.3. Third Phase

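In the third phase ($\text{FES} \ge \frac{2}{3}\,\text{maxFES}$), the lowest velocity of particles is achieved. In this scenario, the predator moves faster than the prey, i.e., the particles are updated in the exploitation manner, using Lévy flight around the best position:

$$\mathbf{s}_i = \mathbf{R}_L \otimes \left(\mathbf{R}_L \otimes \mathbf{x}_{\mathrm{best}} - \mathbf{x}_i\right), \qquad \mathbf{x}_i \leftarrow \mathbf{x}_{\mathrm{best}} + P \cdot CF\, \mathbf{s}_i. \tag{6}$$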
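Phases 2 and 3 (Equations (4)–(6)) can be sketched in the same style, assuming the adaptive factor CF = (1 − FES/maxFES)^(2·FES/maxFES) expressed in terms of function evaluations and a Mantegna generator for the Lévy steps (valid for 0 < β < 2). The split of the population by index (first half Lévy, second half Brownian in phase 2) follows the description above; the function names are hypothetical and only candidate positions are computed.

```python
import math
import random
from typing import List


def cf(fes: int, max_fes: int) -> float:
    """Adaptive step factor CF = (1 - FES/maxFES) ** (2 * FES/maxFES)."""
    t = fes / max_fes
    return (1 - t) ** (2 * t)


def levy(beta: float, rng: random.Random) -> float:
    """One Levy-stable step via Mantegna's algorithm (assumed generator)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return rng.gauss(0.0, sigma) / abs(rng.gauss(0.0, 1.0)) ** (1 / beta)


def update_late(pop: List[List[float]], elite: List[float], p: float, beta: float,
                fes: int, max_fes: int, rng: random.Random) -> List[List[float]]:
    """Candidate positions in phase 2 (Eqs. (4)-(5)) or phase 3 (Eq. (6)).

    Assumes fes >= max_fes / 3, i.e. the first phase is already over.
    """
    c = cf(fes, max_fes)
    phase3 = fes >= 2 * max_fes / 3
    half = len(pop) // 2
    new_pop = []
    for i, x in enumerate(pop):
        y = []
        for xj, ej in zip(x, elite):
            if not phase3 and i < half:      # Eq. (4): Levy step, prey-centred
                rl = levy(beta, rng)
                y.append(xj + p * rng.random() * rl * (ej - rl * xj))
            elif not phase3:                 # Eq. (5): Brownian step, elite-centred
                rb = rng.gauss(0.0, 1.0)
                y.append(ej + p * c * rb * (rb * ej - xj))
            else:                            # Eq. (6): Levy step, elite-centred
                rl = levy(beta, rng)
                y.append(ej + p * c * rl * (rl * ej - xj))
        new_pop.append(y)
    return new_pop


rng = random.Random(2)
pop = [[1.0, 1.0], [2.0, -1.0], [0.5, 0.5], [-1.0, 3.0]]
print(update_late(pop, [0.0, 0.0], p=0.5, beta=1.5, fes=150, max_fes=300, rng=rng))
```

Because CF shrinks towards 0 as FES approaches maxFES, the elite-centred moves of Equations (5) and (6) become progressively shorter, which is how the sketch reflects the decreasing step size of the late phases.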

Predators (e.g., sharks) spend much of their lives near eddy formations or fish aggregating devices (FADs). This effect inspired the authors of MPA to add a further element to the optimiser that helps it avoid stagnation in the area of local minima:

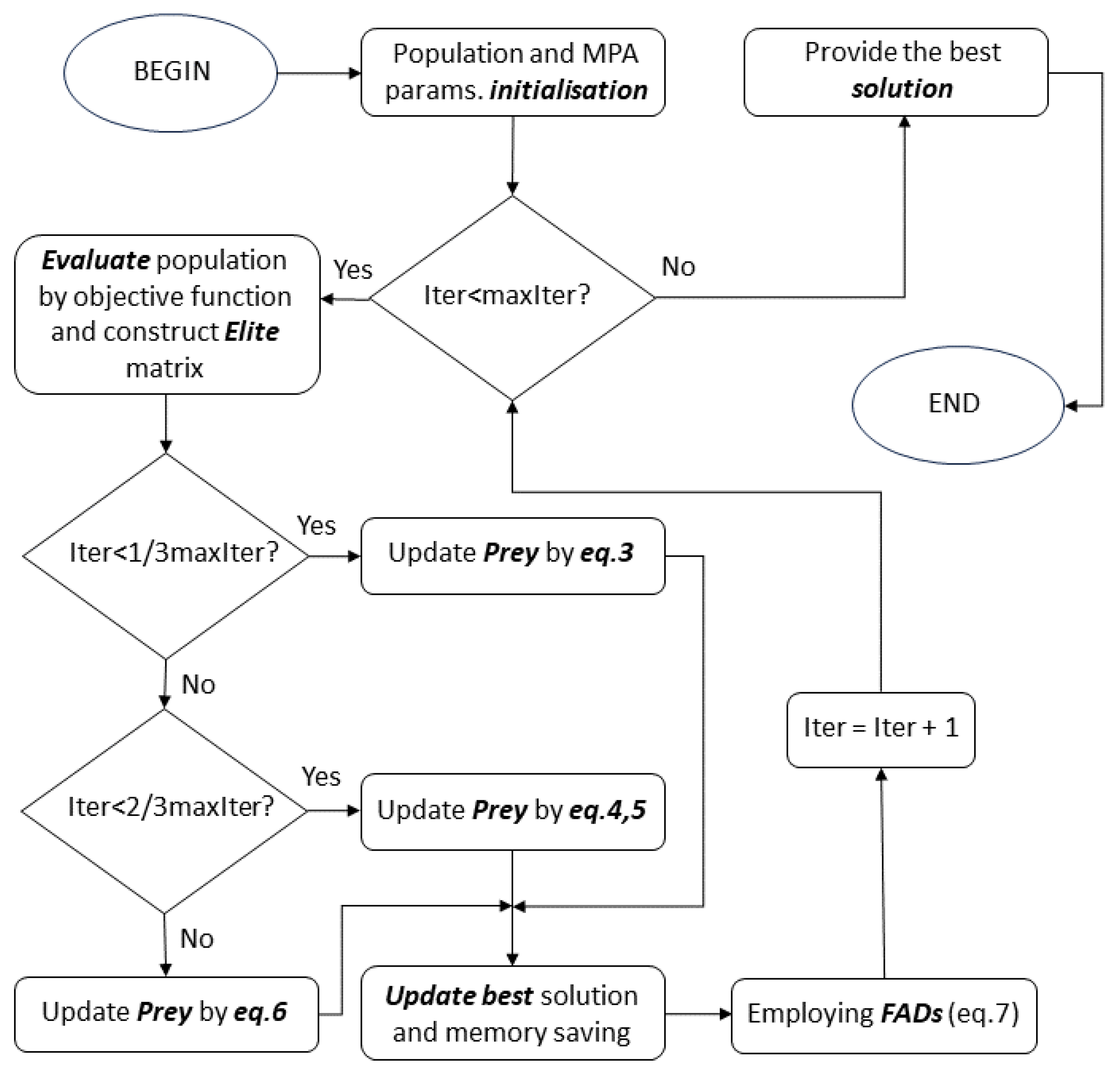

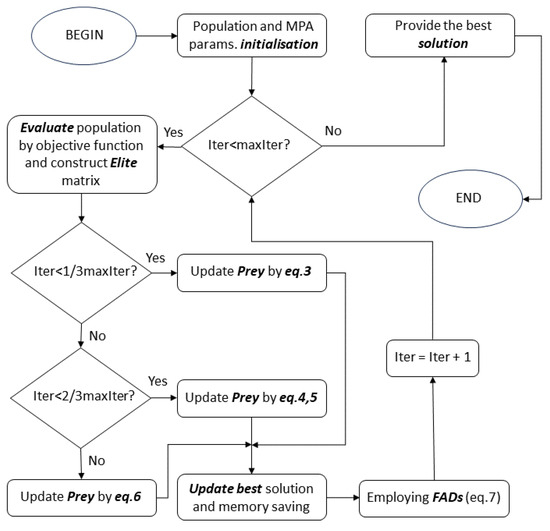

where FADs is the probability of the FADs effect, the binary vector $\mathbf{U}$ contains random values from $[0, 1]$ transformed to 0 if they are lower than FADs and to 1 otherwise, $\mathbf{X}_{\max}$ and $\mathbf{X}_{\min}$ are the vectors of the maximal and minimal prey locations (the upper and lower bounds of the search space), and $r_1$ and $r_2$ are randomly selected indices of prey. For better illustration, the flowchart of the MPA is depicted in Figure 3.
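The two branches of the FADs effect in Equation (7) can be sketched as below. The sketch assumes the form used in the original MPA formulation: with probability FADs, a jump to a random point inside the bounds masked by U; otherwise, a move along the difference of two randomly chosen prey scaled by FADs(1 − r) + r. Here `lb` and `ub` play the role of the minimal and maximal prey locations, U is built as described above, and the function name is hypothetical.

```python
import random
from typing import List


def fads_update(pop: List[List[float]], lb: List[float], ub: List[float],
                fads: float, cf: float, rng: random.Random) -> List[List[float]]:
    """Sketch of the FADs effect (Equation (7)) applied to the whole population."""
    n = len(pop)
    r = rng.random()
    if r <= fads:
        # Branch 1: jump towards a random point inside the bounds, masked by U.
        new_pop = []
        for x in pop:
            y = []
            for xj, lo, hi in zip(x, lb, ub):
                u = 0.0 if rng.random() < fads else 1.0   # U as described in the text
                y.append(xj + cf * (lo + rng.random() * (hi - lo)) * u)
            new_pop.append(y)
        return new_pop
    # Branch 2: move along the difference of two randomly permuted prey.
    scale = fads * (1 - r) + r
    idx1, idx2 = list(range(n)), list(range(n))
    rng.shuffle(idx1)
    rng.shuffle(idx2)
    return [[xj + scale * (a - b) for xj, a, b in zip(x, pop[i1], pop[i2])]
            for x, i1, i2 in zip(pop, idx1, idx2)]


rng = random.Random(5)
pop = [[1.0, 2.0], [3.0, -1.0], [0.0, 0.5]]
print(fads_update(pop, lb=[-5.0, -5.0], ub=[5.0, 5.0], fads=0.2, cf=0.4, rng=rng))
```

A larger FADs value makes the first, disruptive branch more frequent, which is consistent with the slower convergence discussed in the conclusions.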

Figure 3.

Flowchart of the marine predator algorithm.

Briefly, the phases of the MPA work as follows. In the first phase, the prey move through the search space, which enables exploration (see Figure 1). In the second phase, both prey and predators search for their food sources (half of the population works on exploration and half on exploitation). Finally, the predators exploit the discovered food areas (switching from Brownian to Lévy steps).

The MPA has been successfully enhanced and applied to many different optimisation problems. Some examples of the most popular MPA studies are presented below.

2.2. Applications of MPA

In 2020, Abdel-Basset et al. proposed a hybrid model based on an improved marine predator algorithm (IMPA) to detect COVID-19 [14]. The new variant introduced a ranking-based mechanism for faster convergence of the population towards the best solution. The IMPA was used to detect COVID-19 in X-ray images of human lungs, and it outperformed five nature-inspired optimisers as well as the original MPA.

In 2020, Al-qaness et al. combined the MPA with an adaptive neuro-fuzzy inference system (ANFIS) to forecast the number of COVID-19 cases in four countries (Italy, USA, Iran, and Korea) [15]. The quality of the estimates was measured by standard time-series quantifiers. The proposed MPA-ANFIS model was compared with ABC, ANFIS, FPASSA, GA, PSO, and SCA. The results illustrate that MPA-ANFIS is not the fastest method, but it provides the most accurate forecast of the COVID-19 case numbers in these countries.

In 2020, Sahlol et al. introduced an enhanced MPA variant to classify COVID-19 images [16]. The authors combined convolutional neural networks (CNN) with MPA to develop a new FO-MPA optimiser. The proposed method was applied to X-ray COVID-19 images from two Kaggle datasets, and the results were compared with nine nature-inspired algorithms, including the original MPA. FO-MPA provided the best mean results; in terms of the single best solutions found, only the Harris hawks optimiser and the original MPA performed better.

In 2020, Elaziz et al. proposed a hybrid of MPA with the moth–flame optimiser (MFO) [17]. The newly designed MPAMFO was applied to the segmentation of CT images of COVID-19 cases. The results were compared with eight nature-inspired methods, including the original MPA and MFO. MPAMFO provided the most robust results at several thresholding levels of human head CT images.

In 2020, Yousri et al. applied the MPA optimiser in simulations aimed at maximising the energy output of photovoltaic plants [18]. The performance of MPA was measured by simulating arrays of three different sizes and compared with three optimisers (MRFO, HHO, and PSO) and the regular TCT connection. The results illustrated that MPA is the best-performing method, reducing time complexity by 5–20%.

In 2020, Soliman et al. employed the MPA to estimate the parameters of two different marketable triple-diode photovoltaic systems [19]. The experiment’s results are compared with those of four nature-inspired methods. The authors concluded that the MPA can provide accurate results for any marketable photovoltaic system.

In 2020, Abdel-Basset et al. proposed an energy-aware MPA model to solve task scheduling in fog computing [20]. The authors introduced two MPA versions: the first improves the exploitation capability by using the last updated positions instead of the best one, and the second uses a ranking-based strategy and mutation with random regeneration of part of the population after a predefined number of iterations. Three MPA variants were compared with five nature-inspired algorithms, and the results illustrated the high performance of the proposed variants.

In 2021, Qinsong et al. proposed a modified MPA variant (MMPA) with an opposition-based learning (OBL) approach based on chaotic maps [21]. Besides OBL, a new position-update process using an inertia weight coefficient was introduced, together with a nonlinear step size to balance exploration and exploitation. The efficiency of MMPA was measured on artificial problems (CEC 2020) and four engineering problems, and the results illustrated its superiority on most optimisation tasks.

In 2021, Abd Elminaam et al. proposed a new variant of MPA using a k-nearest neighbor strategy [22]. This approach enables the classification of records of the training datasets using Euclidean distances. A new MPA-kNN variant was applied to 18 datasets to classify features and instances. The results were compared with seven other metaheuristics, and the proposed MPA achieved the best performance.

In 2023, Aydemir et al. introduced an elite evolution strategy for MPA to solve engineering problems [23]. The idea was to apply a random mutation to elite individuals to control the convergence of MPA. The proposed variant was applied to CEC 2017 problems and several engineering problems, and a comparison with several counterparts illustrated the good performance of the new MPA.

In 2023, Kumar et al. introduced a new variant of MPA with chaotic maps (CMPA) for engineering problems [24]. The idea was to employ chaos in MPA to avoid revisiting the same locations (positions) during the search. The CMPA has only one control parameter, which controls the amount of chaos used in the search process. Twelve chaos settings were examined to achieve the best performance on engineering problems, and the results illustrate that different CMPA chaos settings achieved the best results on different engineering problems.

The authors of these studies focused on applying the MPA algorithms to various optimisation problems. The studies did not analyse the setting of the MPA parameters for practical (real-world) use. In this paper, a comprehensive analysis of the MPA control parameters is performed to illustrate the proper setting of this optimiser for future applications. Thirteen real-world engineering optimisation problems were used to illustrate the performance of various MPA settings.

3. MPA Control Parameters

The MPA is controlled by several control parameters (see Equations (3)–(7)). Besides the population size N, three crucial numerical parameters play a significant role in the performance of this optimiser. The aim of this paper is to study various MPA settings to gain insight into the efficiency of this optimiser when solving engineering problems. Therefore, several roughly equidistant values (small, medium, and large) were proposed for each numerical MPA parameter and combined with the values of the remaining parameters.

3.1. Fish Aggregating Devices Strategy

The first parameter studied here is FADs, employed in Equation (7). This value determines the probability that the predators employ the fish aggregating device (FAD) strategy. The authors recommended the value FADs = 0.2. Three fixed values of FADs are studied in the presented experiment. Moreover, six linear-change settings are used, where the value of FADs is linearly updated (increased or decreased) during the search process: (0-1), (1-0), (0-0.5), (0.5-1), (1-0.5), (0.5-0).

3.2. Velocity of Particles

The velocity of predator and prey individuals in the MPA is controlled by P, and the authors recommended P = 0.5. This parameter is used in Equations (3)–(6). In this study, two further values of this parameter are added, so three fixed values of P are used in the experiment. Further, six linear-change settings are investigated, where the value is linearly updated (increased or decreased) during the search process: (0-1), (1-0), (0-0.5), (0.5-1), (1-0.5), (0.5-0).

3.3. Lévy Flight

The Lévy flight strategy is used to move particles in the second and third phases (see Equations (4) and (6)). This movement strategy is based on the vector $\mathbf{R}_L$, controlled by the parameter β. The authors of MPA recommended β = 1.5; three fixed values of β are used in this study. Moreover, six linear-change settings of β are used, where the value is linearly updated (increased or decreased) during the search process: (0-1.5), (1.5-0), (0-0.75), (0.75-1.5), (1.5-0.75), (0.75-0).
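All six linear-change settings in Sections 3.1–3.3 can be expressed by a single interpolation helper. The sketch assumes that the parameter changes linearly with the consumed budget FES/maxFES (the text only says the value is updated linearly during the search); the helper name and the budget of 10,000 evaluations are illustrative.

```python
def linear_param(start: float, end: float, fes: int, max_fes: int) -> float:
    """Linearly interpolate a control parameter from start to end over the run."""
    t = min(max(fes / max_fes, 0.0), 1.0)   # consumed fraction of the budget
    return start + (end - start) * t


# P changing linearly from 0.5 to 0 (the "(0.5-0)" setting).
for fes in (0, 2500, 5000, 7500, 10000):
    print(fes, linear_param(0.5, 0.0, fes, 10000))
```

The same helper covers increasing settings such as (0-1.5) for β simply by swapping `start` and `end`.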

4. Experiment Settings

This study aims to analyse the performance of three crucial MPA control parameters. As described above, several settings of P, FADs, and β were employed in the experiment. In total, 188 combinations of the MPA control parameters were employed in this study. Moreover, five variants of MPA with the recommended settings and a linearly decreasing population size are also included in the comparison. To make the results more transparent, each MPA variant is distinguished by four numbers and labelled ‘(MPA_)N_FADs_P_β’, where a value a-b denotes a linear change from a to b. For example, ‘MPA_10_0.2_0.5_1.5’ (or ‘10_0.2_0.5_1.5’) denotes the original MPA settings with population size N = 10.
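Because every label encodes four settings, a small parser makes the naming scheme explicit. It assumes the field order N_FADs_P_β seen in labels such as 10_0.2_0.5-0_1.5, and that a field `a-b` denotes a linear change from a to b; labels of the variants with a linearly decreasing population size are not covered, and the function name is hypothetical.

```python
from typing import Dict, Tuple, Union

Setting = Union[float, Tuple[float, float]]


def parse_label(label: str) -> Dict[str, Setting]:
    """Split 'N_FADs_P_beta' into its four settings; 'a-b' means a linear change a -> b."""
    parts = label.removeprefix("MPA_").split("_")
    if len(parts) != 4:
        raise ValueError(f"expected four fields, got {label!r}")

    def value(text: str) -> Setting:
        if "-" in text:                       # linear change, e.g. "0.5-0"
            a, b = text.split("-")
            return (float(a), float(b))
        return float(text)                    # fixed value, e.g. "0.2"

    n, fads, p, beta = parts
    return {"N": int(n), "FADs": value(fads), "P": value(p), "beta": value(beta)}


print(parse_label("10_0.2_0.5-0_1.5"))
print(parse_label("MPA_10_0-0.5_0.5_1.5"))
```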

Thirteen real-world engineering problems were selected for this study to show the performance of the MPA variants. All the problems are minimisation problems, i.e., the minimal function value is sought. For each problem and MPA setting, 30 independent runs are performed to ensure a robust statistical comparison. Each run is divided into 11 equidistant stages to illustrate the performance of the methods during the search process. Each run of an algorithm on a problem was terminated when the maximal number of function evaluations (maxFES) was reached. These real-world engineering problems were also collected for another study, where more details are provided [25]. All the problems are constrained: the search space is bounded by linear and nonlinear limits that define feasible areas. The dimensionality of these problems (D) and the function value of the true solution ($f(\mathbf{x}^*)$) are provided in Table 1.

Table 1.

Details of the real-world engineering problems: dimensionality D and function value of the true solution [25].

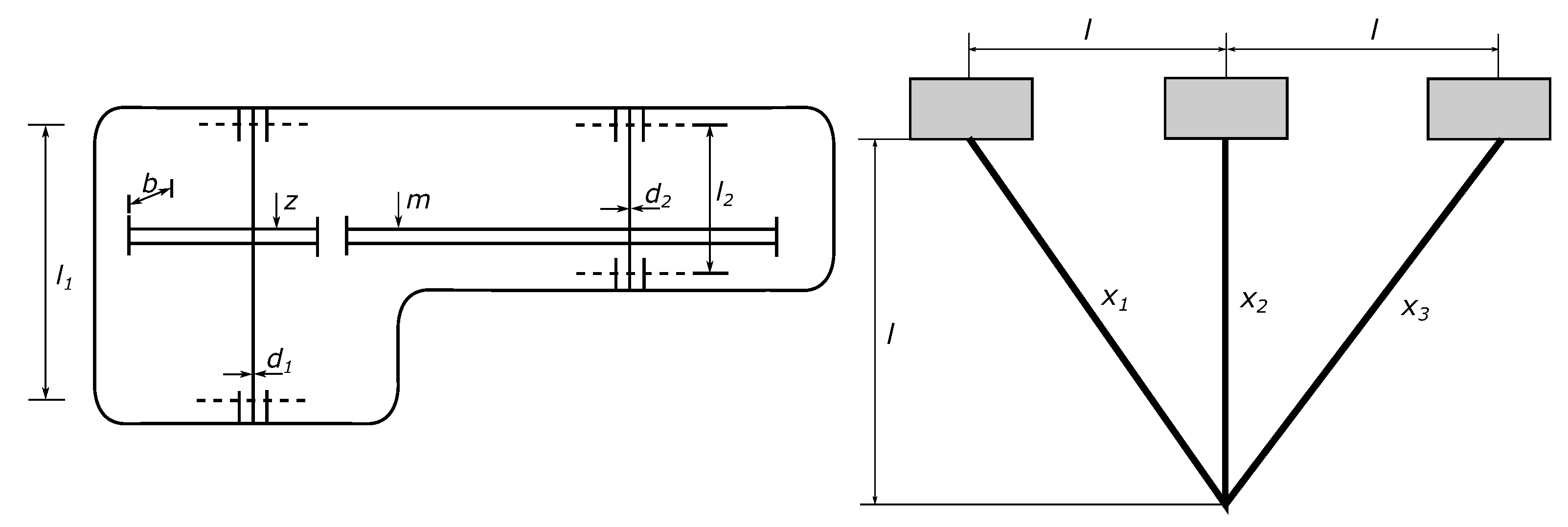

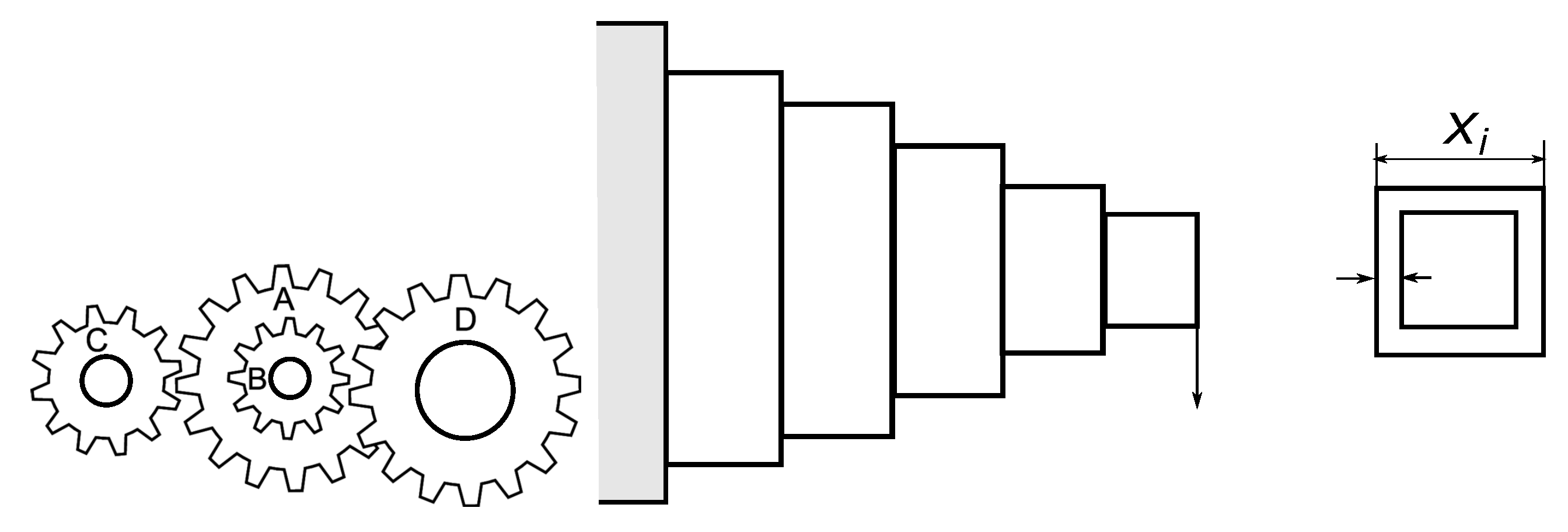

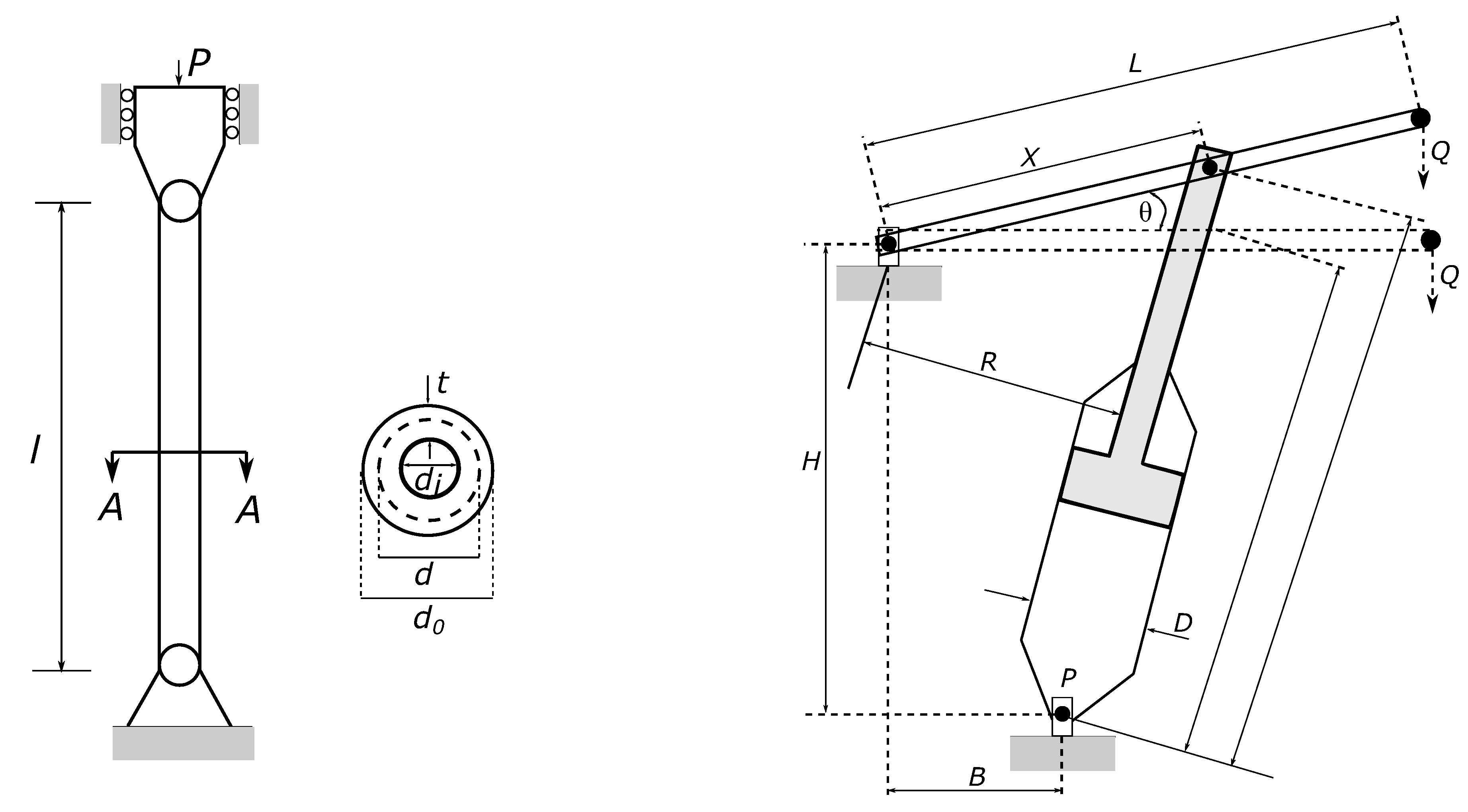

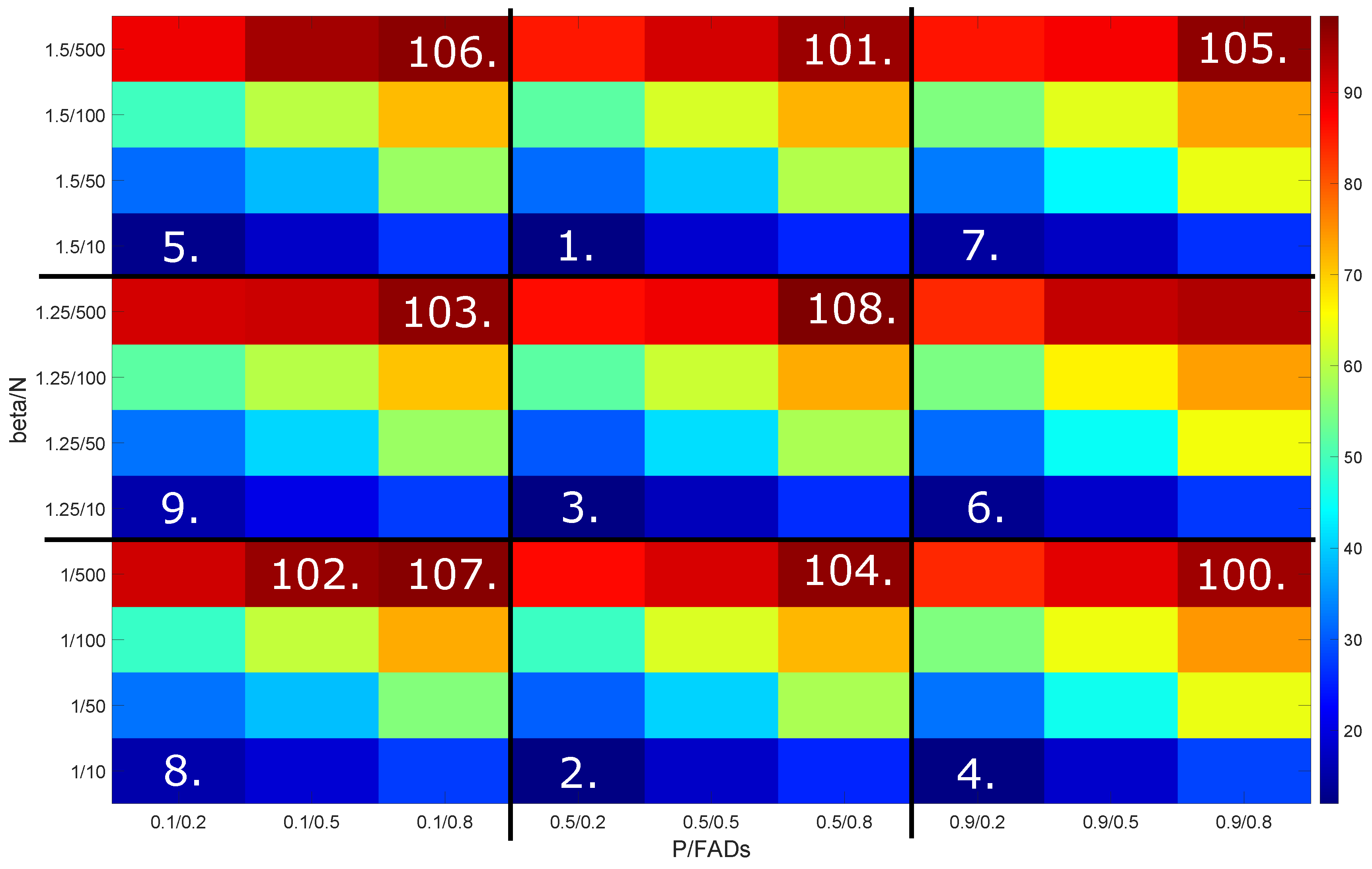

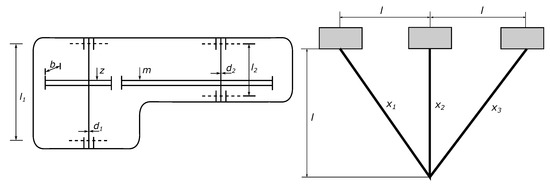

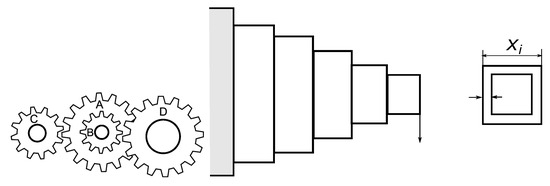

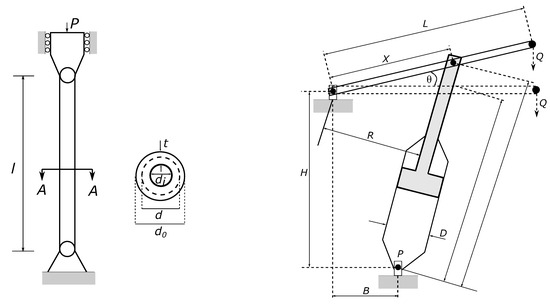

The dimensionality of the selected engineering problems is rather low (see Table 1). This implies lower complexity, because particles move through a low-dimensional space faster. For better illustration, the designs of eight engineering problems are provided in Figure 4, Figure 5, Figure 6 and Figure 7.

Figure 4.

Speed reducer design problem and three-bar truss problem.

Figure 5.

Gear train design problem and cantilever beam design problem.

Figure 6.

Tubular columns design problem and piston lever problem.

Figure 7.

Tension/compression spring design problem and concrete beam design problem.

5. Results

This study compares 193 MPA variants with various settings of the control parameters when solving 13 real-world engineering optimisation problems. The experiment provides a large amount of data to analyse. For better illustration, a statistical comparison is performed instead of reporting standard descriptive values and plots. First, the absolute mean ranks from the Friedman tests of all 193 MPA settings are provided for each stage separately in Table 2, Table 3, Table 4 and Table 5.

Table 2.

Absolute mean ranks from the Friedman tests of 11 stages.

Table 3.

Absolute mean ranks from the Friedman tests of 11 stages.

Table 4.

Absolute mean ranks from the Friedman tests of 11 stages.

Table 5.

Absolute mean ranks from the Friedman tests of 11 stages.

Each table row represents one MPA setting, defined by the four control parameters in the first four columns. The remaining columns present the absolute mean ranks, where a lower rank means better results of the MPA setting over all 13 engineering problems. The rows are sorted by the ranks in the final stage of the run (last column), so the best-performing settings are at the top of the table and the efficiency of the MPA decreases towards the bottom. The best results are provided by three MPA settings with the recommended values of FADs and β and a linear change of P: (0.5-0), (0.5-1), and (1-0). The recommended MPA setting takes the fourth position.
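The absolute mean ranks follow the Friedman procedure: within each problem, the settings are ranked by their result (rank 1 for the lowest value, tied values receive the average rank), and the ranks are averaged over the problems. The sketch assumes one summary value per problem and setting in a given stage; the function names and toy data are made up for illustration.

```python
from typing import List, Sequence


def rank_row(values: Sequence[float]) -> List[float]:
    """Ranks 1..k within one problem; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1                 # average of 1-based positions i..j
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mean_ranks(results: Sequence[Sequence[float]]) -> List[float]:
    """results[problem][setting] -> mean rank of each setting over all problems."""
    rows = [rank_row(r) for r in results]
    k = len(rows[0])
    return [sum(row[s] for row in rows) / len(rows) for s in range(k)]


# Three toy problems, three settings (a lower objective value is better).
results = [[1.0, 2.0, 3.0],
           [0.5, 0.5, 0.9],
           [2.0, 1.0, 4.0]]
print(mean_ranks(results))
```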

It is possible to provide several remarks regarding MPA parameters:

- The efficiency of MPA decreases with increasing population size.

- The best variant with linear population size reduction is in the 20th position.

- Twenty of the best MPA variants use recommended .

- Six of the worst performing MPA settings employ .

- Seven out of seventeen of the best-performing MPA variants use a linear change of .

- The MPA variant with the original settings and a linear change of FADs achieves good results in the first five search stages. This information is useful in cases where only a short computation time is available.

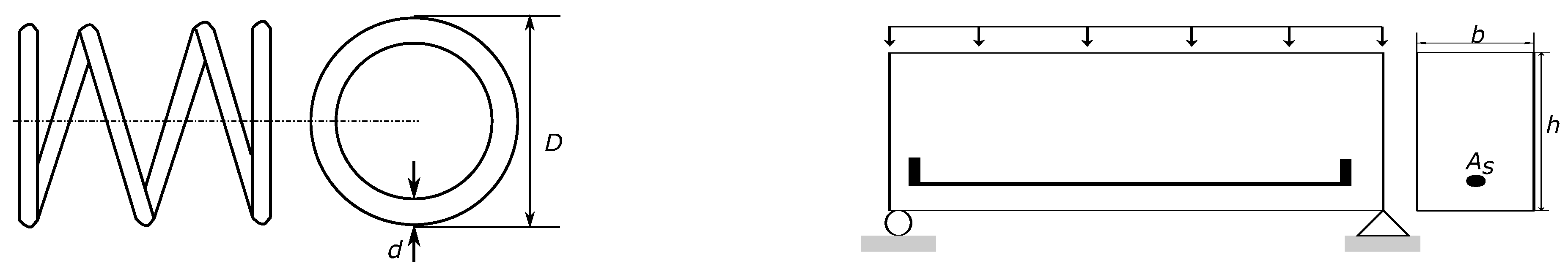

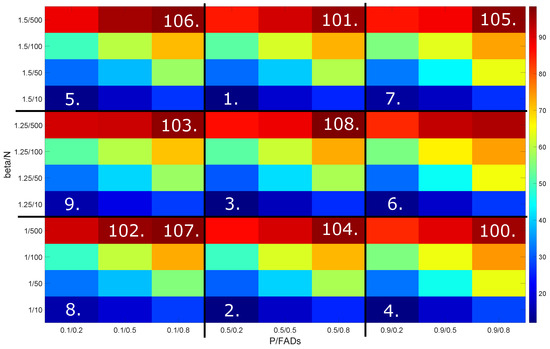

Decreasing the population size of MPA did not provide better results than a fixed small population size. Therefore, these variants were removed from the illustration of the mean ranks from the Friedman tests (Figure 8). The settings of all MPA variants are labelled on the axes: the values of β and N are on the vertical axis, and P and FADs are on the horizontal axis. The rectangle is divided into nine smaller rectangles based on the β and P values. The absolute mean ranks of the ten best-performing and ten worst-performing MPA variants are included in this figure.

Figure 8.

Absolute ranks from the Friedman test computed for 108 combinations of marine predator algorithm control parameter settings.

The mean ranks are represented by colour, from the smallest mean rank (best-performing setting, dark blue) to the largest mean rank (worst-performing setting, dark red), according to the colour bar on the right side. Dark blue horizontal rows illustrate the good performance of the small population size. The decreasing saturation of blue from the left to the right side of the smaller rectangles means that the efficiency of MPA decreases with increasing FADs values. On the other hand, the colour patterns of the smaller rectangles are similar, which points to a smaller influence of the P and β parameters. The analysis of Table 2, Table 3, Table 4 and Table 5 and Figure 8 led to the selection of the best-performing MPA variants for further analysis.

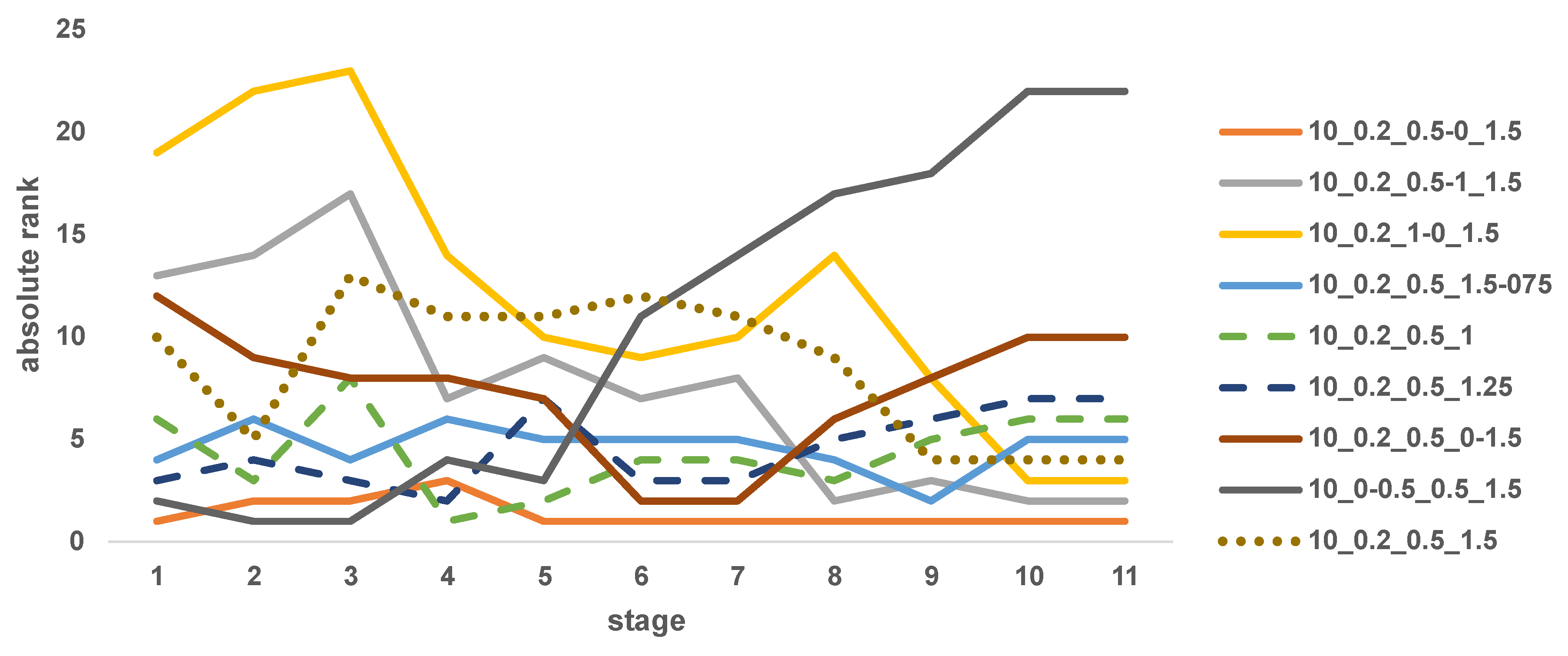

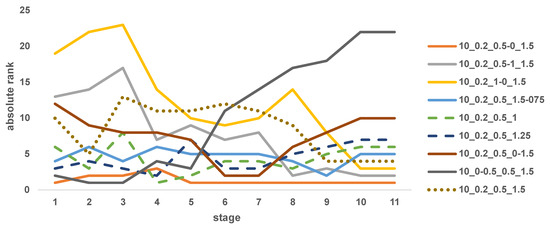

The absolute ranks of the top nine MPA variants during the 11 stages are depicted in Figure 9. The positions of the lines on the right side illustrate the final efficiency of the optimisers, and dots denote the recommended original setting. The best setting (10_0.2_0.5-0_1.5) is very efficient throughout the search process. Surprisingly, the combination 10_0-0.5_0.5_1.5 (increasing FADs) is very efficient in the early stages, but its performance then deteriorates substantially. Conversely, the efficiency of 10_0.2_1-0_1.5 (decreasing P) improves from the worst position to third place.

Figure 9.

Absolute ranks of the best nine marine predator algorithm settings from the Friedman test.

The next step of the MPA variant analysis is to compare the median values of the best-performing settings for each problem separately (Table 6). The median values were computed from the independent runs of the algorithms in the last stage. The results of the original MPA settings are in the first column. Although the variability of the achieved results is not substantial, the best-achieved result for each problem is highlighted.

Table 6.

Median values for all problems achieved by the nine best-performing marine predator algorithm (MPA) variants with given fish aggregating devices (FADs), P, and β (all N = 10).

The most frequently best-performing MPA variant was 10_0.2_0.5-0_1.5 (five times out of 13). The original setting never achieved the best result on any problem. Kruskal–Wallis tests were also performed to show significant differences between the nine algorithms for each problem separately. The tests were performed in each stage of the run, and a result is significant if the achieved significance level (p-value) is lower than the chosen significance level. In the final stage, significant differences were found only for the 11th problem. Significant differences were also detected in some stages for several other problems.
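The Kruskal–Wallis statistic behind these tests can be computed from pooled ranks. This pure-Python sketch applies the standard tie correction and compares H with the chi-square critical value for k − 1 degrees of freedom; the significance level 0.05, the function names, and the toy data are assumptions for illustration (with nine variants, df = 8 and the 0.05 critical value is about 15.507).

```python
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks of the pooled sample; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Sequence[Sequence[float]]) -> float:
    """Tie-corrected Kruskal-Wallis H statistic for k independent samples."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    h, start = 0.0, 0
    for g in groups:
        r = sum(ranks[start:start + len(g)])   # rank sum of this group
        h += r * r / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * h - 3.0 * (n + 1)
    counts: Dict[float, int] = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in counts.values())
    return h / (1.0 - ties / (n ** 3 - n))     # divide by the tie correction factor


CHI2_CRIT_005 = {2: 5.991, 8: 15.507}   # chi-square 0.95 quantiles for df = 2 and 8

groups = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
h = kruskal_wallis_h(groups)
df = len(groups) - 1
print(f"H = {h:.2f}, significant at 0.05: {h > CHI2_CRIT_005[df]}")
```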

Finally, the numbers of first, second, third, and last positions of the nine best-performing MPA variants for each problem and all stages are given in Table 7. The results of the best-performing methods for each problem and stage are highlighted. The algorithms are ordered such that the most frequently best-performing one is on the left side of the table. The setting 10_0.2_0.5-0_1.5 achieved the best results most often, outperforming the other MPA variants from the 6th to the 11th stage. This setting provides the best results in 28 cases out of 143 (11 stages, 13 problems). On the other hand, it achieves the worst results in five cases. Two other combinations of the MPA parameters achieved very promising results in the early stages of the optimisation process: 10_0.2_0.5_1.5-0.75 and 10_0-0.5_0.5_1.5.

Table 7.

Number of the best, second best, third, and last positions from the Kruskal–Wallis tests for the nine best-performing marine predator algorithm (MPA) variants with given fish aggregating devices (FADs), P, and β (all N = 10).

More details on the performance of the nine best-performing MPA variants come from the two-sample non-parametric Wilcoxon test. The recommended (original) MPA setting is compared with each of the remaining variants separately on each problem (Table 8). The numbers of better results (bet.), significantly better results from the Wilcoxon tests (s.bet.), worse results (wor.), and significantly worse results (s.wor.) of the eight newly proposed MPA variants are provided. The methods are ordered from the best-performing on the left side to the worst-performing on the right side. The MPA variant 10_0.2_0.5-0_1.5 achieved results similar to the second variant, 10_0.2_1-0_1.5, which was outperformed three times by the original MPA. The variant 10_0.2_0.5_1.5-0.75 achieved the same number of wins (4) and losses (4) compared with the original MPA.
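The comparison against the original setting can be sketched with the Wilcoxon rank-sum statistic and its normal approximation. The sketch omits the continuity and tie corrections (a simplification), decides 'better' or 'worse' by comparing medians (an assumption about how the counts were built), uses 0.05 as the significance level, and uses hypothetical function names; the toy samples stand in for the results of independent runs.

```python
import math
from statistics import median
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks of the pooled sample; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda j: values[j])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_p(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sided p-value of the Wilcoxon rank-sum test (normal approximation)."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])                              # rank sum of sample a
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    return math.erfc(abs(z) / math.sqrt(2))          # equals 2 * (1 - Phi(|z|))


def classify(new: Sequence[float], orig: Sequence[float], alpha: float = 0.05) -> str:
    """Label a variant against the original setting (minimisation problems)."""
    m_new, m_orig = median(new), median(orig)
    if m_new == m_orig:
        return "eq."        # not among the categories listed in the text
    significant = rank_sum_p(new, orig) < alpha
    if m_new < m_orig:      # a lower median objective value is better
        return "s.bet." if significant else "bet."
    return "s.wor." if significant else "wor."


print(classify([1.0, 2.0, 3.0, 4.0, 5.0], [6.0, 7.0, 8.0, 9.0, 10.0]))
print(classify([1.0, 3.0, 5.0, 7.0, 9.0], [2.0, 4.0, 6.0, 8.0, 10.0]))
```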

Table 8.

Number of better, significantly better, worse, and significantly worse cases of the nine best-performing marine predator algorithm (MPA) variants compared with the original setting from the Wilcoxon tests (all N = 10).

6. Conclusions

This paper studied the performance of a successful swarm-intelligence optimisation heuristic inspired by marine predators (MPA). The study aims to analyse the MPA parameters when solving real-world engineering problems. Besides the population size N, the MPA optimiser uses three numerical parameters: P, FADs, and β. The authors of MPA recommended P = 0.5, FADs = 0.2, and β = 1.5. Although the MPA was already applied to real-world problems when it was introduced, this study performs a more comprehensive experimental comparison of its settings.

Four fixed values of N and a linear reduction of N were used. Further, three fixed values of each of the three numerical MPA parameters were employed, together with six linear (increasing or decreasing) settings. In total, 193 different settings of the MPA parameters were compared on 13 real-world constrained engineering problems. The achieved results were statistically analysed to illustrate significant differences.

The original MPA setting was outperformed by three variants with a linear change of the particle velocity parameter P: (0.5-0), (0.5-1), and (1-0). Decreasing values of P (from 1 or 0.5 to 0) correspond to the situation where the velocity of MPA individuals decreases during the search process. Interestingly, increasing the velocity (P from 0.5 to 1) also outperforms the original MPA setting. This finding is relevant for real applications of this optimiser.

Further, it was found that MPA performance decreases when a bigger population size is applied. This is mostly caused by the low dimensionality of the real-world problems, even though their solutions are limited by the feasible regions.

Finally, the efficiency of MPA is low when a higher value of FADs is employed. This parameter directly influences how frequently eddy formation and fish aggregating devices are used during the search process. From Equation (7), it is clear that applying FADs moves the current solution to a random position, which slows down the convergence of the MPA population.

Different settings of the parameter β have no substantial influence on MPA results. This parameter is called the power-law index, and it controls the Lévy flight process, which is used to generate new positions in the second and third phases. Notice that β can only take values from a bounded interval.

The most efficient MPA variant in the comparison (10_0.2_0.5-0_1.5) has robust performance during all 11 stages of the search process (based on the absolute rank). This MPA variant achieved the best solution in 5 problems out of 13. Better results were achieved by variant 10_0.2_0.5_1 on the first problem.

The achieved results illustrate the influence of the MPA parameters when solving engineering problems. The linear change of P is a promising subject for future research.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data were measured in MATLAB 2020b during the experiments.

Conflicts of Interest

The funders had no role in the design of the study, in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Abualigah, L.; Abd Elaziz, M.; Khasawneh, A.M.; Alshinwan, M.; Ibrahim, R.A.; Al-qaness, M.A.A.; Mirjalili, S.; Sumari, P.; Gandomi, A.H. Meta-heuristic optimization algorithms for solving real-world mechanical engineering design problems: A comprehensive survey, applications, comparative analysis, and results. Neural Comput. Appl. 2022, 34, 4081–4110. [Google Scholar] [CrossRef]

- Chu, S.C.; Huang, H.C.; Roddick, J.F.; Pan, J.S. Overview of Algorithms for Swarm Intelligence. In Computational Collective Intelligence, Technologies and Applications, Proceedings of the Third International Conference, ICCCI 2011, Gdynia, Poland, 21–23 September 2011; Jędrzejowicz, P., Nguyen, N.T., Hoang, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 28–41. [Google Scholar]

- Eberhart, R.; Kennedy, J. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Karaboga, D.; Basturk, B. Artificial Bee Colony (ABC) Optimization Algorithm for Solving Constrained Optimization Problems. In Foundations of Fuzzy Logic and Soft Computing, Proceedings of the 12th International Fuzzy Systems Association World Congress, IFSA 2007, Cancun, Mexico, 18–21 June 2007; Melin, P., Castillo, O., Aguilar, L.T., Kacprzyk, J., Pedrycz, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 789–798.

- Yang, X.S.; Deb, S. Cuckoo search via Lévy flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; IEEE: New York, NY, USA, 2009; pp. 210–214.

- Yang, X.S. Firefly algorithm, Levy flights and global optimization. In Research and Development in Intelligent Systems XXVI; Springer: Berlin/Heidelberg, Germany, 2010; pp. 209–218.

- Zelinka, I. SOMA—Self-Organizing Migrating Algorithm. In New Optimization Techniques in Engineering; Springer: Berlin/Heidelberg, Germany, 2004; pp. 167–217.

- Bujok, P.; Tvrdik, J.; Polakova, R. Comparison of nature-inspired population-based algorithms on continuous optimisation problems. Swarm Evol. Comput. 2019, 50, 100490.

- Bujok, P. Enhanced Tree-Seed Algorithm Solving Real-World Problems. In Proceedings of the 2020 7th International Conference on Soft Computing & Machine Intelligence (ISCMI 2020), Stockholm, Sweden, 14–15 November 2020; IEEE: New York, NY, USA, 2020; pp. 12–16.

- Bujok, P. Harris Hawks Optimisation: Using of an Archive. In Artificial Intelligence and Soft Computing, Proceedings of the 20th International Conference on Artificial Intelligence and Soft Computing (ICAISC 2021), Virtual Event, 21–23 June 2021; Rutkowski, L., Scherer, R., Korytkowski, M., Pedrycz, W., Tadeusiewicz, R., Zurada, J., Eds.; Part I; Lecture Notes in Artificial Intelligence; Springer: Cham, Switzerland, 2021; Volume 12854, pp. 415–423.

- Bujok, P.; Lacko, M. Slime Mould Algorithm: An Experimental Study of Nature-Inspired Optimiser. In Bioinspired Optimization Methods and Their Applications, Proceedings of the 10th International Conference on Bioinspired Optimization Methods and Their Applications (BIOMA), Maribor, Slovenia, 17–18 November 2022; Lecture Notes in Computer Science; Mernik, M., Eftimov, T., Crepinsek, M., Eds.; Springer: Cham, Switzerland, 2022; Volume 13627, pp. 201–215.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Abdel-Basset, M.; Mohamed, R.; Elhoseny, M.; Chakrabortty, R.K.; Ryan, M. A Hybrid COVID-19 Detection Model Using an Improved Marine Predators Algorithm and a Ranking-Based Diversity Reduction Strategy. IEEE Access 2020, 8, 79521–79540.

- Al-qaness, M.A.A.; Ewees, A.A.; Fan, H.; Abualigah, L.; Abd Elaziz, M. Marine Predators Algorithm for Forecasting Confirmed Cases of COVID-19 in Italy, USA, Iran and Korea. Int. J. Environ. Res. Public Health 2020, 17, 3520.

- Sahlol, A.T.; Yousri, D.; Ewees, A.A.; Al-qaness, M.A.A.; Damasevicius, R.; Abd Elaziz, M. COVID-19 image classification using deep features and fractional-order marine predators algorithm. Sci. Rep. 2020, 10, 15364.

- Abd Elaziz, M.; Ewees, A.A.; Yousri, D.; Alwerfali, H.S.N.; Awad, Q.A.; Lu, S.; Al-Qaness, M.A.A. An Improved Marine Predators Algorithm With Fuzzy Entropy for Multi-Level Thresholding: Real World Example of COVID-19 CT Image Segmentation. IEEE Access 2020, 8, 125306–125330.

- Yousri, D.; Babu, T.S.; Beshr, E.; Eteiba, M.B.; Allam, D. A Robust Strategy Based on Marine Predators Algorithm for Large Scale Photovoltaic Array Reconfiguration to Mitigate the Partial Shading Effect on the Performance of PV System. IEEE Access 2020, 8, 112407–112426.

- Soliman, M.A.; Hasanien, H.M.; Alkuhayli, A. Marine Predators Algorithm for Parameters Identification of Triple-Diode Photovoltaic Models. IEEE Access 2020, 8, 155832–155842.

- Abdel-Basset, M.; Mohamed, R.; Elhoseny, M.; Bashir, A.K.; Jolfaei, A.; Kumar, N. Energy-Aware Marine Predators Algorithm for Task Scheduling in IoT-Based Fog Computing Applications. IEEE Trans. Ind. Inform. 2021, 17, 5068–5076.

- Fan, Q.; Huang, H.; Chen, Q.; Yao, L.; Yang, K.; Huang, D. A modified self-adaptive marine predators algorithm: Framework and engineering applications. Eng. Comput. 2022, 38, 3269–3294.

- Abd Elminaam, D.S.; Nabil, A.; Ibraheem, S.A.; Houssein, E.H. An Efficient Marine Predators Algorithm for Feature Selection. IEEE Access 2021, 9, 60136–60153.

- Aydemir, S.B.; Onay, F.K. Marine predator algorithm with elite strategies for engineering design problems. Concurr. Comput. Pract. Exp. 2023, 35, e7612.

- Kumar, S.; Yildiz, B.S.; Mehta, P.; Panagant, N.; Sait, S.M.; Mirjalili, S.; Yildiz, A.R. Chaotic marine predators algorithm for global optimization of real-world engineering problems. Knowl.-Based Syst. 2023, 261, 110192.

- Bayzidi, H.; Talatahari, S.; Saraee, M.; Lamarche, C.P. Social Network Search for Solving Engineering Optimization Problems. Comput. Intell. Neurosci. 2021, 2021, 8548639.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).