Abstract

In this article, the problem of solving a strongly monotone variational inequality problem over the solution set of a monotone inclusion problem in real Hilbert spaces is considered. To solve this problem, two methods that improve and modify the Tseng splitting method and the projection and contraction method are presented. These methods are equipped with inertial terms to improve their speed of convergence. The strong convergence of the suggested methods is proved under some standard assumptions on the control parameters, and it is achieved without prior knowledge of the operator norm. Finally, the main results of this research are applied to solve bilevel variational inequality problems, convex minimization problems, and image recovery problems, and numerical experiments are conducted to show the efficiency of our methods.

Keywords:

monotone inclusion problem; variational inequality problem; projection and contraction method; Tseng method; strong convergence; inertial term

MSC:

05A30; 30C45; 11B65; 47B38

1. Introduction

Let $C$ be a nonempty, closed, and convex subset of a real Hilbert space $H$ with inner product $\langle \cdot, \cdot \rangle$ and induced norm $\|\cdot\|$. Let $F: C \to H$ be an operator. The classical variational inequality problem (VIP) is formulated as follows: Find $x^* \in C$ such that
$$\langle F x^*, x - x^* \rangle \ge 0, \quad \forall x \in C. \tag{1}$$

We denote by $VI(C, F)$ the solution set of the VIP (1). Problem (1) has a wide range of applications, and several methods for solving it have been developed by many researchers (see [1,2,3] and the references therein).

On the other hand, the monotone inclusion problem (MIP) is formulated as follows: Find $x^* \in H$ such that
$$0 \in (D + E)x^*, \tag{2}$$

where $H$ is a real Hilbert space, $D: H \to H$ is a single-valued monotone operator, and $E: H \to 2^H$ is a maximal monotone operator. We denote by $\Omega$ the solution set of the MIP (2); it is referred to as the set of zero points of $D + E$, that is, $\Omega = (D + E)^{-1}(0)$. Several optimization problems can be reformulated as the MIP (2), including convex minimization problems, equilibrium problems, image/signal processing problems, DC programming problems, split feasibility problems, and variational inequality problems; see [4,5,6]. These numerous applications have attracted the attention of a large number of researchers in the last few years, and many methods for solving the problem have been developed; see [7,8,9]. One of the first methods for solving this problem is the forward–backward algorithm (FBA), which generates a sequence $\{x_n\}$ as follows:
$$x_{n+1} = (I + \lambda E)^{-1}(I - \lambda D)x_n, \quad n \ge 1, \tag{3}$$

where $\lambda > 0$ is the step size, and $I - \lambda D$ and $(I + \lambda E)^{-1}$ are called the forward and backward operators, respectively (the latter is also referred to as the resolvent operator). The FBA for solving the MIP was independently studied by Lions and Mercier [6] and by Passty [10]. In recent years, the convergence analysis and modifications of this method have been studied extensively by many authors; see [4,11,12] and the references therein. We should note that the weak convergence result of method (3) requires the operator $D$ to be inverse strongly monotone (co-coercive), which is a strong assumption. In order to weaken this restriction, several methods have been developed by a large number of researchers; see [6,11,13] and the references therein. One of the first methods designed to weaken this assumption was introduced by Tseng [13]. This method is called the Tseng splitting algorithm, and it is also known as the forward–backward–forward method. Precisely, this method is defined as follows:
$$\begin{cases} y_n = (I + \lambda_n E)^{-1}(I - \lambda_n D)x_n, \\ x_{n+1} = y_n - \lambda_n (D y_n - D x_n), \end{cases} \tag{4}$$

where $\lambda_n > 0$ is the step size, which can be updated automatically by an Armijo-type line-search technique. Tseng proved the weak convergence of method (4) when the operator $D$ is monotone and Lipschitz continuous and the operator $E$ is maximal monotone. In [14], Zhang and Wang combined the FBA (3) with the projection and contraction method to obtain an iterative method that also overcomes the limitations of the FBA. Precisely, this method is defined as follows:

where , , ; is a control sequence, operator D is monotone–Lipschitz continuous, and E denotes the maximal monotone operator.
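To see why Tseng's correction step matters when $D$ is only monotone and Lipschitz continuous, consider the following toy example (our own illustration, not taken from [13] or [14]): $D(x) = Mx$ with $M = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix}$, which is monotone and 1-Lipschitz but not inverse strongly monotone, and $E = N_C$, the normal cone of the box $C = [-1, 1]^2$, whose resolvent is the projection onto $C$. The unique zero of $D + E$ is the origin. The Python sketch below runs iteration (3) and iteration (4) with a fixed step; the operator, box, step size $\lambda = 0.5$, starting point, and iteration count are all illustrative assumptions.

```python
import math

def D(x):
    """Monotone, 1-Lipschitz rotation D(x) = Mx with M = [[0, 1], [-1, 0]]."""
    return (x[1], -x[0])

def resolvent(x):
    """Resolvent of E = N_C for C = [-1, 1]^2, i.e. the projection onto the box."""
    return tuple(min(max(t, -1.0), 1.0) for t in x)

def forward_backward(x, lam, iters):
    """Iteration (3): x <- J(x - lam * D(x))."""
    for _ in range(iters):
        dx = D(x)
        x = resolvent(tuple(xi - lam * di for xi, di in zip(x, dx)))
    return x

def tseng(x, lam, iters):
    """Iteration (4): forward-backward step, then a second (correcting) forward step."""
    for _ in range(iters):
        dx = D(x)
        y = resolvent(tuple(xi - lam * di for xi, di in zip(x, dx)))
        dy = D(y)
        x = tuple(yi - lam * (a - b) for yi, a, b in zip(y, dy, dx))
    return x

x0, lam = (0.5, 0.5), 0.5
print(math.hypot(*forward_backward(x0, lam, 200)))  # at least 1: no convergence to 0
print(math.hypot(*tseng(x0, lam, 200)))             # close to 0
```

Near the origin the projection is inactive, so (3) multiplies $\|x\|$ by $\sqrt{1 + \lambda^2}$ at every step, whereas (4) multiplies it by $\sqrt{1 - \lambda^2 + \lambda^4} < 1$ for $\lambda \in (0, 1)$.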

It is important to note that algorithms (4) and (5) converge only weakly in infinite dimensional spaces. However, in applications such as machine learning and CT reconstruction, strong convergence is more desirable in infinite dimensional spaces [12]. Therefore, it is necessary to modify (3) so that it achieves strong convergence in real Hilbert spaces. In the last two decades, many modifications of the forward–backward method have been constructed to obtain strong convergence results in real Hilbert spaces; see [11,12,15,16] and the references therein.

In recent years, the construction of inertial-based algorithms has attracted massive interest from researchers. The idea of including inertial terms in iterative methods for solving optimization problems was initiated by Polyak [17], and numerous authors have confirmed that the inclusion of an inertial term boosts the convergence speed of a method. A common feature of inertial-type algorithms is that the next iterate depends on a combination of the two previous iterates; for more details, see [3,18,19]. Many inertial-type algorithms have been studied, and numerical tests have demonstrated that the inertial effects greatly improve the performance of these methods; see [1,3,20]. Recently, Lorenz and Pock [27] introduced and studied the following inertial FBA to solve the MIP (2):
$$\begin{cases} w_n = x_n + \theta_n (x_n - x_{n-1}), \\ x_{n+1} = (I + \lambda_n E)^{-1}(w_n - \lambda_n D w_n). \end{cases} \tag{6}$$

Note that method (6) converges only weakly in real Hilbert spaces; nevertheless, numerical tests by the authors showed that their method outperforms several existing methods without inertial terms.
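The effect of the inertial extrapolation $w_n = x_n + \theta(x_n - x_{n-1})$ can be observed on a small convex problem. The Python sketch below is our own illustration with a constant inertial parameter $\theta$, not the parameter rules of [27]: it applies the forward–backward step to $0 \in (Qx - b) + \mu\,\partial\|x\|_1$ with $Q = \mathrm{diag}(q)$, whose backward step is componentwise soft-thresholding, and $\theta = 0$ recovers the plain method (3). The data $q$, $b$, $\mu$, the step $\lambda = 1$, $\theta = 0.3$, and the stopping tolerance are assumptions chosen for illustration; the exact solution here is $x^*_i = \mathrm{sign}(b_i)\max(|b_i| - \mu, 0)/q_i = (0.9, 90)$.

```python
import math

def soft_threshold(v, t):
    """Resolvent of t * d||.||_1: componentwise shrinkage toward zero."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]

def inertial_fb(q, b, mu, lam, theta, tol=1e-8, max_iter=10_000):
    """Inertial forward-backward iteration for 0 in (Qx - b) + mu * d||x||_1, Q = diag(q).

    theta = 0 gives the plain forward-backward method (3).
    Returns the final iterate and the number of iterations used.
    """
    x_prev = x = [0.0] * len(q)
    for n in range(1, max_iter + 1):
        w = [xi + theta * (xi - pi) for xi, pi in zip(x, x_prev)]    # inertial extrapolation
        forward = [wi - lam * (qi * wi - bi) for wi, qi, bi in zip(w, q, b)]
        x_prev, x = x, soft_threshold(forward, lam * mu)            # backward (resolvent) step
        if math.dist(x, x_prev) <= tol:
            return x, n
    return x, max_iter

q, b, mu = [1.0, 0.01], [1.0, 1.0], 0.1          # ill-conditioned illustrative data
x_plain, n_plain = inertial_fb(q, b, mu, lam=1.0, theta=0.0)
x_inert, n_inert = inertial_fb(q, b, mu, lam=1.0, theta=0.3)
print(n_plain, n_inert)  # the inertial run needs fewer iterations on this instance
```

On this instance the slow component contracts by a factor of about 0.99 per step without inertia and by about 0.986 with $\theta = 0.3$; such a gain is not guaranteed for every problem or every choice of $\theta$.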

Several mathematical problems, such as variational inequality problems, equilibrium problems, split feasibility problems, and split minimization problems, are special cases of the MIP. These problems have been applied to solve diverse real-world problems, such as modeling inverse problems arising from phase retrieval, intensity-modulated radiation therapy planning, sensor networks, computerized tomography and data compression, optimal control problems, and image/signal processing problems [21,22,23].

The bilevel programming problem is a constrained optimization problem in which the constraint set is the solution set of another optimization problem. This problem has many applications, such as modeling Stackelberg games, the convex feasibility problem, the determination of Wardrop equilibria for network flow, domain decomposition methods for PDEs, optimal control problems, and image/signal processing problems [23]. When the upper-level problem is a VIP and the lower-level problem is the fixed point set of a mapping, the bilevel problem is known as the hierarchical variational inequality problem. In [26], Yamada introduced the following method, called the hybrid steepest-descent iterative method, to solve the hierarchical VIP:
$$x_{n+1} = T x_n - \mu \lambda_n F(T x_n), \quad n \ge 0, \tag{7}$$

where $F: H \to H$ is a strongly monotone and Lipschitz continuous operator and $T: H \to H$ is a nonexpansive mapping.
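As a concrete instance of the hybrid steepest-descent iteration $x_{n+1} = Tx_n - \mu\lambda_n F(Tx_n)$, take $H = \mathbb{R}^2$, $T = P_C$, the projection onto the box $C = [0, 1]^2$ (so $\mathrm{Fix}(T) = C$), and $F(x) = x - a$, which is 1-strongly monotone and 1-Lipschitz continuous. The solution of the hierarchical VIP is then $P_C(a)$. The Python sketch below uses the illustrative choices $a = (2, -1)$, $\mu = 1$ (inside $(0, 2\eta/\kappa^2) = (0, 2)$), and $\lambda_n = 1/(n + 2)$, which satisfies $\lambda_n \to 0$ and $\sum \lambda_n = \infty$; none of these choices comes from [26].

```python
import math

def T(x):
    """Nonexpansive mapping: projection onto C = [0, 1]^2, so Fix(T) = C."""
    return tuple(min(max(t, 0.0), 1.0) for t in x)

def F(x, a=(2.0, -1.0)):
    """F(x) = x - a: 1-strongly monotone and 1-Lipschitz continuous."""
    return tuple(xi - ai for xi, ai in zip(x, a))

def hybrid_steepest_descent(x, mu=1.0, iters=1000):
    """x_{n+1} = T x_n - mu * lam_n * F(T x_n) with lam_n = 1 / (n + 2)."""
    for n in range(iters):
        lam = 1.0 / (n + 2)
        tx = T(x)
        x = tuple(ti - mu * lam * fi for ti, fi in zip(tx, F(tx)))
    return x

x = hybrid_steepest_descent((0.5, 0.5))
print(x, T(x))  # x is about (1.001, -0.001); T(x) = (1.0, 0.0) = P_C(a)
```

After the first step, $Tx_n = (1, 0)$ and $x_{n+1} = (1 + \lambda_n, -\lambda_n)$, so the iterates approach $P_C(a)$ at the rate $O(\lambda_n)$.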

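In this paper, we consider the problem of solving a VIP over the solution set of the MIP in a real Hilbert space. This problem is formulated as follows: Find $x^* \in \Omega$ such that
$$\langle F x^*, x - x^* \rangle \ge 0, \quad \forall x \in \Omega, \tag{8}$$

where $\Omega = (D + E)^{-1}(0)$ is the solution set of the MIP (2), $F: H \to H$ is a strongly monotone and Lipschitz continuous operator, $D: H \to H$ is a monotone and Lipschitz continuous operator, and $E: H \to 2^H$ is a maximal monotone operator.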

Inspired by the inertial technique, the Tseng splitting algorithm, the projection and contraction method, and the hybrid steepest-descent method, we introduce two efficient iterative algorithms to solve problem (8). We prove the strong convergence of the suggested methods under some standard assumptions on the control parameters. Moreover, strong convergence is achieved without prior knowledge of the operator norm; instead, the step sizes are updated self-adaptively. Furthermore, we apply our main results to the bilevel variational inequality problem, the convex minimization problem, and the image recovery problem. We conduct numerical experiments to show the practicability, applicability, and efficiency of our methods. Our results improve, generalize, and unify the results presented in [4,12,13,27], as well as several others in the literature.

This article is organized as follows: In Section 2, we present some established definitions and lemmas that will be useful in deriving our main results. In Section 3, we present the proposed method and establish its convergence analysis. In Section 4, we show the applications of our main results to real-world problems. In Section 5, several numerical tests are carried out in finite and infinite dimensional spaces to demonstrate the computational efficiency of the proposed methods. Lastly, in Section 6, a summary of the obtained results is given.

2. Preliminaries

Let $C$ be a nonempty, closed, and convex subset of a real Hilbert space $H$. We denote the weak and strong convergence of a sequence $\{x_n\}$ to $p$ by $x_n \rightharpoonup p$ and $x_n \to p$, respectively. For every point $x \in H$, there exists a unique nearest point in $C$, denoted by $P_C x$, such that $\|x - P_C x\| \le \|x - y\|$ for all $y \in C$. The mapping $P_C$ is called the metric projection of $H$ onto $C$, and it is known to be nonexpansive.

Lemma 1

([28]). Let $H$ be a real Hilbert space and $C$ a nonempty closed convex subset of $H$. Suppose $x \in H$ and $z \in C$. Then $z = P_C x \iff \langle x - z, z - y \rangle \ge 0$ for all $y \in C$.
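The characterization of the metric projection, $z = P_C x \iff \langle x - z, z - y\rangle \ge 0$ for all $y \in C$, can be checked numerically for a set whose projection has a closed form. The Python sketch below is an illustration with an arbitrarily chosen closed ball $C = B(c, r) \subset \mathbb{R}^3$ and random sample points (all our assumptions); it computes $P_C x$ and records the smallest observed value of $\langle x - P_C x, P_C x - y\rangle$ over sampled $y \in C$.

```python
import math
import random

def project_ball(x, center, radius):
    """Metric projection onto the closed ball B(center, radius)."""
    d = [xi - ci for xi, ci in zip(x, center)]
    norm = math.sqrt(sum(t * t for t in d))
    if norm <= radius:
        return list(x)
    return [ci + radius * t / norm for ci, t in zip(center, d)]

def random_point_in_ball(rng, center, radius):
    """Draw a point of B(center, radius) by rejection sampling from the enclosing cube."""
    while True:
        p = [ci + rng.uniform(-radius, radius) for ci in center]
        if math.dist(p, center) <= radius:
            return p

def check_lemma1(trials=1000, seed=0):
    """Smallest value of <x - P_C x, P_C x - y> over random x in R^3 and y in C."""
    rng = random.Random(seed)
    center, radius = [1.0, -2.0, 0.5], 1.5
    worst = math.inf
    for _ in range(trials):
        x = [rng.uniform(-5, 5) for _ in range(3)]
        z = project_ball(x, center, radius)
        y = random_point_in_ball(rng, center, radius)
        inner = sum((xi - zi) * (zi - yi) for xi, zi, yi in zip(x, z, y))
        worst = min(worst, inner)
    return worst

print(check_lemma1() >= -1e-9)  # True: the inequality holds up to rounding
```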

Lemma 2

([28]). Let be a real Hilbert space. Then for every and , we have

- (i)

- ;

- (ii)

- ;

- (iii)

- .

Lemma 3

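([29]). Let $\{a_n\}$ be a sequence of non-negative real numbers such that
$$a_{n+1} \le (1 - \alpha_n) a_n + \alpha_n b_n, \quad n \ge 1,$$

where $\{\alpha_n\} \subset (0, 1)$ with $\sum_{n=1}^{\infty} \alpha_n = \infty$ and $\{b_n\}$ is a sequence of real numbers. If $\limsup_{k \to \infty} b_{n_k} \le 0$ for every subsequence $\{a_{n_k}\}$ of $\{a_n\}$ satisfying the following inequality:
$$\liminf_{k \to \infty} \left(a_{n_k + 1} - a_{n_k}\right) \ge 0,$$

then $\lim_{n \to \infty} a_n = 0$.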

Definition 1.

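Let $H$ be a real Hilbert space and $F: H \to H$ be a mapping. Then, $F$ is called

- (1) L-Lipschitz continuous, if there exists $L > 0$ such that $\|Fx - Fy\| \le L \|x - y\|$ for all $x, y \in H$. If $L \in [0, 1)$, then $F$ is a contraction.

- (2) η-strongly monotone, if there exists a constant $\eta > 0$ such that $\langle Fx - Fy, x - y \rangle \ge \eta \|x - y\|^2$ for all $x, y \in H$.

- (3) η-inverse strongly monotone (η-co-coercive), if there exists a constant $\eta > 0$ such that $\langle Fx - Fy, x - y \rangle \ge \eta \|Fx - Fy\|^2$ for all $x, y \in H$.

- (4) Monotone, if $\langle Fx - Fy, x - y \rangle \ge 0$ for all $x, y \in H$.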

Definition 2.

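Let $E: H \to 2^H$ be a multi-valued operator. Then

- (a) The graph of E is defined by $\mathrm{Gr}(E) = \{(x, u) \in H \times H : u \in Ex\}$.

- (b) Operator E is said to be monotone if $\langle u - v, x - y \rangle \ge 0$ for all $(x, u), (y, v) \in \mathrm{Gr}(E)$.

- (c) Operator E is said to be maximal monotone if E is monotone and its graph is not a proper subset of the graph of any other monotone operator.

- (d) For all $\lambda > 0$, the resolvent of E is the mapping $J_\lambda^E$ defined by $J_\lambda^E(x) = (I + \lambda E)^{-1}(x)$, where I is the identity operator on $H$; when E is maximal monotone, $J_\lambda^E$ is single-valued and defined on all of $H$.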
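For a concrete instance of the resolvent in (d), take $H = \mathbb{R}$ and $E = \partial|\cdot|$, the subdifferential of the absolute value, which is maximal monotone. Its resolvent is the soft-thresholding map $J_\lambda^E(x) = \mathrm{sign}(x)\max(|x| - \lambda, 0)$. The Python sketch below (our illustration, with arbitrarily chosen test points) evaluates this map and checks the defining inclusion $x \in y + \lambda\,\partial|y|$ for $y = J_\lambda^E(x)$.

```python
import math

def resolvent_abs(x, lam):
    """Resolvent (I + lam * d|.|)^{-1}(x) on the real line: soft-thresholding."""
    return math.copysign(max(abs(x) - lam, 0.0), x)

def in_subdifferential_abs(g, y, tol=1e-12):
    """Is g in d|y|?  d|y| = {sign(y)} for y != 0 and [-1, 1] for y = 0."""
    if y == 0.0:
        return -1.0 - tol <= g <= 1.0 + tol
    return abs(g - math.copysign(1.0, y)) <= tol

lam = 0.5
for x in [-2.0, -0.3, 0.0, 0.4, 3.0]:
    y = resolvent_abs(x, lam)
    # y = J(x) exactly when (x - y) / lam belongs to d|y|
    print(x, y, in_subdifferential_abs((x - y) / lam, y))  # last column is True
```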

Lemma 4

([30]). Let $E: H \to 2^H$ be a maximal monotone mapping and $D: H \to H$ be a monotone and L-Lipschitz continuous operator. Then, the mapping $D + E$ is a maximal monotone mapping.

Lemma 5

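([31]). Suppose that $\lambda \in (0, 1)$, $\mu > 0$, and $F: H \to H$ is η-strongly monotone and κ-Lipschitz continuous. Then, for any nonexpansive mapping $T: H \to H$, we can associate a mapping $T^\lambda: H \to H$ defined by $T^\lambda x = Tx - \lambda \mu F(Tx)$. Then, $T^\lambda$ is a contraction provided $0 < \mu < \frac{2\eta}{\kappa^2}$; that is,
$$\|T^\lambda x - T^\lambda y\| \le (1 - \lambda \tau) \|x - y\|, \quad \forall x, y \in H,$$

where $\tau = 1 - \sqrt{1 - \mu(2\eta - \mu\kappa^2)} \in (0, 1]$.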
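The contraction factor $1 - \lambda\tau$ in Lemma 5 can be checked numerically. The Python sketch below uses illustrative choices that are our own assumptions: $F(x) = Ax$ with $A = \mathrm{diag}(1, 2)$ on $\mathbb{R}^2$, which is η-strongly monotone with $\eta = 1$ and κ-Lipschitz continuous with $\kappa = 2$; $\mu = 0.4 < 2\eta/\kappa^2 = 0.5$; $\lambda = 0.5$; and $T$ the projection onto the closed unit disc. It computes $\tau = 1 - \sqrt{1 - \mu(2\eta - \mu\kappa^2)}$ and compares the ratio $\|T^\lambda x - T^\lambda y\| / \|x - y\|$ on random pairs with $1 - \lambda\tau$.

```python
import math
import random

ETA, KAPPA, MU, LAM = 1.0, 2.0, 0.4, 0.5
A_DIAG = (1.0, 2.0)  # F(x) = A x with A = diag(1, 2): eta = 1, kappa = 2

def T(x):
    """Nonexpansive mapping: projection onto the closed unit disc."""
    n = math.hypot(*x)
    return x if n <= 1.0 else (x[0] / n, x[1] / n)

def T_lam(x):
    """T^lambda x = T x - lambda * mu * F(T x)."""
    tx = T(x)
    return tuple(t - LAM * MU * a * t for t, a in zip(tx, A_DIAG))

tau = 1.0 - math.sqrt(1.0 - MU * (2.0 * ETA - MU * KAPPA ** 2))
bound = 1.0 - LAM * tau

rng = random.Random(1)
worst = 0.0  # largest observed Lipschitz ratio of T^lambda
for _ in range(10_000):
    x = (rng.uniform(-3, 3), rng.uniform(-3, 3))
    y = (rng.uniform(-3, 3), rng.uniform(-3, 3))
    d = math.dist(x, y)
    if d > 1e-12:
        worst = max(worst, math.dist(T_lam(x), T_lam(y)) / d)

print(round(tau, 4), round(bound, 4), worst <= bound)  # worst <= bound holds
```

For this linear $F$ the observed ratio never exceeds $\max_i |1 - \lambda\mu a_i| = 0.8$, which is well below the guaranteed bound; the lemma gives a worst-case estimate over all admissible $F$.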

3. Main Results

In this section, we construct two methods for approximating the solution of the variational inequality problem over the solution set of the monotone inclusion problem. We establish the strong convergence results of the methods in the settings of real Hilbert spaces. The following assumptions will be useful in achieving our main results:

Assumption 1.

- (A1) Operator $D: H \to H$ is monotone and $L$-Lipschitz continuous, and $E: H \to 2^H$ is a maximal monotone operator.

- (A2) The solution set, denoted by $\Omega = (D + E)^{-1}(0)$, is nonempty.

- (A3) $F: H \to H$ is η-strongly monotone and κ-Lipschitz continuous.

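- (A4) $\{\alpha_n\} \subset (0, 1)$, such that $\lim_{n \to \infty} \alpha_n = 0$ and $\sum_{n=1}^{\infty} \alpha_n = \infty$. The positive sequence $\{\epsilon_n\}$ satisfies $\lim_{n \to \infty} \frac{\epsilon_n}{\alpha_n} = 0$.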

- (A5) Let , with , with , and with .

Remark 1.

From (9) and assumption , it is not hard to see that

Remark 2.

The step size (16) contains, as special cases, some well-known step sizes considered in [12,18,32] and many others.

Lemma 6.

Assume that Assumption 1 holds and let be the sequence generated by Algorithm 1. Then, defined by (16) is well-defined, and .

Proof.

The proof of this lemma is similar to that in [33], so we omit it here. □

Algorithm 1. A modified accelerated projection and contraction method.

Lemma 7.

If assumption is satisfied, then there exists a positive integer K such that

Proof.

Since and , there exists a positive integer such that

For , and , we have

and this means that there exists a positive integer such that

Setting , we obtain that

□

Lemma 8.

Suppose that Assumption 1 holds and is the sequence generated from Algorithm 1. Then, for , the following inequality holds:

Proof.

Since , we have that . Since , it follows that

Now, due to the maximal monotonicity of E, we have that

Thus,

From (13), it follows that

By the monotonicity of D, it follows that

By (19), it follows that

Lemma 9.

Let and be sequences generated by Algorithm 1. Let and be subsequences of and , respectively. If and , then .

Proof.

The proof is similar to that of Lemma 7 in [5]. Thus, we omit it here. □

Lemma 10.

Let be the sequence generated by Algorithm 1. Then, is bounded.

Proof.

Let . From (10) and Assumption 1, we have , , and this implies that

It follows from (22) that there exists such that

This implies that is bounded. Consequently, we have that and are also bounded sequences.

Theorem 1.

Suppose that Assumption 1 holds and is the sequence defined by Algorithm 1. Then, converges strongly to the unique solution of problem (8).

Proof.

for some .

The proof of the theorem will be divided into three steps.

- Claim 1:

Indeed, by (15), Lemma 2, and Lemma 5, we have

for some . By (24), we have

for some . Now, using (21), (29), and (30), we have

From (31), it follows that

for some .

- Claim 2:

- Claim 3: The sequence converges to zero. For this, recalling Lemma 3 and Remark 1, it suffices to show that for every subsequence of satisfying

Now, we assume that is a subsequence of , such that (35) holds. Then

Owing to Claim 1, and , we have

Consequently, we have

By (15), we have

By Lemma 7, a positive integer K exists such that , for all . Now, if , then by the Lipschitz continuity of D, (12)–(14), and (40), we have that

This implies that

By Lemma 6, we have that , and this implies that . Furthermore, by assumption , we have that and . Due to (36) and (42), we have that

Next, if , then due to (14), we know that also holds. Now, by the boundedness of , there exists a subsequence of , which is weakly convergent to some ; furthermore,

From (38), we have that as . This implies from Lemma 9 and (43) that . By (44) and the assumption that is the unique solution to problem (8), we have

Using Remark 1 and (46), we obtain

Thus, from Claim 2, assumption , (47), and Lemma 3, it follows that , as required. □

Next, we present the second method in Algorithm 2.

Algorithm 2. A modified accelerated Tseng splitting method.
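The ingredients combined in the proposed methods (an inertial extrapolation, a forward–backward–forward step with a self-adaptive step size, and a hybrid steepest-descent step driven by the strongly monotone operator of problem (8), written F here) can be illustrated with the following Python sketch. It is a hypothetical, simplified scheme written only for illustration; it is not Algorithm 2, and its parameter rules are our own assumptions in the spirit of Assumption 1: $\theta_n = \min\{\theta, \epsilon_n/\|x_n - x_{n-1}\|\}$ with $\epsilon_n = 1/(n+1)^2$, $\alpha_n = 1/(n+2)$, and $\lambda_{n+1} = \min\{\lambda_n, \nu\|w_n - y_n\|/\|Dw_n - Dy_n\|\}$. It is applied to toy data: $D(x) = Mx$ (a rotation, monotone and 1-Lipschitz), $E = N_C$ with $C = [-1, 1]^2$, and $F(x) = x - a$ with $a = (1, 1)$. Since the origin is the only zero of $D + E$, it is the solution of (8) here.

```python
import math

def D(x):
    """Monotone, 1-Lipschitz rotation D(x) = Mx, M = [[0, 1], [-1, 0]]."""
    return (x[1], -x[0])

def J(x):
    """Resolvent of E = N_C for C = [-1, 1]^2, i.e. the projection onto C."""
    return tuple(min(max(t, -1.0), 1.0) for t in x)

def F(x, a=(1.0, 1.0)):
    """Upper-level operator F(x) = x - a: 1-strongly monotone, 1-Lipschitz."""
    return tuple(xi - ai for xi, ai in zip(x, a))

def sub(u, v):
    return tuple(ui - vi for ui, vi in zip(u, v))

def norm(u):
    return math.hypot(*u)

def sketch(x0, iters=20_000, theta=0.3, lam=0.5, nu=0.5, mu=1.0):
    """Hypothetical inertial Tseng + hybrid steepest-descent scheme (not Algorithm 2)."""
    x_prev, x = x0, x0
    for n in range(iters):
        eps, alpha = 1.0 / (n + 1) ** 2, 1.0 / (n + 2)
        step = sub(x, x_prev)
        th = theta if norm(step) == 0.0 else min(theta, eps / norm(step))
        w = tuple(xi + th * si for xi, si in zip(x, step))                 # inertial extrapolation
        dw = D(w)
        y = J(tuple(wi - lam * gi for wi, gi in zip(w, dw)))                # forward-backward step
        dy = D(y)
        z = tuple(yi - lam * (a - b) for yi, a, b in zip(y, dy, dw))       # Tseng correction step
        x_prev, x = x, tuple(zi - alpha * mu * fi for zi, fi in zip(z, F(z)))  # steepest-descent step
        gap = norm(sub(dw, dy))
        if gap > 0.0:
            lam = min(lam, nu * norm(sub(w, y)) / gap)                      # self-adaptive step size
    return x

print(norm(sketch((0.5, 0.5))))  # small: the iterates approach the solution 0 of (8)
```

The steepest-descent step pulls each iterate toward the minimizer of the upper-level problem with a vanishing weight $\alpha_n$, so on this example the distance to the solution decays roughly like $O(\alpha_n)$ rather than linearly.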

Remark 3.

From (48), and Assumption 1 , we observe that

Lemma 11.

If assumption is satisfied, then there exists a positive integer K such that

Proof.

The proof is similar to the proof of Lemma 7. □

Lemma 12.

Suppose Assumption 1 holds and is the sequence defined by Algorithm 2. Then, for all , we have the following inequality:

Proof.

From the definition of , it is obvious that

Observe that (55) holds if . If , we have

this implies that Thus, we have that (55) holds for and . Now, let . Then, from Lemma 2 and (55), we have

Next, we show that

From (50), we have that . Due to the maximal monotonicity of E, we know that there exists , such that

which means that

From the definition of , we have . Using the fact that and that is a maximal monotone operator, we obtain

Lemma 13.

Let and be sequences generated by Algorithm 2. Let and be subsequences of and , respectively. If and , then .

Proof.

The proof is similar to the proof of Lemma 9. □

Lemma 14.

Let be the sequence generated by Algorithm 2. Then, is bounded.

Proof.

Now, due to the boundedness of and Lemma 11, there exists , such that , for all . This, together with (61), yields

The remaining part of the proof is similar to that of Lemma 10. Therefore, we omit it here and this completes the proof of the Lemma. □

Theorem 2.

Suppose that Assumption 1 holds and is the sequence defined by Algorithm 2. Then, converges strongly to the unique solution of problem (8).

Proof.

The proof of the theorem will be divided into three steps.

- Claim (i):

From (63), it follows that

- Claim (ii): for some .

The proof of Claim (ii) is similar to that of Claim 2 of Theorem 1. Therefore, we omit it here.

- Claim (iii): The sequence converges to zero. For this, recalling Lemma 3 and Remark 3, it suffices to show that for every subsequence of satisfying

Now, we assume that is a subsequence of , such that (65) holds. Then

Owing to Claim (i), and and the boundedness of , we have

Consequently, we have

Again, by (52), we have

The remaining part of the proof is similar to that of Claim 3 of Theorem 1. Hence, we omit it here, and this completes the proof of the theorem. □

4. Applications

In this section, we consider the applications of our methods to the bilevel variational inequality problem, convex minimization problem, and image recovery problem.

4.1. Application to the Bilevel Variational Inequality Problem

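Let $H$ be a real Hilbert space and $C$ be a nonempty closed convex subset of $H$. Let $F, D: H \to H$ be two single-valued operators. Then, the bilevel variational inequality problem (BVIP) is formulated as follows: Find $x^* \in VI(C, D)$ such that
$$\langle F x^*, x - x^* \rangle \ge 0, \quad \forall x \in VI(C, D), \tag{71}$$

where $VI(C, D)$ denotes the solution set of the variational inequality problem (VIP): Find $x^* \in C$ such that
$$\langle D x^*, y - x^* \rangle \ge 0, \quad \forall y \in C. \tag{72}$$

Assume that . Then, the VIP (72) is equivalent to the following inclusion problem: Find $x^* \in H$ such that
$$0 \in D x^* + \partial i_C(x^*), \tag{73}$$

where $\partial i_C$ is the sub-differential of the indicator function $i_C$ of $C$, and it is a maximal monotone operator [34]. In this case, with $E = \partial i_C$, according to [35], the resolvent of E is the metric projection $P_C$; that is, $J_\lambda^E = P_C$ for all $\lambda > 0$. Thus, the following corollaries follow immediately from Theorem 1 and Theorem 2, respectively: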
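Because the resolvent of $\partial i_C$ is $P_C$, a point $x$ solves a VIP of the form (72) exactly when $x = P_C(x - \lambda Dx)$ for some (equivalently, every) $\lambda > 0$; this follows from the projection characterization $z = P_C u \iff \langle u - z, z - y\rangle \ge 0$ for all $y \in C$, applied with $u = x - \lambda Dx$ and $z = x$. The Python sketch below evaluates the corresponding natural residual $\|x - P_C(x - \lambda Dx)\|$ for an illustrative choice (our assumption): $C = [0, 1]^2$ and $D(x) = x - a$ with $a = (2, -1)$, whose unique solution is $P_C(a) = (1, 0)$.

```python
import math

def project_box(x, lo=0.0, hi=1.0):
    """Metric projection onto the box [lo, hi]^n."""
    return tuple(min(max(t, lo), hi) for t in x)

def D(x, a=(2.0, -1.0)):
    """Illustrative monotone operator D(x) = x - a."""
    return tuple(xi - ai for xi, ai in zip(x, a))

def natural_residual(x, lam=1.0):
    """||x - P_C(x - lam * D(x))||, which vanishes exactly at solutions of the VIP."""
    step = tuple(xi - lam * di for xi, di in zip(x, D(x)))
    return math.dist(x, project_box(step))

for x in [(1.0, 0.0), (0.5, 0.5), (1.0, 1.0)]:
    print(x, natural_residual(x))  # the residual is 0.0 only at (1.0, 0.0)
```

In practice the same residual is a convenient stopping criterion, since it is computable without knowing the solution.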

Corollary 1.

Let be a real Hilbert space and a nonempty closed convex subset of ; let be a monotone and L-Lipschitz continuous operator, be a strongly monotone and Lipschitz continuous operator, and be a maximal monotone operator. Assume that and that assumptions – hold. If , let a sequence be generated by Algorithm 3:

Algorithm 3. A modified accelerated projection and contraction method.

Then, the sequence converges strongly to the unique solution of the BVIP (71).

Corollary 2.

Let be a real Hilbert space and be a nonempty closed convex subset of ; let be a monotone and L-Lipschitz continuous operator, be a strongly monotone and Lipschitz continuous operator, and be a maximal monotone operator. Assume that and that assumptions – hold. If , let a sequence be generated by Algorithm 4:

Algorithm 4. A modified accelerated Tseng splitting method.

Then, the sequence converges strongly to the unique solution of the BVIP (71).

4.2. Application to the Convex Minimization Problem

Let $\varphi: H \to \mathbb{R}$ be a convex differentiable function and $\psi: H \to (-\infty, +\infty]$ be a proper, lower semi-continuous, and convex function. Then, the convex minimization problem (CMP) is formulated as follows:
$$\min_{x \in H} \{\varphi(x) + \psi(x)\}. \tag{74}$$

It is well-known that problem (74) is a special case of the MIP; that is, it is equivalent to the problem: find $x^* \in H$ such that $0 \in \nabla\varphi(x^*) + \partial\psi(x^*)$. It is a known fact that if $\nabla\varphi$ is $L$-Lipschitz continuous, then it is $\frac{1}{L}$-inverse strongly monotone, and $\partial\psi$ is a maximal monotone operator. The solution set of the CMP (74) is denoted by . In Theorems 1 and 2, we set $D = \nabla\varphi$, $E = \partial\psi$, and $F = I - f$, where $f$ is a γ-contraction mapping. It is not hard to see that $F$ is $(1 + \gamma)$-Lipschitz continuous and $(1 - \gamma)$-strongly monotone. Consequently, if we take , then we obtain the following corollaries from Theorems 1 and 2, respectively.
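The constants for $F = I - f$ with a γ-contraction $f$ follow from $\langle Fx - Fy, x - y\rangle = \|x - y\|^2 - \langle fx - fy, x - y\rangle \ge (1 - \gamma)\|x - y\|^2$ and $\|Fx - Fy\| \le \|x - y\| + \|fx - fy\| \le (1 + \gamma)\|x - y\|$. The Python sketch below checks both bounds on random pairs for an illustrative contraction $f(x) = \gamma Rx + c$, where $R$ is a rotation of $\mathbb{R}^2$; the values of γ, the angle, and the shift $c$ are our assumptions.

```python
import math
import random

GAMMA, ANGLE, SHIFT = 0.6, 0.7, (0.3, -1.2)

def f(x):
    """gamma-contraction: scaled rotation plus a shift."""
    c, s = math.cos(ANGLE), math.sin(ANGLE)
    return (GAMMA * (c * x[0] - s * x[1]) + SHIFT[0],
            GAMMA * (s * x[0] + c * x[1]) + SHIFT[1])

def F(x):
    """F = I - f."""
    fx = f(x)
    return (x[0] - fx[0], x[1] - fx[1])

rng = random.Random(2)
min_mono, max_lip = math.inf, 0.0
for _ in range(10_000):
    x = (rng.uniform(-5, 5), rng.uniform(-5, 5))
    y = (rng.uniform(-5, 5), rng.uniform(-5, 5))
    d2 = (x[0] - y[0]) ** 2 + (x[1] - y[1]) ** 2
    if d2 < 1e-12:
        continue
    Fx, Fy = F(x), F(y)
    dF = (Fx[0] - Fy[0], Fx[1] - Fy[1])
    # strong monotonicity ratio <Fx - Fy, x - y> / ||x - y||^2
    min_mono = min(min_mono, (dF[0] * (x[0] - y[0]) + dF[1] * (x[1] - y[1])) / d2)
    # Lipschitz ratio ||Fx - Fy|| / ||x - y||
    max_lip = max(max_lip, math.hypot(*dF) / math.sqrt(d2))

print(min_mono >= 1 - GAMMA - 1e-12, max_lip <= 1 + GAMMA + 1e-12)  # True True
```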

Corollary 3.

Let be a real Hilbert space, be an L-Lipschitz continuous operator, and be a maximal monotone operator. Assume that and that assumptions – hold. If , let a sequence be generated by Algorithm 5:

Algorithm 5. A modified accelerated projection and contraction method.

Then, the sequence converges strongly to an element in .

Corollary 4.

Let be a real Hilbert space, be an L-Lipschitz continuous operator, and be a maximal monotone operator. Assume that and that assumptions – hold. If , let a sequence be generated by Algorithm 6:

Algorithm 6. A modified accelerated Tseng splitting method.

Then, the sequence converges strongly to an element in .

4.3. Application to the Image Restoration Problem

The general image recovery problem can be formulated by the inversion of the observation model defined by
$$z = Dx + h, \tag{75}$$

where h is the unknown additive random noise, x is the unknown original image, D is the linear operator involved in the considered image recovery problem, and z is the known degraded observation. Model (75) can be cast as several different types of optimization problems. In recent years, the norm has been widely used by many authors in these kinds of problems. The regularization, which can be employed to remove noise in the recovery process, is defined by

where , , D is a matrix and . Next, we use various algorithms, as listed above, to find the solution to the following CMP:

where D is an operator representing the mask and g is the degraded image.
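In the common ℓ1-regularized form of this model, $\min_x \tfrac{1}{2}\|Dx - z\|^2 + \kappa\|x\|_1$, a forward–backward step is a gradient step on the quadratic term, with gradient $D^\top(Dx - z)$, followed by soft-thresholding. The Python sketch below is a minimal 1-D illustration under our own assumptions: the ℓ1 model itself, a circular three-point moving-average blur (symmetric, with $\|D\| = 1$), a noise-free blurred observation $z$, $\kappa = 0.01$, step $\lambda = 1 = 1/\|D\|^2$, and the plain forward–backward iteration rather than Algorithms 5 and 6. It also computes the SNR with the common definition $20\log_{10}(\|p\|/\|p - x_r\|)$, which we assume matches the one used in this section.

```python
import math

def blur(x):
    """Circular 3-point moving average; symmetric, so it is its own adjoint."""
    n = len(x)
    return [(x[i - 1] + x[i] + x[(i + 1) % n]) / 3.0 for i in range(n)]

def soft(v, t):
    """Componentwise soft-thresholding (resolvent of t * d||.||_1)."""
    return [math.copysign(max(abs(vi) - t, 0.0), vi) for vi in v]

def objective(x, z, kappa):
    r = [bi - zi for bi, zi in zip(blur(x), z)]
    return 0.5 * sum(t * t for t in r) + kappa * sum(abs(t) for t in x)

def forward_backward(z, kappa, lam=1.0, iters=300):
    """x <- soft(x - lam * D^T(Dx - z), lam * kappa), started from the observation z."""
    x = list(z)
    history = [objective(x, z, kappa)]
    for _ in range(iters):
        grad = blur([bi - zi for bi, zi in zip(blur(x), z)])  # D^T (Dx - z) with D^T = D
        x = soft([xi - lam * gi for xi, gi in zip(x, grad)], lam * kappa)
        history.append(objective(x, z, kappa))
    return x, history

def snr(p, xr):
    """SNR in dB: 20 log10(||p|| / ||p - xr||)."""
    return 20.0 * math.log10(math.hypot(*p) / math.dist(p, xr))

p = [0.0] * 8 + [1.0] * 4 + [0.0] * 8   # hypothetical 1-D "original image"
z = blur(p)                              # degraded observation (noise-free here)
x_r, hist = forward_backward(z, kappa=0.01)
print(round(snr(p, z), 2), round(snr(p, x_r), 2), hist[-1] <= hist[0])
```

With a step no larger than $1/\|D\|^2$, this iteration never increases the objective, which the recorded history makes easy to confirm.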

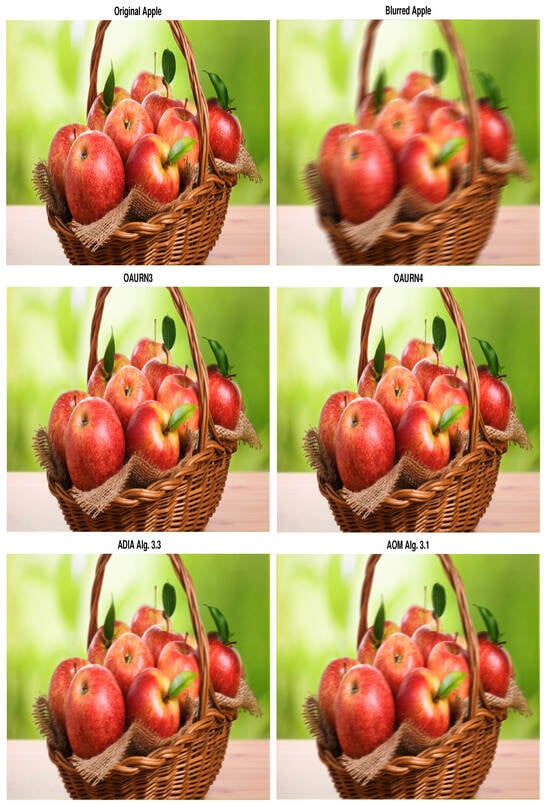

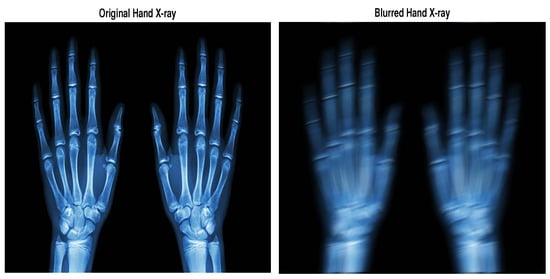

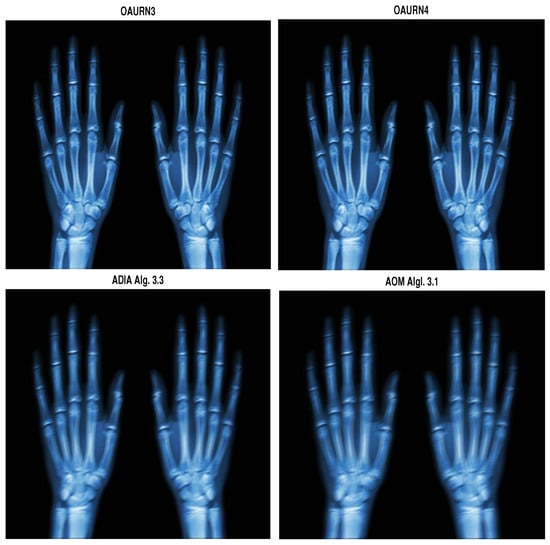

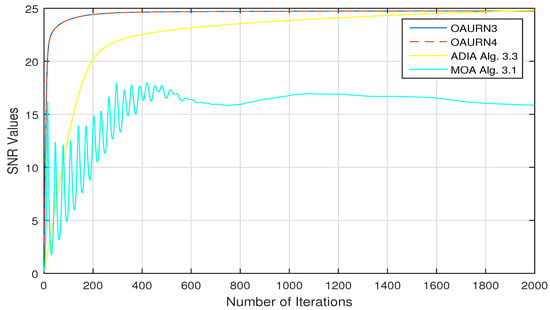

In this numerical experiment, , , and in all the algorithms, set and . We define the gradient by

Moreover, we compare the image recovery efficiency of Algorithms 5 (in short, OAURN3) and 6 (in short, OAURN4) with Algorithm 3.3 by Adamu et al. [36] (in short, ADIA Alg. 3.3) and Algorithm 3.1 by Alakoya et al. [15] (in short, AOM Alg. 3.1). For all algorithms, we use the stopping criterion and choose the following parameters:

- In OAURN3 and OAURN4, we set , , , , , , , , and .

- In ADIA Alg. 3.3, we set , , , , and .

- In AOM Alg. 3.1, we set , , , , , , , , , , and .

The test images are a hand X-ray and an apple. The performances of the algorithms are measured via the signal-to-noise ratio (SNR), defined by

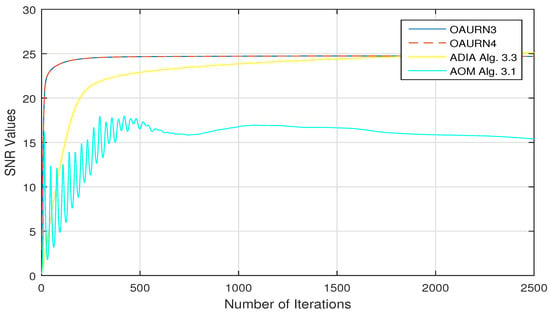

where is the restored image and p is the original image. We use the MATLAB blur function “fspecial('motion', 40, 70)” and add random noise. All numerical simulations were performed using MATLAB R2020b on a desktop PC with an Intel® Core™ i7-3540M CPU @ 3.00GHz × 4 and 400.00GB memory. The numerical results are presented in Figures 1–4 and Table 1.

Figure 1.

Comparison of restored images via various methods when the number of iterations is 2000 for the apple image.

Figure 2.

Comparison of restored images via various methods when the number of iterations is 2500 for the hand X-ray image.

Figure 3.

Graphs of SNR for the methods OAURN1, OAURN2, ADIA Alg. 3.3, and AOM Alg. 3.1 for the apple image.

Figure 4.

Graphs of SNR for the methods OAURN1, OAURN2, ADIA Alg. 3.3, and AOM Alg. 3.1 for the hand X-ray image.

Table 1.

Numerical comparison for the methods OAURN1, OAURN2, ADIA Alg. 3.3, and AOM Alg. 3.1.

5. Numerical Experiments

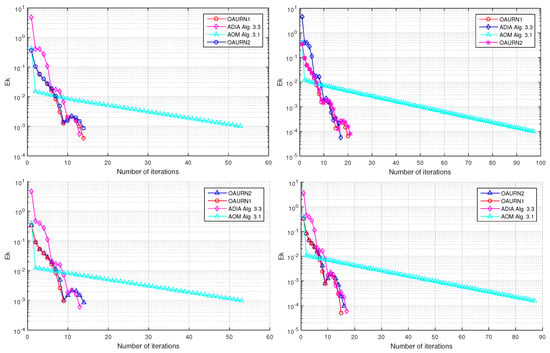

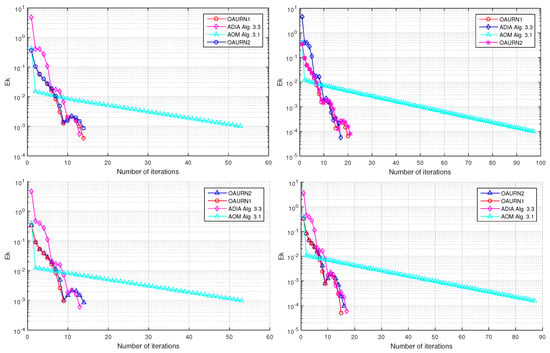

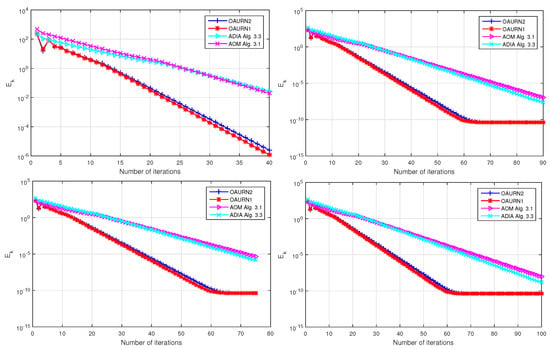

In this section, we present some numerical experiments to illustrate the numerical behavior of Algorithms 1 (in short, OAURN1) and 2 (in short, OAURN2). Moreover, we compare them with Algorithm 3.3 by Adamu et al. [36] (in short, ADIA Alg. 3.3) and Algorithm 3.1 by Alakoya et al. [15] (in short, AOM Alg. 3.1). We choose the parameters of all the methods as follows:

- In the proposed Algorithms 1 and 2, we set , , , , , , , , and .

- In ADIA Alg. 3.3, we set , , , , and .

- In AOM Alg. 3.1, we set , , , , , , , , , , and .

Example 1.

Let and let the operators be defined by

It is not hard to verify that D is -inverse strongly monotone, E is a maximal monotone operator, and is -strongly monotone and 0.5-Lipschitz continuous. For this experiment, we use the stopping criterion and consider the following cases:

- Case I: and ;

- Case II: and ;

- Case III: and ;

- Case IV: and .

The obtained numerical results are presented in Table 2 and Figure 5. It can be seen that our methods outperform the compared methods.

Table 2.

Numerical results of Example 1.

Figure 5.

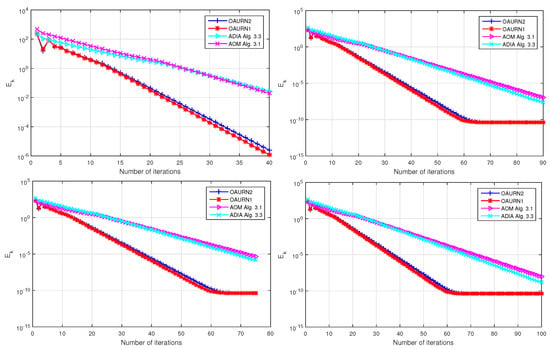

Example 1, Case I (top left); Case II (top right); Case III (bottom left); Case IV (bottom right).

Example 2.

Let , where and , . We now define the operators by

It is easy to check that D is 2-inverse strongly monotone, E is a maximal monotone operator, and is -strongly monotone and 0.8-Lipschitz continuous. For this experiment, we use the stopping criterion and consider the following cases:

- Case A: and .

- Case B: and .

- Case C: and .

- Case D: and .

The obtained numerical results are shown in Table 3 and Figure 6; it can be seen that our methods outperform the compared methods.

Table 3.

Numerical results of Example 2.

Figure 6.

Example 2, Case A (top left); Case B (top right); Case C (bottom left); Case D (bottom right).

6. Conclusions

In this work, two efficient iterative methods for solving the strongly monotone variational inequality problem over the solution set of the monotone inclusion problem have been introduced. These methods are accelerated by the inertial technique. The new methods use self-adaptive step sizes rather than depending on prior knowledge of the operator norm and the Lipschitz constants of the operators involved. We obtained the strong convergence results of these methods under some mild conditions on the control parameters. We applied our results to solve the variational inequality problem, the bilevel variational inequality problem, the convex minimization problem, and image recovery problems. Numerical experiments were carried out to demonstrate the applicability of our methods and to further show the superiority of the proposed methods over some existing methods. The new results improve, extend, complement, and unify some existing results in [4,12,13,27] and several others.

Author Contributions

Conceptualization, A.E.O.; Methodology, G.C.U.; Software, J.A.A.; Validation, Funding acquisition, Formal analysis, H.A.N.; Writing—original draft, A.E.O.; Supervision, G.C.U. and O.K.N. All authors have read and agreed to the published version of the manuscript.

Funding

The authors extend their appreciation to Prince Sattam Bin Abdulaziz University for funding this research through project number (PSAU/2023/01/90101).

Data Availability Statement

The data used to support the findings of this study are included within the article.

Conflicts of Interest

The authors declare that they have no competing interests.

References

- Abuchu, J.A.; Ofem, A.E.; Ugwunnadi, G.C.; Narain, O.K.; Hussain, A. Hybrid Alternated Inertial Projection and Contraction Algorithm for Solving Bilevel Variational Inequality Problems. J. Math. 2023, 2023, 3185746.

- Ofem, A.E. Strong convergence of a multi-step implicit iterative scheme with errors for common fixed points of uniformly L–Lipschitzian total asymptotically strict pseudocontractive mappings. Results Nonlinear Anal. 2020, 3, 100–116.

- Ofem, A.E.; Mebawondu, A.A.; Ugwunnadi, G.C.; Işık, H.; Narain, O.K. A modified subgradient extragradient algorithm-type for solving quasimonotone variational inequality problems with applications. J. Inequal. Appl. 2023, 2023, 73.

- Cholamjiak, P.; Hieu, D.V.; Cho, Y.J. Relaxed forward–backward splitting methods for solving variational inclusions and applications. J. Sci. Comput. 2021, 88, 85.

- Gibali, A.; Thong, D.V. Tseng type methods for solving inclusion problems and its applications. Calcolo 2018, 55, 49.

- Lions, P.L.; Mercier, B. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 1979, 16, 964–979.

- Ofem, A.E.; Mebawondu, A.A.; Ugwunnadi, G.C.; Cholamjiak, P.; Narain, O.K. Relaxed Tseng splitting method with double inertial steps for solving monotone inclusions and fixed point problems. Numer. Algor. 2023.

- Ofem, A.E.; Işik, H.; Ali, F.; Ahmad, J. A new iterative approximation scheme for Reich–Suzuki type nonexpansive operators with an application. J. Inequal. Appl. 2022, 2022, 28.

- Shehu, Y.; Dong, Q.L.; Jiang, D. Single projection method for pseudo-monotone variational inequality in Hilbert spaces. Optimization 2019, 68, 385–409.

- Passty, G.B. Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 1979, 72, 383–390.

- Izuchukwu, C.; Reich, S.; Shehu, Y.; Taiwo, A. Strong Convergence of Forward-Reflected-Backward Splitting Methods for Solving Monotone Inclusions with Applications to Image Restoration and Optimal Control. J. Sci. Comput. 2023, 94, 73.

- Tan, B.; Cho, S.Y. Strong Convergence of inertial forward-backward methods for solving monotone inclusions. Appl. Anal. 2022, 101, 5386–5414.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

- Zhang, C.; Wang, Y. Proximal algorithm for solving monotone variational inclusion. Optimization 2018, 67, 1197–1209.

- Alakoya, T.O.; Ogunsola, O.J.; Mewomo, O.T. An inertial viscosity algorithm for solving monotone variational inclusion and common fixed point problems of strict pseudocontractions. Bol. Soc. Mat. Mex. 2023, 29, 31.

- Thong, D.V.; Anh, P.K.; Dung, V.T.; Linh, D.T.M. A Novel Method for Finding Minimum-norm Solutions to Pseudomonotone Variational Inequalities. Netw. Spat. Econ. 2023, 23, 39–64.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Thong, D.V.; Hieu, D.V.; Rassias, T.M. Self adaptive inertial subgradient extragradient algorithms for solving pseudomonotone variational inequality problems. Optim. Lett. 2020, 14, 115–144.

- Thong, D.V.; Liu, L.L.; Dong, Q.L.; Long, L.V.; Tuan, P.A. Fast relaxed inertial Tseng’s method-based algorithm for solving variational inequality and fixed point problems in Hilbert spaces. J. Comput. Appl. Math. 2023, 418, 114739.

- Shehu, Y.; Yao, J.C. Rate of convergence for inertial iterative method for countable family of certain quasi–nonexpansive mappings. J. Nonlinear Convex Anal. 2020, 21, 533–541.

- Ofem, A.E.; Igbokwe, D.I. A new faster four step iterative algorithm for Suzuki generalized nonexpansive mappings with an application. Adv. Theory Nonlinear Anal. Its Appl. 2021, 5, 482–506.

- Ofem, A.E.; Abuchu, J.A.; George, R.; Ugwunnadi, G.C.; Narain, O.K. Some new results on convergence, weak w2–stability and data dependence of two multivalued almost contractive mappings in hyperbolic spaces. Mathematics 2022, 10, 3720.

- Eslamian, M. Variational inequality over the solution set of split monotone variational inclusion problem with application to bilevel programming problem. Filomat 2023, 37, 8361–8376.

- Ofem, A.E.; Udofia, U.E.; Igbokwe, D.I. A robust iterative approach for solving nonlinear Volterra Delay integro-differential equations. Ural Math. J. 2021, 7, 59–85.

- Okeke, G.A.; Ofem, A.E.; Abdeljawad, T.; Alqudah, M.A.; Khan, A. A solution of a nonlinear Volterra integral equation with delay via a faster iteration method. AIMS Math. 2023, 8, 102–124.

- Yamada, I. The hybrid steepest descent method for the variational inequality problem over the intersection of fixed point sets of nonexpansive mappings. In Inherently Parallel Algorithms in Feasibility and Optimization and Their Applications; Butnariu, D., Censor, Y., Reich, S., Eds.; Elsevier: Amsterdam, The Netherlands, 2001; pp. 473–504.

- Lorenz, D.A.; Pock, T. An inertial forward–backward algorithm for monotone inclusions. J. Math. Imaging Vis. 2015, 51, 311–325.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings; Marcel Dekker: New York, NY, USA, 1984.

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

- Brezis, H. Chapitre II: Opérateurs maximaux monotones. North-Holland Math. Stud. 1973, 5, 19–51.

- Anh, P.K.; Anh, T.V.; Muu, L.D. On Bilevel split pseudomonotone variational inequality problems with applications. Acta Math. Vietnam. 2017, 42, 413–429.

- Liu, H.; Yang, J. Weak convergence of iterative methods for solving quasimonotone variational inequalities. Comput. Optim. Appl. 2020, 77, 491–508.

- Wang, Z.; Lei, Z.; Long, X.; Chen, Z. A modified Tseng splitting method with double inertial steps for solving monotone inclusion problems. J. Sci. Comput. 2023, 96, 92.

- Kitkuan, D.; Kumam, P.; Martinez-Moreno, J. Generalized Halpern-type forward-backward splitting methods for convex minimization problems with application to image restoration problems. Optimization 2020, 69, 1–25.

- Rockafellar, R. On the maximality of sums of nonlinear monotone operators. Trans. Amer. Math. Soc. 1970, 149, 75–88.

- Adamu, A.; Deepho, J.; Ibrahim, A.H.; Abubakar, A.B. Approximation of zeros of sum of monotone mappings with applications to variational inequality and image restoration problems. Nonlinear Funct. Anal. Appl. 2021, 26, 411–432.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).