Abstract

The cognitive diagnosis model (CDM) is an effective statistical tool for extracting the discrete attributes of individuals based on their responses to diagnostic tests. When dealing with cases that involve small sample sizes or highly correlated attributes, not all attribute profiles may be present. The standard method, which accounts for all attribute profiles, not only increases the complexity of the model but also complicates the calculation. Thus, it is important to identify the empty attribute profiles. This paper proposes an entropy-penalized likelihood method to eliminate the empty attribute profiles. In addition, the relation between attribute profiles and the parameter space of item parameters is discussed, and two modified expectation–maximization (EM) algorithms are designed to estimate the model parameters. Simulations are conducted to demonstrate the performance of the proposed method, and a real data application based on the fraction–subtraction data is presented to showcase the practical implications of the proposed method.

Keywords:

cognitive diagnosis model; DINA model; penalized likelihood; Shannon entropy; EM algorithm

MSC:

62P25

1. Introduction

CDMs are widely used in educational and psychological assessments. These models extract the examinees’ latent binary random vectors, which provide rich and comprehensive information about the examinees. Different CDMs have been proposed for different test scenarios. Popular CDMs include the Deterministic Input, Noisy “And” gate (DINA) model [1], the Deterministic Input, Noisy “Or” gate (DINO) model [2], the Noisy Inputs, Deterministic “And” gate (NIDA) model [3], the Noisy Inputs, Deterministic “Or” gate (NIDO) model [2], the Reduced Reparameterized Unified Model (RRUM) [4,5] and the Log-linear Cognitive Diagnosis Model (LCDM) [6]. These CDMs differ in how they model the positive response probabilities. CDMs can be summarized in more flexible frameworks such as the Generalized Deterministic Inputs, Noisy “And” gate (GDINA) model [7] and the General Diagnostic Model (GDM) [8]. Its simplicity and interpretability have made the DINA model one of the most popular CDMs.

The DINA model, also known as a latent class model [9,10,11,12,13], is a mixture model, so it inherits the drawbacks of mixture models. Too many latent classes may overfit the data, meaning that the data should have been characterized by a simpler model. Too few latent classes cannot characterize the true underlying data structure well and yield poor inference. In practical terms, identifying the empty latent classes improves the model’s interpretability while still explaining the data well. Chen [14] showed that the theoretical optimal convergence rate of the mixture model with an unknown number of classes is slower than the optimal convergence rate with a known number of classes. This means that inference benefits strongly from knowing the number of classes. Therefore, from both practical and theoretical views, eliminating the empty latent classes is a crucial issue in the DINA model.

Common reasons for empty attribute profiles include small sample sizes and highly correlated attributes. A few examples illustrate this. When the sample size is smaller than the number of attribute profiles, some attribute profiles are inevitably empty. In another scenario with two attributes $\alpha_1$ and $\alpha_2$, suppose that $\alpha_1 = 1$ if and only if $\alpha_2 = 1$. Under this assumption of extremely correlated attributes, the attribute profiles $(1, 0)$ and $(0, 1)$ do not appear. Situations with empty attribute profiles can occur in various scenarios [15].

The hierarchical diagnostic classification model [15,16] is a well-known method to eliminate empty attribute profiles. In this literature, directed acyclic graphs are employed to describe the relationships among the attributes, and the directions of edges impose strict constraints on attributes. If there is a directed edge from attribute $\alpha_k$ to attribute $\alpha_l$ (i.e., $\alpha_k$ is a prerequisite of $\alpha_l$), any attribute profile with $\alpha_k = 0$ and $\alpha_l = 1$ is forbidden. Gu and Xu [15] utilized penalized EM to select the true attribute profiles, avoid overfitting, and learn attribute hierarchies. Wang and Lu [17] compared two exploratory approaches of learning attribute hierarchies in the LCDM and DINA models. In essence, the attribute hierarchy can be regarded as a specific family of correlated attributes that can be effectively represented and described through a graph model.
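To make the effect of a hierarchy concrete, the following minimal sketch enumerates the attribute profiles that survive a set of prerequisite edges. It assumes that an edge `(k, l)` means attribute `k` is a prerequisite of attribute `l`, so profiles with attribute `k` absent and attribute `l` present are forbidden; the function name `permissible_profiles` and the 0-based indexing are illustrative choices, not notation from the cited works.

```python
import itertools

def permissible_profiles(K, edges):
    """Enumerate the binary profiles of length K allowed by a hierarchy.

    Assumption: each pair (k, l) in `edges` (0-based) means attribute k is a
    prerequisite of attribute l, so alpha_k = 0 with alpha_l = 1 is forbidden.
    """
    keep = []
    for alpha in itertools.product([0, 1], repeat=K):
        violates = any(alpha[k] == 0 and alpha[l] == 1 for k, l in edges)
        if not violates:
            keep.append(alpha)
    return keep

# Usage: a linear hierarchy 0 -> 1 -> 2 on K = 3 attributes
profiles = permissible_profiles(3, [(0, 1), (1, 2)])
```

Under a linear hierarchy on K attributes only K + 1 of the 2^K profiles remain, which is one way many empty attribute profiles can arise.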

Penalized methods have been widely studied in many statistical problems. In the regression model, the least absolute shrinkage and selection operator (LASSO) and its variants are analyzed in [18,19,20]. Fan and Li [21] proposed the nonconcave smoothly clipped absolute deviation (SCAD) penalty to reduce the bias of estimators. In the Gaussian mixture model, Ma and Wang [22] and Huang et al. [23] proposed penalized likelihood methods to determine the number of components. In CDMs, Chen et al. [10] used SCAD to obtain sparse item parameters and recover the Q matrix. Xu and Shang [11] applied an “$L_0$ norm” penalty to CDMs and suggested a truncated “$L_1$ norm” penalty as the approximate calculation.

In the hierarchical diagnostic classification model, directed acyclic graphs of attributes often need to be specified in advance. A limitation of this model is that it is difficult to specify a graph in real scenarios. The penalty of the penalized EM proposed by Gu and Xu [15] involves two tuning parameters that complicate the implementation. Therefore, we hope to propose a method that does not require specifying a directed acyclic graph in advance and has a concise penalty term.

This paper makes two primary contributions. Firstly, it introduces an entropy-based penalty, and secondly, it develops the corresponding algorithms to utilize this penalty. This paper proposes a novel approach for estimating the DINA model, combining Shannon entropy and the penalized method. In information theory, “uncertainty” can be interpreted informally as the negative logarithm of probability, and Shannon entropy is the average effect of the “uncertainty”. Shannon entropy can be used to characterize the distribution of attribute profiles. By utilizing the proposed method, the empty attribute profiles can be eliminated. We further develop the EM algorithm for the proposed method and conduct some simulations to verify the proposed method.

The rest of the paper is organized as follows. Section 2 gives an overview of the DINA model and the estimation methods, and defines the feasible domain to characterize the latent classes. Section 3 introduces the entropy penalized method and the EM algorithms used to estimate the DINA model. The numerical studies of the entropy penalized method are shown in Section 4. Section 5 presents a real data analysis based on the fraction–subtraction data. The summary of the paper and future research are given in Section 6. The details of the EM algorithm and the proofs are given in Appendix A, Appendix B and Appendix C.

2. DINA Model

2.1. Review of DINA Model

First, some notation is introduced. For examinee $i = 1, \ldots, N$, the attribute profile $\boldsymbol{\alpha}_i$, also known as the latent class, is a K-dimensional binary vector $\boldsymbol{\alpha}_i = (\alpha_{i1}, \ldots, \alpha_{iK})^\top$, and the corresponding response data to J items is a J-dimensional binary vector $\mathbf{Y}_i = (Y_{i1}, \ldots, Y_{iJ})^\top$, where “⊤” is the transpose operation. Let $\mathbf{Y}$ and $\boldsymbol{\alpha}$ denote the collection of all $\mathbf{Y}_i$ and $\boldsymbol{\alpha}_i$, respectively. The Q matrix is a $J \times K$ binary matrix, where the element $q_{jk}$ is 1 if item j requires attribute k and 0 otherwise. The j-th row vector is denoted by $\mathbf{q}_j$. Given the fixed K, there are $2^K$ latent classes. We use a multinomial distribution with the probability $\pi_{\boldsymbol{\alpha}}$ to describe the attribute profile $\boldsymbol{\alpha}$, where $\sum_{\boldsymbol{\alpha}} \pi_{\boldsymbol{\alpha}} = 1$, and the population parameter $\boldsymbol{\pi}$ denotes the collection of probabilities for all attribute profiles.

The DINA model [1] supposes that, in an ideal scenario, the examinees with all required attributes will provide correct answers. For examinee i and item j, the ideal response is defined as $\eta_{ij} = \prod_{k=1}^{K} \alpha_{ik}^{q_{jk}}$, where $0^0$ is defined as 1. The slipping and guessing parameters are defined by the conditional probabilities $s_j = P(Y_{ij} = 0 \mid \eta_{ij} = 1)$ and $g_j = P(Y_{ij} = 1 \mid \eta_{ij} = 0)$, respectively. The parameters $\mathbf{s}$ and $\mathbf{g}$ are the collections of all $s_j$ and $g_j$, respectively.

In the DINA model, the positive response probability can be constructed as

$$P(Y_{ij} = 1 \mid \boldsymbol{\alpha}_i) = (1 - s_j)^{\eta_{ij}} g_j^{1 - \eta_{ij}}. \tag{1}$$

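If both $\mathbf{Y}$ and $\boldsymbol{\alpha}$ are observed, the likelihood function is

$$L(\mathbf{s}, \mathbf{g} \mid \mathbf{Y}, \boldsymbol{\alpha}) = \prod_{i=1}^{N} \prod_{j=1}^{J} P(Y_{ij} = 1 \mid \boldsymbol{\alpha}_i)^{Y_{ij}} \left[1 - P(Y_{ij} = 1 \mid \boldsymbol{\alpha}_i)\right]^{1 - Y_{ij}}. \tag{2}$$

Given data $\mathbf{Y}$ and attribute profile $\boldsymbol{\alpha}$, the parameters $\mathbf{s}$ and $\mathbf{g}$ can be directly estimated by the maximum likelihood estimators:

$$\hat{s}_j = \frac{\sum_{i=1}^{N} I(\eta_{ij} = 1, Y_{ij} = 0)}{\sum_{i=1}^{N} I(\eta_{ij} = 1)}, \qquad \hat{g}_j = \frac{\sum_{i=1}^{N} I(\eta_{ij} = 0, Y_{ij} = 1)}{\sum_{i=1}^{N} I(\eta_{ij} = 0)}, \tag{3}$$

where $I(\cdot)$ is the indicator function. When $\boldsymbol{\alpha}$ is latent, by integrating out $\boldsymbol{\alpha}$, the marginal likelihood is

$$L(\boldsymbol{\pi}, \mathbf{s}, \mathbf{g} \mid \mathbf{Y}) = \prod_{i=1}^{N} \sum_{\boldsymbol{\alpha}} \pi_{\boldsymbol{\alpha}} \prod_{j=1}^{J} P(Y_{ij} = 1 \mid \boldsymbol{\alpha})^{Y_{ij}} \left[1 - P(Y_{ij} = 1 \mid \boldsymbol{\alpha})\right]^{1 - Y_{ij}}, \tag{4}$$

which is the primary focus of this paper.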
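The quantities reviewed in this subsection can be sketched numerically as follows. This is a minimal illustration under the standard DINA definitions (ideal response, slipping, guessing, multinomial class probabilities); the function names and the NumPy array layout are assumptions made for the example and are not taken from the paper.

```python
import itertools
import numpy as np

def all_profiles(K):
    """All 2^K binary attribute profiles as rows of a (2^K, K) array."""
    return np.array(list(itertools.product([0, 1], repeat=K)))

def ideal_response(profiles, Q):
    """eta[c, j] = 1 iff profile c has every attribute required by item j."""
    # Count required attributes (q_jk = 1) that the profile lacks (alpha_k = 0).
    missing = (1 - profiles) @ Q.T            # shape (C, J)
    return (missing == 0).astype(int)

def positive_prob(profiles, Q, s, g):
    """P(Y_j = 1 | alpha): 1 - s_j when eta = 1, g_j when eta = 0."""
    eta = ideal_response(profiles, Q)
    return np.where(eta == 1, 1.0 - s, g)     # shape (C, J)

def marginal_loglik(Y, profiles, Q, s, g, pi):
    """Log marginal likelihood: sum_i log sum_alpha pi_alpha * P(Y_i | alpha)."""
    P = positive_prob(profiles, Q, s, g)
    # log P(Y_i | alpha) for every examinee/class pair, shape (N, C)
    log_lik = Y @ np.log(P).T + (1 - Y) @ np.log(1 - P).T
    return float(np.sum(np.log(np.exp(log_lik) @ pi)))
```

The sketch requires all slipping and guessing values to lie strictly between 0 and 1 so that the logarithms are finite; classes with zero probability in `pi` simply drop out of the mixture sum.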

2.2. Estimation Methods

EM and Markov chain Monte Carlo (MCMC) are two estimation methods for the DINA model. De la Torre [24] discussed the marginal maximum likelihood estimation for the DINA model, and the EM algorithm was employed where the objective function was Equation (4). Gu and Xu [15] proposed a penalized expectation–maximization (PEM) with the penalty

where controls the sparsity of and is a small threshold parameter the same order as , the constant . There are two tuning parameters and in PEM. Additionally, a variational EM algorithm is proposed as an alternative approach.

Culpepper [25] proposed a Bayesian formulation for the DINA model and used Gibbs sampling to estimate parameters. The algorithm can be implemented by the R package “dina”. The Gibbs sampling can be extended by a sequential method in the DINA and GDINA with many attributes [26], which provides an alternative approach to the traditional MCMC. As the focus of this paper does not revolve around the MCMC, we refrain from details.

2.3. The Property of DINA as Mixture Model

For a fixed K, the DINA model can be viewed as a mixture model comprising $2^K$ latent classes (i.e., components). In the Gaussian mixture model, a change in the number of components introduces or removes mean and covariance parameters. The DINA model behaves differently: a change in the number of latent classes does not necessarily affect the presence of item parameters. There are therefore two cases: (i) the latent classes change while the structure of the item parameters does not, and (ii) the latent classes and the structure of the item parameters change simultaneously. To account for the two cases, a formal definition of the feasible domain of latent classes is introduced.

Definition 1.

Let $\mathcal{A}$ be a subset of latent classes. If, for every item parameter $s_j$ or $g_j$, $j = 1, \ldots, J$, there exists some latent class in $\mathcal{A}$ whose response function (i.e., the distribution of the response data) is determined by $s_j$ or $g_j$, then $\mathcal{A}$ is called a feasible subset of latent classes, and all feasible subsets $\mathcal{A}$ make up the feasible domain $\mathbb{A}$.

If all , the probability of is strictly 0. There exist some subsets that will spoil the item parameter space. Let us see the following examples

Assume the response vector . If is from , then for , , we have

which means that the ’s response function is determined by . For , , we have

which means that the ’s response function is determined by . The Equations (7) and (8) are obtained by calculating the ideal responses. To determine the response function of and , all item parameters and are required. Based on similar discussions, ’s response function is determined by , and ’s response function is determined by . To determine the response function of and , all item parameters and are required.

Then, a different case is presented. If is from , ’s response function is determined by , ’s response function is determined by , and ’s response function is determined by . The item parameter cannot affect the response function of any attribute profile. Hence, is called a redundant parameter. Meanwhile, this indicates that there does not exist a slipping behavior for item 4, and we can let the redundant parameter . If is from , the item parameter space collapses from 8-dimensional to 7-dimensional. It is obvious that the subset will not spoil item parameter space, and the feasible domain depends on Q. However, a lemma can be given as follows. The proof is deferred to Appendix A.

Lemma 1.

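If $\mathcal{A}$ contains $\mathbf{0}_K$ and $\mathbf{1}_K$, then $\mathcal{A}$ always lies in the feasible domain $\mathbb{A}$, where $\mathbf{0}_K$ and $\mathbf{1}_K$ are K-dimensional vectors with all entries 0 and 1, respectively.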
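The feasibility notion can be checked mechanically. The sketch below assumes the reading that a slipping parameter of item j matters only if some latent class in the subset has ideal response 1 on item j, and a guessing parameter matters only if some class has ideal response 0. The Q matrix in the usage lines is a hypothetical four-item, two-attribute example chosen for illustration, not the one used in the paper, and the function names are likewise illustrative.

```python
import numpy as np

def required_parameters(subset, Q):
    """Return boolean arrays (needs_s, needs_g), one entry per item.

    needs_s[j] is True when some class in the subset has ideal response 1 on
    item j, so s_j affects that class's response function; needs_g[j] is True
    when some class has ideal response 0 on item j.
    """
    A = np.asarray(subset)
    Q = np.asarray(Q)
    eta = ((1 - A) @ Q.T) == 0       # shape (|subset|, J), ideal responses
    needs_s = eta.any(axis=0)
    needs_g = (~eta).any(axis=0)
    return needs_s, needs_g

def is_feasible(subset, Q):
    """A subset is feasible when no slipping or guessing parameter is redundant."""
    needs_s, needs_g = required_parameters(subset, Q)
    return bool(needs_s.all() and needs_g.all())

# Hypothetical Q matrix: item 4 requires both attributes.
Q = [[1, 0], [0, 1], [1, 0], [1, 1]]
without_full_mastery = [(0, 0), (1, 0), (0, 1)]
with_extremes = [(0, 0), (1, 1)]
```

With this Q matrix, `without_full_mastery` leaves the slipping parameter of item 4 redundant, so it is not feasible, whereas `with_extremes` is feasible, in line with Lemma 1.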

3. Entropy Penalized Method

3.1. Entropy Penalty of DINA

Shannon entropy is $H(\boldsymbol{\pi}) = -\sum_{\boldsymbol{\alpha}} \pi_{\boldsymbol{\alpha}} \log \pi_{\boldsymbol{\alpha}}$, where the value of $0 \log 0$ is taken to be 0 according to [27,28]. This section focuses on the case within the feasible domain, and the entropy penalized log-likelihood function with the constraint is

where the penalty parameter whose the interpretation coincides with [15]. Analogously, the penalty parameter still controls the sparsity of . The two penalties have different scales because is not close to . If omitting the condition , the penalty going to the negative infinity implies that one latent class will be randomly selected (i.e., extremely sparse). The penalty going to zero implies all information comes from observed data. Compared with PEM, the proposed method only needs one tuning parameter.

The essential differences between the PEM and Entropy penalization methods are emphasized. PEM utilizes the term to handle the population probability , where is pre-specified rather than determined by some fit indices. Hence, the selection of will affect the performance of PEM. In the Entropy penalization method, is well defined, and we do not need extra parameters. The performance of the Entropy penalization method is completely determined by the parameter .

Treating as the latent data, the expected log-likelihood function is

where the expectation is taken with respect to the distribution . Considering the constraint , the Lagrange function can be defined as

Let the derivatives of and be 0, for any , we obtain

where is the posterior probability of the examinee i belonging to the latent class . The iterative formula of is proportional to , where the superscript “” indicates the values coming from the t-th iteration. Based on the iterative formula, a theorem is given to shrink the interval of .

Theorem 1.

For the DINA model with a fixed integer K, the penalty parameter λ of the penalized function Equation (9) should be in interval .

This theorem also indicates that and N have the same order, and is the rate. This paper focuses on . Algorithm 1 shows the schedules of EM for the DINA model within the feasible domain. When to implement algorithms, the algorithms of Gu and Xu [15] introduce an additional pre-specified constant c to update the population parameter , where is a small constant. Algorithm 1 does not rely on a pre-specified constant. In the method establishment and algorithm implementation, the algorithms of Gu and Xu [15] involve three parameters , while Algorithm 1 only involves a parameter . We emphasize again that the parameter in the two methods has different scales. The calculation and proof are omitted, and more details are deferred to Appendix B.

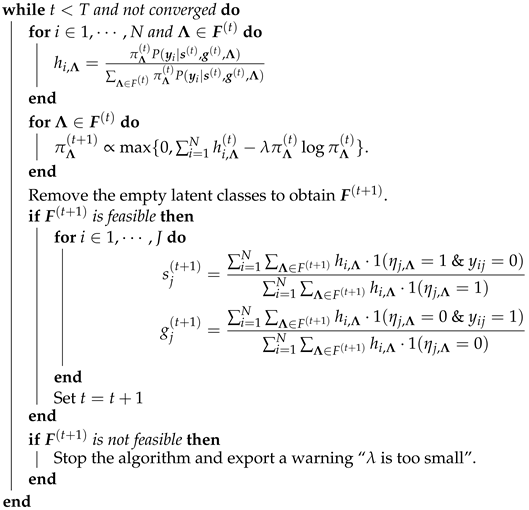

| Algorithm 1: EM of Entropy Penalized Method within the Feasible Domain. |

Output: and . |

Chen and Chen [29] proposed the extended Bayesian information criteria (EBIC) to conduct model selection from a large model space. The EBIC has the following form:

where is the number of nonempty latent classes and indicates the binomial coefficient “m choose n”. The smaller EBIC indicates a preferred model. In this paper, EBIC is used to select from the mentioned grid structure.

In the implementation of the EM algorithm, we assume that the initial set of latent classes includes all $2^K$ latent classes to avoid missing important ones. The gaps between item parameters in successive iterations are used to check the convergence. If the estimated latent classes are not feasible, the penalty parameter λ is too small.
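The update of the class probabilities inside such an EM loop can be sketched as below. This is an illustration under stated assumptions rather than a transcription of Algorithm 1: it assumes the penalized objective adds λ times the Shannon entropy of the class probabilities to the log-likelihood with λ ≤ 0, which gives an update proportional to the summed posteriors minus λ π log π. Clipping negative values to zero and renormalizing are choices made here to illustrate how empty classes are removed; the function name `update_pi` is hypothetical.

```python
import numpy as np

def update_pi(gamma, pi, lam):
    """One entropy-penalized update of the latent class probabilities.

    gamma : (N, C) posterior probabilities of each examinee per class
    pi    : (C,) current class probabilities
    lam   : penalty parameter, assumed non-positive
    """
    gamma = np.asarray(gamma, dtype=float)
    pi = np.asarray(pi, dtype=float)
    N = gamma.shape[0]
    # pi * log(pi) with the convention 0 * log 0 = 0
    plogp = np.zeros_like(pi)
    pos = pi > 0
    plogp[pos] = pi[pos] * np.log(pi[pos])
    entropy = -plogp.sum()
    denom = N + lam * entropy
    if denom <= 0:
        raise ValueError("lam is too negative: N + lam * H(pi) must be positive")
    new_pi = (gamma.sum(axis=0) - lam * plogp) / denom
    # Assumption: classes pushed below zero are treated as empty.
    new_pi = np.clip(new_pi, 0.0, None)
    return new_pi / new_pi.sum()
```

With `lam = 0` the update reduces to the usual EM average of the posteriors, while a sufficiently negative `lam` drives classes with little posterior mass exactly to zero.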

3.2. Modified EM

This section considers the case in which the set of latent classes is not necessarily feasible. In this case, some $s_j$ or $g_j$ will disappear, which means that the observed data cannot provide any information about these $s_j$ or $g_j$. These redundant item parameters are set to zero. We focus on the Lagrange function without constraints as

Because the space of item parameters may be collapsed, the dimension of item parameters needs to be recalculated. Meanwhile, EBIC will become

where and indicate the numbers of nonzero slipping and guessing parameters, respectively. If all and exist, the summation of and is , which implies Equation (13).

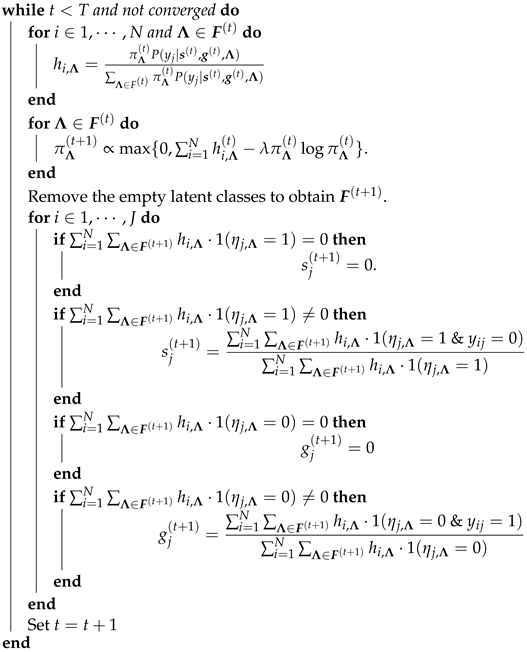

The corresponding EM is shown in Algorithm 2, where the discussions of and convergence are similar. However, this algorithm does not distinguish between feasible and not. To the best of our knowledge, no algorithms have handled the case without the feasible domain. More details are shown in Appendix B.

Algorithm 2: EM of Entropy Penalized Method without the Feasible Domain.

4. Simulation Studies

Three simulation studies are conducted. The first compares the proposed method with the standard EM that accounts for all latent classes, which serves as the baseline. The second verifies the selection validity of EBIC. The third evaluates the performance of the entropy penalized method. For all simulation studies, we set , and the Q matrix has the following structure:

The Q matrix with two identity matrices satisfies the identifiable conditions [12,30,31]. All attributes are required by four items, and the design of the Q matrix is balanced.

4.1. Study I

In this study, the settings are , and . The attribute profiles are generated from , and each attribute profile of has a probability of 1/7.

For the proposed EM, the penalty parameter . The method to explore will be discussed in the next simulation study. For each attribute , the classification accuracy is evaluated by the posterior marginal probability as

where is the posterior probability of examinee i having attribute profile . Given the posterior marginal probability, the logarithm of the posterior marginal likelihood as

reflects the global classification accuracy, where denotes the true attribute during data generation.
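The two accuracy measures can be sketched as follows. The exact formulas are assumptions made for illustration: the posterior marginal probability of an attribute is taken as the sum of the class posteriors over profiles that contain it, and the logarithm of the posterior marginal likelihood is taken as the Bernoulli log-likelihood of the true attributes under those marginals. Function names and the small clipping constant `eps` are hypothetical.

```python
import numpy as np

def attribute_marginals(gamma, profiles):
    """p[i, k] = sum over classes of gamma[i, c] * I(profile c has attribute k)."""
    return np.asarray(gamma, dtype=float) @ np.asarray(profiles, dtype=float)

def log_posterior_marginal_likelihood(p, alpha_true, eps=1e-12):
    """Sum over examinees and attributes of the Bernoulli log-probability
    assigned to the true attribute values (assumed form of the measure)."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1 - eps)  # avoid log(0)
    a = np.asarray(alpha_true, dtype=float)
    return float(np.sum(a * np.log(p) + (1 - a) * np.log(1 - p)))
```

Values closer to zero indicate that the posterior marginals place more mass on the true attributes.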

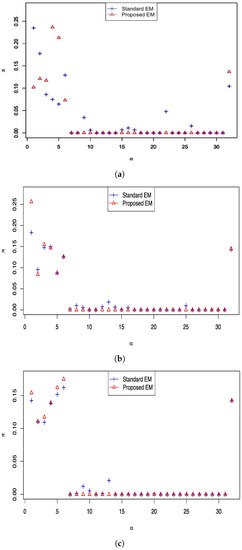

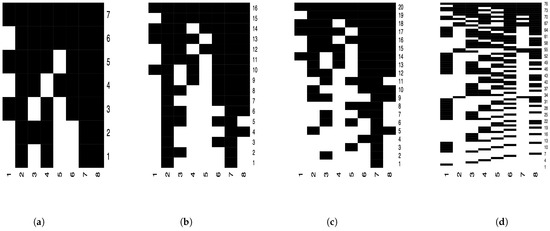

This study focuses on the estimators of and the classification accuracy. The results of are shown in Figure 1. We observe that for both settings of N, the performance of standard EM is poor as it fails to eliminate any irrelevant attribute profiles. When the sample size is N, the probability can be treated as a threshold to distinguish irrelevant attribute profiles. This method can only eliminate the partially irrelevant attribute profiles. In fact, finding an appropriate threshold is challenging. In contrast, regardless of sample sizes, the proposed EM can find the true attribute profiles and set the probability of all irrelevant attribute profiles to zero. The logarithm of the posterior marginal likelihood for the six settings, namely , are −204.239, −453.163, −508.047, −514.681, −1046.273 and −1054.014 for settings , respectively. The likelihood of the proposed EM is consistently larger than that of the standard EM, indicating that the proposed EM enjoys higher classification accuracy. The results also show that the proposed method works well for the small sample size . Especially considering the posterior marginal likelihood, the proposed method has obvious advantages over the standard EM. Note that the discussion regarding the estimation of item parameters will be shown in the third simulation study.

Figure 1.

The results of estimated for different sample sizes. The horizontal axis represents attribute profiles which can be concluded as , , , …, , …, . The rules can also apply to different Ks. (a) The results of sample size . (b) The results of sample size . (c) The results of sample size .

4.2. Study II

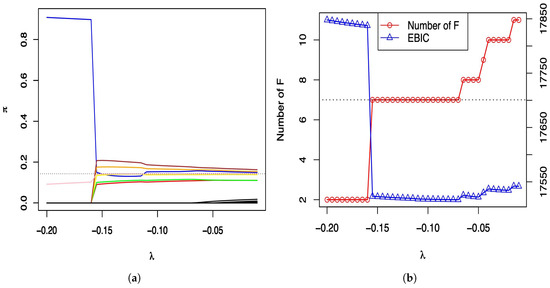

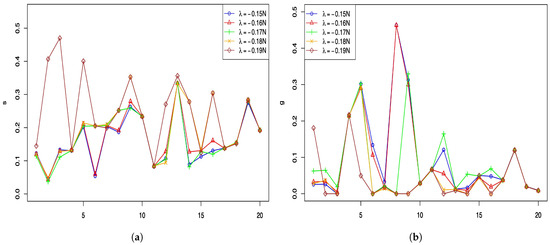

In this study, the solution paths of versus are elaborated. The running settings of N, , and are the same as the settings of Study I. The penalty parameter . Due to the similarity of results, only the results of the sample size are presented. Figure 2 shows the solution paths of , and EBIC versus .

Figure 2.

Solution paths of the estimated , the number of and EBIC versus . (a) Solution paths of . (b) Number of and EBIC.

In Figure 2a, the colored lines indicate the true latent classes, while the black lines indicate the irrelevant latent classes. The dotted line represents the probability is 1/7. Based on the figure, the interval can efficiently estimate the true and eliminate the empty latent classes. For the large , the recovery of does not appear to worsen much when estimating the probability of irrelevant latent classes. However, irrelevant latent classes cannot be strictly zero. In Figure 2b, the left and right vertical axes show results of the number of and EBIC, respectively. The dotted line represents the true number of . The Figure 2b shows that when the correct number of is selected, the EBIC achieves the minimum. This study is an illustration of how to explore the values using EBIC.

4.3. Study III

In this study, the effects of sample sizes and item parameters are evaluated. We consider and item parameters . The response data are generated with the more complex , and each latent class of has the probability 1/12. In each setting, 200 independent data sets are generated.

Firstly, the information criteria EBIC is used to select appropriate for each setting. The selection precision of the latent classes and Bias, RMSE of item parameters are used to evaluate the proposed method. The selection precision and Bias, RMSE of item parameters are listed in Table 1. The notation “ST/AT” denotes the ratio between selected true latent classes and all true latent classes. The notation “ST/AS” denotes the ratio between selected true latent classes and all selected latent classes. The subscript of Bias and RMSE indicates the type of item parameters. When the sample size N increases and decreases, both the selection precision and the performance of item parameters’ estimators will be better. If and , the true can be completely recovered. The RMSE and Bias of the guessing parameters are lower than the slipping parameters, which is due to the DINA model itself, as the guessing parameter is estimated from all latent groups that do not fully master the required attributes for an item. In contrast, the slipping parameter is estimated only for the latent group with complete mastery for that specific vector.

Table 1.

The selection precision of the latent classes and Bias, RMSE of item parameters. The results are based on 200 independent data sets.

5. Real Data Analysis

In this section, the fraction–subtraction data are analyzed. For more about the data, please refer to the literature [7,32,33]. This data set contains responses of $N = 536$ middle school students to $J = 20$ items, where the responses are coded as 0 or 1. The test measures $K = 8$ attributes, so there are $2^8 = 256$ possible latent classes. The Q-matrix and item contents are shown in Table 2.

Table 2.

The Q-matrix and contents of the fractions–subtraction data.

Because the sample size is not significantly larger than possible latent classes , we cannot ensure there are enough latent classes to guarantee that true is feasible. Algorithm 2 is suggested for analyzing the real data. Firstly, EBIC is used to select the penalty parameter . The results around the optimal EBIC are shown in Table 3, which is based on a stable interval of EBIC. We observe that when , the EBIC achieves the minimum. If , the number changes from 76 to 20, and two guessing parameters disappear. For , if slightly increases, the model will be more complicated. Based on this fact, we discard the s that are not less than .

Table 3.

EBIC and exploratory results about , , .

Next, the evaluation is based on Theorem 1 and estimators , , . We note that does not satisfy Theorem 1, so the corresponding estimator eliminates many classes. Figure 3 presents the estimated for different , and we can see that the results of Figure 3a are consistent with the conclusion of Theorem 1. The conclusion is that the s no larger than are discarded. In addition, combined with Figure 3a–c, we know that attribute 7 is the most basic attribute. Figure 4a,b display the estimators of guessing and slipping parameters, respectively. According to the estimated , the results of strongly shifted on items 2, 3, 5, 9, and 16. For different s, the behavior of estimated is too complex, and significant differences are found in items 8, 9, and 13.

Figure 3.

The estimators of item parameters and as the penalty parameter varies in the set . (a) . (b) . (c) . (d) .

Figure 4.

The estimators of item parameters and as the penalty parameter varies in the set . (a) Slipping parameters. (b) Guessing parameters.

Until now, the candidate penalties are and . The penalty supports the criteria EBIC, and prefers a simpler model. Furthermore, a denser grid between will give more detailed results.

6. Discussion

In this paper, we study the penalized method for the DINA model. There are two contributions. First, the entropy penalized method is proposed for the DINA model. The feasible domain is defined to describe the relation between latent classes and the parameter space of item parameters. This framework allows for distinguishing irrelevant attribute profiles. Second, based on the definition of the feasible domain, two modified EM algorithms are developed. In practice, it is recommended to perform exploratory analyses using Algorithm 2 before proceeding further, which can provide valuable insights and guidance to understand the data structure.

While this paper focuses on the DINA model, a natural extension would be the application of the entropy penalized method to other CDMs. Additionally, it is worth noting that this study involves situations with a maximum dimension of , which is relatively low. In high-dimensional cases of K, improving the power and performance of the entropy penalized method is an interesting topic. A more challenging question is how the specification of irrelevant latent classes may affect the classification accuracy and the estimation of the model. These topics are left for future research.

Author Contributions

J.W. provided the original idea and wrote the paper; Y.L. provided feedback on the manuscript, and all authors contributed substantially to revisions. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Humanities and Social Sciences Youth Foundation, Ministry of Education of China (No. 22YJC880073), the Guangdong Natural Science Foundation of China (2022A1515011899), and the National Natural Science Foundation of China (No. 11731015).

Data Availability Statement

The full data set used in the real data analysis is openly available as an example data set in the R package CDM on CRAN at https://CRAN.R-project.org/package=CDM (accessed on 25 August 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Proof of Lemma 1

Proof.

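Assume the response vector $\mathbf{Y} = (Y_1, \ldots, Y_J)^\top$. The vector $\mathbf{q}_j$ cannot be $\mathbf{0}_K$ because item j in the diagnostic test must measure some attribute. If $\mathbf{Y}$ is from $\mathbf{0}_K$, then $\eta_j = 0$. For $j = 1, \ldots, J$, we have

$$P(Y_j = 1 \mid \mathbf{0}_K) = g_j, \tag{A1}$$

which means that $\mathbf{0}_K$’s response function is determined by $\mathbf{g}$. If $\mathbf{Y}$ is from $\mathbf{1}_K$, then $\eta_j = 1$. For $j = 1, \ldots, J$, we have

$$P(Y_j = 1 \mid \mathbf{1}_K) = 1 - s_j, \tag{A2}$$

which means that $\mathbf{1}_K$’s response function is determined by $\mathbf{s}$. Hence, we only need $\mathbf{0}_K$ and $\mathbf{1}_K$ to conclude that all item parameters $\mathbf{s}$ and $\mathbf{g}$ are required. □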

Appendix B. The Details of EM Algorithm

For Algorithm 1, the Lagrange function becomes

where is defined as . Given and , the expectation is taken with respect to the distribution , and is nothing but the posterior probability of examinee i belonging to the latent class . If , Equation (12) can be strictly reduced to

If , the term will be positive and close to zero, the equation . Equation (A4) can be treated as the alternative of Equation (12). By taking summation over all , we could obtain,

According to Equations (A4) and (A5), the iterative formula is

where is negative. It implies that is proportional to . Equation (A6) can also be used to explain why should be negative. We assume that is non-negative. For any , the posterior probability is positive and the term with is positive. Due to the positive , we obtain that is positive, for all . This result means that, from iteration t to iteration , the positive cannot eliminate any attribute profiles. Hence, should be negative.
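The steps described in this paragraph can be sketched as a short derivation. It is a reconstruction under stated assumptions, not a verbatim copy of Equations (A3)–(A6): the penalized objective is assumed to be the expected log-likelihood plus $\lambda H(\boldsymbol{\pi})$ with $H(\boldsymbol{\pi}) = -\sum_{\boldsymbol{\alpha}} \pi_{\boldsymbol{\alpha}} \log \pi_{\boldsymbol{\alpha}}$ and $\lambda < 0$, $\mu$ is taken as the Lagrange multiplier of $\sum_{\boldsymbol{\alpha}} \pi_{\boldsymbol{\alpha}} = 1$, and $\gamma_{i\boldsymbol{\alpha}}$ denotes the posterior probability of examinee i belonging to latent class $\boldsymbol{\alpha}$.

$$
\begin{aligned}
\text{stationarity in } \pi_{\boldsymbol{\alpha}}: &\quad \frac{\sum_{i=1}^{N} \gamma_{i\boldsymbol{\alpha}}}{\pi_{\boldsymbol{\alpha}}} - \lambda \left(\log \pi_{\boldsymbol{\alpha}} + 1\right) + \mu = 0, \\
\text{multiply by } \pi_{\boldsymbol{\alpha}} > 0: &\quad \sum_{i=1}^{N} \gamma_{i\boldsymbol{\alpha}} - \lambda \pi_{\boldsymbol{\alpha}} \log \pi_{\boldsymbol{\alpha}} + (\mu - \lambda)\, \pi_{\boldsymbol{\alpha}} = 0, \\
\text{sum over } \boldsymbol{\alpha}: &\quad N + \lambda H(\boldsymbol{\pi}) + (\mu - \lambda) = 0, \\
\text{fixed-point update}: &\quad \pi_{\boldsymbol{\alpha}}^{(t+1)} = \frac{\sum_{i=1}^{N} \gamma_{i\boldsymbol{\alpha}}^{(t)} - \lambda\, \pi_{\boldsymbol{\alpha}}^{(t)} \log \pi_{\boldsymbol{\alpha}}^{(t)}}{N + \lambda H(\boldsymbol{\pi}^{(t)})}.
\end{aligned}
$$

Under these assumptions, a non-negative $\lambda$ makes every numerator positive, so no class can be eliminated, whereas a negative $\lambda$ can push the numerator of a sparsely populated class to zero or below. The denominator is positive whenever $\lambda > -N / H(\boldsymbol{\pi}^{(t)})$, and since the entropy of a distribution over $2^K$ classes is at most $K \log 2$, the condition $\lambda > -N / (K \log 2)$ is sufficient.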

The derivatives of item parameters and are

Therefore, the solutions of item parameters are

For Algorithm 2, if some item parameters disappear, the derivatives and make no sense. The event is reflected in is that or takes the value 0. In Algorithm 2, we should find those items and set the corresponding item parameters to 0. This is the key difference between Algorithms 1 and 2.

Appendix C. Proof of Theorem 1

Proof of Theorem 1.

The denominator of Equation (A6) must be positive, so , for all . Due to , then must be positive. Noting the discrete Shannon entropy , the conclusion can be obtained. □

References

1. Junker, B.W.; Sijtsma, K. Cognitive assessment models with few assumptions, and connections with nonparametric item response theory. Appl. Psychol. Meas. 2001, 25, 258–272.

2. Templin, J.L.; Henson, R.A. Measurement of psychological disorders using cognitive diagnosis models. Psychol. Methods 2006, 11, 287–305.

3. Maris, E. Estimating multiple classification latent class models. Psychometrika 1999, 64, 187–212.

4. Hartz, S.M. A Bayesian Framework for the Unified Model for Assessing Cognitive Abilities: Blending Theory with Practicality. Diss. Abstr. Int. B Sci. Eng. 2002, 63, 864.

5. DiBello, L.V.; Stout, W.F.; Roussos, L.A. Unified cognitive/psychometric diagnostic assessment likelihood-based classification techniques. In Cognitively Diagnostic Assessment; Routledge: London, UK, 1995; pp. 361–389.

6. Henson, R.A.; Templin, J.L.; Willse, J.T. Defining a family of cognitive diagnosis models using log-linear models with latent variables. Psychometrika 2009, 74, 191–210.

7. de la Torre, J. The generalized DINA model framework. Psychometrika 2011, 76, 179–199.

8. von Davier, M. A general diagnostic model applied to language testing data. ETS Res. Rep. Ser. 2005, 2005, i-35.

9. Liu, J.; Xu, G.; Ying, Z. Data-driven learning of Q-matrix. Appl. Psychol. Meas. 2012, 36, 548–564.

10. Chen, Y.; Liu, J.; Xu, G.; Ying, Z. Statistical analysis of Q-matrix based diagnostic classification models. J. Am. Stat. Assoc. 2015, 110, 850–866.

11. Xu, G.; Shang, Z. Identifying latent structures in restricted latent class models. J. Am. Stat. Assoc. 2018, 113, 1284–1295.

12. Gu, Y.; Xu, G. Identification and Estimation of Hierarchical Latent Attribute Models. arXiv 2019, arXiv:1906.07869.

13. Gu, Y.; Xu, G. Partial identifiability of restricted latent class models. Ann. Stat. 2020, 48, 2082–2107.

14. Chen, J. Optimal rate of convergence for finite mixture models. Ann. Stat. 1995, 23, 221–233.

15. Gu, Y.; Xu, G. Learning Attribute Patterns in High-Dimensional Structured Latent Attribute Models. J. Mach. Learn. Res. 2019, 20, 1–58.

16. Templin, J.; Bradshaw, L. Hierarchical diagnostic classification models: A family of models for estimating and testing attribute hierarchies. Psychometrika 2014, 79, 317–339.

17. Wang, C.; Lu, J. Learning attribute hierarchies from data: Two exploratory approaches. J. Educ. Behav. Stat. 2021, 46, 58–84.

18. Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.

19. Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

20. Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 2006, 68, 49–67.

21. Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

22. Ma, J.; Wang, T. Entropy penalized automated model selection on Gaussian mixture. Int. J. Pattern Recognit. Artif. Intell. 2004, 18, 1501–1512.

23. Huang, T.; Peng, H.; Zhang, K. Model selection for Gaussian mixture models. Stat. Sin. 2017, 27, 147–169.

24. de la Torre, J. DINA model and parameter estimation: A didactic. J. Educ. Behav. Stat. 2009, 34, 115–130.

25. Culpepper, S.A. Bayesian estimation of the DINA model with Gibbs sampling. J. Educ. Behav. Stat. 2015, 40, 454–476.

26. Wang, J.; Shi, N.; Zhang, X.; Xu, G. Sequential Gibbs Sampling Algorithm for Cognitive Diagnosis Models with Many Attributes. Multivar. Behav. Res. 2022, 57, 840–858.

27. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

28. Cover, T.M.; Thomas, J.A. Elements of Information Theory; Wiley-Interscience: Hoboken, NJ, USA, 2006.

29. Chen, J.; Chen, Z. Extended Bayesian information criteria for model selection with large model spaces. Biometrika 2008, 95, 759–771.

30. Xu, G. Identifiability of restricted latent class models with binary responses. Ann. Stat. 2017, 45, 675–707.

31. Xu, G.; Zhang, S. Identifiability of diagnostic classification models. Psychometrika 2016, 81, 625–649.

32. Tatsuoka, C. Data analytic methods for latent partially ordered classification models. J. R. Stat. Soc. Ser. C (Appl. Stat.) 2002, 51, 337–350.

33. de la Torre, J.; Douglas, J.A. Higher-order latent trait models for cognitive diagnosis. Psychometrika 2004, 69, 333–353.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).