Abstract

Deep reinforcement learning (DRL) has attracted strong interest since AlphaGo defeated human professionals, and it has been widely applied to stock trading. In this paper, an enhanced stock trading strategy called Dual Action and Dual Environment Deep Q-Network (DADE-DQN) is proposed to increase profit and reduce risk. Our approach has several key features. First, to achieve a better balance between exploration and exploitation, dual-action selection and dual-environment mechanisms are incorporated into the DQN framework. Second, our approach makes better use of stored transitions by maintaining independent replay memories and performing dual mini-batch updates, leading to faster convergence and more efficient learning. Third, a novel deep network structure that combines Long Short-Term Memory (LSTM) and attention mechanisms is introduced, improving the network’s ability to capture essential features and patterns. In addition, a feature selection method based on mutual information is presented to identify and eliminate irrelevant input features. Evaluation on six datasets shows that DADE-DQN outperforms multiple DRL-based strategies (TDQN, DQN-Pattern, DQN-Vanilla) and traditional strategies (B&H, S&H, MR, TF). For example, on the KS11 dataset, DADE-DQN achieved a cumulative return of 79.43% and a Sharpe ratio of 2.21, outperforming all other methods. These experimental results demonstrate the effectiveness of our approach in enhancing stock trading strategies.

MSC:

68T07

1. Introduction

The primary objective of investing in financial assets is usually to optimize returns while effectively managing risk, that is, to strike a balance between risk and return that yields sustainable long-term returns. Traditionally, human traders analyze market data and trends, evaluate price fluctuations, and execute buy and sell orders based on their own judgment. In contrast, algorithmic trading uses computer programs that follow pre-defined algorithms and rules to automate decision making and trade execution without human intervention. Algorithmic trading mainly includes rule-based and machine learning (ML)-based approaches. Rule-based algorithmic trading involves analyzing and modeling the market, deriving a set of rules from market trends, price fluctuations, and other factors, and then making trading decisions according to those rules. Machine learning-based algorithmic trading, on the other hand, trains ML models to discover market patterns and then makes decisions and trades based on those patterns.

Rule-based algorithmic trading methods have been widely used to solve financial decision problems, treating the time series of financial assets as a sequence of random variables governed by a stochastic process [1]. These methods often oversimplify real-world financial markets by assuming discrete time points, which can lead to suboptimal outcomes and financial losses. Developing models that capture the complex nature of financial markets without such oversimplification remains a major mathematical and computational challenge.

AlphaGo’s success in competing against human professionals has sparked interest in reinforcement learning (RL) as a promising approach for statistical modeling and data processing in finance [2]. Combining RL with deep learning techniques has produced significant progress in several areas. Notable examples include AlphaGo [3], which used RL and Monte Carlo tree search to defeat top Go players, and AlphaStar and OpenAI Five, which demonstrated extraordinary performance in StarCraft II and Dota 2 through DRL-based methods [4,5]. In DRL, an agent interacts sequentially with an unknown environment and uses deep learning techniques to make decisions from the information it acquires, with the objective of maximizing cumulative reward. RL techniques have demonstrated significant potential in addressing complex sequential decision problems.

Advances in DRL and the availability of massive financial datasets have motivated researchers to apply DRL algorithms to financial markets. These researchers model and analyze financial markets by adapting DRL techniques: using historical asset prices as input, they design neural networks that optimize trading actions to maximize returns or Sharpe ratios. DRL has been applied successfully in several financial areas, including optimal execution, portfolio optimization, and market making.

In this paper, DADE-DQN (Dual Action and Dual Environment Deep Q-Network), a trading strategy that extends and enhances the DQN algorithm, is proposed. First, to achieve a better balance between exploration and exploitation, dual-action selection and dual-environment mechanisms are added to the DQN framework, enabling the agent to explore new actions while exploiting what it has learned. Second, for faster convergence and more efficient learning, our approach makes better use of stored transitions by maintaining independent replay memories and performing dual mini-batch updates. Third, a novel deep network structure combining LSTM and attention mechanisms is introduced, enhancing the network’s ability to capture key features and patterns. In addition, a feature selection method is proposed that uses mutual information to identify and eliminate irrelevant features, improving the quality of the input data.

In summary, our study makes the following key contributions:

- A novel DRL model, Dual Action and Dual Environment Deep Q-Network, named DADE-DQN, is proposed. The proposed model incorporates dual action selection and dual environment mechanisms into the DQN framework to effectively balance exploration and exploitation.

- Stored transitions are used more effectively by maintaining independent replay memories and performing dual mini-batch updates, which leads to faster convergence and more efficient learning.

- A novel deep network architecture combining LSTM and attention mechanisms is introduced to capture important features and patterns in stock market data.

- A feature selection method based on mutual information is proposed to identify and eliminate irrelevant features, improving the quality of the input data.

- Evaluations on six datasets show that the proposed DADE-DQN algorithm outperforms multiple DRL-based strategies (TDQN, DQN-Pattern, DQN-Vanilla) and traditional strategies (B&H, S&H, MR, TF).

The rest of this paper is organized as follows. Section 2 describes related work. Section 3 formalizes the stock trading problem and describes the proposed approach in detail. Section 4 presents and analyzes the experimental results. Section 5 presents the discussion, and Section 6 presents the conclusions and future work.

2. Related Work

This section reviews two research areas: the improvement of DRL algorithms and DRL in financial trading.

2.1. Improvements in DRL Algorithms

Recent advances in RL and deep learning have brought new ideas to various fields. In 2013, Mnih et al. introduced the deep Q-network (DQN) algorithm, which employed an experience replay buffer and a target network to stabilize training, and demonstrated that DQN achieved human-level performance in Atari games [6]. Since then, several improved versions of DQN have emerged, which can be categorized into enhancements of training algorithms [7,8,9,10,11,12,13,14,15], new learning mechanisms [16,17], and improvements to neural network structures [18,19].

In terms of training algorithm improvements, Hasselt et al. proposed the double DQN algorithm, combining DQN with double Q-learning to address the overestimation of action values in Q-learning [7]. Lipton et al. introduced Bayes-by-Backprop Q-networks (BBQ-networks) to improve the performance of DQNs in scenarios with sparse rewards and large action spaces, exploring via Thompson sampling rather than ε-greedy exploration [8]. Mossalam et al. developed the deep optimistic linear support learning algorithm, which combined an optimistic linear support framework with deep Q-learning to solve high-dimensional multi-objective decision problems in which the relative importance of the objectives is unknown [9]. Mahajan et al. proposed a symmetric DQN that exploited symmetries in the environment and incorporated them into the function approximation process [10]. Taitler et al. introduced the Guided DQN algorithm to learn control strategies for hitting in air hockey [11]. Levine et al. presented the least squares DQN, which combined DQN with linear least squares [12]. Leibfried et al. extended information-theoretic bounded rationality to high-dimensional state spaces and incorporated intrinsic penalty signals into DQNs to mitigate overestimation of cumulative reward and reduce sample complexity [13]. Anschel et al. proposed the averaged DQN algorithm to alleviate the negative effects of variability and instability in DRL algorithms [14]. Hester et al. introduced Deep Q-learning from Demonstrations (DQfD), which significantly accelerated learning by exploiting demonstration data [15].

Regarding new learning mechanisms, Schaul et al. proposed prioritized experience replay, which uses importance sampling to correct the bias introduced by non-uniform sampling during updates. This technique improved the performance of DQN and DDQN in Atari games [16]. Sorokin et al. introduced the deep attention recurrent Q-network (DARQN), which incorporated an attention mechanism into the DRQN structure. This addition not only enhanced computational speed but also improved the interpretability of the model [17].

Concerning improvements to neural network structures, Hausknecht et al. presented the deep recurrent Q-network (DRQN) algorithm to handle partially observable states. This approach combined a long short-term memory (LSTM) network with DQN by replacing the first fully connected layer of DQN with an LSTM layer [18]. Wang et al. proposed the dueling network architecture, in which the layers following the convolutional feature extractor were split into two streams: one estimating the scalar state-value function and the other estimating the state-dependent action advantage function. The two streams were then combined in the output module to obtain the Q-value of each action [19].

2.2. DRL in Financial Trading

Given the abundance of financial data and advances in machine learning technology, RL has become a prominent research direction for trading strategies [20,21]. Researchers have integrated RL with deep neural networks, developing DRL methods that learn trading strategies from historical data. These approaches can be classified into three categories: value-based [22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38], policy-based [39,40,41,42,43,44], and actor–critic [45,46,47,48,49,50]. When it comes to generating trading strategies for a single stock, however, DRL algorithms primarily adopt value-based approaches. Table 1 shows a representative selection of the datasets, state and action spaces, reward functions, methods, and performance metrics used in articles on value-based RL. This list is not exhaustive, but it captures a significant portion of the diversity in the literature. For instance, Chen et al. proposed a framework that combined DQN with Monte Carlo tree search to learn an optimal trading policy [24]. Jeong et al. introduced a deep Q-learning approach for financial trading decisions, focusing on stock quantity prediction, strategy selection, and transfer learning across different markets [25]. Ma et al. developed a parallel multi-module DRL algorithm for stock trading that employs multiple neural networks and RL techniques to capture both current market data and long-term historical trends [29]. Li et al. combined Convolutional Neural Networks (CNN) and LSTM networks with Double DQN and Dueling DQN algorithms to learn optimal dynamic trading strategies from stock data and candlestick charts [33]. Taghian et al. proposed a framework that integrated DQN with technical indicators to learn an optimal trading policy for individual assets [34].
Other studies have explored variations of DQN, such as Double DQN, and applied them to optimize parameters in automated trading systems [36]. Liu et al. introduced a new approach for algorithmic trading that employed DRL, combining financial data, news sentiment data, and technical indicator data. Their framework used an actor–critic method and a dueling network to extract temporal features and candlestick chart features for learning the optimal trading policy [35].

Table 1.

Value-based RL algorithms for single-asset trading.

In the stock market, price fluctuations are influenced by many factors, including price and liquidity indicators. To tackle overfitting, computational complexity, and the influence of irrelevant variables, researchers employ feature (factor) filtering methods. For example, Nesselroade et al. provided a detailed explanation of the correlation coefficient, which assesses the direction and strength of the relationship between two variables [51]. The Pearson coefficient has been used to analyze the degree of correlation between features and response variables: Cai et al. used it to identify influential factors by measuring the linear correlation between variables [52], and Li et al. proposed a stock price prediction method that combined the Pearson correlation coefficient with a Broad Learning System for multi-indicator feature selection [53]. These methods aim to select relevant factors and construct an initial indicator system for model training. Because correlation coefficients such as Pearson’s capture only linear relationships, mutual information has also been employed for feature selection to capture nonlinear relationships between features and response variables. Guo et al. used mutual information to capture nonlinear relationships between stocks, considering it a more effective measure than correlation coefficients in volatile stock markets [54]. Kong et al. performed feature selection based on time-series mutual information and the minimum redundancy maximum relevance (mRMR) technique to analyze stock-specific micro-trading patterns prior to price increases [55]. Vasileios et al. combined proximal policy optimization with rule-based safety mechanisms to both maximize profit-and-loss returns and minimize trading risk.

Motivated by the literature reviewed above, this paper introduces a novel DRL model called DADE-DQN (Dual Action and Dual Environment Deep Q-Network). The model incorporates dual-action selection and dual-environment mechanisms into the DQN framework to balance exploration and exploitation. Meanwhile, faster convergence and more efficient learning are achieved by maintaining independent replay memories and performing dual mini-batch updates, which makes better use of stored transitions. In addition, a novel deep network structure combining LSTM and an attention mechanism is proposed to improve the performance of the network; the attention mechanism lets the agent focus on crucial features and patterns in the stock market data. Because the stock market is influenced by many factors, representing the state accurately in RL is challenging. To address this, a mutual information-based factor filtering approach is used to remove irrelevant features and mitigate the overfitting and computational complexity associated with large feature sets.

3. Materials and Methods

Building on the progress of DQN, the DADE-DQN method is proposed to improve stock trading strategies using a dual-action, dual-environment deep Q-network. The DQN algorithm has been widely used to optimize trading strategies with stock states as inputs.

3.1. Materials

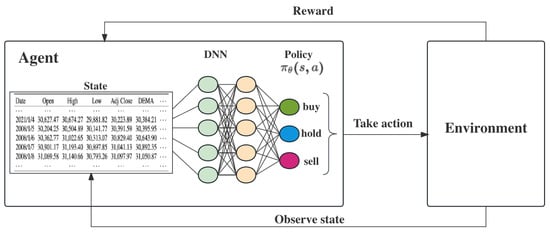

RL is a framework involving an agent, a set of states S, and a set of actions A available in each state [56]. The goal of RL is to improve decision making by letting the agent learn from the consequences of its actions. The Markov decision process (MDP) is a mathematical framework for modeling decision-making problems in which an agent interacts with an environment to achieve certain goals. It is widely used in fields including finance and economics to analyze and optimize decision-making processes; in financial markets, and in the stock market in particular, MDPs can be used to understand and potentially improve decision-making strategies [57]. Figure 1 illustrates the agent’s interaction with the MDP environment.

Figure 1.

The interaction diagram of an agent with the Markov decision process environment. Different colored circles represent different neurons.

When an agent performs an action $a_t$, it transitions from one state to another and receives a reward $r_t$. The agent’s goal is to maximize its cumulative reward, so it must consider not only the reward obtained in the current state but also the rewards achievable in the future, and these potential future rewards influence its current action. The potential future reward is calculated as the discounted sum of the rewards for all future steps starting from the current state, and the optimal policy is the one that maximizes its expected value. Mathematically, this can be represented as Equations (1) and (2):

$$\pi^{*} = \arg\max_{\pi} \mathbb{E}_{\pi}\left[R\right] \tag{1}$$

$$R = \sum_{t=0}^{\infty} \gamma^{t} r_{t} \tag{2}$$

where $\pi^{*}$ represents the optimal policy, $\pi$ represents the current policy, $\gamma$ is the discount factor ($0 \le \gamma \le 1$), $r_t$ represents the immediate reward at time step t, and R denotes the cumulative reward. The discount factor determines the relative importance of future rewards in the cumulative reward.
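Because a trading episode is finite in practice, the sum in Equation (2) can be evaluated over the rewards actually observed. The following minimal Python sketch (the function name is hypothetical, not part of the paper) computes it with the backward recursion $R_t = r_t + \gamma R_{t+1}$, which avoids computing powers of $\gamma$ explicitly.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return R = sum_t gamma**t * r_t over a finite reward sequence (Eq. 2, truncated)."""
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    total = 0.0
    # Walk backwards so that each step applies R_t = r_t + gamma * R_{t+1}.
    for r in reversed(rewards):
        total = r + gamma * total
    return total


print(discounted_return([1.0, 1.0, 1.0], 0.5))  # 1.75
```

A smaller $\gamma$ shrinks the contribution of later rewards, which is the trade-off the discount factor controls.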

3.1.1. States

In the context of algorithmic trading, the RL environment can be viewed as an abstraction that contains the trading mechanism and all relevant information that can influence the agent’s trading activity. At each time step t, the RL agent receives information including its own status (current trading position, available cash, etc.), stock information (opening price, closing price, volume, etc.), technical indicators (moving average convergence divergence, relative strength index, average directional index, etc.), macroeconomic information, and news.

Therefore, it is important to select and properly process information from the environment. Many existing studies use raw stock information as the RL input, which may not give the agent enough information to distinguish market situations and make informed decisions. In this paper, mutual information is used to select highly relevant features. The state of the environment at time t is denoted as $s_t \in \mathbb{R}^{m \times n}$, where m is the number of technical indicators derived from the price information and n is the time window length. It can be expressed as Equations (3) and (4):

$$s_t = \left[\mathbf{x}_{t-n+1}, \ldots, \mathbf{x}_{t}\right] \in \mathbb{R}^{m \times n} \tag{3}$$

$$\mathbf{x}_{t} = \left[x_{1,t}, x_{2,t}, \ldots, x_{m,t}\right]^{\top} \tag{4}$$

where $x_{i,t}$ denotes the ith normalized technical indicator on the tth day, and n is the number of past days included in the state.
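Equations (3) and (4) amount to stacking the last n daily feature vectors column by column. A minimal sketch, assuming the daily features are already normalized and stored as one list per day (the function name and data layout are hypothetical):

```python
from typing import List, Sequence


def build_state(daily_features: Sequence[Sequence[float]], t: int, n: int) -> List[List[float]]:
    """Return the m x n state s_t: row i holds indicator i over days t-n+1 .. t.

    daily_features[d] is the (already normalized) length-m feature vector of day d.
    """
    if n < 1 or t - n + 1 < 0 or t >= len(daily_features):
        raise ValueError("window [t-n+1, t] is out of range")
    window = daily_features[t - n + 1 : t + 1]  # n days, oldest first
    m = len(window[0])
    # Transpose the n x m window into the m x n layout x_{i,t} of Eq. (3).
    return [[day[i] for day in window] for i in range(m)]


days = [[0.1, 1.0], [0.2, 2.0], [0.3, 3.0], [0.4, 4.0]]  # 4 days, m = 2
print(build_state(days, t=3, n=2))  # [[0.3, 0.4], [3.0, 4.0]]
```

Each column is one day and each row one indicator, so the most recent day is always the last column.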

3.1.2. Actions

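At each time step t, the RL agent first observes the environment state $s_t$. According to the RL policy $\pi$, the agent selects an action $a_t$. The state–action value is referred to as the Q-value and represents the expected cumulative reward, expressed as Equation (5):

$$Q^{\pi}(s_t, a_t) = \mathbb{E}_{\pi}\left[\sum_{k=0}^{\infty} \gamma^{k} r_{t+k} \,\middle|\, s_t, a_t\right] \tag{5}$$

This Q-value is the expected cumulative reward obtained by taking action $a_t$ in state $s_t$ and following policy $\pi$ thereafter. The optimal Q-value, denoted $Q^{*}$, is the one achieved by the optimal policy $\pi^{*}$, which maximizes the Q-value. The Bellman equation can be expressed as Equation (6):

$$Q^{\pi}(s_t, a_t) = \mathbb{E}_{\pi}\left[r_t + \gamma\, Q^{\pi}(s_{t+1}, a_{t+1})\right] \tag{6}$$

The optimal Q-value can be expressed recursively as Equation (7):

$$Q^{*}(s_t, a_t) = \mathbb{E}\left[r_t + \gamma \max_{a_{t+1}} Q^{*}(s_{t+1}, a_{t+1})\right] \tag{7}$$

where $a_{t+1}$ represents the action taken in the subsequent state $s_{t+1}$.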
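In DQN-style training, Equation (7) is used through its one-sample estimate: the target for $Q(s_t, a_t)$ is $r_t + \gamma \max_{a_{t+1}} Q(s_{t+1}, a_{t+1})$, with no bootstrap term at the end of an episode. A minimal sketch with a hypothetical helper name; the next-state Q-values would come from the (target) Q-network.

```python
from typing import Sequence


def q_learning_target(reward: float, next_q_values: Sequence[float],
                      gamma: float, done: bool = False) -> float:
    """One-sample estimate of Eq. (7): y = r_t + gamma * max_a' Q(s_{t+1}, a')."""
    if done:
        # No future reward can be collected after a terminal state.
        return reward
    return reward + gamma * max(next_q_values)


print(q_learning_target(1.0, [0.5, 2.0, -1.0], gamma=0.5))  # 2.0
```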

This paper assumes that the agent’s actions do not influence the financial market. Consequently, the action space can be expressed as Equation (8).

where , , and is determined by the Q-network at each time step t.

To incorporate the position information , the final action and updated position information are computed as Equations (9) and (10).

where the symbol ⊕ denotes element-wise multiplication followed by summation, that is, an inner product: each element of the first operand is multiplied by the corresponding element of the second, and the products are summed to yield a single final value.
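The ⊕ operation itself is just an inner product. The sketch below shows it in isolation; the operands in the usage line (a one-hot choice over three actions and a per-action direction vector) are hypothetical illustrations, since the exact operands of Equations (9) and (10) are not reproduced here.

```python
from typing import Sequence


def circle_plus(a: Sequence[float], b: Sequence[float]) -> float:
    """Element-wise multiplication followed by summation (an inner product)."""
    if len(a) != len(b):
        raise ValueError("operands must have the same length")
    return sum(x * y for x, y in zip(a, b))


# Hypothetical operands: one-hot choice of the third action, per-action trade direction.
one_hot_choice = [0, 0, 1]
directions = [-1, 0, 1]
print(circle_plus(one_hot_choice, directions))  # 1
```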

The position information takes values from the set , where indicates no initial position. A positive value of (e.g., ) indicates a long position, while a negative value (e.g., ) indicates a short position. In the action space , when and , the agent establishes a long position. When and , it closes this long position. Similarly, when and , the agent opens a short position and closes this short position when and . These trading strategies are intuitive and realistic, allowing agents to behave more similarly to human traders.

3.1.3. Reward Function

The reward function is an important component of an RL algorithm: it determines the reward assigned to each action taken by the agent, and the agent learns to maximize the cumulative reward over time. In this study, the short-term Sharpe ratio (SSR) reward is adopted [37]. The SSR reward incorporates information about the subsequent k days as well as the agent’s position, which makes it an appropriate measure of the actual reward in the RL context. Mathematically, the SSR reward function is defined as Equations (11)–(13).

where the returns over the next k days are calculated as the percentage change in price from time t to t + k.
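To make the idea concrete, the following is a hedged sketch of an SSR-style reward, not a reproduction of Equations (11)–(13). It assumes that the SSR is the mean of the k daily percentage returns after day t divided by their standard deviation, multiplied by the agent’s position (+1 long, 0 flat, −1 short); the function name and the small `eps` guard are hypothetical additions.

```python
import statistics
from typing import Sequence


def ssr_reward(prices: Sequence[float], t: int, k: int, position: int,
               eps: float = 1e-8) -> float:
    """Hypothetical short-term Sharpe-ratio reward.

    ASSUMPTION: SSR = mean / std of the k daily percentage returns after day t,
    signed by the agent's position (+1 long, 0 flat, -1 short).
    """
    if k < 1 or t + k >= len(prices):
        raise ValueError("need k >= 1 and k future prices after day t")
    # Daily percentage returns over the look-ahead window t -> t + k.
    returns = [(prices[d + 1] - prices[d]) / prices[d] for d in range(t, t + k)]
    mean = statistics.fmean(returns)
    std = statistics.pstdev(returns)
    # eps avoids division by zero when the window has constant returns.
    return position * mean / (std + eps)


prices = [100.0, 110.0, 120.0]
print(ssr_reward(prices, t=0, k=2, position=1) > 0)  # True
```

Because the reward looks k days ahead, it can only be computed on historical data during training, which is consistent with its use as a learning signal rather than as an input to the agent.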

3.2. Methods

3.2.1. Dual Action and Dual Environment Deep Q-Network (DADE-DQN)

In financial markets, achieving higher profits while minimizing risk is a difficult task for traders. Inspired by the DQN algorithm, a novel approach called Dual Action and Dual Environment Deep Q-Network (DADE-DQN) is proposed to address this challenge. DADE-DQN is an extension of the DQN algorithm specifically designed for the trading problem. DQN-based RL agents typically require a large number of training episodes to learn the optimal strategy and maximize the cumulative reward. In stock trading, however, this can lead to overfitting and poor test-set performance, because the data are noisy and highly random and the amount of real stock data is limited. Our goal is to mitigate overfitting and explore the best policy efficiently with limited data. The main features of the DADE-DQN algorithm are as follows:

Optimal and Exploit Replay Memory: The DADE-DQN algorithm maintains two separate replay memories: an optimal replay memory (D) and an exploit replay memory. Keeping them separate allows transitions to be replayed selectively according to whether they serve exploration or exploitation, which makes learning more effective.

Dual Action Selection: In DADE-DQN, the agent takes two different actions at the same time and obtains two sets of transitions from the environment, which partially alleviates the problem of limited data. During training, these two sets of transitions are used to optimize, in alternation, the maximum and minimum Q-values among the actions evaluated by the Q-network, which helps the agent avoid selecting the worst action. The best action is selected by maximizing the Q-value, while the exploit action is selected by minimizing it. This dual action selection strategy enhances the agent’s ability to explore while still exploiting its learned values.
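The two replay memories and the dual action selection described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ implementation: transitions are plain tuples `(state, action, reward, next_state, done)`, both memories are bounded FIFO buffers of the same hypothetical `capacity`, and the class and function names are hypothetical.

```python
import random
from collections import deque
from typing import Deque, List, Sequence, Tuple

# Hypothetical transition layout: (state, action, reward, next_state, done)
Transition = Tuple[tuple, int, float, tuple, bool]


def dual_actions(q_values: Sequence[float]) -> Tuple[int, int]:
    """Return (argmax action, argmin action) for one state's Q-values."""
    idx = range(len(q_values))
    return max(idx, key=q_values.__getitem__), min(idx, key=q_values.__getitem__)


class DualReplayMemory:
    """Two independent FIFO buffers: D (optimal branch) and the exploit buffer."""

    def __init__(self, capacity: int, seed: int = 0) -> None:
        self.optimal: Deque[Transition] = deque(maxlen=capacity)
        self.exploit: Deque[Transition] = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def push(self, optimal_tr: Transition, exploit_tr: Transition) -> None:
        # Each time step yields one transition per selected action.
        self.optimal.append(optimal_tr)
        self.exploit.append(exploit_tr)

    def sample(self, branch: str, batch_size: int) -> List[Transition]:
        if branch not in ("optimal", "exploit"):
            raise ValueError("branch must be 'optimal' or 'exploit'")
        buffer = self.optimal if branch == "optimal" else self.exploit
        return self._rng.sample(list(buffer), batch_size)


best, worst = dual_actions([0.1, 0.7, -0.2])
print(best, worst)  # 1 2
```

Storing the argmax and argmin transitions in different buffers keeps the two learning signals from diluting each other when minibatches are drawn.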

Dual Minibatch Update: The DADE-DQN algorithm performs dual minibatch updates based on the selected actions. In one training interval, it samples transitions from the optimal replay memory (D) and uses argmax action selection to update the Q-value for the next state. In the alternate interval, it samples transitions from the exploit replay memory and updates the Q-values using argmin action selection. This alternating update process makes learning more efficient and makes better use of both replay memories.
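One possible reading of the alternating update is sketched below. The assumption, labeled in the code, is that the “optimal” branch bootstraps with $\max_{a'} Q(s', a')$ and the “exploit” branch with $\min_{a'} Q(s', a')$, and that the branches simply alternate between consecutive training intervals; the paper’s exact rule may differ, and all names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Transition = Tuple[tuple, int, float, tuple, bool]
QFunction = Callable[[tuple], Sequence[float]]


def dual_minibatch_targets(batch: List[Transition], target_q: QFunction,
                           gamma: float, branch: str) -> List[float]:
    """Bootstrap targets for one minibatch.

    ASSUMPTION: the 'optimal' branch bootstraps with max_a' Q(s', a') and the
    'exploit' branch with min_a' Q(s', a'); this is one reading of the text.
    """
    if branch not in ("optimal", "exploit"):
        raise ValueError("branch must be 'optimal' or 'exploit'")
    pick = max if branch == "optimal" else min
    targets = []
    for _state, _action, reward, next_state, done in batch:
        bootstrap = 0.0 if done else gamma * pick(target_q(next_state))
        targets.append(reward + bootstrap)
    return targets


def branch_for_step(step: int) -> str:
    """Alternate the two updates between consecutive training intervals."""
    return "optimal" if step % 2 == 0 else "exploit"


q = lambda s: [1.0, 3.0, -1.0]  # stand-in for a target Q-network
batch = [((0,), 0, 1.0, (1,), False), ((1,), 2, 0.5, (2,), True)]
print(dual_minibatch_targets(batch, q, 0.5, branch_for_step(0)))  # [2.5, 0.5]
```

The targets would then be regressed against $Q(s, a)$ for the stored actions, exactly as in a standard DQN loss.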

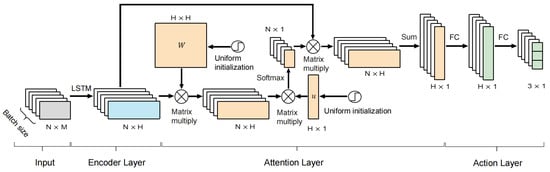

Novel Q-network: The efficacy of DRL algorithms depends largely on the structure of the value or policy network used by the agent. Therefore, a dedicated network is designed to approximate the action-value function of DADE-DQN. The network consists of four main layers: an input layer, an encoder layer, an attention layer, and an action layer, as shown in Figure 2. In recent years, attention mechanisms have shown strong results in computer vision (CV) and natural language processing (NLP). In this paper, the hierarchical attention mechanism proposed by Yang et al. is used in the design of the network [58].

Figure 2.

The Q-network structure of the proposed algorithm.

Input: As stated in Section 3.1.1, the input consists of m components, namely several technical indicators and the open, close, high, and low prices over the previous n days, which together form the state at time step t. During training, mini-batches of such states are used to train the network.

Encoder Layer: The input is first passed through an LSTM network to obtain a hidden representation $h_t$ that captures the temporal information [59]. LSTM is a specialized type of recurrent neural network (RNN) that alleviates the vanishing gradient problem and facilitates the learning of long-term dependencies.

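The fundamental operations are given in Equations (14)–(18), where σ is the sigmoid function, $[h_{t-1}, x_t]$ denotes concatenation, and ⊙ denotes the element-wise (Hadamard) product:

$$f_t = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \tag{14}$$

$$i_t = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \tag{15}$$

$$\tilde{C}_t = \tanh\left(W_C [h_{t-1}, x_t] + b_C\right) \tag{16}$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{17}$$

$$o_t = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right), \qquad h_t = o_t \odot \tanh(C_t) \tag{18}$$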

The core of the LSTM is the cell state, to which information can be selectively added or removed through structures called gates. Each gate consists of a sigmoid neural network layer followed by a point-wise product, and it regulates the flow of information. The sigmoid outputs values between 0 and 1, where 1 means “keep this information completely” and 0 means “discard this information entirely”. An LSTM cell contains three gates, the forget gate, the input gate, and the output gate, which together protect and manage the cell state. The forget gate uses a sigmoid layer that takes the previous output $h_{t-1}$ and the current input $x_t$ to determine which information should be discarded from the cell state $C_{t-1}$. The input gate works together with a tanh layer to control the addition of new information: the tanh layer generates a candidate vector $\tilde{C}_t$, and the input gate’s sigmoid layer outputs a value between 0 and 1 for each element of $\tilde{C}_t$, determining how much of it is added to the cell state $C_t$. Finally, the output gate determines how much of the current cell state is exposed as the output $h_t$.
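The gate equations can be traced by hand with a scalar cell. The sketch below implements one standard LSTM step with hidden size 1 so that every quantity is a single number; the function names and the weight layout (one triple per gate) are hypothetical simplifications, whereas a real encoder would use a library layer such as PyTorch’s `nn.LSTM`.

```python
import math
from typing import Dict, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t: float, h_prev: float, c_prev: float,
              w: Dict[str, Tuple[float, float, float]]) -> Tuple[float, float]:
    """One step of a scalar (hidden size 1) LSTM cell; returns (h_t, c_t).

    w[g] = (weight on h_{t-1}, weight on x_t, bias) for gates g in {"f", "i", "c", "o"}.
    """
    def pre(g: str) -> float:
        wh, wx, b = w[g]
        return wh * h_prev + wx * x_t + b

    f_t = sigmoid(pre("f"))        # forget gate: how much of c_{t-1} to keep
    i_t = sigmoid(pre("i"))        # input gate: how much new content to admit
    c_tilde = math.tanh(pre("c"))  # candidate content
    c_t = f_t * c_prev + i_t * c_tilde
    o_t = sigmoid(pre("o"))        # output gate: how much of the cell state to expose
    h_t = o_t * math.tanh(c_t)
    return h_t, c_t


zero = {g: (0.0, 0.0, 0.0) for g in "fico"}
print(lstm_step(1.0, 0.0, 1.0, zero)[1])  # 0.5
```

With all weights at zero every gate outputs 0.5 and the candidate is 0, so the cell simply halves its previous state, which is an easy sanity check of the update in Equation (17).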

Attention Layer: The hidden states from different days are not equally important to the agent. To address this, an attention mechanism is introduced that learns a weight for each day’s hidden state and aggregates the weighted hidden states into a single summary representation. The hierarchical attention mechanism used in this study operates as Equations (19)–(21):

$$u_t = \tanh\left(W h_t + b\right) \tag{19}$$

$$\alpha_t = \frac{\exp\left(u_t^{\top} u\right)}{\sum_{j} \exp\left(u_j^{\top} u\right)} \tag{20}$$

$$v = \sum_{t} \alpha_t h_t \tag{21}$$

In our implementation, the hidden representation $h_t$ is first transformed by a uniformly initialized matrix W (with bias b), yielding $u_t$ as a weighted representation of $h_t$. The importance of each state is then measured by the similarity between $u_t$ and a uniformly initialized context vector u, and a softmax function is applied to obtain the normalized weights $\alpha_t$. The attention value vector v is computed as the weighted sum of the hidden representations, so it summarizes the information from all days contained in the hidden representation. The matrix W and the context vector u are learned jointly during training.
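Equations (19)–(21) can be checked on toy inputs with the plain-Python sketch below. It is an illustration only: matrices are nested lists, the function name is hypothetical, and in the actual network W, b, and u would be trainable parameters rather than fixed inputs.

```python
import math
from typing import List, Sequence


def attention_pool(hidden: Sequence[Sequence[float]], W: Sequence[Sequence[float]],
                   b: Sequence[float], u: Sequence[float]) -> List[float]:
    """Attention pooling over hidden states h_1..h_n (Eqs. 19-21)."""
    scores = []
    for h_t in hidden:
        # Eq. (19): u_t = tanh(W h_t + b)
        u_t = [math.tanh(sum(w_ij * h_j for w_ij, h_j in zip(row, h_t)) + b_i)
               for row, b_i in zip(W, b)]
        # Similarity between u_t and the context vector u
        scores.append(sum(a * c for a, c in zip(u_t, u)))
    # Eq. (20): softmax, shifted by the max score for numerical stability
    top = max(scores)
    exp_scores = [math.exp(s - top) for s in scores]
    total = sum(exp_scores)
    alpha = [e / total for e in exp_scores]
    # Eq. (21): v = sum_t alpha_t h_t
    dim = len(hidden[0])
    return [sum(alpha[t] * hidden[t][j] for t in range(len(hidden))) for j in range(dim)]


identity = [[1.0, 0.0], [0.0, 1.0]]
print(attention_pool([[1.0, 0.0], [0.0, 1.0]], identity, [0.0, 0.0], [0.0, 0.0]))  # [0.5, 0.5]
```

With a zero context vector every day receives the same weight, so v reduces to the mean of the hidden states; a learned u shifts the weight toward the days it scores as more relevant.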

Action Layer: Finally, the attention value vector v is fed into an action network consisting of two fully connected layers to obtain the Q values of the three available actions.

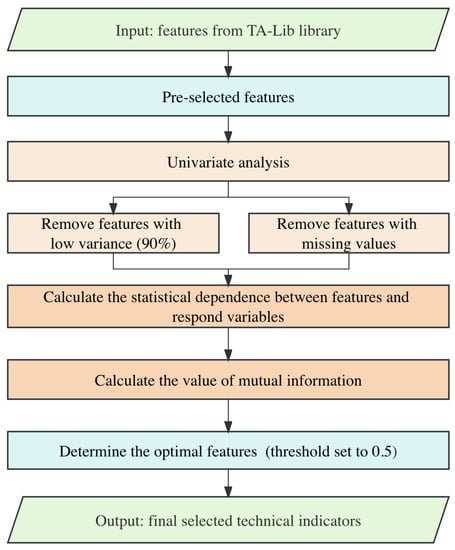

3.2.2. Feature Selection Based on Mutual Information

In this section, mutual information is used to measure the statistical dependence or the amount of information shared between two random variables. Developed within the field of information theory by Claude Shannon, mutual information has become an important concept in various fields. The goal is to select several technical indicators as explanatory variables, with the closing price of the day serving as the response variable.

The feature selection process begins by pre-selecting a set of candidate features from the technical indicator library as potential explanatory variables. Univariate analysis is then used to identify features with low variance or a large number of missing values, which are excluded from further analysis. Next, mutual information is used to measure the dependence between each remaining explanatory variable and the response variable, indicating how strongly each explanatory variable is related to the closing price. Finally, a threshold is applied to select the final technical factors, namely those that exhibit a strong dependence on the closing price. Figure 3 illustrates the feature selection flow.

Figure 3.

The flow of feature selection.

Mutual information quantifies the statistical dependence between two random variables. It is based on the concept of entropy, which measures the uncertainty of a random variable. The mutual information between two random variables X and Y, denoted $I(X;Y)$, is computed from their joint distribution $p(x,y)$ and their marginal distributions $p(x)$ and $p(y)$, as in Equation (22):

$$I(X;Y) = \sum_{x \in X} \sum_{y \in Y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)} \tag{22}$$

where $p(x,y)$ is the joint probability distribution of X and Y, and $p(x)$ and $p(y)$ are their respective marginal distributions. The mutual information $I(X;Y)$ is always non-negative; a higher value indicates a stronger dependence between the variables, and a value of zero indicates that they are independent.
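For discrete samples, Equation (22) can be estimated by replacing the probabilities with empirical frequencies. The minimal plug-in estimator below (hypothetical function name, result in nats) illustrates this. Continuous series such as closing prices must first be discretized, or handled with a nearest-neighbor estimator such as scikit-learn’s `mutual_info_regression`; which estimator the study used is not stated here.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(X;Y) in nats from paired discrete samples (Eq. 22)."""
    if len(x) != len(y) or not x:
        raise ValueError("x and y must be non-empty and of equal length")
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    mi = 0.0
    for (xi, yi), c in joint.items():
        # p(x,y) * log(p(x,y) / (p(x) p(y))) written with raw counts
        mi += (c / n) * math.log(c * n / (px[xi] * py[yi]))
    return mi


print(mutual_information([0, 0, 1, 1], [0, 1, 0, 1]))  # 0.0
```

Identical binary variables with balanced classes give $\log 2 \approx 0.693$ nats, while independent ones give 0, matching the interpretation of Equation (22).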

A threshold is then set on the mutual information scores and used as the criterion for selecting the final technical factors: factors whose mutual information with the closing price exceeds the threshold are considered strongly dependent on it and are included in the final feature set.
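The whole filtering flow (univariate screening, dependence scoring, thresholding) can be sketched as a single function. This is a hedged illustration rather than the study’s code: the missing-value and variance limits are hypothetical defaults, and the scoring function is passed in as a parameter so that any mutual information estimator (for example, a binned plug-in estimate or scikit-learn’s `mutual_info_regression`) can be plugged in.

```python
import math
from typing import Callable, Dict, List, Optional, Sequence

Scorer = Callable[[Sequence[float], Sequence[float]], float]


def select_features(features: Dict[str, Sequence[Optional[float]]],
                    target: Sequence[float], score_fn: Scorer, threshold: float,
                    max_missing: float = 0.1, min_variance: float = 1e-8) -> List[str]:
    """Hypothetical filter: univariate screening, then keep features scoring above threshold."""
    selected: List[str] = []
    for name, values in features.items():
        if not values:
            continue
        missing = sum(v is None or math.isnan(v) for v in values)
        if missing / len(values) > max_missing:
            continue  # too many missing values
        pairs = [(v, y) for v, y in zip(values, target) if v is not None and not math.isnan(v)]
        xs = [v for v, _ in pairs]
        if not xs:
            continue
        mean = sum(xs) / len(xs)
        if sum((v - mean) ** 2 for v in xs) / len(xs) < min_variance:
            continue  # (near-)constant feature carries no information
        # Keep the feature only if its dependence score exceeds the threshold.
        if score_fn(xs, [y for _, y in pairs]) > threshold:
            selected.append(name)
    return selected
```

For example, with a constant feature, a mostly missing feature, and an informative one, only the informative feature survives, provided its score clears the threshold.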

3.2.3. DADE-DQN Training

To summarize, Algorithm 1 provides the pseudo-code of the entire DADE-DQN algorithm, which is the basis of our proposed trading strategy. The strategy is updated based on further learning from daily observations during the trading process. This iterative process ensures the continuous learning and refinement of the trading strategy.

Algorithm 1. DADE-DQN algorithm.

4. Experiments and Results

In this section, the selection of the datasets and associated features is described first, followed by the details of the experimental settings. Finally, the experimental results are presented and discussed. The effectiveness of the proposed model is assessed on six different stock index datasets.

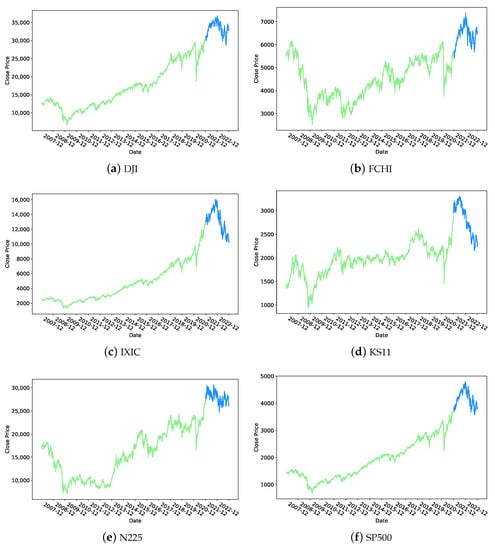

4.1. Datasets

To assess the performance and robustness of our model comprehensively, six indices from different regions and economic backgrounds were used in the experiments: the NASDAQ Composite (IXIC), the S&P 500 (SP500), and the Dow Jones Industrial Average (DJI) in the U.S.; the CAC 40 (FCHI) in France; the Korea Composite Stock Price Index (KOSPI, KS11) in Korea; and the Nikkei 225 (N225) in Japan. The dataset spans from 1 January 2007 to 31 December 2022. It was divided into a training set, covering 1 January 2007 to 31 December 2022, and a test set, covering 1 January 2007 to 31 December 2022. Figure 4 shows the price movements of the six assets, with the training period shown in green and the test period in blue.

Figure 4.

The price movement of the six assets [from 1 January 2007 to 31 December 2022] [green parts: training set; blue parts: test set].

4.2. Feature Selection Results

To identify relevant explanatory variables, 57 candidate features were selected from the TA-Lib software library, as shown in Table 2. These features were then filtered and refined in several steps. First, univariate analyses were performed to exclude features with low variance (using a 90% threshold) and features with a significant number of missing values. Mutual information scores were then calculated to assess the dependence between each explanatory variable and the response variable; these scores are listed in Table 3.
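The filtering steps can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code. The missing-value cut-off (`max_missing = 0.2`) is hypothetical. The 90% low-variance criterion is read here as "drop a feature if a single value covers more than 90% of its observations". The mutual information threshold of 0.5 follows the caption of Table 4. Mutual information is estimated with a simple 2-D histogram plug-in estimator of Equation (22) with 10 bins; the paper does not state which estimator was used.

```python
import numpy as np


def histogram_mi(x: np.ndarray, y: np.ndarray, bins: int = 10) -> float:
    """Plug-in estimate of I(X;Y) in nats from a 2-D histogram (Equation (22))."""
    counts, _, _ = np.histogram2d(x, y, bins=bins)
    joint = counts / counts.sum()
    p_x = joint.sum(axis=1, keepdims=True)
    p_y = joint.sum(axis=0, keepdims=True)
    mask = joint > 0
    return float(np.sum(joint[mask] * np.log(joint[mask] / (p_x @ p_y)[mask])))


def select_features(features: dict, close: np.ndarray,
                    max_missing: float = 0.2,         # hypothetical cut-off
                    max_dominant_share: float = 0.9,  # assumed reading of "90%"
                    mi_threshold: float = 0.5) -> dict:
    """Return {name: MI score} for the features that pass every filter."""
    selected = {}
    for name, values in features.items():
        values = np.asarray(values, dtype=float)
        valid = ~np.isnan(values)
        # Step 1: drop features with too many missing values.
        if 1.0 - valid.mean() > max_missing:
            continue
        # Step 2: drop quasi-constant (low-variance) features.
        _, counts = np.unique(values[valid], return_counts=True)
        if counts.max() / valid.sum() > max_dominant_share:
            continue
        # Step 3: score the dependence on the closing price.
        score = histogram_mi(values[valid], close[valid])
        # Step 4: keep only the features above the MI threshold.
        if score > mi_threshold:
            selected[name] = score
    return selected


rng = np.random.default_rng(0)
n = 1000
close = 100 + np.cumsum(rng.normal(0.0, 1.0, n))  # synthetic random-walk price
sparse = close.copy()
sparse[::2] = np.nan                               # 50% missing values
features = {
    "close_like": close + rng.normal(0.0, 0.1, n),     # strongly dependent
    "noise": rng.normal(0.0, 1.0, n),                  # independent of close
    "flag": np.where(rng.random(n) < 0.95, 0.0, 1.0),  # quasi-constant
    "sparse": sparse,                                  # mostly missing
}
chosen = select_features(features, close)
print(sorted(chosen))
```

On these synthetic series, only the feature that closely tracks the price should survive all four steps. The other three illustrate one rejection reason each. With real TA-Lib indicators, the retained set depends heavily on the estimator and on the thresholds.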

Table 2.

Description of exploited technical indicators.

Table 3.

Mutual information scores for selected technical indicators.

To retain only features with a strong relationship to the closing price, 24 features were selected using a mutual information threshold of 0.5; these features are detailed in Table 4. At each time step, the RL environment state was constructed from the prices and technical indicators of the previous n days. Consequently, the environment state at time t can be represented as an $n \times 24$ matrix, whose 24 columns comprise the opening, closing, high, and low prices and 20 technical indicators based on price and volume (see Table 4). The window length was set to $n = 10$, as determined in the experiment settings (Section 4.3).
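The following is a minimal sketch of how such a windowed state might be extracted, assuming the selected features are stored as a `(T, 24)` array with one row per trading day. It also assumes the window ends at day t itself, so it covers rows t−n+1 through t. The text does not specify either detail, and the function name `build_state` is hypothetical.

```python
import numpy as np


def build_state(feature_matrix: np.ndarray, t: int, n: int = 10) -> np.ndarray:
    """Return the n x F state for day t: rows t-n+1 .. t of the feature matrix.

    Assumes one row per trading day and F = 24 columns (open, close, high,
    low and 20 selected indicators); the window is assumed to end at day t.
    """
    if t < n - 1:
        raise ValueError("not enough history for a full window")
    return feature_matrix[t - n + 1 : t + 1]


T, F = 250, 24
data = np.arange(T * F, dtype=float).reshape(T, F)  # placeholder feature values
state = build_state(data, t=30)
print(state.shape)  # (10, 24)
```

If the window is instead meant to exclude day t, the slice becomes `feature_matrix[t - n : t]`. The state shape is the same either way.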

Table 4.

Mutual information scores for selected technical indicators with a threshold of 0.5.

4.3. Experiment Settings

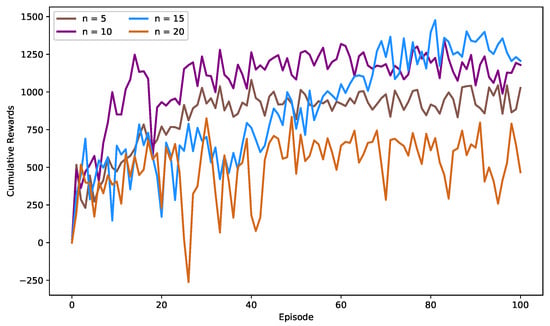

To assess the performance of the DADE-DQN model with different window lengths, experiments were conducted on the DJI dataset. Figure 5 depicts the cumulative reward curves obtained from training DADE-DQN with window lengths of 5, 10, 15, and 20. As the figure shows, the training curve with a window length of 10 offered the best balance between stability and cumulative return. Table 5 further reports the cumulative return, annualized return, Sharpe ratio, and maximum drawdown for each window length. It shows that a window length of 10 yields better results than the other window lengths. Consequently, a window length of 10 was chosen for this study.

Figure 5.

The cumulative rewards curve for DADE-DQN training with window lengths of 5, 10, 15, and 20 on the DJI dataset.

Table 5.

Performance of DADE-DQN on DJI dataset with window lengths of 5, 10, 15 and 20.

The models were implemented in Python using the PyTorch library. The baseline methods and the algorithm were built on the code provided by Théate et al. [31] (Note: https://github.com/ThibautTheate/An-Application-of-Deep-Reinforcement-Learning-to-Algorithmic-Trading (accessed on 1 August 2023)) and Taghian et al. [34] (Note: https://github.com/MehranTaghian/DQN-Trading (accessed on 1 August 2023)).

The experimental setup involved the following key components:

- Preprocessing: Each input variable was normalized to a mean of 0 and a standard deviation of 1 (z-score normalization) to ensure consistent scaling and to prevent gradient explosions.

- Initialization: Xavier initialization was employed to improve the convergence of the algorithm. It sets the initial weights so that the variance of activations and gradients remains approximately constant across the layers of the deep neural network.

- Performance metrics: To accurately evaluate the performance of the trading strategies, four performance metrics were selected, namely cumulative return (CR), Sharpe ratio (SR), maximum drawdown (MDD), and annualized return (AR). These metrics provide a comprehensive and objective analysis of the strategy’s benefits and drawbacks in terms of profitability and risk management.

- Baseline methods: To objectively evaluate the advantages and disadvantages of the DADE-DQN algorithm, it was compared with the traditional methods buy and hold (B&H), sell and hold (S&H), mean reversion with moving averages (MR), and trend following with moving averages (TF) [60,61,62], as well as with the DRL methods TDQN [31], DQN-Vanilla, and DQN-Pattern [34].
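The preprocessing and initialization steps can be sketched in NumPy as follows. This makes two assumptions not stated in the text. First, the normalization statistics are fitted on the training set only and then reused for the test set, which avoids look-ahead bias. Second, the uniform variant of Xavier initialization is used; the paper does not say whether the uniform or the normal variant was chosen. In PyTorch, the uniform variant corresponds to `torch.nn.init.xavier_uniform_(layer.weight)`. The helper names below are hypothetical.

```python
import numpy as np


def zscore_fit(train: np.ndarray):
    """Per-column mean and standard deviation of a 2-D training array."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0] = 1.0  # guard against constant columns
    return mean, std


def zscore_apply(x: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Normalize with statistics estimated on the training set (assumption)."""
    return (x - mean) / std


def xavier_uniform(fan_in: int, fan_out: int, rng: np.random.Generator) -> np.ndarray:
    """Glorot/Xavier uniform init: U(-a, a) with a = sqrt(6 / (fan_in + fan_out)).

    The shape (fan_out, fan_in) matches the weight layout of torch.nn.Linear.
    """
    bound = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-bound, bound, size=(fan_out, fan_in))


rng = np.random.default_rng(0)
train_features = rng.normal(5.0, 2.0, size=(500, 24))  # synthetic data
test_features = rng.normal(5.0, 2.0, size=(100, 24))
mean, std = zscore_fit(train_features)
train_z = zscore_apply(train_features, mean, std)
test_z = zscore_apply(test_features, mean, std)
W = xavier_uniform(fan_in=24, fan_out=64, rng=rng)
```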

It is worth mentioning that tuning the hyperparameters of DRL algorithms is a complex and time-consuming task. In this study, the hyperparameter values were determined by trial and error. Table 6 presents the hyperparameter values used in the DADE-DQN model.

Table 6.

Hyperparameter values of DADE-DQN.

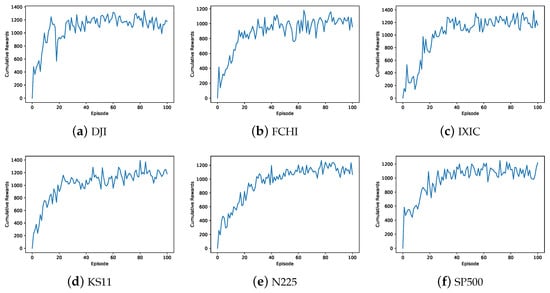

Figure 6 illustrates the trend of the cumulative reward during the training of the DADE-DQN model using SSR on the six datasets. The cumulative reward increases gradually as the number of training episodes grows and eventually converges. The cumulative reward serves as a key metric of the effectiveness of the DADE-DQN algorithm: it quantifies the total reward obtained by the model over the training episodes and reflects its ability to make profitable trading decisions. Initially, the cumulative reward fluctuates as the model explores and learns from the environment. As training progresses, however, the DADE-DQN algorithm improves its policy and decision-making, resulting in a steady increase in the cumulative reward.

Figure 6.

The trend of the cumulative reward during the training process of the DADE-DQN model using SSR on six datasets.

The convergence of the cumulative reward indicates that the DADE-DQN model has learned an effective trading policy and reached a stable state. At this point, the model has acquired sufficient knowledge from the training data to generate consistently profitable trading decisions on that data. This convergence suggests that the DADE-DQN algorithm captures underlying patterns and dynamics of the financial markets. It uses historical price data, technical indicators, and the environment state to make informed trading choices that maximize cumulative return.

4.4. Experimental Results

This section presents a comparison between the proposed DADE-DQN framework and seven baseline methods. The experimental results for the six stock index datasets are provided in Table 7. Trading performance was assessed using four metrics: cumulative return (CR), Sharpe ratio (SR), annualized return (AR), and maximum drawdown (MDD).
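The four metrics can be computed from a daily series of portfolio values, as in the sketch below. It relies on common conventions that the paper does not confirm for Table 7: simple daily returns, 252 trading days per year, a risk-free rate of zero by default, geometric annualization of the cumulative return, and drawdown measured from the running peak. The function name `performance_metrics` is hypothetical.

```python
import numpy as np

TRADING_DAYS = 252  # assumption: trading days per year used for annualization


def performance_metrics(values, risk_free_rate: float = 0.0) -> dict:
    """CR, AR and MDD (in %) and SR from a daily portfolio value series."""
    values = np.asarray(values, dtype=float)
    daily = values[1:] / values[:-1] - 1.0          # simple daily returns
    cr = values[-1] / values[0] - 1.0               # cumulative return
    years = len(daily) / TRADING_DAYS
    ar = (1.0 + cr) ** (1.0 / years) - 1.0          # geometric annualized return
    excess = daily - risk_free_rate / TRADING_DAYS  # daily excess returns
    vol = excess.std(ddof=1)
    sr = np.sqrt(TRADING_DAYS) * excess.mean() / vol if vol > 0 else float("nan")
    running_peak = np.maximum.accumulate(values)
    mdd = np.max(1.0 - values / running_peak)       # largest peak-to-trough loss
    return {"CR": 100 * cr, "AR": 100 * ar, "SR": float(sr), "MDD": 100 * mdd}


rng = np.random.default_rng(1)
one_year = 100.0 * np.cumprod(1.0 + rng.normal(0.0005, 0.01, 252))  # synthetic
print(performance_metrics(one_year))
```

Annualization is only meaningful over periods of a substantial fraction of a year or longer. Over a few days, the geometric formula extrapolates short-term moves into very large annual figures.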

Table 7.

Performances of various trading methods on six assets.

Table 7 shows the performance of various trading methods on the six assets. The DADE-DQN strategy achieved the best scores on most of the listed metrics. Its cumulative returns were significantly higher than those of the other strategies. Its maximum drawdown was the smallest, or close to the smallest, on the six datasets, highlighting both its profitability and its risk control. For instance, on the IXIC dataset, the DADE-DQN strategy achieved a cumulative return of 49.15%, surpassing all other methods, and a Sharpe ratio of 0.99, indicating favorable risk-adjusted returns. Its maximum drawdown on the IXIC dataset was 16.35%, the lowest among all the methods. Similar observations can be made for the other assets in the table: DADE-DQN consistently outperformed the baseline methods in cumulative return, Sharpe ratio, and annualized return. These results support the effectiveness of the proposed DADE-DQN framework in generating profitable trading strategies with reduced risk.

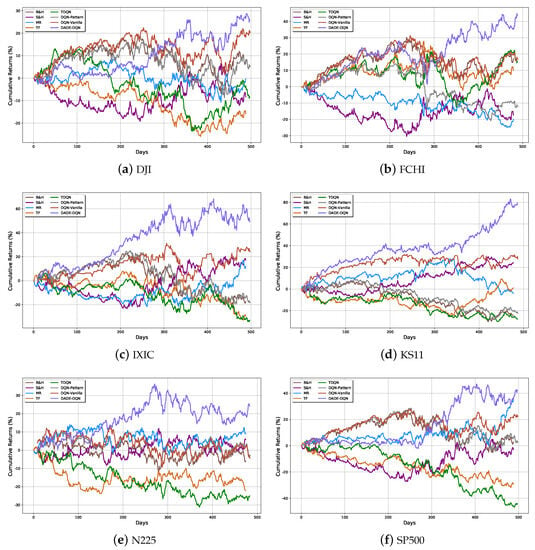

Figure 7 illustrates the cumulative return curves obtained with the B&H, S&H, MR, TF, TDQN, DQN-Vanilla, DQN-Pattern, and proposed DADE-DQN strategies. The figure shows that the DADE-DQN strategy outperformed the other strategies: it limited the risk of significant losses while achieving excess returns. In addition, the total assets under the DADE-DQN strategy followed a smoother upward trend than those under the benchmark strategy.
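For readers unfamiliar with the traditional baselines, the following sketch shows how cumulative return curves like those in Figure 7 can be produced for simplified versions of B&H, S&H, TF, and MR. These rules are hypothetical simplifications, not the exact rules of [60,61,62] or of the baseline code of Théate et al. [31]. In this sketch, TF goes long when a short moving average is above a long one. MR goes long when the price is below its long moving average. Positions are +1 (long), −1 (short), or 0 (flat). Transaction costs are ignored. The window lengths of 5 and 20 days are illustrative assumptions.

```python
import numpy as np


def moving_average(prices, window: int) -> np.ndarray:
    """Trailing simple moving average; NaN until `window` prices are available."""
    prices = np.asarray(prices, dtype=float)
    out = np.full(len(prices), np.nan)
    csum = np.cumsum(prices)
    out[window - 1:] = (csum[window - 1:]
                        - np.concatenate(([0.0], csum[:-window]))) / window
    return out


def baseline_positions(prices, strategy: str, short: int = 5, long: int = 20):
    """Daily positions (+1 long, -1 short, 0 flat) for simplified baselines."""
    prices = np.asarray(prices, dtype=float)
    if strategy == "B&H":
        return np.ones(len(prices))
    if strategy == "S&H":
        return -np.ones(len(prices))
    if strategy == "TF":  # follow the trend: long when the fast MA is above the slow MA
        fast, slow = moving_average(prices, short), moving_average(prices, long)
        pos = np.where(fast > slow, 1.0, -1.0)
        pos[np.isnan(slow)] = 0.0  # stay flat until the slow MA exists
        return pos
    if strategy == "MR":  # bet on reversion: long when the price is below its MA
        ma = moving_average(prices, long)
        pos = np.where(prices < ma, 1.0, -1.0)
        pos[np.isnan(ma)] = 0.0
        return pos
    raise ValueError(f"unknown strategy: {strategy}")


def cumulative_return_curve(prices, positions) -> np.ndarray:
    """Cumulative return (%) when the position held on day t earns the t -> t+1 return."""
    prices = np.asarray(prices, dtype=float)
    rets = prices[1:] / prices[:-1] - 1.0
    equity = np.cumprod(1.0 + positions[:-1] * rets)
    return 100.0 * (np.concatenate(([1.0], equity)) - 1.0)


rng = np.random.default_rng(2)
prices = 100.0 * np.cumprod(1.0 + rng.normal(0.0003, 0.01, 500))  # synthetic index
curves = {s: cumulative_return_curve(prices, baseline_positions(prices, s))
          for s in ("B&H", "S&H", "TF", "MR")}
```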

Figure 7.

Performance of different models on six datasets.

The performance observed in Figure 7 agrees with the metrics in Table 7. The DADE-DQN strategy consistently outperformed the baseline methods across different assets, avoiding substantial losses while generating excess returns over the benchmark strategy and the other approaches. It thus balanced risk and reward, offering investors a more stable and profitable approach than the traditional strategies and DRL methods considered.

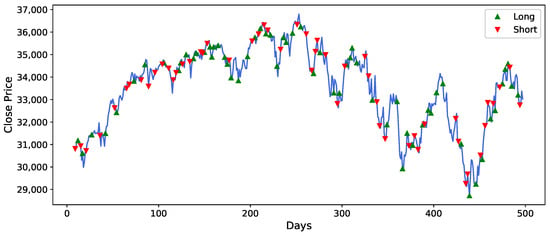

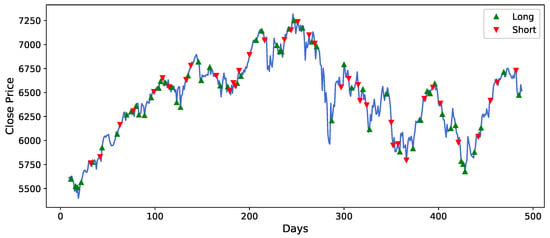

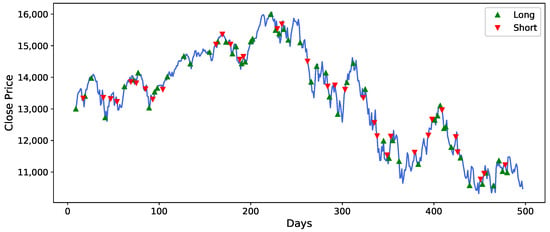

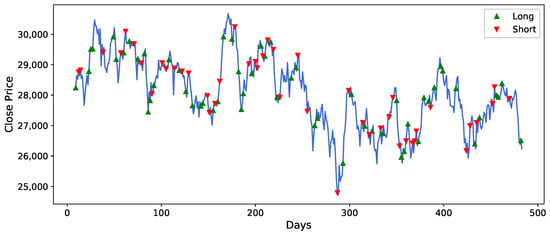

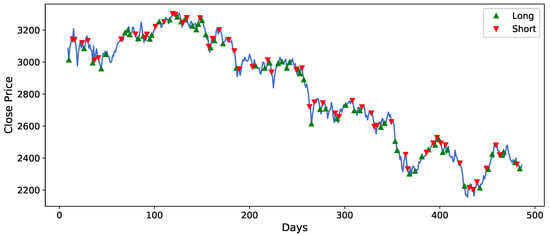

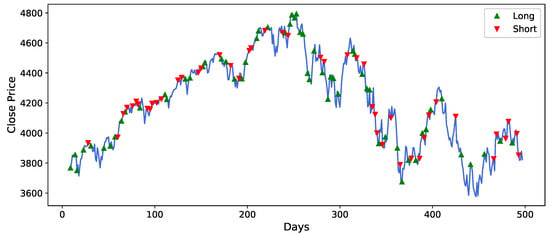

Figure 8, Figure 9, Figure 10, Figure 11, Figure 12 and Figure 13 illustrate the trading signals generated by the DADE-DQN strategy for the different assets. The figures show the strategy’s ability to detect market trends and to adapt its position to mitigate risk, particularly during periods of significant market shocks. The trading signals indicate that the strategy identifies favorable market conditions and adjusts its positions accordingly. By using its DRL framework to analyze historical price and technical indicator data, it captures important market patterns and makes informed decisions. During severe market shocks, such as sudden downturns or volatility spikes, the DADE-DQN strategy adapted by swiftly reversing the direction of its positions. This allowed it to hedge against potential risks and limit losses.

Figure 8.

Trading signals of DJI based on DADE-DQN algorithm.

Figure 9.

Trading signals of FCHI based on DADE-DQN algorithm.

Figure 10.

Trading signals of IXIC based on DADE-DQN algorithm.

Figure 11.

Trading signals of N225 based on DADE-DQN algorithm.

Figure 12.

Trading signals of KS11 based on DADE-DQN algorithm.

Figure 13.

Trading signals of SP500 based on DADE-DQN algorithm.

5. Discussion

This study introduces the DADE-DQN algorithm, an extension of the DQN algorithm aimed at enhancing stock trading strategies. Table 7 presents a comprehensive overview of the performance of various trading methods on each of the six assets. The DADE-DQN strategy performs strongly throughout and achieves the highest cumulative returns of all methods. Its consistently low maximum drawdowns further indicate strong risk management, reflecting a balance between profitability and risk reduction across diverse datasets. These empirical findings have important implications for policymakers, traders, and finance experts.

A comparative analysis against multiple DRL-based strategies (TDQN, DQN-Pattern, DQN-Vanilla) and traditional strategies (B&H, S&H, MR, TF) under identical conditions demonstrates the advantages of the DADE-DQN algorithm. Across the trading objectives considered, the DADE-DQN algorithm consistently outperforms the benchmark methods, achieving high cumulative returns and Sharpe ratios. On the KS11 dataset, for example, the DADE-DQN strategy achieves a cumulative return of 79.43% and a Sharpe ratio of 2.21, showing its ability to generate profitable trading strategies while managing risk effectively.

These results support the effectiveness of the proposed DADE-DQN framework in generating profitable trading strategies while mitigating risk. This conclusion is further supported by Figure 7, which presents the cumulative return curves of the different trading strategies, including DADE-DQN, across the assets. The curves show that the DADE-DQN strategy consistently achieves excess returns while controlling the risk of significant losses. Its cumulative return curve also rises more smoothly than those of the benchmark strategies, indicating greater stability and profitability.

Moreover, Figure 8, Figure 9, Figure 10, Figure 11, Figure 12 and Figure 13 provide detailed insights into the trading signals generated by the DADE-DQN strategy for different assets. These figures illustrate the strategy’s ability to identify market trends and to adjust positions to manage risk, especially during periods of market turbulence. The trading signals capture favorable market conditions and adapt dynamically. Using deep reinforcement learning, the strategy analyzes historical price data and technical indicators, enabling it to identify important market patterns and make informed decisions. In response to abrupt market changes, such as sudden declines or heightened volatility, the DADE-DQN strategy swiftly readjusts its positions to mitigate risk. Hence, the robust performance of the DADE-DQN strategy in backtesting highlights its potential to enhance stock trading strategies and to offer investors stability and favorable returns.

In summary, the ability of the DADE-DQN strategy to identify market trends and to adjust positions dynamically to manage risk contributes to its overall profitability. This indicates that it can serve as a reliable trading strategy across diverse market scenarios.

6. Conclusions and Future Work

Firstly, the primary value of this paper lies in the introduction of the DADE-DQN algorithm, an extension of the DQN algorithm that significantly enhances stock trading strategies. The novel dual action selection and dual environment mechanism incorporated into the DADE-DQN algorithm addresses the challenges posed by limited financial data and algorithmic instability. By striking a balance between exploration and exploitation, this mechanism raises the performance of the algorithm above that of traditional methods. The incorporation of LSTM and attention mechanisms enriches the deep network architecture, enhancing its capacity to capture intricate patterns and important features in stock market data. Furthermore, the feature selection method based on mutual information improves model interpretability and efficiency.

Secondly, several avenues for improvement remain. Although the DADE-DQN algorithm showed promising results, it requires further refinement. In particular, our current trading strategy adjusts positions on an all-or-nothing basis, which might expose traders to higher risks and transaction fees in actual trading. The model also does not account for the impact of trading actions on the market environment or for slippage, both of which are pertinent aspects of real-world trading.

Finally, future research should focus on developing more sophisticated position-sizing mechanisms that improve control over risk exposure while targeting higher returns. Exploring action distribution strategies to address uncertainties and risks is another promising avenue. Accounting for the market impact of trading actions and tackling the slippage problem would yield a more accurate representation of actual trading conditions. These advancements would contribute to more reliable and effective trading strategies, with practical implications for financial professionals and decision-makers alike.

Author Contributions

Conceptualization, methodology, data curation, writing—original draft preparation, Y.H. and Y.S.; software, validation, visualization, investigation, Y.H. and C.Z.; supervision, writing—review and editing, project administration, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the Faculty Research Grants, Macau University of Science and Technology (Project no. FRG-22-001-INT), and the Science and Technology Development Fund, Macau SAR (File no. 0096/2022/A).

Data Availability Statement

Our stock data are available for download at http://finance.yahoo.com (accessed on 1 February 2023).

Acknowledgments

The authors would like to express their appreciation to Zhen Guo, a student at the School of Computer Science and Engineering, Macau University of Science and Technology. His insightful inputs and suggestions have significantly enriched the content of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hamilton, J.D. Time Series Analysis; Princeton University Press: Princeton, NJ, USA, 2020.

- Hambly, B.; Xu, R.; Yang, H. Recent Advances in Reinforcement Learning in Finance. Math. Financ. 2021, 33, 435–975.

- Silver, D.; Schrittwieser, J.; Simonyan, K.; Antonoglou, I.; Hassabis, D. Mastering the game of Go without human knowledge. Nature 2017, 550, 354–359.

- Vinyals, O.; Babuschkin, I.; Czarnecki, W.M.; Mathieu, M.; Dudzik, A.; Chung, J.; Choi, D.H.; Powell, R.; Ewalds, T.; Georgiev, P.; et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 2019, 575, 350–354.

- Berner, C.; Brockman, G.; Chan, B.; Cheung, V.; Debiak, P.; Dennison, C.; Farhi, D.; Fischer, Q.; Hashme, S.; Hesse, C.; et al. Dota 2 with Large Scale Deep Reinforcement Learning. arXiv 2019, arXiv:1912.06680.

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Graves, A.; Antonoglou, I.; Wierstra, D.; Riedmiller, M. Playing Atari with Deep Reinforcement Learning. Comput. Sci. 2013.

- Hasselt, H.V.; Guez, A.; Silver, D. Deep Reinforcement Learning with Double Q-Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

- Lipton, Z.C.; Gao, J.; Li, L.; Li, X.; Ahmed, F.; Deng, L. Efficient exploration for dialog policy learning with deep BBQ networks & replay buffer spiking. arXiv 2016, arXiv:1608.05081.

- Mossalam, H.; Assael, Y.M.; Roijers, D.M.; Whiteson, S. Multi-objective deep reinforcement learning. arXiv 2016, arXiv:1610.02707.

- Mahajan, A.; Tulabandhula, T. Symmetry Learning for Function Approximation in Reinforcement Learning. arXiv 2017, arXiv:1706.02999.

- Taitler, A.; Shimkin, N. Learning control for air hockey striking using deep reinforcement learning. In Proceedings of the 2017 International Conference on Control, Artificial Intelligence, Robotics & Optimization (ICCAIRO), Prague, Czech Republic, 20–22 May 2017; pp. 22–27.

- Levine, N.; Zahavy, T.; Mankowitz, D.J.; Tamar, A.; Mannor, S. Shallow updates for deep reinforcement learning. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Leibfried, F.; Grau-Moya, J.; Bou-Ammar, H. An Information-Theoretic Optimality Principle for Deep Reinforcement Learning. arXiv 2017, arXiv:1708.01867.

- Anschel, O.; Baram, N.; Shimkin, N. Averaged-dqn: Variance reduction and stabilization for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 176–185.

- Hester, T.; Vecerík, M.; Pietquin, O.; Lanctot, M.; Schaul, T.; Piot, B.; Sendonaris, A.; Dulac-Arnold, G.; Osband, I.; Agapiou, J.P.; et al. Learning from Demonstrations for Real World Reinforcement Learning. arXiv 2017, arXiv:1704.03732.

- Schaul, T.; Quan, J.; Antonoglou, I.; Silver, D. Prioritized experience replay. arXiv 2015, arXiv:1511.05952.

- Sorokin, I.; Seleznev, A.; Pavlov, M.; Fedorov, A.; Ignateva, A. Deep Attention Recurrent Q-Network. arXiv 2015, arXiv:1512.01693.

- Hausknecht, M.; Stone, P. Deep recurrent q-learning for partially observable mdps. In Proceedings of the 2015 AAAI Fall Symposium Series, Arlington, VA, USA, 12–14 November 2015.

- Wang, Z.; Schaul, T.; Hessel, M.; Hasselt, H.; Lanctot, M.; Freitas, N. Dueling network architectures for deep reinforcement learning. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 1995–2003.

- Mosavi, A.; Ghamisi, P.; Faghan, Y.; Duan, P.; Band, S. Comprehensive Review of Deep Reinforcement Learning Methods and Applications in Economics; Social Science Electronic Publishing: Rochester, NY, USA, 2020.

- Thakkar, A.; Chaudhari, K. A Comprehensive Survey on Deep Neural Networks for Stock Market: The Need, Challenges, and Future Directions. Expert Syst. Appl. 2021, 177, 114800.

- Gao, X. Deep reinforcement learning for time series: Playing idealized trading games. arXiv 2018, arXiv:1803.03916.

- Huang, C.Y. Financial Trading as a Game: A Deep Reinforcement Learning Approach. arXiv 2018, arXiv:1807.02787.

- Chen, L.; Gao, Q. Application of Deep Reinforcement Learning on Automated Stock Trading. In Proceedings of the 2019 IEEE 10th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 18–20 October 2019; pp. 29–33.

- Jeong, G.H.; Kim, H.Y. Improving financial trading decisions using deep Q-learning: Predicting the number of shares, action strategies, and transfer learning. Expert Syst. Appl. 2019, 117, 125–138.

- Li, Y.; Nee, M.; Chang, V. An Empirical Research on the Investment Strategy of Stock Market based on Deep Reinforcement Learning model. In Proceedings of the 4th International Conference on Complexity, Future Information Systems and Risk, Crete, Greece, 2–4 May 2019.

- Chakole, J.; Kurhekar, M. Trend following deep Q-Learning strategy for stock trading. Expert Syst. 2020, 37, e12514.

- Dang, Q.V. Reinforcement learning in stock trading. In Advanced Computational Methods for Knowledge Engineering, Proceedings of the 6th International Conference on Computer Science, Applied Mathematics and Applications, ICCSAMA 2019, Hanoi, Vietnam, 19–20 December 2019; Springer International Publishing: Cham, Switzerland, 2019; pp. 311–322.

- Ma, C.; Zhang, J.; Liu, J.; Ji, L.; Gao, F. A Parallel Multi-module Deep Reinforcement Learning Algorithm for Stock Trading. Neurocomputing 2021, 449, 290–302.

- Shi, Y.; Li, W.; Zhu, L.; Guo, K.; Cambria, E. Stock trading rule discovery with double deep Q-network. Appl. Soft Comput. 2021, 107, 107320.

- Théate, T.; Ernst, D. An application of deep reinforcement learning to algorithmic trading. Expert Syst. Appl. 2021, 173, 114632.

- Bajpai, S. Application of deep reinforcement learning for Indian stock trading automation. arXiv 2021, arXiv:2106.16088.

- Li, Y.; Liu, P.; Wang, Z. Stock Trading Strategies Based on Deep Reinforcement Learning. Sci. Program. 2022, 2022, 4698656.

- Taghian, M.; Asadi, A.; Safabakhsh, R. Learning financial asset-specific trading rules via deep reinforcement learning. Expert Syst. Appl. 2022, 195, 116523.

- Liu, P.; Zhang, Y.; Bao, F.; Yao, X.; Zhang, C. Multi-type data fusion framework based on deep reinforcement learning for algorithmic trading. Appl. Intell. 2023, 53, 1683–1706.

- Tran, M.; Pham-Hi, D.; Bui, M. Optimizing Automated Trading Systems with Deep Reinforcement Learning. Algorithms 2023, 16, 23.

- Huang, Y.; Cui, K.; Song, Y.; Chen, Z. A Multi-Scaling Reinforcement Learning Trading System Based on Multi-Scaling Convolutional Neural Networks. Mathematics 2023, 11, 2467.

- Ye, Z.J.; Schuller, B.W. Human-Aligned Trading by Imitative Multi-Loss Reinforcement Learning. Expert Syst. Appl. 2023, 234, 120939.

- Moody, J.; Saffell, M. Learning to trade via direct reinforcement. IEEE Trans. Neural Netw. 2001, 12, 875–889.

- Lele, S.; Gangar, K.; Daftary, H.; Dharkar, D. Stock market trading agent using on-policy reinforcement learning algorithms. Soc. Sci. Electron. Publ. 2020.

- Liu, F.; Li, Y.; Li, B.; Li, J.; Xie, H. Bitcoin transaction strategy construction based on deep reinforcement learning. Appl. Soft Comput. 2021, 113, 107952.

- Wang, Z.; Lu, W.; Zhang, K.; Li, T.; Zhao, Z. A parallel-network continuous quantitative trading model with GARCH and PPO. arXiv 2021, arXiv:2105.03625.

- Mahayana, D.; Shan, E.; Fadhl’Abbas, M. Deep Reinforcement Learning to Automate Cryptocurrency Trading. In Proceedings of the 2022 12th International Conference on System Engineering and Technology (ICSET), Bandung, Indonesia, 3–4 October 2022; pp. 36–41.

- Xiao, X. Quantitative Investment Decision Model Based on PPO Algorithm. Highlights Sci. Eng. Technol. 2023, 34, 16–24.

- Ponomarev, E.; Oseledets, I.V.; Cichocki, A. Using reinforcement learning in the algorithmic trading problem. J. Commun. Technol. Electron. 2019, 64, 1450–1457.

- Liu, X.Y.; Yang, H.; Chen, Q.; Zhang, R.; Yang, L.; Xiao, B.; Wang, C.D. FinRL: A deep reinforcement learning library for automated stock trading in quantitative finance. arXiv 2020, arXiv:2011.09607.

- Liu, Y.; Liu, Q.; Zhao, H.; Pan, Z.; Liu, C. Adaptive quantitative trading: An imitative deep reinforcement learning approach. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 2128–2135.

- Lima Paiva, F.C.; Felizardo, L.K.; Bianchi, R.A.d.C.; Costa, A.H.R. Intelligent trading systems: A sentiment-aware reinforcement learning approach. In Proceedings of the Second ACM International Conference on AI in Finance, Virtual, 3–5 November 2021; pp. 1–9.

- Vishal, M.; Satija, Y.; Babu, B.S. Trading Agent for the Indian Stock Market Scenario Using Actor-Critic Based Reinforcement Learning. In Proceedings of the 2021 IEEE International Conference on Computation System and Information Technology for Sustainable Solutions (CSITSS), Bangalore, India, 16–18 December 2021; pp. 1–5.

- Ge, J.; Qin, Y.; Li, Y.; Huang, Y.; Hu, H. Single stock trading with deep reinforcement learning: A comparative study. In Proceedings of the 2022 14th International Conference on Machine Learning and Computing (ICMLC), Guangzhou, China, 18–21 February 2022; pp. 34–43.

- Nesselroade, K.P., Jr.; Grimm, L.G. Statistical Applications for the Behavioral and Social Sciences; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Cai, J.; Xu, K.; Zhu, Y.; Hu, F.; Li, L. Prediction and analysis of net ecosystem carbon exchange based on gradient boosting regression and random forest. Appl. Energy 2020, 262, 114566.

- Li, G.; Zhang, A.; Zhang, Q.; Wu, D.; Zhan, C. Pearson Correlation Coefficient-Based Performance Enhancement of Broad Learning System for Stock Price Prediction. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 2413–2417.

- Guo, X.; Zhang, H.; Tian, T. Development of stock correlation networks using mutual information and financial big data. PLoS ONE 2018, 13, e195941.

- Kong, A.; Azencott, R.; Zhu, H.; Li, X. Pattern Recognition in Microtrading Behaviors Preceding Stock Price Jumps: A Study Based on Mutual Information for Multivariate Time Series. Comput. Econ. 2023, 1–29.

- Sutton, R.; Barto, A. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 1998.

- Yue, H.; Liu, J.; Zhang, Q. Applications of Markov Decision Process Model and Deep Learning in Quantitative Portfolio Management during the COVID-19 Pandemic. Systems 2022, 10, 146.

- Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chan, E. Algorithmic Trading: Winning Strategies and Their Rationale; John Wiley & Sons: Hoboken, NJ, USA, 2013; Volume 625.

- Narang, R.K. Inside the Black Box: A Simple Guide to Quantitative and High Frequency Trading; John Wiley & Sons: Hoboken, NJ, USA, 2013; Volume 846.

- Chan, E.P. Quantitative Trading: How to Build Your Own Algorithmic Trading Business; John Wiley & Sons: Hoboken, NJ, USA, 2021.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).