Abstract

Differential evolution (DE) is one of the most popular and widely used optimizers in the evolutionary computation community. Despite numerous works on improving DE performance, some defects remain, such as premature convergence and stagnation. To alleviate them, this paper presents a novel DE variant by designing a new mutation operator (named “DE/current-to-pbest_id/1”) and a new control parameter setting. In the new operator, the fitness value of each individual is used to determine the scope from which its guider is chosen among the population. Meanwhile, a group-based competitive control parameter setting is presented to ensure the various search potentials of the population and the adaptivity of the algorithm. In this setting, the whole population is randomly divided into multiple groups of equal size, the control parameters for each group are independently generated based on its location information, and the worst location information among all groups is competitively updated with the current successful parameters. Moreover, a piecewise population size reduction mechanism is devised to enhance the exploration and exploitation of the algorithm at the early and later evolution stages, respectively. Differing from previous DE versions, the proposed method adaptively adjusts the search capability of each individual, simultaneously utilizes multiple pieces of successful parameter information to generate the control parameters, and reduces the population size at different speeds at different search stages. As a result, it can achieve a good trade-off between exploration and exploitation. Finally, the performance of the proposed algorithm is measured by comparing it with five well-known DE variants and five typical non-DE algorithms on the IEEE CEC 2017 test suite. Numerical results show that the proposed method is a more promising optimizer.

Keywords:

numerical optimization; differential evolution; mutation strategy; control parameter setting; population reduction mechanism

MSC:

80W50; 68T20; 90C59; 90C26

1. Introduction

Differential Evolution (DE), proposed by Storn and Price [1] in 1995, is a population-based stochastic search algorithm. Like other evolutionary algorithms, the DE algorithm contains mutation, crossover, and selection operations. Due to its simple structure and excellent performance, the DE algorithm has been widely studied and used in many scientific and engineering fields, such as engineering scheduling problems [2,3,4], resource allocation problems in mobile communication [5], UAV path planning problems [6], biomedical problems [7], and so on. However, the performance of the DE algorithm is still challenged when facing increasingly complex optimization problems, where a large number of local optima usually exist, and then premature convergence and stagnation easily occur.

As pointed out in Ref. [8], the performance of DE is mainly dependent on its mutation strategy and control parameter setting. In fact, the mutation strategy plays an important role in the search ability of DE on the search space during the evolution process, and the control parameter setting can adjust its search characteristic. Consequently, a series of works have been conducted on the improvement of DE performance in the past decades by enhancing the mutation operation [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41] and control parameter setting [9,28,30,42,43,44,45,46,47,48,49]. Particularly, the previous mutation approaches are always achieved through designing a new mutation operator [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26] and integrating multiple mutation strategies [27,28,29,30,31,32,33,34,35,36,37,38,39,40,41]. With respect to generating a new mutation operator, one important and popular way is to exploit the underlying information of the population to construct the search direction, thus enhancing the search effectiveness and efficiency of the algorithm. On the other hand, the combined mutation strategy often employs multiple operators with various search characteristics to balance the exploration and exploitation of the algorithm. It should be mentioned that compared to the combined mutation approaches, the development of a new operator does not require the determination of the candidate mutation operator pool in advance, and does not need to consider the selection rule of candidate operators, which are often very difficult to decide. Thus, it is desirable to develop a more promising new mutation operator.

Up to now, by fully exploiting the underlying information of the population, many mutation operators have been presented [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26]. For example, to better balance the exploration and exploitation of the algorithm, Zhang et al. [9] started from the scheme of “DE/current-to-best/1”, which makes the algorithm easily fall into a local optimum, and proposed a new mutation operator, named “DE/current-to-pbest/1”, where the guider is randomly chosen from the top p% individuals of the population and an external archive is used to store historically inferior solutions to enhance the diversity of the population. To fully exploit the advantage of individuals, Gong and Cai [10] proportionally selected the parents for the mutation process based on the fitness values of individuals (rank-DE), and Wang et al. [11] simultaneously employed the fitness value and diversity of individuals to determine their probability of participating in the mutation. By employing the comprehensive ranking of individuals based on both fitness and diversity, Cheng et al. [24] proposed a mutation operator (FDDE). In order to promote the optimization ability of DE in solving complicated optimization problems, Liu et al. [25] devised a simple yet effective mutation scheme by using a non-linear selection probability for each individual, while an enhanced mutation operator that utilizes the population’s holistic information and the direction of the differential vector was designed to avoid being trapped in local optima [26]. Although the above methods can effectively improve the performance of DE, the special characteristic of the individual is rarely considered when determining its guider under the framework of DE/current-to-guiding/1, even though it might help to adjust the search to the requirements of different individuals. Thus, it is desirable to devise a more adaptive and promising mutation operator for DE.

Moreover, for the existing control parameter settings, one of the most successful and attractive approaches is to design an adaptive control mechanism, which can adaptively adjust the search capability of the algorithm. To do this, numerous adaptive methods have been developed over recent decades [9,28,30,42,43,44,45,46,47,48,49]. For example, to ensure the robustness of the algorithm, Liu et al. [42] used a fuzzy logic controller to tune the control parameters of mutation and crossover operations, and then proposed a fuzzy adaptive differential evolution algorithm. By integrating the scale factor with individuals, Brest et al. [43] proposed another self-adapting parameter control strategy (jDE), where the successful parameter shall be continuously entered into the next generation and the failure one will be randomly recreated. Meanwhile, by making full use of the search information of the population, Zhang et al. [9] presented an adaptive parameter setting, and Tanabe et al. [44] further introduced the historical success information of the population to create the scale factor and crossover rate for each individual, and put forward a history-based adaptive approach (SHADE). On the other hand, for the population size, Tanabe et al. [45] presented a linear reduction mechanism to dynamically adjust the population during the whole evolutionary process. Further, by using the performance of the best individual, Xia et al. [48] developed an adaptive control mechanism to increase or decrease the population. Moreover, to adaptively adjust the population diversity and balance the exploration and exploitation of the algorithm, Zeng et al. [49] further proposed a sawtooth-linear population size adaptive method to maintain the search ability of the algorithm. 
Even though the methods above can effectively strengthen the adaptivity and robustness of the algorithm for different search states and problems, those based on historical search information usually apply the same adapted control parameters to the whole population. As a result, when some biased parameter values happen to produce successful offspring, they can mislead the parameter generation for every individual and seriously degrade the performance of the algorithm. Meanwhile, although many adaptive mechanisms have been designed for the population size and have improved the performance of DE, they often adopt one single rule to adjust the population size, and some even require additionally measuring the search state of the population. Such mechanisms may not fully satisfy the varying search needs of the algorithm at different evolutionary stages and may seriously increase the computational burden. Therefore, it is necessary to make further efforts in developing a more effective parameter control setting.

Based on the considerations above, in this paper, we propose a new DE algorithm, named differential evolution with group-based competitive control parameter setting (GCIDE). Specifically, the main contributions of this paper are as follows.

- (1) In order to make full use of the fitness value of individuals to adjust their search capability, under the framework of “DE/current-to-guiding/1”, a new mutation operator named “DE/current-to-pbest_id/1” is first proposed. In the new operator, each individual’s fitness value is employed to determine the chosen range of its associated guider among the whole population, i.e., to decide the parameter p in “DE/current-to-pbest/1” for each target individual. Compared to the existing related methods [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26], the proposed operator adaptively assigns a suitable guider to each individual to adjust its search capability based on its special characteristic. This new operator can therefore effectively satisfy the search requirements of different individuals, enhancing the search effectiveness of the algorithm.

- (2) To alleviate the degradation of DE performance caused by the successful biases, a group-based competitive parameter control method is also proposed to create the scale factors and crossover rates for the population. In this proposed method, the population is first randomly and equally divided into k groups, the control parameters for the individuals in each group are independently generated in an adaptive manner, and the location parameters with the worst performance are competitively updated by using the successful parameters of the current population. Differing from the related adaptive methods [42,43,44,45], the proposed setting can simultaneously use multiple historical pieces of successful information to independently generate the control parameters for the population, and the worst one is dynamically replaced by the current successful information during the whole evolutionary process. Then, the developed setting is capable of not only avoiding being misled by biases, but also maintaining the adaptive ability of the algorithm.

- (3) In order to further meet the different search needs of the algorithm at different search stages, by designing and integrating two different non-linear schemes, a piecewise population reduction mechanism is presented to dynamically adjust the population size during the evolutionary process. Unlike the previous approaches [46,47,48,49], this new method adopts two non-linear formulas, with slower and quicker reduction speeds, respectively, to set the population size, and when a smaller population size is generated, the corresponding worst individuals are removed from the current population. Thereby, this mechanism could more effectively enhance the exploration and exploitation of the algorithm at the early and later search stages, respectively.

Finally, to verify the performance of the GCIDE, numerical experiments are conducted by comparing with five well-known DE variants and five other non-DE heuristic algorithms on the 30 benchmark functions from IEEE CEC 2017 [50] and three real applications. Numerical results show that the GCIDE has a more competitive performance.

2. Related Work

In this section, we shall introduce the classical differential evolution algorithm and the typical previous works, respectively.

2.1. Classical Differential Evolution

Herein, the four basic operations of the classical differential evolution algorithm are described in detail, including initialization, mutation, crossover, and selection [1].

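First, a population with NP solutions, $S^G = \{x_1^G, x_2^G, \ldots, x_{NP}^G\}$, is randomly generated in the search space. $x_i^G = (x_{i,1}^G, x_{i,2}^G, \ldots, x_{i,D}^G)$ denotes the i-th individual in $S^G$, D is the dimension of the problem, and G is the current number of iterations. In detail, the j-th dimension of $x_i^0$ can be generated by

$$x_{i,j}^0 = L_j + rand \cdot (U_j - L_j) \quad (1)$$

Here, $L_j$ and $U_j$ denote the j-th dimension lower and upper boundaries of the decision space, respectively, and rand is a random number uniformly distributed between 0 and 1.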

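After the initialization, for each target individual $x_i^G$, its corresponding mutant vector $v_i^G$ is generated by a specific mutation operation. Herein, four commonly used mutation operations are described as follows:

DE/rand/1:

$$v_i^G = x_{r_1}^G + F \cdot (x_{r_2}^G - x_{r_3}^G) \quad (2)$$

DE/best/1:

$$v_i^G = x_{best}^G + F \cdot (x_{r_1}^G - x_{r_2}^G) \quad (3)$$

DE/rand-to-best/1:

$$v_i^G = x_{r_1}^G + F \cdot (x_{best}^G - x_{r_1}^G) + F \cdot (x_{r_2}^G - x_{r_3}^G) \quad (4)$$

DE/current-to-best/1:

$$v_i^G = x_i^G + F \cdot (x_{best}^G - x_i^G) + F \cdot (x_{r_1}^G - x_{r_2}^G) \quad (5)$$

Herein, F is a scaling factor in [0, 1], $r_1, r_2, r_3 \in \{1, 2, \ldots, NP\}$ are three randomly selected and mutually exclusive indexes with $r_1 \neq r_2 \neq r_3 \neq i$, and $x_{best}^G$ denotes the best individual in the population at generation G, as evaluated by its fitness value.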
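The four mutation operations named above can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard textbook forms of the operators and a minimization problem; the helper names `pick_distinct` and `mutate` and the list-of-lists population layout are choices made for this sketch only.

```python
import random

def pick_distinct(np_size, exclude, count, rng):
    """Return `count` distinct indices in [0, np_size) that all differ from `exclude`."""
    candidates = [idx for idx in range(np_size) if idx != exclude]
    return rng.sample(candidates, count)

def mutate(pop, fitness, i, F, strategy, rng):
    """Build the mutant vector for target i (minimization: lower fitness is better)."""
    best = pop[min(range(len(pop)), key=lambda idx: fitness[idx])]
    # Three mutually different random individuals, none of them the target itself.
    r1, r2, r3 = (pop[r] for r in pick_distinct(len(pop), i, 3, rng))
    x = pop[i]
    D = len(x)
    if strategy == "rand/1":
        return [r1[j] + F * (r2[j] - r3[j]) for j in range(D)]
    if strategy == "best/1":
        return [best[j] + F * (r1[j] - r2[j]) for j in range(D)]
    if strategy == "rand-to-best/1":
        return [r1[j] + F * (best[j] - r1[j]) + F * (r2[j] - r3[j]) for j in range(D)]
    if strategy == "current-to-best/1":
        return [x[j] + F * (best[j] - x[j]) + F * (r1[j] - r2[j]) for j in range(D)]
    raise ValueError(f"unknown strategy: {strategy}")
```

The sketch needs a population of at least four individuals, since three indexes different from the target must be drawn.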

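In order to keep the mutant individuals inside the search space while treating each variable according to its own search characteristic, the constraint handling technique in [9] is adopted here. Specifically, if the j-th component $v_{i,j}^G$ of the mutant vector is beyond the feasible region $[L_j, U_j]$, it is readjusted into the feasible region by

$$v_{i,j}^G = \begin{cases} (L_j + x_{i,j}^G)/2, & \text{if } v_{i,j}^G < L_j \\ (U_j + x_{i,j}^G)/2, & \text{if } v_{i,j}^G > U_j \end{cases} \quad (6)$$

where $x_{i,j}^G$ is the corresponding component of the target individual.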

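Subsequently, the trial vector $u_i^G$ is generated by a crossover operation between the target vector $x_i^G$ and the mutant vector $v_i^G$. The binomial crossover operation is as follows:

$$u_{i,j}^G = \begin{cases} v_{i,j}^G, & \text{if } rand_j \le Cr \text{ or } j = j_{rand} \\ x_{i,j}^G, & \text{otherwise} \end{cases} \quad (7)$$

Here, $Cr \in [0, 1]$ is the crossover probability, $rand_j$ is a uniform random number in [0, 1], and $j_{rand}$ is a randomly generated index in $\{1, 2, \ldots, D\}$.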

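Finally, the target vector $x_i^G$ is compared with the trial vector $u_i^G$ according to their fitness values, and the one with the better fitness value survives to the next generation. For a minimization problem, the selection operation is as follows:

$$x_i^{G+1} = \begin{cases} u_i^G, & \text{if } f(u_i^G) \le f(x_i^G) \\ x_i^G, & \text{otherwise} \end{cases} \quad (8)$$

Here, $f(x_i^G)$ and $f(u_i^G)$ are the fitness values of $x_i^G$ and $u_i^G$, respectively.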

Noticeably, once the DE algorithm is called, the initialization will be first executed, and then the mutation, crossover, and selection operations are repeated until the pre-given termination criterion is satisfied.
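The loop described in this subsection can be sketched end to end as follows. This is a minimal sketch rather than a reference implementation: it assumes minimization, uses DE/rand/1 with binomial crossover, and assumes that the constraint handling of [9] places a violating component midway between the violated bound and the parent's component. The function name `classical_de`, its defaults, and the fixed generation budget used as the termination criterion are illustrative choices.

```python
import random

def classical_de(f, lower, upper, NP=20, F=0.5, Cr=0.9, max_gen=200, seed=0):
    """Minimize f over the box [lower, upper] with DE/rand/1/bin."""
    rng = random.Random(seed)
    D = len(lower)
    # Initialization: sample each coordinate uniformly inside its bounds.
    pop = [[lower[j] + rng.random() * (upper[j] - lower[j]) for j in range(D)]
           for _ in range(NP)]
    fit = [f(x) for x in pop]
    for _ in range(max_gen):
        next_pop, next_fit = list(pop), list(fit)
        for i in range(NP):
            # Mutation (DE/rand/1) with three distinct indexes different from i.
            r1, r2, r3 = rng.sample([r for r in range(NP) if r != i], 3)
            v = [pop[r1][j] + F * (pop[r2][j] - pop[r3][j]) for j in range(D)]
            # Bound handling (assumed midpoint rule between bound and parent).
            for j in range(D):
                if v[j] < lower[j]:
                    v[j] = (lower[j] + pop[i][j]) / 2.0
                elif v[j] > upper[j]:
                    v[j] = (upper[j] + pop[i][j]) / 2.0
            # Binomial crossover: j_rand guarantees one component from the mutant.
            j_rand = rng.randrange(D)
            u = [v[j] if (rng.random() <= Cr or j == j_rand) else pop[i][j]
                 for j in range(D)]
            # Greedy selection: the trial vector replaces the target if not worse.
            fu = f(u)
            if fu <= fit[i]:
                next_pop[i], next_fit[i] = u, fu
        pop, fit = next_pop, next_fit
    best = min(range(NP), key=lambda idx: fit[idx])
    return pop[best], fit[best]
```

For example, `classical_de(lambda x: sum(v * v for v in x), [-5.0] * 3, [5.0] * 3)` runs the sketch on a three-dimensional sphere function.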

2.2. Related Researches

As is well-known, the performance of DE is heavily related to its mutation operation and control parameter setting. As a result, various mutation strategies and control parameter settings have been presented to enhance the search ability of DE [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49]. Particularly, with respect to the mutation scheme, the information of the individual or the population has been fully exploited to design improved mutation operators [9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26]. Concretely, by randomly choosing a guider for each individual from the top p% individuals, Zhang et al. [9] proposed the mutation operator “DE/current-to-pbest/1”. Through employing the fitness values of individuals to decide the parents in the mutation process, Gong and Cai [10] presented a rank-based mutation operator. Meanwhile, by simultaneously considering the fitness value and diversity of individuals, Wang et al. [11] put forward an enhanced mutation operation. Similarly, to avoid giving all individuals in the population an equal chance of being selected as parents, Cai et al. [13] combined the fitness and position information of the population to choose the parents in the mutation. By utilizing both the fitness value and decision space information of the population, Sharifi-Noghabi et al. [15] also designed a union-based mutation operator. By randomly selecting three individuals from the population and using the best one as the base vector and the difference vectors as the perturbation term, Mohamed and Suganthan [16] devised a triangular mutation operator. By utilizing both the fitness value and novelty of individuals, Xia et al. [18] also proposed a new parent selection method for the mutation of DE. To get a better perception of the landscape of objectives, Meng et al. [21] introduced a depth information-based external archive and used it to create the difference vector in the mutation process. By using the best individual and a randomly selected individual to generate the base vector, Ma and Bai [22] presented a novel mutation scheme. Moreover, via employing the comprehensive ranking of individuals based on both fitness and diversity, Cheng et al. [24] proposed a new mutation operator (FDDE). Liu et al. [25] devised a simple yet effective mutation scheme by using a non-linear selection probability for each individual, while Li et al. [26] developed an enhanced mutation operator that avoids being trapped in local optima by utilizing the population’s holistic information and the direction of the differential vector.

On the other hand, for integrating the benefits of multiple mutation operators, many combined strategies were also designed [27,28,29,30,31,32,33,34,35,36,37,38,39,40,41]. For example, by using four different mutation operators as the mutation pool, and dynamically choosing one among them for each individual based on their historical successful ratio, Qin et al. [27] presented a self-adaptive selection mechanism. By simultaneously employing three mutation operators for every individual to generate offspring, Wang et al. [28] developed a composite mutation strategy. Gui et al. [30] randomly divided the whole population into multiple groups and assigned different mutation operators for each individual within one group based on its role. Li et al. [31] introduced reinforcement learning to dynamically provide the selection chance of each candidate mutation operator. By introducing the idea of a cultural algorithm and different mutation strategies into belief space, Deng et al. [35] proposed a new combined mutation strategy to balance the global exploration ability and local optimization ability. Moreover, for constraint optimization problems, Qiao et al. [38] presented a self-adaptive resources allocation-based mutation strategy by assigning a suitable operator to each individual based on its performance feedback. Besides, by hybridizing with an estimation of distribution algorithm, Li et al. [40] designed a hybrid DE variant, and by dividing the whole population into leader-adjoint populations and separately assigning one operator for each subpopulation, Li et al. [41] further proposed a combined way to integrate the advantages of several different operators.

Moreover, with respect to the control parameters involved in the DE algorithm, many adaptive approaches have also been presented over recent decades [9,28,30,42,43,44,45,46,47,48,49]. In particular, for the scale factor and crossover rate, by making full use of the successful parameters, Zhang et al. [9] presented an adaptive parameter setting, and Tanabe et al. [44] further introduced the historical success information of the population and put forward a history-based adaptive approach (SHADE). By introducing a fuzzy logic controller, Liu et al. [42] proposed a fuzzy adaptive parameter control method, and by integrating the scale factor with individuals and adaptively updating it according to the performance of its corresponding offspring, Brest et al. [43] proposed another self-adapting parameter control strategy (jDE). Moreover, for the population size, by linearly reducing the size of the population during the evolution process, Tanabe et al. [45] presented a linear reduction mechanism. Meanwhile, by fully using the diversity of the population to evaluate its search state, Poláková et al. [47] dynamically increased or decreased the population, and by fully using the performance of the best individual to evaluate the search state of the population, Xia et al. [48] designed an adaptive control mechanism to dynamically adjust the size of the population. Moreover, by introducing a sawtooth-linear function to calculate the size of the population, Zeng et al. [49] further proposed a sawtooth-linear population size adaptive method to maintain the search ability of the algorithm.

Notably, as described above, one can find that the special characteristic of the individual is rarely considered to determine its guider under the framework of DE/current-to-guiding/1, the adaptive parameter settings with the historical search information are usually adopted to set the control parameters for the whole population, and the existing population size control approaches often adopt one single rule to adjust the population size, and even require additionally measuring the search state of the population. Thus, the biases that obtain the successful offspring could not be effectively handled and would lead to a serious degradation of the performance of the algorithm, while the varying search needs of the algorithm may not be fully satisfied at different evolutionary stages, and the computational cost of the algorithm might be seriously increased. Therefore, it is necessary to develop a more promising DE variant.

3. Proposed Algorithm

In this section, we provide a detailed description of the proposed algorithm (GCIDE), including a new mutation strategy, named DE/current-to-pbest_id/1, a group-based competitive control parameter setting, and a piecewise population size reduction mechanism.

3.1. New Mutation Strategy

As can be seen from the Introduction, the settings of p in the existing versions of DE/current-to-pbest/1 are usually fixed or controlled by a formula related to the number of iterations. These may result in inefficient searches for the algorithm and the risk of falling into local optimum. For this, a new mutation strategy named DE/current-to-pbest_id/1 is proposed in this paper as follows:

where

is the associated guiding individual for the target individual by randomly selecting from the top p of individuals in the current population.

From Equation (9), we can see that the selection of depends on the value of p for every target individual. A smaller p makes the population converge to the better individuals quickly. This can ensure the convergence speed of the algorithm to some extent, but increases the risk of falling into the local optimum. A larger p can ensure the diversity of the population during the evolutionary process to a certain extent, but has the limitation of slow convergence. Thus, by using the fitness information of individuals, the following equation is designed to set its corresponding p by

where is the fitness value of .

and are the minimum fitness value and maximum fitness value in the population, respectively, is a very small positive number to prevent p from generating invalid values in the later iteration, and is set to 0.01 here. From Equation (10), individuals with smaller fitness values are more inclined to exploitation and their corresponding p are smaller, while individuals with larger fitness values are more inclined to exploration, and their corresponding p are larger. Thus, this setting can make full use of the characteristics of different individuals, and thus balance well the exploration and exploitation of the algorithm.
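The idea of tying p to an individual's fitness can be illustrated with a short sketch. The exact form of Equation (10) is not reproduced here: the code assumes a min-max normalization of the fitness, offset by the small constant 0.01 in both numerator and denominator, which matches the described behaviour (smaller fitness gives a smaller p, and p stays valid when all fitness values coincide). The names `fitness_based_p` and `choose_guider` are hypothetical.

```python
import math
import random

def fitness_based_p(fitness, eps=0.01):
    """Assumed form: p_i = (f_i - f_min + eps) / (f_max - f_min + eps), so p_i lies in (0, 1]."""
    f_min, f_max = min(fitness), max(fitness)
    return [(f - f_min + eps) / (f_max - f_min + eps) for f in fitness]

def choose_guider(fitness, p_i, rng):
    """Pick the index of a guider at random from the best ceil(p_i * NP) individuals."""
    NP = len(fitness)
    ranked = sorted(range(NP), key=lambda idx: fitness[idx])  # best (lowest) first
    top = max(1, math.ceil(p_i * NP))
    return rng.choice(ranked[:top])
```

Under this assumption, the best individual is guided almost exclusively by itself or its closest competitors, while the worst individual may pick any member of the population as its guider.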

3.2. Group-Based Competitive Control Parameter Setting

The widely used methods for adjusting the control parameters are the adaptive ones using the historical successful parameters. For example, in references [9,44], all the successful parameters of individuals are employed to update their generation distributions for the next population. However, when there are some biases which obtain the successful offspring in the last iteration, they may result in misleading the creation of the next parameters, and are used for every individual. This could cause a serious degradation of the performance of the algorithm. For this, a group-based competitive control parameter setting shall be proposed in this subsection.

In this new method, the population is first randomly divided into k groups with equal sizes, and the parameters including crossover rate Cr and scaling factor F of individuals in each group are independently generated. Specifically, for the i-th individual in the k-th group, its corresponding crossover rate Cri and scaling factor Fi are generated by

where is a normal distribution with mean and standard deviation 0.1, is a Cauchy distribution with location parameter and scale parameter 0.1. At the beginning of the algorithm, we let for . Notably, in order to avoid being misled by the bias occurring in the last iteration, and also to effectively ensure the adaptivity of the algorithm, the historical successful parameters are still adopted here, but they are only used to update the values of and for the group with the worst performance. Specifically, the detailed update procedures of and can be, respectively, described as

Herein, and are the sets of successful Cr and F, respectively, is the fitness deviation between the target individual and its offspring , and idx denotes the index of the group with the worst performance, which is characterized by the success rate (j = 1, 2, …, k) of the individuals in the group here as

Here, is a very small positive number and is set to 0.01, is the number of successful individuals in the j-th group, and is the number of unsuccessful individuals in the j-th group. Note that if there are multiple groups who have the same minimal success rate, the updated and will be employed for the group randomly selected among them. Obviously, from Equations (11)–(14), one can see that the number of groups k might importantly affect the performance of the proposed setting. In fact, a larger k may lead to the defect that the overly outdated successful parameters are stored for a long time and employed to generate the control parameters, which have not been suitable for the search requirements of the current population, thus degrading the search effectiveness of the algorithm. Thereby, the value of k should not be too large, and we set k to 4 in this paper by a series of tuning experiments, which can be further found in Section 4.1.

As can be seen from the above, the proposed setting not only makes full use of the successful parameters to maintain the adaptivity of the algorithm for varying search states, but also avoids being misled by the successful biases. Thus, it is capable of further enhancing the optimization ability of the algorithm.
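The grouping, sampling, and competitive update steps of this subsection can be sketched as below. Several details are assumptions made for illustration and are not taken from Equations (11)–(14): the clamping of Cr to [0, 1], the regeneration of non-positive F and truncation of F at 1, the success rate written as successes divided by (successes + failures + 0.01), and SHADE-style means weighted by the fitness improvement (arithmetic for Cr, Lehmer for F). All function names are hypothetical.

```python
import math
import random

def split_into_groups(NP, k, rng):
    """Randomly partition the indices 0..NP-1 into k groups of (near-)equal size."""
    idx = list(range(NP))
    rng.shuffle(idx)
    return [idx[g::k] for g in range(k)]

def sample_parameters(mu_cr, mu_f, rng):
    """Draw Cr from a normal and F from a Cauchy distribution, both with spread 0.1."""
    cr = min(1.0, max(0.0, rng.gauss(mu_cr, 0.1)))
    while True:
        # Inverse-CDF sampling of a Cauchy variate with location mu_f and scale 0.1.
        f = mu_f + 0.1 * math.tan(math.pi * (rng.random() - 0.5))
        if f > 0.0:
            return cr, min(f, 1.0)

def worst_group(n_success, n_fail, rng, eps=0.01):
    """Index of the group with the lowest success rate; ties are broken at random."""
    rates = [ns / (ns + nf + eps) for ns, nf in zip(n_success, n_fail)]
    lowest = min(rates)
    return rng.choice([g for g, r in enumerate(rates) if r == lowest])

def competitive_update(mu_cr, mu_f, idx, s_cr, s_f, delta_f):
    """Overwrite only the worst group's location parameters with the current successes."""
    if not s_cr:
        return  # no successful offspring in this generation: keep everything
    total = sum(delta_f)
    weights = ([d / total for d in delta_f] if total > 0.0
               else [1.0 / len(delta_f)] * len(delta_f))
    mu_cr[idx] = sum(w * c for w, c in zip(weights, s_cr))
    mu_f[idx] = (sum(w * f * f for w, f in zip(weights, s_f))
                 / sum(w * f for w, f in zip(weights, s_f)))
```

Because only one entry of `mu_cr` and `mu_f` changes per generation, a single generation with biased successful parameters cannot affect how the other groups generate their parameters.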

3.3. A Piecewise Population Reduction Mechanism

In most DE variants, although their parameters are automatically adjusted, the population size often remains constant throughout the search process. In fact, smaller population sizes tend to lead to faster convergence, but increase the risk of convergence to a local optimum. On the other hand, a larger population size encourages a wide search, but tends to converge more slowly.

Based on this consideration, we further propose a deterministic rule-based population reduction mechanism herein, which prefers to fully explore the solution space at the early stage of evolution, while converging quickly to find the best solution at the later stage of evolution. In detail, the specific formula for computing the population size is as follows:

Herein, and denote the initial and minimum population sizes, respectively, and and denote the maximum and current number of function evaluations, respectively. From Equation (15), it can be seen that at the early and later stages of evolution, the population size is monotonously reduced and has a slower and faster speed, separately. When the new population size is smaller than the current one, the next population will just consist of the best NP individuals in the current population based on their fitness values. Thus, this mechanism is able to effectively promote the balance between exploration and exploitation.
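The truncation step and the slow-then-fast behaviour can be illustrated as follows. The schedule in `piecewise_size` is a stand-in invented for this sketch and is not Equation (15): it assumes a quadratic segment that spends a quarter of the total reduction during the first half of the evaluation budget, followed by a linear segment that spends the rest. Only `shrink_population`, which keeps the best individuals by fitness, follows directly from the text; all names and the `switch` and `early_share` parameters are hypothetical.

```python
def piecewise_size(fes, max_fes, np_ini, np_min, switch=0.5, early_share=0.25):
    """Illustrative schedule: slow reduction before `switch`, faster reduction after it."""
    t = fes / max_fes
    if t <= switch:
        frac = early_share * (t / switch) ** 2
    else:
        frac = early_share + (1.0 - early_share) * (t - switch) / (1.0 - switch)
    return max(np_min, round(np_ini - (np_ini - np_min) * frac))

def shrink_population(pop, fitness, new_size):
    """Keep the `new_size` best individuals (lowest fitness) when the target size drops."""
    if new_size >= len(pop):
        return list(pop), list(fitness)
    keep = sorted(range(len(pop)), key=lambda idx: fitness[idx])[:new_size]
    return [pop[i] for i in keep], [fitness[i] for i in keep]
```

With an initial size of 100 and a minimum size of 4, this stand-in schedule removes 24 individuals during the first half of the budget and 72 during the second half.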

Specifically, by integrating the proposed mutation strategy, a group-based competitive control parameter setting and a piecewise population reduction mechanism, the overall procedure of the proposed algorithm GCIDE is also shown in Algorithm 1.

| Algorithm 1 The detailed procedure of GCIDE |
| --- |
| 1: Input the initial parameters, including population size NP, the number of groups k, the initial values of and for each group, and the generation counter G = 0; |
| 2: Randomly initialize the population by Equation (1); |
| 3: Evaluate the fitness value of each individual; |
| 4: while the termination condition is not satisfied do |
| 5: Randomly divide the population into k equal groups; |
| 6: For each group, create the control parameters for its individuals by Equation (11); |
| 7: for i = 1:NP |
| 8: Calculate the p value for the i-th individual by Equation (10); |
| 9: Perform mutation using Equation (9); |
| 10: Perform crossover using Equation (7); |
| 11: Perform selection using Equation (8); |
| 12: end for |
| 13: Calculate the success rate for each group by Equation (14); |
| 14: Update the control parameters by Equations (12)–(14); |
| 15: Calculate the new population size using Equation (15), and create the next population; |
| 16: G = G + 1; |
| 17: end while |

From Algorithm 1, one can see that in each generation, the population is first randomly divided into k groups, and the control parameters for individuals in each group are independently created from the corresponding location parameters. After this, the proposed mutation strategy is employed to create the mutant individual for each target individual, and the binomial crossover operation is used to generate its offspring. Subsequently, the greedy selection strategy is adopted to update the current population according to the fitness value, and the presented piecewise population reduction mechanism is further utilized to remove the unpromising individuals from the current population. In particular, the proposed mutation strategy makes full use of the fitness value of each individual to properly choose a guider from the population and create its search direction, which adaptively adjusts the search ability of each individual. Meanwhile, the proposed group-based competitive control parameter setting simultaneously utilizes multiple pieces of successful parameter information to generate the control parameters for the current population, and competitively updates the worst historical location information with the current search records during the whole evolution process. This setting can not only maintain the adaptivity of the algorithm for varying search environments, but also reduce the risk of being misled by the successful biases. Moreover, the presented piecewise population reduction mechanism reduces the size of the population slowly at the early stage of evolution and quickly at the later stage, which can effectively ensure the exploration of the algorithm at the beginning of the search process and speed up its convergence at the final evolution stage. Therefore, the proposed GCIDE can properly adjust the search ability of each individual, and effectively achieve the trade-off between exploration and exploitation of the algorithm.

3.4. Complexity Analysis

Compared with classic DE, whose complexity is , GCIDE has additional computational burdens caused by three parts, including the mutation operation, the parameter control setting, and the population reduction mechanism. Herein, is the maximal number of generations. During one generation, the computation complexity of the mutation operation just includes that of calculating the value of pi, which needs to find the maximum and minimum fitness values among the current population. Thus, its extra complexity is . Meanwhile, for the parameter control setting, its only extra part is to calculate the success rate of every group based on their fitness values. Thereby, its extra complexity is . Moreover, the extra complexity of the population reduction mechanism is . As a result, the total complexity of GCIDE is , simplified as . Therefore, the new algorithm will not cause extra serious computational burden.

4. Numerical Experimental Results and Analysis

In this section, a series of experiments is performed to verify the advantages of the proposed GCIDE. First, suitable values of the newly introduced parameters are determined experimentally. Then, comparative experiments with five typical DE variants and five typical non-DE heuristic algorithms are conducted on the IEEE CEC 2017 test suite [50]. This test suite includes four types of functions, namely unimodal functions, simple multimodal functions, hybrid functions, and composition functions. To obtain statistical results, each algorithm performs 30 independent runs on each benchmark function. Moreover, a validity experiment is conducted on the newly proposed strategies of GCIDE. Finally, the performance of GCIDE is verified on three practical problems.

4.1. Sensitivity Analyses of k and NPini

From Section 3, it can be seen that the proposed algorithm has two parameters: the number of groups k and the initial population size NPini. To analyze their effects on the performance of GCIDE, experiments are conducted on eight typical functions with different values of k and NPini. The eight chosen functions are the unimodal functions F1 and F2, the simple multimodal functions F5 and F7, the hybrid functions F10 and F16, and the composition functions F21 and F26. The parameter k is set to 4, 6, 8, and 10, and NPini is set to 12D, 18D, 23D, and 25D. Table 1 lists the numerical results of GCIDE with different k and NPini, where the best result for each function is marked in bold (the same below).
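The candidate settings listed above can be enumerated with a few lines of Python. This is a sketch that assumes a full factorial design over the two parameters and uses D = 30 purely for illustration; the names `K_VALUES`, `NP_FACTORS`, and `parameter_grid` are hypothetical.

```python
from itertools import product

K_VALUES = (4, 6, 8, 10)        # candidate numbers of groups k
NP_FACTORS = (12, 18, 23, 25)   # NPini = factor * D


def parameter_grid(dim):
    """Return every (k, NPini) pair for a problem of dimension dim."""
    return [(k, factor * dim) for k, factor in product(K_VALUES, NP_FACTORS)]


# D = 30 is an assumed dimension for this example.
grid = parameter_grid(30)
```

Under these assumptions the grid contains 16 combinations, with NPini ranging from 360 to 750 when D = 30.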

Table 1.

Numerical results of GCIDE with various k and NPini.

According to the results in Table 1, on the unimodal functions F1 and F2, GCIDE obtains superior results with initial population sizes of 12D and 23D and group numbers of six and eight. Meanwhile, on the simple multimodal functions F5 and F7, GCIDE performs very well when the initial population size is 23D or 25D and the group number is four. Besides, on the hybrid functions F10 and F16, a smaller initial population size and a larger group number yield better results. Finally, on the composition functions F21 and F26, a larger initial population size and a smaller group number are more advantageous for GCIDE. Moreover, Table 2 reports the rankings of GCIDE with the various parameter values, which are based on the Friedman test [51]. From Table 2, it can be seen that GCIDE has the best ranking when the initial population size is 23D and the group number is four. Therefore, these two parameter values are used in this paper.

Table 2.

Ranking of GCIDE with various parameter values.
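The idea behind a Friedman-type ranking of parameter settings can be illustrated with the following sketch. It assumes that each configuration is ranked on every function by its error (1 = best, ties sharing the mean rank) and that the ranks are then averaged over the functions. The function names and the numbers in `example_errors` are hypothetical and are not taken from Table 1 or Table 2.

```python
def average_ranks(values):
    """Rank values in ascending order (1 = smallest); ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1
        for i in order[pos:end + 1]:
            ranks[i] = mean_rank
        pos = end + 1
    return ranks


def friedman_mean_ranks(errors_by_function):
    """errors_by_function[f][c] is the error of configuration c on function f."""
    n_conf = len(errors_by_function[0])
    totals = [0.0] * n_conf
    for row in errors_by_function:
        for c, rank in enumerate(average_ranks(row)):
            totals[c] += rank
    return [total / len(errors_by_function) for total in totals]


# Hypothetical errors of three configurations on three functions.
example_errors = [
    [0.3, 0.1, 0.2],
    [5.0, 5.0, 1.0],
    [2.0, 3.0, 1.0],
]
mean_ranks = friedman_mean_ranks(example_errors)
```

The configuration with the smallest mean rank would be the one selected, which in this toy example is the third.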

4.2. Comparison and Discussion

In this subsection, the performance of GCIDE is evaluated by comparing it with five well-known DE variants (JADE [9], jDE [43], FDDE [24], rank-DE [10], and SHADE [44]) and five other typical heuristic algorithms (EPSO [52], MKE [53], HGSA [54], HLWCA [55], and AOA [56]).

Among the five other heuristic algorithms, EPSO is a recent and well-known variant of PSO that integrates five different top PSO variants. MKE is a memetic evolutionary algorithm that randomly selects some Monkey King particles and then transforms each of them into a small group of monkeys for exploitation. Meanwhile, HGSA and HLWCA are both heuristic optimizers inspired by physics. HGSA is an enhanced gravitational search algorithm that uses hierarchical structures to interact with the population and thereby enhance the exploration of the algorithm, while HLWCA is a recent variant of the water cycle algorithm that introduces the idea of hierarchical learning. Moreover, AOA is a meta-heuristic method that simulates the distributional behavior of the main arithmetic operators in mathematics. These methods are representative of their respective families, so it is meaningful and reasonable to compare GCIDE with them here.

In these experiments, the parameters involved in the above ten compared algorithms are set to the same settings as in their original papers, and those of our method are set to the mentioned settings in Section 3. For clarity, their detailed parameter settings are further given in Table 3.

Table 3.

Parameter settings.

4.2.1. Comparison with Five DE Variants

First, five DE variants are selected as the comparison algorithms to verify the performance of GCIDE, namely JADE [9], jDE [43], FDDE [24], rank-DE [10], and SHADE [44]. Table 4 and Table 5 provide the comparison results of GCIDE and the five compared algorithms on the IEEE CEC 2017 benchmark test suite with D = 30 and D = 50, respectively. Herein, “+”, “−”, and “=” indicate that GCIDE performs better than, worse than, and similarly to the compared algorithm, respectively, and “Ranking” is the average rank based on the Friedman test [51] (the same below).

Table 4.

Numerical results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on IEEE CEC 2017 test suite when D = 30.

Table 5.

Numerical results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on IEEE CEC 2017 test suite when D = 50.

As can be seen from Table 4, when D = 30, the proposed GCIDE obtains better results on most test functions, the exceptions being nine functions: F1–F3, F6, F18, F20, F27, and F29–F30. Specifically, compared with JADE, GCIDE wins on 25 functions, loses on 3, and ties on 2. Against jDE, GCIDE wins on 23, loses on 5, and ties on 2. Compared with FDDE, GCIDE wins on 20, loses on 9, and ties on 1. Compared with rank-DE, GCIDE wins on 27, loses on 2, and ties on 1. Compared with SHADE, GCIDE wins on 25, loses on 3, and ties on 2. Meanwhile, when D = 50, Table 5 shows that the proposed GCIDE has a clear advantage on all four types of functions (unimodal, simple multimodal, hybrid, and composition), and loses the advantage on only eight functions: F1, F3–F4, F6, F11, F25, F28, and F30. Specifically, compared with JADE, GCIDE wins on 25, loses on 5, and ties on 0. Against jDE, GCIDE wins on 23, loses on 7, and ties on 0. Compared with FDDE, GCIDE wins on 25, loses on 5, and ties on 0. Compared with rank-DE, GCIDE wins on 26, loses on 4, and ties on 0. Compared with SHADE, GCIDE wins on 25, loses on 5, and ties on 0.
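The win/loss/tie bookkeeping reported above can be checked mechanically. The sketch below stores the counts exactly as stated in the text and verifies that each triple covers all 30 benchmark functions. The helper names `tally` and `is_consistent` are hypothetical, and the ASCII character `-` stands in for the “−” symbol used in the tables.

```python
N_FUNCTIONS = 30  # functions F1 to F30 of the test suite

# (wins, losses, ties) of GCIDE against each DE variant, as reported in the text.
REPORTED = {
    30: {"JADE": (25, 3, 2), "jDE": (23, 5, 2), "FDDE": (20, 9, 1),
         "rank-DE": (27, 2, 1), "SHADE": (25, 3, 2)},
    50: {"JADE": (25, 5, 0), "jDE": (23, 7, 0), "FDDE": (25, 5, 0),
         "rank-DE": (26, 4, 0), "SHADE": (25, 5, 0)},
}


def tally(outcomes):
    """Count '+', '-', and '=' marks in a per-function outcome string."""
    return outcomes.count("+"), outcomes.count("-"), outcomes.count("=")


def is_consistent(counts, n_functions=N_FUNCTIONS):
    """A (wins, losses, ties) triple must account for every function once."""
    return sum(counts) == n_functions


all_consistent = all(
    is_consistent(counts)
    for per_dim in REPORTED.values()
    for counts in per_dim.values()
)
```

With the counts above, every triple sums to 30, so the reported tallies are internally consistent.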

To verify the statistical significance of these results, Table 6 and Table 7 list the statistical results of GCIDE and its competitors based on Wilcoxon signed-rank tests [51] when D = 30 and D = 50, respectively. From Table 6 and Table 7, it can be seen that GCIDE obtains higher R+ than R− values against each of the five compared algorithms for both D = 30 and D = 50, and the obtained p-values are all much less than 0.05. Thus, GCIDE differs significantly from the compared algorithms.
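For readers unfamiliar with the R+ and R− statistics, the following sketch shows one way to compute them for paired results. It is a simplified illustration: zero differences are dropped (other conventions for handling them exist), tied absolute differences share the mean rank, the p-value computation is omitted, and the function name and sample data are hypothetical.

```python
def signed_rank_sums(proposed, competitor):
    """Return (R_plus, R_minus) for paired errors, where lower is better.

    R_plus sums the ranks of functions on which the proposed method is
    better, R_minus those on which the competitor is better.
    """
    # Positive difference means the proposed method has the smaller error.
    diffs = [c - p for p, c in zip(proposed, competitor) if c != p]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    pos = 0
    while pos < len(order):
        end = pos
        while (end + 1 < len(order)
               and abs(diffs[order[end + 1]]) == abs(diffs[order[pos]])):
            end += 1
        mean_rank = (pos + end) / 2 + 1  # ties share the mean rank
        for i in order[pos:end + 1]:
            ranks[i] = mean_rank
        pos = end + 1
    r_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    r_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return r_plus, r_minus


# Hypothetical errors on four functions; the last pair is a tie and is dropped.
r_plus, r_minus = signed_rank_sums([1.0, 2.0, 3.0, 4.0], [1.5, 1.0, 5.0, 4.0])
```

In this toy example the three non-zero differences receive ranks 1, 2, and 3, so R+ = 4 and R− = 2, and the two sums add up to n(n + 1)/2 = 6.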

Table 6.

Comparison results of GCIDE with JADE, jDE, FDDE, rank-DE, and SHADE based on Wilcoxon signed-rank tests when D = 30.

Table 7.

Comparison results of GCIDE with JADE, jDE, FDDE, rank-DE, and SHADE based on Wilcoxon signed-rank tests when D = 50.

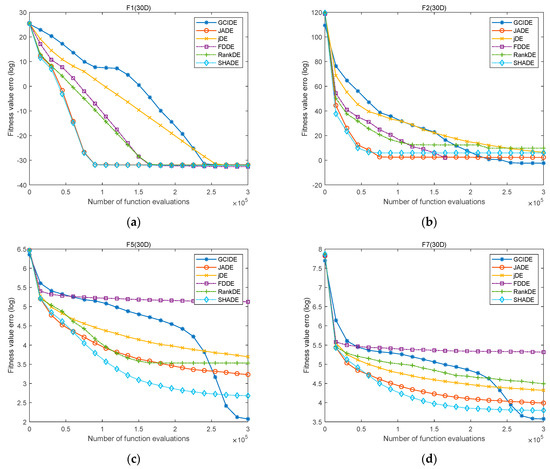

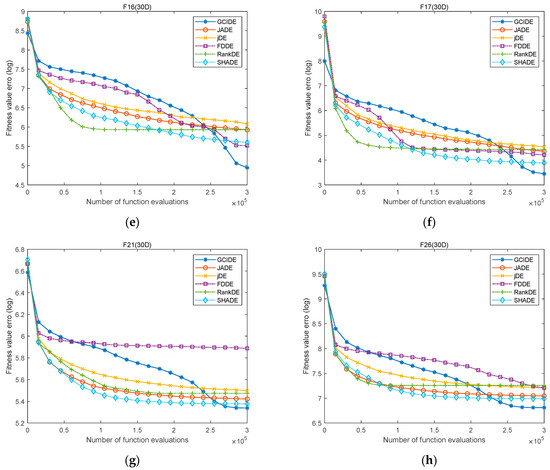

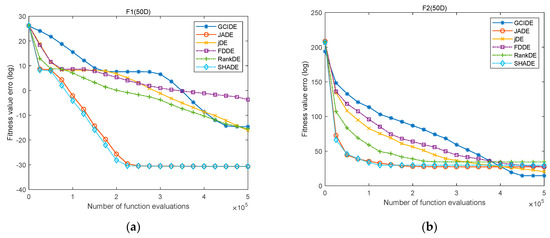

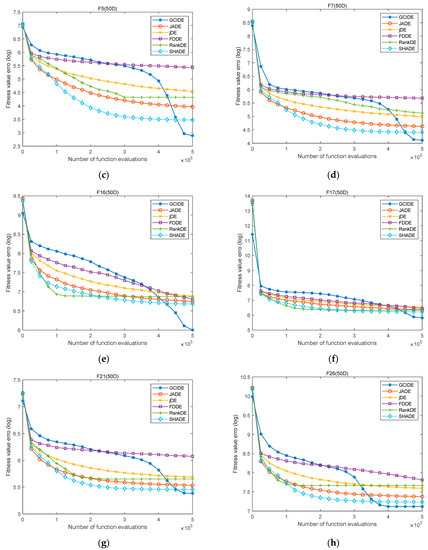

Moreover, to clearly show the convergence performance of GCIDE, the evolution curves of GCIDE and the five compared algorithms are depicted on eight typical functions, namely F1, F2, F5, F7, F16, F17, F21, and F26. Figure 1 and Figure 2 show their evolution curves when D = 30 and D = 50, respectively. As shown in Figure 1, when D = 30, GCIDE converges more slowly in the early evolutionary stage and rapidly in the late evolutionary stage. In particular, for the simple multimodal functions F5 and F7, GCIDE converges quickly to better solutions while the other algorithms fall into local optima. For the hybrid and composition functions, GCIDE behaves similarly to the compared algorithms but attains higher accuracy. Meanwhile, when D = 50, as shown in Figure 2, GCIDE still shows higher convergence accuracy. Therefore, GCIDE has better convergence performance.

Figure 1.

The evolution curves of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on F1 (a), F2 (b), F5 (c), F7 (d), F16 (e), F17 (f), F21 (g), F26 (h) with D = 30.

Figure 2.

The evolution curves of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on F1 (a), F2 (b), F5 (c), F7 (d), F16 (e), F17 (f), F21 (g), F26 (h) with D = 50.

4.2.2. Comparison with Five Other Heuristic Algorithms

Five non-DE heuristic algorithms are further selected as comparison algorithms to show the performance of GCIDE, including EPSO [52], MKE [53], HGSA [54], HLWCA [55], and AOA [56]. Table 8 and Table 9 provide the comparison results of GCIDE and these five compared algorithms on the IEEE CEC2017 test suite with D = 30 and D = 50, respectively.

Table 8.

Numerical results of GCIDE, EPSO, MKE, HGSA, HLWCA, and AOA on IEEE CEC 2017 test suite when D = 30.

Table 9.

Numerical results of GCIDE, EPSO, MKE, HGSA, HLWCA, and AOA on IEEE CEC 2017 test suite when D = 50.

From Table 8, it can be seen that when D = 30 the proposed GCIDE obtains better results on most of the functions, the exceptions being eleven test functions: F1, F4, F6, F9, F21, F22, F24–F26, F28, and F29. Specifically, compared with EPSO, GCIDE wins on 23 functions, loses on 7, and ties on 0. Against MKE, GCIDE wins on 27, loses on 3, and ties on 0. Compared with HGSA, GCIDE wins on 24, loses on 6, and ties on 0. Compared with HLWCA, GCIDE wins on 28, loses on 2, and ties on 0. Compared with AOA, GCIDE is better on all test functions. Meanwhile, when D = 50, Table 9 shows that the proposed GCIDE is also superior on the nineteen functions, including F1, F3, F5, F7, F8, F10–F20, F22, F23, F27, and F29. Specifically, compared with EPSO, GCIDE wins on 23, loses on 7, and ties on 0. Against MKE, GCIDE wins on 27, loses on 3, and ties on 0. Compared with HGSA, GCIDE wins on 22, loses on 8, and ties on 0. Compared with HLWCA, GCIDE wins on 25, loses on 5, and ties on 0. Compared with AOA, GCIDE is better on all test functions.

To verify the statistical significance of these results, Table 10 and Table 11 list the statistical results of GCIDE and its competitors based on Wilcoxon signed-rank tests [51] when D = 30 and D = 50, respectively. From Table 10 and Table 11, it can be seen that GCIDE obtains higher R+ than R− values against each of the five compared algorithms for both D = 30 and D = 50, and the obtained p-values are all much less than 0.05. Thus, GCIDE differs significantly from the compared algorithms.

Table 10.

Comparison results of GCIDE with EPSO, MKE, HGSA, HLWCA, and AOA based on Wilcoxon signed-rank tests when D = 30.

Table 11.

Comparison results of GCIDE with EPSO, MKE, HGSA, HLWCA, and AOA based on Wilcoxon signed-rank tests when D = 50.

4.3. Effectiveness of the Proposed Strategies

In this subsection, comparative experiments are conducted to examine the effectiveness of the proposed strategies. For simplicity, the variant of GCIDE with a fixed population size is denoted by GCIDE-1, and the variant of GCIDE without the group-based parameter control is denoted by GCIDE-2. Also, to investigate the effect of different mutation strategies on the performance of GCIDE, two additional mutation operators, DE/rand/1 and DE/best/1, are chosen, and the versions of GCIDE using them are denoted by GCIDE (rand) and GCIDE (best), respectively. Table 12 provides the numerical results of GCIDE and its four variants on the IEEE CEC 2017 test suite with D = 30.

Table 12.

Numerical results of GCIDE and its four variants above on IEEE CEC 2017 test suite with D = 30.

As can be seen from Table 12, the mutation strategy has a large effect on the performance of GCIDE, and both DE/rand/1 and DE/best/1 fail to achieve good optimization performance. Besides, GCIDE-2 performs worse than GCIDE on the simple multimodal functions and composition functions. Meanwhile, the overall performance of GCIDE-1 over all test functions is poorer than that of GCIDE. The reason is that the group-based parameter control method effectively reduces the mutual misleading of parameters, and the piecewise population size reduction mechanism enhances the performance of the algorithm by inclining it toward exploration in the early stage and exploitation in the later stage. Thereby, the proposed strategies effectively improve the optimization performance of GCIDE.

4.4. Real Applications

In this subsection, three practical problems are adopted to show the practicality of GCIDE: the tension/compression spring design problem [57], the side collision problem of automobiles [58], and the spread spectrum radar polyphase code design problem [59].

4.4.1. Design of Tension/Compression Spring

First, the tension/compression spring design problem [57] is used to show the performance of GCIDE. This problem is subject to constraints on minimum deflection, shear stress, surge frequency, limits on the outside diameter, and design variables. The design variables are the mean coil diameter D(=x1), the wire diameter d(=x2), and the number of active coils N(=x3). The problem can be stated as:

and it is subject to the following constraints:

while the ranges of these variables are

The numerical results of GCIDE and the five DE variants used in the last subsection on this problem are shown in Table 13, where each algorithm is run 30 times to obtain the mean, standard deviation, best, and worst values. From Table 13, it can be seen that GCIDE, FDDE, rank-DE, and SHADE achieve the same mean, best, and worst values, while GCIDE has the best standard deviation. This shows that GCIDE has strong solving ability and better stability than the other compared algorithms on this real problem.
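For reference, the tension/compression spring design problem is usually stated in the literature as minimizing the spring weight subject to four inequality constraints. The sketch below follows that commonly used formulation and is written directly in terms of the physical variables D, d, and N to avoid any ambiguity about variable indices. The coefficients and bounds are those of the standard benchmark and are assumed here, since they cannot be confirmed from the present text; the function names are hypothetical.

```python
def spring_weight(D, d, N):
    """Objective: spring weight for mean coil diameter D, wire diameter d,
    and number of active coils N (standard benchmark form, assumed)."""
    return (N + 2.0) * D * d ** 2


def spring_constraints(D, d, N):
    """Constraint values g_i; a design is feasible when every g_i <= 0."""
    g1 = 1.0 - (D ** 3 * N) / (71785.0 * d ** 4)               # minimum deflection
    g2 = ((4.0 * D ** 2 - d * D) / (12566.0 * (D * d ** 3 - d ** 4))
          + 1.0 / (5108.0 * d ** 2) - 1.0)                     # shear stress
    g3 = 1.0 - 140.45 * d / (D ** 2 * N)                       # surge frequency
    g4 = (D + d) / 1.5 - 1.0                                   # outside diameter
    return [g1, g2, g3, g4]


# Variable ranges of the standard benchmark (assumed).
BOUNDS = {"d": (0.05, 2.0), "D": (0.25, 1.3), "N": (2.0, 15.0)}


def is_feasible(D, d, N):
    """Check the box bounds and all four inequality constraints."""
    in_bounds = (BOUNDS["D"][0] <= D <= BOUNDS["D"][1]
                 and BOUNDS["d"][0] <= d <= BOUNDS["d"][1]
                 and BOUNDS["N"][0] <= N <= BOUNDS["N"][1])
    return in_bounds and all(g <= 0.0 for g in spring_constraints(D, d, N))
```

An optimizer such as GCIDE would minimize `spring_weight` over the box given by `BOUNDS` while keeping every constraint value non-positive.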

Table 13.

Numerical results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on the tension/compression spring design problem.

4.4.2. Side Collision Problem of Automobile

Common forms of automobile collisions include frontal collisions, side collisions, and rear-end collisions. Among them, side collisions are less frequent than the other two forms, yet they are the most harmful to drivers and passengers. Moreover, with the increasing influence of C-NCAP management rules on consumers, automakers are paying more attention to the passive safety of cars. More descriptions and discussions of this problem can be found in reference [58].

For clarity, this practical problem can be modelled by the following optimization problem with 11 decision variables:

Here, is a function on the weight of the door, M denotes the penalty term here and is set to 1000, and denotes the constraint function, which can be shown below:

Here, is the B-pillar inner, is the B-pillar reinforcement, is the floor side inner, is the cross member, is the door beam, is the door beltline reinforcement, is the roof rail, is the materials of B-pillar inner, is the floor side inner, is the barrier height, and is the hitting position. The ranges of these variables are

As described above, D = 11 in this experiment. Table 14 lists the experimental results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE over 30 independent runs on this problem, including the mean, standard deviation, best, and worst values. From Table 14, we can see that GCIDE obtains the best results on all indicators. Thus, GCIDE is a more promising optimizer for this real problem.
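The penalty term M = 1000 mentioned in the problem description suggests that constraint violations are folded into the objective. The exact penalized form used in the paper cannot be recovered from the text here, so the sketch below shows only one common choice, a linear static penalty on the total violation of constraints written as g_i(x) ≤ 0. The function name and the toy objective and constraint are hypothetical and unrelated to the actual side collision model.

```python
def penalized(objective, constraints, x, penalty=1000.0):
    """Objective plus penalty times the total violation of g_i(x) <= 0.

    This is an assumed linear static-penalty form, not necessarily the one
    used in the paper.
    """
    violation = sum(max(0.0, g) for g in constraints(x))
    return objective(x) + penalty * violation


# Toy example: minimize x0 + x1 subject to x0 >= 1, written as 1 - x0 <= 0.
def toy_objective(x):
    return x[0] + x[1]


def toy_constraints(x):
    return [1.0 - x[0]]


feasible_value = penalized(toy_objective, toy_constraints, [2.0, 0.0])
infeasible_value = penalized(toy_objective, toy_constraints, [0.5, 0.0])
```

With this form a feasible point keeps its original objective value, while an infeasible point is pushed far away from the optimum, so an unconstrained optimizer can be applied directly.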

Table 14.

Numerical results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on the side collision problem of automobile.

4.4.3. Spread Spectrum Radar Polyphase Code Design

Finally, another real engineering problem, namely Spread Spectrum Radar Polyphase Code Design (SSRPPCD) [59], is used to demonstrate the effectiveness of GCIDE. In detail, this problem can be mathematically described as:

Herein, , , for and

From the description of the problem, it is clear that the problem has a complex structure, which is a great challenge for the performance of the algorithm.

Table 15 lists the numerical results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE when D = 30, including the mean, standard deviation, best, and worst values over the 30 runs. From Table 15, one can see that GCIDE performs better than all of its counterparts in terms of the mean value, standard deviation, best value, and worst value. Thus, GCIDE is a better optimizer on this problem.

Table 15.

Numerical results of GCIDE, JADE, jDE, FDDE, rank-DE, and SHADE on the Spread Spectrum Radar Polyphase Code Design when D = 30.

5. Conclusions

In this paper, an enhanced DE algorithm (GCIDE) was presented for solving global optimization problems. In GCIDE, a new mutation operator named “DE/current-to-pbest_id/1” was first developed to properly adjust the search ability of each individual by using its fitness value to determine the chosen range of its guider. Meanwhile, a group-based competitive parameter control setting was proposed to meet the various search requirements of the population by randomly and equally dividing the whole population into multiple groups, independently creating the control parameters for each group, and competitively updating the worst location parameter information with the current successful parameters. Moreover, a piecewise population size reduction mechanism was put forward to enhance the exploration and convergence of the population at the early and later search stages, respectively. Compared with existing DE variants, GCIDE adaptively adjusts the chosen range of the guider for each individual, independently generates the control parameters for each group, and competitively replaces the worst location parameter information among all groups with the current successful parameters, while reducing the population size at different speeds in different search periods. Thus, GCIDE is capable of effectively enhancing the adaptivity of the algorithm and balancing its exploration and exploitation well. Finally, the performance of GCIDE was evaluated and discussed through a series of experiments on the benchmark functions from the IEEE CEC 2017 test suite and three real applications. Experimental results indicated the superiority of GCIDE over ten typical or well-known competing methods.

It should also be mentioned that, in this paper, the fitness value of an individual is the only measure used to characterize it and adjust its search performance, which might not precisely reflect its search potential. Besides, the special requirements of individuals are not fully taken into consideration during the generation of control parameters, and only a few real applications are considered in the current paper. Therefore, in the future we will design a new approach for evaluating the search characteristics of individuals, develop a more effective and efficient adaptive parameter control method, and apply GCIDE to more practical optimization problems.

Author Contributions

Conceptualization, Y.G. and M.T.; methodology, X.H.; software, Y.G. and Y.M.; validation, Y.G., Y.M. and M.T.; formal analysis, Y.G.; writing—original draft preparation, Y.G. and M.T.; writing—review and editing, Y.G. and M.T.; visualization, Y.G.; supervision, X.H. and Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the National Natural Science Foundation of China (No. 12101477) and the Scientific Research Startup Foundation of Xi’an Polytechnic University (BS202052).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359.

- de Fátima Morais, M.; Ribeiro, M.H.D.M.; da Silva, R.G.; Mariani, V.C.; dos Santos Coelho, L. Discrete differential evolution metaheuristics for permutation flow shop scheduling problems. Comput. Ind. Eng. 2022, 166, 107956.

- Zou, D.; Gong, D. Differential evolution based on migrating variables for the combined heat and power dynamic economic dispatch. Energy 2022, 238, 121664.

- Wang, G.-G.; Gao, D.; Pedrycz, W. Solving multiobjective fuzzy job-shop scheduling problem by a hybrid adaptive differential evolution algorithm. IEEE Trans. Ind. Inform. 2022, 18, 8519–8528.

- Zhang, X.; Zhang, X.; Wu, Z. Spectrum allocation by wave based adaptive differential evolution algorithm. Ad Hoc Netw. 2019, 94, 101969.

- Chai, X.; Zheng, Z.; Xiao, J.; Yan, L.; Qu, B.; Wen, P.; Wang, H.; Zhou, Y.; Sun, H. Multi-strategy fusion differential evolution algorithm for UAV path planning in complex environment. Aerosp. Sci. Technol. 2022, 121, 107287.

- Kozlov, K.; Ivanisenko, N.; Ivanisenko, V.; Kolchanov, N.; Samsonova, M.; Samsonov, A.M. Enhanced differential evolution entirely parallel method for biomedical applications. In Proceedings of the Parallel Computing Technologies: 12th International Conference, St. Petersburg, Russia, 30 September–4 October 2013; pp. 409–416.

- Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Differential evolution mutations: Taxonomy, comparison and convergence analysis. IEEE Access 2021, 9, 68629–68662.

- Zhang, J.; Sanderson, A.C. JADE: Adaptive Differential Evolution With Optional External Archive. IEEE Trans. Evol. Comput. 2009, 13, 945–958.

- Gong, W.; Cai, Z. Differential evolution with ranking-based mutation operators. IEEE Trans. Cybern. 2013, 43, 2066–2081.

- Wang, J.; Liao, J.; Zhou, Y.; Cai, Y. Differential evolution enhanced with multiobjective sorting-based mutation operators. IEEE Trans. Cybern. 2014, 44, 2792–2805.

- Gong, W.; Cai, Z.; Liang, D. Adaptive ranking mutation operator based differential evolution for constrained optimization. IEEE Trans. Cybern. 2015, 45, 716–727.

- Cai, Y.; Chen, Y.; Wang, T.; Tian, H. Improving differential evolution with a new selection method of parents for mutation. Front. Comput. Sci. 2016, 10, 246–269.

- Yi, W.; Zhou, Y.; Gao, L.; Li, X.; Mou, J. An improved adaptive differential evolution algorithm for continuous optimization. Expert Syst. Appl. 2016, 44, 1–12.

- Sharifi-Noghabi, H.; Rajabi Mashhadi, H.; Shojaee, K. A novel mutation operator based on the union of fitness and design spaces information for Differential Evolution. Soft Comput. 2016, 21, 6555–6562.

- Mohamed, A.W.; Suganthan, P.N. Real-parameter unconstrained optimization based on enhanced fitness-adaptive differential evolution algorithm with novel mutation. Soft Comput. 2017, 22, 3215–3235.

- Meng, Z.; Pan, J.-S. HARD-DE: Hierarchical archive based mutation strategy with depth information of evolution for the enhancement of differential evolution on numerical optimization. IEEE Access 2019, 7, 12832–12854.

- Xia, X.; Tong, L.; Zhang, Y.; Xu, X.; Yang, H.; Gui, L.; Li, Y.; Li, K. NFDDE: A novelty-hybrid-fitness driving differential evolution algorithm. Inf. Sci. 2021, 579, 33–54.

- Wang, M.; Ma, Y.; Wang, P. Parameter and strategy adaptive differential evolution algorithm based on accompanying evolution. Inf. Sci. 2022, 607, 1136–1157.

- Sheng, M.; Chen, S.; Liu, W.; Mao, J.; Liu, X. A differential evolution with adaptive neighborhood mutation and local search for multi-modal optimization. Neurocomputing 2022, 489, 309–322.

- Meng, Z.; Yang, C.; Li, X.; Chen, Y. Di-DE: Depth Information-Based Differential Evolution With Adaptive Parameter Control for Numerical Optimization. IEEE Access 2020, 8, 40809–40827.

- Ma, Y.; Bai, Y. A multi-population differential evolution with best-random mutation strategy for large-scale global optimization. Appl. Intell. 2020, 50, 1510–1526.

- Guan, B.; Zhao, Y.; Yin, Y.; Li, Y. A differential evolution based feature combination selection algorithm for high-dimensional data. Inf. Sci. 2021, 547, 870–886.

- Cheng, J.; Pan, Z.; Liang, H.; Gao, Z.; Gao, J. Differential evolution algorithm with fitness and diversity ranking-based mutation operator. Swarm Evol. Comput. 2021, 61, 100816.

- Liu, D.; He, H.; Yang, Q. Function value ranking aware differential evolution for global numerical optimization. Swarm Evol. Comput. 2023, 78, 101282.

- Li, X.; Wang, K.; Yang, H. PAIDDE: A permutation archive information directed differential evolution algorithm. IEEE Access 2022, 10, 50384–50402.

- Qin, A.K.; Huang, V.L.; Suganthan, P.N. Differential Evolution Algorithm with Strategy Adaptation for Global Numerical Optimization. IEEE Trans. Evol. Comput. 2009, 13, 398–417.

- Wang, Y.; Cai, Z.; Zhang, Q. Differential Evolution with Composite Trial Vector Generation Strategies and Control Parameters. IEEE Trans. Evol. Comput. 2011, 15, 55–66.

- Wang, Y.; Liu, Z.-Z.; Li, J.; Li, H.-X.; Yen, G.G. Utilizing cumulative population distribution information in differential evolution. Appl. Soft Comput. 2016, 48, 329–346.

- Gui, L.; Xia, X.; Yu, F.; Wu, H.; Wu, R.; Wei, B.; Zhang, Y.; Li, X.; He, G. A multi-role based differential evolution. Swarm Evol. Comput. 2019, 50, 100508.

- Li, Z.; Shi, L.; Yue, C.; Shang, Z.; Qu, B. Differential evolution based on reinforcement learning with fitness ranking for solving multimodal multiobjective problems. Swarm Evol. Comput. 2019, 49, 234–244.

- Meng, Z.; Zhong, Y.; Yang, C. CS-DE: Cooperative strategy based differential evolution with population diversity enhancement. Inf. Sci. 2021, 577, 663–696.

- Kumar, A.; Misra, R.K.; Singh, D.; Das, S. Testing a multi-operator based differential evolution algorithm on the 100-digit challenge for single objective numerical optimization. In Proceedings of the 2019 IEEE Congress on Evolutionary Computation (CEC), Wellington, New Zealand, 10–13 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 34–40.

- Fachin, J.M.; Reynoso-Meza, G.; Mariani, V.C.; dos Santos Coelho, L. Self-adaptive differential evolution applied to combustion engine calibration. Soft Comput. 2021, 25, 109–135.

- Deng, W.; Ni, H.; Liu, Y.; Chen, H.; Zhao, H. An adaptive differential evolution algorithm based on belief space and generalized opposition-based learning for resource allocation. Appl. Soft Comput. 2022, 127, 109419.

- Yi, W.; Chen, Y.; Pei, Z.; Lu, J. Adaptive differential evolution with ensembling operators for continuous optimization problems. Swarm Evol. Comput. 2022, 69, 100994.

- Tan, Z.; Tang, Y.; Huang, H.; Luo, S. Dynamic fitness landscape-based adaptive mutation strategy selection mechanism for differential evolution. Inf. Sci. 2022, 607, 44–61.

- Qiao, K.; Liang, J.; Yu, K.; Yuan, M.; Qu, B.; Yue, C. Self-adaptive resources allocation-based differential evolution for constrained evolutionary optimization. Knowl.-Based Syst. 2022, 235, 107653.

- Zhang, H.; Sun, J.; Xu, Z.; Shi, J. Learning unified mutation operator for differential evolution by natural evolution strategies. Inf. Sci. 2023, 632, 594–616.

- Li, Y.; Han, T.; Tang, S.; Huang, C.; Zhou, H.; Wang, Y. An improved differential evolution by hybridizing with Estimation of distribution algorithm. Inf. Sci. 2023, 619, 439–456.

- Li, Y.; Wang, S.; Yang, H. Enhancing differential evolution algorithm using leader-adjoint populations. Inf. Sci. 2023, 622, 235–268.

- Liu, J.; Lampinen, J. A fuzzy adaptive differential evolution algorithm. Soft Comput. 2005, 9, 448–462.

- Brest, J.; Greiner, S.; Boskovic, B.; Mernik, M.; Zumer, V. Self-Adapting Control Parameters in Differential Evolution: A Comparative Study on Numerical Benchmark Problems. IEEE Trans. Evol. Comput. 2006, 10, 646–657.

- Tanabe, R.; Fukunaga, A. Success-history based parameter adaptation for differential evolution. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 71–78.

- Tanabe, R.; Fukunaga, A. Improving the search performance of SHADE using linear population size reduction. In Proceedings of the 2014 IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1658–1665.

- Ali, M.Z.; Awad, N.H.; Suganthan, P.N.; Reynolds, R.G. An Adaptive Multipopulation Differential Evolution With Dynamic Population Reduction. IEEE Trans. Cybern. 2017, 47, 2768–2779.

- Poláková, R.; Tvrdík, J.; Bujok, P. Differential evolution with adaptive mechanism of population size according to current population diversity. Swarm Evol. Comput. 2019, 50, 100519.

- Xia, X.; Gui, L.; Zhang, Y.; Xu, X.; Yu, F.; Wu, H.; Wei, B.; He, G.; Li, Y.; Li, K. A fitness-based adaptive differential evolution algorithm. Inf. Sci. 2021, 549, 116–141.

- Zeng, Z.; Zhang, M.; Zhang, H.; Hong, Z. Improved differential evolution algorithm based on the sawtooth-linear population size adaptive method. Inf. Sci. 2022, 608, 1045–1071.

- Awad, N.; Ali, M.; Liang, J.; Qu, B.; Suganthan, P. Problem definitions and evaluation criteria for the CEC 2017 special session and competition on single objective bound constrained real-parameter numerical optimization. In Technical Report; Nanyang Technological University: Singapore, 2016; pp. 1–34.

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

- Lynn, N.; Suganthan, P.N. Ensemble particle swarm optimizer. Appl. Soft Comput. 2017, 55, 533–548.

- Meng, Z.; Pan, J.S. Monkey king evolution: A new memetic evolutionary algorithm and its application in vehicle fuel consumption optimization. Knowl.-Based Syst. 2016, 97, 144–157.

- Wang, Y.; Yu, Y.; Gao, S.; Pan, H.; Yang, G. A hierarchical gravitational search algorithm with an effective gravitational constant. Swarm Evol. Comput. 2019, 46, 118–139.

- Chen, C.; Wang, P.; Dong, H.; Wang, X. Hierarchical learning water cycle algorithm. Appl. Soft Comput. 2020, 86, 105935.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Arora, J. Introduction to Optimum Design; Elsevier: Amsterdam, The Netherlands, 2012.

- Bayzidi, H.; Talatahari, S.; Saraee, M.; Lamarche, C.-P. Social network search for solving engineering optimization problems. Comput. Intell. Neurosci. 2021, 2021, 8548639.

- Das, S.; Suganthan, P.N. Problem definitions and evaluation criteria for CEC 2011 competition on testing evolutionary algorithms on real world optimization problems. Jadavpur Univ. Nanyang Technol. Univ. Kolkata 2010, 341–359.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).