Abstract

In this paper, we first study the relationship between negative concept lattices before and after increasing the granularity sizes of attributes. To this end, negative concepts and covering relations are each classified into three types, and necessary and sufficient conditions for distinguishing these types of negative concepts and covering relations are given. Based on this analysis, an algorithm for updating negative concept lattices after the increase is proposed. Finally, experimental results demonstrate that our algorithm performs significantly better than the direct construction algorithm.

Keywords:

concept analysis; negative concept lattices; update of negative concept lattices; increase of the granularity sizes of attributes

MSC:

06C15; 68T30

1. Introduction

Formal concept analysis (FCA), proposed by Wille [1], has been widely applied in knowledge discovery [2,3,4,5,6]. In FCA, formal contexts, formal concepts, and concept lattices are the three cornerstones [7]. To deal with various kinds of data, formal contexts have been extended to fuzzy contexts [8,9,10,11,12,13,14,15,16], decision contexts, incomplete contexts [17,18,19,20], multi-scale contexts [21,22,23,24], and triadic contexts [25]. Most studies on FCA concentrate on the following topics: concept lattice construction [26,27,28], knowledge reduction [29,30], rule acquisition [19,31,32,33,34,35,36], three-way FCA [37,38,39,40,41,42,43,44], and concept learning [45,46].

In classic FCA, attention is paid only to positive attributes, while negative attributes are neglected. In fact, positive and negative attributes are of equal importance in many fields. Qi et al. [37] proposed negative operators: for a given set of objects, they yield the attributes owned by none of those objects, and for a given set of attributes, they yield the objects having none of those attributes. Based on negative operators, negative concepts and negative concept lattices are defined.

In classic contexts, an attribute is either owned or not owned by an object; that is, the relation between objects and attributes is a binary relation. However, in real data, attributes can be many-valued, and such many-valued attributes are called general attributes. A data table with general attributes needs to be transformed into a one-valued context by the scaling approach [7], which replaces each general attribute with a sequence of one-valued attributes representing the values of the general attribute at certain granularity sizes. For a general attribute, appropriate granularity sizes should be chosen according to the specific requirement. Since requirements differ, changing the granularity sizes of general attributes is common.

Reconstructing a classic or negative concept lattice from scratch every time the granularity sizes of the attributes change is computationally expensive and time-consuming. To avoid such reconstruction, it is important to study how to update classic or negative concept lattices when the granularity sizes of attributes change. Many researchers [8,47,48,49] have investigated how to update classic concept lattices when the granularity sizes of attributes change. However, little work has been done on updating negative concept lattices in this setting. This paper focuses on how to update negative concept lattices when the granularity sizes of the attributes increase.

The rest of this paper is structured as follows. Section 2 recalls the basic knowledge about formal concept analysis. Section 3 studies the relationship between negative concept lattices (negative concepts and covering relations) before and after increasing the granularity sizes of attributes. Section 4 develops a new algorithm (named NCL-Fold) to update negative concept lattices after the increase. Section 5 presents comparison experiments that verify the effectiveness of our algorithm. Section 6 concludes this paper.

2. Preliminaries

In this section, we present the related notions and propositions in FCA. A detailed description of them can be found in [1,37,47]. We write $2^{U}$ to denote the power set of a set $U$.

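A formal context is a triple $(G, M, I)$, where $G$ is a set of objects, $M$ is a set of attributes, and $I \subseteq G \times M$ is a binary relation between $G$ and $M$. Here, $(x, m) \in I$ (or $xIm$) denotes that the object $x$ has the attribute $m$, while $(x, m) \notin I$ (or $x\tilde{I}m$, with $\tilde{I} = (G \times M) \setminus I$) denotes that the object $x$ does not have the attribute $m$, where $x \in G$ and $m \in M$.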

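Let $(G, M, I)$ be a formal context. For $X \subseteq G$ and $B \subseteq M$, the positive derivative operator $*$ and the negative derivative operator $\bar{*}$ are defined as follows:

$$X^{*} = \{m \in M \mid \forall x \in X,\ (x, m) \in I\}, \qquad B^{*} = \{x \in G \mid \forall m \in B,\ (x, m) \in I\};$$

$$X^{\bar{*}} = \{m \in M \mid \forall x \in X,\ (x, m) \notin I\}, \qquad B^{\bar{*}} = \{x \in G \mid \forall m \in B,\ (x, m) \notin I\}.$$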

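These operators have some useful properties. Take the negative derivative operator as an example. For $X, X_1, X_2 \subseteq G$ and $B, B_1, B_2 \subseteq M$:

(1) $X_1 \subseteq X_2 \Rightarrow X_2^{\bar{*}} \subseteq X_1^{\bar{*}}$ and $B_1 \subseteq B_2 \Rightarrow B_2^{\bar{*}} \subseteq B_1^{\bar{*}}$;

(2) $X \subseteq X^{\bar{*}\bar{*}}$ and $B \subseteq B^{\bar{*}\bar{*}}$;

(3) $X^{\bar{*}} = X^{\bar{*}\bar{*}\bar{*}}$ and $B^{\bar{*}} = B^{\bar{*}\bar{*}\bar{*}}$;

(4) $X \subseteq B^{\bar{*}} \Leftrightarrow B \subseteq X^{\bar{*}}$;

(5) $(X_1 \cup X_2)^{\bar{*}} = X_1^{\bar{*}} \cap X_2^{\bar{*}}$ and $(B_1 \cup B_2)^{\bar{*}} = B_1^{\bar{*}} \cap B_2^{\bar{*}}$;

(6) $(X_1 \cap X_2)^{\bar{*}} \supseteq X_1^{\bar{*}} \cup X_2^{\bar{*}}$ and $(B_1 \cap B_2)^{\bar{*}} \supseteq B_1^{\bar{*}} \cup B_2^{\bar{*}}$.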
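To make the two operators concrete, the following Python sketch evaluates them on a small hypothetical context (four objects and attributes a, b, c, chosen for illustration and not taken from this paper) and checks properties (1)–(6) of the negative operator by brute force. The function names `up_pos`, `down_pos`, `up_neg`, and `down_neg` are illustrative. Only the object-side halves of properties (1), (2), (3), (5), and (6) are checked, since the attribute-side halves are symmetric.

```python
from itertools import combinations

# Hypothetical toy context (not from the paper): object -> attributes it has.
CONTEXT = {1: {"a", "b"}, 2: {"b", "c"}, 3: {"a"}, 4: {"c"}}
G = set(CONTEXT)
M = {"a", "b", "c"}


def up_pos(X):
    """X^*: attributes shared by every object in X."""
    return {m for m in M if all(m in CONTEXT[x] for x in X)}


def down_pos(B):
    """B^*: objects having every attribute in B."""
    return {x for x in G if set(B) <= CONTEXT[x]}


def up_neg(X):
    """Negative operator on objects: attributes owned by no object in X."""
    return {m for m in M if all(m not in CONTEXT[x] for x in X)}


def down_neg(B):
    """Negative operator on attributes: objects owning none of the attributes in B."""
    return {x for x in G if not (set(B) & CONTEXT[x])}


def subsets(S):
    """All subsets of S, as Python sets."""
    items = sorted(S)
    return [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def check_negative_properties():
    """Brute-force check of properties (1)-(6) of the negative operator."""
    for X1 in subsets(G):
        assert X1 <= down_neg(up_neg(X1))                      # (2)
        assert up_neg(X1) == up_neg(down_neg(up_neg(X1)))      # (3)
        for B in subsets(M):
            assert (X1 <= down_neg(B)) == (B <= up_neg(X1))    # (4)
        for X2 in subsets(G):
            if X1 <= X2:
                assert up_neg(X2) <= up_neg(X1)                # (1)
            assert up_neg(X1 | X2) == up_neg(X1) & up_neg(X2)  # (5)
            assert up_neg(X1 & X2) >= up_neg(X1) | up_neg(X2)  # (6)
    return True


print(up_neg({1, 3}), down_neg({"a"}))  # {'c'} {2, 4}
```

Note that the empty set of objects is mapped to all of $M$ by the negative operator, because the condition "owned by no object in X" holds vacuously.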

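Using these derivative operators, formal concepts and negative concepts are defined. For $X \subseteq G$ and $B \subseteq M$:

(1) A pair $(X, B)$ is a formal concept iff $X^{*} = B$ and $B^{*} = X$;

(2) A pair $(X, B)$ is a negative concept (N-concept) iff $X^{\bar{*}} = B$ and $B^{\bar{*}} = X$.

In both cases, $X$ is called the extent and $B$ the intent of $(X, B)$.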

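The partial orders on formal concepts and on N-concepts are defined in the same way. For formal concepts $(X_1, B_1)$ and $(X_2, B_2)$ of the formal context $(G, M, I)$, and likewise for N-concepts $(X_1, B_1)$ and $(X_2, B_2)$ of $(G, M, I)$:

$$(X_1, B_1) \leqslant (X_2, B_2) \iff X_1 \subseteq X_2 \iff B_2 \subseteq B_1.$$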

All formal concepts and all N-concepts generated from the formal context $(G, M, I)$ form the formal concept lattice and the N-concept lattice, respectively, under the above partial orders. They are denoted by $\mathrm{L}(G, M, I)$ and $\mathrm{NL}(G, M, I)$, respectively.

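On the basis of the partial order $\leqslant$, the covering relation $\prec$ is defined. For formal concepts (or N-concepts) $(X_1, B_1)$ and $(X_2, B_2)$ of $(G, M, I)$:

$$(X_1, B_1) \prec (X_2, B_2) \iff (X_1, B_1) < (X_2, B_2) \text{ and there is no } (X_3, B_3) \text{ with } (X_1, B_1) < (X_3, B_3) < (X_2, B_2),$$

where $<$ denotes $\leqslant$ together with $\neq$, and $(X_3, B_3)$ ranges over formal concepts (or N-concepts, respectively).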

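On the basis of the covering relation $\prec$, lower neighbors (or children) and upper neighbors (or parents) are defined. For formal concepts (or N-concepts) $(X_1, B_1)$ and $(X_2, B_2)$ of $(G, M, I)$:

(1) If the formal concepts satisfy $(X_1, B_1) \prec (X_2, B_2)$, then $(X_1, B_1)$ is called a lower neighbor (or a child) of $(X_2, B_2)$, and $(X_2, B_2)$ is called an upper neighbor (or a parent) of $(X_1, B_1)$;

(2) If the N-concepts satisfy $(X_1, B_1) \prec (X_2, B_2)$, then $(X_1, B_1)$ is called a lower neighbor (or a child) of $(X_2, B_2)$, and $(X_2, B_2)$ is called an upper neighbor (or a parent) of $(X_1, B_1)$.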
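For small contexts, all N-concepts and their covering relation can be listed by brute force, which is a convenient way to check examples by hand. The sketch below is an illustration only, not the construction method used in this paper: it closes every subset of objects under the two negative operators to obtain the N-concepts, and then keeps the (child, parent) pairs with no N-concept strictly in between. It assumes a hypothetical four-object context with attributes a, b, c; the function names are illustrative.

```python
from itertools import combinations

# Hypothetical toy context (not from the paper): object -> attributes it has.
CONTEXT = {1: {"a", "b"}, 2: {"b", "c"}, 3: {"a"}, 4: {"c"}}
M = frozenset({"a", "b", "c"})


def up_neg(X):
    """Attributes owned by no object in X."""
    return frozenset(m for m in M if all(m not in CONTEXT[x] for x in X))


def down_neg(B):
    """Objects owning none of the attributes in B."""
    return frozenset(x for x in CONTEXT if not (B & CONTEXT[x]))


def n_concepts():
    """All N-concepts (extent, intent), obtained by closing every subset of objects."""
    objs = sorted(CONTEXT)
    concepts = set()
    for r in range(len(objs) + 1):
        for X in combinations(objs, r):
            B = up_neg(X)
            concepts.add((down_neg(B), B))
    return concepts


def covering(concepts):
    """Pairs (child, parent): child < parent with no N-concept strictly in between."""
    return {
        (c, p)
        for c in concepts
        for p in concepts
        if c[0] < p[0] and not any(c[0] < q[0] < p[0] for q in concepts)
    }


def parents(concept, cover):
    """Upper neighbors of a given N-concept."""
    return {p for (c, p) in cover if c == concept}


concepts = n_concepts()
cover = covering(concepts)
print(len(concepts), len(cover))  # 7 9
```

Comparing extents is enough here, because for N-concepts $X_1 \subseteq X_2$ holds exactly when $B_2 \subseteq B_1$.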

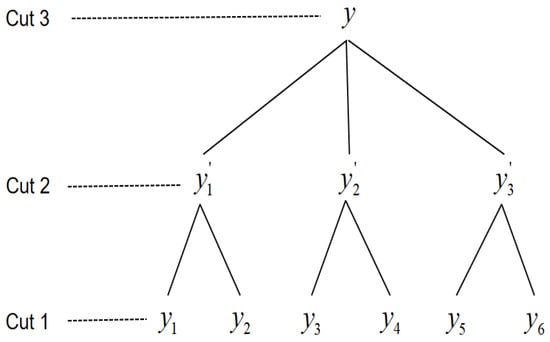

In addition, the notions of a granularity tree, cuts, and increasing the granularity sizes are given. A granularity tree (g-tree) of attribute $m$ is a rooted tree in which each node is labeled with a unique attribute name and, for any node $v$ with children $v_1, v_2, \ldots, v_n$, the set $\{v_1, v_2, \ldots, v_n\}$ is a partition of $v$. For a set $C$ of nodes in the g-tree of attribute $m$, if, for each leaf node $v_l$, exactly one node of $C$ lies on the path from the root $m$ to $v_l$, then $C$ is called a cut (at certain granularity sizes) of the g-tree. For two cuts $C_1$ and $C_2$ of a given g-tree, if, for any $v_1 \in C_1$, there exists $v_2 \in C_2$ such that $v_1 \subseteq v_2$, then $C_1$ is called a refinement of $C_2$, denoted by $C_1 \preceq C_2$. Increasing the granularity sizes of an attribute $m$ replaces the existing finer cut ($C_1$) of $m$ with a coarser cut ($C_2$) of $m$, where $C_1$ and $C_2$ are two different cuts in the g-tree of $m$ and $C_1 \preceq C_2$.
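The g-tree notions can be checked mechanically. The following sketch assumes a hypothetical g-tree for an attribute y with root y, inner nodes y1 and y2, and leaves y11, y12, y21, y22 (it is not the g-tree in Figure 1). It tests whether a node set is a cut, enumerates all cuts, and tests whether replacing one cut by another increases the granularity sizes. A node is treated as contained in another node when it equals that node or is one of its descendants.

```python
from itertools import product

# Hypothetical g-tree of an attribute y (NOT the g-tree of Figure 1): node -> children.
CHILDREN = {"y": ["y1", "y2"], "y1": ["y11", "y12"], "y2": ["y21", "y22"]}
ROOT = "y"


def leaves(v=ROOT):
    """Leaf nodes below (or equal to) v."""
    kids = CHILDREN.get(v, [])
    return [v] if not kids else [leaf for k in kids for leaf in leaves(k)]


def path_to(node, v=ROOT):
    """Nodes on the path from v down to node, or None if node is not below v."""
    if v == node:
        return [v]
    for k in CHILDREN.get(v, []):
        p = path_to(node, k)
        if p is not None:
            return [v] + p
    return None


def is_cut(nodes):
    """Every root-to-leaf path contains exactly one node of the set."""
    return all(len(set(path_to(leaf)) & set(nodes)) == 1 for leaf in leaves())


def all_cuts(v=ROOT):
    """Either keep v itself, or combine one cut from each child subtree."""
    cuts = [frozenset({v})]
    kids = CHILDREN.get(v, [])
    if kids:
        for combo in product(*(all_cuts(k) for k in kids)):
            cuts.append(frozenset().union(*combo))
    return cuts


def is_refinement(c1, c2):
    """C1 refines C2: each node of C1 equals or lies below some node of C2."""
    return all(any(v2 in path_to(v1) for v2 in c2) for v1 in c1)


def is_increase(c1, c2):
    """Replacing C1 by C2 increases the granularity sizes iff C1 != C2 and C1 refines C2."""
    return set(c1) != set(c2) and is_refinement(c1, c2)


print(len(all_cuts()))  # 5
```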

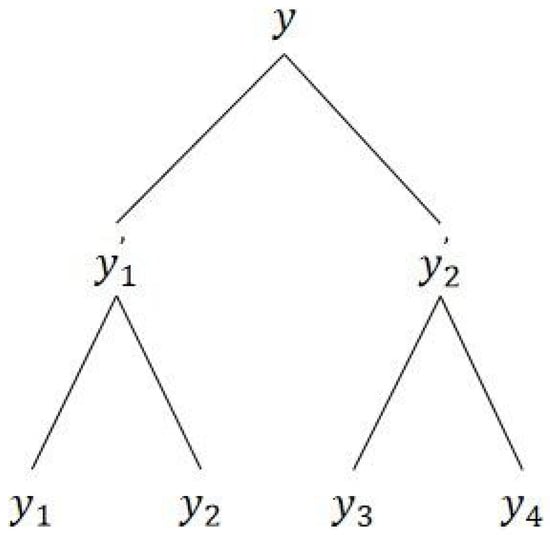

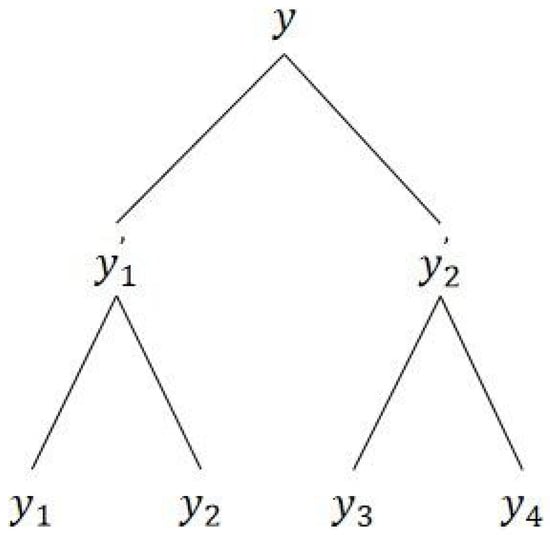

Example 1.

Table 1 depicts a context where a, b, and c are three one-valued attributes and y is a many-valued attribute. The g-tree of attribute y is displayed in Figure 1; it contains five cuts. Table 1 uses a finer cut of y. Replacing this finer cut with a coarser cut, which increases the granularity sizes of y, transforms the context in Table 1 into the context in Table 2.

Table 1.

The context .

Figure 1.

The g-tree for attribute y in Table 1.

Table 2.

The context .

For a formal context , before and after increasing the granularity sizes of the attributes, the negative derivative operators, the N-concept lattices, the subconcept–superconcept relations, and the covering relations are hereafter denoted by , , , , and , , , , respectively.

For the finer cut $C_1$ and the coarser cut $C_2$ of attribute $m$, there exists an attribute-value-transformation function $f: C_1 \to C_2$ such that $v_1 \subseteq f(v_1)$ for every $v_1 \in C_1$. On the basis of the function $f$, the following mappings are defined:

such that ;

such that ;

such that ;

such that ;

such that .
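The attribute-value-transformation function can be sketched as follows. The sketch assumes a hypothetical g-tree stored as child-to-parent links and a one-valued context that has already been scaled at the finer cut. Each node of the finer cut is sent to the unique node of the coarser cut lying on its path to the root, and every object's attributes are rewritten accordingly. The function names are hypothetical, and the mappings listed above are not reproduced here.

```python
# Hypothetical g-tree of y (NOT Figure 1), stored as child -> parent links.
PARENT = {"y1": "y", "y2": "y", "y11": "y1", "y12": "y1", "y21": "y2", "y22": "y2"}


def ancestors_or_self(v):
    """v followed by all of its ancestors up to the root."""
    chain = [v]
    while v in PARENT:
        v = PARENT[v]
        chain.append(v)
    return chain


def value_transformation(c1, c2):
    """f: C1 -> C2 sending each finer node v1 to the unique node of C2 that contains it."""
    f = {}
    for v1 in c1:
        images = [v2 for v2 in ancestors_or_self(v1) if v2 in c2]
        if len(images) != 1:
            raise ValueError(f"{v1!r} has no unique image; C1 must refine C2")
        f[v1] = images[0]
    return f


def increase_granularity(context, c1, c2):
    """Map every C1 attribute of every object through f; other attributes are kept."""
    f = value_transformation(c1, c2)
    return {x: {f.get(m, m) for m in attrs} for x, attrs in context.items()}


# Hypothetical context scaled at the leaf cut of y (a and b are one-valued attributes).
FINE = {1: {"a", "y11"}, 2: {"a", "b", "y12"}, 3: {"b", "y21"}, 4: {"y22"}}
COARSE = increase_granularity(FINE, {"y11", "y12", "y21", "y22"}, {"y1", "y21", "y22"})
```

Raising an error when a node has no image makes the requirement that the old cut refine the new one explicit.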

3. Relationship between N-Concept Lattices before and after Increasing the Granularity Sizes of Attributes

In this section, we discuss the relationship between N-concept lattices before and after increasing the granularity sizes of the attributes. The following theorems are useful for this discussion.

Theorem 1.

Let and be the N-concept lattices of a given formal context before and after increasing the granularity sizes of an attribute m, respectively, and and be the cuts of the attribute m before and after the increase. If , then, for each , is an extent in .

Proof.

It is obvious that increasing the granularity sizes of an attribute $m$ is equivalent to adding the attributes in $C_2 \setminus C_1$ and removing the attributes in $C_1 \setminus C_2$. In addition, for , we have . Thus, for each , must be an extent in if X is an extent in . □

Theorem 2.

Let and be the N-concept lattices of a given formal context before and after increasing the granularity sizes of an attribute m, respectively. If is an N-concept in , then X is an extent in .

Proof.

We discuss two situations for any :

(i) Assume . Obviously, holds. Thus, we can obtain that X is an extent in .

(ii) Assume . Since holds for , we can obtain , where . Hence, . Consequently, X is an extent in .

Finally, we can conclude that X is an extent in for any .

□

Based on Theorem 2, we can easily conclude the following theorem.

Theorem 3.

Let and be the N-concept lattices of a given formal context before and after increasing the granularity sizes of an attribute m, respectively. There do not exist new N-concepts in .
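Theorem 3 can be spot-checked on a small example by computing all N-concept extents before and after an increase and testing inclusion. The sketch below assumes a hypothetical context with one-valued attributes a and b and a many-valued attribute y whose objects take leaf values of a two-level g-tree; the finer cut is the set of leaves and the coarser cut merges y11 and y12 into y1. It is a brute-force check on one instance, not a proof, and it is only practical for tiny contexts.

```python
from itertools import combinations

# Hypothetical example (not from the paper): g-tree of y as child -> parent links, and for
# each object its one-valued attributes plus its leaf value of y.
PARENT = {"y1": "y", "y2": "y", "y11": "y1", "y12": "y1", "y21": "y2", "y22": "y2"}
OBJECTS = {1: ({"a"}, "y11"), 2: ({"a", "b"}, "y12"), 3: ({"b"}, "y21"), 4: (set(), "y22")}
ONE_VALUED = {"a", "b"}


def ancestors_or_self(v):
    chain = {v}
    while v in PARENT:
        v = PARENT[v]
        chain.add(v)
    return chain


def n_concepts(cut):
    """Map extent -> intent for every N-concept of the context obtained by scaling y at `cut`.

    An object owns a node v of the cut iff its leaf value of y lies under v.
    """
    ctx = {x: attrs | (ancestors_or_self(leaf) & cut) for x, (attrs, leaf) in OBJECTS.items()}
    M = ONE_VALUED | cut
    result = {}
    for r in range(len(ctx) + 1):
        for X in combinations(sorted(ctx), r):
            B = frozenset(m for m in M if all(m not in ctx[x] for x in X))
            result[frozenset(x for x in ctx if not (B & ctx[x]))] = B
    return result


before = n_concepts({"y11", "y12", "y21", "y22"})  # finer cut (hypothetical)
after = n_concepts({"y1", "y21", "y22"})           # coarser cut (hypothetical)
# Theorem 3 on this instance: every extent after the increase was already an extent before.
print(len(before), len(after), set(after) <= set(before))  # 16 10 True
```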

3.1. Relationship between N-Concepts before and after Increasing the Granularity Sizes of Attributes

We can describe N-concepts in N-concept lattices before and after increasing the granularity sizes of attributes in terms of the following definition.

Definition 1.

Let and be the N-concept lattices of a given formal context before and after increasing the granularity sizes of an attribute m, respectively, and and be the cuts of the attribute m before and after the increase, respectively. Then:

(1) If and , is an old N-concept, denoted by ;

(2) If and X is not an extent of any N-concept in , is a deleted N-concept, denoted by ;

(3) If with and , is a tight N-concept, denoted by .
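The three categories can also be assigned by brute force on a toy example, comparing the lattices before and after the increase directly instead of using the conditions of Theorem 4. The sketch follows our reading of the definition above: old means the same pair appears in both lattices, tight means the extent survives with a different intent, and deleted means the extent disappears. It assumes a hypothetical context (one-valued attributes a and b, and a many-valued attribute y scaled at a cut of a two-level g-tree) and identifies each N-concept by its extent.

```python
from collections import Counter
from itertools import combinations

# Hypothetical example (not from the paper): g-tree of y as child -> parent links, and for
# each object its one-valued attributes plus its leaf value of y.
PARENT = {"y1": "y", "y2": "y", "y11": "y1", "y12": "y1", "y21": "y2", "y22": "y2"}
OBJECTS = {1: ({"a"}, "y11"), 2: ({"a", "b"}, "y12"), 3: ({"b"}, "y21"), 4: (set(), "y22")}
ONE_VALUED = {"a", "b"}


def ancestors_or_self(v):
    chain = {v}
    while v in PARENT:
        v = PARENT[v]
        chain.add(v)
    return chain


def n_concepts(cut):
    """Map extent -> intent for every N-concept of the context obtained by scaling y at `cut`."""
    ctx = {x: attrs | (ancestors_or_self(leaf) & cut) for x, (attrs, leaf) in OBJECTS.items()}
    M = ONE_VALUED | cut
    result = {}
    for r in range(len(ctx) + 1):
        for X in combinations(sorted(ctx), r):
            B = frozenset(m for m in M if all(m not in ctx[x] for x in X))
            result[frozenset(x for x in ctx if not (B & ctx[x]))] = B
    return result


def classify(before, after):
    """old: same extent and intent after the increase; tight: same extent, different intent;
    deleted: the extent is no longer an extent after the increase."""
    labels = {}
    for extent, intent in before.items():
        if extent not in after:
            labels[extent] = "deleted"
        elif after[extent] == intent:
            labels[extent] = "old"
        else:
            labels[extent] = "tight"
    return labels


labels = classify(n_concepts({"y11", "y12", "y21", "y22"}), n_concepts({"y1", "y21", "y22"}))
print(sorted(Counter(labels.values()).items()))  # [('deleted', 6), ('old', 4), ('tight', 6)]
```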

Next, we give the necessary and sufficient conditions for each category.

Theorem 4.

For an N-concept , we have:

(1) is an old N-concept if and only if ;

(2) is a deleted N-concept if and only if the following statements are true: (i) ; (ii) there exists at least one N-concept among the parents of in such that satisfies the two conditions: and ;

(3) is a tight N-concept if and only if the following statements are true: (i) ; (ii) there is no N-concept among the parents of in such that satisfies the two conditions: and .

Proof.

(1) (⇒) If is an old N-concept, it is obvious that is an N-concept in and an N-concept in . Next, we prove by contradiction. Assume that . According to the proof of Theorem 2, we can obtain , where . Hence, , which contradicts . Hence, .

(⇐) If with is an N-concept in , and hold. Hence, is an N-concept in and an N-concept in . That is to say, is an old N-concept.

(2) (⇒) First, we prove by contradiction that . Assume that . Because the objects do not change before and after the increase, we can obtain . Furthermore, it follows that X is an extent in , which contradicts . Hence, .

Next, we prove the rest.

Because of , we have and . Since holds for , we can obtain: ; holds when ; holds when . Noting that is a deleted N-concept, it follows that and . Hence, . Therefore, there exists an N-concept such that , and .

Then, we prove that E satisfies the following conditions. Because of and , we have and . That is to say, and .

Finally, there must exist an N-concept such that , and .

(⇐) Assume that and there exists one N-concept with such that and . Obviously, holds. By the analysis in (⇒), it follows that . Since is a parent of and holds, we can conclude that . Thus, X is not an extent in . That is to say, is a deleted N-concept.

(3) This follows directly from (1) and (2).

□

Based on Theorem 4, we show the following definition and remark.

Definition 2.

Let be the N-concept lattice of a given formal context before increasing the granularity sizes of an attribute m, and let be the cut of the attribute m before the increase. For two N-concepts with and with and :

(1) is called a destroyer of , and is called a victim of ;

(2) The N-concept with and is called the terminator of .

Remark 1.

For two N-concepts and in , if is a deleted N-concept and is a destroyer of , we have:

(1) The number of destroyers of is at least one, and the set of all the destroyers is denoted by ;

(2) The number of victims of is at least one, and the set of all the victims of is denoted by ;

(3) The number of terminators of is exactly one, and the only terminator of is denoted by ;

(4) The terminator of is the maximum one among all the destroyers of .

Combining Definition 2 with Theorem 4 and Remark 1, we have the following theorem.

Theorem 5.

For a deleted N-concept , we have:

(1) If is the terminator of , then cannot be a deleted N-concept;

(2) If is a destroyer of and is not the terminator of , then must be a deleted N-concept;

(3) If is a destroyer of and is not a deleted N-concept, then must be the terminator of .

Proof.

(1) Since is the terminator of , we can obtain . Obviously, must be an N-concept in . That is to say, is not a deleted N-concept.

(2) Let be the terminator of . Obviously, . Since is the maximum one among all the destroyers of and with is a destroyer of , we have and . Hence, must be a deleted N-concept and is the terminator of .

(3) This follows from (2) by contraposition: a destroyer that is not the terminator must be deleted, so a destroyer that is not deleted must be the terminator.

□

3.2. Relationship between the Covering Relations before and after Increasing the Granularity Sizes of Attributes

We can describe the covering relations in N-concept lattices before and after increasing the granularity sizes of the attributes in terms of the following definition.

Definition 3.

Let and be the N-concept lattices of a given formal context before and after increasing the granularity sizes of an attribute m, respectively. Then:

(1) If and hold, (or ) is an old covering relation;

(2) If and hold, is a new covering relation;

(3) If holds and at least one of and is a deleted N-concept, is a deleted covering relation.
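The three kinds of covering relations can be counted by brute force on a toy example. The following sketch assumes a hypothetical context (one-valued attributes a and b, and a many-valued attribute y whose leaf cut is coarsened by merging y11 and y12 into y1) and identifies each N-concept with its extent, so that a tight N-concept, whose intent changes, counts as the same node before and after the increase. It is an illustration of the definition, not of the NCL-Fold update procedure.

```python
from itertools import combinations

# Hypothetical example (not from the paper).
PARENT = {"y1": "y", "y2": "y", "y11": "y1", "y12": "y1", "y21": "y2", "y22": "y2"}  # g-tree of y
OBJECTS = {1: ({"a"}, "y11"), 2: ({"a", "b"}, "y12"), 3: ({"b"}, "y21"), 4: (set(), "y22")}
ONE_VALUED = {"a", "b"}


def ancestors_or_self(v):
    chain = {v}
    while v in PARENT:
        v = PARENT[v]
        chain.add(v)
    return chain


def extents(cut):
    """All N-concept extents of the context obtained by scaling y at `cut`."""
    ctx = {x: attrs | (ancestors_or_self(leaf) & cut) for x, (attrs, leaf) in OBJECTS.items()}
    M = ONE_VALUED | cut
    result = set()
    for r in range(len(ctx) + 1):
        for X in combinations(sorted(ctx), r):
            B = {m for m in M if all(m not in ctx[x] for x in X)}
            result.add(frozenset(x for x in ctx if not (B & ctx[x])))
    return result


def covers(exts):
    """Covering pairs (lower, upper): proper inclusion with no extent strictly between."""
    return {(lo, up) for lo in exts for up in exts
            if lo < up and not any(lo < mid < up for mid in exts)}


E1 = extents({"y11", "y12", "y21", "y22"})  # before the increase (finer cut)
E2 = extents({"y1", "y21", "y22"})          # after the increase (coarser cut)
cov1, cov2 = covers(E1), covers(E2)
old = cov1 & cov2
new = cov2 - cov1
deleted = {(lo, up) for (lo, up) in cov1 if lo not in E2 or up not in E2}
print(len(old), len(new), len(deleted))  # 13 2 19
```

In this instance every covering pair between two non-deleted N-concepts survives, which is consistent with the fact that no new N-concepts appear after the increase.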

For and with , two mappings are given by

Obviously, is not a bijection, but is a bijection.

Let with . If is the terminator of a certain N-concept, then we give the following remarks:

The covering relations among transformed concepts are listed in the following two theorems.

Theorem 6.

For , if is not the terminator of any N-concept, then .

Proof.

First, we prove by contradiction that is not a deleted N-concept if is not the terminator of any N-concept and is a child of in . Assume that is a deleted N-concept, which implies . Obviously, there must exist an N-concept in such that , and is the terminator of . According to the definition of terminators, we can obtain that and . Because with is not the terminator of any N-concept and is a child of in , which implies , , we have . Hence, , which means . That is to say, cannot be a child of in , which contradicts the fact that is a child of in . Thus, is not a deleted N-concept. Therefore, and hold. In addition, since there do not exist new N-concepts in , is a child of in . In summary, holds. □

Theorem 7.

For , if is the terminator of a certain N-concept, then .

Proof.

Since there do not exist new N-concepts in , it is obvious that .

Let be an N-concept . It is easily seen that there must exist such that , which implies . Hence, holds.

Let be an N-concept . It is easily seen that there must exist two N-concepts and in such that , and . Hence, according to the definition of terminators, we have , which implies that is an N-concept in or . That is to say, holds. Thus, we can obtain and .

In summary, . Finally, we can easily conclude that . □

Theorem 8.

Let and be the N-concept lattices of a given formal context before and after increasing the granularity sizes of an attribute m, respectively. For two non-deleted N-concepts and in , the following statements hold:

(1) is an old covering relation if and only if one of the following statements is true: (i) is not the terminator of any N-concept and is an N-concept in ; (ii) is the terminator of a certain N-concept and is an N-concept in ;

(2) is a new covering relation if and only if is the terminator of a certain N-concept and is an N-concept in .

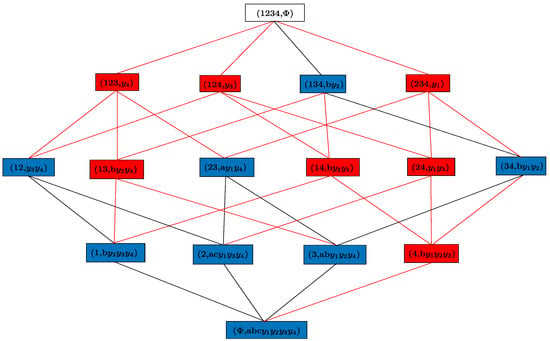

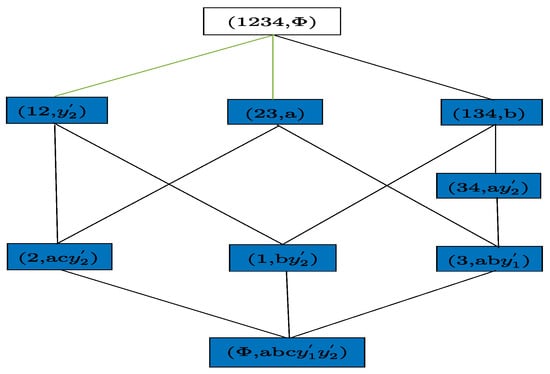

Example 2.

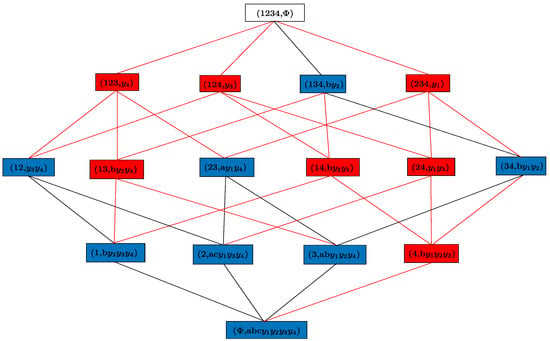

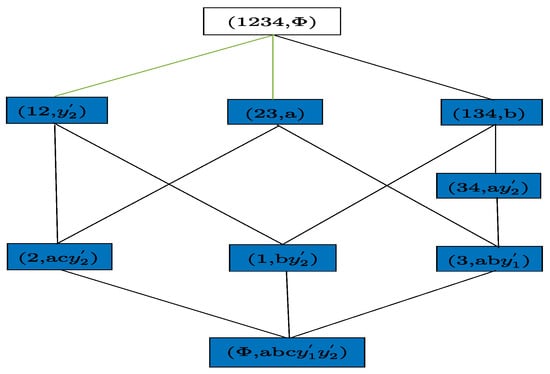

Let us continue with Example 1. Two formal contexts and (i.e., Table 3 and Table 4) are obtained from and by the scaling approach. The N-concept lattices and of and are displayed in Figure 2 and Figure 3, respectively. In Figure 2 and Figure 3, old N-concepts, deleted N-concepts, and tight N-concepts are the white, red, and blue nodes, respectively, and old covering relations, deleted covering relations, and new covering relations are the black, red, and green lines, respectively.

Table 3.

The formal context .

Table 4.

The formal context .

Figure 2.

The N-concept lattice of Table 3.

Figure 3.

The N-concept lattice of Table 4.

4. The NCL-Fold Algorithm

In this section, based on the relationship between N-concept lattices before and after the increase discussed in Section 3, we propose a new algorithm (the NCL-Fold algorithm, i.e., Algorithm 1) for increasing the granularity sizes of an attribute m.

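| Algorithm 1 procedure . | |
|---|---|
| 1: | find the top N-concept of the input N-concept lattice |
| 2: | invoke Algorithm 2 on the top N-concept |
| 3: | return the updated N-concept lattice |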

The procedure accepts three arguments: the cuts of attribute m before and after increasing its granularity sizes, and the N-concept lattice of a formal context before the increase. It updates this lattice to the N-concept lattice of the formal context after the increase.

The procedure first finds the top N-concept in (Line 1). Then, it invokes Algorithm 2 to process every N-concept in (Line 2) and returns the updated (Line 3).

| Algorithm 2 procedure . | |
|---|---|
| 1: | for each child of |
| 2: | if has not been processed |
| 3: | |
| 4: | end if |
| 5: | end for |
| 6: | according to Theorem 4 and Definition 1, classify and modify the intent of |
| 7: | if is a deleted N-concept |
| 8: | mark as a destroyer and , for every parent with and of |
| 9: | end if |
| 10: | mark as a terminator if is not a deleted N-concept and is a destroyer |
| 11: | according to Definition 3 and Theorem 8, classify the covering relations relating to , set the new covering relations, and remove the deleted covering relations |
| 12: | |
| 13: | mark as processed |
| 14: | return |

The procedure accepts four arguments: an N-concept , , , and . The procedure traverses all N-concepts in recursively.

If a child of has not been processed, the algorithm recursively invokes using the child , , , and as arguments (Lines 1–5). Then, the N-concept is classified according to Theorem 4, and the intent of is modified according to Definition 1 (Line 6). In addition, for every parent of , if satisfies the conditions of a destroyer, is marked as a destroyer and is updated by adding and to (Lines 7–9). If satisfies the conditions of a terminator, is marked as a terminator (Line 10). Next, according to Definition 3 and Theorem 8, the covering relations relating to are updated (Line 11). Then, should be deleted from (Line 12). Finally, is marked as processed, and the updated is returned (Lines 13–14).
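The traversal order of Algorithm 2 (recursively handling unprocessed children, then the concept itself, then marking it as processed) can be sketched as a post-order depth-first search. In the sketch below, `process` is a hypothetical callback standing in for Lines 6–12, and the lattice is a hypothetical one given by its lower neighbors, with each node labeled by its extent written as a string ("0" denotes the empty extent). The sketch only shows that every N-concept is processed exactly once and after all of its children; it does not implement the classification itself.

```python
def traverse_post_order(top, children, process):
    """Visit every concept reachable from `top`, handling all children before the parent.

    `children` maps a concept label to its lower neighbors; `process` is a placeholder
    for Lines 6-12 of Algorithm 2 (hypothetical callback, not the paper's code).
    """
    processed = set()

    def visit(concept):
        for child in children.get(concept, []):  # Lines 1-5
            if child not in processed:
                visit(child)
        process(concept)                         # Lines 6-12 (placeholder)
        processed.add(concept)                   # Line 13

    visit(top)
    return processed


# Hypothetical lattice given by lower neighbors.
CHILDREN = {
    "1234": ["24", "34", "13"],
    "24": ["4"], "34": ["4", "3"], "13": ["3"],
    "4": ["0"], "3": ["0"],
}
order = []
traverse_post_order("1234", CHILDREN, order.append)
print(order)  # ['0', '4', '24', '3', '34', '13', '1234']
```

Because a lattice is acyclic, a concept shared by several parents is fully processed before the second parent reaches it, so the `processed` check is enough to avoid repeated work.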

Now, we analyze the time complexity of Algorithms 1 and 2.

Firstly, we analyze the time complexity of Algorithm 2. The time complexity of Lines 6–10 is , where is the maximum number of parents of N-concepts in and is the number of attributes. The time complexity of Lines 11–12 is , where and is the number of attributes. Consequently, the time complexity of Algorithm 2 is , where is the number of N-concepts in .

Secondly, the time complexity of Algorithm 1 is also , since it only locates the top N-concept and then invokes Algorithm 2.

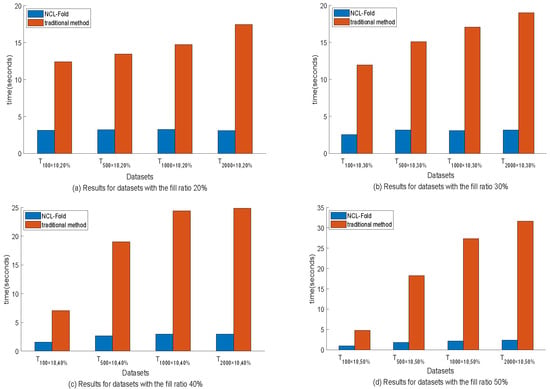

5. Experimental Evaluation

In this section, we compare our dynamic updating algorithm NCL-Fold with the traditional method, which directly constructs N-concept lattices from datasets using the idea of FastAddIntent [27]. The experiments were conducted in Matlab (Version R2018b) on a server with a 64-bit operating system, an Intel(R) Xeon(R) Silver 4210R CPU (2.40 GHz), and 128 GB of RAM.

In the experiments, we used randomly generated datasets with different fill ratios. Detailed information about the sixteen datasets is shown in Table 5. In these datasets, every object owns the same number of attributes, determined by the fill ratio, and the many-valued attribute y has the same g-tree, shown in Figure 4, with Cut 1 in Figure 4 as its domain. Figure 5 depicts the running time of NCL-Fold and the traditional method on the sixteen random datasets when the granularity sizes of y are increased from Cut 1 to Cut 2.
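The paper does not give its data generator. The following sketch shows one way to produce datasets of the kind described, assuming that the fill ratio refers to the one-valued attributes only and that each object's value of y is drawn uniformly from the nodes of Cut 1. All names and parameters are hypothetical, and the sketch is not intended to reproduce the datasets in Table 5.

```python
import random


def random_dataset(n_objects, n_attributes, fill_ratio, cut1_values, seed=None):
    """Hypothetical generator: each object owns exactly round(fill_ratio * n_attributes)
    one-valued attributes, plus one value of y drawn uniformly from Cut 1."""
    rng = random.Random(seed)
    per_object = round(fill_ratio * n_attributes)
    attributes = [f"m{i}" for i in range(n_attributes)]
    return {
        x: (set(rng.sample(attributes, per_object)), rng.choice(cut1_values))
        for x in range(n_objects)
    }


data = random_dataset(100, 20, 0.25, ["y11", "y12", "y21", "y22"], seed=1)
```

Passing a fixed `seed` makes a generated dataset reproducible, which is useful when timing two algorithms on the same input.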

Table 5.

The detailed information about the sixteen random datasets.

Figure 4.

The g-tree for the attribute y.

Figure 5.

The running time of NCL-Fold and traditional method on Datasets 1–10 from Cut 1 to Cut 2.

From Figure 5, we can see that: (i) NCL-Fold significantly outperformed the traditional method in every case; (ii) when the number of attributes, the fill ratio, and the change of granularity sizes were fixed, the performance gap widened as the number of objects increased.

6. Conclusions

In this section, we summarize the main work of this paper and outline future research:

(1) The main work of this paper:

In this paper, we analyzed the relationship between N-concept lattices before and after increasing the granularity sizes of attributes. Furthermore, we proposed a new algorithm (named NCL-Fold) to update and maintain N-concept lattices when the granularity sizes of attributes increase. Finally, we conducted experiments, and the results indicated that NCL-Fold significantly outperforms the direct construction method on randomly generated datasets.

(2) Future research work:

Since changing the granularity sizes of attributes includes both increasing and decreasing them, how to quickly update N-concept lattices when the granularity sizes of the attributes decrease is a topic for future work. In addition, how to use our method to detect appropriate granularity sizes of the attributes deserves further study.

Author Contributions

Conceptualization, J.X.; Methodology, J.X.; Validation, L.Z. and J.Y.; Writing—original draft preparation, J.X.; Writing—review and editing, L.Z. and J.Y.; Visualization, L.Z. and J.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Foundation for Fostering Talents in Kunming University of Science and Technology (No. KKSY201902007).

Data Availability Statement

No publicly available datasets were used in this study; all datasets were randomly generated.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wille, R. Restructuring lattice theory: An approach based on hierarchies of concepts. In Ordered Sets; Rival, I., Ed.; Reidel: Dordrecht, The Netherlands; Boston, MA, USA, 1982; pp. 445–470.

- Aswani Kumar, C.; Srinivas, S. Mining associations in health care data using formal concept analysis and singular value decomposition. J. Biol. Syst. 2010, 18, 787–807.

- Dias, S.; Vieira, N. Concept lattices reduction: Definition, analysis and classification. Expert Syst. Appl. 2015, 42, 7084–7097.

- Kuznetsov, S. Machine learning and formal concept analysis. Lect. Notes Comput. Sci. 2004, 2961, 287–312.

- Poelmans, J.; Ignatov, D.; Kuznetsov, S.; Dedene, G. Formal concept analysis in knowledge processing: A survey on applications. Expert Syst. Appl. 2013, 40, 6538–6560.

- Yang, L.; Xu, Y. Decision making with uncertainty information based on lattice-valued fuzzy concept lattice. J. Univers. Comput. Sci. 2010, 16, 159–177.

- Ganter, B.; Wille, R. Formal Concept Analysis: Mathematical Foundations; Springer: New York, NY, USA, 1999.

- Bělohlávek, R.; Sklenář, V.; Zacpal, J. Crisply generated fuzzy concepts. In Proceedings of Formal Concept Analysis; Springer: Berlin/Heidelberg, Germany, 2005; pp. 269–284.

- Burusco, A.; Fuentes Gonzalez, R. The study of the L-fuzzy concept lattice. Matheware Soft Comput. 1994, 1, 209–218.

- Burusco, A.; Fuentes Gonzalez, R. The study of the interval-valued contexts. Fuzzy Sets Syst. 2001, 121, 439–452.

- Dubois, D.; Prade, H. Possibility theory and formal concept analysis: Characterizing independent sub-contexts. Fuzzy Sets Syst. 2012, 196, 4–16.

- Singh, P.; Aswani Kumar, C. Bipolar fuzzy graph representation of concept lattice. Inf. Sci. 2014, 288, 437–448.

- Singh, P. Processing linked formal fuzzy context using non-commutative composition. Inst. Integr. Omics Appl. Biotechnol. 2016, 7, 21–32.

- Wang, L.; Liu, X. Concept analysis via rough set and AFS algebra. Inf. Sci. 2008, 178, 4125–4137.

- Yao, Y. A comparative study of formal concept analysis and rough set theory in data analysis. In Proceedings of the 4th International Conference on Rough Sets and Current Trends in Computing (RSCTC 2004), Uppsala, Sweden, 1–5 June 2004; pp. 59–68.

- Yao, Y.; Mi, J.; Li, Z.; Xie, B. The construction of fuzzy concept lattices based on (θ, δ)-fuzzy rough approximation operators. Fundam. Informaticae 2011, 111, 33–45.

- Krupka, M.; Laštovička, J. Concept lattices of incomplete data. In Proceedings of the International Conference on Formal Concept Analysis, Leuven, Belgium, 7–10 May 2012; pp. 180–194.

- Li, M.; Wang, G. Approximate concept construction with three-way decisions and attribute reduction in incomplete contexts. Knowl.-Based Syst. 2016, 91, 165–178.

- Li, J.; Mei, C.; Lv, Y. Incomplete decision contexts: Approximate concept construction, rule acquisition and knowledge reduction. Int. J. Approx. Reason. 2013, 54, 149–165.

- Simiński, K. Neuro-rough-fuzzy approach for regression modelling from missing data. Int. J. Appl. Math. Comput. Sci. 2012, 22, 461–476.

- Hao, C.; Fan, M.; Li, J. Optimal scale selecting in multi-scale contexts based on granular scale rules. Pattern Recognit. Artif. Intell. 2016, 29, 272–280. (In Chinese)

- Ma, L.; Mi, J.; Xie, B. Multi-scaled concept lattices based on neighborhood systems. Int. J. Mach. Learn. Cybern. 2017, 8, 149–157.

- Wu, W.; Leung, Y. Theory and applications of granular labeled partitions in multi-scale decision tables. Inf. Sci. 2011, 181, 3878–3897.

- Wu, W.; Leung, Y. Optimal scale selection for multi-scale decision tables. Int. J. Approx. Reason. 2013, 54, 1107–1129.

- Tang, Y.; Fan, M.; Li, J. An information fusion technology for triadic decision contexts. Int. J. Mach. Learn. Cybern. 2016, 7, 13–24.

- Godin, R.; Missaoui, R.; Alaoui, H. Incremental concept formation algorithms based on Galois (concept) lattices. Comput. Intell. 1995, 11, 246–267.

- Zou, L.; Zhang, Z.; Long, J. A fast incremental algorithm for constructing concept lattices. Expert Syst. Appl. 2015, 42, 4474–4481.

- Zou, L.; Zhang, Z.; Long, J. A fast incremental algorithm for deleting objects from a concept lattice. Knowl.-Based Syst. 2015, 89, 411–419.

- Shao, M.; Leung, Y. Relations between granular reduct and dominance reduct in formal contexts. Knowl.-Based Syst. 2014, 65, 1–11.

- Wei, L.; Qi, J.; Zhang, W. Attribute reduction theory of concept lattice based on decision formal contexts. Sci. China Ser. F–Inf. Sci. 2008, 51, 910–923.

- Li, J.; Mei, C.; Lv, Y. Knowledge reduction in decision formal contexts. Knowl.-Based Syst. 2011, 24, 709–715.

- Li, J.; Mei, C.; Lv, Y. Knowledge reduction in real decision formal contexts. Inf. Sci. 2012, 189, 191–207.

- Li, J.; Mei, C.; Aswani Kumar, C.; Zhang, X. On rule acquisition in decision formal contexts. Int. J. Mach. Learn. Cybern. 2013, 4, 721–731.

- Shao, M.; Leung, Y.; Wu, W. Rule acquisition and complexity reduction in formal decision contexts. Int. J. Approx. Reason. 2014, 55, 259–274.

- Wei, L.; Li, T. Rules acquisition in consistent formal decision contexts. In Proceedings of the 11th International Conference on Machine Learning and Cybernetics (ICMLC’12), Xi’an, China, 15–17 July 2012; pp. 801–805.

- Wu, W.; Leung, Y.; Mi, J. Granular computing and knowledge reduction in formal contexts. IEEE Trans. Knowl. Data Eng. 2009, 21, 1461–1474.

- Qi, J.; Wei, L.; Yao, Y. Three-way formal concept analysis. In Proceedings of the 2014 International Conference on Rough Sets and Knowledge Technology, Shanghai, China, 24–26 October 2014; pp. 732–741.

- Qi, J.; Qian, T.; Wei, L. The connections between three-way and classical concept lattices. Knowl.-Based Syst. 2016, 91, 143–151.

- Qian, T.; Wei, L.; Qi, J. Constructing three-way concept lattices based on apposition and subposition of formal contexts. Knowl.-Based Syst. 2017, 116, 39–48.

- Wang, W.; Qi, J. Algorithm for constructing three-way concepts. J. Xidian Univ. 2017, 44, 71–76.

- Yang, S.; Lu, Y.; Jia, X.; Li, W. Constructing three-way concept lattice based on the composite of classical lattices. Int. J. Approx. Reason. 2020, 121, 174–186.

- Yao, Y. Three-way decisions with probabilistic rough sets. Inf. Sci. 2010, 180, 341–353.

- Yao, Y. Three-way decision and granular computing. Int. J. Approx. Reason. 2018, 103, 107–123.

- Yu, H.; Li, Q.; Cai, M. Characteristics of three-way concept lattices and three-way rough concept lattices. Knowl.-Based Syst. 2018, 146, 181–189.

- Li, J.; Xu, W.; Qian, Y. Concept learning via granular computing: A cognitive viewpoint. Inf. Sci. 2015, 298, 447–467.

- Li, J.; Huang, C.; Qi, J.; Qian, Y.; Liu, W. Three-way cognitive concept learning via multi-granularity. Inf. Sci. 2017, 378, 244–263.

- Bělohlávek, R.; De Baets, B.; Konecny, J. Granularity of attributes in formal concept analysis. Inf. Sci. 2014, 260, 149–170.

- Hashemi, R.; Agostino, S.; Westgeest, B.; Talburt, J. Data granulation and formal concept analysis. In Proceedings of the IEEE Annual Meeting of the Fuzzy Information Processing Society (NAFIPS '04), Banff, AB, Canada, 27–30 June 2004; Volume 1, pp. 79–83.

- Zou, L.; Zhang, Z.; Long, J. An efficient algorithm for increasing the granularity levels of attributes in formal concept analysis. Expert Syst. Appl. 2016, 46, 224–235.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).