Abstract

Information geometry concerns the study of a dual structure $(g, \nabla, \nabla^*)$ upon a smooth manifold $\mathrm{M}$. Such a geometry is totally encoded within a potential function usually referred to as a divergence or contrast function of $(g, \nabla, \nabla^*)$. Even though infinitely many divergences induce on $\mathrm{M}$ the same dual structure, when the manifold is dually flat, a canonical divergence is well defined and was originally introduced by Amari and Nagaoka. In this pedagogical paper, we present explicit non-trivial differential geometry-based proofs concerning the canonical divergence for a special type of dually flat manifold represented by an arbitrary 1-dimensional path $\gamma$. Highlighting the geometric structure of such a particular canonical divergence, our study could suggest a way to select a general canonical divergence by using the information from a general dual structure in a minimal way.

Keywords: classical differential geometry; Riemannian geometry; information geometry; divergence functions

MSC: 53Z50

1. Introduction

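Information geometry (IG) has, in recent decades, become a very helpful mathematical tool for several branches of science [1,2,3]. It relies on the study of a smooth manifold $\mathrm{M}$ endowed with a Riemannian metric tensor $g$ and a pair of torsion-free affine connections which are dual to each other and whose average is the Levi–Civita connection [4]. More precisely, the main object of study in IG is a quadruple $(\mathrm{M}, g, \nabla, \nabla^*)$, where $(\mathrm{M}, g)$ is a Riemannian manifold [5] and $\nabla$, $\nabla^*$ are torsion-free linear connections on the tangent bundle $\mathrm{TM}$, such that

$$X\, g(Y, Z) = g(\nabla_X Y, Z) + g(Y, \nabla^*_X Z), \qquad \tfrac{1}{2}\left(\nabla + \nabla^*\right) = \nabla^{\mathrm{LC}} \qquad (1)$$

for all sections $X, Y, Z \in \mathcal{T}(\mathrm{M})$ and where $\nabla^{\mathrm{LC}}$ denotes the Levi–Civita connection of the metric tensor $g$ [6]. Here $\mathcal{T}(\mathrm{M})$ denotes the space of vector fields on $\mathrm{M}$, that is, the smooth sections of the tangent bundle $\mathrm{TM}$. The quadruple $(\mathrm{M}, g, \nabla, \nabla^*)$ is usually referred to as a statistical manifold whenever the affine connections are both torsion-free [6].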

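The geometry of a statistical manifold is totally encoded in a distance-like function

$$D : \mathrm{M} \times \mathrm{M} \to \mathbb{R}$$

in the following way:

$$g_{ij}(p) = -\,\partial_i \partial'_j D(\xi_p, \xi_q)\big|_{p=q}, \qquad \Gamma_{ijk}(p) = -\,\partial_i \partial_j \partial'_k D(\xi_p, \xi_q)\big|_{p=q}, \qquad \Gamma^*_{ijk}(p) = -\,\partial'_i \partial'_j \partial_k D(\xi_p, \xi_q)\big|_{p=q},$$

where the indexes $i, j, k$ run from $1$ to $n = \dim \mathrm{M}$ and $\Gamma_{ijk}$, $\Gamma^*_{ijk}$ are the symbols of the dual connections $\nabla$ and $\nabla^*$, respectively [7]. Here, $\partial_i = \partial/\partial \xi_p^i$ and $\partial'_i = \partial/\partial \xi_q^i$, where $\{\xi_p^i\}$ and $\{\xi_q^i\}$ denote a coordinate system at $p$ and $q$. When the matrix $(g_{ij}(p))$ is strictly positive definite for all $p \in \mathrm{M}$, such a function is called a divergence function or contrast function of the statistical manifold [8].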
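To make the link between a divergence and the metric it induces concrete, the following minimal sketch recovers a metric from a divergence through the mixed second derivative on the diagonal. It assumes the standard sign convention $g(\xi) = -\partial_{\xi_p}\partial_{\xi_q} D(\xi_p, \xi_q)|_{p=q}$ and uses the Kullback-Leibler divergence of the Bernoulli family as a hypothetical test case; the function names and the finite-difference step are illustrative choices, not part of the original text.

```python
import math


def kl_bernoulli(xi_p: float, xi_q: float) -> float:
    """Kullback-Leibler divergence between Bernoulli(xi_p) and Bernoulli(xi_q)."""
    return (xi_p * math.log(xi_p / xi_q)
            + (1.0 - xi_p) * math.log((1.0 - xi_p) / (1.0 - xi_q)))


def metric_from_divergence(div, xi: float, h: float = 1e-3) -> float:
    """Approximate g(xi) = -d/dxi_p d/dxi_q D(xi_p, xi_q) at xi_p = xi_q = xi.

    The mixed derivative is estimated with a central finite difference.
    """
    mixed = (div(xi + h, xi + h) - div(xi + h, xi - h)
             - div(xi - h, xi + h) + div(xi - h, xi - h)) / (4.0 * h * h)
    return -mixed


def fisher_bernoulli(xi: float) -> float:
    """Closed-form Fisher metric of the Bernoulli family in the parameter xi."""
    return 1.0 / (xi * (1.0 - xi))


xi = 0.3
g_numeric = metric_from_divergence(kl_bernoulli, xi)
g_exact = fisher_bernoulli(xi)
print(g_numeric, g_exact)
```

In this example the two printed values agree up to the finite-difference error, which illustrates how the second-order behaviour of a divergence near the diagonal fixes the metric.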

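Given a torsion-free dual structure $(g, \nabla, \nabla^*)$ on a smooth manifold $\mathrm{M}$, there are infinitely many divergence functions which induce on $\mathrm{M}$ the same dual structure [9]. However, Amari and Nagaoka showed that a kind of canonical divergence is uniquely defined on a dually flat statistical manifold [4]. More precisely, a dual structure is said to be dually flat when both the Riemann curvature tensors $\mathrm{R}(\nabla)$ and $\mathrm{R}(\nabla^*)$ are zero [5]. In this case, there exist mutually dual coordinate systems $\{\theta^i\}$, $\{\eta_j\}$ such that $\Gamma_{ijk}(\theta) = 0$, $\Gamma^{*\,ijk}(\eta) = 0$ and $g(\partial_i, \partial^j) = \delta_i^j$, where $\delta_i^j = 1$ if $i = j$ and $\delta_i^j = 0$ otherwise. Here, $\partial_i = \partial/\partial\theta^i$ and $\partial^j = \partial/\partial\eta_j$. Moreover, there exists a pair of functions $\psi$ and $\varphi$ such that

$$\partial_i \psi = \eta_i, \qquad \partial^i \varphi = \theta^i.$$

It turns out that

$$\psi + \varphi - \theta^i \eta_i = 0,$$

where Einstein's notation is adopted. Given two points $p, q \in \mathrm{M}$, the canonical divergence of the dually flat statistical manifold between $p$ and $q$ is then defined by

$$D(p, q) = \psi(\theta_p) + \varphi(\eta_q) - \theta^i(p)\, \eta_i(q). \qquad (7)$$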
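As a concrete check of the canonical divergence on a dually flat manifold, the sketch below evaluates it on the Bernoulli family. It assumes the standard Amari and Nagaoka form $D(p, q) = \psi(\theta_p) + \varphi(\eta_q) - \theta_p \eta_q$, with the log-partition function as $\psi$ and the negative entropy as $\varphi$; the family, the sample points, and all function names are illustrative assumptions rather than material from this paper.

```python
import math


def psi(theta: float) -> float:
    """Potential in the natural coordinate: log(1 + exp(theta))."""
    return math.log1p(math.exp(theta))


def eta_of_theta(theta: float) -> float:
    """Dual (expectation) coordinate eta = d psi / d theta."""
    return 1.0 / (1.0 + math.exp(-theta))


def phi(eta: float) -> float:
    """Dual potential: negative entropy of Bernoulli(eta)."""
    return eta * math.log(eta) + (1.0 - eta) * math.log(1.0 - eta)


def canonical_divergence(theta_p: float, theta_q: float) -> float:
    """Assumed form D(p, q) = psi(theta_p) + phi(eta_q) - theta_p * eta_q."""
    eta_q = eta_of_theta(theta_q)
    return psi(theta_p) + phi(eta_q) - theta_p * eta_q


def kl_bernoulli(a: float, b: float) -> float:
    """Kullback-Leibler divergence between Bernoulli(a) and Bernoulli(b)."""
    return a * math.log(a / b) + (1.0 - a) * math.log((1.0 - a) / (1.0 - b))


theta_p, theta_q = 0.4, -1.1
d_pq = canonical_divergence(theta_p, theta_q)
kl_qp = kl_bernoulli(eta_of_theta(theta_q), eta_of_theta(theta_p))
# Legendre relation psi + phi - theta * eta = 0 evaluated at a single point.
legendre_gap = canonical_divergence(theta_p, theta_p)
print(d_pq, kl_qp, legendre_gap)
```

Under these assumptions the canonical divergence coincides with the Kullback-Leibler divergence taken from the second point to the first, and it vanishes on the diagonal, which is the Legendre relation stated above.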

Relying upon the canonical divergence on a dually flat structure, one can list the basic properties that a divergence must have to be a canonical one for a general dual structure. In [6], the authors required that a canonical divergence be one half the square of the Riemannian distance when $\nabla = \nabla^*$ and reduce to the canonical divergence of Amari and Nagaoka when the dual structure is dually flat. However, several different divergences in the literature satisfy these requirements (see for instance [10,11,12,13,14]). Hence, the search for a canonical divergence on a general dual structure is still an open problem [10]. Nonetheless, the notion of canonical divergence on a dually flat manifold can illustrate how information geometry modifies the usual Riemannian geometry. In [4], the authors applied this notion to any curve within a general statistical manifold. Let $\gamma$ be a curve within a statistical manifold $(\mathrm{M}, g, \nabla, \nabla^*)$; we can consider the dual structure induced by $(g, \nabla, \nabla^*)$ on $\gamma$. Such a structure is given by

where

From here on, we denote the scalar product induced by the metric tensor $g$ with $\langle \cdot, \cdot \rangle$. Since $\gamma$ is a 1-dimensional manifold, it is a dually flat manifold. Therefore, in [4] the authors applied the notion of canonical divergence to obtain a divergence of the curve $\gamma$:

In [4], the authors claimed that the divergence does not depend on the parameterization of $\gamma$ but only on the orientation of $\gamma$. This establishes a close relation between the divergence and its dual function, which is given as follows:

In particular, we have that

where [4].

Even though the adaptation of the canonical divergence (7) to the 1-dimensional case, resulting in the canonical divergence Formula (10), is clear in [4], with this work we aim to provide differential geometry-based proofs of all statements claimed in [4] on the canonical divergence of a curve $\gamma$. In particular, we want to prove the following statements:

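- (i) The divergence of a curve $\gamma$ is given by the expression in (10). We refer to it as the canonical divergence of the curve $\gamma$.

- (ii) The divergence is independent of the particular parameterization of $\gamma$.

- (iii) The dual divergence of $\gamma$, given in (11), coincides with the divergence of the reversely oriented curve.

Finally, in the self-dual case, that is when $\nabla = \nabla^*$, we prove that the divergence of $\gamma$ equals one half the square of the length of $\gamma$, which is claimed in [4] and provides evidence of how information geometry modifies the usual Riemannian geometry.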

2. The Canonical Divergence of a Curve

Let $\gamma$ be a curve within a statistical manifold $(\mathrm{M}, g, \nabla, \nabla^*)$. Let us assume that it is a 1-dimensional manifold. Therefore, the statistical structure induced on $\gamma$ is always dually flat. This implies that there exists a pair of affine parameters, one for each of the two induced connections. Indeed, consider an arbitrary parameter $t$ of $\gamma$; owing to the dual flatness of $\gamma$, the differential 1-forms of the two affine parameters are such that the following holds true [15]:

where the coefficients and are defined in (9). The solutions of Equations (14) and (15) are given by

Then, it straightforwardly follows that

According to the theory developed in [4], we can find two functions and such that

These two equations lead to the following relations:

which have the following solution:

Now, we can understand that and . Likewise, we have that and . Therefore, following (7), we can define the divergence of between and as

where and .

At this point, we can try to give a more explicit expression for the divergence . Let us interchange the role of and . Then, from Equations (16) and (20), we can write

Proposition 1.

Let be a curve endowed with the dualistic structure . Let and be the affine parameters with respect to and , respectively. Then, the divergence of can be written as follows:

Proof.

Let us now observe that, from Equation (1), we can write

Therefore, we have that

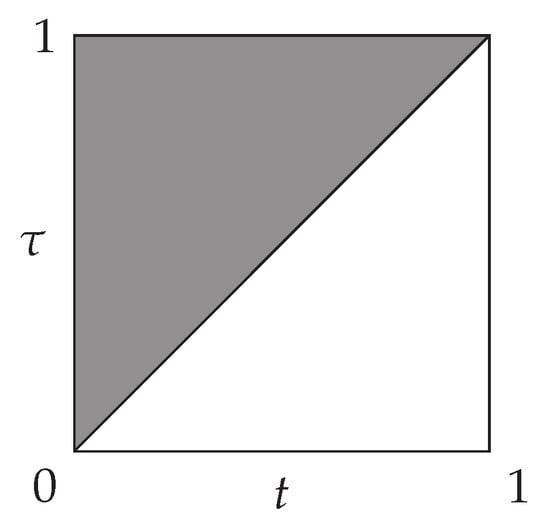

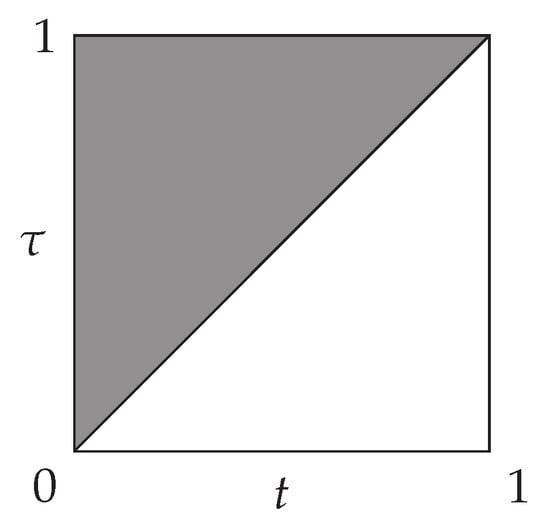

where we assumed that . Now, we may observe from Figure 1 that we can represent the domain of integration in the double integral of Equation (10) as the grey colored region.

Figure 1. The domain of the double integral in Equation (10), highlighted in grey.

Therefore, we can split the double integral as follows:

and by plugging in it and , we obtain the desired result.

The representation of the divergence in Proposition 1 allows us to show that the divergence of a curve is independent of the particular parameterization of the curve. The next result is a step toward characterizing the divergence as a distance-like function.

Proposition 2.

Let be a smooth curve and let be a re-parameterized curve of . Then

Proof.

Consider () an increasing diffeomorphism, meaning that if . From Equation (24), we can write

Our aim is to perform the change of variable within the integral (26), which is ruled by . Here, we use different notations to denote the derivative with respect to the “time” () and the derivative with respect to the parameter (). Let us define . Then, we have that

Recall that

with

and .

Since

we obtain

This result shows that the canonical divergence of the dual structure induced on the curve is independent of the parameterization of the curve. However, it depends on the orientation of the curve. We will shortly show that, by reversing the parameter of $\gamma$, we obtain the dual divergence of $\gamma$, which is defined in Equation (11). To this end, note that, by applying the same methods as in the proof of Proposition 1, we can write the dual divergence of $\gamma$ as follows:

for , such that and . Then, in the next result, we are going to prove that coincides with the -divergence of the reversely oriented curve, .

Proposition 3.

Let be such that and . Let be the reversely oriented curve. Then we have that

Proof.

Consider Equation (24); by integrating by parts, we can write it as follows:

By reversing the “time” , namely , we get the reversely oriented curve, namely . Therefore, from the last equation, we obtain

where the last equality is obtained from Equation (29). Finally, we can write

which proves the statement.

To sum up, the divergence of an arbitrary path $\gamma$ depends only on its orientation and not on its parameterization, and the dual divergence of $\gamma$ coincides with the divergence of the reversely oriented curve. For this reason, we can refer to the divergence as a modification of the curve length within information geometry. Further support for this statement comes from the self-dual case, namely when $\nabla = \nabla^*$. In this case, information geometry reduces to Riemannian geometry [6] and the divergence becomes one half the square of the Riemannian length of the curve $\gamma$.
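The two properties summarized here can be checked numerically on a simple example. The sketch below is not the authors' Equation (24); it assumes that, in terms of the affine parameters $\theta$ and $\eta$ along the curve, the divergence can be written as $\int_a^b \theta'(t)\,(\eta(t) - \eta(a))\,dt$ and the dual divergence as the same integral with $\theta$ and $\eta$ exchanged. The curve is taken to be the Bernoulli family in its natural coordinate, and all names are hypothetical.

```python
import math


def eta_of_theta(theta: float) -> float:
    """Dual affine parameter of the Bernoulli family: eta = sigmoid(theta)."""
    return 1.0 / (1.0 + math.exp(-theta))


def psi(theta: float) -> float:
    """Potential function, used only for the closed-form comparison."""
    return math.log1p(math.exp(theta))


def midpoint(f, a: float, b: float, n: int = 20000) -> float:
    """Midpoint-rule quadrature of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) for k in range(n))


def divergence(theta, dtheta, a: float = 0.0, b: float = 1.0) -> float:
    """Assumed form: integral over [a, b] of theta'(t) * (eta(t) - eta(a))."""
    eta_a = eta_of_theta(theta(a))
    return midpoint(lambda t: dtheta(t) * (eta_of_theta(theta(t)) - eta_a), a, b)


def dual_divergence(theta, dtheta, a: float = 0.0, b: float = 1.0) -> float:
    """Same integral with the roles of theta and eta exchanged."""
    theta_a = theta(a)

    def integrand(t: float) -> float:
        e = eta_of_theta(theta(t))
        deta = e * (1.0 - e) * dtheta(t)  # chain rule for eta'(t)
        return deta * (theta(t) - theta_a)

    return midpoint(integrand, a, b)


TH0, TH1 = -1.0, 0.8  # end points of the curve in the theta coordinate

# Linear parameterization, an increasing re-parameterization, and the reversed curve.
linear = (lambda t: TH0 + (TH1 - TH0) * t, lambda t: TH1 - TH0)
reparam = (lambda s: TH0 + (TH1 - TH0) * 0.5 * (s + s * s),
           lambda s: (TH1 - TH0) * (0.5 + s))
reverse = (lambda t: TH1 + (TH0 - TH1) * t, lambda t: TH0 - TH1)

d_linear = divergence(*linear)
d_reparam = divergence(*reparam)
d_reverse = divergence(*reverse)
d_dual = dual_divergence(*linear)
bregman = psi(TH1) - psi(TH0) - eta_of_theta(TH0) * (TH1 - TH0)
print(d_linear, d_reparam, d_reverse, d_dual, bregman)
```

In this example the first two values agree (parameterization independence), the third differs from them (orientation dependence), and the third and fourth agree (the dual divergence equals the divergence of the reversed curve). The closed-form Bregman expression is included only as an independent reference value.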

Proposition 4.

Let be a self-dual statistical manifold, i.e. with the Levi–Civita connection. Let be a smooth curve, such that and . Then the -divergence (10) becomes

where is the length of .

Proof.

Consider the representation of given in Equation (10); when is the coefficient of the Levi–Civita connection induced onto the curve , we can write

Thanks to the compatibility property of with the metric tensor , we then obtain

At this point, we are ready to express the divergence of in the self-dual case:

We may now observe from Figure 1 that the area of the integration domain in the integral above is one half the area of the rectangle . Therefore, by the symmetry properties of , we obtain that

which proves that the divergence of $\gamma$, in the self-dual case, is one half the square of the length and is therefore independent of the parameterization.
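The symmetry argument used in this proof can be illustrated numerically. The sketch below assumes that, in the self-dual case, the integrand of the double integral reduces to the symmetric product of the speeds $\|\dot\gamma(s)\|\,\|\dot\gamma(t)\|$, so that integrating over the triangle $s \le t$ gives one half of the integral over the full square. The curve is a segment of the Bernoulli family with the Fisher metric, chosen only because its length has a closed form; the names and end points are hypothetical.

```python
import math


def speed(xi: float, dxi: float) -> float:
    """Fisher-metric norm of the velocity on the Bernoulli family."""
    return abs(dxi) / math.sqrt(xi * (1.0 - xi))


def triangle_integral(xi_of_t, dxi_dt, n: int = 20000):
    """Integrate speed(s) * speed(t) over 0 <= s <= t <= 1 (midpoint rule).

    Returns the triangle integral and the curve length.
    """
    h = 1.0 / n
    length = 0.0
    triangle = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        cell = speed(xi_of_t(t), dxi_dt(t)) * h
        # Inner integral up to t: all earlier cells plus half of the current one.
        triangle += cell * (length + 0.5 * cell)
        length += cell
    return triangle, length


A, B = 0.2, 0.7  # end points of the curve in the parameter xi

linear = (lambda t: A + (B - A) * t, lambda t: B - A)
reparam = (lambda s: A + (B - A) * 0.5 * (s + s * s),
           lambda s: (B - A) * (0.5 + s))

tri_linear, len_linear = triangle_integral(*linear)
tri_reparam, len_reparam = triangle_integral(*reparam)

# Closed-form Fisher length of the segment [A, B] of the Bernoulli family.
exact_length = 2.0 * (math.asin(math.sqrt(B)) - math.asin(math.sqrt(A)))
print(tri_linear, tri_reparam, 0.5 * exact_length ** 2)
```

Both parameterizations return the same value, and that value matches one half the square of the closed-form length, in line with the statement of Proposition 4.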

3. Conclusions

In classical differential geometry, the connection among geodesics, the length of a curve, and the distance provides deep insight into the geometric structure of a Riemannian manifold. For instance, under specific conditions [5], the distance between any two points of a Riemannian manifold is attained along the geodesic between them, which minimizes the length functional over all paths connecting the two points [16,17].

In information geometry, the inverse problem concerns the search for a divergence function $D$ which recovers a given dual structure $(g, \nabla, \nabla^*)$ on a smooth manifold $\mathrm{M}$. The Hessian of $D$ allows recovery of the Riemannian metric $g$, while third-order derivatives of $D$ retrieve the two torsion-free connections $\nabla$ and $\nabla^*$ which are dual with respect to $g$. Interestingly, $(g, \nabla, \nabla^*)$ is determined solely by the third-order Taylor polynomial expansion of $D$. Therefore, in general, $D$ is far from being unique for a given statistical manifold structure. However, in the dually flat case where both $\nabla$ and $\nabla^*$ are flat, it is possible to consider a canonical divergence function from a given $(g, \nabla, \nabla^*)$, which was originally proposed by Amari and Nagaoka.

We considered here the case in which the divergence of a curve in a statistical manifold is obtained by applying the notion of the canonical divergence of a dually flat statistical manifold. In this way, the divergence can be regarded as the natural quantity for understanding the geometric structure of a statistical manifold. More specifically, given $(\mathrm{M}, g, \nabla, \nabla^*)$, a curve $\gamma$ of $\mathrm{M}$ has a natural induced dual structure which is dually flat, since $\gamma$ can be regarded as a 1-dimensional submanifold of $\mathrm{M}$. In [4], Amari and Nagaoka used the canonical divergence in a dually flat case to define a “canonical divergence” of the curve.

In this pedagogical paper, we provided a systematic organization and mathematical proofs of the properties of the divergence in Equation (22) which were originally stated without demonstration in [4]. Specifically, we obtained the following results:

- In Equation (22), we provided an explicit expression of the canonical divergence of a 1-dimensional path $\gamma$.

- In Equation (25) and Proposition 2, we showed that the divergence does not depend on the chosen parameterization of $\gamma$.

- In Equation (30) and Proposition 3, we demonstrated how the divergence depends on the adopted orientation of $\gamma$.

- In Equation (32) and Proposition 4, we verified that the divergence equals one half the square of the length of $\gamma$ in the self-dual case.

By mimicking the classical theory of Riemannian geometry, our work helps to obtain a deeper understanding of the distance-like functional represented by the divergence in Equation (22). Furthermore, our explicit analysis can provide useful insights for identifying a minimum of the divergence through variational methods in more general settings. These methods, in turn, are the natural tools to deal with applications in information geometry [18]. It would be of great interest to select a general canonical divergence via the minimum of the curve divergence over the set of all paths connecting any two points of a general statistical manifold. However, this problem appears to be highly non-trivial, and its solution requires, for instance, a criterion capable of explaining why one divergence would be better than the others in capturing a given dual structure. In some sense, the divergence must be defined using the information from $(g, \nabla, \nabla^*)$ in a minimal way. We believe that the differential geometry-based approach employed here could help in accomplishing such a task, which is, for now, beyond the scope of our current pedagogical setting. We leave this discussion, along with a presentation of potential applications of a general canonical divergence to non-dually flat scenarios, to future scientific efforts.

In conclusion, our work presents new proofs of pedagogical value that, to the best of our knowledge, do not appear anywhere in the literature. In this sense, our work is neither a review nor a simplification of existing proofs. However, our findings could be regarded as preparatory to results of more general applicability in information geometry, including the study of more general examples of statistical manifolds. In addition, as previously mentioned in the paper, our proofs could be further exploited to pursue more research-oriented open questions of a non-trivial nature, including investigating the ranking of different divergences capable of capturing a given dual structure.

Author Contributions

Writing—review & editing, D.F. and C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Amari, S.-I. Information geometry of the EM and em algorithms for neural networks. Neural Netw. 1995, 8, 1379–1408.

2. Tsallis, C. Possible generalization of Boltzmann-Gibbs statistics. J. Stat. Phys. 1988, 57, 479–487.

3. Felice, D.; Cafaro, C.; Mancini, S. Information geometric methods for complexity. Chaos 2018, 28, 032101.

4. Amari, S.-I.; Nagaoka, H. Methods of Information Geometry; Oxford University Press: Oxford, UK, 2000.

5. Lee, J.M. Riemannian Manifolds: An Introduction to Curvature, 1st ed.; Springer: New York, NY, USA, 1997; p. 176.

6. Ay, N.; Jost, J.; Van Le, H.; Schwachhöfer, L. Information Geometry, 1st ed.; Springer International Publishing: Berlin, Germany, 2017.

7. Eguchi, S. Geometry of minimum contrast. Hiroshima Math. J. 1992, 22, 631–647.

8. Eguchi, S. Second order efficiency of minimum contrast estimators in a curved exponential family. Ann. Statist. 1983, 11, 793–803.

9. Matumoto, T. Any statistical manifold has a contrast function—On the C³-functions taking the minimum at the diagonal of the product manifold. Hiroshima Math. J. 1993, 23, 327–332.

10. Felice, D.; Ay, N. Towards a canonical divergence within information geometry. Inf. Geom. 2021, 4, 65–130.

11. Ciaglia, F.M.; Di Cosmo, F.; Felice, D.; Mancini, S.; Marmo, G.; Pérez-Pardo, J.M. Hamilton-Jacobi approach to potential functions in information geometry. J. Math. Phys. 2017, 58, 063506.

12. Wong, T.-K.L. Logarithmic divergences from optimal transport and Rényi geometry. Inf. Geom. 2018, 1, 39–78.

13. Ay, N.; Amari, S.-I. A novel approach to canonical divergences within information geometry. Entropy 2015, 17, 8111–8129.

14. Henmi, M.; Kobayashi, R. Hooke’s law in statistical manifolds and divergences. Nagoya Math. J. 2000, 159, 1–24.

15. Jost, J. Riemannian Geometry and Geometric Analysis, 7th ed.; Universitext; Springer International Publishing: Berlin, Germany, 2017.

16. Calin, O.; Udriste, C. Geometric Modeling in Probability and Statistics; Springer: Berlin, Germany, 2014.

17. Toponogov, V.A.; Rovenski, V. Differential Geometry of Curves and Surfaces: A Concise Guide; Birkhäuser: Basel, Switzerland, 2006.

18. Amari, S.-I. Information Geometry and Its Applications, 1st ed.; Springer Publishing Company: Berlin, Germany, 2016.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).