Abstract

The purpose of this work is to construct iterative methods for solving a split minimization problem using a self-adaptive step size, a conjugate gradient direction, and an inertial technique. We introduce the proposed algorithm and prove a strong convergence theorem in the framework of Hilbert spaces. We then demonstrate numerically how the extrapolation factor in the inertial term and the step size parameter affect the performance of the proposed algorithm. Additionally, we apply the proposed algorithm to the signal recovery problem. Finally, we compare the signal recovery quality of our algorithm with that of three previously published algorithms.

1. Introduction

Let C and Q be two closed convex subsets of two real Hilbert spaces $H_1$ and $H_2$, respectively, and denote the metric projection onto C by $P_C$. Assume that $A: H_1 \to H_2$ is a bounded linear operator and that $A^*$ is its adjoint operator.

In 1994, Censor and Elfving [1] defined the split feasibility problem (SFP) as finding a point

$$x^* \in C \quad \text{such that} \quad Ax^* \in Q. \qquad (1)$$

This problem was designed to model inverse problems, which are at the core of many real-world problems, such as phase retrieval, image restoration, and intensity-modulated radiation therapy (IMRT). In particular, signal processing, including signal recovery, is used to transmit data in almost every field imaginable. Signal processing is also essential for the use of X-rays, MRIs, and CT scans, as it enables complex data processing techniques to analyze and decipher medical images. As a result, a great deal of research has been devoted to solving the SFP (1); see [2,3,4].

Byrne [5] popularized the CQ algorithm for solving the SFP (1). This algorithm is based on the fixed-point concept:

$$x_{n+1} = P_C\left(x_n - \gamma A^*(I - P_Q)Ax_n\right), \qquad (2)$$

where $\gamma \in (0, 2/\|A\|^2)$ is a constant. Other iterative methods for solving the split feasibility problem (1) are described in [6,7,8,9,10,11,12,13] and the references therein. However, these iterative methods require prior knowledge of the operator norm $\|A\|$.
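To make the dependence on $\|A\|$ concrete, the following Python sketch runs the CQ iteration in its standard form, $x_{n+1} = P_C(x_n - \gamma A^{T}(I - P_Q)Ax_n)$, on a small finite-dimensional instance. The sets (a box for C and a ball for Q), the random matrix, and all function names are illustrative assumptions and are not taken from [5]. Note that the step size is computed from the spectral norm of A, which is exactly the quantity that self-adaptive methods try to avoid.

```python
import numpy as np

def project_box(x, lo, hi):
    """Metric projection onto the box C = {x : lo <= x_i <= hi} (illustrative choice of C)."""
    return np.clip(x, lo, hi)

def project_ball(y, radius):
    """Metric projection onto the ball Q = {y : ||y|| <= radius} (illustrative choice of Q)."""
    norm_y = np.linalg.norm(y)
    return y if norm_y <= radius else (radius / norm_y) * y

def cq_algorithm(A, x0, proj_C, proj_Q, gamma, n_iter=500):
    """CQ iteration: x_{n+1} = P_C(x_n - gamma * A^T (I - P_Q) A x_n)."""
    x = x0.copy()
    for _ in range(n_iter):
        Ax = A @ x
        residual = Ax - proj_Q(Ax)                 # (I - P_Q) A x_n
        x = proj_C(x - gamma * (A.T @ residual))
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 5))
x0 = rng.standard_normal(5)
# The step size must lie in (0, 2 / ||A||^2), so the operator norm is needed here.
gamma = 1.0 / np.linalg.norm(A, 2) ** 2
x = cq_algorithm(
    A, x0,
    proj_C=lambda v: project_box(v, -1.0, 1.0),
    proj_Q=lambda w: project_ball(w, 0.5),
    gamma=gamma,
)
print("distance from Ax to Q:", np.linalg.norm(A @ x - project_ball(A @ x, 0.5)))
```

In this toy instance the origin solves the problem, so the iterates can never move farther from the origin than the starting point, which gives a simple sanity check on the implementation.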

Yang [14] proposed the relaxed CQ algorithm in 2005 to avoid computing the operator norm of a bounded linear operator, but this algorithm remains subject to many conditions. As a result, many researchers have proposed and studied self-adaptive step size algorithms that require fewer conditions; see [15,16,17,18] for further information.

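López et al. [18] introduced a self-adaptive step size algorithm in 2012 that is worth further investigation. This algorithm generates a sequence $\{x_n\}$ in the following manner:

$$x_{n+1} = P_C\left(x_n - \tau_n \nabla f(x_n)\right), \qquad \tau_n = \frac{\rho_n f(x_n)}{\|\nabla f(x_n)\|^2}, \qquad (3)$$

where $x_0$ is arbitrarily selected, $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$ is the convex objective function and $\nabla f(x) = A^*(I - P_Q)Ax$ is its Lipschitz gradient, and $\{\rho_n\}$ is a sequence satisfying $0 < \rho_n < 4$ and

$$\inf_n \rho_n(4 - \rho_n) > 0.$$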
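As a minimal sketch of a self-adaptive step size, assume the rule of López et al. [18] in the form $\tau_n = \rho_n f(x_n)/\|\nabla f(x_n)\|^2$ with $f(x) = \frac{1}{2}\|(I - P_Q)Ax\|^2$ and a constant $\rho_n = \rho \in (0, 4)$. The box C, the ball Q, the random matrix, and the function names below are illustrative assumptions. Unlike a fixed step size, this rule never evaluates $\|A\|$.

```python
import numpy as np

def self_adaptive_cq(A, x0, proj_C, proj_Q, rho=1.0, n_iter=500, eps=1e-12):
    """Projected gradient iteration with step tau_n = rho * f(x_n) / ||grad f(x_n)||^2."""
    x = x0.copy()
    for _ in range(n_iter):
        Ax = A @ x
        residual = Ax - proj_Q(Ax)          # (I - P_Q) A x_n
        grad = A.T @ residual               # gradient of f at x_n
        grad_sq = grad @ grad
        if grad_sq <= eps:                  # gradient vanishes: x_n already minimizes f
            break
        f_val = 0.5 * (residual @ residual)
        tau = rho * f_val / grad_sq         # no operator norm required
        x = proj_C(x - tau * grad)
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((3, 5))
x0 = rng.standard_normal(5)
proj_C = lambda v: np.clip(v, -1.0, 1.0)                                   # box C (assumption)
proj_Q = lambda w: w if np.linalg.norm(w) <= 0.5 else 0.5 * w / np.linalg.norm(w)  # ball Q (assumption)
x = self_adaptive_cq(A, x0, proj_C, proj_Q, rho=1.0)
print("distance from Ax to Q:", np.linalg.norm(A @ x - proj_Q(A @ x)))
```

Because the origin is a solution of this toy instance, the distance of the iterates to the origin is nonincreasing for any $\rho \in (0, 4)$, which is the property the test below relies on.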

We now describe the variational inequality problem (VIP), since variational inequality theory is an essential tool for studying a broad class of problems that arise in a wide variety of fields of the pure and applied sciences. Assume that $F: C \to H_1$ is a monotone operator. The VIP is defined mathematically as follows: find $x^* \in C$ such that

$$\langle Fx^*, x - x^* \rangle \geq 0 \quad \text{for all } x \in C. \qquad (4)$$

The projected gradient method [19] is the simplest iterative method for solving the VIP. This algorithm generates a sequence $\{x_n\}$ in the following fashion:

$$x_{n+1} = P_C(x_n - \mu F x_n), \qquad (5)$$

where $x_0 \in C$ is arbitrarily selected and $\mu$ is a positive real number. However, to use the projected gradient method, we must know the closed-form expression of the metric projection $P_C$, which does not always exist. As a result, steepest descent algorithms have been introduced and further developed in recent years. Following that, methods for accelerating the steepest descent method have been constructed and developed. When $h: H_1 \to \mathbb{R}$ is a convex function that is also Fréchet differentiable, the conjugate gradient direction [20] of h at $x_n$ is defined as follows:

where is arbitrarily selected, is a sequence satisfying , and is the gradient of function p.
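To illustrate how a conjugate gradient direction accelerates steepest descent, the sketch below applies the recursion $d_0 = -\nabla h(x_0)$, $d_{n+1} = -\nabla h(x_{n+1}) + \beta_{n+1} d_n$ to a strongly convex quadratic $h(x) = \frac{1}{2}x^{T}Mx - b^{T}x$. The Fletcher-Reeves formula for $\beta_n$ and the exact line search are assumptions made for this example (they are the classical choices from [20] for quadratics), whereas the methods discussed here use a prescribed sequence $\{\beta_n\}$. All names are hypothetical.

```python
import numpy as np

def conjugate_gradient_directions(M, b, x0, n_iter):
    """Minimize h(x) = 0.5 x^T M x - b^T x using conjugate gradient directions."""
    x = x0.astype(float).copy()
    g = M @ x - b                          # gradient of h at x_0
    d = -g                                 # d_0 = -grad h(x_0)
    for _ in range(n_iter):
        if np.linalg.norm(g) < 1e-12:      # stop once the gradient vanishes
            break
        alpha = -(g @ d) / (d @ (M @ d))   # exact line search, valid for quadratics
        x = x + alpha * d
        g_new = M @ x - b
        beta = (g_new @ g_new) / (g @ g)   # Fletcher-Reeves choice (assumption)
        d = -g_new + beta * d              # d_{n+1} = -grad h(x_{n+1}) + beta * d_n
        g = g_new
    return x

rng = np.random.default_rng(3)
B = rng.standard_normal((5, 5))
M = B @ B.T + 5.0 * np.eye(5)              # symmetric positive definite matrix
b = rng.standard_normal(5)
x_cg = conjugate_gradient_directions(M, b, np.zeros(5), n_iter=20)
x_exact = np.linalg.solve(M, b)
print("error:", np.linalg.norm(x_cg - x_exact))
```

For a quadratic in five variables, this recursion reaches the minimizer in at most five steps in exact arithmetic, which is the behavior that motivates combining such directions with other iterative schemes.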

Sakurai and Iiduka [21] introduced an iterative method for finding a fixed point of a nonexpansive mapping. This method is based on the conjugate gradient direction (6), which can be used to accelerate the steepest descent method, and it generates a sequence as follows:

where is arbitrarily selected, S is a nonexpansive mapping on C, , is a constant satisfying , is a sequence in , and is a sequence satisfying . Sakurai and Iiduka established a strong convergence theorem and demonstrated that their algorithm significantly reduces the time required to find a solution.

Additionally, numerous researchers have concentrated their efforts on accelerating the convergence rate of algorithms. Polyak [22] pioneered this concept by introducing the heavy ball method, a key technique for increasing the convergence rate. Following that, modified heavy ball methods were introduced and developed. Nesterov [23] introduced one of the most versatile modified heavy ball methods, which is defined as follows:

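$$y_n = x_n + \theta_n(x_n - x_{n-1}), \qquad x_{n+1} = y_n - \lambda \nabla h(y_n), \qquad (8)$$

where $x_0, x_1$ are arbitrarily selected, $\lambda$ is a positive constant, $\theta_n$ is an extrapolation factor satisfying $\theta_n \in [0, 1)$, and the term $\theta_n(x_n - x_{n-1})$ is referred to as inertia. For additional information, the reader can consult [24,25,26].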
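The effect of the inertial term can be seen on a simple example. The sketch below compares plain gradient descent with an inertial variant of the form $y_n = x_n + \theta(x_n - x_{n-1})$, $x_{n+1} = y_n - \lambda \nabla h(y_n)$ on an ill-conditioned diagonal quadratic. The test function, the constant extrapolation factor $\theta = (\sqrt{\kappa} - 1)/(\sqrt{\kappa} + 1)$ (a classical choice for strongly convex quadratics with condition number $\kappa$), and the function names are assumptions made for illustration.

```python
import numpy as np

def gradient_method(grad, x0, step, theta, n_iter):
    """Gradient iteration with inertial extrapolation; theta = 0 gives plain gradient descent."""
    x_prev = x0.copy()
    x = x0.copy()                           # x_1 = x_0, so the first inertial term is zero
    for _ in range(n_iter):
        y = x + theta * (x - x_prev)        # inertial extrapolation step
        x_prev, x = x, y - step * grad(y)   # gradient step taken from the extrapolated point
    return x

eigs = np.linspace(1.0, 100.0, 5)           # h(x) = 0.5 * sum(eigs * x**2), minimizer at 0
grad = lambda v: eigs * v
x0 = np.ones(5)
step = 1.0 / eigs.max()
kappa = eigs.max() / eigs.min()
theta = (np.sqrt(kappa) - 1.0) / (np.sqrt(kappa) + 1.0)

err_plain = np.linalg.norm(gradient_method(grad, x0, step, 0.0, 100))
err_inertial = np.linalg.norm(gradient_method(grad, x0, step, theta, 100))
print("without inertia:", err_plain, " with inertia:", err_inertial)
```

After the same number of iterations, the inertial iterate is much closer to the minimizer than the plain gradient iterate on this example, which is the qualitative behavior that motivates adding an extrapolation factor.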

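Consider two proper, convex, and lower-semicontinuous functions, $f: H_1 \to \mathbb{R} \cup \{+\infty\}$ and $g: H_2 \to \mathbb{R} \cup \{+\infty\}$. Recently, Moudafi and Thakur [9] published an interesting paper on the split proximal feasibility problem, that is, to find $x^* \in H_1$ such that

$$x^* \in \operatorname*{argmin}_{x \in H_1} \left\{ f(x) + g_\lambda(Ax) \right\}, \qquad (9)$$

where $\lambda$ is a positive constant, and $g_\lambda(y) = \min_{u \in H_2} \left\{ g(u) + \frac{1}{2\lambda}\|u - y\|^2 \right\}$ is the Moreau–Yosida approximation.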

This problem is important for future work such as algorithms for designing large dimensional equiangular tight frames, additive parameters for deep face recognition, deep learning methods for compressed sensing, a unit softmax with Laplacian, and water allocation optimization. See, e.g., [27,28].

Rockafellar and Wets [29] give the subdifferential of the Moreau–Yosida approximation as follows:

Equation (10) implies that the optimality condition of problem (9) is the following inclusion problem:

where $\operatorname{prox}_{\lambda g}$ is the proximity operator of the function g of order $\lambda$ and $\partial f(x^*)$ is the subdifferential of the function f at the point $x^*$.

The problem (9) is referred to as the split minimization problem (SMP) when . It was defined by Moudafi and Thakur [9] as finding a point

where and . The significance of this problem is that it is a generalization of the SFP (1), as the SMP (12) can be reduced to the SFP (1) by setting functions f and g to be the indicator functions of the nonempty closed convex subsets C and Q, respectively.

Assume that

The gradients of functions p and q are obtained as follows:

We can verify that p and q are weakly lower semicontinuous, convex, and differentiable; see [30].
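A gradient formula of this type can be checked numerically in the special case where g is the indicator function of a closed convex set Q, so that the proximal operator reduces to the projection $P_Q$. In that case the function $x \mapsto \frac{1}{2}\|(I - P_Q)Ax\|^2$ has gradient $A^{T}(I - P_Q)Ax$. The sketch below compares this expression with central finite differences. The choice of Q as the unit ball, the random data, and the function names are assumptions made for illustration only.

```python
import numpy as np

def project_ball(y, radius):
    """Projection onto Q = {y : ||y|| <= radius} (illustrative choice of Q)."""
    norm_y = np.linalg.norm(y)
    return y if norm_y <= radius else (radius / norm_y) * y

def p(x, A, radius):
    """p(x) = 0.5 * ||(I - P_Q) A x||^2, half the squared distance from Ax to Q."""
    r = A @ x - project_ball(A @ x, radius)
    return 0.5 * (r @ r)

def grad_p(x, A, radius):
    """Closed-form gradient A^T (I - P_Q) A x."""
    Ax = A @ x
    return A.T @ (Ax - project_ball(Ax, radius))

def numerical_grad(fun, x, h=1e-6):
    """Central finite-difference approximation of the gradient of fun at x."""
    g = np.zeros_like(x)
    for i in range(x.size):
        e = np.zeros_like(x)
        e[i] = h
        g[i] = (fun(x + e) - fun(x - e)) / (2.0 * h)
    return g

rng = np.random.default_rng(1)
A = rng.standard_normal((4, 6))
x = 3.0 * rng.standard_normal(6)
analytic = grad_p(x, A, 1.0)
numeric = numerical_grad(lambda v: p(v, A, 1.0), x)
max_gap = np.max(np.abs(analytic - numeric))
print("largest componentwise gap:", max_gap)
```

The two gradients agree up to finite-difference error, which is a convenient way to validate an implementation before plugging it into an iterative scheme.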

Abbas et al. [31] published two iterative algorithms in 2018 for determining the minimum-norm solution to a split minimization problem. These algorithms are defined as follows:

and

where is arbitrarily selected, and is a sequence in . They established two strong convergence theorems for their proposed algorithms under some mild conditions.

Recently, Kaewyong and Sitthithakerngkiet [32] introduced a self-adaptive step size algorithm for resolving a split minimization problem. It is defined by the algorithm described below:

where is arbitrarily selected, , and are sequences in , and S is a nonexpansive mapping on . Under some appropriate conditions, the strong convergence theorem was established.

Motivated by the advantages and disadvantages of the works above, we construct new algorithms for solving the split minimization problem by combining the following techniques: (1) a self-adaptive step size, which avoids computing the operator norm of a bounded linear operator, a quantity that is difficult, if not impossible, to calculate or even estimate; and (2) inertia and conjugate gradient directions, which speed up convergence. In the numerical examples, we show that the extrapolation factor in the inertial term yields faster convergence and that the step size parameter also affects the rate of convergence. Additionally, we apply our proposed algorithm to the signal recovery problem and compare its performance with that of three previously published strongly convergent algorithms.

2. Preliminaries

In this section, we review some basic facts, definitions, and lemmas that are needed to prove our main result. The collection of proper convex lower semicontinuous functions on is denoted by .

Lemma 1.

Let a and b be any two elements in . Then, the following assertions hold:

- 1.

- ;

- 2.

- ;

- 3.

- , where .

Proposition 1.

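Suppose that S is a mapping defined on C. Let a and b be any two elements of C. Then,

1. S is monotone if $\langle Sa - Sb, a - b \rangle \geq 0$;

2. S is nonexpansive if $\|Sa - Sb\| \leq \|a - b\|$;

3. S is firmly nonexpansive if $\|Sa - Sb\|^2 \leq \langle Sa - Sb, a - b \rangle$.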

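It is well known that the metric projection $P_C$ is a firmly nonexpansive mapping, i.e.,

$$\|P_C a - P_C b\|^2 \leq \langle P_C a - P_C b, a - b \rangle \quad \text{for all } a, b \in H_1.$$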

Lemma 2.

Let be the metric projection. Then, the inequalities stated below hold:

- 1.

- 2.

- .
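Two standard properties of the metric projection can be verified numerically: the variational characterization $\langle x - P_C x, z - P_C x \rangle \leq 0$ for every $z \in C$, and firm nonexpansiveness $\|P_C x - P_C y\|^2 \leq \langle P_C x - P_C y, x - y \rangle$. Whether these are exactly the two inequalities intended by the lemma is an assumption. The sketch below samples random points and records the largest violation for a box C, chosen only because its projection has a closed form.

```python
import numpy as np

def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection onto the box C = [lo, hi]^n (illustrative choice of C)."""
    return np.clip(x, lo, hi)

rng = np.random.default_rng(2)
worst_variational = -np.inf   # largest value of <x - P_C x, z - P_C x>, expected <= 0
worst_firm = -np.inf          # largest value of ||Px - Py||^2 - <Px - Py, x - y>, expected <= 0
for _ in range(1000):
    x = 3.0 * rng.standard_normal(4)
    y = 3.0 * rng.standard_normal(4)
    z = rng.uniform(-1.0, 1.0, size=4)      # an arbitrary point of C
    px, py = project_box(x), project_box(y)
    worst_variational = max(worst_variational, (x - px) @ (z - px))
    worst_firm = max(worst_firm, (px - py) @ (px - py) - (px - py) @ (x - y))

print("largest variational term:", worst_variational)
print("largest firm-nonexpansiveness gap:", worst_firm)
```

Both recorded quantities stay nonpositive up to rounding error, as the two inequalities predict.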

Definition 1

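([33,34]). Let g be a proper convex lower semicontinuous function on a real Hilbert space H and let x be any element of H. The proximal operator of g is defined by

$$\operatorname{prox}_g(x) = \operatorname*{argmin}_{u \in H} \left\{ g(u) + \frac{1}{2}\|u - x\|^2 \right\}.$$

Furthermore, for $\lambda > 0$,

$$\operatorname{prox}_{\lambda g}(x) = \operatorname*{argmin}_{u \in H} \left\{ g(u) + \frac{1}{2\lambda}\|u - x\|^2 \right\}$$

defines the proximal operator of g of order λ.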
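For a concrete instance of the proximal operator, take $g(u) = |u|$ on the real line, whose proximal operator of order λ is the well-known soft-thresholding map $x \mapsto \operatorname{sign}(x)\max(|x| - \lambda, 0)$. The sketch below compares this closed form with a brute-force minimization of $g(u) + \frac{1}{2\lambda}(u - x)^2$ over a fine grid. The grid, the value of λ, and the sample points are assumptions made for illustration.

```python
import numpy as np

def soft_threshold(x, lam):
    """Closed-form proximal operator of order lam for g(u) = |u|."""
    return np.sign(x) * np.maximum(np.abs(x) - lam, 0.0)

def prox_by_grid(g, x, lam, grid):
    """Brute-force proximal operator: minimize g(u) + (u - x)^2 / (2 * lam) over a grid."""
    values = g(grid) + (grid - x) ** 2 / (2.0 * lam)
    return grid[np.argmin(values)]

grid = np.linspace(-5.0, 5.0, 100001)       # grid spacing 1e-4
lam = 0.7
points = np.array([-2.0, -0.3, 0.0, 0.5, 1.9])
closed_form = soft_threshold(points, lam)
brute_force = np.array([prox_by_grid(np.abs, x, lam, grid) for x in points])
print(closed_form)
print(brute_force)
```

The two results coincide up to the grid resolution. Points with $|x| \leq \lambda$ are mapped to zero, which is why this operator promotes sparsity.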

Proposition 2

([35,36]). Let g be any function in . Assume that λ is a constant in (0,1), and Q is a nonempty closed convex subset of . Then, the following assertions hold:

- 1.

- If , then for all , the proximal operators where δ is an indicator function of Q;

- 2.

- is firmly nonexpansive;

- 3.

- is the resolvent of the subdifferential of g, that is, .

Lemma 3

([37]). Let g be any function in . Then, the following assertions hold:

- 1.

- ;

- 2.

- and are both firmly nonexpansive.

Lemma 4

([38]). Assume that with

where and are sequences in and is a real sequence. If

- 1.

- 2.

- or .

- 3.

Then,

Lemma 5

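([39]). Let $\{\Gamma_n\}$ be a real sequence that does not decrease at infinity, in the sense that there is a subsequence $\{\Gamma_{n_j}\}$ with $\Gamma_{n_j} < \Gamma_{n_j+1}$ for all $j \geq 0$. Define the sequence of integers $\{\tau(n)\}_{n \geq n_0}$ by

$$\tau(n) = \max\{k \leq n : \Gamma_k < \Gamma_{k+1}\}.$$

Then, $\{\tau(n)\}_{n \geq n_0}$ is a nondecreasing sequence verifying $\lim_{n \to \infty} \tau(n) = \infty$ and, for all $n \geq n_0$,

$$\max\{\Gamma_{\tau(n)}, \Gamma_n\} \leq \Gamma_{\tau(n)+1}.$$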
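The index map in this lemma is easy to compute for a finite list of values. Assuming the definition from Maingé [39] in the form $\tau(n) = \max\{k \leq n : \Gamma_k < \Gamma_{k+1}\}$, the sketch below evaluates τ on a short non-monotone sequence and checks the two conclusions, namely that τ is nondecreasing and that $\max\{\Gamma_{\tau(n)}, \Gamma_n\} \leq \Gamma_{\tau(n)+1}$. The sample values and the function name are hypothetical.

```python
def tau(gamma, n):
    """Largest index k <= n with gamma[k] < gamma[k + 1]; None if no such k exists."""
    for k in range(min(n, len(gamma) - 2), -1, -1):
        if gamma[k] < gamma[k + 1]:
            return k
    return None

# A sequence that is not monotone: it increases at indices 1, 3 and 6.
gamma = [5.0, 3.0, 4.0, 2.0, 2.5, 1.0, 0.5, 0.7, 0.2]

n0 = 1                                       # first index at which tau is defined here
indices = list(range(n0, len(gamma) - 1))
taus = [tau(gamma, n) for n in indices]
lemma_holds = all(
    max(gamma[t], gamma[n]) <= gamma[t + 1] for n, t in zip(indices, taus)
)
print(taus, lemma_holds)
```

In the convergence proof, this map is what allows a sequence that is not eventually decreasing to be controlled through the subsequence indexed by τ(n).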

Lemma 6.

Let D be a strongly positive bounded linear operator on with coefficient and . Then,

3. Results

This section introduces and analyzes the algorithm for solving split minimization problems. Additionally, is used to denote the solutions set for the split minimization problem (SMP) (12).

Condition 1.

Let . Let and be positive sequences. The sequences , , , and satisfy:

- (C1)

- and

- (C2)

- (C3)

- and

- (C4)

- and

Theorem 1.

Let be a bounded linear operator with its adjoint operator . Let f and g be two proper, convex, and lower semicontinuous functions such that (12) is consistent (i.e., ) and that is bounded. Assume that , and are sequences that satisfy Condition 1. Let and be a strongly positive bounded linear operator and an L-Lipschitz mapping with , respectively. Assume that and that . Then, sequence generated by Algorithm 1 converges strongly to a solution of the SMP, which is also the unique solution of the variational inequalities:

| Algorithm 1: Algorithm for solving split minimization problem. |

| Initialization: Set . Choose some positive sequences and satisfying and , respectively. Select an arbitrary starting point . |

| Iterative step: Given and . Compute as follows: |

| Stopping criterion: if then stop. Otherwise, set and return to Iterative step. |

Proof.

To begin with, we demonstrate that the sequence is bounded using mathematical induction. For , the boundedness of the sequence is trivial. We obtain by applying conditions (C1) and (C2). This implies the existence of such that , for all . Assume that . We start with the assumption that , which holds true for some , and demonstrate that it continues to hold true for . Triangle inequality ensures that

This implies that , for all . As a result, is bounded.

Next, we demonstrate that the sequences , , , and are bounded. Given that , we can assume that . As a result, we have and . By exploiting the fact that is firmly nonexpansive, we conclude that

It follows that

Because , we can safely assume that for all . As a result, we can deduce from Equations (24), (26), and Lemma 6 that

Thus,

This inequality is obtained through the use of mathematical induction. Therefore, is bounded. Consequently, , , and are all bounded. Additionally, we obtain from Equations (24) and (27) that

This implies that

It follows that

In another direction, we obtain from Equation (28) that

Following that, two different cases for the convergence of the sequence are considered.

Case I Assume that the sequence does not increase. In that case, there exists such that for every . Thus, the sequence converges, and

Additionally, by using the assumption of the sequence , and the boundedness of and , we obtain that

Since is firmly nonexpansive, we observe that

Additionally, we observe that

It follows that

Additionally, by utilizing conditions (C1), (C2), and (31), we obtain that

By using (20), we obtain that

Then, we get that

Clearly, by using conditions (C3) and Equation (32), we obtain that

From the foregoing, we can immediately conclude that

Consider the following inequality:

This implies that the sequence is a Cauchy sequence.

Next, we demonstrate that . Let be a weak cluster point. There is a subsequence such that . By utilizing the lower-semicontinuity of h, we obtain that

This information implies that . We can therefore deduce that is a fixed point of the proximal mapping of g, or that . As a result, is a minimizer of g.

Similarly, by utilizing the lower-semicontinuity of l, we obtain that

That is, . Therefore, we deduce that is a fixed point of the proximal mapping of f, or that . Thus, is a minimizer of f. This information implies that .

We now demonstrate that where is a unique solution to the variational inequality:

By using the properties of the metric projection, we obtain that

Following that, we show that the sequence strongly converges to the point , which is the unique solution to the variational inequality:

By observing Equation (29), we obtain that

where and

By applying conditions (C1) and (C3) to and , we obtain that and . As a result of applying Lemma 4 to Equation (38), we obtain that . This implies that , with .

Case II Assume that the sequence is increasing and . For (where is sufficiently large), define a map by

Then, is a nondecreasing sequence with , as , and

Similarly to Case I, it is obvious that

and

Because is bounded, a subsequence of exists. Assume that . Similarly to Case I, we obtain and . Then, using (29), we determine that

This information implies that

and

As a result,

Additionally, we obtain from Equation (39) that

As a result,

As a result of Lemma 5, we have

As a result, . This information implies that , completing the proof. □

4. Numerical Examples

In this section, we demonstrate the performance of our proposed algorithm using two examples. The first example experiments with various step sizes to show how the step size selection affects the convergence of the proposed algorithm in an infinite-dimensional space. In the second example, we show how our proposed algorithm can solve the LASSO problem and thereby the signal recovery problem. Additionally, we compare our algorithm with three previously published strongly convergent algorithms in terms of the quality of the recovered signal.

To begin with, we describe how Algorithm 1 can be applied to the split minimization problem, which can be modeled to solve the signal recovery problem.

Recall the SFP defined as follows:

denotes the collection of SFP solutions. We now set and , where and are the indicator functions of two nonempty, closed, and convex sets, C and Q. Then, we determine that and . The following result was then obtained:

Corollary 1.

Let be a bounded linear operator with its adjoint operator . Let f and g be two proper, convex, and lower-semicontinuous functions such that (40) is consistent (i.e., ) and that is bounded. Assume that

and

Assume that , and are sequences that satisfy Condition 1. Let and be a strongly positive bounded linear operator and an L-Lipschitz mapping with , respectively. Assume that and that . Then, sequence generated by Algorithm 2 converges strongly to a solution of the SFP (40), which is also the unique solution of the variational inequalities:

| Algorithm 2: An iterative algorithm for solving split feasibility problem. |

| Initialization: Set . Choose some positive sequences and satisfying and , respectively. Select an arbitrary starting point . |

| Iterative step: Given and . Compute as follows: |

| Stopping criterion: if then stop. Otherwise, set and return to Iterative step. |

Example 1.

Consider the functional space . Assume with a norm defined by . As can be seen [31], assume that

with and . Therefore,

Additionally, assume that

with and . Therefore,

In this experiment, we set the parameters , , , and . Additionally, we set the operators , , and the sets

and

At different initial functions, we compared the computational performance of Algorithm 2 with and without extrapolation factor () in a variety of . To facilitate the experiment, we divided it into three distinct cases.

Case 1:

Case 1.1:

Case 1.1.1:

Case 1.1.2:

Case 1.1.3:

Case 1.2:

Case 1.2.1:

Case 1.2.2:

Case 1.2.3:

Case 2:

Case 2.1:

Case 2.1.1:

Case 2.1.2:

Case 2.1.3:

Case 2.2:

Case 2.2.1:

Case 2.2.2:

Case 2.2.3:

Case 3:

Case 3.1:

Case 3.1.1:

Case 3.1.2:

Case 3.1.3:

Case 3.2:

Case 3.2.1:

Case 3.2.2:

Case 3.2.3:

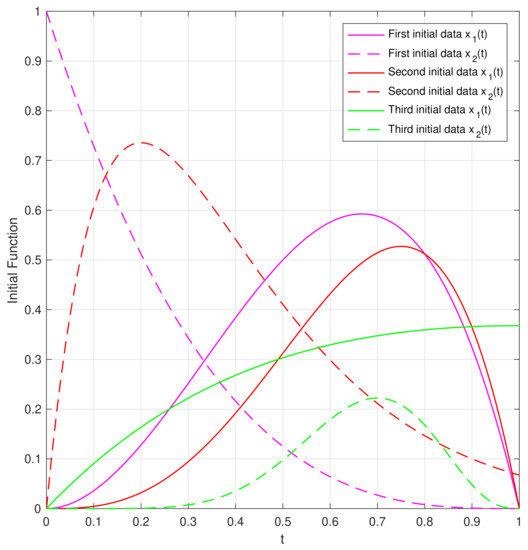

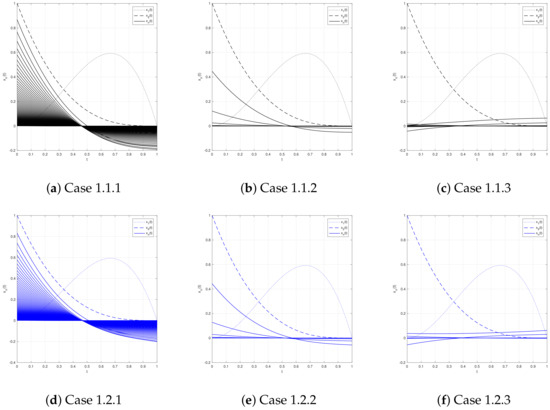

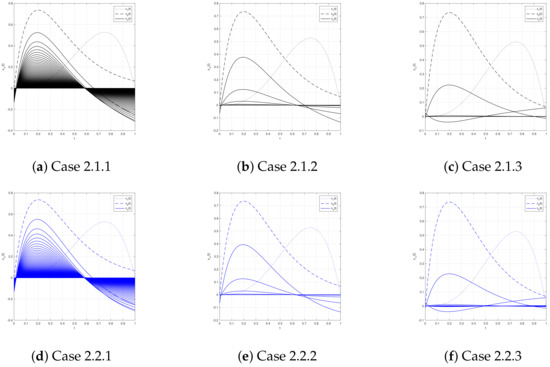

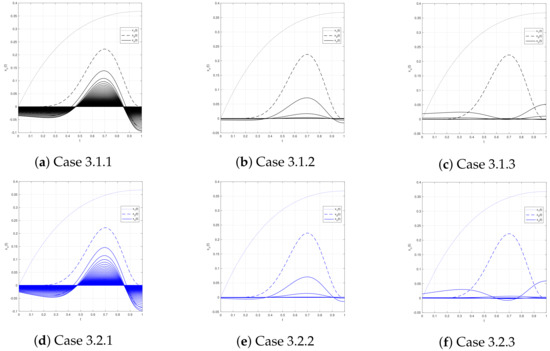

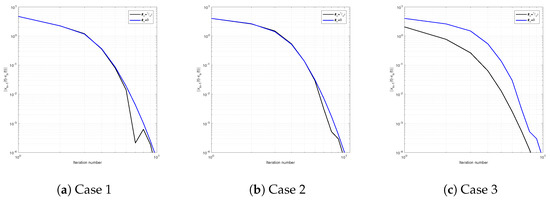

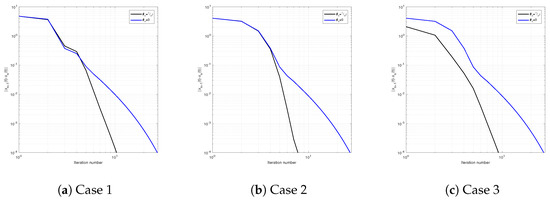

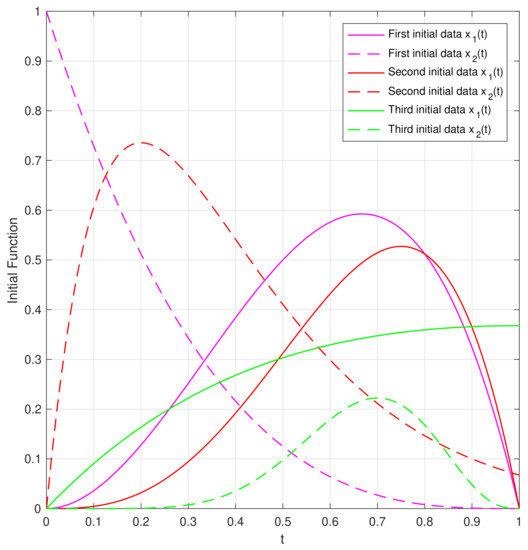

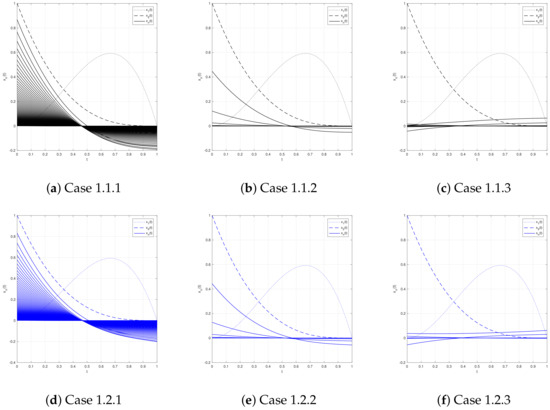

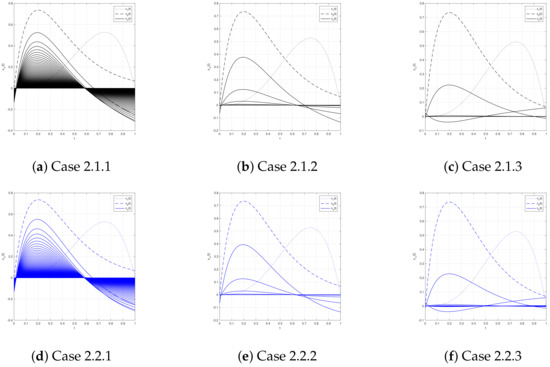

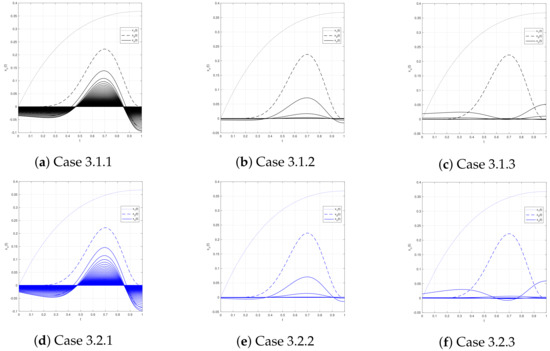

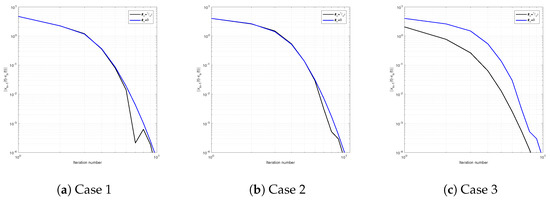

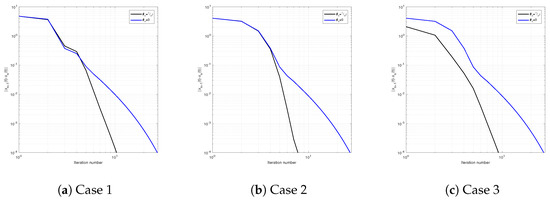

In this experiment, the stopping criterion is Cauchy error . The initial functions for each case are depicted in Figure 1. Figure 2, Figure 3 and Figure 4 illustrate the convergence behaviors of sequence with and without the extrapolation factor for Cases 1–3. Cauchy error plots for sequence with and without extrapolation factor are shown in Figure 5, Figure 6 and Figure 7 for , and , respectively. Additionally, we demonstrate our algorithm’s performance in terms of iteration counts and CPU time for generating sequence in each case, as shown in Table 1.

Figure 1.

The initial functions and for each case in Example 1.

Figure 2.

Convergence behavior of the sequence with and without the extrapolation factor for Case 1.

Figure 3.

Convergence behavior of the sequence with and without the extrapolation factor for Case 2.

Figure 4.

Convergence behavior of the sequence with and without the extrapolation factor for Case 3.

Figure 5.

Cauchy error plots for the sequence with and without the extrapolation factor when .

Figure 6.

Cauchy error plots for the sequence with and without the extrapolation factor when .

Figure 7.

Cauchy error plots for the sequence with and without the extrapolation factor when .

Table 1.

Comparison of iteration counts and CPU times for the sequence of Example 1 in each case.

Remark 1.

- 1.

- 2.

- 3.

- The advantages of our proposed algorithm in terms of iteration counts and CPU times are shown in Table 1, which indicates that the algorithm incorporating the extrapolation factor is more efficient than the algorithm without it.

Example 2.

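Consider the following linear inverse problem: $b = Ax + \varepsilon$, where $x \in \mathbb{R}^N$ is the signal to recover, $\varepsilon \in \mathbb{R}^M$ is a noise vector, and $A \in \mathbb{R}^{M \times N}$ is the acquisition device. To solve this inverse problem, we can convert it to the LASSO problem described below:

$$\min_{x \in \mathbb{R}^N} \frac{1}{2}\|Ax - b\|_2^2 \quad \text{subject to} \quad \|x\|_1 \leq r, \qquad (44)$$

where r is a positive constant. Assume that $C = \{x \in \mathbb{R}^N : \|x\|_1 \leq r\}$ and that $Q = \{b\}$. The LASSO problem (44) then transforms into the split feasibility problem (1). Thus, as described in Corollary 1, Algorithm 2 can be used to recover a sparse signal with k nonzero elements.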
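To show how a constrained LASSO problem can be treated as a split feasibility problem, the sketch below takes $C = \{x : \|x\|_1 \leq r\}$ and $Q = \{b\}$ and runs the classical CQ iteration, not Algorithm 2, on synthetic data. The problem sizes, the noise level, the choice $r = \|x_{\text{true}}\|_1$ (which presumes knowledge of the signal), the fixed step size, and all function names are assumptions made for illustration. The projection onto the $\ell_1$ ball uses the standard sort-based method.

```python
import numpy as np

def project_l1_ball(v, r):
    """Euclidean projection onto C = {x : ||x||_1 <= r} using the sort-based method."""
    if np.abs(v).sum() <= r:
        return v.copy()
    u = np.sort(np.abs(v))[::-1]                 # magnitudes in decreasing order
    css = np.cumsum(u)
    k = np.arange(1, v.size + 1)
    rho = np.nonzero(u * k > css - r)[0][-1]     # last index where the threshold is active
    theta = (css[rho] - r) / (rho + 1.0)         # shrinkage level
    return np.sign(v) * np.maximum(np.abs(v) - theta, 0.0)

rng = np.random.default_rng(4)
N, M, k = 128, 64, 5                             # signal length, measurements, sparsity
x_true = np.zeros(N)
support = rng.choice(N, size=k, replace=False)
x_true[support] = rng.standard_normal(k)
A = rng.standard_normal((M, N))
b = A @ x_true + 0.01 * rng.standard_normal(M)   # noisy observation

r = np.abs(x_true).sum()                         # assumes the l1 norm of the signal is known
gamma = 1.0 / np.linalg.norm(A, 2) ** 2          # fixed step; a self-adaptive rule would avoid ||A||
x = np.zeros(N)
for _ in range(200):
    # Q = {b}, so P_Q(Ax) = b and (I - P_Q)Ax = Ax - b
    x = project_l1_ball(x - gamma * (A.T @ (A @ x - b)), r)

initial_residual = np.linalg.norm(b)             # residual at the starting point x = 0
final_residual = np.linalg.norm(A @ x - b)
print("residual before:", initial_residual, " after:", final_residual)
```

Every iterate stays inside the $\ell_1$ ball by construction, and the data residual does not increase from one iteration to the next for this step size.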

The purpose of this experiment is to demonstrate that Algorithm 2 can be used to solve the LASSO problem (44). We then compare our algorithm with the two strongly convergent algorithms proposed by Abbas et al. [31], which we refer to as Abbas-1 (17) and Abbas-2 (18), respectively. Additionally, we compare it with the self-adaptive step size algorithm of Kaewyong and Sitthithakerngkiet [32], which we refer to as Nattakarn (19).

Throughout all algorithms, we set regularization parameter and . In our algorithm, we set , , , , and . In Nattakarn (19), we set , , , , and . In Abbas-1 (17) and Abbas-2 (18), we set .

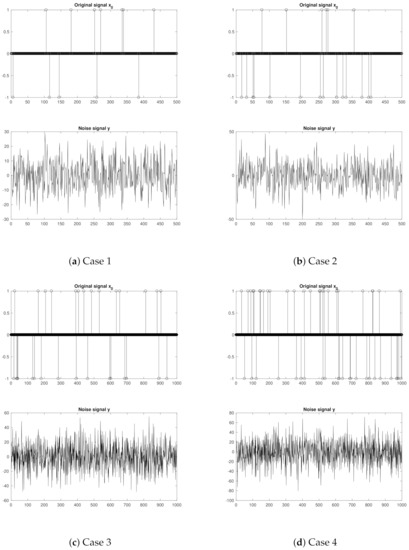

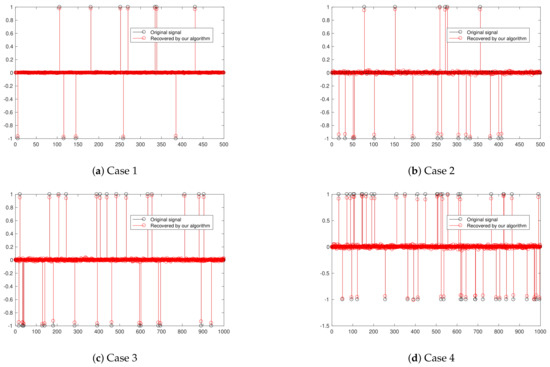

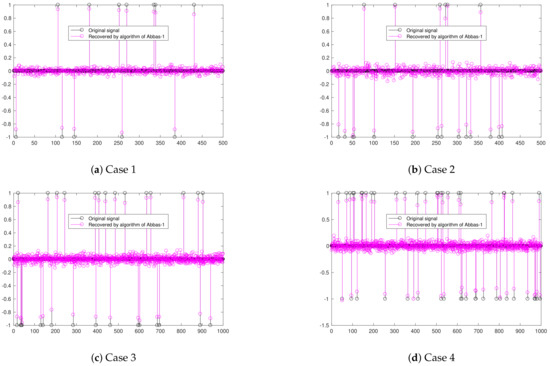

We evaluated the computational performance of each algorithm for several dimensions N and sparsity levels k (Case 1: ; Case 2: ; Case 3: ; Case 4: ). Each algorithm is stopped after 200 iterations. Additionally, we use the signal-to-noise ratio , where is the original signal, to quantify the quality of recovery, with a higher SNR indicating a higher-quality recovery.
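One common definition of the signal-to-noise ratio of a recovered signal $x_n$ with respect to the original signal $x$ is $\mathrm{SNR} = 20\log_{10}\left(\|x\|_2 / \|x_n - x\|_2\right)$, measured in decibels. Whether this is exactly the formula used in the experiments is an assumption. The sketch below implements it, with hypothetical names and a toy signal.

```python
import numpy as np

def snr_db(x_true, x_rec):
    """Signal-to-noise ratio in dB: 20 * log10(||x_true|| / ||x_rec - x_true||)."""
    return 20.0 * np.log10(np.linalg.norm(x_true) / np.linalg.norm(x_rec - x_true))

x_true = np.array([0.0, 2.0, 0.0, -1.0])
x_rec = 1.1 * x_true                 # recovery with a 10 percent relative error
value = snr_db(x_true, x_rec)        # a relative error of 0.1 corresponds to about 20 dB
print(value)
```

Each factor of ten in the relative recovery error changes the SNR by 20 dB, so higher values indicate a more accurate recovery.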

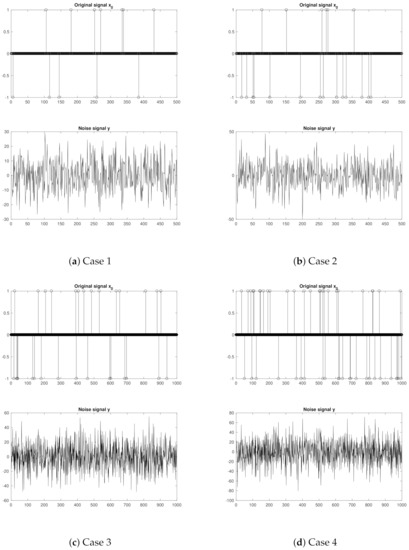

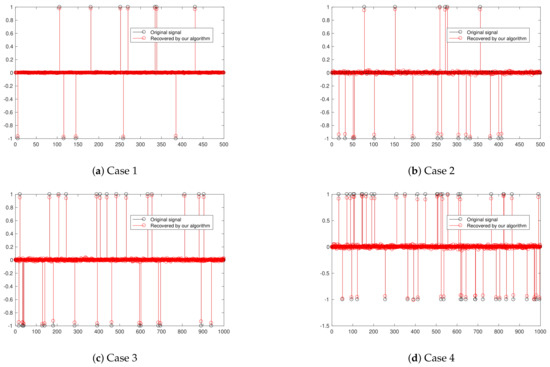

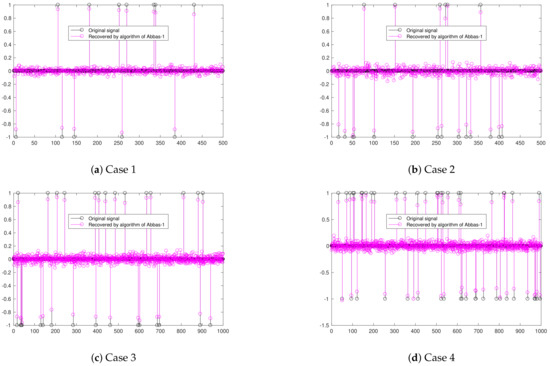

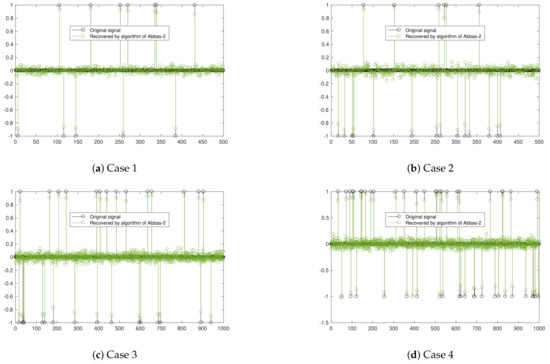

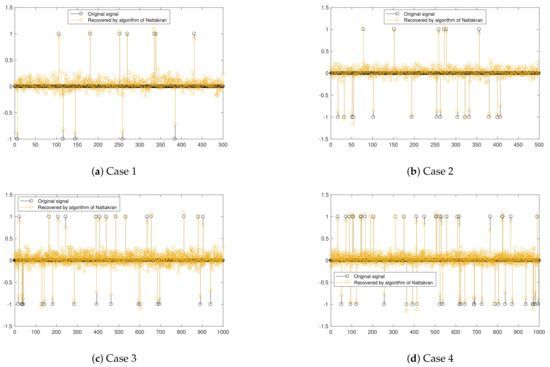

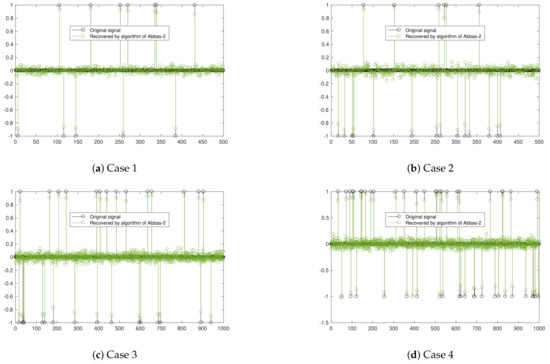

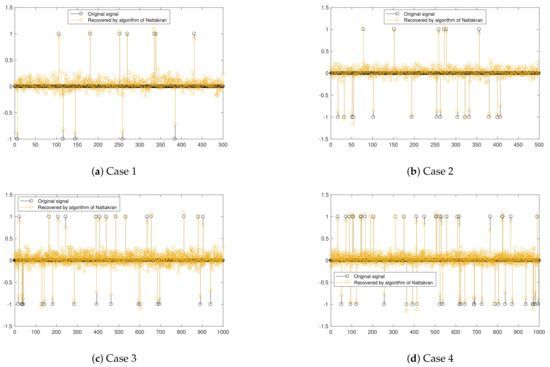

Figure 8 illustrates the original and noise signals in various dimensions N and sparsities k. Figure 9, Figure 10, Figure 11 and Figure 12 illustrate the recovery performance of all algorithms in a variety of scenarios. Table 2 shows the SNR comparison results.

Figure 8.

Original and noise signals in various dimensions N and sparsities k in Example 2.

Figure 9.

Recovery result by using Algorithm 2 in Example 2.

Figure 10.

Recovery result by using Abbas-1 (17) in Example 2.

Figure 11.

Recovery result by using Abbas-2 (18) in Example 2.

Figure 12.

Recovery result by using Nattakarn (19) in Example 2.

Remark 2.

- 1.

- As demonstrated in Example 2, our proposed algorithm is capable of solving the LASSO problem (44).

- 2.

5. Conclusions

In this work, we studied the split minimization problem in the framework of Hilbert spaces. Building on the advantages and disadvantages of previous works, we constructed new algorithms for solving the split minimization problem by combining the following techniques: (1) a self-adaptive step size, which avoids computing the operator norm of a bounded linear operator, a quantity that is difficult, if not impossible, to calculate or even estimate; and (2) inertia and conjugate gradient directions, which speed up convergence. In the numerical examples, we showed that the extrapolation factor in the inertial term yields faster convergence and that the step size parameter also affects the rate of convergence. Additionally, we applied our proposed algorithm to the signal recovery problem and compared the quality of its recovered signals with that of three previously published algorithms; in these experiments, our proposed algorithm outperformed the other algorithms.

Author Contributions

N.K. and K.S. contributed equally in writing this article. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by King Mongkut's University of Technology North Bangkok, Contract No. KMUTNB-PHD-62-03.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the Department of Mathematics, Faculty of Applied Science, King Mongkut's University of Technology North Bangkok.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Shi, Z.; Zhou, C.; Gu, Y.; Goodman, N.A.; Qu, F. Source estimation using coprime array: A sparse reconstruction perspective. IEEE Sens. J. 2016, 17, 755–765.

- Zhou, C.; Gu, Y.; Zhang, Y.D.; Shi, Z.; Jin, T.; Wu, X. Compressive sensing-based coprime array direction-of-arrival estimation. IET Commun. 2017, 11, 1719–1724.

- Zheng, H.; Shi, Z.; Zhou, C.; Haardt, M.; Chen, J. Coupled coarray tensor CPD for DOA estimation with coprime L-shaped array. IEEE Signal Process. Lett. 2021, 28, 1545–1549.

- Byrne, C. Iterative oblique projection onto convex sets and the split feasibility problem. Inverse Probl. 2002, 18, 441.

- Ansari, Q.H.; Rehan, A. Split feasibility and fixed point problems. In Nonlinear Analysis; Springer: Heidelberg, Germany, 2014; pp. 281–322.

- Boikanyo, O.A. A strongly convergent algorithm for the split common fixed point problem. Appl. Math. Comput. 2015, 265, 844–853.

- Ceng, L.C.; Ansari, Q.; Yao, J.C. Relaxed extragradient methods for finding minimum-norm solutions of the split feasibility problem. Nonlinear Anal. Theory Methods Appl. 2012, 75, 2116–2125.

- Moudafi, A.; Thakur, B. Solving proximal split feasibility problems without prior knowledge of operator norms. Optim. Lett. 2014, 8, 2099–2110.

- Shehu, Y.; Cai, G.; Iyiola, O.S. Iterative approximation of solutions for proximal split feasibility problems. Fixed Point Theory Appl. 2015, 2015, 1–18.

- Shehu, Y.; Iyiola, O.S.; Enyi, C.D. An iterative algorithm for solving split feasibility problems and fixed point problems in Banach spaces. Numer. Algorithms 2016, 72, 835–864.

- Shehu, Y.; Ogbuisi, F.; Iyiola, O. Convergence analysis of an iterative algorithm for fixed point problems and split feasibility problems in certain Banach spaces. Optimization 2016, 65, 299–323.

- Xu, H.K. Iterative methods for the split feasibility problem in infinite-dimensional Hilbert spaces. Inverse Probl. 2010, 26, 105018.

- Yang, Q. On variable-step relaxed projection algorithm for variational inequalities. J. Math. Anal. Appl. 2005, 302, 166–179.

- Gibali, A.; Mai, D.T. A new relaxed CQ algorithm for solving split feasibility problems in Hilbert spaces and its applications. J. Ind. Manag. Optim. 2019, 15, 963.

- Moudafi, A.; Gibali, A. l1-l2 regularization of split feasibility problems. Numer. Algorithms 2018, 78, 739–757.

- Shehu, Y.; Iyiola, O.S. Convergence analysis for the proximal split feasibility problem using an inertial extrapolation term method. J. Fixed Point Theory Appl. 2017, 19, 2483–2510.

- López, G.; Martín-Márquez, V.; Wang, F.; Xu, H.K. Solving the split feasibility problem without prior knowledge of matrix norms. Inverse Probl. 2012, 28, 085004.

- Zeidler, E. Nonlinear Functional Analysis and Its Applications: III: Variational Methods and Optimization; Springer Science & Business Media: New York, NY, USA, 2013.

- Nocedal, J.; Wright, S. Numerical Optimization; Springer Science & Business Media: New York, NY, USA, 2006.

- Sakurai, K.; Iiduka, H. Acceleration of the Halpern algorithm to search for a fixed point of a nonexpansive mapping. Fixed Point Theory Appl. 2014, 2014, 1–11.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Nesterov, Y. A method of solving a convex programming problem with convergence rate O(1/k^2). Sov. Math. Dokl. 1983, 27, 372–376.

- Dang, Y.; Sun, J.; Xu, H. Inertial accelerated algorithms for solving a split feasibility problem. J. Ind. Manag. Optim. 2017, 13, 1383–1394.

- Lorenz, D.A.; Pock, T. An inertial forward-backward algorithm for monotone inclusions. J. Math. Imaging Vis. 2015, 51, 311–325.

- Kitkuan, D.; Kumam, P.; Martínez-Moreno, J.; Sitthithakerngkiet, K. Inertial viscosity forward–backward splitting algorithm for monotone inclusions and its application to image restoration problems. Int. J. Comput. Math. 2020, 97, 482–497.

- Ul Rahman, J.; Chen, Q.; Yang, Z. Additive Parameter for Deep Face Recognition. Commun. Math. Stat. 2020, 8, 203–217.

- Jyothi, R.; Babu, P. TELET: A Monotonic Algorithm to Design Large Dimensional Equiangular Tight Frames for Applications in Compressed Sensing. arXiv 2021, arXiv:2110.12182.

- Rockafellar, R.T.; Wets, R.J.B. Variational Analysis; Springer Science & Business Media: New York, NY, USA, 2009; Volume 317.

- Aubin, J.P. Optima and Equilibria: An Introduction to Nonlinear Analysis; Springer Science & Business Media: New York, NY, USA, 2013; Volume 140.

- Abbas, M.; AlShahrani, M.; Ansari, Q.H.; Iyiola, O.S.; Shehu, Y. Iterative methods for solving proximal split minimization problems. Numer. Algorithms 2018, 78, 193–215.

- Kaewyong, N.; Sitthithakerngkiet, K. A Self-Adaptive Algorithm for the Common Solution of the Split Minimization Problem and the Fixed Point Problem. Axioms 2021, 10, 109.

- Moreau, J.J. Propriétés des applications «prox». Comptes Rendus Hebdomadaires des Séances de l’Académie des Sci. 1963, 256, 1069–1071.

- Moreau, J.J. Proximité et dualité dans un espace hilbertien. Bull. de la Société Mathématique de Fr. 1965, 93, 273–299.

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Micchelli, C.A.; Shen, L.; Xu, Y. Proximity algorithms for image models: Denoising. Inverse Probl. 2011, 27, 045009.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: Berlin, Germany, 2011; Volume 408.

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

- Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).