Abstract

An efficient optimization method is needed to address complicated problems and find optimal solutions. The gazelle optimization algorithm (GOA) is a global stochastic optimizer that is straightforward to understand and has powerful search capabilities. Nevertheless, the GOA is not well suited to multimodal and hybrid functions or to data mining problems. Therefore, this paper proposes an improved GOA (IGOA) that combines the orthogonal learning (OL) method with Rosenbrock’s direct rotation strategy to enhance the GOA and maintain solution diversity. Comprehensive experiments were performed on various functions, including 23 classical benchmark functions and the IEEE CEC2017 problems. In addition, eight data clustering problems taken from the UCI repository were tested to further verify the proposed method’s performance. The IGOA was compared with several other meta-heuristic algorithms, and the Wilcoxon signed-rank test was used to analyze the experimental results more systematically. The IGOA surpassed the other comparative optimizers in terms of convergence speed and precision. The empirical results show that the proposed IGOA achieved better outcomes than the basic GOA and other state-of-the-art methods and performed better in terms of solution quality.

Keywords:

orthogonal learning (OL); Rosenbrock’s direct rotational (RDR); gazelle optimization algorithm (GOA); CEC2017; data clustering; optimization problems

MSC: 68W50

1. Introduction

In recent years, numerous disciplines, including data science, systems engineering, mathematical optimization, and their applications, have focused on real-world optimization challenges [1,2]. Traditional and meta-heuristic methods are the two main approaches for handling these problems. Newton’s method and gradient descent are two simple examples of the first category [3]. However, these procedures can be time-consuming, and they produce only one solution per run. Their effectiveness depends on the problem being addressed, including its constraints, objective functions, search methods, and variables [4,5].

Numerous optimization techniques have been developed, and optimization problems are frequently encountered in engineering and scientific disciplines [6]. Two fundamental problems with current optimization paradigms are search space generation and stagnation in local optima [7]. Consequently, stochastic optimization techniques have received much attention in recent years. Because stochastic optimization methods treat the optimization problem as a “black box”, only modifying the inputs and monitoring the outputs of a given system, they do not need to derive the problem’s computational formula [8]. Additionally, because they solve optimization problems stochastically, these techniques have an inherent advantage over traditional optimization algorithms in avoiding local optima [9]. Stochastic optimization algorithms can be categorized into population-based and individual-based techniques, depending on how many solutions are produced at each iteration. Population-based methods generate many random solutions and improve them throughout the optimization procedure [10,11], whereas individual-based methods produce only one candidate solution at a time.

Nature-inspired stochastic techniques have received much interest recently [12,13,14]. Such methods frequently imitate the social or individual behavior of a population of animals or other natural phenomena. They begin the optimization process by generating several random solutions, which they then improve as candidate answers to a specific problem [15]. Stochastic optimization algorithms are used in various sectors because they often outperform mathematical optimization methods on such problems. Given the enormous number of methods already proposed in the optimization field, it is important to understand why different optimization techniques are still needed. The no free lunch (NFL) theorem justifies this [16]: no single method can efficiently solve every optimization problem. In other words, an algorithm’s success on a specific set of problems does not guarantee that it will solve optimization problems of all types and natures. This theorem motivates researchers to propose new optimization strategies or to enhance existing algorithms to solve various problems.

As mentioned above, hard optimization problems require powerful search methods to find optimal solutions. Basic meta-heuristic methods face several problems during the search process, such as premature convergence, an imbalance between the search methods, and slow convergence. The gazelle optimization algorithm (GOA) is a global stochastic optimizer that is straightforward and has a powerful search capability. Nevertheless, the GOA requires deeper investigation to improve its search abilities and to address multimodal and hybrid functions and data mining problems efficiently. Therefore, this paper proposes a new hybrid method, called IGOA, that uses the orthogonal learning (OL) method with Rosenbrock’s direct rotation strategy. This modification aims to improve the basic GOA’s performance and maintain solution diversity. We performed comprehensive experiments on various functions, including 23 classical benchmark functions and the IEEE CEC2017 problems. Moreover, eight data clustering problems taken from the UCI repository were tested to further verify the proposed method’s performance. The IGOA was compared with several other meta-heuristic algorithms, and the Wilcoxon signed-rank test was applied to analyze the experimental results more systematically. The IGOA surpassed the other comparative optimizers in terms of convergence speed and precision. The empirical results show that the proposed IGOA achieved better results than the basic GOA and other state-of-the-art methods, with higher solution quality.

The remainder of this paper is structured as follows. Section 2 presents the related works. Section 3 presents the main procedure of the original GOA. Section 4 presents the proposed IGOA method. Section 5 describes the experiments and the results obtained by the comparative methods. The results are presented in Section 6. Finally, Section 7 presents the conclusion and potential future research directions.

2. Related Works

In this section, related search methods and the modifications used to solve various problems are reviewed.

Moth–flame optimization (MFO) is a popular and straightforward nature-inspired technique. However, MFO may converge poorly or become trapped in local optima on some complicated optimization tasks, particularly high-dimensional and multimodal problems. To address these limitations, [17] integrates MFO with Gaussian mutation (GM), Cauchy mutation (CM), Lévy mutation (LM), or a combination of GM, CM, and LM. In particular, GM is added to the basic MFO to enhance its neighborhood-informed capabilities. The most effective MFO variant was compared with 15 state-of-the-art methods and 4 well-known advanced optimization techniques on an extensive collection of 23 benchmark problems and 30 CEC2017 benchmark tasks. The extensive experiments show that these three mutation strategies can considerably improve the exploration and exploitation capabilities of the basic MFO.

In [18], two strategies, Lévy flight and chaotic local search, are simultaneously introduced into the whale optimization algorithm (WOA) to guide the swarm, to further improve the balance between the exploratory and neighborhood-informed capacities of the original method, and to enhance its ability to handle optimal control tasks. The benchmark tasks include unimodal, multimodal, and fixed-dimension multimodal problems. The theoretical and experimental findings show that the suggested method can surpass its rivals in terms of convergence speed. The proposed approach may be a powerful and valuable supplementary tool for more complicated optimization problems.

Implementing efficient software is becoming increasingly difficult because of the rapidly changing hardware environment and growing specialization in the high-performance computing (HPC) industry. Software abstraction is one approach to overcoming these difficulties. A parallel algorithm model is proposed in [19] that enables optimal mappings to various complex architectures with global control over their synchronization and parallelization. The model separates an algorithm’s structure from its functional execution and stores the structure in an abstract pattern tree (APT), which uses a hierarchical decomposition of parallel process models as building blocks for computational frameworks. Based on the APT, a data-centric flow graph is created that serves as an intermediate representation for complex and automatic structural transformations. Three example algorithms illustrate the usability of this paradigm, achieving runtime speedups between 1.83 and 2.45 on a typical mixed CPU/GPU system.

The salp swarm algorithm (SSA), one of the recently developed algorithms, is based on simulating the behavior of salps. However, like most meta-heuristic methods, it suffers from stagnation in local optima and slow convergence. Chaos theory has recently been used successfully to address these issues. A new hybrid approach based on SSA and chaos theory was proposed in [20]. The proposed chaotic salp swarm algorithm (CSSA) was tested on 14 unimodal and multimodal benchmark optimization tasks and 20 benchmark datasets. Ten different chaotic maps were used to improve the convergence rate and the resulting precision. According to the simulation results, CSSA is a promising algorithm. The results also demonstrate CSSA’s ability to identify an optimal feature subset that maximizes classification performance while using as few features as possible. Additionally, the results show that the logistic chaotic map is the best of the ten maps used and can significantly improve the effectiveness of the original SSA.

The sine–cosine algorithm (SCA), a recently developed method for global optimization, is based on the properties of the sine and cosine trigonometric functions. In [21], the SCA is modified to improve the exploitation of solutions while reducing the diversity overflow in the traditional SCA search equations; the resulting algorithm is called ISCA. Its key feature is the fusion of crossover with the individual best states of the solutions, combining self-learning with global search. These abilities of ISCA were assessed on a classical set of common benchmark problems, the IEEE CEC2014 benchmark suite, and the more recent IEEE CEC2017 benchmark suite, using several performance criteria to verify the method’s robustness and effectiveness. ISCA was also used to solve five well-known engineering optimization problems and, finally, was applied to multilevel thresholding for image segmentation.

The WOA, a novel and competitive population-based optimization technique, outperforms some earlier biologically inspired algorithms in terms of ease of use and effectiveness [22]. However, on large-scale global optimization (LSGO) problems, WOA tends to become trapped in local optima and lose accuracy. To solve LSGO problems, a modified whale optimization algorithm (MWOA) was proposed. A nonlinear dynamic strategy based on a differential operator updates the control parameters to balance the exploration and exploitation capacities. A Lévy flight technique is employed to force the algorithm out of local optima. Additionally, a quadratic extrapolation approach is applied to the population’s leader, which increases the precision of the solution and the effectiveness of local exploitation. MWOA was evaluated on 25 well-known benchmark problems with dimensions ranging from 100 to 1000. The experimental results show that MWOA performs better on LSGO problems than other state-of-the-art optimization methods in terms of solution accuracy, convergence speed, and reliability.

3. Gazelle Optimization Algorithm: Procedure and Presentation

This section introduces the gazelle optimization algorithm (GOA) and formulates the suggested procedures for optimization.

Gazelles belong to the genus Gazella. They inhabit the drylands of much of Asia, including China and the Arabian Peninsula, as well as part of the Sahara Desert in northern Africa. They may also be found in eastern Africa, from Tanzania to the Horn of Africa, and in the sub-Saharan Sahel. Gazelles are frequently hunted by most predators. There are about 19 species of gazelles, ranging in size from the small Thomson’s and Speke’s gazelles to the large dama gazelle. Gazelles have keen hearing, sight, and smell, and they move quickly. These evolutionary traits enable them to escape predators, compensating for their inherent weaknesses. Further details on gazelles and their distinctive characteristics are given in [23].

3.1. Initialization

The GOA is a population-based optimization technique that uses gazelles (X) with randomly initialized search parameters. As given in Equation (1), the search agents are represented by an n × d matrix of candidate solutions. The GOA uses the problem’s upper bound (UB) and lower bound (LB) to stochastically determine the possible values of the population matrix.

where X is the matrix of solution locations, each location $x_{i,j}$ is generated stochastically by Equation (2) as the jth randomly generated coordinate of the ith solution, n denotes the number of gazelles, and d is the dimension of the search space.

In Equation (2), rand is a random number, and $UB_j$ and $LB_j$ are the upper and lower bounds of the search space, respectively. The best solution obtained so far is designated as the top gazelle and used to construct an n × d elite matrix, as given in Equation (3). This matrix is used to search for the gazelles’ positions in the next iteration.

where $x'_{i,j}$ denotes the locations of the top gazelle. The GOA regards both the predator and the gazelles as search agents: because the gazelles are already running together toward a refuge by the time a stalking predator is observed, the predator would have already searched the area when the gazelles flee. The elite matrix is updated after each iteration whenever a better gazelle replaces the top gazelle.
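A minimal sketch of the initialization and elite-matrix steps is given below. It assumes the common form $x_{i,j} = \text{rand} \times (UB_j - LB_j) + LB_j$ with rand drawn uniformly from [0, 1), and a minimization objective; the function names and the sphere objective are illustrative and are not taken from the GOA source.

```python
import numpy as np

def initialize_population(n, d, lb, ub, rng):
    """Return an n-by-d matrix X with each x_ij drawn uniformly between LB_j and UB_j."""
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (d,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (d,))
    return rng.random((n, d)) * (ub - lb) + lb

def build_elite(X, fitness):
    """Replicate the top gazelle (lowest fitness, minimization assumed) n times."""
    best = X[np.argmin(fitness)]
    return np.tile(best, (X.shape[0], 1))

def sphere(x):
    """Illustrative objective: sum of squares."""
    return float(np.sum(x ** 2))

rng = np.random.default_rng(0)
X = initialize_population(n=30, d=10, lb=-100.0, ub=100.0, rng=rng)
fitness = np.array([sphere(x) for x in X])
elite = build_elite(X, fitness)
print(X.shape, elite.shape)  # (30, 10) (30, 10)
```

Replicating the best row keeps the elite matrix the same shape as X, so later updates can be written element-wise.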

3.2. The Brownian Motion

Brownian motion is a seemingly random movement whose displacement follows a standard normal (Gaussian) probability distribution with mean μ = 0 and variance σ² = 1. Equation (4) defines the standard Brownian motion at location M [23].
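For reference, the standard normal density with μ = 0 and σ² = 1, which is the usual form of the Brownian step distribution, and a way to draw Brownian step vectors can be sketched as follows; the helper names are hypothetical.

```python
import math
import numpy as np

def brownian_pdf(x):
    """Standard normal density f_B(x) = exp(-x^2 / 2) / sqrt(2*pi), i.e., mu = 0, sigma^2 = 1."""
    return math.exp(-x * x / 2.0) / math.sqrt(2.0 * math.pi)

def brownian_steps(shape, rng):
    """Draw an array of Brownian (standard normal) step sizes."""
    return rng.standard_normal(shape)

rng = np.random.default_rng(1)
steps = brownian_steps((30, 10), rng)     # one step per gazelle and dimension
print(round(brownian_pdf(0.0), 4))        # 0.3989
```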

3.3. The Lévy Flight

The Lévy flight is a random walk whose step lengths follow the Lévy distribution given in Equation (5) [24].

where $x_j$ denotes the flight distance and $\alpha \in (1, 2)$ is the power-law exponent. Equation (6) gives the Lévy distribution in its standard form [25].

This work uses an algorithm that generates stable Lévy motion for α in the range 0.3–1.99, as given in Equation (7), where α is the distribution index that controls the motion process and a scaling constant sets the step unit.

where x and y are defined as follows:

where $\sigma_y = 1$ and $\alpha = 1.5$.
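Stable Lévy steps of this kind are commonly generated with Mantegna’s algorithm. The sketch below assumes that form: step = scale · x / |y|^{1/α}, with x ~ N(0, σ_x²), y ~ N(0, σ_y²), σ_y = 1, α = 1.5, and σ_x given by Mantegna’s formula. The default scale of 0.05 is an assumed illustrative value for the scale unit, not one stated in this text.

```python
import math
import numpy as np

def mantegna_sigma_x(alpha):
    """Standard deviation of x in Mantegna's algorithm (intended for 0.3 <= alpha <= 1.99)."""
    num = math.gamma(1.0 + alpha) * math.sin(math.pi * alpha / 2.0)
    den = math.gamma((1.0 + alpha) / 2.0) * alpha * 2.0 ** ((alpha - 1.0) / 2.0)
    return (num / den) ** (1.0 / alpha)

def levy_steps(shape, rng, alpha=1.5, scale=0.05):
    """Draw Levy-stable step sizes scale * x / |y|^(1/alpha), with sigma_y = 1."""
    if not 0.3 <= alpha <= 1.99:
        raise ValueError("Mantegna's algorithm is intended for 0.3 <= alpha <= 1.99")
    x = rng.normal(0.0, mantegna_sigma_x(alpha), shape)
    y = rng.normal(0.0, 1.0, shape)
    return scale * x / np.abs(y) ** (1.0 / alpha)

rng = np.random.default_rng(2)
R_L = levy_steps((30, 10), rng)   # mostly small steps with occasional large jumps
```

Dividing by |y|^{1/α} is what produces the heavy tail: small |y| values occasionally yield very long jumps.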

3.4. Modeling the Basic GOA

The GOA mimics the way gazelles survive. The optimization process consists of grazing when no predator is present and fleeing to a refuge when one is spotted. Accordingly, the GOA optimization procedure has two phases [23].

3.4.1. Exploitation

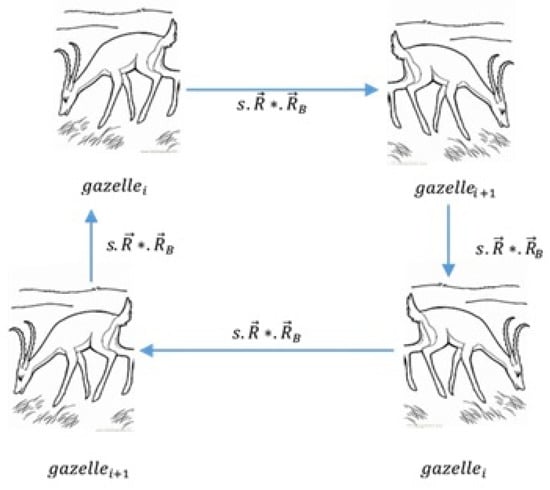

In this phase, it is assumed that no predator is present or that the predator is only stalking the gazelles while they graze calmly. Neighborhood regions of the domain are efficiently covered using Brownian motion, which is characterized by uniform and controlled steps. As shown in Figure 1, the gazelles are assumed to move in Brownian motion while grazing.

Figure 1.

The gazelle’s grazing pattern denotes exploitation.

Equation (11) illustrates the mathematical formula of this phenomenon.

where $gazelle_{i+1}$ is the solution for the next iteration, $gazelle_i$ is the solution for the current iteration, s is the grazing speed of the gazelles, $R^*$ is a vector of uniformly distributed random numbers in [0, 1], and $R_B$ is a vector of random numbers drawn from the Brownian (standard normal) distribution.
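A sketch of the grazing (exploitation) step follows. Equation (11) is not reproduced in this text, so the code assumes the element-wise form X_new = X + s · R* ⊗ (R_B ⊗ (Elite − R_B ⊗ X)), reconstructed from the variable definitions above; treat it as a hypothesis to check against [23]. Boundary handling is omitted, and s = 0.5 is an arbitrary illustrative value.

```python
import numpy as np

def graze(X, elite, s, rng):
    """Exploitation (grazing) step driven by Brownian motion.

    Assumed form (element-wise): X_new = X + s * R_star * (R_B * (elite - R_B * X)).
    """
    r_star = rng.random(X.shape)          # uniform random numbers in [0, 1)
    r_b = rng.standard_normal(X.shape)    # Brownian (standard normal) steps
    return X + s * r_star * (r_b * (elite - r_b * X))

rng = np.random.default_rng(3)
X = rng.random((30, 10)) * 200.0 - 100.0
elite = np.tile(X[0], (30, 1))             # pretend row 0 is the top gazelle
X_next = graze(X, elite, s=0.5, rng=rng)   # s = 0.5 is an arbitrary illustrative value
```

Because both random factors are drawn per element, each gazelle takes small, independent steps around the elite, which is the local coverage described for this phase.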

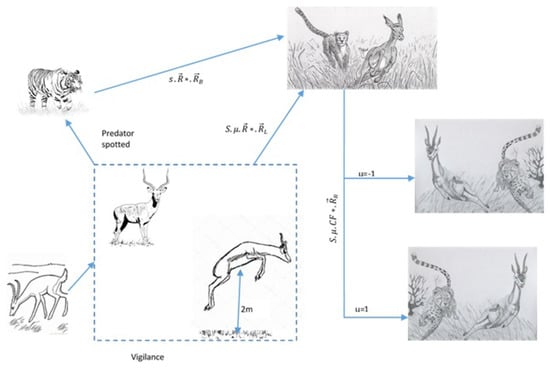

3.4.2. Exploration

The exploration phase begins when a predator is spotted. Gazelles respond to danger by flicking their tails, stamping their feet, or stotting up to 2 m into the air with all four feet; this 2 m height is mimicked by scaling it to a value between 0 and 1. This phase of the algorithm uses the Lévy flight, which consists of a series of small steps and occasional large jumps, a strategy reported in the optimization literature to enhance search ability. Figure 2 illustrates the exploration phase. Once the predator is spotted, the gazelle flees and the predator pursues. Both runs exhibit sharp turns in direction, represented by a direction-switching parameter. This study assumes that the direction changes every iteration: when the iteration number is odd, the gazelle moves in one direction, and when it is even, it moves in the opposite direction. Because the gazelle reacts first, it is assumed to move using the Lévy flight. Because the predator responds later, it is assumed to start with Brownian motion and then switch to the Lévy flight.

Figure 2.

Gazelles fleeing the predator is a sign of exploration.

Equation (12) illustrates the computational formula of the gazelle’s behavior after spotting the predator.

where S is the top speed of the gazelle and $R_L$ is a vector of random numbers drawn from the Lévy distribution. Equation (13) gives the computational formula for the predator’s pursuit of the gazelle, and Equation (14) defines an auxiliary term used in it.

Research on Mongolian gazelles reports that, although the animals are not endangered, their annual survival rate is 0.66, which implies that predators are successful in only 0.34 of cases. The predator success rate (PSRs) affects the gazelle’s ability to escape, and modeling it helps the method avoid becoming stuck in local minima. Equation (15) models the impact of PSRs.
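The exploration moves and the PSRs step can be sketched together. The equations for these moves are not reproduced in this text, so every form below is an assumption modeled on the descriptions above: the fleeing gazelle moves by X + S·μ·R*⊗R_L⊗(Elite − R_L⊗X); the predator moves by X + S·μ·CF·R_B⊗(Elite − R_L⊗X) with CF = (1 − t/T)^{2t/T}; μ flips sign between odd and even iterations; and, with probability PSRs = 0.34, a gazelle is displaced by CF·(LB + R*⊗(UB − LB))⊗U (U a binary mask), otherwise by [PSRs(1 − r) + r](X_{r1} − X_{r2}). Splitting the population into a fleeing half and a pursuing half, S = 0.5, and the Cauchy stand-in for Lévy steps are also illustrative assumptions.

```python
import numpy as np

def control_factor(t, T):
    """Assumed CF = (1 - t/T) ** (2 t / T): equals 1 at t = 0 and shrinks to 0 at t = T."""
    return (1.0 - t / T) ** (2.0 * t / T)

def explore(X, elite, S, t, T, levy, rng):
    """Assumed exploration step: first half flees (gazelle move), second half pursues (predator move)."""
    half = X.shape[0] // 2
    mu = 1.0 if t % 2 == 0 else -1.0              # direction flips every iteration
    X_new = X.copy()
    g, e = X[:half], elite[:half]
    r_star, r_l = rng.random(g.shape), levy(g.shape, rng)
    X_new[:half] = g + S * mu * r_star * r_l * (e - r_l * g)
    p, e = X[half:], elite[half:]
    r_b, r_l = rng.standard_normal(p.shape), levy(p.shape, rng)
    X_new[half:] = p + S * mu * control_factor(t, T) * r_b * (e - r_l * p)
    return X_new

def psr_effect(X, lb, ub, cf, rng, psrs=0.34):
    """Assumed PSRs step, applied row by row."""
    n, d = X.shape
    X_new = X.copy()
    for i in range(n):
        r = rng.random()
        if r <= psrs:
            u = (rng.random(d) >= psrs).astype(float)      # binary mask U
            X_new[i] = X[i] + cf * (lb + rng.random(d) * (ub - lb)) * u
        else:
            r1, r2 = rng.choice(n, size=2, replace=False)   # two random gazelles
            X_new[i] = X[i] + (psrs * (1.0 - r) + r) * (X[r1] - X[r2])
    return X_new

def cauchy_levy(shape, rng):
    """Hypothetical stand-in for Levy steps (Cauchy is the alpha = 1 stable law)."""
    return 0.05 * rng.standard_cauchy(shape)

rng = np.random.default_rng(4)
X = rng.random((30, 10)) * 200.0 - 100.0
elite = np.tile(X[0], (30, 1))
X = explore(X, elite, S=0.5, t=1, T=1000, levy=cauchy_levy, rng=rng)
X = psr_effect(X, -100.0, 100.0, control_factor(1, 1000), rng)
```

CF shrinks the predator’s steps as the run progresses, while the PSRs step occasionally relocates gazelles, which is how the method is meant to escape local minima.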

The main procedure of the basic GOA is presented in Figure 3.

Figure 3.

Procedure of the basic GOA.

4. The Proposed Method

In this section, the proposed IGOA is presented according to its main search procedures.

4.1. Orthogonal Learning (OL)

Orthogonal design is based on fractional factorial experiments. Suppose that the experimental outcome of the objective problem depends on K factors, each of which can take Q levels. The standard approach to finding the best level for each factor is to cycle through all level combinations, of which there are $Q^K$. This strategy works well when only a few factors are involved, but when many factors are involved, it becomes very challenging to test every combination. Orthogonal design differs from this exhaustive traversal: using an orthogonal array, it combines the factors and levels so that only a small, representative subset of combinations (a fractional experiment) is tested, allowing a good level combination to be found quickly. The array is constructed by first building the basic columns and then the nonbasic columns [26,27]. The following equation describes how to create an orthogonal array $L_M(Q^K)$.
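The basic-then-nonbasic construction can be sketched with a widely used procedure for $L_M(Q^K)$ arrays, where $M = Q^J$ rows and $K = (Q^J - 1)/(Q - 1)$ columns for an integer J (a symbol introduced here). The sketch assumes Q is prime and uses 0-based levels; it is one standard construction and not necessarily the exact one used in [26,27].

```python
def orthogonal_array(Q, J):
    """Build L_M(Q^K) with M = Q**J rows and K = (Q**J - 1) // (Q - 1) columns.

    Q is assumed prime; levels are 0..Q-1. Basic columns are filled first,
    then each nonbasic column combines an earlier column with a basic one.
    """
    M = Q ** J
    K = (Q ** J - 1) // (Q - 1)
    A = [[0] * K for _ in range(M)]
    # Basic columns (0-based index j = (Q^(k-1) - 1) / (Q - 1)).
    for k in range(1, J + 1):
        j = (Q ** (k - 1) - 1) // (Q - 1)
        for i in range(M):
            A[i][j] = (i // Q ** (J - k)) % Q
    # Nonbasic columns: (a_s * t + a_j) mod Q for earlier columns s and t = 1..Q-1.
    for k in range(2, J + 1):
        j = (Q ** (k - 1) - 1) // (Q - 1)
        for s in range(j):
            for t in range(1, Q):
                col = j + s * (Q - 1) + t
                for i in range(M):
                    A[i][col] = (A[i][s] * t + A[i][j]) % Q
    return A

print(orthogonal_array(2, 2))  # [[0, 0, 0], [0, 1, 1], [1, 0, 1], [1, 1, 0]]
```

For example, the L4(2^3) array above covers three two-level factors with 4 trials instead of the 2^3 = 8 of a full factorial, and every pair of columns contains each level pair equally often.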

4.2. Rosenbrock’s Direct Rotational (RDR)

Rosenbrock’s direct rotation (RDR) local search uses the coordinate axes as the initial search directions. It searches along these directions, moving to a new point whenever a step is successful, until at least one successful step and one failed step have been made in each search direction [28]. The current stage then ends, and the orthonormal basis is revised to reflect the cumulative effect of all successful steps in all dimensions [29]. Equation (18) gives the orthonormal basis update.

Equation (19) defines the new set of directions, in which each direction is weighted by the accumulated length of the successful steps taken along it. The most beneficial search direction at this point is the total displacement achieved during the stage; hence, it must be included in the revised set of search directions.

The search directions are then orthogonalized using the Gram–Schmidt process, as given in Equation (20).

Equation (21) gives the modified search directions after normalization.

After the directions are updated, the technique searches again along the new orthonormal directions, and this process continues until the termination condition is met.
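The stage-end rotation in Equations (18)–(21) can be sketched as follows. The sketch assumes the classical Rosenbrock formulation, in which the ith new direction $A_i$ is the sum of the successful steps along directions i through n (so $A_1$ is the stage’s total displacement), followed by Gram–Schmidt orthonormalization. The fallback to the old directions when some $A_i$ are linearly dependent is an added safeguard, not part of the text.

```python
import numpy as np

def rosenbrock_rotate(D, lam, eps=1e-12):
    """Rotate orthonormal search directions (rows of D) at the end of a stage.

    lam[i] is the accumulated length of successful steps along D[i].
    A[i] = sum_{j >= i} lam[j] * D[j]; A[0] is the total displacement.
    """
    n = D.shape[0]
    steps = lam[:, None] * D                      # lam_j * d_j for each row
    A = np.cumsum(steps[::-1], axis=0)[::-1]      # suffix sums: A[i] = sum_{j >= i}
    new_dirs = []
    for v in list(A) + list(D):                   # A first, old directions as fallback
        w = v - sum((v @ q) * q for q in new_dirs)  # Gram-Schmidt projection removal
        norm = np.linalg.norm(w)
        if norm > eps:                            # skip (near-)dependent vectors
            new_dirs.append(w / norm)
        if len(new_dirs) == n:
            break
    return np.array(new_dirs)

new_D = rosenbrock_rotate(np.eye(3), np.array([2.0, 0.5, -1.0]))
```

The first returned direction points along the stage’s overall progress, so the next stage starts by searching where the previous one made the most headway.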

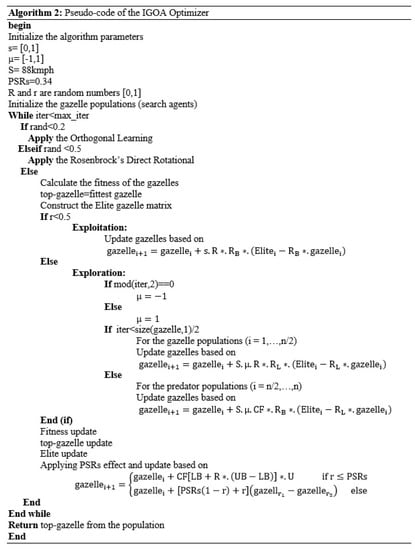

4.3. Procedure of the Proposed IGOA

This section presents the main process and structure of the proposed IGOA. The method combines three search methods (GOA, OL, and RDR) in three phases governed by a transition mechanism based on a transition value (IF). If IF < 0.2, the positions of the solutions are updated using the OL search operations; otherwise, if IF < 0.5, the RDR search operations are carried out; otherwise, the solutions are updated using the GOA’s exploration and exploitation operators. At the end of each iteration, the termination condition is checked to decide whether the search stops or continues. The main procedure of the proposed IGOA is presented in Figure 4.

Figure 4.

Procedure of the proposed IGOA.
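The transition rule can be expressed as a small selector. The text does not define how IF is computed, so the sketch takes it as an input. The usage lines assume, purely for illustration, that IF is the fraction of iterations elapsed (t/T); under that reading, OL and RDR together cover the first half of the run.

```python
def select_operator(if_value):
    """Choose the IGOA search operator from the transition value IF (thresholds from the text)."""
    if if_value < 0.2:
        return "OL"      # orthogonal learning
    if if_value < 0.5:
        return "RDR"     # Rosenbrock's direct rotation
    return "GOA"         # GOA exploration/exploitation

# Hypothetical choice: IF = t / T (fraction of iterations elapsed).
T = 10
schedule = [select_operator(t / T) for t in range(T)]
print(schedule)  # ['OL', 'OL', 'RDR', 'RDR', 'RDR', 'GOA', 'GOA', 'GOA', 'GOA', 'GOA']
```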

By introducing a new arrangement (the transition mechanism) that integrates three approaches, the proposed method addresses the shortcomings of the individual methods (i.e., GOA, OL, and RDR). Conventional approaches such as the GOA suffer from premature convergence, stagnation in the local search region, and an imbalance between the search stages (exploration and exploitation). One of the critical issues of the GOA is that the diversity of candidate solutions can be low. To meet these issues and solve clustering problems more effectively, the proposed technique arranges the existing methods appropriately.

In summary, these shortcomings are addressed as follows. First, the imbalance between the search processes is addressed by performing the exploration searches of OL and RDR for half of the iterations and the exploitation search of GOA for the other half. By selecting one of the three approaches in each iteration, the proposed arrangement can balance the search processes and increase the diversity of the candidate solutions. Second, adjusting the search procedure according to the proposed transition mechanism regulates the convergence speed, which helps the optimization process escape the local search region and continue searching for the optimal solution. Finally, using different updating mechanisms preserves the diversity of the solutions.

4.4. Computational Complexity of the Proposed IGOA

The total computational complexity of the proposed IGOA depends on the initialization of the candidate solutions, the evaluation of their objective function, and the updating of the candidate solutions.

Assume that N is the number of solutions, so the time complexity of initializing the solutions is O(N). The time complexity of updating the solutions is O(T × N) + O(T × N × D), where T is the total number of iterations and D is the dimension of the problem. Therefore, the time complexity of the presented IGOA is given as follows.

The time complexity of the proposed method depends on three main search operators: GOA, OL, and RDR. Their time complexities are calculated as follows.
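Writing D for the problem dimension and summing only the initialization and update terms stated above (the separate costs of the OL and RDR operators are not included), the overall order reduces to the dominant update term, since T ≥ 1 and D ≥ 1:

```latex
O(\mathrm{IGOA}) = O(N) + O(T \times N) + O(T \times N \times D) = O(T \times N \times D)
```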

Therefore, the total time complexity of the IGOA is given as follows.

5. Experiments and Results

In this section, the proposed IGOA is tested on optimization problems, including data clustering problems, and the outcomes are assessed using various metrics. Additionally, the Friedman ranking test (FRT) and the Wilcoxon signed-rank test (WRT) are used to demonstrate the advantages of the proposed method over comparative approaches from the literature.

To evaluate the outcomes of the suggested strategy, a number of optimizers are employed as comparing techniques.

These methods are the salp swarm algorithm (SSA) [30], whale optimization algorithm (WOA) [31], sine–cosine algorithm (SCA) [32], dragonfly algorithm (DA) [33], grey wolf optimizer (GWO) [34], equilibrium optimizer (EO) [35], particle swarm optimizer (PSO) [36], Aquila optimizer (AO) [37], ant lion optimizer (ALO) [38], marine predators algorithm (MPA) [39], and gazelle optimization algorithm (GOA).

Results are reported over 30 independent runs of each method, with a population size of 30 and 1000 iterations. The parameter settings of the comparative methods are listed in the ‘Parameter’ column of Table 1, and Table 2 lists the specifications of the computers used.

Table 1.

Setting of the comparative methods’ parameters.

Table 2.

Details of the utilized computers.

5.1. Experiments Series 1: Classical Benchmark Problems

In this section, the performance of the proposed method is evaluated using a set of 23 classical benchmark functions.

The mathematical representations and categories of the 23 benchmark functions are shown in Table 3. The unimodal functions (F1–F7) have a single optimal solution and are used to assess the exploitation capability of the proposed method. The multimodal functions (F8–F13) have several local optima and one global optimum and are used to evaluate the algorithm’s exploration. The fixed-dimension multimodal functions (F14–F23) have a limited search space and are used to assess the balance between exploration and exploitation.
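To make the categories concrete, two functions that commonly appear in the classical 23-function suite are sketched below: the sphere function (unimodal, usually listed as F1) and Rastrigin’s function (multimodal, usually listed as F9). Their exact rows in Table 3 are assumed, not taken from this text. Both have a global minimum of 0 at the origin.

```python
import math

def sphere(x):
    """Unimodal: f(x) = sum x_i^2, single global minimum 0 at x = 0."""
    return sum(xi * xi for xi in x)

def rastrigin(x):
    """Multimodal: f(x) = sum(x_i^2 - 10 cos(2 pi x_i) + 10); many local minima, global minimum 0 at x = 0."""
    return sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) + 10.0 for xi in x)

print(sphere([0.0] * 10), rastrigin([0.0] * 10))  # 0.0 0.0
```

The cosine term places a local minimum near every integer point, which is why Rastrigin’s function tests exploration, while the sphere’s single basin mainly tests exploitation.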

Table 3.

Classical benchmark functions.

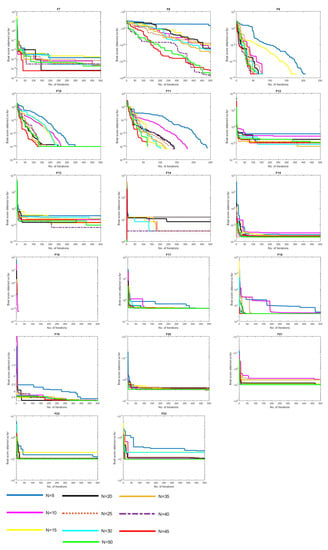

To determine the best number of candidate solutions (population size N) for the proposed method, experiments were conducted on the 23 classical benchmark functions, as shown in Figure 5. Following the literature, population sizes of 5, 10, 15, 20, 25, 30, 35, 40, 45, and 50 were compared over 500 iterations.

Figure 5.

The influence of the population size tested on the classical test functions.

The tested population sizes range from 5 to 50. For F5, F6, F7, and F9, for example, the differences between population sizes are slight, and 30 is almost the best size. A population of 30 gave the best results on average, and it is the smallest of the best-performing sizes, as seen for F17–F22. Among several comparably good values, the smallest is preferred to keep computational time low while obtaining maximum performance. The results in Figure 5 also show that IGOA retains its advantages across the tested population sizes, which means that it is robust and relatively insensitive to population size; it is particularly stable on F10, F16, F17, F18, F19, F21, F22, and F23. Although a population of 50 gives the best results on most of the benchmark functions (e.g., F1, F6, F9, F10, and F11), the differences between population sizes are small, which supports choosing 30.

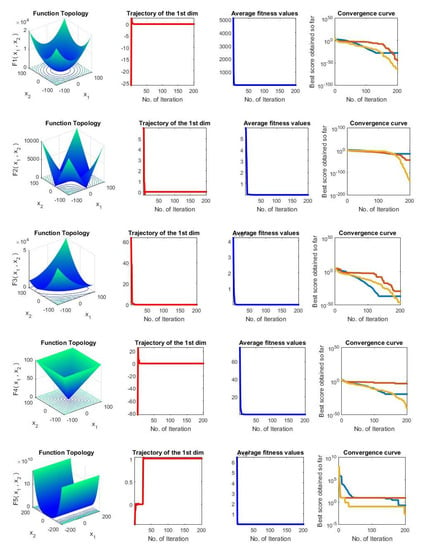

Further analyses of the proposed method’s behavior compared with the original methods are given in Figure 6. Each row of the figure contains four subfigures: the function topology, the trajectory of the first dimension, the average fitness values, and the convergence curves of the tested methods.

Figure 6.

Qualitative results of the proposed method.

The first column of Figure 6 shows the standard 2D plots of the fitness functions. The second column presents the trajectory of the first dimension recorded by IGOA during the optimization process. IGOA explores promising areas of the search space for the tested problems: some positions are spread over a broad region of the search space, while most are concentrated around the local region of each problem, reflecting its difficulty. The trajectory patterns show that the solution undergoes large, abrupt changes in the early iterations, which indicates that IGOA can eventually converge to the optimal position. The third column presents the average fitness value of the candidate solutions at each iteration; the curves decrease on all the problems, indicating that IGOA improves the search as the iterations progress. The fourth column presents the objective function value of the best solution obtained at each iteration. Overall, these results suggest that IGOA has good exploration and exploitation abilities.

Table 4 compares the results of IGOA with those of the other methods on the classical benchmark functions F1–F13 with a dimension of 10. The proposed method achieved the best results on all of these functions. According to the FRT, the proposed method ranked first, followed by AO, MPA, GOA, EO, WOA, GWO, PSO, SCA, SSA, ALO, and DA. Table 5 compares the results on the fixed-dimension functions F14–F23. The proposed method obtained the best results on almost all of these problems, except F15, where it obtained the second-best result. These results indicate that IGOA has a promising ability to solve the benchmark problems. According to the FRT, IGOA ranked first, followed by MPA, SSA, GWO, EO, AO, ALO, PSO, DA, WOA, SCA, and GOA.
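Rankings of this kind are usually based on each method’s average rank across functions, which is the statistic underlying the Friedman test. A minimal sketch of computing average ranks follows, assuming that lower mean fitness is better and that tied methods share the mean of their ranks; the method names and numbers in the usage line are made up for illustration.

```python
def friedman_average_ranks(results):
    """results: dict method -> list of mean fitness per function (lower is better).

    Returns dict method -> average rank over functions; ties share the mean rank.
    """
    methods = list(results)
    n_funcs = len(next(iter(results.values())))
    totals = {m: 0.0 for m in methods}
    for f in range(n_funcs):
        ordered = sorted(methods, key=lambda m: results[m][f])
        i = 0
        while i < len(ordered):
            j = i
            while j + 1 < len(ordered) and results[ordered[j + 1]][f] == results[ordered[i]][f]:
                j += 1
            avg_rank = (i + j) / 2.0 + 1.0        # 1-based rank shared by the tie group
            for m in ordered[i:j + 1]:
                totals[m] += avg_rank
            i = j + 1
    return {m: totals[m] / n_funcs for m in methods}

ranks = friedman_average_ranks({"A": [0.0, 1.0, 2.0], "B": [1.0, 1.0, 3.0], "C": [2.0, 0.5, 1.0]})
```

Sorting the methods by these averages (smallest first) gives an ordering like the ones reported above.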

Table 4.

The results of the proposed IGOA and other comparative methods using classical benchmark functions (F1–F13), where the dimension size is 10.

Table 5.

The results of the proposed IGOA and other comparative methods using classical benchmark functions (F14–F23) with fixed dimensions.

For further investigation, the WRT was applied (Table 6) to identify the functions on which IGOA significantly outperformed each comparative method (i.e., SSA, WOA, SCA, DA, GWO, PSO, ALO, MPA, EO, AO, and GOA). Out of the 23 functions, IGOA achieved significant improvements on 16 compared with SSA, 14 compared with WOA, 17 compared with SSA, 19 compared with SCA, 16 compared with DA, 16 compared with GWO, 15 compared with PSO, 15 compared with ALO, 14 compared with MPA, 11 compared with EO, and 17 compared with GOA.
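A self-contained sketch of the two-sided Wilcoxon signed-rank test with the large-sample normal approximation (no continuity or tie-variance correction) is given below. In practice a statistics library would normally be used, and with 30 runs per method the normal approximation is common but approximate. The run values in the usage lines are hypothetical.

```python
import math

def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (w_plus, w_minus, z, p). Zero differences are discarded.
    """
    d = [a - b for a, b in zip(x, y) if a != b]
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda k: abs(d[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:                                   # average ranks for tied |d|
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for k in order[i:j + 1]:
            ranks[k] = (i + j) / 2.0 + 1.0
        i = j + 1
    w_plus = sum(r for r, dk in zip(ranks, d) if dk > 0)
    w_minus = sum(r for r, dk in zip(ranks, d) if dk < 0)
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (min(w_plus, w_minus) - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))         # two-sided p-value
    return w_plus, w_minus, z, p

# Hypothetical per-run errors for a comparative method and for IGOA (minimization).
other_runs = [float(k) for k in range(1, 11)]
igoa_runs = [0.0] * 10
w_plus, w_minus, z, p = wilcoxon_signed_rank(other_runs, igoa_runs)
```

A p-value below the chosen significance level (commonly 0.05) would count as a significant improvement for that function.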

Table 6.

The results of the Wilcoxon rank test for the comparative methods on the 23 classical benchmark functions.

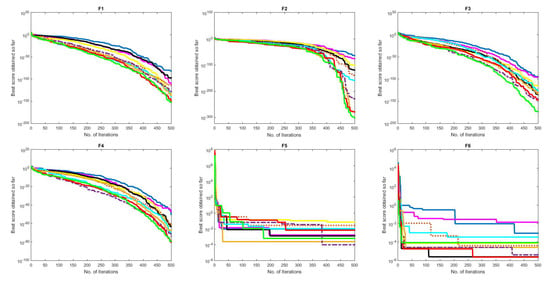

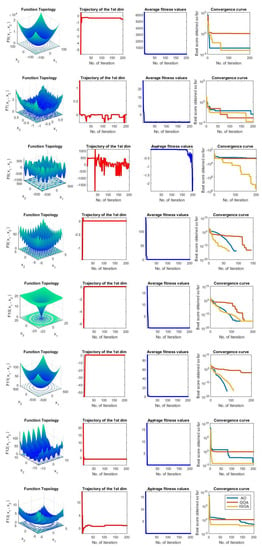

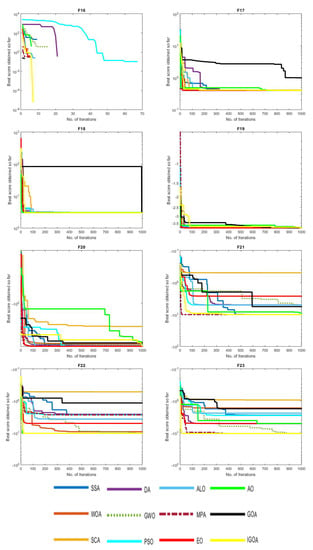

Figure 7 shows the convergence behavior of the comparative algorithms on the classical test functions F1–F23, where F1–F13 are fixed to 10 dimensions. The curves trace the progress of the optimization over the iterations. The proposed method reaches the best solutions in almost all of the test cases and shows good convergence behavior. For example, on F1, the IGOA converges along a smooth curve to the best result, faster than all of the comparative methods. On F16, the IGOA obtains the best solution among all of the compared methods, and the difference in convergence speed is most pronounced.
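A convergence curve plots the best objective value found so far against the iteration number. The sketch below shows how such a curve can be derived from a logged run. It assumes minimization and that the best fitness of each iteration is recorded. Averaging the curves of several independent runs is a common convention, but it is an assumption here, because the number of runs behind Figure 7 is not restated in this section. `convergence_curve` and `mean_curve` are hypothetical helper names.

```python
def convergence_curve(iteration_best):
    """Turn per-iteration best fitness values into a monotone best-so-far curve."""
    curve = []
    best = float("inf")
    for value in iteration_best:
        best = min(best, value)  # keep the best value seen up to this iteration
        curve.append(best)
    return curve


def mean_curve(runs):
    """Average the best-so-far curves of several runs of equal length."""
    curves = [convergence_curve(run) for run in runs]
    return [sum(step) / len(step) for step in zip(*curves)]
```

For example, `convergence_curve([5, 3, 4, 1, 2])` gives `[5, 3, 3, 1, 1]`. The curve never rises, so a steeper early drop indicates faster convergence.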

Figure 7.

Convergence behaviors of the comparative algorithms on classical test functions (F1–F23).

Table 7 shows the results of the proposed IGOA and the comparative methods on the classical benchmark functions F1–F13 with 50 dimensions. These high-dimensional problems are used to validate the performance of the IGOA against the other methods. The IGOA obtained the best results on all of the test functions except F8, which shows that it performs very well on high-dimensional problems. According to the FRT, the IGOA ranked first, followed by AO, MPA, EO, WOA, GOA, GWO, PSO, SSA, SCA, DA, and ALO. Table 8 presents the results on the same functions (F1–F13) with 100 dimensions. Here, the IGOA had the best results on all of the test functions and recorded new best results in some cases. According to the FRT, the IGOA ranked first, followed by AO, MPA, WOA, EO, GOA, GWO, PSO, ALO, SSA, and SCA.

Table 7.

The results of the proposed IGOA and other comparative methods using classical benchmark functions (F1–F13), where the dimension size is 50.

Table 8.

The results of the proposed IGOA and other comparative methods using classical benchmark functions (F1–F13), where the dimension size is 100.

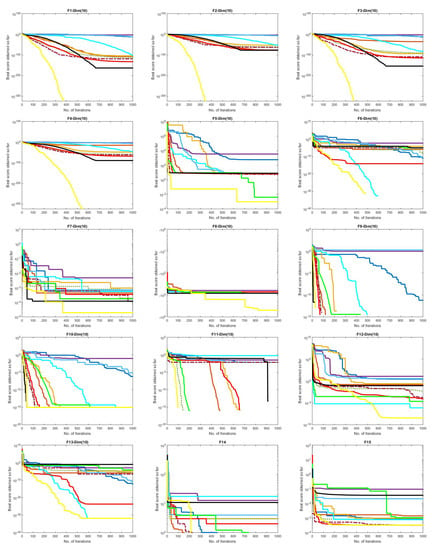

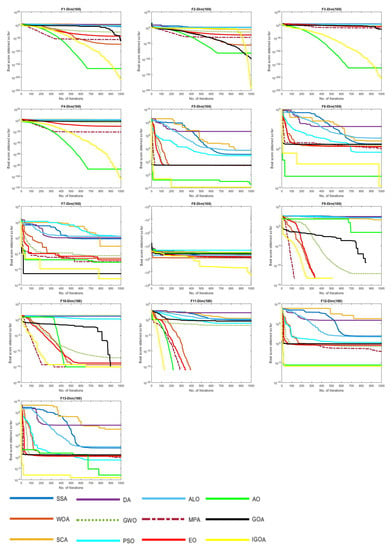

Figure 8 illustrates the convergence behavior of the comparative algorithms on the classical test functions F1–F13 with 100 dimensions. The IGOA obtains the best solutions on all of the high-dimensional test problems and shows promising convergence behavior. For example, on F3, the IGOA converges stably and smoothly throughout the optimization process and faster than all of the comparative methods.

Figure 8.

Convergence behaviors of the comparative algorithms on classical test functions (F1–F13) with higher dimensional sizes (i.e., 100).

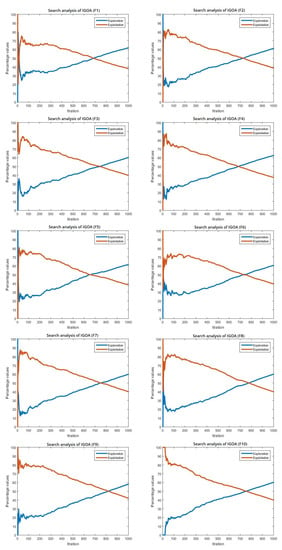

Figure 9 shows the exploration and exploitation behavior during the optimization process. It illustrates how the search effort is divided between exploration and exploitation over the iterations. A good balance between the two enhances the ability of the proposed algorithm to find new solutions in the available search space.
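The text does not state how the percentages in Figure 9 are computed. One commonly used option, which is assumed here and not taken from the paper, is a dimension-wise population diversity. For each dimension, the measure averages the absolute deviation from the median. These values are averaged over all dimensions to give Div, and then XPL% = 100·Div/Div_max and XPT% = 100·|Div − Div_max|/Div_max, where Div_max is the largest diversity observed during the run. The helper names below are hypothetical.

```python
import statistics


def population_diversity(population):
    """Dimension-wise diversity: mean absolute deviation from each dimension's median."""
    n = len(population)
    dims = len(population[0])
    total = 0.0
    for j in range(dims):
        column = [individual[j] for individual in population]
        med = statistics.median(column)
        total += sum(abs(med - v) for v in column) / n
    return total / dims


def exploration_exploitation(diversity_history):
    """Convert a per-iteration diversity trace into (XPL%, XPT%) pairs."""
    div_max = max(diversity_history)
    if div_max == 0:
        return [(0.0, 100.0) for _ in diversity_history]
    return [
        (100.0 * d / div_max, 100.0 * abs(d - div_max) / div_max)
        for d in diversity_history
    ]
```

A high XPL% means that the population is still spread out (exploration). A high XPT% means that it has contracted around promising areas (exploitation).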

Figure 9.

The exploration and exploitation of the optimization processes.

5.2. Experiments Series 2: Advanced CEC2017 Benchmark Problems

In this section, the proposed IGOA is tested further using a set of 30 advanced CEC2017 benchmark functions.

Table 9 presents the types and descriptions of the CEC2017 benchmark functions used. Functions F1–F3 are unimodal and F4–F10 are multimodal, and these are shifted and rotated functions. F11–F20 are hybrid functions, and F21–F30 are composition functions. These functions are commonly used to test the exploration and exploitation search processes and the balance between them. The main experimental settings in this section are the same as in the previous section.
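In CEC2017, each function is built from a base function g as F(x) = g(M(x - o)) + F*. Here, o is a shift vector, M is a rotation matrix, and F* is the known optimal value. The sketch below reproduces only this shift-and-rotate construction. The random shift, the random orthogonal matrix (obtained from a QR factorization), the Rastrigin base, and the bias value are hypothetical stand-ins for the official data files. The official definitions also apply function-specific scaling that is omitted here.

```python
import numpy as np


def rastrigin(z):
    """Base Rastrigin function with its minimum 0 at z = 0."""
    return float(np.sum(z ** 2 - 10.0 * np.cos(2.0 * np.pi * z) + 10.0))


def make_shifted_rotated(base, dim, bias, seed=0):
    """Build F(x) = base(M @ (x - o)) + bias with a random shift o and orthogonal M."""
    rng = np.random.default_rng(seed)
    o = rng.uniform(-80.0, 80.0, size=dim)        # optimum placed inside [-100, 100]^D
    q, r = np.linalg.qr(rng.standard_normal((dim, dim)))
    m = q * np.sign(np.diag(r))                   # random orthogonal matrix

    def f(x):
        return base(m @ (np.asarray(x, dtype=float) - o)) + bias

    f.shift, f.matrix = o, m                      # exposed for inspection and testing
    return f
```

At x = o the rotated argument is zero, so F(o) equals the bias. The rotation couples the variables, which defeats optimizers that search one coordinate at a time.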

Table 9.

Description of CEC2017 functions.

The proposed IGOA is compared with other state-of-the-art methods using CEC2017, including the dimension-decided Harris hawks optimization (GCHHO) [42], multi-strategy mutative whale-inspired optimization (CCMWOA) [43], balanced whale optimization algorithm (BMWOA) [18], reinforced whale optimizer (BWOA) [44], hybridizing sine–cosine differential evolution (SCADE) [45], Cauchy and Gaussian sine–cosine algorithm (CGSCA) [46], improved opposition-based sine–cosine optimizer (OBSCA) [47], hybrid grey wolf differential evolution (HGWO) [48], mutation-driven salp chains-inspired optimizer (CMSSA) [49], and dynamic Harris hawks optimization (DHHOM) [50].

Table 10 presents the results of the proposed IGOA and the comparative state-of-the-art methods on the 30 CEC2017 benchmark functions. The IGOA obtained very competitive results. On most of the CEC2017 problems, it delivered better, high-quality solutions than the other methods. To support this claim, the FRT was conducted (Table 11) to rank the comparative methods on the CEC2017 problems. The IGOA ranked first, followed by GCHHO, CMSSA, DHHOM, HGWO, BMWOA, BWOA, CGSCA, OBSCA, SCADE, and CCMWOA. These results show that the performance of the IGOA is promising compared to the other methods. Because these problems are complex, reaching their optimal solutions is challenging. The IGOA obtained the best solutions on many of them, which confirms its efficiency on diverse CEC2017 problems and reflects an improved ability to escape local optima and maintain population diversity.

Table 10.

The results of the proposed IGOA and other comparative methods using 30 CEC2017 benchmark functions.

Table 11.

The results of the Friedman ranking test of the comparative methods using 30 CEC2017 benchmark functions.

5.3. Experiments Series 3: Data Clustering Problems

The proposed method was further tested on a real-world problem (data clustering) to assess its search capability.

5.3.1. Description of the Data Clustering Problem

Suppose a collection of $N$ objects has to be divided into a predetermined number of clusters $K$. The clustering procedure assigns each object $O_i$ to a specific cluster so as to minimize the Euclidean distance between the objects and the centroids of the clusters to which they are assigned. Equation (28) gives the distance metric used to evaluate the similarity (connection) between data objects and cluster centroids. The problem is formulated as follows:

$$\min f(O, C) = \sum_{i=1}^{N} \sum_{j=1}^{K} w_{ij}\, D(O_i, C_j), \qquad D(O_i, C_j) = \sqrt{\sum_{d=1}^{m} \left(O_{id} - C_{jd}\right)^2}$$

where $D(O_i, C_j)$ is the distance measure between the $i$th object ($O_i$) and the $j$th cluster centroid ($C_j$), and $m$ is the number of features. $N$ is the number of given objects, and $K$ is the number of clusters. $w_{ij}$ is the weight of the $i$th object ($O_i$) associated with the $j$th cluster ($C_j$), which is either 1 or 0.
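A metaheuristic such as the IGOA needs this objective as a fitness function. A common encoding, which is assumed here because the section does not restate the encoding used, stores the $K$ centroids of one candidate solution as a single flat vector. Each object is assigned to its nearest centroid, so $w_{ij} = 1$ for that centroid and $0$ otherwise, and the distances are summed. `clustering_cost` is a hypothetical helper name.

```python
import numpy as np


def clustering_cost(solution, data, k):
    """Sum of Euclidean distances from each object to its nearest centroid.

    solution: flat vector of length k * n_features encoding k centroids
              (hypothetical encoding of one search agent).
    data:     array of shape (n_objects, n_features).
    Returns (cost, labels), where labels[i] is the cluster of object i.
    """
    data = np.asarray(data, dtype=float)
    centroids = np.asarray(solution, dtype=float).reshape(k, data.shape[1])
    # distances[i, j] = D(O_i, C_j)
    distances = np.linalg.norm(data[:, None, :] - centroids[None, :, :], axis=2)
    labels = distances.argmin(axis=1)  # w_ij = 1 only for the nearest centroid
    cost = distances[np.arange(len(data)), labels].sum()
    return float(cost), labels
```

For example, with objects (0, 0), (0, 1), (10, 10), and (10, 11) and centroids (0, 0.5) and (10, 10.5), every object lies 0.5 from its centroid, so the cost is 2.0.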

5.3.2. Results of the Data Clustering Problems

This experiment tests the proposed IGOA using eight different UCI datasets, namely Cancer, Vowels, CMC, Iris, Seeds, Glass, Heart, and Water. The details of these datasets are presented in Table 12.

Table 12.

UCI data clustering benchmark datasets.

Table 13 shows the results obtained by the proposed IGOA and the comparative methods on the data clustering problems. The modifications introduced in the proposed method effectively improved the ability of the basic algorithm and allowed it to obtain impressive results compared with the basic GOA and the other methods. The proposed method achieved the best results in most cases, often by a clear margin, and obtained new best results in some cases. Thus, the proposed algorithm is able to handle such complex problems efficiently.

Table 13.

The obtained results by the comparative methods using the data clustering problems.

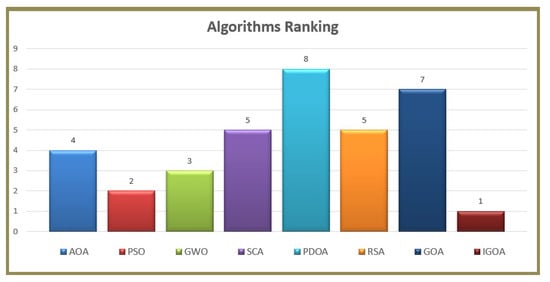

Table 14 shows that the proposed method obtained significantly better results than the comparative methods according to the statistical tests used. According to the Wilcoxon rank test, the improvements are statistically significant, which indicates that the proposed algorithm solves such problems more efficiently than the others. According to the Friedman ranking test in Table 14, the proposed algorithm ranks first. PSO is in second place, GWO in third, and AOA in fourth, while SCA and RSA are tied in fifth place and GOA is in seventh. Figure 10 shows that the proposed algorithm achieves better results than the other algorithms in most of the cases used in these experiments.

Table 14.

The statistical test using the p-value, Wilcoxon rank test, and Friedman ranking test.

Figure 10.

The ranking of the tested methods for the data clustering problems.

6. Discussions

In the experiments above, the OL technique and the RDR method were incorporated into the original GOA to improve its performance, and the resulting IGOA was examined thoroughly. Its effectiveness was also evaluated on benchmark clustering datasets.

The results in the earlier sections show that the IGOA achieves significantly better outcomes on the multi-dimensional classical test problems than other well-known optimization algorithms. The effectiveness of those algorithms diminishes markedly as the dimension of the search space grows. The results also reveal that the IGOA maintains a balance between its exploratory and exploitative abilities in high-dimensional search spaces.

On the F1–F13 classical test functions, there is a clear gap between the results of the comparative algorithms and the superior solutions obtained by the IGOA. The IGOA also converges considerably faster. This observation indicates the strong exploitative qualities of the IGOA. From the solutions obtained on the multimodal and fixed-dimension multimodal functions, we conclude that the IGOA achieved superior solutions in most cases (and competitive solutions in others). This is due to the balance between its explorative and exploitative capabilities and the smooth transition between its search mechanisms.

In addition, the performance of the IGOA was tested on 30 advanced CEC2017 benchmark functions for further assessment. On the CEC2017 unimodal functions (F1–F3), the IGOA achieves excellent results and optimization efficiency. This is because the OL and RDR strategies enrich the diversity of the solutions of the conventional GOA and increase its likelihood of exploiting the region around the optimum. Moreover, the DE has different distribution properties that allow the conventional GOA to reach a good balance between its search mechanisms (exploration and exploitation), which increases the optimizer's accuracy.

The effectiveness of the proposed method is further validated on the CEC2017 multimodal functions (F4–F10). First, the IGOA strategies allow the search agents to explore different regions of the search space thoroughly. The information carried by the agents' positions is then shared among them, so the population is unlikely to be trapped prematurely. Furthermore, when the GOA is trapped in local optima, the stochastic perturbation introduced by the OL can help it escape and substantially improve its search accuracy. Finally, by combining the two strategies (OL and RDR), the IGOA performs well on the multimodal functions.
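Orthogonal learning is based on orthogonal experimental design. Instead of testing all 2^D combinations of dimensions taken from two candidate solutions, it uses a two-level orthogonal array to pick a small, balanced set of trial vectors. Factor analysis then predicts the better source for each dimension. The sketch below is a generic OL operator in the spirit of [26,27]. It is not the authors' exact operator. It assumes minimization and two parents, for example the current gazelle and the best gazelle. The array uses M = 2^⌈log2(D+1)⌉ rows, so D = 30 needs 32 trials instead of 2^30. The function names are hypothetical.

```python
import math


def orthogonal_array(n_factors):
    """Two-level orthogonal array with n_factors columns.

    Row r and column c (c = 1..n_factors) hold the parity of the bits shared by r and c.
    Every column is balanced, and every pair of columns contains each level
    combination equally often.
    """
    m = 2 ** math.ceil(math.log2(n_factors + 1))
    return [[bin(r & c).count("1") % 2 for c in range(1, n_factors + 1)] for r in range(m)]


def orthogonal_learning(x, y, objective):
    """Combine two solutions: level 0 takes a dimension from x, level 1 from y.

    Returns the best vector among the orthogonal trials and the factor-analysis
    prediction, together with its objective value.
    """
    dim = len(x)
    table = orthogonal_array(dim)
    trials, scores = [], []
    for row in table:
        trial = [y[d] if row[d] else x[d] for d in range(dim)]
        trials.append(trial)
        scores.append(objective(trial))
    # factor analysis: compare the mean score of each level in every dimension
    predicted = []
    for d in range(dim):
        level0 = [s for row, s in zip(table, scores) if row[d] == 0]
        level1 = [s for row, s in zip(table, scores) if row[d] == 1]
        take_y = sum(level1) / len(level1) < sum(level0) / len(level0)
        predicted.append(y[d] if take_y else x[d])
    trials.append(predicted)
    scores.append(objective(predicted))
    best = min(range(len(trials)), key=scores.__getitem__)
    return trials[best], scores[best]
```

On a separable function such as the sphere, the factor analysis identifies the better parent for every dimension. For example, combining [0, 5, 0, 5] and [5, 0, 5, 0] yields [0, 0, 0, 0] after only 9 evaluations.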

The hybrid functions (F11–F20), which combine CEC2017 unimodal and multimodal functions, were used to analyze the accuracy of the IGOA. The RDR helps the GOA reach the potentially optimal region faster, whereas the OL helps it find a more reliable solution in the neighboring area. Despite the difficulty of some of these problems, the interaction of the two search strategies (RDR and OL) leads to good results.
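RDR refers to Rosenbrock's rotating-coordinate direct search [28,29]. The method gives each search direction its own step. A successful trial enlarges the step, and a failed trial reverses and shrinks it. Once every direction has recorded a success and a failure, the directions are rotated so that the first one points along the total progress of that stage. The sketch below makes several assumptions. It uses the classical expansion and contraction factors 3 and 0.5. It replaces explicit Gram–Schmidt with a QR factorization that yields the same orthonormal basis up to sign. It stops after roughly `max_evals` evaluations or once all steps fall below `tol`. How the IGOA embeds this local search (for example, applying it to the best gazelle) is not restated here, and the function name and parameters are hypothetical.

```python
import numpy as np


def rosenbrock_rotation(f, x0, step=0.1, alpha=3.0, beta=0.5, max_evals=2000, tol=1e-10):
    """Rosenbrock's rotating-coordinate direct search for minimization."""
    x = np.asarray(x0, dtype=float)
    n = x.size
    fx = f(x)
    evals = 1
    dirs = np.eye(n)                    # columns are the current search directions
    steps = np.full(n, float(step))
    while evals < max_evals and np.abs(steps).max() > tol:
        progress = np.zeros(n)          # accumulated successful displacement per direction
        success = np.zeros(n, dtype=bool)
        failure = np.zeros(n, dtype=bool)
        while (not (success.all() and failure.all())
               and evals < max_evals and np.abs(steps).max() > tol):
            for i in range(n):
                trial = x + steps[i] * dirs[:, i]
                ft = f(trial)
                evals += 1
                if ft < fx:             # success: accept and expand the step
                    x, fx = trial, ft
                    progress[i] += steps[i]
                    steps[i] *= alpha
                    success[i] = True
                else:                   # failure: reverse and shrink the step
                    steps[i] *= -beta
                    failure[i] = True
        # column i of a is sum_{j >= i} progress[j] * d_j; orthonormalize it via QR
        a = np.cumsum((dirs * progress)[:, ::-1], axis=1)[:, ::-1]
        dirs, _ = np.linalg.qr(a)
    return x, fx
```

Because the first rotated direction follows the accumulated progress, the search can move along narrow valleys that are not aligned with the coordinate axes. This kind of local refinement is what the text attributes to the RDR.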

The CEC2017 composition functions (F21–F30) examine the ability of the IGOA to switch between the search phases. These benchmarks place stricter demands on the optimization process. Here, the OL mainly enhances exploration, which enables the IGOA to approach better regions quickly, whereas the RDR helps find a more suitable solution close to the current best solution. As a result, the overall performance of the IGOA is improved.

The IGOA proves highly effective, because the precision of its final results is better than that of well-known state-of-the-art methods in the literature. Its efficacy is further demonstrated on clustering applications. The outcomes of the data clustering problems show that the IGOA also performs remarkably well in locating suitable clusters and centroids.

The comparisons with well-known and state-of-the-art methods demonstrate that the proposed approach improves on the GOA in both convergence speed and optimization accuracy. When the IGOA was applied to real clustering problems, it was also compared with alternative optimization techniques. These experiments show researchers how the proposed optimizer can be applied to minimize the cost of clustering applications, and in this sense the paper also offers practical recommendations. The experimental configuration was described in an earlier subsection.

7. Conclusions and Future Works

An effective optimization technique is required to handle challenging problems and identify the best solution. Optimization problems arise in many different forms across a variety of disciplines, and because their nature varies, it is difficult to solve them all with the same approach. The GOA is a simple, global stochastic optimizer with strong search capabilities. However, it is inadequate for multimodal and hybrid functions and for data mining problems.

To enhance the GOA and maintain diversity in its solutions, this article proposes the IGOA, which combines Rosenbrock's direct rotational (RDR) technique with the orthogonal learning (OL) method. We carried out extensive tests on 23 classical benchmark functions and the IEEE CEC2017 benchmark functions, and compared the IGOA with several other meta-heuristic algorithms. To further confirm the effectiveness of the proposed strategy, eight data clustering problems from the UCI repository were tested. The Wilcoxon signed-rank test was also used to evaluate the experimental findings for a more systematic data analysis. The IGOA outperformed the other optimizers in convergence speed and accuracy. The empirical results demonstrate that it also outperformed the basic GOA and the other comparative approaches in terms of solution quality.

In future work, the proposed method can be improved using other search operators. It can also be applied to other hard problems, such as text clustering, scheduling, industrial engineering, advanced mathematical problems, parameter extraction, forecasting, feature selection, and multi-objective optimization. Finally, a deeper investigation is needed to determine the main reasons for the method's weaknesses in some cases.

Author Contributions

L.A.: conceptualization, supervision, methodology, formal analysis, resources, data curation, writing—original draft preparation. A.D.: conceptualization, supervision, writing—review and editing, project administration, funding acquisition. R.A.Z.: conceptualization, writing—review and editing, supervision. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available upon reasonable request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ibrahim, R.A.; Abd Elaziz, M.; Lu, S. Chaotic opposition-based grey-wolf optimization algorithm based on differential evolution and disruption operator for global optimization. Expert Syst. Appl. 2018, 108, 1–27.

- Wang, S.; Hussien, A.G.; Jia, H.; Abualigah, L.; Zheng, R. Enhanced Remora Optimization Algorithm for Solving Constrained Engineering Optimization Problems. Mathematics 2022, 10, 1696.

- Elaziz, M.A.; Abualigah, L.; Yousri, D.; Oliva, D.; Al-Qaness, M.A.; Nadimi-Shahraki, M.H.; Ewees, A.A.; Lu, S.; Ali Ibrahim, R. Boosting atomic orbit search using dynamic-based learning for feature selection. Mathematics 2021, 9, 2786.

- Koziel, S.; Leifsson, L.; Yang, X.S. Solving Computationally Expensive Engineering Problems: Methods and Applications; Springer: Berlin/Heidelberg, Germany, 2014; Volume 97.

- Baykasoglu, A. Design optimization with chaos embedded great deluge algorithm. Appl. Soft Comput. 2012, 12, 1055–1067.

- Chen, H.; Wang, M.; Zhao, X. A multi-strategy enhanced Sine Cosine Algorithm for global optimization and constrained practical engineering problems. Appl. Math. Comput. 2020, 369, 124872.

- Simpson, A.R.; Dandy, G.C.; Murphy, L.J. Genetic algorithms compared to other techniques for pipe optimization. J. Water Resour. Plan. Manag. 1994, 120, 423–443.

- Rao, R.V.; Savsani, V.J.; Vakharia, D. Teaching–learning-based optimization: A novel method for constrained mechanical design optimization problems. Comput. Aided Des. 2011, 43, 303–315.

- Liu, Q.; Li, N.; Jia, H.; Qi, Q.; Abualigah, L.; Liu, Y. A Hybrid Arithmetic Optimization and Golden Sine Algorithm for Solving Industrial Engineering Design Problems. Mathematics 2022, 10, 1567.

- Droste, S.; Jansen, T.; Wegener, I. Upper and lower bounds for randomized search heuristics in black-box optimization. Theory Comput. Syst. 2006, 39, 525–544.

- El Shinawi, A.; Ibrahim, R.A.; Abualigah, L.; Zelenakova, M.; Abd Elaziz, M. Enhanced adaptive neuro-fuzzy inference system using reptile search algorithm for relating swelling potentiality using index geotechnical properties: A case study at El Sherouk City, Egypt. Mathematics 2021, 9, 3295.

- Attiya, I.; Abualigah, L.; Alshathri, S.; Elsadek, D.; Abd Elaziz, M. Dynamic Jellyfish Search Algorithm Based on Simulated Annealing and Disruption Operators for Global Optimization with Applications to Cloud Task Scheduling. Mathematics 2022, 10, 1894.

- Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S.; Abualigah, L. Binary Aquila Optimizer for Selecting Effective Features from Medical Data: A COVID-19 Case Study. Mathematics 2022, 10, 1929.

- Attiya, I.; Abualigah, L.; Elsadek, D.; Chelloug, S.A.; Abd Elaziz, M. An Intelligent Chimp Optimizer for Scheduling of IoT Application Tasks in Fog Computing. Mathematics 2022, 10, 1100.

- Wen, C.; Jia, H.; Wu, D.; Rao, H.; Li, S.; Liu, Q.; Abualigah, L. Modified Remora Optimization Algorithm with Multistrategies for Global Optimization Problem. Mathematics 2022, 10, 3604.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Xu, Y.; Chen, H.; Luo, J.; Zhang, Q.; Jiao, S.; Zhang, X. Enhanced Moth-flame optimizer with mutation strategy for global optimization. Inf. Sci. 2019, 492, 181–203.

- Chen, H.; Xu, Y.; Wang, M.; Zhao, X. A balanced Whale Optimization Algorithm for constrained engineering design problems. Appl. Math. Model. 2019, 71, 45–59.

- Miller, J.; Trümper, L.; Terboven, C.; Müller, M.S. A theoretical model for global optimization of parallel algorithms. Mathematics 2021, 9, 1685.

- Sayed, G.I.; Khoriba, G.; Haggag, M.H. A novel chaotic Salp Swarm Algorithm for global optimization and feature selection. Appl. Intell. 2018, 48, 3462–3481.

- Gupta, S.; Deep, K. Improved Sine Cosine Algorithm with crossover scheme for global optimization. Knowl. Based Syst. 2019, 165, 374–406.

- Sun, Y.; Wang, X.; Chen, Y.; Liu, Z. A modified Whale Optimization Algorithm for large-scale global optimization problems. Expert Syst. Appl. 2018, 114, 563–577.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Gazelle Optimization Algorithm: A novel nature-inspired metaheuristic optimizer. Neural Comput. Appl. 2022, 4, 1–33.

- Haklı, H.; Uğuz, H. A novel particle swarm optimization algorithm with Lévy flight. Appl. Soft Comput. 2014, 23, 333–345.

- Liu, Y.; Cao, B.; Li, H. Improving ant colony optimization algorithm with epsilon greedy and Lévy flight. Complex Intell. Syst. 2021, 7, 1711–1722.

- Gao, W.F.; Liu, S.Y.; Huang, L.L. A novel artificial bee colony algorithm based on modified search equation and orthogonal learning. IEEE Trans. Cybern. 2013, 43, 1011–1024.

- Hu, J.; Chen, H.; Heidari, A.A.; Wang, M.; Zhang, X.; Chen, Y.; Pan, Z. Orthogonal learning covariance matrix for defects of grey wolf optimizer: Insights, balance, diversity, and feature selection. Knowl. Based Syst. 2021, 213, 106684.

- Rosenbrock, H. An automatic method for finding the greatest or least value of a function. Comput. J. 1960, 3, 175–184.

- Lewis, R.M.; Torczon, V.; Trosset, M.W. Direct search methods: Then and now. J. Comput. Appl. Math. 2000, 124, 191–207.

- Mirjalili, S.; Gandomi, A.H.; Mirjalili, S.Z.; Saremi, S.; Faris, H.; Mirjalili, S.M. Salp Swarm Algorithm: A bio-inspired optimizer for engineering design problems. Adv. Eng. Softw. 2017, 114, 163–191.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl. Based Syst. 2016, 96, 120–133.

- Mirjalili, S. Dragonfly Algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 2016, 27, 1053–1073.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium Optimizer: A novel optimization algorithm. Knowl. Based Syst. 2020, 191, 105190.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the ICNN’95-International Conference on Neural Networks, Perth, WA, Australia, 27 November–1 December 1995; Volume 4, pp. 1942–1948.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization Algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Mirjalili, S. The Ant Lion Optimizer. Adv. Eng. Softw. 2015, 83, 80–98.

- Faramarzi, A.; Heidarinejad, M.; Mirjalili, S.; Gandomi, A.H. Marine Predators Algorithm: A nature-inspired metaheuristic. Expert Syst. Appl. 2020, 152, 113377.

- Abd Elaziz, M.; Mirjalili, S. A hyper-heuristic for improving the initial population of whale optimization algorithm. Knowl. Based Syst. 2019, 172, 42–63.

- Jouhari, H.; Lei, D.; Al-qaness, M.A.A.; Abd Elaziz, M.; Ewees, A.A.; Farouk, O. Sine-cosine algorithm to enhance simulated annealing for unrelated parallel machine scheduling with setup times. Mathematics 2019, 7, 1120.

- Song, S.; Wang, P.; Heidari, A.A.; Wang, M.; Zhao, X.; Chen, H.; He, W.; Xu, S. Dimension decided Harris hawks optimization with Gaussian mutation: Balance analysis and diversity patterns. Knowl. Based Syst. 2021, 215, 106425.

- Luo, J.; Chen, H.; Heidari, A.A.; Xu, Y.; Zhang, Q.; Li, C. Multi-strategy boosted mutative whale-inspired optimization approaches. Appl. Math. Model. 2019, 73, 109–123.

- Chen, H.; Yang, C.; Heidari, A.A.; Zhao, X. An efficient double adaptive random spare reinforced whale optimization algorithm. Expert Syst. Appl. 2020, 154, 113018.

- Nenavath, H.; Jatoth, R.K. Hybridizing Sine Cosine Algorithm with differential evolution for global optimization and object tracking. Appl. Soft Comput. 2018, 62, 1019–1043.

- Kumar, N.; Hussain, I.; Singh, B.; Panigrahi, B.K. Single sensor-based MPPT of partially shaded PV system for battery charging by using cauchy and gaussian sine cosine optimization. IEEE Trans. Energy Convers. 2017, 32, 983–992.

- Abd Elaziz, M.; Oliva, D.; Xiong, S. An improved opposition-based Sine Cosine Algorithm for global optimization. Expert Syst. Appl. 2017, 90, 484–500.

- Zhu, A.; Xu, C.; Li, Z.; Wu, J.; Liu, Z. Hybridizing grey wolf optimization with differential evolution for global optimization and test scheduling for 3D stacked SoC. J. Syst. Eng. Electron. 2015, 26, 317–328.

- Zhang, Q.; Chen, H.; Heidari, A.A.; Zhao, X.; Xu, Y.; Wang, P.; Li, Y.; Li, C. Chaos-induced and mutation-driven schemes boosting salp chains-inspired optimizers. IEEE Access 2019, 7, 31243–31261.

- Jia, H.; Lang, C.; Oliva, D.; Song, W.; Peng, X. Dynamic harris hawks optimization with mutation mechanism for satellite image segmentation. Remote Sens. 2019, 11, 1421.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).