Abstract

A new image reconstruction (IR) algorithm from multiscale interest points in the discrete wavelet transform (DWT) domain was proposed based on a modified conditional generative adversarial network (CGAN). The proposed IR-DWT-CGAN model integrated a DWT module, an interest point extraction module, an inverse DWT module, and a CGAN. First, the image was transformed using the DWT to provide a multi-resolution wavelet analysis. Then, the multiscale maxima points were treated as interest points and extracted in the DWT domain. The generator, which has a U-net structure, reconstructed the original image from a very coarse version of the image obtained from the inverse DWT of the interest points. The discriminator, a fully convolutional network, distinguished the restored image from the real one. Experimental results on three public datasets showed that, compared with several other state-of-the-art methods, the proposed IR-DWT-CGAN model achieved an average increase of 2.9% in the mean structural similarity, an average decrease of 39.6% in the relative dimensionless global error in synthesis, and an average decrease of 48% in the root-mean-square error. Therefore, the proposed IR-DWT-CGAN model is feasible and effective for image reconstruction from multiscale interest points.

Keywords:

image reconstruction; discrete wavelet transform; interest point; convolutional neural network; conditional generative adversarial network

MSC:

68A07; 94A08

1. Introduction

Image reconstruction (IR) has long been a research focus in image processing. In particular, IR from singular points or interest points, such as maxima, zero crossings, contours, or edge points in a multiscale transform domain, is of great importance. As early as 1992, Mallat et al. stated that singularities and irregular structures often carry the most important information in one- and two-dimensional signals [1]. As an application, Mallat developed an alternating projection algorithm to reconstruct an image from the local modulus maxima of its wavelet transform. In 2013, Maji et al. found that the major information in an image is encoded in its edge points, a finding also supported by neurophysics [2], and concluded that an image can be accurately reconstructed from the compact representation of its edge pixels. In 2018, Soulard and Carré designed a new color IR algorithm based on monogenic features that appeared to be very good at preserving contours and color information [3]; with it, a color image can be reconstructed using only the monogenic maxima features. Several image reconstruction experiments were conducted to recover a close approximation of the original image. More recently, in our previous work in 2021, Liu et al. proposed an IR algorithm from multiscale interest points in the dual-tree complex wavelet transform (DT-CWT) domain [4]. Accurate multiscale interest points were detected in the DT-CWT domain owing to the shift invariance and directional selectivity of the DT-CWT coefficients. The images were then reconstructed from the phases and magnitudes of the multiscale interest points by alternating orthogonal projections between the DT-CWT space and its affine space.

The traditional IR methods mentioned above are effective and achieve good results, but they also have shortcomings; for example, they involve a large number of iterations and long execution times. To overcome these shortcomings while maintaining high performance, neural networks have been introduced for IR [5]. Inspired by the outstanding performance of convolutional neural networks (CNNs) in extracting signal features, Xiao et al. proposed a CNN approach for brightness temperature IR in mirrored aperture synthesis (MAS) [6], in which a deep CNN learns the MAS IR mapping and the system errors to improve the brightness temperature IR. In 2020, Jeyaraj et al. proposed a deep learning model based on the AlexNet architecture for estimating and reconstructing highly complex features in medical images [7]. To reduce the computational complexity, Antholzer et al. developed an efficient, direct IR framework based on deep learning, in which the conventional iterative IR algorithms were replaced by a CNN [8]. Gan et al. proposed an unsupervised deep registration-augmented reconstruction method (U-Dream) that trains a deep neural network (DNN) to reconstruct high-quality images by directly mapping pairs of unregistered and artifact-corrupted images [9]. Because it does not require registered data, U-Dream is widely applicable to biomedical IR tasks.

There are many recent works on IR problems [10,11], in applications such as positron microscopy [12], lensless imaging [13], MR imaging [14,15], low-dose CT [16], photoacoustic [17,18] and ultrasound [19] imaging, and compressed sensing [20].

Although traditional IR algorithms based on particular interest points have made much progress, previous studies mainly relied on both the high- and low-frequency information of the original image, such as the detail coefficients and the low-frequency band coefficients in the DWT domain. This leads either to undesirable performance for lossy IR or to computationally expensive iterative processes.

Therefore, a new IR method [21] that reconstructs an image from its multiscale interest points extracted in the DWT domain was proposed with a conditional generative adversarial network (CGAN). Specifically, the maxima points in the DWT domain were treated as the interest points. The proposed IR model is called IR-DWT-CGAN. First, the image is transformed by the DWT to provide a multi-resolution image representation in the DWT domain. Then, the multiscale maxima points are extracted in the DWT domain. With a U-net structured generator [22], the original image is restored from a very coarse version of the image (also referred to as the multiscale points feature map) obtained from the inverse DWT of the interest points. The restored image is distinguished from the real image by a fully convolutional network, i.e., the discriminator network.

The main contributions of our proposed algorithm are as follows.

- (1) Based on the magnitudes of the wavelet coefficients, multiscale interest points (i.e., maxima) were obtained with the proposed interest point extraction module. A new dataset was built by pairing natural images with their interest point maps.

- (2) Based on the CGAN, a new and efficient IR model using multiscale interest points, i.e., IR-DWT-CGAN, was proposed. The generative network (generator) has a U-net-like structure, while the discriminator distinguishes the reconstructed image from its real counterpart. IR-DWT-CGAN is conditioned on a very coarse version of the image, obtained from the inverse DWT of the corresponding wavelet maxima map.

- (3) The proposed IR-DWT-CGAN outperformed several traditional IR algorithms in terms of both reconstructed image quality and time consumption.

The rest of this paper is organized as follows. In Section 2, related work and some fundamentals are reviewed. In Section 3, the proposed IR-DWT-CGAN framework is detailed. In Section 4, the quantitative metrics, simulation results, and analysis are provided. Finally, conclusions are drawn in Section 5.

2. Related Work

2.1. Discrete Wavelet Transform

The wavelet transform (WT) is based on small waves, i.e., wavelets, of varying frequency and limited duration. This makes the WT suitable for analyzing irregular data patterns, such as impulses occurring at various instants. The WT maps a function of continuous variables into a sequence of coefficients; if the function itself is a discrete signal, the resulting coefficients are called the discrete wavelet transform (DWT) of the function. The DWT has received considerable attention in various signal processing applications. Each wavelet transform has a wavelet function, called the mother wavelet, and a scaling function, called the father wavelet. The wavelet basis functions are formed by scaling and translating the mother wavelet and the father wavelet. The DWT has advantages such as a self-similar data structure across resolutions and the availability of decomposition at any level, so it can be implemented as a multiscale transform. At each level of the DWT, an image is decomposed into four sub-bands denoted LL, LH, HL, and HH, where LH, HL, and HH are the detail components, representing the high-frequency wavelet coefficients at that scale, and LL is the approximation component, representing the coarse-level coefficients.
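The one-level split into LL, LH, HL, and HH, and its repetition on the LL band for a multiscale decomposition, can be illustrated with the orthonormal Haar wavelet. This is only a sketch: the paper uses the derivative-of-smoothing-function wavelet from [1], not Haar, and the function names below are hypothetical. Sub-band naming (which of LH/HL holds horizontal versus vertical detail) also varies between libraries.

```python
import numpy as np

def haar_dwt2(x):
    """One level of the orthonormal 2-D Haar DWT, returning (LL, LH, HL, HH).

    Here LH holds top-row-minus-bottom-row differences and HL holds
    left-column-minus-right-column differences of each 2x2 block.
    """
    x = np.asarray(x, dtype=float)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("height and width must be even")
    a, b = x[0::2, 0::2], x[0::2, 1::2]   # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]   # bottom-left, bottom-right
    ll = (a + b + c + d) / 2              # approximation (coarse) component
    lh = (a + b - c - d) / 2              # detail: top row minus bottom row
    hl = (a - b + c - d) / 2              # detail: left column minus right column
    hh = (a - b - c + d) / 2              # detail: diagonal
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out

def wavedec2(x, levels):
    """Multilevel DWT: repeatedly split the LL band.

    Returns (LL at the coarsest level, list of (LH, HL, HH) with the finest level first).
    """
    ll, details = np.asarray(x, dtype=float), []
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)
        details.append((lh, hl, hh))
    return ll, details

def waverec2(ll, details):
    """Inverse multilevel DWT, starting from the coarsest level."""
    for lh, hl, hh in reversed(details):
        ll = haar_idwt2(ll, lh, hl, hh)
    return ll
```

With `levels=3`, a 256 × 256 image yields detail bands of size 128, 64, and 32 and a 32 × 32 LL band; because the transform is orthonormal, it preserves the image energy and is perfectly invertible.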

In this study, a three-level DWT was implemented in Matlab 2016b (Jack L.; Cleve M. Natick, MA, USA), using the mother wavelet from [1], which is the first-order derivative of a smoothing function.
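Using a wavelet that is the first derivative of a smoothing function means that the wavelet transform at scale s equals the derivative of the signal smoothed at that scale, so modulus maxima mark sharp variations such as edges. The 1-D sketch below illustrates this with a Gaussian smoothing function; this is a stand-in chosen for simplicity, not the spline-based wavelet of [1], and all names are hypothetical.

```python
import numpy as np

def smoothing_kernel(t, s):
    """Gaussian smoothing function theta_s(t) (a stand-in for the function used in [1])."""
    return np.exp(-t ** 2 / (2 * s ** 2)) / (np.sqrt(2 * np.pi) * s)

def dgauss_wavelet(t, s):
    """psi_s(t) = d/dt theta_s(t): first derivative of the smoothing function."""
    return -t / s ** 2 * smoothing_kernel(t, s)

def wavelet_response(signal, s):
    """W_s f = f * psi_s, i.e. the derivative of f smoothed at scale s."""
    half = int(np.ceil(4 * s))
    t = np.arange(-half, half + 1, dtype=float)
    # Edge padding avoids spurious responses where the signal would otherwise drop to zero.
    padded = np.pad(np.asarray(signal, dtype=float), half, mode="edge")
    return np.convolve(padded, dgauss_wavelet(t, s), mode="valid")

# A rising step at index 50: the modulus maximum of the response sits at the edge
# for every scale, while constant regions give (numerically) zero response.
step = np.r_[np.zeros(50), np.ones(50)]
for s in (1.0, 2.0, 4.0):
    r = wavelet_response(step, s)
    print(s, int(np.argmax(np.abs(r))))
```

Because the response peaks at the edge at every scale, tracking its maxima across scales is what makes these points useful as multiscale interest points.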

2.2. Generative Adversarial Networks

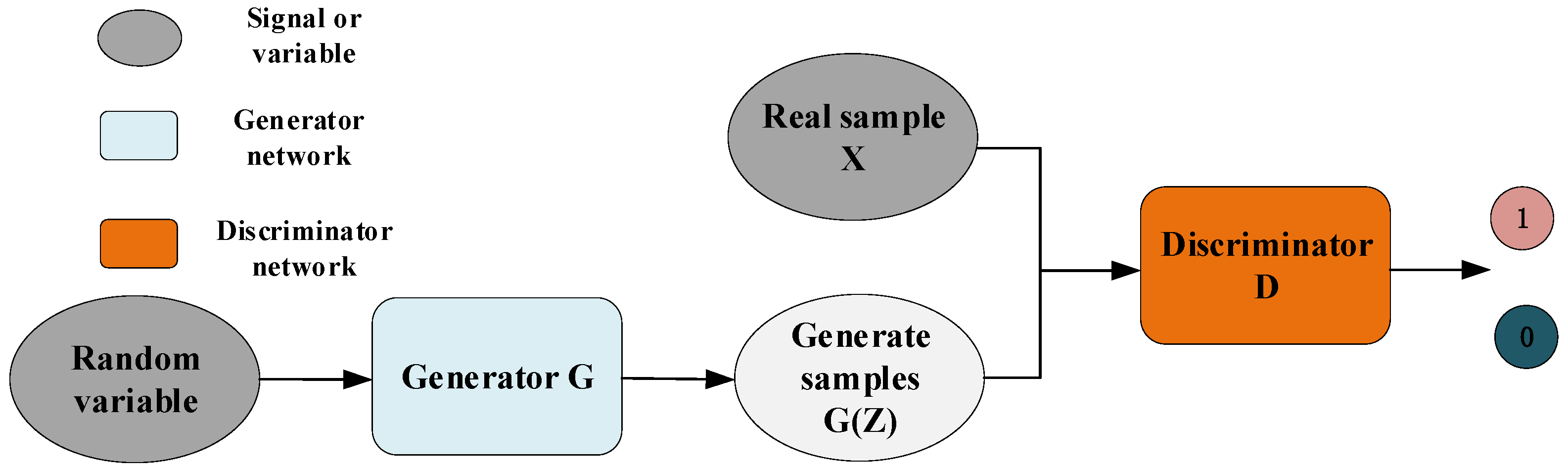

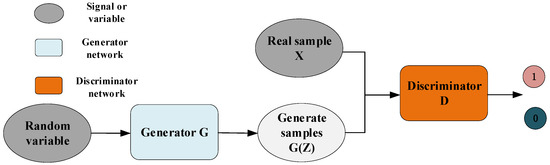

The generative adversarial network (GAN) was proposed by Goodfellow et al. [23] in 2014. Based on the idea of game theory, a GAN learns to fit the distribution of the target sample data through an adversarial process. GANs have been successfully applied in many fields, such as handwritten font generation [24], image preprocessing [25], data augmentation [26], and IR [27]. Generally, a GAN consists of a generator unit G and a discriminator unit D. The GAN structure is shown in Figure 1.

Figure 1.

GAN structure.

The goal of the generator G is to learn the data distribution of the target samples so that it can generate sample data similar to them; the goal of the discriminator D is to accurately identify the source of an input sample, that is, whether it comes from the real sample data or was generated by G. A GAN is usually constructed with multi-layer perceptrons or deep neural networks to achieve good feature learning and sample fitting ability. The network operates as follows: the generator G receives a random variable Z and generates a sample G(Z) from it, while the discriminator D receives both real samples X and generated samples G(Z) and outputs the probability that its input is a real sample. Under ideal conditions, the generator learns the data distribution of the target samples accurately and generates data that cannot be told apart from the real data. Eventually, the discriminator D can no longer correctly distinguish the real samples from the generated ones, and the whole network reaches a Nash equilibrium.
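The game above is usually written as the minimax value function of [23], V(D, G) = E[log D(X)] + E[log(1 − D(G(Z)))], which D maximizes and G minimizes. The NumPy sketch below estimates V from batches of discriminator outputs; the generator loss uses the common non-saturating form −log D(G(Z)), an assumption about practice rather than something stated in this paper, and the function names are hypothetical.

```python
import numpy as np

EPS = 1e-12  # keeps log() finite when D outputs exactly 0 or 1

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))]."""
    d_real = np.clip(np.asarray(d_real, dtype=float), EPS, 1 - EPS)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), EPS, 1 - EPS)
    return np.mean(np.log(d_real)) + np.mean(np.log(1 - d_fake))

def discriminator_loss(d_real, d_fake):
    """D maximizes V, so it minimizes -V."""
    return -gan_value(d_real, d_fake)

def generator_loss(d_fake):
    """Non-saturating generator loss: maximize log D(G(z))."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), EPS, 1 - EPS)
    return -np.mean(np.log(d_fake))

# At the Nash equilibrium D outputs 1/2 everywhere, so V = 2 log(1/2) = -log 4.
print(gan_value([0.5, 0.5], [0.5, 0.5]))
```

A confident discriminator (outputs near 1 for real, near 0 for fake) pushes V toward 0, its maximum, which is why the equilibrium value −log 4 signals that D can no longer separate the two sources.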

2.3. Image Reconstruction Based on Multiscale Interest Points

IR from multiscale local maxima in the DWT domain and the dual-tree complex wavelet transform (DT-CWT) domain was studied in [1,4]. Mallat studied the properties of multiscale edge points in the DWT domain and proposed an algorithm to reconstruct a close approximation of an image from its multiscale edge points [1]. The reconstruction errors were below human visual sensitivity and could therefore be neglected in many image processing and computer vision tasks. Liu proposed a novel IR algorithm from singular (local maxima) points in the DT-CWT domain that takes advantage of the shift invariance and directional selectivity of the DT-CWT coefficients [4]. Liu's IR algorithm outperforms several typical IR algorithms in terms of performance metrics such as the peak signal-to-noise ratio and structural similarity.

2.4. Image Reconstruction Based on Deep Learning

In recent years, deep learning has been playing an important role in IR. In 2022, Xiao [6] proposed a deep-learning-based method for the mirrored aperture synthesis IR in which the mirrored aperture synthesis IR mapping and system errors were learned by a deep CNN framework. In 2020, by consolidating the needed high-level features from a deep CNN system, an effective deformable IR algorithm was designed to achieve a better mean square error, structural similarity index, and high-frequency error norm [7].

3. The Proposed IR-DWT-CGAN Model

3.1. IR-DWT-CGAN Architecture

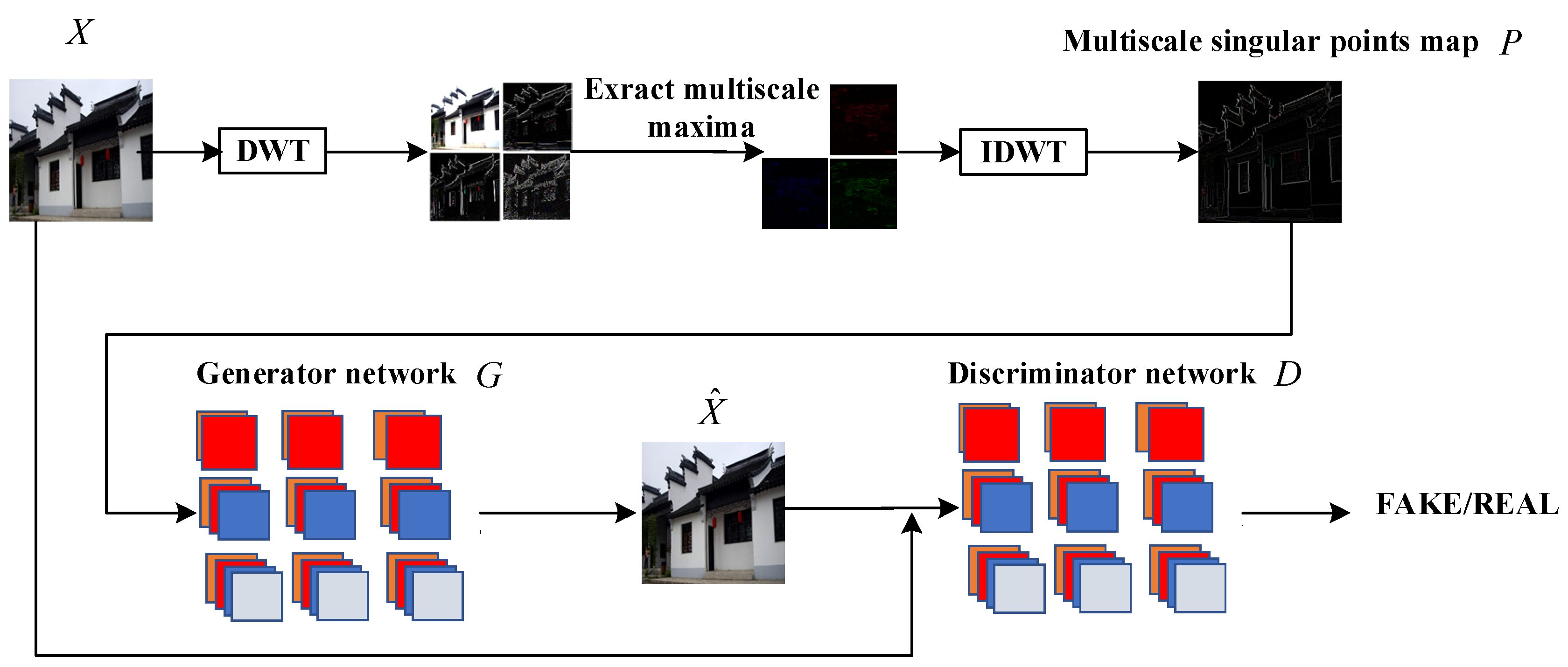

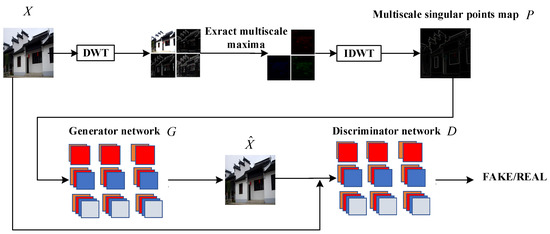

Based on the GAN, the overall architecture of the proposed IR-DWT-CGAN model was designed as shown in Figure 2. An image is transformed into the DWT domain, and the maximum points in the DWT domain are chosen as interest points; an inverse DWT of these points yields a very coarse version of the image, called the multiscale points feature map. The multiscale points feature map, which has the same dimensions as the original image, is the input of the generator network. From it, the generator reconstructs an image that is as close as possible to the original image. The discriminator aims to correctly distinguish the image reconstructed by the generator from the real image. By the end of training, the generator was trained well enough that the discriminator could hardly distinguish the restored image from the real one.

Figure 2.

IR-DWT-CGAN architecture.

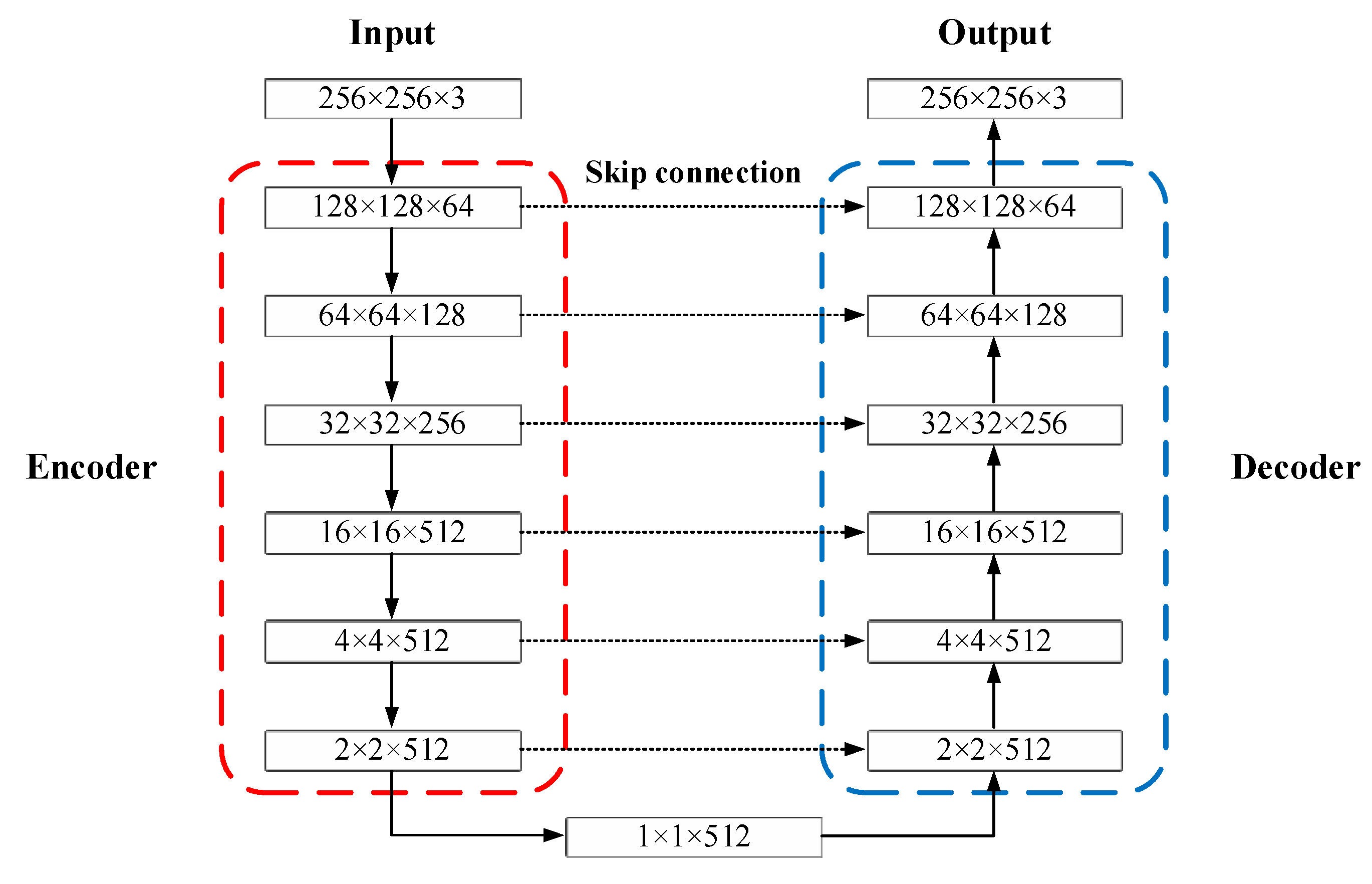

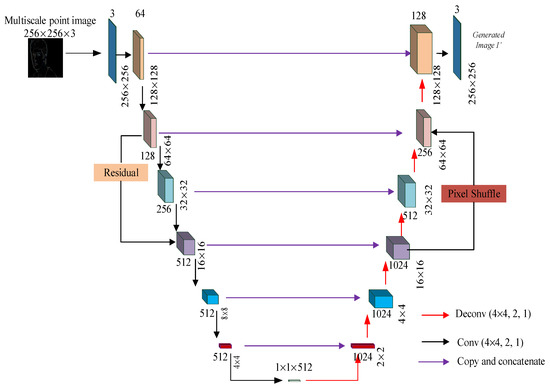

3.2. Proposed Generator Network

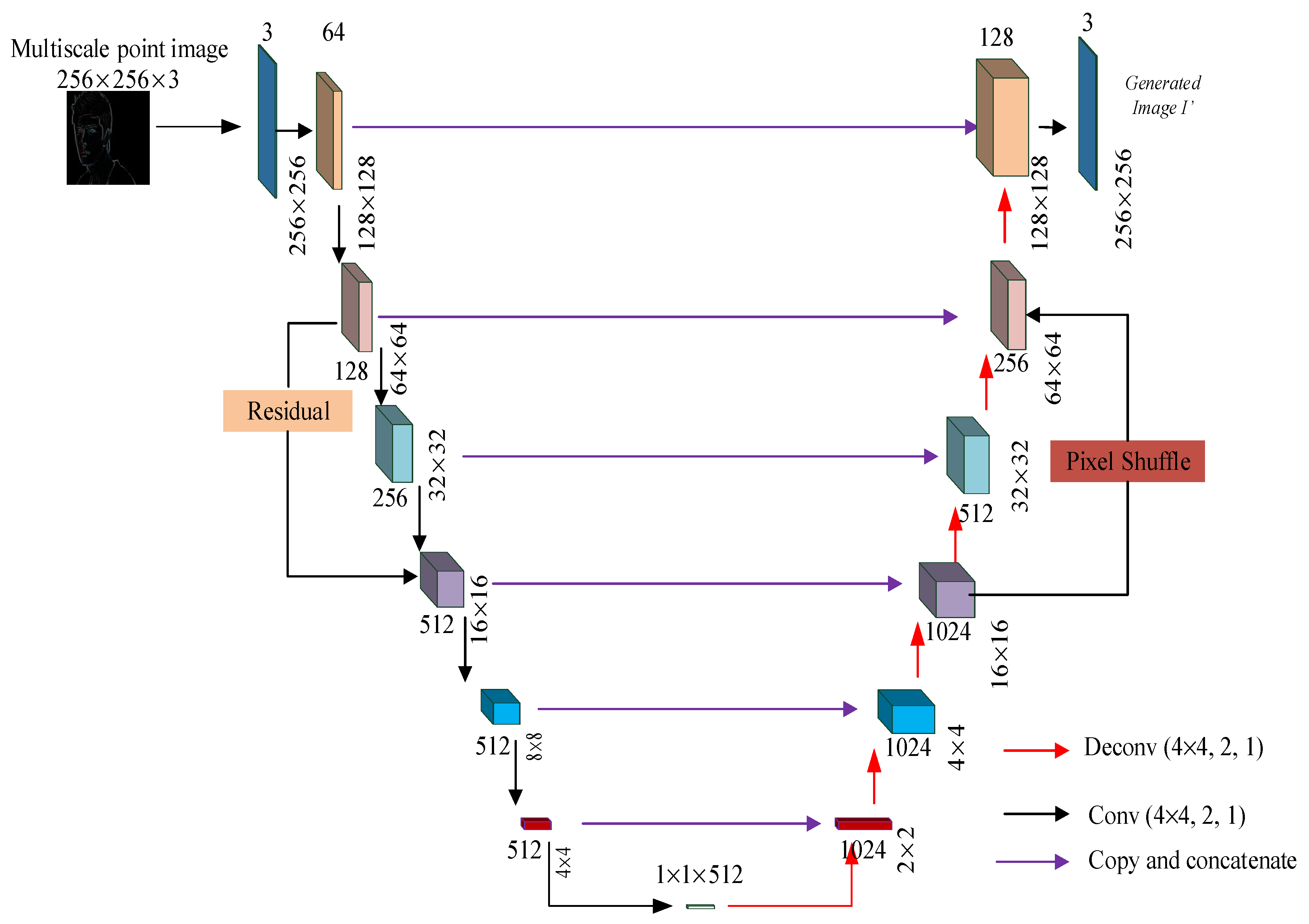

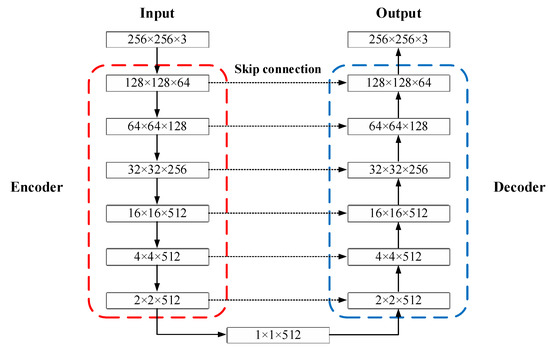

The goal of the generator is to reconstruct a high-quality image directly from the multiscale points feature map; that is, the details of the original image must be recovered from the multiscale local maxima. The generator should therefore connect the features of different layers effectively, maximize the flow of information from the shallow layers to the deep layers, and promote feature reuse. The structure of the proposed generator network is shown in Figure 3.

Figure 3.

Proposed generator network.

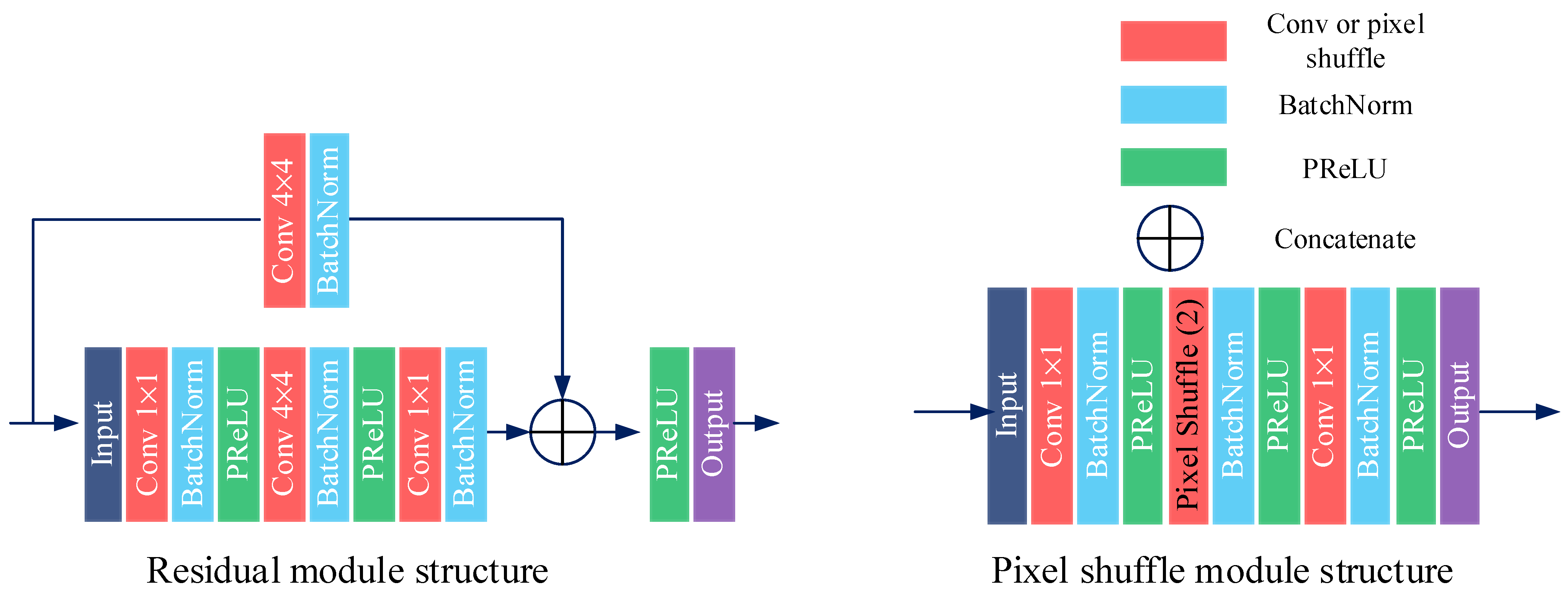

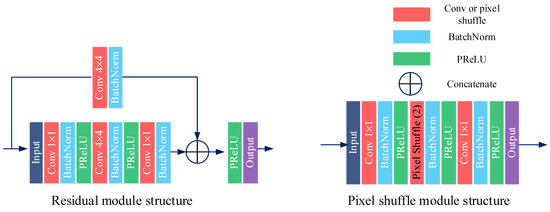

The IR-DWT-CGAN generator consists of two parts: the encoder on the left and the decoder on the right. Assume that the multiscale maxima extracted from the three wavelet coefficient bands HL, LH, and HH of the input image X are combined into an overall feature array H; the multiscale feature map P of the interest points is then obtained with an inverse wavelet transform. The feature map P is fed into the left encoder part of the generator network. After three CNN blocks, the output feature maps are sent to the ResBlocks and, at the same time, to an adder in the lower part. All ResBlocks have the same structure and are used to retain more information from the input feature maps, as shown in Figure 4. Each residual block contains a three-layer CNN and a residual branch; the outputs of the two are summed by an adder and passed to a PReLU activation layer to form the block output. The output feature maps are then fed to the decoder part on the right, which involves three CNN layers, each consisting of a convolutional layer, a batch normalization layer, and an activation layer. The output of the third CNN layer is passed to the pixel shuffle module. An adder sums the output of the pixel shuffle module and the output of the fifth CNN layer, forming another residual connection that improves the reconstruction. Finally, this output is passed through two more CNN layers to obtain the generated image.
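The residual block described above can be sketched in NumPy as y = PReLU(F(x) + x), where F is a stack of three 3 × 3 convolutions. This is an illustration under stated assumptions, not the authors' layer configuration: the residual branch is taken to be an identity skip, PReLU is placed between the convolutions, batch normalization is omitted, and the slope 0.25 (PyTorch's default PReLU initialization) and all names are hypothetical.

```python
import numpy as np

def conv3x3(x, w, b):
    """'Same' 3x3 convolution (cross-correlation, as in deep-learning frameworks).

    x: (C_in, H, W); w: (C_out, C_in, 3, 3); b: (C_out,). Zero padding of 1 keeps H and W.
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]                    # (C_in, H, W)
            out += np.einsum("oi,ihw->ohw", w[:, :, dy, dx], patch)
    return out + b[:, None, None]

def prelu(x, a=0.25):
    """Parametric ReLU with slope a for negative inputs."""
    return np.where(x > 0, x, a * x)

def residual_block(x, params, a=0.25):
    """y = PReLU(F(x) + x), with F = conv -> PReLU -> conv -> PReLU -> conv."""
    y = x
    for i, (w, b) in enumerate(params):
        y = conv3x3(y, w, b)
        if i < len(params) - 1:
            y = prelu(y, a)
    return prelu(y + x, a)  # adder, then the PReLU activation layer
```

The identity skip lets the block fall back to (almost) passing its input through when F learns little, which is the property that eases the training of deeper generators.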

Figure 4.

Proposed residual block and pixel shuffle block.

To improve the reconstruction performance, residual blocks were placed between the convolutional layers and the deconvolutional layers, inspired by the skip connections in ResNet [26]. The residual blocks deepen the generator network and help it converge quickly; as shown in Figure 4, the proposed residual block can speed up convergence during training and also helps to prevent overfitting. The pixel shuffle module performs up-sampling during image reconstruction. The generator is a hierarchical structure capable of extracting feature details of the input data at different levels: the lower convolutional layers tend to capture the edge details of the input data and compress them into lower-dimensional representations, while the higher deconvolutional layers tend to decode the compressed features into a denser matrix while retaining the important information.
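Pixel shuffle up-samples by moving channel information into space: a tensor of shape (N, C·r², H, W) becomes (N, C, H·r, W·r). The NumPy sketch below reproduces the element layout documented for `torch.nn.PixelShuffle`; the upscale factor used in IR-DWT-CGAN is not given in this section, so r is left as a parameter, and the function names are hypothetical.

```python
import numpy as np

def pixel_shuffle(x, r):
    """(N, C*r*r, H, W) -> (N, C, H*r, W*r), with
    out[n, c, h*r + i, w*r + j] = x[n, c*r*r + i*r + j, h, w]."""
    n, c_r2, h, w = x.shape
    if c_r2 % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    return (x.reshape(n, c, r, r, h, w)
             .transpose(0, 1, 4, 2, 5, 3)      # -> (n, c, h, i, w, j)
             .reshape(n, c, h * r, w * r))

def pixel_unshuffle(y, r):
    """Inverse rearrangement: (N, C, H*r, W*r) -> (N, C*r*r, H, W)."""
    n, c, hr, wr = y.shape
    h, w = hr // r, wr // r
    return (y.reshape(n, c, h, r, w, r)
             .transpose(0, 1, 3, 5, 2, 4)      # -> (n, c, i, j, h, w)
             .reshape(n, c * r * r, h, w))
```

Unlike a transposed convolution, the rearrangement itself has no weights; the preceding convolution learns the r² sub-pixel channels, which avoids the checkerboard artifacts that strided deconvolutions can produce.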

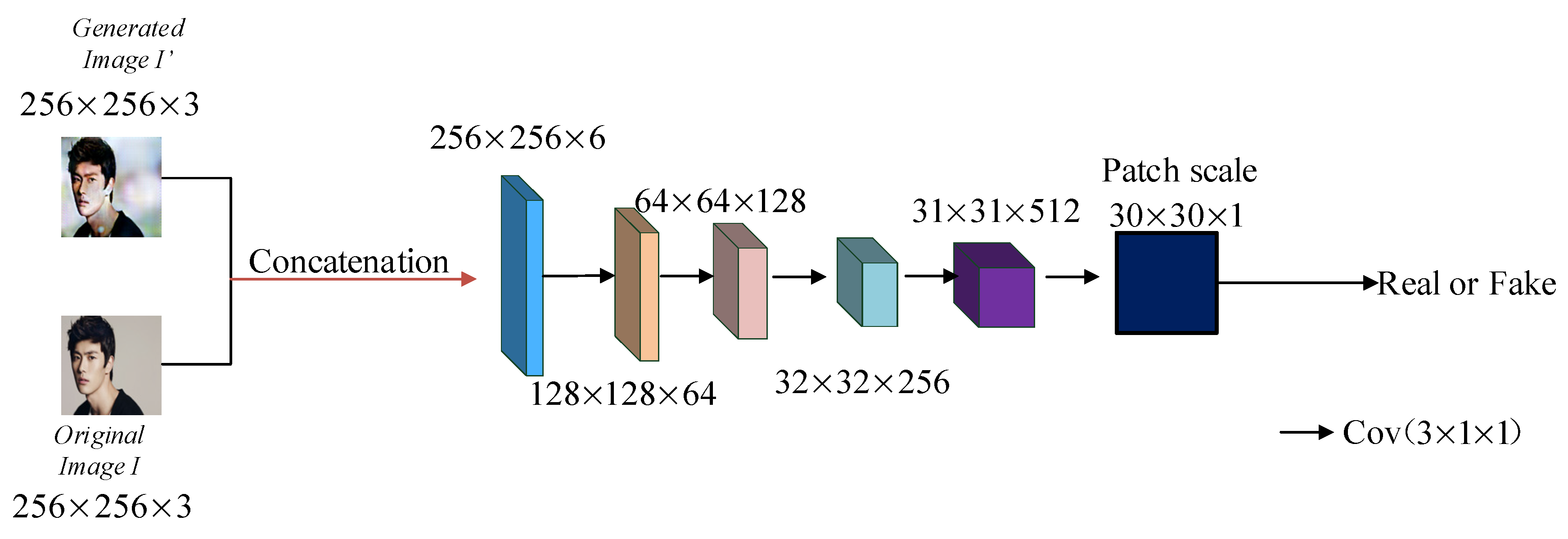

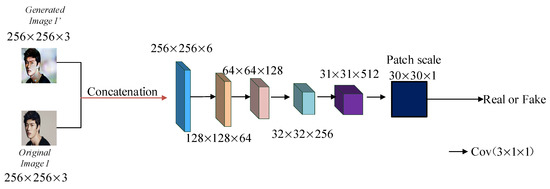

3.3. Proposed Discriminator Network

The goal of the discriminator is to accurately distinguish between the image restored by the generator and the real image. The structure of the discriminator network is shown in Figure 5. A fully convolutional neural network architecture was adopted, and the network outputs a discrimination matrix; each convolution uses a 3 × 3 kernel, a stride of 2 × 2, and 1 × 1 zero padding. The complete module consists of five CNN layers, and each element of the output matrix represents the probability that the corresponding image block in the input comes from a real sample rather than a restored one. In this way, the influence of different image regions on the discrimination result is fully taken into account, so the generator pays more attention to the details, textures, and other information of the restored image during training, which helps to reduce artifacts in the restored image. Finally, the average of all elements in the matrix is computed as the output. The discriminator adopts a fully convolutional structure and applies different weights to different feature maps to accelerate network convergence. The generator and the discriminator play a minimax game during alternating training: the generator continuously learns to approximate the data distribution of the real samples until the discriminator can no longer accurately distinguish the restored images from the real ones, at which point the network reaches a Nash equilibrium. A probability vector is obtained from the output, and the index of its maximum value indicates whether the input is real or fake.
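The size of the discrimination matrix follows from the standard convolution output formula, floor((n + 2p − k) / s) + 1. The sketch below assumes that all five layers use k = 3, s = 2, p = 1 and that the input is 256 × 256 (the image size used in this study); Table 3 is not reproduced here, so the real layer strides may differ. It also shows the final step of averaging the per-patch probabilities. Names are hypothetical.

```python
import numpy as np

def conv_out_size(n, kernel=3, stride=2, padding=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1

def discriminator_map_sizes(n=256, layers=5):
    """Side length of the feature map after each stride-2 layer (assumed configuration)."""
    sizes = []
    for _ in range(layers):
        n = conv_out_size(n)
        sizes.append(n)
    return sizes

def patch_score(logits):
    """Per-patch sigmoid probabilities averaged into one real/fake score."""
    probs = 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=float)))
    return float(probs.mean())

print(discriminator_map_sizes())  # [128, 64, 32, 16, 8] under these assumptions
```

Under these assumptions, each element of the final 8 × 8 matrix judges one region of the input, which is what lets the adversarial loss penalize local texture errors rather than only the global appearance.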

Figure 5.

Proposed discriminator module.

3.4. Parameter Settings of the Model

3.4.1. Generator Network Parameters Setting

We added two fully connected layers between the convolution and the deconvolution modules to enhance the feature mapping ability. The detailed parameters of the encoder and decoder parts of the generator are given in Table 1 and Table 2, respectively. In addition, in the proposed IR-DWT-CGAN, the rectified linear units (ReLUs) were replaced by parametric rectified linear units (PReLUs), which can achieve faster convergence than ReLUs [22]. Together, Tables 1 and 2 describe the complete structure of the generator network, while Table 3 describes the structure of the discriminator.

Table 1.

Encoder parameters of the proposed generator network.

Table 2.

Decoder architecture parameters for the proposed generator network.

3.4.2. Discriminator Network Parameter Setting

As shown in Figure 5, a fully convolutional neural network architecture is also used for the discriminator network. The network outputs a discrimination matrix, and each convolution uses a 3 × 3 kernel, a stride of 2 × 2, and 1 × 1 zero padding. The detailed parameters are listed in Table 3.

Table 3.

The architecture of the proposed discriminator network.

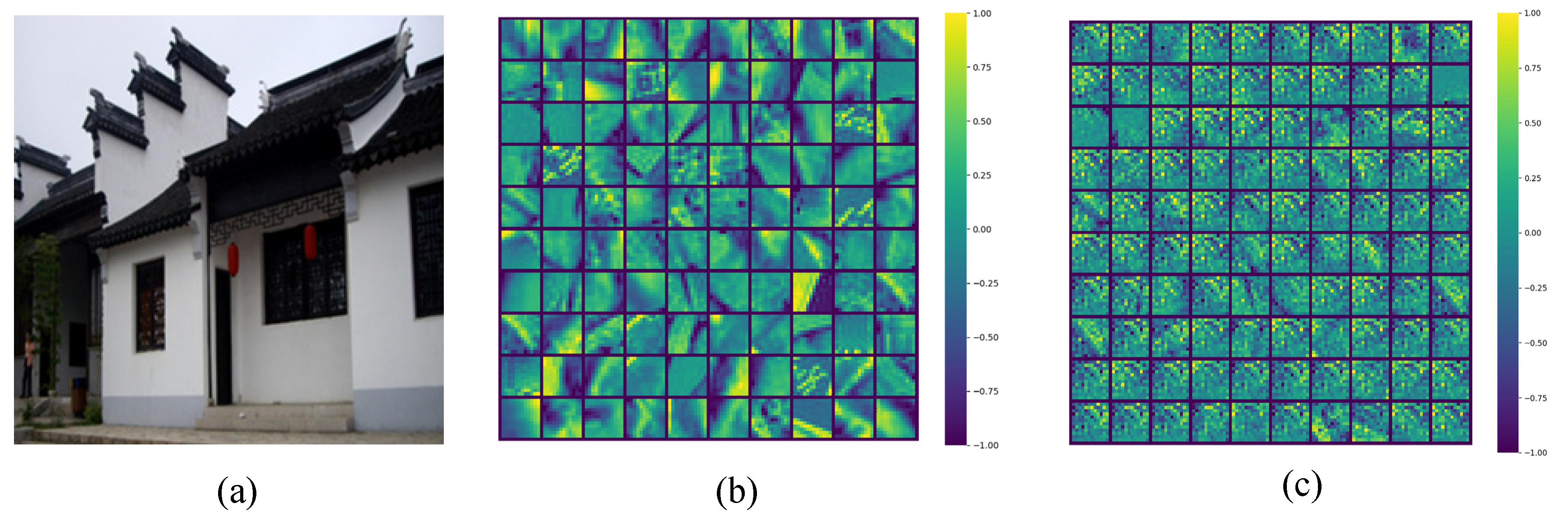

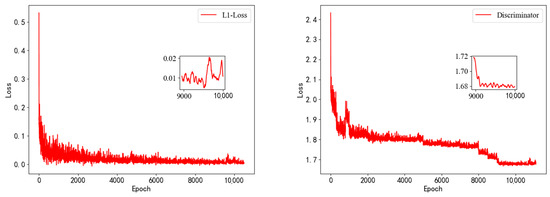

3.4.3. Image Size and Feature Maps Transformation Process

The size of the multiscale points feature map that is input to the generator is initially 256 × 256 × 3. The detailed feature map dimensions and image size variations during the image reconstruction process (both training and testing processes) are shown in Figure 6 and Figure 7.

Figure 6.

Feature map size changes in the generator network.

Figure 7.

Feature map transformation process: (a) an original image; (b) feature maps presented consecutively in the output of the generator network; (c) feature maps presented consecutively in the discriminator.

3.5. Objective Function and Modified Conditional GAN

The first step in this study was to train the generating function G, which estimates the corresponding original image X from the given multiscale points feature map P. The objective function of the proposed model combines the adversarial loss and the reconstruction loss, which are expressed, respectively, as

$$\mathcal{L}_{\mathrm{cGAN}}(G,D)=\mathbb{E}_{P,X}\left[\log D(P,X)\right]+\mathbb{E}_{P}\left[\log\left(1-D\left(P,G(P)\right)\right)\right] \tag{1}$$

$$\mathcal{L}_{\mathrm{rec}}(G)=\left\|X-G(P)\right\|_2^2+\operatorname{tr}\left(W^{\mathsf{T}}W\right) \tag{2}$$

where W denotes the weight matrix of the generator. The total loss is thus the weighted sum of these two loss functions, and the final optimization problem is

$$G^{*}=\arg\min_{G}\max_{D}\ \mathcal{L}_{\mathrm{cGAN}}(G,D)+\lambda\,\mathcal{L}_{\mathrm{rec}}(G) \tag{3}$$

where λ is the balance factor. Generally, a conditional GAN supplies noise to the generator in the form of dropout to prevent a deterministic output. However, the varied outputs caused by dropout can alter the structures of the image to be recovered, which can degrade the reconstruction performance.
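The generator's side of the weighted objective can be sketched numerically as an adversarial term plus λ times the squared-error-plus-L2 reconstruction loss described in this section. Two assumptions are labeled here: the adversarial term uses the common non-saturating form −log D(P, G(P)) instead of log(1 − D(P, G(P))), and the value of λ is not recoverable from this text, so the test uses a purely illustrative λ = 100. Function names are hypothetical.

```python
import numpy as np

EPS = 1e-12  # keeps log() finite

def adversarial_loss_g(d_fake):
    """Generator's adversarial term, non-saturating form: -E[log D(P, G(P))]."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), EPS, 1 - EPS)
    return -np.mean(np.log(d_fake))

def reconstruction_loss(x, x_hat, weights=()):
    """||X - X_hat||_2^2 plus the L2 penalty tr(W^T W) for each given weight matrix."""
    err = np.sum((np.asarray(x, dtype=float) - np.asarray(x_hat, dtype=float)) ** 2)
    reg = sum(np.trace(w.T @ w) for w in weights)  # tr(W^T W) = sum of squared entries
    return err + reg

def generator_objective(d_fake, x, x_hat, lam, weights=()):
    """Adversarial term + lambda * reconstruction loss (generator side of the objective)."""
    return adversarial_loss_g(d_fake) + lam * reconstruction_loss(x, x_hat, weights)
```

A large λ makes the pixel-wise term dominate, so the output stays close to the ground truth, while the adversarial term mainly sharpens textures that a squared error alone tends to blur.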

For the original GAN, the input to the generator is noise. For a conditional GAN (CGAN), such as the one used in this work, the input to the generator is an image, namely, the multiscale points feature map P. This image (or condition) is the coarse version of the image obtained from the inverse DWT of the interest points, and it was used to speed up training compared with using noise as the conditional input.

Therefore, a modified conditional GAN without dropout was proposed in this study, since a deterministic output is required rather than varied outputs. Finally, the reconstructed image $\hat{X}=G^{*}(P)$ is generated from P based on Equation (3).

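The reconstruction loss contains a squared error term and an L2 regularization term:

$$\mathcal{L}_{\mathrm{rec}}=\left\|X-\hat{X}\right\|_2^2+\operatorname{tr}\left(W^{\mathsf{T}}W\right) \tag{4}$$

where X and $\hat{X}$ represent the real image and the corresponding reconstructed one, respectively; W is the weight matrix; and $W^{\mathsf{T}}$ is the transpose of W. The network weights are updated with the Adam optimizer [31]:

$$W_{t}=W_{t-1}-\alpha\,\frac{\hat{m}_{t}}{\sqrt{\hat{v}_{t}}+\epsilon} \tag{5}$$

where $W_t$ and $W_{t-1}$ are the weights after and before the update, respectively; α is the initial learning rate; $\hat{m}_t$ is the first bias-corrected moment estimate; $\hat{v}_t$ is the second bias-corrected moment estimate; and ε is a constant.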
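The Adam update, including how the bias-corrected moments are formed from running averages of the gradient and squared gradient, can be sketched in a few lines. The learning rate 0.002 matches the value reported in Section 4.4; β₁ = 0.9, β₂ = 0.999, and ε = 1e-8 are the usual defaults from the Adam paper and are assumptions here, as is the function name.

```python
import numpy as np

def adam_step(theta, grad, m, v, t, lr=0.002, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update at step t (t starts at 1). Returns (theta, m, v)."""
    m = beta1 * m + (1 - beta1) * grad           # first moment (running mean of gradients)
    v = beta2 * v + (1 - beta2) * grad ** 2      # second moment (running mean of squares)
    m_hat = m / (1 - beta1 ** t)                 # bias-corrected first moment
    v_hat = v / (1 - beta2 ** t)                 # bias-corrected second moment
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v

# Usage: minimize f(w) = ||w||^2, whose gradient is 2w.
w, m, v = np.array([3.0, -1.0]), np.zeros(2), np.zeros(2)
for t in range(1, 5001):
    w, m, v = adam_step(w, 2 * w, m, v, t, lr=0.01)
```

On the first step the bias corrections make $\hat{m}_1 = g$ and $\hat{v}_1 = g^2$, so every weight moves by about α regardless of its gradient's magnitude; this per-parameter normalization is why Adam is relatively insensitive to gradient scale.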

4. Simulation Results and Analysis

4.1. Development Environment

The model in this study was run on a workstation with an Intel(R) Core(TM) i7-8700 CPU @ 3.2 GHz, 32 GB of RAM, and two GeForce RTX 2080 Ti graphics cards, running Windows 10. The integrated development environment and deep learning library were PyCharm with Python 3.7 (V. 2020.2.3, JetBrains s.r.o., Prague, Czech Republic) and PyTorch (V. 1.1.0; Adam P.; Sam G.; Soumith C. Silicon Valley, CA, USA), respectively.

The proposed IR-DWT-CGAN was trained on a subset of ImageNet [28] containing 49,100 RGB color images and their multiscale points feature maps. All test images were taken from the Waterloo dataset [29]. All images from both ImageNet and the Waterloo dataset were resized to 256 × 256 for training and testing, which also reduced storage requirements.

4.2. Quantitative Metrics

Five quantitative image reconstruction quality indices were used for the performance evaluation, namely, the mean square error (MSE), peak signal-to-noise ratio (PSNR), mean structural similarity (MSSIM), relative dimensionless global error in synthesis (ERGAS, from its French acronym), and average absolute pixel error (APE).

The MSE measures the difference between the reconstructed image and the original one by computing the variation in pixel values; the reconstructed image is close to the reference image if the MSE is near zero. Let $x_{ij}$ be the pixel values of the image to be evaluated, X, and $y_{ij}$ those of its reference image, Y, both of size M × N. The MSE between X and Y is then defined as

$$\mathrm{MSE}=\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x_{ij}-y_{ij}\right)^2$$

The SSIM, which measures the similarity between images X and Y, is

$$\mathrm{SSIM}(X,Y)=\frac{\left(2\mu_X\mu_Y+C_1\right)\left(2\sigma_{XY}+C_2\right)}{\left(\mu_X^2+\mu_Y^2+C_1\right)\left(\sigma_X^2+\sigma_Y^2+C_2\right)}$$

where $\mu_X$ and $\mu_Y$ are the mean intensities of the two images; $\sigma_X$ and $\sigma_Y$ are their standard deviations; $\sigma_{XY}$ is their covariance; and $C_1$ and $C_2$ are constants used to maintain stability, with $C_1=(K_1L)^2$ and $C_2=(K_2L)^2$, where L is the dynamic range of the pixel values and, by default, $K_1 = 0.01$ and $K_2 = 0.03$. SSIM compares the local patterns of pixel intensities between the two images and typically takes values between 0 and 1; an SSIM value of 1 indicates that the reference image and the reconstructed one are identical. The MSSIM is defined as the mean SSIM over non-overlapping image blocks.
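The SSIM formula and its block-averaged MSSIM can be sketched for a single grayscale channel as below. Following the text, SSIM is computed from global statistics inside each non-overlapping block rather than with the 11 × 11 Gaussian window of the original SSIM paper; the 8 × 8 block size is a hypothetical choice, and RGB images would be handled one channel at a time.

```python
import numpy as np

def ssim_global(x, y, L=255.0, k1=0.01, k2=0.03):
    """SSIM of two equally sized arrays using their global means, variances, and covariance."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    c1, c2 = (k1 * L) ** 2, (k2 * L) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2))

def mssim(x, y, block=8, L=255.0):
    """Mean SSIM over non-overlapping block x block tiles (partial edge tiles are skipped)."""
    h, w = x.shape
    scores = [ssim_global(x[i:i + block, j:j + block], y[i:i + block, j:j + block], L)
              for i in range(0, h - block + 1, block)
              for j in range(0, w - block + 1, block)]
    return float(np.mean(scores))
```

Averaging over blocks keeps the index sensitive to local structure: a reconstruction with correct global statistics but smeared edges still scores poorly in the blocks that contain those edges.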

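The PSNR is defined as

$$\mathrm{PSNR}=10\log_{10}\frac{L^2}{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(x_{ij}-y_{ij}\right)^2}$$

where M × N is the size of the original image and L is the dynamic range of the pixel values (255 for 8-bit images).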
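The MSE, its square root (the RMSE reported in the abstract and in Figure 14), and the PSNR can be computed directly from the formulas above. This sketch assumes 8-bit images (L = 255) and returns infinity for identical images, where the PSNR is undefined; the function names are hypothetical.

```python
import numpy as np

def mse(x, y):
    """Mean squared error over all pixels."""
    return float(np.mean((np.asarray(x, dtype=float) - np.asarray(y, dtype=float)) ** 2))

def rmse(x, y):
    """Root-mean-square error."""
    return float(np.sqrt(mse(x, y)))

def psnr(x, y, L=255.0):
    """PSNR in dB; infinite when the images are identical."""
    e = mse(x, y)
    return float("inf") if e == 0 else float(10 * np.log10(L ** 2 / e))

# A uniform error of 16 gray levels gives MSE = 256 and PSNR of about 24.05 dB.
print(psnr(np.zeros((4, 4)), np.full((4, 4), 16.0)))
```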

The ERGAS measures image quality in terms of the normalized average error of each band; the smaller the ERGAS, the more similar the reconstructed image is to the reference image. The ERGAS is defined as

$$\mathrm{ERGAS}=100\,d\,\sqrt{\frac{1}{S}\sum_{i=1}^{S}\frac{\mathrm{MSE}_i}{\mu_i^2}}$$

where $\mu_i$ is the mean of Y over the ith sub-block, $\mathrm{MSE}_i$ is the mean squared error between X and Y over the ith sub-block, d is a spatial down-sampling factor, and S is the number of partitioned non-overlapping sub-blocks.

The APE represents the average absolute pixel error between images X and Y and is defined as

$$\mathrm{APE}=\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left|x_{ij}-y_{ij}\right|$$
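The ERGAS and APE definitions above translate into the following sketch for a single channel. The block size (8) and the down-sampling factor d = 1 (appropriate when the reconstruction and reference have the same resolution) are hypothetical defaults, and the small `eps` guard against all-black reference blocks is an added assumption, not part of the definition.

```python
import numpy as np

def _blocks(img, block):
    """Yield non-overlapping block x block tiles (partial edge tiles are skipped)."""
    h, w = img.shape
    for i in range(0, h - block + 1, block):
        for j in range(0, w - block + 1, block):
            yield img[i:i + block, j:j + block]

def ergas(x, y, block=8, d=1.0, eps=1e-12):
    """ERGAS = 100 * d * sqrt(mean_i(MSE_i / mu_i^2)), with mu_i taken from the reference y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    terms = []
    for xb, yb in zip(_blocks(x, block), _blocks(y, block)):
        mse_i = np.mean((xb - yb) ** 2)
        mu_i = yb.mean()
        terms.append(mse_i / max(mu_i ** 2, eps))
    return float(100.0 * d * np.sqrt(np.mean(terms)))

def ape(x, y):
    """Average absolute pixel error."""
    return float(np.mean(np.abs(np.asarray(x, dtype=float) - np.asarray(y, dtype=float))))
```

Normalizing each block's error by its mean intensity makes ERGAS a relative measure, so an error of 10 gray levels counts more in a dark region than in a bright one.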

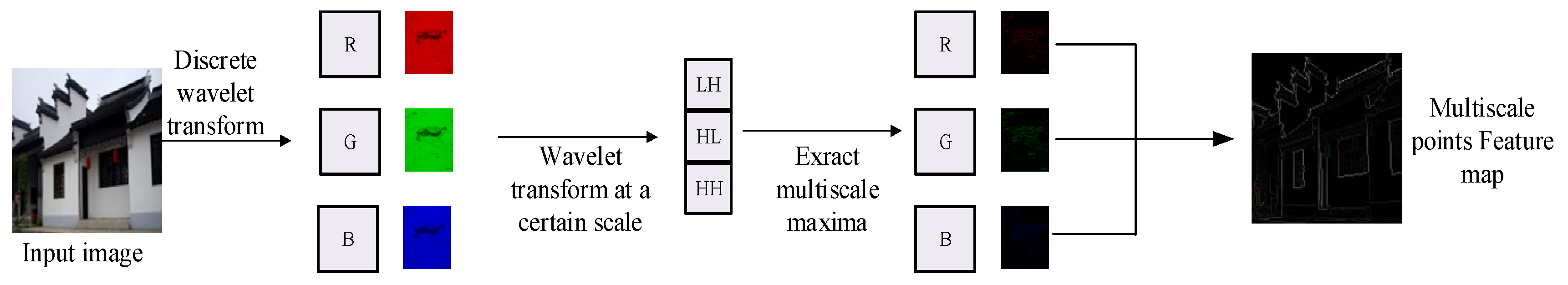

4.3. Proposed Database MAX

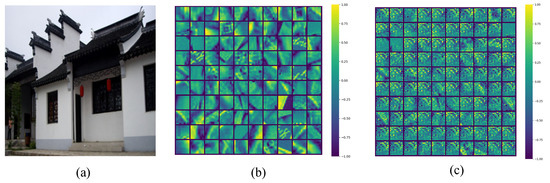

In this study, the proposed database MAX was built from 49,100 color images and their corresponding multiscale points feature maps, enough to train the large number of weights in the deep CNN structure. The MAX database contains pairs of resized natural images and their multiscale points feature maps, as shown in Figure 8. The procedure used to build the database is as follows.

Figure 8.

Processing of multiscale singular points map.

- Step 1: For a three-channel RGB image, a two-scale wavelet transform was performed separately on each of the three components.

- Step 2: The low-frequency band was discarded, while the three high-frequency bands were retained, and the maxima of these three bands were taken as image features. The wavelet model used to compute the DWT coefficients should be chosen so that the fewest local maxima suffice to reconstruct an approximate image. A coefficient was selected as a local maximum when its modulus in the DWT domain was the largest within its neighborhood.

- Step 3: The maxima obtained in Step 2 were regarded as interest points, and an inverse DWT was applied to them, with the low-frequency band set to zero, to obtain a very coarse version of the desired restored image.
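Steps 1 to 3 can be sketched end to end for one image. This is a simplified illustration under stated assumptions, not the authors' Matlab implementation: it uses the Haar wavelet instead of the wavelet from [1], and it keeps a detail coefficient when its modulus is the largest in its 3 × 3 neighborhood within the same sub-band, which is simpler than Mallat's maxima detection along the gradient direction. Image sides must be divisible by 2 to the power `levels`; all names are hypothetical.

```python
import numpy as np

def haar_dwt2(x):
    """One level of the orthonormal 2-D Haar DWT: (LL, LH, HL, HH)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return (a + b + c + d) / 2, (a + b - c - d) / 2, (a - b + c - d) / 2, (a - b - c + d) / 2

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out

def keep_local_maxima(band):
    """Zero every coefficient whose modulus is not the (nonzero) maximum of its 3x3 neighborhood."""
    mod = np.abs(band)
    p = np.pad(mod, 1, mode="constant", constant_values=-1.0)
    h, w = band.shape
    neigh = np.stack([p[dy:dy + h, dx:dx + w]
                      for dy in range(3) for dx in range(3) if (dy, dx) != (1, 1)])
    is_max = (mod >= neigh.max(axis=0)) & (mod > 0)
    return np.where(is_max, band, 0.0)

def points_feature_map(channel, levels=2):
    """Step 1: DWT; Step 2: keep detail maxima, drop LL; Step 3: inverse DWT."""
    ll = np.asarray(channel, dtype=float)
    details = []
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)
        details.append(tuple(keep_local_maxima(b) for b in (lh, hl, hh)))
    rec = np.zeros_like(ll)  # the low-frequency band is set to zero
    for lh, hl, hh in reversed(details):
        rec = haar_idwt2(rec, lh, hl, hh)
    return rec

def points_feature_map_rgb(img, levels=2):
    """Apply the per-channel procedure to each of the R, G, and B components."""
    return np.stack([points_feature_map(img[..., k], levels) for k in range(img.shape[-1])],
                    axis=-1)
```

Because the LL band is zeroed, the resulting feature map has zero mean and contains only the edge-like structure carried by the retained maxima, which is why it is such a coarse version of the image and why the generator must learn to restore the missing low-frequency content.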

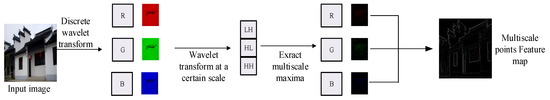

4.4. Training Process

The maximum number of epochs, mini-batch size, and learning rate used in IR-DWT-CGAN were set to 12,000, 25, and 0.002, respectively. During the training period, random jitter was applied to the scale [30]. Moreover, adaptive moment (Adam) optimization [31] was used to train the IR-DWT-CGAN model. During the training process, the generator network and the discriminator network were trained simultaneously. To effectively suppress overfitting and accelerate the network convergence, an Adam optimizer with a batch size of 8 was used to minimize the backpropagation-based loss function.
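The random jitter mentioned above must be applied identically to each feature map and its target image, or the pair would no longer be aligned. The sketch below assumes the pix2pix-style recipe (resize 256 × 256 inputs to 286 × 286, then take a random 256 × 256 crop); whether [30] uses exactly these sizes is an assumption, nearest-neighbor resizing is used only to keep the example dependency-free, and the names are hypothetical.

```python
import numpy as np

def resize_nearest(img, size):
    """Nearest-neighbor resize of an (H, W) or (H, W, C) array to (size, size)."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]

def random_jitter_pair(feature_map, target, load_size=286, crop_size=256, rng=None):
    """Upscale both images, then cut the same random crop from each so the pair stays aligned."""
    rng = np.random.default_rng() if rng is None else rng
    fm = resize_nearest(feature_map, load_size)
    tg = resize_nearest(target, load_size)
    top = rng.integers(0, load_size - crop_size + 1)
    left = rng.integers(0, load_size - crop_size + 1)
    return (fm[top:top + crop_size, left:left + crop_size],
            tg[top:top + crop_size, left:left + crop_size])
```

Sharing one crop offset for both images is the key design choice: the jitter varies the scale and position seen by the network without breaking the pixel correspondence that the reconstruction loss relies on.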

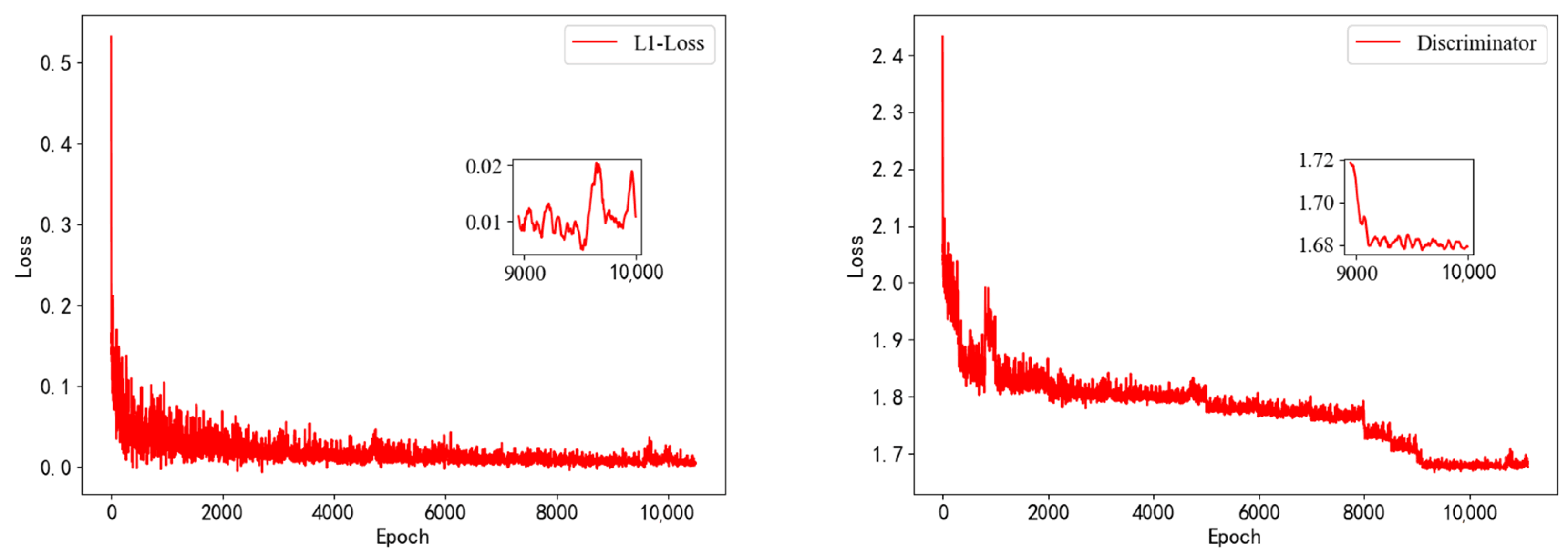

The network training lasted more than 100 days and converged after 8000 epochs. As shown in Figure 9, both the generator loss and the discriminator loss decreased rapidly in the first few thousand iterations and approached very small values after 8000 epochs.

Figure 9.

Loss function curves for the generator loss and the discriminator loss.

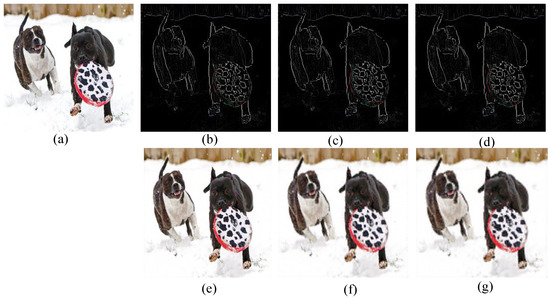

4.5. Results

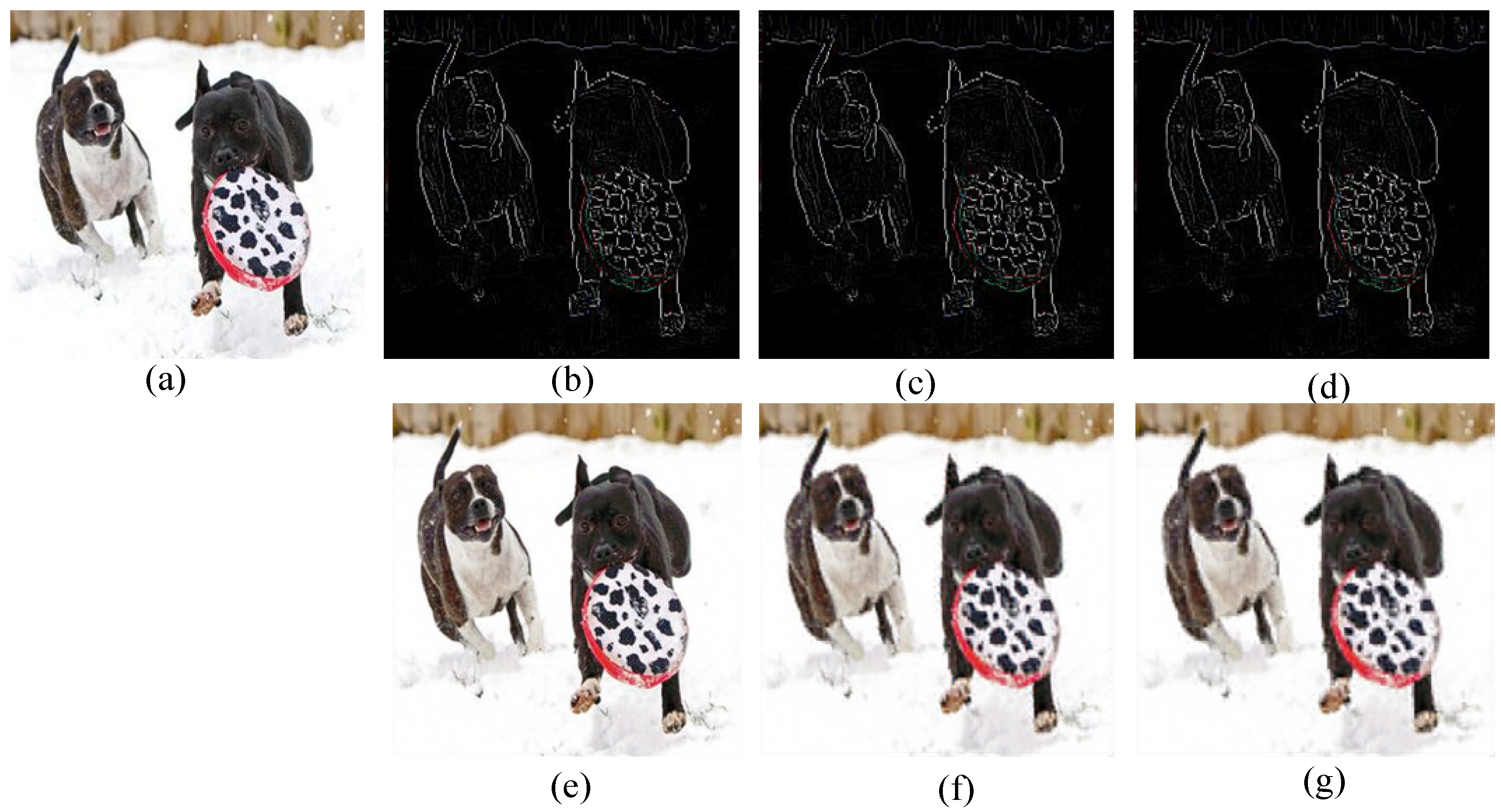

The images reconstructed from their multiscale feature maps at different scales are shown in Figure 10. In images (b), (c), and (d), a clear texture of the original image can be perceived; however, the number of interest points with high magnitude values decreased as the scale increased. As shown in Table 4, the images reconstructed from the multiscale interest point map at scale = 1 had better quality in terms of PSNR, MSE, MSSIM, and ERGAS while using fewer interest points. In summary, the DWT-based multiscale points feature maps at scale = 1 performed better than those at scale = 2 and scale = 3.

Figure 10.

Images reconstructed based on different multiscale interest points feature maps at different scales. (a) Original image, (b) and (e) are the multiscale points feature maps and the corresponding reconstructed images when scale = 1; (c) and (f) are the multiscale singular maps and the corresponding reconstructed images when scale = 2; (d) and (g) are the multiscale singular maps and the corresponding reconstructed images when scale = 3.

Table 4.

Average number of interest points of the reconstructed images at different scales and their corresponding image PSNR, MSE, MSSIM, and ERGAS values.

4.6. Analysis of the Image Reconstruction Schemes

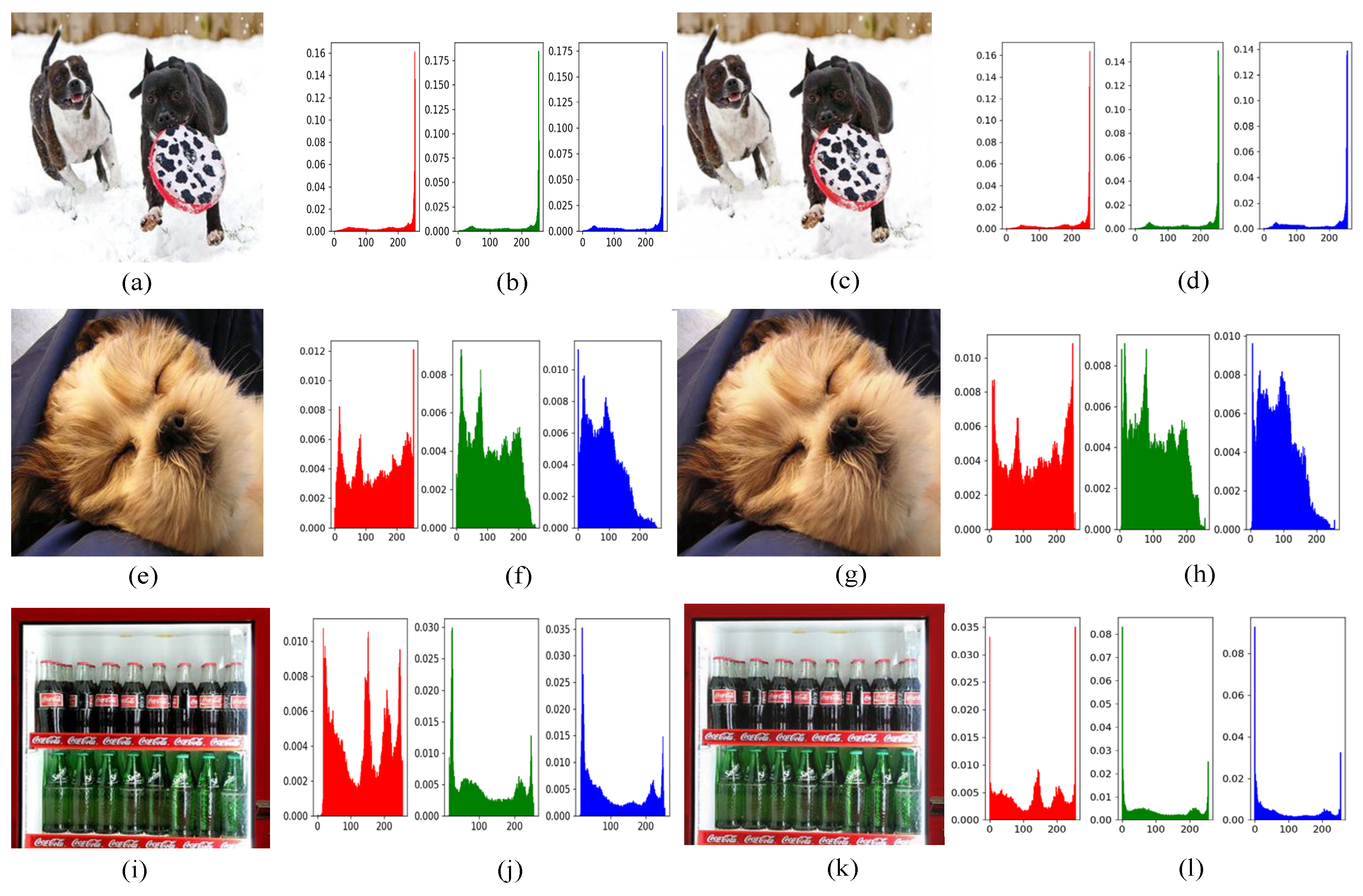

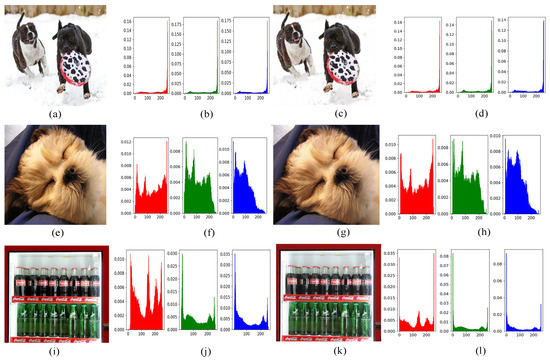

Figure 11 shows the histograms of the original and reconstructed images. At scale = 1, the shapes of the image histograms did not change significantly in any of the three channels, which provides further evidence of the desired IR performance.

Figure 11.

Image histograms for the three test images: (a), (e), and (i): original images; (b), (f), and (j): histograms of (a), (e), and (i) for the three (RGB) channels, respectively; (c), (g), and (k): reconstructed images; (d), (h), and (l): histograms of (c), (g), and (k) for the three (RGB) channels, respectively, when scale = 1.

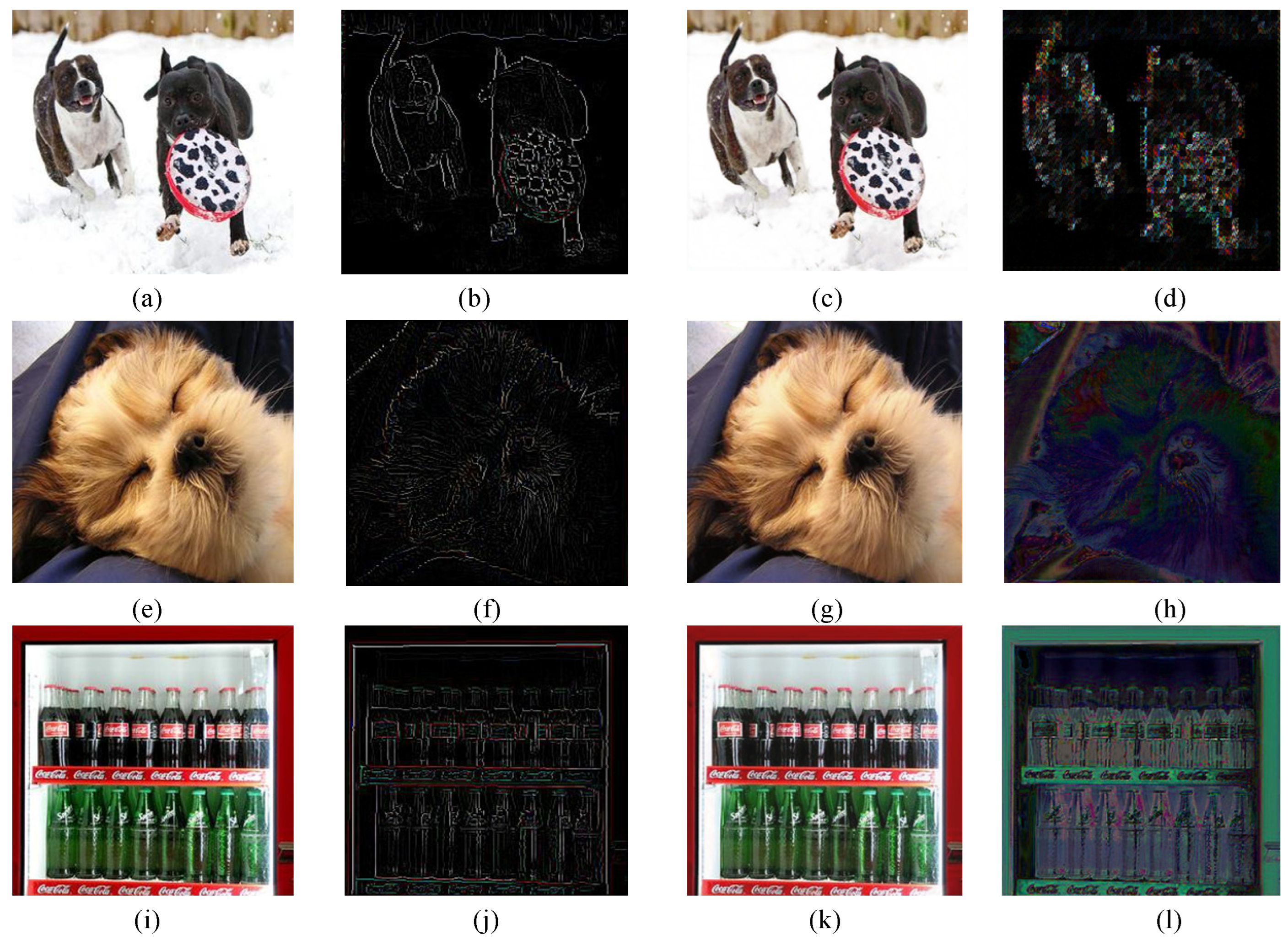

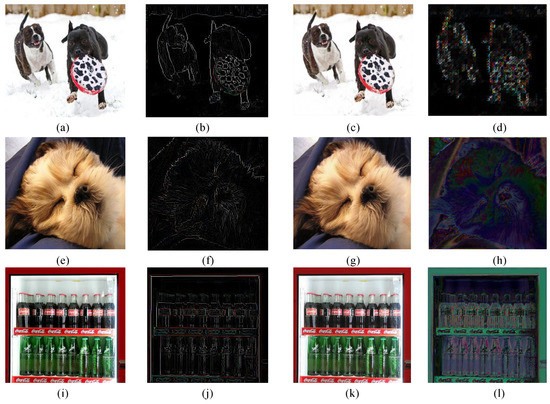

In addition, the difference image between the original and reconstructed images is often used to visualize the error between them. The difference images are shown in the fourth column of Figure 12, where (d), (h), and (l) are |(a)–(c)|, |(e)–(g)|, and |(i)–(k)|, respectively. Slight differences near the edges are visible.

Figure 12.

Difference images between the test image and the reconstructed one. (a), (e), and (i) are the original test images; (b), (f), and (j) are the multiscale interest points feature maps of (a), (e), and (i), respectively; (c), (g), and (k) are the reconstructed images of (a), (e), and (i), respectively; (d), (h), and (l) are the difference images |(a)–(c)|, |(e)–(g)|, and |(i)–(k)|, respectively, when scale = 1.

The evaluation metrics PSNR, MSE, and MSSIM, as well as the APE for the RGB channels, are presented in Table 5. As shown in Table 5, for the image in the first row of Figure 12, the PSNR of the reconstructed image was sufficiently high, and the structural similarity between the original and reconstructed images was acceptable, although the MSE between them was noticeable. Similar results were obtained for the other two images and their reconstructions in the second and third rows of Figure 12.

Table 5.

PSNR and image MSSIM, MSE, and APE values for the reconstructed images.

4.7. Data Requirements for the Reconstruction Scheme

Data requirements are an important consideration in IR. As shown in Table 6, the percentage of interest points retained from the original image in the three (RGB) channels shows that the proposed image reconstruction algorithm is very data-efficient, and its time consumption was almost negligible. Each group contained five images of size 256 × 256. The numbers of interest points are listed in Table 6.

Table 6.

MSSIM, MSE, and PSNR values of the proposed IR-DWT-CGAN.

4.8. Comparison of the Image Quality and Time Consumption with Other Algorithms

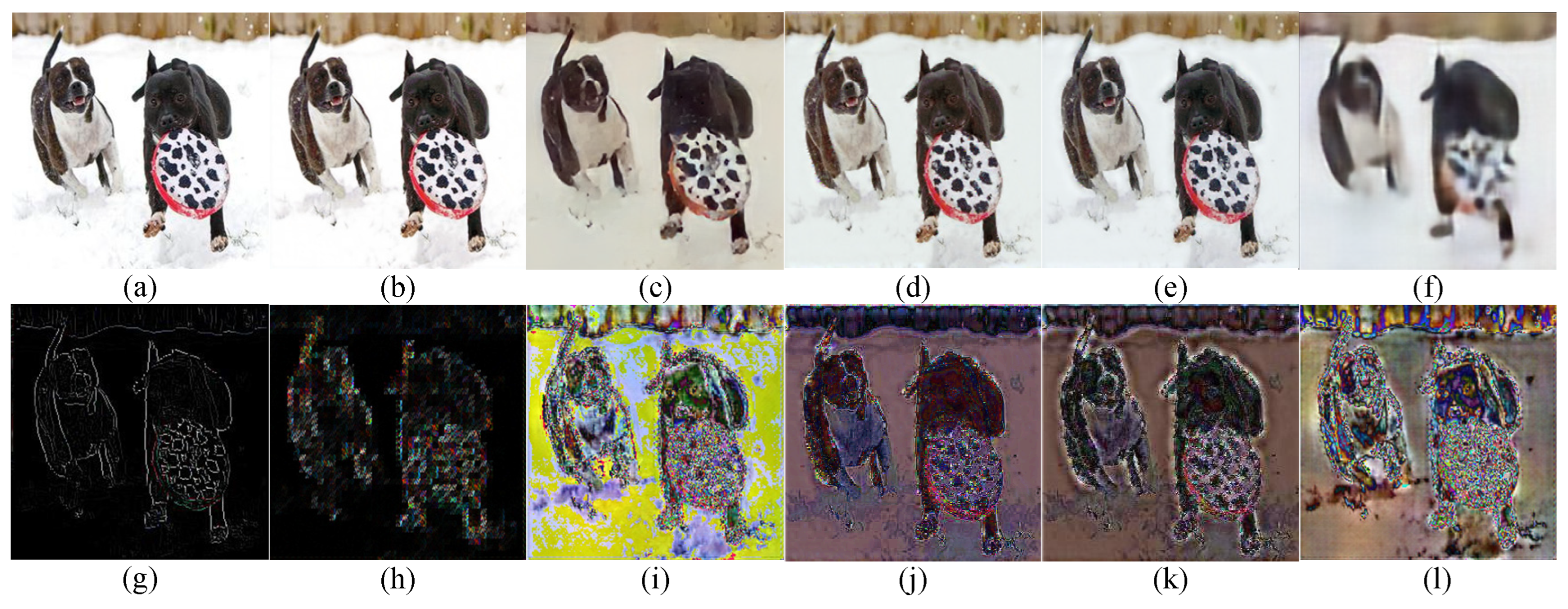

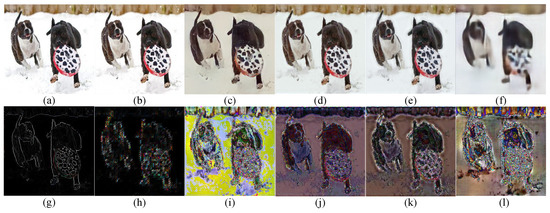

The proposed algorithm was compared with the algorithms in Refs. [1,3,4,32]. Figure 13 presents the images reconstructed by the five algorithms. Figure 13 shows that the generative network markedly improved the reconstruction quality and that the proposed algorithm gave the best results.

Figure 13.

Comparison of the IR results for different algorithms: (a) original image; (b) proposed algorithm; (c) Ref. [4]; (d) Ref. [1]; (e) Ref. [3]; (f) Ref. [32]; (g) multiscale interest point feature map of (a); (h), (i), (j), (k), and (l) are the difference images |(a)–(b)| × 5, |(a)–(c)| × 5, |(a)–(d)| × 5, |(a)–(e)| × 5, and |(a)–(f)| × 5, respectively.

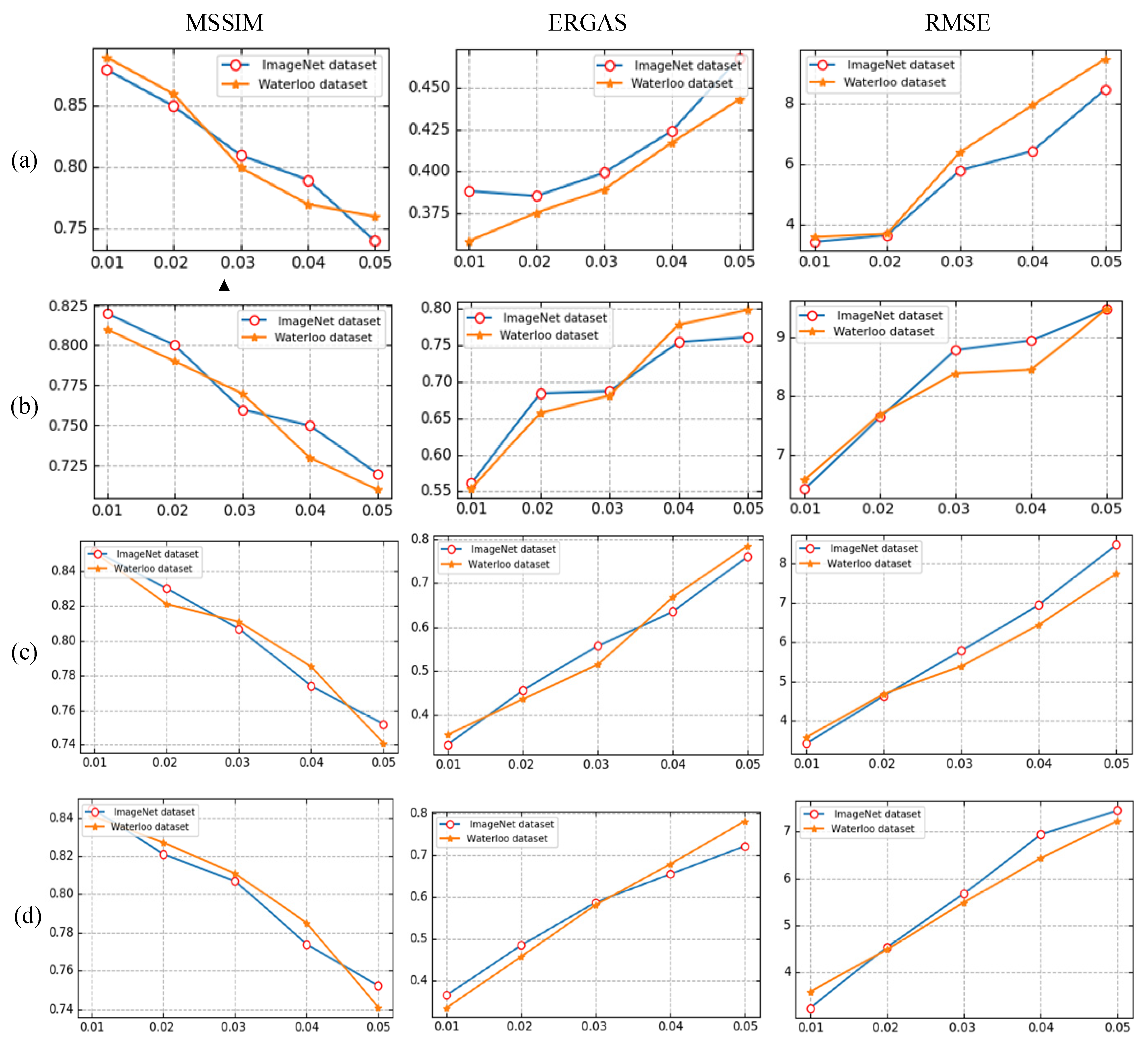

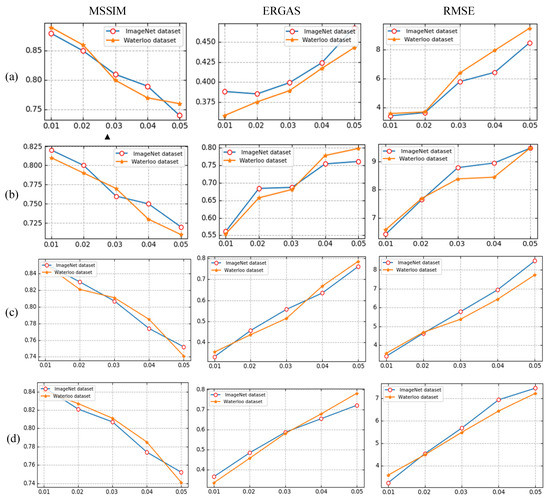

In addition, we conducted experiments to test the robustness of the proposed model to different levels of noise added to the original images. The results are shown in Figure 14. As can be seen in Figure 14, the MSSIM decreased as the noise intensity increased, but it remained within an acceptable range. Compared with the methods in Refs. [1,3,32], the proposed method provided a substantial improvement in terms of MSSIM, ERGAS, and RMSE. As shown in Table 7, the average time consumption of the proposed method was 6.862 s, 5.628 s, and 5.651 s lower than that of Refs. [1], [3], and [32], respectively. It can be concluded that the proposed IR-DWT-CGAN algorithm is more efficient than the other IR algorithms based on the alternating projection method.
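The RMSE and ERGAS curves can be computed per RGB band. ERGAS (relative dimensionless global error in synthesis) is commonly defined as ERGAS = 100 · (h/l) · sqrt((1/K) Σ_k (RMSE_k / μ_k)²). Here μ_k is the mean of band k of the reference image, K is the number of bands, and h/l is the resolution ratio. Lower values are better. The sketch below assumes h/l = 1 because the reconstruction has the same size as the original. This choice and the helper names are assumptions, not the authors' documented settings.

```python
import math


def rmse(ref, est):
    """Root-mean-square error between two equally sized 2-D lists (one band)."""
    sq = [(a - b) ** 2 for ra, rb in zip(ref, est) for a, b in zip(ra, rb)]
    return math.sqrt(sum(sq) / len(sq))


def ergas(ref_bands, est_bands, ratio=1.0):
    """ERGAS = 100 * ratio * sqrt(mean over bands of (RMSE_k / mean_k)**2).

    `ratio` is the resolution ratio h/l; 1.0 is an assumption for
    same-size reconstruction.
    """
    if len(ref_bands) != len(est_bands) or not ref_bands:
        raise ValueError("need the same, non-zero number of bands")
    terms = []
    for ref, est in zip(ref_bands, est_bands):
        values = [v for row in ref for v in row]
        mu = sum(values) / len(values)  # mean of the reference band
        if mu == 0:
            raise ValueError("ERGAS is undefined for a zero-mean band")
        terms.append((rmse(ref, est) / mu) ** 2)
    return 100.0 * ratio * math.sqrt(sum(terms) / len(terms))


ref = [[100, 100], [100, 100]]
est = [[110, 90], [100, 100]]
print(rmse(ref, est), ergas([ref], [est]))
```

Dividing each band's RMSE by its mean makes ERGAS insensitive to the overall brightness of a band. This is why it complements the absolute RMSE in Figure 14.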

Figure 14.

Comparison of the RMSE, ERGAS, and MSSIM versus noise level for different algorithms: (a) proposed algorithm, (b) Ref. [32], (c) Ref. [1], and (d) Ref. [3].

Table 7.

Time consumption compared with other methods.

5. Conclusions

A new dataset based on multiscale interest points and a new CGAN-based image reconstruction model, IR-DWT-CGAN, were proposed for the first time. The new database, named MAX, was constructed from pairs of natural images and their corresponding multiscale interest point feature maps. The proposed IR-DWT-CGAN model treats wavelet maxima as interest points, from which a coarse version of the image to be reconstructed is obtained using the inverse DWT. The generator network reconstructs the image from the multiscale interest point feature maps. The discriminator network distinguishes the restored images from their corresponding ground truths during training and is not used during testing. The experimental results demonstrated the feasibility of the proposed IR-DWT-CGAN, and the high compression ratio validated the effectiveness of the proposed image reconstruction algorithm. Furthermore, comparisons of different IR algorithms showed that the IR-DWT-CGAN model outperformed other typical classical IR algorithms.
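The front end summarized above can be illustrated with a toy single-level version: wavelet maxima serve as interest points, and an inverse DWT turns them into a coarse image. This is a sketch under stated assumptions, not the IR-DWT-CGAN implementation. It uses a single-level, unnormalized Haar transform and treats local maxima (3 × 3 neighborhood) of the modulus of the horizontal and vertical detail coefficients as interest points. Every other coefficient is set to zero before the transform is inverted. The wavelet, the number of scales, the maxima rule, and the hypothetical `keep_approx` option are all illustrative choices. The generator network that refines the coarse image is not shown.

```python
import math


def haar_1d(v):
    """One Haar analysis step: pairwise averages (low) and half-differences (high)."""
    half = len(v) // 2
    low = [(v[2 * i] + v[2 * i + 1]) / 2 for i in range(half)]
    high = [(v[2 * i] - v[2 * i + 1]) / 2 for i in range(half)]
    return low, high


def ihaar_1d(low, high):
    """Inverse of haar_1d: x0 = low + high, x1 = low - high."""
    out = []
    for s, t in zip(low, high):
        out.extend([s + t, s - t])
    return out


def transpose(m):
    return [list(col) for col in zip(*m)]


def haar_2d(img):
    """Single-level 2-D Haar DWT; returns (ll, lh, hl, hh), row filter first."""
    if len(img) % 2 or len(img[0]) % 2:
        raise ValueError("image dimensions must be even")
    rows = [haar_1d(row) for row in img]

    def split_cols(m):
        cols = [haar_1d(col) for col in transpose(m)]
        return transpose([lo for lo, _ in cols]), transpose([hi for _, hi in cols])

    ll, lh = split_cols([lo for lo, _ in rows])
    hl, hh = split_cols([hi for _, hi in rows])
    return ll, lh, hl, hh


def ihaar_2d(ll, lh, hl, hh):
    """Inverse of haar_2d: undo the column step, then the row step."""
    def merge_cols(lo, hi):
        return transpose([ihaar_1d(a, d) for a, d in zip(transpose(lo), transpose(hi))])

    lo_rows, hi_rows = merge_cols(ll, lh), merge_cols(hl, hh)
    return [ihaar_1d(a, d) for a, d in zip(lo_rows, hi_rows)]


def local_maxima_mask(mod, threshold=0.0):
    """True where mod exceeds threshold and is >= all of its 8 neighbors."""
    h, w = len(mod), len(mod[0])
    mask = [[False] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            v = mod[r][c]
            if v <= threshold:
                continue
            neighbors = [mod[rr][cc]
                         for rr in range(max(0, r - 1), min(h, r + 2))
                         for cc in range(max(0, c - 1), min(w, c + 2))
                         if (rr, cc) != (r, c)]
            mask[r][c] = all(v >= n for n in neighbors)
    return mask


def coarse_from_maxima(img, threshold=0.0, keep_approx=False):
    """Keep coefficients only at detail-modulus maxima, then invert the DWT."""
    ll, lh, hl, hh = haar_2d(img)
    # Modulus of the horizontal/vertical details, in the spirit of wavelet maxima
    mod = [[math.hypot(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(lh, hl)]
    mask = local_maxima_mask(mod, threshold)

    def keep(band):
        return [[v if m else 0.0 for v, m in zip(rv, rm)] for rv, rm in zip(band, mask)]

    ll_kept = ll if keep_approx else keep(ll)
    return ihaar_2d(ll_kept, keep(lh), keep(hl), keep(hh)), mask


step = [[0, 100, 100, 100] for _ in range(4)]
coarse, mask = coarse_from_maxima(step)
print(mask)
print(coarse)
```

On this step image, the only interest points lie along the edge. The coarse result keeps the edge and discards the flat regions, which is loosely analogous to the sparse input that the generator is trained to complete.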

Author Contributions

Supervision, B.T., P.C. and N.Z.; Writing—original draft, S.L.; Writing—review & editing, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 61861029; Nanrun Zhou).

Data Availability Statement

The data used in this article are available from the Waterloo Exploration Database (https://ece.uwaterloo.ca/~k29ma/exploration/) and the ImageNet dataset (https://image-net.org/).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Mallat, S.; Hwang, W.L. Singularity detection and processing with wavelets. IEEE Trans. Inf. Theory 1992, 38, 617–643.

2. Maji, S.K.; Yahia, H.M.; Badri, H. Reconstructing an image from its edge representation. Digit. Signal Process. 2013, 23, 1867–1876.

3. Soulard, R.; Carré, P. Characterization of color images with multiscale monogenic maxima. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 2289–2301.

4. Liu, S.H.; Tremblais, B.; Carré, P.; Zhou, N.R.; Wu, J.H. Image reconstruction from multiscale singular points based on the dual-tree complex wavelet transform. Secur. Commun. Netw. 2021, 2021, 6752468.

5. Lei, B.; Li, X.; Zhang, J.; Wen, J. Calculation of projection matrix in image reconstruction based on neural network. In Proceedings of the 3rd International Conference on Computational Intelligence and Applications 2018, Hong Kong, China, 28–30 July 2018; pp. 166–170.

6. Xiao, C.; Li, Q.; Lei, Z.; Zhao, G.; Chen, Z.; Huang, Y. Image reconstruction with deep CNN for mirrored aperture synthesis. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11.

7. Jeyaraj, P.R.; Nadar, E.R.S. Dynamic image reconstruction and synthesis framework using deep learning algorithm. IET Image Process. 2020, 14, 1219–1226.

8. Antholzer, S.; Haltmeier, M.; Nuster, R.; Schwab, J. Photoacoustic image reconstruction via deep learning. In Proceedings of the SPIE 2018, San Francisco, CA, USA, 27 January–1 February 2018; Volume 10494.

9. Gan, W.; Sun, Y.; Eldeniz, C.; Liu, J.; An, H.; Kamilov, U.S. Deep image reconstruction using unregistered measurements without groundtruth. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging, Nice, France, 13–16 April 2021; pp. 1531–1534.

10. Yu, M.; Wang, H.; Liu, M.; Li, P. Overview of research on image super-resolution reconstruction. In Proceedings of the IEEE International Conference on Information Communication and Software Engineering, Chengdu, China, 19–21 March 2021; pp. 131–135.

11. Wang, G.; Jacob, M.; Mou, X.; Shi, Y.; Eldar, Y. Deep tomographic image reconstruction: Yesterday, today, and tomorrow. Editorial for the 2nd special issue "Machine learning for image reconstruction". IEEE Trans. Med. Imaging 2021, 40, 2956–2964.

12. Chen, J.; Chen, Y. Parametric comparison between sparsity-based and deep learning-based image reconstruction of super-resolution fluorescence microscopy. Biomed. Opt. Express 2021, 12, 5246–5260.

13. Zeng, T.; Lam, E.Y. Robust reconstruction with deep learning to handle model mismatch in lensless imaging. IEEE Trans. Comput. Imaging 2021, 7, 1080–1092.

14. Pawar, K.; Egan, G.F.; Chen, Z. Domain knowledge augmentation of parallel MR image reconstruction using deep learning. Comput. Med. Imaging Graph. 2021, 92, 1–12.

15. Wang, S.; Xiao, T.; Liu, Q.; Zheng, H. Deep learning for fast MR imaging: A review for learning reconstruction from incomplete k-space data. Biomed. Signal Process. Control 2021, 68, 102579.

16. Ding, Q.; Nan, Y.; Gao, H.; Ji, H. Deep learning with adaptive hyper-parameters for low-dose CT image reconstruction. IEEE Trans. Comput. Imaging 2021, 7, 648–660.

17. Farnia, P.; Mohammadi, M.; Najafzadeh, E.; Alimohamadi, M.; Makkiabadi, B.; Ahmadian, A. High-quality photoacoustic image reconstruction based on deep convolutional neural network: Towards intra-operative photoacoustic imaging. Biomed. Phys. Eng. Express 2020, 6, 045019.

18. Paridar, R.; Mozaffarzadeh, M.; Mehrmohammadi, M.; Orooji, M. Photoacoustic image formation based on sparse regularization of minimum variance beamformer. Biomed. Opt. Express 2018, 9, 2544–2561.

19. Wang, Y.; Ge, X.; Ma, H.; Qi, S.; Zhang, G.; Yao, Y. Deep learning in medical ultrasound image analysis: A review. IEEE Access 2021, 9, 54310–54324.

20. Hanumanth, P.; Bhavana, P.; Subbarayappa, S. Application of deep learning and compressed sensing for reconstruction of images. J. Phys. Conf. Ser. 2020, 1706, 012068.

21. Mirza, M.; Osindero, S. Conditional generative adversarial nets. arXiv 2014, arXiv:1411.1784.

22. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention 2015, Munich, Germany, 5–9 October 2015; pp. 234–241.

23. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the 27th International Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; Volume 2, pp. 2672–2680.

24. Prabhat; Nishant; Vishwakarma, D. Comparative analysis of deep convolutional generative adversarial network and conditional generative adversarial network using hand written digits. In Proceedings of the International Conference on Intelligent Computing and Control Systems 2020, Madurai, India, 13–15 May 2020; pp. 1072–1075.

25. Raj, N.; Venkateswaran, N. Single image haze removal using a generative adversarial network. In Proceedings of the International Conference on Wireless Communications, Signal Processing and Networking 2020, Chennai, India, 3–6 August 2020; pp. 37–42.

26. Liu, Z.; Tong, M.; Liu, X.; Du, Z.; Chen, W. Research on extended image data set based on deep convolution generative adversarial network. In Proceedings of the IEEE Information Technology, Networking, Electronic and Automation Control Conference 2020, Chongqing, China, 12–14 June 2020; pp. 47–50.

27. Dang, N.; Khurana, M.; Tiwari, S. MirGAN: Medical image reconstruction using generative adversarial networks. In Proceedings of the 5th International Conference on Computing, Communication and Security 2020, Patna, India, 14–16 October 2020; pp. 1–5.

28. Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

29. Ma, K.; Duanmu, Z.; Wu, Q.; Wang, Z.; Yong, H.; Li, H.; Zhang, L. Waterloo exploration database: New challenges for image quality assessment models. IEEE Trans. Image Process. 2017, 26, 1004–1016.

30. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

31. Liu, H.; Chen, T.; Shen, Q.; Yue, T.; Ma, Z. Deep image compression via end-to-end learning. In Proceedings of the 31st IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018.

32. Hua, G.; Orchard, M.T. Image reconstruction from the phase or magnitude of its complex wavelet transform. In Proceedings of the 33rd IEEE International Conference on Acoustics, Speech and Signal Processing 2008, Las Vegas, NV, USA, 30 March–4 April 2008; pp. 3261–3264.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).