Abstract

The paper considers quantile-wavelet estimation for time-varying coefficients by embedding a wavelet kernel into quantile regression. Our methodology is quite general in the sense that we do not require the unknown time-varying coefficients to be smooth curves of a common degree or the errors to be independently distributed. Quantile-wavelet estimation is robust to outliers or heavy-tailed data. The model is a dynamic time-varying model of nonlinear time series. A strong Bahadur order for the estimation is obtained under mild conditions. As applications, the rate of uniform strong convergence and the asymptotic normality are derived.

Keywords:

quantile-wavelet; nonparametric estimation; time-varying coefficient; Bahadur representation; strong mixing MSC:

62G05; 62G08; 62G20

1. Introduction

The trending time-varying time series models have gained a lot of attention during the last three decades because they are increasingly used to observe changes in their dynamic structure, which have been applied to economics and finance [1,2]. When the time span of interest covers different economic periods, the parameters of the corresponding statistical models should be allowed to change with time; see references [3,4,5,6,7], among others. Varying coefficient models are flexible models for describing the dynamic structure of data, which have been extensively studied based on mean regression, for example, references [8,9,10,11,12], among others and the references therein. However, the important features of the joint distribution of the response and the covariates can not be well captured by mean regression.

In this paper, we consider the time-varying coefficient quantile regression models: the conditional th quantile of given and a prespecified quantile ,

where , are responses, is a p-dimensional vector of unspecified functions defined on , are p-dimensional explanatory variables. We can write Equation (1) in the form

where the errors satisfy almost surely. Quantile regression has also been emerged as an essential statistical tool in many scientific fields; see [13]. To simplify notations, we omit the subscript from the function and subsequently.

To estimate the varying coefficients in quantile regression models in Equation (2), references [7,14,15] used the kernel method, and references [16,17] considered regression splines. To our knowledge, wavelet has not been considered in quantile regression with varying coefficients. Wavelet techniques can detect and represent localized features and can also create localized features on synthesis while being efficient in terms of computational speed and storage. It has received much attention from mathematicians, engineers and statisticians. Wavelet has been introduced for nonparametric regression, for example, references [18,19,20,21,22], and so on. For wavelet smoothing applied to nonparametric models, reference [18] is a key reference that introduces wavelet versions of some classical kernel and orthogonal series estimators and studies their asymptotic properties. Reference [23] provided asymptotic bias and variance of a wavelet estimator for a regression function under a stochastic mixing process. Reference [24] considered a wavelet estimator for the mean regression function with strong mixing errors and investigated their asymptotic rates of convergence by using the thresholding of the empirical wavelet coefficients. References [25,26] showed Berry–Esseen-type bounds on wavelet estimators for semiparametric models under dependent errors. For varying coefficient models, references [27,28] provided wavelet estimation and studied convergence rate and asymptotic normality under i.i.d. errors, and reference [29] discussed these asymptotics of the wavelet estimators based on censored dependent data. However, all of the above wavelet estimators are based on mean regression by the least-squares method.

In the paper, we propose a quantile-wavelet method for time-varying coefficient models in Equation (2) with an -mixing errors stochastic process. The proposed methodology is quite general in the sense that we do not require coefficient to be smooth curves of a common degree, it does not suffer from the curse of dimensionality, it is robust to outlier or heavy-tailed data, and it is a dynamic model for nonlinear time series. Bahadur representation, rate of convergence and asymptotic normality of quantile-wavelet estimators will be established. Bahadur representation theory seeks to approximate a statistical estimate by a sum of variables with a higher-order remained. It has been a major statistical theory issue since Bahadur’s pioneering work on quantiles [30]; see [31], among others. Recently, reference [32] investigated the Bahadur representation for sample quantiles under -mixing sequence; reference [33] gave an M-estimation for time-varying coefficient models with -mixing errors and established Bahadur representation in probability. In the paper, we will establish Bahadur representation with probability 1 (almost surely) for quantile-wavelet estimates in the models Equations (1) and (2).

Throughout, we assume that is a stationary -mixing sequence. Recall that a sequence is said to be -mixing (or strong mixing) if the mixing coefficients

converge to zero as , where denotes the -field generated by . We refer to the monograph of [34,35] for some properties or more mixing conditions.

The rest of this article is organized as follows. In Section 2, we present wavelet kernel and quantile-wavelet estimation for the model (2). Bahadur representation of quantile-wavelet estimators and their applications are given in Section 3. Technical proofs are provided in Section 4. Some simulation studies are conducted in Section 5.

2. Quantile-Wavelet Estimation

In the paper, the time-varying coefficient function is retrieved by a wavelet-based reproducing kernel Hilbert space (RKHS). An RKHS is a Hilbert space in which all the point evaluations are bounded linear functions. Let be a Hilbert space of functions on some domain . For , then there exists an element , such that

where is the inner product in . Set , which is called the reproducing kernel. Let , and then .

A multiresolution analysis is a sequence of closed subspaces in such that they lie in a containment hierarchy,

where is the collection of square-integrable functions over the real line. The hierarchy Equation (3) is constructed such that (i) V-spaces are self-similar, , and (ii) there exists a scaling function whose integer-translate , and for which the set is an orthonormal basis. Wavelet analysis requires a description of two related and suitably chosen orthonormal basic functions: the scaling function and the wavelet . A wavelet system is generated by dilation and translation of and through

Therefore, and are the orthogonal bases of and , respectively. From Moore-Aronszajn’s theorem [36], it follows that

is a reproducing kernel of . By self-similarity of multiresolution subspaces,

is a reproducing kernel of , and then the projection of g on the space is given by

It motivates us to define a quantile-wavelet estimator of by

where with called the loss (“check”) function, is the indicator function of any set B, and are intervals that partition [0, 1], so that . One way of defining the intervals is by taking , , and , .

Note that many other nonparametric methods can be used here, including spline and Kernel approaches. However, they might not be rich enough to characterize the local properties of the time-varying coefficient function. In the following section, we will present the asymptotic properties of the quantile-wavelet estimator Equation (4).

3. Bahadur Representation and Its Applications

Let be the collection of all functions on with order in Sobolev space, which is a very general space. The degree of smoothness of the true coefficient functions determines how well the functions can be approximated. Functions belonging to for are not continuously differentiable. It is worth stressing that the wavelet approach allows us to obtain rates under much weaker assumptions than second-order differentiability. Denote ; is the norm, and C is used to denote positive constants whose values are unimportant and may change from line to line in the proof.

Our main results will be established under the following assumptions.

(A1.) (i) is a stationary -mixing sequence with with , , where d is defined in (A7)(i); (ii) the noisy errors has almost surely, and a continuous, positive conditional density in a neighborhood of 0 given the , , and , a.s.; (iii) , are non-singular matrices.

(A2.) belongs to Sobolev space with order .

(A3.) satisfies the Lipschitz of the order condition of order .

(A4.) has compact support, is in the Schwarz space with order , and satisfies the Lipschitz condition with order l. Furthermore, as , where is the Fourier transform of .

(A5.) .

(A6.) We also assume that for some Lipschitz function ,

(A7.) The tuning parameter m satisfies (i) with ; (ii) let and for , for . Assume that .

Remark 1.

These conditions are mild. Condition (A1) is the standard requirement for moments and the mixing coefficient for an α-mixing time series. Conditions (A2)–(A6) are the mild regularity conditions for wavelet smoothing, which have been adopted by [18]. In condition (A7), m acts as a tuning parameter, such as the bandwidth does for standard kernel smoothers; (A7) (i) is for Bahadur representation and rate of convergence, and (A7) (ii) combining with (A7) (i) is for asymptotic normality of the quantile-wavelet estimator.

Our results are as follows.

Theorem 1.

(Bahadur representation) Support that (A1)–(A5) and (A7) (i) hold, then

with

and

Remark 2.

Theorem 1 presents the strong Bahadur representation of a quantile-wavelet estimator for a time-varying coefficient model. Here, , a.s., which is comparable with the Bahadur order , of [37], where the bandwidth . Reference [37] is based on kernel local polynomial M-estimation, and requires that the function has the second-order differentiability. However, we do not need the strong, smooth conditions. The function is not differentiable when , .

The Bahadur representation of the quantile-wavelet estimator of Theorem 1 can be applied to obtain the following two results.

Corollary 1.

(Rate of uniform strong convergence) Assume that (A1)–(A5) and (A7) (i) hold, then

Remark 3.

Corollary 1 provides the rate of uniform strong convergence of quantile-wavelet estimator for model Equation (2). We consider the rate in the case of , under which is a lower rate of convergence than one of . If we take with , then . If we further take , then one obtains

which is comparable with the optimal convergence rate of the nonparametric estimation in nonparametric models. The result is better than the ones of [27,28] based on the local linear estimator for the varying-coefficient model. In addition, we also do not require the unknown coefficient to be smooth curves of a common degree.

Corollary 2.

(Asymptotic normality) Support that (A1)–(A7) holds, then

where with and .

Remark 4.

To obtain an asymptotic expansion of the variance and an asymptotic normality, we need to consider an approximation to based on its values at dyadic points of order m, as reference [18] has done. The is the piecewise-constant approximation of at resolution , which can avoid the instability of the variance of . From the proof of Corollary 2, it can see that the main term of the variance of is with and . When the dyadic t and m sufficiently large, , the variance of is asymptotically stable. See [18] for the details.

4. Lemmas and Proofs

Lemma 1

([18,38]). Suppose that (A4) holds. We have

(i) and , where k is a positive integer and is a constant depending on k only.

(ii) .

(iii) , where c is a positive constant.

(iv) uniformly in , as .

Lemma 2

([18]). Suppose that (A4)–(A5) hold and satisfies (A2)–(A3). Then

where

Lemma 3

([39]). Let be a sequence of random convex functions defined on a convex, open subset Θ of . Suppose is a real-valued function on Θ for which with probability 1, for each fixed θ in Θ. Then for each compact subset K of Θ, with probability 1,

Lemma 4.

Let be a stationary sequence satisfying the mixing condition for some , ; and with . Further, assume that . If Conditions (A4) and (A5) hold. Then

Remark 5.

In Lemma 4, we assume that is a sequence of a 1-dimensional random variable. In fact, for the fixed p, we also have the same result as Lemma 4.

Proof.

The theorem is similar to Lemma A.4 in [29] but has some differences. We suppose . If , the method of the proof is the same. Let . Partition the interval [0, 1] into subintervals of equal length. Let be the centers of . Notice that

One obtains

Let , and take for some . Note that By the Borel–Cantelli lemma, , a.s., for sufficiently large i. Hence,

for all sufficiently large n. In addition,

Further, we have

Let . Note that . By Theorem 2.18 (ii) in Fan and Yao (2003) [35], take , for any and sufficiently large M, for each , we have

where , ,

Taking , we obtain . Assume , . We have

since we have when and are small enough with . By the Borel–Cantelli Lemma and Equation (10), we prove Lemma 4. □

Lemma 5.

Under conditions in Theorem 1, we have

where .

Proof.

Let . By Lemmas 2 and 4, we have

This completes the proof of Lemma 5. □

Lemma 6.

Under conditions in Theorem 1, for fixed , we have

and

where is defined by (18) in the proof of Theorem 1.

Proof.

For Equation (12), by Condition (A1) (iii) and Lemma 4, one obtains

Note that . Thus, Equation (12) holds.

Now, let us prove Equation (13). Notice that, for any ,

where , and with . Let . To prove Equation (13), we only need to show that by the proof of Lemma 4. By Equation (15) and Jensen’s inequality, one obtains

By Lemma 4, we obtain Equation (13).

In the sequence, we will give the proofs of the main results. □

Proof of Theorem 1.

Recall that and minimize

Let and . The behavior of follows from consideration of the objective function

The function is obviously convex and is minimized at . It is sufficient to show that converges to its expectation since it follows from Lemma 3 that the convergence is also uniform on any compact set K of . Using the identity of [40],

where ; we may write

where

with .

To obtain strong Bahadur representation of the quantile-wavelet estimator, we first need to show the uniform approximation of on with probability 1 for the fixed by the three terms in Equation (16). By Lemma 6, we have

uniformly on . Let . We have

Second, the strong Bahadur representation requires the existence of one compact subset K with probability measure 1 that will suffice for all . We prove it by applying a stronger convexity lemma (See Lemma 3) than one of [41]. However, the arguments to prove it are essentially the same as in [41].

Let . It is easy to see that has a bounded second moment and hence is stochastically bounded. Since the convex function converges with probability 1 to the convex function , it follows from the convexity Lemma 3 that for any compact subset ,

The argument will be complete if we can show for each that, with probability 1,

Because is almost surely converged by Lemmas 4 and 5, it is bounded with probability 1. The compact subset K can be chosen to contain , a closed ball with center and radius , thereby implying that

Now, we consider the behavior of outside K. For any , with and a unit vector. Define as the boundary point of that lies on the line segment form to , that is, . Convexity of and the definition of imply

The last expression does not depend on . It follows that

As is positively defined, then according to (21), with probability 1, for enough n. This implies that for any and for large enough n, the minimum of must be achieved with , i.e., , that is,

One obtains

We complete the proof of Theorem 1. □

Proof of Corollary 1.

From Theorem 1, and Lemmas 4 and 5, it is easy to obtain the result of Corollary 1. □

Proof of Corollary 2.

From Theorem 1 and Lemma 5, we only verify the asymptotic normality of

□

First, we compute its variance-covariance matrix. Let . We know . Let and with specified later. We have

For , by Theorem 3.3 and Lemma 6.1 in [18], one obtains

For , since , take such that . Let be the th component of . By Lemma 1, we have

For , let be the th component of . Noting that , by Proposition 2.5 (i) in [35], we have

Similar to the proof of Theorem 2 in [42], by using the small-block and large-block technique and the Cramér–Wold device, one can show that

This, in conjunction with Theorem 1 and the Slutsky Theorem, proves Corollary 2.

5. Simulation Study

To explore the numerical performances of quantile wavelet estimation, we compare our estimator with a local linear kernel [43] and Spline [44] by quantile regression. We call them QR-Wavelet, QR-Local-linear and QR-Spline methods, respectively. In the simulation study, our goal is to show that the QR-wavelet is robust to heavy-tailed data and more adaptive to a nonsmooth nonparametric function than local linear and spline methodologies. The data are of the form:

where are random design points generated from the normal distribution independently, and with F being the distribution function of . Here, is subtracted from to make the th quantile zero for identifiability.

We set , 300 and 500; , 0.25, 0.50, 0.75 and 0.90; comes from the normal distribution with and the t distribution with d degrees of freedom (denoted as ) with , respectively. We consider two special curves of for as follows:

Case 1. Pwpn (piecewise polynomial function): . The function is generally smooth except for a jump at .

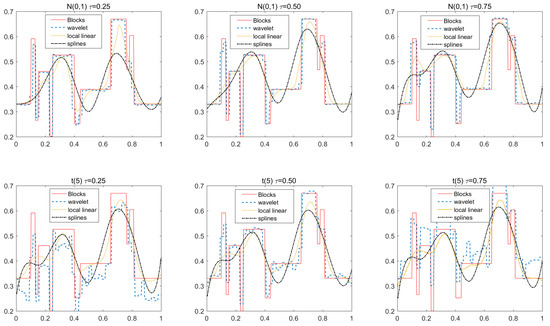

Case 2. Blocks: , where with . It is a step function with many jumps. Many jumps bring difficulties for the local linear and spline smoothing methods.

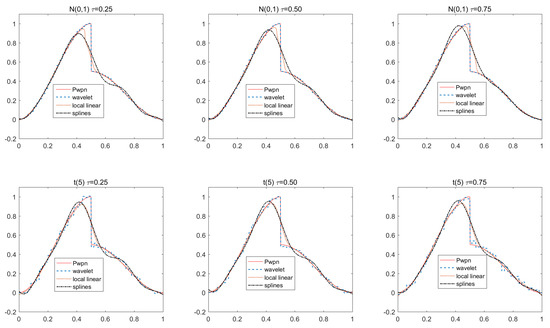

Pwpn and Blocks are shown in Figure 1 and Figure 2, respectively. In the study, we use the Haar wavelet, which is the simplest of the wavelets. For a given sample size, take in the QR-wavelet, h is chosen by “leave-one-out" cross-validation procedures and use the Gaussian kernel in the QR-local-linear. In the QR-splines, we use three degrees of the piecewise polynomial (cubic splines) and the knots . The performances of the estimators are evaluated via the mean square error (MSE) based on the 200 repetitions, which is defined by

where is a sequence of regular grid points. These results of MSE for are listed in Table 1 for Case I (Pwpn) and Table 2 for Case II (Blocks). The actual functions and their estimated curves for the two cases are depicted in Figure 1 and Figure 2 when , and , 0.5 and 0.75, respectively.

Figure 1.

The true time-varying coefficient function Papw, and their QR-wavelet, QR-local-linear and QR-splines estimates when , and , 0.50 and 0.75 for the errors and .

Figure 2.

The true time-varying coefficient function Blocks and their QR-wavelet, QR-local-linear and QR-splines estimates when , and , 0.50 and 0.75 for the errors and .

Table 1.

MSEs of wavelet, local linear and spline smoothing in Case I: Pwpn.

Table 2.

MSEs of wavelet, local linear and spline smoothing in Case II: Blocks.

From Table 1 and Table 2, we can make the following observations: (i) The MSEs of each time-varying coefficient obtained by wavelet, local linear and spline techniques decrease with increasing the sample size n. The accuracy of QR-wavelet is obviously higher than that of QR-local linear and QR-splines. (ii) All QR methods work well when the random error comes from the t distribution with five freedoms; that is, QR is robust to heavy-tailed data. Based on MSE only, for Pwpn that is generally smooth except for a jump, all three methods perform almost equally well, but for Blocks with many jumps, the QR-wavelet performs better than QR-local linear and QR-splines. (iii) All three methods perform well for different quantile levels, especially for high and low quantile levels ( (high), (low)). We also conducted some experiments based on the above setting by using least square estimation, and found that their estimators lead to systematic bias, especially at high and low quantile levels. However, QR-wavelet generally performs better than QR-local linear and QR-splines at these extreme quantile levels when there is a large sample size (e.g., ) and multiple jump points (e.g., Blocks). From Figure 1 and Figure 2, the estimators based on QR-local-linear and QR-splines are not better than those of QR-wavelet. For example, in Pwpn, QR-local linear and QR-splines cannot characterize the shape of the function in the interval ; in Blocks, they cannot find their jumping points, while QR-wavelet detects these jumping points and represents the localized features of Pwpn and Blocks as a whole. Compared with the local linear and spline methods, the wavelet technique has great advantages in characterizing the local features of underlying curves. Therefore, the QR-wavelet overwhelmingly outperforms the QR-local-linear and QR-splines for the discontinuous/irregular functional coefficients in our time-varying coefficient models.

Author Contributions

Conceptualization, X.Z.; methodology, X.Z.; validation, G.Y. and Y.X.; investigation, X.Z.; writing—original draft preparation, X.Z.; writing—review and editing, X.Z.; supervision, X.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Chinese National Social Science Fund (Grant No. 19BTJ034), the National Natural Science Foundation of China (Grant No. 12171242, 11971235) and the Postgraduate Research and Practice Innovation Program of Jiangsu Province (KYCX22_2210, KYCX21_1940).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cochrane, J.H. Asset Pricing; Princeton University Press: Englewood Cliffs, NJ, USA, 2001. [Google Scholar]

- Tsay, R. Analysis of Financial Time Series; Wiley: New York, NY, USA, 2002. [Google Scholar]

- Robinson, P.M. Nonparametric Estimation of Time-Varying Parameters. In Statistical Analysis and Forecasting of Economic Structural Change; Hackl, P., Ed.; Springer: Berlin, Germany, 1989; pp. 164–253. [Google Scholar]

- Orbe, S.; Ferreira, E.; Rodríguez-Póo, J. Nonparametric estimation of time varying parameters under shape restrictions. J. Econom. 2005, 126, 53–77. [Google Scholar] [CrossRef]

- Cai, Z. Trending time-varying coefficient time series models with serially correlated errors. J. Econom. 2007, 136, 163–188. [Google Scholar] [CrossRef]

- Li, D.; Chen, J.; Lin, Z. Statistical inference in partially time-varying coefficient models. J. Stat. Plan. Inference 2011, 141, 995–1013. [Google Scholar] [CrossRef]

- Wu, W.; Zhou, Z. Nonparametric inference for time-varying coefficient quantile regression. J. Bus. Econ. Stat. 2017, 35, 98–109. [Google Scholar] [CrossRef]

- Hastie, T.; Tibshirani, R. Varying-coefficient models. J. R. Stat. Soc. Ser. B 1993, 55, 757–796. [Google Scholar] [CrossRef]

- Fan, J.; Zhang, W. Statistical estimation in varying coefficient models. Ann. Stat. 1999, 27, 1491–1518. [Google Scholar] [CrossRef]

- Hoover, D.R.; Rice, J.A.; Wu, C.O.; Yang, L.P. Nonparametric smoothing estimates of time-varying coefficient models with longitudinal data. Biometrika 1998, 85, 809–822. [Google Scholar] [CrossRef]

- Huang, J.Z.; Wu, C.O.; Zhou, L. Polynomial spline estimation and inference for varying coefficient models with longitudinal data. Stat. Sin. 2004, 14, 763–788. [Google Scholar]

- Zhou, Z.; Wu, W.B. Simultaneous inference of linear models with time varying coefficients. J. R. Stat. Soc. Ser. B 2010, 72, 513–531. [Google Scholar] [CrossRef]

- Koenker, R. Quantile Regression; Cambridge University Press: Cambridge, UK, 2005. [Google Scholar]

- Honda, T. Quantile regression in varying coefficient models. J. Stat. Plan. Inference 2004, 121, 113–125. [Google Scholar] [CrossRef]

- Cai, Z.; Xu, X. Nonparametric quantile estimations for dynamic smooth coefficient models. J. Am. Stat. Assoc. 2009, 104, 371–383. [Google Scholar] [CrossRef]

- Kim, M.O. Quantile regression with varying coefficients. Ann. Stat. 2007, 35, 92–108. [Google Scholar] [CrossRef] [Green Version]

- Andriyana, Y.; Gijbels, I. Quantile regression in heteroscedastic varying coefficient models. AStA. Adv. Stat. Anal. 2017, 101, 151–176. [Google Scholar] [CrossRef]

- Antoniadis, A.; Gregoire, G.; McKeague, I.W. Wavelet methods for curve estimation. J. Am. Stat. Assoc. 1994, 89, 1340–1353. [Google Scholar] [CrossRef]

- Donoho, D.L.; Johnstone, I.M. Adapting to unknown smoothness via wavelet shrinkage. J. Am. Stat. Assoc. 1994, 90, 1200–1224. [Google Scholar] [CrossRef]

- Hall, P.; Patil, P. Formulae for mean integrated squared error of non-linear wavelet-based density estimators. Ann. Stat. 1995, 23, 905–928. [Google Scholar] [CrossRef]

- Härdle, W.; Kerkycharian, G.; Picard, D.; Tsybakov, A. Wavelet, Approximation and Statistical Application; Springer: New York, NY, USA, 1998. [Google Scholar]

- Vidakovic, B. Statistical Modeling by Wavelet; Wiley: New York, NY, USA, 1999. [Google Scholar]

- Doosti, H.; Afshari, M.; Niroumand, H.A. Wavelets for nonparametric stochastic regression with mixing stochastic process. Commun. Stat.- Methods 2008, 37, 373–385. [Google Scholar] [CrossRef]

- Li, L.; Xiao, Y. Wavelet-based estimation of regression function with strong mixing errors under fixed design. Commun. Stat.- Methods 2017, 46, 4824–4842. [Google Scholar] [CrossRef]

- Zhou, X.C.; Lin, J.G. Asymptotic properties of wavelet estimators in semiparametric regression models under dependent errors. J. Multivar. Anal. 2013, 122, 251–270. [Google Scholar] [CrossRef]

- Ding, L.; Chen, P.; Li, Y. Berry-Esseen bound of wavelet estimator in heteroscedastic regression model with random errors. Int. J. Comput. Math. 2019, 96, 821–852. [Google Scholar] [CrossRef]

- Zhou, X.; You, J. Wavelet estimation in varying-coefficient partially linear regression models. Stat. Probab. Lett. 2004, 68, 91–104. [Google Scholar] [CrossRef]

- Lu, Y.; Li, Z. Wavelet estimation in varying-coefficient models. Chin. J. Appl. Probab. 2009, 25, 409–420. [Google Scholar]

- Zhou, X.C.; Xu, Y.Z.; Lin, J.G. Wavelet estiamtion in varying coefficient models for censored dependent data. Stat. Probab. Lett. 2017, 122, 179–189. [Google Scholar] [CrossRef]

- Bahadur, R.R. A note quantiles in large samples. Ann. Math. Statist. 1966, 37, 577–581. [Google Scholar] [CrossRef]

- He, X.M.; Shao, Q.M. A general bahadur representation of M-estimators and its application to linear regression with nonstochastic designs. Ann. Stat. 1996, 24, 2608–2630. [Google Scholar] [CrossRef]

- Yang, W.; Hu, S.; Wang, X. The Bahadur representation for sample quantiles under dependent sequence. Acta Math. Appl. Sin. Engl. Ser. 2019, 35, 521–531. [Google Scholar] [CrossRef] [Green Version]

- Zhou, X.; Zhu, F. Wavelet-M-estimation for time-varying coefficient time series models. Discret. Dyn. Nat. Soc. 2020, 2020, 1025452. [Google Scholar] [CrossRef]

- Doukhan, P. Mixing: Properties and Examples. In Lecture Notes in Statistics; Springer: Berlin, Germany, 1994; Volume 85. [Google Scholar]

- Fan, J.; Yao, Q. Nonlinear Time Series: Nonparametric and Parametric Methods; Springer: New York, NY, USA, 2003. [Google Scholar]

- Aronszajn, N. Theory of reproducing kernels. Trans. Am. Math. Soc. 1950, 68, 337–404. [Google Scholar] [CrossRef]

- Hong, S.Y. Bahadur representation and its applications for local polynomial estiamtes in nonparametric M regression. J. Nonparametr. Stat. 2003, 15, 237–251. [Google Scholar] [CrossRef]

- Walter, G.G. Wavelets and Orthogonal Systems with Applications; CRC Press Inc.: Boca Raton, FL, USA, 1994. [Google Scholar]

- Kong, E.; Xia, Y. A single-index quantile regression model and its estimation. Econom. Theory 2012, 28, 730–768. [Google Scholar] [CrossRef] [Green Version]

- Knight, K. Limiting distributions of L1 regression estimators under general conditions. Ann. Stat. 1998, 26, 755–770. [Google Scholar] [CrossRef]

- Pollard, D. Asymptotics for least absolute deviation regression estimators. Econom. Theory 1991, 7, 186–199. [Google Scholar] [CrossRef]

- Cai, Z.; Fan, J.; Yao, Q. Functional-coefficient regression models for nonlinear time series. J. Am. Stat. Assoc. 2000, 95, 941–956. [Google Scholar] [CrossRef]

- Wand, M.P.; Jones, M.C. Kernel Smoothing; Chapman and Hall: London, UK, 1995. [Google Scholar]

- Chambers, J.M.; Hastie, T.J. Statistical Models in S; Wadsworth & Brooks/Cole: Salt Lake City, UT, USA, 1992. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).