Abstract

This paper presents a novel dynamic Jellyfish Search Algorithm that combines Simulated Annealing with a disruption operator, called DJSD. The developed DJSD method incorporates the Simulated Annealing operators into the exploration stage of the conventional Jellyfish Search Algorithm, in a competitive manner, to enhance its ability to discover more feasible regions. This combination is performed dynamically using a fluctuating parameter that represents the characteristics of a hammer. The disruption operator is employed in the exploitation stage to boost the diversity of the candidate solutions throughout the optimization process and to avoid local optima. A comprehensive set of experiments is conducted using thirty classical benchmark functions to validate the effectiveness of the proposed DJSD method, and the results are compared with those of advanced, well-known metaheuristic approaches. The findings show that the developed DJSD method achieved promising results, discovered new search regions, and found new best solutions. In addition, to further validate the performance of DJSD on real-world applications, experiments were conducted on the task scheduling problem in cloud computing. The results demonstrate that DJSD is highly competent in dealing with challenging real applications. Moreover, it achieved high performance compared to its competitors according to several standard evaluation measures, including fitness function, makespan, and energy consumption.

Keywords:

artificial Jellyfish Search Algorithm (JSA); simulated annealing (SA); task scheduling; cloud computing; optimization; metaheuristics

MSC:

90C26; 90C27; 68M20; 68T20

1. Introduction

Every day, new and complex optimization problems arise in fields such as mathematics, industry, and engineering [1]. As these problems became more complex, traditional optimization approaches were found to incur high computing costs and to become trapped in local optima [2]. As a result, scientists have been looking for new techniques to address these problems [3]. Metaheuristic algorithms are promising solutions that draw inspiration from the food-finding habits of herd animals or from natural phenomena. Metaheuristic (MH) algorithms have many benefits, including the ability to resist local optima, the use of a gradient-free mechanism, and the ability to provide reasonable solutions regardless of problem structure [4].

Mathematical optimization is the process of finding, within the feasible domain of a given problem, an element that yields a maximum or minimum value [5]. The advancement of optimization methods is critical since optimization problems arise in many fields of analysis. The majority of traditional optimization approaches are deterministic and rely on derivative information [6]. However, in real life, determining the optimal values of a problem is not always feasible [7]. Because of their derivative-free behavior and promising optimization ability, MH techniques are becoming increasingly popular. Other benefits of these approaches include flexibility, ease of implementation, and the ability to avoid local optima [8].

Exploration and exploitation are the two main search mechanisms used in metaheuristics [9]. Exploration refers to the ability to discover or visit new areas of the solution space. In contrast, exploitation refers to extracting useful information from the regions of the search domain that have already been found. The balance between exploitation and exploration determines the quality of the solutions found by any MH. These algorithms work with either a single candidate agent or a set of agents. Single-agent approaches are based on a single candidate solution, whereas population-based approaches are based on a group of candidate solutions. Most MH techniques are modeled on natural phenomena and can be divided into three categories depending on their source of inspiration: swarm intelligence-based algorithms, evolutionary algorithms, and physics-based algorithms.

Swarm intelligence has risen to prominence in the world of nature-inspired strategies in recent years [10]. It is also utilized to address real-life optimization problems and is focused on the mutual actions of swarms or colonies of animals [11]. Swarm-based optimization algorithms use the collaborative trial and error approach to find the optimal solution. The Arithmetic Optimization Algorithm (AOA) [10], the Aquila Optimizer (AO) [12], and the Barnacles Mating Optimizer (BMO) [13] are well-known methods in this category. Thus, many complicated optimization problems have been solved using this class of optimization algorithm, such as scheduling problems [14].

Recently, a new swarm-based optimization technique named the Jellyfish Search Algorithm (JSA) has been proposed [15]. This algorithm emulates the behaviour of a jellyfish swarm in nature. Owing to its characteristics, JSA has been applied to different sets of optimization problems. For example, JSA has been used to determine the optimal solution of global benchmark functions in [15], where its efficiency over other metaheuristic (MH) techniques was established. Gouda et al. [16] proposed an alternative method for estimating the parameters of the PEM fuel cell. In [17], a multi-objective version of JSA was proposed and applied to multi-objective engineering problems. In [18], the JSA was implemented to solve the spectrum defragmentation problem, and it showed better performance compared to other methods. Chou et al. [19] presented a time-series forecasting method for energy consumption. This method was compared with the teaching-learning-based optimization (TLBO) and symbiotic organism search (SOS) algorithms, and the results of JSA were better than those of both algorithms. In addition, JSA has been applied to other fields such as video watermarking [20].

In previous applications of JSA, its ability to solve different optimization problems has been observed. However, it still suffers from some drawbacks that can affect its performance. For example, it requires more improvement in its ability to balance between the exploration and exploitation phases during the searching process. This motivated us to propose an alternative version of JSA to avoid the limitations of conventional JSA and to apply it as a global optimization technique.

The proposed version of JSA is called DJSD, a dynamic differential annealed technique. The DJSD method integrates the Jellyfish Search Algorithm operators with dynamic differential annealed optimization [21] and the disruption operator to gain the advantages of both approaches. The proposed method is evaluated using various classical benchmark problems, and its performance is analyzed and compared with that of other methods applied to the same problems. The results indicate that the presented method outperforms the comparative approaches and finds new best solutions for several test cases. In addition, it is extended by using it as a task scheduler in a cloud computing environment.

In conclusion, the following contributions are included in this paper:

- Enhancing the Jellyfish Search Algorithm using the concept of the dynamic annealed and disruption operator to improve its exploration and exploitation abilities during the searching process.

- Applying the developed method, named DJSD, as a global optimization method through evaluating it using different classical optimization benchmark functions against other well-known MH methods.

- Offering an alternative task scheduling method to address cloud task scheduling problems.

The remaining sections of this paper are organized as follows. Section 2 presents the background of the jellyfish optimization algorithm, the Simulated Annealing algorithm, and the disruption operator. Section 3 introduces the steps of the proposed method. The experimental results and discussions are presented in Section 4. Finally, Section 5 concludes the paper.

2. Background

2.1. Jellyfish Search Algorithm

In this section, the basic information of the Jellyfish Search Algorithm (JSA) [15] is given. In general, JSA simulates the behaviour of jellyfish that squeeze water out of their body to move; rising ocean temperatures cause the creation of swarms [15]. The ability of these species to appear almost anywhere in the ocean is due to their movements within a swarm and the ensuing ocean currents that form jellyfish blooms. Since the amount of food at jellyfish-friendly sites varies, the best location would be determined by comparing food proportions [15].

Following [15], the ideas underpinning the jellyfish optimization algorithm can be formulated as:

- Jellyfish move through the water looking for food and are drawn to areas with a higher supply of food.

- The time control mechanism regulates shifting among motion groups. Jellyfish either move inside the swarm or follow the ocean current.

The major steps of metaheuristic algorithms are exploitation and exploration. In JSA, exploration is the motion in an ocean current, while exploitation is the motion inside a jellyfish swarm; the time control mechanism manages the switching between them. To locate promising areas, the probability of exploration exceeds that of exploitation at the beginning. Over time, however, the probability of exploitation exceeds that of exploration, and the jellyfish then determine the best position within the known places.

2.1.1. Population Initialization

In most cases, jellyfish populations are initialized at random positions. Slow convergence and a propensity to get stuck in local optima due to low population diversity are drawbacks of this strategy [15]. Therefore, many chaotic maps, for example, the tent map, the Liebovitch map, and the logistic map, have been used to increase the diversity of the initial population. A logistic map generates a more diverse initial population than random selection and has a lower risk of premature convergence [15].

where is a basic jellyfish population, denotes the logistically chaotic value of the jellyfish’s location i, , and .

Since the earth is roughly spherical, a jellyfish that leaves the search domain through one boundary re-enters from the directly opposite boundary. This mechanism is represented in Equation (2).

where denotes the new value of at dimension d after checking the limits of the search space (i.e., and ).
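The initialization and boundary handling described above can be sketched as follows. This is a minimal illustration, assuming the logistic map in its standard form c ← η·c·(1 − c) with η = 4 as used in [15]; the function names, the way the chaotic values are scaled into the search domain, and the seeding strategy are assumptions made for this example and are not taken from the paper.

```python
import random


def logistic_population(n_agents, dim, lower, upper, eta=4.0, seed=None):
    """Build an initial population from a logistic chaotic sequence (sketch).

    eta = 4.0 and the excluded seed values follow the usual description of
    the logistic map; both are assumptions here.
    """
    rng = random.Random(seed)
    degenerate = {0.0, 0.25, 0.5, 0.75, 1.0}  # seeds that collapse the map
    chaos = []
    for _ in range(dim):
        value = rng.random()
        while value in degenerate:
            value = rng.random()
        chaos.append(value)

    population = []
    for _ in range(n_agents):
        # One logistic step per agent keeps every value inside [0, 1].
        chaos = [eta * c * (1.0 - c) for c in chaos]
        # Scale the chaotic values into the search domain (assumed mapping).
        population.append(
            [lower[d] + chaos[d] * (upper[d] - lower[d]) for d in range(dim)]
        )
    return population


def wrap_to_bounds(position, lower, upper):
    """Re-enter from the opposite limit when a coordinate leaves the domain.

    A single wrap is applied, so an overshoot larger than the domain width
    would still fall outside the limits.
    """
    wrapped = []
    for x, lo, hi in zip(position, lower, upper):
        if x > hi:
            x = (x - hi) + lo
        elif x < lo:
            x = (x - lo) + hi
        wrapped.append(x)
    return wrapped
```

For instance, with limits [−5, 10] in every dimension, `wrap_to_bounds([11.0, -6.0, 3.0], ...)` maps the first coordinate to −4 and the second to 9, and leaves the third unchanged.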

2.1.2. Exploration Stage: Ocean Current

The jellyfish are drawn to the ocean current because it carries many nutrients; the ocean current’s direction () is calculated as:

where is determined by:

In Equation (4), N is the number of jellyfish, is the best jellyfish in the swarm with the best fitness value, is the attraction’s governing factor, is the jellyfish’s average position, the distinction among the jellyfish’s current best position and the average position of all jellyfish. Based on the assertion that jellyfish have a regular spatial distribution in all directions, and a distance of , all jellyfish are likely to be found in the vicinity of the mean position, and the standard deviation of the distribution is .

So,

The value of is updated using Equation (10).

Based on the definition of , Equation (10) can be reformulated as:

In Equation (11), the length of is related to , which is a coefficient of distribution, and depends on the findings of the numerical experiment’s sensitivity analysis.
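As an illustration of the ocean-current move, the sketch below assumes the commonly cited form of the JSA update from [15], in which an agent moves by a random fraction of the trend (best position minus β times a random fraction of the mean position), with the distribution coefficient β = 3. The function names, the default β, and the use of one random number per dimension are assumptions made for this example.

```python
import random


def mean_position(population):
    """Component-wise mean of all jellyfish positions."""
    n = len(population)
    return [sum(agent[d] for agent in population) / n
            for d in range(len(population[0]))]


def ocean_current_step(position, best, mean_pos, beta=3.0, rng=random):
    """Move one jellyfish along the ocean current (exploration sketch).

    trend = best - beta * rand * mean, new = position + rand * trend.
    beta = 3.0 is an assumed default, not a value confirmed by this paper.
    """
    new_position = []
    for x, b, mu in zip(position, best, mean_pos):
        trend = b - beta * rng.random() * mu
        new_position.append(x + rng.random() * trend)
    return new_position
```

Any object exposing a `random()` method can be passed as `rng`, which makes the step reproducible in tests.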

2.1.3. Exploitation Stage

Jellyfish move in swarms in passive and active motions (group A and group B, respectively). When the swarm is first forming, most jellyfish move in a group A pattern. Over time, they increasingly exhibit active motion. Passive motion is the movement of jellyfish around their own positions, and the updated position of each jellyfish is given as follows.

where denotes the upper limit and represents the lower limit of the search domain, and is the coefficient of motion.

According to the numerical results of experiments’ sensitivity analyses, it was obtained that . To emulate the active motion of jellyfish (j), more than one are chosen randomly and a vector is employed to determine the direction of motion from to . Every in a swarm moves in the best direction to find the food using Equations (13)–(16), to mimic the motion direction and modify the jellyfish position.

where is the fitness function of position X.
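The two swarm motions can be sketched as below. The sketch assumes a minimization problem, a motion coefficient of 0.1 for the passive move (the value usually reported for JSA in [15]), and an active move that heads toward a randomly chosen jellyfish when it is at least as good and away from it otherwise. The function names and defaults are illustrative assumptions.

```python
import random


def passive_motion(position, lower, upper, gamma=0.1, rng=random):
    """Passive (group A) move: a small random step scaled by the domain width.

    gamma = 0.1 is an assumed default for the motion coefficient.
    """
    return [x + gamma * rng.random() * (hi - lo)
            for x, lo, hi in zip(position, lower, upper)]


def active_motion(position, other, fitness, rng=random):
    """Active (group B) move for a minimization problem (sketch).

    The direction points toward `other` when it is at least as good as the
    current jellyfish, and away from it otherwise.
    """
    if fitness(position) >= fitness(other):
        direction = [o - x for x, o in zip(position, other)]
    else:
        direction = [x - o for x, o in zip(position, other)]
    return [x + rng.random() * d for x, d in zip(position, direction)]
```

In both branches of `active_motion` the step heads toward the side of the better of the two jellyfish, which is why this move acts as local exploitation.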

2.1.4. Time Control Mechanism (TCM)

In the beginning, passive motion is preferred; with time, however, active movement is favored. To mimic this behaviour, a time control mechanism (TCM) organizes the switching of jellyfish between moving inside a jellyfish swarm and following the ocean current. The TCM consists of a time control function, which is a time-varying random value that ranges from 0 to 1, and a constant threshold; Equation (17) represents the TCM.

where stands for the maximum number of generations. is the function simulating the move inside a swarm (passive or active motion); when , X exhibits a passive motion. Otherwise, X exhibits an active motion.
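A minimal sketch of the time control mechanism is given below. It assumes the form usually reported for JSA in [15]: the time control value is |(1 − t/T_max)·(2·rand − 1)|, the ocean current is followed when this value reaches a constant threshold (0.5 here), and inside the swarm a second random draw selects passive or active motion. The threshold value, the function names, and the returned labels are assumptions for illustration.

```python
import random


def time_control(t, max_iter, rng=random):
    """Time control value in [0, 1] that shrinks as iterations progress."""
    return abs((1.0 - t / max_iter) * (2.0 * rng.random() - 1.0))


def choose_motion(t, max_iter, c0=0.5, rng=random):
    """Select the move type for one jellyfish at iteration t (sketch).

    c0 = 0.5 is an assumed threshold, not a value confirmed by this paper.
    """
    c = time_control(t, max_iter, rng)
    if c >= c0:
        return "ocean_current"
    # Inside the swarm: passive motion when rand > 1 - c, otherwise active.
    if rng.random() > 1.0 - c:
        return "passive"
    return "active"
```

Because the time control value decays toward zero, late iterations almost always select the active swarm motion, which matches the shift from exploration to exploitation described above.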

The complete steps of the jellyfish optimization algorithm are given in Algorithm 1.

| Algorithm 1 Steps of jellyfish optimization algorithm |

|

2.2. Simulated Annealing Algorithm

The Simulated Annealing (SA) algorithm is a single-solution-based optimization technique that simulates the metallurgical annealing process [22,23].

The SA algorithm starts from a randomly generated solution X and produces a new solution Y from its neighborhood. In the next step, the fitness values of X and Y are computed; if Y is better than X, then Y replaces X. On the other hand, SA can replace X with Y even when Y is not better than X. This is determined by the probability (p), which is defined as follows:

where T stands for the temperature variable, which should start high and steadily decrease in value as iterations progress. The probability of adopting a new solution is denoted by p. The difference between the objective value of the suggested solution Y and the solution X objective value is called . The SA algorithm is illustrated in Algorithm 2.

| Algorithm 2 The SA method |

|
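The SA procedure described in this subsection can be sketched as follows. The sketch assumes minimization, the standard Metropolis acceptance probability p = exp(−Δ/T), a geometric cooling schedule, and a uniform neighborhood move; the step size, cooling rate, and iteration count are illustrative values, not the settings used in the paper.

```python
import math
import random


def accept(delta, temperature, rng=random):
    """Metropolis rule: always accept improvements, sometimes accept worse."""
    if delta <= 0.0:
        return True
    return rng.random() < math.exp(-delta / temperature)


def simulated_annealing(fitness, start, step=0.5, t0=1.0, cooling=0.95,
                        iters=500, seed=0):
    """Minimize `fitness` from `start` (sketch with assumed parameters)."""
    rng = random.Random(seed)
    x, fx = list(start), fitness(start)
    best, f_best = list(x), fx
    temperature = t0
    for _ in range(iters):
        # Neighbor: uniform perturbation of every coordinate (assumption).
        y = [v + rng.uniform(-step, step) for v in x]
        fy = fitness(y)
        if accept(fy - fx, temperature, rng):
            x, fx = y, fy
            if fx < f_best:
                best, f_best = list(x), fx
        temperature *= cooling  # temperature decreases steadily
    return best, f_best
```

At high temperature almost every neighbor is accepted, while at low temperature the rule degenerates into a greedy descent, which is the behaviour the temperature variable T is meant to control.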

2.3. Disruption Operator

The preliminaries of the disruption operator () are described in this section. depends on the physical processes in astrophysics, where this rule supposes that when a set of gravitationally bound particles (with total mass m) is very close to a massive object (with mass M), then the group becomes torn apart [24]. The role in Equation (20) [24] can be used to implement this process:

where represents the Euclidean distance between the best solution and the solution. denotes the Euclidean distance between the solution and the nearest neighborhood (). Additionally, represents a random value in domain .
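A possible reading of the disruption operator is sketched below. It assumes the form commonly cited for this operator in the metaheuristics literature: when the distance to the best solution is at least 1, the factor is the nearest-neighbor distance times a uniform value in a wide symmetric range, and otherwise it is 1 plus the distance to the best solution times a uniform value in a very narrow range. The two range widths, the threshold of 1, and the multiplicative way the factor is applied are assumptions and should be checked against Equation (20) and [24].

```python
import math
import random


def disruption_factor(i, population, best, wide=2.0, narrow=1e-4, rng=random):
    """Disruption factor for agent i (sketch; ranges are assumed values)."""
    xi = population[i]
    dist_best = math.dist(xi, best)
    dist_nearest = min(
        math.dist(xi, xj) for j, xj in enumerate(population) if j != i
    )
    if dist_best >= 1.0:
        # Far from the best solution: large perturbation (exploration).
        return dist_nearest * rng.uniform(-wide, wide)
    # Close to the best solution: tiny perturbation around 1 (exploitation).
    return 1.0 + dist_best * rng.uniform(-narrow / 2.0, narrow / 2.0)


def disrupt(i, population, best, rng=random):
    """Apply the factor multiplicatively to agent i (assumed usage)."""
    factor = disruption_factor(i, population, best, rng=rng)
    return [x * factor for x in population[i]]
```

Under these assumptions, agents far from the best solution are scattered widely, while agents near it are only nudged, which is how the operator maintains diversity without destroying good solutions.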

3. Developed Method

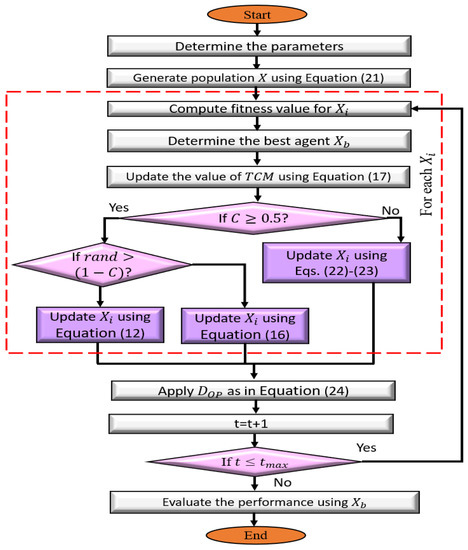

The framework of the presented DJSD method is illustrated in Figure 1. The improved DJSD aims to enhance the performance of the traditional JSA using dynamic differential Simulated Annealing and a disruption operator. Each of these techniques is applied to enhance the exploration and exploitation of JSA.

Figure 1.

Schematic flowchart of the developed DJSD method.

The developed DJSD starts by producing the initial population and then computing the fitness value of each agent in this population. This is followed by determining the best agent, which is the one with the smallest fitness value. The agents are then updated according to the value of the time control mechanism (TCM), which determines whether an agent is updated using the ocean current or the jellyfish swarm. In the latter case (i.e., the jellyfish swarm), the traditional operators of the JSA algorithm are applied to update the current agent. Otherwise, the agent is updated through a competition between the JSA ocean-current operators and the dynamic differential Simulated Annealing operators. Then, the SA mechanism, which decreases the probability of accepting a new agent as the temperature is reduced, is applied. Finally, after updating the current population, the disruption operator is used to improve the diversity of X.

3.1. Initial Stage

The developed DJSD starts at this point by constructing an initial population with N agents, and this is formulated as:

In Equation (21), stands for random D values. and refer to the limits of the search domain.
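The initial stage can be illustrated with the short sketch below, which draws each coordinate uniformly between the lower and upper limits of the search domain and then identifies the agent with the smallest fitness value. The function names and the seed argument are assumptions introduced for this example.

```python
import random


def initial_population(n_agents, lower, upper, seed=None):
    """N agents, each coordinate drawn uniformly inside the search limits."""
    rng = random.Random(seed)
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        for _ in range(n_agents)
    ]


def best_agent(population, fitness):
    """Return the agent with the smallest fitness value and that value."""
    values = [fitness(agent) for agent in population]
    index = min(range(len(population)), key=lambda k: values[k])
    return population[index], values[index]
```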

3.2. Updating Stage

At this stage, the DJSD starts updating the agents within the current population (X) by calculating the fitness value for each agent . The next step in DJSD is to allocate the best agent , which has the best fitness value . Then the value of TCM is improved using Equation (17). In cases where , the operators of the jellyfish swarm are used to update . Otherwise, the combination of ocean current, DJSD, and is used to enhance . This is achieved based on the dynamic characteristics of the hammer during the search for the optimal solution. This represents a fluctuating parameter between the ocean current and the operator of SA. Hence, this process is formulated as:

In Equation (22), is random number and represents the remaining mathematical function. are random solutions chosen from the current population X, while denotes a random solution generated in the search space.

After that, the new value of (i.e., ) is accepted at temperatures elevated above low temperatures, and this is performed depending on the probability value p as in the traditional SA. This process is formulated as follows.

The next step is to apply the disruption operator to the updated population X. However, applying it to every agent at every iteration increases the computational time of the developed method. Accordingly, the disruption operator is applied according to the following formula:

3.3. Terminal Stage

The stop conditions are checked within this stage; if they are not met, the updating stage is repeated. Otherwise, the best solution found so far () is returned.

4. Experimental Results and Discussion

In this section, the performance of the presented optimizer is evaluated through several experiments and comprehensive comparisons. The experiments use a set of thirty classical benchmark functions. The results of the developed DJSD are compared with those of several metaheuristic optimizers, including the whale optimization algorithm (WOA) [25], artificial ecosystem optimization (AEO) [26], the chimp optimization algorithm (Chimp) [27], the firefly algorithm (FA) [28], moth-flame optimization (MFO) [35], and the traditional JSA. The parameter settings for all compared algorithms are given in Table 1. These values are chosen based on the original implementations of the algorithms. Each algorithm is run 30 times with a population size of 30, and the maximum number of iterations per run is set to 1000. The experiments and analyses are executed using MATLAB R2018b on a machine equipped with an Intel Core i5 CPU and 4 GB RAM running Windows 10 64-bit.

Table 1.

The value of each parameter of compared methods.

4.1. Experimental Series 1: Mathematical Optimization Problems

The major objective of the current experimental series is to assess the ability of the developed method to determine the optimal solution of classical benchmark functions [29]. The description of these benchmark functions is given in Table 2. It is evident from the table that there are two types of functions, namely unimodal (UM) and multimodal (MM). The unimodal type (F1–F10) is used to assess the ability of the MH technique to find the solution inside a search space with a single optimum. Likewise, the multimodal type (F11–F30) is applied to test the capability of the MH method to find the optimal solution inside a search domain with multiple local optima.

Table 2.

Formulation of global problems.

Results and Discussions

The findings of DJSD and other peer algorithms in terms of solving classical global benchmark functions are given in Table 3, Table 4, Table 5 and Table 6. From Table 3, which illustrates the average of the fitness value, it is clear that DJSD outperforms other peer methods in most of the tested functions. More specifically, it achieves the smallest fitness value in eighteen functions, representing 60% of the total tested functions, followed by AEO and FA ranked in the second and third places, respectively. Conversely, the traditional JSA only provides better results than MFO, Chimp, and WOA.

Table 3.

Average of fitness value obtained by each algorithm.

Table 4.

Standard deviation (STD) of fitness value obtained by each algorithm.

Table 5.

Best fitness values obtained by each algorithm.

Table 6.

Worst fitness values obtained by each algorithm.

Moreover, it can be observed from the standard deviation values given in Table 4 that the developed DJSD method is more stable than the other MH techniques, while JSA, AEO, MFO, FA, Chimp, and WOA achieved the smallest STD values in 5, 14, 2, 8, 3, and 4 out of 30 functions, respectively. In terms of the best fitness value, as provided in Table 5, AEO attains the best value in seventeen functions, followed by DJSD and FA, which do so in sixteen and fifteen functions, respectively. In addition, it can be noticed from the worst fitness values given in Table 6 that the proposed DJSD still obtains better results even in its worst case. In particular, it provides the smallest fitness values in sixteen functions, followed by AEO. On the other hand, JSA and FA have nearly the same performance, attaining the smallest value in only eight and nine functions, respectively.

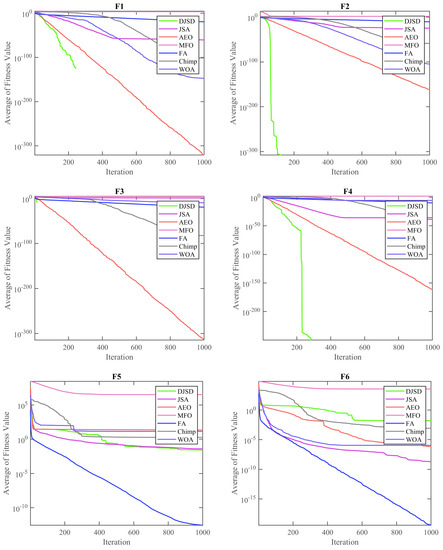

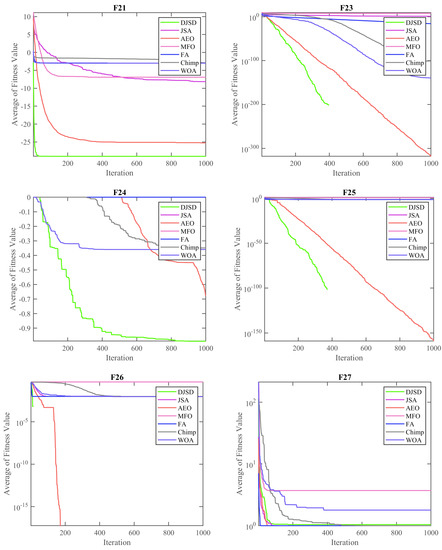

Figure 2 and Figure 3 depict the convergence curves of the average fitness value over the total number of iterations for representative unimodal and multimodal functions, respectively. It can be observed from these convergence curves that the developed DJSD converges faster than the other MH techniques.

Figure 2.

Convergence curves of each approach for F1–F6.

Figure 3.

Convergence curves of each approach for F21, F23, F24, F25, F26, and F27.

To further analyze the performance of the developed DJSD, a non-parametric test, the Friedman test, was applied to identify whether there is a significant difference between DJSD and the other MH techniques. Table 7 shows the mean rank obtained by the Friedman test for all compared techniques. DJSD achieves the best mean rank in terms of the average, STD, and worst fitness values. However, in terms of the best fitness value, DJSD ranks second behind the AEO algorithm.

Table 7.

Friedman Test.
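The mean ranks reported by the Friedman test can be computed as in the sketch below. Each row holds the result of every algorithm on one benchmark function, algorithms are ranked within each row (rank 1 for the smallest value, average ranks for ties), and the ranks are averaged over all functions. The function name and the data layout are assumptions made for this example; the sketch does not compute the test statistic or its p-value.

```python
def mean_ranks(scores):
    """Friedman-style mean ranks; scores[f][a] is algorithm a on function f.

    Lower scores are better. Tied scores share the average of their ranks.
    """
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            # Extend the group while the next score ties with this one.
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            shared_rank = (pos + end) / 2.0 + 1.0
            for k in range(pos, end + 1):
                totals[order[k]] += shared_rank
            pos = end + 1
    return [total / len(scores) for total in totals]
```

The algorithm with the smallest mean rank is the one that performs best on average across the functions.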

In summary, the reported results demonstrate the high ability of DJSD to address global mathematical optimization problems. This can be attributed to the integration of dynamic Simulated Annealing and the disruption operator with JSA.

4.2. Experimental Series 2: Cloud Task Scheduling Problems

Cloud computing is the provision of computing resources and services over the Internet. These services are delivered to cloud consumers under Service Level Agreement (SLA) specifications. The SLAs are made up of various quality of service (QoS) parameters promised by the cloud provider, among them minimal execution time, high performance, service availability, low energy consumption, and low prices. These parameters can be considered separately when just one of them is crucial to the system performance, or combined when several of them are related. Task scheduling is a decision-making process that deals with allocating computing resources to tasks, and its primary purpose is to target one or more objectives. Therefore, efficient task scheduling is one of the critical steps to effectively leverage the power of cloud computing [30].

4.2.1. Problem Formulation

The scheduling model for the considered scheduling issue in cloud computing is described as follows. Consider a data center consisting of several physical servers or computational resources. These physical servers may vary in the number of CPU cores (processing elements), memory size, network bandwidth, and storage capacity [31]. These resources can be scaled up or down to meet the required QoS and SLAs. Suppose are a bunch of virtual machines (VMs) available within a data center. Every has its processing capability measured by MIPS (millions of instructions per second). Suppose is a collection of user requests submitted by cloud subscribers to be performed on the set of VMs. Every task has a length expressed in millions of instructions (MI).

In this study, an expected time to compute (ETC) matrix is used to keep the time expected to perform a certain task (service) on various VMs [31]. The element signifies the ETC of the task on the VM, where .

where represents the length of the task and signifies the processing power of the VM.
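The ETC matrix can be built directly from the task lengths and the VM processing capacities, as in the following sketch: each entry is the task length in MI divided by the VM speed in MIPS, which gives the expected execution time in seconds. The function and argument names are assumptions for illustration.

```python
def etc_matrix(task_lengths_mi, vm_mips):
    """Expected time to compute: etc[i][j] = length of task i / speed of VM j."""
    return [[length / mips for mips in vm_mips] for length in task_lengths_mi]
```

For example, a task of 56,000 MI needs 56 s on a 1000 MIPS VM and 11.2 s on a 5000 MIPS VM.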

Our objective in this study is to ensure better QoS in terms of makespan and energy efficiency. Makespan is the amount of time taken for the completion of all tasks [32]. Therefore, appropriate mapping of tasks to VMs requires a minimal makespan. In general, makespan (MKS) is computed by the following equation.

Furthermore, energy consumption is referred to as the amount of energy consumed by computing machines. Therefore, the energy consumption should be minimal to enhance the system performance and provide better QoS to the users. Recall that the energy consumed by is determined by the energy consumed in the active state plus the energy consumed in the idle state [31]. Additionally, the energy consumption of the idle VM is about 60% of its active state [33]. Hence, the energy consumed (in terms of Joules) by can be determined as:

where represents the total execution time of . and denote the consumed energy by in the active and idle state, respectively. The overall energy consumption () of the cloud system is computed as given in Equation (30).
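The makespan and energy definitions above can be illustrated with the sketch below. A schedule is represented as a list that gives the VM index assigned to each task (an assumed encoding). Each VM is busy for the sum of the ETC values of its tasks, the makespan is the largest busy time, and each VM consumes its active rate while busy and 60% of that rate while idle until the makespan. The per-VM active rates are hypothetical inputs, and any additional scaling used in Equation (29) is not reproduced here.

```python
def vm_busy_times(schedule, etc, n_vms):
    """Total execution time of each VM; schedule[i] is the VM of task i."""
    busy = [0.0] * n_vms
    for task, vm in enumerate(schedule):
        busy[vm] += etc[task][vm]
    return busy


def makespan(busy):
    """Completion time of the last VM to finish."""
    return max(busy)


def total_energy(busy, active_rate, idle_ratio=0.6):
    """Energy over all VMs: active while busy, idle for the rest (sketch).

    active_rate[j] is a hypothetical energy rate of VM j in the active
    state; the idle rate is idle_ratio times the active rate.
    """
    mks = makespan(busy)
    energy = 0.0
    for time_busy, rate in zip(busy, active_rate):
        energy += time_busy * rate + (mks - time_busy) * idle_ratio * rate
    return energy
```

With this accounting, a schedule that balances the load shortens the makespan and also reduces the idle energy paid by the VMs that finish early.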

Since the whole performance of the cloud system is heavily influenced by the makespan and energy consumption factors, our main objective here is to ensure a better makespan with less energy consumption. Therefore, the considered problem is classified as a bi-objective optimization problem. Then, the fitness function is given by:

where signifies the balance parameter between the fitness function’s factors. Finally, the goal of our task scheduling is to search the schedule that minimizes F.
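A weighted-sum fitness of this kind can be sketched as follows. The sketch assumes that the balance parameter weights the energy term and that its complement weights the makespan, which is consistent with the later remark that a value of 0.7 is chosen because energy reduction is the major goal; the exact form should be checked against Equation (31). The function names are hypothetical.

```python
def schedule_fitness(makespan_value, energy_value, balance=0.7):
    """Bi-objective fitness as a weighted sum (assumed weighting order)."""
    return balance * energy_value + (1.0 - balance) * makespan_value


def better_schedule(candidates):
    """Pick the (makespan, energy) pair with the smallest fitness."""
    return min(candidates, key=lambda pair: schedule_fitness(pair[0], pair[1]))
```

Since the two terms have different units and magnitudes, the balance parameter also determines how strongly the larger-valued objective dominates the sum.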

4.2.2. Experimental Environment and Datasets

To demonstrate the applicability of the developed DJSD approach, we perform computational experiments using different workload instances. Three workload traces are used to validate the proposed algorithm: a synthetic workload, the HPC2N workload, and the NASA Ames iPSC/860 workload. The synthetic workload contains 1500 tasks varying in length from 2000 to 56,000 MI, created based on a uniform distribution. Table 8 describes the synthetic workload. The real workload traces, HPC2N and NASA Ames, are taken from the “Parallel Workload Archives” [34]. HPC2N encompasses the statistics of 527,371 tasks, while NASA Ames includes the statistics of 42,264 tasks.

Table 8.

Attributes of the synthetic workload.

The cloud environment comprises a single data center and 20 VMs with different setups hosted on two host machines in all experiments. The configurations of the host machines and VMs are displayed in Table 9. As evident from Table 9, the fastest and slowest VMs have a processing capacity of 5000 and 1000 MIPS, respectively.

Table 9.

Experimental parameter settings.

4.2.3. Results and Discussions

In this paper, eight state-of-the-art metaheuristics are chosen as peer algorithms for comparative analysis: the standard JSA [15], AEO [26], MFO [35], FA [28], Chimp [27], WOA [25], golden jackal optimization (GJO) [36], and SA [23]. Each algorithm is executed in 20 independent runs on each scheduling instance in order to produce more reliable estimates. Moreover, the balance parameter of the fitness function is set to 0.7, as our major goal is reducing energy consumption.

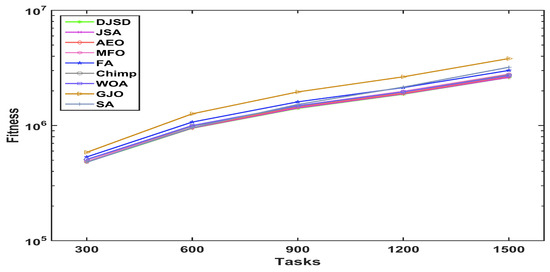

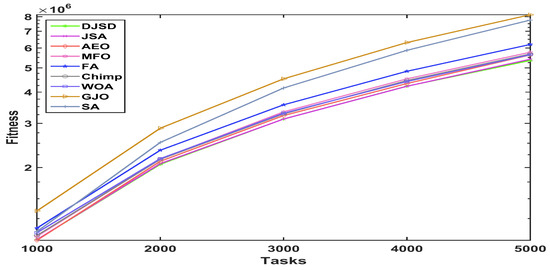

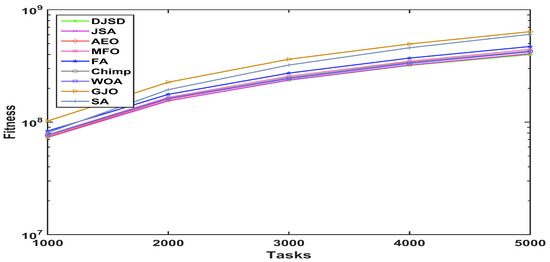

To scrutinize the behavior of the presented DJSD algorithm, the average fitness values of the nine compared algorithms are plotted in Figure 4, Figure 5 and Figure 6 for different numbers of tasks and datasets. The x-axis of each graph shows the number of tasks, whereas the y-axis represents the value of the fitness function. In particular, as shown in Figure 4, DJSD achieves better fitness values for the synthetic workload when task sizes range from 300 to 1500. In a similar manner, the comparative results in Figure 5 show that on the NASA Ames iPSC/860 dataset, DJSD performs much better than the other eight peer algorithms in terms of the fitness function. Additionally, Figure 6 illustrates that when the number of tasks ranges from 1000 to 5000, DJSD performs well on the HPC2N workload. Overall, the curves affirm the superior performance of the presented DJSD approach and its ability to identify near-optimum solutions on almost all datasets.

Figure 4.

Convergence curve for the synthetic workload.

Figure 5.

Convergence curve for NASA iPSC real workload.

Figure 6.

Convergence curve for HPC2N real workload.

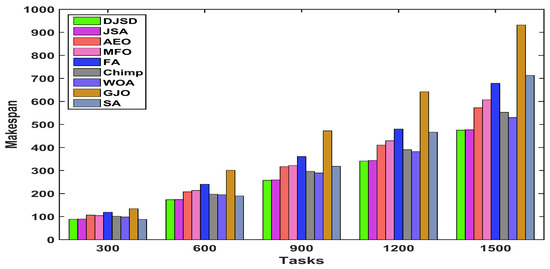

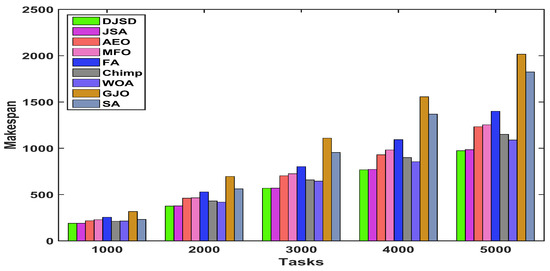

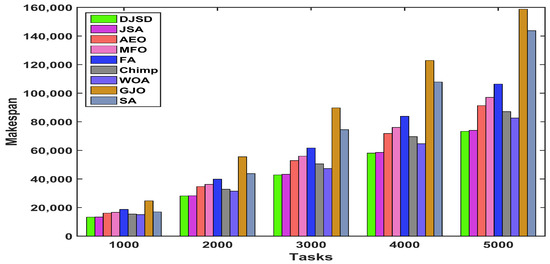

The average makespan values produced by DJSD, JSA, AEO, MFO, FA, Chimp, WOA, GJO, and SA for the synthetic and real workload traces are compared in Figure 7, Figure 8 and Figure 9. In comparison to the traditional JSA and the other peer algorithms, the suggested DJSD approach generates the best average makespan for the synthetic workload, as demonstrated in Figure 7. When the NASA iPSC workload is employed, Figure 8 shows that DJSD delivers better average makespan values than the other peer algorithms. In addition, for the HPC2N workload, Figure 9 shows that DJSD attains lower average makespan values than the existing algorithms. Overall, the reported results reveal that DJSD achieves a better average makespan than the other eight comparative methods for all investigated instances.

Figure 7.

Average makespan for the synthetic workload.

Figure 8.

Average makespan for NASA iPSC real workload.

Figure 9.

Average makespan for HPC2N real workload.

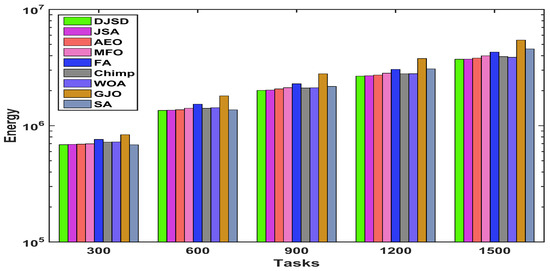

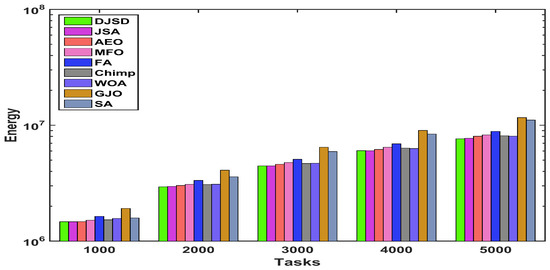

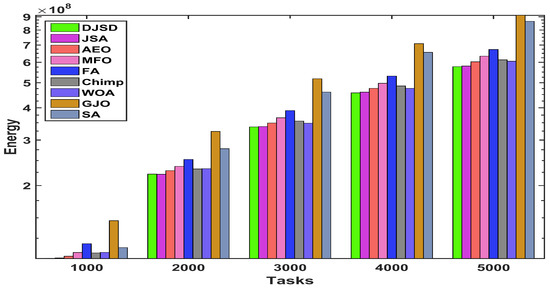

Figure 10, Figure 11 and Figure 12 compare the total energy consumption of the DJSD, JSA, AEO, MFO, FA, Chimp, WOA, GJO, and SA algorithms on the synthetic and real datasets, including HPC2N and NASA. Figure 10 illustrates that, for the synthetic dataset, DJSD consumes the least amount of energy among the compared algorithms. Similarly, Figure 11 and Figure 12 demonstrate that DJSD delivers the lowest energy consumption for the NASA iPSC and HPC2N workloads, respectively. In brief, the comparison of experimental outcomes indicates that for all tested instances and datasets, DJSD provides lower energy consumption than the other comparative methods.

Figure 10.

Total energy consumption for the synthetic workload.

Figure 11.

Total energy consumption for NASA iPSC real workload.

Figure 12.

Total energy consumption for HPC2N real workload.

To summarize, the results mentioned above confirm the benefit of integrating the SA strategy and the disruption operator with the JSA algorithm. The findings also demonstrate that the DJSD algorithm produces better solution diversity and quality, resulting in near-optimal solutions.

5. Conclusions

The artificial Jellyfish Search Algorithm (JSA) is a recent, promising search method that simulates the behavior of jellyfish in the ocean. It has been applied to solve various optimization problems. However, it faces some difficulties in the search process when solving complicated problems, particularly the local optima problem and the low diversity of candidate solutions. This paper suggests a novel dynamic search method, called DJSD, that combines the artificial jellyfish search optimizer with two search techniques (Simulated Annealing and disruption operators). The proposed method enhances JSA in two stages. In the first stage, the Simulated Annealing operators are incorporated into the artificial jellyfish search optimizer, in a competitive manner, to enhance its ability to discover more feasible regions. This modification is performed dynamically by using a fluctuating parameter representing a hammer’s characteristics to keep the solutions diverse and balance the search processes. In the second stage, the disruption operator is employed in the exploitation stage to further improve the diversity of the candidate solutions throughout the optimization process and avert the local optima problem.
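For readers unfamiliar with the Simulated Annealing component, the following sketch illustrates the standard Metropolis acceptance rule with geometric cooling on a toy minimization problem. It is a generic SA loop, not the DJSD update itself: the function names, the cooling factor `alpha`, the step size, and the sphere test function are all assumptions chosen for illustration.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def sa_accept(current_cost: float, candidate_cost: float,
              temperature: float, rng: random.Random) -> bool:
    """Metropolis rule: always accept improvements, and accept a worse
    candidate with probability exp(-(candidate - current) / temperature)."""
    if candidate_cost <= current_cost:
        return True
    if temperature <= 0.0:
        return False
    return rng.random() < math.exp((current_cost - candidate_cost) / temperature)


def anneal(cost: Callable[[Sequence[float]], float], start: Sequence[float],
           steps: int = 200, t0: float = 1.0, alpha: float = 0.95,
           step_size: float = 0.5, seed: int = 0) -> Tuple[List[float], float]:
    """Minimal SA loop with geometric cooling (temperature *= alpha)."""
    rng = random.Random(seed)
    current = list(start)
    current_cost = cost(current)
    best, best_cost = list(current), current_cost
    temperature = t0
    for _ in range(steps):
        # Propose a random neighbor of the current solution.
        candidate = [x + rng.uniform(-step_size, step_size) for x in current]
        candidate_cost = cost(candidate)
        if sa_accept(current_cost, candidate_cost, temperature, rng):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        temperature *= alpha
    return best, best_cost


def sphere(x: Sequence[float]) -> float:
    """Toy objective: sum of squares, minimized at the origin."""
    return sum(v * v for v in x)


best, best_cost = anneal(sphere, [2.0, -3.0])
print(best_cost)
```

Accepting occasional worse candidates at high temperature is what lets an SA-style operator leave a local optimum, which is the behavior the conclusions attribute to the SA stage.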

Two experiment series were conducted to validate the performance of the proposed DJSD method. In the first experiment series, thirty classical benchmark functions were used to validate the effectiveness of DJSD compared with other well-known search methods. The findings revealed that the suggested DJSD approach obtained encouraging results, discovered new search regions, and found new best solutions for most test cases. In the second experiment series, a set of tests was conducted on the task scheduling problem in cloud computing applications to further demonstrate DJSD’s ability to function in real-world applications. The real-world application results confirmed that the proposed DJSD is competent in dealing with challenging real applications. Moreover, it obtained high performance compared to other similar methods according to several standard evaluation measures, including fitness function, makespan, and energy consumption.

The proposed method can be tested further to find potential improvements in future works. Furthermore, it can be combined with other search methods to further improve its searchability in dealing with complicated problems. Different optimization problems can be tested to investigate the performance of the proposed technique, such as text clustering, photovoltaic cell parameters, engineering and industrial optimization problems, forecasting models, feature selection, image segmentation, and multi-objective problems.

Author Contributions

Conceptualization, I.A., L.A., S.A., D.E. and M.A.E.; methodology, I.A., L.A., S.A., D.E. and M.A.E.; software, I.A., L.A. and M.A.E.; validation, I.A., L.A., S.A., D.E. and M.A.E.; formal analysis, I.A., L.A. and M.A.E.; investigation, I.A., L.A. and M.A.E.; writing—original draft preparation, I.A., L.A., S.A., D.E. and M.A.E.; writing—review and editing, I.A., L.A., S.A., D.E. and M.A.E.; visualization, I.A., L.A. and M.A.E.; supervision, M.A.E.; project administration, I.A., L.A., S.A., D.E. and M.A.E.; and funding acquisition, S.A. All authors have read and agreed to the published version of the manuscript.

Funding

Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R197), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Data Availability Statement

The data are available upon request from the corresponding author.

Acknowledgments

Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2022R197), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Alshinwan, M.; Abualigah, L.; Shehab, M.; Abd Elaziz, M.; Khasawneh, A.M.; Alabool, H.; Al Hamad, H. Dragonfly algorithm: A comprehensive survey of its results, variants, and applications. Multimed. Tools Appl. 2021, 80, 14979–15016.

- Xia, W.; Wu, Z. An effective hybrid optimization approach for multi-objective flexible job-shop scheduling problems. Comput. Ind. Eng. 2005, 48, 409–425.

- He, Q.; Wang, L. An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng. Appl. Artif. Intell. 2007, 20, 89–99.

- Karakoyun, M.; Ozkis, A.; Kodaz, H. A new algorithm based on gray wolf optimizer and shuffled frog leaping algorithm to solve the multi-objective optimization problems. Appl. Soft Comput. 2020, 96, 106560.

- Gupta, S.; Deep, K. A memory-based grey wolf optimizer for global optimization tasks. Appl. Soft Comput. 2020, 93, 106367.

- Schuëller, G.I.; Jensen, H.A. Computational methods in optimization considering uncertainties—An overview. Comput. Methods Appl. Mech. Eng. 2008, 198, 2–13.

- Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.

- Afshari, H.; Hare, W.; Tesfamariam, S. Constrained multi-objective optimization algorithms: Review and comparison with application in reinforced concrete structures. Appl. Soft Comput. 2019, 83, 105631.

- Gupta, S.; Deep, K.; Mirjalili, S. An efficient equilibrium optimizer with mutation strategy for numerical optimization. Appl. Soft Comput. 2020, 96, 106542.

- Abualigah, L.; Diabat, A.; Mirjalili, S.; Abd Elaziz, M.; Gandomi, A.H. The arithmetic optimization algorithm. Comput. Methods Appl. Mech. Eng. 2021, 376, 113609.

- Bansal, J.C.; Singh, S. A better exploration strategy in Grey Wolf Optimizer. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 1099–1118.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization Algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Sulaiman, M.H.; Mustaffa, Z.; Saari, M.M.; Daniyal, H. Barnacles mating optimizer: A new bio-inspired algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2020, 87, 103330.

- Abd Elaziz, M.; Attiya, I. An improved Henry gas solubility optimization algorithm for task scheduling in cloud computing. Artif. Intell. Rev. 2021, 54, 3599–3637.

- Chou, J.S.; Truong, D.N. A novel metaheuristic optimizer inspired by behavior of jellyfish in ocean. Appl. Math. Comput. 2021, 389, 125535.

- Gouda, E.A.; Kotb, M.F.; El-Fergany, A.A. Jellyfish search algorithm for extracting unknown parameters of PEM fuel cell models: Steady-state performance and analysis. Energy 2021, 221, 119836.

- Chou, J.S.; Truong, D.N. Multiobjective optimization inspired by behavior of jellyfish for solving structural design problems. Chaos Solitons Fractals 2020, 135, 109738.

- Manivannan, S.; Selvakumar, S. A Spectrum Defragmentation Algorithm Using Jellyfish Optimization Technique in Elastic Optical Network (EON). Wirel. Pers. Commun. 2021, 1–19.

- Chou, J.S.; Truong, D.N. Multistep energy consumption forecasting by metaheuristic optimization of time-series analysis and machine learning. Int. J. Energy Res. 2021, 45, 4581–4612.

- Dhevanandhini, G.; Yamuna, G. An Efficient Lossless Video Watermarking Extraction Process with Multiple Watermarks Using Artificial Jellyfish Algorithm. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 3048–3055.

- Ghafil, H.N.; Jármai, K. Dynamic differential annealed optimization: New metaheuristic optimization algorithm for engineering applications. Appl. Soft Comput. 2020, 93, 106392.

- Bertsimas, D.; Tsitsiklis, J. Simulated annealing. Stat. Sci. 1993, 8, 10–15.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Ibrahim, R.A.; Abd Elaziz, M.; Lu, S. Chaotic opposition-based grey-wolf optimization algorithm based on differential evolution and disruption operator for global optimization. Expert Syst. Appl. 2018, 108, 1–27.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Zhao, W.; Wang, L.; Zhang, Z. Artificial ecosystem-based optimization: A novel nature-inspired meta-heuristic algorithm. Neural Comput. Appl. 2020, 32, 9383–9425.

- Khishe, M.; Mosavi, M.R. Chimp optimization algorithm. Expert Syst. Appl. 2020, 149, 113338.

- Yang, X.S. Firefly Algorithms for Multimodal Optimization. In Stochastic Algorithms: Foundations and Applications; Watanabe, O., Zeugmann, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 169–178.

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.P.; Auger, A.; Tiwari, S. Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization. KanGAL Rep. 2005, 2005005, 2005.

- Attiya, I.; Abualigah, L.; Elsadek, D.; Chelloug, S.A.; Abd Elaziz, M. An Intelligent Chimp Optimizer for Scheduling of IoT Application Tasks in Fog Computing. Mathematics 2022, 10, 1100.

- Attiya, I.; Elaziz, M.A.; Abualigah, L.; Nguyen, T.N.; Abd El-Latif, A.A. An Improved Hybrid Swarm Intelligence for Scheduling IoT Application Tasks in the Cloud. IEEE Trans. Ind. Inform. 2022.

- Attiya, I.; Zhang, X.; Yang, X. TCSA: A dynamic job scheduling algorithm for computational grids. In Proceedings of the 2016 First IEEE International Conference on Computer Communication and the Internet (ICCCI), Wuhan, China, 13–15 October 2016; pp. 408–412.

- Mishra, S.K.; Puthal, D.; Rodrigues, J.J.P.C.; Sahoo, B.; Dutkiewicz, E. Sustainable Service Allocation Using a Metaheuristic Technique in a Fog Server for Industrial Applications. IEEE Trans. Ind. Inform. 2018, 14, 4497–4506.

- Parallel Workloads Archive. Available online: http://www.cse.huji.ac.il/labs/parallel/workload/logs.html (accessed on 28 April 2021).

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Chopra, N.; Ansari, M.M. Golden jackal optimization: A novel nature-inspired optimizer for engineering applications. Expert Syst. Appl. 2022, 198, 116924.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).