Abstract

Matrix inversion is frequently encountered in mathematics, and many methods, including the zeroing neural network (ZNN), have been proposed to solve it. Although the conventional fixed-parameter ZNN (FPZNN) can successfully address the matrix inversion problem, it tends to achieve either fast convergence or robustness, but not both. To overcome this limitation, a double accelerated convergence ZNN (DAZNN) with noise suppression and arbitrary time convergence is proposed for the dynamic matrix inversion problem (DMIP). The double accelerated convergence of the DAZNN model is achieved by specially designed exponential decay variable parameters and an exponential-type sign-bi-power activation function (AF). Two theoretical analyses verify the DAZNN model’s arbitrary time convergence and its robustness against additive bounded noise. A matrix inversion example illustrates that the DAZNN model performs better on the DMIP than conventional FPZNNs employing six other AFs. Lastly, a dynamic positioning example that employs the evolution formula of the DAZNN model verifies its applicability.

1. Introduction

As a fundamental mathematical issue, matrix inversion plays a crucial role in applied mathematics and engineering fields such as control application [1], quaternion [2], MIMO systems [3,4], and robot kinematics [5,6,7]. Therefore, numerous numerical algorithms have been developed to address this issue. For example, Cholesky decomposition algorithm [8] and Newton iteration algorithm [9] were utilized to solve matrix inversion. However, as the dimensionality of matrix issues increases, the original numerical algorithms are no longer able to handle these complex matrix problems rapidly and effectively [10]. In order to counteract the disadvantages of the above methods, neural networks with the capacity to process problems in parallel were introduced [11]. In addition, neural networks have recently been identified as a hotspot of research, and have been applied to numerous areas, such as medical image denoising [12], hydrogen economy [13], biometric verification [14], quadrotor [15], robots [16], synchronization problem [17], optimal control [18], virus forecasting [19], inflation prediction [20], portfolio optimization, and selection [21,22]. Considering the fact that gradient-based neural networks cannot effectively solve time-varying problems, a zeroing neural network (ZNN), a branch of the recurrent neural network (RNN), was proposed for computing time-variant matrix inversion problem [23].

Nevertheless, as a consequence of the convergence speed limitations, conventional ZNNs with linear activation function (AF) may not be capable of solving large-scale applications online [24]. Consequently, a nonlinear sign-bi-power (SBP) AF was presented in order to shorten the convergence time of ZNN models [25]. ZNN models that use SBPAF for acceleration have been widely reported [26]. However, all the studies mentioned above fail to consider the interference of noise, yet noise is an inherent part of all practical applications [27]. As a result, many researchers proposed a novel class of ZNN models using an integral term to address issues that were affected by noise interference [28,29]. For instance, a modified ZNN model with implicit noise tolerance was proposed by Jin et al. in [30] for the solution of quadratic programming problems. A noise-tolerant ZNN model was investigated to calculate complex matrix inversion with noise [31]. For further research, a unified framework for ZNN with an integral term was investigated, and its superiority to the conventional ZNN model was verified [32].

Regrettably, the vast majority of existing ZNN models, including those mentioned above, employ fixed convergence parameters, even though they achieve fast convergence or noise tolerance. In practice, the convergence parameter generally varies with time in a hardware system [33]. In response to this issue, variable-parameter ZNN/RNN models with fast convergence and strong robustness have been studied [34,35,36,37]. For example, a variable-gain RNN with fast convergence was proposed for dynamic quadratic programming [34]. Xiao et al. developed a novel varying-parameter ZNN (VPZNN) for handling matrix inversion in [35], which exhibits significantly improved convergence speed compared with the fixed-parameter ZNN (FPZNN). Further, a varying-parameter RNN with an exponential-gain time-varying term was considered for matrix inversion [36]. Nevertheless, the variable parameters of the aforesaid VPZNN models tend to infinity as time increases, which is clearly unreasonable in hardware implementations. Thus, we design two exponential decay variable parameters in order to further accelerate the convergence of the model. Besides, an exponential-type SBPAF (ETSBPAF) is designed to obtain better convergence performance. On this basis, the double accelerated convergence ZNN (DAZNN), characterized by noise suppression and arbitrary time convergence, is proposed as a new model for the dynamic matrix inversion problem (DMIP). In addition, two theoretical analyses demonstrate the arbitrary time convergence of the DAZNN model as well as its robustness under additive bounded noise. Moreover, an illustrative example is employed to assess the validity of the theory, as well as the superiority of DAZNN over FPZNNs utilizing other AFs (i.e., linear AF, bipolar-sigmoid AF, tunable AF, sign-bi-power AF, predefined time AF, and improved predefined time AF). Lastly, an angle of arrival (AOA) dynamic positioning example that employs the evolution formula of the DAZNN model verifies its applicability.

The remainder of this paper is organized into five sections. The problem description and model design are presented in Section 2. Section 3 analyzes the arbitrary time convergence and robustness of the proposed DAZNN model. An illustrative example is provided in Section 4, and the AOA dynamic positioning simulation with sine noise is conducted in Section 5. Section 6 summarizes the work. The main contributions of this paper are as follows.

- As opposed to the ZNN generated by the original error function for the static matrix inversion, this work develops the DAZNN generated by a novel error function to solve dynamic matrix inversion;

- Two new exponential decay variable parameters and a novel exponential-type SBPAF are incorporated into the DAZNN model in order to achieve double accelerated convergence and stronger noise suppression;

- Two rigorous theoretical analyses are employed in order to demonstrate the arbitrary time convergence of the DAZNN model as well as its robustness under additive bounded noise;

- The illustrative example confirms that the DAZNN model is superior to the fixed-parameter model activated by six other activation functions. Besides, the evolution formula of the DAZNN model is applied to AOA dynamic positioning with sine noise to further illustrate the model’s applicability.

2. Problem Formulation and Models Design

In this section, the dynamic matrix inversion problem is first presented. Then, the design procedures of the fixed-parameter ZNN model and the proposed DAZNN model with variable parameters are introduced.

2.1. Problem Formulation

Consider a dynamic matrix inversion problem (DMIP):

where is a known non-singular and smooth time-varying coefficient matrix; is an unknown matrix and denotes the unit matrix.
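In notation commonly used in the ZNN literature, the DMIP can be written as below. The symbols $A(t)$ (known coefficient matrix), $X(t)$ (unknown matrix), and $I$ (unit matrix) are assumed here for illustration.

```latex
% Assumed notation: A(t) known, nonsingular, and smooth; X(t) unknown; I the unit matrix.
A(t)\,X(t) = I, \qquad t \in [0, +\infty) \tag{1}
```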

2.2. Fixed-Parameter ZNN

Most existing studies consider only the ZNN model generated by the original error function (that is, ), without considering the diversity of ZNN models. Such diversity can provide more options for hardware implementation. Since a ZNN model is generated by an error function and an evolution formula [39,40], different error functions lead to different ZNN models [38]. Thus, to increase the variety of ZNN models, a novel error function is designed as

Then according to the noise tolerance evolution formula [32], we have

in which , and denotes the odd increasing activation function (AF) array, that is

where is the element of . In this manner, we can obtain the fixed-parameter ZNN (FPZNN) model:

Listed below are some commonly used AFs against which the novel AF is compared.

- (1) Linear AF (LAF) [41]:

- (2) Bipolar-sigmoid AF (BSAF) [25]: where .

- (3) Tunable AF (TAF) [42]: where , .

- (4) Sign-bi-power AF (SBPAF) [25]: where is defined as

- (5) Predefined time AF (PTAF) [43]: where , and .

- (6) Improved predefined time AF (IPTAF) [10]: where are the same as defined above and .
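As a rough illustration, three of the AFs above can be sketched in Python using the forms in which they usually appear in the ZNN literature. The parameter names `xi` and `r` and their default values are assumptions, and the expressions may differ in detail from the ones used in this paper. The TAF, PTAF, and IPTAF are omitted because their exact expressions are not reproduced here.

```python
import numpy as np

def laf(x):
    """Linear AF: phi(x) = x."""
    return x

def bsaf(x, xi=4.0):
    """Bipolar-sigmoid AF, (1 - exp(-xi*x)) / (1 + exp(-xi*x)).

    Written as tanh(xi*x/2), which is the same function but avoids overflow.
    xi > 0 is an assumed gain parameter.
    """
    return np.tanh(0.5 * xi * x)

def sbpaf(x, r=0.5):
    """Sign-bi-power AF: 0.5*sgn(x)*(|x|^r + |x|^(1/r)), with 0 < r < 1 assumed."""
    ax = np.abs(x)
    return 0.5 * np.sign(x) * (ax ** r + ax ** (1.0 / r))

# Check the two properties the text requires of an AF: odd and increasing.
x = np.linspace(-2.0, 2.0, 401)
for name, phi in [("LAF", laf), ("BSAF", bsaf), ("SBPAF", sbpaf)]:
    is_odd = np.allclose(phi(-x), -phi(x))
    is_increasing = bool(np.all(np.diff(phi(x)) > 0))
    print(f"{name}: odd={is_odd}, increasing={is_increasing}")
```

Each function acts element-wise, so the same code can be applied to a whole error matrix.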

2.3. DAZNN Model

While the FPZNN is a powerful tool for handling the DMIP, it is known to be inferior to the variable-parameter ZNN of [10,34,35] owing to its fixed-parameter nature. Therefore, a double accelerated convergence ZNN (DAZNN) model is proposed to solve the DMIP.
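For orientation, a fixed-parameter ZNN baseline can be sketched as follows. This is a minimal sketch and not the model of this paper: it uses the classical error function E = A(t)X(t) - I and the basic evolution formula dE/dt = -gamma * Phi(E), without the integral term or the novel error function introduced above. The test matrix `A(t)`, the gain `gamma`, the step size, and the forward-Euler integration are all assumptions chosen for illustration.

```python
import numpy as np

def A(t):
    """Hypothetical time-varying matrix (a rotation-like matrix, always invertible)."""
    s, c = np.sin(t), np.cos(t)
    return np.array([[s, c], [-c, s]])

def A_dot(t):
    """Analytical time derivative of A(t)."""
    s, c = np.sin(t), np.cos(t)
    return np.array([[c, -s], [s, c]])

def linear(E):
    return E

def sign_bi_power(E, r=0.5):
    a = np.abs(E)
    return 0.5 * np.sign(E) * (a ** r + a ** (1.0 / r))

def simulate_znn(phi, gamma=10.0, dt=1e-3, t_end=2.0):
    """Forward-Euler integration of A(t) X' = -A'(t) X - gamma * phi(A(t) X - I).

    Returns the Frobenius norm of the residual A(t_end) X - I.
    """
    X = np.zeros((2, 2))          # arbitrary initial state
    I = np.eye(2)
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        E = A(t) @ X - I
        X_dot = np.linalg.solve(A(t), -A_dot(t) @ X - gamma * phi(E))
        X = X + dt * X_dot
    return np.linalg.norm(A(t_end) @ X - I)

res_linear = simulate_znn(linear)
res_sbp = simulate_znn(sign_bi_power)
print(f"final residual, linear AF: {res_linear:.2e}")
print(f"final residual, SBP AF:    {res_sbp:.2e}")
```

In this sketch the gain `gamma` is constant over time, which is the fixed-parameter property that the variable-parameter design in this subsection replaces.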

Firstly, according to the novel variable parameters, the variable-parameter evolution formula can be depicted as [10,32]:

where and represent AF arrays. Besides, and are the elements in and . And and denote exponential-type SBPAF (ETSBPAF) as

with and and can be defined by

with . Therefore, the DAZNN model is written as

In this part, by deriving the noise tolerance evolution design formula, a specific DAZNN model is proposed for solving the DMIP (1).

3. Theoretical Analysis

In this section, the arbitrary time convergence and robustness performance of the DAZNN model (14) are theoretically analyzed. The corresponding results are given below.

3.1. Arbitrary Time Convergence

Theorem 1.

Given an invertible matrix , and starting from any initial value , DAZNN model (14) with ETSBPAF can converge to zero in the arbitrary time

in which , .

Proof of Theorem 1.

The DAZNN model (14) can be converted to evolution formula (11). Let denote the elements of ; the subsystem of DAZNN model (14) can then be directly analyzed as

with . For the sake of better carrying on convergence analysis, an intermediate variable is introduced as

which implies that

For this dynamic system, a Lyapunov function (LF) is constructed as . Note that is a positive definite function and radially unbounded, then

in which . It is evident that is negative definite, so the zero solution of is globally asymptotically stable. That is, can decay to zero within time . Without loss of generality, we consider and .

Case one: . First step, will decay to after time ; the second step, will decay to after time .

Step (a): . Equation (18) can be written as

Inequality (19) can be expressed as

Integrate both sides of the inequality (20) from 0 to :

By virtue of ,

Step (b): . Equation (18) is written as

Integrate both sides of the inequality (23) from to :

We can derive

Then, we can get convergence time of the first process in case one:

At this point, can converge to zero in case one.

Case two: . Then, will decay to after time . We have: . Equation (18) is written as

Integrate both sides of the inequality (27) from 0 to :

We can derive

Then, we can get convergence time of the first process in case two:

Thus, the convergence time of the first process can be calculated:

So, the subsystem (15) can be expressed as

Devise a new LF as

Further, the derivative of can be obtained:

where . Evidently, the zero solution of is globally asymptotically stable. Analogously, consider and .

Case one: . decreased to in the first step; decreased to in the second step. In a similar way, and can be calculated:

Case two: . Then, will decay to after time . Similarly, we have:

Furthermore, convergence time of the second process is calculated as

Then, we can get the total convergence time :

Note that the upper bound of our calculated time is independent of initial value . Thus the proof is now completed. □
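The two-step integration argument used in the proof can be illustrated on a simpler scalar system. The derivation below is a worked example under assumed notation ($\gamma > 0$, $0 < r < 1 < p$, initial error $e(0)$) with plain power terms. It is not the ETSBPAF of this paper and does not reproduce the bounds of Theorem 1.

```latex
% Simplified system (assumed for illustration):
%   \dot e = -\gamma\,\mathrm{sgn}(e)\,\bigl(|e|^{r} + |e|^{p}\bigr), \quad 0 < r < 1 < p,\ \gamma > 0.
\begin{align*}
% Step (a): |e(0)| > 1, keep only the |e|^p term and integrate until |e| = 1.
\frac{d|e|}{dt} \le -\gamma |e|^{p}
  &\;\Longrightarrow\; \int_{|e(0)|}^{1} |e|^{-p}\, d|e| \le -\gamma\, t_1
  \;\Longrightarrow\; t_1 \le \frac{1 - |e(0)|^{1-p}}{\gamma\,(p-1)} \le \frac{1}{\gamma\,(p-1)}, \\
% Step (b): |e| <= 1, keep only the |e|^r term and integrate until |e| = 0.
\frac{d|e|}{dt} \le -\gamma |e|^{r}
  &\;\Longrightarrow\; \int_{1}^{0} |e|^{-r}\, d|e| \le -\gamma\, t_2
  \;\Longrightarrow\; t_2 \le \frac{1}{\gamma\,(1-r)}, \\
% Total time: the bound does not depend on e(0).
T &\le t_1 + t_2 \le \frac{1}{\gamma\,(p-1)} + \frac{1}{\gamma\,(1-r)}.
\end{align*}
```

The term with exponent $p > 1$ dominates far from the origin and the term with exponent $r < 1$ dominates near it, which is why the resulting bound is independent of the initial value.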

3.2. Robustness

Theorem 2.

Given a nonsingular matrix , and starting from any initial value , the DAZNN model (14) with additive bounded noise ( and a is constant) can converge to zero or be bounded by

where m represents dimension of coefficient matrix.

Proof of Theorem 2.

According to the subsystem of DAZNN model (14) and additive noise , it can be obtained:

Then, we separate into two parts for analysis. The first part is set as an intermediate variable, the same as in Equation (16), and the time derivative of the intermediate variable is . Substituting and into Equation (37), the first system can be acquired:

Introduce the Lyapunov function to analyze the first system (38):

Then

(a) If , will gradually increase, and . In this case, , that is

Hence, we get

Because the noise is bounded, it follows that will decrease as increases. Besides, will keep increasing until . This shows that the change will eventually enter a stable state, that is, finally holds true. It is not hard to get:

Besides, is globally asymptotically stable from Theorem 1, namely

(b) If , then will gradually decrease to zero. Then, the degraded form of the subsystem (33) is expressed as

(b1) . At this time, is globally asymptotically stable. That is

(b2) . This situation is similar to the above situation (a). In the end, we can get . Obviously, when , is bounded by

where is the inverse function of . Due to such that keeps correct. And the bound of can be written as

Then, by virtue of ,

(c) If , we can know that or . This case is similar to case (a) or case (b), so can converge to zero or be bounded.

In the end, it is concluded that in the unknown bounded noise environment, the error of model (14) can converge to zero or be bounded. □

4. Illustrative Verification

In this section, the FPZNN models with different AFs and the DAZNN model (14) are applied to solving the dynamic matrix inversion problem online, and the comparison results are shown below.

Consider this time-varying matrix:

and denotes the theoretical solution of the Equation (1), in other words, . Besides, in this simulation, some public parameters are: , ; the parameter of AF (6) is set as ; the parameter of AF (7) is set as ; the parameters of AF (9) are set as , ; the parameter of AF (10) is set as ; the parameters of AF (12) are set as , ; variable parameters (13) are set as ; and .

4.1. Discussion of Convergence

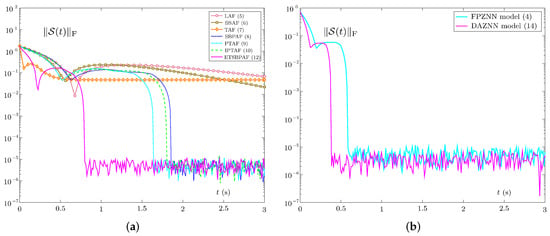

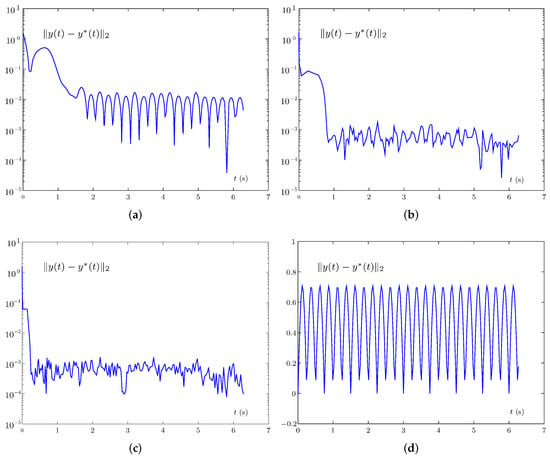

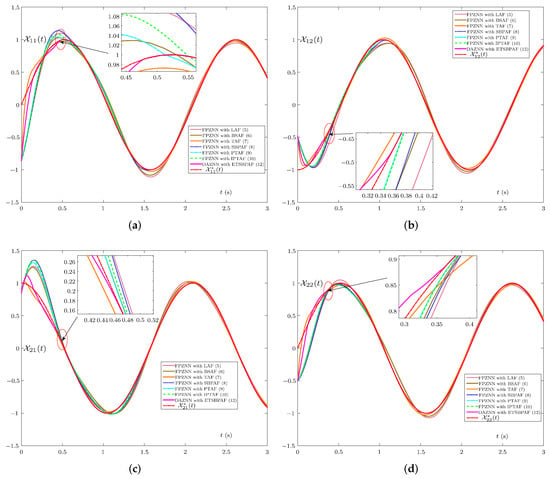

As seen in Figure 1, the state solutions (i.e., , , , ) and theoretical solutions (i.e., , , , ) are plotted together for comparison. According to the results, in addition to the FPZNN models activated by AFs (5)–(7), other FPZNN models with different AFs and DAZNN model (14) can converge without noise. Moreover, it is clear that FPZNN with AF (12) has the fastest convergence speed.

Figure 1.

Trajectories of the solutions of different models, of which the red line denotes the theoretical solution with no noise. (a) Theoretical solution and state solutions . (b) Theoretical solution and state solutions . (c) Theoretical solution and state solutions . (d) Theoretical solution and state solutions .

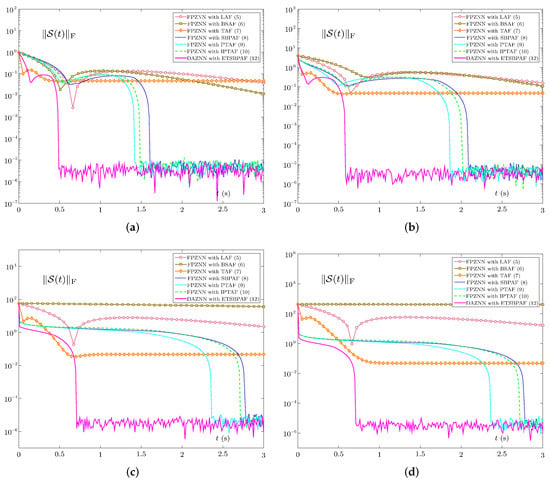

Secondly, Figure 2 comprehensively reveals the errors of these ZNN models in the absence of noise. As illustrated in Figure 2a,b, it is evident that with no noise and , the errors of these models can drop to zero. However, the error accuracy of model (4) activated by AF (7) can only reach , while the other models can reach a higher accuracy. Aside from model (4) activated by AF (7), the convergence rates of these models rank as follows: model (14) activated by AF (12), model (4) activated by AF (9), model (4) activated by AF (10), model (4) activated by AF (8), model (4) activated by AF (6), model (4) activated by AF (5). In addition, Figure 2c suggests that the convergence time of these ZNN models is greatly reduced with , which also verifies Theorem 1.

Finally, we control the variables in order to further demonstrate the specific impact of the two improvements (i.e., variable parameters (13) and AF (12)) in the new model, as shown in Figure 3. Specifically, Figure 3a illustrates the model errors for FPZNN with various AFs. Figure 3b shows the errors of the FPZNN model (4) activated by AF (12) and of the DAZNN model (14). Figure 3a shows that the FPZNN model activated by AF (12) has the best convergence performance among the seven AFs used. This means that AF (12) has the best acceleration effect on the model. Moreover, when AF (12) is employed, the DAZNN model (14) performs better than the FPZNN model (4). Therefore, we can conclude that both AF (12) and variable parameters (13) improve the convergence rate of the model.

4.2. Discussion of Robustness

In fact, in the circuit implementation of RNN, additive noise is inevitable. Additionally, the additive noise can result in the failure of the original algorithm or other undesirable effects. Therefore, the robustness of a model is a key indicator of a model’s performance.
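The effect of additive noise on an error dynamic can be seen in a scalar sketch. The code below compares the basic linear design de/dt = -gamma*e with the linear integral-enhanced (noise-tolerant) design de/dt = -gamma*e - lam*integral(e), both disturbed by a constant noise. This is a simplified illustration with linear activation and hypothetical values for `gamma`, `lam`, and the noise level. It is not the DAZNN model (14).

```python
def simulate_error(use_integral, gamma=10.0, lam=10.0, noise=1.0,
                   dt=1e-3, t_end=10.0):
    """Forward-Euler simulation of a scalar error under constant additive noise.

    use_integral=False: e' = -gamma*e + noise
    use_integral=True:  e' = -gamma*e - lam*z + noise, with z' = e
    Returns the error at t_end.
    """
    e, z = 1.0, 0.0               # initial error and its running integral
    for _ in range(int(round(t_end / dt))):
        de = -gamma * e + noise
        if use_integral:
            de -= lam * z
        z += dt * e               # accumulate the integral of the error
        e += dt * de
    return e

e_plain = simulate_error(use_integral=False)
e_integral = simulate_error(use_integral=True)
print(f"steady error without integral term: {e_plain:.4f}")   # settles near noise/gamma
print(f"steady error with integral term:    {e_integral:.2e}")
```

Without the integral term the error settles at noise/gamma rather than zero. With the integral term, the accumulated state `z` absorbs the constant disturbance and the error itself decays toward zero.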

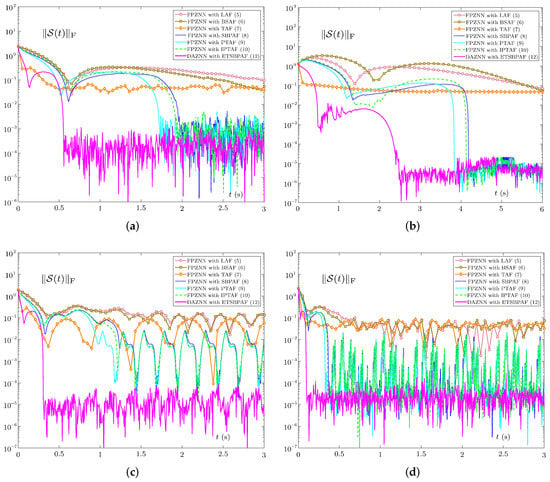

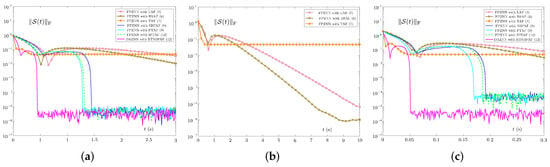

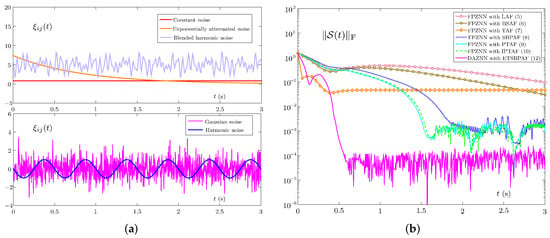

First, five kinds of noise dynamic characteristics are shown in Figure 4a. Specifically, there are five types of noise: constant noise , Gaussian noise with zero mean and one standard deviation, exponentially attenuated noise , harmonic noise and blended harmonic noise . Figure 4b reveals the errors of these ZNN models under noise . The error accuracy and convergence speed of all models display a certain degree of decline, even when they are subject to disturbances of constant noise . Compared with other models, the DAZNN model (14) is the least affected and still has the highest convergence speed and accuracy.

As a means of further verifying the robustness of the DAZNN model (14), we tested its dynamic characteristic of errors under four other bounded noises in Figure 5. Observing Figure 5a,b, it is found that in the case of Gaussian noise, the convergence speed of these models is not affected much, but the error accuracy is significantly reduced. On the contrary, in an exponential attenuated noise environment, the error accuracy of these models is basically unchanged, but the convergence time is significantly increased. In Figure 5c, in the case of harmonic noise and , the DAZNN model (14) is not greatly affected, but the accuracy of other models is greatly reduced. The result in Figure 5d is similar, in the case of blended harmonic noise and , the DAZNN model (14) is not markedly affected. In general, the error accuracy and convergence speed of all models decrease to varying degrees, but relatively speaking, the DAZNN model (14) is the least affected. These results provide support for our theoretical analysis that the DAZNN model (14) can accomplish robustness to bounded noise.

4.3. Sensitivity of Initial Values

The convergence performance of many ZNN models is affected by the initial value, but this effect has rarely been studied. Therefore, the sensitivity of the model to the initial value is worth discussing.

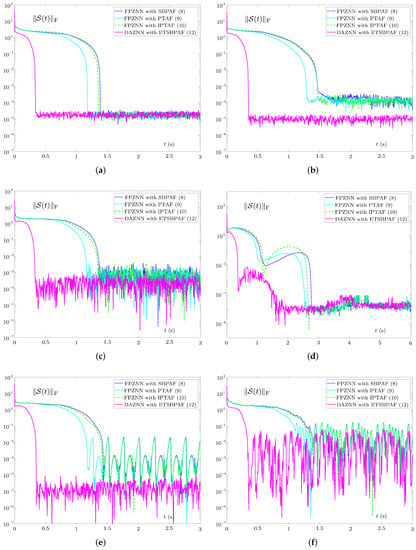

To demonstrate the impact of random starting values on these models’ errors, an experiment is conducted. Four random initial values belonging to different intervals are tested, as shown in Figure 6. The error accuracy of four models, namely DAZNN model (14) and FPZNN models with AFs (8)–(10), can reach under various . The convergence times of these four models under the different initial values are as follows: DAZNN model (14) takes about 0.48 s, 0.58 s, 0.71 s, and 0.71 s; FPZNN model with AF (9) takes about 1.45 s, 1.86 s, 2.35 s, and 2.35 s; FPZNN model with AF (10) takes about 1.49 s, 2.05 s, 2.72 s, and 2.72 s; FPZNN model with AF (8) takes about 1.60 s, 2.15 s, 2.78 s, and 2.78 s. Relatively speaking, although the convergence time of DAZNN model (14) also depends on , the dependence is weaker. Besides, the convergence time of the above four models has an upper bound that is independent of the initial value . It is worth noting that the activation functions used by these models all contain the term , which suggests, to a certain extent, that the power term is necessary for a predefined-time convergent activation function.
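The saturation of the convergence time for large initial values can be reproduced on a scalar toy system. The sketch below integrates e' = -gamma*phi(e) with the standard sign-bi-power AF phi(e) = 0.5*sgn(e)*(|e|^r + |e|^(1/r)) and measures the time until |e| falls below a tolerance. The values of `gamma`, `r`, the tolerance, and the initial values are assumptions. For this toy system, integrating the two power terms separately gives the bound 2(1+r)/(gamma(1-r)), which is not the bound of Theorem 1.

```python
def sbp(e, r=0.5):
    """Scalar sign-bi-power AF: 0.5*sgn(e)*(|e|^r + |e|^(1/r))."""
    a = abs(e)
    sign = (e > 0) - (e < 0)
    return 0.5 * sign * (a ** r + a ** (1.0 / r))

def settling_time(e0, gamma=1.0, r=0.5, dt=1e-4, tol=1e-4, t_max=20.0):
    """Forward-Euler time until |e| <= tol for e' = -gamma * sbp(e)."""
    e, t = float(e0), 0.0
    while abs(e) > tol and t < t_max:
        e -= dt * gamma * sbp(e, r)
        t += dt
    return t

gamma, r = 1.0, 0.5
# Bound for this toy system: 2r/(gamma(1-r)) to reach |e|=1, plus 2/(gamma(1-r)) to reach 0.
bound = 2.0 * (1.0 + r) / (gamma * (1.0 - r))

initial_values = [0.1, 1.0, 10.0, 1000.0]   # hypothetical starting errors
times = [settling_time(e0, gamma, r) for e0 in initial_values]
for e0, t in zip(initial_values, times):
    print(f"e(0) = {e0:7.1f}: settling time = {t:.3f} s (bound {bound:.1f} s)")
```

The measured time grows with the initial value but levels off below the bound, mirroring the repeated values reported above for the two largest initial intervals.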

4.4. High Dimensional Example Verification

Consider another time-variant Toeplitz matrix:

Note that the performance of the first three models (FPZNN with LAF (5), FPZNN with BSAF (6), and FPZNN with TAF (7)) is not good, so only the other models are compared in this example. Besides, the conditions for this example are set to be the same as before, except that .

Figure 7 shows the dynamic trajectories of the error norms of these ZNN models with no noise or under bounded noise. Here, the DAZNN model still shows excellent performance. Figure 7a shows that all models can converge without noise. From Figure 7b,e, when the noise is constant noise or harmonic noise , the accuracy of DAZNN model (14) is significantly higher than that of the other three models. Moreover, as shown in Figure 7c,d,f, under the interference of other noises, although the accuracy of DAZNN model (14) is similar to that of the other three models, its convergence speed is still much faster. In general, Section 4.4 demonstrates that the proposed DAZNN model has the best performance among these four models in a higher dimensional example.

5. Application to Dynamic Positioning Algorithm

This section first briefly describes the dynamic positioning problem based on the angle of arrival (AOA). Secondly, to validate the efficacy of the DAZNN model, design formula (11) and design formula (3) are applied to the AOA positioning algorithm.

5.1. Problem Description

The angle of arrival (AOA) positioning method’s main principle is to calculate the angle of arrival between the target and the sensor node [44]. Taking the sensor node as the starting point and passing through the target node will form a ray. The point where the two rays intersect is the position of the target node.

Suppose the coordinates of n fixed sensor nodes are , represented by the matrix B, where ; the incident angle of sensor nodes is , represented by the vector ; consider the two-dimensional situation, the target node position at time t uses the unknown vector . The specific mathematical expression is as follows

Hence, the incident angle satisfies

The following equation can be further derived:

Equation (44) can be converted to the following form:

where represents the smooth dynamic matrix with full column rank, denotes a smooth dynamic vector.
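The idea of turning bearing measurements into a linear system can be sketched numerically. The code below uses one common linearization of the AOA relation, sin(theta_i)*x - cos(theta_i)*y = x_i*sin(theta_i) - y_i*cos(theta_i), which follows from tan(theta_i) = (y - y_i)/(x - x_i). The paper's Equations (44) and (45) may be arranged differently. The sensor coordinates, the target position, and the names `H` and `b` are hypothetical.

```python
import numpy as np

# Hypothetical sensor layout (one row per sensor: x_i, y_i) and target position.
sensors = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 10.0], [0.0, 10.0]])
target = np.array([3.0, 4.0])

# Noise-free angle of arrival measured at each sensor.
theta = np.arctan2(target[1] - sensors[:, 1], target[0] - sensors[:, 0])

# Linear system H p = b in the unknown position p = (x, y).
H = np.column_stack([np.sin(theta), -np.cos(theta)])
b = sensors[:, 0] * np.sin(theta) - sensors[:, 1] * np.cos(theta)

# With more sensors than unknowns, solve in the least-squares sense.
estimate, *_ = np.linalg.lstsq(H, b, rcond=None)
print("estimated position:", estimate)
```

With exact angles, the least-squares solution recovers the target position. Two sensors with distinct bearings already give `H` full column rank, and extra sensors add redundancy against measurement noise.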

5.2. Model Application

First define an error:

Multiplying both sides of Equation (46) by , we can get the new error:

The derivative of Equation (47) can be obtained:

Based on design formula (11), a variable parameters dynamic positioning (VPDP) model is obtained:

in which denotes additive noise. Analogously, design formula (3) with AF (5) and design formula (3) with AF (10) are incorporated into AOA positioning, so we can get two kinds of fixed-parameter dynamic positioning (FPDP) models to compare with VPDP model:
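The construction in this subsection (define an error, left-multiply by the transposed coefficient matrix, differentiate, and impose an evolution formula) can be sketched with a simplified tracker. The code below is an illustration under stated assumptions, not the VPDP or FPDP models: it uses the basic linear formula dE/dt = -gamma*E with a constant gain, no integral term, and no noise. The sensor layout, target trajectory, gain, finite-difference derivatives, and forward-Euler integration are all hypothetical choices.

```python
import numpy as np

SENSORS = np.array([[0.0, 0.0], [10.0, 0.0], [10.0, 10.0], [0.0, 10.0]])  # hypothetical

def target(t):
    """Hypothetical target trajectory: a circle inside the sensor square."""
    return np.array([5.0 + 2.0 * np.cos(t), 5.0 + 2.0 * np.sin(t)])

def normal_equations(t):
    """Return M(t) = H^T H and v(t) = H^T b built from the bearings at time t."""
    p = target(t)
    theta = np.arctan2(p[1] - SENSORS[:, 1], p[0] - SENSORS[:, 0])
    H = np.column_stack([np.sin(theta), -np.cos(theta)])
    b = SENSORS[:, 0] * np.sin(theta) - SENSORS[:, 1] * np.cos(theta)
    return H.T @ H, H.T @ b

def track(gamma=20.0, dt=1e-3, t_end=5.0):
    """Integrate M p' = v' - M' p - gamma*(M p - v) and return the final position error."""
    p_hat = np.array([3.0, 3.0])                 # arbitrary initial estimate
    steps = int(round(t_end / dt))
    for k in range(steps):
        t = k * dt
        M, v = normal_equations(t)
        M_next, v_next = normal_equations(t + dt)
        M_dot = (M_next - M) / dt                # finite-difference derivatives
        v_dot = (v_next - v) / dt
        err = M @ p_hat - v                      # error after multiplying by H^T
        p_dot = np.linalg.solve(M, v_dot - M_dot @ p_hat - gamma * err)
        p_hat = p_hat + dt * p_dot
    return np.linalg.norm(p_hat - target(t_end))

final_error = track()
print(f"final position error: {final_error:.2e}")
```

Multiplying by the transpose makes the coefficient matrix square and invertible whenever the original matrix has full column rank, which is what allows the update to be solved for the position derivative at every step.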

5.3. Example 1

In this example, four sensor nodes are placed on the plane, and the sensor coordinates are

Besides, the specified target trajectory is

Taking the initial position , , ; the parameter of AF (10) is set as ; the parameters of AF (12) are set as , ; variable parameters (13) are set as and .

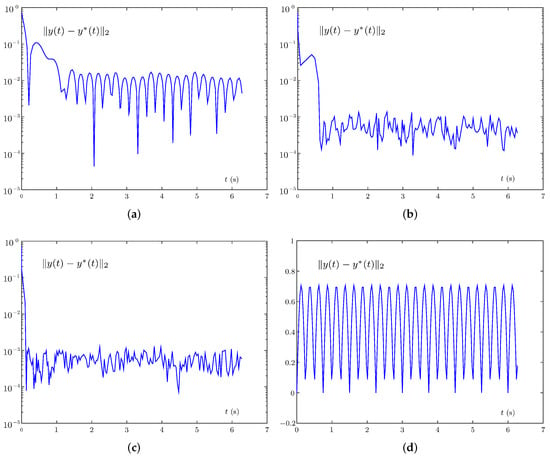

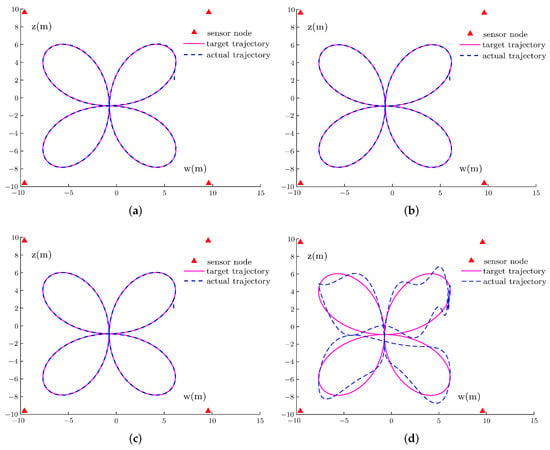

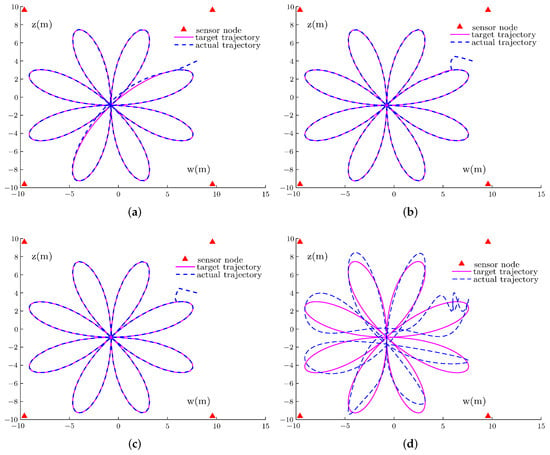

Figure 8 shows the target trajectory and actual trajectory for positioning employing the FPDP model with AF (5), the FPDP model with AF (10), the VPDP model, and the pseudo-inverse method under sine noise . Figure 9 shows the error of these models. As shown in Figure 8, the target trajectory and the actual trajectory basically coincide for all models except the pseudo-inverse method. In addition, Figure 9 shows a significant gap in convergence time between these methods. To be more specific, apart from the pseudo-inverse method, which cannot converge, the convergence times of the FPDP model with AF (5), the FPDP model with AF (10), and the VPDP model are 1.05 s, 0.6 s, and 0.15 s, respectively. Besides, the error upper bounds of the VPDP model, FPDP model with AF (5), and FPDP model with AF (10) are about , , , respectively. This indicates that the VPDP model is superior to the other two FPDP models. To sum up, this demonstrates the effectiveness of design formula (11) in solving the planar positioning problem.

5.4. Example 2

In this example, the four sensor nodes coordinates are the same as in Section 5.3. Besides, the specified target trajectory is

Take the initial position , , ; the parameter of AF (10) is set as ; the parameters of AF (12) are set as , ; variable parameters (13) are set as and .

Figure 10 shows the target trajectory and actual trajectory for positioning employing the FPDP model with AF (5), the FPDP model with AF (10), the VPDP model, and the pseudo-inverse method under sine noise . Figure 11 shows the error of these models. As shown in Figure 10, the target trajectory and the actual trajectory basically coincide for all models except the pseudo-inverse method. Besides, Figure 11 shows a significant gap in convergence time between these methods. Specifically, apart from the pseudo-inverse method, which cannot converge, the convergence times of the FPDP model with AF (5), the FPDP model with AF (10), and the VPDP model are 1.82 s, 0.85 s, and 0.26 s, respectively. Furthermore, the error upper bounds of the VPDP model, FPDP model with AF (5), and FPDP model with AF (10) are the same as in Figure 9. This indicates that the VPDP model is better than the other two FPDP models, even for different target trajectory positioning tasks.

6. Conclusions

In this paper, the DAZNN model (14) with exponential decay variable parameters (13) and exponential-type SBPAF (12) is proposed to solve the dynamic matrix inversion problem. The ETSBPAF and variable parameters (13) contribute positively to the convergence and robustness of the model, resulting in better performance than the other fixed-parameter models considered. Furthermore, Theorem 1 theoretically establishes the upper bound on the convergence time of the DAZNN model (14), confirming its arbitrary time convergence. The robustness of the DAZNN model under bounded additive noise is theoretically demonstrated in Theorem 2. The results indicate that the DAZNN model performs better than other models with various AFs in terms of convergence, robustness, and initial value sensitivity. Finally, the AOA dynamic positioning example that employs evolution formula (11) of the DAZNN model verifies its applicability. A simplified model with high performance may be considered in future research. Additionally, by using drones for assistance in positioning, dynamic positioning tasks can be extended to three dimensions.

Author Contributions

Conceptualization, B.L. and Y.H.; methodology, Y.H. and B.L.; software, L.X., L.H. and X.X.; validation, Y.H. and B.L.; formal analysis, Y.H.; investigation, L.H. and X.X.; data curation, L.H. and X.X.; writing—original draft preparation, Y.H.; writing—review and editing, B.L. and L.X.; visualization, Y.H.; supervision, B.L. and L.X.; project administration, B.L.; funding acquisition, B.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China Grants 62066015, 61866013, 61976089 and 61966014; and the Natural Science Foundation of Hunan Province of China under grants 2020JJ4511, 2021JJ20005, 18A289, 2018TP1018 and 2018RS3065; and the Hunan Provincial Innovation Foundation For Postgraduate under grant CX20211042.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ZNN | zeroing neural network |
| FPZNN | fixed-parameter ZNN |
| DAZNN | double accelerated convergence ZNN |
| DMIP | dynamic matrix inversion problem |
| AF | activation function |
| RNN | recurrent neural network |
| SBP | sign-bi-power |
| VPZNN | varying-parameter ZNN |
| ETSBPAF | exponential-type SBPAF |
| LAF | linear AF |
| BSAF | bipolar-sigmoid AF |
| TAF | tunable AF |
| SBPAF | sign-bi-power AF |
| PTAF | predefined time AF |
| IPTAF | improved predefined time AF |
| AOA | angle of arrival |
| VPDP | variable parameters dynamic positioning |
| FPDP | fixed-parameter dynamic positioning |

References

- Stefanovski, J. Novel all-pass factorization, all solutions to rational matrix equation and control application. IEEE Trans. Autom. Control 2019, 65, 3176–3183. [Google Scholar] [CrossRef]

- Xiao, L.; Liu, S.; Wang, X.; He, Y.; Jia, L.; Xu, Y. Zeroing neural networks for dynamic quaternion-valued matrix inversion. IEEE Trans. Ind. Inform. 2021, 18, 1562–1571. [Google Scholar] [CrossRef]

- Quan, Z.; Liu, J. Efficient complex matrix inversion for MIMO OFDM systems. J. Commun. Netw. 2017, 19, 637–647. [Google Scholar] [CrossRef]

- Wang, Y.; Leib, H. Sphere decoding for MIMO systems with Newton iterative matrix inversion. IEEE Commun. Lett. 2013, 17, 389–392. [Google Scholar] [CrossRef]

- Guo, D.; Zhang, Y. Zhang neural network, Getz–Marsden dynamic system, and discrete-time algorithms for time-varying matrix inversion with application to robots’ kinematic control. Neurocomputing 2012, 97, 22–32. [Google Scholar] [CrossRef]

- Jin, L.; Zhang, Y. G2-type SRMPC scheme for synchronous manipulation of two redundant robot arms. IEEE Trans. Cybern. 2014, 45, 153–164. [Google Scholar] [PubMed]

- Chen, D.; Li, S.; Wu, Q.; Luo, X. Super-twisting ZNN for coordinated motion control of multiple robot manipulators with external disturbances suppression. Neurocomputing 2020, 371, 78–90. [Google Scholar] [CrossRef]

- Krishnamoorthy, A.; Menon, D. Matrix inversion using Cholesky decomposition. In Proceedings of the 2013 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), Poznan, Poland, 26–28 September 2013; pp. 70–72. [Google Scholar]

- Tang, C.; Liu, C.; Yuan, L.; Xing, Z. High precision low complexity matrix inversion based on Newton iteration for data detection in the massive MIMO. IEEE Commun. Lett. 2016, 20, 490–493. [Google Scholar] [CrossRef]

- Xiao, L.; He, Y. A noise-suppression ZNN model with new variable parameter for dynamic Sylvester equation. IEEE Trans. Ind. Inform. 2021, 17, 7513–7522. [Google Scholar] [CrossRef]

- Stanimirović, P.S.; Živković, I.S.; Wei, Y. Recurrent neural network for computing the Drazin inverse. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 2830–2843. [Google Scholar] [CrossRef] [PubMed]

- Elhoseny, M.; Shankar, K. Optimal bilateral filter and convolutional neural network based denoising method of medical image measurements. Measurement 2019, 143, 125–135.

- Koyuncu, I.; Yilmaz, C.; Alcin, M.; Tuna, M. Design and implementation of hydrogen economy using artificial neural network on field programmable gate array. Int. J. Hydrogen Energy 2020, 45, 20709–20720.

- Wrobel, K.; Doroz, R.; Porwik, P.; Naruniec, J.; Kowalski, M. Using a probabilistic neural network for lip-based biometric verification. Eng. Appl. Artif. Intell. 2017, 64, 112–127.

- Yañez-Badillo, H.; Beltran-Carbajal, F.; Tapia-Olvera, R.; Favela-Contreras, A.; Sotelo, C.; Sotelo, D. Adaptive robust motion control of quadrotor systems using artificial neural networks and particle swarm optimization. Mathematics 2021, 9, 2367.

- Khan, A.T.; Li, S.; Cao, X. Control framework for cooperative robots in smart home using bio-inspired neural network. Measurement 2021, 167, 108253.

- Wang, S.; Zhang, H.; Zhang, W.; Zhang, H. Finite-time projective synchronization of Caputo type fractional complex-valued delayed neural networks. Mathematics 2021, 9, 1406.

- Li, Y.; Liu, Y.; Tong, S. Observer-based neuro-adaptive optimized control of strict-feedback nonlinear systems with state constraints. IEEE Trans. Neural Netw. Learn. Syst. 2021.

- Cogollo, M.R.; González-Parra, G.; Arenas, A.J. Modeling and forecasting cases of RSV using artificial neural networks. Mathematics 2021, 9, 2958.

- Šestanović, T.; Arnerić, J. Can recurrent neural networks predict inflation in euro zone as good as professional forecasters? Mathematics 2021, 9, 2486.

- Simos, T.E.; Mourtas, S.D.; Katsikis, V.N. Time-varying Black–Litterman portfolio optimization using a bio-inspired approach and neuronets. Appl. Soft Comput. 2021, 112, 107767.

- Katsikis, V.N.; Mourtas, S.D.; Stanimirović, P.S.; Li, S.; Cao, X. Time-varying mean–variance portfolio selection problem solving via LVI-PDNN. Comput. Oper. Res. 2022, 138, 105582.

- Xiao, L. A new design formula exploited for accelerating Zhang neural network and its application to time-varying matrix inversion. Theor. Comput. Sci. 2016, 647, 50–58.

- Xiao, L. A nonlinearly activated neural dynamics and its finite-time solution to time-varying nonlinear equation. Neurocomputing 2016, 173, 1983–1988.

- Li, S.; Chen, S.; Liu, B. Accelerating a recurrent neural network to finite-time convergence for solving time-varying Sylvester equation by using a sign-bi-power activation function. Neural Process. Lett. 2013, 37, 189–205.

- Xiao, L. A finite-time convergent Zhang neural network and its application to real-time matrix square root finding. Neural Comput. Appl. 2019, 31, 793–800.

- Yan, Z.; Zhong, S.; Lin, L.; Cui, Z. Adaptive Levenberg–Marquardt algorithm: A new optimization strategy for Levenberg–Marquardt neural networks. Mathematics 2021, 9, 2176.

- Stanimirović, P.S.; Katsikis, V.N.; Li, S. Integration enhanced and noise tolerant ZNN for computing various expressions involving outer inverses. Neurocomputing 2019, 329, 129–143.

- Li, W. Design and analysis of a novel finite-time convergent and noise-tolerant recurrent neural network for time-variant matrix inversion. IEEE Trans. Syst. Man Cybern. Syst. 2018, 50, 4362–4376.

- Jin, L.; Zhang, Y.; Li, S.; Zhang, Y. Modified ZNN for time-varying quadratic programming with inherent tolerance to noises and its application to kinematic redundancy resolution of robot manipulators. IEEE Trans. Ind. Electron. 2016, 63, 6978–6988.

- Xiao, L.; Zhang, Y.; Zuo, Q.; Dai, J.; Li, J.; Tang, W. A noise-tolerant zeroing neural network for time-dependent complex matrix inversion under various kinds of noises. IEEE Trans. Ind. Inform. 2019, 16, 3757–3766.

- Hu, Z.; Xiao, L.; Dai, J.; Xu, Y.; Zuo, Q.; Liu, C. A unified predefined-time convergent and robust ZNN model for constrained quadratic programming. IEEE Trans. Ind. Inform. 2020, 17, 1998–2010.

- Shen, L.; Wu, J.; Yang, S. Initial position estimation in SRM using bootstrap circuit without predefined inductance parameters. IEEE Trans. Power Electr. 2011, 26, 2449–2456.

- Li, W.; Su, Z.; Tan, Z. A variable-gain finite-time convergent recurrent neural network for time-variant quadratic programming with unknown noises endured. IEEE Trans. Ind. Inform. 2019, 15, 5330–5340.

- Xiao, L.; Zhang, Y.; Dai, J.; Zuo, Q.; Wang, S. Comprehensive analysis of a new varying parameter zeroing neural network for time varying matrix inversion. IEEE Trans. Ind. Inform. 2020, 17, 1604–1613.

- Stanimirović, P.; Gerontitis, D.; Tzekis, P.; Behera, R.; Sahoo, J.K. Simulation of varying parameter recurrent neural network with application to matrix inversion. Math. Comput. Simul. 2021, 185, 614–628.

- Li, X.; Li, S.; Xu, Z.; Zhou, X. A vary-parameter convergence-accelerated recurrent neural network for online solving dynamic matrix pseudoinverse and its robot application. Neural Process. Lett. 2021, 53, 1287–1304.

- Zhang, Y.; Jiang, D.; Wang, J. A recurrent neural network for solving Sylvester equation with time-varying coefficients. IEEE Trans. Neural Netw. 2002, 13, 1053–1063.

- Zhang, Y.; Qiu, B.; Jin, L.; Guo, D.; Yang, Z. Infinitely many Zhang functions resulting in various ZNN models for time-varying matrix inversion with link to Drazin inverse. Inf. Process. Lett. 2015, 115, 703–706.

- Xiao, L.; Tan, H.; Jia, L.; Dai, J.; Zhang, Y. New error function designs for finite-time ZNN models with application to dynamic matrix inversion. Neurocomputing 2020, 402, 395–408.

- Zhang, Y.; Chen, K. Comparison on Zhang neural network and gradient neural network for time-varying linear matrix equation AXB=C solving. In Proceedings of the 2008 IEEE International Conference on Industrial Technology, Chengdu, China, 21–24 April 2008; pp. 1–6.

- Miao, P.; Shen, Y.; Xia, X. Finite time dual neural networks with a tunable activation function for solving quadratic programming problems and its application. Neurocomputing 2014, 143, 80–89.

- Li, W.; Liao, B.; Xiao, L.; Lu, R. A recurrent neural network with predefined-time convergence and improved noise tolerance for dynamic matrix square root finding. Neurocomputing 2019, 337, 262–273.

- Dai, J.; Li, Y.; Xiao, L.; Jia, L. Zeroing neural network for time-varying linear equations with application to dynamic positioning. IEEE Trans. Ind. Inform. 2021.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).