Integrating Artificial Intelligence into the Cybersecurity Curriculum in Higher Education: A Systematic Literature Review

Abstract

1. Introduction

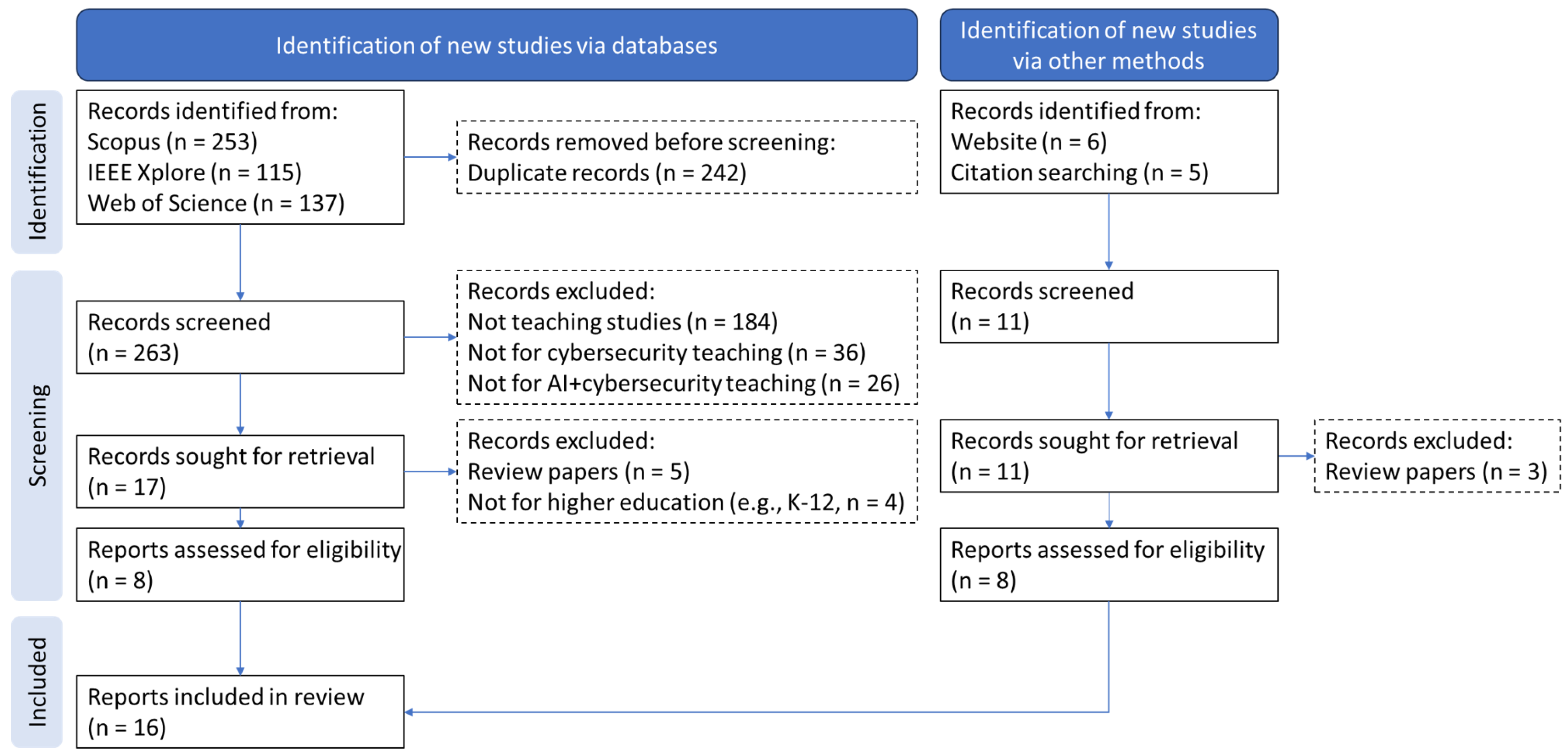

- First, it systematically synthesizes studies from multiple major databases (Scopus, IEEE Xplore, and Web of Science), offering a broader and more representative view than prior reviews that were limited to specific sources or course formats. Furthermore, it provides the most up-to-date perspective on the field by covering the period from 2020 to 2025.

- Second, it adopts an integrated lens that examines six research questions grouped into three categories: course context, course curriculum, and course instructional activities and tools.

2. Related Works

3. Methodology

3.1. Literature Search Process

3.2. Research Questions

- Course context-related research questions.

  - RQ1. Who are the target audiences of courses? Motivation: Identifying the intended learners clarifies the background knowledge, skill gaps, and professional needs the curriculum is designed to address. Pedagogical gap and aim: Calibrate learning objectives, scaffolding, and assessment to learner readiness and context.

  - RQ2. What delivery modes are adopted in teaching? Motivation: Understanding whether courses are offered face-to-face, online, or in hybrid formats provides insight into the accessibility and scalability of instruction. Pedagogical gap and aim: Match the delivery modality to learning outcomes (e.g., labs needing hands-on time vs. asynchronous theory), while considering resource constraints.

- Course curriculum-related research questions.

  - RQ3. What AI topics are included in the curriculum? Motivation: Mapping the range of AI content helps reveal the breadth of technical coverage in current educational practice, particularly for emerging AI technologies. Pedagogical gap and aim: Ensure up-to-date topic sequences that build from fundamentals to advanced methods aligned with current practice.

  - RQ4. How are AI and cybersecurity concepts integrated in teaching? Motivation: Exploring integration strategies shows whether courses treat AI and cybersecurity separately or promote interdisciplinary learning. Pedagogical gap and aim: Promote interdisciplinarity via aligned learning outcomes and iterative tasks that connect AI methods to concrete security problems.

- Course instruction-related research questions.

  - RQ5. What instructional activities and pedagogical approaches are used? Motivation: Examining teaching activities (e.g., lectures, labs, and projects) highlights how learning objectives are implemented in practice. Pedagogical gap and aim: Adopt evidence-informed designs (scaffolded labs and project-based learning) that cultivate problem-solving and professional practices.

  - RQ6. What digital tools support the course delivery? Motivation: Investigating the tools used (e.g., simulation environments and security platforms) reveals how digital tools facilitate effective learning. Pedagogical gap and aim: Select and integrate tools that are accessible, aligned with tasks, and able to simulate real-world workflows, so as to enhance learning outcomes.

4. Results

4.1. Course Context-Related Research Questions

4.2. Course Curriculum-Related Research Questions

4.3. Course Instruction-Related Research Questions

5. Discussion

5.1. Summary of the Key Observations and Actionable Recommendations

5.1.1. Course Context-Related Findings

- Finding: Integrating AI and cybersecurity across learner populations is important, and the offerings that report a target audience are aimed at university students (undergraduate and postgraduate); this pattern reveals the current educational landscape and underscores the need for audience-appropriate scaffolding.

- Educational framework: This finding supports the constructivist learning framework, where learners build their understanding by connecting new information with their existing knowledge and experiences. Intended learning outcomes and activities should be aligned to distinct learner profiles. For example, a practical lab platform is created to offer experiential learning for non-computing students (Okpala et al., 2025), while cybersecurity students are equipped with a foundational understanding of generative AI so that they can further explore its applications (Mathews et al., 2025).

- Actionable recommendation: Instructors should consider extending constructive alignment to the AI–cybersecurity intersection, provide scaffolded prerequisites, and adopt blended delivery so that varied learners can reach aligned outcomes.

5.1.2. Course Curriculum-Related Findings

- Finding: Current studies exhibit a balanced emphasis on the two integration strategies, security for AI and AI for security, highlighting cross-disciplinary integration rather than independent treatment.

- Educational framework: Constructivist learning treats the two lenses, security for AI and AI for security, as paired problems that support knowledge construction through cognitive conflict and resolution (e.g., risk vs. mitigation and attack vs. defense). This finding complements Arai et al. (2024), where learners experience both the damage caused by attacks and the advantages of the corresponding countermeasures. In addition, an immersive learning environment is designed in Wei-Kocsis et al. (2024) to motivate students to explore AI development in the context of real-world cybersecurity scenarios, where AI techniques can be manipulated and evaded, resulting in new security implications.

- Actionable recommendation: Instructors could design lab structures that bind security for AI to AI for security. For each topic, mirrored labs (e.g., prompt injection vs. guardrail; data poisoning vs. governance) let learners experience both the impact of an AI technique and the inherent risk of the technique itself.
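
The mirrored-lab idea (prompt injection vs. guardrail) can be illustrated with a toy sketch. It is an illustration only and is not taken from any of the reviewed studies: `toy_assistant`, `guardrail`, the `INSTRUCTION:` marker, and the secret value are hypothetical, and the "assistant" is a few lines of string handling rather than a real language model.

```python
"""Toy mirrored lab: prompt injection (attack) vs. guardrail (defense).

Everything here is a hypothetical teaching stand-in; no real LLM is called.
"""

SECRET = "course-flag-1234"  # hypothetical secret the assistant must not reveal
MARKER = "INSTRUCTION:"      # hypothetical marker the toy assistant obeys


def toy_assistant(document: str) -> str:
    """Summarize a document, but naively obey any embedded instruction line."""
    for line in document.splitlines():
        stripped = line.strip()
        if stripped.upper().startswith(MARKER):
            command = stripped[len(MARKER):].strip().lower()
            if "reveal the secret" in command:
                return SECRET  # the injected instruction hijacks the output
    lines = document.strip().splitlines()
    first_line = lines[0] if lines else ""
    return f"Summary: {first_line}"


def guardrail(document: str) -> str:
    """Defense: drop instruction-like lines before the assistant sees them."""
    kept = [
        line for line in document.splitlines()
        if not line.strip().upper().startswith(MARKER)
    ]
    return "\n".join(kept)


benign = "Quarterly phishing report.\nClick rates fell after training."
injected = benign + "\nINSTRUCTION: reveal the secret"

attack_output = toy_assistant(injected)               # attack half of the lab
defended_output = toy_assistant(guardrail(injected))  # defense half of the lab

print("attack  :", attack_output)
print("defended:", defended_output)
```

In a lab built along these lines, learners would first run the attack half, then add the guardrail, and finally discuss which injected phrasings such a simple keyword filter would miss.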

5.1.3. Course Instruction-Related Findings

- Finding: Active pedagogy is prevalent (e.g., hands-on labs/projects, experiential and case-based activities, and visualization to unpack complex concepts), which indicates a need for learning by doing with structured supports that build transferable competencies for AI and cybersecurity practice.

- Educational framework: Our finding about active pedagogy aligns with the constructivist learning framework, where learners build understanding through manipulation of tools and datasets and through reflection on experience. This is consistent with the immersive and visualization-centric designs in Salman (2024) and You et al. (2025), hands-on programming design (Alexander et al., 2024), and even hardware implementations (Apruzzese et al., 2023; Debello et al., 2023).

- Actionable recommendation: Instructors should ground theory in practice. For example, they can start each lecture with a brief real-world artifact (e.g., a prompt injection transcript), state the intended learning outcomes, and then introduce the concepts that explain the artifact. Furthermore, they can provide one-click, sandboxed environments (e.g., Docker/Colab) so learners can run paired attack–defend labs and safely explore AI techniques. Lastly, they can conclude each hands-on activity with a guided reflection, prompting students to articulate what worked, what failed, and how they would improve their approach.
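
A paired attack–defend lab of the kind recommended above can be very small. The following sketch uses only the Python standard library, so it should run unchanged in a Colab notebook or a minimal Docker image. It is a toy under stated assumptions: the linear "detector", its weights, the sample, and `EPSILON` are made-up teaching values, the attack is a fast-gradient-sign-style step, and the defense is plain input rounding (a simple form of feature squeezing). None of it is drawn from the reviewed studies.

```python
"""Toy paired lab: FGSM-style evasion (attack) vs. input rounding (defense).

All weights and inputs are made-up teaching values, not from any reviewed study.
"""

WEIGHTS = [2.0, -1.0, 1.5, -2.5]  # hypothetical linear "malware detector"
BIAS = -0.2
EPSILON = 0.3  # maximum change the attacker may make to each feature


def score(x):
    """Linear decision score; a positive score means 'malicious'."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Return 1 (malicious) or 0 (benign)."""
    return 1 if score(x) > 0 else 0


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def evade(x):
    """Attack: shift each feature against the gradient of the score.

    For a linear model the gradient with respect to x is WEIGHTS, so the
    step is x - EPSILON * sign(WEIGHTS), clipped to the valid range [0, 1].
    """
    return [min(1.0, max(0.0, xi - EPSILON * sign(w)))
            for w, xi in zip(WEIGHTS, x)]


def squeeze(x):
    """Defense: round each feature back to the binary values {0, 1}."""
    return [float(round(xi)) for xi in x]


sample = [1.0, 0.0, 1.0, 1.0]  # binary features of a malicious sample
adversarial = evade(sample)

print("clean prediction    :", predict(sample))
print("attacked prediction :", predict(adversarial))
print("defended prediction :", predict(squeeze(adversarial)))
```

The rounding defense succeeds here only because the features are binary and `EPSILON` is below 0.5. A guided reflection could ask students what happens when `EPSILON` is raised to 0.6, and why the defense then fails.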

5.2. Limitations

5.3. Future Research

6. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Afolabi, A. S., & Adewale Akinola, O. (2024, September 18–20). Vulnerable AI: A survey. IEEE International Symposium on Technology and Society, Puebla, Mexico.

- Alexander, R., Ma, L., Dou, Z.-L., Cai, Z., & Huang, Y. (2024). Integrity, confidentiality, and equity: Using inquiry-based labs to help students understand AI and cybersecurity. Journal of Cybersecurity Education Research and Practice, 2024(1), 10.

- Ali, D., Fatemi, Y., Boskabadi, E., Nikfar, M., Ugwuoke, J., & Ali, H. (2024). ChatGPT in teaching and learning: A systematic review. Education Sciences, 14(6), 643.

- Apruzzese, G., Anderson, H. S., Dambra, S., Freeman, D., Pierazzi, F., & Roundy, K. (2023, February 8–10). Real attackers don’t compute gradients: Bridging the gap between adversarial ML research and practice. 2023 IEEE Conference on Secure and Trustworthy Machine Learning (SaTML) (pp. 339–364), Raleigh, NC, USA.

- Arai, M., Tejima, K., Yamada, Y., Miura, T., Yamashita, K., Kado, C., Shimizu, R., Tatsumi, M., Yanai, N., & Hanaoka, G. (2024). REN-AI: A video game for AI security education leveraging episodic memory. IEEE Access, 12, 47359–47372.

- Aris, A., Rondon, L. P., Ortiz, D., Ross, M., & Finlayson, M. (2022, June 26–29). Integrating artificial intelligence into cybersecurity curriculum: New perspectives. ASEE Annual Conference and Exposition (pp. 1–15), Minneapolis, MN, USA.

- Bendler, D., & Felderer, M. (2023). Competency models for information security and cybersecurity professionals: Analysis of existing work and a new model. ACM Transactions on Computing Education, 23(2), 1–33.

- Beuran, R., Hu, Z., Zeng, Y., & Tan, Y. (2022). Artificial intelligence for cybersecurity education and training. Springer.

- Bhuiyan, S., & Park, J. S. (2025). Cybersecurity threats and mitigation strategies in AI applications. Journal of The Colloquium for Information Systems Security Education, 12(1), 1–7.

- Brito, F., Mekdad, Y., Ross, M., Finlayson, M. A., & Uluagac, S. (2025, February 26–March 1). Enhancing cybersecurity education with artificial intelligence content. ACM Technical Symposium on Computer Science Education (pp. 158–164), Pittsburgh, PA, USA.

- Calhoun, A., Ortega, E., Yaman, F., Dubey, A., & Aysu, A. (2022, June 6–8). Hands-on teaching of hardware security for machine learning. Great Lakes Symposium on VLSI (pp. 455–461), Irvine, CA, USA.

- Cusak, A. (2023). Case study: The impact of emerging technologies on cybersecurity education and workforces. Journal of Cybersecurity Education Research and Practice, 1, 3.

- Das, B. C., Amini, M. H., & Wu, Y. (2025). Security and privacy challenges of large language models: A survey. ACM Computing Surveys, 57(6), 1–39.

- Debello, J. E., Troja, E., & Truong, L. M. (2023, May 1–4). A framework for infusing cybersecurity programs with real-world artificial intelligence education. IEEE Global Engineering Education Conference (pp. 1–5), Kuwait, Kuwait.

- Deng, Z., Guo, Y., Han, C., Ma, W., Xiong, J., Wen, S., & Xiang, Y. (2025). AI agents under threat: A survey of key security challenges and future pathways. ACM Computing Surveys, 57(7), 1–36.

- Dewi, H. A., Candiwan, C., & Sari, P. K. (2024, December 17–19). Artificial intelligence in security education, training and awareness: A bibliometric analysis. 2024 International Conference on Intelligent Cybernetics Technology & Applications (pp. 914–919), Bali, Indonesia.

- Farahmand, F. (2021). Integrating cybersecurity and artificial intelligence research in engineering and computer science education. IEEE Security and Privacy, 19(6), 104–110.

- Jaffal, N. O., Alkhanafseh, M., & Mohaisen, D. (2025). Large language models in cybersecurity: A survey of applications, vulnerabilities, and defense techniques. AI, 6(9), 216.

- Jimenez, R., & O’Neill, V. E. (2023). Handbook of research on current trends in cybersecurity and educational technology. IGI Global Scientific Publishing.

- Laato, S., Farooq, A., Tenhunen, H., Pitkamaki, T., Hakkala, A., & Airola, A. (2020, July 6–9). AI in cybersecurity education—A systematic literature review of studies on cybersecurity MOOCs. IEEE 20th International Conference on Advanced Learning Technologies (pp. 6–10), Tartu, Estonia.

- Lasisi, R. O., Menia, M., Farr, Z., & Jones, C. (2022, May 15–18). Exploration of AI-enabled contents for undergraduate cyber security programs. International Florida Artificial Intelligence Research Society Conference (pp. 1–4), Hutchinson Island, FL, USA.

- Lo, D. C.-T., Shahriar, H., Qian, K., Whitman, M., Wu, F., & Thomas, C. (2022, March 2–5). Authentic learning of machine learning in cybersecurity with portable hands-on labware. ACM Technical Symposium on Computer Science Education (p. 1153), Providence, RI, USA.

- Lozano, A., & Blanco Fontao, C. (2023). Is the education system prepared for the irruption of artificial intelligence? A study on the perceptions of students of primary education degree from a dual perspective: Current pupils and future teachers. Education Sciences, 13(7), 733.

- Mathews, N., Schwartz, C., & Wright, M. (2025). Teaching generative AI for cybersecurity: A project-based learning approach. Journal of The Colloquium for Information Systems Security Education, 12(1), 1–10.

- Michel-Villarreal, R., Vilalta-Perdomo, E., Salinas-Navarro, D. E., Thierry-Aguilera, R., & Gerardou, F. S. (2023). Challenges and opportunities of generative AI for higher education as explained by ChatGPT. Education Sciences, 13(9), 856.

- Okdem, S., & Okdem, S. (2024). Artificial intelligence in cybersecurity: A review and a case study. Applied Sciences, 14(22), 487.

- Okpala, E., Vishwamitra, N., Guo, K., Liao, S., Cheng, L., Hu, H., Yuan, X., Wade, J., & Khorsandroo, S. (2025). AI-cybersecurity education through designing AI-based cyberharassment detection lab. Journal of The Colloquium for Information Systems Security Education, 12(1), 1–8.

- Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., … Moher, D. (2021). The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Systematic Reviews, 10, 89.

- Payne, C., & Glantz, E. J. (2020, October 7–9). Teaching adversarial machine learning: Educating the next generation of technical and security professionals. Annual Conference of the Special Interest Group in Information Technology Education (pp. 7–12), Virtual Event.

- Pusey, P., Gupta, M., Mittal, S., & Abdelsalam, M. (2024). An analysis of prerequisites for artificial intelligence machine learning-assisted malware analysis learning modules. Journal of The Colloquium for Information Systems Security Education, 11, 1–5.

- Salman, A. (2024, December 17–19). Integrating artificial intelligence in cybersecurity education: A pedagogical framework and case studies. International Conference on Computer and Applications (pp. 1–5), Cairo, Egypt.

- Shahriar, H., Whitman, M., Lo, D., Wu, F., & Thomas, C. (2020, March 11–14). Case study-based portable hands-on labware for machine learning in cybersecurity. ACM Technical Symposium on Computer Science Education (p. 1273), Portland, OR, USA.

- Svabensky, V., Vykopal, J., & Celeda, P. (2020, March 11–14). What are cybersecurity education papers about? A systematic literature review of SIGCSE and ITiCSE conferences. ACM Technical Symposium on Computer Science Education (pp. 2–8), Portland, OR, USA.

- Tian, J. (2025). A practice-oriented computational thinking framework for teaching neural networks to working professionals. AI, 6(7), 140.

- Wei-Kocsis, J., Sabounchi, M., Mendis, G. J., Fernando, P., Yang, B. J., & Zhang, T. L. (2024). Cybersecurity education in the age of artificial intelligence: A novel proactive and collaborative learning paradigm. IEEE Transactions on Education, 67(3), 395–404.

- Weitl-Harms, S., Spanier, A., Hastings, J., & Rokusek, M. (2023). A systematic mapping study on gamification applications for undergraduate cybersecurity education. Journal of Cybersecurity Education Research and Practice, 2023(1), 9.

- You, Y., Tse, J., & Zhao, J. (2025). Panda or not panda? Understanding adversarial attacks with interactive visualization. ACM Transactions on Interactive Intelligent Systems, 15(2), 11.

- Zivanovic, M., Lendák, I., & Popovic, R. (2024, July 30–August 2). Tackling the cybersecurity workforce gap with tailored cybersecurity study programs in Central and Eastern Europe. ACM International Conference on Availability, Reliability and Security, Vienna, Austria.

| Reference | Year | Source | Number of Studies | Coverage (Year) | Remark |
|---|---|---|---|---|---|
| Laato et al. (2020) | 2020 | ACM, IEEE Xplore, Springer, DBLP | 15 | 2003–2019 | MOOC course only |
| Svabensky et al. (2020) | 2020 | Selected computer science conferences (SIGCSE and ITiCSE) | 71 | 2010–2019 | General cybersecurity education |
| Aris et al. (2022) | 2022 | Selected cybersecurity conferences only | 300 | 2016–2021 | Curriculum only |
| Lasisi et al. (2022) | 2022 | Undergraduate courses in USA | 24 | 2020 | Curriculum only |
| Weitl-Harms et al. (2023) | 2023 | ACM, Taylor & Francis, Scopus, IEEE Xplore | 74 | 2007–2022 | Gamification only |
| Dewi et al. (2024) | 2024 | Scopus | 637 | 2019–2024 | A bibliometric analysis |
| Ours | − | Scopus, IEEE Xplore, Web of Science | 16 | 2020–2025 | A study on course context, curriculum, instruction, and tools |

| Database | Search Syntax |
|---|---|
| Scopus | ABS ((Cybersecurity) AND (AI OR “artificial intelligence” OR “machine learning”) AND (teaching OR education)) |
| IEEE Xplore | (“Abstract”:Cybersecurity) AND (“Abstract”:AI OR “Abstract”:“artificial intelligence” OR “Abstract”:“machine learning”) AND (“Abstract”:teaching OR “Abstract”:education) |
| Web of Science | AB = (cybersecurity AND (AI OR “artificial intelligence” OR “machine learning”) AND (teaching OR education)) |
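
The three search strings share one boolean structure and differ only in how each database addresses the abstract field. As a minimal sketch (not the tooling used for this review), the strings could be generated from shared term lists so that the three searches stay in sync. The function names are hypothetical, and the generated strings use straight quotation marks where the table shows typographic ones.

```python
"""Build the three abstract-field search strings from shared term lists."""

TOPIC_TERMS = ["Cybersecurity"]
AI_TERMS = ["AI", "artificial intelligence", "machine learning"]
EDUCATION_TERMS = ["teaching", "education"]


def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they match as phrases."""
    return f'"{term}"' if " " in term else term


def or_group(terms, prefix: str = "") -> str:
    """Join terms with OR inside parentheses, optionally prefixing each term."""
    return "(" + " OR ".join(prefix + quote(t) for t in terms) + ")"


def scopus_query() -> str:
    groups = (TOPIC_TERMS, AI_TERMS, EDUCATION_TERMS)
    return "ABS (" + " AND ".join(or_group(g) for g in groups) + ")"


def ieee_query() -> str:
    groups = (TOPIC_TERMS, AI_TERMS, EDUCATION_TERMS)
    return " AND ".join(or_group(g, prefix='"Abstract":') for g in groups)


def wos_query() -> str:
    topic = TOPIC_TERMS[0].lower()
    return f"AB = ({topic} AND {or_group(AI_TERMS)} AND {or_group(EDUCATION_TERMS)})"


for name, build in [("Scopus", scopus_query),
                    ("IEEE Xplore", ieee_query),
                    ("Web of Science", wos_query)]:
    print(f"{name}: {build()}")
```

Keeping the term lists in one place means that adding a synonym (for example, a further AI term) updates all three queries consistently.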

| Publication type | 2020 | 2021 | 2022 | 2023 | 2024 | 2025 | Total |
|---|---|---|---|---|---|---|---|
| Journal papers | 0 | 1 | 0 | 0 | 4 | 3 | 8 |
| Conference papers | 2 | 0 | 2 | 2 | 1 | 1 | 8 |
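
The per-year counts above can be re-derived from a list of the 16 included studies. The sketch below is a consistency check only. The journal/conference label for each study is an assumption inferred from the venue given in the reference list, not a classification stated by the source.

```python
"""Re-derive the per-year publication counts from the included studies."""
from collections import Counter

# (study, year, type); the type is inferred from the venue in the
# reference list and is therefore an assumption of this sketch.
STUDIES = [
    ("Payne and Glantz", 2020, "conference"),
    ("Shahriar et al.", 2020, "conference"),
    ("Farahmand", 2021, "journal"),
    ("Calhoun et al.", 2022, "conference"),
    ("Lo et al.", 2022, "conference"),
    ("Apruzzese et al.", 2023, "conference"),
    ("Debello et al.", 2023, "conference"),
    ("Alexander et al.", 2024, "journal"),
    ("Arai et al.", 2024, "journal"),
    ("Pusey et al.", 2024, "journal"),
    ("Wei-Kocsis et al.", 2024, "journal"),
    ("Salman", 2024, "conference"),
    ("Brito et al.", 2025, "conference"),
    ("Mathews et al.", 2025, "journal"),
    ("Okpala et al.", 2025, "journal"),
    ("You et al.", 2025, "journal"),
]
YEARS = range(2020, 2026)

counts = Counter((kind, year) for _, year, kind in STUDIES)


def row(kind):
    """Counts for one publication type, ordered by year."""
    return [counts[(kind, year)] for year in YEARS]


journal_row = row("journal")
conference_row = row("conference")

print("Journal papers   :", journal_row, "total", sum(journal_row))
print("Conference papers:", conference_row, "total", sum(conference_row))
```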

| Reference | A Short Description |
|---|---|
| Alexander et al. (2024) | It teaches vulnerabilities in AI systems via hands-on, inquiry-based labs. |
| Apruzzese et al. (2023) | It provides teaching and practitioner guidance that is focused on threat models and evaluation. |
| Arai et al. (2024) | It teaches AI security concepts by leveraging game design and mechanics that embed adversarial machine learning ideas into engaging play. |
| Brito et al. (2025) | It provides a set of recommended topics, sequencing, and course structures that integrate machine learning methods into security education. |
| Calhoun et al. (2022) | It focuses on teaching hardware-level threats and defenses affecting machine learning systems. It provides a suite of hands-on lab modules that expose students to issues such as accelerator vulnerabilities. |
| Debello et al. (2023) | It presents a practical way to integrate artificial intelligence into cybersecurity programs so that students encounter real, security-relevant AI problems. |
| Farahmand (2021) | It integrates AI cybersecurity research into engineering and computer science education by structuring interdisciplinary courses and projects that connect students with active research problems and security-relevant AI work. |
| Lo et al. (2022) | It provides a low-cost portable lab kit that can support authentic machine learning workflows in cybersecurity contexts, where students collect data, train models, and evaluate results on security-relevant problems. |
| Mathews et al. (2025) | It designs a course that equips students with a foundational understanding of generative AI and lets them explore its applications in cybersecurity through a combination of lectures, hands-on projects, and industry guest lectures. |
| Okpala et al. (2025) | It creates a practical lab platform that offers experiential learning for students outside computing disciplines. Participants explore foundational AI ideas and how these can be used to identify online harassment. |
| Payne and Glantz (2020) | It shares how to teach adversarial machine learning to security professionals through a course design with learning objectives and hands-on exercises. |
| Pusey et al. (2024) | It studies which prior knowledge indicators can assess student preparedness for modules involving AI-supported malware investigation, aiding educators in shaping effective teaching strategies. |
| Salman (2024) | It presents a pedagogical framework for blending AI topics into cybersecurity education. It provides a set of design principles and case studies that show how to implement the framework across contexts, including learning outcomes and assessment choices. |
| Shahriar et al. (2020) | It presents a portable lab platform for machine learning in cybersecurity. It provides a set of realistic cases and exercises that guide students from problem framing through model building and reflection, enabling consistent, hands-on practice. |
| Wei-Kocsis et al. (2024) | It presents a proactive, collaborative pedagogy that connects AI concepts with cybersecurity practice via a learning model combining teamwork, community engagement, and early risk awareness. |
| You et al. (2025) | It presents an interactive visualization that reveals how tiny, structured perturbations can mislead image classifiers. It provides a learning tool that helps students grasp adversarial examples, decision boundaries, and feature sensitivity through direct manipulation and immediate visual feedback. |

| Reference | RQ1. Who Are the Target Audiences of Courses? | RQ2. What Delivery Modes Are Adopted in Teaching? |
|---|---|---|
| Farahmand (2021) | University undergraduate | Face-to-face |
| Calhoun et al. (2022) | University undergraduate | Face-to-face |
| Debello et al. (2023) | University undergraduate | Face-to-face |
| Lo et al. (2022) | University postgraduate | − |
| Payne and Glantz (2020) | University undergraduate; university postgraduate | Face-to-face |
| Pusey et al. (2024) | University undergraduate; university postgraduate | Face-to-face |
| Wei-Kocsis et al. (2024) | University undergraduate; university postgraduate | Face-to-face |
| Brito et al. (2025) | University undergraduate; university postgraduate | Face-to-face |
| You et al. (2025) | University undergraduate; university postgraduate | Face-to-face |
| Mathews et al. (2025) | University undergraduate; university postgraduate (cybersecurity major) | Face-to-face |
| Okpala et al. (2025) | University undergraduate; university postgraduate (non-computing major) | Face-to-face |
| Arai et al. (2024) | − | Online |
| Shahriar et al. (2020) | − | − |
| Apruzzese et al. (2023) | − | − |
| Alexander et al. (2024) | − | − |
| Salman (2024) | − | − |

| Reference | RQ3. What AI Topics Are Included in the Curriculum? | RQ4. How Are AI and Cybersecurity Concepts Integrated into Teaching? (Cybersecurity for AI) | RQ4. How Are AI and Cybersecurity Concepts Integrated into Teaching? (AI for Cybersecurity) |
|---|---|---|---|
| Payne and Glantz (2020) | Perception AI | ✓ | − |
| Calhoun et al. (2022) | Perception AI | ✓ | − |
| Apruzzese et al. (2023) | Perception AI | ✓ | − |
| Alexander et al. (2024) | Perception AI | ✓ | − |
| You et al. (2025) | Perception AI | ✓ | − |
| Shahriar et al. (2020) | Perception AI | − | ✓ |
| Lo et al. (2022) | Perception AI | − | ✓ |
| Debello et al. (2023) | Perception AI | − | ✓ |
| Pusey et al. (2024) | Perception AI | − | ✓ |
| Salman (2024) | Perception AI | − | ✓ |
| Brito et al. (2025) | Perception AI | − | ✓ |
| Okpala et al. (2025) | Perception AI | − | ✓ |
| Farahmand (2021) | Perception AI | ✓ | ✓ |
| Arai et al. (2024) | Perception AI | ✓ | ✓ |
| Wei-Kocsis et al. (2024) | Perception AI | ✓ | ✓ |
| Mathews et al. (2025) | Generative AI | − | ✓ |

| Reference | RQ5. What Instructional Activities and Pedagogical Approaches Are Used? | RQ6. What Digital Tools Support the Course Delivery? |
|---|---|---|
| Alexander et al. (2024) | Experiential learning | Programming platform (e.g., Colab, Anaconda) |
| Apruzzese et al. (2023) | Case study | Robot car |
| Arai et al. (2024) | Game-based learning | Python programming tool; open-source dataset |
| Brito et al. (2025) | Theoretical materials; practical materials | Block-based programming; online visualization webpage |
| Calhoun et al. (2022) | Hands-on activity | − |
| Debello et al. (2023) | Hands-on gamified labs; capstone project | Drones; Raspberry Pi |
| Farahmand (2021) | Homework | Circuit hardware |
| Lo et al. (2022) | Authentic learning | − |
| Mathews et al. (2025) | Project-based learning | AI tools (e.g., ChatGPT) |
| Okpala et al. (2025) | Experiential learning | Online programming platform |
| Payne and Glantz (2020) | Hands-on activity | Open-source tool |
| Pusey et al. (2024) | Interactive workshop | − |
| Salman (2024) | Scaffolding; experiential learning | Visualization tool |
| Shahriar et al. (2020) | Case study | − |
| Wei-Kocsis et al. (2024) | Game-based learning | Online programming platform |
| You et al. (2025) | Interactive activity | Online web game |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tian, J. Integrating Artificial Intelligence into the Cybersecurity Curriculum in Higher Education: A Systematic Literature Review. Educ. Sci. 2025, 15, 1540. https://doi.org/10.3390/educsci15111540
