Abstract

Collaborative discussions can significantly contribute to learners’ knowledge acquisition; however, research has shown that K-12 students often add ideas without integrating them into their existing schemas and adopt unsubstantiated claims as their own. In this design study, we explored how differential scaffolds can support middle school students’ discussions as they develop integrated understandings longitudinally within an online evolutionary science unit. Students studied the unit individually but also collaborated to discuss dilemmas presented at several points in the unit, where they were prompted to add ideas to a public idea market (IM). Two conditions were compared: a Guided condition, where students (n = 181) were scaffolded to distinguish their ideas from their peers’, and a Typical condition, where students (n = 163) responded to their peers’ ideas without scaffolding. The peer-to-peer interaction data within the IM were analyzed for patterns of collaboration in each condition and for resulting changes in the sophistication of students’ explanations according to the Knowledge Integration framework. The data show that students in the Guided condition, compared to the Typical condition, provided significantly more integrated responses, reflecting their ability to distinguish their ideas from their peers’ across all dilemmas: t(335) = 6.63, p < 0.01, Cohen’s d = 0.73. However, condition did not affect overall student learning gains. Implications are discussed related to the value of technologically enhanced collaborative discussions and the role of scaffolds within the designs of these platforms.

1. Introduction

Collaborative learning has the potential to contribute to knowledge acquisition. Collaborative learning occurs when two or more students actively contribute to the attainment of a mutual learning goal and share the effort required to reach this goal, either face-to-face or supported by technology (; ). Collaboration takes advantage of distributed expertise () to support the collective processing of novel information (). In turn, collaboration can also widen the repertoire of accessible ideas students use to understand complex science phenomena (). Collaborators may serve as an audience for one another and can explain an idea in language familiar to their peers (); such a peer audience can also encourage students to clarify their explanations () by pushing them to attend to complexity they might otherwise have glossed over (; ). This type of interactive learning has also been found to encourage students to use evidence in ways that help them distinguish among their different viewpoints and promote integration of distinct ideas (; ). Over the past thirty years, many studies have demonstrated the added value of collaboration between learners in supporting the development of conceptual understandings (; ; ; ; ; ; ). Yet collaborators may simply add the ideas of others without meaningfully integrating them with their own views, or they may adopt a peer’s idea that lacks evidence (; ; ).

In this research, we explore two ways to engage students in meaningful collaboration by leveraging technology to guide students to compare and contrast other students’ ideas to gain more coherent, integrated understandings in science (). We assess the impact of the collaborative discussion tools on students’ knowledge integration and how the two versions of the collaborative discussion platform may contribute to overall student interaction.

2. Theoretical Background

Studies comparing interventions to enhance collaboration illustrate the importance of careful design of such tasks. () describe humans’ ability to obtain information from others and adapt it to one’s own knowledge (referred to as borrowing and reorganizing) as one of the five principles of learning. However, others document that borrowing may be more likely than reorganizing (; ) and that students may borrow ideas to confirm their own ideas rather than to disrupt them (). Reorganizing is more challenging since it involves using peers’ ideas to construct personally relevant meaning. For example, in relatively short collaborative activities, few students were able to create new ideas that built on the ideas of others (). This might be because students lack the attitudes or skills needed for the collaboration task (; ). In essence, students may not believe that their peers’ ideas could be a meaningful resource for developing their own understanding. This in turn creates a danger that students may borrow ideas without critical appraisal, leading to what has been called groupthink (), in which the group embraces a non-normative idea based on partial evidence or without serious consideration of alternatives. Thus, this work distinguishes two aspects of collaboration: one in which students adopt or borrow an idea and another in which students critically organize, evaluate, and distinguish among ideas.

Guidance adds value to inquiry learning including collaborative activities (; ). Prior research has identified promising ways to guide collaboration, such as a recent meta-analysis showing the benefits of scripted guidance for improving impacts of collaboration (). Guidance can focus on participation behavior or on the quality of the thinking about the topic. For example, guidance that involves visualization of behavior (how much each member contributed to his or her group’s communication) has been shown to increase participation rates in online collaboration (). Moreover, guidance that encourages students to explain and sort out their ideas rather than giving them the right answer can encourage thinking about the ideas (; ; ). Thus, guiding use of peers’ ideas offers promise for collaboration.

One approach is to encourage students to evaluate or critique the ideas of others in a discussion (; ; ). Critique activities have also been shown to support joint construction of meaning (). Encouraging students to analyze and build on ideas from peers can thus motivate them to develop criteria for evaluating ideas and help them recognize gaps in their understanding (). (), furthermore, demonstrated the effectiveness of a knowledge awareness tool in improving learners’ models of their partners’ knowledge and highlighted its role in the quality of their collaboration. Guiding students on how to provide informative feedback and suggest elaborations for future improvement has also been shown to significantly improve the content and quality of students’ peer feedback (). This work argues that well-designed collaboration can also aid knowledge integration in various ways through specific activities students participate in during instruction (see Table 1).

Table 1.

Knowledge integration strategies, practices, design principles, and examples based on the KI framework (; ).

Computer-supported collaboration has been shown to provide substantial value for student learning compared to instruction without technology () although also see (). This work seeks ways to enhance discussions that already use technology, consistent with the needs identified by (). It responds to the call for cultivation and guidance throughout the learning process to make collaborative learning beneficial (). At the same time, it acknowledges that enhanced discussion, while improving the quality of discussion, is not guaranteed to improve knowledge acquisition (), keeping in mind that measuring the impact of such designs for collaborative learning experiences using technology is a complex process ().

The guidance tested in this research took advantage of the online Web-based Inquiry Science Environment (WISE) and used a pedagogical framework called Knowledge Integration (KI). The framework (; ) emerged from research on inquiry learning showing that students develop a personal repertoire of ideas as a result of their learning experiences. The KI framework describes how instruction can help students reconcile and refine these often contradictory and vague ideas into a coherent account of a complex phenomenon. To support these integration processes, KI defines four instructional strategies: eliciting students’ ideas; adding new, pivotal ideas; developing criteria for distinguishing among ideas and sorting them out; and integrating the different ideas into a coherent understanding. These strategies take advantage of the variety of views that students generate and can therefore strengthen collaborative learning activities (see Table 1).

Research on knowledge integration has identified how guidance can help students develop these practices by encouraging the use of evidence to analyze the different viewpoints, leading to the integration of distinct ideas (; ). To test how KI-based guidance can enhance online discussions, this work embedded two conversation scaffolds—Typical and Guided—into a middle-school evolution unit within WISE.

3. Aim and Research Questions

Building on the KI framework and prior CSCL research, this study addresses the following questions:

- How do “Typical” versus “Guided” KI scaffolds affect the quality of students’ collaborative discourse?

- Do students in the Guided condition produce more “distinguish”-type responses (which compare and contrast ideas) than those in the Typical condition?

- How do these scaffolds influence students’ participation patterns, variety of ideas posted, and criteria used when selecting peer ideas?

- What is the impact of each scaffold on students’ individual knowledge integration outcomes?

- Does enhanced discussion quality in the Guided condition lead to greater learning gains—measured by pre- and post-unit assessments—compared to the Typical condition?

4. Method

4.1. Participants

Two eighth-grade teachers and all 344 eighth-grade students (13–14 years old) at a suburban middle school in the western United States participated. Teacher A (female, >10 years’ experience) taught 156 students and had used WISE units previously; Teacher B (female, 2 years’ experience) taught the remaining 188 students and was relatively new to WISE. Neither teacher had previously taught the specific unit tested in this research. The school serves a moderately diverse, mostly mid-to-high-socioeconomic-status suburban population (60% White, 18% Asian, 11% Hispanic, 1% Black; 10% reduced-price lunch; 3% English language learners).

4.2. Curriculum

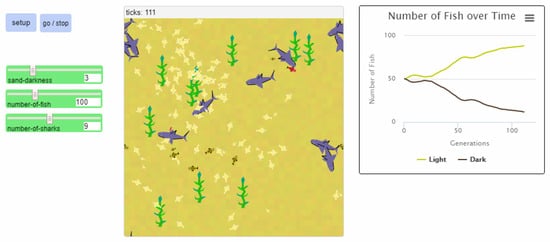

The Guided and Typical conditions were implemented in web-based collaborative discussions embedded in a unit called The Genetics of Extinction (GenEx). The unit is authored in WISE, an online authoring and instructional delivery system () consisting of units and assessments that are aligned with state (CA) and national science standards (NGSS). The GenEx unit features evolutionary processes for multiple organisms, culminating in an investigation of bees’ extinction (Colony Collapse Disorder). Students individually explored the scientific concepts in a series of six activities (Table 2). Each activity focused on a different aspect of evolution using interactive simulations and other inquiry activities designed to promote integrated understanding. The unit included the Evolution model (Figure 1), which demonstrated the effect of an environmental pressure (predation) on a species population (fish) in which an advantageous trait (color that enables camouflage) led to survival and became common in the population over generations.

Table 2.

Activities and idea market dilemmas in the Genetics of Extinction.

Figure 1.

The online model of genetics of extinction. Students can vary the sand color, number of fish, and number of sharks.

The collaborative discussions were implemented within the sequence of activities using idea markets (IMs), where students could post their ideas regarding a given dilemma, read other posts, and respond to them. Besides focusing on different disciplinary ideas, the dilemmas differed pedagogically. The first dilemma (1.4) elicited students’ ideas about bee extinction as a baseline; it did not require previous knowledge. The second dilemma (2.1) asked students about their own genetic inheritance to make genetics relevant to students’ lives and build community. The next two dilemmas (3.4, 3.6) were designed to help students make sense of ideas embedded in the Evolution model (Figure 1). In the last two dilemmas, students applied the model to new contexts: dilemma 4.8 required students to explain the evolutionary process relying on theoretical abstract concepts, and dilemma 5.2 asked students to predict extinction processes building on the concept of ‘speed of reproduction’ they had explored in the previous step.

4.3. Conditions

The current study compared typical and enhanced technology support for collaborative discussion, building on the four KI strategies (Table 1):

- Typical Support (Typical)—students were guided to elicit their ideas and share them with their peers by posting them in a public web-based repository (Figure 2). This guidance builds on the Elicit and Add KI strategies.

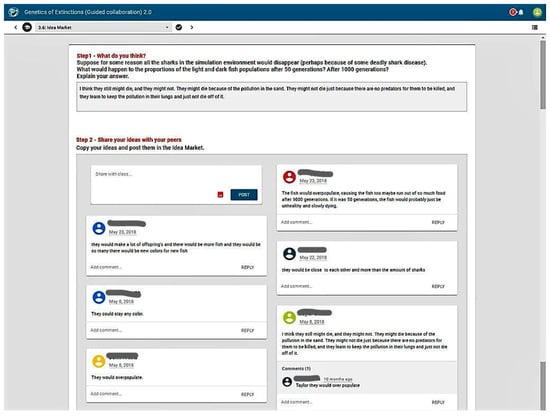

- Guided Support (Guided)—students were guided to elicit and share their ideas in a public web-based repository (as in the Typical condition) but then scripted to distinguish ideas by choosing a peer idea that differs from their own, comment on it, and revise their own idea (Figure 3). This enhanced guidance builds on the Distinguish and Integrate KI strategies.

Figure 2.

The web-based Idea Market—guidance for eliciting (step 1) and sharing ideas (step 2) available to students in both conditions.

Figure 3.

The web-based Idea Market—scripted guidance for distinguishing and integrating ideas which was given only to the Guided group.

The IM design for each condition was the same across all six discussions, so students became familiar with the instructions and could focus on the interactions. Students were randomly assigned to either the Guided or Typical condition. All students were asked to consolidate their own ideas about the relevant dilemma and write their individual posts. To minimize groupthink (), a gated discussion was used in which students first shared their ideas by posting them in the IM and only then were able to read their peers’ posts. All students were also asked to report how many posts were in the IM at the time they submitted their own post.

The students in the Guided condition were scripted to explore the IM, look for a post that differed from their own, and explain in what way it was different. Then they were asked to respond to the post they chose and read any responses they received from others on their own post. Finally, students could revise their initial post based on their collaborative interactions. The guidance encouraged students to explore the different posts in the IM from a critical stance looking for an idea that differs from theirs. Thus, students in the Guided condition probably explored more ideas than students in the Typical condition. Articulating their reason for choosing the post, using evidence to say why the ideas differed, and explaining the possible connections (or disconnections) between the ideas was designed to promote knowledge integration.

4.4. Procedure

The teachers decided to allocate ten lessons to the GenEx unit. All students completed a pretest to assess their integrated understanding before they began the unit. Students took the pretest individually on a set of classroom laptops for approximately 30 min. Following the pretest, individual students were randomly assigned to the Guided or Typical condition, with students in each class divided roughly equally between the two conditions. The students were not told about the differences between the versions. After completing the first five activities, and before moving on to the sixth, students individually took the post-test, which was identical to the pretest and also took approximately 30 min. The entire study, from pretest through post-test, lasted 10 days. After the post-test, teachers could choose whether or not to have students complete the rest of the unit.

4.5. Data Sources

We used the following data for the analysis: (a) WISE log data that capture students’ interactions during learning including students’ initial responses to each dilemma (“Posts”) and the comments students wrote to each other (“Responses”); (b) student responses to a questionnaire about their experiences administered at the end of the unit; (c) student responses to an assessment that was used twice (pretest and post-test). The questions consisted of multi-part items addressing the main ideas of the unit (genetic biodiversity, mutations, environmental pressures, advantageous traits, natural selection, evolution and extinction processes). The assessment may be viewed at this link: https://wise.berkeley.edu/preview/unit/23899 (accessed on 8 April 2025); and (d) notes from classroom observations recorded by the author throughout the enactment of the unit.

4.6. Data Analysis

The impacts of the two conditions were analyzed across the six different online discussions embedded in the GenEx unit. Condition differences were analyzed for levels of participation (in posting and responding), variety of ideas posted, criteria used for choosing a different idea, evidence for distinguishing ideas, and attitudes towards peer ideas as a helpful resource for learning. Learning outcomes were explored on group and individual levels (). On the group level, the analysis focused on whether the guidance affected the patterns of collaboration students demonstrated. On the individual level, subject matter knowledge gains were analyzed. We hypothesized that students who were guided to distinguish ideas would develop better understandings of the topics being taught in the unit, as well as more positive attitudes about collaboration with their peers.

The following indicators of a collaborative knowledge integration process were analyzed and used to compare the two guidance conditions. Students who did not complete the unit were dropped from the study.

4.6.1. Extent of Participation in the Collaborative Process

The percentage of students who posted comments and responded to comments was determined using the WISE data logs for each of the IMs.
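The participation measure amounts to counting distinct students per idea market. The sketch below is a minimal illustration under assumptions: the WISE log export format is not described here, so the `(student_id, idea_market, event_type)` tuples and the `participation_rates` helper are hypothetical. Each student is counted at most once per IM, and only students on the analysis roster are included.

```python
from collections import defaultdict


def participation_rates(events, roster):
    """Return {idea_market: (pct_posted, pct_responded)} relative to the roster.

    `events` is an iterable of hypothetical (student_id, idea_market, event_type)
    tuples, where event_type is "post" or "response"; `roster` is a set of
    student ids retained in the analysis.
    """
    posted = defaultdict(set)
    responded = defaultdict(set)
    for student, im, kind in events:
        if student not in roster:
            continue  # skip students dropped from the study
        if kind == "post":
            posted[im].add(student)
        elif kind == "response":
            responded[im].add(student)
    n = len(roster)
    markets = sorted(set(posted) | set(responded))
    # Sets ensure a student who posts or responds several times counts once.
    return {im: (100 * len(posted[im]) / n, 100 * len(responded[im]) / n)
            for im in markets}


roster = {"s1", "s2", "s3", "s4"}
events = [
    ("s1", "1.4", "post"), ("s2", "1.4", "post"), ("s3", "1.4", "post"),
    ("s1", "1.4", "response"),
]
print(participation_rates(events, roster))  # {'1.4': (75.0, 25.0)}
```

Counting students rather than log rows matches the wording "percentage of students who posted", so repeated posts by one student do not inflate the rate.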

4.6.2. Variety of Ideas in Posts

Collaborative knowledge integration benefits from a rich variety of ideas that students can explore and use while building their understanding. To characterize this variety, an initial list of distinct and frequent ideas was derived from 100 posts for each dilemma; the list was then refined and used to code all the remaining posts.

4.6.3. Category of Response to Initial Posts

A rubric was developed (Table 3) to score the different responses students wrote to their peers. The rubric identified the potential contribution of each response to knowledge integration processes. The categories were informed both by an inductive analysis of students’ responses and by the KI framework. Hence, we distinguished between praise and agree responses, which do not add new scientific ideas to the discussion; explain responses, which add new scientific ideas; and distinguish responses, which describe the relations between a post and the ideas of the response writer, supporting idea sorting and integration. To make sure each response was interpreted in the right context, the analysis was performed while reading the whole sequence of posts and responses in each IM. We read the responses written in one IM (3.6) and formed initial categories with examples. To ensure reliability of the coding, a team of three researchers who had not been involved in the initial coding was presented with the rubric and coded 30 responses, about 30% of the responses in IM 3.6 (Guided condition). Initial agreement between the team’s scores and the author’s scores was 80% and increased to 93% after discussion. The refined rubric was then used to score all the responses.
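Simple percent agreement, as reported for the reliability check, is the share of items on which two coders assign the same category. A minimal sketch follows; the `percent_agreement` function and the category labels are illustrative, not the authors’ tooling.

```python
def percent_agreement(coder_a, coder_b):
    """Percentage of items on which two coders assigned the same category."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("coders must label the same non-empty set of items")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100 * matches / len(coder_a)


# Hypothetical labels for 30 responses: the coders disagree on 6 of them.
author = ["praise"] * 30
team = ["praise"] * 24 + ["agree"] * 6
print(percent_agreement(author, team))  # 80.0
```

With 30 double-coded responses, 24 matches give 80% and 28 matches give about 93%, which is arithmetically consistent with the agreement figures reported. Percent agreement does not correct for chance; chance-corrected indices such as Cohen’s kappa are a common alternative, although none is reported here.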

Table 3.

Rubric for categorizing students’ responses to their peers’ posts, based on the KI framework (; ).

4.6.4. What Makes the Selected Idea Different from Your Idea?

A rubric was developed (Table 4) to analyze which criteria students used when choosing a peer’s idea as a resource for collaborative knowledge integration. We analyzed responses to an embedded assessment in the Guided condition that asked students to explain what made the idea they selected different from their own idea.

Table 4.

Rubric for criteria for explaining what makes the idea selected different from their own idea.

4.6.5. Attitude Towards Collaboration

After completing the unit, we asked students to reflect on their collaborative experience by responding to the statement “While learning this WISE unit, it helped me to see my peers’ ideas in the IM” (agree/disagree, with an explanation).

4.6.6. Knowledge Gains

The content assessments (pre- and post-unit) were used to measure each student’s progress in knowledge integration. All assessment items were scored according to a knowledge integration rubric (Table 5) that rewards linking ideas into a coherent narrative (). Lower scores represent incomplete or non-normative ideas, while higher scores indicate normative ideas that are linked together.

Table 5.

The Knowledge Integration (KI) rubric (; ).

5. Results

Although the students in the Guided condition responded to additional prompts, students in both conditions spent the same amount of time studying the unit. Observations revealed that the teachers did not encounter any difficulties with implementation.

5.1. Patterns of Participation

To explore students’ patterns of participation, exported logs from the web-based unit were analyzed including student posts and responses to each of the six IMs. Participation by posting to the collaborative discussion was high. Overall, between 89 and 97% (93% on average) of the students posted an initial response (Table 6). There was a slight decrease in students’ posting as the unit progressed, from an average of 97% in the first discussion to 90% in the last discussion.

Table 6.

Patterns of participation in the six idea markets by condition.

Condition affected how often students responded to initial posts. Guided students responded between 19 and 50% of the time (37% on average), whereas students in the Typical condition spontaneously responded between 7 and 21% of the time (12% on average).

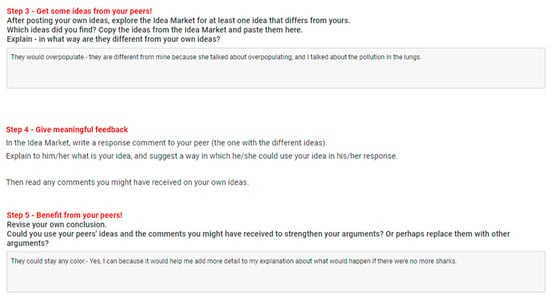

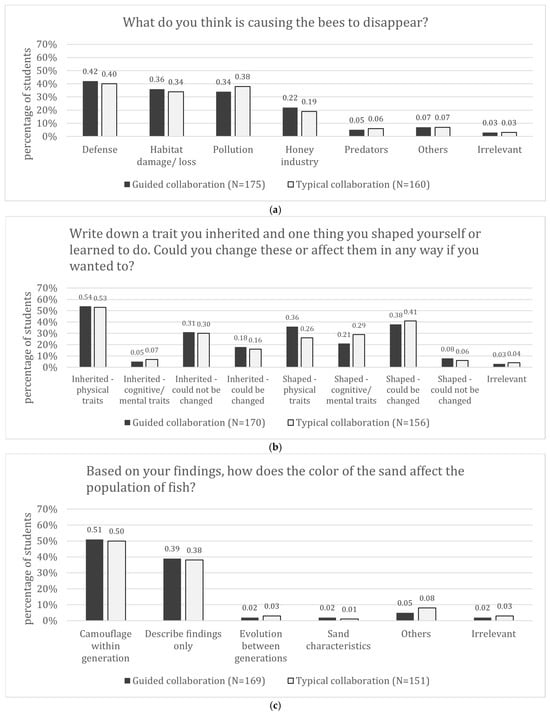

5.2. Variety of Ideas in Posts

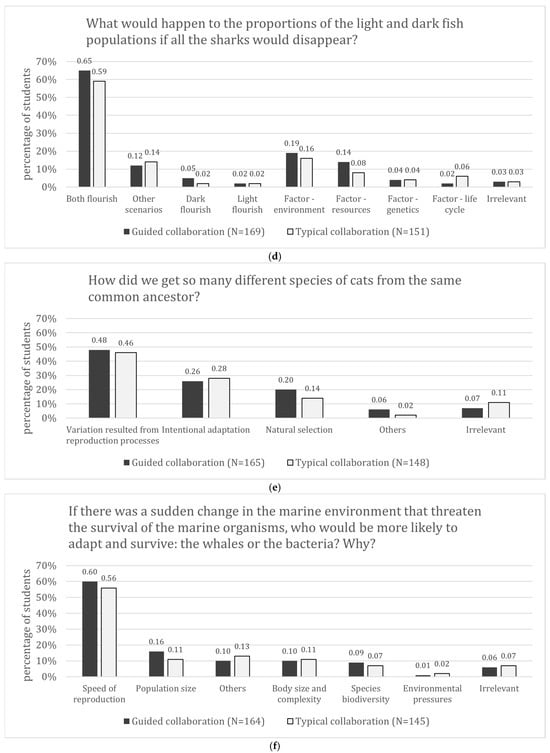

To explore the variety of ideas contributed to the IMs, the initial posts to each dilemma were analyzed. Recall that students were asked to post before they viewed the posts of others, so repeated ideas were expected. The number of distinct ideas for each dilemma was coded, as well as the frequency of each idea within each condition (Figure 4a–f). Comparing the six dilemmas, we found that the frequency of distinct ideas was similar across conditions (the largest difference was 10%, for idea 3 in IM 2.1). The number of distinct ideas varied across dilemmas (from 5 ideas for 4.8 to 10 ideas for 3.6). In each IM, about half of the students posted similar ideas. Dilemmas also varied in the number of frequent ideas (a high proportion of frequent ideas in 1.4, 2.1, and 3.4; greater variety in the others). Overall, the majority of ideas were relevant and on-task (about 5% irrelevant on average).

Figure 4.

(a) Variety of ideas for idea market 1.4. (b) Variety of ideas for idea market 2.1. (c) Variety of ideas for idea market 3.4. (d) Variety of ideas for idea market 3.6. (e) Variety of ideas for idea market 4.8. (f) Variety of ideas for idea market 5.2.

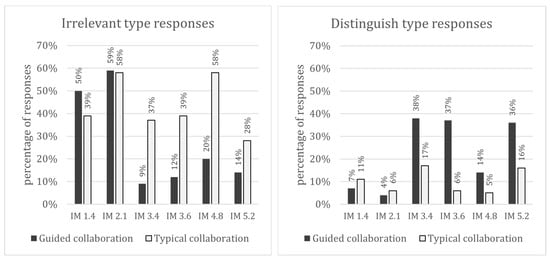

5.3. Category of Response to Initial Post

Responses to initial posts were scored in five categories (praise, agree, explain, distinguish, irrelevant). The frequency of the different response types (Table 7) shows that for the first two IMs the most common response was irrelevant, consistent with these topics being unfamiliar and not associated with instruction (Figure 5). In the Guided group, the frequency of irrelevant responses decreased for the remaining four IMs (14% on average), while the frequency of distinguish (31%), praise (28%), and agree (24%) responses increased. In the Typical group, irrelevant responses remained the most common type (41%), followed by agree (32%) and praise (15%) responses. The least common response in the Typical group was distinguish (11%).

Table 7.

Frequency of the different response types in the six idea markets per condition.

Figure 5.

Patterns of irrelevant and distinguish responses across the idea markets by condition.

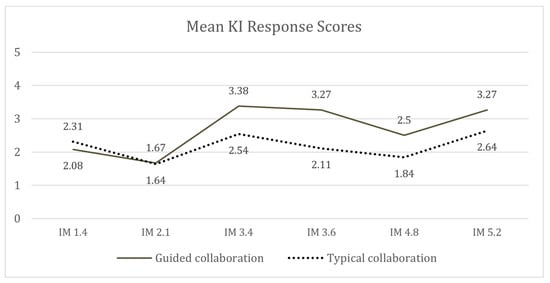

5.4. Knowledge Integration Level of Each Response

Each response for each IM was scored using a designated rubric (Table 3). To analyze the changes in the quality of the discussions across the unit, a mean KI response score for each IM was calculated and trends were compared (Figure 6). Findings demonstrate that the quality of the discussions, measured by the KI score of the responses students wrote, was higher for the Guided condition than for the Typical condition, consistent with the instruction to distinguish ideas.

Figure 6.

Mean KI response scores across idea markets.

To analyze personal use of the different response types, a personal mean score was calculated for each student and response type, reflecting whether the student used that response type (score = 1) or not (score = 0) in each of the six IMs, averaged across IMs. We then conducted two-sample t-tests assuming equal variances for each response type. The results (Table 8) show that students in the Guided group wrote significantly more praise (d = 0.66), agree (d = 0.37), explain (d = 0.26), and distinguish (d = 0.73) responses. Students in both groups wrote similar proportions of irrelevant responses (d = 0.10). Students in the Guided group responded to their peers’ ideas primarily by giving praise, agreeing, or distinguishing between ideas.
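The per-student score and the equal-variance t-test described above can be sketched as follows. This is an illustration only: the function names and data are hypothetical, and Cohen’s d is assumed to use the pooled standard deviation, which the text does not state explicitly.

```python
import math
from statistics import mean, variance


def personal_score(used_flags):
    """Proportion of IMs (0/1 flags) in which a student used a given response type."""
    return mean(used_flags)


def pooled_t_and_d(group1, group2):
    """Two-sample t-test assuming equal variances, plus Cohen's d with pooled SD."""
    n1, n2 = len(group1), len(group2)
    # Pooled variance: each group's sample variance weighted by its degrees of freedom.
    sp2 = ((n1 - 1) * variance(group1) + (n2 - 1) * variance(group2)) / (n1 + n2 - 2)
    diff = mean(group1) - mean(group2)
    t = diff / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    d = diff / math.sqrt(sp2)
    return t, n1 + n2 - 2, d


# Hypothetical 0/1 flags over six IMs per student (1 = wrote a "distinguish" response).
guided = [personal_score(f) for f in ([1, 0, 1, 1, 0, 1], [0, 1, 1, 0, 1, 0], [1, 1, 1, 0, 1, 1])]
typical = [personal_score(f) for f in ([0, 0, 1, 0, 0, 0], [0, 1, 0, 0, 0, 0], [1, 0, 0, 0, 1, 0])]
t, df, d = pooled_t_and_d(guided, typical)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")
```

Because both statistics share the pooled variance, d = t * sqrt(1/n1 + 1/n2), which gives a quick consistency check between a reported t and d.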

Table 8.

Personal response type scores across conditions.

5.5. Criteria for Explaining Why an Idea Is Different

To explore the criteria students used when asked to choose an idea that differed from their own (Table 4), the reasons written by students in the Guided group in IM 3.6 were analyzed. This IM was chosen because it had both a high response rate (46%) and the largest variety of ideas (9 ideas). Of the 165 students who answered the question, 137 explained their interpretation of a different idea. Most of these students (62%) interpreted a different idea in their peers’ posts as either a conflicting idea (35%) or a new idea (27%). Most of the others (33%) interpreted a different idea as some variation of their own idea, such as a similar (13%), elaborated (12%), or edited (8%) idea. The remaining students gave vague answers, such as missing (2%). Thus, about two-thirds of the students selected ideas they could readily distinguish from their own, while the remaining one-third may not have been able to identify posts that they considered conflicting or new. Although the author was able to identify ideas that students might have considered new or conflicting (Figure 4a–f), students did not necessarily agree.

5.6. Response to Collaborative Experience

Students’ views of their peers’ ideas were probed with the item “While learning this WISE unit, it helped me to see my peers’ ideas in the IM.” The majority of students in both conditions (79%) thought it was helpful to see their peers’ ideas (Table 9). However, the explanations of students who selected Don’t Agree differed by condition. One response was omitted due to lack of clarity. Of the remaining 68 students responding Don’t Agree, over half commented on the quality of ideas (Table 10); other reasons had primarily to do with interest or motivation.

Table 9.

Students’ attitudes towards the collaborative activity.

Table 10.

Reasons students did not pay attention to peer ideas.

Interestingly, among students who selected Don’t Agree, those in the Guided condition were more likely to criticize the quality of their peers’ ideas (78%) than those in the Typical condition (50%). This is consistent with the requirement in the Guided condition that students distinguish between their peers’ ideas and their own: when asked to make a distinction, students in the Guided condition may have scrutinized their peers’ ideas more carefully than those in the Typical condition. This finding also resonates with the finding that one-third of the students in the Guided condition indicated that they selected ideas that were somewhat similar to their own.

5.7. Progress in Knowledge Integration

To determine whether the GenEx unit effectively taught evolution concepts, pre- to post-unit improvement was analyzed via paired t-tests and repeated-measures Cohen’s d across conditions. We found significant improvement on all three items (Table 11). The paired t-tests and effect sizes indicate small (~0.2) to moderate (~0.5) effects for students’ learning about evolution across conditions. To measure the influence that condition may have had on students’ learning, a one-way MANCOVA was conducted. Students’ pre-unit mean KI score across the three items was used as a covariate to adjust the post-unit KI scores on the individual items (i.e., the three dependent variables), with condition as the grouping factor (i.e., the independent variable).
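The paired pre/post comparison can be sketched as below. The text does not specify which repeated-measures variant of Cohen’s d was used; this sketch assumes d_z (the mean of the pre/post differences divided by their standard deviation), which is only one of several variants. The function name and scores are hypothetical.

```python
import math
from statistics import mean, stdev


def paired_t_and_dz(pre, post):
    """Paired t-test on post - pre differences, plus Cohen's d_z (assumed variant)."""
    if len(pre) != len(post):
        raise ValueError("pre and post must list the same students in the same order")
    diffs = [b - a for a, b in zip(pre, post)]
    n = len(diffs)
    sd_diff = stdev(diffs)  # sample SD of the differences
    t = mean(diffs) / (sd_diff / math.sqrt(n))
    d_z = mean(diffs) / sd_diff  # equivalently t / sqrt(n)
    return t, n - 1, d_z


# Hypothetical KI rubric scores for one assessment item.
pre = [2, 3, 2, 1, 3, 2]
post = [3, 3, 4, 2, 3, 3]
t, df, d_z = paired_t_and_dz(pre, post)
print(f"t({df}) = {t:.2f}, d_z = {d_z:.2f}")
```

Other repeated-measures variants (for example, dividing by the average of the pre and post SDs) generally give smaller values than d_z when pre and post scores are positively correlated, so the variant should be reported alongside the effect size.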

Table 11.

Paired t-test descriptive statistics of GenEx content assessment.

The multivariate result was not significant for condition, Pillai’s Trace = 0.005, F = 0.525, df = (3, 311), p = 0.666, indicating no difference between conditions in students’ Knowledge Integration scores at the end of the unit on any of the items. This null result held after adjusting the post-unit KI scores for the pre-unit KI mean, which was a significant covariate (p < 0.001 for all three items). The univariate F tests also showed no significant difference between conditions for any individual item: Giraffe Critique: F = 0.331, df = (1, 313), p = 0.575; Giraffe: F = 0.007, df = (1, 313), p = 0.931; Evolution: F = 1.305, df = (1, 313), p = 0.254.
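As a rough arithmetic check on the multivariate result, Pillai’s trace V can be converted to an exact F when the hypothesis has one degree of freedom (two conditions): F = (V / (1 − V)) × (df_error − p + 1) / p, with df = (p, df_error − p + 1), where p is the number of dependent variables. The sketch assumes a total N of 316, inferred from the univariate error df of 313 with two groups and one covariate (313 = N − 3); the function name is hypothetical.

```python
def pillai_to_f(v, n_dv, n_total, n_groups=2, n_covariates=1):
    """Exact F for Pillai's trace V when the hypothesis has one df (two groups).

    Assumes a one-way MANCOVA, so the univariate error df is
    N - groups - covariates.
    """
    if n_groups != 2:
        raise ValueError("this exact formula assumes two groups (one hypothesis df)")
    df_error = n_total - n_groups - n_covariates
    df1 = n_dv
    df2 = df_error - n_dv + 1
    # With one hypothesis df, the single eigenvalue equals V / (1 - V).
    f = (v / (1 - v)) * df2 / df1
    return f, df1, df2


f, df1, df2 = pillai_to_f(0.005, n_dv=3, n_total=316)
print(f"F = {f:.3f}, df = ({df1}, {df2})")  # F = 0.521, df = (3, 311)
```

With V rounded to 0.005 this gives F ≈ 0.52 on (3, 311) df, matching the reported degrees of freedom; the small gap from the reported F = 0.525 is consistent with V having been rounded.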

These findings suggest that the collaborative opportunities in the unit helped students in both conditions integrate their ideas about evolution. This is consistent with many studies showing a general advantage for collaboration (; ). This finding is also consistent with the meta-analysis conducted by (), where they found that enhanced discussion tools, while helpful for discussions, did not lead to improvement in knowledge acquisition (e.g., ).

In summary, this study showed that students in the Guided condition made more integrated contributions to the discussion than did students in the Typical condition, consistent with many studies of specific collaboration scripts (; ). It also found that the improved contributions, when part of a broader opportunity for learning from the evolution unit, did not, by themselves, lead to significant increases in knowledge acquisition.

6. Discussion

This research compares two conditions for implementing a collaborative online discussion. The paper explores ways to enhance the discussion by supporting students to use their peers’ ideas to reorganize their own (). Both conditions take advantage of technology for posting comments and responding to comments; they differed in the instructions students received while engaging in the discussion. The Guided condition encouraged students to respond by distinguishing between their own ideas and those submitted to the IM by their peers. Specifically, students were asked to identify an idea that differed from their own, explain how it was different, and write a response to that post explaining their own idea and how it could be used by their peers. We designed the Guided condition to counteract students’ tendency in typical collaboration to select confirmatory ideas rather than disrupt their thinking with ideas that differ from their own (). First, we analyzed the patterns of participation, types of responses, and overall quality of discussions that students generated in the Typical and Guided conditions. We found that the Guided condition led students to integrate more ideas than the Typical condition. Second, we explored how the two conditions contributed to knowledge acquisition as measured by integrated understanding of evolution. We found that students in both conditions made significant and similar gains.

These findings extend and confirm meta-analyses of collaborative learning (; ). This study takes advantage of technology to support collaborative learning, consistent with (). We show the advantage of guided or scripted collaboration for the quality of the discussion, as implemented in the Guided condition, consistent with both () and (). Finally, consistent with both () and (), this study shows large and similar gains in knowledge acquisition across conditions from instruction featuring technology-enhanced collaboration.

6.1. Participation and Perception of IMs

Across all six IMs, participation was high: between 89% and 98% of students posted comments about the dilemma in each discussion (93% on average). The IM design elicited positive attitudes toward collaboration: close to 80% of the students in both conditions agreed with the statement “While learning this WISE unit, it helped me to see my peers’ ideas in the IM.” This contrasts with the more negative views of students’ interest in collaboration reported in some studies (; ). At the same time, students in the Guided condition who did not agree with the statement were more likely than those in the Typical condition to cite dissatisfaction with the quality of their peers’ ideas. This suggests that when students are asked to distinguish among ideas, they may need access to clear alternatives to their own ideas. It may be that some students selected ideas similar to their own because they could not identify ideas that differed.

For the first discussion, held before the conditions were implemented, about one quarter of the students wrote responses to their peers. In the remaining discussions, condition affected how often students responded to the initial posts. Over one-third of the students in the Guided condition followed the script and responded, while about 10% of the students in the Typical condition responded spontaneously. This is consistent with research analyzing the potential of CSCL and scripting in a school context (). Together, the Guided condition’s instructions to distinguish ideas and the greater frequency of responses increased the likelihood that students would engage in knowledge integration.

6.2. Initial Posts in the IM

Students in both conditions were guided to share their ideas by publishing a post in the IM, where they could then read their peers’ ideas. The variety of ideas in each IM was similar across the two conditions, so students in both conditions were exposed to the same broad repertoire of ideas. Together with the similar pretest performance of the two groups, the similarity of the initial posts indicates that both groups started each discussion from a similar range of ideas about the dilemma.

6.3. Responses to Posts by Condition

The pattern of responses differed significantly across conditions in both quantity and quality. Students in the Guided condition generated more responses in total, and they were significantly more likely than students in the Typical condition to write distinguish responses. Thus, students in the Guided condition took advantage of the diverse ideas to distinguish between their own ideas and those of their peers. As a result, they were exposed to more responses that distinguished among the ideas in the IM than students in the Typical condition. Furthermore, in the Guided condition, the frequency of distinguish responses that students encountered increased throughout the unit; by the final discussion, distinguish responses had become the most common type of response students wrote to each other. Praise and agree responses were the next most frequent. In the Typical condition, by comparison, the most frequent response types were praise and irrelevant, followed by agree. Consequently, students in the Typical condition were less likely to encounter responses that distinguished among ideas.

We analyzed the criteria that students in the Guided condition used to select an idea that differed from their own. Most students (90%) were able to distinguish among ideas based on relevant and meaningful criteria (conflicting, new, similar, missing, elaborated). This finding suggests that the Guided condition promoted critical appraisal, reducing the risk of groupthink (), in which a group embraces a non-normative idea without seriously considering alternatives.

These findings support the claim that students in the Guided condition encountered good examples of distinguishing ideas, thereby benefiting from a wider repertoire of accessible ideas (). Greater access to such ideas is more likely to lead students to reorganize their own ideas than limited access (; ; ).

6.4. Comparison of Knowledge Integration Across the Dilemmas

We discuss the six dilemmas of the GenEx unit according to their potential contribution to KI processes, as reflected by their mean KI response scores calculated for the Guided group only (Figure 6).

IM 3.4: Based on your exploration of the model, how does the color of the sand affect the population of fish? The dilemma with the highest KI response score drew on evidence from exploring the Evolution model in the unit. It yielded two main categories of ideas (Figure 4c): students who linked the color of the sand to fish survival, and students who explained the evolutionary mechanism behind survival. The participation rate was high (93%), and the response rate was the highest among all IMs (50%). Furthermore, the largest share of those who responded (38%) distinguished their ideas from those of their peers by elaborating on their posts.

IM 3.6: What would happen to the proportions of the light and dark fish populations if all the sharks would disappear? This dilemma also related to the model; it led to a high participation rate (93%) and to valuable disagreement. About 65% of students argued that both types of fish would flourish once the sharks were gone (Figure 4d). Students also drew on other relevant scientific ideas (the effect of food resources, sand color, new predators, genetics, reproduction rate, diseases, overpopulation), which produced a variety of additional valid answers and enriched the discussion. Many students used evidence from the model to support their claims. We believe this explains the high rate of responding to peers (46% of students, almost half of whom wrote distinguish responses).

IM 5.2: If there was a sudden change in the marine environment that threaten the survival of the marine organisms, who would be more likely to adapt and survive, the whales or the bacteria? This dilemma asked students to predict extinction processes, building on the concept of ‘speed of reproduction’ they had learned in the previous step. The posting rate was high (91%), as was the response rate (39%). Although more than half of the students linked speed of reproduction to extinction processes (Figure 4f), there were enough alternative science-based ideas (relating to population size, species biodiversity, and the size and complexity of the organisms) to consider while responding to peers. This diversity of ideas made distinguishing relatively easy, and distinguish was the most frequent response type (36% of the responses).

IM 4.8: How did we get so many different species of cats from the same common ancestor? This dilemma was challenging because it required students to integrate most of the unit’s main ideas (genetic variability, environmental pressures, and natural selection) to explain the process of speciation. Participation rates were high (91% published posts, and 42% responded to their peers). Analysis of students’ ideas (Figure 4e) shows that only 20% achieved an integrated understanding of the speciation process, whereas the rest held non-normative ideas. About half of the students believed that speciation happens spontaneously as part of the reproduction process, and 26% claimed that speciation was an intentional process. We believe that the lack of solid scientific understanding prevented students from distinguishing ideas, as reflected in the low rate of distinguish responses (14% of all responses). This was the only IM in which the most common response was praise.

IM 1.4: What do you think is causing the bees to disappear? The first dilemma in the unit was meant to introduce the IM platform and elicit students’ ideas while engaging them with an example of population decline. This was the only dilemma in which students did not receive KI guidance. The posting rate, the highest in the unit, and the high percentage of relevant ideas (97%) indicate students’ engagement and motivation. We speculate that this reflected a combination of students’ curiosity about trying the technology for the first time and the relevance of the question to their everyday lives, which made it easier for many of them to hold a firm opinion. Figure 4a shows that most posts addressed one of four ideas (pollution, habitat damage, the honey industry, extermination by humans), which seemed normative given that no prior instruction had taken place. However, the low response rate (28% of participants) and the quality of the responses (50% irrelevant, only 7% distinguish) resulted in a low mean KI response score. We argue that without guidance and grounded disciplinary knowledge, distinguishing ideas is rare even when the dilemma at hand seems engaging and interesting.

IM 2.1: Write down a trait you inherited and one thing you shaped yourself or learned to do. Could you change these or affect them in any way if you wanted to? This dilemma was designed to engage students with genetics by asking for examples of genetic traits versus acquired skills. Nearly all students (95%) shared interesting examples (e.g., “I have taught myself how to not stress out a lot in school”). As Figure 4b shows, the posts offered a variety of examples that fell into a few categories (genetic traits cannot be changed during an individual’s lifetime; phenotype could be modified by technological means; and skills can be shaped). However, this dilemma yielded the lowest response rate in the unit (19%); among these responses, the share of irrelevant responses (59%) was the highest, and the share of distinguish responses (4%) the lowest, of any IM. Although the dilemma was engaging, as the high percentage of students who posted indicates, the examples emphasized the personal and social aspects of genetics rather than the scientific phenomena. As a result, they were less effective in promoting KI.

In summary, the participation patterns suggest that dilemmas are more likely to encourage students to distinguish ideas when they elicit controversial initial ideas. Such dilemmas allow students to hold legitimately varied opinions and ways of thinking. In addition, students distinguish among ideas more readily when they have a valid source of evidence, such as a model or simulation. Effective dilemmas allow students to build on ideas learned previously and encourage links to available evidence. The comparison between dilemmas 3.6 and 4.8 illustrates these claims. While both dilemmas invite a variety of ideas, students’ ability to test their ideas and enrich their arguments using the model led to a much greater variety of initial ideas for dilemma 3.6 (9 common ideas) than for dilemma 4.8 (4 common ideas). In addition, without an available source of evidence in the curriculum, most students lacked a solid scientific understanding of the topic of dilemma 4.8, which led to a shallow discussion featuring ‘praise’, ‘agree’, or ‘irrelevant’ responses (Table 3). A general trend supports the same conclusion: the less directly a dilemma could be tested with the model in the unit, the lower its mean KI response score. Dilemmas 3.4 and 3.6 involved interpreting the model, dilemmas 5.2 and 4.8 required applying the model to new contexts, and the two dilemmas with the lowest mean KI response scores focused on personal opinion (1.4) and personal experience (2.1).

Relevant and engaging dilemmas also increase the repertoire of initial ideas students share and may help to build community. Dilemma 2.1 shows the limits of this: although participation rates were high and students shared a variety of ideas, students rarely distinguished among them, and the discussion contributed little to knowledge integration. Nevertheless, the dilemma did enable students to connect with each other and may have helped create a collaborative community. However, community alone does not guarantee meaningful collaboration in which students distinguish ideas and integrate knowledge into a coherent understanding. In IM 4.8, where students had the opportunity to apply their ideas about evolution to a related dilemma, participation rates were high, yet students’ low levels of distinguishing ideas appear to stem from a lack of disciplinary knowledge.

IM 1.4 served as a baseline and showed that students engage in the task yet flounder without clear guidance. Finally, IM 2.1 engaged students in contributing but lacked support for distinguishing among ideas.

6.5. Time on Task

Although following the script in the Guided condition could take more time than participating in the Typical condition, the results show that, overall, students in the two conditions completed the unit in the same amount of time. Given that both groups made equal knowledge gains in the unit, we assume that the time required by the guidance script was balanced against the time students spent on the unit’s other activities. This finding suggests that allowing students to manage their own time in a self-guided activity can be effective.

6.6. Guidance Scripting

The Guided condition included a script for distinguishing ideas that was repeated for each of the six IMs. As students grew familiar with the script, we expected them to focus their attention on the topic of each dilemma. We built this familiarity by repeating the structure, interface, and graphic design across the IMs. Since participation rates remained high across dilemmas, the repetition appears to have been effective.

6.7. Knowledge Acquisition: Gains in Integrated Understanding

Students in both conditions made significant gains in integrated understanding of the main ideas in the GenEx unit, as measured by pretest and post-test scores on the three assessment items. However, there was no effect of condition on learning outcomes. One of the assessment items (Evolution) was very similar to the dilemma presented in step 4.8. The other two assessment items (Giraffes, Giraffes Critique) required students to transfer and adapt the unit’s ideas to a new context. Average post-test KI scores for all items were around 3, meaning that many students understood the different ideas but did not integrate them into a coherent understanding. This finding resonates with other research showing that relatively short interventions do not enable students to benefit fully from collaborative activities (e.g., ).

7. Conclusions

This work has demonstrated how carefully designed guidance for distinguishing ideas, embedded in a technology-based collaborative environment (the IM), can improve collaborative discussions that promote knowledge integration. While technology does not guarantee effective learning in collaborative contexts (), this study illustrates how technological environments can support collaboration. From a technological perspective, the online discussion board incorporated aspects of behavior visualization, which have been found to increase participation rates (). This study also showed how the enhanced discussion tools implemented in the Guided condition can encourage students to distinguish between their own and their peers’ ideas. The Guided condition increased the frequency and quality of responses that distinguished ideas compared to the Typical condition. In the Typical condition, students rarely distinguished ideas and therefore had few opportunities to reconsider their ideas or build on the ideas of their peers. Thus, the Guided condition engaged students in activities that could enable them to integrate their own ideas with those of their peers.

Consistent with (), we did not find that the conditions differentially affected knowledge acquisition. All students made substantial gains. These gains may be attributed to the effective use of technology for the IM and for the evolution simulation, among other elements of the unit. The gains also reflect the overall design of the unit to support knowledge integration. Finally, the gains reflect students’ opportunities to explore the six different dilemmas and to learn about their peers’ ideas.

The study also offers some insight into how to design dilemmas that support collaboration. Dilemmas with high initial participation rates can help build community. To promote knowledge integration, however, dilemmas need to encourage students to distinguish among scientific ideas using evidence. Students are more likely to distinguish ideas if they have relevant ideas, ideally gained from the instruction in the unit.

8. Limitations of the Research

This study yielded useful recommendations for enhancing technology-rich collaborative discussions. These recommendations need to be tested in other online environments, with different student populations, with other teachers, and in other disciplines. Other features of computer-supported collaborative environments might yield different findings.

We did not find that the enhanced discussion improved students’ knowledge of evolution beyond the gains made in the Typical condition. This finding could be explained by several potential limitations in how the conditions were implemented and how their impact was assessed. First, all students experienced activities besides the online discussions, including the evolution simulation, the teacher-led openers, and class discussions. Second, all students were exposed to their peers’ ideas in the IM. Third, because classroom time for pre- and post-testing was limited, the assessments, while valid, were not as sensitive as they could have been with additional items. Finally, the assessments were designed as learning opportunities in which students reflected on their progress and integrated their ideas, so both groups also had the opportunity to learn from the assessments.

Future work is needed to determine how to generalize this approach to distinguishing ideas. In particular, we are studying whether students who distinguish ideas in the discussion also use this ability to revise their own responses after the collaborative discussion. In addition, only about 50% of the students responded by distinguishing ideas, so more work is needed to explore ways to increase the response rate within the Guided condition. For example, the dilemmas could be redesigned to promote more distinguish-based interactions, building on the insights from the current work.

Funding

This research was funded by NSF GRIDS: Graphing Research on Inquiry with Data in Science (Number 1418423) and NSF Technologies to Inform Practice in Science (Number 2101669).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the University of California, Berkeley (Approval Code: 2014-06-6451; Approval Date: 16 July 2019).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

Thank you to the two teachers who were part of this study. Their work and feedback were key to improving the technology to better support student learning. Thank you also to the research team at WISE UC Berkeley (https://wise-research.berkeley.edu) for their feedback while we prepared the manuscript.

Conflicts of Interest

The author declares no conflicts of interest.

References

- Bell, P., & Linn, M. C. (2000). Scientific arguments as learning artifacts: Designing for learning from the web with KIE. International Journal of Science Education, 22, 797–817.

- Berland, L. K., & Reiser, B. J. (2011). Classroom communities’ adaptations of the practice of scientific argumentation. Science Education, 95, 191–216.

- Berland, L. K., Schwarz, C. V., Krist, C., Kenyon, L., Lo, A. S., & Reiser, B. J. (2016). Epistemologies in practice: Making scientific practices meaningful for students. Journal of Research in Science Teaching, 53, 1082–1112.

- Chen, J., Wang, M., Kirschner, P. A., & Tsai, C.-C. (2018). The role of collaboration, computer use, learning environments, and supporting strategies in CSCL: A Meta-Analysis. Review of Educational Research, 88, 799–843.

- DeHart, D. (2017). Team science: A qualitative study of benefits, challenges, and lessons learned. The Social Science Journal, 54, 458–467.

- Dewey, J. (1901). Psychology and social practice (Vol. 11). University of Chicago contributions to education. University of Chicago Press.

- Edelson, D. C., Gordin, D. N., & Pea, R. D. (1999). Addressing the challenges of inquiry-based learning through technology and curriculum design. Journal of the Learning Sciences, 8, 391–450.

- Furtak, E. M., Seidel, T., Iverson, H., & Briggs, D. C. (2012). Experimental and quasi-experimental studies of inquiry-based science teaching: A meta-analysis. Review of Educational Research, 82, 300–329.

- Gerard, L., Kidron, A., & Linn, M. C. (2019). Guiding collaborative revision of science explanations. International Journal of Computer-Supported Collaborative Learning, 14(3), 291–324.

- Gerard, L., & Linn, M. C. (2022). Computer-based guidance to support students’ revision of their science explanations. Computers & Education, 176, 104351.

- Gielen, M., & De Wever, B. (2015). Structuring peer assessment: Comparing the impact of the degree of structure on peer feedback content. Computers in Human Behavior, 52, 315–325.

- Hämäläinen, R., & Vähäsantanen, K. (2011). Theoretical and pedagogical perspectives on orchestrating creativity and collaborative learning. Educational Research Review, 6, 169–184.

- Hmelo-Silver, C. E., Duncan, R. G., & Chinn, C. A. (2007). Scaffolding and achievement in problem-based and inquiry learning: A response to Kirschner, Sweller, and Clark (2006). Educational Psychologist, 42, 99–107.

- Janis, I. L. (1971). Groupthink. Psychology Today, 5, 43–46, 74–76.

- Janssen, J., Erkens, G., Kanselaar, G., & Jaspers, J. (2007). Visualization of participation: Does it contribute to successful computer-supported collaborative learning? Computers & Education, 49, 1037–1065.

- Jeong, H., & Chi, M. T. H. (2007). Knowledge convergence and collaborative learning. Instructional Science, 35, 287–315.

- Jeong, H., & Hmelo-Silver, C. E. (2016). Seven affordances of computer-supported collaborative learning: How to support collaborative learning? How can technologies help? Educational Psychologist, 51, 247–265.

- Kali, Y. (2006). Collaborative knowledge building using the design principles database. International Journal of Computer-Supported Collaborative Learning, 1, 187–201.

- Kolodner, J. L., Gray, J. T., & Fasse, B. B. (2003). Promoting transfer through case-based reasoning: Rituals and practices in Learning by Design™ classrooms. Cognitive Science Quarterly, 3, 119–170.

- Kreijns, K., Kirschner, P. A., & Jochems, W. (2003). Identifying the pitfalls for social interaction in computer-supported collaborative learning environments: A review of the research. Computers in Human Behavior, 19, 335–353.

- Linn, M. C., & Eylon, B.-S. (2011). Science learning and instruction: Taking advantage of technology to promote knowledge integration. Routledge.

- Liu, O. L., Lee, H.-S., & Linn, M. C. (2011). Measuring knowledge integration: Validation of four-year assessments. Journal of Research in Science Teaching, 48, 1079–1107.

- Lonchamp, J. (2006). Supporting synchronous collaborative learning: A generic, multi-dimensional model. International Journal of Computer-Supported Collaborative Learning, 1, 247–276.

- Matuk, C., & Linn, M. C. (2015, July). Examining the real and perceived impacts of a public idea repository on literacy and science inquiry. In O. Lindwall, P. Hakkinen, T. Koschmann, P. Tchounikine, & S. Ludvigsen (Eds.), Exploring the material conditions of learning: The computer supported collaborative learning (CSCL) conference 2015 (Vol. 1, pp. 150–157). International Society of the Learning Sciences.

- Nastasi, B. K., & Clements, D. H. (1991). Research on cooperative learning: Implications for practice. School Psychology Review, 20, 110–131.

- Novak, A. M., & Treagust, D. F. (2018). Adjusting claims as new evidence emerges: Do students incorporate new evidence into their scientific explanations? Journal of Research in Science Teaching, 55, 526–549.

- Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. Basic Books. ISBN 0-465-04627-4.

- Pea, R. D. (2004). The social and technological dimensions of scaffolding and related theoretical concepts for learning, education, and human activity. Journal of the Learning Sciences, 13, 423–451.

- Reiser, R. A., & Dempsey, J. V. (Eds.). (2012). Trends and issues in instructional design and technology (3rd ed.). Pearson. ISBN 978-0-13-256358-1.

- Rosé, C. P., & Lund, K. (2013). Methodological pathways for avoiding pitfalls in multivocality. In D. D. Suthers, K. Lund, C. P. Rosé, C. Teplovs, & N. Law (Eds.), Productive multivocality in the analysis of group interactions (pp. 613–637). Springer. ISBN 978-1-4614-8960-3.

- Ruiz-Primo, M. A., & Furtak, E. M. (2007). Exploring teachers’ informal formative assessment practices and students’ understanding in the context of scientific inquiry. Journal of Research in Science Teaching, 44, 57–84.

- Saltarelli, A. J., & Roseth, C. J. (2014). Effects of synchronicity and belongingness on face-to-face and computer-mediated constructive controversy. Journal of Educational Psychology, 106, 946–960.

- Sangin, M., Molinari, G., Nüssli, M.-A., & Dillenbourg, P. (2011). Facilitating peer knowledge modeling: Effects of a knowledge awareness tool on collaborative learning outcomes and processes. Computers in Human Behavior, 27, 1059–1067.

- Scardamalia, M., & Bereiter, C. (1994). Computer support for knowledge-building communities. Journal of the Learning Sciences, 3, 265–283.

- Slotta, J. D., & Linn, M. C. (2009). WISE science: Web-based inquiry in the classroom. Teachers College Press.

- Songer, N. B. (1996). Exploring learning opportunities in coordinated network-enhanced classrooms: A case of kids as global scientists. Journal of the Learning Sciences, 5, 297–327.

- Stahl, G. (2006). Group cognition: Computer support for building collaborative knowledge (acting with technology). The MIT Press. ISBN 978-0-262-19539-3.

- Sweller, J., & Sweller, S. (2006). Natural information processing systems. Evolutionary Psychology, 4, 147470490600400135.

- Teasley, S. B., & Roschelle, J. (1993). Constructing a joint problem space: The computer as a tool for sharing knowledge. In S. P. Lajoie, & S. J. Derry (Eds.), Computers as cognitive tools (pp. 229–258). Routledge. ISBN 978-0-203-05259-4.

- Vogel, F., Wecker, C., Kollar, I., & Fischer, F. (2017). Socio-cognitive scaffolding with computer-supported collaboration scripts: A meta-analysis. Educational Psychology Review, 29, 477–511.

- Vygotsky, L. S. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

- Weinberger, A., Ertl, B., Fischer, F., & Mandl, H. (2005). Epistemic and social scripts in computer-supported collaborative learning. Instructional Science, 33, 1–30.

- Zahn, C., Krauskopf, K., Hesse, F. W., & Pea, R. (2012). How to improve collaborative learning with video tools in the classroom? Social vs. cognitive guidance for student teams. International Journal of Computer-Supported Collaborative Learning, 7, 259–284.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).